An Improved YOLOv7-Tiny Method for the Segmentation of Images of Vegetable Fields

Abstract

1. Introduction

2. Materials and Methods

2.1. Materials

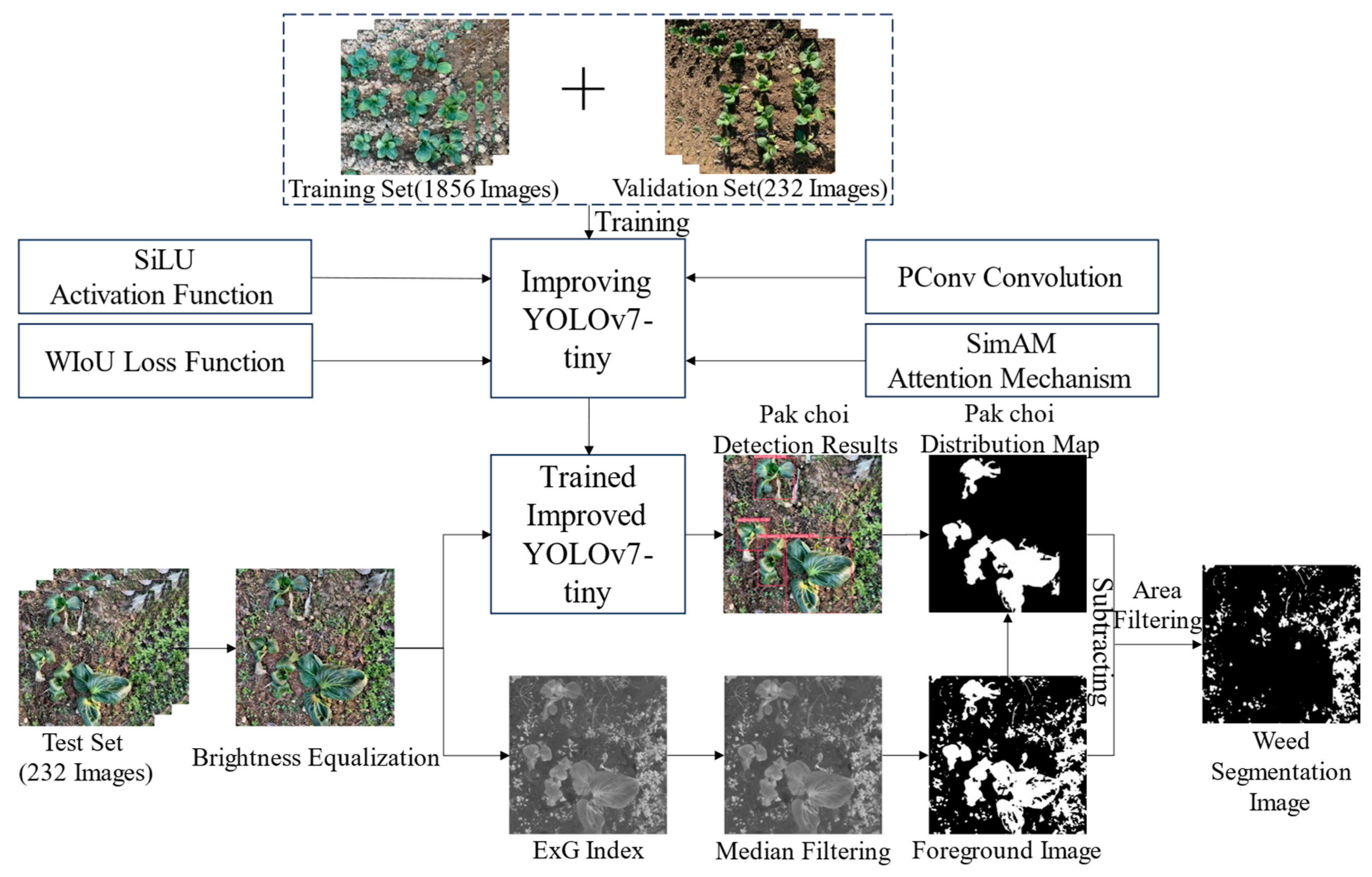

2.2. Methods

2.2.1. Improving YOLOv7-Tiny

1. WIoUv3 Loss Function

- 2.

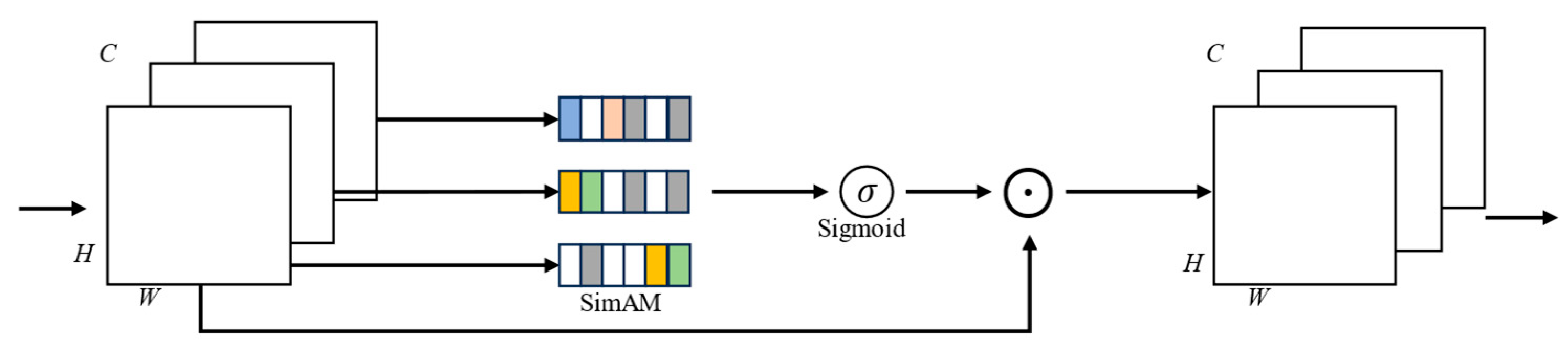

- SimAM Parameter-Free Attention Mechanism

3. SiLU Activation Function

- 4.

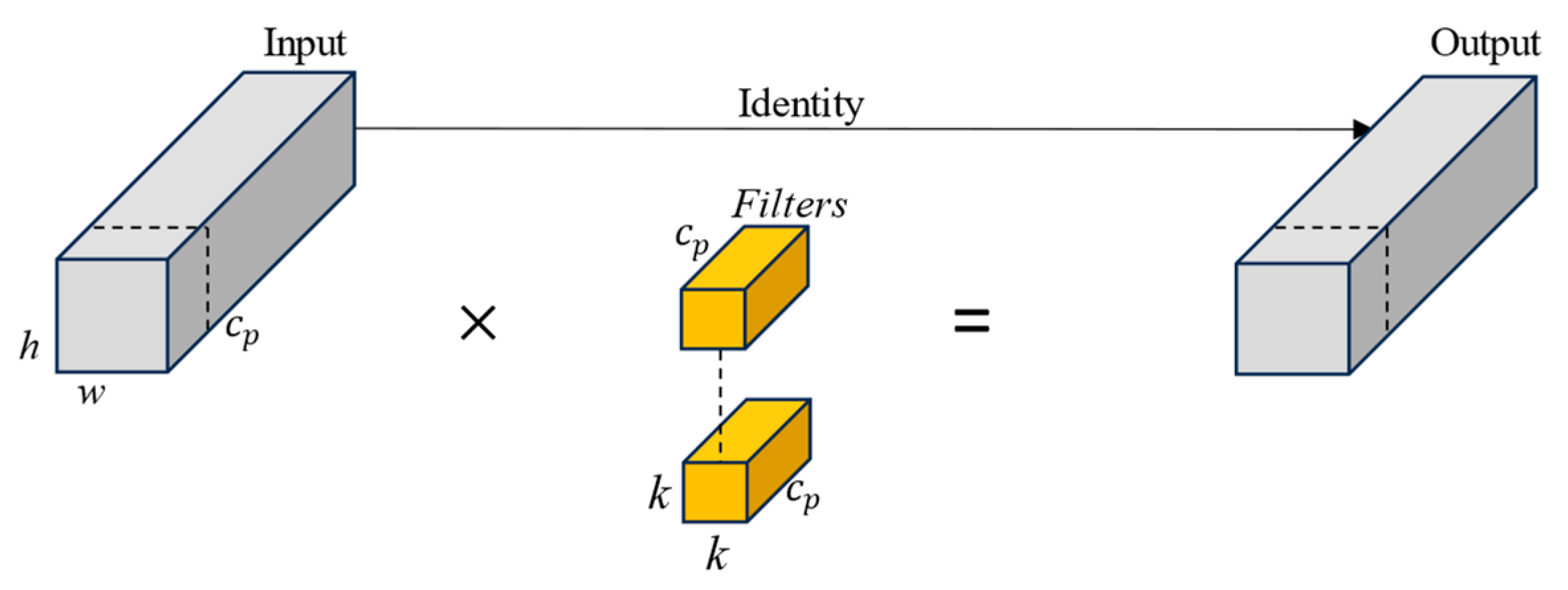

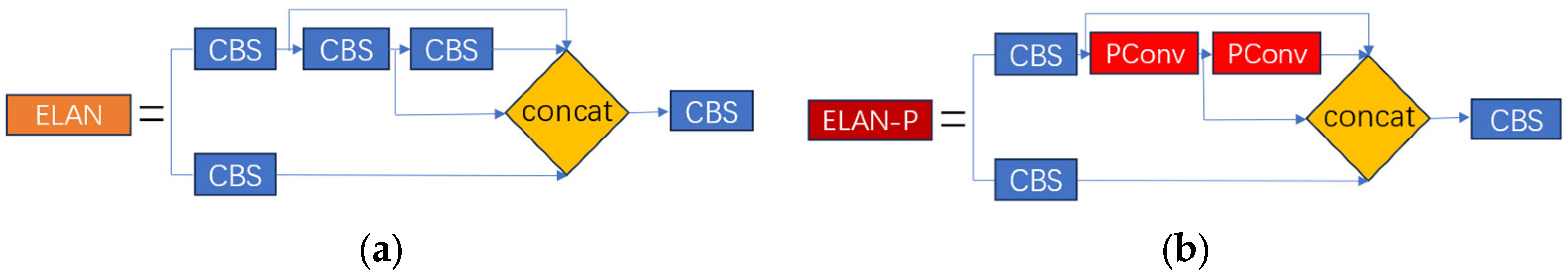

- Design of ELAN-P Module Based on Pconv

2.2.2. Image Segmentation Algorithm

- 1.

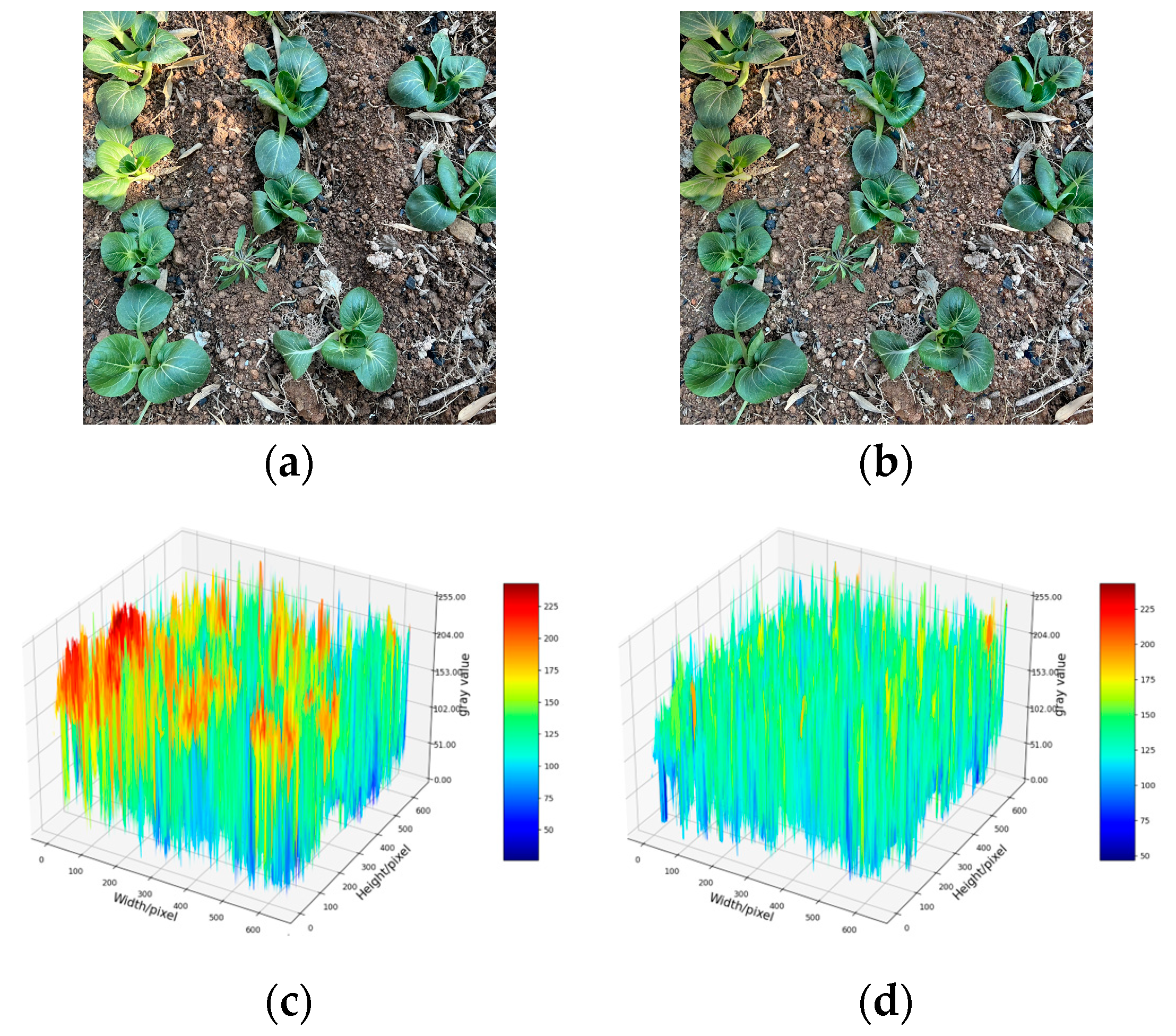

- Image Brightness Equalization

2. Pak Choi Detection and the ExG Index

- 3.

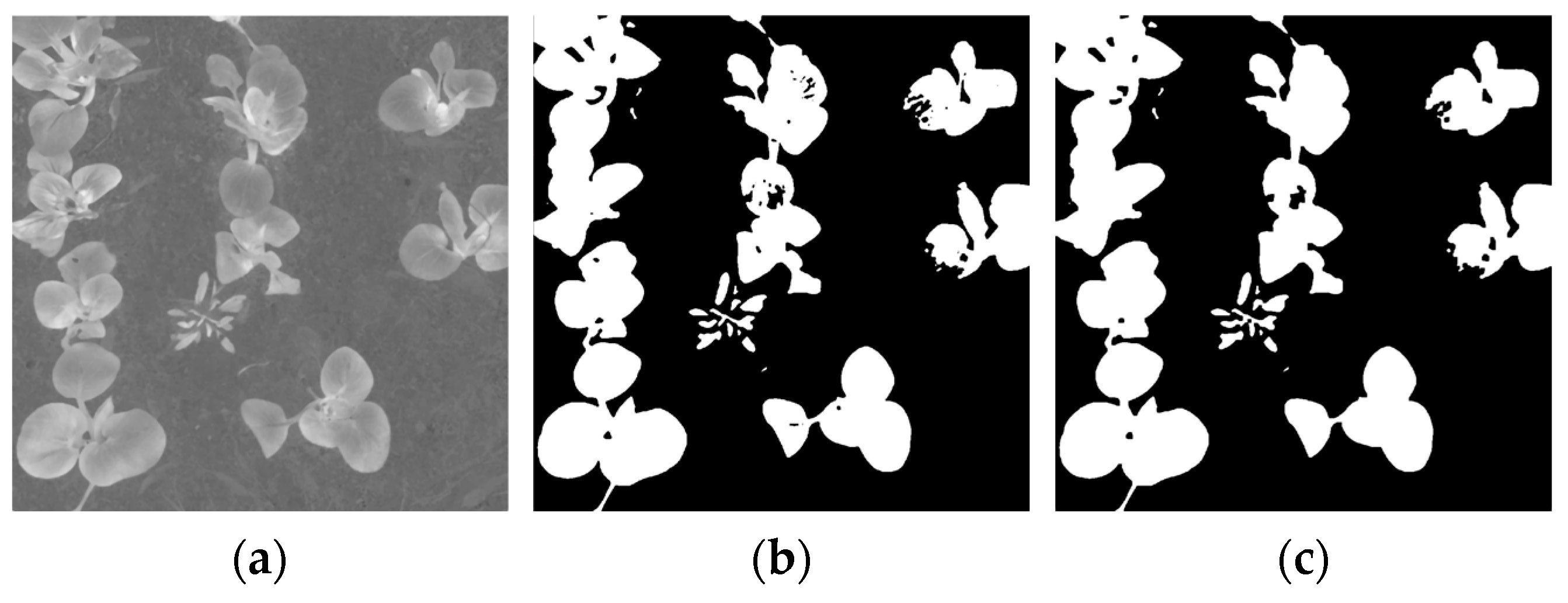

- Binarized Foreground Image

- 4.

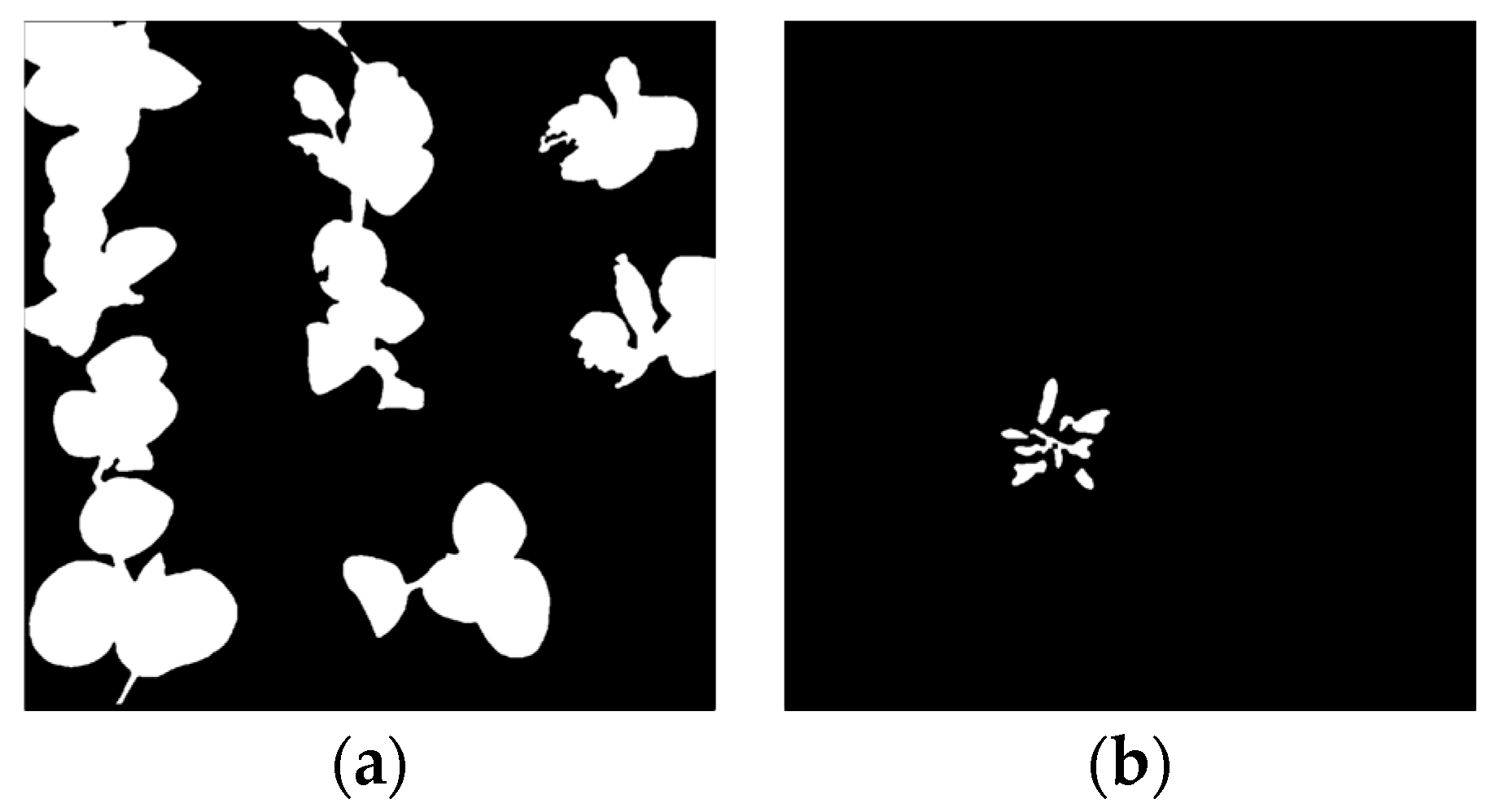

- Pak Choi and Weed Segmentation

2.3. Experimental Environment

2.4. Evaluation Metrics

2.4.1. Evaluation Metrics for Improved YOLOv7-Tiny

2.4.2. Evaluation Metrics for Image Segmentation Algorithms

3. Results and Discussion

3.1. Experiment and Analysis of Improved YOLOv7-Tiny

3.1.1. Different Loss Functions

3.1.2. Incorporating SimAM at Different Locations

3.1.3. Ablation Experiments

3.2. Image Segmentation Experiments and Analysis

- Building a semantic segmentation dataset that covers many weed species is extremely laborious. By contrast, the method outlined in this paper only requires a target detection dataset for the crop to train the model, which significantly reduces the cost and difficulty of dataset construction.

- This method separates crops from weeds indirectly by detecting the crops, so no weed species has to be segmented individually. This reduces the complexity of segmentation and improves robustness compared with direct segmentation of crops and weeds.
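The indirect idea in the two points above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function name `split_foreground`, the label constants, and the box convention `(x_min, y_min, x_max, y_max)` with exclusive maxima are all assumptions made for the example. Vegetation pixels inside any detected crop box are labelled as pak choi, and vegetation pixels outside every box are labelled as weeds.

```python
from typing import List, Tuple

# Hypothetical box convention: (x_min, y_min, x_max, y_max), maxima exclusive.
Box = Tuple[int, int, int, int]

# Hypothetical label values used only in this sketch.
BACKGROUND, PAK_CHOI, WEED = 0, 1, 2


def split_foreground(foreground: List[List[int]], boxes: List[Box]) -> List[List[int]]:
    """Label vegetation pixels as crop (inside a box) or weed (outside all boxes)."""
    height = len(foreground)
    width = len(foreground[0]) if height else 0

    # Rasterise the detection boxes into a boolean crop-region map.
    in_box = [[False] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        for y in range(max(0, y0), min(height, y1)):
            for x in range(max(0, x0), min(width, x1)):
                in_box[y][x] = True

    # Only foreground (vegetation) pixels receive a crop or weed label.
    labels = [[BACKGROUND] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if foreground[y][x]:
                labels[y][x] = PAK_CHOI if in_box[y][x] else WEED
    return labels


# Toy binarized foreground (1 = vegetation) and one detected crop box.
foreground = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1, 1],
    [1, 0, 0, 0, 0, 0],
]
boxes = [(1, 0, 3, 2)]
labels = split_foreground(foreground, boxes)
for row in labels:
    print(row)
```

Because the rule is purely geometric, any weed pixel that falls inside a detection box is labelled as pak choi in this sketch.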

4. Conclusions

- This paper takes pak choi and its accompanying weeds as the subjects of study and proposes an image segmentation method based on an improved YOLOv7-tiny. The method detects pak choi and then separates pak choi from weeds, which reduces the complexity of segmentation.

- Building on the original YOLOv7-tiny, the WIoUv3 loss function and the SiLU activation function replace the original CIoU loss and Leaky ReLU activation, the SimAM attention mechanism is introduced into the neck network, and the PConv module is integrated into the backbone network. Compared with the original YOLOv7-tiny, the improved YOLOv7-tiny raises AP by 3.1 percentage points and FPS by 12%, while reducing Params by 29% and FLOPs by 17%. These improvements lower the model's computational cost and enhance both detection accuracy and speed for pak choi.

- The improved YOLOv7-tiny is used to identify individual pak choi targets in farmland, and the ExG index combined with the OTSU method yields a foreground image containing both pak choi and weeds. A pak choi distribution map is created by combining the pak choi detection results with the foreground image. The weed image is then obtained by using the pak choi distribution map to remove the pak choi targets from the foreground image, which separates pak choi from weeds. The image segmentation achieves an mIoU of 84.8%, an mPA of 97.8%, and 62.5 FPS. These results validate the proposed method in terms of segmentation accuracy and real-time performance.

- Although the method shows strong feasibility, some limitations remain. First, the accuracy of the improved YOLOv7-tiny in detecting pak choi at the edges of images needs to be improved. Second, weeds that touch pak choi and lie within the detection box can be incorrectly identified as pak choi pixels. Finally, debris such as branches and soil clumps on the pak choi may result in incomplete segmented pak choi targets. Future research will address these shortcomings to further improve segmentation accuracy.
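The foreground step summarized above (ExG index followed by OTSU thresholding) can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions: ExG is taken in its common chromaticity-normalized form, ExG = 2g - r - b with r, g, b each divided by R + G + B, which may differ from the exact variant used in the paper, and the helper names `excess_green`, `otsu_threshold`, and `foreground_mask` are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def excess_green(pixel: Pixel) -> float:
    """ExG = 2g - r - b on chromaticity-normalized channels (assumed variant)."""
    r, g, b = pixel
    total = r + g + b
    if total == 0:
        return 0.0
    return (2 * g - r - b) / total  # lies in [-1, 2]


def otsu_threshold(values: List[int], levels: int = 256) -> int:
    """Return the grey level that maximizes the between-class variance."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(levels):
        w0 += hist[t]          # pixels with level <= t
        sum0 += t * hist[t]
        w1 = total - w0        # pixels with level > t
        if w0 == 0 or w1 == 0:
            continue
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def foreground_mask(image: List[List[Pixel]]) -> List[List[bool]]:
    """Binarize an RGB image into vegetation (True) and background (False)."""
    # Map ExG from [-1, 2] onto integer levels 0..255 before thresholding.
    scaled = [[round((excess_green(p) + 1) * 255 / 3) for p in row] for row in image]
    t = otsu_threshold([v for row in scaled for v in row])
    return [[v > t for v in row] for row in scaled]


GREEN: Pixel = (40, 160, 40)   # toy vegetation colour
SOIL: Pixel = (120, 100, 80)   # toy soil colour
image = [[GREEN, SOIL, GREEN], [SOIL, GREEN, SOIL]]
mask = foreground_mask(image)
print(mask)
```

The resulting mask contains both pak choi and weeds; separating the two still requires the detection results.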

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Input Image Size/Pixel | 640 × 640 |
| Batch Size | 16 |
| Momentum | 0.937 |
| Initial Learning Rate | 0.01 |
| Weight Decay | 0.0005 |
| Warmup | 3 |
| Confidence Threshold | 0.5 |
| Non-Maximum Suppression IoU Threshold | 0.5 |
| Epoch | 300 |
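To illustrate how the two post-processing thresholds in the table above are typically applied, the following is a minimal sketch of greedy non-maximum suppression. It is not taken from the paper or from the YOLOv7 code base: the detection layout `(x_min, y_min, x_max, y_max, score)` and the function names `box_iou` and `nms` are assumptions, and whether the comparisons are strict or inclusive is an implementation detail chosen here for illustration.

```python
from typing import List, Tuple

# Hypothetical detection layout: (x_min, y_min, x_max, y_max, score).
Detection = Tuple[float, float, float, float, float]


def box_iou(a: Detection, b: Detection) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(dets: List[Detection], conf_thr: float = 0.5, iou_thr: float = 0.5) -> List[Detection]:
    """Drop low-confidence boxes, then greedily suppress overlapping ones."""
    candidates = sorted(
        (d for d in dets if d[4] >= conf_thr),
        key=lambda d: d[4],
        reverse=True,
    )
    kept: List[Detection] = []
    for det in candidates:
        # Keep a box only if it does not overlap a higher-scoring kept box too much.
        if all(box_iou(det, k) <= iou_thr for k in kept):
            kept.append(det)
    return kept


detections = [
    (0, 0, 10, 10, 0.9),
    (1, 1, 11, 11, 0.8),    # overlaps the first box with IoU of about 0.68
    (20, 20, 30, 30, 0.7),
    (40, 40, 50, 50, 0.3),  # below the confidence threshold
]
kept = nms(detections)
print(kept)
```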

| Loss Function | Precision/% | Recall/% | AP/% |
|---|---|---|---|
| CIoU | 92.4 | 92.7 | 93.4 |
| SIoU | 91.4 | 92.9 | 93.1 |
| Focal EIoU | 91.1 | 93.1 | 92.3 |
| WIoUv3 | 92.8 | 92.5 | 93.7 |

| Model | Precision/% | Recall/% | AP/% | Params/M | FLOPs/G |
|---|---|---|---|---|---|
| Original YOLOv7-tiny | 92.4 | 92.7 | 93.4 | 11.6 | 13.2 |
| +SimAM (Backbone) | 91.2 | 90.1 | 92.3 | 7.3 | 9.1 |
| +SimAM (Neck) | 93.1 | 94.8 | 94.2 | 11.0 | 13.6 |
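For readers unfamiliar with the module compared in the table above, the following is a minimal single-channel sketch of SimAM weighting, following the commonly published formulation: each activation is scaled by a sigmoid of its inverse energy, computed from the channel mean and variance. It is an illustration only, not the network code used in the paper. The regularizer `lam = 1e-4` is the commonly used default and is an assumption here, and the function names are hypothetical.

```python
import math
from typing import List

Channel = List[List[float]]  # one H x W feature map


def simam_weights(channel: Channel, lam: float = 1e-4) -> Channel:
    """Per-position attention weights for one channel (no learnable parameters)."""
    flat = [v for row in channel for v in row]
    n = len(flat) - 1  # number of positions minus one
    mean = sum(flat) / len(flat)
    sq_dev_sum = sum((v - mean) ** 2 for v in flat)
    variance = sq_dev_sum / n if n > 0 else 0.0

    weights = []
    for row in channel:
        out_row = []
        for v in row:
            # Inverse energy: positions far from the channel mean score higher.
            inv_energy = (v - mean) ** 2 / (4 * (variance + lam)) + 0.5
            out_row.append(1.0 / (1.0 + math.exp(-inv_energy)))
        weights.append(out_row)
    return weights


def simam(channel: Channel, lam: float = 1e-4) -> Channel:
    """Scale each activation by its attention weight."""
    weights = simam_weights(channel, lam)
    return [[v * w for v, w in zip(row, w_row)] for row, w_row in zip(channel, weights)]


feature_map = [[0.0, 0.0], [0.0, 4.0]]  # toy channel with one distinctive position
weights = simam_weights(feature_map)
refined = simam(feature_map)
print(weights)
print(refined)
```

In a full network the same computation would run independently on every channel of a feature tensor.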

| Combination Number | Leaky ReLU | CIoU | SiLU | WIoUv3 | SimAM | PConv | AP/% | FPS | Params/M | FLOPs/G |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | ✓ | ✓ | × | × | × | × | 93.4 | 79.4 | 11.6 | 13.2 |
| 2 | × | ✓ | ✓ | × | × | × | 94.8 | 85.2 | 11.6 | 13.2 |
| 3 | × | × | ✓ | ✓ | × | × | 95.3 | 89.3 | 11.6 | 13.2 |
| 4 | × | × | ✓ | ✓ | ✓ | × | 96.4 | 89.3 | 11.0 | 13.6 |
| 5 | × | × | ✓ | ✓ | ✓ | ✓ | 96.5 | 89.3 | 8.2 | 10.9 |
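The headline improvements can be recomputed from the first and last rows of the ablation table above. The short check below is only arithmetic on the tabulated values; the dictionary and function names are chosen for this example. AP is compared as an absolute difference in percentage points, and the other metrics as relative changes.

```python
# Values copied from combination 1 (original) and combination 5 (improved).
baseline = {"AP": 93.4, "FPS": 79.4, "Params": 11.6, "FLOPs": 13.2}
improved = {"AP": 96.5, "FPS": 89.3, "Params": 8.2, "FLOPs": 10.9}


def relative_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100


ap_gain = improved["AP"] - baseline["AP"]  # percentage points
fps_change = relative_change(baseline["FPS"], improved["FPS"])
params_change = relative_change(baseline["Params"], improved["Params"])
flops_change = relative_change(baseline["FLOPs"], improved["FLOPs"])

print(f"AP gain: {ap_gain:.1f} points")
print(f"FPS change: {fps_change:.1f}%")
print(f"Params change: {params_change:.1f}%")
print(f"FLOPs change: {flops_change:.1f}%")
```

Rounded to whole percentages, these values match the 12%, 29%, and 17% figures quoted in the conclusions.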

| Type | IoU/% | PA/% | mIoU/% | mPA/% | FPS |
|---|---|---|---|---|---|
| Weeds | 76.5 | 97.2 | 84.8 | 97.8 | 62.5 |
| Pak choi | 93.1 | 98.4 | | | |
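The aggregate columns in the table above are consistent with plain averages of the per-class values, as the short check below shows. The per-class IoU helper uses the standard definition TP / (TP + FP + FN). The pixel counts in its usage line are made-up illustrative numbers, not data from the paper, and the function names are hypothetical.

```python
from typing import Dict


def class_iou(tp: int, fp: int, fn: int) -> float:
    """Standard per-class IoU from pixel counts: TP / (TP + FP + FN)."""
    denom = tp + fp + fn
    return tp / denom if denom else 0.0


def mean_metric(per_class: Dict[str, float]) -> float:
    """Unweighted mean over classes, as used for mIoU and mPA."""
    return sum(per_class.values()) / len(per_class)


# Per-class values copied from the table (percent).
iou = {"weeds": 76.5, "pak_choi": 93.1}
pa = {"weeds": 97.2, "pak_choi": 98.4}

miou = mean_metric(iou)
mpa = mean_metric(pa)
print(f"mIoU = {miou:.1f}%, mPA = {mpa:.1f}%")

# Illustrative counts only: 80 true positives, 10 false positives, 10 false negatives.
example_iou = class_iou(tp=80, fp=10, fn=10)
print(f"example IoU = {example_iou:.2f}")
```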

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, S.; Yao, L.; Xu, L.; Hu, D.; Zhou, J.; Chen, Y. An Improved YOLOv7-Tiny Method for the Segmentation of Images of Vegetable Fields. Agriculture 2024, 14, 856. https://doi.org/10.3390/agriculture14060856