High-Precision Detection for Sandalwood Trees via Improved YOLOv5s and StyleGAN

Abstract

1. Introduction

2. Materials and Methods

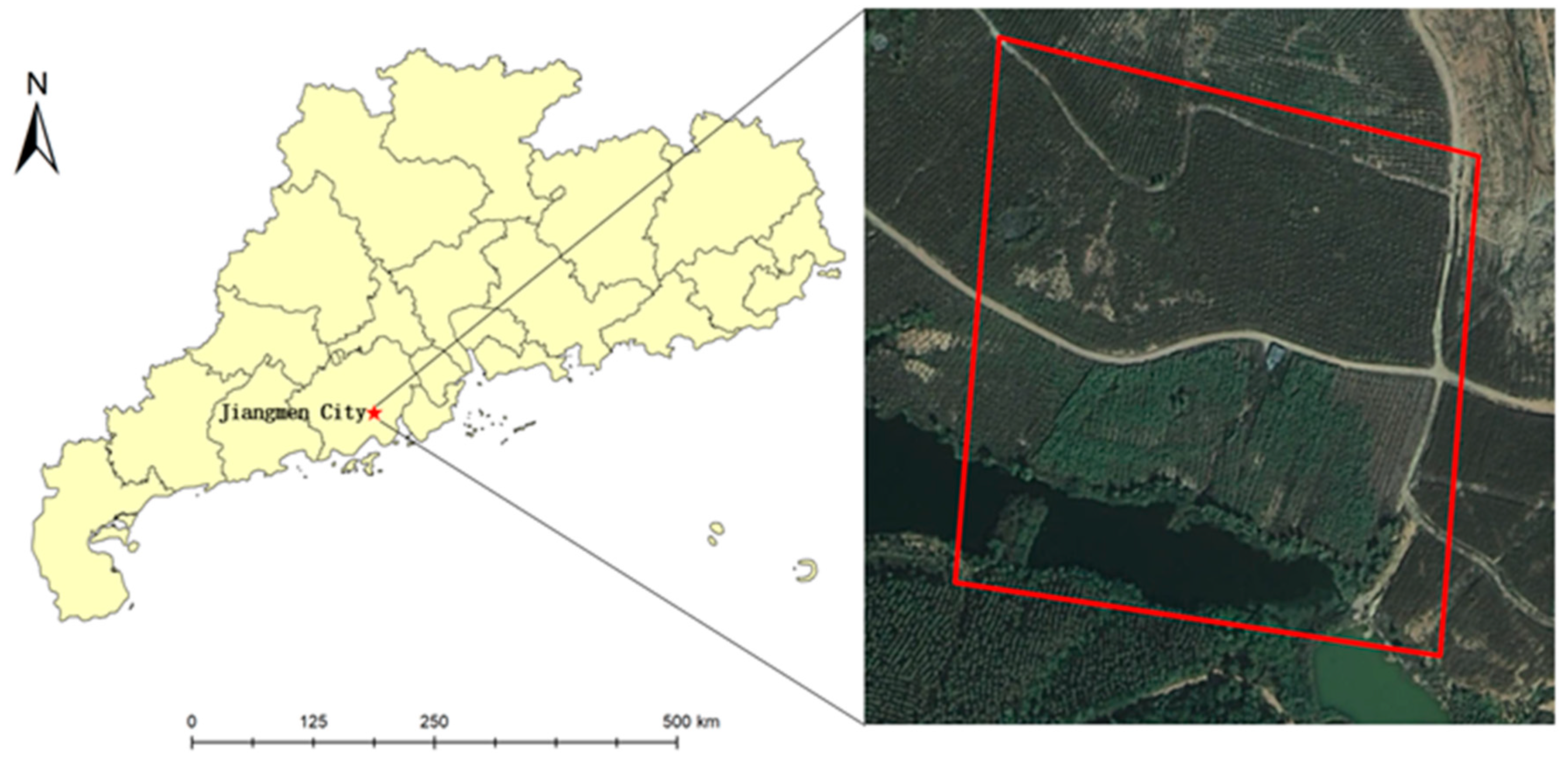

2.1. Data Acquisition and Preprocessing

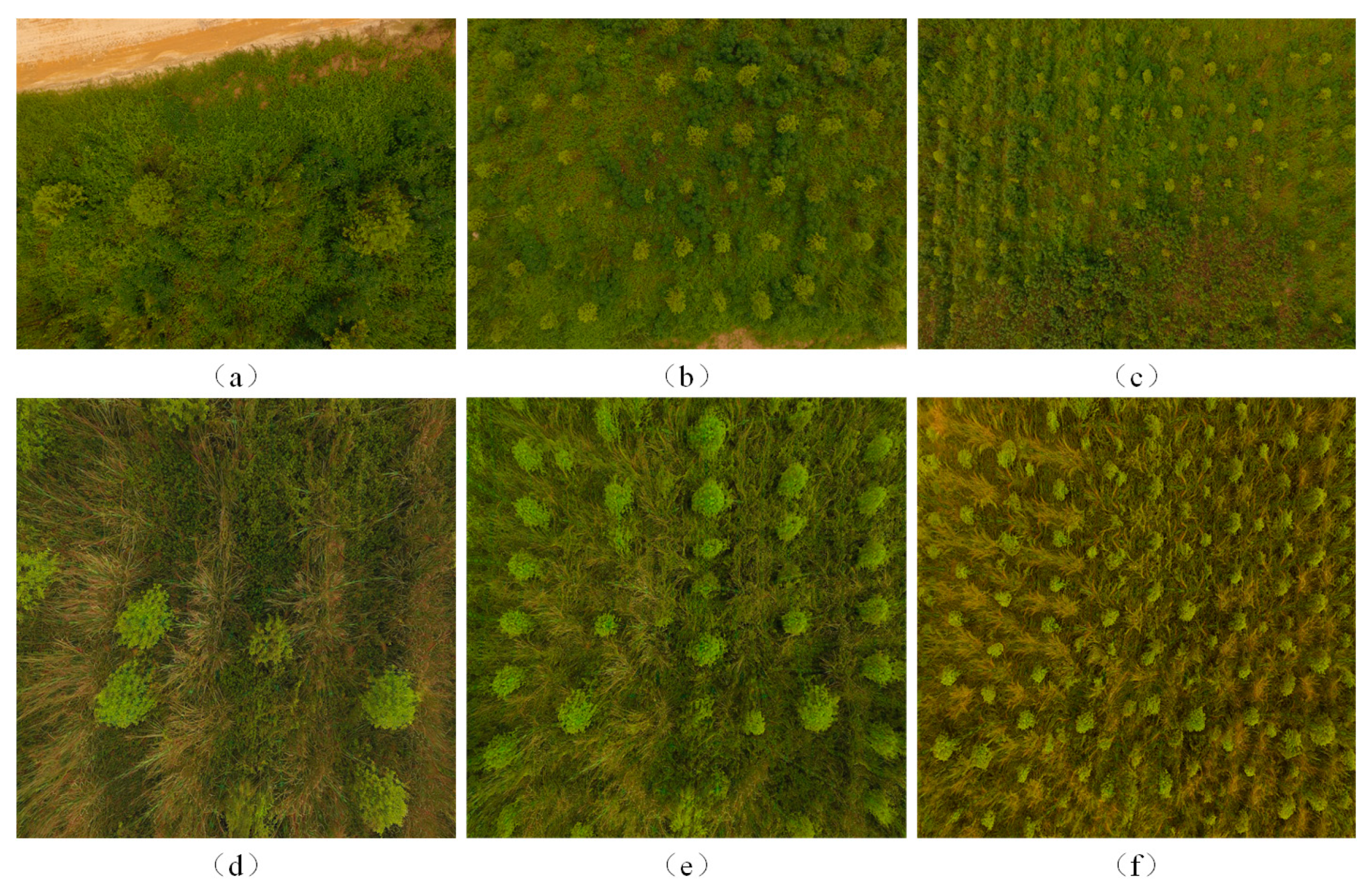

2.1.1. Data Collection

2.1.2. Dataset Construction

2.2. Research Methodology

2.2.1. StyleGAN Data Augmentation

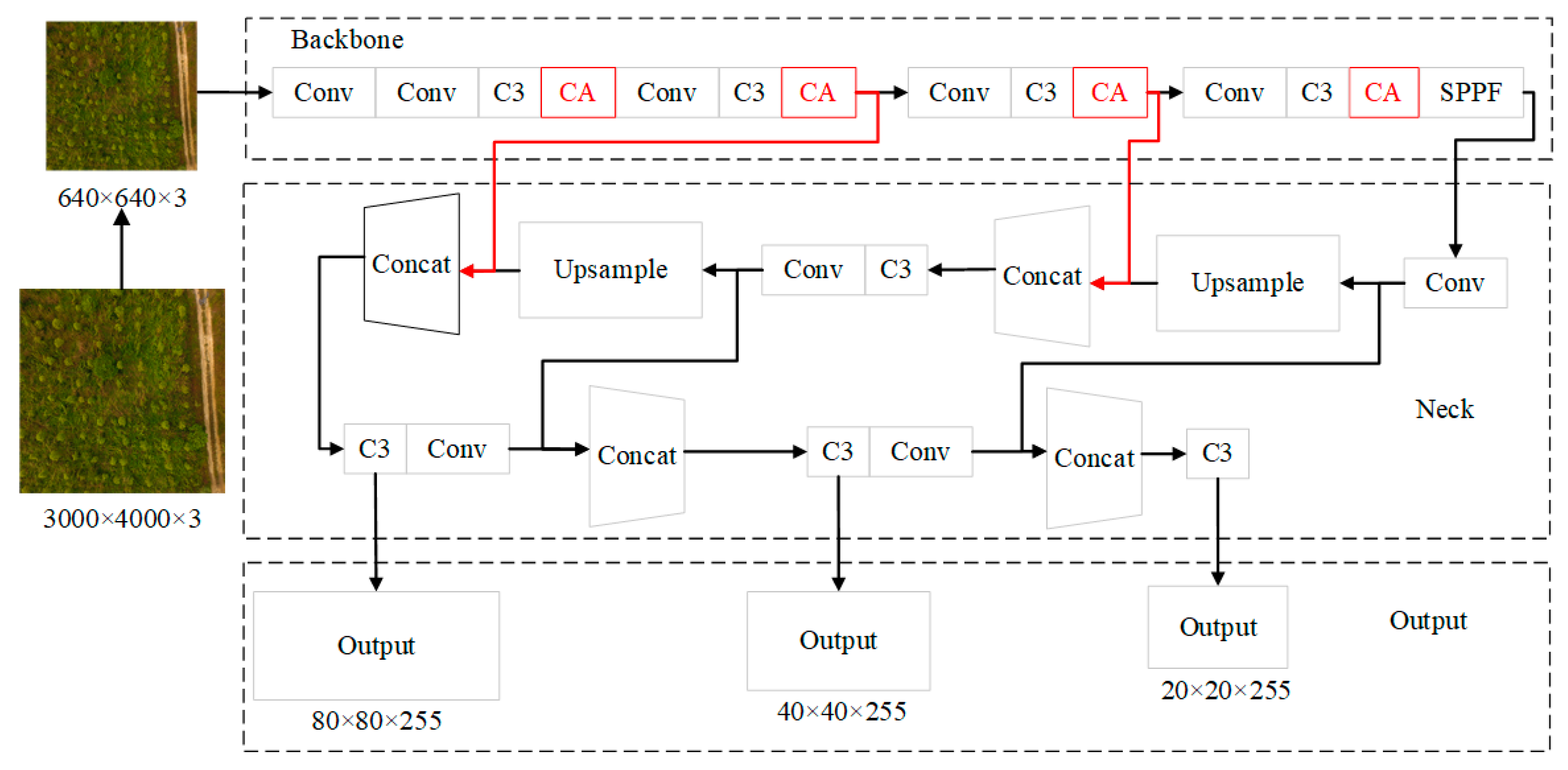

2.3. Improved Lightweight Sandalwood Detection Algorithm

2.3.1. YOLOv5s Object Detection Model

2.3.2. Introduction of CA Module

2.3.3. Improved Bounding Box Regression Loss Function

3. Results and Discussion

3.1. Experimental Platform

3.2. Training Settings

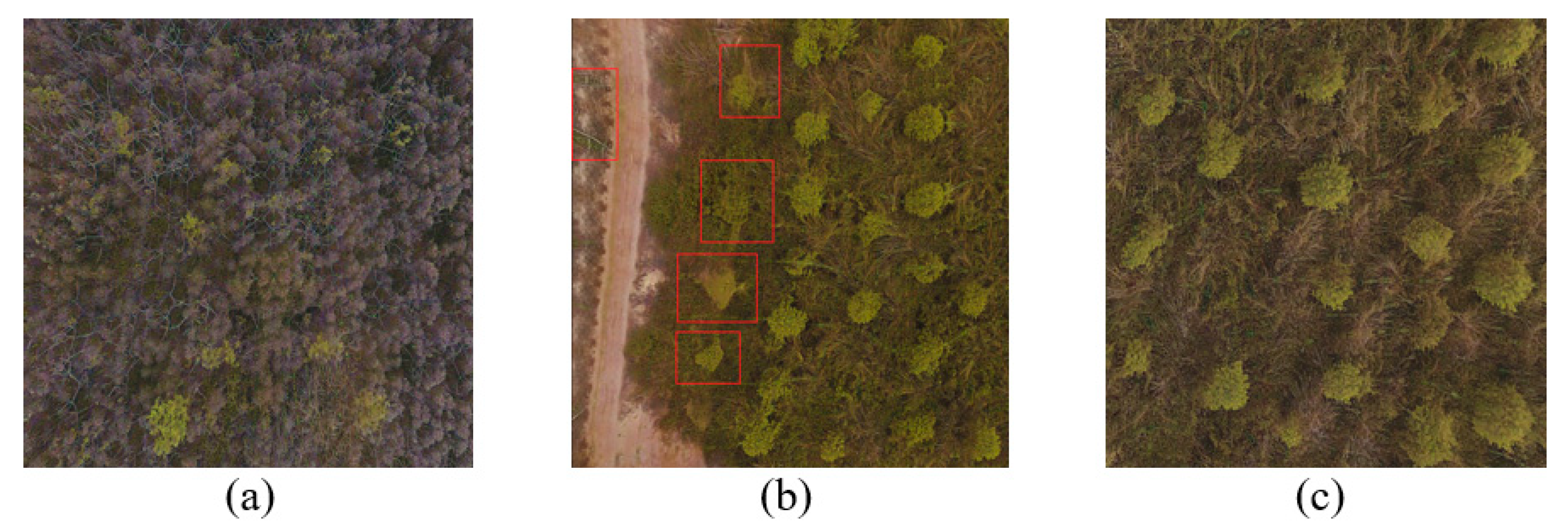

3.3. Generation of Realistic Samples

3.4. Evaluation Metrics

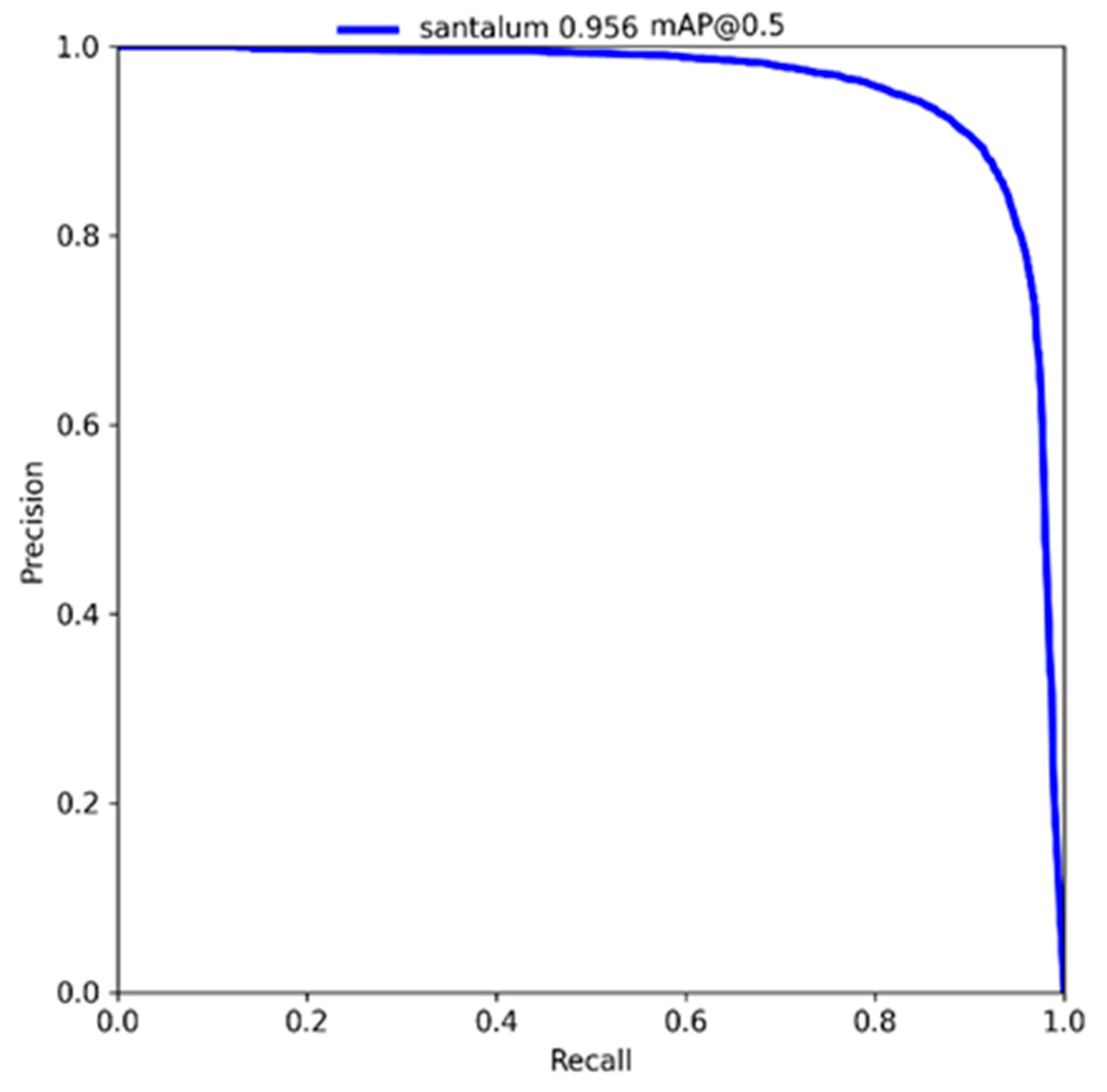

3.5. Experimental Analysis

3.6. Validating the Effectiveness of Strategies

3.7. Experimental Comparison

3.8. Discussion on Impact of Flight Altitude on Model Accuracy

3.9. Methodology Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Type | 10 m | 20 m | 30 m | Total |
|---|---|---|---|---|
| Original training samples | 295 | 120 | 82 | 497 |
| Generated samples | 30 | 290 | 305 | 625 |
| Final training samples | 325 | 410 | 387 | 1122 |
| Testing samples | 20 | 20 | 20 | 60 |
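The counts above can be cross-checked with a few lines of Python. This is only a sketch: it assumes the final training set is the original samples plus the generated samples at each flight altitude, which the table implies but does not state, and the variable and function names are illustrative. Under that assumption the per-altitude sums are 325, 410, and 387, for 1122 in total.

```python
# Sample counts transcribed from the dataset table, keyed by flight altitude (m).
# Names and layout are illustrative assumptions, not the authors' code.
ORIGINAL = {10: 295, 20: 120, 30: 82}
GENERATED = {10: 30, 20: 290, 30: 305}


def combine(original, generated):
    """Per-altitude size of the training set after adding generated samples."""
    return {alt: original[alt] + generated[alt] for alt in original}


def generated_share(original, generated):
    """Fraction of the combined set at each altitude that is synthetic."""
    return {
        alt: round(generated[alt] / (original[alt] + generated[alt]), 3)
        for alt in original
    }


if __name__ == "__main__":
    final = combine(ORIGINAL, GENERATED)
    print("final per altitude:", final)
    print("final total:", sum(final.values()))
    print("generated share:", generated_share(ORIGINAL, GENERATED))
```

The share of generated samples rises with altitude, which is consistent with the original set being dominated by 10 m images.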

| No. | StyleGAN | CA | SIOU | P (%) | R (%) | AP (%) | Memory size (MB) | FPS |
|---|---|---|---|---|---|---|---|---|
| 1 | - | - | - | 94.0 | 95.0 | 93.0 | 14.1 | 62.8 |
| 2 | √ | - | - | 86.2 | 98.0 | 94.5 | 14.1 | 62.1 |
| 3 | - | √ | - | 88.9 | 98.0 | 95.0 | 14.4 | 58.9 |
| 4 | - | - | √ | 89.0 | 97.0 | 94.5 | 14.1 | 61.3 |
| 5 | √ | √ | √ | 90.1 | 99.0 | 95.6 | 14.5 | 56.8 |
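To read the ablation results as changes relative to the baseline, the rows can be differenced against experiment 1. The sketch below assumes that the first column is the experiment number and that the three check-mark columns correspond to StyleGAN, CA, and SIOU in that order. The dictionary layout and function name are hypothetical, chosen only for illustration.

```python
# Ablation results transcribed from the table above.
# Assumption: column 1 is the experiment number; the next three columns mark
# whether StyleGAN augmentation, the CA module, and the SIOU loss are enabled.
ABLATION = {
    1: {"modules": (), "P": 94.0, "R": 95.0, "AP": 93.0, "FPS": 62.8},
    2: {"modules": ("StyleGAN",), "P": 86.2, "R": 98.0, "AP": 94.5, "FPS": 62.1},
    3: {"modules": ("CA",), "P": 88.9, "R": 98.0, "AP": 95.0, "FPS": 58.9},
    4: {"modules": ("SIOU",), "P": 89.0, "R": 97.0, "AP": 94.5, "FPS": 61.3},
    5: {"modules": ("StyleGAN", "CA", "SIOU"),
        "P": 90.1, "R": 99.0, "AP": 95.6, "FPS": 56.8},
}


def delta_vs_baseline(metric, baseline_id=1):
    """Difference of each experiment from the baseline for one metric."""
    base = ABLATION[baseline_id][metric]
    return {
        exp_id: round(row[metric] - base, 1)
        for exp_id, row in ABLATION.items()
        if exp_id != baseline_id
    }


if __name__ == "__main__":
    for metric in ("P", "R", "AP", "FPS"):
        print(metric, delta_vs_baseline(metric))
```

Read this way, every variant gains recall and AP over the baseline while losing precision, and the full combination gains the most AP (2.6 points) at the largest FPS cost (6.0 frames per second).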

| Network Model | P (%) | R (%) | AP (%) | FPS |
|---|---|---|---|---|
| YOLOv4-tiny | 85.2 | 92.2 | 88.7 | 48.9 |
| YOLOXs | 85.9 | 89.7 | 92.0 | 73.5 |
| YOLOv5s | 94.0 | 95.0 | 93.0 | 62.8 |
| YOLOv5-mobilenet2 | 85.9 | 97.0 | 93.3 | 64.5 |
| YOLOv5-ghost | 86.2 | 94.8 | 92.7 | 63.1 |
| GAN-YOLOv5s-CA-SIOU | 90.1 | 99.0 | 95.6 | 56.8 |

| Network Model | 10 m AP (%) | 20 m AP (%) | 30 m AP (%) |
|---|---|---|---|
| YOLOv5s | 95.1 | 93.6 | 90.2 |
| GAN-YOLOv5s-CA-SIOU | 97.3 | 94.1 | 95.3 |
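The effect of flight altitude is easier to see as a per-altitude AP gain and as the spread between each model's best and worst altitude. The following is a minimal sketch using only the values in the table above. The helper names `ap_gain` and `ap_spread` are hypothetical and do not come from the paper.

```python
# AP (%) by flight altitude (m), transcribed from the table above.
AP_BY_ALTITUDE = {
    "YOLOv5s": {10: 95.1, 20: 93.6, 30: 90.2},
    "GAN-YOLOv5s-CA-SIOU": {10: 97.3, 20: 94.1, 30: 95.3},
}


def ap_gain(baseline, improved):
    """AP improvement of the improved model at each altitude, in points."""
    return {alt: round(improved[alt] - baseline[alt], 1) for alt in baseline}


def ap_spread(scores):
    """Gap between the best and worst altitude for one model, in points."""
    return round(max(scores.values()) - min(scores.values()), 1)


if __name__ == "__main__":
    base = AP_BY_ALTITUDE["YOLOv5s"]
    improved = AP_BY_ALTITUDE["GAN-YOLOv5s-CA-SIOU"]
    print("gain per altitude:", ap_gain(base, improved))
    print("spread, YOLOv5s:", ap_spread(base))
    print("spread, improved:", ap_spread(improved))
```

The gain is largest at 30 m (5.1 points) and smallest at 20 m (0.5 points), and the improved model's spread across altitudes narrows from 4.9 to 3.2 points.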

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Zhang, Y.; Niu, J.; Huang, Z.; Pan, C.; Xue, Y.; Tan, F. High-Precision Detection for Sandalwood Trees via Improved YOLOv5s and StyleGAN. Agriculture 2024, 14, 452. https://doi.org/10.3390/agriculture14030452
