Computer Vision-Based Measurement Techniques for Livestock Body Dimension and Weight: A Review

Abstract

1. Introduction

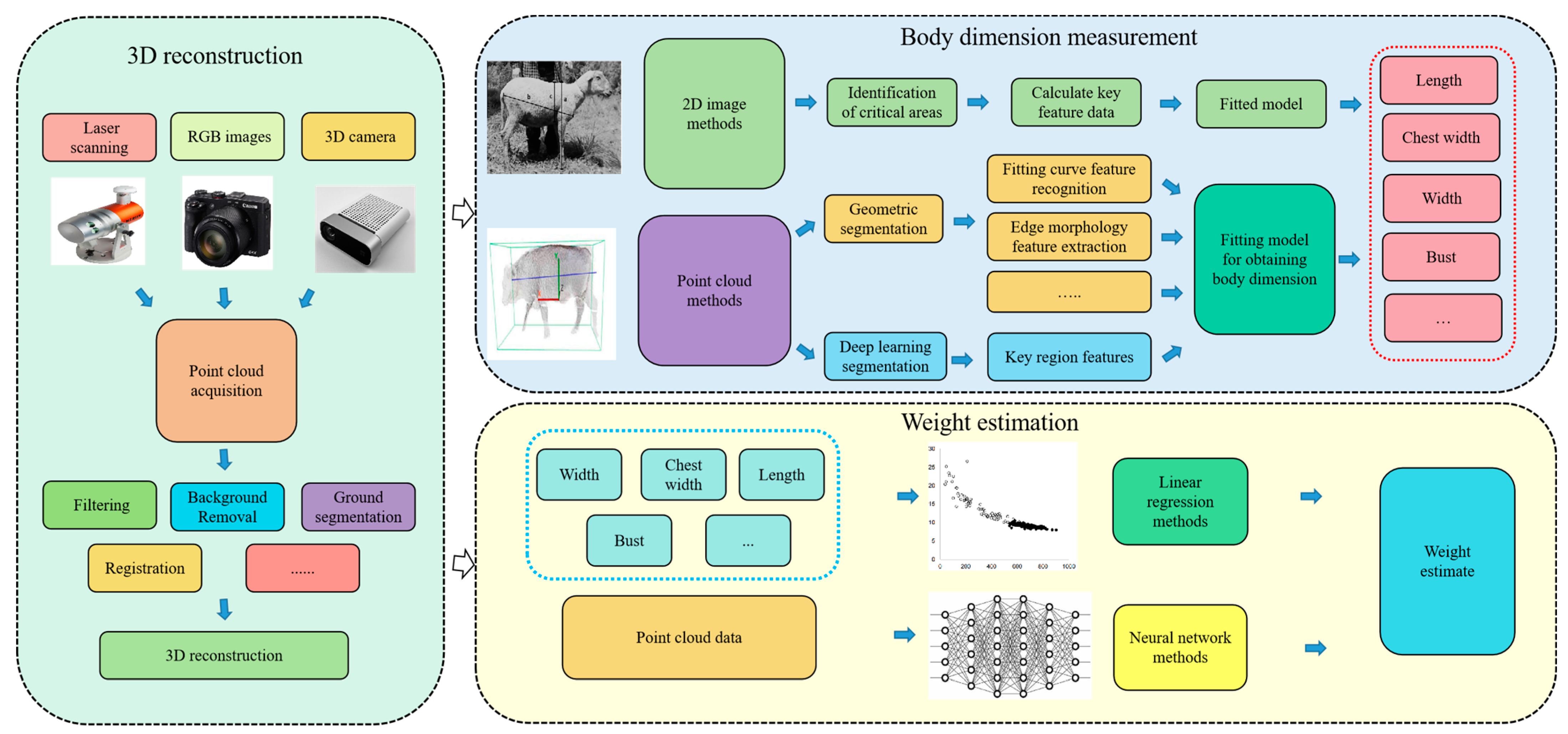

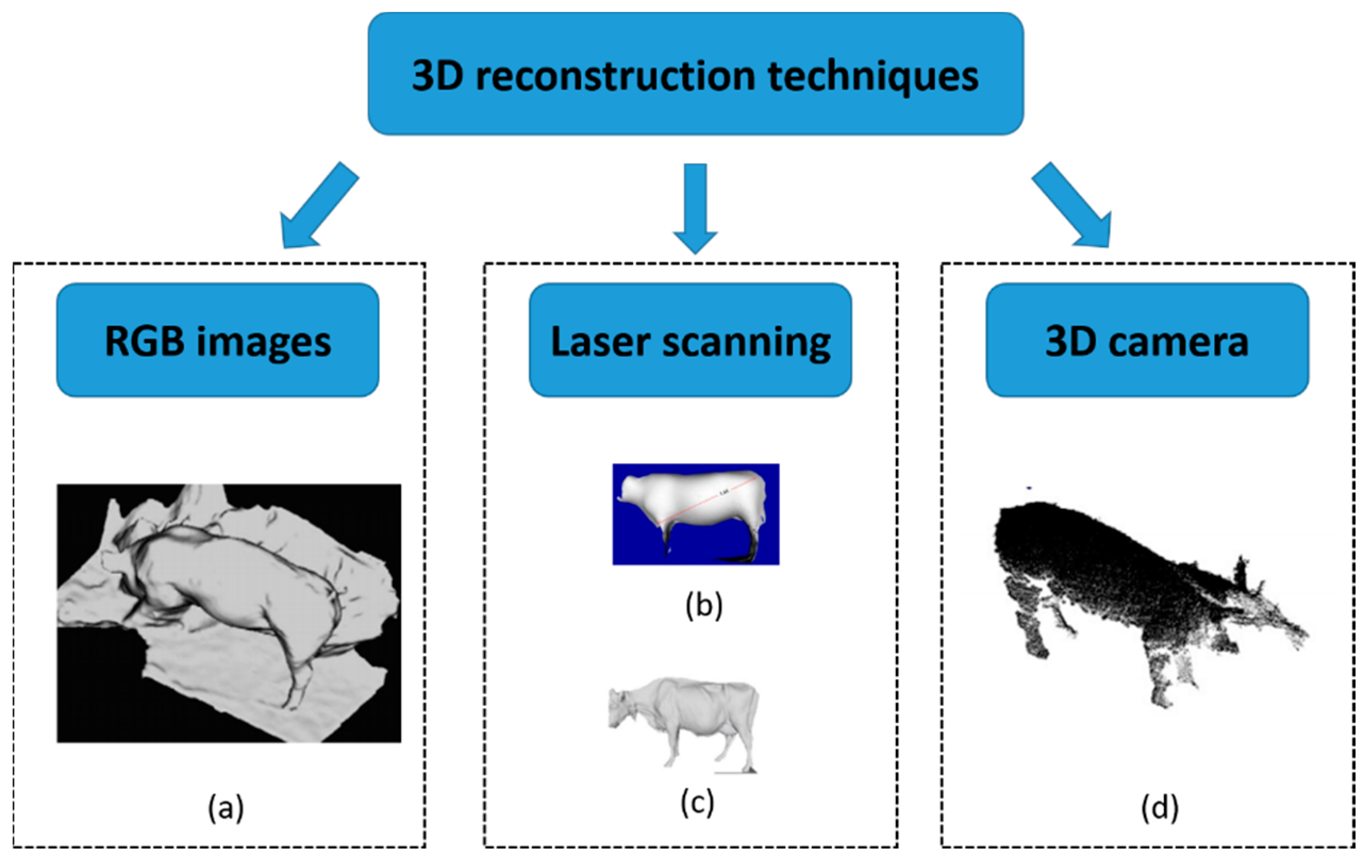

2. Computer Vision-Based Livestock 3D Reconstruction Technology

2.1. 3D Reconstruction of Livestock Based on RGB Images

2.2. Livestock 3D Reconstruction Based on Laser Scanning

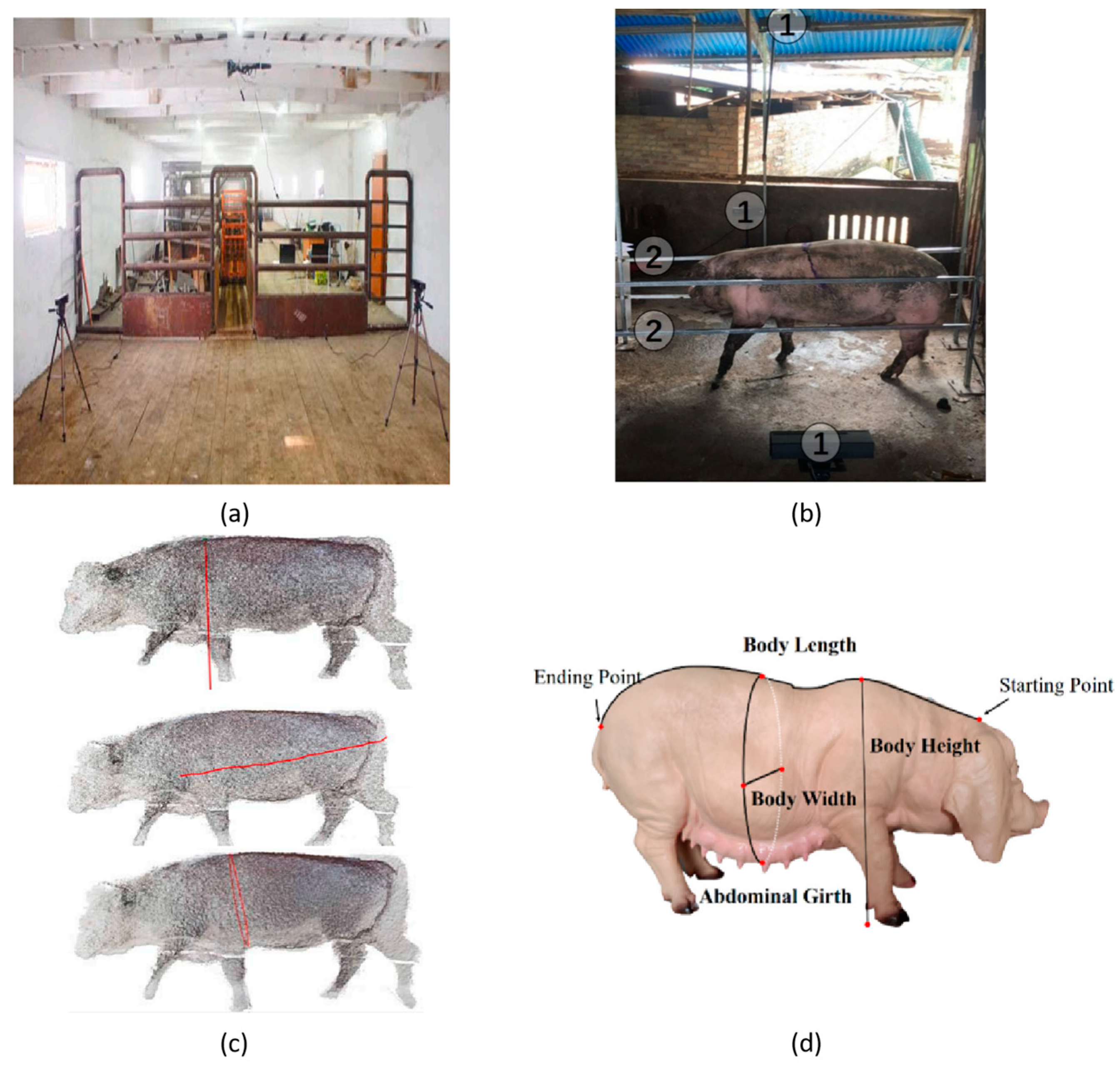

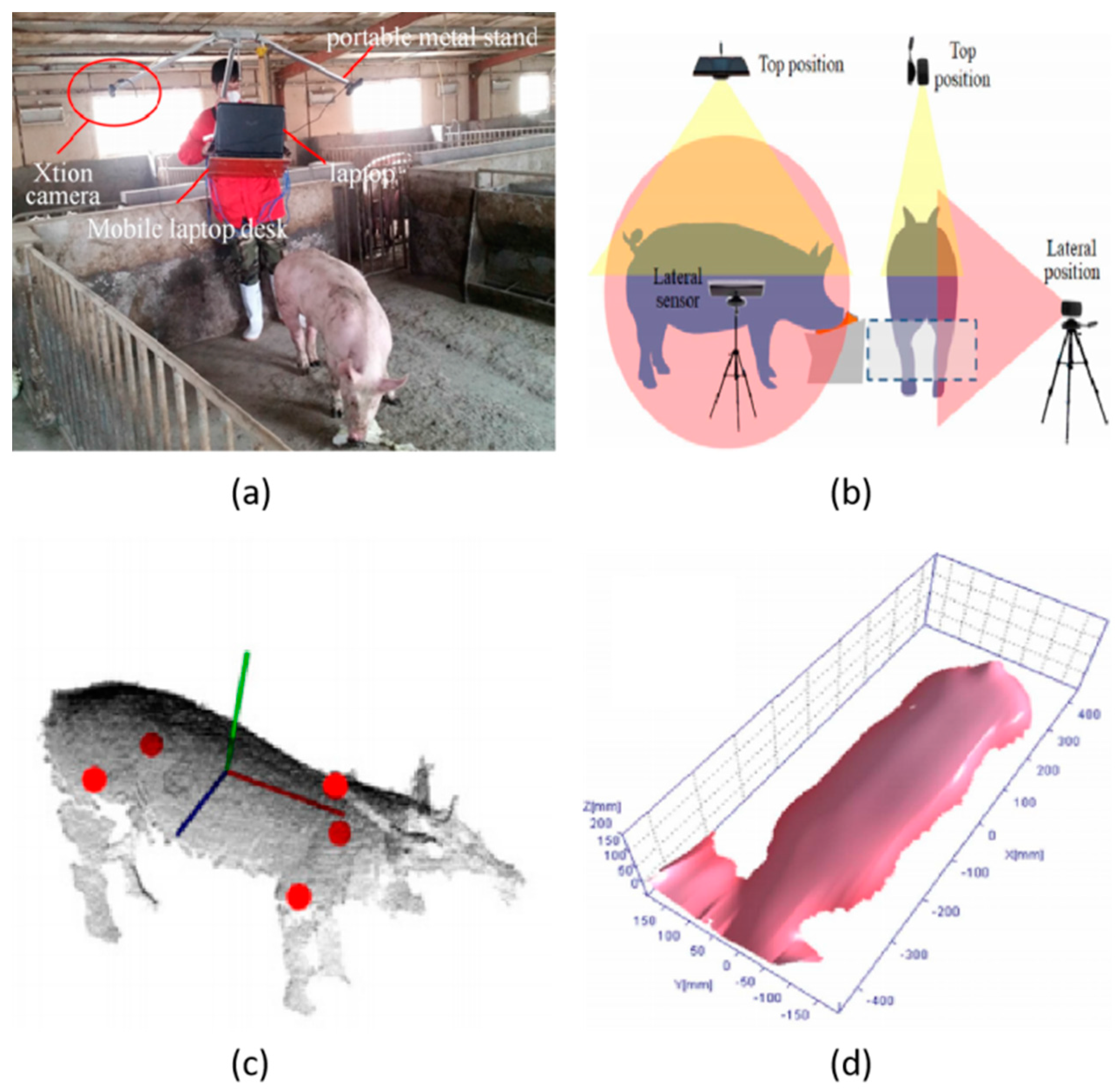

2.3. Depth-Camera-Based 3D Reconstruction

2.4. Summary of 3D Reconstruction Technology

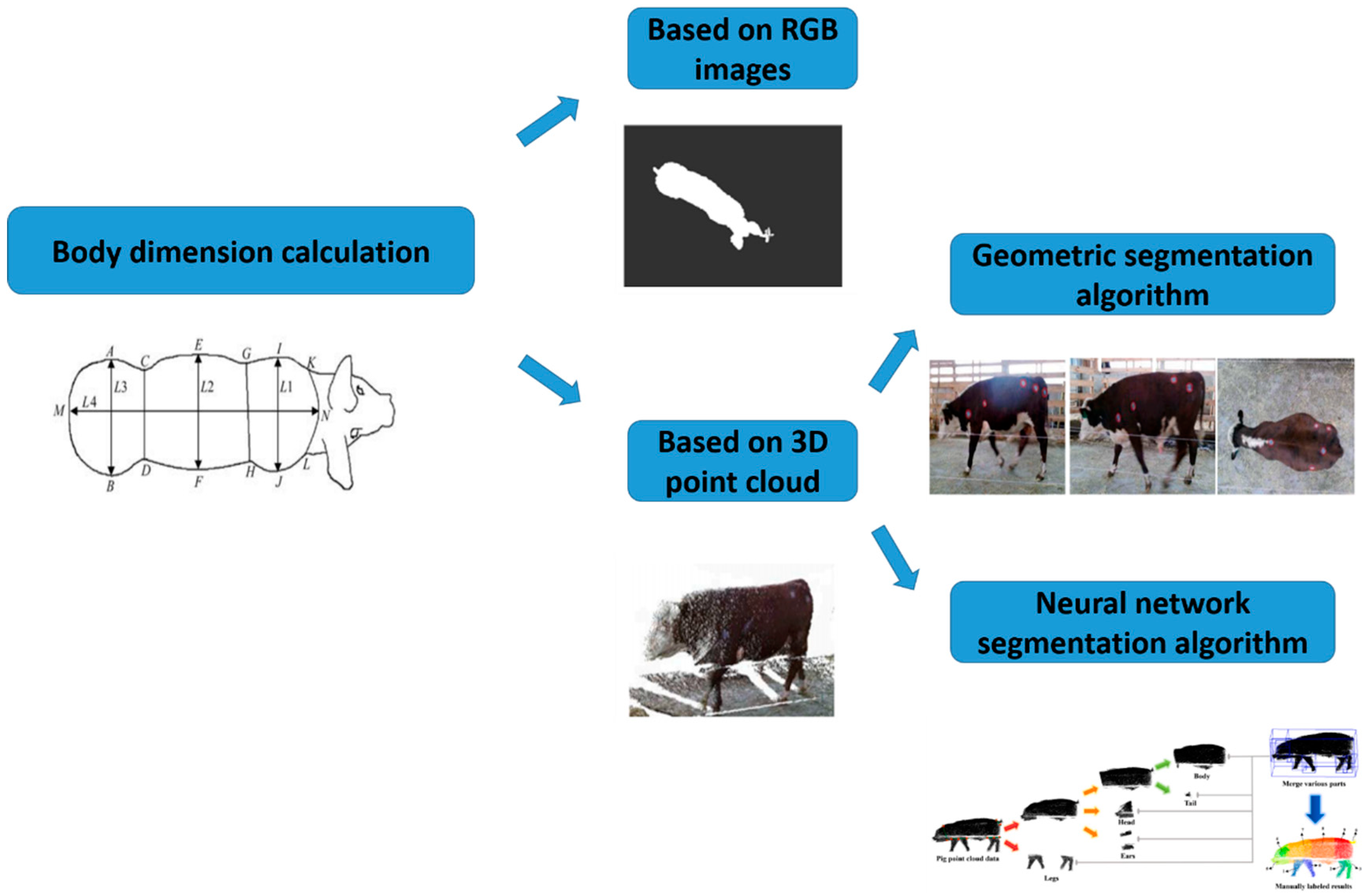

3. Computer Vision-Based Livestock Body Dimension Acquisition Technology

3.1. RGB Image-Based Body Dimension Measurement Method

3.2. 3D Point Cloud Body Dimension Measurement Method

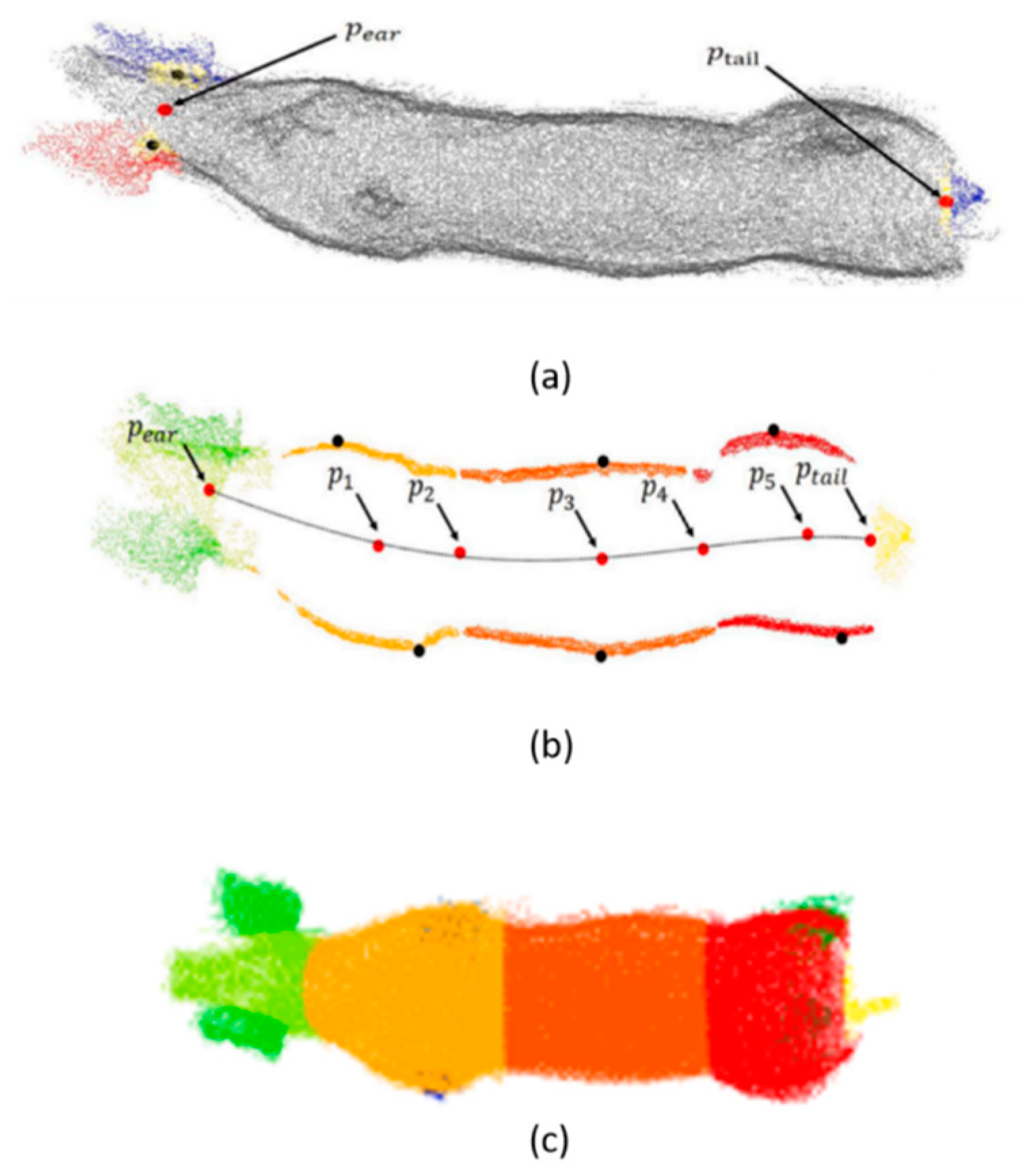

3.2.1. Geometry-Based 3D Point Cloud Segmentation

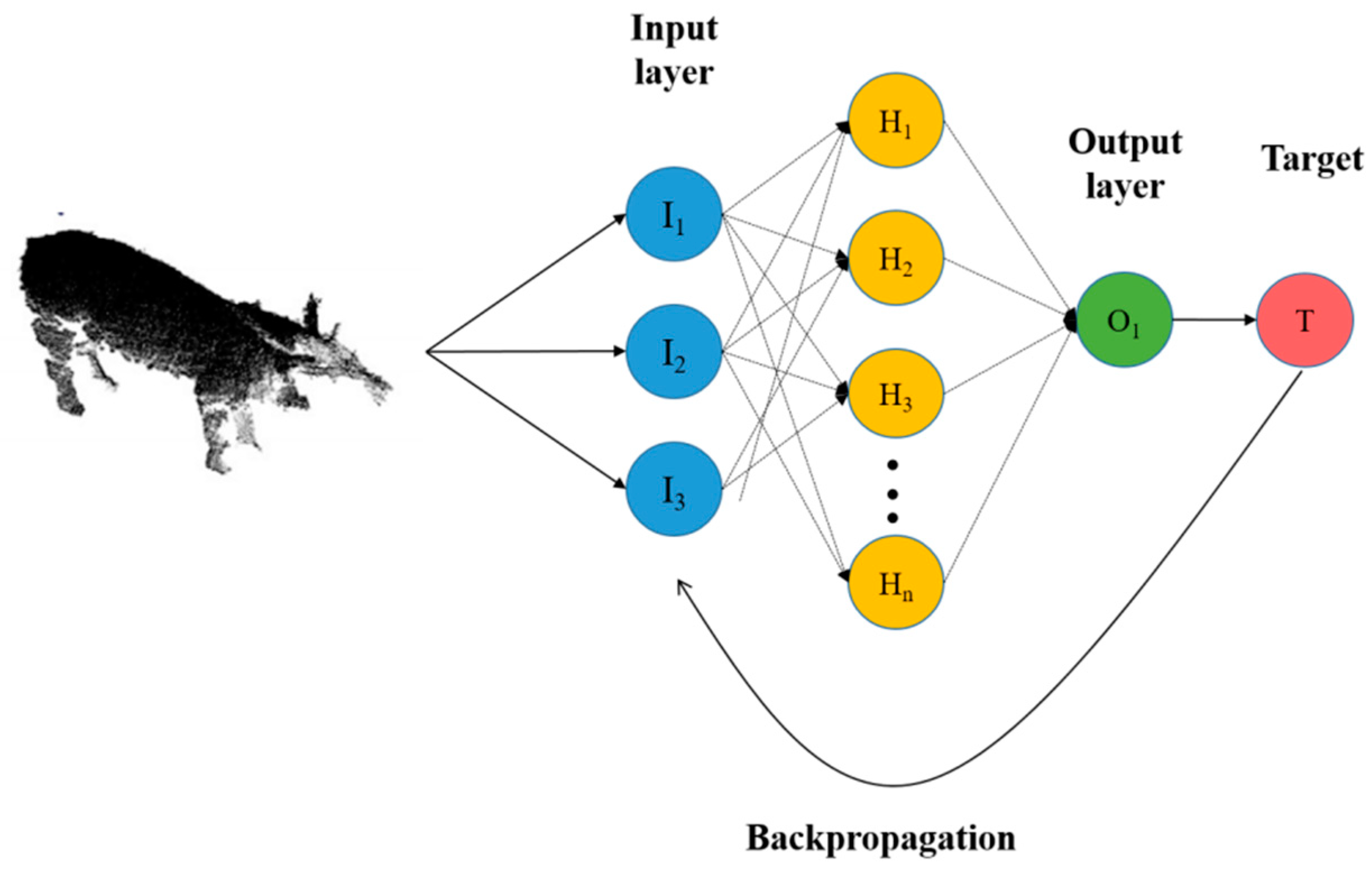

3.2.2. Neural Network-Based 3D Point Cloud Segmentation

3.3. Summary of Body Dimension Acquisition Technology

4. Computer Vision-Based Livestock Weight Estimation Technology

4.1. Linear Regression Weight Estimation-Based Body Weight Measurement

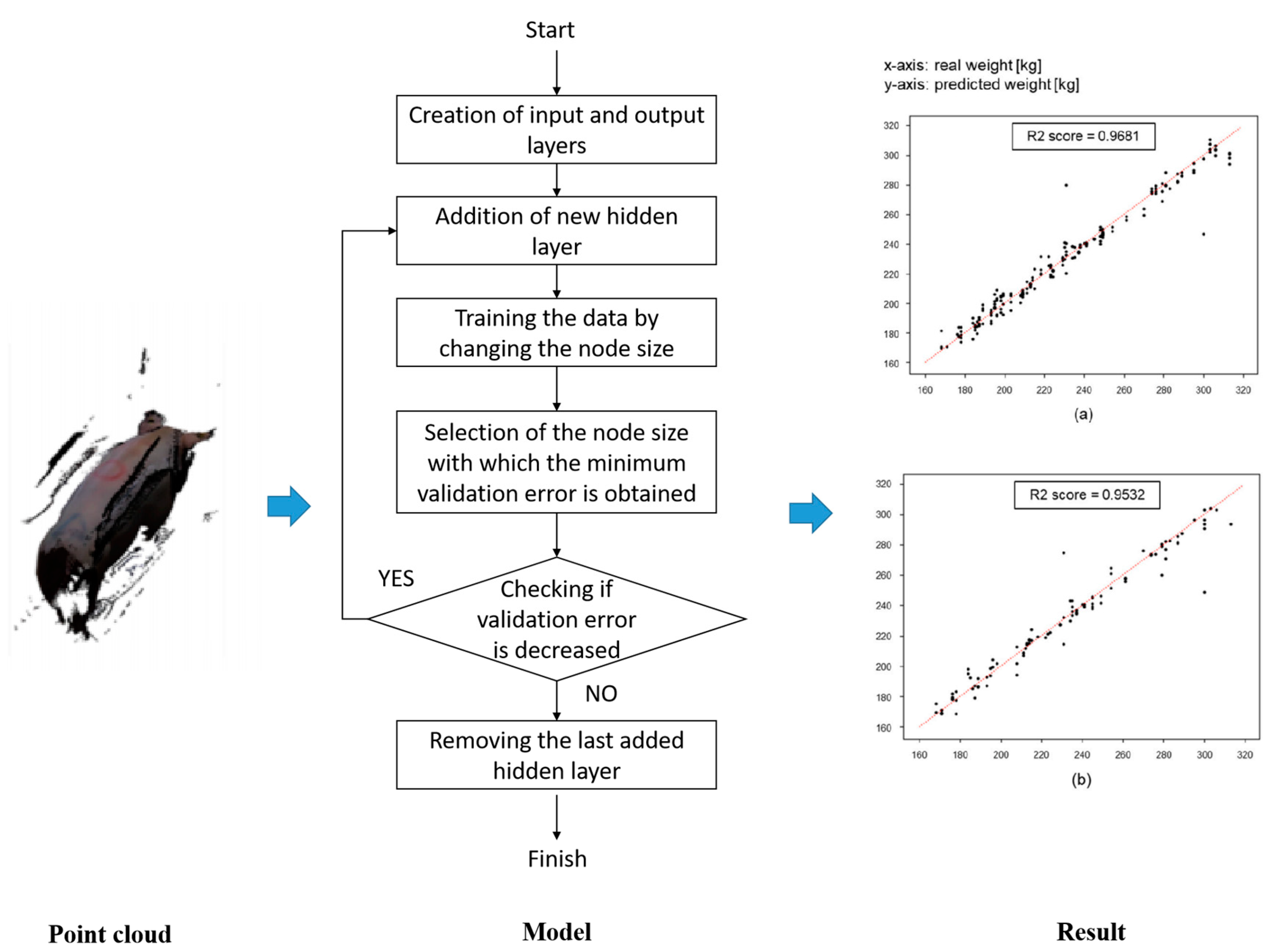

4.2. Neural Network-Based Visual Weight Estimation

4.3. Summary of Weight Estimation Technology

5. Challenges and Trends

5.1. The Main Challenges

- (1) Lack of sufficiently accurate 3D reconstruction models: Although recent studies have successfully used 3D cameras for point cloud acquisition, non-contact point cloud acquisition of livestock has mostly been carried out in ideal laboratory settings. Actual production environments are more complex, and the resulting point cloud data contain more interference and noise, so common point cloud registration algorithms may not perform optimally. Moreover, variations in lighting in farm environments can result in incomplete point clouds, limiting accuracy.

- (2) Absence of high-quality, publicly available livestock point cloud datasets: Large datasets are crucial for training the machine learning and deep learning models used in phenotypic data acquisition and weight estimation. However, owing to ownership and confidentiality concerns, farms and commercial entities seldom release the data they collect. Existing datasets may also differ in format, structure, or type, and therefore require standardization to ensure that data are comparable and usable.

- (3) Inefficient point cloud acquisition methods: In many existing studies, the commonly employed channel-type, suspended, or handheld acquisition devices yield only a single point cloud capture per animal. In addition, when 3D cameras are used, the animal must remain still for extended periods during capture; otherwise, non-rigid deformation can severely affect reconstruction and cause artifacts such as “ghosting”. These inefficiencies limit the scalability of such methods in large-scale farming.

- (4) Low cost-effectiveness: Although acquisition equipment continues to evolve, high-quality 3D cameras remain relatively expensive, which can be a barrier for some farms and breeding facilities. Furthermore, processing non-contact data often requires complex deep learning models, whose training and fine-tuning may require expensive hardware, further reducing cost-effectiveness.
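Challenge (1) notes that point clouds captured in production environments carry more interference and noise. As a rough illustration of the kind of preprocessing often applied before registration, the following is a minimal NumPy sketch of statistical outlier removal followed by voxel grid downsampling. It is not the pipeline of any study cited in this review; the function names, the neighbour count `k`, the `std_ratio` threshold, and the voxel size are illustrative assumptions, and the brute-force neighbour search is only practical for small clouds.

```python
import numpy as np


def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Drop points whose mean distance to their k nearest neighbours is unusually large.

    points: (N, 3) array of XYZ coordinates. k and std_ratio are illustrative defaults.
    """
    # Brute-force pairwise distances, O(N^2) memory: fine for a sketch, not for full scans.
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    # After sorting each row, column 0 is the zero distance of a point to itself.
    knn = np.sort(dist, axis=1)[:, 1:k + 1]
    mean_knn = knn.mean(axis=1)
    # Keep points whose mean neighbour distance is within std_ratio standard deviations.
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return points[mean_knn <= threshold]


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse, counts = np.unique(
        keys, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.reshape(-1)  # keep a flat index array across NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    return sums / counts[:, None]


# Synthetic example (not real livestock data): a dense blob plus a few stray points.
rng = np.random.default_rng(0)
blob = rng.uniform(0.0, 1.0, size=(300, 3))
strays = np.array([[10.0, 0.0, 0.0], [0.0, 10.0, 0.0], [0.0, 0.0, 10.0]])
cloud = np.vstack([blob, strays])

cleaned = remove_statistical_outliers(cloud, k=8, std_ratio=2.0)
reduced = voxel_downsample(cleaned, voxel_size=0.1)
print(cloud.shape, cleaned.shape, reduced.shape)
```

Filtering before downsampling keeps isolated noise points from surviving as voxel centroids; on real barn data both thresholds would need tuning against the sensor and scene.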

5.2. Future Development

- (1) Enhancement of the accuracy of neural network models: Future work will rely increasingly on machine learning and neural network technologies to process and analyze large volumes of non-contact data. Improving the accuracy and usability of these models can significantly enhance efficiency and support scientifically informed decision-making.

- (2) Data integration and standardization: Sharing and comparing livestock point cloud data across breeds and batches will require greater effort in data integration and standardization. This will aid in establishing a global livestock phenotypic database and foster collaboration and development in precision livestock farming (PLF).

- (3) Efficient data collection: Future data collection is expected to use smaller, lighter, lower-power, and higher-resolution 3D cameras. Collecting data from multiple animals simultaneously is another possible direction for improving efficiency.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zheleuova, Z.S.; Uzakov, Y.M.; Shingisov, A.U.; Alibekov, R.S.; Khamitova, B.M. Development of halal cooked smoked beef and turkey sausage using a combined plant extracts. J. Food Process. Preserv. 2020, 45, e15028.

- Cheng, M.; Yuan, H.B.; Wang, Q.F.; Cai, Z.J.; Liu, Y.Q.; Zhang, Y.J. Application of deep learning in sheep behaviors recognition and influence analysis of training data characteristics on the recognition effect. Comput. Electron. Agric. 2022, 198, 107010.

- Zhang, X.W.; Xuan, C.Z.; Ma, Y.H.; Su, H.; Zhang, M.Q. Biometric facial identification using attention module optimized YOLOv4 for sheep. Comput. Electron. Agric. 2022, 203, 107452.

- Weng, Z.; Fan, L.Z.; Zhang, Y.; Zheng, Z.Q.; Gong, C.L.; Wei, Z.Y. Facial Recognition of Dairy Cattle Based on Improved Convolutional Neural Network. IEICE Trans. Inf. Syst. 2022, 105, 1234–1238.

- Wang, Z.; Wang, S.; Wang, C.; Zhang, Y.; Zong, Z.; Wang, H.; Su, L.; Du, Y. A Non-Contact Cow Estrus Monitoring Method Based on the Thermal Infrared Images of Cows. Agriculture 2023, 13, 385.

- Wu, D.; Han, M.; Song, H.; Song, L.; Duan, Y. Monitoring the respiratory behavior of multiple cows based on computer vision and deep learning. J. Dairy Sci. 2023, 106, 2963–2979.

- Zhao, J.; Li, A.; Jin, X.; Pan, L. Technologies in individual animal identification and meat products traceability. Biotechnol. Biotechnol. Equip. 2020, 34, 48–57.

- Entwisle, B. Population responses to environmental change: Looking back, looking forward. Popul. Environ. 2021, 42, 431–444.

- El Sabry, M.; Romeih, Z.; Stino, F.; Khosht, A.; Aggrey, S. Water scarcity can be a critical limitation for the poultry industry. Trop. Anim. Health Prod. 2023, 55, 215.

- Zhang, H.; Li, J.; Quan, T. Strengthening or Weakening: The Impact of an Aging Rural Workforce on Agricultural Economic Resilience in China. Agriculture 2023, 13, 1436.

- Akinyemi, B.E.; Vigors, B.; Turner, S.P.; Akaichi, F.; Madonna, B.; Johnson, A.K.; Pairis-Garcia, M.D.; Rozeboom Dale, W.; Steibel, J.P.; Thompson, D.P.; et al. Precision livestock farming: A qualitative exploration of swine industry stakeholders. Front. Anim. Sci. 2023, 4, 2673–6225.

- Park, M.; Britton, D.; Daley, W.; McMurray, G.; Navaei, M.; Samoylov, A.; Usher, C.; Xu, J. Artificial intelligence, sensors, robots, and transportation systems drive an innovative future for poultry broiler and breeder management. Anim. Front. 2022, 12, 40–48.

- Tolybek, S.; Rzabayev, T.; Assanbayev, S.; Serikbay, R.; Bazargaliyev, A.; Rzabayev, K.S. Linebreeding as a system of stock breeding to improve the productive qualities of horses of the Kushum breed. Reprod. Domest. Anim. 2022, 57, 1584–1592.

- Palma-Molina, P.; Hennessy, T.; O’Connor, A.; Onakuse, S.; O’Leary, N.; Moran, B.; Shalloo, L. Factors associated with intensity of technology adoption and with the adoption of 4 clusters of precision livestock farming technologies in Irish pasture-based dairy systems. J. Dairy Sci. 2023, 106, 2498–2509.

- Schofield, C.P.; Marchant, J.A.; White, R.P.; Brandl, N.; Wilson, M. Monitoring pig growth using a prototype imaging system. J. Agric. Eng. Res. 1999, 72, 205–210.

- Enevoldsen, C.; Kristensen, T. Estimation of Body Weight from Body Size Measurements and Body Condition Scores in Dairy Cows. J. Dairy Sci. 1997, 80, 1988–1995.

- Zulkifli, I. Review of human-animal interactions and their impact on animal productivity and welfare. J. Anim. Sci. Biotechnol. 2013, 4, 25.

- Sun, D.; Webb, L.; van der Tol, P.P.J.; van Reenen, K. A Systematic Review of Automatic Health Monitoring in Calves: Glimpsing the Future from Current Practice. Front. Vet. Sci. 2021, 8, 761468.

- Ren, K.; Karlsson, J.; Liuska, M.; Hartikainen, M.; Hansen, I.; Jørgensen, G. A sensor-fusion-system for tracking sheep location and behaviour. Int. J. Distrib. Sens. Netw. 2020, 16, 155014772092177.

- Besteiro, R.; Arango, T.; Ortega, J.; Fernández, M.D.; Rodríguez, M.R. Long-Term Measurement of Piglet Activity Using Passive Infrared Detectors. Animals 2021, 11, 1607.

- Okayama, T.; Kubota, Y.; Toyoda, A.; Kohari, D.; Noguchi, G. Estimating body weight of pigs from posture analysis using a depth camera. Anim. Sci. J. 2021, 92, e13626.

- Yang, G.; Li, R.; Zhang, S.; Wen, Y.; Xu, X.; Song, H. Extracting cow point clouds from multi-view RGB images with an improved YOLACT++ instance segmentation. Expert Syst. Appl. 2023, 230, 120730.

- González-Baldizón, Y.; Pérez-Patricio, M.; Camas-Anzueto, J.L.; Rodríguez-Elías, O.M.; Escobar-Gómez, E.N.; Vazquez-Delgado, H.D.; Guzman-Rabasa, J.A.; Fragoso-Mandujano, J.A. Lamb Behaviors Analysis Using a Predictive CNN Model and a Single Camera. Appl. Sci. 2022, 12, 4712.

- Bhole, A.; Udmale, S.; Falzon, O.; Azzopardi, G. CORF3D contour maps with application to Holstein cattle recognition from RGB and thermal images. Expert Syst. Appl. 2022, 192, 116354.

- Jia, N.; Kootstra, G.; Koerkamp, P.G.; Shi, Z.; Du, S. Segmentation of body parts of cows in RGBdepth images based on template matching. Comput. Electron. Agric. 2021, 180, 105897.

- Rosell-Polo, J.R.; Cheein, F.A.; Gregorio, E.; Andujar, D.; Puigdomenech, L.; Masip, J.; Escola, A. Advances in structured light sensors applications in precision agriculture and livestock farming. Adv. Agron. 2015, 133, 71–112.

- Simianer, H. Decision making in livestock conservation. Ecol. Econ. 2005, 53, 559–572.

- Li, X.; Zhang, H.; Wang, M. Analysis of Factors Influencing the Decision-Making Behavior of Beef Cattle Farmers: An Empirical Analysis Based on Logit-ISM Model. Animals 2022, 12, 3470.

- Wu, J.H.; Tillett, R.; McFarlane, N.; Ju, X.Y.; Siebert, J.P.; Schofield, P. Extracting the 3D shape of live pigs using stereo photogrammetry. Comput. Electron. Agric. 2004, 44, 203–222.

- Huang, L.; Li, S.; Zhu, A.; Fan, X.; Zhang, C.; Wang, H. Non-Contact Body Measurement for Qinchuan Cattle with LiDAR Sensor. Sensors 2018, 18, 3014.

- Le Cozler, Y.; Allain, C.; Caillot, A.; Delouard, J.M.; Delattre, L.; Luginbuhl, T.; Faverdin, P. High-precision scanning system for complete 3D cow body shape imaging and analysis of morphological traits. Comput. Electron. Agric. 2019, 157, 447–453.

- Lu, M.; Norton, T.; Youssef, A.; Radojkovic, N.; Fernández, A.P.; Berckmans, D. Extracting body surface dimensions from top-view images of pigs. Int. J. Agric. Biol. Eng. 2018, 11, 182–191.

- Khojastehkey, M.; Aslaminejad, A.A.; Shariati, M.M.; Dianat, R. Body size estimation of new born lambs using image processing and its effect on the genetic gain of a simulated population. J. Appl. Anim. Res. 2016, 44, 326–330.

- Menesatti, P.; Costa, C.; Antonucci, F.; Steri, R.; Pallottino, F.; Catillo, G. A low-cost stereovision system to estimate size and weight of live sheep. Comput. Electron. Agric. 2014, 103, 33–38.

- Lee, J.; Jin, L.; Park, D.; Chung, Y. Automatic recognition of aggressive behavior in pigs using a kinect depth sensor. Sensors 2016, 16, 631.

- Dufaux, F. Grand Challenges in Image Processing. Front. Signal Process. 2021, 1, 675547.

- Kashiha, M.A.; Bahr, C.; Ott, S.; Moons, C.P.; Niewold, T.A.; Tuyttens, F.; Berckmans, D. Automatic monitoring of pig locomotion using image analysis. Livest. Sci. 2014, 159, 141–148.

- Zhong, Z.; Wang, M.; Gao, W.; Zheng, L. A novel multisource pig-body multifeature fusion method based on Gabor features. Multidimens. Syst. Signal Process. 2020, 32, 381–404.

- Zhao, L.; Zhang, H.; Mbachu, J. Multi-Sensor Data Fusion for 3D Reconstruction of Complex Structures: A Case Study on a Real High Formwork Project. Remote Sens. 2023, 15, 1264.

- Thapar, G.; Biswas, T.K.; Bhushan, B.; Naskar, S.; Kumar, A.; Dandapat, P.; Rokhade, J. Accurate estimation of body weight of pigs through smartphone image measurement app. Smart Agric. Technol. 2023, 4, 100194.

- Pezzuolo, A.; Milani, V.; Zhu, D.; Guo, H.; Guercini, S.; Marinello, F. On-Barn Pig Weight Estimation Based on Body Measurements by Structure-from-Motion (SfM). Sensors 2018, 18, 3603.

- Los, S.; Mücher, C.A.; Kramer, H.; Franke, G.J.; Kamphuis, C. Estimating body dimensions and weight of cattle on pasture with 3D models from UAV imagery. Smart Agric. Technol. 2023, 4, 100167.

- Kongsro, J. Estimation of pig weight using a Microsoft Kinect prototype imaging system. Comput. Electron. Agric. 2014, 109, 32–35.

- Jiao, L.; Dong, D.; Zhao, X.; Han, P. Compensation method for the influence of angle of view on animal temperature measurement using thermal imaging camera combined with depth image. J. Therm. Biol. 2016, 62, 15–19.

- Kulikov, V.A.; Khotskin, N.V.; Nikitin, S.V.; Lankin, V.S.; Kulikov, A.V.; Trapezov, O.V. Application of 3-D imaging sensor for tracking minipigs in the open field test. J. Neurosci. Methods 2014, 235, 219–225.

- Pezzuolo, A.; Guarino, M.; Sartori, L.; Gonzalez, L.A.; Marinello, F. On-barn pig weight estimation based on body measurements by a Kinect v1 depth camera. Comput. Electron. Agric. 2018, 148, 29–36.

- Pezzuolo, A.; Guarino, M.; Sartori, L.; Marinello, F. A Feasibility Study on the Use of a Structured Light Depth-Camera for 3D Body Measurements of Dairy Cows in Free-Stall Barns. Sensors 2018, 18, 673.

- Spoliansky, R.; Edan, Y.; Parmet, Y.; Halachmi, I. Development of automatic body condition scoring using a low-cost 3-dimensional Kinect camera. J. Dairy Sci. 2016, 99, 7714–7723.

- Wang, K.; Guo, H.; Ma, Q.; Su, W.; Chen, L.; Zhu, D. A portable and automatic Xtion-based measurement system for pig body size. Comput. Electron. Agric. 2018, 148, 291–298.

- Pezzuolo, A.; Giora, D.; Guo, H.; Ma, Q.; Guercini, S.; Marinello, F. A comparison of low-cost techniques for 3D animal body measurement in livestock buildings. IOP Conf. Ser. Earth Environ. Sci. 2019, 275, 012015.

- Li, G.; Liu, X.; Ma, Y.; Wang, B.; Zheng, L.; Wang, M. Body size measurement and live body weight estimation for pigs based on back surface point clouds. Biosyst. Eng. 2022, 218, 10–22.

- Aparecida de Moraes Weber, V.; de Lima Weber, F.; da Costa Gomes, R.; da Silva Oliveira, A., Jr.; Vilharva Menezes, G.; Gomes Pinto de Abreu, U.; de Souza Belete, N.A.; Pistori, H. Prediction of Girolando cattle weight by means of body measurements extracted from images. Rev. Bras. Zootec. 2020, 49, e20190110.

- Shi, C.; Zhang, J.; Teng, G. Mobile measuring system based on LabVIEW for pig body components estimation in a large-scale farm. Comput. Electron. Agric. 2019, 156, 399–405.

- Yongsheng, S.; Lulu, A.; Gang, L.; Baocheng, L. Ideal Posture Detection and Body Size Measurement of Pig Based on Kinect. Trans. Chin. Soc. Agric. Mach. 2019, 50, 58–65.

- Shuai, S.; Ling, Y.; Shihao, L.; Haojie, Z.; Xuhong, T.; Caixing, L.; Aidong, S.; Hanxing, L. Research on 3D surface reconstruction and body size measurement of pigs based on multi-view RGB-D cameras. Comput. Electron. Agric. 2020, 175.

- Hao, H.; Jincheng, Y.; Ling, Y.; Gengyuan, C.; Sumin, Z.; Huan, Z. An improved PointNet++ point cloud segmentation model applied to automatic measurement method of pig body size. Comput. Electron. Agric. 2023, 205, 107560.

- Li, J.; Li, Q.; Ma, W.; Xue, X.; Zhao, C.; Tulpan, D.; Yang, S.X. Key Region Extraction and Body Dimension Measurement of Beef Cattle Using 3D Point Clouds. Agriculture 2022, 12, 1012.

- Chen, S.; Zhuo, G.T. An approach of pig weight estimation using binocular stereo system based on LabVIEW. Comput. Electron. Agric. 2016, 129, 37–43.

- Tasdemir, S.; Urkmez, A.; Inal, S. Determination of body measurements on the Holstein cows using digital image analysis and estimation of live weight with regression analysis. Comput. Electron. Agric. 2011, 76, 189–197.

- Zhang, R.; Wu, Y.; Jin, W.; Meng, X. Deep-Learning-Based Point Cloud Semantic Segmentation: A Survey. Electronics 2023, 12, 3642.

- He, H.; Qiao, Y.; Li, X.; Chen, C.; Zhang, X. Automatic weight measurement of pigs based on 3D images and regression network. Comput. Electron. Agric. 2021, 187, 106299.

- Grilli, E.M.; Fabio, F.R. A review of point clouds segmentation and classification algorithms. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 339–344.

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Ruchay, A.; Kober, V.; Dorofeev, K.; Kolpakov, V.; Miroshnikov, S. Accurate body measurement of live cattle using three depth cameras and non-rigid 3-D shape recovery. Comput. Electron. Agric. 2020, 179, 105821.

- Wang, K.; Zhu, D.; Guo, H.; Ma, Q.; Su, W.; Su, Y. Automated calculation of heart girth measurement in pigs using body surface point clouds. Comput. Electron. Agric. 2019, 156, 565–573.

- Guo, H.; Li, Z.B.; Ma, Q.; Zhu, D.H.; Su, W.; Wang, K.; Marinello, F. A bilateral symmetry based pose normalization framework applied to livestock body measurement in point clouds. Comput. Electron. Agric. 2019, 160, 59–70.

- Du, R.; Ma, Z.; Xie, P.; Cen, H.; He, Y. PST: Plant Segmentation Transformer Enhanced Phenotyping of MLS Oilseed Rape Point Cloud. ISPRS J. Photogramm. Remote Sens. 2023, 195, 380–392.

- Guo, R.; Xie, J.; Zhu, J.; Cheng, R.; Zhang, Y.; Zhang, X.; Gong, X.; Zhang, R.; Wang, H.; Meng, F. Improved 3D point cloud segmentation for accurate phenotypic analysis of cabbage plants using deep learning and clustering algorithms. Comput. Electron. Agric. 2023, 211, 108014.

- Li, Y.; Wen, W.; Miao, T.; Wu, S.; Yu, Z.; Wang, X.; Guo, X.; Zhao, C. Automatic organ-level point cloud segmentation of maize shoots by integrating high-throughput data acquisition and deep learning. Comput. Electron. Agric. 2022, 193, 106702.

- Le Cozler, Y.; Allain, C.; Xavier, C.; Depuille, L.; Caillot, A.; Delouard, J.M.; Delattre, L.; Luginbuhl, T.; Faverdin, P. Volume and surface area of Holstein dairy cows calculated from complete 3D shapes acquired using a high-precision scanning system: Interest for body weight estimation. Comput. Electron. Agric. 2019, 165, 104977.

- Le Cozler, Y.; Brachet, E.; Bourguignon, L.; Delattre, L.; Luginbuhl, T.; Faverdin, P. Three-Dimensional (3D) Imaging Technology to Monitor Growth and Development of Holstein Heifers and Estimate Body Weight, a Preliminary Study. Sensors 2022, 22, 4635.

- Yan, Q.; Ding, L.; Wei, H.; Wang, X.; Jiang, C.; Degen, A. Body weight estimation of yaks using body measurements from image analysis. Measurement 2019, 140, 76–80.

- Zhang, J.; Zhuang, Y.; Ji, H.; Teng, G. Pig Weight and Body Size Estimation Using a Multiple Output Regression Convolutional Neural Network: A Fast and Fully Automatic Method. Sensors 2021, 21, 3218.

- Nguyen, A.H.; Holt, J.P.; Knauer, M.T.; Abner, V.A.; Lobaton, E.J.; Young, S.N. Towards rapid weight assessment of finishing pigs using a handheld, mobile RGB-D camera. Biosyst. Eng. 2023, 226, 155–168.

- Zhang, Z.; Zhou, H.; Wang, S.; Xu, C.; Lv, Y. Design and Research of Low-Cost and Self-Adaptive Terrestrial Laser Scanning for Indoor Measurement Based on Adaptive Indoor Measurement Scanning Strategy and Structural Characteristics Point Cloud Segmentation. Adv. Civ. Eng. 2022, 2022, 5681771.

- Li, J.; Ma, W.; Bai, Q.; Tulpan, D.; Gong, M.; Sun, Y.; Xue, X.; Zhao, C.; Li, Q. A posture-based measurement adjustment method for improving the accuracy of beef cattle body size measurement based on point cloud data. Biosyst. Eng. 2023, 230, 171–190.

- Kiyoun, K.; Ahram, P.; Lee, H.; Mun, D. Deep learning-based weight estimation using a fast-reconstructed mesh model from the point cloud of a pig. Comput. Electron. Agric. 2023, 210, 107903.

- He, C.; Qiao, Y.; Mao, R.; Li, M.; Wang, M. Enhanced LiteHRNet based sheep weight estimation using RGB-D images. Comput. Electron. Agric. 2023, 206, 107667.

- Okinda, C.; Liu, L.; Zhang, G.; Shen, M. Swine live weight estimation by adaptive neuro-fuzzy inference system. Indian J. Anim. Res. 2018, 52, 923–928.

- Dang, C.; Choi, T.; Lee, S.; Lee, S.; Alam, M.; Park, M.; Han, S.; Lee, J.; Hoang, D. Machine Learning-Based Live Weight Estimation for Hanwoo Cow. Sustainability 2022, 14, 12661.

- Buayai, P.; Piewthongngam, K.; Leung, C.K.; Saikaew, K.R. Semi-Automatic Pig Weight Estimation Using Digital Image Analysis. Appl. Eng. Agric. 2019, 35, 521–534.

- Du, A.; Guo, H.; Lu, J.; Su, Y.; Ma, Q.; Ruchay, A.; Marinello, F.; Pezzuolo, A. Automatic livestock body measurement based on keypoint detection with multiple depth cameras. Comput. Electron. Agric. 2022, 198, 107059.

| Reference | Breed | Device | Method | Number of Animals | Year |
|---|---|---|---|---|---|
| [29] | Live pigs | RGB camera | Binocular stereo vision technology | 32 | 2004 |
| [30] | Live cows | LiDAR | Statistical outliers and voxel grid filtering methods | 3 | 2018 |
| [31] | Live cows | LiDAR | Fusion | 30 | 2019 |
| [32] | Live pigs | 3D camera | Point cloud registration | 20 | 2018 |
| [33] | Newborn lambs | RGB camera | Digital image processing | 158 | 2015 |
| [34] | Live sheep | RGB camera | Binocular stereo vision technology | 27 | 2014 |
| [35] | Live cows | LiDAR | Image fusion | 25 | 2023 |
| [36] | Live pigs | 3D camera | Point cloud registration | 78 | 2018 |
| [37] | Live cows | 3D camera | Point cloud registration | 101 | 2016 |
| [38] | Live pigs | Visible image and infrared image sensor | Multi-source image fusion | N/A | 2020 |

| Device | Measurement Range | Measurement Target | Advantage | Disadvantage |
|---|---|---|---|---|
| 3D camera | From several centimeters to several meters | Acquires spatial coordinate information of an object | Provides high-resolution depth information | Limited measurement range, susceptible to lighting and environmental conditions, higher cost, and relatively intricate 3D reconstruction |
| Laser scanning | From several meters to several kilometers | Generates high-precision geometric surface point clouds of objects | Obtains highly accurate depth information of the measured object, with relatively minor susceptibility to lighting effects | Lack of color information, lower resolution, and relatively higher cost |
| RGB camera | From tens of centimeters to several meters | Uses electronic sensors to convert optical images into electronic data | Lower cost, well-developed application scenarios | Lack of depth information and susceptible to lighting and environmental conditions |

| Device Type | Breed | Data Type | Method | Error | Reference | Year |
|---|---|---|---|---|---|---|
| RGB camera | Live pigs | RGB images | Area method | Less than 2.94% | [32] | 2018 |
| RGB camera | Live pigs | RGB images | Image processing | Less than 10.2% | [37] | 2014 |
| 3D camera | Live cows | Point cloud | Geometric segmentation | Less than 3% | [52] | 2020 |
| 3D camera | Live pigs | Video | Geometric segmentation | Less than 4.5% | [53] | 2019 |
| 3D camera | Live pigs | Point cloud | Geometric segmentation | Less than 7.87% | [54] | 2019 |
| 3D camera | Live pigs | Point cloud | Geometric segmentation | Less than 4.67% | [55] | 2020 |
| 3D camera | Live pigs | Point cloud | Neural network segmentation | Less than 5.26% | [56] | 2023 |
| 3D camera | Live cows | Point cloud | Geometric segmentation | Less than 3.47% | [57] | 2023 |

| Methods | Advantage | Disadvantage |
|---|---|---|
| RGB image | Lower cost, more mature image processing techniques, and simpler algorithms | Lack of depth information, restricted by viewing angle |
| Geometric segmentation methods in 3D imaging | Requires minimal annotated data, high computational efficiency, and superior segmentation precision | Highly dependent on geometric accuracy, susceptible to individual animal variations |
| Neural network methods in 3D imaging | Adapts to intricate information and structures, learns crucial insights | Large data requirements, limited public datasets, and relatively high computing resource demands |

| Breed | Device | Data Type | Method | Error | Reference | Year |
|---|---|---|---|---|---|---|
| Live pigs | 3D camera | Point cloud | Linear regression | 4.87% | [21] | 2021 |
| Live pigs | 3D camera | Point cloud | Linear regression | 0.48 kg | [41] | 2018 |
| Live pigs | 3D camera | Point cloud | Linear regression | 2.961 kg | [51] | 2022 |
| Live pigs | 3D camera | 3D images and 2D images | Neural network | MAE = 6.366 | [61] | 2021 |
| Live cows | 3D scanning device | 3D images | Linear regression | 20–30 kg | [70] | 2019 |
| Live cows | 3D scanning device | 3D images | Linear regression | 9.7% | [71] | 2022 |
| Live cows | RGB camera, infrared rangefinder | RGB images | Linear regression | LBW = 86.3–97.2% | [72] | 2019 |
| Live pigs | 3D camera | 3D images and 2D images | Neural network | 1.16 kg | [73] | 2021 |
| Live pigs | 3D camera | Point cloud | Neural network | MAE = 9.25, RMSE = 12.3 kg | [74] | 2023 |

| Methods | Advantage | Disadvantage |
|---|---|---|
| Weight estimation based on body dimensions | Simple, convenient, and rapid approach, supported by existing research foundations | Relatively lower precision; heavily reliant on the accuracy of 3D reconstruction |
| Weight estimation based on neural networks | Strong generalization across diverse livestock batches and types, higher accuracy | Larger data requirements; higher computational resource demands |
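The weight estimation results tabulated above report error in several different forms (percentages, kilograms, MAE, RMSE), which makes direct comparison difficult. As a hedged illustration of how these quantities relate, the sketch below fits an ordinary least-squares linear model from body dimensions to weight and reports all three metrics for the same predictions. The data are synthetic and invented for the example, the two predictors (body length and heart girth) are assumed for illustration, and the function names are hypothetical; this is not the model of any cited study.

```python
import numpy as np


def fit_linear_weight_model(dimensions, weights):
    """Least-squares fit of weight = b0 + b1*d1 + ... from body dimensions."""
    X = np.column_stack([np.ones(len(dimensions)), dimensions])
    coef, *_ = np.linalg.lstsq(X, weights, rcond=None)
    return coef  # coef[0] is the intercept


def predict_weight(coef, dimensions):
    """Apply fitted coefficients to an (N, D) array of body dimensions."""
    X = np.column_stack([np.ones(len(dimensions)), dimensions])
    return X @ coef


def error_metrics(y_true, y_pred):
    """Return MAE and RMSE in the unit of y_true, plus mean absolute percentage error."""
    err = y_pred - y_true
    return {
        "MAE": float(np.mean(np.abs(err))),
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        "MAPE_percent": float(100.0 * np.mean(np.abs(err) / y_true)),
    }


# Synthetic data only: body length and heart girth in metres, weight in kilograms.
rng = np.random.default_rng(42)
length = rng.uniform(1.0, 1.5, size=60)
girth = rng.uniform(1.0, 1.4, size=60)
dims = np.column_stack([length, girth])
weight = -150.0 + 100.0 * length + 120.0 * girth + rng.normal(0.0, 3.0, size=60)

# Fit on the first 40 animals, evaluate on the remaining 20.
coef = fit_linear_weight_model(dims[:40], weight[:40])
metrics = error_metrics(weight[40:], predict_weight(coef, dims[40:]))
print(coef, metrics)
```

Reporting MAE, RMSE, and a percentage error together on a held-out set is one way to make results from different studies easier to compare; RMSE is never smaller than MAE on the same predictions because it penalizes large errors more heavily.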

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Ma, W.; Qi, X.; Sun, Y.; Gao, R.; Ding, L.; Wang, R.; Peng, C.; Zhang, J.; Wu, J.; Xu, Z.; Li, M.; Zhao, H.; Huang, S.; Li, Q. Computer Vision-Based Measurement Techniques for Livestock Body Dimension and Weight: A Review. Agriculture 2024, 14, 306. https://doi.org/10.3390/agriculture14020306