Abstract

Evaluating rice disease severity provides a quantitative basis for precise disease control and is therefore important for safeguarding rice yield. Severity has traditionally been assessed manually, which makes the judgment of rice blast severity subjective and time-consuming. To address these problems, this paper proposes a real-time rice blast segmentation method based on feature fusion and an attention mechanism: the Deep Feature Fusion and Attention Network (DFFANet). To extract the shallow and deep features of rice blast as completely as possible, a feature extraction (DCABlock) module and a feature fusion (FFM) module are designed; a lightweight attention module is then designed to guide feature learning and to fuse the extracted features effectively at different scales. These modules are combined to build the lightweight DFFANet model, which is applied to rice blast spot segmentation and compared with existing methods in this field. The experimental results show that the proposed method has better anti-interference ability, achieving 96.15% MIoU at 188 FPS with only 1.4 M parameters. It thus attains a high detection speed with a small number of parameters and an effective balance between segmentation accuracy and speed, which reduces hardware requirements and enables low-cost embedded development. The method provides technical support for real-time, rapid detection of rice diseases.

1. Introduction

Rice, a main food crop for human beings, is widely cultivated around the world. Rice diseases affect both its yield and its quality, which, in turn, has an impact on food security [1]. Rice blast is one of the most devastating rice diseases and can cause severe yield losses and even crop failure in rice fields [2]. In China, about 15% of the annual rice yield loss is caused by rice blast disease. The severity of rice blast is generally judged by the size of the area occupied by the disease spots on the leaves. This assessment is traditionally done manually, which is subjective and time-consuming. Therefore, rapid and accurate segmentation of rice blast spots is of great significance for ensuring rice yield and food security.

Disease spot segmentation methods generally fall into three types: traditional image segmentation, machine learning-based image segmentation, and deep learning-based image segmentation. Traditional disease spot segmentation is based on the color and texture features of the image. Ma et al. [3] used integrated color features and an interactive region growth method for spot segmentation of cucumber downy mildew. Jothiaruna et al. [4] proposed an advanced color feature method consisting of a color space, color index, and singular value decomposition for leaf spot segmentation, with an average accuracy of 87%. Deng et al. [5] proposed an interactive color image segmentation method with grasping segmentation for spot segmentation of diseases such as maize black cob disease, which achieved better results in complex backgrounds. However, these traditional segmentation methods are susceptible to environmental changes and suffer from low segmentation accuracy and low generality.

In terms of machine learning-based image segmentation, Wang et al. [6] proposed a segmentation method based on k-means clustering, which combines the color space components and features, iterates the clustering, and corrects the clustering results; segmentation experiments were conducted on images of cucumber diseases and soybean diseases. Khan et al. [7] used a hybrid contrast stretching technique for image enhancement, and then proposed a strong correlation-based segmentation method based on the HSI color space and optimized it with an expectation maximization algorithm to achieve good results in apple disease image segmentation. Trivedi et al. [8] used an ROF filter to denoise the disease images, and then proposed a plant leaf disease spot segmentation method based on a color histogram and the k-means clustering algorithm. Bai et al. [9] proposed a labeled watershed algorithm based on the HSI space and an improved fuzzy C-means algorithm for segmenting cucumber disease images, with an average segmentation error of 0.12%. Yu et al. [10] proposed an improved gray wolf optimization algorithm for multi-threshold segmentation of maize leaf spots under different thresholds. Waldamichael et al. [11] used an HSV color segmentation algorithm to separate the disease spots from the leaf background by automatically finding the optimal threshold for the saturation channel; the final test on a coffee disease dataset gave a mean intersection over union (MIoU) of 72.13%. Patil et al. [12] used a modified sunflower optimization algorithm to enhance the radial basis function neural network by removing noise from the image. Chen et al. [13] used a non-local mean filtered two-dimensional histogram to remove the noise in the corn disease image, followed by an improved particle swarm optimization algorithm to find the optimal segmentation threshold to segment the corn leaf disease. However, machine learning-based segmentation requires complex image preprocessing steps and hand-designed feature extractors, which makes the segmentation work complicated and time-consuming.

With its continuous development, deep learning has also gradually been applied to disease spot segmentation. Chen et al. [14] proposed a method based on an improved U-Net network to segment rice bacterial leaf streak disease with an accuracy of 95.6%. Wang et al. [15] used a two-stage sequential segmentation method to segment cucumber downy mildew images by first mining the features through the DeepLabV3+ network and then further determining the disease spot region through the U-Net network, with a 93.27% accuracy in leaf segmentation. Yuan et al. [16] improved the DeepLabV3+ network with ResNet101 as the backbone network and added a channel attention module in the residual block for the segmentation of black rot spots on grape leaves, with 84.8% accuracy. Wang et al. [17] used a segmentation method based on the U-Net network for segmentation of visible spectrum images of cucumber brown spot disease, with a maximum accuracy of 91.59%. Ji et al. [18] used ResNet50 as the backbone network to construct a DeepLabV3+ semantic segmentation model and developed a fuzzy rule-based system to predict the damage level of black measles disease in grapes. Gonçalves et al. [19] used multiple architectures for semantic segmentation of soybean rust images; FPN, U-Net, and DeepLabV3+ performed the best among the architectures, with accuracies of 95%, 96%, and 98%, respectively. Hu et al. [20] segmented tea leaf blight by combining U-Net and a fully connected conditional random field, with an MIoU of 91.4%. Yuan et al. [21] constructed cascaded convolutional neural networks by connecting a three-level convolutional neural network model and adding spatial pyramid pooling layers to each network to segment the crop disease images under different conditions, with a final result of 95%. The above studies show that deep learning is feasible for disease spot segmentation and removes the preprocessing and feature design steps required by the traditional and machine learning methods.

However, existing deep learning-based spot segmentation methods pursue high accuracy at the cost of a large number of model parameters, complex network structures, and long training and detection times, so they cannot achieve fast, real-time detection. To address this problem, this study proposes a real-time semantic segmentation method called DFFANet, which balances accuracy and efficiency through multi-scale feature fusion and an attention mechanism.

2. Materials and Methods

2.1. Experimental Method

2.1.1. DFFANet Network Model

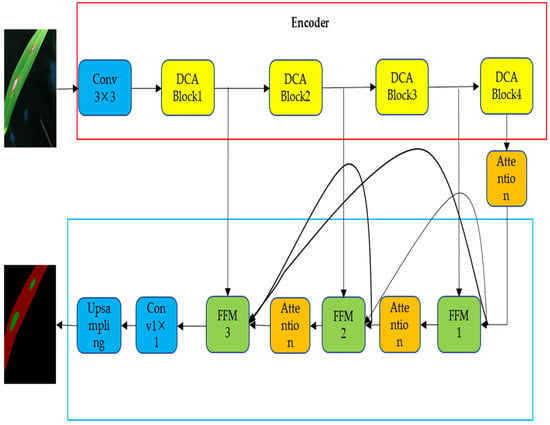

The overall structure of DFFANet, the rice blast segmentation network based on multi-scale feature fusion and an attention mechanism, is shown in Figure 1. The network follows an encoder-decoder structure and consists mainly of a feature extraction (DCABlock) module, a feature fusion (FFM) module, and an attention (Attention) module. The encoder extracts the shallow and deep features of rice blast disease and consists mainly of a 3 × 3 convolution followed by four DCABlock modules. The decoder consists mainly of three FFM modules and the Attention module. It further fuses the multi-level features and, through dense connections, feeds the output of each earlier stage into the later stages so that features are reused; this integrates features at different scales and preserves richer rice blast information. The attention module refines the features during decoding to avoid losing valid information. Finally, the fused feature maps are passed through a convolution, and the spatial resolution of the image is recovered by 4× upsampling.

Figure 1.

Overall network structure of DFFANet, which is mainly composed of two parts: Encoder and decoder.

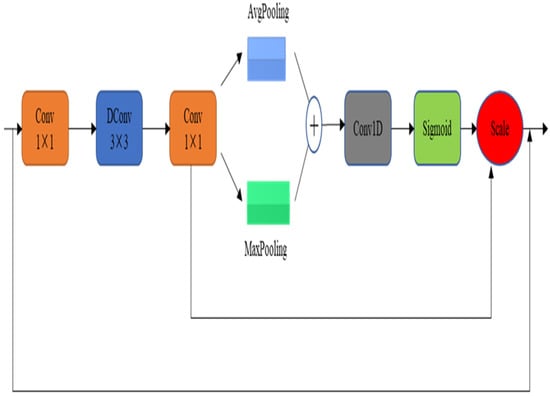

2.1.2. Feature Extraction (DCABlock) Module

In the encoder stage, a four-layer DCABlock module is designed to better extract and learn the shallow and deep features of rice blast disease; its structure is shown in Figure 2. First, a 1 × 1 convolution expands the number of feature channels to four times the original number; a 3 × 3 dilated convolution then enlarges the receptive field; and a second 1 × 1 convolution reduces the channels back to the original number. After these convolutions, the feature map of each channel expresses different information. To focus on the semantic information that is meaningful to the current task, global max pooling (MaxPooling) and global average pooling (AvgPooling) are used to aggregate global information and edge texture information, and the outputs of the two operations are fused. The fused information is passed through a one-dimensional convolution to generate a more discriminative feature vector, the channel weights are obtained with the Sigmoid function, and the resulting weight vector is combined element by element with the input features to produce the output features.

Figure 2.

Structure of the DCABlock module for feature extraction and learning. Two pooling layers are used to aggregate the different semantic information.
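The channel-weighting step at the end of the DCABlock description can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the fusion of the two pooled vectors is assumed to be element-wise addition, the final combination is assumed to be channel-wise multiplication, and the kernel values and the names `global_pool`, `conv1d_same`, and `channel_reweight` are hypothetical. The convolutions that precede this step are omitted.

```python
import math

def global_pool(feature_map):
    """Return per-channel (average, maximum) over all spatial positions.

    feature_map is a list of C channels, each an H x W nested list.
    """
    avgs, maxs = [], []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        avgs.append(sum(values) / len(values))
        maxs.append(max(values))
    return avgs, maxs

def conv1d_same(signal, kernel):
    """1-D cross-correlation along the channel axis with zero padding.

    Assumes an odd kernel length so the output length equals the input length.
    """
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [sum(k * padded[i + j] for j, k in enumerate(kernel))
            for i in range(len(signal))]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def channel_reweight(feature_map, kernel=(0.25, 0.5, 0.25)):
    """Weight each channel by sigmoid(conv1d(avg_pool + max_pool))."""
    avgs, maxs = global_pool(feature_map)
    fused = [a + m for a, m in zip(avgs, maxs)]   # assumed fusion: addition
    weights = [sigmoid(v) for v in conv1d_same(fused, kernel)]
    out = [[[w * v for v in row] for row in channel]
           for w, channel in zip(weights, feature_map)]
    return out, weights
```

Because the weights come from a Sigmoid, each lies strictly between 0 and 1, so the step can only attenuate channels relative to one another; in a trained network the kernel would be learned rather than fixed.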

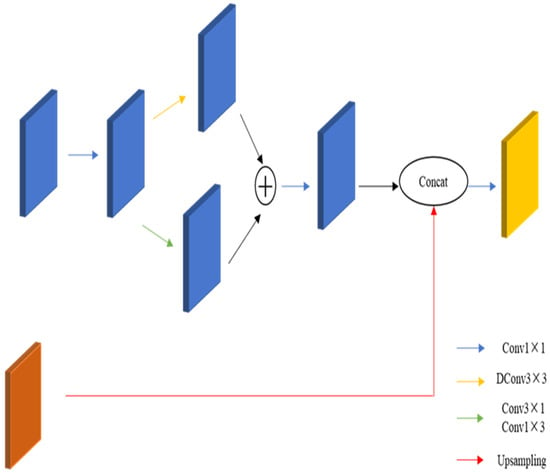

2.1.3. Feature Fusion (FFM) Module

Shallow features contain more detailed information, but their semantic information is weaker and noisier, whereas deep features have richer semantic information but low resolution and less detail. Multi-scale feature fusion can therefore effectively integrate contextual information with detailed spatial information [22].

Some existing segmentation networks, such as FCN [23] and U-Net [24], fuse multi-scale features through skip connections, but this also introduces a large number of parameters, which is not conducive to real-time segmentation. To address this problem, a new feature fusion module is designed that achieves feature fusion with fewer parameters.

As shown in Figure 3, the feature fusion module adopts the design of a bottleneck structure. Then, the top and bottom outputs are added together, and the number of channels is restored by a 1 × 1 convolution operation. After this process, the shallow features are fused with the up-sampled deep features and then subjected to 1 × 1 convolution.

Figure 3.

FFM structure used for multi-scale feature fusion, extracting shallow information using asymmetric convolution to reduce the number of parameters.

To counter the vanishing gradients caused by deepening the network, we use densely connected feature fusion modules, in which the output of each stage is used as input for the other stages. This kind of connection enables feature reuse, which, in turn, alleviates gradient vanishing [25].
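The caption of Figure 3 attributes part of the parameter saving in the FFM to asymmetric convolution. The short calculation below shows where such a saving comes from. It assumes a 3 × 3 kernel factored into a 3 × 1 and a 1 × 3 convolution with equal input and output channels and no bias; the kernel size, the channel count of 64, and the function names are illustrative assumptions, not values given in the text.

```python
def conv_params(c_in, c_out, k_h, k_w, bias=False):
    """Number of learnable weights in one 2-D convolution layer."""
    return c_in * c_out * k_h * k_w + (c_out if bias else 0)

def standard_vs_asymmetric(channels, k=3):
    """Compare one k x k convolution with a k x 1 followed by a 1 x k."""
    standard = conv_params(channels, channels, k, k)
    asymmetric = (conv_params(channels, channels, k, 1)
                  + conv_params(channels, channels, 1, k))
    return standard, asymmetric

if __name__ == "__main__":
    std, asym = standard_vs_asymmetric(64)
    print(std, asym, asym / std)
```

Under these assumptions the factored pair needs 2k weights per channel pair instead of k², which is two thirds of the standard count for k = 3.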

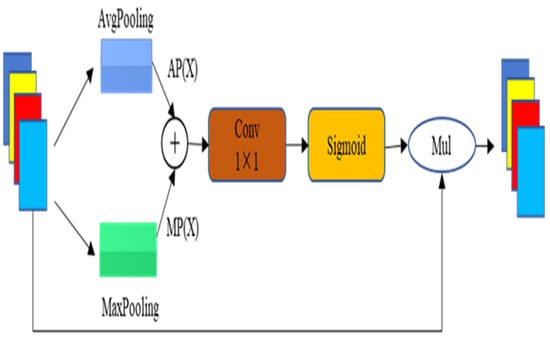

2.1.4. Attention Module

Attention mechanisms can use deep information to guide feature learning and have also been shown to facilitate semantic segmentation [26]. In this study, DFFANet includes an attention module with only 0.15 M parameters that focuses on the parts of the fused rice blast features that are valuable to the current segmentation task, as shown in Figure 4. Let the input feature map be $X \in \mathbb{R}^{C \times H \times W}$ (where C is the number of channels, H is the feature map height, and W is the feature map width). The weight vectors AP(X) and MP(X) are first generated by global average pooling and global max pooling, respectively; they are then fused and passed through a 1 × 1 convolution, mapped to the 0–1 interval by the Sigmoid function, and finally multiplied with the input features.

where $W_c$ denotes the output feature, $\sigma$ denotes the Sigmoid function, and $f_{\mathrm{Conv}}$ denotes the 1 × 1 convolution.
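Read together with these symbol definitions, the description of the attention module can be written compactly. The form below is one reading that is consistent with the text, not a quotation of the paper's equation: it assumes that the two pooled vectors are fused by element-wise addition (the text does not say whether they are added or concatenated) and that $\otimes$ denotes channel-wise multiplication with broadcasting over H and W.

```latex
W_c = \sigma\Bigl( f_{\mathrm{Conv}}\bigl( AP(X) + MP(X) \bigr) \Bigr) \otimes X,
\qquad AP(X),\ MP(X) \in \mathbb{R}^{C \times 1 \times 1}
```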

Figure 4.

Attention structure.

2.2. Experimental Materials and Evaluation Indicators

2.2.1. Experimental Materials and Experimental Environment

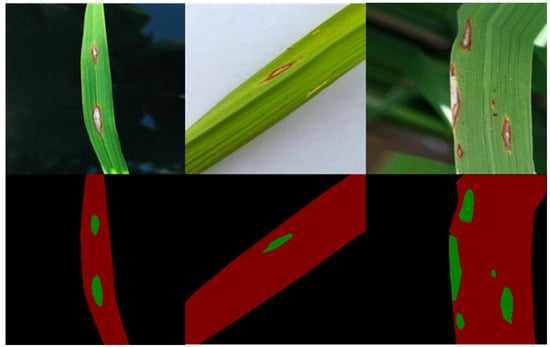

In this paper, an open dataset was used for the experiments. Labeling was performed with the LabelMe software, with regions labeled mainly as rice blast disease spots and healthy leaves; the data labels are shown in Figure 5. In this study, 800 rice leaf images were selected for the experiment, and a ratio of 6:2:2 was adopted to divide the training set, validation set, and test set, in which 398 images were in the training set, 199 in the validation set, and 199 in the test set, and the resolution of all images was 256 × 256. The model was trained on an NVIDIA Tesla A100 GPU, using an SGD optimizer and a cross-entropy loss function.

Figure 5.

Data and labeled data: black indicates background, red indicates healthy leaves, and green indicates rice blast spots.
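Since the Introduction notes that severity is generally judged by the area the disease spots occupy on the leaf, a three-class mask of the kind shown in Figure 5 is enough to read off that area share. The sketch below is only an illustration: the integer label ids and the function name are hypothetical, and the paper does not define a severity grading scheme based on this ratio.

```python
# Hypothetical label ids for the three classes shown in Figure 5.
BACKGROUND, HEALTHY_LEAF, BLAST_SPOT = 0, 1, 2

def lesion_area_ratio(mask):
    """Fraction of leaf pixels (healthy + lesion) labeled as blast spot.

    mask is an H x W nested list of label ids; background is ignored.
    Returns 0.0 when the mask contains no leaf pixels at all.
    """
    flat = [label for row in mask for label in row]
    lesion = flat.count(BLAST_SPOT)
    leaf = lesion + flat.count(HEALTHY_LEAF)
    return lesion / leaf if leaf else 0.0
```

Excluding the background from the denominator keeps the ratio independent of how much of the frame the leaf fills.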

2.2.2. Evaluation Indicators

The mean intersection over union (MIoU), inference speed (FPS), and number of parameters (Params) were used as quantitative metrics to evaluate the segmentation model. IoU is the ratio of the intersection to the union of the prediction result and the ground truth for a given category. The higher the IoU value, the greater the overlap between the prediction result and the ground truth. MIoU is currently the most commonly used evaluation criterion in semantic segmentation; it is obtained by summing the IoU of each category and averaging over the categories:

$$\mathrm{MIoU} = \frac{1}{M}\sum_{i=1}^{M}\frac{p_{ii}}{\sum_{j=1}^{M} p_{ij} + \sum_{j=1}^{M} p_{ji} - p_{ii}}$$

where M is the number of label categories, $p_{ii}$ is the number of pixels whose true value and predicted value are both category i, $p_{ji}$ is the number of pixels of category i predicted as category j (i.e., false negatives), and $p_{ij}$ is the number of pixels of category j predicted as category i (i.e., false positives).
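The metric described above can be computed from a confusion matrix, as in the following minimal sketch. It uses the common indexing in which `counts[i][j]` counts pixels of true class i predicted as class j; the function names and the choice to skip classes that occur in neither the ground truth nor the prediction are assumptions made for illustration.

```python
def confusion_matrix(truth, pred, num_classes):
    """counts[i][j] = number of pixels with true class i and predicted class j."""
    counts = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(truth, pred):
        counts[t][p] += 1
    return counts

def mean_iou(counts):
    """Average of per-class IoU = TP / (TP + FP + FN)."""
    n = len(counts)
    ious = []
    for i in range(n):
        tp = counts[i][i]
        row = sum(counts[i])                       # pixels truly of class i
        col = sum(counts[j][i] for j in range(n))  # pixels predicted as class i
        union = row + col - tp
        if union > 0:  # skip classes absent from both truth and prediction
            ious.append(tp / union)
    return sum(ious) / len(ious) if ious else 0.0

if __name__ == "__main__":
    truth = [0, 0, 1, 1, 2, 2]   # flattened ground-truth labels
    pred = [0, 1, 1, 1, 2, 0]    # flattened predicted labels
    print(mean_iou(confusion_matrix(truth, pred, 3)))
```

In practice the matrix would be accumulated over every pixel of every test image before the per-class ratios are taken.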

3. Results and Discussion

3.1. Ablation Experiments

To verify the performance of the DFFANet network model and the effectiveness of each module, an ablation experiment was designed. The DCABlock module, the FFM feature fusion modules, and the attention module were added step by step, and the contribution of each to the performance of the network was measured. The experimental results are shown in Table 1.

Table 1.

Results of the ablation experiments.

First, the DCABlock module is added to the base network to extract and learn features, which are then upsampled for output; the MIoU of this model is 85.94%, the FPS is 390, and the number of parameters is 1.11 M. After the FFM1 module is added, the MIoU is 93.11%, an improvement of 7.17 percentage points, the FPS is 329, and the number of parameters is 1.17 M. After FFM2 and FFM3 are further added, the MIoU gradually increases to 94.91% and 95.17%, the FPS decreases to 265 and 221, and the number of parameters is 1.2 M and 1.22 M, respectively.

With the attention module added as well, the final MIoU of the model reaches 96.15%, which is 10.21 percentage points higher than the initial network with only the DCABlock module, at 188 FPS and with 1.4 M parameters, only 0.29 M more. The dense connection scheme that gave the best results was borrowed from DenseNet [27] and DenseASPP [28], where the neurons of each intermediate feature map encode semantic information from multiple scales through a series of feature concatenations. Because more network layers mean longer running time and higher memory requirements, which contradicts our aim of a lightweight, real-time model, only three feature fusion modules were used. The attention module makes it possible to assign different weights to different feature channels and to learn the importance of each feature channel automatically [29].
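The trade-off described in this ablation can be checked by tabulating the quoted figures and computing the change contributed by each added module. The values below are the ones stated in the text; the variable and function names are hypothetical, and the row labels are shorthand for the cumulative configurations.

```python
# (configuration, MIoU in %, FPS, parameters in M), as quoted in the text.
ABLATION = [
    ("DCABlock", 85.94, 390, 1.11),
    ("+ FFM1", 93.11, 329, 1.17),
    ("+ FFM2", 94.91, 265, 1.20),
    ("+ FFM3", 95.17, 221, 1.22),
    ("+ Attention", 96.15, 188, 1.40),
]

def stepwise_changes(rows):
    """Change in MIoU (points), FPS, and parameters (M) at each added module."""
    changes = []
    for (_, m0, f0, p0), (name, m1, f1, p1) in zip(rows, rows[1:]):
        changes.append((name, round(m1 - m0, 2), f1 - f0, round(p1 - p0, 2)))
    return changes

if __name__ == "__main__":
    for name, d_miou, d_fps, d_params in stepwise_changes(ABLATION):
        print(f"{name:12s} MIoU {d_miou:+.2f}  FPS {d_fps:+d}  Params {d_params:+.2f} M")
```

Laid out this way, the first fusion module accounts for most of the accuracy gain, while each later addition buys a smaller improvement at a further cost in speed.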

The above experiments show that the DFFANet network model, built from the feature extraction module, feature fusion module, and attention module designed in Section 2.1.2, Section 2.1.3 and Section 2.1.4, improves segmentation accuracy effectively while keeping detection fast, the model easy to train, and the memory footprint small. The model is thus both effective and lightweight for rice blast disease identification, which makes real-time detection possible.

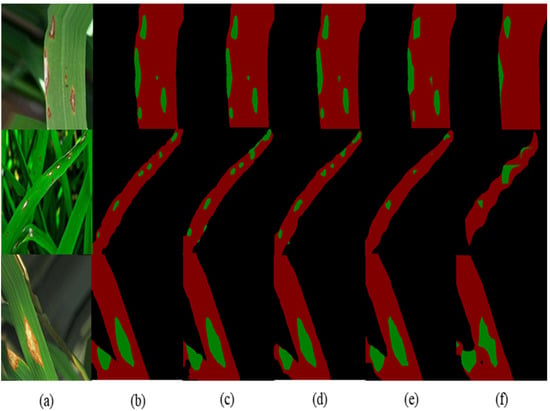

Figure 6 shows the rice blast segmentation results, which illustrate the performance of the modules and models designed in this study more visually. Figure 6f shows the network with only the DCABlock module, whose segmentation is the worst and does not separate out all the disease spots. As feature fusion modules are added (Figure 6e with FFM1, Figure 6d with FFM1 and FFM2, and Figure 6c with FFM1, FFM2, and FFM3), the segmentation improves steadily, indicating that adding the feature fusion modules and using dense connections to integrate the contextual and spatial information of the rice blast images leads to better segmentation. Figure 6b shows the segmentation after the attention module is further added. In the third row of Figure 6, the shaded leaf in the lower left corner of the original image (Figure 6a) resembles the background, which makes this area more difficult to segment.

Figure 6.

Segmentation effect of rice blast disease: (a) original image of disease spot; (b) segmentation effect of the DCABlock + FFM1 + FFM2 + FFM3 + Attention model; (c) segmentation effect of the DCABlock + FFM1 + FFM2 + FFM3 model; (d) segmentation effect of the DCABlock + FFM1 + FFM2 model; (e) the DCABlock + FFM1 model segmentation effect; (f) the DCABlock model segmentation effect.

3.2. Comparison with Existing Models

In order to verify the performance of the method in this study, the results were compared with existing semantic segmentation models under the same data set and the same experimental parameter configuration as shown in Table 2.

Table 2.

Performance comparison with existing models.

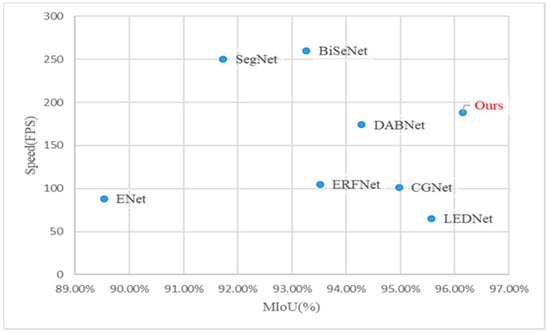

The segmentation accuracy, model running speed, and number of parameters were used to compare the performance between the models. The segmentation accuracy of the method designed in this study is 96.15%, the speed is 188 FPS, and the parameter size is 1.4 M. Compared with the ENet model, the accuracy is increased by 6.61%, the speed is increased by 100 FPS, and the number of model parameters is increased by 1.04 M. Compared with the SegNet model, the accuracy is increased by 4.42%, the speed is increased by 62 FPS, and the number of model parameters is increased by 0.46 M. Compared with the BiSeNet model, the accuracy is increased by 2.89%, the speed is increased by 72 FPS, and the amount of model parameters is reduced by 4.4 M. Compared with ERFNet, the accuracy is increased by 2.63%, the speed is increased by 83 FPS, and the amount of model parameters is reduced by 0.66 M. Compared with the CGNet model, the accuracy is increased by 1.18%, the running speed is increased by 87 FPS, and the number of model parameters is increased by 0.91 M. Compared with the LEDNet model, the accuracy is increased by 0.58%, the running speed is increased by 123 FPS, and the amount of model parameters is increased by 0.49 M. Compared with the DABNet model, the accuracy is increased by 1.87%, the running speed is increased by 13 FPS, and the number of model parameters is increased by 0.65 M.
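Because the comparison above is stated as differences relative to DFFANet, the corresponding values for each baseline can be recovered by subtraction. The sketch below does this from the figures quoted in the paragraph; the results are derived values rather than numbers read from Table 2, and the dictionary layout and function name are hypothetical.

```python
DFFANET = {"miou": 96.15, "fps": 188, "params_m": 1.40}

# DFFANet minus baseline: (MIoU in points, FPS, parameters in M), as quoted above.
DELTAS = {
    "ENet": (6.61, 100, 1.04),
    "SegNet": (4.42, 62, 0.46),
    "BiSeNet": (2.89, 72, -4.40),
    "ERFNet": (2.63, 83, -0.66),
    "CGNet": (1.18, 87, 0.91),
    "LEDNet": (0.58, 123, 0.49),
    "DABNet": (1.87, 13, 0.65),
}

def implied_baselines(ours, deltas):
    """Recover each baseline's metrics by subtracting the reported difference."""
    out = {}
    for name, (d_miou, d_fps, d_params) in deltas.items():
        out[name] = {
            "miou": round(ours["miou"] - d_miou, 2),
            "fps": ours["fps"] - d_fps,
            "params_m": round(ours["params_m"] - d_params, 2),
        }
    return out

if __name__ == "__main__":
    for name, metrics in implied_baselines(DFFANET, DELTAS).items():
        print(name, metrics)
```

A negative parameter difference means the baseline is larger than DFFANet, as is the case for BiSeNet and ERFNet.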

In summary, although the model proposed is not the best in terms of speed and number of parameters, it has the highest accuracy and achieves a better balance of accuracy, speed, and number of parameters. The accuracy of the LEDNet model is similar to that of DFFANet, but the speed is only 65 FPS. The DFFANet model is better than the faster models, such as BiSeNet and SegNet, in terms of accuracy and number of parameters.

To compare the test results of the different models more intuitively, a scatter plot of the accuracy and speed of each of the above models is given in Figure 7. The figure shows that the proposed model achieves real-time segmentation of rice blast spots while maintaining excellent segmentation accuracy, which significantly reduces the detection time and occupies less running memory.

Figure 7.

Comparison of the accuracy and speed of the different methods.

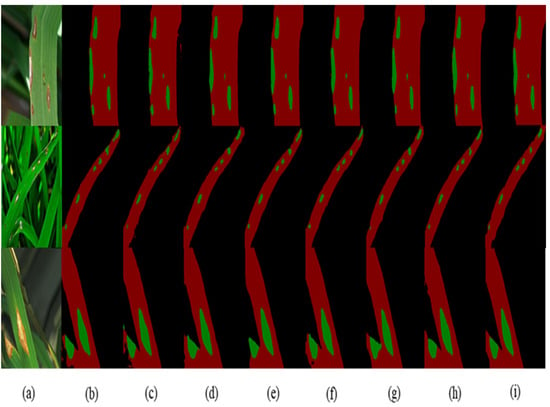

The segmentation results in Figure 8 show that the method can accurately locate the disease spots and segment them from the leaves. In the first row, the results of all methods are similar, but those of ENet, SegNet, CGNet, and DABNet have slight errors at the edges. The second row shows that the proposed method also performs well on smaller lesions. In the third row, the proposed method gives more complete and better results where the leaves and background look similar, indicating that it is effective for disease segmentation in natural environments and has better interference resistance.

Figure 8.

Segmentation results of different methods. (a) Disease spot image; (b) ENet segmentation result; (c) SegNet segmentation result; (d) BiSeNet segmentation result; (e) ERFNet segmentation result; (f) CGNet segmentation result; (g) LEDNet segmentation result; (h) DABNet segmentation result; (i) DFFANet segmentation result.

4. Discussion

Most existing rice disease research focuses on discriminating rice diseases and identifying disease types, and there are few studies on disease segmentation and subsequent disease severity estimation [37,38,39]. The study in [14] addressed the segmentation of rice bacterial leaf streak disease and reported an MIoU of 95.6% at an inference speed of 48 FPS. Compared with that work, our MIoU of 96.15% is 0.55 percentage points higher, and our inference speed of 188 FPS is 140 FPS faster. The results show that our method can segment rice diseases accurately in real time; it is also lightweight, which lays the foundation for evaluating the severity of rice diseases. At the same time, its real-time and lightweight characteristics will facilitate our future work on desktop applications.

5. Conclusions

To address the problem that some existing disease spot segmentation methods cannot achieve an effective balance between real-time performance and segmentation accuracy, this study proposes a lightweight, real-time rice blast disease spot segmentation method. A feature extraction module (DCABlock), a multi-scale feature fusion module (FFM), and an attention module (Attention) are designed and combined to construct the lightweight DFFANet network model. The proposed method achieves an MIoU of 96.15%, an inference speed of 188 FPS, and a parameter count of 1.4 M, which is better than current segmentation models such as ENet, DABNet, and BiSeNet, and lays the foundation for subsequent disease classification. The method also has better interference resistance and a small number of parameters, which supports deployment of the model on IoT and mobile devices and real-time segmentation and identification of rice blast disease in the field, achieving high efficiency, high accuracy, and low hardware cost for automated rice disease detection. The method in this paper considers only one type of rice disease; the next step will be to extend the model to multiple types of rice diseases as well as diseases of multiple crops.

Author Contributions

Conceptualization, M.J. and C.F.; methodology, M.J.; software, C.F.; validation, Q.H. and C.F.; formal analysis, L.Z.; investigation, Q.H.; resources, C.Z.; data curation, C.F.; writing—original draft preparation, C.F.; writing—review and editing, M.J.; visualization, Y.F.; supervision, C.Z.; project administration, Y.F. and L.Z.; funding acquisition, C.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (41575046) and by the Talents Scheme in Zhejiang Province (2021R404065), as well as the General scientific research project of Zhejiang Provincial Department of Education (Y202045743).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

If scholars need more specific data, they can send an email to the corresponding author or the first author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Asibi, A.E.; Chai, Q.; Coulter, J.A. Rice Blast: A Disease with Implications for Global Food Security. Agriculture 2019, 9, 451. [Google Scholar] [CrossRef]

- Xiao, N.; Wu, Y.Y.; Li, A.L. Strategy for Use of Rice Blast Resistance Genes in Rice Molecular Breeding. Rice Sci. 2020, 27, 263–277. [Google Scholar]

- Ma, J.; Du, K.; Zhang, L.; Zheng, F.; Chu, J.; Sun, Z. A segmentation method for greenhouse vegetable foliar disease spots images using color information and region growing. Comput. Electron. Agric. 2017, 142, 110–117. [Google Scholar] [CrossRef]

- Jothiaruna, N.; Sundar, K.J.A.; Karthikeyan, B. A segmentation method for disease spot images incorporating chrominance in Comprehensive Color Feature and Region Growing. Comput. Electron. Agric. 2019, 165, 104934. [Google Scholar] [CrossRef]

- Deng, L.; Wang, Z.; Wang, C.; He, Y.; Huang, T.; Dong, Y.; Zhang, X. Application of agricultural insect pest detection and control map based on image processing analysis. J. Intell. Fuzzy Syst. 2020, 38, 379–389. [Google Scholar] [CrossRef]

- Wang, Z.; Wang, K.; Pan, S.; Han, Y. Segmentation of Crop Disease Images with an Improved K-means Clustering Algorithm. Appl. Eng. Agric. 2018, 34, 277–289. [Google Scholar] [CrossRef]

- Khan, M.A.; Lali, M.I.U.; Sharif, M.; Javed, K.; Aurangzeb, K.; Haider, S.I.; Altamrah, A.S.; Akram, T. An Optimized Method for Segmentation and Classification of Apple Diseases Based on Strong Correlation and Genetic Algorithm Based Feature Selection. IEEE Access 2019, 7, 46261–46277. [Google Scholar] [CrossRef]

- Trivedi, V.K.; Shukla, P.K.; Pandey, A. Automatic segmentation of plant leaves disease using min-max hue histogram and k-mean clustering. Multimed. Tools Appl. 2022, 81, 20201–20228. [Google Scholar] [CrossRef]

- Bai, X.; Li, X.; Fu, Z.; Lv, X.; Zhang, L. A fuzzy clustering segmentation method based on neighborhood grayscale information for defining cucumber leaf spot disease images. Comput. Electron. Agric. 2017, 136, 157–165. [Google Scholar] [CrossRef]

- Yu, H.; Song, J.; Chen, C.; Heidari, A.A.; Liu, J.; Chen, H.; Zaguia, A.; Mafarja, M. Image segmentation of Leaf Spot Diseases on Maize using multi-stage Cauchy-enabled grey wolf algorithm. Eng. Appl. Artif. Intell. 2022, 109, 104653. [Google Scholar] [CrossRef]

- Waldamichael, F.G.; Debelee, T.G.; Ayano, Y.M. Coffee disease detection using a robust HSV color-based segmentation and transfer learning for use on smartphones. Int. J. Intell. Syst. 2022, 37, 4967–4993. [Google Scholar] [CrossRef]

- Patil, M.A.; Manohar, M. Enhanced radial basis function neural network for tomato plant disease leaf image segmentation. Ecol. Inf. 2022, 70, 101752. [Google Scholar] [CrossRef]

- Chen, C.; Wang, X.; Heidari, A.A.; Yu, H.; Chen, H. Multi-Threshold Image Segmentation of Maize Diseases Based on Elite Comprehensive Particle Swarm Optimization and Otsu. Front. Plant Sci. 2021, 12, 789911. [Google Scholar] [CrossRef] [PubMed]

- Chen, S.; Zhang, K.; Zhao, Y.; Sun, Y.; Ban, W.; Chen, Y.; Zhuang, H.; Zhang, X.; Liu, J.; Yang, T. An Approach for Rice Bacterial Leaf Streak Disease Segmentation and Disease Severity Estimation. Agriculture 2021, 11, 420. [Google Scholar] [CrossRef]

- Wang, C.; Du, P.; Wu, H.; Li, J.; Zhao, C.; Zhu, H. A cucumber leaf disease severity classification method based on the fusion of DeepLabV3+ and U-Net. Comput. Electron. Agric. 2021, 189, 106373. [Google Scholar] [CrossRef]

- Yuan, H.; Zhu, J.; Wang, Q.; Cheng, M.; Cai, Z. An Improved DeepLab v3+ Deep Learning Network Applied to the Segmentation of Grape Leaf Black Rot Spots. Front. Plant Sci. 2022, 13, 795410. [Google Scholar] [CrossRef]

- Wang, X.; Lu, H.; Lu, L.; Han, D.; Wang, Z. Segmentation of Cucumber Target Leaf Spot Based on U-Net and Visible Spectral Images. Spectrosc. Spect. Anal. 2021, 41, 1499–1504. [Google Scholar]

- Ji, M.; Wu, Z. Automatic detection and severity analysis of grape black measles disease based on deep learning and fuzzy logic. Comput. Electron. Agric. 2022, 193, 106718. [Google Scholar] [CrossRef]

- Gonçalves, J.P.; Pinto, F.A.; Queiroz, D.M.; Villar, F.M.; Barbedo, J.G.; Del Ponte, E.M. Deep learning architectures for semantic segmentation and automatic estimation of severity of foliar symptoms caused by diseases or pests. Biosyst. Eng. 2021, 210, 129–142. [Google Scholar] [CrossRef]

- Hu, G.; Wei, K.; Zhang, Y.; Bao, W.; Liang, D. Estimation of tea leaf blight severity in natural scene images. Precis. Agric. 2021, 22, 1239–1262. [Google Scholar] [CrossRef]

- Yuan, Y.; Xu, Z.; Lu, G. SPEDCCNN: Spatial Pyramid-Oriented Encoder-Decoder Cascade Convolution Neural Network for Crop Disease Leaf Segmentation. IEEE Access 2021, 9, 14849–14866. [Google Scholar] [CrossRef]

- Li, H.; Qiu, K.; Chen, L.; Mei, X.; Hong, L.; Tao, C. SCAttNet: Semantic Segmentation Network With Spatial and Channel Attention Mechanism for High-Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2021, 18, 905–909. [Google Scholar] [CrossRef]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–15 June 2015; pp. 3431–3440. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Li, Y.; Li, M.; Li, Z.; Xiao, C.; Li, H. EFRNet: Efficient Feature Reuse Network for Real-time Semantic Segmentation. Neural Process. Lett. 2022, 1–13.

- Zhang, K.; Liao, Q.; Zhang, J.; Liu, S.; Ma, H.; Xue, J.-H. EFRNet: A Lightweight Network with Efficient Feature Fusion and Refinement for Real-Time Semantic Segmentation. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Beijing, China, 5–9 July 2021; pp. 1–6.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Yang, M.; Yu, K.; Zhang, C.; Li, Z.; Yang, K. DenseASPP for Semantic Segmentation in Street Scenes. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3684–3692.

- Huang, M.; Xu, G.; Li, J.; Huang, J. A Method for Segmenting Disease Lesions of Maize Leaves in Real Time Using Attention YOLACT++. Agriculture 2021, 11, 1216.

- Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation. arXiv 2016, arXiv:1606.02147.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. BiSeNet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the Computer Vision–ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 334–349.

- Romera, E.; Alvarez, J.M.; Bergasa, L.M.; Arroyo, R. ERFNet: Efficient Residual Factorized ConvNet for Real-Time Semantic Segmentation. IEEE Trans. Intell. Transp. Syst. 2018, 19, 263–272.

- Wu, T.; Tang, S.; Zhang, R.; Cao, J.; Zhang, Y. CGNet: A Light-Weight Context Guided Network for Semantic Segmentation. IEEE Trans. Image Process. 2021, 30, 1169–1179.

- Wang, Y.; Zhou, Q.; Liu, J.; Xiong, J.; Gao, G.; Wu, X.; Latecki, L.J. LEDNet: A Lightweight Encoder-Decoder Network for Real-Time Semantic Segmentation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1860–1864.

- Li, G.; Jiang, S.; Yun, I.; Kim, J.; Kim, J. Decoder for Real-Time Semantic Segmentation in Urban Scenes. IEEE Access 2020, 8, 27495–27506.

- Krishnamoorthy, N.; Prasad, L.V.N.; Kumar, C.S.P.; Subedi, B.; Abraha, H.B.; Sathishkumar, V.E. Rice leaf diseases prediction using deep neural networks with transfer learning. Environ. Res. 2021, 198, 111275.

- Narmadha, R.P.; Sengottaiyan, N.; Kavitha, R.J. Deep Transfer Learning Based Rice Plant Disease Detection Model. Intell. Autom. Soft Comput. 2022, 31, 1257–1271.

- Patil, R.R.; Kumar, S. Rice-Fusion: A Multimodality Data Fusion Framework for Rice Disease Diagnosis. IEEE Access 2022, 10, 5207–5222.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).