Prediction of Submucosal Invasion for Gastric Neoplasms in Endoscopic Images Using Deep-Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Collection of Data

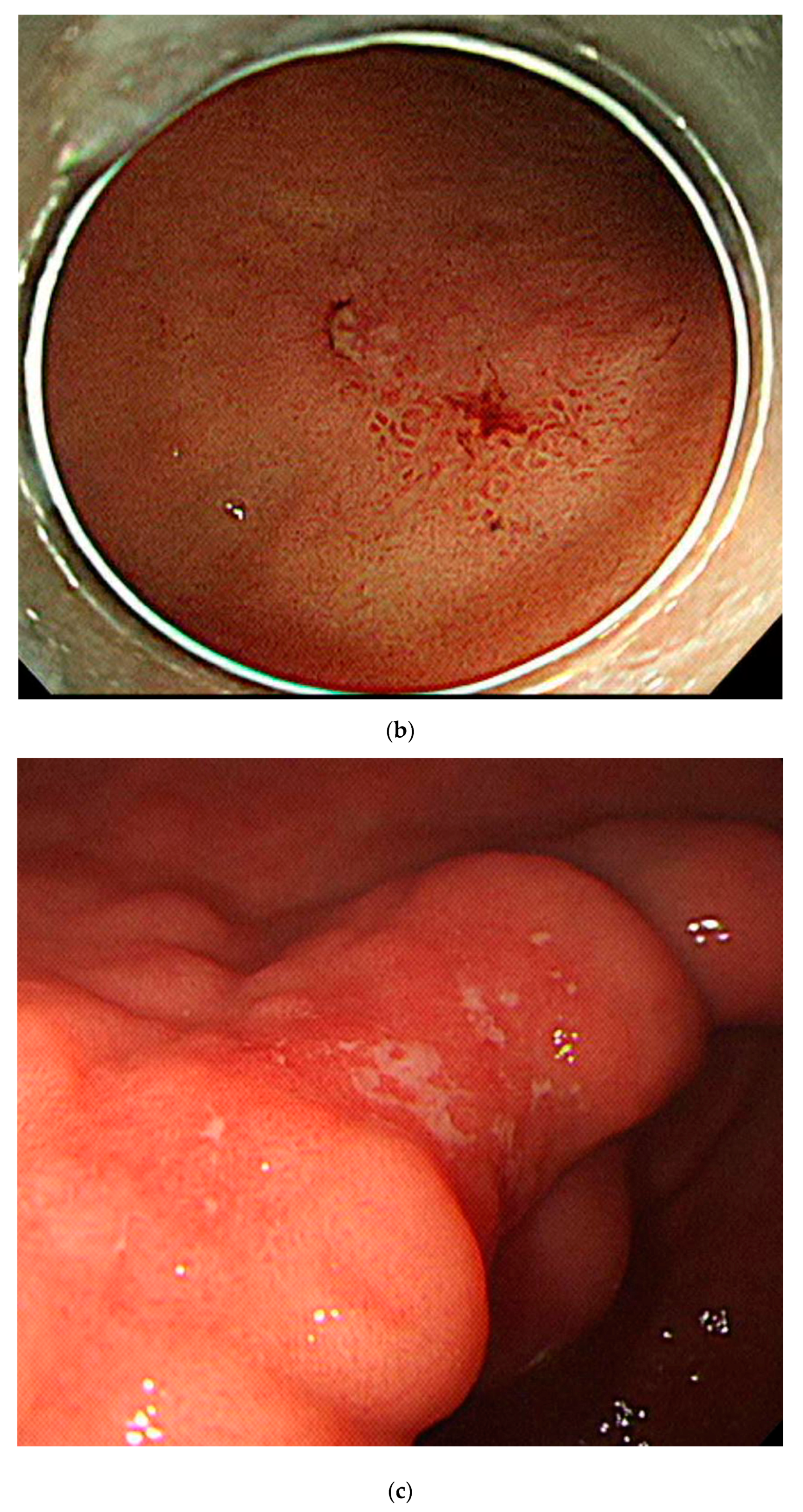

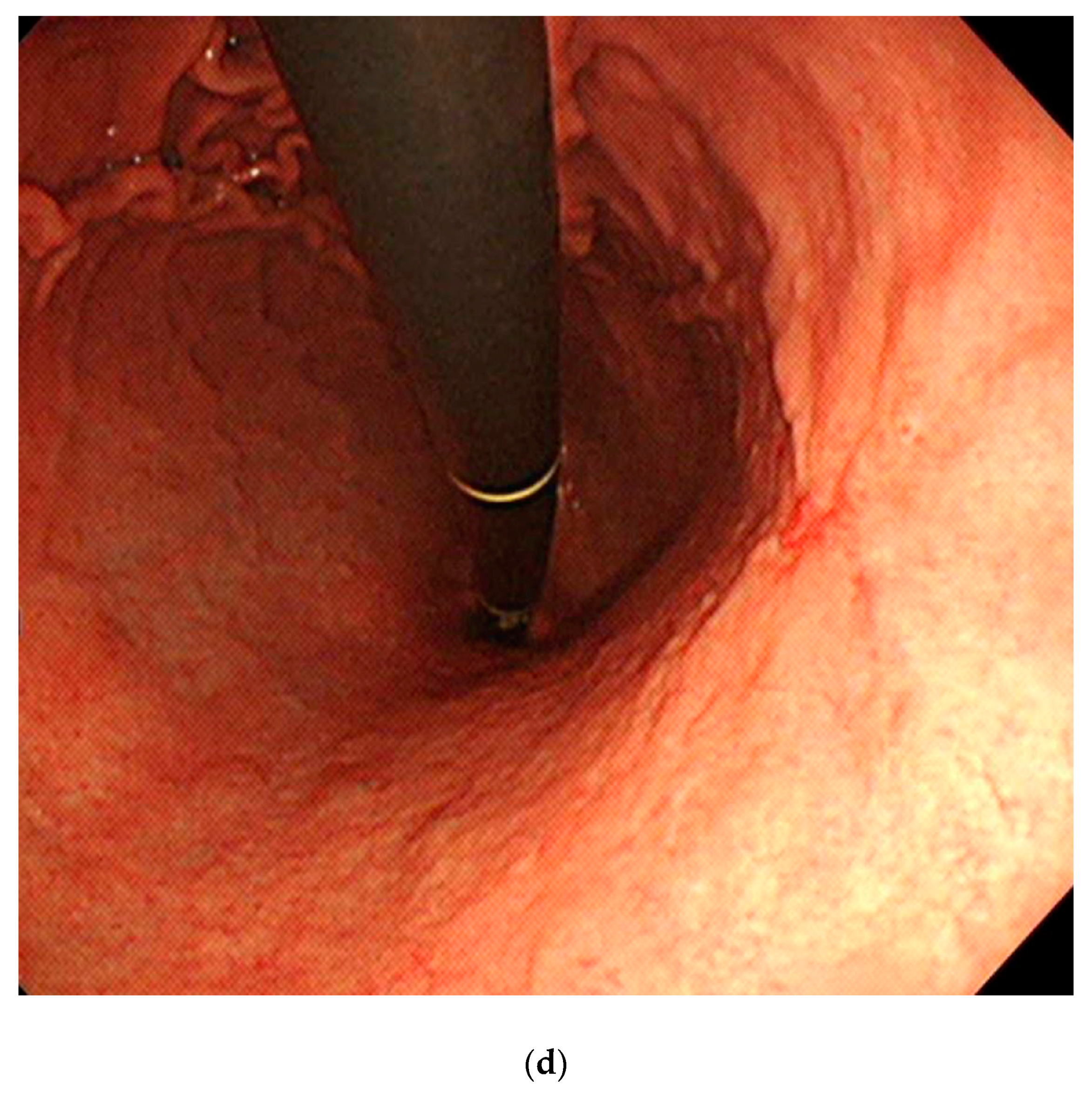

2.2. Construction of Dataset

2.3. Preprocessing of Datasets

2.4. Training of CNN Models

2.5. Main Outcome Measurements and Statistics

3. Results

3.1. Composition of Datasets

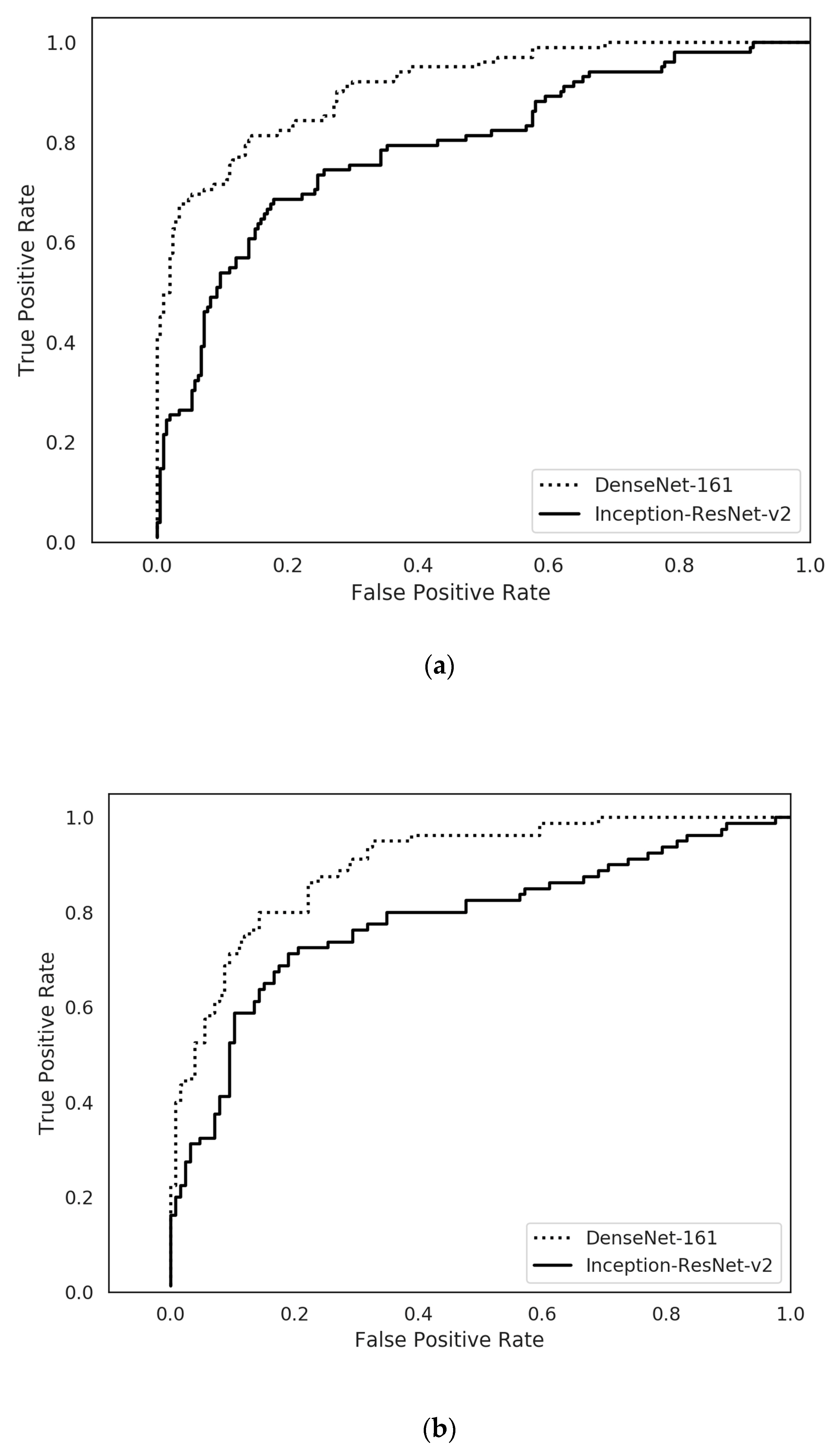

3.2. Prediction of Submucosal Invasion in Any Given Gastric Neoplastic Lesion

3.3. Prediction of Submucosal Invasion in the Subgroup of EGCs

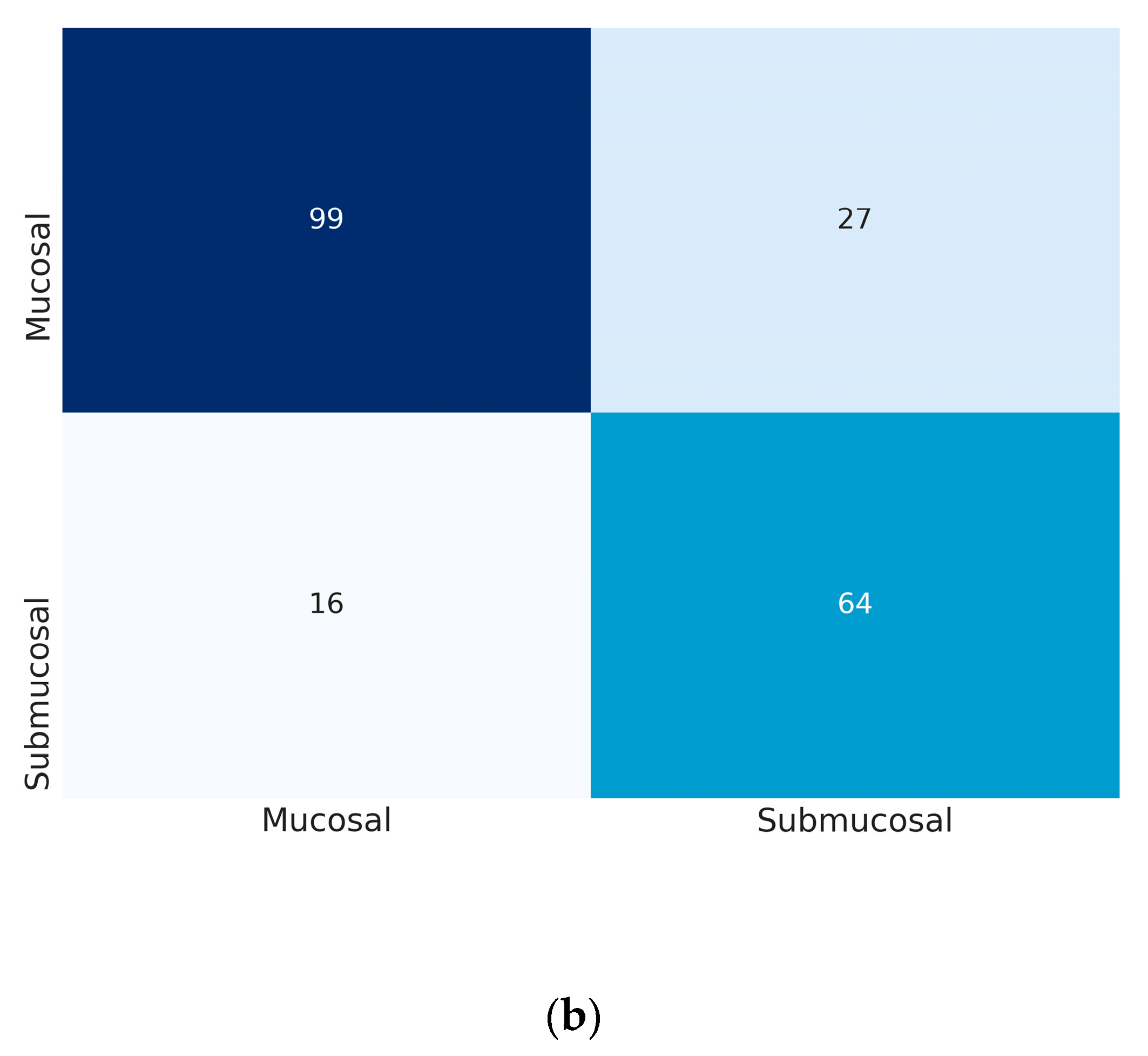

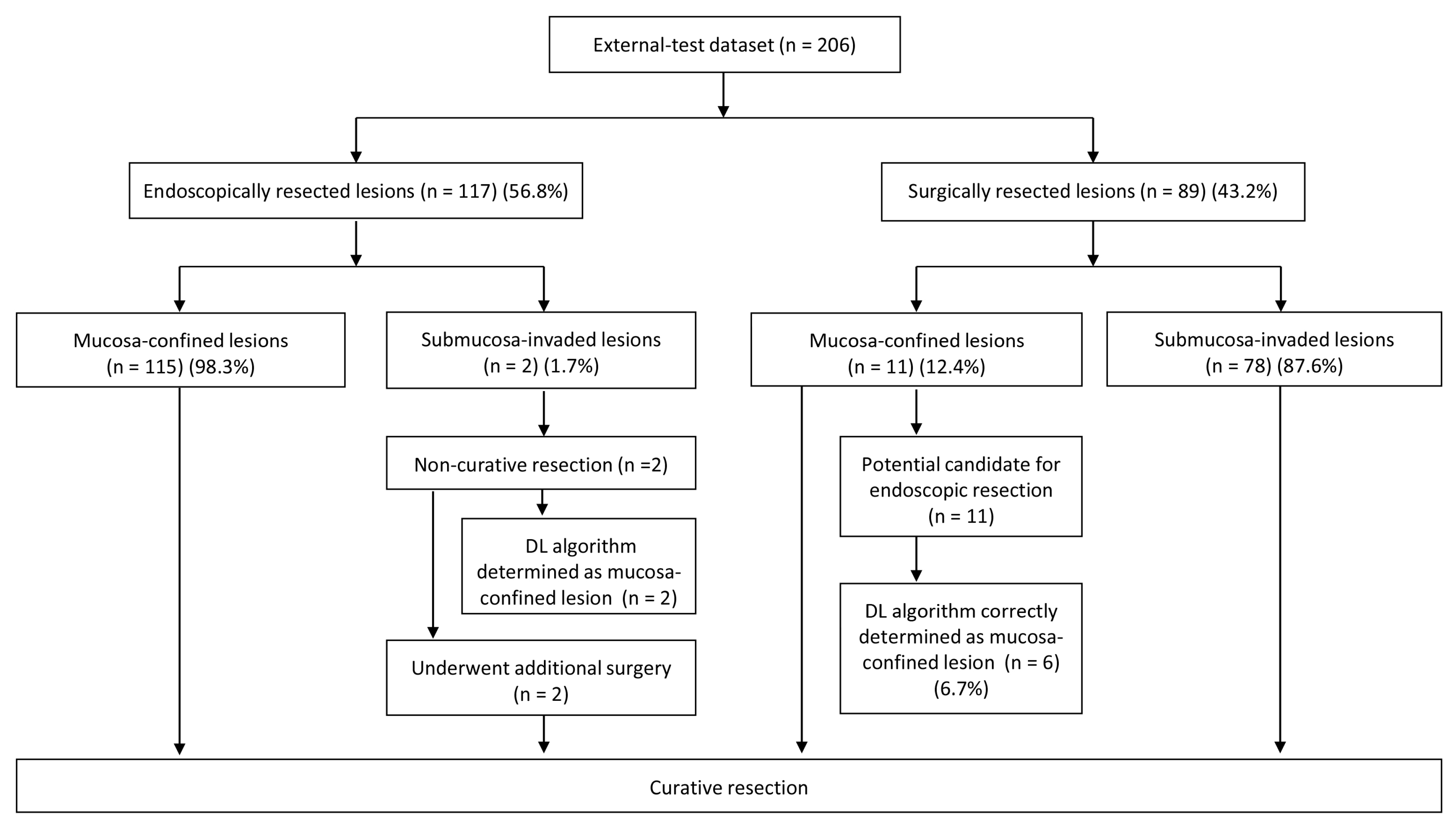

3.4. Clinical Simulation in the Application of DL Algorithm for the Determination of Therapeutic Strategy

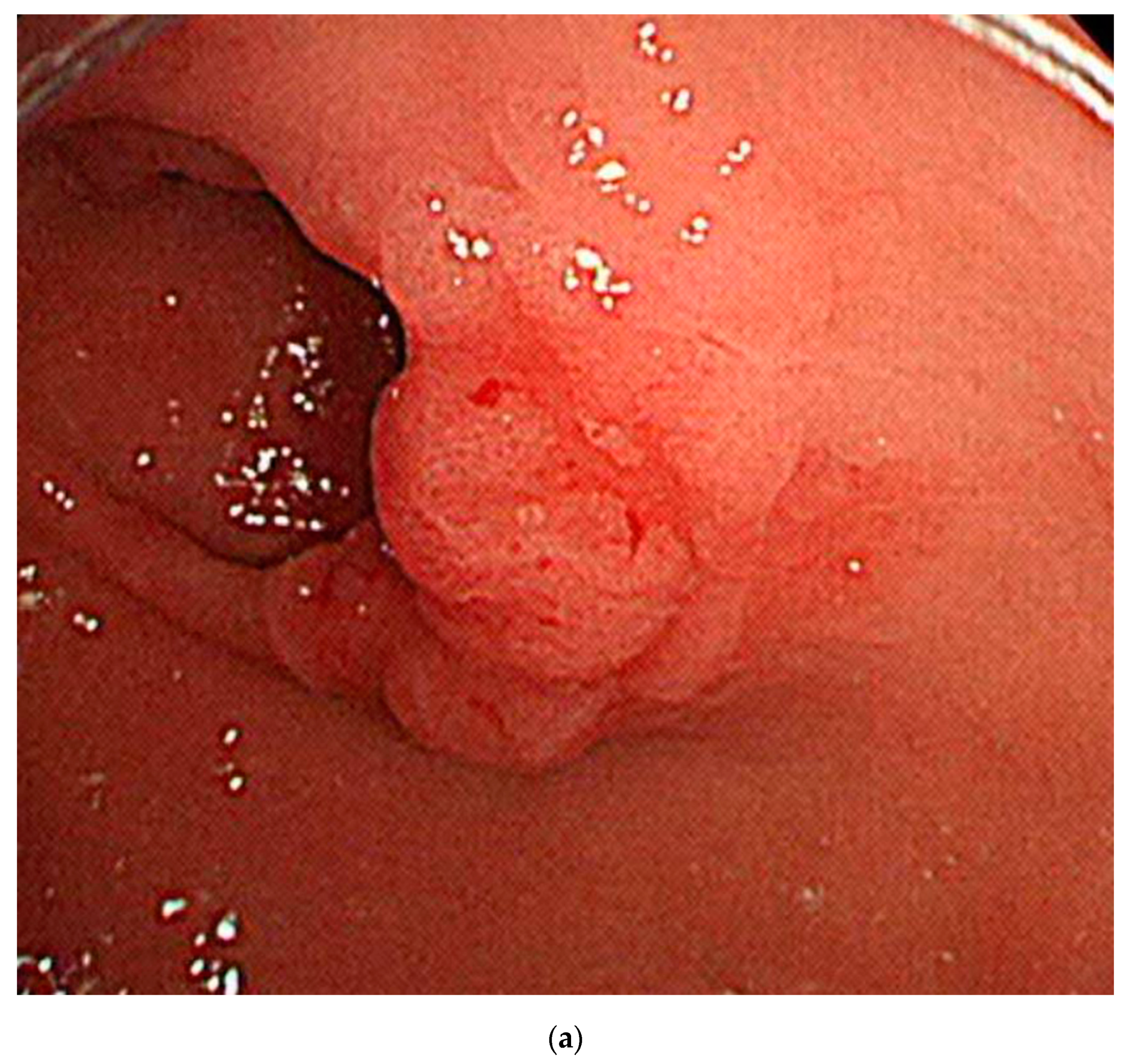

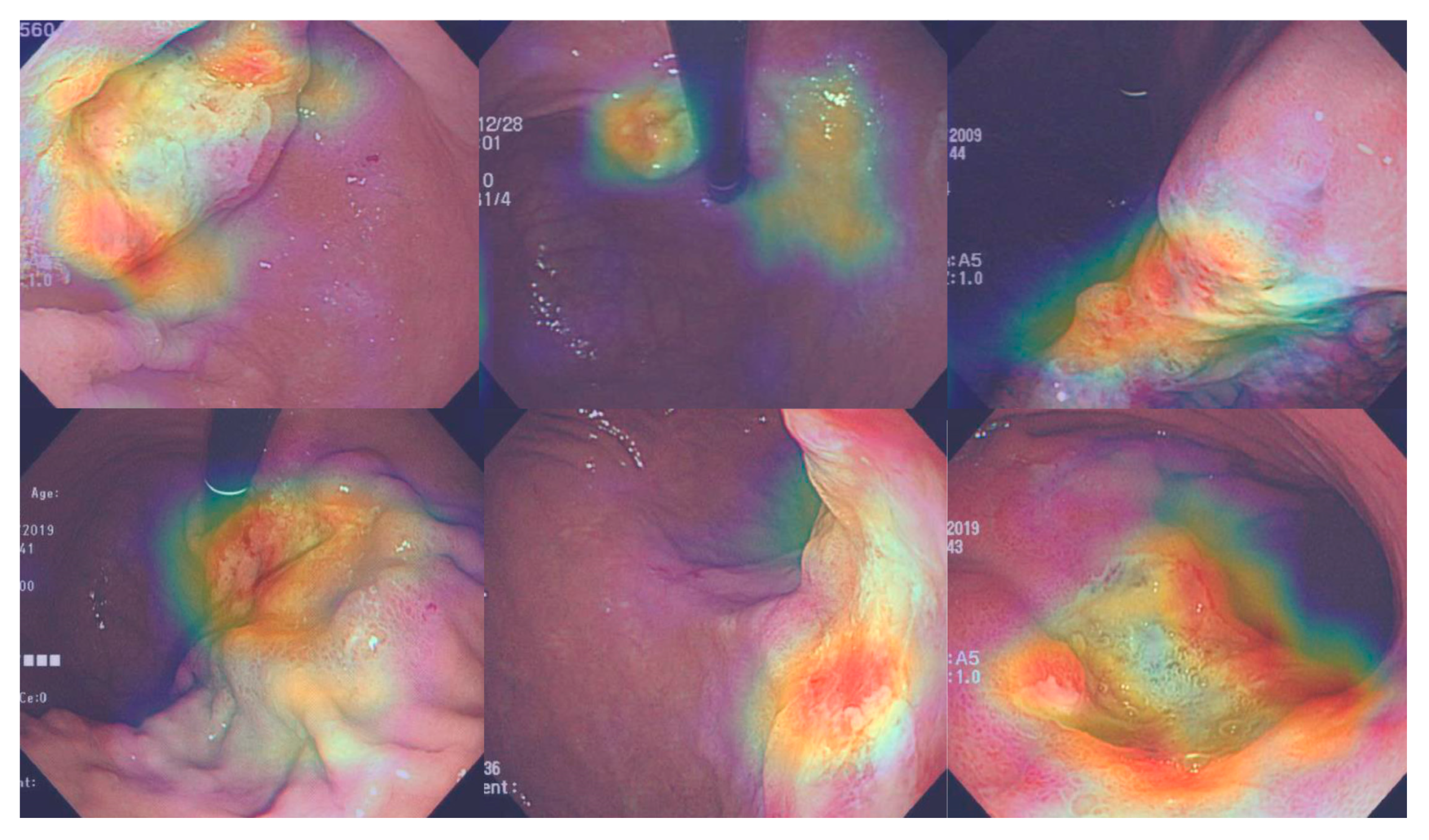

3.5. Attention Maps

4. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DL | deep-learning |
| LNM | lymph node metastasis |
| EGC | early gastric cancer |
| CNN | convolutional neural network |
| ESD | endoscopic submucosal dissection |
| WLI | white-light imaging |
| AGC | advanced gastric cancer |
| CAM | class activation map |
| AUC | area under the curve |
| CI | confidence interval |


| Lesion type | Whole dataset, images | Whole dataset, patients | Training set, images | Training set, patients | Internal test set, images | Internal test set, patients | External test set, images | External test set, patients |
|---|---|---|---|---|---|---|---|---|
| Overall | 2899 | 846 | 2590 | 762 | 309 | 85 | 206 | 197 |
| Mucosa-confined lesions | 1900 | 580 | 1693 | 522 | 207 | 58 | 126 | 119 |
| Low-grade dysplasia | 727 | 233 | 630 | 205 | 97 | 28 | 68 | 66 |
| High-grade dysplasia | 421 | 131 | 390 | 123 | 31 | 8 | 21 | 21 |
| EGC (mucosa-confined) | 752 | 230 | 673 | 205 | 79 | 25 | 37 | 37 |
| Submucosa-invaded lesions | 999 | 270 | 897 | 243 | 102 | 27 | 80 | 78 |
| EGC (submucosa-invaded) | 282 | 81 | 242 | 71 | 40 | 10 | 23 | 23 |
| AGC | 717 | 189 | 655 | 172 | 62 | 17 | 57 | 55 |
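The image counts in the dataset composition table can be cross-checked with a few lines of Python. The sketch below is illustrative only: the numbers are copied from the table, while the dictionary layout, the helper names, and the reading that the whole dataset equals the training set plus the internal test set (with the external test set kept separate) are assumptions inferred from the counts, not statements taken from the paper's text. The two EGC rows are labeled by their parent category so they can be told apart.

```python
# Image counts copied from the dataset composition table.
# Each tuple is (whole, training, internal_test, external_test).
# The key names and this layout are illustrative, not from the paper.
images = {
    "Overall": (2899, 2590, 309, 206),
    "Mucosa-confined lesions": (1900, 1693, 207, 126),
    "Low-grade dysplasia": (727, 630, 97, 68),
    "High-grade dysplasia": (421, 390, 31, 21),
    "EGC (mucosa-confined)": (752, 673, 79, 37),
    "Submucosa-invaded lesions": (999, 897, 102, 80),
    "EGC (submucosa-invaded)": (282, 242, 40, 23),
    "AGC": (717, 655, 62, 57),
}


def split_is_consistent(row):
    """Assumed reading: whole dataset = training set + internal test set."""
    whole, training, internal_test, _external_test = row
    return whole == training + internal_test


def parts_sum_to_total(total_key, part_keys):
    """Check that subcategory counts add up to their parent row, column by column."""
    total = images[total_key]
    sums = tuple(sum(images[key][col] for key in part_keys) for col in range(4))
    return sums == total


all_splits_ok = all(split_is_consistent(row) for row in images.values())
mucosa_ok = parts_sum_to_total(
    "Mucosa-confined lesions",
    ["Low-grade dysplasia", "High-grade dysplasia", "EGC (mucosa-confined)"],
)
invaded_ok = parts_sum_to_total(
    "Submucosa-invaded lesions", ["EGC (submucosa-invaded)", "AGC"]
)
overall_ok = parts_sum_to_total(
    "Overall", ["Mucosa-confined lesions", "Submucosa-invaded lesions"]
)

# Share of submucosa-invaded images in the whole dataset (999 of 2899).
invaded_fraction = images["Submucosa-invaded lesions"][0] / images["Overall"][0]
print(all_splits_ok, mucosa_ok, invaded_ok, overall_ok, round(invaded_fraction, 3))
```

The same check is not applied to the patient columns here, because a patient could plausibly contribute images to more than one category or split.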

| Model | AUC | Accuracy (%) | Sensitivity (%) | Specificity (%) | Positive Predictive Value (%) | Negative Predictive Value (%) |
|---|---|---|---|---|---|---|
| Whole dataset | | | | | | |
| Inception-Resnet-v2 | 0.786 (0.779–0.793) | 77.4 (76.7–78.0) | 72.5 (71.5–73.6) | 72.9 (71.3–74.6) | 56.9 (55.2–58.7) | 84.4 (83.6–85.1) |
| DenseNet-161 | 0.887 (0.849–0.924) | 84.1 (81.6–86.7) | 78.8 (75.4–82.2) | 80.0 (76.8–83.2) | 66.1 (61.6–70.6) | 88.4 (86.4–90.4) |
| EGC (n = 119) | | | | | | |
| Inception-Resnet-v2 | 0.612 (0.599–0.626) | 66.1 (65.0–67.2) | 56.7 (55.0–58.3) | 57.0 (54.1–59.9) | 40.0 (38.3–41.8) | 72.2 (70.9–73.4) |
| DenseNet-161 | 0.694 (0.607–0.781) | 71.4 (67.1–75.8) | 60.8 (54.9–66.7) | 61.6 (54.2–69.0) | 44.7 (37.7–51.7) | 75.5 (70.4–80.6) |

| Model | AUC | Accuracy (%) | Sensitivity (%) | Specificity (%) | Positive Predictive Value (%) | Negative Predictive Value (%) |
|---|---|---|---|---|---|---|
| Whole dataset | | | | | | |
| Inception-Resnet-v2 | 0.769 (0.755–0.783) | 74.1 (71.0–77.2) | 72.5 (72.5–72.5) | 74.3 (73.0–75.7) | 64.2 (62.9–65.5) | 81.0 (80.7–81.3) |
| DenseNet-161 | 0.887 (0.863–0.910) | 77.3 (75.4–79.3) | 80.4 (79.6–81.3) | 80.7 (78.5–83.0) | 72.6 (70.1–75.1) | 86.6 (85.9–87.4) |
| EGC (n = 60) | | | | | | |
| Inception-Resnet-v2 | 0.609 (0.572–0.647) | 65.0 (61.7–68.3) | 58.0 (55.1–60.8) | 62.2 (56.9–67.5) | 52.2 (40.8–63.6) | 70.4 (67.3–73.4) |
| DenseNet-161 | 0.747 (0.712–0.782) | 67.2 (64.4–70.1) | 65.2 (65.2–65.2) | 70.3 (67.2–73.4) | 57.8 (55.3–60.3) | 76.5 (75.7–77.2) |
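For readers less familiar with the column headings in the performance tables, the following minimal Python sketch shows how accuracy, sensitivity, specificity, and the predictive values are conventionally derived from a 2x2 confusion matrix. It assumes that submucosal invasion is treated as the positive class. The function name and the example counts are hypothetical and chosen only for easy arithmetic; they are not taken from the study, and the sketch does not reproduce the authors' evaluation pipeline.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Standard binary diagnostic metrics from confusion-matrix counts.

    tp: invaded lesions predicted as invaded (assumed positive class)
    fp: mucosa-confined lesions predicted as invaded
    tn: mucosa-confined lesions predicted as mucosa-confined
    fn: invaded lesions predicted as mucosa-confined
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),  # share of invaded lesions detected
        "specificity": tn / (tn + fp),  # share of confined lesions cleared
        "ppv": tp / (tp + fp),          # positive predictive value
        "npv": tn / (tn + fn),          # negative predictive value
    }


# Hypothetical counts for illustration only (not data from the study).
metrics = diagnostic_metrics(tp=80, fp=40, tn=160, fn=20)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.1f}%")
```

Unlike sensitivity and specificity, the predictive values depend on the proportion of invaded lesions in the test set, so they are not directly comparable between test sets with different case mixes.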

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cho, B.-J.; Bang, C.S.; Lee, J.J.; Seo, C.W.; Kim, J.H. Prediction of Submucosal Invasion for Gastric Neoplasms in Endoscopic Images Using Deep-Learning. J. Clin. Med. 2020, 9, 1858. https://doi.org/10.3390/jcm9061858
