KidneyNeXt: A Lightweight Convolutional Neural Network for Multi-Class Renal Tumor Classification in Computed Tomography Imaging

Abstract

1. Introduction

1.1. Literature Review

1.2. Motivation and Our Model

1.3. Novelties and Contributions

- KidneyNeXt presents a novel CNN architecture that integrates parallel convolutional pathways, group convolutions, and multiscale feature extraction modules. This design enables the model to capture both fine-grained local features and global structural information.

- The model is trained and tested on three distinct datasets collected from geographically and clinically diverse sources. This strategy enhances the model’s robustness against inter-institutional variability and improves its generalizability in real-world clinical settings.

- Initialized with ImageNet-1K pretrained weights, the model benefits from transfer learning and achieves high accuracy even with a relatively limited number of CT images. Despite its strong performance, the architecture remains lightweight at approximately 7.1 million parameters, which supports faster inference and a lower computational burden.

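- On the test sets, the model reached overall accuracies of 99.76%, 99.96%, and 99.74% on the collected, Kaggle CT KIDNEY, and KAUH: Jordan datasets, respectively, with overall precision, recall, and F1 scores above 99.7% across benign, malignant, and normal kidney tissue classes, outperforming several existing models reported in the literature.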

- The balance between accuracy and computational efficiency demonstrated by KidneyNeXt suggests its potential utility as a semi-automated decision support tool in clinical workflows.
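The parameter savings that group convolutions offer, one of the design elements listed above, can be illustrated with a short calculation. The sketch below counts the learnable parameters of a single 2D convolution with and without channel grouping. The channel widths, kernel size, and group count are hypothetical values chosen for illustration; they are not taken from the KidneyNeXt configuration.

```python
def conv2d_params(c_in: int, c_out: int, kernel: int,
                  groups: int = 1, bias: bool = True) -> int:
    """Learnable parameters of a 2D convolution with a square kernel."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    # Each output channel only sees c_in / groups input channels.
    weights = (c_in // groups) * c_out * kernel * kernel
    return weights + (c_out if bias else 0)


# Hypothetical layer sizes, for illustration only.
dense = conv2d_params(96, 192, kernel=3, groups=1)
grouped = conv2d_params(96, 192, kernel=3, groups=4)

print(f"standard conv: {dense} parameters")
print(f"4-group conv:  {grouped} parameters")
```

With four groups the weight tensor shrinks by a factor of four, while the bias term is unchanged. This is the general mechanism by which grouped layers help keep a network's parameter count low.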

2. Materials and Methods

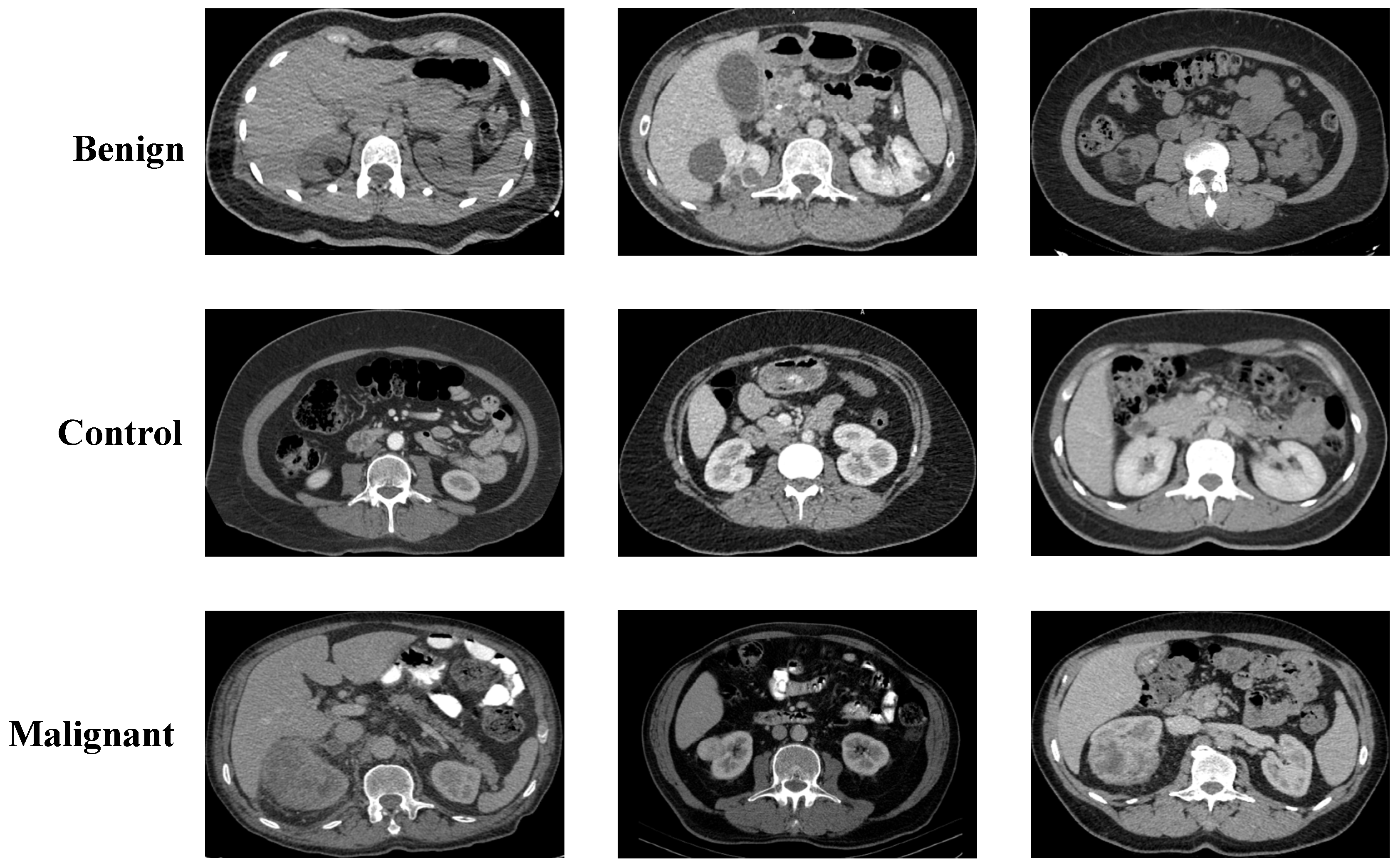

2.1. Collected Dataset

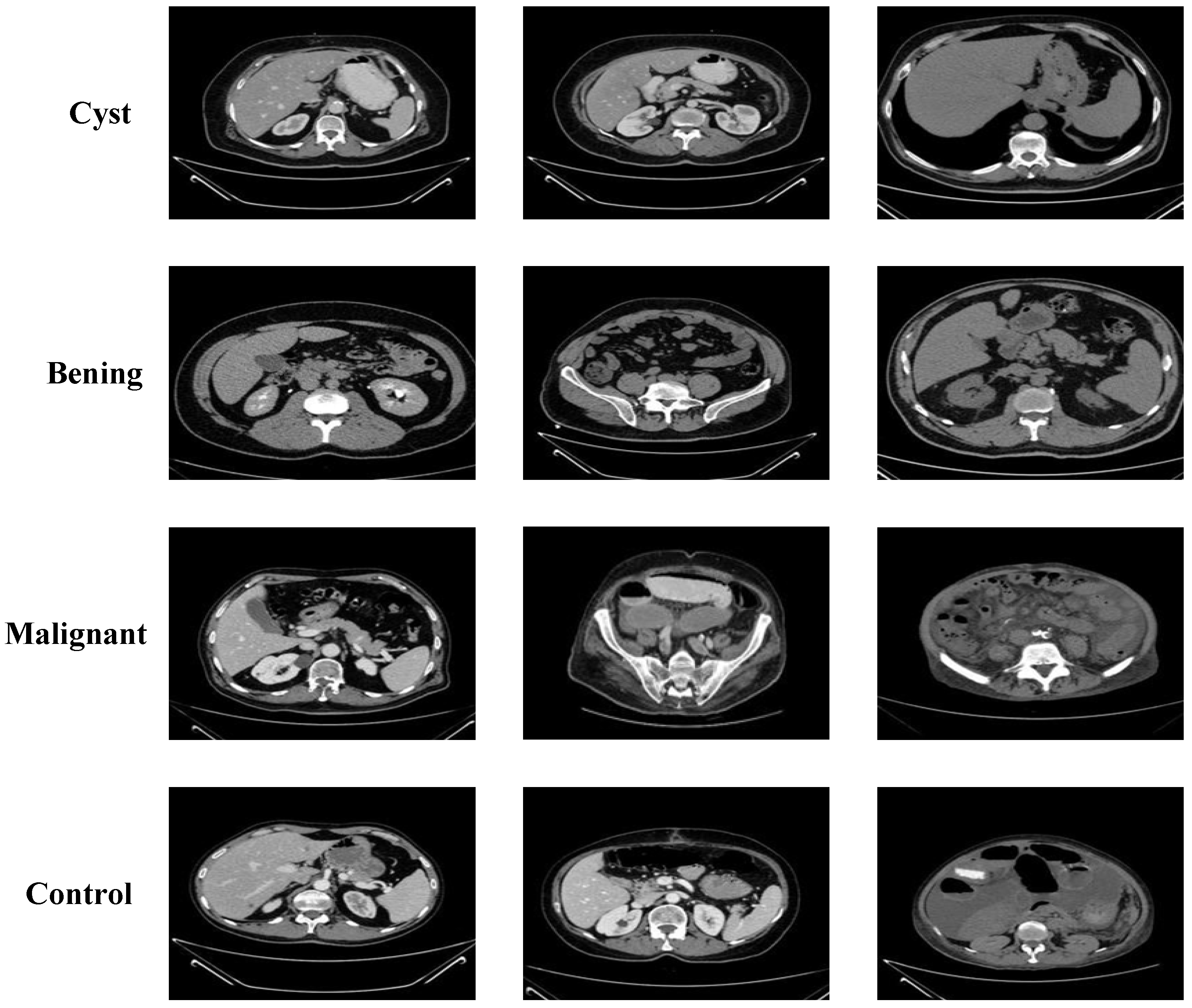

2.2. Kaggle CT KIDNEY Dataset

2.3. KAUH: Jordan Dataset

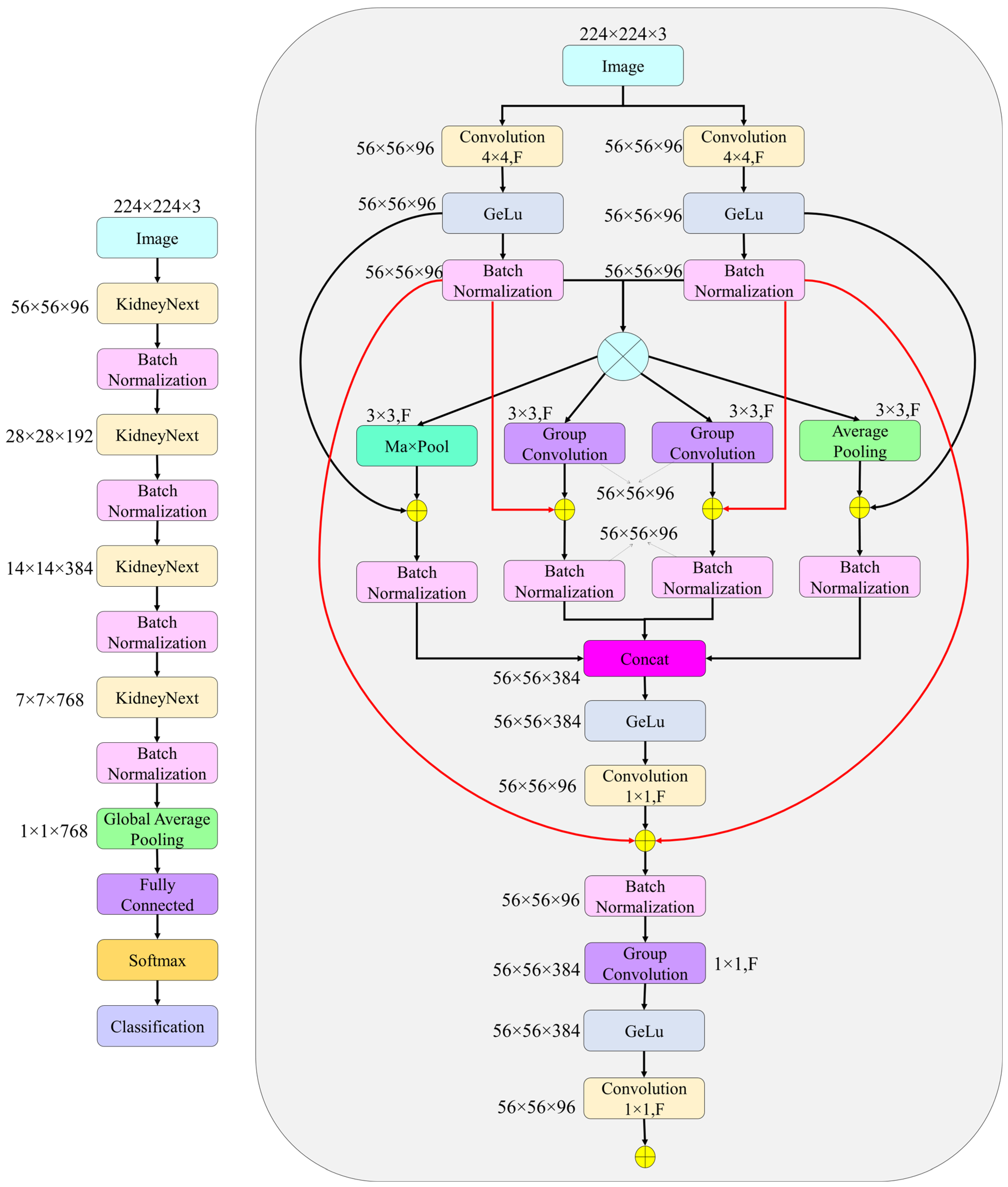

2.4. The Proposed KidneyNeXt

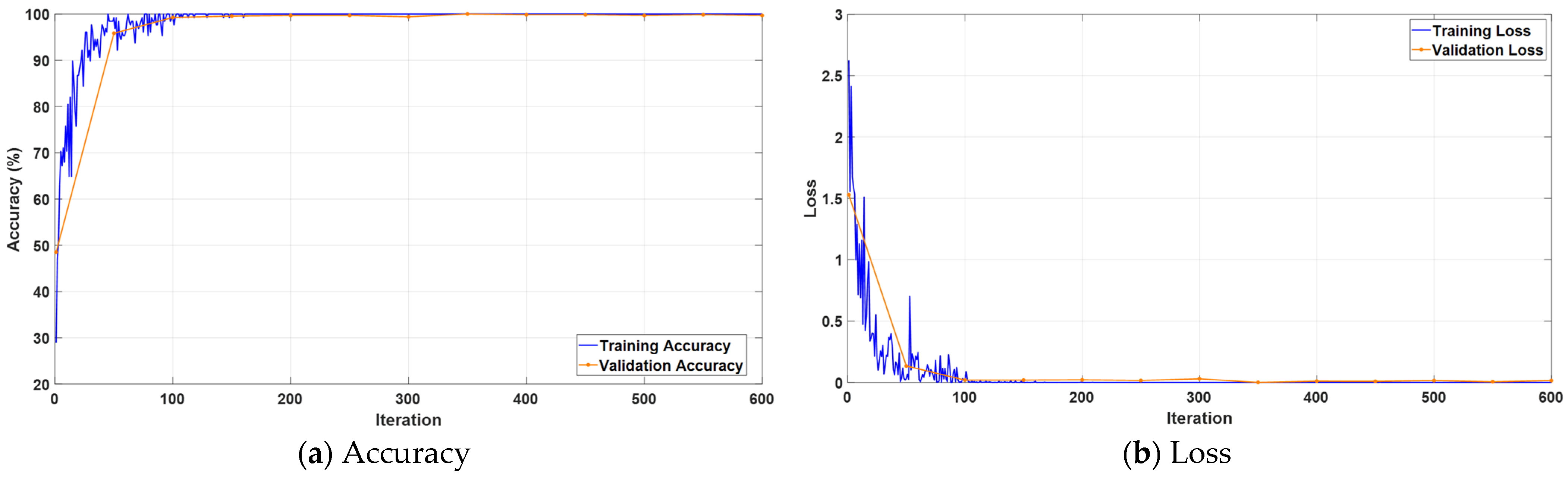

3. Experimental Results

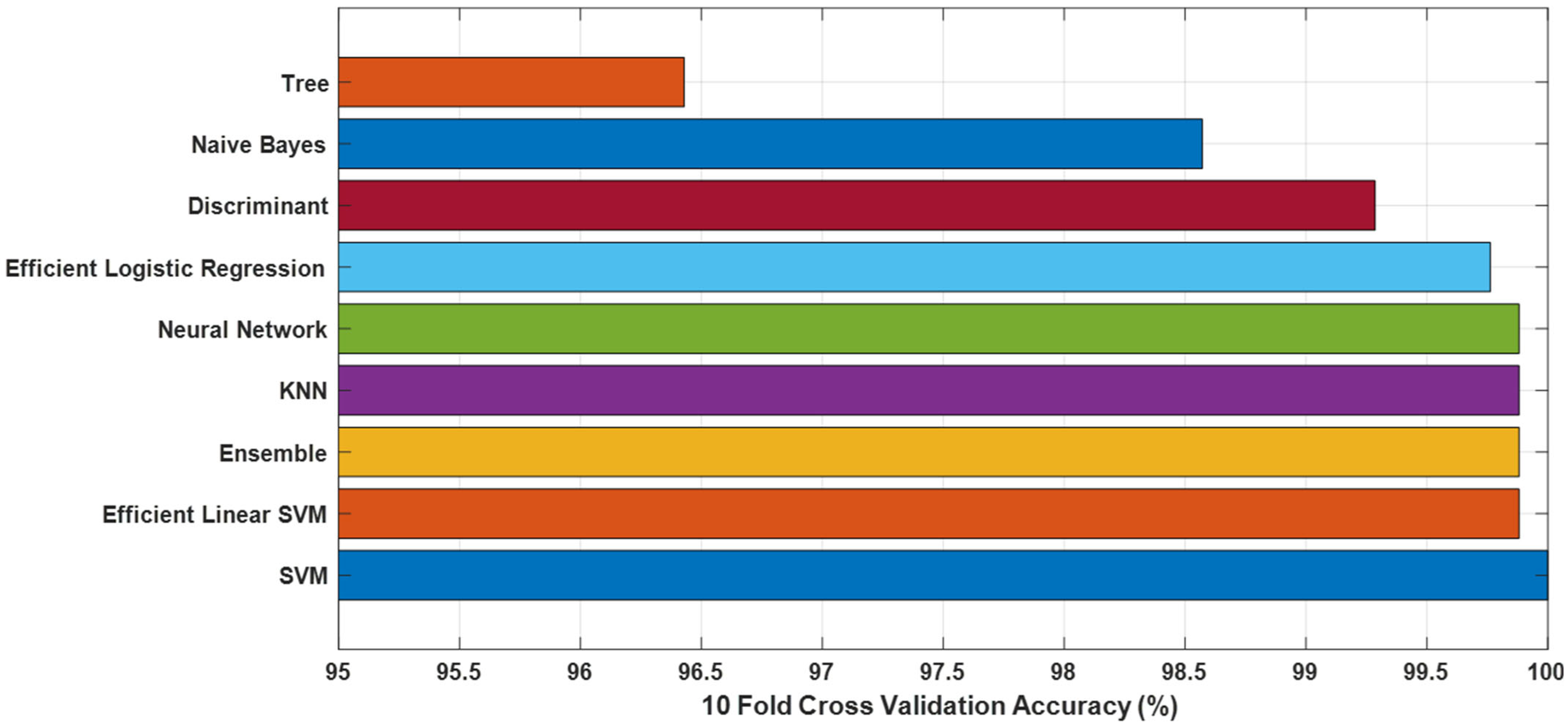

3.1. Performance Evaluation on Collected Dataset

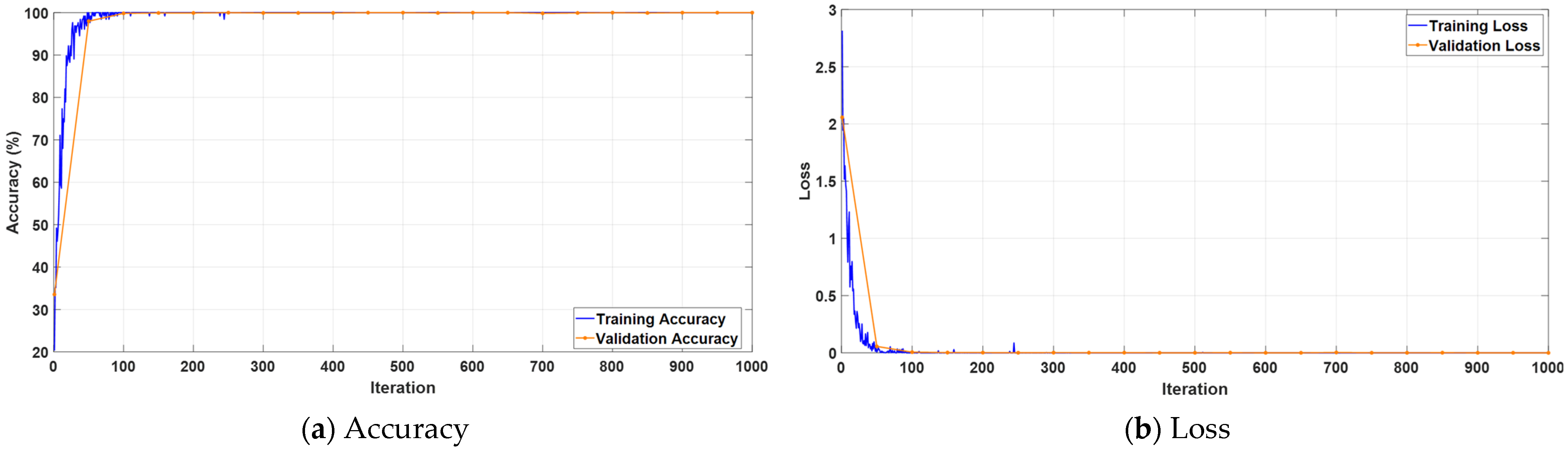

3.2. Performance Evaluation on Kaggle CT KIDNEY Dataset

3.3. Performance Evaluation on KAUH: Jordan Dataset

4. Discussion

- The datasets employed in this study were not sourced from a multi-center framework, which may limit the diversity of the study population. This lack of multi-institutional representation could introduce population bias and affect the model’s generalizability to broader clinical settings.

- The current approach relies exclusively on imaging data. Important clinical variables such as sex, body mass index (BMI), and renal function indicators were not included, which may constrain the model’s ability to account for inter-individual variability in real-world diagnostic settings.

- Future research efforts will aim to evaluate the generalizability of the proposed model using external datasets collected from institutions across varied geographic locations and population groups. Emphasis will be placed on including cohorts that represent diverse ethnic, demographic, and clinical profiles to ensure robustness and fairness across different real-world scenarios.

- Upcoming work will also aim to incorporate structured clinical metadata, including demographic and laboratory information, into the classification pipeline. This integration is expected to improve the model’s interpretability, clinical relevance, and overall diagnostic robustness.

- Future research will explore the practical integration of the KidneyNeXt model into clinical workflows, including inference time analysis, PACS compatibility, and system interoperability. Pilot studies and usability evaluations in real clinical settings will be conducted to assess feasibility and acceptance.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Linehan, W.M.; Vasselli, J.; Srinivasan, R.; Walther, M.M.; Merino, M.; Choyke, P.; Vocke, C.; Schmidt, L.; Isaacs, J.S.; Glenn, G. Genetic basis of cancer of the kidney: Disease-specific approaches to therapy. Clin. Cancer Res. 2004, 10, 6282S–6289S.

- Petejova, N.; Martinek, A. Renal cell carcinoma: Review of etiology, pathophysiology and risk factors. Biomed. Pap. Med. Fac. Palacky Univ. Olomouc 2016, 160, 183–194.

- Venyo, A.K.-G. Rare Tumours of the Kidney: Review and Update; Cambridge Scholars Publishing: Newcastle upon Tyne, UK, 2025.

- Linehan, W.M.; Rathmell, W.K. Kidney cancer. In Proceedings of the Urologic Oncology: Seminars and Original Investigations; Elsevier: Amsterdam, The Netherlands, 2012; pp. 948–951.

- Du, Z.; Chen, W.; Xia, Q.; Shi, O.; Chen, Q. Trends and projections of kidney cancer incidence at the global and national levels, 1990–2030: A Bayesian age-period-cohort modeling study. Biomark. Res. 2020, 8, 16.

- Cirillo, L.; Innocenti, S.; Becherucci, F. Global epidemiology of kidney cancer. Nephrol. Dial. Transplant. 2024, 39, 920–928.

- Jung, M.; Li, M.; Choo, E.; Lee, S.; Spiegel, D.; Baiocchi, M.; Deng, Z.; Li, J.; Langston, M.E.; Bondy, M.L. Incidence of depression and anxiety in kidney cancer survivors: A nationwide population-based cohort study. Cancer Epidemiol. Biomark. Prev. 2025, 34, OF1–OF9.

- Roychowdhury, M.; Bansal, R. The Importance of Routine Check-Ups as the Incidence of Renal Tumors Increases in Younger Populations. Institute of Urology. 2024. Available online: https://www.instituteofurology.in/the-importance-of-routine-check-ups-as-the-incidence-of-renal-tumors-increases-in-younger-populations/ (accessed on 23 June 2025).

- Chow, W.-H.; Dong, L.M.; Devesa, S.S. Epidemiology and risk factors for kidney cancer. Nat. Rev. Urol. 2010, 7, 245–257.

- Scelo, G.; Larose, T.L. Epidemiology and risk factors for kidney cancer. J. Clin. Oncol. 2018, 36, 3574–3581.

- Pascual, D.; Borque, A. Epidemiology of kidney cancer. Adv. Urol. 2008, 2008, 782381.

- Moore, L.E.; Wilson, R.T.; Campleman, S.L. Lifestyle factors, exposures, genetic susceptibility, and renal cell cancer risk: A review. Cancer Investig. 2005, 23, 240–255.

- Wang, S.; Galbo, M.D.L.; Blair, C.; Thyagarajan, B.; Anderson, K.E.; Lazovich, D.; Prizment, A. Diabetes and kidney cancer risk among post-menopausal women: The Iowa women’s health study. Maturitas 2021, 143, 190–196.

- Aganovic, L.; Nörenberg, D. Benign and Malignant Renal Disease. In Diseases of the Abdomen and Pelvis 2023–2026: Diagnostic Imaging; Hodler, J., Kubik-Huch, R.A., Roos, J.E., von Schulthess, G.K., Eds.; Springer International Publishing: Cham, Germany, 2023; pp. 153–168.

- Lyske, J.; Mathew, R.P.; Hutchinson, C.; Patel, V.; Low, G. Multimodality imaging review of focal renal lesions. Egypt. J. Radiol. Nucl. Med. 2021, 52, 14.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Abdelrahman, A.; Viriri, S. EfficientNet family U-Net models for deep learning semantic segmentation of kidney tumors on CT images. Front. Comput. Sci. 2023, 5, 1235622.

- Bolocan, V.-O.; Secareanu, M.; Sava, E.; Medar, C.; Manolescu, L.S.C.; Cătălin Rașcu, A.-Ș.; Costache, M.G.; Radavoi, G.D.; Dobran, R.-A.; Jinga, V. Convolutional Neural Network Model for Segmentation and Classification of Clear Cell Renal Cell Carcinoma Based on Multiphase CT Images. J. Imaging 2023, 9, 280.

- Ghosh, A.; Chaki, J. Fuzzy enhanced kidney tumor detection: Integrating Machine Learning Operations for a fusion of twin transferable network and weighted ensemble machine learning classifier. IEEE Access 2025, 13, 7135–7159.

- Anush, A.; Rohini, G.; Nicola, S.; WalaaEldin, E.M.; Eranga, U. Deep-learning-based ensemble method for fully automated detection of renal masses on magnetic resonance images. J. Med. Imaging 2023, 10, 024501.

- Kittipongdaja, P.; Siriborvornratanakul, T. Automatic kidney segmentation using 2.5 D ResUNet and 2.5 D DenseUNet for malignant potential analysis in complex renal cyst based on CT images. EURASIP J. Image Video Process. 2022, 2022, 5.

- Magherini, R.; Servi, M.; Volpe, Y.; Campi, R.; Buonamici, F. Distinguishing Kidney Tumor Types Using Radiomics Features and Deep Features. IEEE Access 2024, 12, 2169–3536.

- Pavarut, S.; Preedanan, W.; Kumazawa, I.; Suzuki, K.; Kobayashi, M.; Tanaka, H.; Ishioka, J.; Matsuoka, Y.; Fuji, Y. Improving kidney tumor classification with multi-modal medical images recovered partially by conditional CycleGAN. IEEE Access 2023, 11, 146250–146261.

- Khan, Z.A.; Waqar, M.; Khan, H.U.; Chaudhary, N.I.; Khan, A.T.; Ishtiaq, I.; Khan, F.A.; Raja, M.A.Z. Fine-tuned deep transfer learning: An effective strategy for the accurate chronic kidney disease classification. PeerJ Comput. Sci. 2025, 11, e2800.

- Patel, V.V.; Yadav, A.R.; Zhao, W.; Kumar, R. Adaptive 3D Trans-ResUnet and Multiscale Trans-RAN for Enhanced Kidney Tumor Segmentation and Classification. Trait. Du Signal 2025, 42, 311–331.

- Zhao, T.; Sun, Z.; Guo, Y.; Sun, Y.; Zhang, Y.; Wang, X. Automatic renal mass segmentation and classification on CT images based on 3D U-Net and ResNet algorithms. Front. Oncol. 2023, 13, 1169922.

- Islam, M.N.; Hasan, M.; Hossain, M.K.; Alam, M.G.R.; Uddin, M.Z.; Soylu, A. Vision transformer and explainable transfer learning models for auto detection of kidney cyst, stone and tumor from CT-radiography. Sci. Rep. 2022, 12, 11440.

- Alzu’bi, D.; Abdullah, M.; Hmeidi, I.; AlAzab, R.; Gharaibeh, M.; El-Heis, M.; Almotairi, K.H.; Forestiero, A.; Hussein, A.M.; Abualigah, L. Kidney tumor detection and classification based on deep learning approaches: A new dataset in CT scans. J. Healthc. Eng. 2022, 2022, 3861161.

- Vapnik, V. The support vector method of function estimation. In Nonlinear Modeling: Advanced Black-Box Techniques; Springer: Berlin/Heidelberg, Germany, 1998; pp. 55–85.

- Vapnik, V. The Nature of Statistical Learning Theory; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013.

- Peterson, L.E. K-nearest neighbor. Scholarpedia 2009, 4, 1883.

- Olson, M.; Wyner, A.; Berk, R. Modern neural networks generalize on small data sets. Adv. Neural Inf. Process. Syst. 2018, 31.

- Kingsford, C.; Salzberg, S.L. What are decision trees? Nat. Biotechnol. 2008, 26, 1011–1013.

- Ng, A.; Jordan, M. On discriminative vs. generative classifiers: A comparison of logistic regression and naive bayes. Adv. Neural Inf. Process. Syst. 2001, 14.

- Khan, S.U.R. Multi-level feature fusion network for kidney disease detection. Comput. Biol. Med. 2025, 191, 110214.

- Loganathan, G.; Palanivelan, M. An explainable adaptive channel weighting-based deep convolutional neural network for classifying renal disorders in computed tomography images. Comput. Biol. Med. 2025, 192, 110220.

- Rehman, A.; Mahmood, T.; Saba, T. Robust kidney carcinoma prognosis and characterization using Swin-ViT and DeepLabV3+ with multi-model transfer learning. Appl. Soft Comput. 2025, 170, 112518.

- Prabhu, A.; Nedungatt, S.; Lal, S.; Kini, J. ProsGradNet: An effective and structured CNN approach for prostate cancer grading from histopathology images. Biomed. Signal Process. Control. 2025, 105, 107626.

- Yan, J.; Liu, P.; Xiong, T.; Han, M.; Jia, Q.; Gao, Y. LRCTNet: A lightweight rectal cancer T-staging network based on knowledge distillation via a pretrained swin transformer. Biomed. Signal Process. Control. 2025, 105, 107696.

- Ayogu, I.; Daniel, C.; Ayogu, B.; Odii, J.; Okpalla, C.; Nwokorie, E. Investigation of Ensembles of Deep Learning Models for Improved Chronic Kidney Diseases Detection in CT Scan Images. Frankl. Open 2025, 11, 100298.

- Shanmathi, K.; Shri Varshini, S.; Varsha, M.; Indrakumar, S.; PraveenKumar, G. Deep Neural Network Models for Comprehensive Kidney Stone Prediction. In Proceedings of the International Conference on Artificial Intelligence and Smart Energy, Coimbatore, Tamilnadu, India, 30–31 January 2025; pp. 304–319.

- Kashyap, K.T.; Bhuvi, M.; Sunayana, P.; Dattu, D.N.; Chato, L. Classification of Kidney Diseases Using Deep Neural Networks. In Proceedings of the 2025 IEEE 15th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 6–8 January 2025; pp. 00045–00050.

- Kulandaivelu, G.; Suchitra, M.; Pugalenthi, R.; Lalit, R. An Implementation of Adaptive Multi-CNN Feature Fusion Model with Attention Mechanism with Improved Heuristic Algorithm for Kidney Stone Detection. Comput. Intell. 2025, 41, e70028.

- Hossain, M.J.; Monir, M.F.; Ahmed, T. Optimized ROI Extraction and Pixel Reduction Methods for Kidney Abnormality Detection. In Proceedings of the SoutheastCon 2025, Concord, NC, USA, 22–30 March 2025; pp. 1091–1096.

- Kulkarni, S.; Rao, S.; Chopra, T.K.; SP, Y.; KJ, B.; KR, S. Optimizing Kidney Stone Detection with Vision Transformers and CNNs: A Transfer Learning Strategy. In Proceedings of the 2025 International Conference on Pervasive Computational Technologies (ICPCT), Greater Noida, India, 8–9 February 2025; pp. 829–833.

- Sharon, J.J.; Anbarasi, L.J. An attention enhanced dilated bottleneck network for kidney disease classification. Sci. Rep. 2025, 15, 9865.

- Pimpalkar, A.; Saini, D.K.J.B.; Shelke, N.; Balodi, A.; Rapate, G.; Tolani, M. Fine-tuned deep learning models for early detection and classification of kidney conditions in CT imaging. Sci. Rep. 2025, 15, 10741.

- Zain, R.H.; Defit, S. Development of an Ultrasound Image Extraction Method for Detection and Classification of Kidney Abnormalities Using a Convolutional Neural Network. Int. J. Online Biomed. Eng. 2025, 2, 150–170.

- Chaki, J.; Uçar, A. An efficient and robust approach using inductive transfer-based ensemble deep neural networks for kidney stone detection. IEEE Access 2024, 12, 32894–32910.

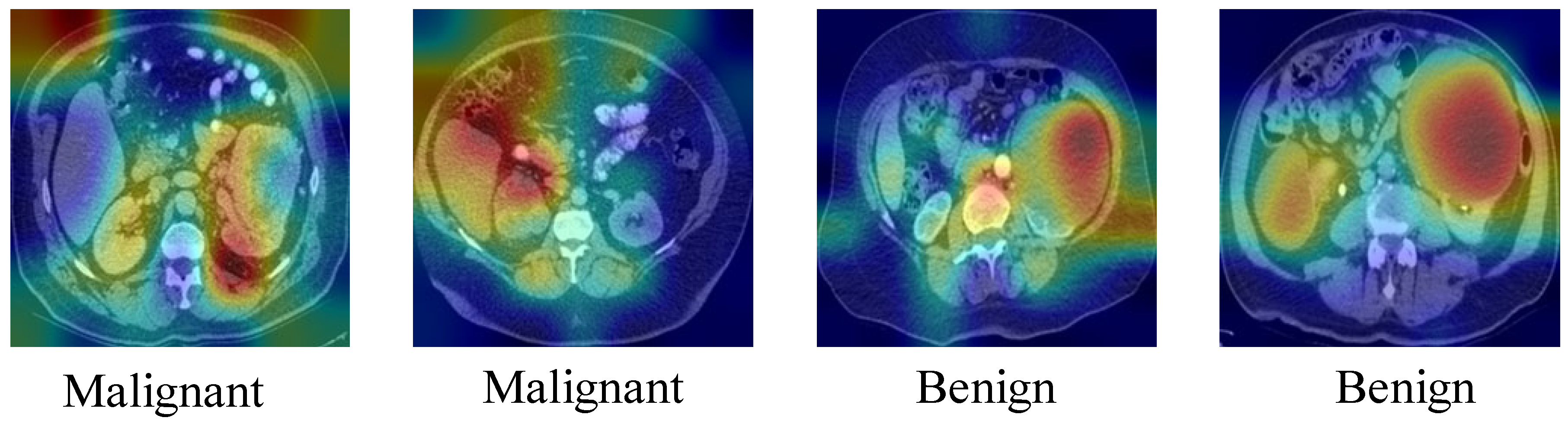

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

| Layer | Operation | Input | Output |
|---|---|---|---|
| Stem | Two parallel conv layers (4 × 4), BN, GELU | 224 × 224 | 56 × 56 × 96 |
| KidneyNeXt 1 | 3 × 3 MaxPool, 3 × 3 AvgPool, GroupConv (×2), BN, GELU, 1× | 56 × 56 × 96 | 28 × 28 × 192 |
| KidneyNeXt 2 | 3 × 3 MaxPool, 3 × 3 AvgPool, GroupConv (×2), BN, GELU, 1× | 28 × 28 × 192 | 14 × 14 × 384 |
| KidneyNeXt 3 | 3 × 3 MaxPool, 3 × 3 AvgPool, GroupConv (×2), BN, GELU, 1× | 14 × 14 × 384 | 7 × 7 × 768 |
| KidneyNeXt 4 | 3 × 3 MaxPool, 3 × 3 AvgPool, GroupConv (×2), BN, GELU, 1× | 7 × 7 × 768 | 1 × 1 × 768 |
| Output | Global Average Pooling, FC, Softmax, Classification | 1 × 1 × 768 | Number of classes |
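The feature-map sizes in the table above follow from the standard output-size formula for convolution and pooling layers, $\lfloor (n + 2p - k)/s \rfloor + 1$. The sketch below traces the stem and the first three stages. The strides and padding are assumptions, since the table lists only kernel sizes: the 4 × 4 stem is taken to use stride 4 without padding, and each 3 × 3 pooling step is taken to use stride 2 with padding 1. The fourth stage, which the table reports as collapsing to 1 × 1 × 768, is not traced.

```python
def out_size(n: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


# Assumed hyperparameters (not stated in the table):
#   stem: 4x4 kernel, stride 4, no padding
#   stages: 3x3 pooling, stride 2, padding 1, channel width doubled per stage
size = out_size(224, kernel=4, stride=4)
channels = 96
trace = [("Stem", size, channels)]

for stage in (1, 2, 3):
    size = out_size(size, kernel=3, stride=2, padding=1)
    channels *= 2
    trace.append((f"KidneyNeXt {stage}", size, channels))

for name, s, c in trace:
    print(f"{name}: {s} x {s} x {c}")
```

Under these assumptions the trace reproduces the tabulated outputs from 56 × 56 × 96 down to 7 × 7 × 768.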

| Class | Train Image Count | Test Image Count |
|---|---|---|
| Benign | 1535 | 384 |
| Control | 906 | 227 |
| Malignant | 918 | 229 |

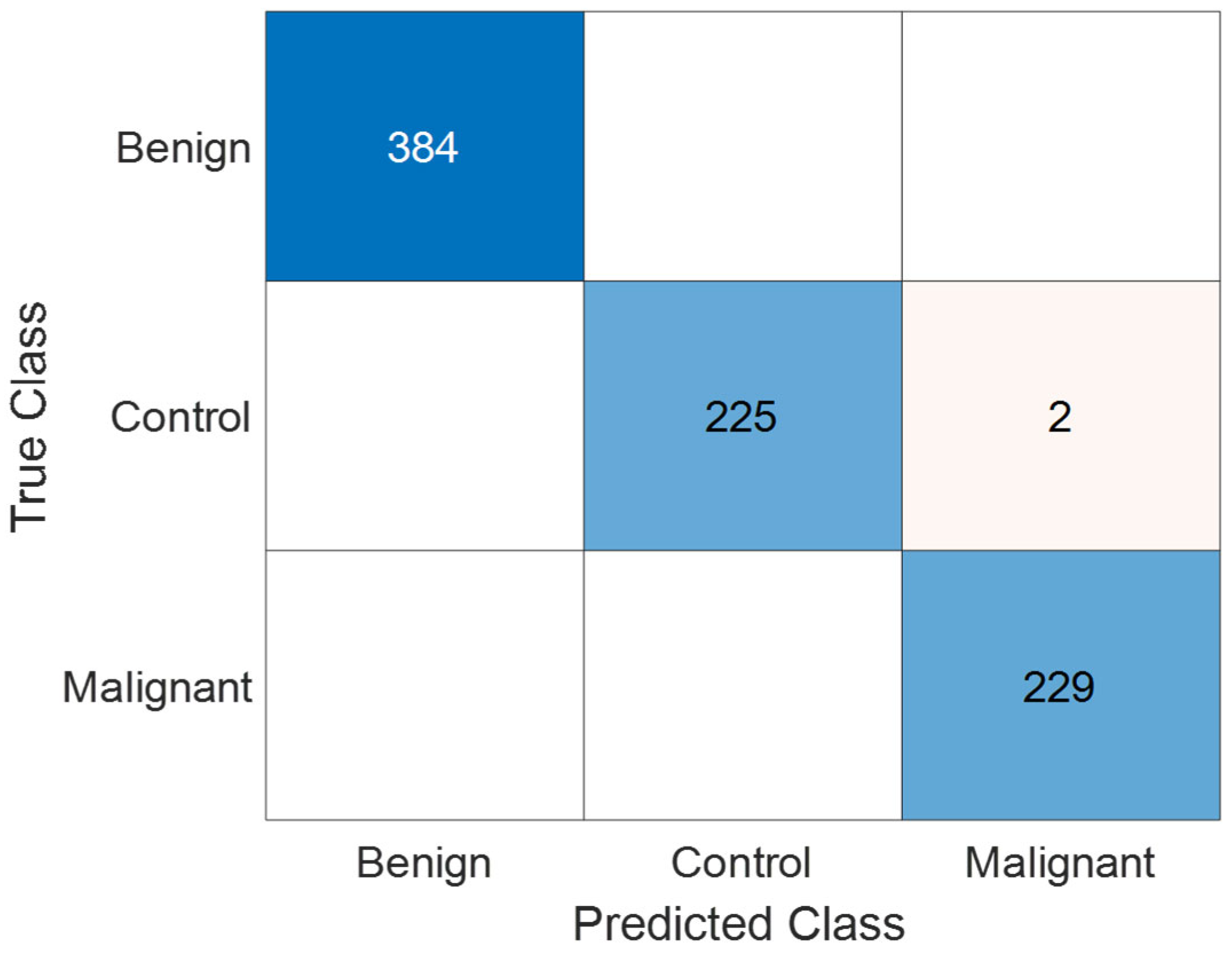

| Class | TP | TN | FP | FN | Precision (%) | Recall (%) | F1 Score (%) | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| Benign | 384 | 456 | 0 | 0 | 100 | 100 | 100 | 100 |
| Control | 225 | 613 | 0 | 2 | 100 | 99.12 | 99.56 | 99.76 |
| Malignant | 229 | 609 | 2 | 0 | 99.13 | 100 | 99.57 | 99.76 |
| Overall | | | | | 99.71 | 99.71 | 99.71 | 99.76 |
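The per-class figures in the Benign/Control/Malignant results table above can be recomputed from the one-vs-rest counts with the usual definitions of precision, recall, F1, and accuracy. The sketch below does so. It also assumes that the Overall row macro-averages precision, recall, and F1 across classes and reports overall accuracy as correct predictions over all test images. That aggregation is an assumption, although it is consistent with the tabulated values.

```python
def one_vs_rest_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Precision, recall, F1 and accuracy from one-vs-rest counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# (TP, TN, FP, FN) per class, copied from the table above.
counts = {
    "Benign": (384, 456, 0, 0),
    "Control": (225, 613, 0, 2),
    "Malignant": (229, 609, 2, 0),
}

per_class = {name: one_vs_rest_metrics(*c) for name, c in counts.items()}

# Assumed aggregation: unweighted (macro) mean over classes.
macro = {
    key: sum(m[key] for m in per_class.values()) / len(per_class)
    for key in ("precision", "recall", "f1")
}

# Each row of counts sums to the test-set size; correct predictions are the TPs.
total = sum(counts["Benign"])
overall_accuracy = sum(c[0] for c in counts.values()) / total

for name, m in per_class.items():
    print(name, {k: round(100 * v, 2) for k, v in m.items()})
print("Overall", {k: round(100 * v, 2) for k, v in macro.items()},
      round(100 * overall_accuracy, 2))
```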

| Class | Train Image Count | Test Image Count |
|---|---|---|
| Cyst | 3557 | 1332 |
| Normal | 4875 | 1828 |
| Stone | 1329 | 502 |
| Tumor | 2187 | 818 |

| Class | TP | TN | FP | FN | Precision (%) | Recall (%) | F1 Score (%) | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| Cyst | 1331 | 3147 | 1 | 1 | 99.92 | 99.92 | 99.92 | 99.96 |
| Normal | 1828 | 2652 | 0 | 0 | 100 | 100 | 100 | 100 |
| Stone | 501 | 3978 | 0 | 1 | 100 | 99.8 | 99.9 | 99.98 |
| Tumor | 818 | 3661 | 1 | 0 | 99.88 | 100 | 99.94 | 99.98 |
| Overall | | | | | 99.95 | 99.93 | 99.94 | 99.96 |

| Class | Train Image Count | Test Image Count |
|---|---|---|
| Benign | 2128 | 532 |
| Cyst | 1064 | 266 |
| Malignant | 1232 | 308 |
| Normal | 1792 | 448 |
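The train and test counts in the Benign/Cyst/Malignant/Normal table above are consistent with an 80/20 split applied separately within each class. The source does not describe the splitting procedure, so the following is only a minimal sketch of one way such a stratified split could be produced. The function name `stratified_split`, the seed, and the use of integer indices in place of image files are all hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return (train_idx, test_idx) with the same test fraction in every class."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)  # random assignment within the class
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return train_idx, test_idx


# Per-class totals implied by the table above (train + test).
class_totals = {"Benign": 2660, "Cyst": 1330, "Malignant": 1540, "Normal": 2240}
labels = [name for name, n in class_totals.items() for _ in range(n)]

train_idx, test_idx = stratified_split(labels)

for name in class_totals:
    n_train = sum(1 for i in train_idx if labels[i] == name)
    n_test = sum(1 for i in test_idx if labels[i] == name)
    print(f"{name}: train={n_train}, test={n_test}")
```

Splitting within each class keeps the class proportions identical in both partitions, which matters when the classes are as unevenly sized as they are here.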

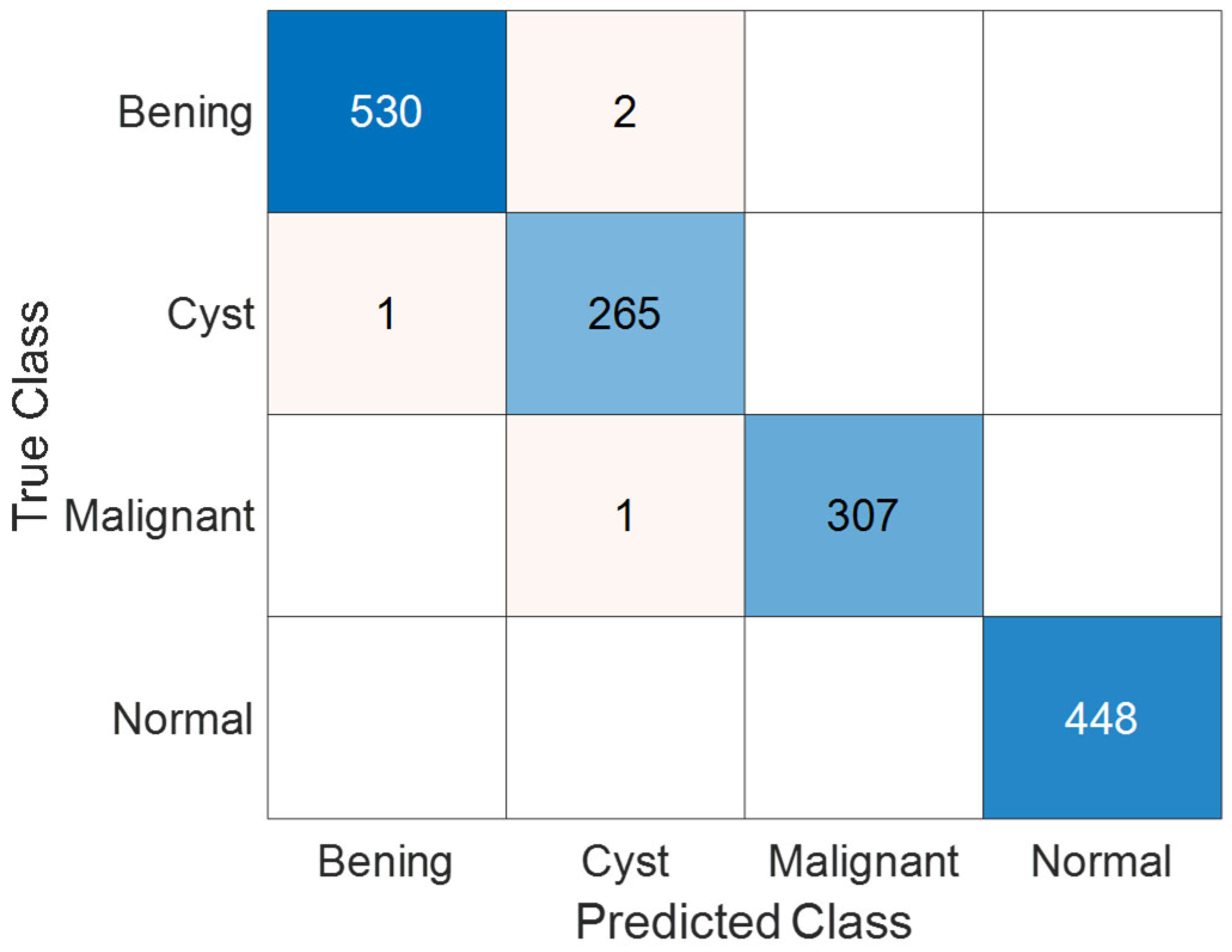

| Class | TP | TN | FP | FN | Precision (%) | Recall (%) | F1 Score (%) | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| Benign | 530 | 1020 | 2 | 2 | 99.62 | 99.62 | 99.62 | 99.74 |
| Cyst | 265 | 1286 | 2 | 1 | 99.25 | 99.62 | 99.44 | 99.81 |
| Malignant | 307 | 1246 | 0 | 1 | 100 | 99.68 | 99.84 | 99.94 |
| Normal | 448 | 1106 | 0 | 0 | 100 | 100 | 100 | 100 |
| Overall | | | | | 99.72 | 99.73 | 99.72 | 99.74 |
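The TP, TN, FP, and FN columns in the four-class table above are one-vs-rest counts, which can be read off a multiclass confusion matrix. The sketch below shows the derivation. The matrix in it is an assumption: it was reconstructed so that its row and column sums agree with the tabulated counts, and it was not copied from the paper's figures.

```python
classes = ["Benign", "Cyst", "Malignant", "Normal"]

# Rows are true classes, columns are predicted classes.
# ASSUMPTION: reconstructed from the tabulated TP/FP/FN values,
# not taken from the paper's confusion-matrix figure.
confusion = [
    [530, 2, 0, 0],
    [1, 265, 0, 0],
    [1, 0, 307, 0],
    [0, 0, 0, 448],
]


def one_vs_rest_counts(matrix, k):
    """Return (TP, TN, FP, FN) for class index k."""
    total = sum(sum(row) for row in matrix)
    tp = matrix[k][k]
    fn = sum(matrix[k]) - tp                  # true class k, predicted otherwise
    fp = sum(row[k] for row in matrix) - tp   # predicted k, true class otherwise
    tn = total - tp - fn - fp
    return tp, tn, fp, fn


counts = {name: one_vs_rest_counts(confusion, k)
          for k, name in enumerate(classes)}

for name, (tp, tn, fp, fn) in counts.items():
    print(f"{name}: TP={tp} TN={tn} FP={fp} FN={fn}")
```

Because each class is scored against all others combined, the true negatives dominate every row, which is why per-class accuracy stays high even for the class with the most errors.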

| Study | Methodology | Number of Samples | Limitation | Results (%) |
|---|---|---|---|---|
| Islam et al. (2022) [27] | Swin Transformer, VGG16, CCT, ResNet, InceptionV3, EANet | Dataset1: 12,446; Dataset2: 9212 | Weaker DL models showed poor performance | Dataset1-Swin: Acc 99.30%, Prec 99.30–99.60%, Rec 98.10–100.00%, F1 98.50–99.60%; Densenet201+RF: Acc 99.44%. Dataset2-Swin: Acc 99.52%, VGG16: 97.15% |
| Alzu’bi et al. (2022) [28] | 2D CNN (6-layer), ResNet50, VGG16 | 4800 CT images (210 patients) | Low VGG16 performance | CNN-6: 97.00%, ResNet50: 96.00%, VGG16: 60.00% |
| Khan et al. (2025) [35] | ConvLSTM + Inception + Fusion | Dataset1: 2886; Dataset2: 12,446 | Class imbalance; no direct limitation specified | Dataset1-Acc 99.30%, Prec 98.00%, Rec 100.00%, F1 99.00%. Dataset2-Acc 91.31%, Prec 69–100%, Rec 75–100%, F1 76–96% |
| Loganathan et al. (2025) [36] | EACWNet (CNN + SA-CAM) | 12,446 (5077 Normal, 3709 Cyst, 2283 Tumor, 1377 Stone) | Low precision in stone class; inter-class variation | Acc 98.87%, Prec 98.25%, Rec 98.71%, F1 98.48% |
| Rehman et al. (2025) [37] | Swin ViT + DeepLabV3+ + TL | 12,446 (5077 Normal, 3709 Cyst, 2283 Tumor, 1377 Stone) | High time/memory cost in some models; ground-truth absence | Acc 99.20%, F1 99.50%, Prec 99.10%, Spec 99.30% |
| Prabhu et al. (2025) [38] | ProsGradNet (CNN + CGSCR + Context Block) | Prostate: 11,684 train/2854 test/2939 val; KMC: 3432 train/506 test/503 val | High inference time; needs optimization | Prostate-Acc 92.88%, F1 92.92%, Prec 92.91%, Rec 92.93%. KMC-Acc 92.68%, F1 92.63%, Prec 92.76%, Rec 92.73% |
| Yan et al. (2025) [39] | LRCTNet (LightFire + ResLightFire + Swin distillation) | 3090 from 318 patients | Single-center data; poor T1-T2 differentiation; CT only | Acc 95.79%, Prec 93.91%, Rec 93.48%, F1 93.70%, MCC 94.38% |
| Ayogu et al. (2025) [40] | Ensembles: InceptionV3, CCT, SwinT + VGG16, EANet, ResNet50 | 12,446 (5077 Normal, 3709 Cyst, 2283 Tumor, 1377 Stone) | Stone class most challenging; low recall in some models | Acc 99.67%, Prec 99.10%, Rec 100.00% |
| Shanmathi et al. (2025) [41] | Deep Neural Network (DNN) | 12,446 (5077 Normal, 3709 Cyst, 2283 Tumor, 1377 Stone) | Only CT-based; no additional biological data | Acc 96.31%, Prec 94.10%, Rec 96.31% |
| Kashyap et al. (2025) [42] | CNN (custom), ViT (scratch & TL), VGG19, ResNet50 | 12,446 (Train: 10,955; Test: 1249; Val: 1242) | ViT scratch failed; inter-class similarity; data imbalance | ViT-TL: Acc 99.60%, CNN: 98.16%, ResNet50: 98.48%, VGG19: 94.64% |
| Kulandaivelu et al. (2025) [43] | AMC-AM (VGG16 + ResNet + Inception) + MSD-CMPA | Not specified in abstract | Poor small-stone detection; weak localization | Acc 95.44%, Prec 95.13%, Rec 95.44%, F1 95.29%, MCC 90.87% |
| Hossain et al. (2025) [44] | EfficientNet-B7 + ROI + Pixel Reduction | 12,446 (5077 Normal, 3709 Cyst, 2283 Tumor, 1377 Stone) | InceptionV3 weak; pixel reduction added limited value | Acc 99.75%, Prec 98.45%, Rec 99.02%, F1 98.78%, AUC 95.78% |
| Kulkarni et al. (2025) [45] | ResNet-50 + Vision Transformer (Hybrid) | 9410 (5915 Normal, 3495 Stone) | Overfitting in some models; data imbalance; explainability emphasized | Acc 99.50%, Loss 2.83% (ResNet+ViT); XResNet 99.36%, MobileNet 98.69%, SwinT 98.13% |
| Sharon & Anbarasi (2025) [46] | DBAR-Net (attention + dilated CNN) | 8750 | High class overlap; some class identification difficult | Acc 96.86%, Prec 98.00%, Rec 98.00%, F1 98.00% |
| Pimpalkar et al. (2025) [47] | Fine-tuned CNNs (VGG16, ResNet50, AlexNet, InceptionV3) | 12,446 (5077 Normal, 3709 Cyst, 2283 Tumor, 1377 Stone) | Extremely high accuracy may risk generalizability; high resource demand | InceptionV3: 99.96%, VGG16: 100.00%, ResNet50: 99.85%, AlexNet: 100.00%, CNN: 60.89% |
| Zain et al. (2025) [48] | CGPCAP (Canny + GLCM + PCA) + CNN classifier | 200 (Train: 160, Test: 40) | Small sample size; variable ultrasound quality | Acc 97.50%, Prec 93.75%, Rec 93.75%, F1 93.75%, Spec 98.43% |
| Chaki & Uçar (2024) [49] | DarkNet19, InceptionV3, ResNet101 + Ensemble + KNN + Bayesian CV | 12,446 (5077 Normal, 3709 Cyst, 2283 Tumor, 1377 Stone) | Performance varies by data quality; sample size may be limited | Acc 99.80% (clean), 96.70% (noisy) |
| Proposed Model | KidneyNeXt | Dataset1: 3199 (Train: 1535/906/918; Test: 384/227/229); Dataset2: 12,446 (Train: 11,948; Test: 1332/1828/502/818); Dataset3: 7770 (Train: 7216; Test: 532/266/308/448) | - | Dataset1-Acc 99.76%, Prec 99.71%, Rec 99.71%, F1 99.71%. Dataset2-Acc 99.96%, Prec 99.95%, Rec 99.93%, F1 99.94%. Dataset3-Acc 99.74%, Prec 99.72%, Rec 99.73%, F1 99.72% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Maçin, G.; Genç, F.; Taşcı, B.; Dogan, S.; Tuncer, T. KidneyNeXt: A Lightweight Convolutional Neural Network for Multi-Class Renal Tumor Classification in Computed Tomography Imaging. J. Clin. Med. 2025, 14, 4929. https://doi.org/10.3390/jcm14144929
