Abstract

Background: This study aimed to investigate the accuracy and completeness of ChatGPT in answering questions and solving clinical scenarios in interceptive orthodontics. Materials and Methods: Ten specialized orthodontists from ten Italian postgraduate orthodontics schools developed 21 open-ended clinical questions encompassing all of the subspecialties of interceptive orthodontics and 7 comprehensive clinical cases. Questions and scenarios were entered into ChatGPT-4, and the resulting answers were evaluated by the researchers using predefined Likert scales for accuracy (range 1–6) and completeness (range 1–3). Results: For the open-ended questions, the overall median score was 4.9/6 for accuracy and 2.4/3 for completeness. The reviewers rated the accuracy of the open-ended answers as entirely correct (score 6 on the Likert scale) in 40.5% of cases and their completeness as fully comprehensive (score 3 on the Likert scale) in 50.5% of cases. For the clinical cases, the overall median score was 4.9/6 for accuracy and 2.5/3 for completeness. The reviewers rated the accuracy of the clinical case answers as entirely correct in 46% of cases and their completeness as fully comprehensive in 54.3% of cases. Conclusions: The AI responses showed a high level of accuracy and completeness and a strong ability to solve difficult clinical cases, but the answers were not 100% accurate and complete. ChatGPT is not yet sophisticated enough to replace the intellectual work of human beings.

1. Introduction

The use of artificial intelligence (AI) has recently spread to all sectors, and ChatGPT is one of the most widely adopted examples. Chat Generative Pre-trained Transformer (ChatGPT) [1] is an advanced AI language model developed by OpenAI (San Francisco, CA, USA). The first version was released in November 2022 and quickly became the fastest-growing application in history, reaching 100 million active users by February 2023 [2]. The version used in this study is based on the GPT-4 architecture and is designed to understand and generate human-like text responses in a conversation. ChatGPT has been trained on a wide range of data sources, enabling it to provide information, answer questions, and engage in conversation across various topics.

Alternatives to ChatGPT have also recently emerged, and AI has become a common research tool for gaining knowledge, including on healthcare questions. The use of ChatGPT in healthcare has substantial potential. First, it could assist healthcare practitioners in diagnosing medical conditions by examining patient symptoms, medical history, and other relevant data, potentially leading to faster and more accurate diagnoses. Second, ChatGPT can contribute to the synthesis and analysis of extensive medical literature, possibly leading to the identification of new treatments and medications or a deeper understanding of diseases [3,4,5,6,7,8]. Third, it has the potential to serve as an additional educational resource for medical students and professionals by offering explanations, responding to queries, and assisting in the revision of medical concepts [9,10]. Finally, it could power virtual assistants that help patients manage their health by providing details about medications and treatments and by addressing common health-related inquiries. These potential uses illustrate the positive influence that ChatGPT could have on healthcare [11,12,13,14,15].

However, these applications come with several limitations and ethical concerns, such as credibility [16] and plagiarism [17,18]. Uses of ChatGPT should adhere to the EU's ethics guidelines for trustworthy AI, which emphasize the crucial role of human oversight, technical robustness and safety, privacy, and data governance [19]. Before ChatGPT is implemented, these potential limitations and ethical considerations need to be thoroughly assessed and addressed [20].

The authors searched the literature for articles on ChatGPT and interceptive orthodontics and found none that analyze the use of ChatGPT in this field. Interceptive orthodontics was chosen because it is of great interest to orthodontics residents and dental students and accounts for the majority of orthodontic treatments provided by the Italian national health service. This study was therefore designed to assess the accuracy and completeness of ChatGPT's answers to open-ended questions and clinical cases in interceptive orthodontics.

2. Materials and Methods

In March 2023, a research group was established to investigate the potential applications of AI platforms in interceptive orthodontics. The group recruited ten specialized orthodontists holding a postgraduate degree from ten different Italian orthodontics postgraduate schools, covering a wide range of expertise and experience. The postgraduate orthodontics schools that participated in this study were those of the University of Siena, the University of Ferrara, the University of Cagliari, “Sapienza” University of Rome, the University of Milan, the University of Turin, the University of Rome Tor Vergata, the “Cattolica” University of Rome, the University of Chieti, and the University of Trieste. The aim was to explore the usefulness and reliability of AI platforms in various tasks and decision-making processes related to interceptive orthodontics. Under the Italian legislation on clinical investigations in force at the time of the study, this study did not require ethics committee approval because it did not involve patients.

For this study, the participating researchers were divided into seven working groups according to their areas of expertise, to ensure comprehensive coverage of the main areas of interceptive orthodontics: Class II malocclusion, Class III malocclusion, cephalometric analysis, dental inclusion (impacted teeth), open bite, atypical swallowing, and deep bite. Each group drew on its specific expertise to develop three open-ended clinical questions designed to challenge the AI platform. The difficulty level of each question was assessed subjectively by the researchers, based on their expertise and understanding of the subject matter.

The researchers also prepared seven comprehensive clinical scenarios inspired by real cases assessed at their affiliated orthodontics departments. Each scenario included a detailed patient history, the patient's age, and the signs and symptoms exhibited, with the primary objective of assessing the AI platform's accuracy and completeness in interpreting the clinical context and then proposing a suitable diagnostic pathway.

Upon completion, all questions and clinical cases were reviewed by the entire research group, which revised and approved the text after checking its quality and completeness, so that each item posed an appropriate and correct challenge to the AI platform. The complete set of clinical questions and scenarios is presented in Table 1 and Table 2.

Table 1.

The 21 open-ended questions submitted to ChatGPT-4 and the answers obtained. For each answer, the mean accuracy and completeness scores and the Shapiro–Wilk p-value were calculated.

Table 2.

The 7 clinical scenarios submitted to ChatGPT-4 and the answers obtained. For each answer, the mean accuracy and completeness scores and the Shapiro–Wilk p-value were calculated.

To ensure consistency, a single researcher entered all of the questions and clinical scenarios into ChatGPT-4 on 21 April 2023. Before submitting each question, the researcher instructed the AI to provide specific answers that took the available guidelines into account. For the clinical scenarios, the AI was also asked to identify the most probable diagnosis.

All responses obtained through this process were recorded and provided to the researchers for evaluation, so that each answer was evaluated by all ten researchers. As described by Johnson et al. [21], the researchers assessed the accuracy and completeness of the answers to the open-ended questions and clinical scenarios using two predefined scales. For accuracy, a six-point Likert scale [22] was used, with 1 representing a completely incorrect response, 2 more incorrect than correct elements, 3 an equal balance of correct and incorrect elements, 4 more correct than incorrect elements, 5 nearly all correct elements, and 6 an entirely correct response. For completeness, a three-point Likert scale was used: 1 denoted an incomplete answer that addressed only some aspects of the question, with significant parts missing or incomplete; 2 an adequate answer that addressed all aspects of the question and provided the minimum information required for completeness; and 3 a comprehensive response that covered all aspects of the question and offered additional information or context beyond expectations.
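Because each answer received ten independent scores, a single answer's summary depends on how those scores are aggregated. The minimal Python sketch below encodes the two scales as lookup tables (the labels are abbreviated paraphrases of the definitions above, and the names `ACCURACY_SCALE`, `COMPLETENESS_SCALE`, and `answer_median` are hypothetical, not part of the study's workflow), checks that every rating lies on its scale, and returns the median. The ratings are illustrative, not study data.

```python
from statistics import median

# Hypothetical encoding of the two Likert scales; labels paraphrase the study's definitions.
ACCURACY_SCALE = {
    1: "completely incorrect",
    2: "more incorrect than correct",
    3: "equal balance of correct and incorrect",
    4: "more correct than incorrect",
    5: "nearly all correct",
    6: "entirely correct",
}
COMPLETENESS_SCALE = {
    1: "incomplete",
    2: "adequate",
    3: "comprehensive",
}


def answer_median(ratings: list[int], scale: dict[int, str]) -> float:
    """Median of one answer's ratings after checking that each lies on the scale."""
    if not ratings:
        raise ValueError("no ratings supplied")
    for r in ratings:
        if r not in scale:
            raise ValueError(f"rating {r} is not on the scale {sorted(scale)}")
    return median(ratings)


# Illustrative accuracy ratings from ten reviewers for one answer (not study data).
accuracy = [5, 6, 4, 5, 6, 6, 6, 5, 4, 6]
print(answer_median(accuracy, ACCURACY_SCALE))  # prints 5.5
```

With an even number of reviewers, the median is the mean of the two central ratings, so a single answer's median can fall on a half point even though every individual rating is an integer.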

Statistical Analyses

Statistical analyses were performed using Jamovi version 2.3.18.0, a free, open-source statistical software package available at www.jamovi.org (accessed on 1 August 2023) [23]. Categorical variables are reported as counts and percentages of the total. The Shapiro–Wilk test was used to verify the normality of the distribution. The level of statistical significance was set at p < 0.05, with a 95% confidence interval. Interrater reliability was assessed with Cronbach's α, also computed in Jamovi.
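Cronbach's α is defined as α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), where k is the number of raters, σ²ᵢ is the variance of rater i's scores across answers, and σ²ₜ is the variance of the per-answer total scores. The study does not state how the ratings were arranged in Jamovi, so the minimal Python sketch below assumes that raters are treated as items and answers as cases. It uses only the standard library, the function name `cronbach_alpha` is hypothetical, and it is not the Jamovi implementation.

```python
from statistics import variance


def cronbach_alpha(scores_by_rater: list[list[float]]) -> float:
    """Cronbach's alpha with raters as items and answers as cases.

    scores_by_rater[i][j] is rater i's score for answer j.
    """
    k = len(scores_by_rater)
    if k < 2:
        raise ValueError("need at least two raters")
    n = len(scores_by_rater[0])
    if n < 2 or any(len(row) != n for row in scores_by_rater):
        raise ValueError("every rater must score the same answers (at least two)")
    # Sum of each rater's sample variance across answers.
    item_var_sum = sum(variance(row) for row in scores_by_rater)
    # Sample variance of the total score each answer received from all raters.
    totals = [sum(row[j] for row in scores_by_rater) for j in range(n)]
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - item_var_sum / total_var)
```

For example, `cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]])` returns 0.75 up to floating-point rounding, while two raters who give identical scores yield 1.0. Sample and population variances give the same α as long as one is used consistently, because the correction factor cancels in the ratio.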

3. Results

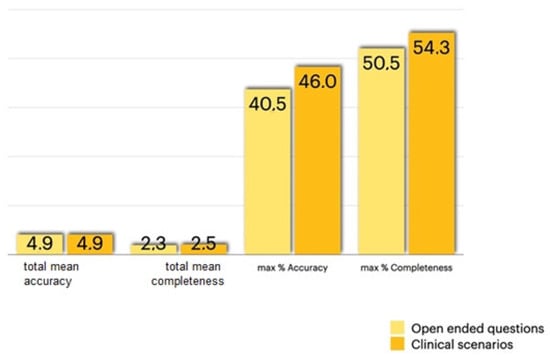

For the open-ended questions, the overall median score was 4.9/6 for accuracy and 2.4/3 for completeness. The reviewers rated the open-ended answers as entirely correct (accuracy score 6) in 40.5% of cases and as comprehensive, covering all aspects of the question (completeness score 3), in 50.5% of cases (Figure 1).
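The study does not state whether these percentages were computed over answers or over individual reviewer ratings. Assuming the latter (21 questions, each rated by 10 reviewers), both reported figures correspond to whole-number counts. The counts 85 and 106 below are back-calculated for illustration and are not reported in the study:

$$
N = 21 \times 10 = 210, \qquad \frac{85}{210} \approx 40.5\,\%, \qquad \frac{106}{210} \approx 50.5\,\%
$$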

Figure 1.

Total mean accuracy, total mean completeness, maximum accuracy, and maximum completeness of the open-ended questions and clinical scenarios.

For the clinical cases, the overall median score was 4.9/6 for accuracy and 2.5/3 for completeness. The reviewers rated the clinical case answers as entirely correct (accuracy score 6) in 46% of cases and as comprehensive, covering all aspects of the question (completeness score 3), in 54.3% of cases (Figure 1).

For the open-ended questions, Cronbach's α was 0.640 for accuracy and 0.524 for completeness; for the clinical scenarios, it was 0.487 for accuracy and 0.636 for completeness.

4. Discussion

The extensive use of ChatGPT in healthcare has both strengths and weaknesses [24]. One of its main strengths is the ability to provide personalized health information and support to individuals. One of its main weaknesses is the potential for misinterpretation or miscommunication, as language models may not always accurately understand the nuances of human language and context [25,26]. Ethics is one of the most important aspects to assess when dealing with AI. ChatGPT processes and stores confidential health data, including personal information and medical records entered by patients in the chat box [27]. Guaranteeing the privacy and security of this information is crucial to prevent unauthorized access, data breaches, or identity fraud [28].

Regarding AI and ethics, in 2019 the European Union published ethics guidelines on the use and development of artificial intelligence systems [19]. In 2022, the University of Siena was the first Italian university to define its own guidelines on the use of ChatGPT by students and lecturers [29]. Finally, in October 2023, the World Health Organization also expressed its views on the ethics of AI [30].

Prior research has investigated the accuracy of information generated by ChatGPT in head and neck and oromaxillofacial surgery. In July 2023, Mago et al. [31] reported that GPT-3 was 100% accurate in describing radiographic landmarks in oral and maxillofacial radiology, but concluded that it cannot be considered a reliable reference because it is less attentive to detail and may give inaccurate information. In August 2023, Vaira et al. [32] conducted a multicenter collaborative study on the accuracy of ChatGPT-generated information on head and neck and oromaxillofacial surgery, in which the reviewers rated the answers as entirely or nearly entirely correct in 87.2% of cases and as comprehensive and covering all aspects of the question in 73% of cases. Nevertheless, they concluded that AI is not a reliable support for decision making in head and neck surgery. The values obtained by Vaira et al. are higher than ours, but in our study only entirely correct answers (accuracy score 6) and fully comprehensive answers (completeness score 3) were counted when calculating the percentages of accuracy and completeness.
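The effect of the counting criterion can be illustrated with a minimal Python sketch. The ratings below are hypothetical values for a single answer, not data from this study or from Vaira et al., and the function name `share_at_or_above` is hypothetical. The function reports the share of ratings at or above a chosen threshold, so a threshold of 6 corresponds to "entirely correct" and a threshold of 5 to "entirely or nearly entirely correct".

```python
def share_at_or_above(ratings: list[int], threshold: int) -> float:
    """Fraction of individual ratings that reach at least `threshold`."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    return sum(r >= threshold for r in ratings) / len(ratings)


# Hypothetical accuracy ratings (1-6) from ten reviewers; illustrative only.
example = [6, 5, 6, 4, 5, 6, 5, 5, 6, 3]

strict = share_at_or_above(example, 6)   # "entirely correct" only
lenient = share_at_or_above(example, 5)  # "entirely or nearly entirely correct"
print(f"score 6 only: {strict:.0%}, score 5 or 6: {lenient:.0%}")
# prints: score 6 only: 40%, score 5 or 6: 80%
```

With these illustrative ratings, the stricter criterion halves the reported percentage, which is why percentages computed with different thresholds are not directly comparable.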

To date, no scientific articles in the literature have examined the use of ChatGPT in interceptive orthodontics. Within orthodontics, Subramanian et al. [33], in their narrative review, state that AI is a promising tool for facilitating cephalometric tracing in routine clinical practice and for analyzing large databases for research purposes. In the present study, ChatGPT was asked three questions about cephalometry: question 7, "What is the cephalometric divergence value in skeletal Class II?"; question 8, "What is cephalometric tracing in orthodontics?"; and question 9, "What is the average value of the SNA angle in orthodontics?". The median score for answer 7 was 4/6 for accuracy and 2/3 for completeness; for answer 8, it was 5.4/6 for accuracy and 2.6/3 for completeness; and for answer 9, it was 5.3/6 for accuracy and 2.4/3 for completeness.

Tanaka et al. reported that ChatGPT provided quality answers on clear aligners, temporary anchorage devices, and digital imaging in orthodontics [34]. Their study design is very similar to ours, but in the present study the answers were evaluated by ten orthodontists holding a postgraduate degree from ten different Italian orthodontics postgraduate schools, whereas Tanaka et al.'s research group consisted of five general orthodontists.

Duran et al. report that ChatGPT provides highly reliable, high-quality, but challenging-to-read information on cleft lip and palate (CLP) and that this information must be verified by a qualified medical expert [35]. We agree with the latter statement: in the present study, ChatGPT stated in many answers that it is not an orthodontist and recommended consulting an expert orthodontist to solve the problem or to decide on the most appropriate appliance for the specific case. Thus, although ChatGPT gives answers, it never recommends relying on its instructions alone.

Monill-González et al. [36], in their review, state that AI technology may determine the cervical vertebral maturation stage with the same results as expert human observers. Moreover, AI technology may improve diagnostic accuracy in orthodontic treatment, helping the orthodontist work more accurately and efficiently. The authors of the present study cannot agree with them, because the ten specialized orthodontists in the present study did not rate ChatGPT's answers as consistently accurate and complete: although the total median accuracy was 4.9/6 for both the open-ended questions and the clinical cases, the highest proportion of answers rated entirely accurate was 46%, and the highest proportion rated fully comprehensive was 54.3%.

Ahmed et al. report that their study validated the high potential for developing an accurate caries detection model that will expedite caries identification, assess clinician decision making, and improve the quality of patient care [37]. In the view of the authors of the present study, the evaluation of radiographs is a relatively static task, whereas providing a comprehensive answer to an open question, or formulating a diagnosis and orthodontic treatment plan for a clinical case, is a task for which AI is not yet ready. For this reason, even though Monill-González et al. and Ahmed et al. state that AI may help the dentist or the orthodontist, in our opinion AI is not able to help the orthodontist with the interceptive orthodontics questions it was asked in this study.

Strunga et al. [38], in their scoping review, state that AI is an efficient instrument for managing orthodontic treatment from diagnosis to retention, that patients find the software easy to use, and that it makes diagnosis easier for clinicians. In our opinion, ChatGPT should not be a tool for patients, because the answers it gave were not 100% accurate and complete; moreover, ChatGPT bears no legal responsibility. Therefore, ChatGPT should not be considered an instrument to help the orthodontist make an orthodontic diagnosis or choose the best treatment. The software failed to show flair and originality and emphasized in several answers that the diagnosis should be entrusted to an orthodontic specialist. Vishwanathaiah et al. [39], in their review, report that AI cannot replace dental clinicians or pediatric dentists. In the present study, ChatGPT did not provide bibliographic references to support its answers. Some of its open-ended answers may appear incomplete, possibly because the chatbot lacked context for those questions; future research could provide more context and request a second answer.

The authors of this multicenter collaborative study acknowledge some limitations. A panel of ten specialized orthodontists is small and should be considered preliminary, although its strength is that the ten specialists belong to ten different Italian universities. Moreover, ChatGPT is not a professor or an expert that independently understands the nuances of orthodontics; it is a tool that adapts its responses to the information and context provided by the user. Questions should therefore be written in a detailed, clear, and accurate way, and the chat interface allows the user to refine and direct the conversation toward more precise and structured responses. In our study, however, only the first response given by ChatGPT was considered.

Future work should include a larger multicenter collaborative study involving more than ten specialized orthodontists from different universities, as well as a study involving both general and specialized orthodontists, in order to evaluate the information produced by artificial intelligence more comprehensively. Future research should also compare ChatGPT's answers with those of students and postgraduates to evaluate the differences between them.

Nonetheless, with proper precautions in place, ChatGPT can be considered an interesting and very promising technology. The results of this study are clinically relevant as new information on ChatGPT and orthodontics and could influence patient and parent education about interceptive orthodontics. ChatGPT is a digital tool that can be useful at work as a starting point for further research, but it is not yet sophisticated enough to replace the intellectual work of human beings. Further development of AI is needed before its answers can be considered fully accurate and complete.

5. Conclusions

Ten specialized orthodontists from ten Italian postgraduate orthodontics schools (Siena, Ferrara, Sapienza, Milan, Trieste, Tor Vergata, Turin, Cagliari, Chieti, and Cattolica) developed 21 open-ended clinical questions and 7 clinical cases about interceptive orthodontics. The results demonstrated a high level of accuracy and completeness in the AI's answers and a strong ability to resolve complex clinical scenarios. However, at the time of the present study, ChatGPT cannot be considered a substitute for a doctor and should not be a tool for patients, because its answers were not 100% accurate and complete. Numerous ethical concerns will need to be addressed and resolved in the future, especially because ChatGPT bears no legal responsibility. ChatGPT is not merely a question-and-answer tool like a Google search bar, but a sophisticated AI that requires careful and informed interaction to yield the best results. This aspect should be considered in further research in this area.

Disclaimer

In the guidelines on the use of ChatGPT laid down by the University of Siena [29], article 7 requires that “the authors of publications, degree and doctoral theses, dissertations or other writings in which the contribution of each author is determined, must clearly and specifically indicate whether and to what extent they have used artificial intelligence technologies such as ChatGPT (or other LLM) in the preparation of their manuscripts and analyses”.

Accordingly, we declare that artificial intelligence, namely ChatGPT, was used only to generate the answers to the 21 open-ended questions and the 7 clinical cases. The questions were formulated by the orthodontic specialists, only the answers were produced by ChatGPT, and the statistical analysis of the data was performed entirely by the authors using Jamovi (jamovi.org).

Author Contributions

Conceptualization, A.H.; methodology, A.H.; software, A.H.; validation, A.H. and T.D.; formal analysis, E.C., L.C., L.M., G.L., G.G., E.A., F.V., M.H., N.F., C.D. and F.V.; investigation, E.C., L.C., L.M., G.L., G.G., E.A., F.V., M.H., N.F., C.D. and F.V.; resources, E.C., L.C., L.M., G.L., G.G., E.A., F.V., M.H., C.D. and F.V.; data curation, A.H.; writing—original draft preparation, A.H. and G.C.; writing—review and editing, G.C.; visualization, G.C.; supervision, S.P. and T.D.; project administration, A.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not required.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- ChatGPT. Available online: https://openai.com/blog/chatgpt (accessed on 28 March 2023).

- Number of ChatGPT Users (2023). Available online: https://explodingtopics.com/blog/chatgpt-users (accessed on 30 March 2023).

- Barat, M.; Soyer, P.; Dohan, A. Appropriateness of Recommendations Provided by ChatGPT to Interventional Radiologists. Can. Assoc. Radiol. J. 2023, 74, 758–763.

- He, Y.; Tang, H.; Wang, D.; Gu, S.; Ni, G.; Wu, H. Will ChatGPT/GPT-4 be a Lighthouse to Guide Spinal Surgeons? Ann. Biomed. Eng. 2023, 51, 1362–1365.

- Strong, E.; DiGiammarino, A.; Weng, Y.; Kumar, A.; Hosamani, P.; Hom, J.; Chen, J.H. Chatbot vs Medical Student Performance on Free-Response Clinical Reasoning Examinations. JAMA Intern. Med. 2023, 183, 1028–1030.

- Zimmerman, A. A Ghostwriter for the Masses: ChatGPT and the Future of Writing. Ann. Surg. Oncol. 2023, 30, 3170–3173.

- Abi-Rafeh, J.; Xu, H.H.; Kazan, R. Preservation of Human Creativity in Plastic Surgery Research on ChatGPT. Aesthetic Surg. J. 2023, 43, NP726–NP727.

- Ariyaratne, S.; Iyengar, K.P.; Nischal, N.; Chitti Babu, N.; Botchu, R. A comparison of ChatGPT-generated articles with human-written articles. Skelet. Radiol. 2023, 52, 1755–1758.

- Eysenbach, G. The Role of ChatGPT, Generative Language Models, and Artificial Intelligence in Medical Education: A Conversation With ChatGPT and a Call for Papers. JMIR Med. Educ. 2023, 9, e46885.

- Májovský, M.; Černý, M.; Kasal, M.; Komarc, M.; Netuka, D. Artificial Intelligence Can Generate Fraudulent but Authentic-Looking Scientific Medical Articles: Pandora’s Box Has Been Opened. J. Med. Internet Res. 2023, 25, e46924.

- Navalesi, P.; Oddo, C.M.; Chisci, G.; Frosolini, A.; Gennaro, P.; Abbate, V.; Prattichizzo, D.; Gabriele, G. The Use of Tactile Sensors in Oral and Maxillofacial Surgery: An Overview. Bioengineering 2023, 10, 765.

- Gennaro, P.; Chisci, G.; Aboh, I.V.; Gabriele, G.; Cascino, F.; Iannetti, G. Comparative study in orthognathic surgery between Dolphin Imaging software and manual prediction. J. Craniofac. Surg. 2014, 25, 1577–1578.

- Hopkins, A.M.; Logan, J.M.; Kichenadasse, G.; Sorich, M.J. Artificial intelligence chatbots will revolutionize how cancer patients access information: ChatGPT represents a paradigm-shift. JNCI Cancer Spectr. 2023, 7, pkad010.

- Cox, A.; Seth, I.; Xie, Y.; Hunter-Smith, D.J.; Rozen, W.M. Utilizing ChatGPT-4 for Providing Medical Information on Blepharoplasties to Patients. Aesthetic Surg. J. 2023, 43, NP658–NP662.

- Potapenko, I.; Boberg-Ans, L.C.; Stormly Hansen, M.; Klefter, O.N.; van Dijk, E.H.C.; Subhi, Y. Artificial intelligence-based chatbot patient information on common retinal diseases using ChatGPT. Acta Ophthalmol. 2023, 101, 829–831.

- van Dis, E.A.M.; Bollen, J.; Zuidema, W.; van Rooij, R.; Bockting, C.L. ChatGPT: Five priorities for research. Nature 2023, 614, 224–226.

- Biswas, S. ChatGPT and the future of medical writing. Radiology 2023, 307, e223312.

- King, M.R.; ChatGPT. A conversation on artificial intelligence, chatbots, and plagiarism in higher education. Cell. Mol. Bioeng. 2023, 16, 1–2.

- Ethics Guidelines for Trustworthy AI | Shaping Europe’s Digital Future. 2019. Available online: https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai (accessed on 6 March 2023).

- Dave, T.; Athaluri, S.A.; Singh, S. ChatGPT in medicine: An overview of its applications, advantages, limitations, future prospects, and ethical considerations. Front. Artif. Intell. 2023, 6, 1169595.

- Johnson, D.; Goodman, R.; Patrinely, J.; Stone, C.; Zimmerman, E.; Donald, R.; Chang, S.; Berkowitz, S.; Finn, A.; Jahangir, E.; et al. Assessing the accuracy and reliability of AI-generated medical responses: An evaluation of the Chat-GPT model. Res. Sq. 2023.

- Likert, R. A technique for the measurement of attitudes. Arch. Psychol. 1932, 22, 140.

- Available online: https://www.jamovi.org (accessed on 1 August 2023).

- Morita, P.P.; Abhari, S.; Kaur, J.; Lotto, M.; Miranda, P.A.D.S.E.S.; Oetomo, A. Applying ChatGPT in public health: A SWOT and PESTLE analysis. Front. Public Health 2023, 11, 1225861.

- Thirunavukarasu, A.J.; Hassan, R.; Mahmood, S.; Sanghera, R.; Barzangi, K.; El Mukashfi, M.; Shah, S. Trialling a large language model (ChatGPT) in general practice with the applied knowledge test: Observational study demonstrating opportunities and limitations in primary care. JMIR Med. Educ. 2023, 9, e46599.

- Komorowski, M.; del Pilar Arias López, M.; Chang, A.C. How could ChatGPT impact my practice as an intensivist? An overview of potential applications, risks and limitations. Intensive Care Med. 2023, 49, 844–847.

- Zhang, J.; Zhang, Z. Ethics and governance of trustworthy medical artificial intelligence. BMC Med. Inform. Decis. Mak. 2023, 23, 7.

- Masters, K. Ethical use of artificial intelligence in health professions education: AMEE Guide No. 158. Med. Teach. 2023, 45, 574–584.

- Available online: https://www.unisi.it/sites/default/files/albo_pretorio/allegati/Linee_Guida_ChatGPT_ed_altri_modelli_di_LLM%20%281%29.pdf (accessed on 1 August 2023).

- Available online: https://www.quotidianosanita.it/allegati/allegato1697706561.pdf (accessed on 1 August 2023).

- Mago, J.; Sharma, M. The Potential Usefulness of ChatGPT in Oral and Maxillofacial Radiology. Cureus 2023, 15, e42133.

- Vaira, L.A.; Lechien, J.R.; Abbate, V.; Allevi, F.; Audino, G.; Beltramini, G.A.; Bergonzani, M.; Bolzoni, A.; Committeri, U.; Crimi, S.; et al. Accuracy of ChatGPT-Generated Information on Head and Neck and Oromaxillofacial Surgery: A Multicenter Collaborative Analysis. Otolaryngol. Head Neck Surg. 2023; epub ahead of print.

- Subramanian, A.K.; Chen, Y.; Almalki, A.; Sivamurthy, G.; Kafle, D. Cephalometric Analysis in Orthodontics Using Artificial Intelligence-A Comprehensive Review. Biomed. Res. Int. 2022, 2022, 1880113.

- Tanaka, O.M.; Gasparello, G.G.; Hartmann, G.C.; Casagrande, F.A.; Pithon, M.M. Assessing the reliability of ChatGPT: A content analysis of self-generated and self-answered questions on clear aligners, TADs and digital imaging. Dental Press J. Orthod. 2023, 28, e2323183.

- Duran, G.S.; Yurdakurban, E.; Topsakal, K.G. The Quality of CLP-Related Information for Patients Provided by ChatGPT. Cleft Palate Craniofac. J. 2023, 10556656231222387.

- Monill-González, A.; Rovira-Calatayud, L.; d’Oliveira, N.G.; Ustrell-Torrent, J.M. Artificial intelligence in orthodontics: Where are we now? A scoping review. Orthod. Craniofac. Res. 2021, 24 (Suppl. S2), 6–15.

- Ahmed, W.M.; Azhari, A.A.; Fawaz, K.A.; Ahmed, H.M.; Alsadah, Z.M.; Majumdar, A.; Carvalho, R.M. Artificial intelligence in the detection and classification of dental caries. J. Prosthet. Dent. 2023, S0022-3913(23)00478-X.

- Strunga, M.; Urban, R.; Surovková, J.; Thurzo, A. Artificial Intelligence Systems Assisting in the Assessment of the Course and Retention of Orthodontic Treatment. Healthcare 2023, 11, 683.

- Vishwanathaiah, S.; Fageeh, H.N.; Khanagar, S.B.; Maganur, P.C. Artificial Intelligence Its Uses and Application in Pediatric Dentistry: A Review. Biomedicines 2023, 11, 788.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).