Development of Bleeding Artificial Intelligence Detector (BLAIR) System for Robotic Radical Prostatectomy

Abstract

1. Introduction

2. Materials and Methods

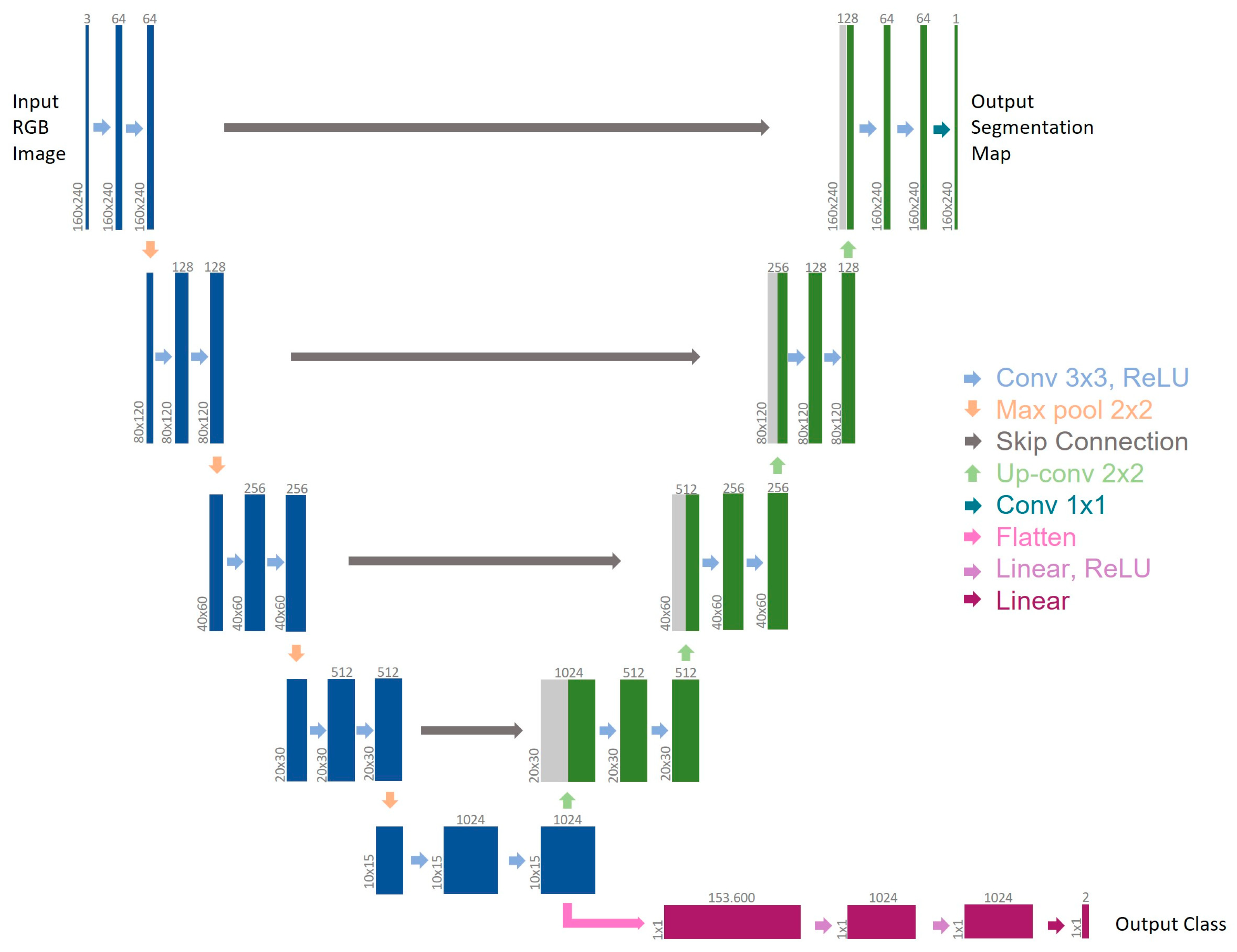

2.1. MTL-CNN Development

2.2. BLeeding Artificial Intelligence DetectoR (BLAIR) Software Implementation

2.3. Clinical Evaluation of BLAIR Software Performances

2.4. Data Collection and Statistical Analysis

3. Results

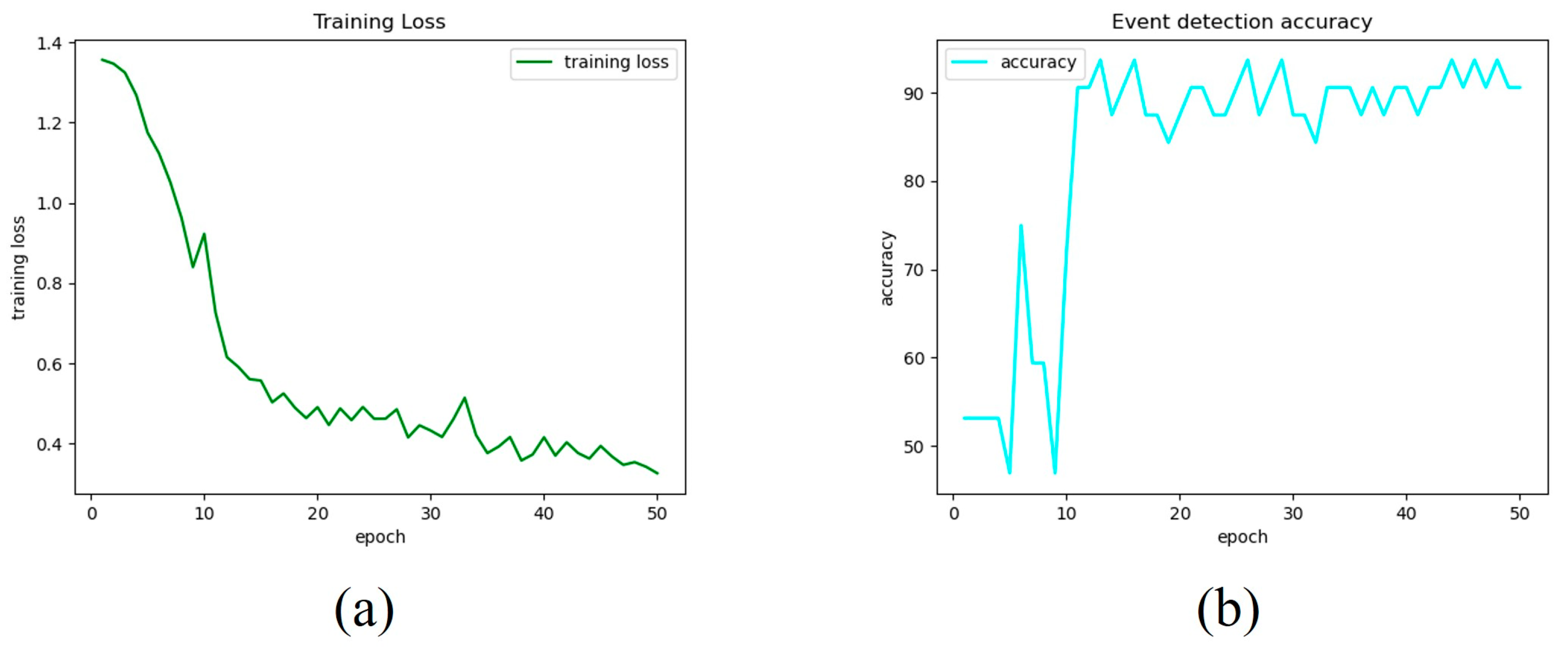

3.1. MTL-CNN Training Phase Findings

3.2. Clinical Evaluation of BLAIR Software Performances

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Patient ID | Age at Time of Surgery | BMI | LND | Operative Time | EBL | Nerve Sparing | Pathological GS | pT | Prostate Volume |
|---|---|---|---|---|---|---|---|---|---|
| #1 | 69 | 27 | YES | 119 | 350 | Full | 3 + 4 | pT3a | 30 |
| #2 | 67 | 33 | YES | 124 | 400 | NO | 4 + 5 | pT3a | 38 |
| #3 | 71 | 31 | YES | 127 | 500 | NO | 3 + 4 | pT2c | 55 |
| #4 | 71 | 27 | YES | 134 | 300 | Partial | 3 + 4 | pT2c | 118 |
| #5 | 75 | 29 | YES | 128 | 400 | NO | 4 + 4 | pT3a | 32 |
| #6 | 52 | 24 | YES | 149 | 400 | Full | 3 + 4 | pT3a | 15 |
| #7 | 65 | 24 | YES | 114 | 300 | Partial | 3 + 4 | pT2c | 68 |
| #8 | 69 | 23 | YES | 175 | 500 | NO | 4 + 5 | pT3b | 35 |
| #9 | 62 | 27 | YES | 132 | 350 | Partial | 4 + 3 | pT2c | 38 |
| #10 | 70 | 28 | YES | 135 | 400 | Partial | 3 + 4 | pT2c | 59 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Checcucci, E.; Piazzolla, P.; Marullo, G.; Innocente, C.; Salerno, F.; Ulrich, L.; Moos, S.; Quarà, A.; Volpi, G.; Amparore, D.; et al. Development of Bleeding Artificial Intelligence Detector (BLAIR) System for Robotic Radical Prostatectomy. J. Clin. Med. 2023, 12, 7355. https://doi.org/10.3390/jcm12237355
