Abstract

Postoperative pulmonary complications (PPCs) are significant causes of postoperative morbidity and mortality. This study presents the utilization of machine learning for predicting PPCs and aims to identify the important features of the prediction models. This study used a retrospective cohort design and collected data from two hospitals. The dataset included perioperative variables such as patient characteristics, preexisting diseases, and intraoperative factors. Various algorithms, including logistic regression, random forest, light-gradient boosting machines, extreme-gradient boosting machines, and multilayer perceptrons, were employed for model development and evaluation. This study enrolled 111,212 adult patients, with an overall incidence rate of 8.6% for developing PPCs. The area under the receiver-operating characteristic curve (AUROC) of the models was 0.699–0.767, and the F1 score was 0.446–0.526. The 10 most important features were obtained for every prediction model except the multilayer perceptron. In feature-reduced models restricted to these 10 important features, the AUROC was 0.627–0.749, and the F1 score was 0.365–0.485. Packed red cell transfusion, urine output, and rocuronium dose each ranked among the 10 most important features in three models. In conclusion, machine learning provides valuable insights into PPC prediction, significant features for prediction, and the feasibility of models that reduce the number of features.

1. Introduction

Pulmonary complications are the main causes of postoperative morbidity and mortality [1]. The reported incidence of postoperative lung complications varies depending on the patient population and the criteria used to define the complications [2]. Postoperative pulmonary complications (PPCs) include almost all complications affecting the respiratory tract after anesthesia and surgery. These complications are heterogeneous and commonly defined, have significant adverse effects on patients, and are difficult to predict [3]. Predicting PPCs can play an important role in postoperative patient care because it can help reduce the risk of potentially serious complications and allows clinicians to plan appropriate strategies. By identifying patients at risk of PPCs, health-care providers can prepare alternative techniques, equipment, and personnel to ensure successful postoperative patient care and avoid adverse outcomes such as ventilator care, intensive care, and death. Thus, for PPC management, preventive strategies may be more effective than treating established PPCs. Identifying the risk of PPCs before surgery is important to guide preventive interventions to reduce the risk and incidence of PPCs [4,5].

Machine learning (ML), a subfield of artificial intelligence (AI), has shown remarkable potential in various medical applications, including predictive analytics [6,7,8,9]. Using ML algorithms, health-care providers can analyze large volumes of patient data, identify patterns, and generate accurate predictions [10]. This study focuses on the possibility of utilizing ML techniques to predict postoperative pulmonary complications in order to aid clinicians in risk assessment, enable early intervention, and improve patient outcomes.

2. Materials and Methods

2.1. Data Collection

This retrospective cohort study protocol was approved by the Clinical Research Ethics Committee of Chuncheon Sacred Heart Hospital, Hallym University. The need for informed consent was waived due to the retrospective study design. The medical records of patients treated between 1 January 2011 and 15 November 2021 were obtained from the clinical data warehouses of two hospitals affiliated with Hallym University Medical Center. One hospital (Hallym University Sacred Heart Hospital) is located in a metropolitan area, and the other (Chuncheon Sacred Heart Hospital) is located in a nonmetropolitan area.

2.2. Patients and Postoperative Pulmonary Complications

This study included adult patients aged ≥ 18 years who did not exhibit any preoperative pulmonary complications. Patients with missing or outlier data were excluded. PPCs include atelectasis, pulmonary edema, pleural effusion, pneumothorax, pulmonary embolism, respiratory failure, pneumonia, and acute respiratory distress. The determination of pulmonary complications is presented in Supplementary Table S1. All radiological findings were confirmed by radiologists.

2.3. Dataset

The dataset comprised 102 perioperative variables, including patient characteristics, preexisting diseases, and intraoperative factors. The definitions of the variables are summarized in Supplementary Table S2. Before model learning, the datasets were standardized using min–max scaling. The dataset was divided into training and test datasets. The training set comprised 80% of the total dataset, and the test set comprised the remaining 20%. The training and test sets were stratified so that they included PPCs at the same rate.
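The preprocessing described above can be sketched in plain Python. This is a minimal illustration, not the study's code: the function names `min_max_scale` and `stratified_split`, the toy labels, and the 20% test fraction (inferred from the patient counts reported in the Results) are assumptions made for the example.

```python
import random
from collections import defaultdict

def min_max_scale(column):
    """Rescale a list of numbers to [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(x - lo) / (hi - lo) for x in column]

def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return (train_idx, test_idx) so both parts keep about the same class rates."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    train_idx, test_idx = [], []
    for idx in by_class.values():
        rng.shuffle(idx)                      # shuffle within each class
        n_test = round(len(idx) * test_fraction)
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    return sorted(train_idx), sorted(test_idx)

# Toy data (hypothetical): 1000 patients, 9% with the outcome.
labels = [1] * 90 + [0] * 910
train_idx, test_idx = stratified_split(labels)
scaled = min_max_scale([2, 4, 6])
```

In practice the minimum and maximum are often taken from the training set only and then applied to the test set; the text does not state which variant was used.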

2.4. Machine Learning

In supervised learning, an ML method infers a function from the training data, and a classification method uses that function to assign a label to a given input vector. We used five ML algorithms: logistic regression, random forest, light-gradient boosting machine, extreme-gradient boosting machine, and multilayer perceptron (MLP) [11,12,13,14,15,16,17,18].

Developing classification models on imbalanced datasets risks yielding ineffective models that cannot accurately classify the minority class, which is usually the class of substantive interest. Moreover, the small number of observations in a minority class may be insufficient to represent the population distribution of that class, which increases the risk of overfitting the classification model. Consequently, even if minority-class cases are classified correctly in the training dataset, there is a risk that they will not be classified properly in new data. Therefore, we applied the synthetic minority oversampling technique (SMOTE) for all algorithms [19].
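The core idea of the synthetic minority oversampling technique can be sketched as follows: each synthetic case is placed at a random position on the line segment between a minority-class case and one of its nearest minority-class neighbours. This is a simplified, standard-library illustration under assumed names (`smote`, `k`, `n_new`) and toy data; it is not the implementation used in the study, which is not specified in the text.

```python
import random

def smote(minority, n_new, k=5, seed=0):
    """Create n_new synthetic points by interpolating between a randomly chosen
    minority point and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Sort the other minority points by squared Euclidean distance to `base`.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbour)])
    return synthetic

# Toy minority class with two min-max-scaled features (hypothetical values).
minority = [[0.1, 0.2], [0.2, 0.1], [0.15, 0.3], [0.3, 0.25]]
new_points = smote(minority, n_new=6, k=3)
```

Because every synthetic point is a convex combination of two real minority cases, it always lies within the range already spanned by the minority class. Oversampling is normally applied to the training data only, so that the test set keeps the original class balance.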

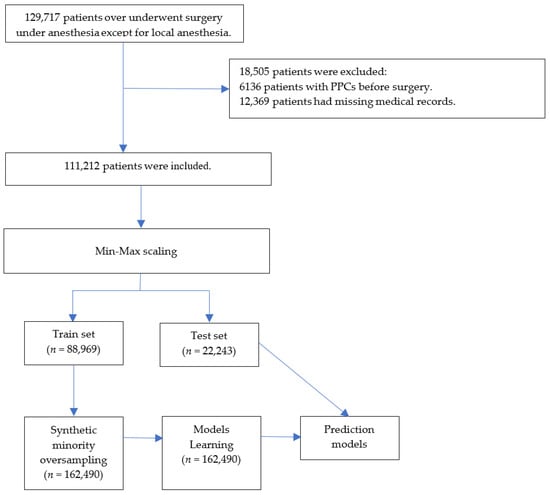

ML models have hyperparameters that must be set to tailor the model to the dataset. Although the general effects of hyperparameters on models are known, finding the best combination of interacting hyperparameters for a given dataset is difficult. A better approach is to objectively search over different hyperparameter values and select the combination that yields the best-performing model on the given dataset. This is known as hyperparameter optimization or hyperparameter tuning [20]. We used a random search for hyperparameter optimization [16]. In this approach, the search space is a bounded domain of hyperparameter values in which each dimension represents a hyperparameter and each point represents a single model configuration; configurations are sampled at random from that domain and evaluated. The data processing and the ML processes are summarized in Figure 1.
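A random search of this kind can be sketched in a few lines. The search space, the number of iterations, and the scoring function below are all hypothetical stand-ins: the text does not report the ranges that were searched, and in a real pipeline `toy_score` would be replaced by a validation metric such as AUROC obtained by training a model with the sampled configuration.

```python
import random

# Hypothetical bounded search space: one sampler per hyperparameter.
SPACE = {
    "n_estimators": lambda rng: rng.randint(50, 500),
    "max_depth": lambda rng: rng.randint(2, 12),
    "learning_rate": lambda rng: 10 ** rng.uniform(-3, 0),  # log-uniform draw
}

def random_search(score, space, n_iter=50, seed=0):
    """Sample n_iter configurations at random and keep the best-scoring one."""
    rng = random.Random(seed)
    best_cfg, best_score = None, float("-inf")
    for _ in range(n_iter):
        cfg = {name: draw(rng) for name, draw in space.items()}
        s = score(cfg)
        if s > best_score:
            best_cfg, best_score = cfg, s
    return best_cfg, best_score

def toy_score(cfg):
    """Stand-in for a validation metric; highest near depth 6 and rate 0.1."""
    return -abs(cfg["max_depth"] - 6) - abs(cfg["learning_rate"] - 0.1)

best_cfg, best_score = random_search(toy_score, SPACE)
```

Each sampled dictionary corresponds to one point in the search domain, and the loop simply keeps the point with the highest score.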

Figure 1.

Flowchart. PPCs = postoperative pulmonary complications.

2.5. Feature Importance and Simplified Models

The feature importance of the models was obtained using a built-in function after a random search for hyperparameter optimization. Models with ten important features were created and evaluated to assess the performance of the simplified models for practicality.
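The reduction to ten features can be illustrated as follows. The sketch assumes that each model exposes one importance value per feature (for example, coefficients for logistic regression or split-based importances for tree models) and ranks features by absolute value; that ranking rule, the helper names, and the example numbers are assumptions, since the text only states that a built-in function was used.

```python
def top_k_features(importance, k=10):
    """Names of the k features with the largest absolute importance."""
    ranked = sorted(importance, key=lambda name: abs(importance[name]), reverse=True)
    return ranked[:k]

def reduce_rows(rows, keep):
    """Keep only the selected features of each record (one dict per patient)."""
    return [{name: row[name] for name in keep} for row in rows]

# Hypothetical importance values, for illustration only.
importance = {"rocuronium": 0.9, "creatinine": -0.7, "age": 0.2, "albumin": 0.05}
keep = top_k_features(importance, k=2)

rows = [{"rocuronium": 0.4, "creatinine": 0.1, "age": 0.6, "albumin": 0.3}]
reduced = reduce_rows(rows, keep)
```

The simplified model is then trained and evaluated on the reduced records in the same way as the full model.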

2.6. Statistics and Metrics

Descriptive analyses were performed to compare the characteristics and perioperative data of patients with and without PPCs. Categorical variables are presented as numbers (%), and continuous variables are presented as medians (interquartile ranges). Differences were evaluated as absolute standardized differences. Five metrics were calculated to assess model performance: the area under the receiver-operating characteristic curve (AUROC), recall, precision, F1 score, and accuracy. Bootstrapping (n = 20,000) was performed to calculate 95% confidence intervals. Python (version 3.7; Python Software Foundation, Beaverton, OR, USA) was used to calculate model metrics.
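The five metrics and a percentile bootstrap interval can be written out explicitly. The following is a small standard-library sketch under stated assumptions: the function names, the 0.5 decision threshold, the toy labels and scores, and the percentile method are illustrative choices, and the default of 2000 resamples is used only to keep the example fast (the study reports 20,000). The pairwise AUROC shown here is quadratic in the sample size and is meant for clarity rather than speed.

```python
import random

def classification_metrics(y_true, y_pred):
    """Recall, precision, F1 score, and accuracy for binary labels (1 = PPC)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"recall": recall, "precision": precision, "f1": f1,
            "accuracy": (tp + tn) / len(y_true)}

def auroc(y_true, scores):
    """Probability that a random positive outscores a random negative (ties = 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def bootstrap_ci(metric, y_true, y_other, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for metric(y_true, y_other)."""
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]   # resample with replacement
        yt = [y_true[i] for i in idx]
        if len(set(yt)) < 2:                         # AUROC needs both classes
            continue
        stats.append(metric(yt, [y_other[i] for i in idx]))
    stats.sort()
    return stats[int(n_boot * alpha / 2)], stats[int(n_boot * (1 - alpha / 2)) - 1]

# Toy example (hypothetical labels and predicted probabilities).
y_true = [0, 0, 1, 1, 0, 1, 0, 0, 1, 0]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.7, 0.5, 0.3, 0.9, 0.05]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

metrics = classification_metrics(y_true, y_pred)
auc = auroc(y_true, scores)
ci_low, ci_high = bootstrap_ci(auroc, y_true, scores)
```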

3. Results

3.1. Patient Characteristics

A total of 129,717 patients aged ≥ 18 years were initially identified. After excluding 18,505 patients with missing data (n = 12,369) or preoperative pulmonary complications (n = 6136), 111,212 patients were included in model development and evaluation (Figure 1). The patient characteristics and perioperative data are summarized in Table 1 and Table 2. Among the patient characteristics and perioperative data, the features with an absolute standardized difference of less than 0.1 were as follows: male sex, body mass index, hypothyroidism, peptic ulcer disease, acquired immunodeficiency syndrome/human immunodeficiency virus, lymphoma, rheumatoid arthritis/collagen vascular diseases, obesity, weight loss, blood loss, anemia, drug abuse, psychoses, alcohol, smoking amount, inhalation anesthetics, N2O, intraoperative cryoprecipitate, succinylcholine, pethidine, activated partial thromboplastin time, uric acid, laparoscopic surgery, musculoskeletal surgery, and urogenital surgery.

Table 1.

Patient characteristics.

Table 2.

Perioperative data.

The percentage of patients who developed PPCs was 8.6%. The training set included 88,969 patients and was oversampled to 162,490 patients. The test set comprised 22,243 patients.
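The reported counts can be checked with a few lines of arithmetic. The sketch below reads 162,490 as the size of the training set after oversampling and assumes that oversampling balanced the two classes 1:1; both readings are assumptions made for this check and are not stated explicitly in the text.

```python
total = 111_212
train, test = 88_969, 22_243
oversampled_train = 162_490

# The two parts add up to the full cohort, and the test part is about 20%.
assert train + test == total
test_share = test / total

# Assumption: after oversampling, each class holds half of the training rows.
per_class = oversampled_train // 2            # 81,245 cases per class
minority_before = train - per_class           # 7,724 PPC cases before oversampling
train_ppc_rate = minority_before / train      # about 0.087
synthetic_added = oversampled_train - train   # 73,521 synthetic PPC cases
```

Under these assumptions the implied PPC rate in the training set is about 8.7%, which is close to the reported overall incidence of 8.6% and consistent with a stratified split.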

3.2. Model Performance

The AUROC of the models was 0.699–0.767, and that of the logistic regression model was the highest. The F1 score of the models was 0.446–0.456, and that of the random forest model was the highest. The details of the model performance are summarized in Table 3.

Table 3.

Performance metrics of each model for predicting postoperative pulmonary complications.

3.3. Feature Importance

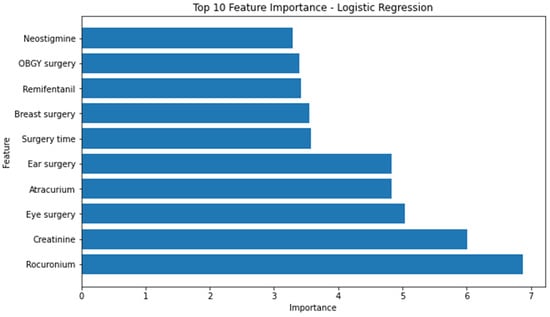

Using the built-in function, we obtained the feature importance of each model except the MLP model. The ten most important features of the logistic regression model, which had the highest AUROC, were rocuronium, creatinine, eye surgery, atracurium, ear surgery, duration of surgery, breast surgery, administration of remifentanil, obstetrics and gynecology surgery, and administration of neostigmine (Figure 2).

Figure 2.

Top 10 feature importance of logistic regression model. OBGY = obstetrics and gynecology.

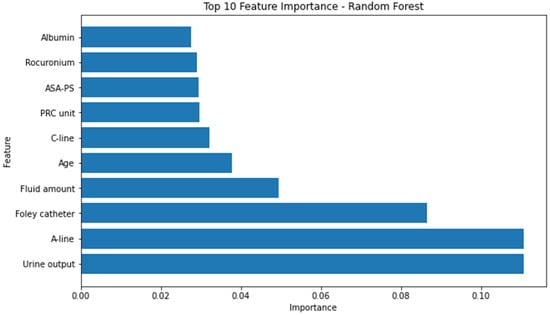

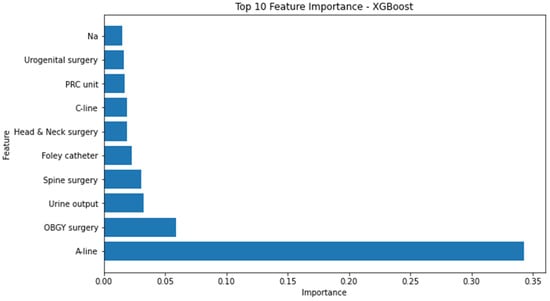

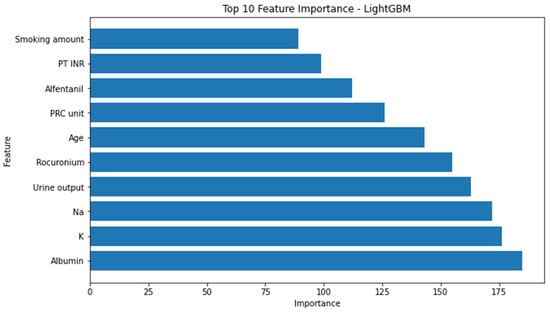

In contrast, the most important features of the random forest model, which had the highest F1 score, were urine output, use of an arterial continuous monitoring line, presence of a Foley catheter, volume of administered fluids, patient age, use of a central venous pressure monitoring line, transfusion of packed red blood cells, American Society of Anesthesiologists physical status classification, administration of rocuronium, and serum albumin level (Figure 3). In the XG boosting model, the importance of the arterial continuous monitoring line stood out clearly from that of the other features. Albumin, sodium, and potassium, among others, were important features in the light-gradient boosting machine. The feature importance rankings for the XG boosting and light-gradient boosting machine models are provided in Appendix A and Appendix B, respectively.

Figure 3.

Top 10 feature importance of random forest model. A-line = arterial continuous monitoring line, ASA-PS = American Society of Anesthesiologists physical status, C-line = central venous pressure monitoring line, PRC = packed red cell.

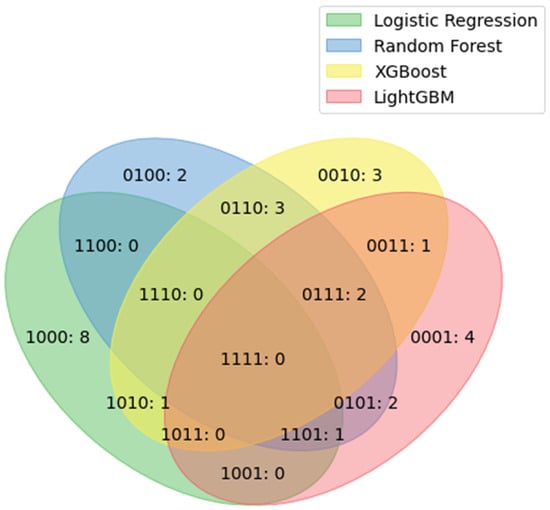

Figure 4 lists the important features shared between models. No feature appeared in the top 10 of all four models. Three features appeared in the top 10 of three models: rocuronium, urine output, and packed red cells (PRCs).

Figure 4.

Duplicated features among the top 10 important features of each model. The four numbers represent the logistic regression, random forest, XG boosting, and LGBM models, respectively, in order from the beginning. Zero indicates none, and 1 indicates present. 1101: rocuronium; 0111: urine output and PRC unit. LGBM = light-gradient boosting machine, XG = extreme gradient, PRC = packed red cell.
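The four-digit code used in Figure 4 can be reproduced with a short helper. The sets below are deliberately partial: they contain only the three shared features named in the caption, arranged to match the codes given there, and are not the full top-10 lists. The function name `membership_code` is an illustrative choice.

```python
# Model order follows the caption: logistic regression, random forest,
# XG boosting, LGBM.
MODELS = ["logistic regression", "random forest", "XG boosting", "LGBM"]

def membership_code(feature, top10_by_model):
    """One digit per model: 1 if the feature is in that model's top 10, else 0."""
    return "".join("1" if feature in top10_by_model[m] else "0" for m in MODELS)

# Partial, illustrative sets reconstructed from the codes in the caption.
top10_by_model = {
    "logistic regression": {"rocuronium"},
    "random forest": {"rocuronium", "urine output", "PRC unit"},
    "XG boosting": {"urine output", "PRC unit"},
    "LGBM": {"rocuronium", "urine output", "PRC unit"},
}

codes = {f: membership_code(f, top10_by_model)
         for f in ["rocuronium", "urine output", "PRC unit"]}
```

Counting the ones in a code gives the number of models that share the feature, which is three for each of the features listed in the caption.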

3.4. Evaluation of Simplified Models

Table 4 shows the evaluation of the models that included only the top 10 features. In general, the performance of the simplified models was lower than that of the models including all features. However, unlike the models including all features, the simplified random forest model had the highest AUROC.

Table 4.

Performance metrics of models including top 10 features for predicting postoperative pulmonary complications.

4. Discussion

This cohort study used five ML algorithms with 102 features, including preoperative and intraoperative data, to predict the occurrence of PPCs. Furthermore, we identified the important features of each algorithm and developed simpler models that included only the top 10 important features. Some features ranked among the most important in several models. Although the accuracy of the prediction models was high, metrics such as the AUROC and F1 score were not excellent in any of the models.

The area of prediction of PPCs using AI has been extended. Recent studies have demonstrated the emergence of a new paradigm. However, previous studies were limited to specific groups or pulmonary diseases. Peng et al. conducted a multicenter study that focused on geriatric patients and utilized a deep neural network model to predict PPCs [7]. Although it provides valuable insights into a specific patient population, its generalizability to other age groups and surgical specialties remains uncertain. Xue et al. developed a model using preoperative and intraoperative data to identify the risk of postoperative pneumonia [8]. Their study investigated the use of ML to predict various postoperative complications, including pneumonia, among PPCs. However, they did not specifically highlight the distinct characteristics and risk factors associated with PPCs other than pneumonia, which potentially diluted the focus on PPCs. Xue et al. focused on predicting pulmonary complications, specifically in emergency gastrointestinal surgery [9]. However, this study did not explore the broader landscape of PPCs across different surgical procedures and settings.

In this study, we aimed to address the limitations of previous research and provide novel insights into the prediction of PPCs using ML techniques. Our study’s primary outcome focused exclusively on PPCs, allowing for a comprehensive evaluation of the risk factors specific to this complication. We collected a large and diverse dataset of patients across various surgical specialties, ages, and comorbidity profiles. This enabled a more comprehensive understanding of the risk factors associated with PPCs in different patient populations. We employed advanced feature-selection techniques to identify the most relevant predictors of PPCs, ensuring optimal model performance and reducing the potential for overfitting. This approach can enhance the accuracy and generalizability of predictive models [21,22]. However, the simplified models did not perform better than the full models. Although we used methods to enhance performance, such as oversampling, hyperparameter tuning, data preprocessing, and min–max scaling, they were insufficient to obtain the best performance.

The choice of features or variables used in models can significantly affect their performance. If the selected features do not capture the relevant patterns or contain noise, performance can be suboptimal [23]. Using only the top 10 features may not capture the relevant patterns of PPCs. In our models, the top 10 features were mostly factors generally considered to be associated with PPCs. In this study, urine output [24,25], transfusion of red blood cells [26,27], and rocuronium [28] were among the top 10 important features in three models, and most models had several features associated with PPCs among their top 10 important features. This suggests that ML algorithms may use generally known factors associated with PPCs. To improve the performance in future studies, we can add data related to these features or consider enhanced feature engineering techniques.

Machine learning, a constituent of artificial intelligence, has showcased immense potential across diverse medical domains, notably in predictive analytics. This technological facet holds the capacity to revolutionize health care by amplifying the precision of diagnoses, treatment strategies, and overall patient well-being. An area of profound application lies in intraoperative management, with specific emphasis on anesthesia depth and hemodynamics, resulting in notable reductions in postoperative complications. In the context of anesthesia depth regulation, AI emerges as a pivotal player, ensuring patient safety and optimizing anesthesia administration during surgical procedures. By adeptly analyzing an array of physiological parameters, AI algorithms dynamically calibrate anesthesia dosages, mitigating the risks of both insufficient and excessive sedation. Consequently, this proactive management approach contributes to the minimization of complications linked to anesthesia administration. Leveraging techniques such as machine learning and signal processing, AI effectively discerns patients’ anesthesia responsiveness, affording anesthesiologists the means to finely adjust drug delivery, thereby maintaining optimal levels throughout surgery [29,30]. Hemodynamics, another critical aspect, also shows substantial AI potential for refining intraoperative blood pressure and circulatory management, translating to diminished postoperative complications. Through real-time amalgamation of diverse data streams, AI algorithms provide valuable insights into patients’ cardiovascular status, enabling timely interventions to uphold stable hemodynamics and consequently abate the prospects of adverse postoperative outcomes. By scrutinizing patient-specific variables and perpetually monitoring vital indicators, AI prognosticates the likelihood of hypotensive episodes, empowering medical teams to adopt preemptive measures and alleviate potential complications [31,32,33]. 
In a holistic evaluation of patient data and the employment of predictive models, AI may serve as a tool for clinicians to navigate judicious decisions concerning fluid administration and cardiovascular support, ultimately culminating in heightened patient outcomes. The convergence of AI and medical science is expected as a transformative juncture, holding substantial promise for advancing the standards of intraoperative care and fortifying postoperative convalescence.

Although our study on the prediction of postoperative pulmonary complications using ML provided valuable insights, acknowledging its limitations is crucial. First, the quality and availability of the dataset used in our study may impact the generalizability of the results. If the dataset is limited in terms of sample size, representation of diverse patient populations, or specific surgical procedures, predictive models may not accurately capture the complexities and variability of PPCs across different settings. Furthermore, the dataset used in our study may not reflect the current medical practices and advancements, potentially affecting the applicability of our findings to contemporary health-care settings [34]. Second, while our study conducted internal validation procedures, including cross-validation, external validation on independent datasets is essential to assess the generalizability of the predictive models. If external validation is not performed, or the results from the external validation dataset are not reported, the reliability and robustness of our models may be uncertain [35]. Third, while our study included a substantial number of patients, it is important to acknowledge that the data were drawn from two hospitals affiliated with a single medical center and that the study was retrospectively conducted. This may raise questions about the diversity of patient populations and medical practices, potentially affecting the generalizability of the models to other health-care settings. Additionally, the retrospective nature of the study introduces potential sources of bias and limitations in data collection. Finally, ML models predict outcomes; however, they may not provide causal explanations or elucidate the underlying mechanisms of the predicted outcomes [36]. Although our study identified the risk factors associated with PPCs, it may not definitively establish causality or provide deep insights into the biological or physiological pathways involved when considering the present performance of our models.

Our study represents an advancement in the prediction of PPCs using ML. By specifically addressing the limitations of previous research and providing novel insights into this complication, we contribute to the development of more accurate and clinically relevant prediction models, which have the potential to optimize resource allocation and guide perioperative management strategies for mitigating the risk of PPCs. However, obstacles to the clinical implementation of prediction models remain, and further studies are needed to overcome them.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jcm12175681/s1, Table S1: Methods used to determine pulmonary complications. Table S2: Definitions of demographic characteristics and perioperative covariates.

Author Contributions

Conceptualization, Y.-S.K. and J.-J.L.; methodology, J.-H.K. and Y.-S.K.; software, M.-G.K. and B.-R.C.; validation, Y.-S.K.; formal analysis, S.-M.H.; investigation, B.-R.C. and M.-G.K.; resources, J.-J.L. and S.-Y.L.; data curation, J.-H.K.; writing—original draft preparation, Y.-S.K. and J.-H.K.; writing—editing, all authors; visualization, J.-H.K.; supervision, J.-J.L. and S.-Y.L.; project administration, J.-J.L. and S.-M.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by a grant from the Medical Data-Driven Hospital Support Project through the Korea Health Information Service (KHIS), funded by the Ministry of Health and Welfare, Republic of Korea.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and was approved by the Clinical Research Ethics Committee of Chuncheon Sacred Heart Hospital (2023-05-003). Date of approval: 29 May 2023.

Informed Consent Statement

Not applicable.

Data Availability Statement

Restrictions apply to data availability. Data were obtained from the Hallym Medical Center and are available from its clinical data warehouse, with permission from the Hallym Medical Center.

Conflicts of Interest

The authors declare that this research was conducted in the absence of commercial or financial relationships that could be construed as potential conflict of interest.

Appendix A

Figure A1.

Top 10 feature importance of XG boosting model. A-line = arterial continuous monitoring line, ASA-PS = American Society of Anesthesiologists physical status, C-line = central venous pressure monitoring line, OBGY = obstetrics and gynecology, PRC = packed red cell, XG = extreme gradient.

Appendix B

Figure A2.

Top 10 feature importance of light-gradient boosting machine model. INR = international normalized ratio, GBM = gradient boosting machine, PT = prothrombin time, PRC = packed red cell.

References

- Lawrence, V.A.; Cornell, J.E.; Smetana, G.W. Strategies to reduce postoperative pulmonary complications after noncardiothoracic surgery: Systematic review for the American College of Physicians. Ann. Intern. Med. 2006, 144, 596–608.

- Fisher, B.W.; Majumdar, S.R.; McAlister, F.A. Predicting pulmonary complications after nonthoracic surgery: A systematic review of blinded studies. Am. J. Med. 2002, 112, 219–225.

- Miskovic, A.; Lumb, A.B. Postoperative pulmonary complications. Br. J. Anaesth. 2017, 118, 317–334.

- Gupta, H.; Gupta, P.K.; Fang, X.; Miller, W.J.; Cemaj, S.; Forse, R.A.; Morrow, L.E. Development and validation of a risk calculator predicting postoperative respiratory failure. Chest 2011, 140, 1207–1215.

- Qaseem, A.; Snow, V.; Fitterman, N.; Hornbake, E.R.; Lawrence, V.A.; Smetana, G.W.; Weiss, K.; Owens, D.K.; for the Clinical Efficacy Assessment Subcommittee of the American College of Physicians. Risk assessment for and strategies to reduce perioperative pulmonary complications for patients undergoing noncardiothoracic surgery: A guideline from the American College of Physicians. Ann. Intern. Med. 2006, 144, 575–580.

- Kim, J.H.; Kim, Y.; Yoo, K.; Kim, M.; Kang, S.S.; Kwon, Y.-S.; Lee, J.J. Prediction of Postoperative Pulmonary Edema Risk Using Machine Learning. J. Clin. Med. 2023, 12, 1804.

- Peng, X.; Zhu, T.; Chen, G.; Wang, Y.; Hao, X. A multicenter prospective study on postoperative pulmonary complications prediction in geriatric patients with deep neural network model. Front. Surg. 2022, 9, 976536.

- Xue, B.; Li, D.; Lu, C.; King, C.R.; Wildes, T.; Avidan, M.S.; Kannampallil, T.; Abraham, J. Use of machine learning to develop and evaluate models using preoperative and intraoperative data to identify risks of postoperative complications. JAMA Netw. Open 2021, 4, e212240.

- Xue, Q.; Wen, D.; Ji, M.-H.; Tong, J.; Yang, J.-J.; Zhou, C.-M. Developing machine learning algorithms to predict pulmonary complications after emergency gastrointestinal surgery. Front. Med. 2021, 8, 655686.

- Javaid, M.; Haleem, A.; Pratap Singh, R.; Suman, R.; Rab, S. Significance of machine learning in healthcare: Features, pillars and applications. Int. J. Intell. Netw. 2022, 3, 58–73.

- LightGBM. Available online: https://lightgbm.readthedocs.io/en/v3.3.2/ (accessed on 30 January 2023).

- Scalable and Flexible Gradient Boosting. Available online: https://xgboost.ai/ (accessed on 30 January 2023).

- XGBoost. Available online: https://xgboost.readthedocs.io/en/stable/ (accessed on 30 January 2023).

- sklearn.neural_network.MLPClassifier. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.neural_network.MLPClassifier.html (accessed on 30 January 2023).

- sklearn.linear_model.LogisticRegression. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html (accessed on 30 January 2023).

- Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 2012, 13, 281–305.

- Chen, T.; He, T.; Benesty, M.; Khotilovich, V.; Tang, Y.; Cho, H.; Chen, K.; Mitchell, R.; Cano, I.; Zhou, T. Xgboost: Extreme gradient boosting. R Package Version 0.4–2 2015, 1, 1–4.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.-Y. Lightgbm: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 2017, 30, 3149–3157.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Feurer, M.; Hutter, F. Hyperparameter optimization. In Automated Machine Learning; Springer: Cham, Switzerland, 2019; pp. 3–33.

- Pudjihartono, N.; Fadason, T.; Kempa-Liehr, A.W.; O’Sullivan, J.M. A review of feature selection methods for machine learning-based disease risk prediction. Front. Bioinform. 2022, 2, 927312.

- Wang, Q.; Lu, Y.; Zhang, X.; Hahn, J. Region of Interest Selection for Functional Features. Neurocomputing 2021, 422, 235–244.

- Abawajy, J.; Darem, A.; Alhashmi, A.A. Feature Subset Selection for Malware Detection in Smart IoT Platforms. Sensors 2021, 21, 1374.

- Faubel, S. Pulmonary complications after acute kidney injury. Adv. Chronic Kidney Dis. 2008, 15, 284–296.

- Turcios, N.L. Pulmonary complications of renal disorders. Paediatr. Respir. Rev. 2012, 13, 44–49.

- Benson, A.B. Pulmonary complications of transfused blood components. Crit. Care Nurs. Clin. N. Am. 2012, 24, 403–418.

- Grey, S.; Bolton-Maggs, P. Pulmonary complications of transfusion: Changes, challenges, and future directions. Transfus. Med. 2020, 30, 442–449.

- Cammu, G. Residual Neuromuscular Blockade and Postoperative Pulmonary Complications: What Does the Recent Evidence Demonstrate? Curr. Anesth. Rep. 2020, 10, 131–136.

- Hashimoto, D.A.; Witkowski, E.; Gao, L.; Meireles, O.; Rosman, G. Artificial Intelligence in Anesthesiology: Current Techniques, Clinical Applications, and Limitations. Anesthesiology 2020, 132, 379–394.

- Shalbaf, R.; Behnam, H.; Sleigh, J.W.; Steyn-Ross, A.; Voss, L.J. Monitoring the depth of anesthesia using entropy features and an artificial neural network. J. Neurosci. Methods 2013, 218, 17–24.

- Murabito, P.; Astuto, M.; Sanfilippo, F.; La Via, L.; Vasile, F.; Basile, F.; Cappellani, A.; Longhitano, L.; Distefano, A.; Li Volti, G. Proactive Management of Intraoperative Hypotension Reduces Biomarkers of Organ Injury and Oxidative Stress during Elective Non-Cardiac Surgery: A Pilot Randomized Controlled Trial. J. Clin. Med. 2022, 11, 392.

- Sanfilippo, F.; La Via, L.; Dezio, V.; Amelio, P.; Genoese, G.; Franchi, F.; Messina, A.; Robba, C.; Noto, A. Inferior vena cava distensibility from subcostal and trans-hepatic imaging using both M-mode or artificial intelligence: A prospective study on mechanically ventilated patients. Intensive Care Med. Exp. 2023, 11, 40.

- Wijnberge, M.; Geerts, B.F.; Hol, L.; Lemmers, N.; Mulder, M.P.; Berge, P.; Schenk, J.; Terwindt, L.E.; Hollmann, M.W.; Vlaar, A.P.; et al. Effect of a Machine Learning–Derived Early Warning System for Intraoperative Hypotension vs Standard Care on Depth and Duration of Intraoperative Hypotension During Elective Noncardiac Surgery: The HYPE Randomized Clinical Trial. JAMA 2020, 323, 1052–1060.

- Khan, B.; Fatima, H.; Qureshi, A.; Kumar, S.; Hanan, A.; Hussain, J.; Abdullah, S. Drawbacks of Artificial Intelligence and Their Potential Solutions in the Healthcare Sector. In Biomedical Materials & Devices; Springer: Cham, Switzerland, 2023; pp. 1–8.

- Takada, T.; Nijman, S.; Denaxas, S.; Snell, K.I.E.; Uijl, A.; Nguyen, T.-L.; Asselbergs, F.W.; Debray, T.P.A. Internal-external cross-validation helped to evaluate the generalizability of prediction models in large clustered datasets. J. Clin. Epidemiol. 2021, 137, 83–91.

- Boge, F.J.; Grünke, P.; Hillerbrand, R. Minds and Machines Special Issue: Machine Learning: Prediction Without Explanation? Minds Mach. 2022, 32, 1–9.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).