Reliability of Universal-Platform-Based Voice Screen Application in AVQI Measurements Captured with Different Smartphones

Abstract

1. Introduction

2. Materials and Methods

2.1. Original Voice Recordings

2.2. Auditory-Perceptual Evaluation

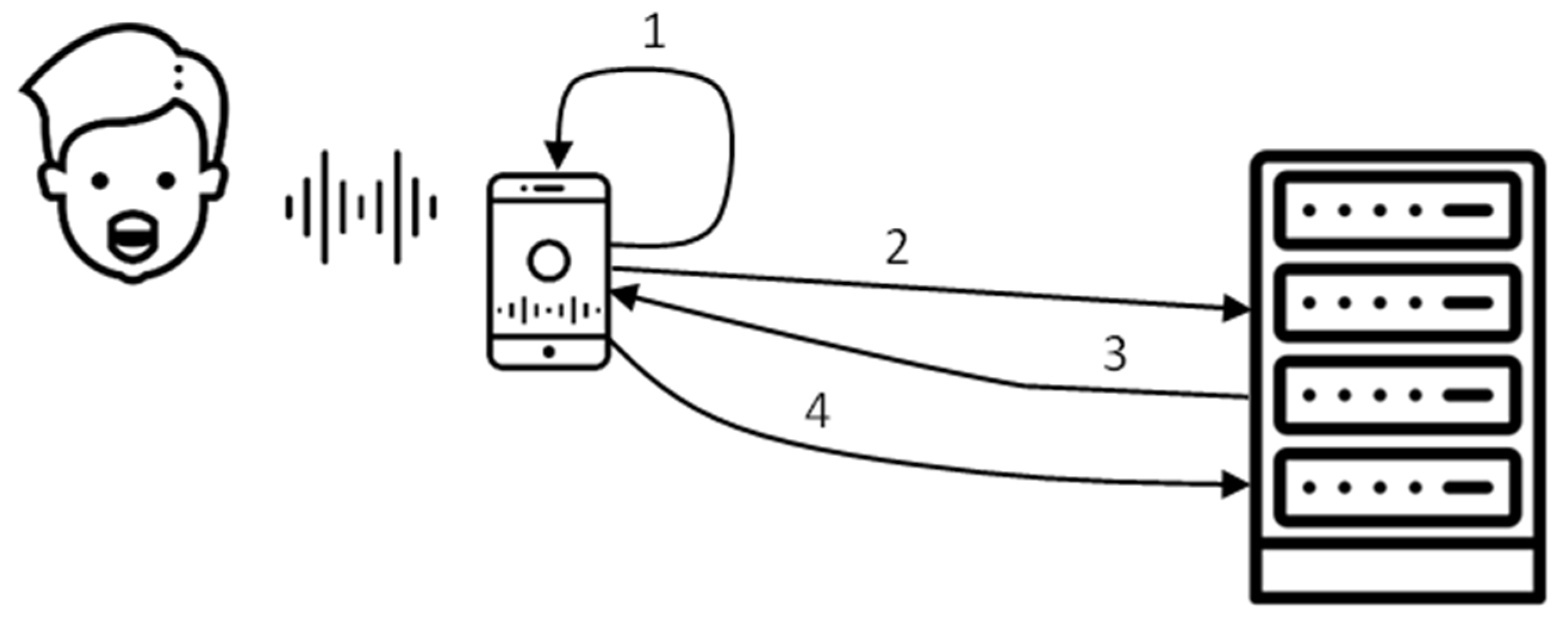

2.3. Transmitting Studio Microphone Voice Recordings to Smartphones

2.4. AVQI Estimation

2.5. Development of a Universal-Platform-Based “Voice Screen” Application for Automated AVQI Estimation

2.6. Statistical Analysis

3. Results

3.1. Raters’ Perceptual Evaluation Outcomes

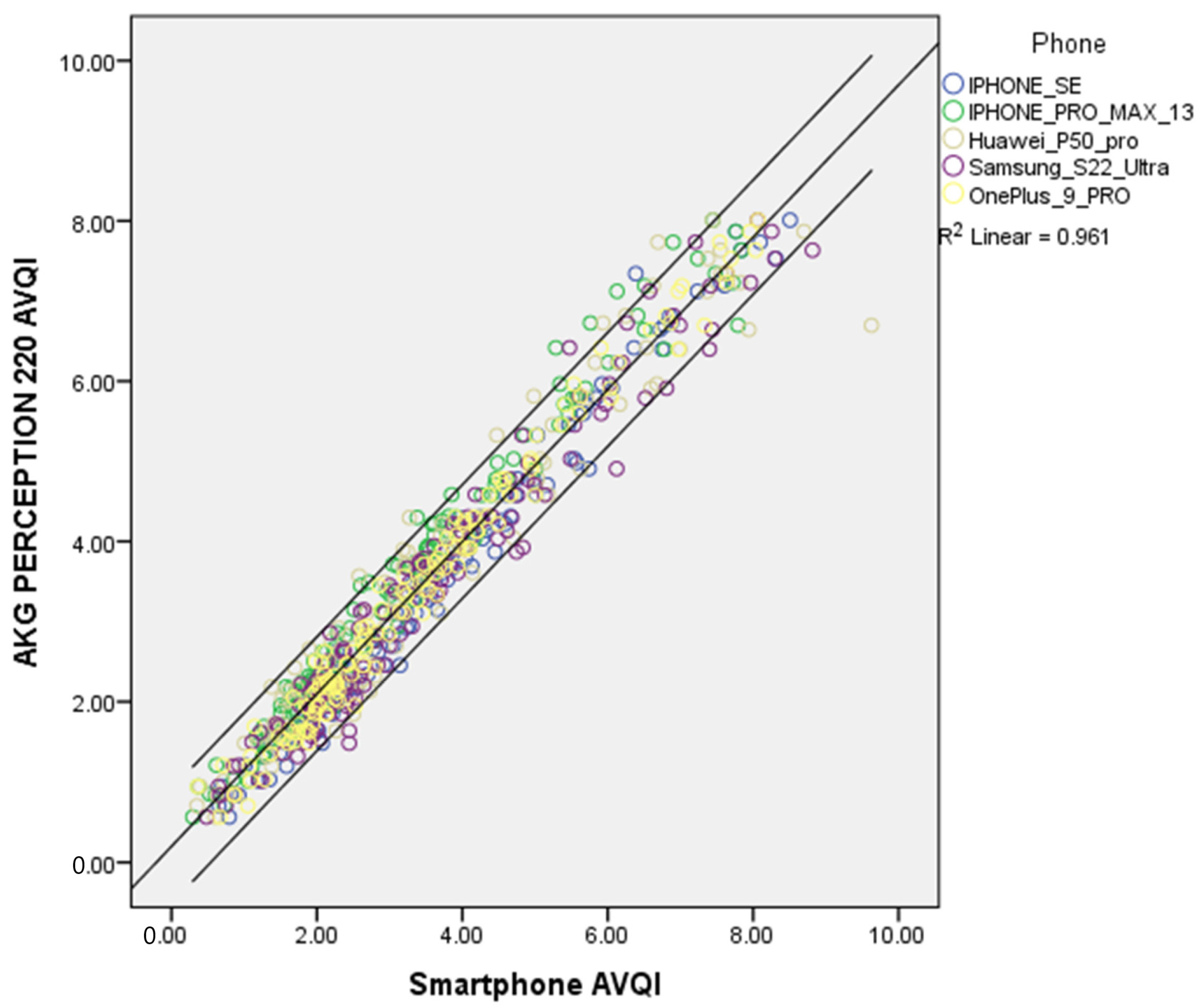

3.2. AVQI Evaluation Outcomes

3.3. The Normal vs. Pathological Voice Diagnostic Accuracy of the AVQI Using Different Smartphones

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Diagnosis | n | Age (mean) | Age (SD) |
|---|---|---|---|
| Normal voice | 49 | 31.69 | 9.89 |
| Mass lesions of vocal folds | 49 | 44.39 | 12.4 |
| Vocal fold cancer | 11 | 65.09 | 7.71 |
| Chronic hyperplastic laryngitis | 10 | 55.9 | 7.34 |
| Unilateral vocal fold paralysis | 6 | 40.83 | 12.77 |
| Bilateral vocal folds paralysis | 4 | 52.75 | 12.61 |
| Functional dysphonia | 2 | 39 | 24.04 |
| Reflux laryngitis | 2 | 57 | 15.56 |
| Parkinson’s disease | 2 | 71.5 | 9.19 |
| Total | 135 | 42.92 | 15.26 |

| Microphone | n | Mean AVQI | SD | F | p |
|---|---|---|---|---|---|
| AKG Perception 220 | 135 | 3.43 | 1.83 | 0.759 | 0.58 |
| iPhone SE | 135 | 3.56 | 1.86 | | |
| iPhone Pro Max 13 | 135 | 3.16 | 1.83 | | |
| Huawei P50 Pro | 135 | 3.37 | 1.96 | | |
| Samsung S22 Ultra | 135 | 3.52 | 1.93 | | |
| OnePlus 9 Pro | 135 | 3.42 | 1.86 | | |
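The F statistic reported with the mean AVQI values can be approximately re-derived from the summary statistics alone. The sketch below is an illustration rather than the authors' analysis script: it assumes a one-way ANOVA that treats the six microphones as independent groups, and it assumes n = 135 recordings per device. The function name `one_way_anova_f` is hypothetical.

```python
# (mean AVQI, SD) per microphone, copied from the table of mean AVQI values.
summary = {
    "AKG Perception 220": (3.43, 1.83),
    "iPhone SE": (3.56, 1.86),
    "iPhone Pro Max 13": (3.16, 1.83),
    "Huawei P50 Pro": (3.37, 1.96),
    "Samsung S22 Ultra": (3.52, 1.93),
    "OnePlus 9 Pro": (3.42, 1.86),
}
N_PER_GROUP = 135  # assumption: every device analysed all 135 recordings


def one_way_anova_f(stats, n):
    """F statistic for equal-sized groups, from (mean, SD) pairs only."""
    k = len(stats)
    means = [mean for mean, _ in stats]
    grand_mean = sum(means) / k  # valid because all groups have the same n
    # Between-group mean square: spread of group means around the grand mean.
    ms_between = n * sum((m - grand_mean) ** 2 for m in means) / (k - 1)
    # Within-group mean square: with equal n this is the average variance.
    ms_within = sum(sd ** 2 for _, sd in stats) / k
    return ms_between / ms_within


f_value = one_way_anova_f(list(summary.values()), N_PER_GROUP)
print(f"F({len(summary) - 1}, {len(summary) * (N_PER_GROUP - 1)}) = {f_value:.3f}")
```

With these rounded inputs the result lands close to the reported F of 0.759, which is consistent with the one-way design assumed here. The small F value reflects how little the device means differ relative to the spread within each device.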

| Microphones | Statistic | iPhone SE | iPhone Pro Max 13 | Huawei P50 Pro | Samsung S22 Ultra | OnePlus 9 Pro |
|---|---|---|---|---|---|---|
| AKG Perception 220 | r | 0.991 | 0.987 | 0.970 | 0.979 | 0.992 |
| | p | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 |
| | n | 135 | 135 | 135 | 135 | 135 |

| AVQI | AUC | Cut-Off | Sensitivity % | Specificity % | Youden Index J |
|---|---|---|---|---|---|
| AKG Perception 220 | 0.834 | 3.27 | 93.88 | 68.18 | 0.62 |
| iPhone SE | 0.844 | 3.23 | 91.84 | 70.45 | 0.62 |
| iPhone Pro Max 13 | 0.858 | 2.14 | 81.63 | 82.95 | 0.65 |
| Huawei P50 Pro | 0.835 | 3.08 | 93.88 | 70.45 | 0.64 |
| Samsung S22 Ultra | 0.862 | 2.93 | 89.8 | 73.86 | 0.64 |
| OnePlus 9 Pro | 0.86 | 2.3 | 79.59 | 84.09 | 0.64 |
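The Youden index in the diagnostic accuracy table follows from the standard definition J = sensitivity + specificity − 1, with both terms expressed as proportions. The sketch below recomputes J from the tabulated percentages as a consistency check. It is an illustration only, not the authors' code, and the helper name `youden_j` is hypothetical.

```python
# (sensitivity %, specificity %, reported J) per microphone, from the table.
accuracy = {
    "AKG Perception 220": (93.88, 68.18, 0.62),
    "iPhone SE": (91.84, 70.45, 0.62),
    "iPhone Pro Max 13": (81.63, 82.95, 0.65),
    "Huawei P50 Pro": (93.88, 70.45, 0.64),
    "Samsung S22 Ultra": (89.80, 73.86, 0.64),
    "OnePlus 9 Pro": (79.59, 84.09, 0.64),
}


def youden_j(sensitivity_pct, specificity_pct):
    """Youden's J from sensitivity and specificity given in percent."""
    return sensitivity_pct / 100 + specificity_pct / 100 - 1


for device, (sens, spec, reported) in accuracy.items():
    j = youden_j(sens, spec)
    print(f"{device}: J = {j:.2f} (reported {reported:.2f})")
```

The recomputed values agree with the reported column to two decimals. Note that the cut-off that maximises J trades sensitivity against specificity differently for each device, so a cut-off derived on one microphone may not be optimal on another.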

| p | AKG Perception 220 | iPhone SE | iPhone Pro Max 13 | Huawei P50 Pro | Samsung S22 Ultra | OnePlus 9 Pro |
|---|---|---|---|---|---|---|
| AKG Perception 220 | - | 0.163 | 0.099 | 0.966 | 0.11 | 0.086 |
| iPhone SE | 0.163 | - | 0.367 | 0.579 | 0.282 | 0.863 |
| iPhone Pro Max 13 | 0.099 | 0.367 | - | 0.268 | 0.718 | 0.863 |
| Huawei P50 Pro | 0.966 | 0.579 | 0.268 | - | 0.223 | 0.256 |
| Samsung S22 Ultra | 0.11 | 0.282 | 0.718 | 0.223 | - | 0.863 |
| OnePlus 9 Pro | 0.086 | 0.863 | 0.863 | 0.256 | 0.863 | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Uloza, V.; Ulozaitė-Stanienė, N.; Petrauskas, T.; Pribuišis, K.; Blažauskas, T.; Damaševičius, R.; Maskeliūnas, R. Reliability of Universal-Platform-Based Voice Screen Application in AVQI Measurements Captured with Different Smartphones. J. Clin. Med. 2023, 12, 4119. https://doi.org/10.3390/jcm12124119
