Explainable Vision Transformers and Radiomics for COVID-19 Detection in Chest X-rays

Abstract

1. Introduction

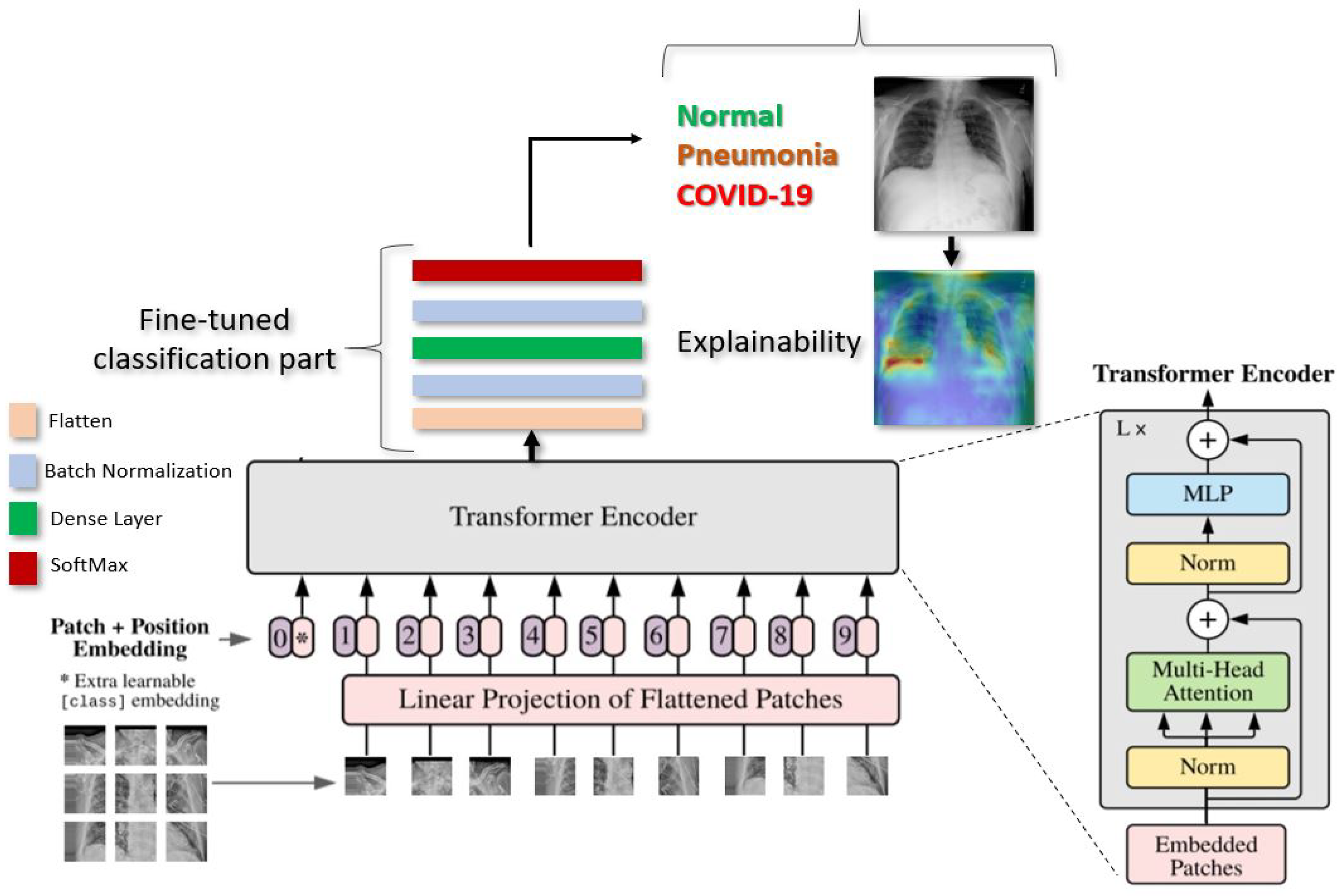

- Several Vision Transformer (ViT) models were fine-tuned, and their performance in classifying CXR images was compared with that of convolutional neural networks (CNNs).

- Two large datasets and more than 20,000 CXR images are used to assess the efficiency of the proposed technique.

- We experimentally demonstrated that the proposed approach outperforms previous COVID-19 detection models, as well as other CNN and Transformer-based architectures, especially in generalization to unseen data.

- The attention maps of the proposed models showed that they efficiently identify the signs of COVID-19.

- The proposed approach achieved an Area Under the Curve (AUC) of 0.99 for multi-class classification (COVID-19 vs. Pneumonia vs. Normal), with a sensitivity of 0.99 for the COVID-19 class.

2. Datasets

2.1. SIIM-FISABIO-RSNA COVID-19

2.2. RSNA

3. Vision Transformer Model

3.1. Patch Embedding
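The patch-splitting step named in this heading can be illustrated with a minimal sketch. It is not the authors' implementation: it only shows how an image is cut into non-overlapping square patches and flattened, which in a Vision Transformer (Dosovitskiy et al.) precedes a learned linear projection that is omitted here. The 224×224 single-channel input and the helper name `split_into_patches` are assumptions made for illustration; the patch sizes 16 and 32 follow the ViT-B16 and ViT-B32/ViT-L32 model names.

```python
def split_into_patches(image, patch_size):
    """Split a 2-D image (list of rows) into flattened, non-overlapping square patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of patch_size")
    patches = []
    # Walk over the patch grid row by row, left to right.
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches


# Hypothetical 224x224 grayscale input; the resolution is an assumption.
side = 224
image = [[(r * side + c) % 256 for c in range(side)] for r in range(side)]

patches_16 = split_into_patches(image, 16)  # patch size suggested by "ViT-B16"
patches_32 = split_into_patches(image, 32)  # patch size suggested by "ViT-B32" / "ViT-L32"

print(len(patches_16), len(patches_16[0]))  # 196 256
print(len(patches_32), len(patches_32[0]))  # 49 1024
```

Under these assumptions, a larger patch size yields a shorter token sequence (49 instead of 196 patches), with each token covering a larger image region.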

3.2. Class

3.3. Positional Encodings/Embeddings

3.4. Model Architecture

4. Experimentation

4.1. Metrics
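The result tables report accuracy (ACC), sensitivity (SN) and specificity (SP). As a minimal sketch, these can be computed from a confusion matrix using the standard definitions SN = TP / (TP + FN) and SP = TN / (TN + FP), applied one-vs-rest per class. How the reported figures were aggregated across the three classes is not stated here, so the per-class treatment below is an assumption, and the counts are made up purely for illustration.

```python
def accuracy(confusion):
    """Overall accuracy: correctly classified samples divided by all samples."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


def one_vs_rest_metrics(confusion, class_index):
    """Sensitivity and specificity of one class.

    confusion[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[class_index][class_index]
    fn = sum(confusion[class_index]) - tp
    fp = sum(confusion[i][class_index] for i in range(n)) - tp
    tn = total - tp - fn - fp
    return tp / (tp + fn), tn / (tn + fp)


# Illustrative counts only; these are NOT results from the paper.
classes = ["COVID-19", "Pneumonia", "Normal"]
confusion = [
    [90, 6, 4],    # true COVID-19
    [5, 85, 10],   # true Pneumonia
    [2, 8, 90],    # true Normal
]

print(f"ACC = {accuracy(confusion):.3f}")
for index, name in enumerate(classes):
    sn, sp = one_vs_rest_metrics(confusion, index)
    print(f"{name}: SN = {sn:.3f}, SP = {sp:.3f}")
```

AUC is not covered by this sketch because it requires the predicted class probabilities rather than the hard labels summarized in a confusion matrix.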

4.2. Results

5. Model Explainability

Performance Comparison

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Rahaman, M.M.; Li, C.; Yao, Y.; Kulwa, F.; Rahman, M.A.; Wang, Q.; Qi, S.; Kong, F.; Zhu, X.; Zhao, X. Identification of COVID-19 samples from chest X-ray images using deep learning: A comparison of transfer learning approaches. J. X-ray Sci. Technol. 2020, 28, 821–839.

2. Cohen, J.P.; Morrison, P.; Dao, L.; Roth, K.; Duong, T.Q.; Ghassemi, M. COVID-19 Image Data Collection: Prospective Predictions Are the Future. arXiv 2020, arXiv:2006.11988.

3. Pan, I.; Cadrin-Chênevert, A.; Cheng, P.M. Tackling the radiological society of north america pneumonia detection challenge. Am. J. Roentgenol. 2019, 213, 568–574.

4. Apostolopoulos, I.D.; Aznaouridis, S.I.; Tzani, M.A. Extracting Possibly Representative COVID-19 Biomarkers from X-ray Images with Deep Learning Approach and Image Data Related to Pulmonary Diseases. J. Med. Biol. Eng. 2020, 40, 462–469.

5. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

6. Malhotra, A.; Mittal, S.; Majumdar, P.; Chhabra, S.; Thakral, K.; Vatsa, M.; Singh, R.; Chaudhury, S.; Pudrod, A.; Agrawal, A. Multi-task driven explainable diagnosis of COVID-19 using chest X-ray images. Pattern Recognit. 2022, 122, 108243.

7. Ozturk, T.; Talo, M.; Yildirim, E.A.; Baloglu, U.B.; Yildirim, O.; Acharya, U.R. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput. Biol. Med. 2020, 121, 103792.

8. Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-Ray8: Hospital-Scale Chest X-ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2097–2106.

9. Das, N.N.; Kumar, N.; Kaur, M.; Kumar, V.; Singh, D. Automated Deep Transfer Learning-Based Approach for Detection of COVID-19 Infection in Chest X-rays. IRBM 2020, 43, 114–119.

10. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

11. Iraji, M.S.; Feizi-Derakhshi, M.R.; Tanha, J. COVID-19 Detection Using Deep Convolutional Neural Networks and Binary Differential Algorithm-Based Feature Selection from X-ray Images. Complexity 2021, 2021, 9973277.

12. Kermany, D. Labeled Optical Coherence Tomography (OCT) and Chest X-ray Images for Classification. Mendeley Data 2018.

13. Yousefi, B.; Kawakita, S.; Amini, A.; Akbari, H.; Advani, S.M.; Akhloufi, M.; Maldague, X.P.V.; Ahadian, S. Impartially Validated Multiple Deep-Chain Models to Detect COVID-19 in Chest X-ray Using Latent Space Radiomics. J. Clin. Med. 2021, 10, 3100.

14. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

15. Arias-Garzón, D.; Alzate-Grisales, J.A.; Orozco-Arias, S.; Arteaga-Arteaga, H.B.; Bravo-Ortiz, M.A.; Mora-Rubio, A.; Saborit-Torres, J.M.; Serrano, J.Á.M.; de la Iglesia Vayá, M.; Cardona-Morales, O.; et al. COVID-19 detection in X-ray images using convolutional neural networks. Mach. Learn. Appl. 2021, 6, 100138.

16. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

17. de la Iglesia Vayá, M.; Saborit, J.M.; Montell, J.A.; Pertusa, A.; Bustos, A.; Cazorla, M.; Galant, J.; Barber, X.; Orozco-Beltrán, D.; García-García, F.; et al. BIMCV COVID-19+: A large annotated dataset of RX and CT images from COVID-19 patients. arXiv 2020, arXiv:2006.01174.

18. Shome, D.; Kar, T.; Mohanty, S.; Tiwari, P.; Muhammad, K.; AlTameem, A.; Zhang, Y.; Saudagar, A. COVID-Transformer: Interpretable COVID-19 Detection Using Vision Transformer for Healthcare. Int. J. Environ. Res. Public Health 2021, 18, 11086.

19. El-Shafai, W. Extensive COVID-19 X-ray and CT Chest Images Dataset. Mendeley Data 2020.

20. Sait, U. Curated Dataset for COVID-19 Posterior-Anterior Chest Radiography Images (X-rays). Mendeley Data 2021.

21. Qi, X.; Brown, L.G.; Foran, D.J.; Nosher, J.; Hacihaliloglu, I. Chest X-ray image phase features for improved diagnosis of COVID-19 using convolutional neural network. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 197–206.

22. Mondal, A.K.; Bhattacharjee, A.; Singla, P.; Prathosh, A.P. xViTCOS: Explainable Vision Transformer Based COVID-19 Screening Using Radiography. IEEE J. Transl. Eng. Health Med. 2021, 10, 1–10.

23. Wang, L.; Lin, Z.Q.; Wong, A. COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. Sci. Rep. 2020, 10, 19549.

24. Krishnan, K.S.; Krishnan, K.S. Vision Transformer based COVID-19 Detection using Chest X-rays. In Proceedings of the 2021 6th International Conference on Signal Processing, Computing and Control (ISPCC), Solan, India, 7–9 October 2021; pp. 644–648.

25. Asraf, A. COVID19, Pneumonia and Normal Chest X-ray PA Dataset. Mendeley Data 2021.

26. Chowdhury, M.E.H.; Rahman, T.; Khandakar, A.; Mazhar, R.; Kadir, M.A.; Mahbub, Z.B.; Islam, K.R.; Khan, M.S.; Iqbal, A.; Emadi, N.A.; et al. Can AI Help in Screening Viral and COVID-19 Pneumonia? IEEE Access 2020, 8, 132665–132676.

27. Rahman, T.; Khandakar, A.; Qiblawey, Y.; Tahir, A.; Kiranyaz, S.; Kashem, S.B.A.; Islam, M.T.; Al Maadeed, S.; Zughaier, S.M.; Khan, M.S.; et al. Exploring the effect of image enhancement techniques on COVID-19 detection using chest X-ray images. Comput. Biol. Med. 2021, 132, 104319.

28. Park, S.; Kim, G.; Oh, Y.; Seo, J.B.; Lee, S.M.; Kim, J.H.; Moon, S.; Lim, J.K.; Ye, J.C. Vision Transformer for COVID-19 CXR Diagnosis using Chest X-ray Feature Corpus. arXiv 2021, arXiv:2103.07055.

29. Choi, Y.Y.; Song, E.S.; Kim, Y.H.; Song, T.B. Analysis of high-risk infant births and their mortality: Ten years’ data from chonnam national university hospital. Chonnam Med. J. 2011, 47, 31–38.

30. Kim, J.S.; Kwun, W.H.; Yun, S.S.; Kim, H.J.; Kwun, K.B. Results of 2000 laparoscopic cholecystectomies at the Yeungnam University Hospital. J. Minim. Invasive Surg. 2001, 4, 16–22.

31. Park, J.R.; Heo, W.B.; Park, S.H.; Park, K.S.; Suh, J.S. The frequency unexpected antibodies at Kyungpook national university hospital. Korean J. Blood Transfus. 2007, 18, 97–104.

32. Society for Imaging Informatics in Medicine (SIIM). SIIM-FISABIO-RSNA COVID-19 Detection. Available online: https://www.kaggle.com/c/siim-covid19-detection (accessed on 5 February 2022).

33. Shih, G.; Wu, C.C.; Halabi, S.S.; Kohli, M.D.; Prevedello, L.M.; Cook, T.S.; Sharma, A.; Amorosa, J.K.; Arteaga, V.; Galperin-Aizenberg, M.; et al. Augmenting the National Institutes of Health chest radiograph dataset with expert annotations of possible pneumonia. Radiol. Artif. Intell. 2019, 1, e180041.

34. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

35. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

36. Melinte, D.O.; Vladareanu, L. Facial expressions recognition for human–robot interaction using deep convolutional neural networks with rectified adam optimizer. Sensors 2020, 20, 2393.

37. Chollet, F.; et al. Keras. 2015. Available online: https://keras.io (accessed on 7 February 2022).

38. NVIDIA. 2080 Ti. Available online: https://www.nvidia.com/en-us/geforce/graphics-cards/rtx-2080 (accessed on 18 January 2022).

39. Chetoui, M.; Akhloufi, M.A. Explainable end-to-end deep learning for diabetic retinopathy detection across multiple datasets. J. Med. Imaging 2020, 7, 044503.

40. Luz, E.; Silva, P.; Silva, R.; Silva, L.; Guimarães, J.; Miozzo, G.; Moreira, G.; Menotti, D. Towards an effective and efficient deep learning model for COVID-19 patterns detection in X-ray images. Res. Biomed. Eng. 2021, 38, 149–162.

41. Wehbe, R.M.; Sheng, J.; Dutta, S.; Chai, S.; Dravid, A.; Barutcu, S.; Wu, Y.; Cantrell, D.R.; Xiao, N.; Allen, B.D.; et al. DeepCOVID-XR: An Artificial Intelligence Algorithm to Detect COVID-19 on Chest Radiographs Trained and Tested on a Large U.S. Clinical Data Set. Radiology 2021, 299, E167–E176.

42. Chetoui, M.; Akhloufi, M.A.; Yousefi, B.; Bouattane, E.M. Explainable COVID-19 Detection on Chest X-rays Using an End-to-End Deep Convolutional Neural Network Architecture. Big Data Cogn. Comput. 2021, 5, 73.

43. Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; PMLR: New York, NY, USA, 2019; pp. 6105–6114.

44. Chetoui, M.; Akhloufi, M.A. Deep Efficient Neural Networks for Explainable COVID-19 Detection on CXR Images. In Proceedings of the International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Kuala Lumpur, Malaysia, 26–29 July 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 329–340.

45. Afshar, P.; Heidarian, S.; Naderkhani, F.; Oikonomou, A.; Plataniotis, K.N.; Mohammadi, A. Covid-caps: A capsule network-based framework for identification of covid-19 cases from X-ray images. Pattern Recognit. Lett. 2020, 138, 638–643.

| Configuration | Value |
|---|---|
| Optimizer | RectifiedAdam |
| Epochs | 200 |
| Batch size | 16 for ViT-B16/32 and 4 for ViT-L32 |
| Learning rate | |
| Batch Normalization | True |

| Model | ACC (%) | AUC | SN | SP |
|---|---|---|---|---|
| ViT-B16 | 87.00 | 0.96 | 0.86 | 0.87 |
| ViT-B32 | 96.00 | 0.99 | 0.96 | 0.96 |
| ViT-L32 | 53.00 | 0.79 | 0.54 | 0.54 |

| Model | ACC (%) | AUC | SP | SN |
|---|---|---|---|---|
| EfficientNet-B7 | 93.82 | 0.95 | 0.92 | 0.93 |
| EfficientNet-B5 | 94.64 | 0.95 | 0.83 | 0.92 |
| DenseNet-121 | 88.13 | 0.90 | 0.91 | 0.87 |
| NasNetLarge | 94.48 | 0.96 | 0.90 | 0.96 |
| MobileNet | 93.16 | 0.95 | 0.92 | 0.94 |
| ViT-B32 | 96.00 | 0.99 | 0.96 | 0.96 |

| Ref. | Dataset | #COVID-19 Images | ACC (%) | AUC | SN | SP |
|---|---|---|---|---|---|---|
| Rahaman et al. [1] | CIDR | 260 | 89 | - | - | - |
| Afshar et al. [45] | Unspecified | N/A | 95 | 0.970 | 0.90 | 0.95 |
| Apostolopoulos et al. [4] | CIDR | 450 | 87 | - | 0.97 | 0.99 |
| Luz et al. [40] | CIDR | 183 | 93 | - | 0.96 | - |
| Ozturk et al. [7] | CIDR | 125 | 87 | - | 0.85 | 0.92 |
| Das et al. [9] | CIDR | N/A | 97 | 0.986 | 0.97 | 0.97 |
| Wehbe et al. [41] | Multiple institutions | 4253 | 83 | 0.900 | 0.71 | 0.92 |
| ViT-B32 | RSNA | 7598 | 96 | 0.991 | 0.96 | 0.96 |

| Ref. | #COVID-19 Images | ACC (%) | AUC | SN | SP | Classification |
|---|---|---|---|---|---|---|
| [24] | 3500 | 97.61 | - | 0.93 | - | Binary-class |
| | 12,083 | 92.00 | 0.980 | - | - | Multi-class |
| [22] | 2358 | 96.00 | - | 0.96 | 0.97 | Multi-class |
| [28] | 2431 | 86.40; 95.90; 85.2 | 0.941 (CNUH); 0.909 (YNU); 0.915 (KNUH) | 0.87 (CNUH); 0.85 (YNU); 0.85 (KNUH) | 0.91 (CNUH); 0.84 (YNU); 0.84 (KNUH) | Multi-class |
| ViT-B32 | 7598 | 96.00 | 0.991 | 0.96 | 0.96 | Multi-class |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chetoui, M.; Akhloufi, M.A. Explainable Vision Transformers and Radiomics for COVID-19 Detection in Chest X-rays. J. Clin. Med. 2022, 11, 3013. https://doi.org/10.3390/jcm11113013
