Cross-Modal Priming Effect of Rhythm on Visual Word Recognition and Its Relationships to Music Aptitude and Reading Achievement

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Standardized Measures

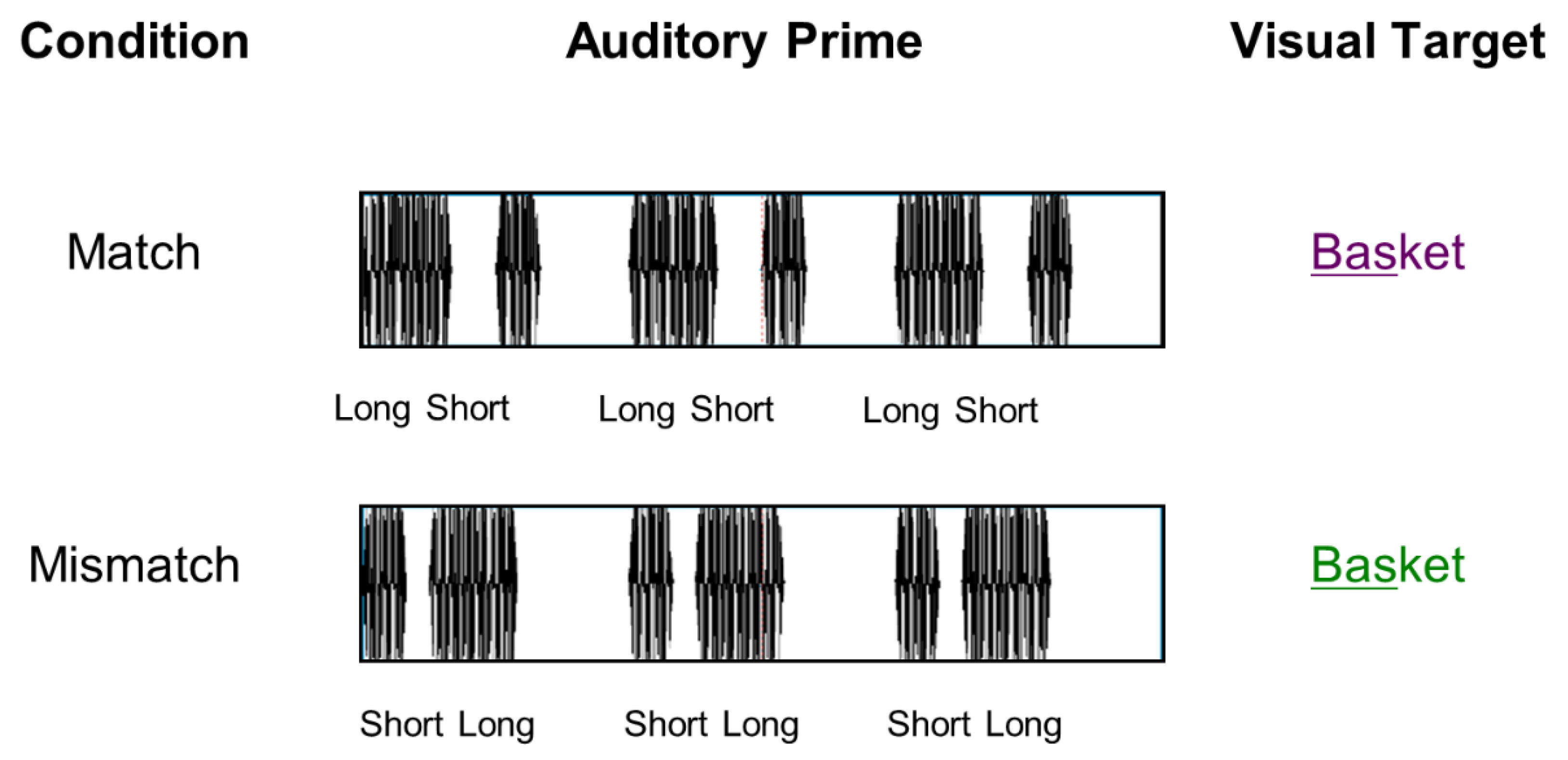

2.3. EEG Cross-Modal Priming Paradigm

2.4. Procedure

2.5. EEG Acquisition and Preprocessing

2.6. Data Analysis

3. Results

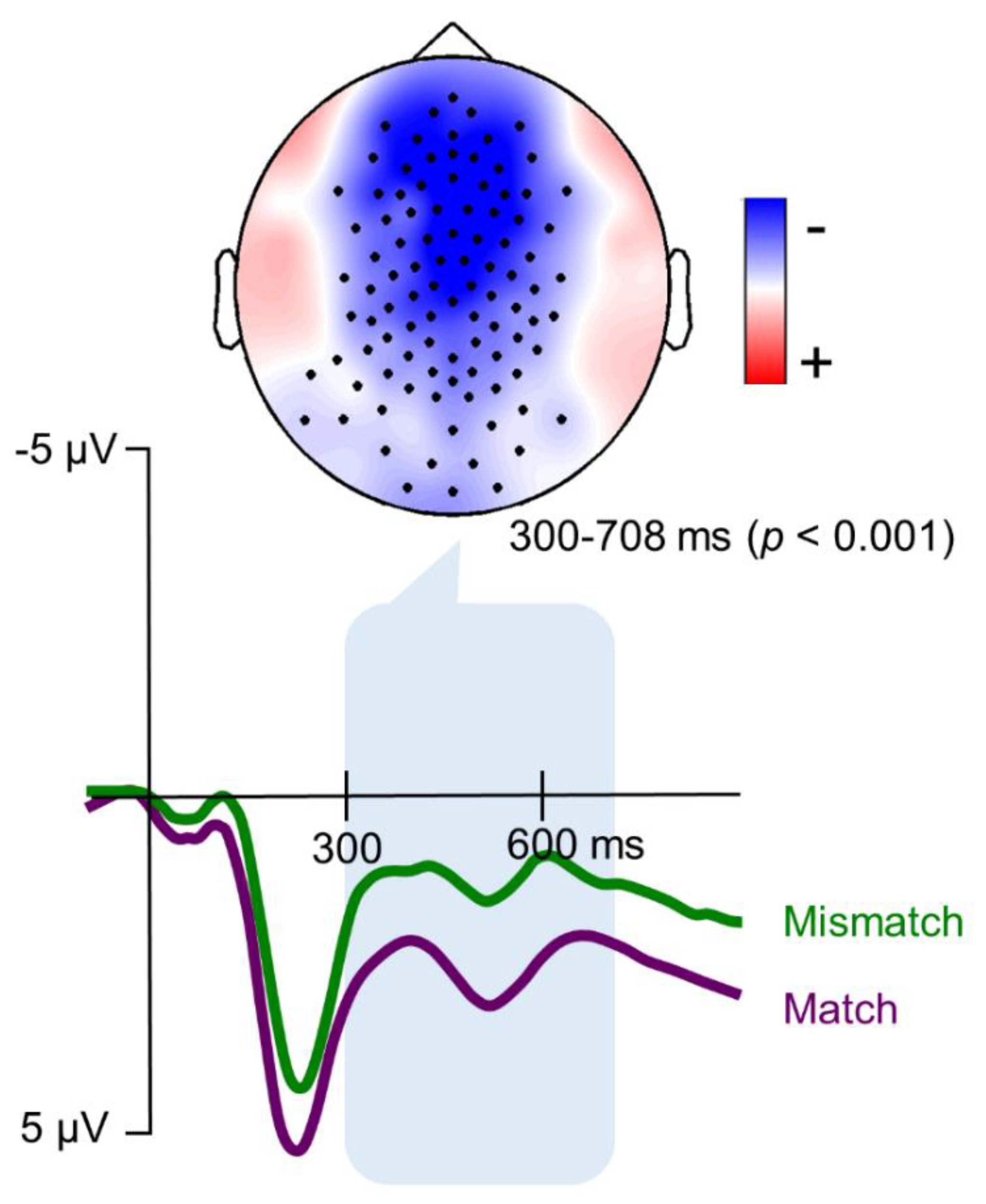

3.1. Metrical Expectancy

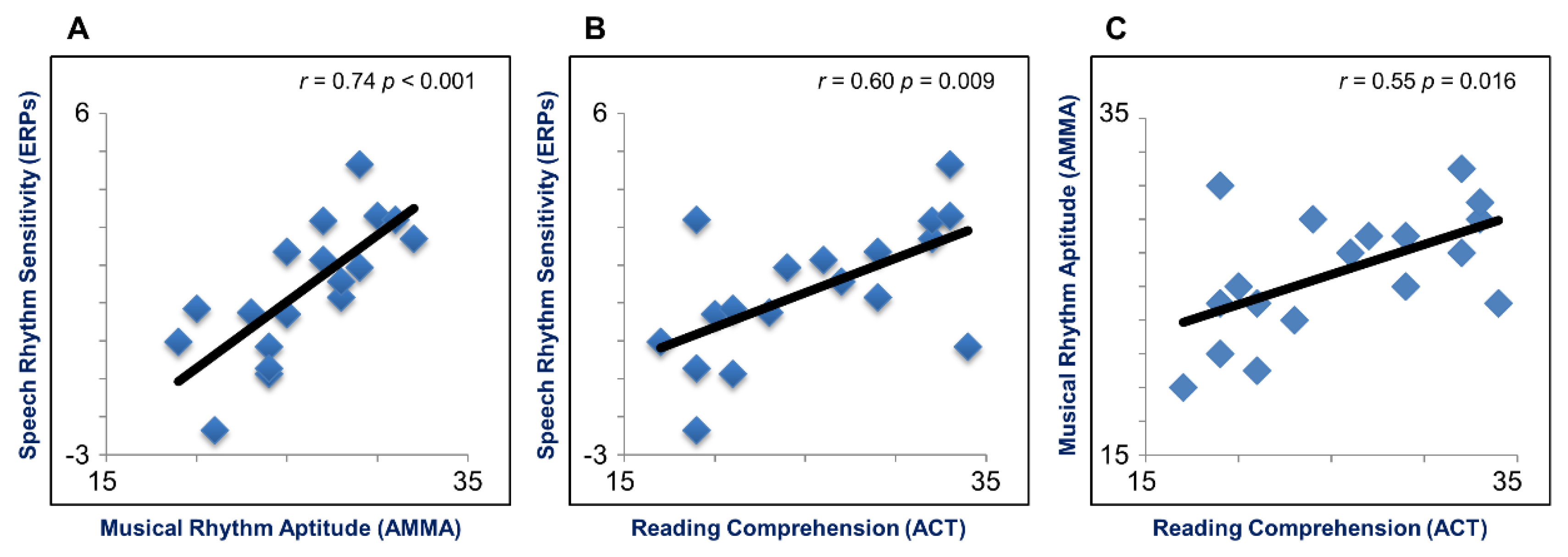

3.2. Brain-Behavior Relationships

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Source | B | SE | β | t | p |
|---|---|---|---|---|---|
| Constant | 8.000 | 2.033 | | 3.935 | 0.001 |
| ACT Reading | 0.081 | 0.060 | 0.267 | 1.359 | 0.194 |
| AMMA Rhythm | 0.281 | 0.093 | 0.594 | 3.023 | 0.009 |
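As a reading aid for the regression table above, the sketch below checks that each reported t value is consistent with the ratio B/SE, which is how a t statistic for a regression coefficient is conventionally obtained. The function names (`t_from_rounded`, `t_bounds`, `check_rows`) and the half-unit rounding tolerance are illustrative assumptions, not part of the authors' analysis. For the Constant row, 3.935 is taken as t and 0.001 as p, since 8.000 / 2.033 ≈ 3.935.

```python
# Rows copied from the regression table: (source, B, SE, reported t).
rows = [
    ("Constant", 8.000, 2.033, 3.935),
    ("ACT Reading", 0.081, 0.060, 1.359),
    ("AMMA Rhythm", 0.281, 0.093, 3.023),
]


def t_from_rounded(b, se):
    """t statistic recomputed from the rounded table values (t = B / SE)."""
    return b / se


def t_bounds(b, se, half_unit=0.0005):
    """Range of t compatible with B and SE each rounded to three decimals.

    Assumes b > 0 and se > half_unit, which holds for every row above.
    half_unit is an assumed tolerance: half of the last printed digit.
    """
    lowest = (b - half_unit) / (se + half_unit)
    highest = (b + half_unit) / (se - half_unit)
    return lowest, highest


def check_rows(table_rows):
    """Map each source to True if its reported t lies inside the bounds."""
    results = {}
    for name, b, se, t_reported in table_rows:
        lowest, highest = t_bounds(b, se)
        results[name] = lowest <= t_reported <= highest
    return results


if __name__ == "__main__":
    for name, ok in check_rows(rows).items():
        print(f"{name}: reported t consistent with B/SE -> {ok}")
```

The bounds matter mainly for the ACT Reading row: the rounded values give 0.081 / 0.060 = 1.35, while the reported t is 1.359, a gap that rounding of B and SE to three decimals can account for.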

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fotidzis, T.S.; Moon, H.; Steele, J.R.; Magne, C.L. Cross-Modal Priming Effect of Rhythm on Visual Word Recognition and Its Relationships to Music Aptitude and Reading Achievement. Brain Sci. 2018, 8, 210. https://doi.org/10.3390/brainsci8120210
