Reproducing the Few-Shot Learning Capabilities of the Visual Ventral Pathway Using Vision Transformers and Neural Fields

Abstract

1. Introduction

2. Preliminaries

2.1. Few-Shot Learning

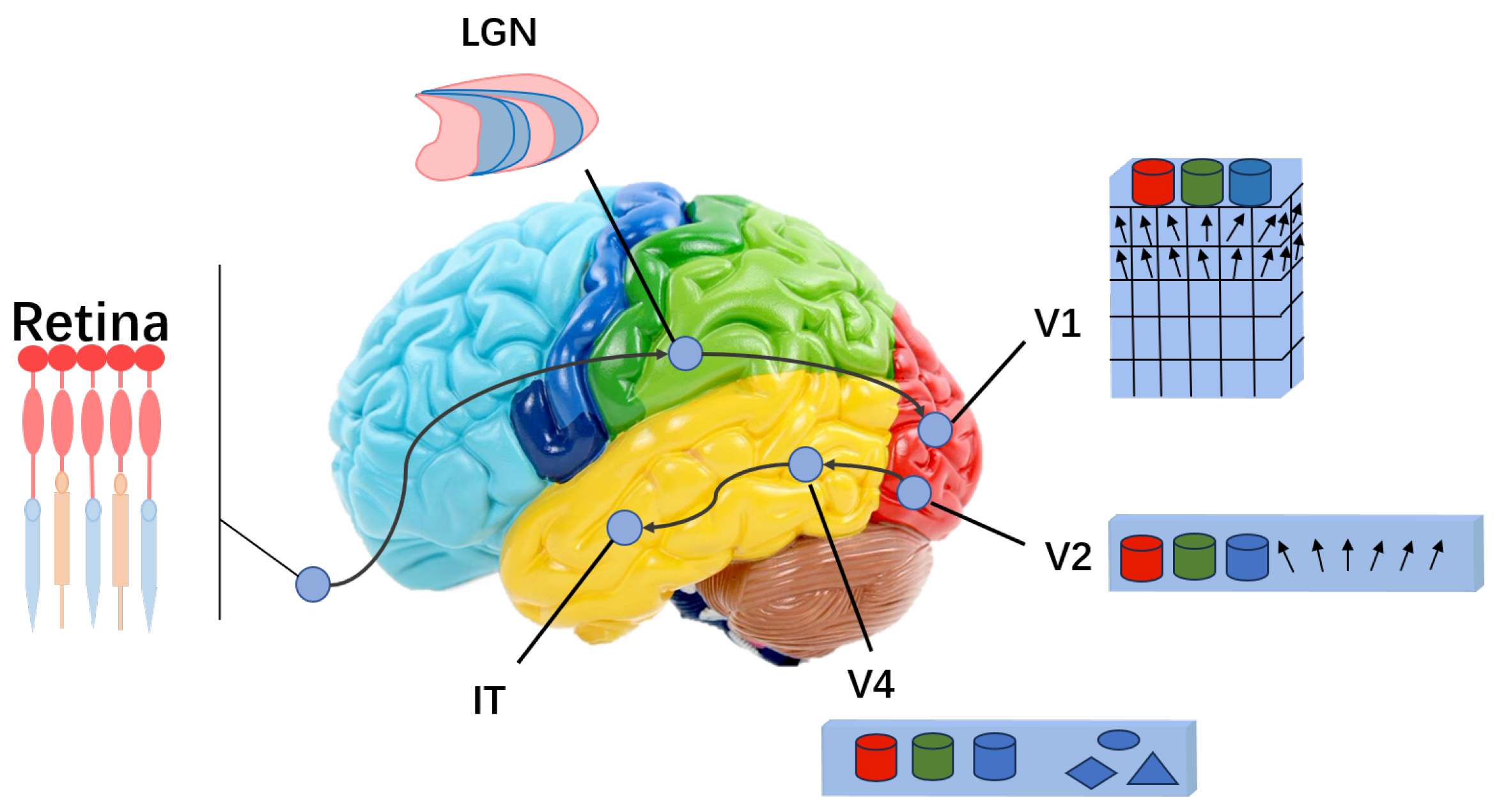

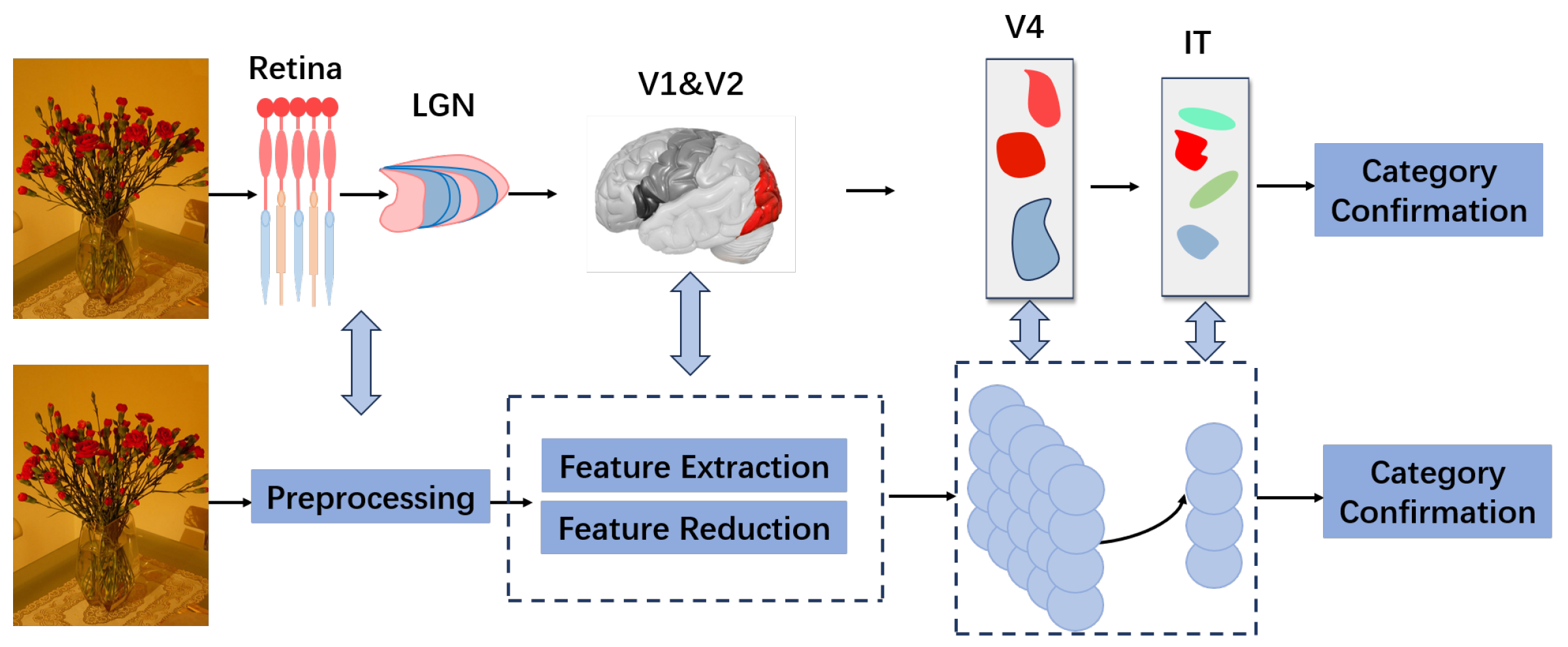

2.2. The Ventral Visual Stream

3. Methodology

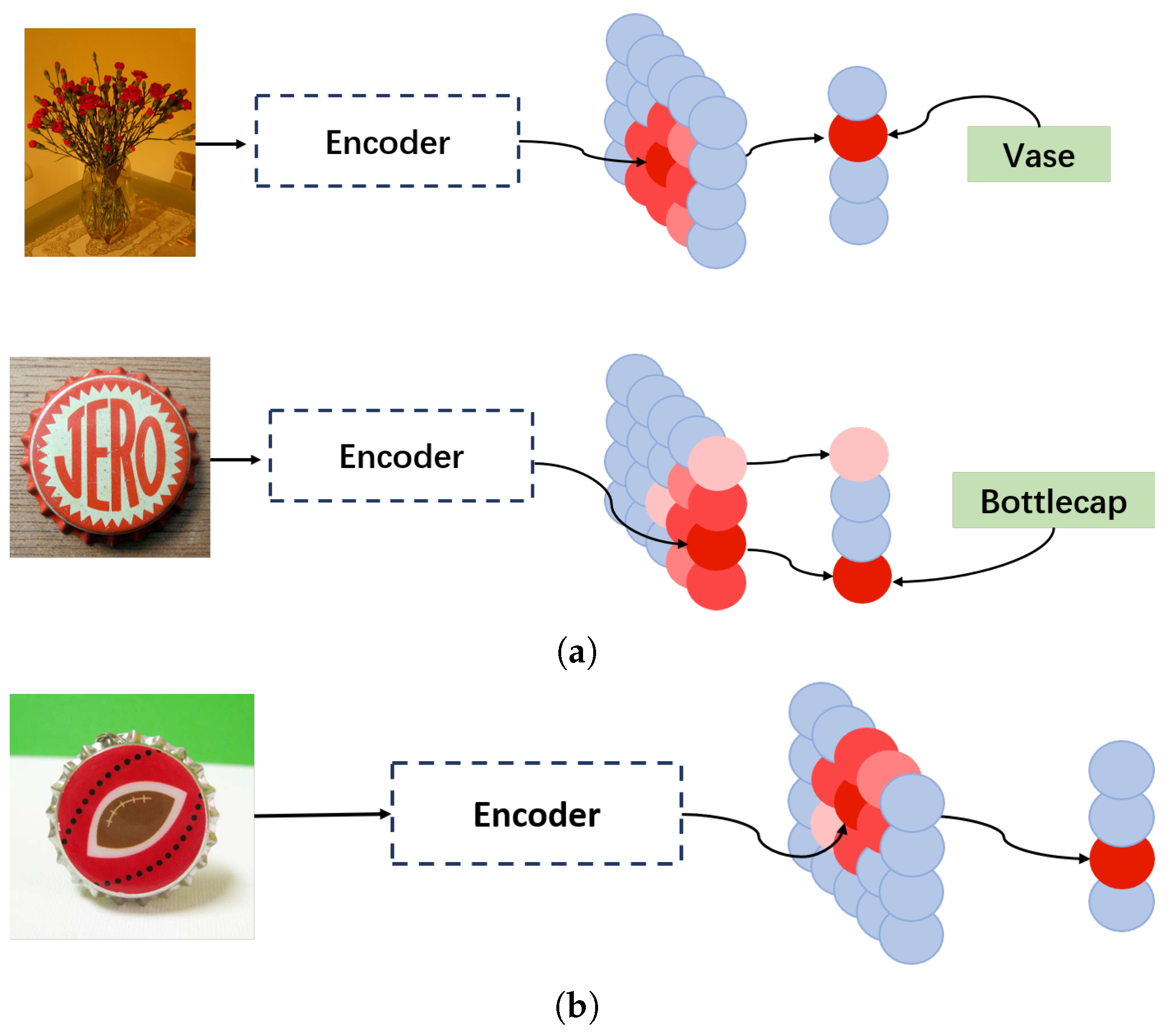

3.1. Architecture of Two Fields

3.2. Approximation of the Static Solution of Neural Fields

3.3. Feature Extraction and Preprocessing

3.4. Training and Prediction

3.4.1. Training Phase

3.4.2. Adaptation of the Scale Parameter

Algorithm 1. Scale adaptation algorithm.

4. Results

4.1. Datasets and Experimental Settings

- CUB200-2011: A benchmark image dataset for fine-grained classification and recognition research. It contains 11,788 images of birds across 200 subclasses.

- CIFAR-FS: The CIFAR-FS dataset (full name: the CIFAR100 Few-Shots dataset) is derived from the CIFAR100 dataset. It contains 100 categories, with 600 images per category (60,000 images in total), each of size 32 × 32 pixels.

- miniImageNet: Derived from ImageNet and intended for meta-learning and few-shot learning studies. It contains 60,000 color images across 100 categories, with 600 images per category.

| | CUB200-2011 | CIFAR-FS | miniImageNet |
|---|---|---|---|
| Instances | 11,788 | 60,000 | 60,000 |
| Classes | 200 | 100 | 100 |
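The result tables for these datasets use column labels such as CUB(5-1) and CUB(5-5). Reading these as 5-way 1-shot and 5-way 5-shot episodes (an assumption, since the notation is not defined in this text), the following is a minimal sketch of how one such episode could be drawn from a labelled dataset. The function name `sample_episode`, the query size of 15 images per class, and the toy label list are all hypothetical choices for illustration and are not taken from the paper.

```python
import random
from collections import defaultdict


def sample_episode(labels, n_way=5, k_shot=1, n_query=15, seed=None):
    """Sample one N-way K-shot episode from a list of class labels.

    Returns (support, query): lists of (sample_index, episode_label) pairs,
    where episode_label runs from 0 to n_way - 1.
    """
    rng = random.Random(seed)

    # Group sample indices by their class label.
    by_class = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_class[lab].append(idx)

    # Only classes with enough samples can fill both support and query sets.
    eligible = [c for c, idxs in by_class.items() if len(idxs) >= k_shot + n_query]
    classes = rng.sample(eligible, n_way)

    support, query = [], []
    for ep_label, c in enumerate(classes):
        picked = rng.sample(by_class[c], k_shot + n_query)
        support += [(i, ep_label) for i in picked[:k_shot]]
        query += [(i, ep_label) for i in picked[k_shot:]]
    return support, query


# Toy label list (hypothetical): 10 classes with 20 samples each.
toy_labels = [c for c in range(10) for _ in range(20)]
support, query = sample_episode(toy_labels, n_way=5, k_shot=1, n_query=15, seed=0)
print(len(support), len(query))  # 5 75
```

Support and query indices are drawn without replacement from the same class pool, so no image appears in both sets of one episode.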

4.2. Comparison with State-of-the-Art Models

4.3. Ablation Experiments

4.3.1. Comparison with Different Distance Metrics

4.3.2. Comparison with Different Feature Extractors and Encoders

5. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ViT | Vision Transformer |
| LGN | Lateral geniculate nucleus |
| V1 | Primary visual cortex |
| V2 | Secondary visual cortex |
| IT | Inferior temporal cortex |

References

1. Fang, W.; Chen, Y.; Ding, J.; Yu, Z.; Masquelier, T.; Chen, D.; Huang, L.; Zhou, H.; Li, G.; Tian, Y. Spikingjelly: An open-source machine learning infrastructure platform for spike-based intelligence. Sci. Adv. 2023, 9, eadi1480.

2. Zhao, X.; Wang, L.; Zhang, Y.; Han, X.; Deveci, M.; Parmar, M. A review of convolutional neural networks in computer vision. Artif. Intell. Rev. 2024, 57, 99.

3. Rahman, M.M.; Munir, M.; Marculescu, R. Emcad: Efficient multi-scale convolutional attention decoding for medical image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 11769–11779.

4. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Virtual Event, 3–7 May 2021.

5. Wang, A.; Chen, H.; Lin, Z.; Han, J.; Ding, G. RepViT: Revisiting Mobile CNN From ViT Perspective. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 15909–15920.

6. Dampfhoffer, M.; Mesquida, T.; Valentian, A.; Anghel, L. Backpropagation-Based Learning Techniques for Deep Spiking Neural Networks: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 11906–11921.

7. Pai, S.; Sun, Z.; Hughes, T.W.; Park, T.; Bartlett, B.; Williamson, I.A.D.; Minkov, M.; Milanizadeh, M.; Abebe, N.; Morichetti, F.; et al. Experimentally realized in situ backpropagation for deep learning in photonic neural networks. Science 2023, 380, 398–404.

8. Meng, Q.; Xiao, M.; Yan, S.; Wang, Y.; Lin, Z.; Luo, Z.Q. Towards Memory- and Time-Efficient Backpropagation for Training Spiking Neural Networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 6143–6153.

9. Wei, W.; Zhang, M.; Qu, H.; Belatreche, A.; Zhang, J.; Chen, H. Temporal-Coded Spiking Neural Networks with Dynamic Firing Threshold: Learning with Event-Driven Backpropagation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 10518–10528.

10. Wright, L.G.; Onodera, T.; Stein, M.M.; Wang, T.; Schachter, D.T.; Hu, Z.; McMahon, P.L. Deep physical neural networks trained with backpropagation. Nature 2021, 601, 549–555.

11. Rani, S.; Kataria, A.; Kumar, S.; Tiwari, P. Federated learning for secure IoMT-applications in smart healthcare systems: A comprehensive review. Knowl.-Based Syst. 2023, 274, 110658.

12. Song, Y.; Wang, T.; Cai, P.; Mondal, S.K.; Sahoo, J.P. A comprehensive survey of few-shot learning: Evolution, applications, challenges, and opportunities. ACM Comput. Surv. 2023, 55, 1–40.

13. Niu, S.; Liu, Y.; Wang, J.; Song, H. A decade survey of transfer learning (2010–2020). IEEE Trans. Artif. Intell. 2021, 1, 151–166.

14. Zhu, Z.; Lin, K.; Jain, A.K.; Zhou, J. Transfer learning in deep reinforcement learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13344–13362.

15. Peng, F.; Yang, X.; Xiao, L.; Wang, Y.; Xu, C. SgVA-CLIP: Semantic-Guided Visual Adapting of Vision-Language Models for Few-Shot Image Classification. IEEE Trans. Multimed. 2024, 26, 3469–3480.

16. Bendou, Y.; Hu, Y.; Lafargue, R.; Lioi, G.; Pasdeloup, B.; Pateux, S.; Gripon, V. Easy-Ensemble Augmented-Shot-Y-Shaped Learning: State-of-the-Art Few-Shot Classification with Simple Components. J. Imaging 2022, 8, 179.

17. Zhang, X.; Meng, D.; Gouk, H.; Hospedales, T. Shallow Bayesian Meta Learning for Real-World Few-Shot Recognition. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 631–640.

18. Hu, S.X.; Li, D.; Stühmer, J.; Kim, M.; Hospedales, T.M. Pushing the Limits of Simple Pipelines for Few-Shot Learning: External Data and Fine-Tuning Make a Difference. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 9058–9067.

19. Zhang, C.; Cai, Y.; Lin, G.; Shen, C. DeepEMD: Differentiable Earth Mover’s Distance for Few-Shot Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 5632–5648.

20. Rong, Y.; Lu, X.; Sun, Z.; Chen, Y.; Xiong, S. ESPT: A Self-Supervised Episodic Spatial Pretext Task for Improving Few-Shot Learning. Proc. AAAI Conf. Artif. Intell. 2023, 37, 9596–9605.

21. Shalam, D.; Korman, S. The balanced-pairwise-affinities feature transform. In Proceedings of the 41st International Conference on Machine Learning, JMLR.org, Vienna, Austria, 21–27 July 2024; ICML’24.

22. Fifty, C.; Duan, D.; Junkins, R.G.; Amid, E.; Leskovec, J.; Re, C.; Thrun, S. Context-Aware Meta-Learning. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

23. Song, M.; Yao, F.; Zhong, G.; Ji, Z.; Zhang, X. Matching Multi-Scale Feature Sets in Vision Transformer for Few-Shot Classification. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 12638–12651.

24. Tang, R.; Song, Q.; Li, Y.; Zhang, R.; Cai, X.; Lu, H.D. Curvature-processing domains in primate V4. eLife 2020, 9, e57502.

25. Jiang, R.; Andolina, I.M.; Li, M.; Tang, S. Clustered functional domains for curves and corners in cortical area V4. eLife 2021, 10, e63798.

26. Bao, P.; She, L.; McGill, M.; Tsao, D.Y. A map of object space in primate inferotemporal cortex. Nature 2020, 583, 103–108.

27. Gordleeva, S.Y.; Tsybina, A.G.; Kazantsev, V.B.; Volgushev, M.; Zefirov, A.V.; Volterra, A. Modeling Working Memory in a Spiking Neuron Network Accompanied by Astrocytes. Front. Cell. Neurosci. 2021, 15, 631485.

28. Kim, R.; Sejnowski, T.J. Strong inhibitory signaling underlies stable temporal dynamics and working memory in spiking neural networks. Nat. Neurosci. 2020, 24, 129–139.

29. Xu, J.; Pan, Y.; Pan, X.; Hoi, S.; Yi, Z.; Xu, Z. RegNet: Self-Regulated Network for Image Classification. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 9562–9567.

30. Fotiadis, P.; Parkes, L.; Davis, K.A.; Satterthwaite, T.D.; Shinohara, R.T.; Bassett, D.S. Structure–function coupling in macroscale human brain networks. Nat. Rev. Neurosci. 2024, 25, 688–704.

31. Bonnen, T.; Yamins, D.L.; Wagner, A.D. When the ventral visual stream is not enough: A deep learning account of medial temporal lobe involvement in perception. Neuron 2021, 109, 2755–2766.e6.

32. Xu, S.; Liu, X.; Almeida, J.; Heinke, D. The contributions of the ventral and the dorsal visual streams to the automatic processing of action relations of familiar and unfamiliar object pairs. NeuroImage 2021, 245, 118629.

33. Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a Few Examples: A Survey on Few-shot Learning. ACM Comput. Surv. 2020, 53, 1–34.

34. Alayrac, J.B.; Donahue, J.; Luc, P.; Miech, A.; Barr, I.; Hasson, Y.; Lenc, K.; Mensch, A.; Millican, K.; Reynolds, M.; et al. Flamingo: A visual language model for few-shot learning. Adv. Neural Inf. Process. Syst. 2022, 35, 23716–23736.

35. Gharoun, H.; Momenifar, F.; Chen, F.; Gandomi, A.H. Meta-learning approaches for few-shot learning: A survey of recent advances. ACM Comput. Surv. 2024, 56, 1–41.

36. Ptito, M.; Bleau, M.; Bouskila, J. The Retina: A Window into the Brain. Cells 2021, 10, 3269.

37. Shepherd, G.M.; Yamawaki, N. Untangling the cortico-thalamo-cortical loop: Cellular pieces of a knotty circuit puzzle. Nat. Rev. Neurosci. 2021, 22, 389–406.

38. Wu, Y.; Zhao, M.; Deng, H.; Wang, T.; Xin, Y.; Dai, W.; Huang, J.; Zhou, T.; Sun, X.; Liu, N.; et al. The neural origin for asymmetric coding of surface color in the primate visual cortex. Nat. Commun. 2024, 15, 516.

39. Semedo, J.D.; Jasper, A.I.; Zandvakili, A.; Krishna, A.; Aschner, A.; Machens, C.K.; Kohn, A.; Yu, B.M. Feedforward and feedback interactions between visual cortical areas use different population activity patterns. Nat. Commun. 2022, 13, 1099.

40. Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. Caltech-UCSD Birds-200-2011 (CUB-200-2011); Technical Report CNS-TR-2011-001; California Institute of Technology: Pasadena, CA, USA, 2011.

41. Bertinetto, L.; Henriques, J.F.; Torr, P.; Vedaldi, A. Meta-learning with differentiable closed-form solvers. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

42. Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching Networks for One Shot Learning. In Proceedings of the Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Curran Associates, Inc.: Nice, France, 2016; Volume 29.

43. Triantafillou, E.; Zemel, R.; Urtasun, R. Few-Shot Learning Through an Information Retrieval Lens. In Proceedings of the Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Nice, France, 2017; Volume 30.

44. Belkin, M.; Niyogi, P. Laplacian Eigenmaps for Dimensionality Reduction and Data Representation. Neural Comput. 2003, 15, 1373–1396.

45. Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 4–9 December 2017; NIPS’17. pp. 4080–4090.

| Method (Backbone) | CUB(5-1) | CUB(5-5) | CIFAR-FS(5-1) | CIFAR-FS(5-5) | MINI(5-1) | MINI(5-5) |
|---|---|---|---|---|---|---|
| EASY (ResNet12) [16] | 0.7856 | 0.9193 | 0.7620 | 0.8900 | 0.7175 | 0.8715 |
| ESPT (ResNet12) [20] | 0.8545 | 0.9402 | - | - | 0.6836 | 0.8411 |
| ProtoNet (ResNet18) [45] | 0.7188 | 0.8742 | 0.7220 | 0.8350 | 0.5416 | 0.7368 |
| ProtoNet (ViT) [45] | 0.8700 | 0.9710 | 0.5770 | 0.8100 | 0.8530 | 0.9600 |
| MetaQDA (ViT) [17] | 0.8830 | 0.9740 | 0.6040 | 0.8320 | 0.8820 | 0.9740 |
| CAML (ViT) [22] | 0.9180 | 0.9710 | 0.7080 | 0.8476 | 0.9620 | 0.9860 |
| DeepEMD (ViT) [19] | - | - | 0.8280 | 0.9310 | 0.9050 | 0.9720 |
| PMF-BPA (ViT) [21] | 0.9580 | 0.9712 | 0.8710 | 0.9470 | 0.9520 | 0.9870 |
| P>M>F (ViT) [18] | 0.9230 | 0.9700 | 0.8430 | 0.9220 | 0.9530 | 0.9840 |
| FSViT (ViT) [23] | - | - | 0.8370 | 0.9360 | 0.9590 | 0.9850 |
| SgVA-CLIP (ViT) [15] | - | - | - | - | 0.9795 | 0.9872 |
| Ours (ViT) | 0.9242 | 0.9524 | 0.9811 | 0.9846 | 0.9887 | 0.9902 |

| Metric | CUB(5-1) | CUB(5-5) | CIFAR-FS(5-1) | CIFAR-FS(5-5) | MINI(5-1) | MINI(5-5) |
|---|---|---|---|---|---|---|
| Euclidean | 0.6831 | 0.7409 | 0.7178 | 0.7861 | 0.7878 | 0.8243 |
| Cosine | 0.9242 | 0.9524 | 0.9811 | 0.9846 | 0.9887 | 0.9902 |
| Correlation | 0.9125 | 0.9494 | 0.9785 | 0.9816 | 0.9873 | 0.9899 |
| Minkowski | 0.8839 | 0.9192 | 0.9517 | 0.9596 | 0.9772 | 0.9825 |
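For reference, the four metrics compared in this table can be written in a few lines. The sketch below uses their standard textbook definitions on plain NumPy vectors. It is not the authors' implementation, and the Minkowski order `p=3` is an assumed value, since the order used in the experiments is not stated here.

```python
import numpy as np


def euclidean(u, v):
    """L2 distance between two feature vectors."""
    return float(np.linalg.norm(u - v))


def cosine(u, v):
    """Cosine distance: 1 minus the cosine of the angle between u and v."""
    return float(1.0 - np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))


def correlation(u, v):
    """Correlation distance: cosine distance after centering each vector."""
    return cosine(u - u.mean(), v - v.mean())


def minkowski(u, v, p=3):
    """Minkowski distance of order p (p=3 is an assumption, not from the paper)."""
    return float(np.sum(np.abs(u - v) ** p) ** (1.0 / p))


# Two vectors that point in the same direction but differ in length.
u = np.array([1.0, 2.0, 3.0])
v = np.array([2.0, 4.0, 6.0])

for name, fn in [("euclidean", euclidean), ("cosine", cosine),
                 ("correlation", correlation), ("minkowski", minkowski)]:
    print(f"{name:12s} {fn(u, v):.4f}")
```

In this toy case the cosine and correlation distances are zero up to floating-point error, because both ignore vector length, while the Euclidean and Minkowski distances are not. This is only an illustration of how the metrics differ, not an explanation of the accuracy gaps in the table.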

| Encoder | Classifier | CUB(5-1) | CUB(5-5) | CIFAR-FS(5-1) | CIFAR-FS(5-5) | MINI(5-1) | MINI(5-5) |
|---|---|---|---|---|---|---|---|
| ViT | KNN | 0.9099 | 0.9302 | 0.9678 | 0.9726 | 0.9720 | 0.9786 |
| ViT | SVM | 0.7462 | 0.9445 | 0.7670 | 0.9747 | 0.7914 | 0.9833 |
| ViT | Ours | 0.9242 | 0.9524 | 0.9811 | 0.9846 | 0.9887 | 0.9902 |
| ResNet18 | KNN | 0.8300 | 0.8632 | 0.7768 | 0.8272 | 0.6495 | 0.7201 |
| ResNet18 | SVM | 0.6420 | 0.8670 | 0.5825 | 0.8185 | 0.4956 | 0.7150 |
| ResNet18 | Ours | 0.8435 | 0.8738 | 0.7912 | 0.8380 | 0.6743 | 0.7374 |
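To make the KNN baseline in this table concrete, the following is a minimal sketch of a k-nearest-neighbour classifier applied to pre-extracted features for a single episode. The choice of cosine similarity, the value `k=1`, the 64-dimensional synthetic features, and the function name `knn_predict` are all assumptions made for illustration. The configuration actually used for the reported baseline is not given in this text.

```python
import numpy as np


def knn_predict(support_x, support_y, query_x, k=1):
    """Label each query feature by majority vote among its k most
    cosine-similar support features (ties go to the smaller label)."""
    # L2-normalise rows so that a dot product equals cosine similarity.
    s = support_x / np.linalg.norm(support_x, axis=1, keepdims=True)
    q = query_x / np.linalg.norm(query_x, axis=1, keepdims=True)
    sim = q @ s.T                                   # (n_query, n_support)
    nearest = np.argsort(-sim, axis=1)[:, :k]       # indices of the top-k supports
    votes = support_y[nearest]                      # (n_query, k) labels
    n_classes = int(support_y.max()) + 1
    counts = np.stack([np.bincount(row, minlength=n_classes) for row in votes])
    return counts.argmax(axis=1)


# Synthetic 5-way 5-shot episode: Gaussian clusters stand in for encoder features.
rng = np.random.default_rng(0)
centers = rng.normal(size=(5, 64))
support_y = np.repeat(np.arange(5), 5)
support_x = centers[support_y] + 0.1 * rng.normal(size=(25, 64))
query_y = np.repeat(np.arange(5), 15)
query_x = centers[query_y] + 0.1 * rng.normal(size=(75, 64))

pred = knn_predict(support_x, support_y, query_x, k=1)
acc = float((pred == query_y).mean())
print(f"toy episode accuracy: {acc:.4f}")
```

In practice, `support_x` and `query_x` would be replaced by features from the chosen encoder (ViT or ResNet18), and accuracy would be averaged over many episodes.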

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Su, J.; Xing, L.; Li, T.; Xiang, N.; Shi, J.; Jin, D. Reproducing the Few-Shot Learning Capabilities of the Visual Ventral Pathway Using Vision Transformers and Neural Fields. Brain Sci. 2025, 15, 882. https://doi.org/10.3390/brainsci15080882