Abstract

Background/Objectives: Accurate and efficient segmentation of brain tumors from 3D MRI data remains a significant challenge in medical imaging. Conventional deep learning methods, such as convolutional neural networks and transformer-based models, frequently introduce significant computational overhead or fail to represent multi-scale features effectively. Methods: This paper presents a lightweight deep learning framework that uses adaptive discrete wavelet decomposition and iterative axial attention to improve 3D brain tumor segmentation. The wavelet decomposition module captures multi-scale information by decomposing the input into frequency sub-bands, thereby mitigating the detail loss often associated with standard downsampling methods. Ablation studies confirm that this module improves segmentation accuracy, particularly in preserving the finer structural details of tumor components. In addition, the iterative axial factorization attention reduces the computational burden of 3D spatial modeling by computing attention sequentially along individual axes, preserving long-range dependencies while consuming minimal resources. Results: Our model achieves average Dice scores of 85.0% on BraTS2020 and 88.1% on FeTS2022 while using only 5.23 million parameters and 9.75 GFLOPs. Compared with state-of-the-art methods, it effectively balances accuracy and efficiency, making it suitable for resource-constrained clinical applications. Conclusions: This study underscores the advantages of integrating frequency-domain analysis with optimized attention mechanisms, paving the way for scalable, high-performance medical image segmentation algorithms with broader clinical diagnostic applications.

1. Introduction

As the most common primary malignant tumors of the central nervous system, gliomas are highly heterogeneous and aggressive and carry a poor clinical prognosis. Accurate imaging assessment is crucial for surgical planning and treatment monitoring [1]. Multimodal magnetic resonance imaging (MRI) provides complementary information on tumor anatomy and function through T1-weighted, contrast-enhanced T1-weighted (T1ce), T2-weighted, and FLAIR sequences, but manually delineating the tumor region is time-consuming and susceptible to subjective bias [2]. In recent years, deep learning techniques have substantially advanced the automation of medical image segmentation; however, 3D glioma segmentation still faces many challenges: variable tumor morphology, fuzzy boundaries, large scale variations, and complex semantic features in different sub-regions such as the enhancing tumor and the peritumoral edema [3]. Balancing computational efficiency and segmentation accuracy while effectively modeling long-range dependencies and multi-scale features has therefore become a core problem in this field.

Traditional convolutional neural networks (CNNs) dominate medical image segmentation because of their strong local feature extraction capability. The encoder-decoder architecture exemplified by U-Net [4] fuses shallow detail features with deep semantic features through skip connections and performs well in brain tumor segmentation. Subsequent studies further improved performance by introducing residual connections [5], attention mechanisms [6], and adaptive normalization [7]. However, CNNs are limited by their local receptive fields, which makes it difficult to capture the global spatial correlations of tumors; they are especially ineffective at segmenting irregularly shaped or small lesions [8]. In addition, the high resolution of 3D medical images drives up the parameter count and computational complexity of these models, which limits their efficient deployment in clinical environments [9].

To overcome the limitations of CNNs, researchers have introduced the Transformer [10] into medical image segmentation. The Vision Transformer (ViT) [11] models global context through self-attention and performs excellently on natural image tasks. TransUNet [8] was the first to embed ViT into the bottleneck of U-Net, using the Transformer to encode long-range dependencies while retaining the local feature extraction capability of the CNN. However, the high computational complexity and data dependence of ViT limit its application to 3D medical imaging: the original ViT divides the image into fixed-size patches, which discards fine spatial information, and its pretrained weights depend on large-scale datasets [12]. Swin UNETR [13] reduces computational complexity through hierarchical shifted-window self-attention and performs well, but its parameter count remains high, which makes it unsuitable for lightweight deployment. Hybrid architectures have also been proposed: TransBTS [14], for example, combines a 3D CNN and a Transformer in the encoder and improves segmentation accuracy; however, its multi-stage feature fusion is inefficient, and it does not fully consider the decoupling of multi-scale frequency information.

Existing methods still have significant limitations. First, conventional downsampling operations such as pooling or strided convolution tend to lose high-frequency details; frequency-domain methods such as the wavelet transform can preserve multi-scale features, but their non-differentiability restricts end-to-end optimization [15]. Second, the computational complexity of 3D global self-attention grows quadratically with the number of voxels, which makes it difficult to apply directly to high-resolution MRI data [16]. Third, most existing decoders rely on simple upsampling and skip connections and lack dynamic calibration and structured fusion of multi-scale features, which leads to blurred segmentation boundaries and false positives [17]. In addition, most models do not fully address class imbalance, especially for small target regions such as the enhancing tumor (ET), which is prone to under-segmentation [18].

Furthermore, the deployment of deep learning models in clinical settings, such as intraoperative surgical navigation systems or resource-constrained diagnostic centers, imposes stringent requirements on computational efficiency [19]. Surgical navigation, for instance, demands near real-time segmentation feedback to guide interventions, necessitating models with low latency and minimal computational footprint.

To address these limitations, this paper proposes a lightweight 3D segmentation framework for gliomas, which we name the Wavelet-guided Iterative Axial Factorization (WIAF) model. First, an adaptive discrete wavelet domain feature decoupling module (AWD-FD) separates multi-frequency-band features via a three-dimensional discrete wavelet transform and uses attentional gating to dynamically amplify task-relevant frequency components. Second, an iterative axial factorization transformer (IAFT) substantially reduces computational complexity by decomposing 3D self-attention into serialized computations along the height, width, and depth directions. Third, a multi-scale feature fusion decoder (MSFFD) improves boundary segmentation accuracy through axial attention-guided hierarchical feature alignment combined with a progressive upsampling strategy. Our experiments show that the model achieves state-of-the-art-level performance on both the BraTS2020 and FeTS2022 datasets with only 5.23 M parameters and 9.75 GFLOPs.

The contributions of this paper can be summarized as follows:

- A 3D wavelet transform combined with a wavelet coefficient gating mechanism that achieves end-to-end multi-band feature decoupling and dynamic calibration.

- An iterative axial factorization attention mechanism that efficiently models long-range dependencies while preserving local geometric structures.

- A lightweight multi-scale decoder that balances computational efficiency and segmentation accuracy through a joint channel-space optimization strategy.

The remainder of this paper is structured as follows: Section 2 reviews related research methods and progress; Section 3 details the model architecture and key technologies; Section 4 demonstrates the experimental design and result analysis; Section 5 discusses the model limitations and future directions. The source code of the proposed model is available in the Supplementary Materials.

2. Related Work

2.1. Convolutional Neural Network-Based Medical Image Segmentation Methods

Since the introduction of U-Net [4], the encoder-decoder architecture has become the dominant paradigm for medical image segmentation. For 3D glioma segmentation, 3D U-Net [20] extends U-Net with 3D convolutions, but its restricted receptive field limits accuracy on small tumors. Subsequent studies, such as Res-UNet [21], which improved gradient propagation with residual connections, and Dense-UNet [22], which introduced dense connections, did not effectively solve the multi-scale feature fusion problem caused by tumor heterogeneity.

2.2. Attention Mechanism and Feature Calibration Strategy

Attention mechanisms substantially improve medical image segmentation through dynamic feature weighting. nnFormer, proposed by Zhou et al. [23], interleaves convolution and self-attention operations and introduces local and global volume-based self-attention to learn volumetric representations. TransBTS, proposed by Wang et al. [14], models global dependencies with a Transformer bottleneck, but its multi-stage feature alignment is inefficient. Attention U-Net, proposed by Oktay et al. [24], introduces gated attention into the encoder-decoder skip connections. TransUNet [8] embeds a Vision Transformer into the encoder to capture long-range dependencies with self-attention, but its 2D patch partitioning loses 3D spatial continuity. UNETR, proposed by Hatamizadeh et al. [9], uses a pure Transformer encoder, which is prone to overfitting on small datasets. To balance local detail and global context, Zhang et al. [25] designed a hybrid CNN-Transformer architecture, whose multi-branch computation nevertheless remains costly.

2.3. Application of Wavelet Transform in Medical Image Analysis

The wavelet transform offers distinct advantages in medical image denoising and feature decoupling owing to its multi-resolution analysis capability. Liu et al. [26] proposed MWCNN, which integrates the discrete wavelet transform into the CNN downsampling layers, but the non-differentiable wavelet operation restricted end-to-end optimization. Kang et al. [27] constructed a wavelet residual network that suppresses noise propagation through frequency-domain decomposition; however, it discards high-frequency information directly, which easily leads to the loss of edge details. Zhao et al. [28] proposed WRANet, which uses learnable wavelet basis functions to achieve dynamic frequency-band selection. Huang et al. [29] further designed WINNet, a reversible wavelet network that improves feature robustness through joint frequency-domain and spatial-domain optimization.

2.4. Lightweight Transformer Architecture Design

To address the high computational complexity of the standard Transformer, axial attention has been widely explored. Axial-DeepLab, proposed by Wang et al. [30], decomposes 2D self-attention into 1D computations along the height and width directions. Valanarasu et al. [31] proposed MedT, which introduces gated axial attention and dynamically adjusts the contribution of positional encodings through learnable gating parameters. Perera et al. [32] proposed SegFormer3D, a hierarchical Transformer with an all-MLP decoder that has only 4.5 M parameters yet achieves performance similar to nnFormer [23]. In addition, EfficientViT [33] reduces memory consumption through cascaded group attention, but its channel compression strategy leads to the loss of high-frequency features.

2.5. Multi-Scale Feature Fusion

Feature fusion and information interaction play an important role in boundary accuracy. CE-Net, proposed by Gu et al. [34], introduces a feature pyramid module to enhance multi-scale context awareness, but its cascaded upsampling strategy creates a memory bottleneck. CA-Net, proposed by Gu et al. [35] for more accurate and interpretable medical image segmentation, calibrates encoder features with joint spatial and channel attention. APRNet, proposed by Zhuang et al. [36], uses 3D anisotropic pyramidal convolutional reversible residual sequences to capture inter-dimensional information in multimodal images. Poudel et al. [37] designed a multi-scale attention mechanism that enlarges the receptive field with dilated convolutions, but it is not sufficiently adapted to irregular tumor morphology.

Compared with existing studies, this work differs in three key respects. The proposed AWD-FD module explicitly decouples frequency-domain features and adaptively enhances task-relevant frequency components through a dynamic gating mechanism. The proposed IAFT module addresses the poor adaptation of conventional CNNs to irregular tumor morphology through an axial decomposition strategy that efficiently captures long-range dependencies while preserving local geometric structures. Finally, the overall framework models 3D voxel-level spatial correlations accurately while reducing the parameter count and computational cost through an axial attention-guided hierarchical feature alignment strategy.

3. Methodology

3.1. Overview of the Proposed Model

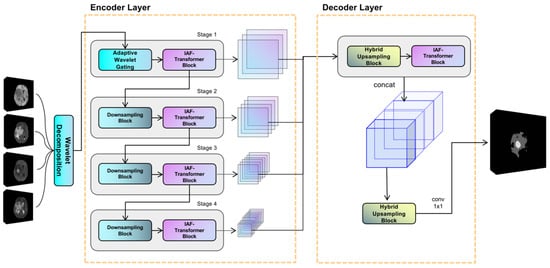

The proposed model utilizes an encoder-decoder structure, and its overall framework is illustrated in Figure 1. The encoder is composed of four hierarchical stages for extracting multiscale features. Notably, the first stage uniquely incorporates an adaptive discrete wavelet domain feature decoupling (AWD-FD) design to process the initial input, while subsequent stages utilize standard convolutional downsampling. Each stage integrates an iterative axial factorization transformer (IAFT) module. The decoder is designed with multi-scale feature fusion, which aggregates features from different encoder stages and restores spatial resolution.

Figure 1.

Overview of the proposed WIAF model architecture, highlighting the key components and their interactions for processing 3D medical imaging data.

In the encoder, the first stage applies the three-dimensional discrete wavelet transform to decompose the input volume into eight wavelet sub-bands, ranging from low to high frequency, along the three orthogonal axes (height, width, and depth). Subsequent stages progressively reduce the spatial resolution using strided convolution while expanding the channel dimension. All stages employ IAFT blocks to model long-range dependencies through axial decomposition. The decoder upsamples the feature maps to a uniform resolution and concatenates them along the channel dimension, and a final convolutional layer outputs the prediction.

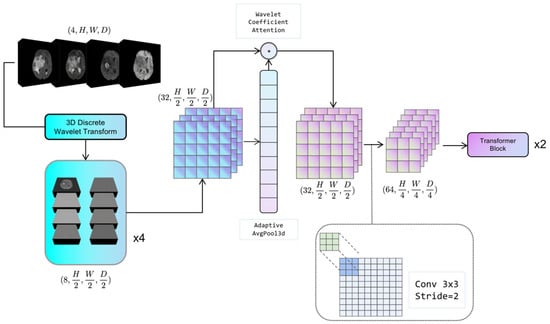

3.2. Adaptive Discrete Wavelet Domain Feature Decoupling

The configuration of the adaptive discrete wavelet domain feature decoupling module is illustrated in Figure 2. The module comprises two components: a non-learnable wavelet decomposition part and a learnable part that performs wavelet coefficient attention gating and downsampling. We precompute the non-learnable part, together with the deterministic (non-random) preprocessing transforms, and serialize the results in the Hierarchical Data Format (HDF5) [38], a file format for organizing and storing large amounts of data. This prevents the non-differentiable wavelet transform from consuming model computation resources during training. The learnable part is trained jointly with the backbone network.

Figure 2.

The schematic of Adaptive Wavelet Domain Feature Decoupling (AWD-FD) module. It depicts the process of feature decomposition and integration in the wavelet domain for multi-scale feature extraction.

3.2.1. Discrete Wavelet Decomposition

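For a given single-modality 3D medical image tensor $X \in \mathbb{R}^{H \times W \times D}$, the Haar wavelet transform is used: its low-pass filter $\phi$ and high-pass filter $\psi$ are applied along each axis to decompose the tensor into eight wavelet coefficients, as given in Equation (1):

$$X_{f_1 f_2 f_3} = \left( \left( X \circ_{H} f_1 \right) \circ_{W} f_2 \right) \circ_{D} f_3, \qquad f_1, f_2, f_3 \in \{\phi, \psi\} \tag{1}$$

where $\circ_{H}$, $\circ_{W}$, and $\circ_{D}$ denote the convolution operation (with a stride of 2) along the height, width, and depth axes, respectively. Each modality of the image is decomposed into 8 wavelet coefficients; if the input 3D medical image contains $C$ modalities, $C$ is the number of input channels, and the combined wavelet coefficients of all modalities can be expressed as $X_{sub} \in \mathbb{R}^{8C \times \frac{H}{2} \times \frac{W}{2} \times \frac{D}{2}}$.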
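The decomposition in Equation (1) can be sketched in NumPy as below. This is a minimal illustration, not the authors' implementation: it assumes the orthonormal Haar filters φ = [1, 1]/√2 and ψ = [1, −1]/√2 applied with stride 2 (the text does not state the filter normalization), even-sized volumes, and hypothetical function names (`haar_1d`, `haar_dwt3d`, `wavelet_stem`).

```python
import numpy as np


def haar_1d(x: np.ndarray, axis: int) -> tuple[np.ndarray, np.ndarray]:
    """One level of orthonormal Haar analysis along `axis` (even length assumed).

    Pairs neighbouring samples: low = (even + odd)/sqrt(2), high = (even - odd)/sqrt(2),
    which corresponds to filtering with phi/psi followed by downsampling by 2.
    """
    even = np.take(x, np.arange(0, x.shape[axis], 2), axis=axis)
    odd = np.take(x, np.arange(1, x.shape[axis], 2), axis=axis)
    return (even + odd) / np.sqrt(2.0), (even - odd) / np.sqrt(2.0)


def haar_dwt3d(volume: np.ndarray) -> dict[str, np.ndarray]:
    """Split one (H, W, D) volume into 8 sub-bands keyed 'LLL' ... 'HHH'.

    The i-th letter gives the filter (L = phi, H = psi) applied along axis i.
    """
    bands = {"": volume}
    for axis in range(3):
        split = {}
        for key, arr in bands.items():
            low, high = haar_1d(arr, axis)
            split[key + "L"] = low
            split[key + "H"] = high
        bands = split
    return bands


def wavelet_stem(x: np.ndarray) -> np.ndarray:
    """Map a (C, H, W, D) multimodal input to X_sub with shape (8C, H/2, W/2, D/2)."""
    return np.concatenate(
        [np.stack(list(haar_dwt3d(modality).values()), axis=0) for modality in x],
        axis=0,
    )


x = np.random.default_rng(0).standard_normal((4, 16, 16, 16))  # 4 MRI modalities
x_sub = wavelet_stem(x)
print(x_sub.shape)  # (32, 8, 8, 8)
```

Because the orthonormal Haar transform preserves energy, the sum of squared coefficients equals that of the input, and a constant volume places all of its energy in the LLL (all low-pass) sub-band.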

3.2.2. Adaptive Feature Recalibration

This component dynamically adjusts the model's sensitivity to different wavelet coefficients: Global Average Pooling (GAP) is applied to $X_{sub}$, and a Multi-Layer Perceptron (MLP) generates a learnable channel weight vector $w$. In Equation (2), $W_1$ and $W_2$ denote the two weight matrices of the fully connected layers:

The symbol $\sigma$ denotes the sigmoid activation function, which produces weights between 0 and 1. The recalibrated features are computed by Equation (3):

$$\tilde{X}_{sub} = w \odot X_{sub} \tag{3}$$

where $\odot$ denotes the Hadamard (element-wise) product, with $w$ broadcast over the spatial dimensions. The module thereby enables the model to adaptively suppress redundant information and enhance task-relevant frequency components.
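A minimal squeeze-and-excitation style sketch of the recalibration in Equations (2) and (3) follows. Beyond what the text states (GAP, two weight matrices W1 and W2, the sigmoid σ, and the Hadamard product), the ReLU between the two fully connected layers, the reduction ratio r, the absence of bias terms, and the function names are hypothetical choices.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def wavelet_gate(x_sub: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Recalibrate the K = 8C sub-band channels of x_sub (shape (K, h, w, d)).

    w1: (K // r, K) and w2: (K, K // r) are the two fully connected weight matrices.
    """
    pooled = x_sub.mean(axis=(1, 2, 3))          # GAP over space -> (K,)
    hidden = np.maximum(w1 @ pooled, 0.0)        # assumed ReLU between the FC layers
    weights = sigmoid(w2 @ hidden)               # channel weights in (0, 1)
    return weights[:, None, None, None] * x_sub  # Hadamard product, broadcast over space


rng = np.random.default_rng(1)
K, r = 32, 4                                     # 8 sub-bands x 4 modalities; r is assumed
x_sub = rng.standard_normal((K, 8, 8, 8))
w1 = 0.1 * rng.standard_normal((K // r, K))
w2 = 0.1 * rng.standard_normal((K, K // r))
out = wavelet_gate(x_sub, w1, w2)
```

Channels whose weights approach 0 are suppressed; with W2 = 0, every weight equals σ(0) = 0.5, so the gate simply halves all sub-bands.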

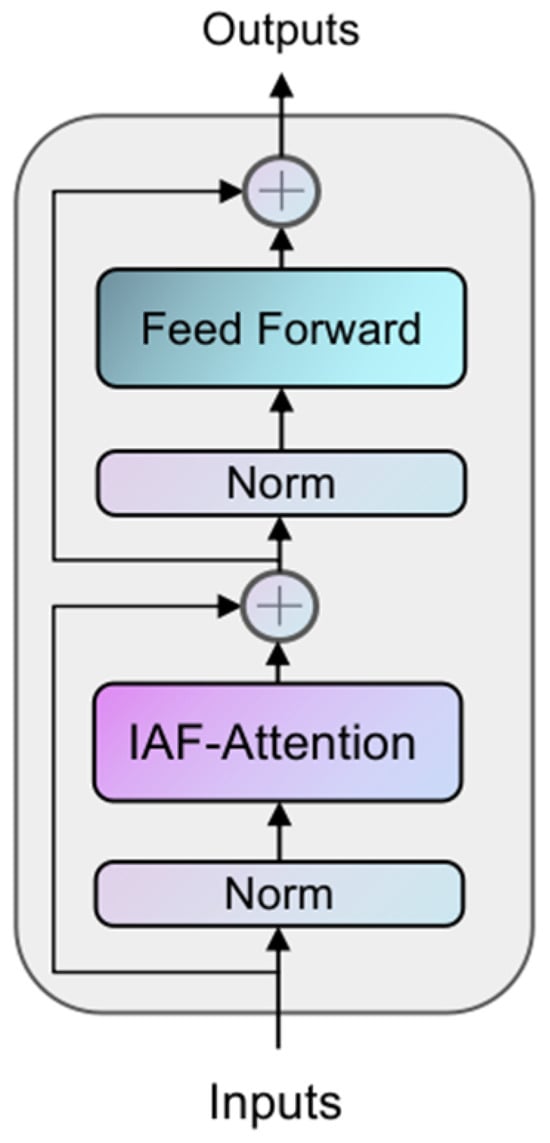

3.3. Iterative Axial Factorization Transformer

In 3D medical image segmentation tasks, traditional global self-attention mechanisms face the challenge of high computational complexity. To solve this problem, this study proposes the IAFT module, which achieves efficient feature modeling through axial decomposition and iterative optimization. The core idea is to decompose the 3D spatial attention into serialized local attention computations in three orthogonal directions (height H, width W, and depth D), which is mainly used to balance computational efficiency and global context modeling.

The structure of the IAFT module is shown in Figure 3: the input tensor is layer-normalized and fed into the Iterative Axial Factorization Attention, and features from different levels are then fused through residual connections.

Figure 3.

The architecture of the IAFT module. Each block contains an Iterative Axial Factorization Attention (IAF-Attention) layer and an MLP, and incorporates residual connections.

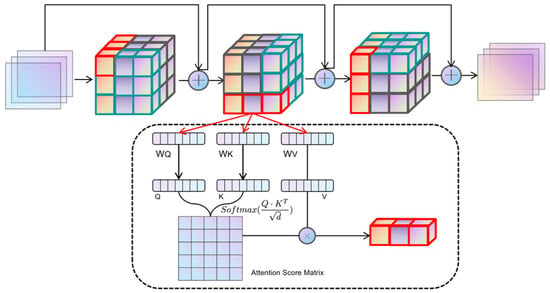

Given an input tensor $X$ of shape (B, N, C), where $N = H \times W \times D$, the dimensions are rearranged for each axis $\alpha$ by tensor reshaping so that the sequence $Z_\alpha$ runs along the direction of $\alpha$. For example, along the height axis H the tensor is reshaped into a tensor $Z_h$ of shape (B × W × D, H, C). For each axis-wise reshaped tensor $Z_\alpha$, the query, key, and value are computed with the learnable projection matrices $W_Q$, $W_K$, and $W_V$, respectively: $Q_\alpha = Z_\alpha W_Q$, $K_\alpha = Z_\alpha W_K$, and $V_\alpha = Z_\alpha W_V$. Following standard multi-head self-attention, $Q_\alpha$, $K_\alpha$, and $V_\alpha$ are split into num_heads heads, and scaled dot-product attention is computed for each head, as given in Equation (4):

$$\mathrm{Attention}(Q_\alpha, K_\alpha, V_\alpha) = \mathrm{softmax}\left( \frac{Q_\alpha K_\alpha^{\top}}{\sqrt{d_h}} \right) V_\alpha \tag{4}$$

where $\sqrt{d_h}$ is the scaling factor and $d_h$ equals the feature dimension of the transformer block divided by num_heads. Denoting by $\mathcal{A}_{\alpha}(\cdot)$ the reshaping along axis $\alpha$, the attention of Equation (4), and the inverse reshaping, the outputs of the three axial passes are combined sequentially through residual connections, as given in Equation (5):

$$X^{(t)} = \mathrm{SiLU}\left( X^{(t-1)} + \mathcal{A}_{\alpha_t}\left( X^{(t-1)} \right) \right), \qquad t = 1, 2, 3, \quad (\alpha_1, \alpha_2, \alpha_3) = (H, W, D), \quad X^{(0)} = X \tag{5}$$

where SiLU denotes the Sigmoid Linear Unit activation function [39] and $t$ denotes the iteration index. Unlike window-based attention mechanisms (e.g., Swin Transformer), which approximate global interactions through shifted local windows, IAFT computes attention sequentially along each full axis, which directly captures long-range dependencies along individual anatomical axes. Because of the iterative structure of Equation (5), in which the output of one axial pass (after the residual connection and SiLU) becomes the input of the next, contextual information from all three dimensions is integrated: after the H, W, and D passes, each voxel can in principle receive information from every other voxel in the volume. This iterative refinement across axes helps model complex 3D geometric structures and inter-dependencies more effectively than factorizing attention independently without iteration or relying solely on local window interactions, particularly for elongated or irregularly shaped tumors.

This approach reduces the computational complexity from $O\left((HWD)^2 C\right)$ for full 3D self-attention to $O\left(HWD\,(H + W + D)\,C\right)$, while strengthening the model's geometric modeling of anatomical structures by maintaining spatial localization and capturing extensive contextual cues. The overall operation of Iterative Axial Factorization Attention is shown in Figure 4.
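The following NumPy sketch illustrates Equations (4) and (5) on a single feature map with the batch dimension omitted. It is an illustration under stated assumptions, not the authors' code: separate W_Q, W_K, W_V per axis, no output projection or positional encoding, and the SiLU-after-residual reading of Equation (5) are assumptions, and all function names are hypothetical. The helper `score_counts` compares the number of pairwise attention scores (ignoring the channel factor C) for full and axial attention, matching the complexity expressions above.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)      # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def silu(z: np.ndarray) -> np.ndarray:
    return z / (1.0 + np.exp(-z))                # z * sigmoid(z)


def axial_attention(x, axis, wq, wk, wv, num_heads):
    """Multi-head self-attention along one spatial axis of x with shape (H, W, D, C)."""
    c = x.shape[-1]
    dh = c // num_heads                          # per-head dimension d_h
    z = np.moveaxis(x, axis, 2)                  # attended axis just before channels
    lead, length = z.shape[:2], z.shape[2]
    z = z.reshape(-1, length, c)                 # each remaining (i, j) pair is one sequence

    def heads(t):                                # (B, L, C) -> (B, heads, L, d_h)
        return t.reshape(t.shape[0], length, num_heads, dh).transpose(0, 2, 1, 3)

    q, k, v = heads(z @ wq), heads(z @ wk), heads(z @ wv)
    attn = softmax(q @ k.transpose(0, 1, 3, 2) / np.sqrt(dh))   # Eq. (4)
    out = (attn @ v).transpose(0, 2, 1, 3).reshape(-1, length, c)
    return np.moveaxis(out.reshape(*lead, length, c), 2, axis)  # undo the reshaping


def iaf_attention(x, params, num_heads=2):
    """Eq. (5) as read here: X(t) = SiLU(X(t-1) + A_axis(X(t-1))) over H -> W -> D."""
    for axis, (wq, wk, wv) in zip((0, 1, 2), params):
        x = silu(x + axial_attention(x, axis, wq, wk, wv, num_heads))
    return x


def score_counts(h, w, d):
    """Pairwise attention scores: full 3D attention vs. the three axial passes."""
    n = h * w * d
    return n * n, n * (h + w + d)


rng = np.random.default_rng(0)
C = 8
x = rng.standard_normal((4, 5, 6, C))
params = [tuple(0.1 * rng.standard_normal((C, C)) for _ in range(3)) for _ in range(3)]
y = iaf_attention(x, params)
full, axial = score_counts(32, 32, 32)
print(full // axial)  # 341
```

For a 32 × 32 × 32 feature map, the three axial passes compute roughly 341 times fewer attention scores than full 3D self-attention, which is the saving that the complexity expressions predict.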

Figure 4.

The schematic of IAF-Attention. The input tensor is reshaped sequentially along each orthogonal direction, and attention is computed along that direction.

3.4. Multi-Scale Feature Fusion Decoder

To integrate the multi-resolution features output by the encoder effectively, this study designs a lightweight hybrid upsampling decoder. The decoder produces accurate voxel-level predictions through hierarchical feature alignment, channel attention weighting, and progressive upsampling.

For the features output by the four encoder stages, upsampling is performed using a combination of transposed convolution and trilinear interpolation so that all feature maps are aligned to a uniform spatial resolution. The upsampled features then undergo joint channel-space optimization via a lightweight axial attention module: the features are flattened into a sequence and fed into IAFT to compute cross-positional dependencies, low-frequency anatomical structural information is preserved via a residual connection, and the SiLU activation function enhances nonlinear representational capacity.

The aligned multi-scale features are concatenated along the channel dimension and compressed to a uniform channel dimension by a 1 × 1 convolution. Finally, a progressive upsampling strategy is adopted: transposed convolution first restores the resolution of the fused feature maps, and trilinear interpolation then refines edge details and restores the original resolution. The class probability maps are output through a pointwise convolution.
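A shape-level NumPy sketch of the fusion path described above: align four encoder outputs to one resolution, concatenate along channels, compress with a pointwise (1 × 1) convolution, upsample, and classify with another pointwise convolution. To stay short it substitutes nearest-neighbour upsampling for the transposed-convolution and trilinear path, omits the IAFT-based axial attention step, and uses hypothetical channel widths, class count, and function names; it illustrates tensor shapes only.

```python
import numpy as np


def upsample_nearest(x: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a (C, h, w, d) map by an integer factor
    (a stand-in for the transposed convolution / trilinear interpolation in the text)."""
    for axis in (1, 2, 3):
        x = np.repeat(x, factor, axis=axis)
    return x


def pointwise_conv(x: np.ndarray, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """1x1x1 convolution, i.e. a per-voxel linear map over channels; weight: (C_out, C_in)."""
    return np.einsum("oc,chwd->ohwd", weight, x) + bias[:, None, None, None]


def fuse(features, size, w_fuse, b_fuse):
    """Align all stages to `size`, concatenate along channels, compress channels."""
    aligned = [upsample_nearest(f, size // f.shape[1]) for f in features]
    return pointwise_conv(np.concatenate(aligned, axis=0), w_fuse, b_fuse)


rng = np.random.default_rng(0)
widths, sizes = (8, 16, 32, 64), (16, 8, 4, 2)     # hypothetical stage outputs
feats = [rng.standard_normal((c, s, s, s)) for c, s in zip(widths, sizes)]
fused = fuse(feats, 16, 0.05 * rng.standard_normal((16, sum(widths))), np.zeros(16))
# 4 output classes is an assumption (e.g. background plus three tumour labels).
logits = pointwise_conv(upsample_nearest(fused, 2), rng.standard_normal((4, 16)), np.zeros(4))
print(fused.shape, logits.shape)  # (16, 16, 16, 16) (4, 32, 32, 32)
```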

MSFFD employs a phased feature processing strategy to avoid the memory bottleneck that arises when full-resolution features are processed concurrently. Axial attention is incorporated into decoding to preserve anatomical consistency and strengthen the modeling of tumor boundary regions. The decoder requires 3.71 GFLOPs and has only 167,940 parameters, accounting for 3.32% of the model's total parameters, which is consistent with the lightweight design goal.

3.5. Loss Function

3.5.1. Dice Loss

The Dice loss is among the most frequently used loss functions in medical image segmentation and is defined in Equation (6):

$$\mathcal{L}_{Dice} = 1 - \frac{2 \sum_{i} y_i \hat{y}_i}{\sum_{i} y_i + \sum_{i} \hat{y}_i} \tag{6}$$

where $y_i$ represents the true label, $\hat{y}_i$ denotes the predicted probability, and $i$ denotes the sample index. The Dice loss is particularly effective for class imbalance. In glioma segmentation, the tumor region is typically much smaller than the non-tumor region, leading to pronounced class imbalance in the dataset. The Dice coefficient measures the overlap between two sets relative to their combined size, so it is far less dominated by the large number of correctly classified background voxels. By prioritizing the overlapping region over per-voxel classification, the Dice loss mitigates the severe imbalance between positive and negative samples in glioma segmentation.
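A minimal NumPy sketch of the soft Dice loss in Equation (6), computed per class over all voxels and then averaged over classes. The small smoothing term `eps` and the class averaging are common practical choices assumed here, not details stated in the text.

```python
import numpy as np


def dice_loss(probs: np.ndarray, target: np.ndarray, eps: float = 1e-6) -> float:
    """Soft Dice loss: 1 - 2*sum(y*p) / (sum(y) + sum(p)), averaged over classes.

    probs, target: arrays of shape (K, ...) holding per-class probabilities and
    one-hot labels; the sums run over all voxels of each class.
    """
    axes = tuple(range(1, probs.ndim))
    inter = np.sum(probs * target, axis=axes)
    denom = np.sum(probs, axis=axes) + np.sum(target, axis=axes)
    return float(1.0 - np.mean((2.0 * inter + eps) / (denom + eps)))


target = np.zeros((2, 4, 4, 4))
target[1, :2] = 1.0                 # foreground occupies half the volume
target[0] = 1.0 - target[1]         # background channel
print(dice_loss(target, target))    # 0.0 for a perfect prediction
```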

3.5.2. Cross Entropy Loss

The cross-entropy loss is another widely used loss function for multi-class classification problems and is defined in Equation (7):

$$\mathcal{L}_{CE} = -\frac{1}{N} \sum_{i=1}^{N} y_i \log \hat{y}_i \tag{7}$$

where $y_i$ denotes the true label, $\hat{y}_i$ denotes the predicted probability, $i$ denotes the sample index, and $N$ denotes the total number of samples; in the multi-class case, the product is summed over classes. The cross-entropy loss promotes accurate voxel-level classification, which is critical for precise glioma localization.
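A matching sketch of Equation (7), treating each voxel as a sample and summing y log ŷ over classes. The clipping constant `eps`, which guards against log(0), is an assumption.

```python
import numpy as np


def cross_entropy(probs: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Mean voxel-wise cross-entropy: -1/N * sum over voxels and classes of y*log(p).

    probs, target: (K, ...) class probabilities and one-hot labels; N = voxels.
    """
    n_voxels = probs[0].size
    return float(-np.sum(target * np.log(np.clip(probs, eps, 1.0))) / n_voxels)


target = np.zeros((2, 4, 4, 4))
target[1, :2] = 1.0
target[0] = 1.0 - target[1]
uniform = np.full_like(target, 0.5)   # maximally uncertain two-class prediction
print(round(cross_entropy(uniform, target), 4))  # 0.6931, i.e. ln 2
```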

3.5.3. Training Policy

The cross-entropy loss and the Dice loss are complementary: the cross-entropy loss emphasizes differences between probability distributions, whereas the Dice loss focuses on the size of the overlapping regions [40]. Combining them can therefore improve performance more comprehensively than either loss alone.

In glioma segmentation, a single loss function rarely satisfies all segmentation requirements at once. To exploit the strengths of both the Dice loss and the cross-entropy loss, a weighted combination of the two is adopted. The joint loss function is defined in Equation (8):

$$\mathcal{L} = \lambda_1 \mathcal{L}_{Dice} + \lambda_2 \mathcal{L}_{CE} \tag{8}$$

where $\lambda_1$ and $\lambda_2$ are weighting coefficients that balance the contributions of the Dice and cross-entropy losses. Adjusting these weights controls how much importance the model assigns to each loss term.
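The sketch below combines both terms as in Equation (8) and uses a toy case to show their complementarity. The weights λ1 = λ2 = 1 are hypothetical defaults (the values used in this study are not stated here), and `eps` is assumed as before. With a 1% foreground that the model misses, cross-entropy stays small because background voxels dominate the average, while the class-averaged Dice term is about 0.5.

```python
import numpy as np


def joint_loss(probs, target, lam_dice=1.0, lam_ce=1.0, eps=1e-6):
    """Return (L, L_Dice, L_CE) with L = lam_dice * L_Dice + lam_ce * L_CE.

    probs, target: (K, ...) class probabilities and one-hot labels.
    """
    axes = tuple(range(1, probs.ndim))
    inter = np.sum(probs * target, axis=axes)
    denom = np.sum(probs + target, axis=axes)
    l_dice = 1.0 - np.mean((2.0 * inter + eps) / (denom + eps))
    l_ce = -np.sum(target * np.log(np.clip(probs, eps, 1.0))) / probs[0].size
    return float(lam_dice * l_dice + lam_ce * l_ce), float(l_dice), float(l_ce)


# Toy imbalance: 10 of 1000 voxels are tumour, and the model predicts a
# tumour probability of 0.01 everywhere (i.e. it misses the tumour).
target = np.zeros((2, 10, 10, 10))
target[1].flat[:10] = 1.0
target[0] = 1.0 - target[1]
probs = np.empty_like(target)
probs[0], probs[1] = 0.99, 0.01
total, l_dice, l_ce = joint_loss(probs, target)
print(round(l_dice, 3), round(l_ce, 3))  # 0.5 0.056
```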

3.6. Statistical Analysis

The performance of our proposed model and the compared methods was evaluated primarily using the Dice Similarity Coefficient (DSC) and the 95% Hausdorff Distance (HD95) for the enhancing tumor (ET), tumor core (TC), and whole tumor (WT) regions. For the quantitative results reported in our experiments (e.g., Section 4.2, Table 1 and Table 2; Section 4.3, Table 3), mean values and their 95% confidence intervals (CIs) were calculated to quantify the precision of our estimates.
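The text does not say how the 95% CIs were obtained. One common option, sketched here purely as an assumption and not necessarily the procedure used in this study, is a percentile bootstrap over per-case test scores; `bootstrap_ci` and its defaults are hypothetical, and the scores below are synthetic.

```python
import numpy as np


def bootstrap_ci(values, n_boot=10_000, level=0.95, seed=0):
    """Mean and percentile-bootstrap CI of per-case scores (e.g. Dice per patient)."""
    values = np.asarray(values, dtype=float)
    rng = np.random.default_rng(seed)
    idx = rng.integers(0, len(values), size=(n_boot, len(values)))  # resample cases
    boot_means = values[idx].mean(axis=1)
    alpha = (1.0 - level) / 2.0
    lo, hi = np.quantile(boot_means, [alpha, 1.0 - alpha])
    return float(values.mean()), float(lo), float(hi)


# Synthetic per-case Dice scores for 38 test cases (illustration only).
scores = np.clip(np.random.default_rng(1).normal(0.85, 0.08, size=38), 0.0, 1.0)
mean, lo, hi = bootstrap_ci(scores)
```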

Table 1.

Quantitative analysis of the BraTS2020 dataset. Dice coefficients and Hausdorff distances of ET, TC, and WT were used as evaluation metrics. The up arrow (↑) indicates that higher values are better, while the down arrow (↓) indicates that lower values are better.

Table 2.

Quantitative analysis of the FeTS2022 dataset. Dice coefficients and Hausdorff distances of ET, TC, and WT were used as evaluation metrics. The up arrow (↑) indicates that higher values are better, while the down arrow (↓) indicates that lower values are better.

Table 3.

Quantitative analysis of lightweight design on the BraTS2020 dataset. This table compares parameter count (Params.), floating-point operations (FLOPs), average inference time per 3D patient volume (Inference Time), and average Dice coefficient (Avg. Dice). Inference time was measured on a single NVIDIA RTX 3080 GPU.

In the ablation study (Section 4.4, Table 4), paired-sample t-tests were employed to assess the statistical significance of differences in Dice scores for ET, TC, and WT regions between different model configurations (baseline model and models with incrementally added components). A p-value less than 0.05 was considered statistically significant (*), and a p-value less than 0.01 was considered highly statistically significant (**).
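For reference, the paired-sample t statistic that `scipy.stats.ttest_rel` reports can be computed from per-case differences as in this standard-library sketch (`paired_t` is a hypothetical helper, and the scores are made-up illustration values). The two-sided p-value then comes from a t distribution with n − 1 degrees of freedom, which `ttest_rel` returns as `pvalue`.

```python
import math
import statistics


def paired_t(a, b):
    """Paired-sample t statistic and degrees of freedom for matched scores a, b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical per-case Dice scores of two model configurations on the same cases.
full_model = [0.80, 0.85, 0.78, 0.90, 0.88]
baseline = [0.78, 0.83, 0.77, 0.86, 0.85]
t, df = paired_t(full_model, baseline)
print(round(t, 2), df)  # 4.71 4

try:  # optional cross-check against SciPy's implementation
    from scipy.stats import ttest_rel
    res = ttest_rel(full_model, baseline)
    print(round(res.statistic, 2), res.pvalue < 0.05)
except ImportError:
    pass
```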

Table 4.

Ablation study of the proposed model on the BraTS2020 test set. Dice scores on ET, TC, and WT are reported to show the contribution of each component.

All statistical analyses, including the calculation of performance metrics and hypothesis testing, were performed using Python (version 3.11). Specifically, the NumPy library (version 1.26.4) was utilized for numerical operations and data handling, and the SciPy library (version 1.15.2, specifically the ‘scipy.stats.ttest_rel’ function) was used for conducting the paired-sample t-tests.

4. Results and Discussion

4.1. Experimental Setup

All experiments were run on a single NVIDIA RTX 3080 GPU (10 GB VRAM), and the model was implemented in PyTorch (version 2.3.1). We used two publicly available datasets: BraTS2020 [2] and FeTS2022 [41]. Each sample contains four modalities: T1, T2, T1ce, and FLAIR. The BraTS2020 dataset includes MRI data from 369 patients diagnosed with high-grade gliomas (HGGs) or low-grade gliomas (LGGs). The FeTS2022 training set comprises 1286 multi-center brain tumor MRI scans that are radiologically characterized as glioblastomas (GBMs); the FeTS dataset is a subset of the GBM cases from the ongoing BraTS evaluation. For both datasets, we partitioned the data into training, validation, and test sets at an 8:1:1 ratio, selected the model with the best validation performance, and evaluated its final performance on the test set. During training, all images were resized to 128 × 128 × 128 voxels, focusing on the region of interest. Training-set augmentation included random flipping (probability 30%) and random rotation (probability 50%, angle within ±30°) combined with random noise. We used a batch size of 2 and an initial learning rate of 2 × 10−4 and trained the model for 400 epochs with the AdamW [42] optimizer. We also employed the 'CosineAnnealingWarmRestarts' learning rate schedule, which resets the learning rate to its initial value at the start of each 20-epoch cycle and then decreases it by cosine decay to a minimum learning rate, set to 1 × 10−5 in our experiments.
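The learning-rate schedule described above can be written out per epoch as follows, assuming one scheduler step per epoch and a fixed 20-epoch cycle (T_mult = 1). This mirrors the closed form documented for PyTorch's `torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0=20, eta_min=1e-5)`; the helper name `lr_at_epoch` is hypothetical.

```python
import math


def lr_at_epoch(epoch: int, lr_max: float = 2e-4, lr_min: float = 1e-5,
                cycle: int = 20) -> float:
    """Cosine annealing with warm restarts: reset to lr_max every `cycle` epochs."""
    t_cur = epoch % cycle                         # epochs since the last restart
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * t_cur / cycle))


for epoch in (0, 10, 19, 20, 399):
    print(epoch, f"{lr_at_epoch(epoch):.3e}")
```

Over 400 epochs this gives 20 identical cycles, each starting at 2 × 10−4 and ending just above 1 × 10−5.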

4.2. Comparison with State-of-the-Art Models

We compared our model with a variety of classical and recent state-of-the-art (SOTA) approaches, including 3D UNet [20], AttentionUNet [24], SegResNet [43], nnUNet [7], DiNTS [44], TransUNet [8], TransBTS [14], UNETR [9], nnFormer [23], DAUnet [45], Segformer3D [32], VcaNet [46], and CR-Swin2-VT [47]. All implementations were those provided by the original authors, and all model setups and hyperparameters were kept uniform to ensure a fair comparison. The models are compared using the Dice coefficient and the 95% Hausdorff distance (HD95) on three tumor regions, the enhancing tumor (ET), tumor core (TC), and whole tumor (WT), together with the average over all regions. The quantitative results on the BraTS2020 dataset are shown in Table 1, and those on the FeTS2022 dataset are shown in Table 2.
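The three evaluation regions are nested unions of the BraTS label classes (1 = NCR/NET, 2 = ED, 4 = ET): ET = {4}, TC = {1, 4}, and WT = {1, 2, 4}. The sketch below computes per-region Dice from integer label maps; treating an empty prediction together with an empty ground truth as Dice = 1 is an assumed convention, the function name is hypothetical, and HD95 (which needs surface-distance computation) is omitted.

```python
import numpy as np

# BraTS label convention: 0 = background, 1 = NCR/NET, 2 = ED, 4 = ET.
REGIONS = {"ET": (4,), "TC": (1, 4), "WT": (1, 2, 4)}


def region_dice(pred: np.ndarray, gt: np.ndarray) -> dict[str, float]:
    """Dice score of each evaluation region for integer label volumes."""
    scores = {}
    for name, labels in REGIONS.items():
        p, g = np.isin(pred, labels), np.isin(gt, labels)
        denom = int(p.sum() + g.sum())
        # Assumed convention: both masks empty -> perfect score.
        scores[name] = 1.0 if denom == 0 else float(2.0 * np.logical_and(p, g).sum() / denom)
    return scores


gt = np.zeros((8, 8, 8), dtype=int)
gt[2:6, 2:6, 2:6] = 2          # edema
gt[3:5, 3:5, 3:5] = 4          # enhancing core
pred = gt.copy()
pred[pred == 4] = 2            # model labels the enhancing voxels as edema
print(region_dice(pred, gt))   # ET and TC drop to 0.0, WT stays 1.0
```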

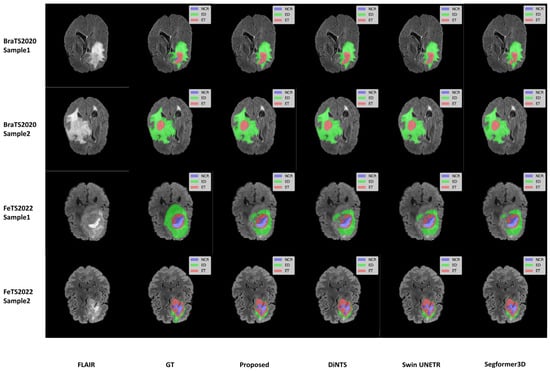

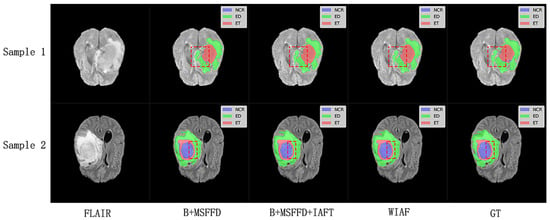

For qualitative analysis, we selected two samples from each of the BraTS2020 and FeTS2022 datasets and compared the proposed method with DiNTS, Swin UNETR, and Segformer3D. The visualizations are presented in Figure 5. For ease of presentation, each 3D volume is displayed as a grayscale intermediate 2D slice along the depth dimension, with each tumor sub-region shown in a distinct color. The FLAIR image serves as the primary reference, and the Ground Truth (GT) shows the labeled segmentation mask.

Figure 5.

Qualitative visualization of brain tumor segmentation results on selected axial slices from the BraTS2020 and FeTS2022 datasets. For each sample, the FLAIR MRI sequence is shown first, followed by the Ground Truth (GT) segmentation and predictions from our proposed WIAF model, DiNTS, Swin UNETR, and Segformer3D. In the segmentation masks, tumor subregions are color-coded as follows: enhancing tumor (ET) in red, peritumoral edema (ED) in green, and necrotic and non-enhancing tumor core (NCR/NET) in blue.

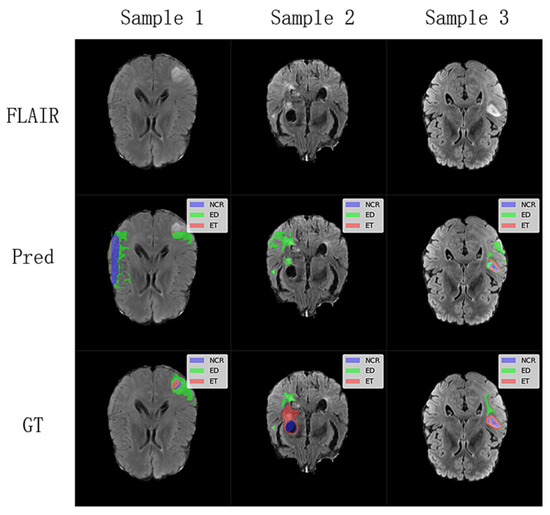

To better understand the limitations of the proposed model, we examined challenging cases with suboptimal segmentation performance, shown in Figure 6. These cases were identified as outliers in the quantitative test-set metrics. They suggest that, although the lightweight model performs well on average, its performance can degrade on images with very low contrast between tumor and healthy tissue, or on particularly heterogeneous or small tumor subregions. The reduced parameter count that makes the model efficient may also limit its capacity to learn the complex and subtle feature variations present in these difficult cases. Future work could address these failure modes through targeted data augmentation for low-contrast scenarios or a more sophisticated feature enhancement module.

Figure 6.

Analysis of challenging segmentation cases from the BraTS2020 and FeTS2022 test sets. The first row shows the FLAIR sequence (middle slice) of three subjects for which our model encountered difficulties. The Pred row shows the corresponding segmentation predictions of the WIAF model, and the GT row shows the ground truth labels.

Our proposed model achieves competitive performance on both datasets; below, n denotes the number of samples in the test set. On the BraTS2020 test set (n = 38), our model obtained mean Dice scores of 76.8% (95% CI: 68.5–84.8%), 87.8% (95% CI: 85.4–90.6%), and 90.5% (95% CI: 88.8–92.3%) for the ET, TC, and WT regions, respectively. The corresponding mean HD95 values were 5.26 mm (95% CI: 1.65–8.86 mm) for ET, 7.00 mm (95% CI: 3.60–10.40 mm) for TC, and 7.03 mm (95% CI: 4.38–9.69 mm) for WT.

On the larger FeTS2022 test set (n = 126), our model demonstrated mean Dice scores of 86.1% (95% CI: 82.2–89.9%) for ET, 92.0% (95% CI: 90.7–93.3%) for TC, and 93.4% (95% CI: 92.7–94.2%) for WT. The HD95 results were 2.12 mm (95% CI: 0.39 mm–3.85 mm) for ET, 3.72 mm (95% CI: 2.99 mm–4.44 mm) for TC, and 6.64 mm (95% CI: 4.60 mm–8.68 mm) for WT. These confidence intervals provide a measure of the precision of our mean estimates.
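The text does not say how the 95% confidence intervals were obtained. One common choice for per-case segmentation scores is a percentile bootstrap over test cases; the sketch below shows that approach under this assumption (the function name, the number of resamples, and the seed are hypothetical). A t-based interval is another common option and can give slightly different bounds.

```python
import numpy as np


def bootstrap_mean_ci(scores, n_boot=10000, alpha=0.05, seed=0):
    """Mean of per-case scores and its percentile-bootstrap (1 - alpha) CI."""
    scores = np.asarray(scores, dtype=float)
    rng = np.random.default_rng(seed)
    # Resample cases with replacement: each row is one bootstrap test set.
    idx = rng.integers(0, len(scores), size=(n_boot, len(scores)))
    boot_means = scores[idx].mean(axis=1)
    lo, hi = np.percentile(boot_means, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return scores.mean(), lo, hi


# Synthetic per-case Dice scores for 38 test cases (not the paper's data).
mean, lo, hi = bootstrap_mean_ci(np.linspace(0.6, 1.0, 38))
print(f"mean={mean:.3f}, 95% CI=({lo:.3f}, {hi:.3f})")
```

Because the interval is built from case-level resampling, it reflects how much the mean would vary across test sets of the same size, which is why the smaller BraTS2020 test set (n = 38) yields wider intervals than FeTS2022 (n = 126).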

These gains may arise from the adaptive wavelet decomposition, which preserves high-frequency details and thereby helps the model delineate tumor boundaries more accurately. In addition, the iterative axial attention mechanism avoids the high computational cost of global self-attention by factorizing 3D spatial attention along individual axes, while still capturing long-range dependencies. In the ET region, however, our model's Dice coefficient is lower than that of some heavier counterparts such as DAUnet and Swin UNETR. Several factors inherent to lightweight designs and to ET itself may explain this: ET regions are often small, have indistinct boundaries, and are highly heterogeneous. The lightweight architecture, while computationally efficient, involves a trade-off that may limit the depth of the feature hierarchy needed to capture the fine-grained details and subtle textural differences of small ET lesions. This is consistent with our comparatively higher ET Hausdorff distance, which points to difficulty in precisely localizing the boundaries of these small structures. By contrast, the model's strong performance in the TC and WT regions underscores its effectiveness for larger, more structurally defined tumor components. Overall, the proposed method delineates tumor boundaries well, particularly in regions with irregular tumor morphology, and produces consistently high-quality segmentations across slices and datasets, indicating robust performance.
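To illustrate why factorizing attention along axes is cheaper, the NumPy sketch below applies single-head self-attention along depth, then height, then width of a (D, H, W, C) volume, in the spirit of axial attention [30, 31]. It is not the paper's IAFT: the projection matrices, the residual connections, the single head, and the sequential axis order are assumptions for illustration. Each voxel attends only to the L voxels on its line along one axis, so the number of attention scores drops from N² (N = D·H·W) to N·(D + H + W).

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attend_along_axis(x, axis, wq, wk, wv):
    """Single-head self-attention restricted to one spatial axis of a (D, H, W, C) array."""
    x_t = np.moveaxis(x, axis, -2)                 # (..., L, C): L = length of chosen axis
    q, k, v = x_t @ wq, x_t @ wk, x_t @ wv
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])  # (..., L, L)
    out = softmax(scores) @ v
    return np.moveaxis(out, -2, axis)              # back to (D, H, W, C)


def axial_attention_3d(x, weights):
    """Attend along depth, height, then width; residual add after each axis (assumed)."""
    for axis in range(3):
        x = x + attend_along_axis(x, axis, *weights[axis])
    return x


def attention_score_counts(d, h, w):
    """Number of query-key scores: full 3D self-attention vs. axial factorization."""
    n = d * h * w
    return n * n, n * (d + h + w)


print(attention_score_counts(32, 32, 32))  # (1073741824, 3145728)
```

For a 32 × 32 × 32 feature map, the axial form needs about 341 times fewer attention scores than full self-attention, while three successive axis passes still let information propagate between any two voxels.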

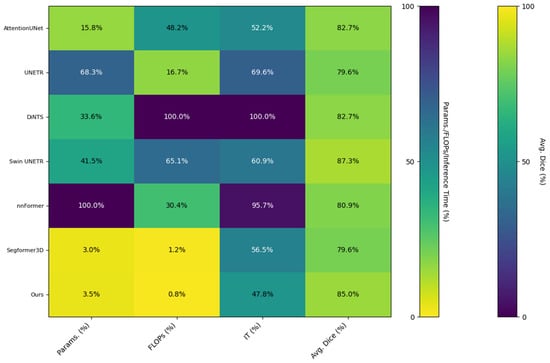

4.3. Quantitative Analysis of Lightweight Design

To validate the lightweight advantage of the proposed model, Table 3 compares the number of parameters, computational cost (FLOPs), inference time, and average Dice coefficient of different methods on the BraTS2020 dataset. The proposed model requires only 5.23 million parameters and 9.75 GFLOPs, and achieves an average inference time of 289.47 ms per 3D patient volume on a single RTX 3080 GPU. This corresponds to an 89.6% reduction in parameter count and a 99.2% reduction in computational load compared with DiNTS, while maintaining competitive segmentation performance. Notably, although Segformer3D has fewer parameters, its average Dice coefficient is lower than that of the proposed method, indicating that our model achieves a better balance between accuracy and efficiency.
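Latency figures such as the 289.47 ms above depend on the measurement protocol, which the text does not describe. The sketch below shows one common protocol under stated assumptions: a few warm-up calls, then averaged timed runs, with an optional synchronization callback (for GPU models one would pass `torch.cuda.synchronize`, so that queued kernels are included in the timing). The helper names and the warm-up/run counts are hypothetical. A relative-reduction helper shows how percentages such as "89.6% fewer parameters" are usually computed, namely as 1 − ours/reference.

```python
import statistics
import time


def mean_latency_ms(infer, sample, warmup=5, runs=20, sync=None):
    """Mean and standard deviation (ms) of the wall-clock time of infer(sample).

    `sync` is an optional callable (e.g. torch.cuda.synchronize) called around
    each timed run so that asynchronous GPU work is fully counted.
    """
    for _ in range(warmup):          # exclude one-off costs (allocation, autotuning)
        infer(sample)
    times = []
    for _ in range(runs):
        if sync is not None:
            sync()
        start = time.perf_counter()
        infer(sample)
        if sync is not None:
            sync()
        times.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(times), statistics.stdev(times)


def relative_reduction(ours, reference):
    """Percentage reduction of `ours` relative to `reference`."""
    return 100.0 * (1.0 - ours / reference)


print(mean_latency_ms(lambda n: sum(range(n)), 10000))
print(relative_reduction(5.0, 50.0))
```

With PyTorch, the model would normally be put in `eval()` mode and called inside `torch.inference_mode()` during timing, and the parameter count obtained as `sum(p.numel() for p in model.parameters())`.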

Figure 7 presents a matrix heatmap derived from the results in Table 3. Because these metrics have different scales, we normalized Parameters, FLOPs, and Inference Time by dividing each by its maximum value across methods, yielding values between 0 and 1. In the heatmap, lighter colors indicate better performance and darker colors indicate worse performance. For Parameters, FLOPs, and Inference Time, the color scale is reversed because lower values are better; for Dice, the scale is in its natural order, so higher values appear brighter. The visualization in Figure 7 shows that our method reduces parameter count and computational redundancy in medical image segmentation while achieving a good balance between performance and efficiency.
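The normalization described above can be sketched as follows. This is an assumed reading of the procedure: cost columns (Parameters, FLOPs, Inference Time) are divided by their column maximum and then mapped to 1 − value, which is equivalent to reversing the color scale, so that a single colormap shows brighter cells for better methods; Dice is passed through as a fraction. The function name and the toy numbers are illustrative, not values from Table 3.

```python
import numpy as np


def heatmap_scores(table, lower_is_better):
    """Per-column scores in [0, 1] where larger always means better.

    table: rows = methods, columns = metrics.
    lower_is_better: one flag per column; flagged columns are max-normalized
    and then inverted, other columns (e.g. Dice as a fraction) pass through.
    """
    table = np.asarray(table, dtype=float)
    cost = np.asarray(lower_is_better, dtype=bool)
    scaled = np.where(cost, table / table.max(axis=0), table)
    return np.where(cost, 1.0 - scaled, scaled)


# Toy rows: [params (M), GFLOPs, Dice]; hypothetical values.
print(heatmap_scores([[10.0, 100.0, 0.80], [5.0, 50.0, 0.85]], [True, True, False]))
```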

Figure 7.

Matrix heatmap of the quantitative metrics from Table 3, comparing the computational efficiency and segmentation performance of various methods.

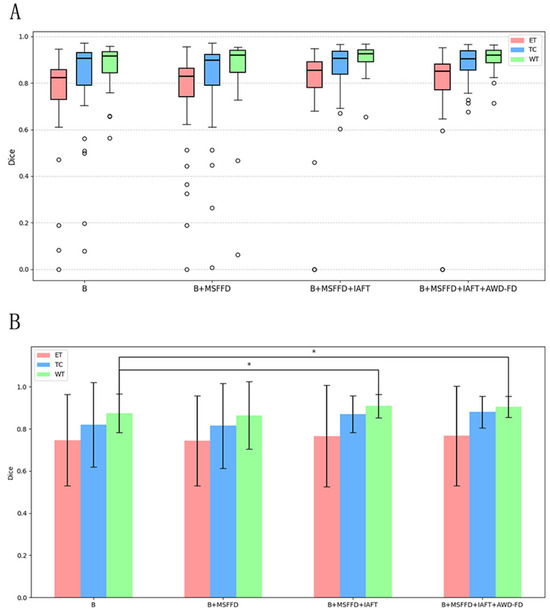

4.4. Ablation Study

To verify the effectiveness of the proposed method, we conducted ablation experiments on the BraTS2020 dataset. Specifically, we removed the AWD-FD module from the proposed model and replaced it with 3D convolution for downsampling, and we substituted the IAFT with a Transformer block that uses standard multi-head self-attention. Because the 3D feature maps at the first encoder stage are large enough to cause GPU memory overflow, we applied a 3D convolution with a stride of two at this stage to downsample the features from which the self-attention Key and Value are computed. Attention was then calculated between the full-resolution Query and the reduced Key and Value, which avoids memory overflow at long token lengths. Finally, in the decoder, we used transposed convolution to upsample the feature maps of different stages to a common size, followed by feature fusion and output classification. The model with all these modifications served as baseline B for our ablation experiments, to which we then progressively added the proposed modules.
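The reduced Key/Value attention used in baseline B can be sketched in NumPy. The paper uses a learned 3D convolution with stride two; this sketch swaps in 2 × 2 × 2 average pooling as a parameter-free stand-in and uses a single head with hypothetical projection matrices. Queries keep full resolution, so the attention matrix has shape N × N/8 instead of N × N, an eightfold memory saving in 3D.

```python
import numpy as np


def downsample2(x):
    """2x2x2 average pooling of a (D, H, W, C) array (stand-in for a stride-2 conv)."""
    d, h, w, c = x.shape
    return x.reshape(d // 2, 2, h // 2, 2, w // 2, 2, c).mean(axis=(1, 3, 5))


def reduced_kv_attention(x, wq, wk, wv):
    """Full-resolution queries attend to keys/values from a 2x-downsampled volume."""
    d, h, w, c = x.shape
    q = x.reshape(-1, c) @ wq                  # (N, C), N = D*H*W
    xr = downsample2(x).reshape(-1, c)         # (N/8, C) reduced tokens
    k, v = xr @ wk, xr @ wv
    s = q @ k.T / np.sqrt(c)                   # (N, N/8) scores instead of (N, N)
    s -= s.max(axis=1, keepdims=True)          # numerically stable softmax
    a = np.exp(s)
    a /= a.sum(axis=1, keepdims=True)
    return (a @ v).reshape(d, h, w, -1)


rng = np.random.default_rng(1)
x = rng.standard_normal((8, 8, 8, 4))
w = [rng.standard_normal((4, 4)) for _ in range(3)]
print(reduced_kv_attention(x, *w).shape)  # (8, 8, 8, 4)
```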

All comparison results are computed on the BraTS2020 test subset. Table 4 shows how the DSC, parameter count, and computational load change from the baseline to the complete model.

With standard 3D convolutional downsampling (baseline B), the average Dice coefficient drops to 81.4%, with particularly poor performance in the TC region. This suggests that conventional downsampling may discard high-frequency details. Introducing the MSFFD substantially reduced both the number of parameters and the computational load, but the Dice coefficients of all regions declined, indicating that the upsampling strategy alone cannot fully capture cross-level feature correlations. Adding the iterative axial factorization attention (IAFT) then raised the Dice coefficient of the TC region to 87.0% and that of the WT region to 90.9%, supporting the effectiveness of axial attention for modeling long-range dependencies.

Figure 8 presents a detailed analysis of the ablation experiments, showing the incremental impact of the MSFFD, IAFT, and AWD-FD modules on segmentation performance on the BraTS2020 test set. Finally integrating the AWD-FD module further increased the Dice coefficient of the TC region from 87.0% to 87.8% and the average Dice to 85.0% (Table 4). The qualitative comparison in Figure 9 illustrates the effect of AWD-FD on fine structural details: with AWD-FD, the model delineates tumor core boundaries and internal heterogeneity better than without it. This suggests that the AWD-FD module improves feature representation by preserving high-frequency information. As Figure 8 also shows, the complete model yields a more concentrated distribution of scores with fewer outliers.
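The claim that wavelet-based downsampling preserves high-frequency information can be checked on a fixed, one-level 3D Haar transform. This sketch is not the adaptive AWD-FD module (its learned or adaptive components are not reproduced here). It splits a volume into eight half-resolution sub-bands (LLL, ..., HHH); because the orthonormal Haar transform is invertible and energy-preserving, nothing is discarded, whereas strided subsampling such as `x[::2, ::2, ::2]` keeps only one voxel in eight. Band names and function names are illustrative; PyWavelets offers a comparable transform via `pywt.dwtn(data, "haar")`.

```python
import numpy as np


def haar_dwt3d(x):
    """One-level orthonormal 3D Haar DWT of a (D, H, W) array with even sizes.

    Returns 8 sub-bands keyed "LLL" ... "HHH" (one letter per axis), each of
    shape (D/2, H/2, W/2). "LLL" is the low-frequency approximation.
    """
    bands = {"": x}
    for axis in range(3):
        new = {}
        for name, b in bands.items():
            even = np.take(b, np.arange(0, b.shape[axis], 2), axis=axis)
            odd = np.take(b, np.arange(1, b.shape[axis], 2), axis=axis)
            new[name + "L"] = (even + odd) / np.sqrt(2)   # local average (low-pass)
            new[name + "H"] = (even - odd) / np.sqrt(2)   # local difference (high-pass)
        bands = new
    return bands


def haar_idwt3d(bands):
    """Exact inverse of haar_dwt3d."""
    for axis in reversed(range(3)):
        new = {}
        for name in {k[:axis] for k in bands}:
            lo, hi = bands[name + "L"], bands[name + "H"]
            shape = list(lo.shape)
            shape[axis] *= 2
            out = np.empty(shape)
            idx = [slice(None)] * 3
            idx[axis] = slice(0, None, 2)
            out[tuple(idx)] = (lo + hi) / np.sqrt(2)
            idx[axis] = slice(1, None, 2)
            out[tuple(idx)] = (lo - hi) / np.sqrt(2)
            new[name] = out
        bands = new
    return bands[""]


x = np.random.default_rng(2).standard_normal((4, 6, 8))
bands = haar_dwt3d(x)
print(sorted(bands), bands["LLL"].shape)
print(np.allclose(haar_idwt3d(bands), x))  # True: no information lost
```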

Figure 8.

Ablation study results on the BraTS2020 test set. (A) Boxplots showing the distribution of Dice scores for ET, TC, and WT for the baseline model and with the progressive addition of modules. Circles represent outliers. (B) Bar chart depicting mean Dice scores with error bars for ET, TC, and WT across the ablation study configurations. Asterisks (*) indicate statistically significant differences (p < 0.05), determined by paired t-tests.
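The paired t-test mentioned in the Figure 8 caption compares two configurations on the same test cases through their per-case score differences. The sketch below computes the t statistic and degrees of freedom with the standard library only; the two-sided p-value then follows from the t distribution with n − 1 degrees of freedom, for example via `scipy.stats.ttest_rel(a, b)`. The helper name and toy scores are illustrative.

```python
import math
import statistics


def paired_t_statistic(a, b):
    """t statistic and degrees of freedom for a paired t-test on per-case scores."""
    if len(a) != len(b):
        raise ValueError("paired test needs equal-length score lists")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)               # sample standard deviation
    return mean_d / (sd_d / math.sqrt(n)), n - 1


t, df = paired_t_statistic([2, 4, 6, 8, 10], [1, 2, 3, 4, 5])
print(round(t, 4), df)  # 4.2426 4
```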

The visual results in Figure 9 further support the quantitative findings of the ablation study. Without IAFT and AWD-FD, the model markedly under-segments the ED region relative to the ground truth labels, and the WT boundaries are imprecise. After the IAFT module is integrated, tumor boundary segmentation improves noticeably, reflecting the ability of IAFT to model long-range dependencies and refine feature representations. Finally, the complete model with the AWD-FD module is closest to the ground truth (GT). The AWD-FD module appears to refine the segmentation further by preserving finer details and improving contour accuracy, particularly in the ED region and at the interfaces between tumor subregions. For example, the highlighted regions in Sample 2 show that the complete model captures subtle infiltration patterns and produces fewer false positives within the tumor than the intermediate ablation stage. This qualitative evidence supports the complementary contribution of each proposed module to robust and accurate brain tumor segmentation.

Figure 9.

Qualitative comparison of ablation study results on a representative axial slice from the BraTS2020 test set. The red dashed boxes highlight the key differences between the model predictions at different stages and the ground truth labels.

5. Conclusions

This study proposes a lightweight deep learning model for efficient brain tumor segmentation from 3D MRI scans. By introducing adaptive discrete wavelet decomposition and an iterative axial attention mechanism, the model substantially reduces computational complexity while maintaining high segmentation accuracy. It achieves average Dice coefficients of 85.0% on BraTS2020 and 88.1% on FeTS2022, which is competitive with state-of-the-art models, while requiring fewer parameters and less computation than many existing, more complex models. The method therefore balances performance and efficiency, which makes it well suited to resource-constrained scenarios and practical for clinical use.

However, this study has limitations. The current framework is trained with Dice loss and cross-entropy loss, which are effective for overall segmentation accuracy but may not fully address the under-segmentation of small targets such as ET regions caused by class imbalance. Focal loss, for example, down-weights the loss assigned to well-classified examples and can therefore make the model focus more on hard-to-segment samples, which often include small, indistinctly bounded ET regions. This could help alleviate the under-segmentation observed for ET, which is attributed to class imbalance and the challenging characteristics of these regions. Future work will explore focal loss variants and sensitivity-oriented metrics (e.g., recall for ET) to further improve performance on these critical subregions.
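The difference between cross-entropy and focal loss can be seen in a short NumPy sketch. Focal loss multiplies each voxel's cross-entropy term −log p_t by (1 − p_t)^γ, so confidently correct voxels (p_t close to 1) contribute little and rare, hard voxels such as ET boundaries carry relatively more weight; with γ = 0 it reduces to cross-entropy. A soft Dice loss is included for comparison. These are generic textbook forms on flattened voxels, not the paper's training code; the class-weighting term α and the weighting between Dice and cross-entropy used by the authors are not stated and are omitted here.

```python
import numpy as np


def softmax(logits, axis=0):
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def focal_loss(logits, target, gamma=2.0, eps=1e-8):
    """Multi-class focal loss; logits (K, N), integer targets (N,). gamma=0 gives cross-entropy."""
    p = softmax(logits, axis=0)
    p_t = p[target, np.arange(target.size)]        # probability of the true class per voxel
    return float(np.mean(-((1.0 - p_t) ** gamma) * np.log(p_t + eps)))


def soft_dice_loss(logits, target, eps=1e-5):
    """1 - mean per-class soft Dice between softmax outputs and one-hot targets."""
    k = logits.shape[0]
    p = softmax(logits, axis=0)
    onehot = np.eye(k)[target].T                   # (K, N)
    inter = (p * onehot).sum(axis=1)
    denom = p.sum(axis=1) + onehot.sum(axis=1)
    return float(1.0 - np.mean((2 * inter + eps) / (denom + eps)))


rng = np.random.default_rng(3)
logits, target = rng.standard_normal((4, 50)), rng.integers(0, 4, size=50)
print(focal_loss(logits, target, gamma=0.0), focal_loss(logits, target), soft_dice_loss(logits, target))
```

Unified formulations that combine Dice- and cross-entropy-based focal terms have been proposed for class-imbalanced medical segmentation [40].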

Looking ahead, we intend to apply our model to other 3D medical imaging tasks to assess its generalization. More flexible wavelet transform strategies or the integration of multimodal data may yield further performance gains, and improving the segmentation of small regions remains an important research direction. By integrating frequency-domain analysis with an attention mechanism, this study demonstrates the feasibility of a lightweight model for high-precision medical image segmentation and provides a basis for developing efficient, general-purpose clinical tools.

Supplementary Materials

The full implementation of the proposed framework is publicly available at https://github.com/ZyySagit/WIAF-Brain-Tumor-Segmentation, accessed on 3 June 2025.

Author Contributions

Formal analysis, Y.Z.; methodology, Y.Z.; software, Y.Z.; supervision, S.W.; validation, Y.M.; investigation, T.Z.; writing—original draft, Y.Z.; writing—review and editing, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study utilized publicly available and de-identified datasets: the BraTS2020 Challenge dataset and the FeTS2022 Challenge dataset. The original data collection for these datasets was conducted by their respective organizing committees, who were responsible for obtaining appropriate institutional review board (IRB) approvals and patient consents. As this research involved the secondary analysis of existing, anonymized public data, ethical approval for this specific study was not required by our institution.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data presented in the study are openly available in the Multimodal Brain Tumor Segmentation Challenge 2020 repository at https://www.med.upenn.edu/cbica/brats2020/data.html, accessed on 3 June 2025 and Federated Tumor Segmentation (FeTS) 2024 Challenge repository at https://www.synapse.org/fets2024, accessed on 3 June 2025.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Louis, D.N.; Ohgaki, H.; Wiestler, O.D.; Cavenee, W.K.; Burger, P.C.; Jouvet, A.; Scheithauer, B.W.; Kleihues, P. The 2007 WHO classification of tumours of the central nervous system. Acta Neuropathol. 2007, 114, 97–109.

2. Menze, B.H.; Jakab, A.; Bauer, S.; Kalpathy-Cramer, J.; Farahani, K.; Kirby, J.; Burren, Y.; Porz, N.; Slotboom, J.; Wiest, R.; et al. The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans. Med. Imaging 2014, 34, 1993–2024.

3. Bakas, S.; Akbari, H.; Sotiras, A.; Bilello, M.; Rozycki, M.; Kirby, J.S.; Freymann, J.B.; Farahani, K.; Davatzikos, C. Advancing the cancer genome atlas glioma MRI collections with expert segmentation labels and radiomic features. Sci. Data 2017, 4, 170117.

4. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015, Proceedings, Part III 18; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

5. Milletari, F.; Navab, N.; Ahmadi, S.A. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 565–571.

6. Schlemper, J.; Oktay, O.; Schaap, M.; Heinrich, M.; Kainz, B.; Glocker, B.; Rueckert, D. Attention gated networks: Learning to leverage salient regions in medical images. Med. Image Anal. 2019, 53, 197–207.

7. Isensee, F.; Jaeger, P.F.; Kohl, S.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 2021, 18, 203–211.

8. Chen, J.; Mei, J.; Li, X.; Lu, Y.; Yu, Q.; Wei, Q.; Luo, X.; Xie, Y.; Adeli, E.; Wang, Y.; et al. TransUNet: Rethinking the U-Net architecture design for medical image segmentation through the lens of transformers. Med. Image Anal. 2024, 97, 103280.

9. Hatamizadeh, A.; Tang, Y.; Nath, V.; Yang, D.; Myronenko, A.; Landman, B.; Roth, H.R.; Xu, D. Unetr: Transformers for 3d medical image segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 574–584.

10. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

11. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

12. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 10–17 October 2021; pp. 10012–10022.

13. Hatamizadeh, A.; Nath, V.; Tang, Y.; Yang, D.; Roth, H.R.; Xu, D. Swin unetr: Swin transformers for semantic segmentation of brain tumors in mri images. In International MICCAI Brainlesion Workshop, 27 September 2021; Springer International Publishing: Cham, Switzerland, 2021; pp. 272–284.

14. Wang, W.; Chen, C.; Ding, M.; Yu, H.; Zha, S.; Li, J. TransBTS: Multimodal brain tumor segmentation using transformer, Medical Image Computing and Computer Assisted Intervention-MICCAI 2021. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; pp. 109–119.

15. Lyu, C.; Shu, H. A two-stage cascade model with variational autoencoders and attention gates for MRI brain tumor segmentation. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries: 6th International Workshop, BrainLes 2020, Held in Conjunction with MICCAI 2020, Lima, Peru, 4 October 2020, Revised Selected Papers, Part I 6; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 435–447.

16. Guo, X.; Yang, Y.; Ye, C.; Lu, S.; Peng, B.; Huang, H.; Xiang, Y.; Ma, T. Accelerating diffusion models via pre-segmentation diffusion sampling for medical image segmentation. In Proceedings of the 2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI), Cartagena de Indias, Colombia, 18–21 April 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.

17. Zhu, Z.; He, X.; Qi, G.; Li, Y.; Cong, B.; Liu, Y. Brain tumor segmentation based on the fusion of deep semantics and edge information in multimodal MRI. Inf. Fusion 2023, 91, 376–387.

18. Li, W.; Huang, W.; Zheng, Y. CorrDiff: Corrective diffusion model for accurate MRI brain tumor segmentation. IEEE J. Biomed. Health Inform. 2024, 28, 1587–1598.

19. Shen, Y.; Wang, S.; Shen, Y.; Hu, J. The Application of Augmented Reality Technology in Perioperative Visual Guidance: Technological Advances and Innovation Challenges. Sensors 2024, 24, 7363.

20. Çiçek, Ö.; Abdulkadir, A.; Lienkamp, S.S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2016: 19th International Conference, Athens, Greece, 17–21 October 2016, Proceedings, Part II 19; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 424–432.

21. Xiao, X.; Lian, S.; Luo, Z.; Li, S. Weighted res-unet for high-quality retina vessel segmentation. In Proceedings of the 2018 9th International Conference on Information Technology in Medicine and Education (ITME), Hangzhou, China, 19–21 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 327–331.

22. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

23. Zhou, H.Y.; Guo, J.; Zhang, Y.; Han, X.; Yu, L.; Wang, L.; Yu, Y. nnFormer: Volumetric medical image segmentation via a 3D transformer. IEEE Trans. Image Process. 2023, 32, 4036–4045.

24. Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention u-net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

25. Zhang, Y.; Liu, H.; Hu, Q. Transfuse: Fusing transformers and cnns for medical image segmentation. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021, Proceedings, Part I 24; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 14–24.

26. Liu, P.; Zhang, H.; Zhang, K.; Lin, L.; Zuo, W. Multi-level wavelet-CNN for image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 773–782.

27. Kang, E.; Chang, W.; Yoo, J.; Ye, J.C. Deep convolutional framelet denosing for low-dose CT via wavelet residual network. IEEE Trans. Med. Imaging 2018, 37, 1358–1369.

28. Zhao, Y.; Wang, S.; Zhang, Y.; Qiao, S.; Zhang, M. WRANet: Wavelet integrated residual attention U-Net network for medical image segmentation. Complex Intell. Syst. 2023, 9, 6971–6983.

29. Huang, J.J.; Dragotti, P.L. WINNet: Wavelet-inspired invertible network for image denoising. IEEE Trans. Image Process. 2022, 31, 4377–4392.

30. Wang, H.; Zhu, Y.; Green, B.; Adam, H.; Yuille, A.; Chen, L.C. Axial-deeplab: Stand-alone axial-attention for panoptic segmentation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 108–126.

31. Valanarasu, J.M.J.; Oza, P.; Hacihaliloglu, I.; Patel, V.M. Medical transformer: Gated axial-attention for medical image segmentation. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021, Proceedings, Part I 24; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 36–46.

32. Perera, S.; Navard, P.; Yilmaz, A. Segformer3d: An efficient transformer for 3d medical image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 4981–4988.

33. Liu, X.; Peng, H.; Zheng, N.; Yang, Y.; Hu, H.; Yuan, Y. Efficientvit: Memory efficient vision transformer with cascaded group attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14420–14430.

34. Gu, Z.; Cheng, J.; Fu, H.; Zhou, K.; Hao, H.; Zhao, Y.; Zhang, T.; Gao, S.; Liu, J. Ce-net: Context encoder network for 2d medical image segmentation. IEEE Trans. Med. Imaging 2019, 38, 2281–2292.

35. Gu, R.; Wang, G.; Song, T.; Huang, R.; Aertsen, M.; Deprest, J.; Ourselin, S.; Vercauteren, T.; Zhang, S. CA-Net: Comprehensive attention convolutional neural networks for explainable medical image segmentation. IEEE Trans. Med. Imaging 2020, 40, 699–711.

36. Zhuang, Y.; Liu, H.; Song, E.; Ma, G.; Xu, X.; Hung, C.C. APRNet: A 3D anisotropic pyramidal reversible network with multi-modal cross-dimension attention for brain tissue segmentation in MR images. IEEE J. Biomed. Health Inform. 2021, 26, 749–761.

37. Poudel, S.; Lee, S.W. Deep multi-scale attentional features for medical image segmentation. Appl. Soft Comput. 2021, 109, 107445.

38. Krijnen, T.; Beetz, J. An efficient binary storage format for IFC building models using HDF5 hierarchical data format. Autom. Constr. 2020, 113, 103134.

39. Singh, B.; Patel, S.; Vijayvargiya, A.; Kumar, R. Analyzing the impact of activation functions on the performance of the data-driven gait model. Results Eng. 2023, 18, 101029.

40. Yeung, M.; Sala, E.; Schönlieb, C.B.; Rundo, L. Unified focal loss: Generalising dice and cross entropy-based losses to handle class imbalanced medical image segmentation. Comput. Med. Imaging Graph. 2022, 95, 102026.

41. Manthe, M.; Duffner, S.; Lartizien, C. Federated brain tumor segmentation: An extensive benchmark. Med. Image Anal. 2024, 97, 103270.

42. Zhou, P.; Xie, X.; Lin, Z.; Yan, S. Towards understanding convergence and generalization of AdamW. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 6486–6493.

43. Myronenko, A. 3D MRI brain tumor segmentation using autoencoder regularization. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16 September 2018; Springer International Publishing: Cham, Switzerland, 2018; pp. 311–320.

44. He, Y.; Yang, D.; Roth, H.; Zhao, C.; Xu, D. Dints: Differentiable neural network topology search for 3d medical image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 5841–5850.

45. Feng, Y.; Cao, Y.; An, D.; Liu, P.; Liao, X.; Yu, B. DAUnet: A U-shaped network combining deep supervision and attention for brain tumor segmentation. Knowl. Based Syst. 2024, 285, 111348.

46. Pan, D.; Shen, J.; Al-Huda, Z.; Al-Qaness, M.A. VcaNet: Vision Transformer with fusion channel and spatial attention module for 3D brain tumor segmentation. Comput. Biol. Med. 2025, 186, 109662.

47. Peiris, H.; Hayat, M.; Chen, Z.; Egan, G.; Harandi, M. Hybrid window attention based transformer architecture for brain tumor segmentation. In Proceedings of the International MICCAI Brainlesion Workshop, Singapore, 18 September 2022; Springer Nature: Cham, Switzerland, 2022; pp. 173–182.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).