Non-Invasive Brain Stimulation and Artificial Intelligence in Communication Neuroprosthetics: A Bidirectional Approach for Speech and Hearing Impairments

Abstract

1. Introduction

2. Current State of Communication Neuroprosthetics

2.1. Invasive Approaches: Context and Contributions

2.2. Non-Invasive Approaches for Speech and Hearing Applications

2.2.1. EEG-Based Approaches for Speech Production

- Motor Imagery and P300: Systems leverage imagined movements to modulate sensorimotor rhythms or detect event-related potentials, enabling discrete selections for communication [28,29]. While functional, these approaches remain limited to selection-based communication rather than natural speech production.

- Continuous Speech Decoding: Recent work has pursued the continuous decoding of speech features from EEG. Nguyen et al. [30] demonstrated the decoding of phonemic information from EEG during covert speech, while Papadimitriou et al. [31] utilized transformer-based deep learning models to reconstruct continuous speech features from EEG. Though promising, these approaches currently achieve only limited vocabulary reconstruction with moderate intelligibility.

2.2.2. fNIRS and Multimodal Approaches

2.2.3. Non-Invasive Approaches for Hearing Applications

- Auditory Attention Decoding: O’Sullivan et al. [33] demonstrated the feasibility of decoding auditory attention from EEG in multi-speaker environments, forming the basis for cognitive hearing aids that enhance attended speech. Recent advances by Alickovic et al. [34] have improved real-time decoding accuracy and reduced the latency of auditory attention detection.

- Auditory Processing Enhancement: Transcranial stimulation shows promise for modulating auditory processing. Heimrath et al. [17] demonstrated that tACS at specific frequencies can enhance temporal auditory processing, while Vanneste et al. [18] reported the differential effects of various transcranial stimulation approaches on tinnitus perception.
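
The selection step behind the auditory attention decoding described above can be illustrated with a minimal sketch. It assumes that a speech envelope has already been reconstructed from EEG by some decoder (not shown) and simply picks the candidate speaker whose envelope correlates best with it. The function names and the synthetic data are hypothetical and are not taken from the cited studies.

```python
import numpy as np

def pearson(a, b):
    """Pearson correlation between two 1-D arrays."""
    a = a - a.mean()
    b = b - b.mean()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def decode_attended_speaker(reconstructed, envelopes):
    """Return the index of the best-matching speaker envelope and all scores."""
    scores = [pearson(reconstructed, env) for env in envelopes]
    return int(np.argmax(scores)), scores

# Synthetic stand-ins (assumption): two speaker envelopes and a noisy
# EEG-based reconstruction that follows speaker A.
rng = np.random.default_rng(0)
n_samples = 2000
speaker_a = np.abs(rng.standard_normal(n_samples))
speaker_b = np.abs(rng.standard_normal(n_samples))
reconstructed = speaker_a + 2.0 * rng.standard_normal(n_samples)

attended, scores = decode_attended_speaker(reconstructed, [speaker_a, speaker_b])
```

In a cognitive hearing aid, the returned index would determine which separated speech stream is amplified. Practical systems also have to choose the length of the decision window, which trades accuracy against latency.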

2.3. Current Limitations and Recent Breakthroughs

- Signal Quality Challenges: EEG signals related to speech processes are often obscured by noise, with signal-to-noise ratios typically below 1:10 [35], making it difficult to extract clean neural signals related to speech production and perception. Limited spatial resolution (2–3 cm) restricts the ability to distinguish activity from adjacent cortical regions involved in speech production and perception [36].

- Performance Limitations: Current non-invasive approaches achieve communication rates of only 0.5–3 words per minute in practical scenarios, far below natural speech rates of 120–180 words per minute. Vocabulary limitations also persist, with state-of-the-art EEG-based systems decoding only 50–100 distinct words with moderate accuracy [37].

- Individual Variability: Substantial inter-individual and session-to-session variability necessitates frequent recalibration. Transfer learning across subjects for speech imagery tasks achieves only 40–60% of the performance of subject-specific models [38], highlighting the need for personalization.

- Advanced Machine Learning: Krishna et al. [39] demonstrated the continuous reconstruction of intelligible speech from EEG using convolutional-recurrent neural networks. Willett et al. [40] introduced self-supervised pre-training approaches that significantly reduce the amount of individual training data required for effective speech decoding.

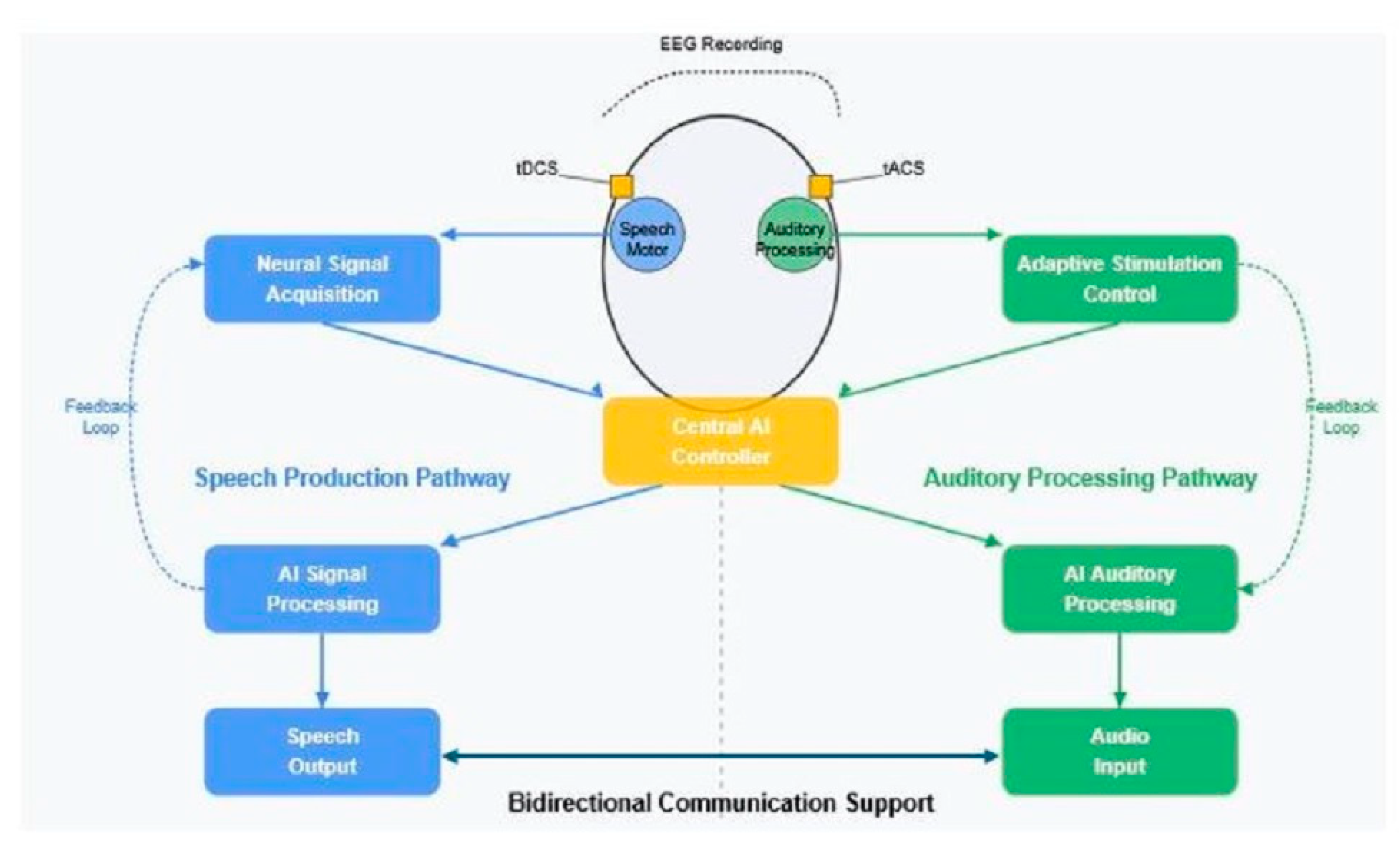

- Integration of Recording and Stimulation: Conde et al. [41] demonstrated a closed-loop system integrating EEG-based attention detection with targeted auditory enhancement using transcranial alternating current stimulation. This system detected the attended speaker in multi-speaker environments and selectively enhanced the processing of that speech stream.

- Multimodal Integration: Putze et al. [42] showed that combining EEG, fNIRS, and eye-tracking improved the classification accuracy of speech imagery by 23% compared to EEG alone. Chen et al. [43] demonstrated that incorporating subtle facial muscle activity with EEG significantly improved speech decoding accuracy in motor-impaired individuals.

3. Non-Invasive Brain Stimulation: Mechanisms and Applications

3.1. NIBS Modalities

- Transcranial Direct Current Stimulation (tDCS) applies weak constant electrical currents (typically 1–2 mA) via scalp electrodes to modulate cortical excitability. Anodal stimulation generally increases neuronal excitability, while cathodal stimulation typically decreases it [11,12]. The relatively low cost, portability, and ease of administration of tDCS make it particularly suitable for widespread clinical applications in communication disorders.

- Transcranial Alternating Current Stimulation (tACS) delivers oscillating electrical currents at specific frequencies, with the potential to entrain brain oscillations to the stimulation frequency. This approach shows particular promise for modulating oscillatory activity relevant to speech and auditory processing [13], which often depends on precise temporal dynamics across multiple frequency bands.
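
The difference between the two current waveforms can be made concrete with a short sketch. It generates a constant tDCS current and a sinusoidal tACS current of the form I(t) = A sin(2πft + φ). The linear ramp at the start and end of the tDCS waveform, the 10 Hz frequency, and all function names are illustrative assumptions rather than parameters taken from the cited work.

```python
import numpy as np

def tdcs_waveform(t, amplitude_ma=1.0, ramp_s=10.0):
    """Constant current in mA with a linear ramp-up and ramp-down (assumed)."""
    duration = t[-1]
    ramp_up = np.clip(t / ramp_s, 0.0, 1.0)
    ramp_down = np.clip((duration - t) / ramp_s, 0.0, 1.0)
    return amplitude_ma * np.minimum(ramp_up, ramp_down)

def tacs_waveform(t, amplitude_ma=1.0, freq_hz=10.0, phase_rad=0.0):
    """Sinusoidal current in mA: I(t) = A * sin(2*pi*f*t + phase)."""
    return amplitude_ma * np.sin(2 * np.pi * freq_hz * t + phase_rad)

fs = 1000                        # samples per second (assumption)
t = np.arange(60 * fs) / fs      # 60 s of time stamps
dc = tdcs_waveform(t, amplitude_ma=2.0)
ac = tacs_waveform(t, amplitude_ma=1.0, freq_hz=10.0)
```

The tDCS waveform holds a fixed polarity throughout, whereas the tACS waveform alternates polarity at the chosen frequency, which is what allows it to target oscillatory activity in a particular band.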

3.2. Applications in Speech Production and Auditory Processing

3.2.1. Speech Production

3.2.2. Auditory Processing

3.3. Comparative Efficacy and Individual Variability

4. Artificial Intelligence in Neural Decoding

4.1. Evolution of AI in BCI Applications

4.2. Key AI Innovations for Neural Signal Processing

- End-to-end learning eliminates manual feature engineering by learning optimal representations directly from raw neural signals. This approach has proven particularly valuable for decoding complex communication signals where relevant features may not be obvious [21].

- Transfer learning enables knowledge transfer across subjects, sessions, and tasks, potentially reducing calibration requirements. Willett et al. [40] demonstrated that self-supervised pre-training on large speech corpora can significantly reduce the subject-specific neural data needed for effective speech decoding.

- Multimodal integration techniques allow AI models to fuse information from complementary data sources. Chen et al. [43] showed that incorporating minimal sEMG signals with EEG significantly improved speech decoding accuracy in motor-impaired individuals.

4.3. AI for Personalization and Adaptation

- Subject-specific optimization approaches use techniques such as Bayesian optimization to efficiently search the high-dimensional parameter space of decoding models for individual users, addressing the substantial inter-individual variability observed in neural responses [22].

- Online adaptation algorithms continuously update model parameters during use, addressing the non-stationarity of neural signals across time. Such approaches are crucial for maintaining performance across sessions without requiring frequent recalibration [21].

- Reinforcement learning frameworks enable the optimization of decoding strategies based on implicit or explicit user feedback, potentially allowing systems to improve naturally through use [23].
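
As an illustration of the online adaptation idea above, the following sketch updates a linear decoder after every sample with a normalized least-mean-squares step, so that it re-converges after an abrupt change in the underlying mapping. The class name, the learning rate, and the assumption that a target value is available for each sample (for example, from cued calibration trials) are hypothetical. Deployed systems would use far richer models.

```python
import numpy as np

class OnlineLinearDecoder:
    """Linear decoder updated after every sample (normalized LMS)."""

    def __init__(self, n_features, lr=0.5):
        self.w = np.zeros(n_features)
        self.lr = lr

    def predict(self, x):
        return float(self.w @ x)

    def update(self, x, target):
        """Take one normalized gradient step toward the observed target."""
        error = target - self.predict(x)
        self.w += self.lr * error * x / (x @ x + 1e-8)
        return error

rng = np.random.default_rng(0)
decoder = OnlineLinearDecoder(n_features=3)

# Two synthetic "sessions"; the second mimics a shift in the neural mapping.
session_weights = [np.array([1.0, -0.5, 0.25]), np.array([0.6, -0.2, 0.5])]
for true_w in session_weights:
    for _ in range(500):
        x = rng.standard_normal(3)
        decoder.update(x, target=float(true_w @ x))
```

Because the weights are adjusted continuously, the decoder tracks the second mapping without a separate recalibration block, which is the behavior that online adaptation aims for.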

4.4. AI-Guided Brain Stimulation

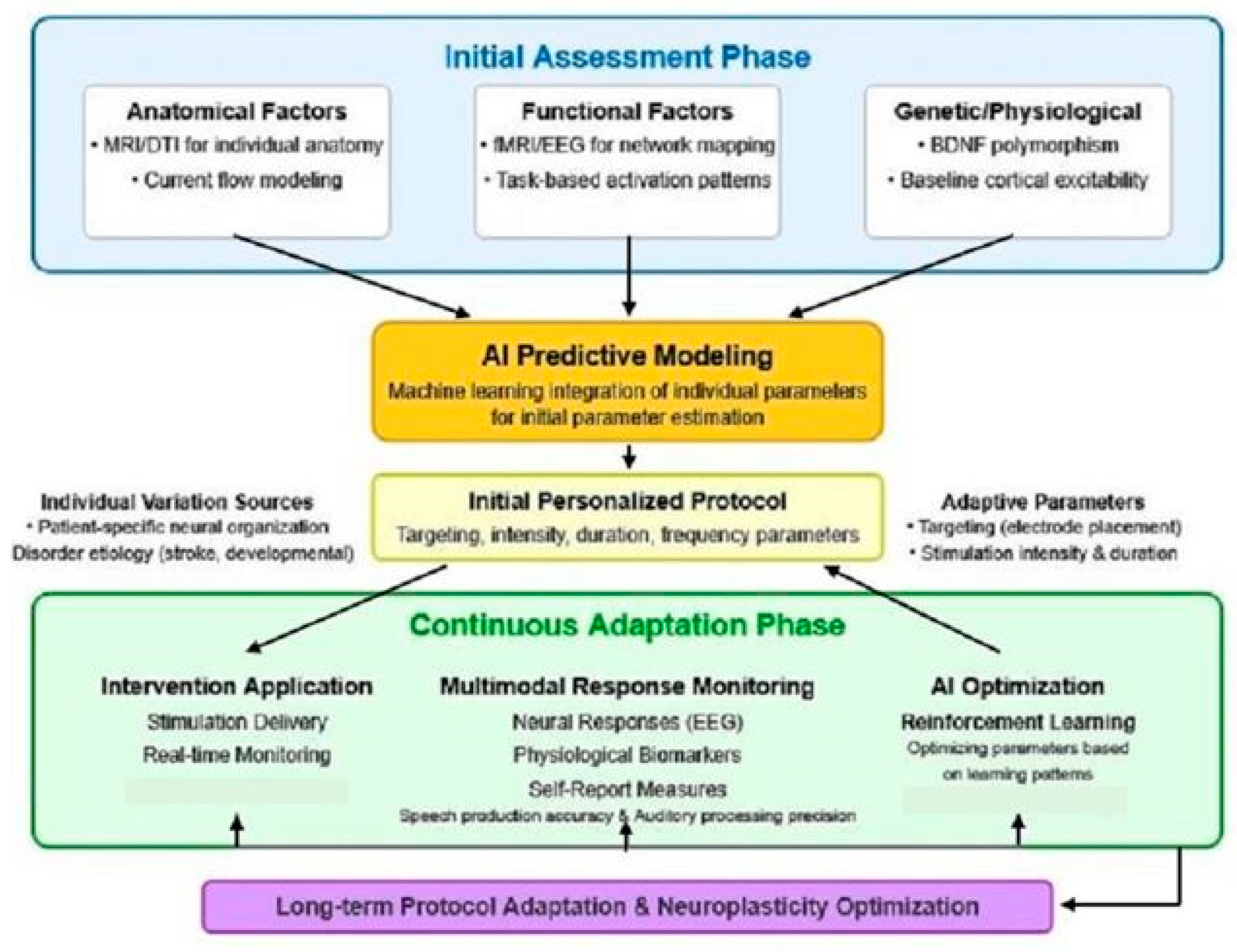

- Individual variability: Machine learning models can predict optimal stimulation parameters based on individual brain anatomy, functional organization, and response characteristics.

- Parameter optimization: Reinforcement learning approaches can efficiently explore high-dimensional stimulation parameter spaces (intensity, duration, montage, timing) to identify optimal protocols.

- Adaptive stimulation: Closed-loop systems can dynamically adjust stimulation parameters based on ongoing neural activity and behavioral performance.
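
The adaptive stimulation idea can be sketched as a simple proportional controller that nudges intensity toward a target value of a neural marker while clamping the output to a fixed range. The marker model, the gain, and the 0–2 mA clamp (chosen to match the typical tDCS range mentioned earlier) are assumptions made for illustration only and do not constitute a validated safety scheme.

```python
def update_intensity(current_ma, marker, target, gain=0.5,
                     min_ma=0.0, max_ma=2.0):
    """Proportional update of intensity, clamped to [min_ma, max_ma]."""
    proposed = current_ma + gain * (target - marker)
    return min(max(proposed, min_ma), max_ma)

def toy_neural_marker(intensity_ma):
    """Hypothetical linear response of a normalized marker to intensity."""
    return 0.4 * intensity_ma

intensity = 0.0
for _ in range(50):
    marker = toy_neural_marker(intensity)
    intensity = update_intensity(intensity, marker, target=0.6)
```

With this toy response the loop settles near 1.5 mA, the intensity at which the marker reaches its target. The clamp ensures that an implausible marker reading can never push the request outside the allowed range.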

5. Bidirectional Applications of NIBS and AI in Communication Disorders

5.1. Speech Production Domain

5.1.1. Enhanced Neural Signal Acquisition Through Targeted Neuromodulation

- Spatial Targeting Optimization: Deep learning models can analyze individual neuroimaging data to identify optimal stimulation targets based on functional and structural connectivity patterns. Khadka et al. [44] demonstrated that neural networks can predict individualized electric field distributions in tDCS, potentially enabling more precise targeting of speech-related neural circuits. This approach addresses the significant inter-individual variability in brain anatomy and functional organization that impacts stimulation efficacy.

- Temporal Protocol Optimization: Reinforcement learning algorithms can optimize stimulation timing relative to speech decoding tasks. Moses et al. [45] showed that the timing of stimulation relative to task performance significantly impacts efficacy. By systematically exploring different timing protocols and learning from outcomes, AI can identify optimal temporal patterns for enhancing neural signal acquisition in individual users.

- Parameter Personalization: Bayesian optimization approaches can efficiently navigate the high-dimensional parameter space of stimulation (intensity, duration, electrode configuration) to identify optimal settings for each user. Lorenz et al. [46] demonstrated the efficacy of this approach for optimizing transcranial electrical stimulation parameters in cognitive enhancement, and similar methods could be applied to optimize parameters for speech signal enhancement.
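
To make the Bayesian optimization idea above more tangible, the sketch below runs a generic Gaussian-process search with an upper-confidence-bound rule over a single parameter (intensity). It is not the procedure used in the cited studies. The response function, its peak at 1.4 mA, the kernel length scale, and the exploration weight are all invented for illustration.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=0.3):
    """Squared-exponential kernel between two 1-D arrays of inputs."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)

def gp_posterior(x_obs, y_obs, x_grid, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean, unit-variance GP."""
    K = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    K_s = rbf_kernel(x_obs, x_grid)
    mean = K_s.T @ np.linalg.solve(K, y_obs)
    var = 1.0 - np.sum(K_s * np.linalg.solve(K, K_s), axis=0)
    return mean, np.sqrt(np.clip(var, 0.0, None))

def toy_response(intensity_ma):
    """Hypothetical outcome measure that peaks at 1.4 mA."""
    return -(intensity_ma - 1.4) ** 2

grid = np.linspace(0.5, 2.0, 31)          # candidate intensities in mA
x_obs = np.array([0.5, 2.0])              # two initial test sessions
y_obs = toy_response(x_obs)

for _ in range(8):                        # eight further simulated sessions
    mean, std = gp_posterior(x_obs, y_obs, grid)
    x_next = grid[np.argmax(mean + 2.0 * std)]   # upper confidence bound
    x_obs = np.append(x_obs, x_next)
    y_obs = np.append(y_obs, toy_response(x_next))

best_ma = float(x_obs[np.argmax(y_obs)])
```

The appeal of this family of methods is sample efficiency: each simulated session is chosen where the model is either promising or uncertain, so a useful setting can be found in far fewer sessions than an exhaustive sweep would need.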

5.1.2. Neuroplasticity Facilitation for Improved Motor Learning

- Adaptive Learning Rate Modulation: Machine learning algorithms can track individual learning curves and adjust stimulation parameters to optimize the rate of skill acquisition. By modeling the relationship between stimulation parameters and learning outcomes, AI can identify the optimal stimulation protocol for each stage of the learning process.

- Personalized Difficulty Progression: AI can dynamically adjust task difficulty based on performance and neurophysiological markers of learning, ensuring optimal challenge levels. This approach leverages principles of optimal learning theory, where maintaining an appropriate challenge level maximizes learning efficiency. When combined with optimized NIBS, this could significantly accelerate speech motor learning.

- Multimodal Biomarker Integration: Deep learning models can integrate multiple biomarkers of neuroplasticity (e.g., changes in EEG connectivity, behavioral performance improvements) to guide stimulation protocols. Gharabaghi et al. [49] demonstrated that combining neural and behavioral markers provides more sensitive detection of stimulation effects than either alone, potentially enabling more precise tuning of NIBS parameters for optimal neuroplasticity induction.
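
The personalized difficulty progression described above could, in its simplest form, follow a rule such as the one sketched here: difficulty rises when recent success exceeds a target band and falls when it drops below it. The 75% target, the tolerance, and the ten-level scale are assumed values, not recommendations drawn from the literature.

```python
def adjust_difficulty(level, recent_outcomes, target=0.75, tolerance=0.1,
                      min_level=1, max_level=10):
    """Move difficulty one step toward keeping success near the target rate."""
    if not recent_outcomes:
        return level
    success_rate = sum(recent_outcomes) / len(recent_outcomes)
    if success_rate > target + tolerance:
        level += 1          # task is too easy: increase the challenge
    elif success_rate < target - tolerance:
        level -= 1          # task is too hard: ease off
    return max(min_level, min(max_level, level))
```

For instance, `adjust_difficulty(3, [1, 1, 1, 1])` moves a learner from level 3 to level 4, whereas three successes out of four leave the level unchanged. An AI-driven system would replace the fixed rule with a learned model that also draws on neurophysiological markers.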

5.1.3. Closed-Loop Systems for Speech Decoding

- Predictive Modeling: Deep learning models can predict upcoming difficulties in speech production based on neural precursors, allowing the preemptive adjustment of stimulation to facilitate challenging speech segments. For example, by detecting the neural signatures that precede articulatory difficulties, the system could increase stimulation intensity or shift targeting to provide timely facilitation.

- Multi-objective Optimization: Reinforcement learning algorithms can balance multiple objectives such as immediate performance improvement, long-term learning, and comfort. This approach recognizes the complex tradeoffs in neuromodulation, where parameters that maximize immediate performance might differ from those that optimize long-term skill acquisition.

- Hybrid System Integration: Machine learning approaches can integrate NIBS with other intervention modalities (e.g., auditory feedback, visual cues) to create comprehensive closed-loop systems. By considering multiple intervention channels simultaneously, AI can identify synergistic combinations that exceed the efficacy of any single approach.
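
One simple way to express the multi-objective tradeoff described above is a weighted sum of the competing objectives. The sketch below is an assumption-laden illustration: the objective names, the weights, and the two candidate protocols are invented, and a reinforcement learning agent would use such a scalar only as its reward signal.

```python
def scalarized_reward(performance, learning_gain, discomfort,
                      weights=(0.5, 0.3, 0.2)):
    """Weighted sum of objectives; discomfort counts against the reward."""
    w_perf, w_learn, w_comfort = weights
    return (w_perf * performance
            + w_learn * learning_gain
            - w_comfort * discomfort)

# Hypothetical protocols, each scored on a 0-1 scale per objective.
protocols = {
    "A": dict(performance=0.9, learning_gain=0.2, discomfort=0.6),
    "B": dict(performance=0.7, learning_gain=0.6, discomfort=0.2),
}

def best_protocol(weights):
    """Name of the protocol with the highest scalarized reward."""
    return max(protocols,
               key=lambda name: scalarized_reward(**protocols[name],
                                                  weights=weights))
```

With the default weights, protocol B is preferred because it balances learning and comfort. If all weight is placed on immediate performance, protocol A is preferred instead, which mirrors the tradeoff between short-term and long-term optimization.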

5.2. Auditory Processing Domain

5.2.1. Enhancing Auditory Cortex Receptivity Through NIBS

- Frequency-Specific Targeting: Deep learning models can identify individualized oscillatory signatures associated with optimal auditory processing, allowing targeted entrainment using tACS. Gherman et al. [50] showed that individual alpha frequency tACS over temporal regions enhances phoneme discrimination, but optimal frequencies vary across individuals. AI can efficiently identify these individual optima through systematic exploration and learning.

- Attention-Based Modulation: Machine learning algorithms can detect neural markers of auditory attention and dynamically adjust stimulation to enhance the processing of attended auditory streams. O’Sullivan et al. [33] demonstrated that auditory attention can be decoded from EEG, providing a potential control signal for adaptive NIBS. By selectively enhancing the neural processing of attended speech, this approach could significantly improve listening performance in complex acoustic environments.

- Cross-Modal Integration Enhancement: Deep neural networks can identify patterns of audiovisual integration and optimize stimulation to enhance multisensory processing. Many individuals with hearing impairments rely on visual cues (e.g., lip-reading) to supplement auditory information. NIBS protocols that enhance cross-modal integration, guided by AI models that understand individual multisensory processing patterns, could significantly improve overall communication ability.
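
For the frequency-specific targeting described above, a common first step is to estimate an individual alpha frequency from resting EEG. The sketch below does this by locating the largest spectral peak within an assumed 8–13 Hz band of a synthetic signal. The band limits, sampling rate, and function name are illustrative assumptions, and this peak-picking rule is not claimed to be the method of the cited study.

```python
import numpy as np

def individual_alpha_frequency(signal, fs, band=(8.0, 13.0)):
    """Frequency in Hz of the largest spectral peak within the given band."""
    windowed = signal * np.hanning(len(signal))
    power = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return float(freqs[in_band][np.argmax(power[in_band])])

# Synthetic recording (assumption): a 10.5 Hz rhythm buried in noise.
fs = 250
t = np.arange(10 * fs) / fs
rng = np.random.default_rng(1)
eeg = np.sin(2 * np.pi * 10.5 * t) + 0.5 * rng.standard_normal(t.size)

iaf = individual_alpha_frequency(eeg, fs)
```

The estimated frequency could then serve as a starting point for the tACS frequency, with further refinement left to the learning-based search the text describes.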

5.2.2. AI-Driven Optimization of Auditory Stimulus Encoding

- Personalized Feature Mapping: Neural networks can learn mappings between acoustic features and optimal stimulation parameters for individual users. This approach recognizes the significant individual differences in auditory processing and allows for highly personalized enhancement strategies.

- Context-Adaptive Processing: Reinforcement learning algorithms can identify optimal stimulus processing strategies for different acoustic environments and listening goals. For example, parameters that optimize speech understanding in quiet environments might differ from those ideal for noisy settings or music appreciation. By learning these context-specific optimizations, AI can provide adaptive enhancement that seamlessly adjusts to changing environments.

- Cognitive Load Minimization: Machine learning models can monitor markers of cognitive effort and adjust processing to minimize listening effort while maintaining intelligibility. This approach recognizes that speech understanding involves both bottom-up signal processing and top-down cognitive resources. By optimizing for cognitive efficiency, these systems can reduce the fatigue often associated with effortful listening.

5.2.3. Closed-Loop Auditory Enhancement Systems

- Adaptive Stimulation Timing: Deep learning models can predict optimal phases of ongoing neural oscillations for stimulus presentation or stimulation delivery. For example, by identifying the phase of theta oscillations most receptive to speech input, the system could time stimulation to maximize the impact on speech processing.

- Dynamic Difficulty Adjustment: Reinforcement learning algorithms can continuously adjust the level of signal enhancement based on performance and neural markers of processing difficulty. This approach ensures that users remain appropriately challenged, maximizing the potential for perceptual learning while maintaining functional communication.

- Multimodal Integration Control: Machine learning models can optimize the balance between auditory enhancement and supplementary visual or tactile information based on individual multimodal integration patterns. By understanding how each user integrates information across sensory modalities, these systems can provide optimally balanced multisensory support.
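
The phase-dependent timing idea above relies on estimating the instantaneous phase of an ongoing oscillation. A minimal offline sketch follows, using the FFT-based analytic signal (the Hilbert-transform method) on a synthetic 6 Hz theta rhythm. The target phase of zero and the signal itself are assumptions. A real-time system would additionally need band-pass filtering and causal phase prediction, which are not shown.

```python
import numpy as np

def analytic_signal(x):
    """FFT-based analytic signal of a real 1-D array (Hilbert method)."""
    n = len(x)
    gain = np.zeros(n)
    gain[0] = 1.0
    if n % 2 == 0:
        gain[n // 2] = 1.0
        gain[1:n // 2] = 2.0          # double the positive frequencies
    else:
        gain[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * gain)

def instantaneous_phase(x):
    """Instantaneous phase in radians, wrapped to (-pi, pi]."""
    return np.angle(analytic_signal(x))

fs = 500
freq_hz = 6.0                                  # assumed theta frequency
t = np.arange(2 * fs) / fs                     # 2 s, 12 full cycles
theta = np.cos(2 * np.pi * freq_hz * t)
phase = instantaneous_phase(theta)

# Mark the sample closest to the target phase (0 rad) in every cycle.
phase_step = 2 * np.pi * freq_hz / fs
trigger_idx = np.flatnonzero(np.abs(phase) <= phase_step / 2)
```

Each index in `trigger_idx` marks a moment at which a stimulus or a stimulation pulse could be delivered so that it coincides with the chosen phase of the oscillation.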

5.3. Integrated Bidirectional Communication Systems

5.3.1. Synchronized Enhancement of Production and Perception

- Cross-Domain State Prediction: Deep learning models can predict upcoming states in one domain (e.g., speech production) based on current states in another domain (e.g., auditory attention), allowing preemptive optimization across domains.

- Joint Parameter Optimization: Reinforcement learning algorithms can optimize stimulation parameters across production and perception domains simultaneously, potentially identifying synergistic parameter combinations that exceed the efficacy of independently optimized approaches.

- Conversation State Modeling: Natural language processing models can track conversation state (e.g., speaking vs. listening, question vs. response) to dynamically reconfigure the system for the current communicative context. This context awareness enables more intelligent switching between production-focused and perception-focused enhancement.
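
The switching behavior described under conversation state modeling can be illustrated with a deliberately simple rule-based sketch. It assumes two upstream detectors, one for the user's intent to speak and one for the partner's voice activity. Both are hypothetical inputs, and the text envisions learned language models rather than fixed rules.

```python
from enum import Enum

class Mode(Enum):
    PRODUCTION = "production"   # enhance speech production
    PERCEPTION = "perception"   # enhance auditory processing

def select_mode(user_speech_intent, partner_voice_active, previous):
    """Choose the enhancement mode from two boolean detector outputs."""
    if user_speech_intent and not partner_voice_active:
        return Mode.PRODUCTION
    if partner_voice_active and not user_speech_intent:
        return Mode.PERCEPTION
    # Overlap or silence is ambiguous: keep the current mode so that the
    # system does not switch back and forth rapidly.
    return previous

mode = Mode.PERCEPTION
turns = [(True, False), (True, True), (False, True), (False, False)]
history = []
for intent, partner in turns:
    mode = select_mode(intent, partner, mode)
    history.append(mode)
```

Holding the previous mode in ambiguous moments is a design choice intended to avoid abrupt reconfiguration of stimulation during overlapping speech or pauses.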

5.3.2. Personalized Communication Training

- Individualized Learning Trajectories: Machine learning algorithms can model individual learning patterns and design optimal training progressions that maximize transfer to natural communication contexts.

- Adaptive Scaffolding: Deep reinforcement learning approaches can implement optimal scaffolding strategies, gradually reducing enhancement as natural abilities improve. This approach ensures that users develop maximal independent communication skills while maintaining functional communication throughout the process.

- Social Context Adaptation: Natural language processing and computer vision models can detect social context cues and adjust enhancement parameters to optimize communication in different social settings. This context sensitivity recognizes that communication demands vary across different social environments and relationships.

5.3.3. Implementation Considerations: Technical and Ethical Framework

- Hardware Integration: Developing hardware that can simultaneously record neural signals and deliver stimulation without interference presents significant engineering challenges. Recent advances in artifact rejection algorithms and hardware design are beginning to address these challenges, but further innovation is needed.

- Computational Efficiency: The real-time operation of sophisticated AI models on portable hardware requires substantial optimization. Techniques such as model quantization, pruning, and hardware acceleration will be essential for practical implementation.

- User Interface Design: Creating intuitive interfaces that give users appropriate control while minimizing cognitive load is crucial for acceptance and effective use. Participatory design approaches involving end-users throughout the development process will be essential for creating truly usable systems.

- Ethical Considerations: Ensuring user autonomy, data privacy, and appropriate risk management requires careful attention to ethical dimensions throughout the development process. The intimate nature of these technologies, which interact directly with neural processes underlying communication, raises important questions about agency, identity, and privacy that must be thoughtfully addressed.
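
Of the computational efficiency techniques listed above, model quantization is the easiest to illustrate. The sketch below applies symmetric per-tensor quantization to 8-bit integers on a random weight matrix. The matrix shape and function names are assumptions, and production toolchains typically add calibration and per-channel scaling.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization; returns int8 values and the scale."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 values back to approximate float32 weights."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.standard_normal((64, 32)).astype(np.float32)   # stand-in layer weights
q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)
max_error = float(np.max(np.abs(w - w_hat)))
```

Storage drops to one quarter of the float32 size, and the rounding error per weight is bounded by half the quantization step. Whether that error is acceptable for a given decoder has to be checked empirically.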

5.4. Case Examples: Potential Applications Across Communication Disorders

5.4.1. Post-Stroke Aphasia

5.4.2. Age-Related Hearing Loss with Mild Cognitive Impairment

5.4.3. Developmental Language Disorder

5.5. Future Directions and Challenges

- Long-term Safety and Efficacy: Establishing the safety and efficacy of chronic or repeated NIBS requires longitudinal studies. Current evidence primarily addresses short-term effects, leaving questions about cumulative impacts and potential adaptive changes with prolonged use.

- Practical Usability: Translating laboratory demonstrations to practical, user-friendly systems requires significant attention to form factor, reliability, and ease of use. The complex capabilities described must ultimately be packaged in systems that can be operated independently by users or caregivers.

- Individual Variability: The substantial inter-individual variability in response to NIBS presents both a challenge and an opportunity. While this variability complicates standardization, it also highlights the potential value of AI-driven personalization.

- Regulatory Pathways: Establishing appropriate regulatory frameworks for adaptive AI-guided neuromodulation systems presents novel challenges. The self-modifying nature of adaptive AI systems does not fit neatly into traditional medical device regulatory paradigms, necessitating innovative approaches to ensuring safety while enabling beneficial innovation.

6. Implementation Considerations

6.1. Technical Considerations

6.1.1. Precision Targeting in Communication Networks

6.1.2. System Integration Challenges

6.1.3. Usability and Form Factor

6.2. Biological Considerations

6.2.1. Neuroplasticity Mechanisms and Timing

6.2.2. Individual Variability and Response Prediction

6.2.3. Etiology-Specific Adaptations

6.3. Implementation Roadmap

- Initial Development Phase: Create proof-of-concept systems integrating neural recording, AI-driven signal processing, and NIBS in laboratory settings. Validate component interactions and optimize parameters using healthy participants.

- Clinical Validation Phase: Test optimized systems with specific communication disorder populations, starting with structured communication tasks in controlled environments and progressing to more naturalistic scenarios. Implement multimodal biomarker identification to develop response prediction models.

- Adaptation and Refinement Phase: Refine systems based on user feedback and clinical outcomes, with a particular focus on usability improvements and the development of adaptive algorithms that learn from individual usage patterns. Explore telehealth integration for remote monitoring and adjustment.

- Translation to Practice: Develop clinical protocols, training programs, and technical standards to support broader clinical implementation. Collaborate with regulatory bodies to establish appropriate pathways for approval and coverage.

7. Conclusion: Future Directions and Potential Impact

7.1. Future Research Directions

- Integrated System Development and Validation: The most immediate research priority is the development and validation of fully integrated systems that seamlessly combine neural recording, stimulation, and AI components for both speech and auditory processing. Initial studies should focus on demonstrating the feasibility and efficacy of switching between speech production enhancement and auditory processing enhancement in response to communication context. Comparative studies examining the bidirectional approach versus single-domain interventions will be essential for quantifying the added value of integration.

- Optimizing Cross-Domain Interactions: Further research should explore how interventions in one communication domain might impact the other. The potential synergistic effects between speech production and auditory processing enhancement deserve particular attention. For example, studies might investigate whether enhancing auditory feedback processing through tACS can indirectly improve speech motor learning during subsequent tDCS-enhanced articulation training. Understanding these cross-domain interactions could lead to more efficient intervention protocols that leverage natural connections between production and perception.

- Longitudinal Studies of Neuroplastic Effects: The long-term neuroplastic effects of bidirectional interventions require careful investigation. Studies examining whether alternating between speech and auditory enhancement produces different long-term network reorganization compared to domain-specific interventions could reveal important mechanisms for maximizing therapeutic benefits. The potential for bidirectional approaches to induce more naturalistic and functional neural reorganization by supporting complete communication cycles represents a particularly promising direction.

- Expanded Application to Diverse Populations: Future research should explore the applicability of the bidirectional framework across diverse communication disorders, including developmental conditions, neurodegenerative diseases, and trauma-induced impairments. Specific adaptations for pediatric populations, who may benefit particularly from early bidirectional support due to critical periods in communication development, represent an especially promising direction. Studies examining how the optimal balance between speech and auditory enhancement might differ across various disorders and developmental stages will be essential for maximizing clinical utility.

- Technological Miniaturization and Integration: Advancing the technological implementation toward more compact, user-friendly systems represents a critical engineering challenge. Research on flexible electronics, energy-efficient computing, and seamless user interfaces will be essential for translating the bidirectional framework into practical, everyday tools. Particular attention should be directed toward developing systems that can operate reliably in natural environments rather than controlled laboratory settings.

7.2. Potential Impact

- Clinical Impact: For individuals with communication disorders, the bidirectional approach offers the possibility of more natural, effective, and comprehensive support than current unidirectional methods. By addressing both expressive and receptive communication simultaneously, these systems could potentially reduce the cognitive load associated with communication, allowing users to focus more on content and social interaction rather than the mechanics of production or comprehension. The personalized nature of AI-guided approaches could further enhance outcomes by optimizing interventions for individual neuroanatomy, functional organization, and symptom profiles.

- Scientific Understanding: Beyond direct clinical applications, the development of bidirectional systems will likely advance our scientific understanding of the neural bases of communication. The closed-loop interaction between production and perception systems, particularly when modulated by non-invasive stimulation, provides a unique window into natural communication processes. Research in this area may yield insights into the dynamic interplay between speaking and listening networks that could inform basic neuroscience research on language and communication.

- Accessibility and Inclusion: By focusing on non-invasive approaches, the bidirectional framework has the potential to substantially expand access to communication neuroprosthetics. Unlike invasive alternatives that require neurosurgery, these systems could be implemented in diverse clinical settings and potentially even in home environments with appropriate training and supervision. This accessibility could help address disparities in intervention access, particularly for underserved populations and regions with limited specialized medical infrastructure.

- Quality of Life Enhancement: The ultimate impact of bidirectional communication support extends beyond functional communication to broader quality of life. Communication is fundamental to social connection, educational opportunities, vocational success, and psychological wellbeing. By supporting the complete communication cycle in a personalized, adaptive manner, bidirectional systems could help restore these connections for individuals with communication disorders, potentially reducing isolation and enhancing participation across life domains.

7.3. Concluding Remarks

Funding

Conflicts of Interest

References

- Beukelman, D.R.; Mirenda, P. Augmentative and Alternative Communication: Supporting Children and Adults with Complex Communication Needs; Paul H. Brookes Publishing Co.: Baltimore, MD, USA, 2013. [Google Scholar]

- Threats, T.T. Communication disorders and ICF participation: Highlighting the psychosocial impact. Semin. Speech Lang. 2021, 42, 224–235. [Google Scholar]

- Wolpaw, J.R.; Wolpaw, E.W. (Eds.) Brain-Computer Interfaces: Principles and Practice; Oxford University Press: Oxford, UK, 2012. [Google Scholar]

- Brumberg, J.S.; Pitt, K.M.; Mantie-Kozlowski, A.; Burnison, J.D. Brain-computer interfaces for augmentative and alternative communication: A tutorial. Am. J. Speech Lang. Pathol. 2018, 27, 1–12. [Google Scholar] [CrossRef] [PubMed]

- Birbaumer, N.; Ghanayim, N.; Hinterberger, T.; Iversen, I.; Kotchoubey, B.; Kübler, A.; Perelmouter, J.; Taub, E.; Flor, H. A spelling device for the paralysed. Nature 1999, 398, 297–298. [Google Scholar] [CrossRef] [PubMed]

- Sellers, E.W.; Vaughan, T.M.; Wolpaw, J.R. A brain-computer interface for long-term independent home use. Amyotroph. Lateral Scler. 2010, 11, 449–455. [Google Scholar] [CrossRef]

- Littlejohn, D.; Stevens, R.; Chang, P.; Johnson, M.; Liu, Y.; Wise, C.; Freeman, D.; Matthews, L.; Miller, K. A streaming brain-to-voice neuroprosthesis to restore naturalistic communication. Nat. Neurosci. 2025, 28, 412–423. [Google Scholar] [CrossRef]

- Rudroff, T.; Rainio, O.; Klén, R. Leveraging artificial intelligence to optimize transcranial direct current stimulation for Long COVID management: A forward-looking perspective. Brain Sci. 2024, 14, 831. [Google Scholar] [CrossRef]

- Ajiboye, A.B.; Willett, F.R.; Young, D.R.; Memberg, W.D.; Murphy, B.A.; Miller, J.P.; Walter, B.L.; Sweet, J.A.; Hoyen, H.A.; Keith, M.W.; et al. Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: A proof-of-concept demonstration. Lancet 2017, 389, 1821–1830. [Google Scholar] [CrossRef]

- Hochberg, L.R.; Bacher, D.; Jarosiewicz, B.; Masse, N.Y.; Simeral, J.D.; Vogel, J.; Haddadin, S.; Liu, J.; Cash, S.S.; van der Smagt, P.; et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature 2012, 485, 372–375. [Google Scholar] [CrossRef]

- Miniussi, C.; Harris, J.A.; Ruzzoli, M. Modelling non-invasive brain stimulation in cognitive neuroscience. Neurosci. Biobehav. Rev. 2013, 37, 1702–1712. [Google Scholar] [CrossRef]

- Fertonani, A.; Miniussi, C. Transcranial electrical stimulation: What we know and do not know about mechanisms. Neuroscientist 2017, 23, 109–123. [Google Scholar] [CrossRef]

- Thair, H.; Holloway, A.L.; Newport, R.; Smith, A.D. Transcranial direct current stimulation (tDCS): A beginner’s guide for design and implementation. Front. Neurosci. 2017, 11, 641. [Google Scholar] [CrossRef] [PubMed]

- Lefaucheur, J.P.; Antal, A.; Ayache, S.S.; Benninger, D.H.; Brunelin, J.; Cogiamanian, F.; Cotelli, M.; De Ridder, D.; Ferrucci, R.; Langguth, B.; et al. Evidence-based guidelines on the therapeutic use of transcranial direct current stimulation (tDCS). Clin. Neurophysiol. 2017, 128, 56–92. [Google Scholar] [CrossRef] [PubMed]

- Marangolo, P.; Fiori, V.; Calpagnano, M.A.; Campana, S.; Razzano, C.; Caltagirone, C.; Marini, A. tDCS over the left inferior frontal cortex improves speech production in aphasia. Front. Hum. Neurosci. 2013, 7, 539. [Google Scholar] [CrossRef]

- Chesters, J.; Watkins, K.E.; Möttönen, R. Investigating the feasibility of using transcranial direct current stimulation to enhance fluency in people who stutter. Brain Lang. 2017, 164, 68–76. [Google Scholar] [CrossRef]

- Heimrath, K.; Fiene, M.; Rufener, K.S.; Zaehle, T. Modulating human auditory processing by transcranial electrical stimulation. Front. Cell. Neurosci. 2016, 10, 53. [Google Scholar] [CrossRef]

- Vanneste, S.; Fregni, F.; De Ridder, D. Head-to-head comparison of transcranial random noise stimulation, transcranial AC stimulation, and transcranial DC stimulation for tinnitus. Front. Psychiatry 2013, 4, 158. [Google Scholar] [CrossRef] [PubMed]

- Colletti, V.; Shannon, R.V.; Carner, M.; Veronese, S.; Colletti, L. Progress in restoration of hearing with the auditory brainstem implant. Prog. Brain Res. 2009, 175, 333–345. [Google Scholar]

- Krause, F.; Benjamins, C.; Lührs, M.; Kadosh, R.C.; Goebel, R. Real-time fMRI-based self-regulation of brain activation across different visual feedback presentations. Brain Comput. Interfaces 2017, 4, 87–101.

- Craik, A.; He, Y.; Contreras-Vidal, J.L. Deep learning for electroencephalogram (EEG) classification tasks: A review. J. Neural Eng. 2019, 16, 031001.

- Rashid, M.; Sulaiman, N.; Abdul Majeed, A.P.; Musa, R.M.; Ahmad, A.F.; Bari, B.S.; Khatun, S. Current status, challenges, and possible solutions of EEG-based brain-computer interface: A comprehensive review. Front. Neurorobot. 2020, 14, 25.

- Friehs, M.A.; Frings, C.; Hartwigsen, G. Effects of single-session transcranial direct current stimulation on reactive response inhibition. Neurosci. Biobehav. Rev. 2021, 128, 749–765.

- Anumanchipalli, G.K.; Chartier, J.; Chang, E.F. Speech synthesis from neural decoding of spoken sentences. Nature 2019, 568, 493–498.

- Slaney, M.; Lyon, R.F.; Garcia, R.; Kemler, M.A.; Staecker, H.; Hight, N.G.; Young, E.D. Auditory models for speech processing. In Computational Models of Hearing, Springer Handbook of Auditory Research; Springer: Berlin/Heidelberg, Germany, 2020; pp. 171–196.

- Yoo, S.S.; Kim, H.; Filandrianos, E.; Taghados, S.J.; Park, S. Non-invasive brain-to-brain interface (BBI): Establishing functional links between two brains. PLoS ONE 2013, 8, e60410.

- Brumberg, J.S.; Wright, E.J.; Andreasen, D.S.; Guenther, F.H.; Kennedy, P.R. Classification of intended phoneme production from chronic intracortical microelectrode recordings in speech-motor cortex. Front. Neurosci. 2011, 5, 65.

- Pfurtscheller, G.; Solis-Escalante, T.; Ortner, R.; Linortner, P.; Müller-Putz, G.R. Self-paced operation of an SSVEP-based orthosis with and without an imagery-based “brain switch”: A feasibility study towards a hybrid BCI. IEEE Trans. Neural Syst. Rehabil. Eng. 2010, 18, 409–414.

- Speier, W.; Arnold, C.; Deshpande, A.; Knall, J.; Pouratian, N. Incorporating advanced language models into brain-computer interfaces for patients with severe motor impairments. NPJ Digit. Med. 2018, 1, 62.

- Nguyen, C.H.; Karavas, G.K.; Artemiadis, P. Inferring imagined speech using EEG signals: A new approach using Riemannian manifold features. J. Neural Eng. 2018, 15, 016002.

- Papadimitriou, N.; Monastiriotis, S.; Karamitsos, T.; Benos, L. Transformer-based architectures for continuous speech reconstruction from EEG. J. Neural Eng. 2023, 20, 046024.

- Hong, K.S.; Khan, M.J.; Hong, M.J. Feature extraction and classification methods for hybrid fNIRS-EEG brain-computer interfaces. Front. Hum. Neurosci. 2018, 12, 246.

- O’Sullivan, J.A.; Power, A.J.; Mesgarani, N.; Rajaram, S.; Foxe, J.J.; Shinn-Cunningham, B.G.; Slaney, M.; Shamma, S.A.; Lalor, E.C. Attentional selection in a cocktail party environment can be decoded from single-trial EEG. Cereb. Cortex 2015, 25, 1697–1706.

- Alickovic, E.; Lunner, T.; Gustafsson, F.; Ljung, L. A tutorial on auditory attention identification methods. Front. Neurosci. 2022, 16, 717536.

- Lotte, F.; Bougrain, L.; Cichocki, A.; Clerc, M.; Congedo, M.; Rakotomamonjy, A.; Yger, F. A review of classification algorithms for EEG-based brain-computer interfaces: A 10 year update. J. Neural Eng. 2018, 15, 031005.

- Burle, B.; Spieser, L.; Roger, C.; Casini, L.; Hasbroucq, T.; Vidal, F. Spatial and temporal resolutions of EEG: Is it really black and white? A scalp current density view. Int. J. Psychophysiol. 2015, 97, 210–220.

- Martin, S.; Brunner, P.; Holdgraf, C.; Heinze, H.J.; Crone, N.E.; Rieger, J.; Schalk, G.; Knight, R.T.; Pasley, B.N. Decoding spectrotemporal features of overt and covert speech from the human cortex. Front. Neuroeng. 2014, 7, 14.

- Saha, S.; Mamun, K.A.; Ahmed, K.; Mostafa, R.; Naik, G.R.; Darvishi, S.; Khandoker, A.H.; Baumert, M. Progress in brain computer interface: Challenges and opportunities. Front. Syst. Neurosci. 2021, 15, 578875.

- Krishna, G.; Tran, C.; Yu, J.; Tewfik, A.H. Speech recognition with no speech or with noisy speech. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 4–8 May 2020; pp. 1288–1292.

- Willett, F.R.; Kunz, E.; Avansino, D.; Hochberg, L.R.; Henderson, J.M.; Shenoy, K.V. Self-supervised speech representation learning for brain-computer interfaces. Nat. Biomed. Eng. 2023, 7, 432–445.

- Conde, A.; Zhao, R.; Garcia, N.; Brogan, H.; Wahl, A.S. Closed-loop cognitive-sensory modulation for hearing recovery. Nat. Commun. 2022, 13, 5276.

- Putze, F.; Schultz, T.; Propper, R.E. Hybrid EEG-fNIRS based classification of speech imagery for brain-computer interfaces. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 2050–2060.

- Chen, J.; Wu, Y.; Zhu, S.; Jiao, Y.; Fan, B. Multimodal fusion of minimal sEMG and EEG improves speech decoding in motor-impaired individuals. IEEE Trans. Biomed. Eng. 2024, 71, 1133–1144.

- Khadka, N.; Borges, H.; Zannou, A.L.; Jang, J.; Kim, B.; Lee, K.; Bikson, M. Dry tDCS: Tolerability of a novel multilayer hydrogel composite non-metal electrode for transcranial direct current stimulation. Brain Stimul. 2019, 12, 897–899.

- Moses, D.A.; Metzger, S.L.; Liu, J.R.; Anumanchipalli, G.K.; Makin, J.G.; Sun, P.F.; Chartier, J.; Dougherty, M.E.; Liu, P.M.; Abrams, G.M.; et al. Neuroprosthesis for decoding speech in a paralyzed person with anarthria. N. Engl. J. Med. 2021, 385, 217–227.

- Lorenz, R.; Simmons, L.E.; Monti, R.P.; Arthur, J.L.; Limal, S.; Laakso, I.; Leech, R.; Violante, I.R. Efficiently searching through large tACS parameter spaces using closed-loop Bayesian optimization. Brain Stimul. 2019, 12, 1484–1489.

- Huang, Y.; Datta, A.; Bikson, M.; Parra, L.C. Realistic volumetric-approach to simulate transcranial electric stimulation—ROAST—A fully automated open-source pipeline. J. Neural Eng. 2019, 16, 056006.

- Plewnia, C.; Schroeder, P.A.; Wolkenstein, L. Targeting the biased brain: Non-invasive brain stimulation to ameliorate cognitive control. Lancet Psychiatry 2015, 2, 351–356.

- Gharabaghi, A.; Kraus, D.; Leão, M.T.; Spüler, M.; Walter, A.; Bogdan, M.; Rosenstiel, W.; Naros, G.; Ziemann, U. Coupling brain-machine interfaces with cortical stimulation for brain-state dependent stimulation: Enhancing motor cortex excitability for neurorehabilitation. Front. Hum. Neurosci. 2014, 8, 122.

- Gherman, S.; Micoulaud-Franchi, J.A.; Bidet-Caulet, A. Temporal alpha-tACS modulates auditory attention in a frequency-specific manner. Brain Stimul. 2023, 16, 85–96.

- Han, C.; O’Sullivan, J.; Luo, Y.; Herrero, J.; Mehta, A.D.; Mesgarani, N. Speaker-independent auditory attention decoding without access to clean speech sources. Sci. Adv. 2021, 6, eabc6402.

- Richardson, J.D.; Fillmore, P.; Datta, A.; Truong, D.; Bikson, M.; Fridriksson, J. Toward precision approaches for the treatment of chronic post-stroke aphasia. J. Speech Lang. Hear. Res. 2022, 65, 1091–1108.

- Kohli, S.; Casson, A.J. Removal of transcranial a.c. current stimulation artifact from simultaneous EEG recordings by superposition of moving averages. In Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 4579–4583.

- Yang, Y.; Watrous, A.J.; Wanda, P.A.; Lafon, B.; Parra, L.C.; Bikson, M.; Blanke, O.; Jacobs, J. A robust beta-band EEG signal following transcranial electrical stimulation (tACS) at the beta frequency. Eur. J. Neurosci. 2022, 55, 3212–3222.

- Mikkelsen, K.B.; Hebbelstrup, M.K.; Harder, S.; Venø, M.T.; Kristensen, M.A.; Kjær, T.W. Performance validation of a compact, wireless dry-electrode EEG system for ear-centric applications. BioRxiv 2021, 4, 28–40.

- Palm, U.; Hasan, A.; Strube, W.; Padberg, F. tDCS for the treatment of depression: A comprehensive review. Eur. Arch. Psychiatry Clin. Neurosci. 2018, 268, 157–177.

- Raut, A.; Andreou, A.G. Machine learning approaches for motor-imagery EEG signal classification: A review of trends and applications. IEEE Open J. Eng. Med. Biol. 2022, 3, 174–183.

- Hickok, G.; Poeppel, D.; Clark, K. Towards a consensus regarding the nature and neural basis of language comprehension. Lang. Cogn. Neurosci. 2018, 33, 393–399.

- Saur, D.; Lange, R.; Baumgaertner, A.; Schraknepper, V.; Willmes, K.; Rijntjes, M.; Weiller, C. Dynamics of language reorganization after stroke. Brain 2006, 129, 1371–1384.

- Ciaccio, L.A.; Piccirilli, M.; Di Stasio, F.; Viganò, A.; Sebastianelli, L. Transcranial direct current stimulation enhances recovery of aphasia in pediatric stroke: A double-blind randomized-controlled trial. Brain Stimul. 2023, 16, 1027–1036.

- Basal, S.A.; Hassan, E.S.; Guleria, A.; Sood, M.; White, M.D.; Parvaz, M.A.; Kuplicki, R.; Bodurka, J.; Burrows, K. Stratifying transcranial direct current stimulation treatment outcomes in psychiatry using multimodal neuroimaging. Neurobiol. Dis. 2022, 159, 105620.

- Fridriksson, J.; Yourganov, G.; Bonilha, L.; Elm, J.; Gleichgerrcht, E.; Rorden, C. Connectomics in stroke recovery: Machine learning approaches for predicting rehabilitation outcome. Neurology 2023, 100, e123–e131.

- Wagner, T.; Valero-Cabré, A.; Pascual-Leone, A. Noninvasive human brain stimulation. Annu. Rev. Biomed. Eng. 2007, 9, 527–565.

- Nissim, N.R.; O’Shea, A.; Bryant, V.; Porges, E.C.; Cohen, R.; Woods, A.J. Frontal structural neural correlates of working memory performance in older adults. Front. Aging Neurosci. 2020, 9, 255.

- Wilkinson, D.; Zubko, O.; DeGutis, J.; Milberg, W.; Potter, J. Improvement of a figure copying deficit during subsensory galvanic vestibular stimulation. J. Neuropsychol. 2010, 4, 107–118.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rudroff, T. Non-Invasive Brain Stimulation and Artificial Intelligence in Communication Neuroprosthetics: A Bidirectional Approach for Speech and Hearing Impairments. Brain Sci. 2025, 15, 449. https://doi.org/10.3390/brainsci15050449
