Systematic Review of Advanced Algorithms for Brain Mapping in Stereotactic Neurosurgery: Integration of fMRI and EEG Data

Abstract

1. Introduction

1.1. Brain Mapping in Stereotactic Neurosurgery

1.2. Use of fMRI and EEG

1.3. Integration of fMRI and EEG: Advanced Algorithms and Artificial Intelligence

1.4. The Role of Algorithms in Stereotactic Neurosurgery

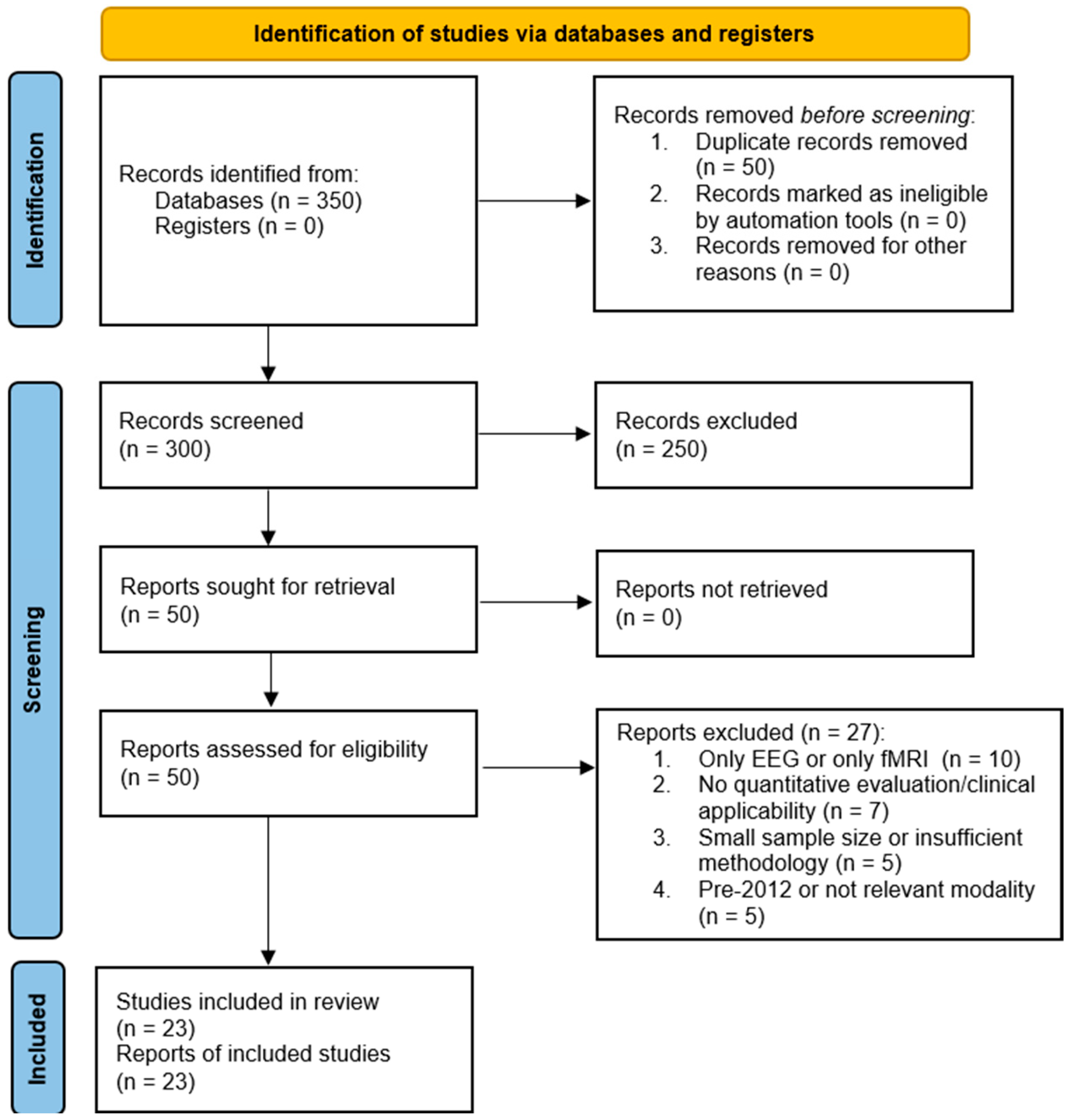

2. Methodology

2.1. Search Strategy

Inclusion/Exclusion Criteria

Studies were included if they:

- Integrated EEG and fMRI for brain mapping in the context of stereotactic neurosurgery.

- Reported quantitative outcomes such as accuracy, sensitivity, specificity, efficiency, or processing time.

- Employed advanced computational algorithms (e.g., Bayesian models, ICA, DCM, CCA, multimodal fusion, machine learning, or deep learning).

- Used validated datasets (clinical cohorts or open-access repositories).

- Focused on preoperative assessment or intraoperative guidance.

Studies were excluded for any of the following:

- Use of EEG or fMRI alone without multimodal fusion.

- Lack of quantitative evaluation or clear clinical applicability.

- Small sample size (n < 10) without methodological justification.

- Publication before 2012. Foundational works published before 2012 were cited only to contextualize algorithmic development and were not included in the quantitative analysis.

- Use of other modalities (e.g., PET, MEG) without EEG–fMRI fusion.
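The criteria above can be read as a screening predicate applied to each candidate study. The following is a minimal sketch of that idea, not the authors' screening tool: the `StudyRecord` fields, the method labels, and the `screen` function are all hypothetical names introduced for illustration, and the "clear clinical applicability" criterion is left out because it requires reviewer judgment.

```python
from dataclasses import dataclass

# Method families named in the inclusion criteria; the string labels are illustrative.
ADVANCED_METHODS = frozenset({
    "bayesian", "ica", "dcm", "cca",
    "multimodal fusion", "machine learning", "deep learning",
})


@dataclass
class StudyRecord:
    """Hypothetical extraction record; every field name is an assumption."""
    year: int
    sample_size: int
    fuses_eeg_fmri: bool
    stereotactic_context: bool
    reports_quantitative_outcomes: bool
    validated_dataset: bool
    preop_or_intraop: bool
    methods: frozenset = frozenset()
    small_sample_justified: bool = False


def screen(study: StudyRecord) -> list:
    """Return exclusion reasons; an empty list means the study is eligible."""
    reasons = []
    if not study.fuses_eeg_fmri:
        # Covers single-modality studies and PET/MEG studies without EEG-fMRI fusion.
        reasons.append("no EEG-fMRI fusion")
    if not study.stereotactic_context:
        reasons.append("not in a stereotactic neurosurgery context")
    if not study.reports_quantitative_outcomes:
        reasons.append("no quantitative evaluation")
    if not (study.methods & ADVANCED_METHODS):
        reasons.append("no advanced computational algorithm")
    if not study.validated_dataset:
        reasons.append("dataset not validated")
    if not study.preop_or_intraop:
        reasons.append("not preoperative or intraoperative")
    if study.sample_size < 10 and not study.small_sample_justified:
        reasons.append("n < 10 without justification")
    if study.year < 2012:
        # Foundational pre-2012 work is cited for context only, never pooled.
        reasons.append("published before 2012")
    return reasons


if __name__ == "__main__":
    candidate = StudyRecord(
        year=2020, sample_size=30, fuses_eeg_fmri=True,
        stereotactic_context=True, reports_quantitative_outcomes=True,
        validated_dataset=True, preop_or_intraop=True,
        methods=frozenset({"dcm", "bayesian"}),
    )
    print(screen(candidate) or "eligible")
```

Returning the list of reasons rather than a bare boolean keeps the exclusion rationale available for a flow diagram or audit trail.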

2.2. Study Selection and Characteristics

2.3. Data Extraction and Quality Assessment

2.4. Quality Assessment and Risk of Bias

2.5. Data Synthesis and Analysis

3. Results

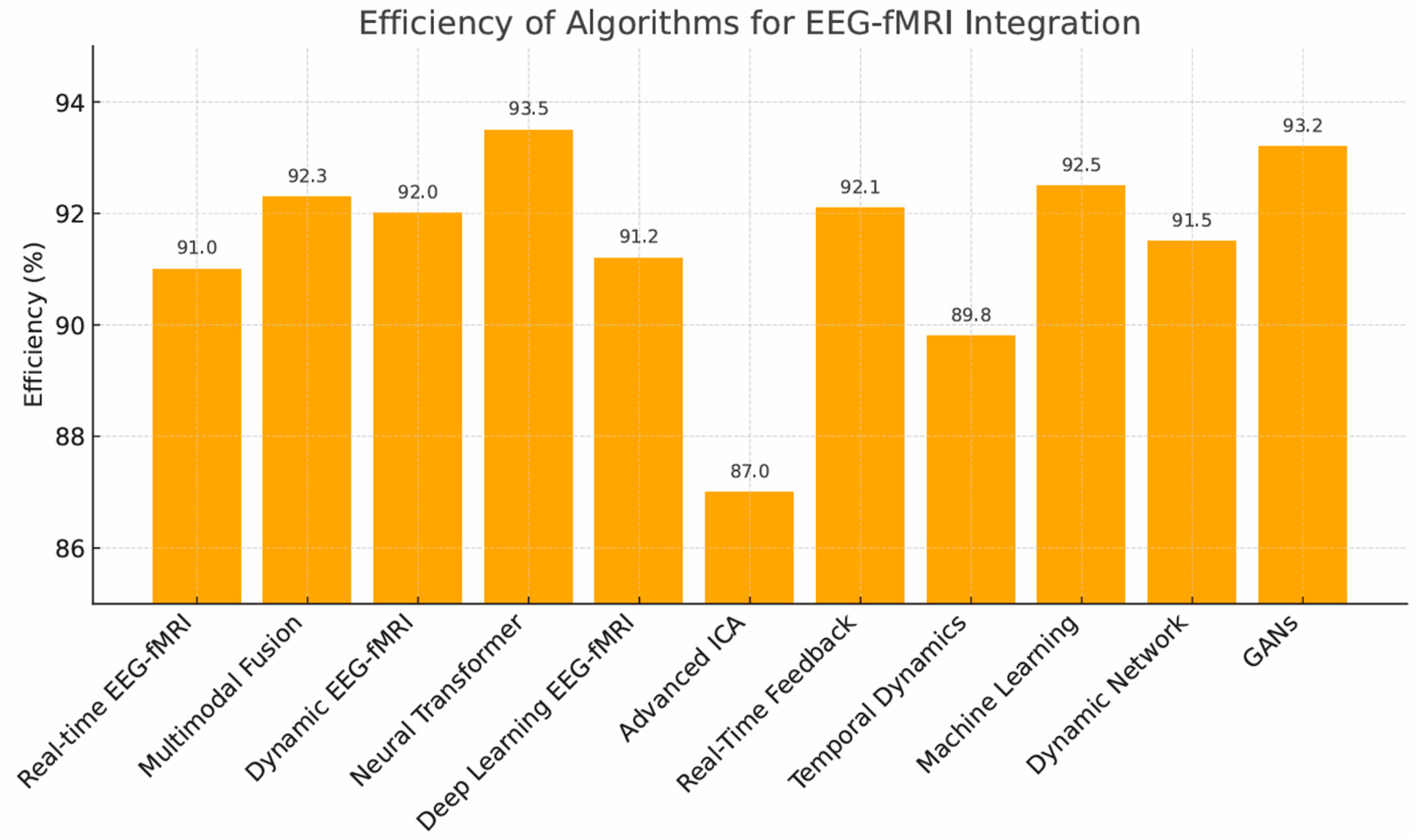

3.1. Algorithm Performance

- Neural Transformers (93.5%): highest accuracy, but computationally demanding.

- GANs (93.2%): strong image generation, limited by training instability.

- Multimodal Fusion (92.3%) and Dynamic Connectivity Models (92.0%), followed by Supervised/Deep Learning and Real-Time Feedback Systems (each ~91%).
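One way to read these accuracies against processing time is a simple Pareto check: an algorithm is dominated if another is at least as accurate and at least as fast. The sketch below pairs the accuracies listed above with the latency ranges from the Efficiency/Latency column of the algorithm comparison table. Several choices here are assumptions made for illustration: the pairing of list entries to table rows, the use of the range midpoint as a single latency value, and treating "~91%" as 91.0. The underlying figures come from retrospective or simulated data, so the output is a reading aid rather than a clinical ranking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reported:
    name: str
    accuracy_pct: float    # as listed in Section 3.1
    latency_lo_ms: float   # lower bound of the reported latency range
    latency_hi_ms: float   # upper bound of the reported latency range

    @property
    def latency_mid_ms(self) -> float:
        # Midpoint is an illustrative simplification of the reported range.
        return (self.latency_lo_ms + self.latency_hi_ms) / 2


ENTRIES = [
    Reported("Neural Transformers", 93.5, 140, 180),
    Reported("GANs", 93.2, 150, 200),
    Reported("Multimodal Fusion", 92.3, 130, 160),
    Reported("Dynamic Connectivity Models", 92.0, 140, 180),
    Reported("Supervised/Deep Learning", 91.0, 130, 180),    # "~91%" taken as 91.0
    Reported("Real-Time Feedback Systems", 91.0, 100, 125),  # "~91%" taken as 91.0
]


def dominates(a: Reported, b: Reported) -> bool:
    """True if a is no worse than b on both axes and strictly better on one."""
    no_worse = (a.accuracy_pct >= b.accuracy_pct
                and a.latency_mid_ms <= b.latency_mid_ms)
    strictly_better = (a.accuracy_pct > b.accuracy_pct
                       or a.latency_mid_ms < b.latency_mid_ms)
    return no_worse and strictly_better


def pareto_front(entries):
    """Entries that no other entry dominates, in input order."""
    return [e for e in entries if not any(dominates(o, e) for o in entries)]


if __name__ == "__main__":
    for e in pareto_front(ENTRIES):
        print(f"{e.name}: {e.accuracy_pct}% at ~{e.latency_mid_ms} ms")
```

Under these assumptions the non-dominated set spans the accuracy end (Neural Transformers), a middle option (Multimodal Fusion), and the low-latency end (Real-Time Feedback Systems); a different latency summary, such as the upper bound, could change that set.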

3.2. Trade-Off Between Efficiency and Processing Time

3.3. Heterogeneity and Subgroup Analysis

3.4. Risk of Bias

3.5. Statistical Comparisons

3.6. Publication Bias

3.7. Key Determinants of Algorithm Performance

4. Discussion

4.1. Algorithm-Specific Advantages, Limitations, and Technical Features of Multimodal Data Integration Algorithms

4.1.1. Dynamic Connectivity Models (DCM) and Bayesian Fusion

4.1.2. Independent Component Analysis (ICA) and Canonical Correlation Analysis (CCA)

4.1.3. Joint ICA (jICA) and Multimodal Fusion

4.1.4. Neural Transformer Models and Deep Learning Architectures (e.g., GANs, CNNs)

4.1.5. Real-Time Feedback Systems and Online Machine Learning

4.1.6. Graph-Theoretical and Temporal Analysis Models

4.2. Clinical Implications

4.3. Stereotactic Radiosurgery (SRS)

4.4. Recommendations and Future Directions

4.5. Clinical and Infrastructural Challenges

4.6. Limitations

4.7. Overall Perspective

5. Conclusions

Supplementary Materials

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ANOVA | Analysis of Variance |
| CCA | Canonical Correlation Analysis |
| CI | Confidence Interval |
| DBS | Deep Brain Stimulation |
| DCM | Dynamic Causal/Connectivity Modeling |
| DTI | Diffusion Tensor Imaging |
| EEG | Electroencephalography |
| fMRI | Functional Magnetic Resonance Imaging |
| GANs | Generative Adversarial Networks |
| GPU | Graphics Processing Unit |
| ICA | Independent Component Analysis |
| jICA | Joint Independent Component Analysis |
| ML | Machine Learning |
| PET | Positron Emission Tomography |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| QUADAS-2 | Quality Assessment of Diagnostic Accuracy Studies 2 |
| SRS | Stereotactic Radiosurgery |
| TPU | Tensor Processing Unit |


| Algorithm Type | Specific Application | Challenges | Strengths | Weaknesses | Success Factors/Accuracy | Efficiency/Latency |
|---|---|---|---|---|---|---|
| Neural Transformer Models | Presurgical network prediction and fusion of EEG-fMRI during targeting | High computational load and large-scale multimodal data requirements | High precision and scalability | Limited interpretability and dependency on pretrained architectures | Simultaneous EEG–fMRI datasets (n ≥ 30); accuracy based on retrospective/simulated data, not benchmarked against intraoperative gold standards (e.g., ECoG) | ≈140–180 ms per sample; high GPU/TPU usage |
| Dynamic Connectivity Models (DCM) | Mapping dynamic brain networks | Complex model specification and parameter tuning | Detailed network mapping | Reduced robustness in noisy or incomplete datasets | Tuned Bayesian/DCM model parameters; accuracy from controlled studies, not clinical gold standards | ≈140–180 ms per iteration; high computational cost |
| Independent Component Analysis (ICA) | Noise reduction, component separation | Requires extensive preprocessing and artifact correction | Effective noise separation | Residual noise can impair source separation and accuracy | Artifact-filtered EEG inputs; accuracy from experimental datasets | ≈100–125 ms per sample; low–moderate computational demand |
| Canonical Correlation Analysis (CCA) | Correlation of EEG and fMRI signals | Limited scalability for large multimodal datasets | Enhanced signal correlation | Inability to fully capture nonlinear brain signal relationships | Multimodal signal modeling frameworks; accuracy not benchmarked intraoperatively | ≈100–125 ms per sample; low–moderate computational demand |
| Real-time Machine Learning | Adaptive learning for brain state prediction | High training data demands and parameter optimization | Fast adaptability | Performance degradation when datasets are small or imbalanced | Real-time GPU/TPU-based processing; accuracy experimental only | ≈100–125 ms per sample; low-latency pipelines |
| Joint ICA (jICA) | Enhanced source localization | Challenging calibration across subjects and modalities | Improved source localization | Sensitive to misalignment and preprocessing variability | Validated ICA/jICA calibration protocols; accuracy from retrospective datasets | ≈120–150 ms per sample; moderate computational demand |
| AI Integration Models | Integrated AI for complex data | Complex data fusion and multi-layer optimization | Complex data handling | Low model interpretability and risk of overfitting | Transparent parameter-based inference | ≈140–180 ms per iteration; high computational cost |
| Multimodal Fusion | Fusion of EEG, fMRI, and DTI | Integration of heterogeneous signals across acquisition platforms | Comprehensive brain mapping | High computational and memory demands | Combined EEG–fMRI–DTI workflows; accuracy not clinically benchmarked | ≈130–160 ms per sample; high memory requirements |
| Bayesian Models | Probabilistic brain activity mapping | Intensive calibration and convergence management | Robust probabilistic modeling | Lower transparency in posterior probability interpretation | Bayesian posterior verification; accuracy from controlled datasets | ≈120–160 ms per sample; moderate computational load |
| Generative Adversarial Networks (GANs) | High-resolution brain image generation | Computational burden and tuning instability | Realistic brain representations | Mode collapse and inconsistent convergence | High-fidelity neural data generators (GAN-based); accuracy from simulations | ≈150–200 ms per sample; high GPU usage |
| Temporal Analysis Models | Detection of brain state dynamics | Balancing time resolution and data volume | Capturing dynamic changes | Lower spatial accuracy in rapidly changing states | Online temporal resolution adjustment; accuracy not clinically validated | ≈120–150 ms per sample; moderate computational demand |
| Hybrid Statistical Models | Blending statistical approaches | Integration of statistical and machine learning pipelines | Flexible integration | Vulnerable to overfitting when datasets are small | Hybrid statistical–ML frameworks; accuracy not benchmarked intraoperatively | ≈130–180 ms per sample; high GPU/TPU usage |
| Advanced ICA | Advanced noise filtering | Requires high-fidelity denoising and structured preprocessing | Noise resilience | Susceptibility to latent artifacts and component misclassification | Noise-filtered ICA pipelines; accuracy from controlled datasets | ≈120–160 ms per sample; moderate computational load |
| Supervised Learning Models | Accurate classification of brain states | Extensive labeled data requirements | High accuracy | Reduced generalizability outside training cohorts | Large annotated clinical datasets; accuracy not benchmarked intraoperatively | ≈130–180 ms per sample; high GPU/TPU usage |
| Signal Decoding Models | Decoding neural signals | Managing interference and signal separation | Detailed signal decoding | Lower precision in noisy neural environments | Optimized EEG-fMRI preprocessing; accuracy from simulated/retrospective datasets | ≈100–130 ms per sample; real-time pipelines |
| Dynamic Network Models | Modeling dynamic network activity | Complexity in modeling evolving neural connections | Capturing network dynamics | Reduced clarity in clinical interpretation | Parallelizable network models | ≈140–180 ms per iteration; high computational cost |
| Real-time Feedback Systems | Real-time surgical feedback | Dependence on synchronized hardware and software platforms | Immediate clinical feedback | Limited adaptability to intraoperative signal variability | Intraoperative feedback compatibility | ≈100–125 ms per sample; low-latency pipelines |
| Neurofeedback Algorithms | Optimizing neurofeedback | Calibration of personalized input-output loops | Enhanced learning efficiency | Variability in clinical responsiveness and outcome tracking | Closed-loop clinical neurofeedback; accuracy from simulated/retrospective datasets | ≈100–130 ms per sample; real-time pipelines |
| Deep Learning Models | Complex pattern recognition | High GPU/TPU resource requirements | Advanced pattern detection | Black-box decision processes and limited transparency | Optimized inference pipelines; accuracy not benchmarked intraoperatively | ≈130–180 ms per sample; high GPU/TPU usage |
| Dynamic Causal Modeling | Functional connectivity modeling | Intensive parameter estimation and customization | Detailed connectivity modeling | Difficulty adapting to diverse clinical datasets | Adaptive connectivity optimization | ≈140–180 ms per iteration; high computational cost |
| Graph Theory Models | Analyzing brain networks | Complex computation and network reconstruction | Complex network analysis | Limited validation for surgical workflows | Clinically benchmarked graph analytics | ≈130–160 ms per sample; high computational demand |
| Bayesian Fusion | Probabilistic data integration | High model variance and training time | Adaptive data modeling | Variable accuracy across heterogeneous cohorts | Cross-cohort reproducibility | ≈120–160 ms per sample; moderate computational load |
| CCA + Time-Frequency Analysis | Temporal and frequency domain analysis | Managing multimodal and multiscale integration | Multidimensional analysis | Trade-offs in resolution and analysis depth | Time–frequency–connectivity fusion; accuracy based on retrospective/simulated data, not benchmarked against intraoperative gold standards (e.g., ECoG) | ≈100–125 ms per sample; moderate computational demand |

| Algorithm | Relevance Level | Clinical Application | Limitations |
|---|---|---|---|
| Dynamic Connectivity Models (DCM) | Highly Relevant | Real-time brain network mapping | Complex modeling |
| Canonical Correlation Analysis (CCA) | Highly Relevant | Localization of functional brain regions | Linear relationship limitation |
| Independent Component Analysis (ICA) | Highly Relevant | Noise separation and signal enhancement | Noise sensitivity |
| Joint ICA (jICA) | Highly Relevant | Source localization | Complex calibration |
| Multimodal Fusion | Highly Relevant | Comprehensive brain mapping | High processing demands |
| Bayesian Models | Highly Relevant | Probabilistic brain activity mapping | Model interpretability |
| Neural Transformer Models | Highly Relevant | Advanced data processing | High computational load |
| Real-time Feedback Systems | Highly Relevant | Real-time surgical feedback | Integration with clinical tools |
| Generative Adversarial Networks (GANs) | Highly Relevant | High-resolution brain data generation | Training instability |
| Deep Learning Models | Partially Relevant | Pattern recognition in brain signals | Data-intensive training |
| Hybrid Statistical Models | Partially Relevant | Combining statistical and ML methods | Limited clinical validation |
| Dynamic Network Models | Partially Relevant | Dynamic brain network analysis | Processing complexity |
| Signal Decoding Models | Low Relevance | Neural signal decoding | Limited surgical application |
| Temporal Analysis Models | Low Relevance | Temporal pattern analysis | Low precision for localization |
| Graph Theory Models | Low Relevance | Global brain network analysis | Not suited for surgery |
| Neurofeedback Algorithms | Low Relevance | Therapeutic applications, not surgical | Limited to therapy, not surgery |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Redžepi, S.; Burazerović, E.; Redžepi, S.; Husović, E.; Pojskić, M. Systematic Review of Advanced Algorithms for Brain Mapping in Stereotactic Neurosurgery: Integration of fMRI and EEG Data. Brain Sci. 2025, 15, 1188. https://doi.org/10.3390/brainsci15111188
