Fractal Neural Dynamics and Memory Encoding Through Scale Relativity

Abstract

1. Introduction

- Neural activity is modeled as a complex-valued wave field propagating through a fractal space-time, yielding nonlinear solutions such as solitons and cnoidal waves.

2. Materials and Methods

2.1. Mathematical Framework

2.1.1. Scale Relativity and Fractal Velocity

- v(x,t) is the smooth, classical velocity field corresponding to deterministic signal propagation.

- w(x,t,δt) is the stochastic fractal fluctuation, defined as

2.1.2. Covariant Derivative and Complex Dynamics

2.1.3. Fractal Neural Activity Equation

- Solitons (localized wave packets);

- Cnoidal waves (periodic, elliptic function-based solutions), depending on the signs and magnitudes of the parameters α and β.
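For orientation, the two solution families listed above can be written in a generic textbook form. The amplitude A, width w, wavenumber k, and elliptic modulus m are illustrative symbols introduced here; they are not derived from the coefficients α and β of the governing equation, and 𝒦(m) denotes the complete elliptic integral of the first kind (unrelated to the carrying capacity K used later).

```latex
\psi_{\mathrm{sol}}(x) = A\,\operatorname{sech}\!\left(\frac{x}{w}\right),
\qquad
\psi_{\mathrm{cn}}(x) = A\,\operatorname{cn}(kx \mid m), \quad 0 \le m \le 1,
\qquad
\text{spatial period } \lambda = \frac{4\,\mathcal{K}(m)}{k}.

% Limiting cases of the Jacobi elliptic cosine:
\operatorname{cn}(u \mid m) \xrightarrow{\; m \to 0 \;} \cos u,
\qquad
\operatorname{cn}(u \mid m) \xrightarrow{\; m \to 1 \;} \operatorname{sech} u .
```

The second limit shows why the two families are related: as m approaches 1 the period diverges and a single cnoidal crest takes the sech shape of a soliton.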

2.1.4. First Integral and Wave Amplitude Evolution

2.1.5. Synaptic Plasticity Model

- ρ is the intrinsic growth rate of synaptic strength.

- K is the carrying capacity, representing a saturation limit.

- α|ψ|² modulates plasticity based on the local energy or intensity of neural activity.

- D_W controls diffusion of plasticity across the synaptic field, allowing spatial smoothing and interaction.
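The interplay of the four terms above can be illustrated with a minimal numerical sketch. It assumes the update takes the form ∂W/∂t = ρW(1 − W/K) + α|ψ|² + D_W ∂²W/∂x², with the activity term acting as an additive drive; this form, the function names `laplacian_neumann_1d` and `evolve_plasticity`, the sech² drive, the forward-Euler step, and the initial weight of 0.1 are all assumptions made for illustration and may differ from the model equation.

```python
import numpy as np

def laplacian_neumann_1d(W, dx):
    """3-point Laplacian; edge-replicated ghost cells give zero-gradient (no-flux) ends."""
    Wp = np.pad(W, 1, mode="edge")
    return (Wp[2:] - 2.0 * Wp[1:-1] + Wp[:-2]) / dx**2

def evolve_plasticity(psi_sq, W0, dx, dt, n_steps,
                      rho=1.0, K=1.0, alpha=1.0, D_W=0.5):
    """Forward-Euler integration of the ASSUMED logistic reaction-diffusion rule."""
    W = W0.copy()
    for _ in range(n_steps):
        growth = rho * W * (1.0 - W / K)            # logistic growth toward K
        drive = alpha * psi_sq                      # assumed additive activity drive
        spread = D_W * laplacian_neumann_1d(W, dx)  # lateral diffusion of plasticity
        W = W + dt * (growth + drive + spread)
    return W

# Hypothetical test case: a static, soliton-shaped intensity centered at x = 0.
x = np.linspace(-50.0, 50.0, 500)
dx = x[1] - x[0]
psi_sq = 1.0 / np.cosh(x) ** 2
W0 = np.full_like(x, 0.1)
W = evolve_plasticity(psi_sq, W0, dx, dt=0.01, n_steps=500)
```

Under these assumptions the weight field saturates near K far from the input and rises above it only where |ψ|² is appreciable, which is the qualitative behavior of a localized potentiation zone.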

2.1.6. Complexity Measure: Spectral Entropy of the Synaptic Weight Field

2.1.7. Hysteresis Quantification

- Global hysteresis index (loop area): normalized as

- Reversibility index: Measuring recovery toward baseline W_0(x), where W_fwd and W_rev are the endpoint fields after forward and reverse phases. R = 1 indicates full reversibility; R = 0 indicates no recovery.

- Trace overlap: Cosine similarity between post-forward and post-reverse maps, with Ω = 1 for identical traces.

- Per-position hysteresis density: Local contributions, visualized as spatial maps (Supplementary Material S2, Figures S2 and S3) to identify path-dependent “hot spots”.
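Two of the metrics above can be sketched directly from their verbal definitions. The trace overlap follows the stated cosine-similarity definition; the reversibility index uses an assumed norm-ratio form, R = 1 − ‖W_rev − W_0‖ / ‖W_fwd − W_0‖, chosen only because it reproduces the stated end points (R = 1 and R = 0). The function names and the Gaussian test fields are hypothetical.

```python
import numpy as np

def trace_overlap(W_fwd, W_rev):
    """Cosine similarity (Omega) between post-forward and post-reverse maps."""
    num = float(np.dot(W_fwd.ravel(), W_rev.ravel()))
    den = float(np.linalg.norm(W_fwd) * np.linalg.norm(W_rev))
    return num / den

def reversibility_index(W0, W_fwd, W_rev):
    """ASSUMED form: 1 - ||W_rev - W0|| / ||W_fwd - W0|| (1 = full recovery, 0 = none)."""
    return 1.0 - float(np.linalg.norm(W_rev - W0) / np.linalg.norm(W_fwd - W0))

# Hypothetical fields: the forward phase adds a bump, the reverse phase removes 70% of it.
x = np.linspace(-50.0, 50.0, 500)
bump = np.exp(-x**2 / 8.0)
W0 = np.full_like(x, 0.1)
W_fwd = W0 + bump
W_rev = W0 + 0.3 * bump

omega = trace_overlap(W_fwd, W_rev)
R = reversibility_index(W0, W_fwd, W_rev)
```

In this toy case R evaluates to 0.7 by construction, while Ω stays below 1 because the residual bump changes the shape of the map relative to the uniform baseline.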

2.2. Methods

- 1D simulations: Spatial domain x ∈ [−50, 50], discretized into 500 points.

- 2D simulations: Spatial domain (x, y) ∈ [−10, 10] × [−10, 10], discretized into 200 × 200 grid points.

- Time domain: t ∈ [0, T], with T = 50 or T = 100, and a time step Δt = 0.01.

- Fractal diffusion coefficient: D = 1.0 (unless otherwise stated).

- Wave propagation speed: c = 1.0.

- Nonlinear wave equation coefficients:

  - α = 0.8 (controls linear amplification).

  - β = −1.2 (controls nonlinear compression).

- Plasticity model parameters:

  - Reaction rate ρ = 1.0.

  - Plasticity decay term α = 1.0 (the coefficient of |ψ|² in the plasticity rule, distinct from the wave-equation coefficient α = 0.8).

  - Plasticity carrying capacity K = 1.0.

  - Diffusion coefficient D_W = 0.5.

- Laplacians were discretized using a standard 3-point (1D) or 5-point (2D) stencil.

- Boundary conditions were periodic in space for wave simulations and Neumann (no-flux) for plasticity evolution.
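The discretization choices listed above can be sketched as follows. The stencils and boundary treatments are the standard ones named in the list; the helper names, the use of `np.linspace` grids that include both end points, and the `diffusion_number` check are assumptions added for illustration.

```python
import numpy as np

def laplacian_1d(u, dx, bc="periodic"):
    """3-point Laplacian with periodic or Neumann (no-flux) boundaries."""
    if bc == "periodic":
        return (np.roll(u, -1) - 2.0 * u + np.roll(u, 1)) / dx**2
    up = np.pad(u, 1, mode="edge")  # replicated ghost cells: zero normal gradient
    return (up[2:] - 2.0 * up[1:-1] + up[:-2]) / dx**2

def laplacian_2d(u, h, bc="periodic"):
    """5-point Laplacian on a square grid with spacing h."""
    if bc == "periodic":
        nb = (np.roll(u, 1, 0) + np.roll(u, -1, 0) +
              np.roll(u, 1, 1) + np.roll(u, -1, 1))
        return (nb - 4.0 * u) / h**2
    up = np.pad(u, 1, mode="edge")
    nb = up[2:, 1:-1] + up[:-2, 1:-1] + up[1:-1, 2:] + up[1:-1, :-2]
    return (nb - 4.0 * u) / h**2

def diffusion_number(D, dt, h):
    """Dimensionless ratio D*dt/h^2 that governs explicit diffusion stability."""
    return D * dt / h**2

# Grids and parameters as listed above (end-point-inclusive grids are an assumption).
x = np.linspace(-50.0, 50.0, 500)
dx = x[1] - x[0]
axis2d = np.linspace(-10.0, 10.0, 200)
h = axis2d[1] - axis2d[0]
dt, D_W = 0.01, 0.5

r_1d = diffusion_number(D_W, dt, dx)
r_2d = diffusion_number(D_W, dt, h)
```

With the listed values this gives roughly 0.12 on the 1D grid and roughly 0.49 on the 2D grid. For a forward-Euler step (an assumption, since the time integrator is not stated here) the usual diffusion bounds are 1/2 in 1D and 1/4 in 2D, so under that assumption the 2D plasticity update would need a smaller step or an implicit scheme.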

3. Results

3.1. Simulation

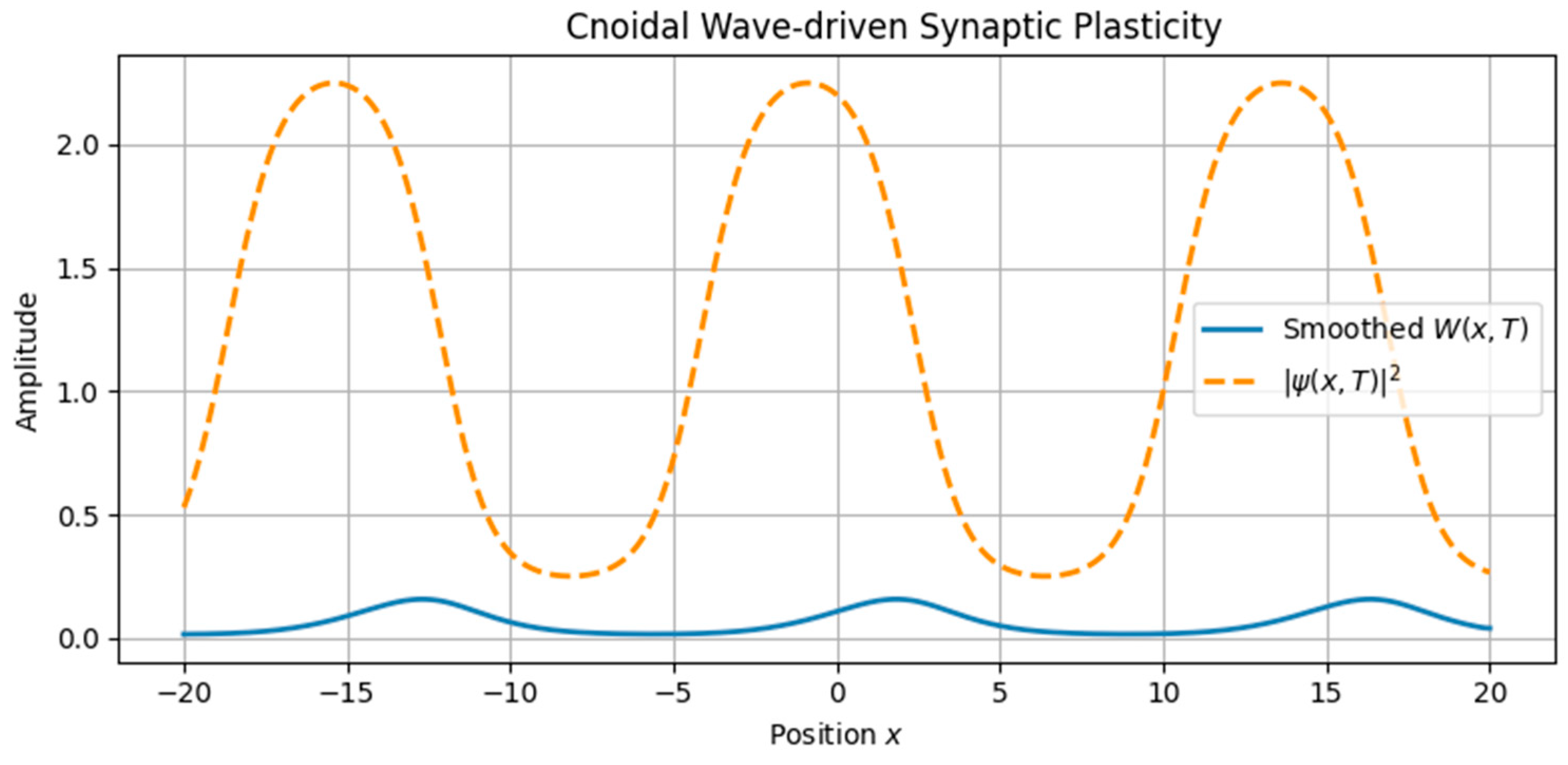

3.1.1. Soliton and Cnoidal Wave-Induced Plasticity

- •

- Soliton waves were generated from Equation (5), with parameters supporting localized, bell-shaped wave packets.

- •

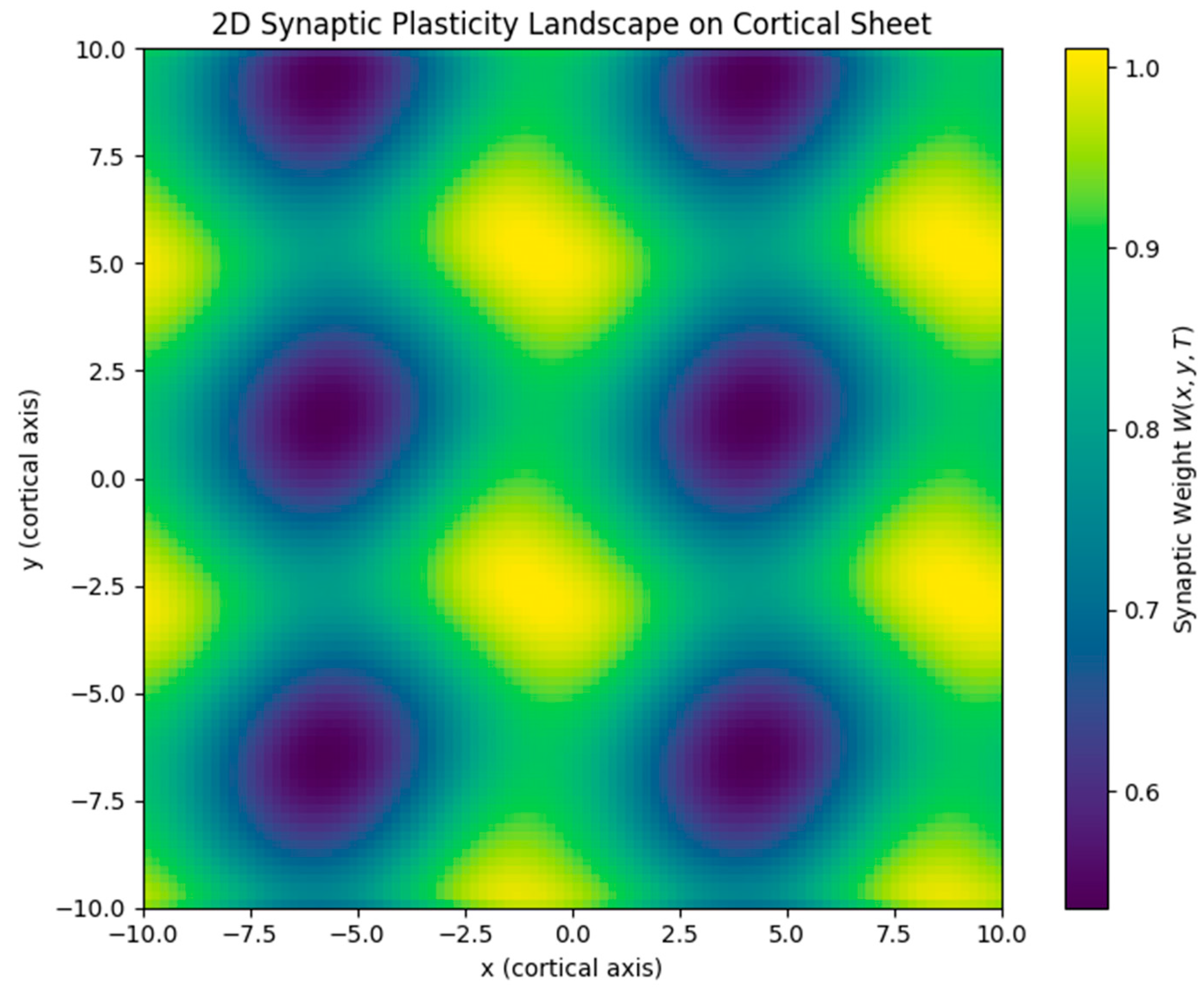

- Cnoidal waves, solutions of the same equation under different boundary conditions, were modeled using Jacobi elliptic functions, yielding spatially periodic activity patterns.

- •

- Localized soliton input induced sharply confined increases in synaptic weight.

- •

- Cnoidal wave input produced periodic modulation in the plasticity field, replicating structured, grid-like patterns.

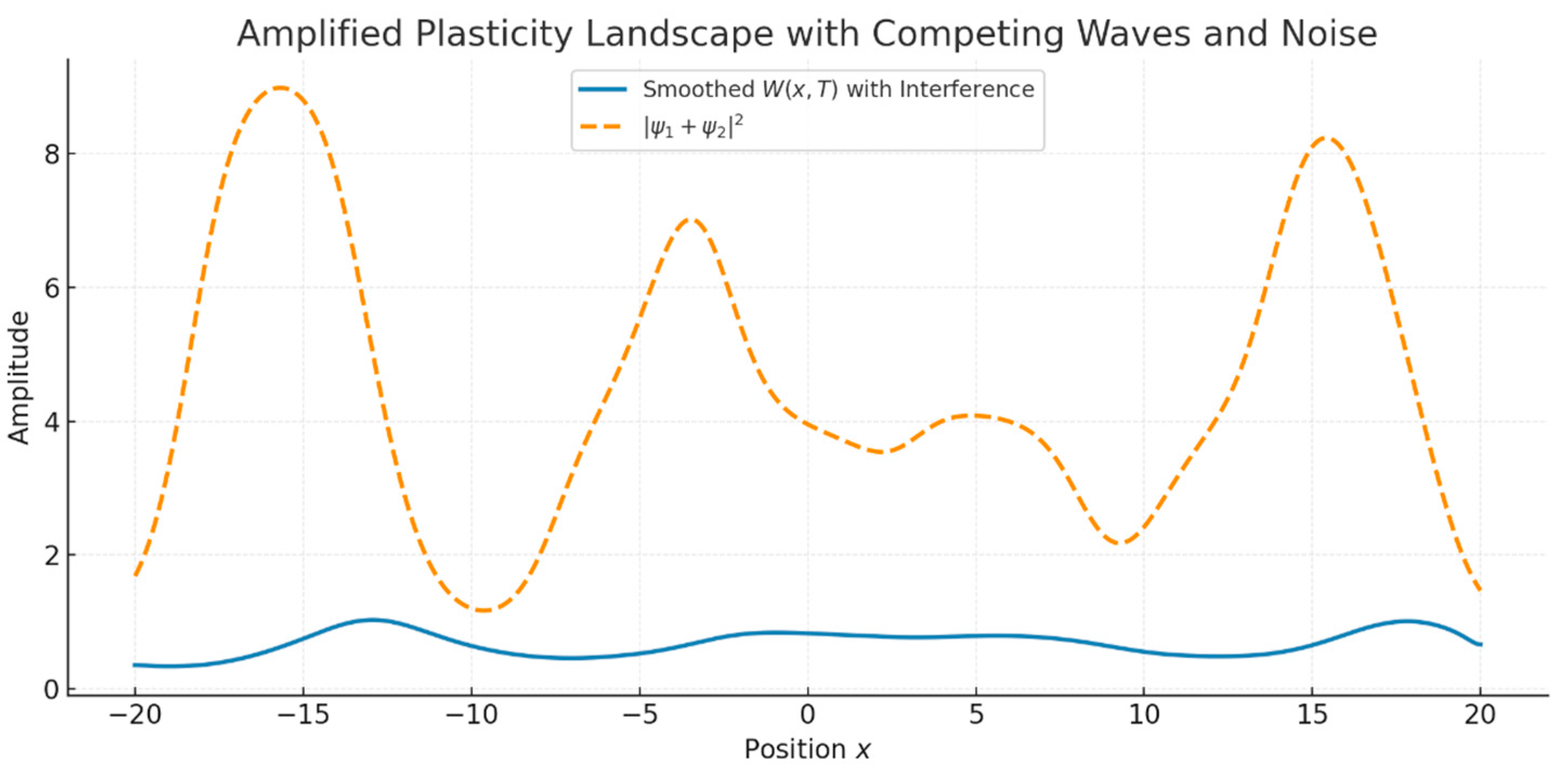

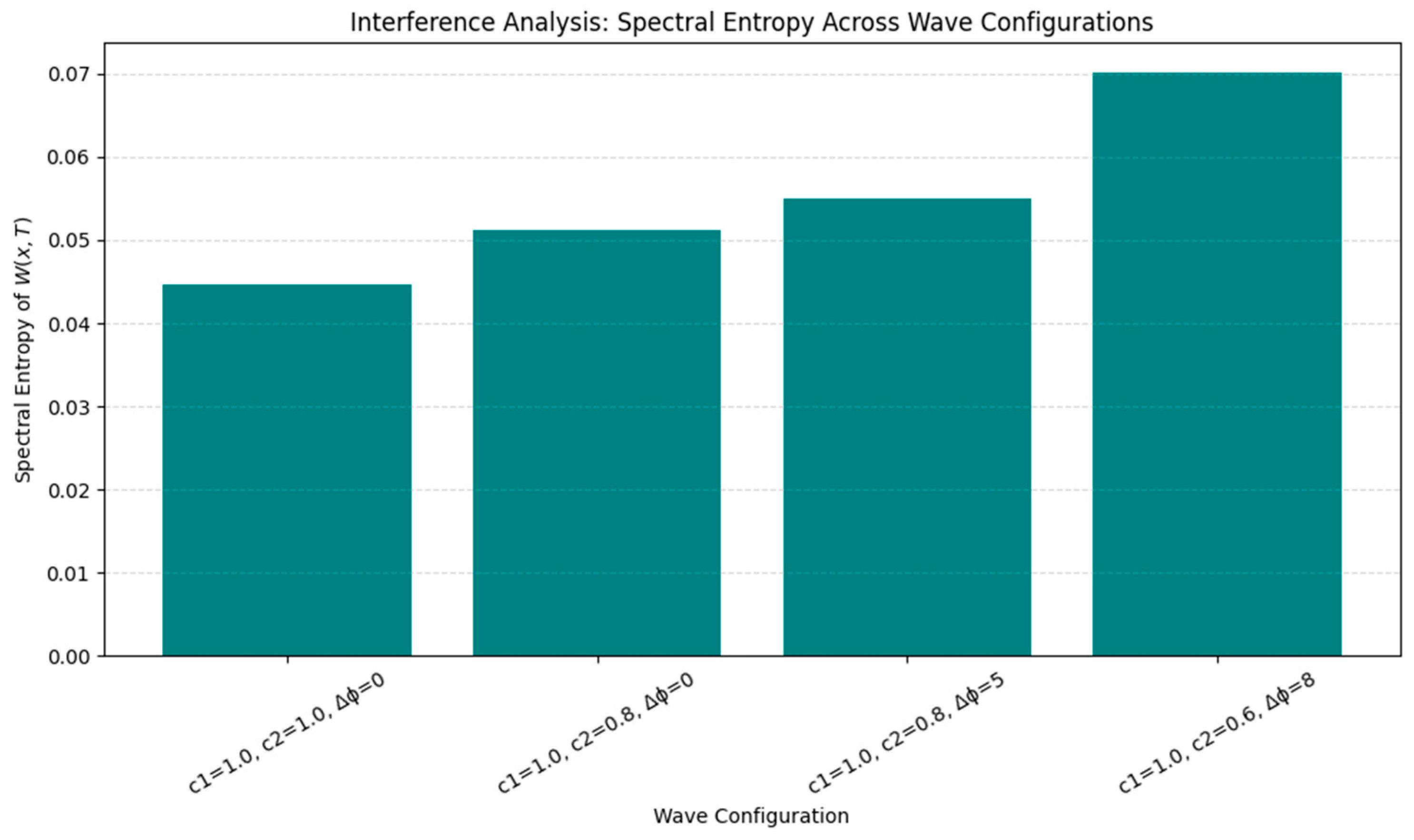

3.1.2. Interference and Noise: Emergent Complexity

- Interference yielded a modulated plasticity landscape with alternating zones of constructive and destructive enhancement.

- The addition of noise slightly distorted but did not destroy the overall structure, demonstrating a degree of noise robustness (Figure 5).

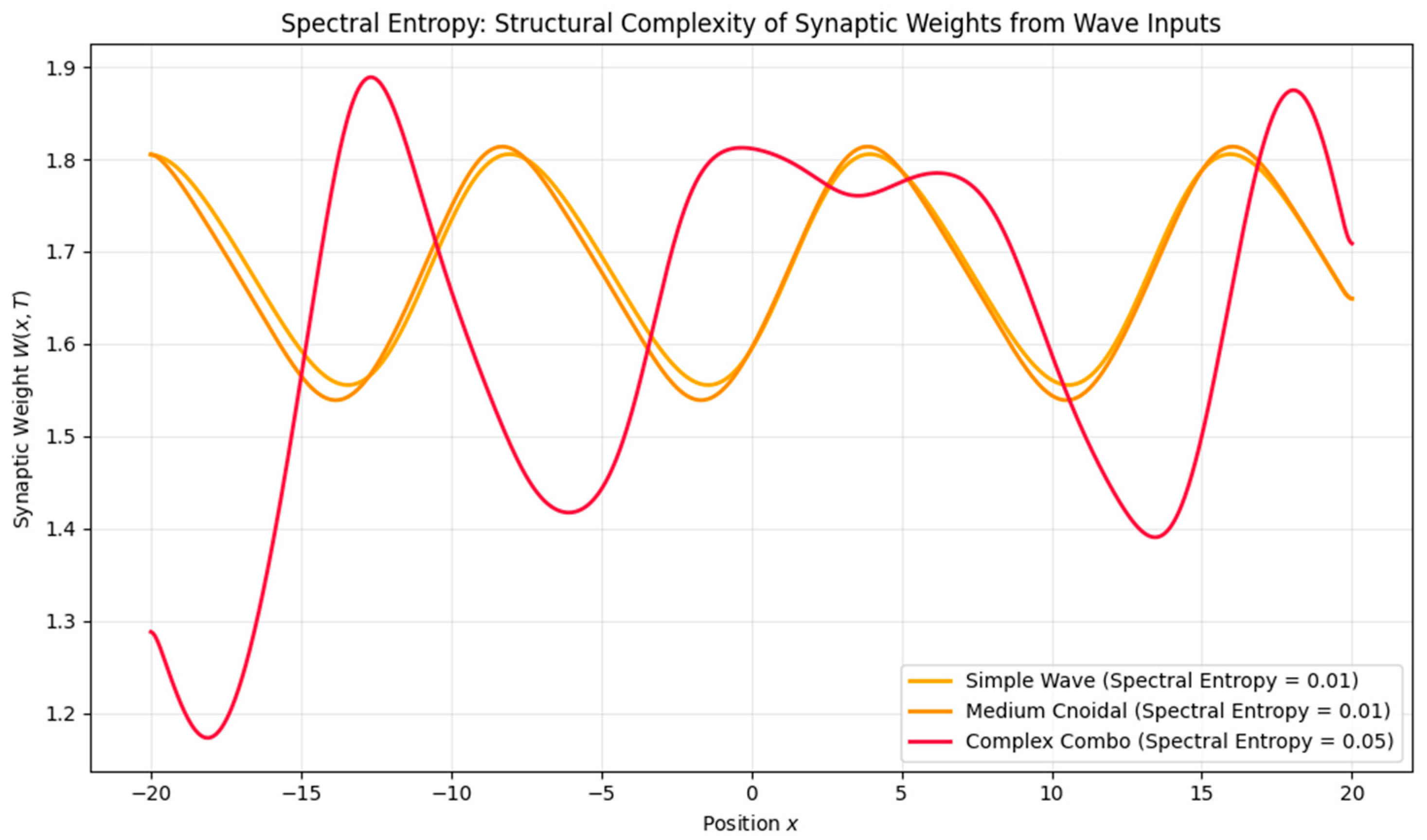

3.1.3. Quantitative Trace Analysis

- Entropy Analysis (Shannon spectral entropy) yielded a value of ~6.89, indicating a memory trace that is moderately complex and spatially distributed—neither overly localized nor fully diffuse. From a cognitive perspective, low entropy corresponds to highly specific traces dominated by a single spatial frequency component, analogous to sharply tuned hippocampal place fields. High entropy, by contrast, reflects a broadband, multi-frequency structure, consistent with distributed and overlapping codes such as entorhinal grid patterns or modular cortical maps. Although spectral entropy is not identical to the spatial information content commonly used in the place cell literature [16], both serve to index representational richness and discriminability. In this sense, spectral entropy can be interpreted as a complementary complexity measure, quantifying how richly structured or compressed a simulated memory trace is.

- Cross-correlation between the neural intensity and the synaptic weight field showed a strong positive peak (maximum ≈ 3.42; because this exceeds 1, it is an unnormalized cross-correlation value rather than a correlation coefficient), indicating that plasticity reliably tracks the input structure even under interference.

- Fourier Spectrum Analysis revealed a dominant low-frequency component corresponding to the beat frequency of the two interfering cnoidal waves. This suggests the preferential encoding of coarse-grained spatial information (Figure 6).
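The spectral entropy used in the first item above can be sketched as the Shannon entropy of the normalized spatial power spectrum. The function name, the removal of the mean before the transform, and the base-2 logarithm are assumptions; the logarithm base and normalization behind the reported value are not specified in this section.

```python
import numpy as np

def spectral_entropy(W, base=2.0):
    """Shannon entropy of the normalized power spectrum of a 1D field W."""
    W = np.asarray(W, dtype=float)
    power = np.abs(np.fft.rfft(W - W.mean())) ** 2  # mean removal is an assumption
    total = power.sum()
    if total == 0.0:
        return 0.0                                   # constant field: no spectral content
    p = power / total
    p = p[p > 0]                                     # skip empty bins (0 * log 0 := 0)
    return float(-(p * np.log(p)).sum() / np.log(base))

# Hypothetical test fields on a 500-point grid.
n = 500
idx = np.arange(n)
single = np.sin(2.0 * np.pi * 5 * idx / n)           # one spatial frequency
double = single + np.sin(2.0 * np.pi * 12 * idx / n)  # two equal-power frequencies
noise = np.random.default_rng(0).normal(size=n)      # broadband field

H_single = spectral_entropy(single)
H_double = spectral_entropy(double)
H_noise = spectral_entropy(noise)
```

A single spatial frequency gives an entropy near zero, two equal-power components give one bit, and broadband noise approaches the upper bound, which for this estimator on 500 points is log2(250) ≈ 7.97 bits. This matches the interpretation in the text of low entropy as a sharply tuned trace and high entropy as a multi-frequency one.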

3.1.4. Temporal Evolution and Hysteresis

- Gradual buildup of potentiation in regions of sustained neural activity.

- Temporal saturation without runaway growth or collapse.

- Increasing differentiation and contrast over time, eventually stabilizing.

- Some memory traces persisted despite the reversal.

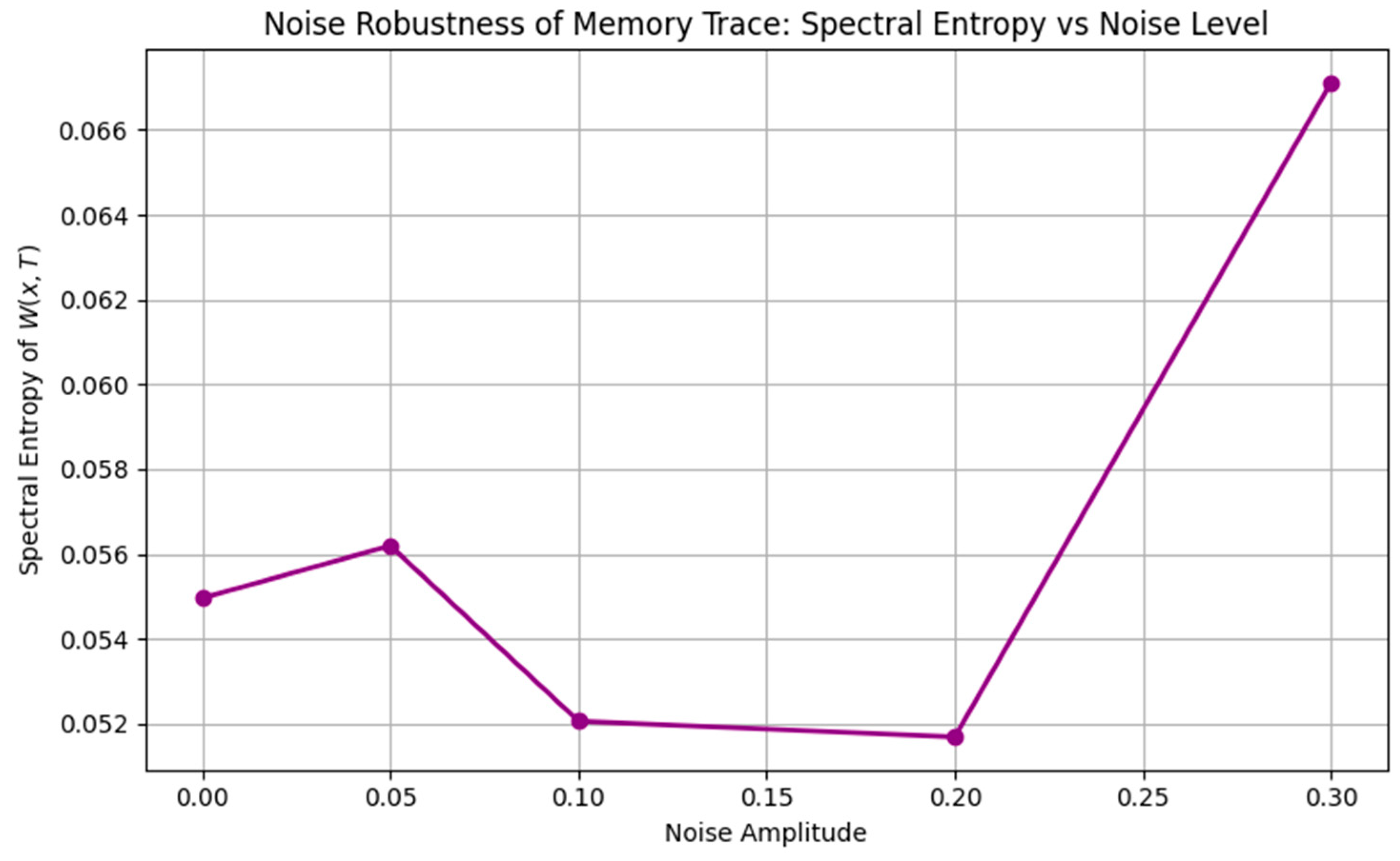

3.1.5. Functional Complexity, Robustness, and Interference

- Waveform Complexity: Higher-frequency or nested inputs (e.g., theta–gamma modulation) induced higher spectral entropy, suggesting a richer, less compressible memory trace.

- Noise Robustness: Low noise preserved the memory structure, moderate noise introduced blurring, and high noise eventually erased all encoding.

3.2. Extensions and Predictions

3.2.1. Cross-Frequency Coupling

3.2.2. Multi-Dimensional Plasticity

3.2.3. Biological Fitting

Entorhinal Cortex (Grid Cells)

Hippocampus (CA1 Place Fields)

Primary Visual Cortex (V1)

4. Discussion

4.1. Biological Implications

4.2. General Analysis

4.2.1. Summary and Interpretation of Key Findings

- Soliton-like waves induce localized, persistent plasticity, aligning with the observations of sharp, spatially confined representations such as hippocampal place fields [14].

- Cnoidal (periodic) waves, by contrast, produce regularly spaced plasticity landscapes resembling grid cell patterns in the entorhinal cortex [13].

4.2.2. Relationship with Existing Work

- Neural waves and rhythms in information flow. Empirical studies have shown that traveling cortical waves [19,20] and nested oscillations such as theta–gamma coupling [11,21] coordinate neural activity across spatial and temporal scales and are crucial for learning, working memory, and attention [22]. By modeling waves as carriers of both energy and structure, our work provides a formal mechanism for how such dynamics might imprint memory onto synaptic substrates.

- Plasticity mechanisms and memory models. Classical accounts such as spike-timing-dependent plasticity (STDP) [3,4], cascade models of synaptic memory [23], and homeostatic plasticity frameworks [24] explain how local timing rules, probabilistic cascades, or stability constraints shape synaptic strength. Our reaction–diffusion rule, coupled with nonlinear wave dynamics, complements these frameworks by emphasizing the spatially distributed aspects of plasticity. Unlike pointwise or pair-based rules, it explicitly incorporates diffusion and wave interference, thereby generating map-like synaptic organizations and interference-driven distortions.

4.2.3. Limitations and Assumptions

- Dimensionality. Most simulations were conducted in 1D or simplified 2D environments. While these are sufficient for modeling linear cortical strips or hippocampal slices, full cortical modeling would require extending this to 3D networks with heterogeneous geometries. A further limitation is that our 2D results have thus far been evaluated only qualitatively. Although the simulated maps reproduce hexagonal grid-like tiling and modular V1-like domains, they have not been quantitatively benchmarked against biological data. Established metrics such as the grid score (from spatial autocorrelation analyses) and the orientation selectivity index (OSI) would provide stronger validation and enable parameter fitting across datasets. We regard this as a key direction for future work. Furthermore, our analysis shows that the hexagonal symmetry observed in 2D simulations is contingent on the relative orientation of the component waves. While the grid pattern is robust to small angular perturbations (±5–10°), larger deviations disrupt the symmetry. This suggests that interference-driven map formation depends on geometric constraints that may reflect or limit the flexibility of biological grid cell formation mechanisms.

- Simplified plasticity. Although the logistic reaction–diffusion model captures important qualitative dynamics, it does not account for complex biological processes such as neuromodulatory gating (e.g., dopamine, acetylcholine), synaptic tagging, or glial influence. The framework should therefore be regarded as a minimal proof-of-concept rather than a complete biophysical description of synaptic plasticity.

- No direct behavioral link. Our simulations do not yet connect memory traces to behavior or decision-making. Future work should investigate how structured plasticity fields relate to navigation, recall, or pattern recognition.

- Speculative assumption of fractal geodesics. A core feature of the model is the assumption that neural signal propagation can be described by fractal geodesics in non-differentiable space-time, as formalized in the Scale Relativity Theory [1,5]. While fractal and scale-free properties are widely observed in neural anatomy and dynamics, there is no direct evidence from neurophysiological recordings that signals follow such geodesic trajectories. This assumption should therefore be framed as speculative and hypothesis-generating.

- Empirical comparison and validation. Although our qualitative comparisons show structural parallels with grid cells [13], CA1 place fields [14], and V1 orientation maps [15], these remain preliminary. Stronger validation will require quantitative fitting across datasets and experimental tests of the model’s predictions.

- Robustness and universality. The present results demonstrate a tolerance to noise and the repeated emergence of structured motifs, but these behaviors are simulation-based and parameter-dependent. Claims of robustness and universality should therefore be moderated until supported by systematic sensitivity analyses.

- Benchmarking against established models. The framework was not explicitly compared with conventional accounts such as oscillatory and traveling wave models [3,4], short-term dynamics and STDP [16], or engram-level studies linking plasticity to memory [18]. Future benchmarking against these well-established frameworks will be critical to clarify what is unique about the SRT formulation and where it aligns with or diverges from existing theories.

- Hysteresis quantification. Although we have now introduced formal hysteresis metrics (loop area |H|, normalized hysteresis index H_norm, reversibility index R, and trace overlap Ω), these are based on simulations. Validation against experimental data on synaptic reversibility (e.g., extinction and reconsolidation studies [12,13]) will be essential to establish biological relevance.

- Cross-frequency coupling. Our implementation of the theta–gamma interaction (Equation (13)) represents a minimal amplitude-modulated wave. This does not fully reflect the phase–amplitude coupling observed in hippocampal circuits, where gamma bursts are locked to specific theta phases. Future extensions should incorporate more physiologically grounded PAC models (e.g., modulation index-based parametrizations) to better connect with experimental data.
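The distinction drawn in the last item can be made concrete with a minimal amplitude-modulated signal. The form (1 + d·cos θ)·cos γ, the function name `theta_gamma_am`, and the values of 8 Hz, 40 Hz, and modulation depth 0.5 are assumptions for illustration; the exact form of Equation (13) is not reproduced in this section.

```python
import numpy as np

def theta_gamma_am(t, f_theta=8.0, f_gamma=40.0, depth=0.5):
    """Gamma carrier whose amplitude is modulated by a slower theta rhythm."""
    envelope = 1.0 + depth * np.cos(2.0 * np.pi * f_theta * t)
    return envelope * np.cos(2.0 * np.pi * f_gamma * t)

fs = 1000.0                    # sampling rate in Hz (hypothetical)
t = np.arange(1000) / fs       # exactly 1 s, so both rhythms fit whole cycles
signal = theta_gamma_am(t)

# Locate the three strongest spectral lines.
spectrum = np.abs(np.fft.rfft(signal))
freqs = np.fft.rfftfreq(t.size, d=1.0 / fs)
top3 = np.sort(freqs[np.argsort(spectrum)[-3:]])
```

Under these assumptions the spectrum contains the gamma carrier plus two sidebands at f_gamma ± f_theta and no line at f_theta itself. The gamma amplitude varies smoothly over the whole theta cycle, whereas phase–amplitude coupling in the physiological sense confines gamma bursts to a preferred theta phase.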

4.2.4. Future Directions

- Cross-frequency coupling: Preliminary results with nested theta–gamma waves suggest rich possibilities for encoding sequences or hierarchical memory structures. This should be systematically explored using empirical oscillatory parameters [21].

- Integration with spiking models: Coupling the wave plasticity model to spiking networks could offer a bridge between abstract field dynamics and biologically detailed simulations [26].

- Realistic cortical topologies: Mapping our interference-based plasticity model onto anatomically accurate cortical surfaces could explain the map formation in V1, auditory tonotopy, or motor coordination patterns.

- Behavioral modeling: Memory-driven behavior, including path planning, goal-directed recall, or contextual modulation, could be simulated by feeding wave-shaped plasticity fields into higher-order decision models.

- Validation through imaging and optogenetics: Experimental designs could use patterned stimulation to induce traveling waves and observe whether similar plasticity patterns emerge in vivo [20].

- Experimental validation. Although our work is simulation-based, it generates several testable predictions that can guide empirical studies. First, targeted optogenetic stimulation patterns could be used to induce traveling or interfering waves in cortical tissue; our model predicts that such patterned inputs would leave structured synaptic weight maps resembling grid-like or modular arrangements. Second, in vivo hippocampal recordings under theta–gamma coupling paradigms could be analyzed for plasticity hysteresis, with partial reversibility expected during extinction or reversal learning. Third, quantitative analyses of cortical maps (grid scores, orientation selectivity indices) under controlled perturbations of oscillatory phase relationships could directly assess whether interference geometry governs the emergence of spatial symmetries, as predicted here. These paradigms provide concrete routes to falsify or refine the framework and to bridge theory with experiment. To facilitate empirical testing, we summarize, in Supplementary Material S2, Table S2, a set of model predictions alongside concrete experimental paradigms. These include the optogenetic induction of traveling waves, grid cell stimulation under interference angles, closed-loop theta–gamma modulation, and extinction/reversal protocols for probing hysteresis. This table provides a roadmap for the validation and falsification of the present framework.

4.2.5. Implications for Neuroscience and AI

- Introducing a novel mathematical formalism for synaptic plasticity grounded in the Scale Relativity Theory.

- Demonstrating explanatory power across multiple scales, from synaptic-level learning to map-like cortical organization.

- Offering testable predictions and clear paths for extension through both computational refinement and empirical validation.

5. Conclusions

- Localized waveforms generate sharp, discrete potentiation zones, modeling features like place fields;

- Periodic waves yield grid-like or modular synaptic architectures, paralleling grid cells and cortical maps;

- Wave interference and noise produce complex plasticity landscapes, offering a mechanism for memory interference, competition, and contextual encoding.

- Time-dependent plasticity buildup and saturation;

- Asymmetric forgetting and hysteresis;

- Selectivity for structured input over unstructured or transient signals.

- Experimental validation using patterned stimulation and imaging;

- Computational extension to more realistic, high-dimensional neural systems;

- Application to neuromorphic computing where robust, distributed memory is a central design challenge.

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Hebb, D.O. The Organization of Behavior: A Neuropsychological Theory; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 1949.

2. Bi, G.Q.; Poo, M.M. Synaptic modifications in cultured hippocampal neurons: Dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci. 1998, 18, 10464–10472.

3. Buzsáki, G. Rhythms of the Brain; Oxford University Press: Oxford, UK, 2006.

4. Muller, L.; Chavane, F.; Reynolds, J.; Sejnowski, T.J. Cortical travelling waves: Mechanisms and computational principles. Nat. Rev. Neurosci. 2018, 19, 255–268.

5. Nottale, L. Fractal Space-Time and Microphysics: Towards a Theory of Scale Relativity; World Scientific: Singapore, 1993.

6. Buzea, C.G.; Agop, M.; Moraru, E.; Stana, B.A.; Girtu, M.; Iancu, D. Some implications of Scale Relativity theory in avascular stages of growth of solid tumors in the presence of an immune system response. J. Theor. Biol. 2011, 282, 52–64.

7. Bardella, G.; Franchini, S.; Pani, P.; Ferraina, S. Lattice physics approaches for neural networks. iScience 2024, 27, 111390.

8. Buice, M.A.; Cowan, J.D. Field-theoretic approach to fluctuation effects in neural networks. Phys. Rev. E 2007, 75, 051919.

9. Grosu, G.F.; Hopp, A.V.; Moca, V.V.; Bârzan, H.; Ciuparu, A.; Ercsey-Ravasz, M.; Winkel, M.; Linde, H.; Mureșan, R.C. The fractal brain: Scale-invariance in structure and dynamics. Cereb. Cortex 2023, 33, 4574–4605.

10. Smith, J.H.; Rowland, C.; Harland, B.; Moslehi, S.; Montgomery, R.D.; Schobert, K.; Watterson, W.J.; Dalrymple-Alford, J. How neurons exploit fractal geometry to optimize their network connectivity. Sci. Rep. 2021, 11, 2332.

11. Turing, A.M. The Chemical Basis of Morphogenesis. Philos. Trans. R. Soc. B 1952, 237, 37–72.

12. van Rossum, M.C.W.; Bi, G.Q.; Turrigiano, G.G. Stable Hebbian learning from spike timing-dependent plasticity. J. Neurosci. 2000, 20, 8812–8821.

13. Hafting, T.; Fyhn, M.; Molden, S.; Moser, M.-B. Microstructure of a spatial map in the entorhinal cortex. Nature 2005, 436, 801–806.

14. Hok, V.; Chah, E.; Save, E.; Poucet, B. Prefrontal cortex focally modulates hippocampal place cell firing patterns. J. Neurosci. 2013, 33, 3443–3451.

15. Bonhoeffer, T.; Grinvald, A. Iso-orientation domains in cat visual cortex are arranged in pinwheel-like patterns. Nature 1991, 353, 429–431.

16. Skaggs, W.E.; McNaughton, B.L.; Gothard, K.M.; Markus, E.J. An information-theoretic approach to deciphering the hippocampal code. In Advances in Neural Information Processing Systems; Hanson, S.J., Cowan, J.D., Giles, C.L., Eds.; Morgan Kaufmann: San Mateo, CA, USA, 1993; Volume 5, pp. 1030–1037.

17. Tsodyks, M.; Markram, H. The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proc. Natl. Acad. Sci. USA 1997, 94, 719–723.

18. Tonegawa, S.; Liu, X.; Ramirez, S.; Redondo, R. Memory Engram Cells Have Come of Age. Neuron 2015, 87, 918–931.

19. Ermentrout, G.B.; Kleinfeld, D. Traveling electrical waves in cortex: Insights from phase dynamics and speculation on a computational role. Neuron 2001, 29, 33–44.

20. Muller, L.; Reynaud, A.; Chavane, F. The stimulus-evoked population response in visual cortex of awake monkey is a propagating wave. Nat. Commun. 2014, 5, 3675.

21. Lisman, J.E.; Jensen, O. The θ-γ neural code. Neuron 2013, 77, 1002–1016.

22. Fries, P. A mechanism for cognitive dynamics: Neuronal communication through neuronal coherence. Trends Cogn. Sci. 2005, 9, 474–480.

23. Fusi, S.; Drew, P.J.; Abbott, L.F. Cascade models of synaptically stored memories. Neuron 2005, 45, 599–611.

24. Clopath, C.; Büsing, L.; Vasilaki, E.; Gerstner, W. Connectivity reflects coding: A model of voltage-based STDP with homeostasis. Nat. Neurosci. 2010, 13, 344–352.

25. McClelland, J.L.; McNaughton, B.L.; O’Reilly, R.C. Why there are complementary learning systems in the hippocampus and neocortex: Insights from the successes and failures of connectionist models of learning and memory. Psychol. Rev. 1995, 102, 419–457.

26. Izhikevich, E.M. Solving the distal reward problem through linkage of STDP and dopamine signaling. Cereb. Cortex 2007, 17, 2443–2452.

| Model Component | Biological Interpretation | Known Phenomenon Modeled |
|---|---|---|
| Soliton wave (localized ψ) | Burst of localized neural activity (e.g., sharp-wave ripple) | CA1 place fields |
| Cnoidal wave (periodic ψ) | Oscillatory input/spatial periodicity | Grid cell firing in mEC |
| ψ(x, t): Neural activity field | Distributed excitation density across space-time | Population-level neural field/LFP analog |
| \|ψ\|²: Activity intensity | Proxy for metabolic/neural drive driving plasticity | Hebbian modulation by activity |
| Reaction–diffusion in W(x, t) | Synaptic strength modulated by activity + lateral spread | Cortical map self-organization |
| Interference patterns | Competing inputs or memories interacting | Memory interference and consolidation |
| D_W (plasticity diffusion) | Lateral spread of synaptic effects | Dendritic/synaptic propagation of plastic change |

| Model Feature | Simulated Outcome | Biological Correspondence |
|---|---|---|
| Soliton wave input | Localized, persistent synaptic potentiation | Hippocampal place fields (CA1) |
| Cnoidal wave input | Periodic synaptic weight landscape | Grid cell firing structure (entorhinal cortex) |
| Wave interference (cnoidal × 2) | Constructive/destructive plasticity modulation | Memory competition and interference effects |
| Time-dependent wave input | Gradual potentiation; saturation without divergence | Temporal dynamics of learning and consolidation |
| Wave reversal | Asymmetric forgetting; residual traces and overcorrection | Hysteresis and reconsolidation in synaptic memory |
| Noise scaling | Degradation of structure with high noise | Cognitive load, distractor interference, and forgetting |
| Input complexity variation | Increase in spectral entropy with waveform complexity | Information richness and selective memory encoding |
| Phase mismatch/wave speed shift | Reduced encoding fidelity and periodic distortion | Memory degradation under asynchronous input |

| Extended Model Feature | Simulated Outcome | Biological Correspondence |
|---|---|---|
| Theta–gamma nested input | High spectral entropy; multi-scale trace complexity | Cross-frequency coupling in hippocampal memory encoding |
| 2D wave interference (3 angles) | Hexagonal grid of synaptic weights | Grid cell lattice pattern in medial entorhinal cortex |
| Gaussian-modulated wave input | Localized potentiation zones; late buildup | CA1 place field development |
| Multi-wave + noise interaction | Irregular, interference-driven plasticity structure | Memory overlap and competitive encoding under noise |
| Periodic 2D maps | Modular plasticity zones; columnar tiling | V1 orientation maps and pinwheel structures |
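The "2D wave interference (3 angles)" row of the table above can be illustrated with a minimal sketch: three real plane waves whose wave vectors are 60° apart sum to a pattern with six-fold rotational symmetry. The cosine plane-wave form, the function name `three_wave_pattern`, and the wavenumber k = 2 are assumptions for illustration; the simulated inputs in the model may be cnoidal rather than sinusoidal.

```python
import numpy as np

def three_wave_pattern(x, y, k=1.0, angles_deg=(0.0, 60.0, 120.0)):
    """Sum of three cosine plane waves with wave vectors at the given angles."""
    total = 0.0
    for a in np.deg2rad(angles_deg):
        total = total + np.cos(k * (x * np.cos(a) + y * np.sin(a)))
    return total

def rotate(x, y, angle_deg):
    """Rotate coordinates counterclockwise about the origin."""
    a = np.deg2rad(angle_deg)
    return x * np.cos(a) - y * np.sin(a), x * np.sin(a) + y * np.cos(a)

# Evaluate on the 2D domain quoted in the Methods: [-10, 10]^2 with 200 x 200 points.
axis = np.linspace(-10.0, 10.0, 200)
X, Y = np.meshgrid(axis, axis)
pattern = three_wave_pattern(X, Y, k=2.0)

# Symmetry check at random points: rotating by 60 degrees leaves the pattern unchanged.
rng = np.random.default_rng(0)
px, py = rng.uniform(-10.0, 10.0, size=(2, 50))
qx, qy = rotate(px, py, 60.0)
symmetric = np.allclose(three_wave_pattern(px, py, k=2.0),
                        three_wave_pattern(qx, qy, k=2.0))
```

Passing a different `angles_deg` tuple (for example, perturbing one angle by several degrees) breaks the exact symmetry, which is one simple way to probe the orientation dependence discussed in the limitations.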

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Buzea, C.G.; Nedeff, V.; Nedeff, F.; Lehăduș, M.P.; Ochiuz, L.; Rusu, D.I.; Agop, M.; Iancu, D.T. Fractal Neural Dynamics and Memory Encoding Through Scale Relativity. Brain Sci. 2025, 15, 1037. https://doi.org/10.3390/brainsci15101037
