Syllable as a Synchronization Mechanism That Makes Human Speech Possible

Abstract

1. Introduction

Although nearly everyone can identify syllables, almost nobody can define them.—Ladefoged (1982, p. 220) [1]

The human motor apparatus … comprises more than 200 bones, 110 joints and over 600 muscles, each one of which either spans one, two or even three joints. While the degrees of freedom are already vast on the biomechanical level of description, their number becomes dazzling when going into neural space.—Huys (2010, p. 70) [2]

2. Syllable and Coarticulation

- Why are there syllables?

- Do syllables have clear phonetic boundaries?

- Do segments have definitive syllable affiliations?

2.1. Why Are There Syllables?

2.2. Are There Clear Boundaries to the Syllable?

2.3. Do Segments Have Definitive Syllable Affiliations?

2.4. Are Syllables Related to Coarticulation?

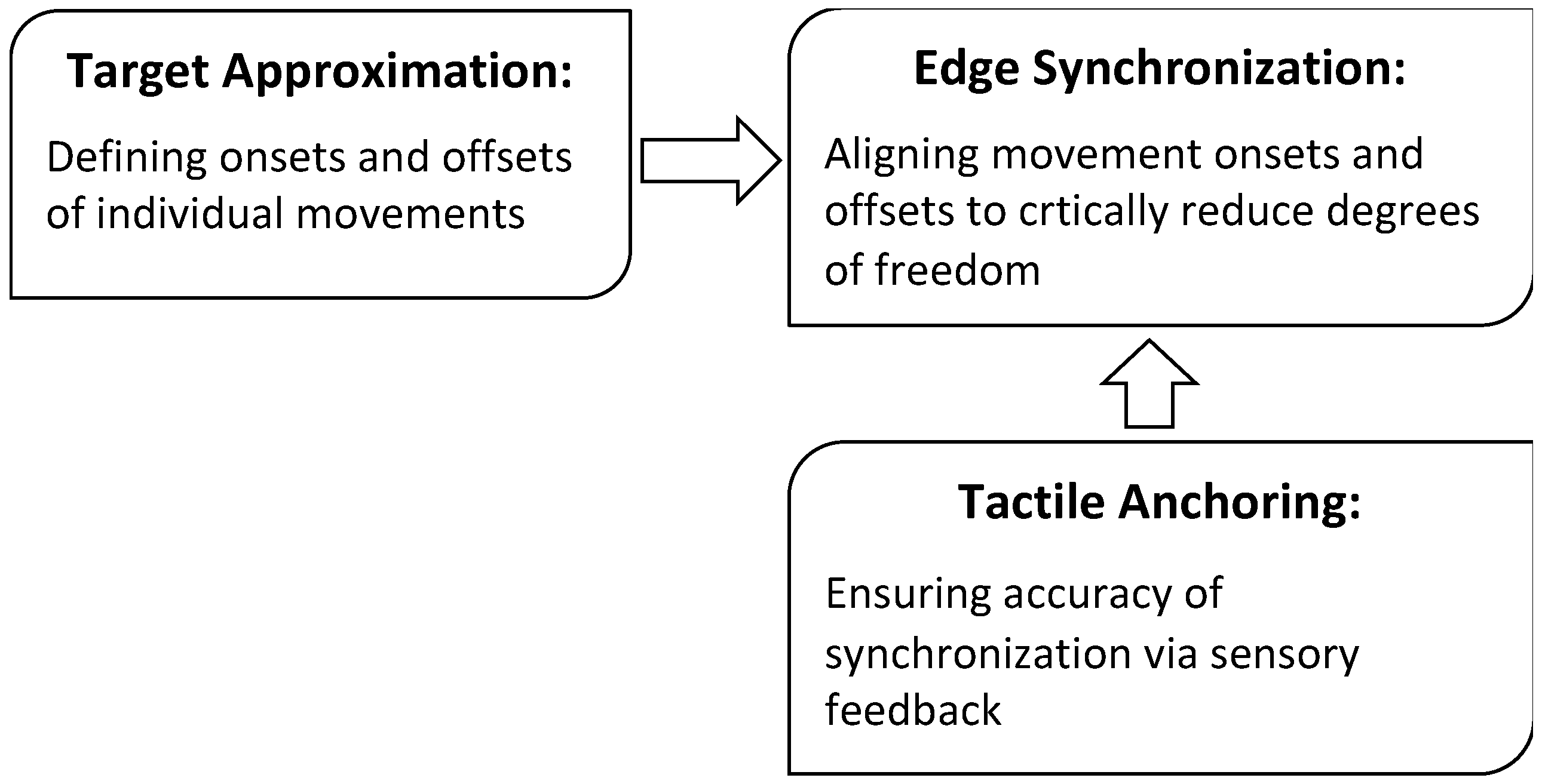

3. Syllable as a Synchronization Mechanism

3.1. Targets and Target Approximation

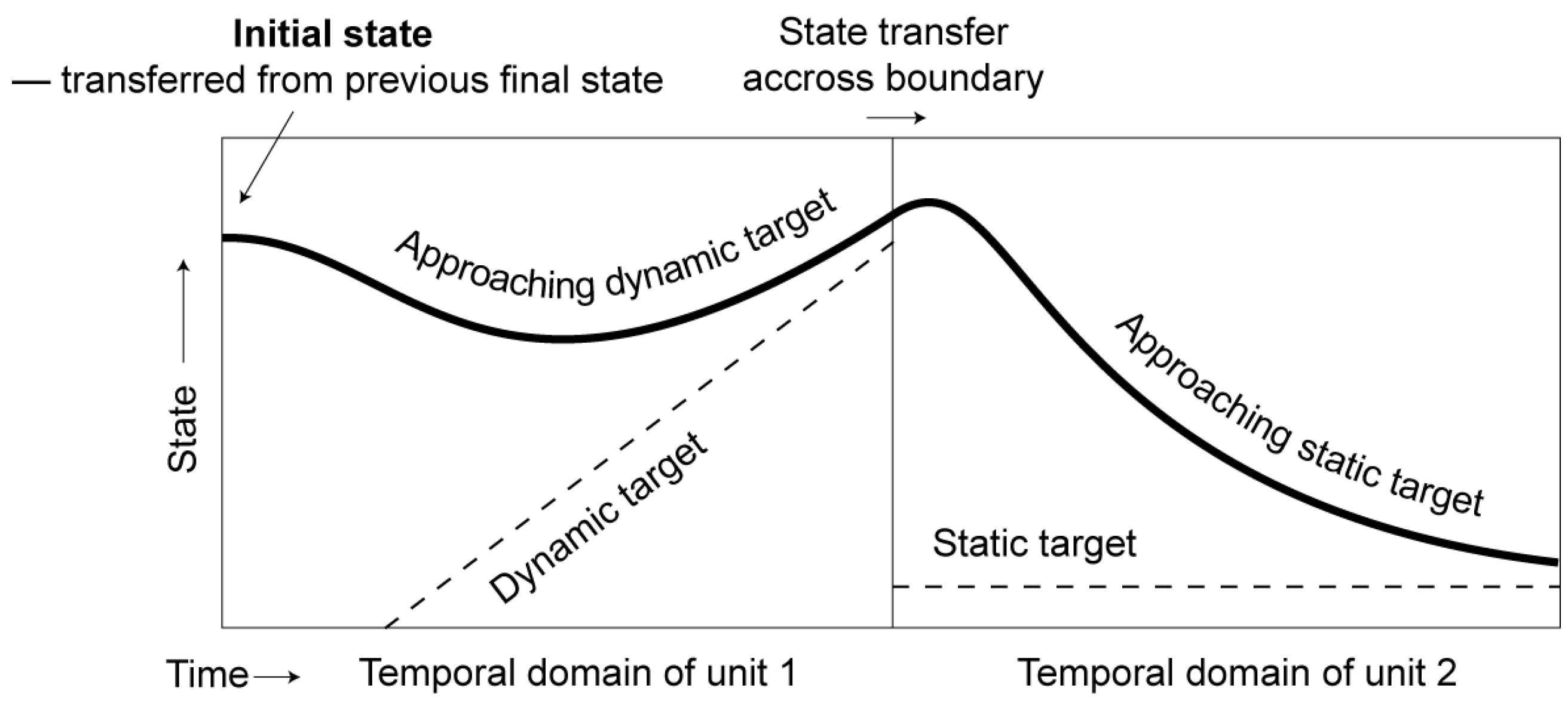

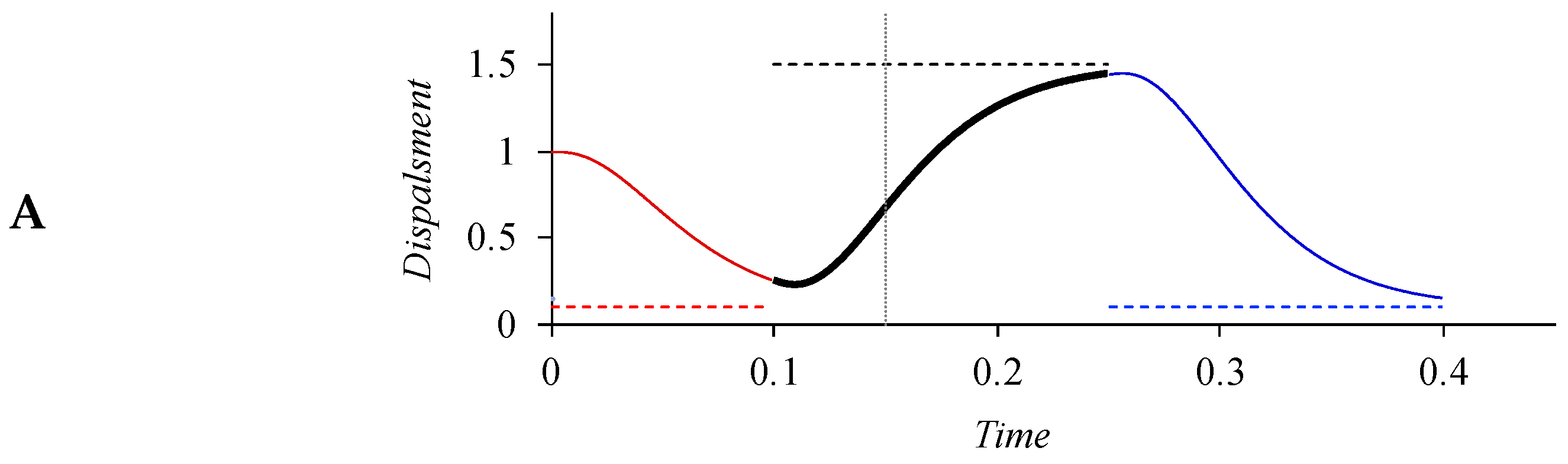

- Targets can be intrinsically dynamic, i.e., they can have underlying slopes of various degrees. To our knowledge, no other model has incorporated dynamic targets. (See Section 3.1.5 for the critical differences between underlying velocity and surface velocity. The former is a property of the target, which can be either static or dynamic, whereas the latter is a consequence of executing the target. Some models, such as the Task Dynamic (TD) and Fujisaki models, specify the stiffness of the target gesture, which indirectly determines surface velocity, but they make no specification of underlying velocity. Consequently, a fully achieved target in those models can only produce an asymptotic approach to a static articulatory state.)
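The difference between underlying and surface velocity can be illustrated with a small simulation. The sketch below is an illustration under stated assumptions, not the authors' implementation. It loosely follows a third-order critically damped formulation of target approximation in the spirit of Prom-on, Xu and Thipakorn (2009), in which a target is a linear function `slope * t + height`. The names `Target`, `approach` and `run_sequence`, and all parameter values, are hypothetical. A `slope` of zero gives a static target, so the trajectory can only level off at `height`. A nonzero `slope` is an underlying velocity, and a fully achieved dynamic target keeps moving at that slope. `rate` plays a role loosely analogous to stiffness. It changes how quickly the surface movement unfolds, but it does not change where a static target comes to rest.

```python
import math
from dataclasses import dataclass


@dataclass
class Target:
    """Hypothetical linear target x_T(t) = slope * t + height (local time t).

    slope is the *underlying* velocity, a property of the target itself;
    slope == 0 gives a static target. rate (lambda) sets how fast the target
    is approached, loosely analogous to stiffness in static-target models.
    """
    slope: float
    height: float
    rate: float


def approach(target, x0, v0, a0, t):
    """Return (position, velocity, acceleration) at local time t of a
    third-order critically damped approach to a linear target, starting from
    the initial state (x0, v0, a0) at t = 0."""
    m, b, lam = target.slope, target.height, target.rate
    # Coefficients chosen so that the state at t = 0 equals (x0, v0, a0).
    c1 = x0 - b
    c2 = v0 - m + lam * c1
    c3 = (a0 + 2 * lam * c2 - lam ** 2 * c1) / 2
    # Transient polynomial and its derivatives; it decays as exp(-lam * t).
    p = c1 + c2 * t + c3 * t ** 2
    dp = c2 + 2 * c3 * t
    ddp = 2 * c3
    e = math.exp(-lam * t)
    x = m * t + b + p * e                          # surface position
    v = m + (dp - lam * p) * e                     # surface velocity
    a = (ddp - 2 * lam * dp + lam ** 2 * p) * e    # surface acceleration
    return x, v, a


def run_sequence(targets, durations, state=(0.0, 0.0, 0.0)):
    """Approach each target in turn for its duration. The final state of one
    interval is passed on as the initial state of the next, so position,
    velocity and acceleration stay continuous across interval boundaries."""
    states = []
    for target, duration in zip(targets, durations):
        state = approach(target, *state, duration)
        states.append(state)
    return states


if __name__ == "__main__":
    static = Target(slope=0.0, height=1.0, rate=40.0)
    dynamic = Target(slope=5.0, height=1.0, rate=40.0)
    for name, target in (("static", static), ("dynamic", dynamic)):
        x, v, _ = approach(target, 0.0, 0.0, 0.0, 0.5)
        print(f"{name:7s} target: position={x:.3f}, velocity={v:.3f}")
    # Velocity left over from a dynamic interval is carried into a static one.
    for x, v, a in run_sequence([dynamic, static], [0.15, 0.15]):
        print(f"end of interval: x={x:.3f}, v={v:.3f}, a={a:.1f}")
```

With these made-up values, the static target settles at its height with essentially zero velocity. The dynamic target, by contrast, is still rising at about `slope` units per second when the interval ends. Raising `rate` makes the surface movement faster without changing either end state. In `run_sequence`, whatever velocity remains at the end of one interval is inherited by the next. This is one way to illustrate the velocity continuity addressed in Section 3.1.5.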

3.1.1. Asymptotic Approximation

3.1.2. Sequentiality

3.1.3. Full vs. Underspecified Targets

3.1.4. Target Approximation vs. Its Preparation

3.1.5. Dynamic Targets and Velocity Propagation/Continuity

3.1.6. Summary of Target Approximation

3.2. Edge Synchronization

3.2.1. C-V Synchronization and Coarticulation

3.2.2. Coarticulation Resistance

3.2.3. Locus and Locus Equations

3.2.4. Synchronization of Laryngeal and Supralaryngeal Articulations

3.2.5. Vowel Harmony, an Unresolved Issue

3.2.6. Summary and Implications of Edge Synchronization

3.3. Tactile Anchoring

3.3.1. Why Is Tactile Anchoring Needed?

3.3.2. Evidence for Tactile Anchoring in Speech

3.3.3. Summary of Tactile Anchoring

3.4. What Is New Compared to Previous Theories

4. Neural Prerequisites for Syllable Articulation

5. Conclusions and Broader Implications

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ladefoged, P. A Course in Phonetics; Harcourt Brace Jovanovich: New York, NY, USA, 1982. [Google Scholar]

- Huys, R. The Dynamical Organization of Limb Movements. In Nonlinear Dynamics in Human Behavior; Springer: Berlin/Heidelberg, Germany, 2010; pp. 69–90. [Google Scholar]

- Bernstein, N.A. The Co-Ordination and Regulation of Movements; Pergamon Press: Oxford, UK, 1967. [Google Scholar]

- Stevens, K.N. Acoustic Phonetics; The MIT Press: Cambridge, MA, USA, 1998. [Google Scholar]

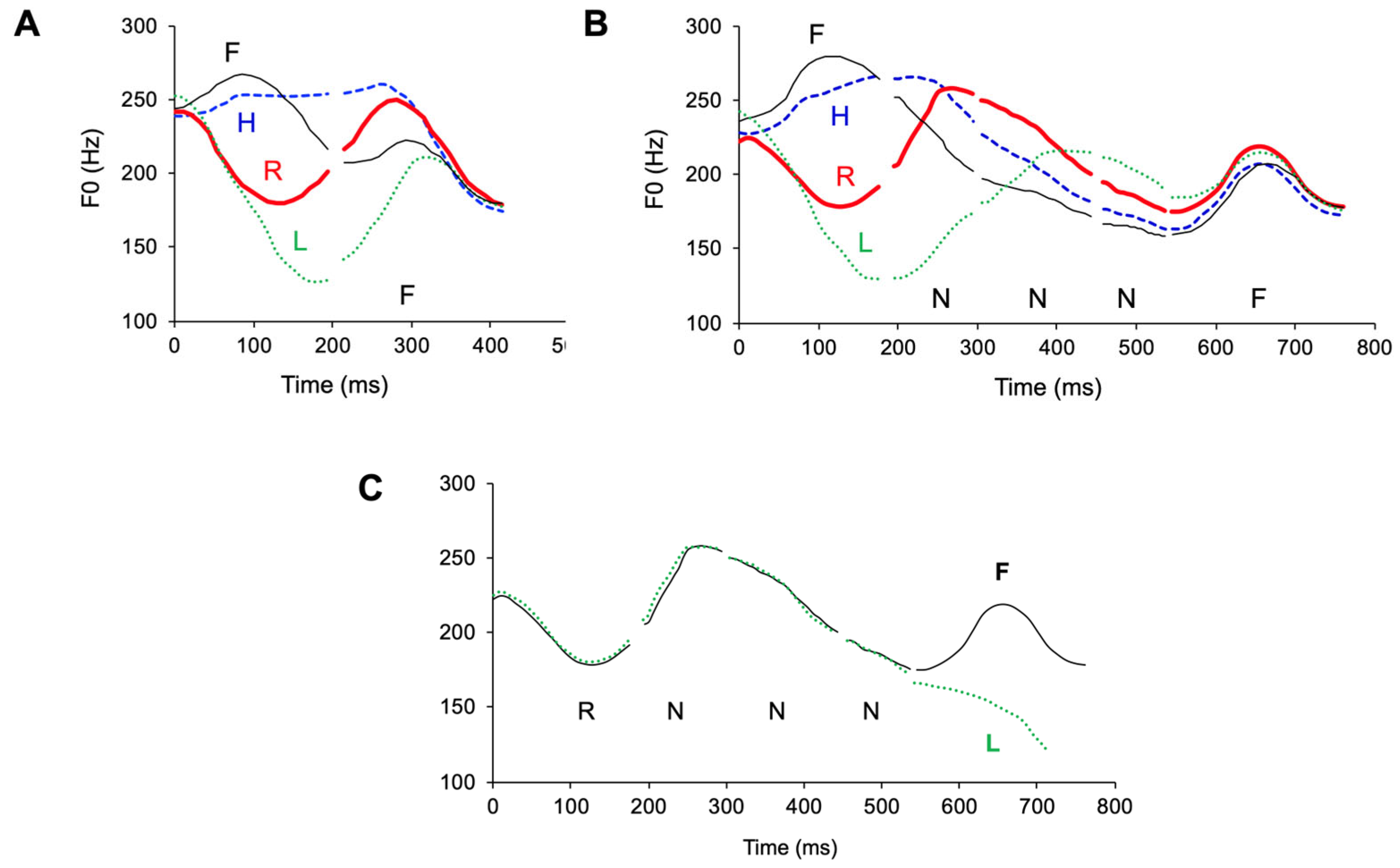

- Xu, Y. Contextual tonal variations in Mandarin. J. Phon. 1997, 25, 61–83. [Google Scholar] [CrossRef]

- Tiffany, W.R. The effects of syllable structure on diadochokinetic and reading rates. J. Speech Hear. Res. 1980, 23, 894–908. [Google Scholar] [CrossRef] [PubMed]

- Latash, M.L.; Scholz, J.P.; Schöner, G. Toward a new theory of motor synergies. Mot. Control. 2007, 11, 276–308. [Google Scholar] [CrossRef] [PubMed]

- Easton, T.A. On the normal use of reflexes: The hypothesis that reflexes form the basic language of the motor program permits simple, flexible specifications of voluntary movements and allows fruitful speculation. Am. Sci. 1972, 60, 591–599. [Google Scholar]

- Turvey, M.T. Preliminaries to a theory of action with reference to vision. In Perceiving, Acting, and Knowing: Toward an Ecological Psychology; Shaw, R., Bransford, J., Eds.; Lawrence Erlbaum: Hillsdale, NJ, USA, 1977; pp. 211–265. [Google Scholar]

- Fowler, C.A.; Rubin, P.; Remez, R.E.; Turvey, M.T. Implications for speech production of a general theory of action. In Language Production; Butterworth, B., Ed.; Academic Press: New York, NY, USA, 1980; pp. 373–420. [Google Scholar]

- Saltzman, E.L.; Munhall, K.G. A dynamical approach to gestural patterning in speech production. Ecol. Psychol. 1989, 1, 333–382. [Google Scholar] [CrossRef]

- Browman, C.P.; Goldstein, L. Articulatory phonology: An overview. Phonetica 1992, 49, 155–180. [Google Scholar] [CrossRef]

- Goldstein, L.; Byrd, D.; Saltzman, E. The role of vocal tract gestural action units in understanding the evolution of phonology. In Action to Language via the Mirror Neuron System; Arbib, M.A., Ed.; Cambridge University Press: Cambridge, UK, 2006; pp. 215–249. [Google Scholar]

- Nam, H.; Goldstein, L.; Saltzman, E. Self-organization of syllable structure: A coupled oscillator model. In Approaches to Phonological Complexity; Pellegrino, F., Marsico, E., Chitoran, I., Coupé, C., Eds.; Mouton de Gruyter: New York, NY, USA, 2009; pp. 299–328. [Google Scholar]

- Saltzman, E.; Nam, H.; Krivokapić, J.; Goldstein, L. A task-dynamic toolkit for modeling the effects of prosodic structure on articulation. Proc. Speech Prosody 2008, 2008, 175–184. [Google Scholar]

- Haken, H.; Kelso, J.A.S.; Bunz, H. A Theoretical Model of Phase Transitions in Human Hand Movements. Biol. Cybern. 1985, 51, 347–356. [Google Scholar] [CrossRef]

- Kay, B.; Kelso, J.; Saltzman, E.; Schöner, G. Space–time behavior of single and bimanual rhythmical movements: Data and limit cycle model. J. Exp. Psychol. Hum. Percept. Perform. 1987, 13, 178. [Google Scholar] [CrossRef]

- Semjen, A.; Ivry, R.B. The coupled oscillator model of between-hand coordination in alternate-hand tapping: A reappraisal. J. Exp. Psychol. Hum. Percept. Perform. 2001, 27, 251. [Google Scholar] [CrossRef]

- Huygens, C. A letter to his father, dated 26 Feb. 1665. In Oeuvres Complètes de Christiaan Huygens; Nijhoff, M., Ed.; Société Hollandaise des Sciences: The Hague, The Netherlands, 1893; Volume 5, p. 243. [Google Scholar]

- Pikovsky, A.; Rosenblum, M.; Kurths, J. Synchronization—A Universal Concept in Nonlinear Sciences; Cambridge University Press: Cambridge, UK, 2001. [Google Scholar]

- Kelso, J.A.S.; Southard, D.L.; Goodman, D. On the nature of human interlimb coordination. Science 1979, 203, 1029–1031. [Google Scholar] [CrossRef] [PubMed]

- Cummins, F.; Li, C.; Wang, B. Coupling among speakers during synchronous speaking in English and Mandarin. J. Phon. 2013, 41, 432–441. [Google Scholar] [CrossRef]

- Cummins, F. Periodic and Aperiodic Synchronization in Skilled Action. Front. Hum. Neurosci. 2011, 5, 170. [Google Scholar] [CrossRef] [PubMed]

- Kelso, J.A.S. Phase transitions and critical behavior in human bimanual coordination. Am. J. Physiol. Regul. Integr. Comp. Physiol. 1984, 246, R1000–R1004. [Google Scholar] [CrossRef]

- Kelso, J.A.S.; Tuller, B.; Harris, K.S. A “dynamic pattern” perspective on the control and coordination of movement. In The Production of Speech; MacNeilage, P.F., Ed.; Springer-Verlag: New York, NY, USA, 1983; pp. 137–173. [Google Scholar]

- Mechsner, F.; Kerzel, D.; Knoblich, G.; Prinz, W. Perceptual basis of bimanual coordination. Nature 2001, 414, 69–73. [Google Scholar] [CrossRef]

- Schmidt, R.C.; Carello, C.; Turvey, M.T. Phase transitions and critical fluctuations in the visual coordination of rhythmic movements between people. J. Exp. Psychol. Hum. Percept. Perform. 1990, 16, 227–247. [Google Scholar] [CrossRef]

- Kelso, J.A.S.; Saltzman, E.L.; Tuller, B. The dynamical perspective on speech production: Data and theory. J. Phon. 1986, 14, 29–59. [Google Scholar] [CrossRef]

- Adler, R. A study of locking phenomena in oscillators. Proc. IRE 1946, 34, 351–357. [Google Scholar] [CrossRef]

- Bennett, M.; Schatz, M.F.; Rockwood, H.; Wiesenfeld, K. Huygens’s clocks. Proc. Math. Phys. Eng. Sci. 2002, 458, 563–579. [Google Scholar] [CrossRef]

- DeFrancis, J.F. Visible Speech: The Diverse Oneness of Writing Systems; University of Hawaii Press: Honolulu, HI, USA, 1989. [Google Scholar]

- Gnanadesikan, A.E. The Writing Revolution: Cuneiform to the Internet; John Wiley & Sons: Hoboken, NJ, USA, 2011. [Google Scholar]

- Liberman, I.Y.; Shankweiler, D.; Fischer, F.W.; Carter, B. Explicit syllable and phoneme segmentation in the young child. J. Exp. Child Psychol. 1974, 18, 201–212. [Google Scholar] [CrossRef]

- Fox, B.; Routh, D. Analyzing spoken language into words, syllables, and phonemes: A developmental study. J. Psycholinguist. Res. 1975, 4, 331–342. [Google Scholar] [CrossRef]

- Shattuck-Hufnagel, S. The role of the syllable in speech production in American English: A fresh consideration of the evidence. In Handbook of the Syllable; Cairns, C.E., Raimy, E., Eds.; Brill: Boston, MA, USA, 2011; pp. 197–224. [Google Scholar]

- Bolinger, D. Contrastive accent and contrastive stress. Language 1961, 37, 83–96. [Google Scholar] [CrossRef]

- de Jong, K. Stress, lexical focus, and segmental focus in English: Patterns of variation in vowel duration. J. Phon. 2004, 32, 493–516. [Google Scholar] [CrossRef]

- Pierrehumbert, J. The Phonology and Phonetics of English Intonation. Ph.D. Dissertation, Massachusetts Institute of Technology, Cambridge, MA, USA, 1980. [Google Scholar]

- Barbosa, P.; Bailly, G. Characterisation of rhythmic patterns for text-to-speech synthesis. Speech Commun. 1994, 15, 127–137. [Google Scholar] [CrossRef]

- Cummins, F.; Port, R. Rhythmic constraints on stress timing in English. J. Phon. 1998, 26, 145–171. [Google Scholar] [CrossRef]

- Nolan, F.; Asu, E.L. The Pairwise Variability Index and Coexisting Rhythms in Language. Phonetica 2009, 66, 64–77. [Google Scholar] [CrossRef]

- Abramson, A.S. Static and dynamic acoustic cues in distinctive tones. Lang. Speech 1978, 21, 319–325. [Google Scholar] [CrossRef]

- Chao, Y.R. A Grammar of Spoken Chinese; University of California Press: Berkeley, CA, USA, 1968. [Google Scholar]

- Selkirk, E.O. On prosodic structure and its relation to syntactic structure. In Nordic Prosody II, Trondheim, Norway; Fretheim, T., Ed.; Indiana University Linguistics Club: Bloomington, IN, USA, 1978; pp. 111–140, TAPIR. [Google Scholar]

- Nespor, M.; Vogel, I. Prosodic Phonology; Foris Publications: Dordrecht, The Netherlands, 1986. [Google Scholar]

- Blevins, J. The syllable in phonological theory. In Handbook of Phonological Theory; Goldsmith, J., Ed.; Blackwell: Cambridge, MA, USA, 2001; pp. 206–244. [Google Scholar]

- Hooper, J.B. The syllable in phonological theory. Language 1972, 48, 525–540. [Google Scholar] [CrossRef]

- Bertoncini, J.; Mehler, J. Syllables as units in infant speech perception. Infant Behav. Dev. 1981, 4, 247–260. [Google Scholar] [CrossRef]

- Content, A.; Kearns, R.K.; Frauenfelder, U.H. Boundaries versus onsets in syllabic segmentation. J. Mem. Lang. 2001, 45, 177–199. [Google Scholar] [CrossRef]

- Cutler, A.; Mehler, J.; Norris, D.; Segui, J. The syllable’s differing role in the segmentation of French and English. J. Mem. Lang. 1986, 25, 385–400. [Google Scholar] [CrossRef]

- Kohler, K.J. Is the syllable a phonological universal? J. Linguist. 1966, 2, 207–208. [Google Scholar] [CrossRef]

- Labrune, L. Questioning the universality of the syllable: Evidence from Japanese. Phonology 2012, 29, 113–152. [Google Scholar] [CrossRef]

- Gimson, A.C. An Introduction to the Pronunciation of English; Arnold: London, UK, 1970. [Google Scholar]

- Steriade, D. Alternatives to syllable-based accounts of consonantal phonotactics. In Proceedings of the Linguistics and Phonetics 1998: Item Order in Language and Speech; Fujimura, O., Joseph, B.D., Palek, B., Eds.; Karolinum Press: Prague, Czech Republic, 1999; pp. 205–245. [Google Scholar]

- Blevins, J. Evolutionary Phonology: The Emergence of Sound Patterns; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Stetson, R.H. Motor Phonetics: A Study of Speech Movements in Action; North Holland: Amsterdam, The Netherlands, 1951. [Google Scholar]

- Fujimura, O. C/D Model: A computational model of phonetic implementation. In Language and Computations; Ristad, E.S., Ed.; American Mathematical Society: Providence, RI, USA, 1994; pp. 1–20. [Google Scholar]

- MacNeilage, P.F. The frame/content theory of evolution of speech production. Behav. Brain Sci. 1998, 21, 499–546. [Google Scholar] [CrossRef]

- Fitch, W.T. The Evolution of Language; Cambridge University Press: Cambridge, UK, 2010. [Google Scholar]

- Pinker, S. The Language Instinct: The New Science of Language and Mind; Penguin: London, UK, 1995. [Google Scholar]

- Dell, G.S. The retrieval of phonological forms in production: Tests of predictions from a connectionist model. J. Mem. Lang. 1988, 27, 124–142. [Google Scholar] [CrossRef]

- Levelt, W.J.M.; Roelofs, A.; Meyer, A.S. A theory of lexical access in speech production. Behav. Brain Sci. 1999, 22, 1–38. [Google Scholar] [CrossRef]

- Jakobson, R.; Fant, C.G.; Halle, M. Preliminaries to Speech Analysis. The Distinctive Features and Their Correlates; MIT Press: Cambridge, MA, USA, 1951. [Google Scholar]

- Turk, A.; Nakai, S.; Sugahara, M. Acoustic Segment Durations in Prosodic Research: A Practical Guide. In Methods in Empirical Prosody Research; Sudhoff, S., Lenertová, D., Meyer, R., et al., Eds.; De Gruyter: Berlin/Heidelberg, Germany; New York, NY, USA, 2006; pp. 1–28. [Google Scholar]

- Peterson, G.E.; Lehiste, I. Duration of syllable nuclei in English. J. Acoust. Soc. Am. 1960, 32, 693–703. [Google Scholar] [CrossRef]

- Hockett, C.F. A Manual of Phonology (International Journal of American Linguistics, Memoir 11); Waverly Press: Baltimore, MD, USA, 1955. [Google Scholar]

- Farnetani, E.; Recasens, D. Coarticulation and connected speech processes. In The Handbook of Phonetic Sciences; Hardcastle, W.J., Laver, J., Eds.; Blackwell: Oxford, UK, 1997; pp. 371–404. [Google Scholar]

- Kühnert, B.; Nolan, F. The origin of coarticulation. In Coarticulation: Theory, Data and Techniques; Hardcastle, W.J., Hewlett, N., Eds.; Cambridge University Press: Cambridge, UK, 1999; pp. 7–30. [Google Scholar]

- Vennemann, T. Preference Laws for Syllable Structure and the Explanation of Sound Change; Mouton de Gruyter: Berlin, Germany, 1988. [Google Scholar]

- Pulgram, E. Syllable, Word, Nexus, Cursus; Mouton: The Hague, The Netherlands, 1970. [Google Scholar]

- Steriade, D. Greek Prosodies and the Nature of Syllabification. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1982. [Google Scholar]

- Hoard, J.E. Aspiration, tenseness, and syllabication in English. Language 1971, 47, 133–140. [Google Scholar] [CrossRef]

- Wells, J.C. Syllabification and allophony. In Studies in the Pronunciation of English: A Commemorative Volume in Honour of A. C. Gimson; Ramsaran, S., Ed.; Routledge: London, UK, 1990; pp. 76–86. [Google Scholar]

- Fudge, E. Syllables. J. Linguist. 1969, 5, 253–286. [Google Scholar] [CrossRef]

- Selkirk, E.O. The syllable. In The Structure of Phonological Representations, Part II; van der Hulst, H., Smith, N., Eds.; Foris: Dordrecht, The Netherlands, 1982; pp. 337–383. [Google Scholar]

- Duanmu, S. Syllable Structure: The Limits of Variation; Oxford University Press: Oxford, UK, 2009. [Google Scholar]

- Ní Chiosáin, M.; Welby, P.; Espesser, R. Is the syllabification of Irish a typological exception? An experimental study. Speech Commun. 2012, 54, 68–91. [Google Scholar] [CrossRef]

- Goslin, J.; Frauenfelder, U.H. A Comparison of Theoretical and Human Syllabification. Lang. Speech 2001, 44, 409–436. [Google Scholar] [CrossRef] [PubMed]

- Schiller, N.O.; Meyer, A.S.; Levelt, W.J. The syllabic structure of spoken words: Evidence from the syllabification of intervocalic consonants. Lang. Speech 1997, 40, 103–140. [Google Scholar] [CrossRef] [PubMed]

- Menzerath, P.; de Lacerda, A. Koartikulation, Steuerung und Lautabgrenzung; Dümmlers: Berlin/Bonn, Germany, 1933. [Google Scholar]

- Kozhevnikov, V.A.; Chistovich, L.A. Speech: Articulation and Perception; Translation by Joint Publications Research Service: Washington, DC, USA, 1965; JPRS 30543. [Google Scholar]

- Gay, T. Articulatory units: Segments or syllables. In Syllables and Segments; Bell, A., Hooper, J., Eds.; North-Holland: Amsterdam, The Netherlands, 1978; pp. 121–132. [Google Scholar]

- Kent, R.; Minifie, F. Coarticulation in recent speech production models. J. Phon. 1977, 5, 115–133. [Google Scholar] [CrossRef]

- Kent, R.D.; Moll, K.L. Tongue Body Articulation during Vowel and Diphthong Gestures. Folia Phoniatr. Logop. 1972, 24, 278–300. [Google Scholar] [CrossRef] [PubMed]

- Moll, K.; Daniloff, R. Investigation of the timing of velar movement during speech. J. Acoust. Soc. Am. 1971, 50, 678–684. [Google Scholar] [CrossRef] [PubMed]

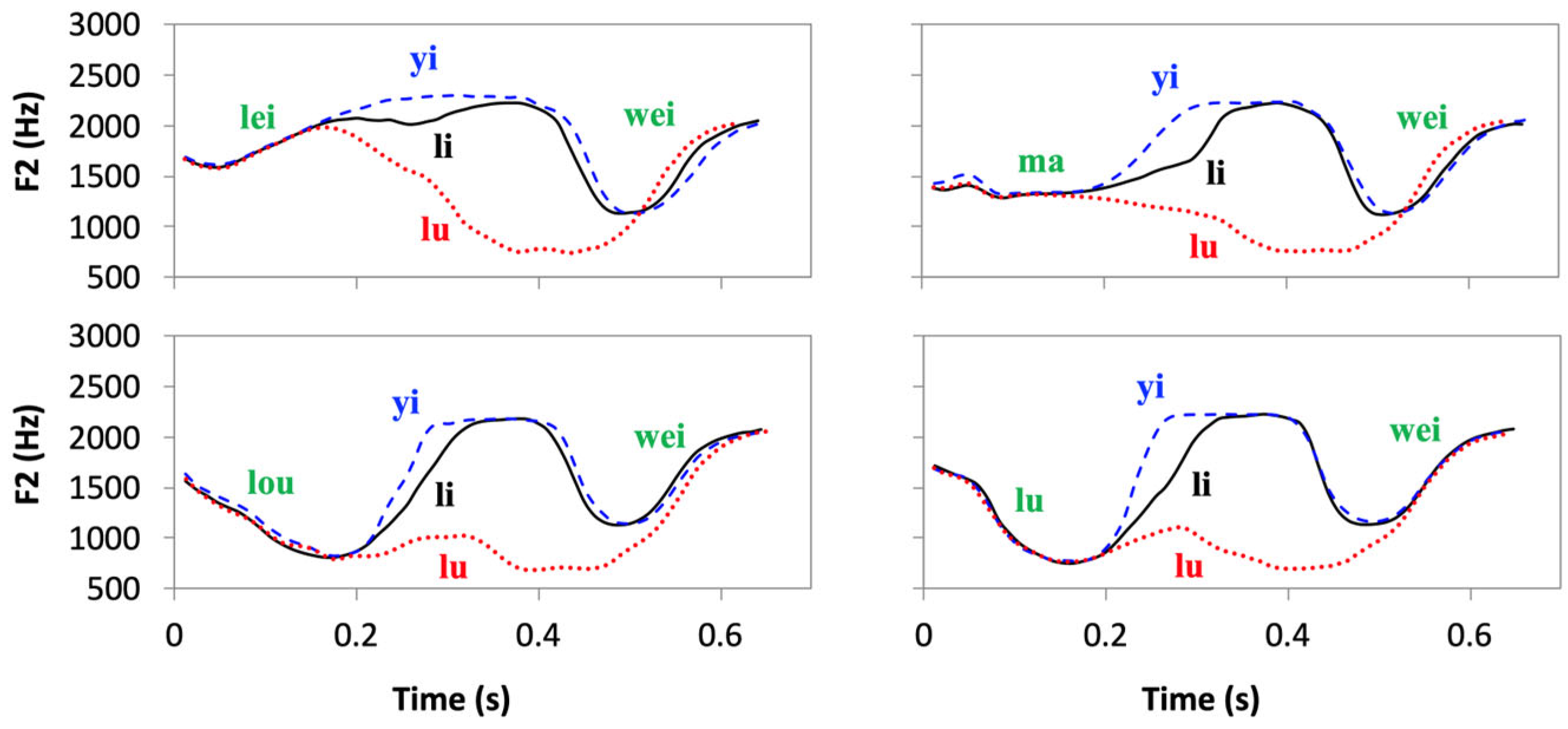

- Öhman, S.E.G. Coarticulation in VCV utterances: Spectrographic measurements. J. Acoust. Soc. Am. 1966, 39, 151–168. [Google Scholar] [CrossRef] [PubMed]

- Clements, G.N. Vowel Harmony in Nonlinear Generative Phonology; Indiana University Linguistics Club: Bloomington, IN, USA, 1976. [Google Scholar]

- Huffman, M.K. Measures of phonation type in Hmong. J. Acoust. Soc. Am. 1987, 81, 495–504. [Google Scholar] [CrossRef]

- Wayland, R.; Jongman, A. Acoustic correlates of breathy and clear vowels: The case of Khmer. J. Phon. 2003, 31, 181–201. [Google Scholar] [CrossRef]

- Lindblom, B. Spectrographic study of vowel reduction. J. Acoust. Soc. Am. 1963, 35, 1773–1781. [Google Scholar] [CrossRef]

- Fujisaki, H. Dynamic characteristics of voice fundamental frequency in speech and singing. In The Production of Speech; MacNeilage, P.F., Ed.; Springer-Verlag: New York, NY, USA, 1983; pp. 39–55. [Google Scholar]

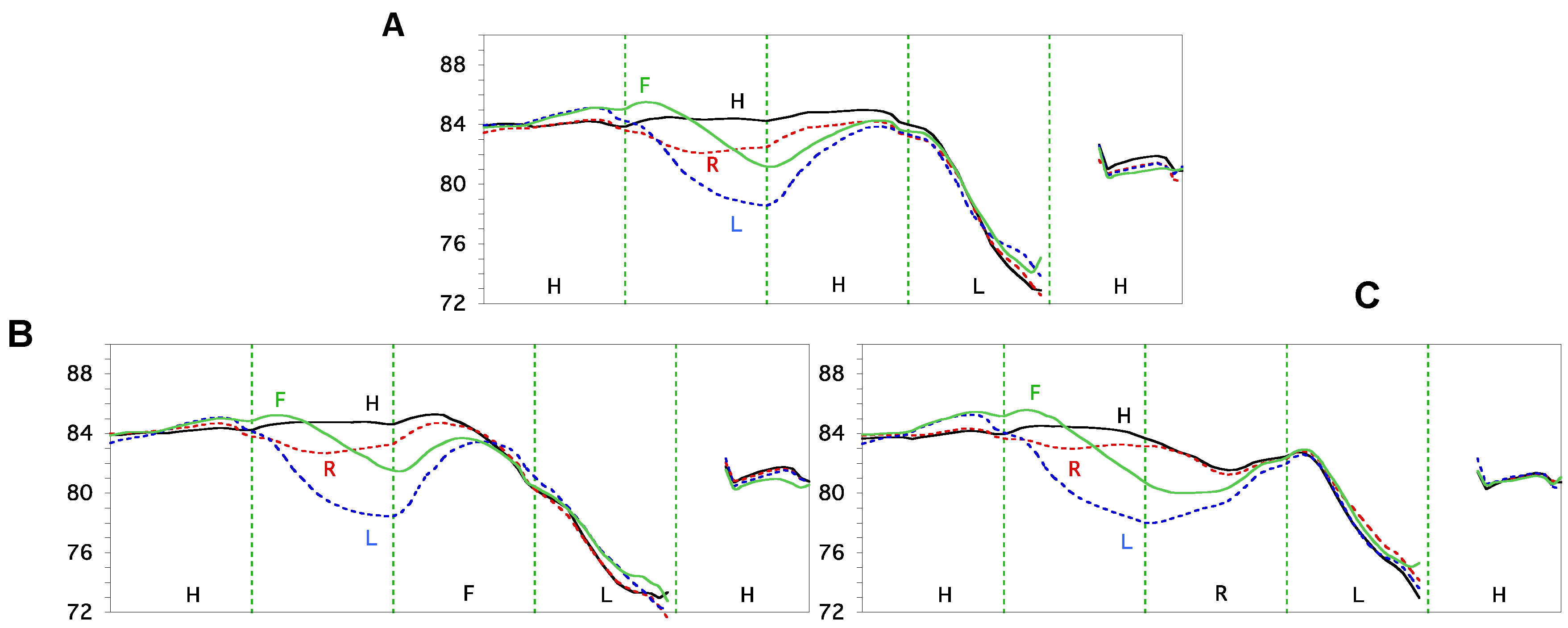

- Xu, Y.; Wang, Q.E. Pitch targets and their realization: Evidence from Mandarin Chinese. Speech Commun. 2001, 33, 319–337. [Google Scholar] [CrossRef]

- Xu, Y. Consistency of tone-syllable alignment across different syllable structures and speaking rates. Phonetica 1998, 55, 179–203. [Google Scholar] [CrossRef] [PubMed]

- Xu, Y. Effects of tone and focus on the formation and alignment of F0 contours. J. Phon. 1999, 27, 55–105. [Google Scholar] [CrossRef]

- Xu, Y. Fundamental frequency peak delay in Mandarin. Phonetica 2001, 58, 26–52. [Google Scholar] [CrossRef]

- Bailly, G.; Holm, B. SFC: A trainable prosodic model. Speech Commun. 2005, 46, 348–364. [Google Scholar] [CrossRef]

- van Santen, J.; Kain, A.; Klabbers, E.; Mishra, T. Synthesis of prosody using multi-level unit sequences. Speech Commun. 2005, 46, 365–375. [Google Scholar] [CrossRef]

- Browman, C.P.; Goldstein, L. Articulatory gestures as phonological units. Phonology 1989, 6, 201–251. [Google Scholar] [CrossRef]

- Byrd, D.; Saltzman, E. The elastic phrase: Modeling the dynamics of boundary-adjacent lengthening. J. Phon. 2003, 31, 149–180. [Google Scholar] [CrossRef]

- Fowler, C.A. Coarticulation and theories of extrinsic timing. J. Phon. 1980, 8, 113–133. [Google Scholar] [CrossRef]

- Xu, Y. Timing and coordination in tone and intonation—An articulatory-functional perspective. Lingua 2009, 119, 906–927. [Google Scholar] [CrossRef]

- Prom-on, S.; Xu, Y.; Thipakorn, B. Modeling tone and intonation in Mandarin and English as a process of target approximation. J. Acoust. Soc. Am. 2009, 125, 405–424. [Google Scholar] [CrossRef]

- Alzaidi, M.S.A.; Xu, Y.; Xu, A.; Szreder, M. Analysis and computational modelling of Emirati Arabic intonation—A preliminary study. J. Phon. 2023, 98, 101236. [Google Scholar] [CrossRef]

- Lee, A.; Simard, C.; Tamata, A.; Xu, Y.; Prom-on, S.; Sun, J. Modelling Fijian focus prosody using PENTAtrainer: A pilot study. In Proceedings of the 2nd International Conference on Tone and Intonation (TAI 2023), Singapore, 18–21 November 2023; pp. 9–10. [Google Scholar]

- Lee, A.; Xu, Y. Modelling Japanese intonation using PENTAtrainer2. In Proceedings of the 18th International Congress of Phonetic Sciences, Glasgow, UK, 10–14 August 2015; International Phonetic Association: Glasgow, UK, 2015. [Google Scholar]

- Ouyang, I.C. Non-segmental cues for syllable perception: The role of local tonal f0 and global speech rate in syllabification. IJCLCLP 2013, 18, 59–79. [Google Scholar]

- Simard, C.; Wegener, C.; Lee, A.; Chiu, F.; Youngberg, C. Savosavo word stress: A quantitative analysis. In Proceedings of the Speech Prosody, Dublin, Ireland, 20–23 May 2014; pp. 512–514. [Google Scholar]

- Ta, T.Y.; Ngo, H.H.; Van Nguyen, H. A New Computational Method for Determining Parameters Representing Fundamental Frequency Contours of Speech Words. J. Inf. Hiding Multim. Signal Process. 2020, 11, 1. [Google Scholar]

- Taheri-Ardali, M.; Xu, Y. An articulatory-functional approach to modeling Persian focus prosody. In Proceedings of the 18th International Congress of Phonetic Sciences, Glasgow, UK, 10–14 August 2015; pp. 326–329. [Google Scholar]

- Thai, T.Y.; Huy, H.N.; Tuyet, D.V.; Ablameyko, S.V.; Hung, N.V.; Hoa, D.V. Tonal languages speech synthesis using an indirect pitch markers and the quantitative target approximation methods. J. Belarusian State Univ. Math. Inform. 2019, 3, 105–121. [Google Scholar] [CrossRef]

- Xu, Y.; Prom-on, S. Toward invariant functional representations of variable surface fundamental frequency contours: Synthesizing speech melody via model-based stochastic learning. Speech Commun. 2014, 57, 181–208. [Google Scholar] [CrossRef]

- Liu, F.; Xu, Y.; Prom-on, S.; Yu, A.C.L. Morpheme-like prosodic functions: Evidence from acoustic analysis and computational modeling. J. Speech Sci. 2013, 3, 85–140. [Google Scholar] [CrossRef]

- Cheng, C.; Xu, Y. Articulatory limit and extreme segmental reduction in Taiwan Mandarin. J. Acoust. Soc. Am. 2013, 134, 4481–4495. [Google Scholar] [CrossRef]

- Xu, Y.; Liu, F. Tonal alignment, syllable structure and coarticulation: Toward an integrated model. Ital. J. Linguist. 2006, 18, 125–159. [Google Scholar]

- Xu, Y.; Prom-on, S. Economy of Effort or Maximum Rate of Information? Exploring Basic Principles of Articulatory Dynamics. Front. Psychol. 2019, 10, 2469. [Google Scholar] [CrossRef]

- Birkholz, P.; Kroger, B.J.; Neuschaefer-Rube, C. Model-Based Reproduction of Articulatory Trajectories for Consonant-Vowel Sequences. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 1422–1433. [Google Scholar] [CrossRef]

- Krug, P.K.; Birkholz, P.; Gerazov, B.; Niekerk, D.R.V.; Xu, A.; Xu, Y. Artificial vocal learning guided by phoneme recognition and visual information. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 1734–1744. [Google Scholar] [CrossRef]

- Prom-on, S.; Birkholz, P.; Xu, Y. Training an articulatory synthesizer with continuous acoustic data. Proc. Interspeech 2013, 2013, 349–353. [Google Scholar]

- Prom-on, S.; Birkholz, P.; Xu, Y. Identifying underlying articulatory targets of Thai vowels from acoustic data based on an analysis-by-synthesis approach. EURASIP J. Audio Speech Music. Process. 2014, 2014, 23. [Google Scholar] [CrossRef]

- Van Niekerk, D.R.; Xu, A.; Gerazov, B.; Krug, P.K.; Birkholz, P.; Halliday, L.; Prom-on, S.; Xu, Y. Simulating vocal learning of spoken language: Beyond imitation. Speech Commun. 2023, 147, 51–62. [Google Scholar] [CrossRef]

- Xu, A.; van Niekerk, D.R.; Gerazov, B.; Krug, P.K.; Birkholz, P.; Prom-on, S.; Halliday, L.F.; Xu, Y. Artificial vocal learning guided by speech recognition: What it may tell us about how children learn to speak. J. Phon. 2024, 105, 101338. [Google Scholar] [CrossRef]

- Moon, S.-J.; Lindblom, B. Interaction between duration, context, and speaking style in English stressed vowels. J. Acoust. Soc. Am. 1994, 96, 40–55. [Google Scholar] [CrossRef]

- Browman, C.P.; Goldstein, L. Targetless schwa: An articulatory analysis. In Papers in Laboratory Phonology II: Gesture, Segment, Prosody; Docherty, G.J., Ladd, D.R., Eds.; Cambridge University Press: Cambridge, UK, 1992; pp. 26–36. [Google Scholar]

- Wood, S.A.J. Assimilation or coarticulation? Evidence from the temporal co-ordination of tongue gestures for the palatalization of Bulgarian alveolar stops. J. Phon. 1996, 24, 139–164. [Google Scholar] [CrossRef]

- Bell-Berti, F.; Krakow, R.A. Anticipatory velar lowering: A coproduction account. J. Acoust. Soc. Am. 1991, 90, 112–123. [Google Scholar] [CrossRef]

- Boyce, S.E.; Krakow, R.A.; Bell-Berti, F. Phonological underspecification and speech motor organization. Phonology 1991, 8, 210–236. [Google Scholar] [CrossRef]

- Chen, Y.; Xu, Y. Production of weak elements in speech: Evidence from f0 patterns of neutral tone in standard Chinese. Phonetica 2006, 63, 47–75. [Google Scholar] [CrossRef] [PubMed]

- Ostry, D.J.; Gribble, P.L.; Gracco, V.L. Coarticulation of jaw movements in speech production: Is context sensitivity in speech kinematics centrally planned? J. Neurosci. 1996, 16, 1570–1579. [Google Scholar] [CrossRef] [PubMed]

- Laboissiere, R.; Ostry, D.J.; Feldman, A.G. The control of multi-muscle systems: Human jaw and hyoid movements. Biol. Cybern. 1996, 74, 373–384. [Google Scholar] [CrossRef] [PubMed]

- Byrd, D.; Kaun, A.; Narayanan, S.; Saltzman, E. Phrasal signatures in articulation. In Papers in Laboratory Phonology V: Acquisition and the Lexicon; Broe, M.B., Pierrehumbert, J.B., Eds.; Cambridge University Press: Cambridge, UK, 2000; pp. 70–87. [Google Scholar]

- Edwards, J.R.; Beckman, M.E.; Fletcher, J. The articulatory kinematics of final lengthening. J. Acoust. Soc. Am. 1991, 89, 369–382. [Google Scholar] [CrossRef]

- Arvaniti, A.; Ladd, D.R. Underspecification in intonation revisited: A reply to Xu, Lee, Prom-on and Liu. Phonology 2015, 32, 537–541. [Google Scholar] [CrossRef]

- Keating, P.A. Underspecification in phonetics. Phonology 1988, 5, 275–292. [Google Scholar] [CrossRef]

- Myers, S. Surface underspecification of tone in Chichewa. Phonology 1998, 15, 367–392. [Google Scholar] [CrossRef]

- Steriade, D. Underspecification and markedness. In Handbook of Phonological Theory; Goldsmith, J.A., Ed.; Basil Blackwell: Oxford, UK, 1995; pp. 114–174. [Google Scholar]

- Fujisaki, H.; Wang, C.; Ohno, S.; Gu, W. Analysis and synthesis of fundamental frequency contours of Standard Chinese using the command–response model. Speech Commun. 2005, 47, 59–70. [Google Scholar] [CrossRef]

- Whalen, D.H. Coarticulation is largely planned. J. Phon. 1990, 18, 3–35. [Google Scholar] [CrossRef]

- Saitou, T.; Unoki, M.; Akagi, M. Development of an F0 control model based on F0 dynamic characteristics for singing-voice synthesis. Speech Commun. 2005, 46, 405–417. [Google Scholar] [CrossRef]

- Gandour, J.; Potisuk, S.; Dechongkit, S. Tonal coarticulation in Thai. J. Phon. 1994, 22, 477–492. [Google Scholar] [CrossRef]

- Gu, W.; Lee, T. Effects of tonal context and focus on Cantonese F0. In Proceedings of the 16th International Congress of Phonetic Sciences, Saarbrücken, Germany, 6–10 August 2007; pp. 1033–1036. [Google Scholar]

- Laniran, Y.O.; Clements, G.N. Downstep and high raising: Interacting factors in Yoruba tone production. J. Phon. 2003, 31, 203–250. [Google Scholar] [CrossRef]

- Lee, A.; Xu, Y.; Prom-on, S. Pre-low raising in Japanese pitch accent. Phonetica 2017, 74, 231–246. [Google Scholar] [PubMed]

- hubpages.com. How to Hit a Great Smash in Badminton. 2014. Available online: http://hubpages.com/games-hobbies/Badminton-Smash-How-to-Play-the-Shot# (accessed on 8 September 2016).

- Wong, Y.W. Contextual Tonal Variations and Pitch Targets in Cantonese. In Proceedings of the Speech Prosody 2006, Dresden, Germany, 2–5 May 2006. PS3-13-199. [Google Scholar]

- Gay, T. Effect of speaking rate on diphthong formant movements. J. Acoust. Soc. Am. 1968, 44, 1570–1573. [Google Scholar] [CrossRef]

- Fowler, C.A. Production and perception of coarticulation among stressed and unstressed vowels. J. Speech Hear. Res. 1981, 24, 127–139. [Google Scholar]

- Brunner, J.; Geng, C.; Sotiropoulou, S.; Gafos, A. Timing of German onset and word boundary clusters. Lab. Phonol. 2014, 5, 403–454. [Google Scholar]

- Gao, M. Gestural Coordination among vowel, consonant and tone gestures in Mandarin Chinese. Chin. J. Phon. 2009, 2, 43–50. [Google Scholar]

- Shaw, J.A.; Chen, W.-R. Spatially-conditioned speech timing: Evidence and implications. Front. Psychol. 2019, 10, 2726. [Google Scholar] [CrossRef]

- Šimko, J.; O’Dell, M.; Vainio, M. Emergent consonantal quantity contrast and context-dependence of gestural phasing. J. Phon. 2014, 44, 130–151. [Google Scholar]

- Yi, H.; Tilsen, S. Interaction between lexical tone and intonation: An EMA study. Proc. Interspeech 2016, 2016, 2448–2452. [Google Scholar]

- Tilsen, S. Detecting anticipatory information in speech with signal chopping. J. Phon. 2020, 82, 100996. [Google Scholar] [CrossRef]

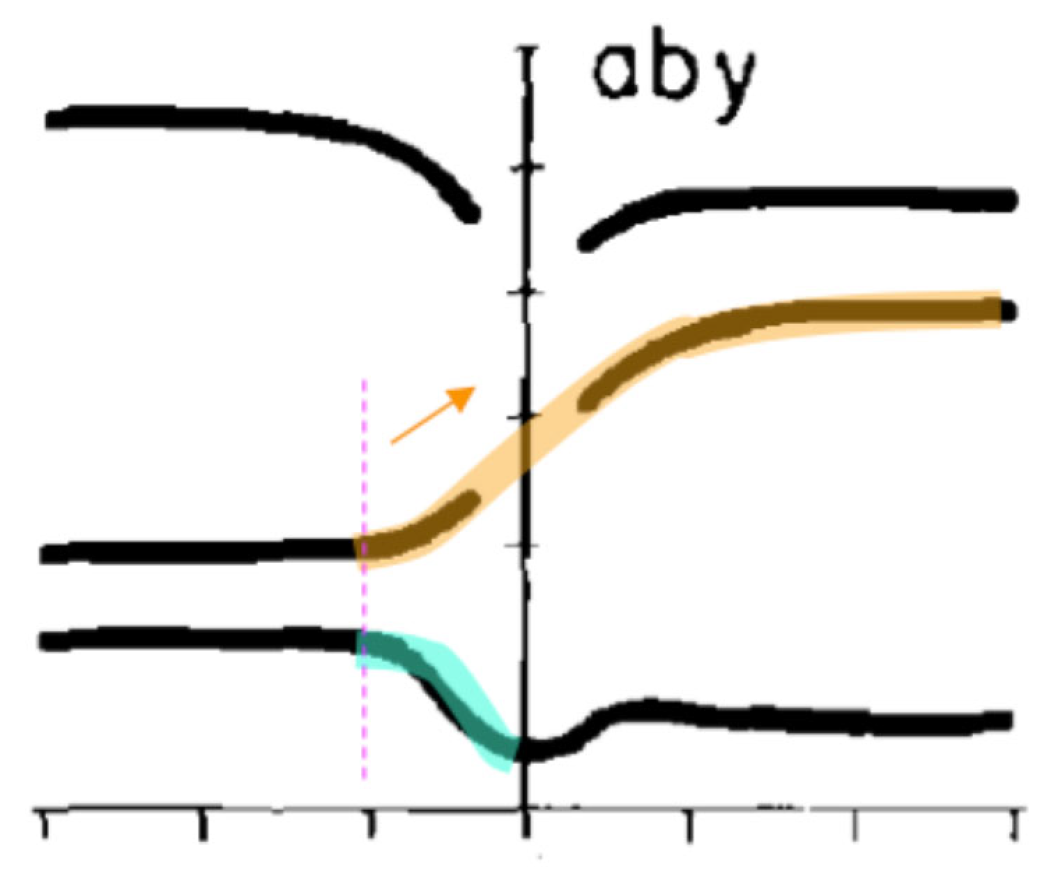

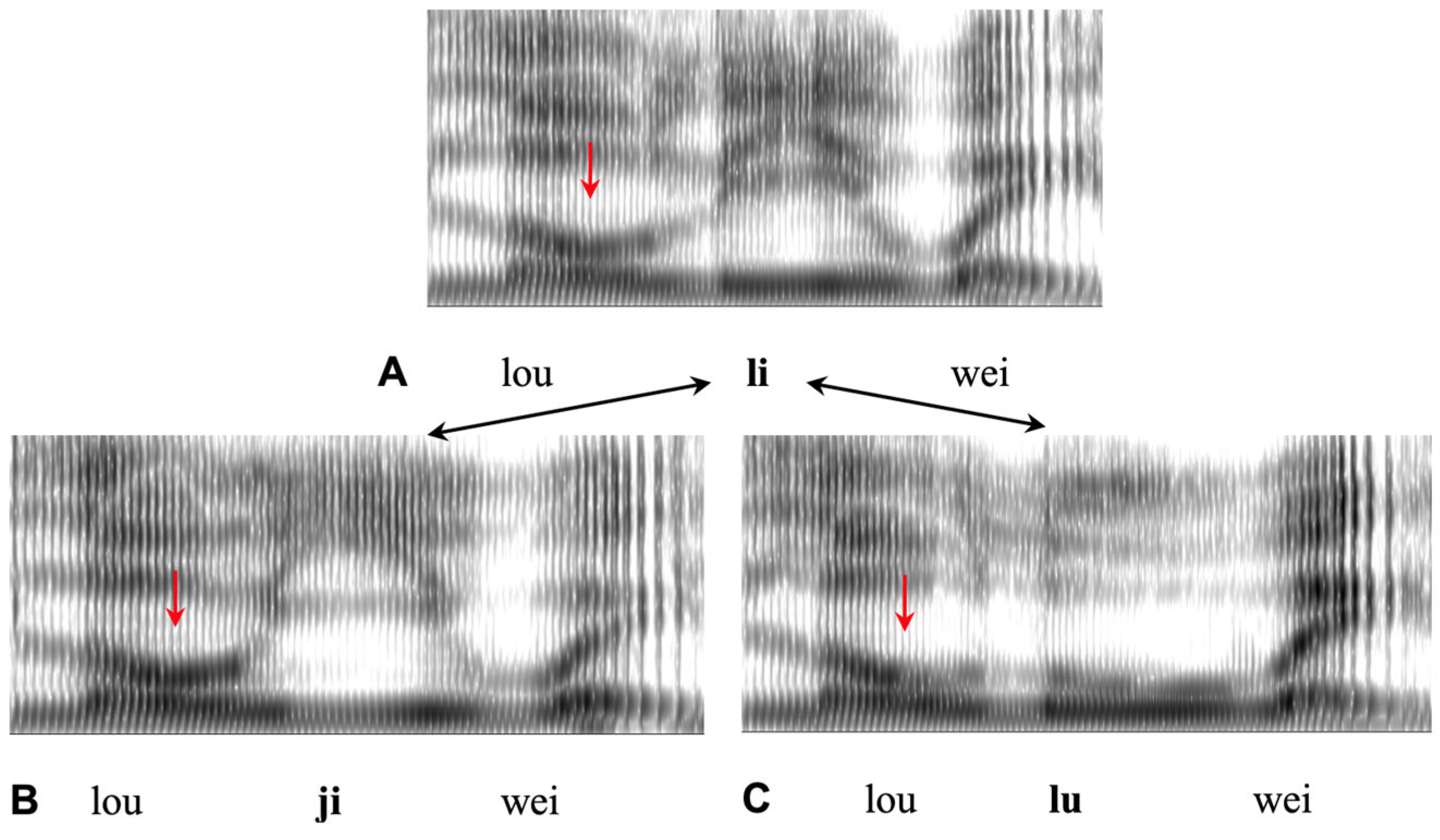

- Liu, Z.; Xu, Y.; Hsieh, F.-f. Coarticulation as synchronised CV co-onset – Parallel evidence from articulation and acoustics. J. Phon. 2022, 90, 101116. [Google Scholar] [CrossRef]

- Xu, Y. Speech as articulatory encoding of communicative functions. In Proceedings of the 16th International Congress of Phonetic Sciences, Saarbrücken, Germany, 6–10 August 2007; pp. 25–30. [Google Scholar]

- Xu, Y.; Gao, H. FormantPro as a tool for speech analysis and segmentation. Rev. Estud. Ling. 2018, 26, 1435–1454. [Google Scholar] [CrossRef]

- Xu, Y.; Liu, F. Determining the temporal interval of segments with the help of F0 contours. J. Phon. 2007, 35, 398–420. [Google Scholar] [CrossRef]

- Liu, Z.; Xu, Y. Segmental alignment of English syllables with singleton and cluster onsets. In Proceedings of the Interspeech 2021, Brno, Czech Republic, 30 August–3 September 2021. [Google Scholar]

- Browman, C.P.; Goldstein, L.M. Competing constraints on intergestural coordination and self-organization of phonological structures. Cah. l’ICP. Bull. Commun. Parlée 2000, 5, 25–34. [Google Scholar]

- Bladon, R.A.W.; Al-Bamerni, A. Coarticulation resistance of English /l/. J. Phon. 1976, 4, 135–150. [Google Scholar] [CrossRef]

- Recasens, D. Vowel-to-vowel coarticulation in Catalan VCV sequences. J. Acoust. Soc. Am. 1984, 76, 1624–1635. [Google Scholar] [CrossRef]

- Recasens, D. V-to-C coarticulation in Catalan VCV sequences: An articulatory and acoustical study. J. Phon. 1984, 12, 61–73. [Google Scholar] [CrossRef]

- Dembowski, J.; Lindstrom, M.J.; Westbury, J.R. Articulator point variability in the production of stop consonants. In Neuromotor Speech Disorders: Nature, Assessment, and Management; Cannito, M.P., Yorkston, K.M., Beukelman, D.R., Eds.; Paul H. Brookes: Baltimore, MD, USA, 1998; pp. 27–46. [Google Scholar]

- Xu, A.; Birkholz, P.; Xu, Y. Coarticulation as synchronized dimension-specific sequential target approximation: An articulatory synthesis simulation. In Proceedings of the 19th International Congress of Phonetic Sciences, Melbourne, Australia, 5–9 August 2019. [Google Scholar]

- Birkholz, P.; Jackel, D. A three-dimensional model of the vocal tract for speech synthesis. In Proceedings of the 15th International Congress of Phonetic Sciences, Barcelona, Spain, 3–9 August 2003; pp. 2597–2600. [Google Scholar]

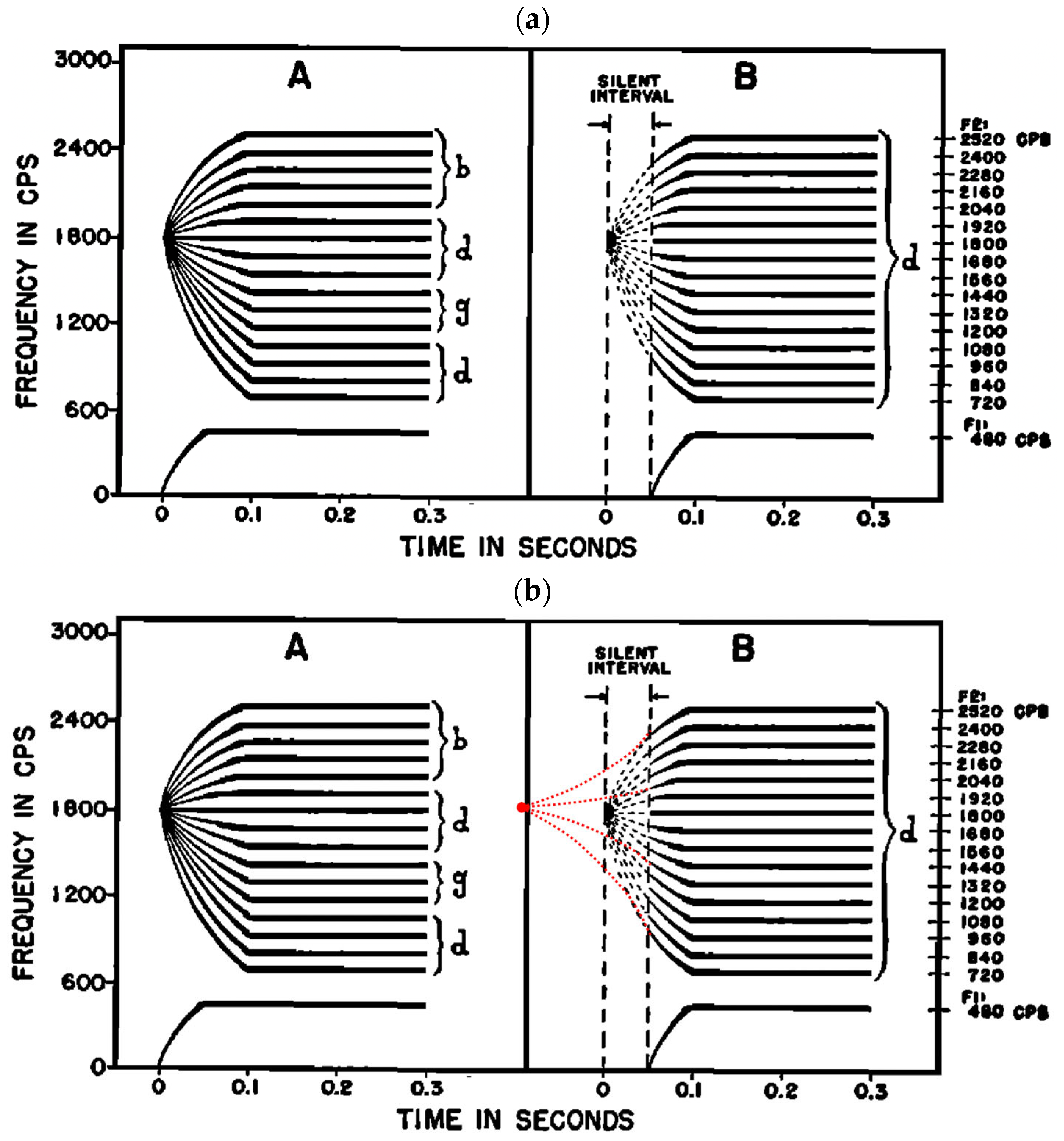

- Cooper, F.S.; Delattre, P.C.; Liberman, A.M.; Borst, J.M.; Gerstman, L.J. Some experiments on the perception of synthetic speech sounds. J. Acoust. Soc. Am. 1952, 24, 597–606. [Google Scholar] [CrossRef]

- Delattre, P.C.; Liberman, A.M.; Cooper, F.S. Acoustic Loci and Transitional Cues for Consonants. J. Acoust. Soc. Am. 1955, 27, 769–773. [Google Scholar] [CrossRef]

- Liberman, A.M.; Cooper, F.S.; Shankweiler, D.P.; Studdert-Kennedy, M.G. Perception of the speech code. Psychol. Rev. 1967, 74, 431–461. [Google Scholar] [CrossRef] [PubMed]

- Cooper, F.S.; Liberman, A.M.; Borst, J.M. The interconversion of audible and visible patterns as a basis for research in the perception of speech. Proc. Natl. Acad. Sci. USA 1951, 37, 318–325. [Google Scholar] [CrossRef] [PubMed]

- Lindblom, B.; Sussman, H.M. Dissecting coarticulation: How locus equations happen. J. Phon. 2012, 40, 1–19. [Google Scholar] [CrossRef]

- Fowler, C.A. Invariants, specifiers, cues: An investigation of locus equations as information for place of articulation. Percept. Psychophys. 1994, 55, 597–610. [Google Scholar] [CrossRef]

- Iskarous, K.; Fowler, C.A.; Whalen, D.H. Locus equations are an acoustic expression of articulator synergy. J. Acoust. Soc. Am. 2010, 128, 2021–2032. [Google Scholar] [CrossRef]

- Benoit, C. Note on the use of correlation in speech timing. J. Acoust. Soc. Am. 1986, 80, 1846–1849. [Google Scholar] [CrossRef]

- Löfqvist, A. Proportional timing in speech motor control. J. Phon. 1991, 19, 343–350. [Google Scholar] [CrossRef]

- Munhall, K.G. An examination of intra-articulator relative timing. J. Acoust. Soc. Am. 1985, 78, 1548–1553. [Google Scholar] [CrossRef]

- Ohala, J.J.; Kawasaki, H. Prosodic phonology and phonetics. Phonology 1984, 1, 113–127. [Google Scholar] [CrossRef]

- Ling, B.; Liang, J. Tonal alignment in Shanghai Chinese. In Proceedings of the COCOSDA2015, Shanghai, China, 28–30 October 2015; pp. 128–132. [Google Scholar]

- Caspers, J.; van Heuven, V.J. Effects of time pressure on the phonetic realization of the Dutch accent-lending pitch rise and fall. Phonetica 1993, 50, 161–171. [Google Scholar] [CrossRef]

- Ladd, D.R.; Mennen, I.; Schepman, A. Phonological conditioning of peak alignment in rising pitch accents in Dutch. J. Acoust. Soc. Am. 2000, 107, 2685–2696. [Google Scholar] [CrossRef] [PubMed]

- Prieto, P.; Torreira, F. The segmental anchoring hypothesis revisited: Syllable structure and speech rate effects on peak timing in Spanish. J. Phon. 2007, 35, 473–500. [Google Scholar] [CrossRef]

- Arvaniti, A.; Ladd, D.R.; Mennen, I. Stability of tonal alignment: The case of Greek prenuclear accents. J. Phon. 1998, 26, 3–25. [Google Scholar] [CrossRef]

- Ladd, D.R.; Faulkner, D.; Faulkner, H.; Schepman, A. Constant “segmental anchoring” of F0 movements under changes in speech rate. J. Acoust. Soc. Am. 1999, 106, 1543–1554. [Google Scholar] [CrossRef] [PubMed]

- Xu, Y.; Xu, C.X. Phonetic realization of focus in English declarative intonation. J. Phon. 2005, 33, 159–197. [Google Scholar] [CrossRef]

- D’Imperio, M. Focus and tonal structure in Neapolitan Italian. Speech Commun. 2001, 33, 339–356. [Google Scholar] [CrossRef]

- Frota, S. Tonal association and target alignment in European Portuguese nuclear falls. In Laboratory Phonology VII; Gussenhoven, C., Warner, N., Eds.; Mouton de Gruyter: Berlin/Heidelberg, Germany, 2002; pp. 387–418. [Google Scholar]

- Atterer, M.; Ladd, D.R. On the phonetics and phonology of “segmental anchoring” of F0: Evidence from German. J. Phon. 2004, 32, 177–197. [Google Scholar] [CrossRef]

- Yeou, M. Effects of focus, position and syllable structure on F0 alignment patterns in Arabic. In Proceedings of the JEP-TALN 2004, Arabic Language Processing, Fez, Morocco, 19–22 April 2004; pp. 19–22. [Google Scholar]

- Sadat-Tehrani, N. The alignment of L+H* pitch accents in Persian intonation. J. Int. Phon. Assoc. 2009, 39, 205–230. [Google Scholar] [CrossRef]

- Xu, C.X.; Xu, Y. Effects of consonant aspiration on Mandarin tones. J. Int. Phon. Assoc. 2003, 33, 165–181. [Google Scholar] [CrossRef]

- Wong, Y.W.; Xu, Y. Consonantal perturbation of f0 contours of Cantonese tones. In Proceedings of the 16th International Congress of Phonetic Sciences, Saarbrücken, Germany, 6–10 August 2007; pp. 1293–1296. [Google Scholar]

- Xu, Y.; Xu, A. Consonantal F0 perturbation in American English involves multiple mechanisms. J. Acoust. Soc. Am. 2021, 149, 2877–2895. [Google Scholar] [CrossRef]

- Xu, Y.; Prom-on, S. Degrees of freedom in prosody modeling. In Speech Prosody in Speech Synthesis—Modeling, Realizing, Converting Prosody for High Quality and Flexible Speech Synthesis; Hirose, K., Tao, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2015; pp. 19–34. [Google Scholar]

- Kang, W.; Xu, Y. Tone-syllable synchrony in Mandarin: New evidence and implications. Speech Commun. 2024, 163, 103121. [Google Scholar] [CrossRef]

- Nguyen, N.; Fagyal, Z. Acoustic aspects of vowel harmony in French. J. Phon. 2008, 36, 1–27. [Google Scholar] [CrossRef]

- Magen, H.S. The extent of vowel-to-vowel coarticulation in English. J. Phon. 1997, 25, 187–205. [Google Scholar] [CrossRef]

- Grosvald, M. Long-Distance Coarticulation in Spoken and Signed Language: An Overview. Lang. Linguist. Compass 2010, 4, 348–362. [Google Scholar] [CrossRef]

- Xu, Y. Speech melody as articulatorily implemented communicative functions. Speech Commun. 2005, 46, 220–251. [Google Scholar] [CrossRef]

- Gafos, A.I.; Benus, S. Dynamics of phonological cognition. Cogn. Sci. 2006, 30, 905–943. [Google Scholar] [CrossRef]

- Ohala, J.J. Towards a universal, phonetically-based, theory of vowel harmony. In Proceedings of the Third International Conference on Spoken Language Processing, Yokohama, Japan, 18–22 September 1994; pp. 491–494. [Google Scholar]

- Heid, S.; Hawkins, S. An acoustical study of long-domain /r/ and /l/ coarticulation. In Proceedings of the 5th Seminar on Speech Production: Models and Data, Kloster Seeon, Germany, 1–4 May 2000; pp. 77–80. [Google Scholar]

- West, P. The extent of coarticulation of English liquids: An acoustic and articulatory study. In Proceedings of the 14th International Congress of Phonetic Sciences, San Francisco, CA, USA, 1–7 August 1999; pp. 1901–1904. [Google Scholar]

- Chiu, F.; Fromont, L.; Lee, A.; Xu, Y. Long-distance anticipatory vowel-to-vowel assimilatory effects in French and Japanese. In Proceedings of the 18th International Congress of Phonetic Sciences, Glasgow, UK, 10–14 August 2015; pp. 1008–1012. [Google Scholar]

- Peterson, G.E.; Wang, W.S.-Y.; Sivertsen, E. Segmentation techniques in speech synthesis. J. Acoust. Soc. Am. 1958, 30, 739–742. [Google Scholar] [CrossRef]

- Taylor, P. Text-to-Speech Synthesis; Cambridge University Press: Cambridge, UK, 2009. [Google Scholar]

- Ohala, J.J. Alternatives to the sonority hierarchy for explaining segmental sequential constraints. In Papers from the Parasession on the Syllable; Chicago Linguistic Society: Chicago, IL, USA, 1992; pp. 319–338. [Google Scholar]

- Buchanan, J.J.; Ryu, Y.U. The interaction of tactile information and movement amplitude in a multijoint bimanual circle-tracing task: Phase transitions and loss of stability. Q. J. Exp. Psychol. Sect. A 2005, 58, 769–787. [Google Scholar] [CrossRef]

- Johansson, R.S.; Flanagan, J.R. Coding and use of tactile signals from the fingertips in object manipulation tasks. Nat. Rev. Neurosci. 2009, 10, 345. [Google Scholar] [CrossRef]

- Kelso, J.A.S.; Fink, P.W.; DeLaplain, C.R.; Carson, R.G. Haptic information stabilizes and destabilizes coordination dynamics. Proc. R. Soc. Lond. B Biol. Sci. 2001, 268, 1207–1213. [Google Scholar] [CrossRef]

- Koh, K.; Kwon, H.J.; Yoon, B.C.; Cho, Y.; Shin, J.-H.; Hahn, J.-O.; Miller, R.H.; Kim, Y.H.; Shim, J.K. The role of tactile sensation in online and offline hierarchical control of multi-finger force synergy. Exp. Brain Res. 2015, 233, 2539–2548. [Google Scholar] [CrossRef] [PubMed]

- Baldissera, F.; Cavallari, P.; Marini, G.; Tassone, G. Differential control of in-phase and anti-phase coupling of rhythmic movements of ipsilateral hand and foot. Exp. Brain Res. 1991, 83, 375–380. [Google Scholar] [CrossRef] [PubMed]

- Mechsner, F.; Stenneken, P.; Cole, J.; Aschersleben, G.; Prinz, W. Bimanual circling in deafferented patients: Evidence for a role of visual forward models. J. Neuropsychol. 2007, 1, 259–282. [Google Scholar] [CrossRef] [PubMed]

- Ridderikhoff, A.; Peper, C.E.; Beek, P.J. Error correction in bimanual coordination benefits from bilateral muscle activity: Evidence from kinesthetic tracking. Exp. Brain Res. 2007, 181, 31–48. [Google Scholar] [CrossRef] [PubMed]

- Spencer, R.M.C.; Ivry, R.B.; Cattaert, D.; Semjen, A. Bimanual coordination during rhythmic movements in the absence of somatosensory feedback. J. Neurophysiol. 2005, 94, 2901–2910. [Google Scholar] [CrossRef]

- Wilson, A.D.; Bingham, G.P.; Craig, J.C. Proprioceptive perception of phase variability. J. Exp. Psychol. Hum. Percept. Perform. 2003, 29, 1179–1190. [Google Scholar] [CrossRef]

- Masapollo, M.; Nittrouer, S. Immediate auditory feedback regulates inter-articulator speech coordination in service to phonetic structure. J. Acoust. Soc. Am. 2024, 156, 1850–1861. [Google Scholar] [CrossRef]

- Cowie, R.I.; Douglas-Cowie, E. Speech production in profound postlingual deafness. In Hearing Science and Hearing Disorders; Elsevier: Amsterdam, The Netherlands, 1983; pp. 183–230. [Google Scholar]

- Lane, H.; Webster, J.W. Speech deterioration in postlingually deafened adults. J. Acoust. Soc. Am. 1991, 89, 859–866. [Google Scholar] [CrossRef]

- Lyubimova, Z.V.; Sisengalieva, G.Z.; Chulkova, N.Y.; Smykova, O.I.; Selin, S.V. Role of tactile receptor structures of the tongue in speech sound production of infants of the first year of life. Bull. Exp. Biol. Med. 1999, 127, 115–119. [Google Scholar] [CrossRef]

- Ringel, R.L.; Ewanowski, S.J. Oral Perception: 1. Two-Point Discrimination. J. Speech Lang. Hear. Res. 1965, 8, 389–398. [Google Scholar] [CrossRef]

- Kent, R.D. The Feel of Speech: Multisystem and Polymodal Somatosensation in Speech Production. J. Speech Lang. Hear. Res. 2024, 67, 1424–1460. [Google Scholar] [CrossRef] [PubMed]

- De Letter, M.; Criel, Y.; Lind, A.; Hartsuiker, R.; Santens, P. Articulation lost in space. The effects of local orobuccal anesthesia on articulation and intelligibility of phonemes. Brain Lang. 2020, 207, 104813. [Google Scholar] [CrossRef] [PubMed]

- Sproat, R.; Fujimura, O. Allophonic variation in English /l/ and its implications for phonetic implementation. J. Phon. 1993, 21, 291–311. [Google Scholar] [CrossRef]

- Gick, B. Articulatory correlates of ambisyllabicity in English glides and liquids. In Papers in Laboratory Phonology VI: Constraints on Phonetic Interpretation; Local, J., Ogden, R., Temple, R., Eds.; Cambridge University Press: Cambridge, UK, 2003; pp. 222–236. [Google Scholar]

- Clements, G.N.; Keyser, S.J. CV Phonology: A Generative Theory of the Syllable. Linguist. Inq. Monogr. Camb. Mass. 1983, 9, 1–191. [Google Scholar]

- Locke, J.L. Phonological Acquisition and Change; Academic Press: London, UK, 1983. [Google Scholar]

- Gao, H.; Xu, Y. Ambisyllabicity in English: How real is it? In Proceedings of the 9th Phonetics Conference of China (PCC2010), Tianjin, China, 28 May–1 June 2010. [Google Scholar]

- Xu, Y. Acoustic-phonetic characteristics of junctures in Mandarin Chinese. Zhongguo Yuwen [J. Chin. Linguist.] 1986, 4, 353–360. (In Chinese) [Google Scholar]

- Anderson-Hsieh, J.; Riney, T.; Koehler, K. Connected speech modifications in the English of Japanese ESL learners. Ideal 1994, 7, 31–52. [Google Scholar]

- Hieke, A.E. Linking as a marker of fluent speech. Lang. Speech 1984, 27, 343–354. [Google Scholar] [CrossRef]

- Gaskell, M.G.; Spinelli, E.; Meunier, F. Perception of resyllabification in French. Mem. Cognit. 2002, 30, 798–810. [Google Scholar] [CrossRef]

- Treiman, R.; Danis, C. Syllabification of intervocalic consonants. J. Mem. Lang. 1988, 27, 87–104. [Google Scholar] [CrossRef]

- Strycharczuk, P.; Kohlberger, M. Resyllabification reconsidered: On the durational properties of word-final /s/ in Spanish. Lab. Phonol. 2016, 7, 1–24. [Google Scholar]

- Liu, Z.; Xu, Y. Deep learning assessment of syllable affiliation of intervocalic consonants. J. Acoust. Soc. Am. 2023, 153, 848–866. [Google Scholar] [CrossRef] [PubMed]

- de Jong, K. Rate-induced resyllabification revisited. Lang. Speech 2001, 44, 197–216. [Google Scholar] [CrossRef] [PubMed]

- Eriksson, A. Aural/acoustic vs. automatic methods in forensic phonetic case work. In Forensic Speaker Recognition: Law Enforcement and Counter-Terrorism; Neustein, A., Patil, H.A., Eds.; Springer: New York, NY, USA, 2012; pp. 41–69. [Google Scholar]

- Jespersen, O. Fonetik: En Systematisk Fremstilling af Læren om Sproglyd; Det Schøbergse Forlag: København, Denmark, 1899. [Google Scholar]

- Whitney, W.D. The relation of vowel and consonant. J. Am. Orient. Soc. 1865, 8, 357–373. [Google Scholar]

- Clements, G.N. The Role of the Sonority Cycle in Core Syllabification. In Papers in Laboratory Phonology 1: Between the Grammar and Physics of Speech; Beckman, M., Ed.; Cambridge University Press: Cambridge, UK, 1990; pp. 283–333. [Google Scholar]

- Guenther, F.H.; Ghosh, S.S.; Tourville, J.A. Neural modeling and imaging of the cortical interactions underlying syllable production. Brain Lang. 2006, 96, 280–301. [Google Scholar] [CrossRef]

- Tourville, J.A.; Guenther, F.H. The DIVA model: A neural theory of speech acquisition and production. Lang. Cogn. Process. 2011, 26, 952–981. [Google Scholar] [CrossRef]

- Cohen, A.; ’t Hart, J. On the anatomy of intonation. Lingua 1967, 19, 177–192. [Google Scholar] [CrossRef]

- Kuhl, P.K. On babies, birds, modules, and mechanisms: A comparative approach to the acquisition of vocal communication. In The Comparative Psychology of Audition: Perceiving Complex Sounds; Dooling, R.J., Hulse, S.H., Eds.; Erlbaum: Hillsdale, NJ, USA, 1989; pp. 379–419. [Google Scholar]

- Margoliash, D.; Schmidt, M.F. Sleep, off-line processing, and vocal learning. Brain Lang. 2010, 115, 45–58. [Google Scholar] [CrossRef]

- Meng, H.; Chen, Y.; Liu, Z.; Xu, Y. Mandarin tone production can be learned under perceptual guidance—A machine learning simulation. In Proceedings of the 20th International Congress of Phonetic Sciences, Prague, Czech Republic, 7–11 August 2023; pp. 2324–2328. [Google Scholar]

- Guenther, F.H.; Hickok, G. Neural models of motor speech control. In Neurobiology of Language; Hickok, G., Small, S.L., Eds.; Elsevier: San Diego, CA, USA, 2016; pp. 725–740. [Google Scholar]

- Xu, Y.; Larson, C.R.; Bauer, J.J.; Hain, T.C. Compensation for pitch-shifted auditory feedback during the production of Mandarin tone sequences. J. Acoust. Soc. Am. 2004, 116, 1168–1178. [Google Scholar] [CrossRef]

- Fry, D.B. Experiments in the perception of stress. Lang. Speech 1958, 1, 126–152. [Google Scholar] [CrossRef]

- Nakatani, L.H.; O’Connor, K.D.; Aston, C.H. Prosodic aspects of American English speech rhythm. Phonetica 1981, 38, 84–106. [Google Scholar] [CrossRef]

- Wang, C.; Xu, Y.; Zhang, J. Functional timing or rhythmical timing, or both? A corpus study of English and Mandarin duration. Front. Psychol. 2023, 13, 869049. [Google Scholar] [CrossRef] [PubMed]

- Baker, R.E.; Bradlow, A.R. Variability in word duration as a function of probability, speech style, and prosody. Lang. Speech 2009, 52, 391–413. [Google Scholar] [CrossRef] [PubMed]

- Patel, A.D. Music, Language, and the Brain; Oxford University Press: New York, NY, USA, 2008. [Google Scholar]

- Belyk, M.; Brown, S. The origins of the vocal brain in humans. Neurosci. Biobehav. Rev. 2017, 77, 177–193. [Google Scholar] [CrossRef]

- Sporns, O. Small-world connectivity, motif composition, and complexity of fractal neuronal connections. Biosystems 2006, 85, 55–64. [Google Scholar] [CrossRef]

- Sporns, O.; Zwi, J.D. The small world of the cerebral cortex. Neuroinformatics 2004, 2, 145–162. [Google Scholar] [CrossRef]

- Patel, A.D. Beat-based dancing to music has evolutionary foundations in advanced vocal learning. BMC Neurosci. 2024, 25, 65. [Google Scholar] [CrossRef]

- Hickok, G. The “coordination conjecture” as an alternative to Patel’s fortuitous enhancement hypothesis for the relation between vocal learning and beat-based dancing. BMC Neurosci. 2024, 25, 59. [Google Scholar] [CrossRef]

- Hickok, G.; Venezia, J.; Teghipco, A. Beyond Broca: Neural architecture and evolution of a dual motor speech coordination system. Brain 2023, 146, 1775–1790. [Google Scholar] [CrossRef]

- Dichter, B.K.; Breshears, J.D.; Leonard, M.K.; Chang, E.F. The control of vocal pitch in human laryngeal motor cortex. Cell 2018, 174, 21–31.e9. [Google Scholar] [CrossRef]

- Duraivel, S.; Rahimpour, S.; Chiang, C.-H.; Trumpis, M.; Wang, C.; Barth, K.; Harward, S.C.; Lad, S.P.; Friedman, A.H.; Southwell, D.G. High-resolution neural recordings improve the accuracy of speech decoding. Nat. Commun. 2023, 14, 6938. [Google Scholar] [CrossRef]

- Kubikova, L.; Bosikova, E.; Cvikova, M.; Lukacova, K.; Scharff, C.; Jarvis, E.D. Basal ganglia function, stuttering, sequencing, and repair in adult songbirds. Sci. Rep. 2014, 4, 6590. [Google Scholar] [CrossRef] [PubMed]

- Tanaka, M.; Alvarado, J.S.; Murugan, M.; Mooney, R. Focal expression of mutant huntingtin in the songbird basal ganglia disrupts cortico-basal ganglia networks and vocal sequences. Proc. Natl. Acad. Sci. USA 2016, 113, E1720–E1727. [Google Scholar] [CrossRef] [PubMed]

- Perkell, J.S. Movement goals and feedback and feedforward control mechanisms in speech production. J. Neurolinguist. 2012, 25, 382–407. [Google Scholar] [CrossRef]

- Kuhl, P.K.; Meltzoff, A.N. Infant vocalizations in response to speech: Vocal imitation and developmental change. J. Acoust. Soc. Am. 1996, 100, 2425–2438. [Google Scholar] [CrossRef]

- Chartier, J.; Anumanchipalli, G.K.; Johnson, K.; Chang, E.F. Encoding of articulatory kinematic trajectories in human speech sensorimotor cortex. Neuron 2018, 98, 1042–1054.e4. [Google Scholar] [CrossRef]

- ter Haar, S.M.; Fernandez, A.A.; Gratier, M.; Knörnschild, M.; Levelt, C.; Moore, R.K.; Vellema, M.; Wang, X.; Oller, D.K. Cross-species parallels in babbling: Animals and algorithms. Philos. Trans. R. Soc. B Biol. Sci. 2021, 376, 20200239. [Google Scholar] [CrossRef]

- Grimme, B.; Fuchs, S.; Perrier, P.; Schöner, G. Limb versus speech motor control: A conceptual review. Motor Control 2011, 15, 5–33. [Google Scholar] [CrossRef]

- Konczak, J.; Vander Velden, H.; Jaeger, L. Learning to play the violin: Motor control by freezing, not freeing degrees of freedom. J. Mot. Behav. 2009, 41, 243–252. [Google Scholar] [CrossRef]

- Morasso, P. A vexing question in motor control: The degrees of freedom problem. Front. Bioeng. Biotechnol. 2022, 9, 783501. [Google Scholar] [CrossRef]

| Property | Motor Synchrony | Entrainment |
|---|---|---|
| Synchrony in a single cycle? | Yes | N/A |
| Speed of achieving synchrony | Immediate (1–2 cycles) | Many cycles |
| Similarity in natural frequency? | No | Yes |
| In-synch out-synch undulation? | No | Yes |
| Under central/shared control? | Yes | No |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, Y. Syllable as a Synchronization Mechanism That Makes Human Speech Possible. Brain Sci. 2025, 15, 33. https://doi.org/10.3390/brainsci15010033
