Dual Representation of the Auditory Space

Abstract

1. Introduction

2. Methods

2.1. Search Strategy

2.2. Inclusion Criteria

2.3. Data Analysis

3. Results

3.1. Focal Brain Lesions: Dissociation between Explicit and Implicit Use of Auditory Spatial Cues

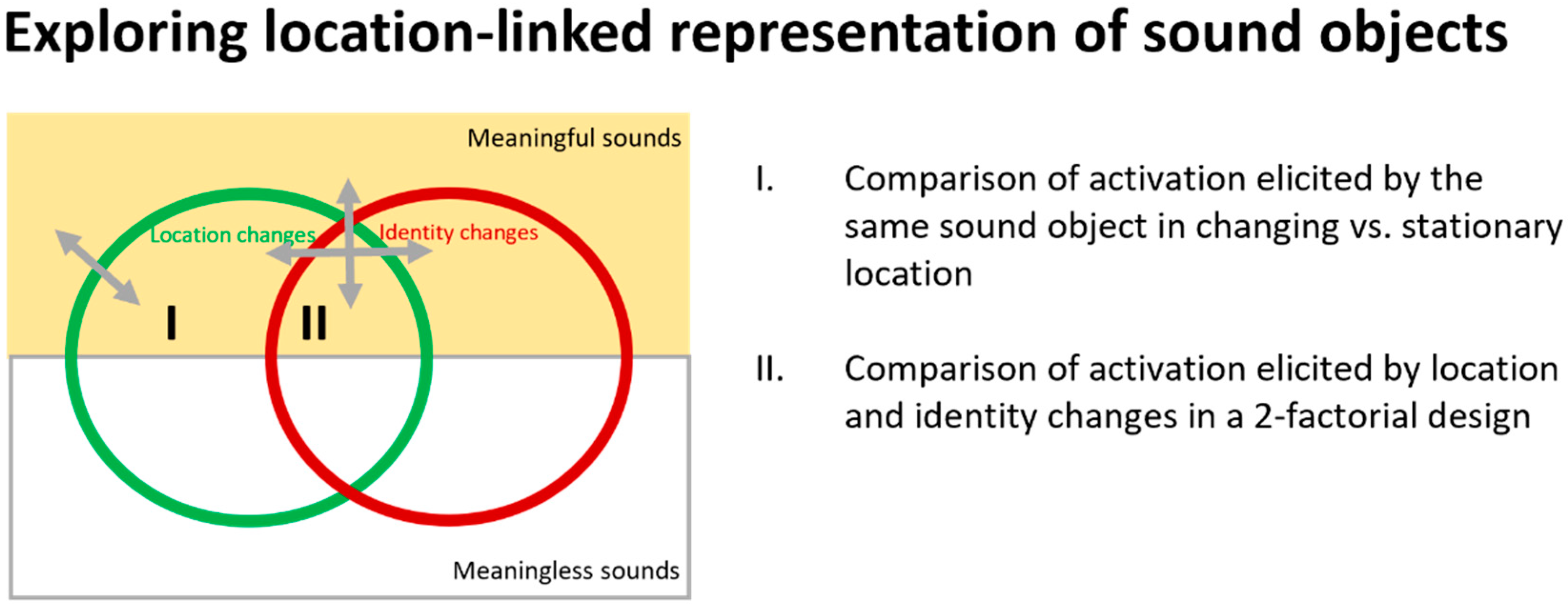

3.2. Neuroimaging Paradigms to Investigate Location-Linked Representation of Sound Objects

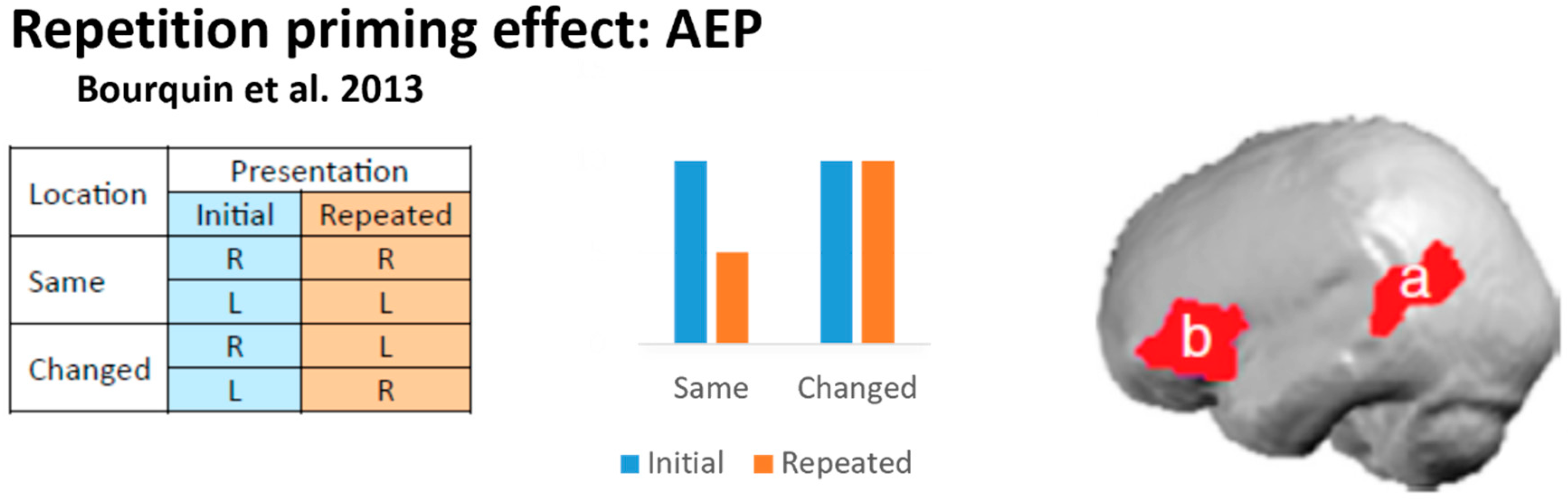

3.3. AEP: Left Hemispheric Contribution to the Rapid Discrimination of Location Changes

3.4. fMRI Evidence for Combined Encoding of Sound Identity and Location

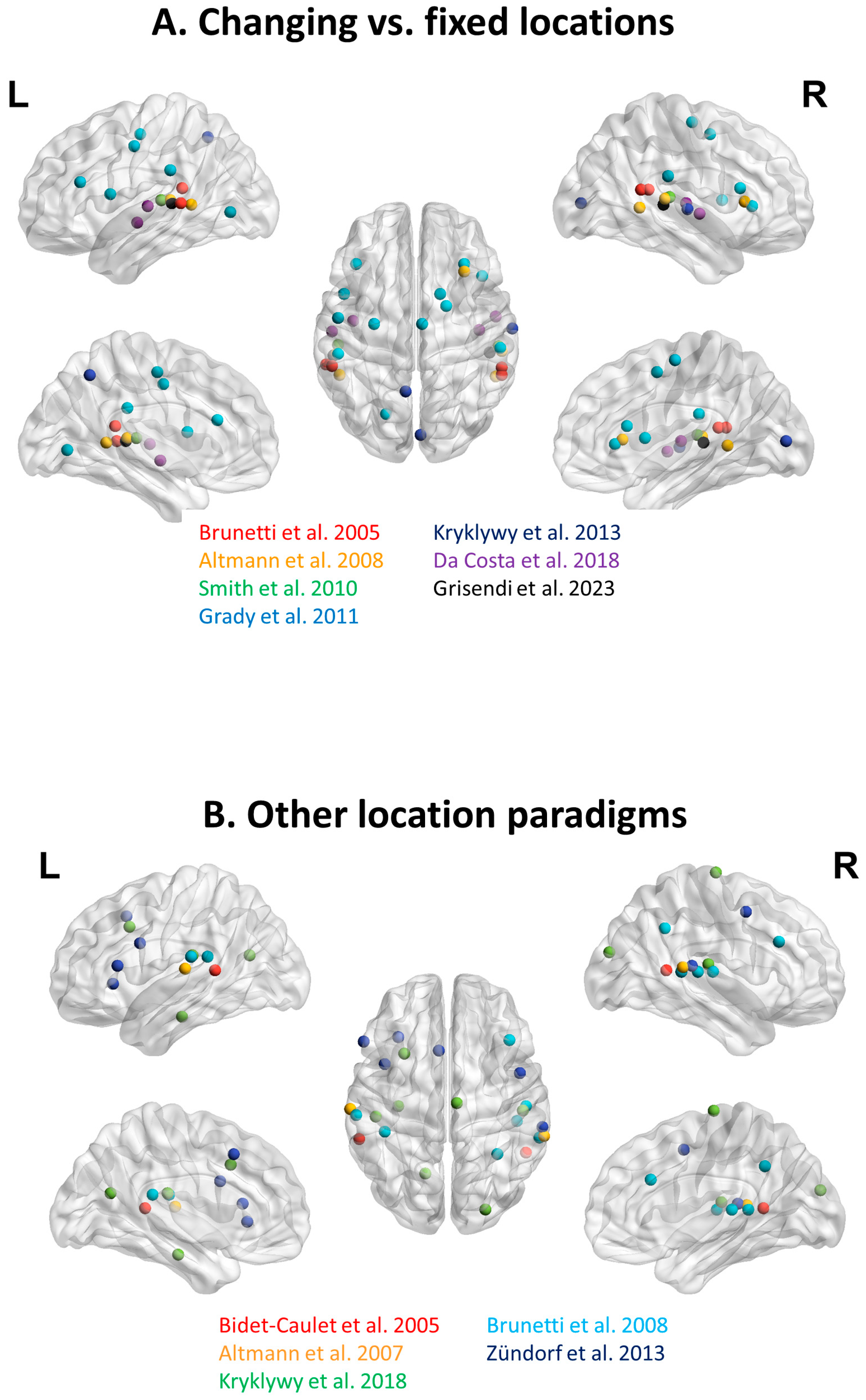

3.4.1. Changing vs. Fixed Locations

3.4.2. Other Location Paradigms

3.4.3. Contribution of Early-Stage Auditory Areas

3.5. fMRI Evidence for Location-Linked Encoding of Emotional Valence

4. Discussion

4.1. Current Evidence for Location-Linked Representation of Sound Objects

4.2. Outstanding Issues

4.3. Clinical Impact

4.4. Virtual Reality and Artificial Intelligence Applications

5. Conclusions

Funding

Conflicts of Interest

References

- Ahveninen, J.; Jääskeläinen, I.P.; Raij, T.; Bonmassar, G.; Devore, S.; Hämäläinen, M.; Levänen, S.; Lin, F.-H.; Sams, M.; Shinn-Cunningham, B.G.; et al. Task-Modulated “What” and “Where” Pathways in Human Auditory Cortex. Proc. Natl. Acad. Sci. USA 2006, 103, 14608–14613.

- Alain, C.; Arnott, S.R.; Hevenor, S.; Graham, S.; Grady, C.L. “What” and “Where” in the Human Auditory System. Proc. Natl. Acad. Sci. USA 2001, 98, 12301–12306.

- Anourova, I.; Nikouline, V.V.; Ilmoniemi, R.J.; Hotta, J.; Aronen, H.J.; Carlson, S. Evidence for Dissociation of Spatial and Nonspatial Auditory Information Processing. Neuroimage 2001, 14, 1268–1277.

- Arnott, S.R.; Binns, M.A.; Grady, C.L.; Alain, C. Assessing the Auditory Dual-Pathway Model in Humans. Neuroimage 2004, 22, 401–408.

- Barrett, D.J.K.; Hall, D.A. Response Preferences for “What” and “Where” in Human Non-Primary Auditory Cortex. NeuroImage 2006, 32, 968–977.

- Bergerbest, D.; Ghahremani, D.G.; Gabrieli, J.D.E. Neural Correlates of Auditory Repetition Priming: Reduced fMRI Activation in the Auditory Cortex. J. Cogn. Neurosci. 2004, 16, 966–977.

- De Santis, L.; Clarke, S.; Murray, M.M. Automatic and Intrinsic Auditory “What” and “Where” Processing in Humans Revealed by Electrical Neuroimaging. Cereb. Cortex 2007, 17, 9–17.

- Doehrmann, O.; Naumer, M.J.; Volz, S.; Kaiser, J.; Altmann, C.F. Probing Category Selectivity for Environmental Sounds in the Human Auditory Brain. Neuropsychologia 2008, 46, 2776–2786.

- Griffiths, T.D.; Kumar, S.; Warren, J.D.; Stewart, L.; Stephan, K.E.; Friston, K.J. Approaches to the Cortical Analysis of Auditory Objects. Hear. Res. 2007, 229, 46–53.

- Hall, A.J.; Butler, B.E.; Lomber, S.G. The Cat’s Meow: A High-Field fMRI Assessment of Cortical Activity in Response to Vocalizations and Complex Auditory Stimuli. Neuroimage 2016, 127, 44–57.

- Hart, H.C.; Palmer, A.R.; Hall, D.A. Different Areas of Human Non-Primary Auditory Cortex Are Activated by Sounds with Spatial and Nonspatial Properties. Hum. Brain Mapp. 2004, 21, 178–190.

- Johnson, B.W.; Hautus, M.J.; Hayns, A.L.; Fitzgibbon, B.M. Differential Cortical Processing of Location and Pitch Changes in Dichotic Pitch. NeuroReport 2006, 17, 389.

- Kaas, J.H.; Hackett, T.A. Subdivisions of Auditory Cortex and Processing Streams in Primates. Proc. Natl. Acad. Sci. USA 2000, 97, 11793–11799.

- Maeder, P.P.; Meuli, R.A.; Adriani, M.; Bellmann, A.; Fornari, E.; Thiran, J.P.; Pittet, A.; Clarke, S. Distinct Pathways Involved in Sound Recognition and Localization: A Human fMRI Study. Neuroimage 2001, 14, 802–816.

- Rauschecker, J.P.; Scott, S.K. Maps and Streams in the Auditory Cortex: Nonhuman Primates Illuminate Human Speech Processing. Nat. Neurosci. 2009, 12, 718–724.

- Rauschecker, J.P.; Tian, B. Mechanisms and Streams for Processing of “What” and “Where” in Auditory Cortex. Proc. Natl. Acad. Sci. USA 2000, 97, 11800–11806.

- Tian, B.; Reser, D.; Durham, A.; Kustov, A.; Rauschecker, J.P. Functional Specialization in Rhesus Monkey Auditory Cortex. Science 2001, 292, 290–293.

- Warren, J.D.; Griffiths, T.D. Distinct Mechanisms for Processing Spatial Sequences and Pitch Sequences in the Human Auditory Brain. J. Neurosci. 2003, 23, 5799–5804.

- Adriani, M.; Maeder, P.; Meuli, R.; Thiran, A.B.; Frischknecht, R.; Villemure, J.-G.; Mayer, J.; Annoni, J.-M.; Bogousslavsky, J.; Fornari, E.; et al. Sound Recognition and Localization in Man: Specialized Cortical Networks and Effects of Acute Circumscribed Lesions. Exp. Brain Res. 2003, 153, 591–604.

- Clarke, S.; Bellmann, A.; Meuli, R.A.; Assal, G.; Steck, A.J. Auditory Agnosia and Auditory Spatial Deficits Following Left Hemispheric Lesions: Evidence for Distinct Processing Pathways. Neuropsychologia 2000, 38, 797–807.

- Clarke, S.; Bellmann Thiran, A.; Maeder, P.; Adriani, M.; Vernet, O.; Regli, L.; Cuisenaire, O.; Thiran, J.-P. What and Where in Human Audition: Selective Deficits Following Focal Hemispheric Lesions. Exp. Brain Res. 2002, 147, 8–15.

- Rey, B.; Frischknecht, R.; Maeder, P.; Clarke, S. Patterns of Recovery Following Focal Hemispheric Lesions: Relationship between Lasting Deficit and Damage to Specialized Networks. Restor. Neurol. Neurosci. 2007, 25, 285–294.

- Zatorre, R.J.; Penhune, V.B. Spatial Localization after Excision of Human Auditory Cortex. J. Neurosci. 2001, 21, 6321–6328.

- Zündorf, I.C.; Lewald, J.; Karnath, H.-O. Testing the Dual-Pathway Model for Auditory Processing in Human Cortex. Neuroimage 2016, 124, 672–681.

- Bisiach, E.; Cornacchia, L.; Sterzi, R.; Vallar, G. Disorders of Perceived Auditory Lateralization after Lesions of the Right Hemisphere. Brain 1984, 107 Pt 1, 37–52.

- Ruff, R.M.; Hersh, N.A.; Pribram, K.H. Auditory Spatial Deficits in the Personal and Extrapersonal Frames of Reference Due to Cortical Lesions. Neuropsychologia 1981, 19, 435–443.

- Spierer, L.; Bellmann-Thiran, A.; Maeder, P.; Murray, M.M.; Clarke, S. Hemispheric Competence for Auditory Spatial Representation. Brain 2009, 132, 1953–1966.

- Tanaka, H.; Hachisuka, K.; Ogata, H. Sound Lateralisation in Patients with Left or Right Cerebral Hemispheric Lesions: Relation with Unilateral Visuospatial Neglect. J. Neurol. Neurosurg. Psychiatry 1999, 67, 481–486.

- Thiran, A.B.; Clarke, S. Preserved Use of Spatial Cues for Sound Segregation in a Case of Spatial Deafness. Neuropsychologia 2003, 41, 1254–1261.

- Xia, M.; Wang, J.; He, Y. BrainNet Viewer: A Network Visualization Tool for Human Brain Connectomics. PLoS ONE 2013, 8, e68910.

- Duffour-Nikolov, C.; Tardif, E.; Maeder, P.; Thiran, A.B.; Bloch, J.; Frischknecht, R.; Clarke, S. Auditory Spatial Deficits Following Hemispheric Lesions: Dissociation of Explicit and Implicit Processing. Neuropsychol. Rehabil. 2012, 22, 674–696.

- Tissieres, I.; Crottaz-Herbette, S.; Clarke, S. Implicit Representation of the Auditory Space: Contribution of the Left and Right Hemispheres. Brain Struct. Funct. 2019, 224, 1569–1582.

- Tissieres, I.; Crottaz-Herbette, S.; Clarke, S. Exploring Auditory Neglect: Anatomo-Clinical Correlations of Auditory Extinction. Ann. Phys. Rehabil. Med. 2018, 61, 386–394.

- Schadwinkel, S.; Gutschalk, A. Functional Dissociation of Transient and Sustained fMRI BOLD Components in Human Auditory Cortex Revealed with a Streaming Paradigm Based on Interaural Time Differences. Eur. J. Neurosci. 2010, 32, 1970–1978.

- Schadwinkel, S.; Gutschalk, A. Transient BOLD Activity Locked to Perceptual Reversals of Auditory Streaming in Human Auditory Cortex and Inferior Colliculus. J. Neurophysiol. 2011, 105, 1977–1983.

- Best, V.; Ozmeral, E.J.; Kopco, N.; Shinn-Cunningham, B.G. Object Continuity Enhances Selective Auditory Attention. Proc. Natl. Acad. Sci. USA 2008, 105, 13174–13178.

- Best, V.; Shinn-Cunningham, B.G.; Ozmeral, E.J.; Kopco, N. Exploring the Benefit of Auditory Spatial Continuity. J. Acoust. Soc. Am. 2010, 127, EL258–EL264.

- Carhart, R.; Tillman, T.W.; Johnson, K.R. Release of Masking for Speech through Interaural Time Delay. J. Acoust. Soc. Am. 1967, 42, 124–138.

- Carhart, R.; Tillman, T.W.; Johnson, K.R. Effects of Interaural Time Delays on Masking by Two Competing Signals. J. Acoust. Soc. Am. 1968, 43, 1223–1230.

- Carhart, R.; Tillman, T.W.; Greetis, E.S. Release from Multiple Maskers: Effects of Interaural Time Disparities. J. Acoust. Soc. Am. 1969, 45, 411–418.

- Carhart, R.; Tillman, T.W.; Greetis, E.S. Perceptual Masking in Multiple Sound Backgrounds. J. Acoust. Soc. Am. 1969, 45, 694–703.

- Drennan, W.R.; Gatehouse, S.; Lever, C. Perceptual Segregation of Competing Speech Sounds: The Role of Spatial Location. J. Acoust. Soc. Am. 2003, 114, 2178–2189.

- Schubert, E.D. Some Preliminary Experiments on Binaural Time Delay and Intelligibility. J. Acoust. Soc. Am. 1956, 28, 895–901.

- Schubert, E.D.; Schultz, M.C. Some Aspects of Binaural Signal Selection. J. Acoust. Soc. Am. 1962, 34, 844–849.

- Eramudugolla, R.; McAnally, K.I.; Martin, R.L.; Irvine, D.R.F.; Mattingley, J.B. The Role of Spatial Location in Auditory Search. Hear. Res. 2008, 238, 139–146.

- Clarke, S.; Geiser, E. Roaring Lions and Chirruping Lemurs: How the Brain Encodes Sound Objects in Space. Neuropsychologia 2015, 75, 304–313.

- Schwartz, A.; McDermott, J.H.; Shinn-Cunningham, B. Spatial Cues Alone Produce Inaccurate Sound Segregation: The Effect of Interaural Time Differences. J. Acoust. Soc. Am. 2012, 132, 357–368.

- Altmann, C.F.; Henning, M.; Döring, M.K.; Kaiser, J. Effects of Feature-Selective Attention on Auditory Pattern and Location Processing. Neuroimage 2008, 41, 69–79.

- Bourquin, N.M.-P.; Murray, M.M.; Clarke, S. Location-Independent and Location-Linked Representations of Sound Objects. Neuroimage 2013, 73, 40–49.

- Brunetti, M.; Belardinelli, P.; Caulo, M.; Del Gratta, C.; Della Penna, S.; Ferretti, A.; Lucci, G.; Moretti, A.; Pizzella, V.; Tartaro, A.; et al. Human Brain Activation during Passive Listening to Sounds from Different Locations: An fMRI and MEG Study. Hum. Brain Mapp. 2005, 26, 251–261.

- Smith, K.R.; Hsieh, I.-H.; Saberi, K.; Hickok, G. Auditory Spatial and Object Processing in the Human Planum Temporale: No Evidence for Selectivity. J. Cogn. Neurosci. 2010, 22, 632–639.

- Altmann, C.F.; Bledowski, C.; Wibral, M.; Kaiser, J. Processing of Location and Pattern Changes of Natural Sounds in the Human Auditory Cortex. Neuroimage 2007, 35, 1192–1200.

- Da Costa, S.; Clarke, S.; Crottaz-Herbette, S. Keeping Track of Sound Objects in Space: The Contribution of Early-Stage Auditory Areas. Hear. Res. 2018, 366, 17–31.

- Grady, C.L.; Charlton, R.; He, Y.; Alain, C. Age Differences in fMRI Adaptation for Sound Identity and Location. Front. Hum. Neurosci. 2011, 5, 24.

- Grisendi, T.; Clarke, S.; Da Costa, S. Emotional Sounds in Space: Asymmetrical Representation within Early-Stage Auditory Areas. Front. Neurosci. 2023, 17, 1164334.

- Kryklywy, J.H.; Macpherson, E.A.; Greening, S.G.; Mitchell, D.G.V. Emotion Modulates Activity in the “What” but Not “Where” Auditory Processing Pathway. Neuroimage 2013, 82, 295–305.

- Kryklywy, J.H.; Macpherson, E.A.; Mitchell, D.G.V. Decoding Auditory Spatial and Emotional Information Encoding Using Multivariate versus Univariate Techniques. Exp. Brain Res. 2018, 236, 945–953.

- Deouell, L.Y.; Heller, A.S.; Malach, R.; D’Esposito, M.; Knight, R.T. Cerebral Responses to Change in Spatial Location of Unattended Sounds. Neuron 2007, 55, 985–996.

- Hall, D.A.; Barrett, D.J.K.; Akeroyd, M.A.; Summerfield, A.Q. Cortical Representations of Temporal Structure in Sound. J. Neurophysiol. 2005, 94, 3181–3191.

- Higgins, N.C.; McLaughlin, S.A.; Rinne, T.; Stecker, G.C. Evidence for Cue-Independent Spatial Representation in the Human Auditory Cortex during Active Listening. Proc. Natl. Acad. Sci. USA 2017, 114, E7602–E7611.

- Shrem, T.; Deouell, L.Y. Frequency-Dependent Auditory Space Representation in the Human Planum Temporale. Front. Hum. Neurosci. 2014, 8, 524.

- Weeks, R.; Horwitz, B.; Aziz-Sultan, A.; Tian, B.; Wessinger, C.M.; Cohen, L.G.; Hallett, M.; Rauschecker, J.P. A Positron Emission Tomographic Study of Auditory Localization in the Congenitally Blind. J. Neurosci. 2000, 20, 2664–2672.

- Weeks, R.A.; Aziz-Sultan, A.; Bushara, K.O.; Tian, B.; Wessinger, C.M.; Dang, N.; Rauschecker, J.P.; Hallett, M. A PET Study of Human Auditory Spatial Processing. Neurosci. Lett. 1999, 262, 155–158.

- Zimmer, U.; Lewald, J.; Erb, M.; Karnath, H.-O. Processing of Auditory Spatial Cues in Human Cortex: An fMRI Study. Neuropsychologia 2006, 44, 454–461.

- Zimmer, U.; Macaluso, E. High Binaural Coherence Determines Successful Sound Localization and Increased Activity in Posterior Auditory Areas. Neuron 2005, 47, 893–905.

- Budd, T.W.; Hall, D.A.; Gonçalves, M.S.; Akeroyd, M.A.; Foster, J.R.; Palmer, A.R.; Head, K.; Summerfield, A.Q. Binaural Specialisation in Human Auditory Cortex: An fMRI Investigation of Interaural Correlation Sensitivity. NeuroImage 2003, 20, 1783–1794.

- Devlin, J.T.; Raley, J.; Tunbridge, E.; Lanary, K.; Floyer-Lea, A.; Narain, C.; Cohen, I.; Behrens, T.; Jezzard, P.; Matthews, P.M.; et al. Functional Asymmetry for Auditory Processing in Human Primary Auditory Cortex. J. Neurosci. 2003, 23, 11516–11522.

- Schönwiesner, M.; Krumbholz, K.; Rübsamen, R.; Fink, G.R.; von Cramon, D.Y. Hemispheric Asymmetry for Auditory Processing in the Human Auditory Brain Stem, Thalamus, and Cortex. Cereb. Cortex 2007, 17, 492–499.

- Woldorff, M.G.; Matzke, M.; Zamarripa, F.; Fox, P.T. Hemodynamic and Electrophysiological Study of the Role of the Anterior Cingulate in Target-Related Processing and Selection for Action. Hum. Brain Mapp. 1999, 8, 121–127.

- Gotts, S.J.; Chow, C.C.; Martin, A. Repetition Priming and Repetition Suppression: A Case for Enhanced Efficiency Through Neural Synchronization. Cogn. Neurosci. 2012, 3, 227–237.

- Grill-Spector, K.; Henson, R.; Martin, A. Repetition and the Brain: Neural Models of Stimulus-Specific Effects. Trends Cogn. Sci. 2006, 10, 14–23.

- Krekelberg, B.; Boynton, G.M.; van Wezel, R.J.A. Adaptation: From Single Cells to BOLD Signals. Trends Neurosci. 2006, 29, 250–256.

- Altmann, C.F.; Nakata, H.; Noguchi, Y.; Inui, K.; Hoshiyama, M.; Kaneoke, Y.; Kakigi, R. Temporal Dynamics of Adaptation to Natural Sounds in the Human Auditory Cortex. Cereb. Cortex 2008, 18, 1350–1360.

- Murray, M.M.; Camen, C.; Spierer, L.; Clarke, S. Plasticity in Representations of Environmental Sounds Revealed by Electrical Neuroimaging. Neuroimage 2008, 39, 847–856.

- Bidet-Caulet, A.; Voisin, J.; Bertrand, O.; Fonlupt, P. Listening to a Walking Human Activates the Temporal Biological Motion Area. Neuroimage 2005, 28, 132–139.

- Brunetti, M.; Della Penna, S.; Ferretti, A.; Del Gratta, C.; Cianflone, F.; Belardinelli, P.; Caulo, M.; Pizzella, V.; Olivetti Belardinelli, M.; Romani, G.L. A Frontoparietal Network for Spatial Attention Reorienting in the Auditory Domain: A Human fMRI/MEG Study of Functional and Temporal Dynamics. Cereb. Cortex 2008, 18, 1139–1147.

- Zündorf, I.C.; Lewald, J.; Karnath, H.-O. Neural Correlates of Sound Localization in Complex Acoustic Environments. PLoS ONE 2013, 8, e64259.

- Da Costa, S.; van der Zwaag, W.; Marques, J.P.; Frackowiak, R.S.J.; Clarke, S.; Saenz, M. Human Primary Auditory Cortex Follows the Shape of Heschl’s Gyrus. J. Neurosci. 2011, 31, 14067–14075.

- Rivier, F.; Clarke, S. Cytochrome Oxidase, Acetylcholinesterase, and NADPH-Diaphorase Staining in Human Supratemporal and Insular Cortex: Evidence for Multiple Auditory Areas. Neuroimage 1997, 6, 288–304.

- Wallace, M.N.; Johnston, P.W.; Palmer, A.R. Histochemical Identification of Cortical Areas in the Auditory Region of the Human Brain. Exp. Brain Res. 2002, 143, 499–508.

- Grisendi, T.; Reynaud, O.; Clarke, S.; Da Costa, S. Processing Pathways for Emotional Vocalizations. Brain Struct. Funct. 2019, 224, 2487–2504.

- Bach, D.R.; Schächinger, H.; Neuhoff, J.G.; Esposito, F.; Salle, F.D.; Lehmann, C.; Herdener, M.; Scheffler, K.; Seifritz, E. Rising Sound Intensity: An Intrinsic Warning Cue Activating the Amygdala. Cereb. Cortex 2008, 18, 145–150.

- Bach, D.R.; Neuhoff, J.G.; Perrig, W.; Seifritz, E. Looming Sounds as Warning Signals: The Function of Motion Cues. Int. J. Psychophysiol. 2009, 74, 28–33.

- Tajadura-Jiménez, A.; Larsson, P.; Väljamäe, A.; Västfjäll, D.; Kleiner, M. When Room Size Matters: Acoustic Influences on Emotional Responses to Sounds. Emotion 2010, 10, 416–422.

- Tajadura-Jiménez, A.; Väljamäe, A.; Asutay, E.; Västfjäll, D. Embodied Auditory Perception: The Emotional Impact of Approaching and Receding Sound Sources. Emotion 2010, 10, 216–229.

- Erhan, H.; Borod, J.C.; Tenke, C.E.; Bruder, G.E. Identification of Emotion in a Dichotic Listening Task: Event-Related Brain Potential and Behavioral Findings. Brain Cogn. 1998, 37, 286–307.

- Gadea, M.; Espert, R.; Salvador, A.; Martí-Bonmatí, L. The Sad, the Angry, and the Asymmetrical Brain: Dichotic Listening Studies of Negative Affect and Depression. Brain Cogn. 2011, 76, 294–299.

- Jäncke, L.; Buchanan, T.W.; Lutz, K.; Shah, N.J. Focused and Nonfocused Attention in Verbal and Emotional Dichotic Listening: An fMRI Study. Brain Lang. 2001, 78, 349–363.

- Zimmermann, J.F.; Moscovitch, M.; Alain, C. Attending to Auditory Memory. Brain Res. 2016, 1640, 208–221.

- Zimmermann, J.F.; Moscovitch, M.; Alain, C. Long-Term Memory Biases Auditory Spatial Attention. J. Exp. Psychol. Learn. Mem. Cogn. 2017, 43, 1602–1615.

- Fischer, M.; Moscovitch, M.; Alain, C. Incidental Auditory Learning and Memory-Guided Attention: Examining the Role of Attention at the Behavioural and Neural Level Using EEG. Neuropsychologia 2020, 147, 107586.

- At, A.; Spierer, L.; Clarke, S. The Role of the Right Parietal Cortex in Sound Localization: A Chronometric Single Pulse Transcranial Magnetic Stimulation Study. Neuropsychologia 2011, 49, 2794–2797.

- Clarke, S.; Rivier, F. Compartments within Human Primary Auditory Cortex: Evidence from Cytochrome Oxidase and Acetylcholinesterase Staining. Eur. J. Neurosci. 1998, 10, 741–745.

- Tardif, E.; Clarke, S. Intrinsic Connectivity of Human Auditory Areas: A Tracing Study with DiI. Eur. J. Neurosci. 2001, 13, 1045–1050.

- Moerel, M.; De Martino, F.; Uğurbil, K.; Yacoub, E.; Formisano, E. Processing Complexity Increases in Superficial Layers of Human Primary Auditory Cortex. Sci. Rep. 2019, 9, 5502.

- Sitek, K.R.; Gulban, O.F.; Calabrese, E.; Johnson, G.A.; Lage-Castellanos, A.; Moerel, M.; Ghosh, S.S.; De Martino, F. Mapping the Human Subcortical Auditory System Using Histology, Post Mortem MRI and in Vivo MRI at 7T. eLife 2019, 8, e48932.

- Moerel, M.; De Martino, F.; Uğurbil, K.; Formisano, E.; Yacoub, E. Evaluating the Columnar Stability of Acoustic Processing in the Human Auditory Cortex. J. Neurosci. 2018, 38, 7822–7832.

- Lankinen, K.; Ahlfors, S.P.; Mamashli, F.; Blazejewska, A.I.; Raij, T.; Turpin, T.; Polimeni, J.R.; Ahveninen, J. Cortical Depth Profiles of Auditory and Visual 7 T Functional MRI Responses in Human Superior Temporal Areas. Hum. Brain Mapp. 2023, 44, 362–372.

- Spierer, L.; Tardif, E.; Sperdin, H.; Murray, M.M.; Clarke, S. Learning-Induced Plasticity in Auditory Spatial Representations Revealed by Electrical Neuroimaging. J. Neurosci. 2007, 27, 5474–5483.

- Aldè, M.; Cantarella, G.; Zanetti, D.; Pignataro, L.; La Mantia, I.; Maiolino, L.; Ferlito, S.; Di Mauro, P.; Cocuzza, S.; Lechien, J.R.; et al. Autosomal Dominant Non-Syndromic Hearing Loss (DFNA): A Comprehensive Narrative Review. Biomedicines 2023, 11, 1616.

- You, E.; Lin, V.; Mijovic, T.; Eskander, A.; Crowson, M.G. Artificial Intelligence Applications in Otology: A State of the Art Review. Otolaryngol. Head Neck Surg. 2020, 163, 1123–1133.

- Maniaci, A.; Riela, P.M.; Iannella, G.; Lechien, J.R.; La Mantia, I.; De Vincentiis, M.; Cammaroto, G.; Calvo-Henriquez, C.; Di Luca, M.; Chiesa Estomba, C.; et al. Machine Learning Identification of Obstructive Sleep Apnea Severity through the Patient Clinical Features: A Retrospective Study. Life 2023, 13, 702.

| Publication | Methodology | Contrast & Conclusions | Side | BA/br/aa | x | y | z |
|---|---|---|---|---|---|---|---|
| Bidet-Caulet et al., 2005 [75]. Listening to a walking human activates the temporal biological motion area. Neuroimage | 3T fMRI; 10 normal subjects; Footsteps | Active localization: Footstep task > noise detection. Conclusions: Posterior STS activation by human steps in line with its role in social perception | R | STS | 52 | −50 | 9 |
| | | | L | STS | −50 | −41 | 9 |
| Brunetti et al., 2005 [50]. Human brain activation during passive listening to sounds from different locations: an fMRI and MEG study. Human Brain Mapping | 1.5T fMRI; 11 normal subjects; Knife tapping on glass; −90°, −45°, 0°, 45°, 90°; Runs 8 presentations: | Mixed vs. right | R | p STG | 57 | −41 | 15 |
| | | | L | p STG | −56 | −40 | 16 |
| | | Mixed vs. left. Conclusions: Both hemispheres involved in processing sounds from different locations | R | p STG | 57 | −46 | 15 |
| | | | L | p STG | −60 | −39 | 6 |
| Altmann et al., 2007 [52]. Processing of location and pattern changes of natural sounds in the human auditory cortex. Neuroimage | 3T fMRI, event-related; 17 normal subjects; Sheep, dog; −90°, 90°; Blocks of 9 presentations with sound object and/or location changes; Task: passive listening | Location changes. Conclusions: Posterior STL involved in spatial processing of auditory stimuli | L | STL | −71 | −21 | 10 |
| | | | R | STL | 64 | −39 | 11 |
| Altmann et al., 2008 [48]. Effects of feature-selective attention on auditory pattern and location processing. Neuroimage | 3T fMRI adaptation; 12 normal subjects; 10 animal species; −70°, −15°, 15°, 70°; Pairs of sounds; Task: match sound identity or location | Different vs. same location (different location and identity plus different location minus different identity minus same location and identity). Conclusions: fMRI adaptation effects in STS, PT and INS for location changes | L | p STS | −51 | −46 | 5 |
| | | | R | p STS | 53 | −47 | 3 |
| | | | L | PT | −53 | −32 | 8 |
| | | | R | PT | 57 | −30 | 9 |
| | | | R | a INS | 32 | 23 | 7 |
| Brunetti et al., 2008 [76]. A frontoparietal network for spatial attention reorienting in the auditory domain: a human fMRI/MEG study of functional and temporal dynamics. Cerebral Cortex | 1.5T fMRI; 10 normal subjects; Knife tapping on glass; Alternate locations: Right: 90°, 50°; Left: −90°, −50°; Central: −20°, 20°; Mixed: −90°, −50°, 0°, 50°, 90°; Task: passive listening | Mixed vs. rest. Conclusions: Supratemporal plane modulated by variations in sound location | R | HG | 52 | −19 | 8 |
| | | | L | HG | −61 | −25 | 18 |
| | | | R | HG | 46 | −29 | 8 |
| | | | L | HG | −42 | −36 | 18 |
| | | | R | STG | 61 | −40 | 8 |
| | | | R | IPL | 33 | −51 | 37 |
| | | | R | PFC | 41 | 25 | 28 |
| Smith et al., 2010 [51]. Auditory spatial and object processing in the human planum temporale: no evidence for selectivity. Journal of Cognitive Neuroscience | 3T fMRI; 10 normal subjects; 1 or 3 talkers; 1 location; changing between 3 locations; moving −60° to 60°; Task: passive listening | Spatial manipulation (3-locations/1-talker vs. 1-location/1-talker). Conclusions: Spatial sensitivity in PT reflects auditory source separation using spatial cues | L | PT | −52 | −26 | 8 |
| | | | R | PT | 55 | −27 | 10 |
| Grady et al., 2011 [54]. Age differences in fMRI adaptation for sound identity and location. Frontiers in Human Neuroscience | 3T fMRI; 19 young/20 old normal subjects; Sounds (S): human non-speech, animal, musical, machine; Location (L): −95°, −60°, 0°, 60°, 95°; Conditions: | Adaptation to location (in young): Same sound, different locations > different sounds, same location. Conclusions: Age differences in adaptation to repetition of sound location | R | 6 | 20 | 0 | 52 |
| | | | R | 45 | 44 | 20 | 16 |
| | | | R | 47 | 32 | 28 | 4 |
| | | | L | 44 | −48 | 8 | 12 |
| | | | L | 46 | −40 | 28 | 20 |
| | | | | 6 | 4 | −12 | 60 |
| | | | L | 6 | −28 | −12 | 52 |
| | | | L | 6 | −52 | −8 | 44 |
| | | | R | 40 | 56 | −28 | 24 |
| | | | L | 40 | −52 | −32 | 28 |
| | | | L | 18 | −20 | −72 | 0 |
| | | | R | put | 16 | 8 | 8 |
| Kryklywy et al., 2013 [56]. Emotion modulates activity in the “what” but not “where” auditory processing pathway. Neuroimage | 3T fMRI; 18 normal subjects; Emotional sounds in space: 4 locations × 3 valences; Task: to localize sounds | 2-way ANOVA Location × Valence; Significant interaction. Conclusions: Emotion modulates activity in the “what” but not the “where” auditory processing pathway | R/L | 31, 7 | −7 | −57 | 50 |
| | | | R/L | 19, 18, 17 | 3 | −86 | 6 |
| | | | R | 42, 41, 22 | 64 | −15 | 2 |
| Zündorf et al., 2013 [77]. Neural correlates of sound localization in complex acoustic environments. PLoS ONE | 3T fMRI; 20 normal subjects; Environmental sounds; Location: −45°, −22.5°, 0°, 22.5°, 45°; Conditions: Single: 1 target at one of 5 locations; Cocktail: 1 target among 5 different sounds at different locations; Passive: as cocktail, no task; Sequence: 1–5 sounds consecutively, no location; Task: to localize the target | Cocktail > Passive. Conclusions: Activity related to auditory stream segregation in posterior STG, INS, SMA and fronto-parietal network | R | STG | 63 | −33 | 12 |
| | | | L | STG | −66 | 24 | 12 |
| | | | L | SMA | −6 | 18 | 45 |
| | | | L | a INS | −33 | 27 | 0 |
| | | | L | IFG | −42 | 9 | 27 |
| | | | R | FEF | 48 | 3 | 48 |
| Da Costa et al., 2018 [53]. Keeping track of sound objects in space: the contribution of early-stage auditory areas. Hearing Research | 7T fMRI; 10 normal subjects; Block of 2 × 4 sounds: Same or different category; Locations: Left (−60°, −40°) or right (40°, 60°); Location change: yes or no; Task: passive listening | 3-way ANOVA Category × Change in location × Location; Interaction Category × Change in location, driven by larger effect for change in “same” category and for no-change in “different” category (mean coordinates of tonotopically identified auditory areas in individual subjects). Conclusions: A third auditory stream, originating in lateral belt areas, tracks sound objects across space | L | L2 | −56 | −17 | 4 |
| | | | L | L4 | −42 | −10 | −7 |
| | | | R | R | 42 | −16 | 6 |
| | | | R | L3 | 53 | −7 | −1 |
| Kryklywy et al., 2018 [57]. Decoding auditory spatial and emotional information encoding using multivariate versus univariate techniques. Experimental Brain Research | 3T fMRI; 18 normal subjects; 12 sounds with neutral, positive or negative valence; Location: −90°, −22.5°, 22.5°, 90°; Task: to localize sounds with specific emotion | Multivariate searchlight analysis; Activity patterns predictive of sound location. Conclusions: Multivariate pattern analysis reveals larger overlapping spatial and emotional representation of sound within early secondary auditory regions than univariate analysis | L | 2, 4, 13, 41, 42 | −48 | −26 | 19 |
| | | | R | 2, 4, 13, 41, 42 | 50 | −22 | 13 |
| | | | L | 8 | −29 | 16 | 38 |
| | | | L | 31 | −15 | −64 | 19 |
| | | | R | 24 | 6 | −17 | 47 |
| | | | R | 19 | 25 | −88 | 21 |
| | | | L | 35 | −33 | −19 | −22 |
| Grisendi et al., 2023 [55]. Emotional sounds in space: asymmetrical representation within early-stage auditory areas. Frontiers in Neuroscience | 7T fMRI; 13 normal subjects; Category: human vocalizations, environmental sounds; Emotional valence: positive, neutral, negative; Location: −60°, 0°, 60°; Task: passive listening | 3-way ANOVA Category × Valence × Location; Significant interaction Valence × Location (mean coordinates of auditory areas identified by tonotopic mapping or voice area localizer in individual subjects). Conclusions: Positive vocalizations presented on left side yield strong activity; spatial cues render emotional valence more salient within early-stage auditory areas | L | VA | −55.50 | −33.45 | 6.08 |
| | | | R | VA | 48.79 | −31.39 | 5.46 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Clarke, S.; Da Costa, S.; Crottaz-Herbette, S. Dual Representation of the Auditory Space. Brain Sci. 2024, 14, 535. https://doi.org/10.3390/brainsci14060535
