Mental Workload Classification and Tasks Detection in Multitasking: Deep Learning Insights from EEG Study

Abstract

1. Introduction

1.1. Related Work

1.2. Research Overview

- (a) We designed an experiment with participants performing under several distinct levels of TL for the purpose of creating a dataset for EEG-based TL classification.

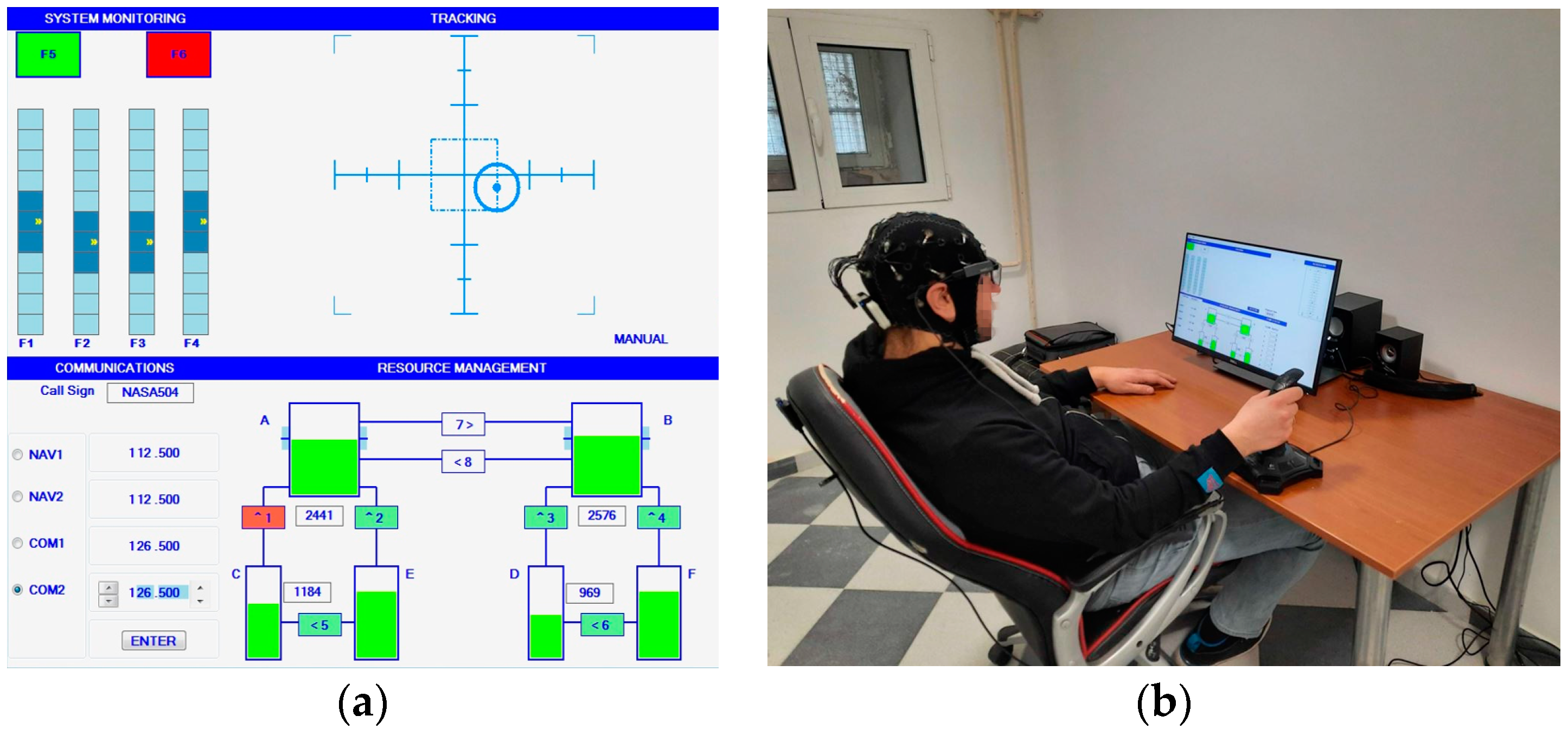

- (b) We employed the well-known and adaptable NASA Multi-Attribute Task Battery II (MATB-II, version 2.0) [39], which requires simultaneous management of multiple subtasks. Because the MATB-II software allows easy customization of subtask frequencies and distribution, it was well suited to the design of a variable multitasking environment. Beyond its controllability and trackability, MATB-II was chosen for its wide presence in the literature of the domain, which makes this study comparable to similar studies in the field. Furthermore, the experiment was designed to minimize participants’ physical load, so that only their mental load was altered during the task. This also helps to mitigate EEG signal artifacts.

- (c) The experiment was designed to change the TL imposed on an operator by changing the loads of the individual MATB-II subtasks. Specifically, we designed 4 blocks of MATB-II subtask combinations, representing 4 distinct TL levels, by increasing or decreasing the number of subtasks to be handled in a given timeframe. They were named Passive Watching (PW), Low Load (LL), Medium Load (ML), and Hard Load (HL), in order of increasing difficulty. The first three blocks differed not only in task load but also in the selection of active MATB-II subtasks: PW had no subtasks active, LL had 3 (out of 4) subtasks active, while ML and HL had all the subtasks active and differed only in the rate of occurrence of events to which participants were exposed. The experimental design is described in Section 2.3.

- (d) The EEG dataset was acquired against predefined sequences of blocks representing the different TL levels, on the assumption that they would induce different MWL levels that could be detected by EEG. The environment was precisely controlled: the activity of the MATB-II task software and the activity of the participants were logged into separate text files synchronized with the collected EEG data for further analysis. These log files were vital for preparing the data for model training, specifically for correct data labeling.

- (e)

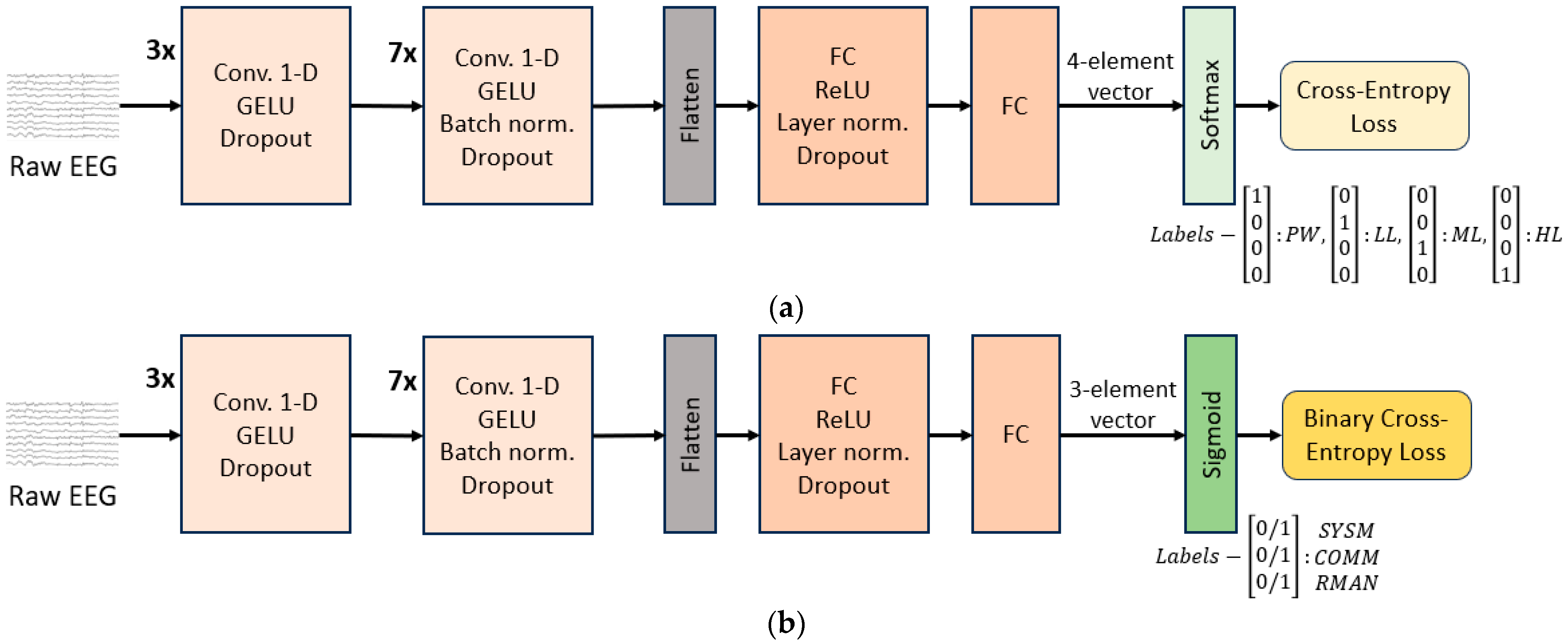

- To distinguish between different TL levels, we employed a CNN. The input to the network was a short EEG segment and the output was a classified TL level (target class was the TL level assigned to the block to which the segment belonged).

- (f) The same model architecture was trained independently to detect the presence of each particular MATB-II subtask in a given segment. We wanted to test whether the same model could learn to distinguish between EEG patterns related to the activity of different subtasks. To the best of our knowledge, this is the first study to perform MATB-II subtasks detection from EEG. The results of this part of the study would also provide valuable insights for the application of EEG-based cognitive activity classification in the field of BCI, where distinguishing engagement in different tasks is relevant [40,41,42,43]. Additionally, good model performance in this part would further validate the model’s suitability for learning cognition-related EEG patterns relevant to the problem of TL level classification. The input to the model was also a short EEG segment and the output was a binary vector representing the activity status of each subtask.
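The input/output contract of the subtask detector in item (f) can be illustrated with a small labeling sketch. It assumes a hypothetical log format in which each subtask's activity is available as a list of `(start, end)` times in seconds, and it assumes one plausible rule: a subtask counts as present if any of its activity intervals overlaps the segment. Neither the log format nor the rule is taken from the study, whose actual labeling procedure is the subject of Section 2.9.2 and may differ.

```python
# Hypothetical sketch: build the binary subtask vector for one EEG segment.
# The interval-based log format and the "any overlap" rule are assumptions.

SUBTASKS = ("SYSM", "COMM", "RMAN")  # output order of the binary vector


def overlaps(a_start, a_end, b_start, b_end):
    """True if the half-open intervals [a_start, a_end) and [b_start, b_end) intersect."""
    return a_start < b_end and b_start < a_end


def label_segment(seg_start, seg_end, activity):
    """Return one 0/1 flag per subtask for the segment [seg_start, seg_end)."""
    return [
        int(any(overlaps(seg_start, seg_end, s, e) for s, e in activity.get(task, [])))
        for task in SUBTASKS
    ]


# Made-up activity intervals (seconds from session start), for illustration only.
activity = {
    "SYSM": [(12.0, 16.5)],
    "COMM": [(40.0, 55.0)],
    "RMAN": [(0.0, 8.0), (30.0, 33.0)],
}

first = label_segment(0.0, 15.0, activity)
second = label_segment(15.0, 30.0, activity)
```

With these made-up intervals, the first segment contains SYSM and RMAN activity and the second contains only SYSM activity.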

2. Materials and Methods

2.1. MATB-II Task

2.2. Experiment Setup

2.3. Experimental Protocol and Task Design

- Passive watching (PW): No activity was expected—the task was frozen and the participant would just look at the screen and wait for the next block;

- Low load (LL): All the MATB-II subtasks were active except TRCK, which was set to auto mode (no action required);

- Medium load (ML): All the MATB-II subtasks were active, and the rates at which they demanded a response from the operator were increased compared to LL (see Table 1 for details);

- Hard load (HL): All the MATB-II subtasks were active, and the rates at which they demanded a response from the participant were about twice those in the ML block (except for the COMM subtask). For instance, for RMAN in the HL block, valves turned on/off more often and the liquid flowed faster, requiring more attention from the participant. The exact numbers of occurrences of each subtask in a 5 min block are given in Table 1.
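The block definitions above can be written down as a small configuration, which makes the "about twice, except for COMM" relation easy to check. This is only an illustrative encoding: the event counts are copied from Table 1, while the dictionary layout, the function names, and the choice to encode the "-" entries for PW as 0 events are assumptions of this sketch.

```python
# Event counts per 5 min block, copied from Table 1.
# Assumption: the "-" entries for PW are encoded as 0 events.
BLOCK_MINUTES = 5

EVENTS_PER_BLOCK = {
    "PW": {"SYSM": 0, "COMM": 0, "RMAN": 0},
    "LL": {"SYSM": 10, "COMM": 6, "RMAN": 5},
    "ML": {"SYSM": 10, "COMM": 10, "RMAN": 10},
    "HL": {"SYSM": 20, "COMM": 14, "RMAN": 20},
}

# TRCK has no event count; the labels below are this sketch's own encoding
# of the descriptions in the text and in Table 1.
TRCK_MODE = {"PW": "off", "LL": "auto", "ML": "active", "HL": "active, faster"}


def events_per_minute(block):
    """Average number of events per minute for each counted subtask."""
    return {task: n / BLOCK_MINUTES for task, n in EVENTS_PER_BLOCK[block].items()}


def rate_ratio(block_a, block_b):
    """Ratio of event counts between two blocks, skipping subtasks with no events in block_b."""
    a, b = EVENTS_PER_BLOCK[block_a], EVENTS_PER_BLOCK[block_b]
    return {task: a[task] / b[task] for task in a if b[task] > 0}


hl_vs_ml = rate_ratio("HL", "ML")
```

Here `hl_vs_ml` gives a ratio of 2.0 for SYSM and RMAN and 1.4 for COMM, consistent with the description of the HL block.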

2.4. Participants

2.5. Equipment and Software

2.6. Subjective MWL

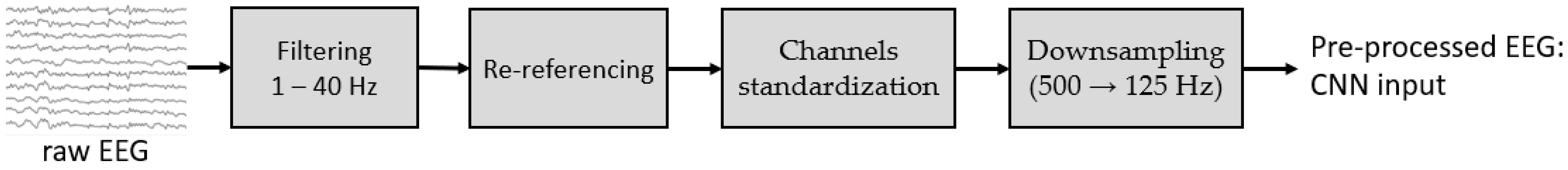

2.7. EEG Pre-Processing

- (1) Band-pass filtering (1–40 Hz), to retain the frequency band related to brain activity;

- (2) Average re-referencing, to mitigate one-electrode-reference bias. The average of all the channels was added as the 25th channel to address the EEG data rank reduction issue [49] (a reduced number of linearly independent channels due to re-referencing);

- (3) Channel standardization (subtracting the channel mean and dividing by the channel standard deviation), to put the channels on the same scale and aid model training;

- (4) EEG signal downsampling from 500 Hz to 125 Hz, preserving the 40 Hz bandwidth set by the previous filtering.
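The four steps above can be sketched with NumPy and SciPy as follows. This is a minimal illustration, not the pipeline used in the study: the filter design (4th-order zero-phase Butterworth), the polyphase resampler, and the function name `preprocess` are assumptions, and the 24 input channels are inferred from the statement that the average was added as the 25th channel.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, resample_poly

FS_IN = 500   # original sampling rate (Hz), from the text
FS_OUT = 125  # target sampling rate (Hz), from the text


def preprocess(eeg, fs_in=FS_IN, fs_out=FS_OUT):
    """Apply steps (1)-(4) to an array of shape (n_channels, n_samples)."""
    # (1) Band-pass 1-40 Hz. Filter type and order are assumptions of this sketch.
    sos = butter(4, [1.0, 40.0], btype="bandpass", fs=fs_in, output="sos")
    x = sosfiltfilt(sos, eeg, axis=1)

    # (2) Average re-reference, then append the channel average as an extra
    # channel so that the number of linearly independent rows is not reduced.
    avg = x.mean(axis=0, keepdims=True)
    x = np.vstack([x - avg, avg])

    # (3) Standardize each channel to zero mean and unit standard deviation.
    x = (x - x.mean(axis=1, keepdims=True)) / x.std(axis=1, keepdims=True)

    # (4) Downsample by an integer factor (500 Hz -> 125 Hz is a factor of 4).
    # resample_poly applies its own anti-aliasing filter.
    return resample_poly(x, up=1, down=fs_in // fs_out, axis=1)


# Usage on synthetic data: 24 channels, 10 s at 500 Hz.
rng = np.random.default_rng(0)
raw = rng.standard_normal((24, 10 * FS_IN))
out = preprocess(raw)
```

The new Nyquist frequency after downsampling is 62.5 Hz, so the 1–40 Hz band kept in step (1) remains representable at 125 Hz.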

2.8. CNN Architecture and Training Configuration

- In the case of TL level estimation, we assigned each EEG segment to the type of block it belonged to: PW, LL, ML, or HL. Hence, the last layer had 4 nodes, and by applying a softmax function on top of it, we performed classification into the 4 classes. The loss function was cross-entropy loss (Figure 4a).

- For MATB-II subtasks detection, the model output was a 3-dimensional bit vector containing 1 or 0 in the respective position depending on whether the SYSM, COMM, or RMAN subtask was present or not present in the segment (the TRCK task was excluded from detection, as explained in Section 2.9.2). Accordingly, the loss function was binary cross-entropy loss (Figure 4b).
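The difference between the two output configurations can be made concrete with a short NumPy sketch of the two losses applied to the final-layer outputs (logits). It illustrates the standard definitions only; the averaging of the binary cross-entropy over the three subtasks, the 0.5 decision threshold, and the example logit values are assumptions and are not taken from the study.

```python
import numpy as np

TL_CLASSES = ["PW", "LL", "ML", "HL"]  # 4-way classification head
SUBTASKS = ["SYSM", "COMM", "RMAN"]    # 3 independent binary outputs


def softmax_cross_entropy(logits, target_class):
    """Cross-entropy of a softmax over mutually exclusive classes."""
    z = logits - logits.max()                 # shift for numerical stability
    log_probs = z - np.log(np.exp(z).sum())   # log-softmax
    return -log_probs[target_class]


def binary_cross_entropy(logits, targets):
    """Sigmoid cross-entropy per output, averaged over outputs (assumed reduction)."""
    # Stable form of -[t*log(s(x)) + (1-t)*log(1-s(x))] with s the sigmoid.
    per_output = np.maximum(logits, 0) - logits * targets + np.log1p(np.exp(-np.abs(logits)))
    return per_output.mean()


# Made-up logits for one segment, for illustration only.
tl_logits = np.array([0.1, 2.0, -1.0, 0.3])
tl_loss = softmax_cross_entropy(tl_logits, TL_CLASSES.index("LL"))

subtask_logits = np.array([3.0, -2.0, 0.5])
subtask_targets = np.array([1.0, 0.0, 1.0])  # SYSM and RMAN present, COMM absent
subtask_loss = binary_cross_entropy(subtask_logits, subtask_targets)

# A positive logit corresponds to a sigmoid probability above 0.5.
predicted = (subtask_logits > 0).astype(int)
```

The softmax head forces exactly one TL level per segment, whereas the sigmoid outputs allow any combination of subtasks to be flagged at once, which matches the multi-label nature of subtask detection.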

2.9. Dataset Labeling and Training Procedure

- TL level classification: 10 s segments with a 5 s overlap. With 50 subjects completing two 51 min sessions each, this resulted in a dataset of 61,100 segments;

- MATB-II subtasks detection: 15 s segments with a 10 s overlap, resulting in a dataset of 61,000 segments.
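The reported dataset sizes follow from sliding-window arithmetic, as the short check below shows. It assumes that each 51 min session is segmented independently over its full length and that only complete windows are kept; the function name is illustrative.

```python
def n_segments(duration_s, window_s, overlap_s):
    """Number of complete sliding windows that fit into a recording."""
    step_s = window_s - overlap_s
    return (duration_s - window_s) // step_s + 1


SESSION_S = 51 * 60   # one session, in seconds
N_SESSIONS = 50 * 2   # 50 subjects, two sessions each

# TL level classification: 10 s windows, 5 s overlap (5 s step).
tl_per_session = n_segments(SESSION_S, window_s=10, overlap_s=5)
tl_total = N_SESSIONS * tl_per_session

# Subtask detection: 15 s windows, 10 s overlap (5 s step).
subtask_per_session = n_segments(SESSION_S, window_s=15, overlap_s=10)
subtask_total = N_SESSIONS * subtask_per_session
```

Both settings use a 5 s step, so the longer 15 s window yields one fewer segment per session (610 instead of 611), which accounts for the difference of 100 segments between the two datasets.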

2.9.1. TL Level Classification Labeling

2.9.2. MATB-II Subtasks Detection Labeling

2.9.3. Training and Test Dataset Split

3. Results

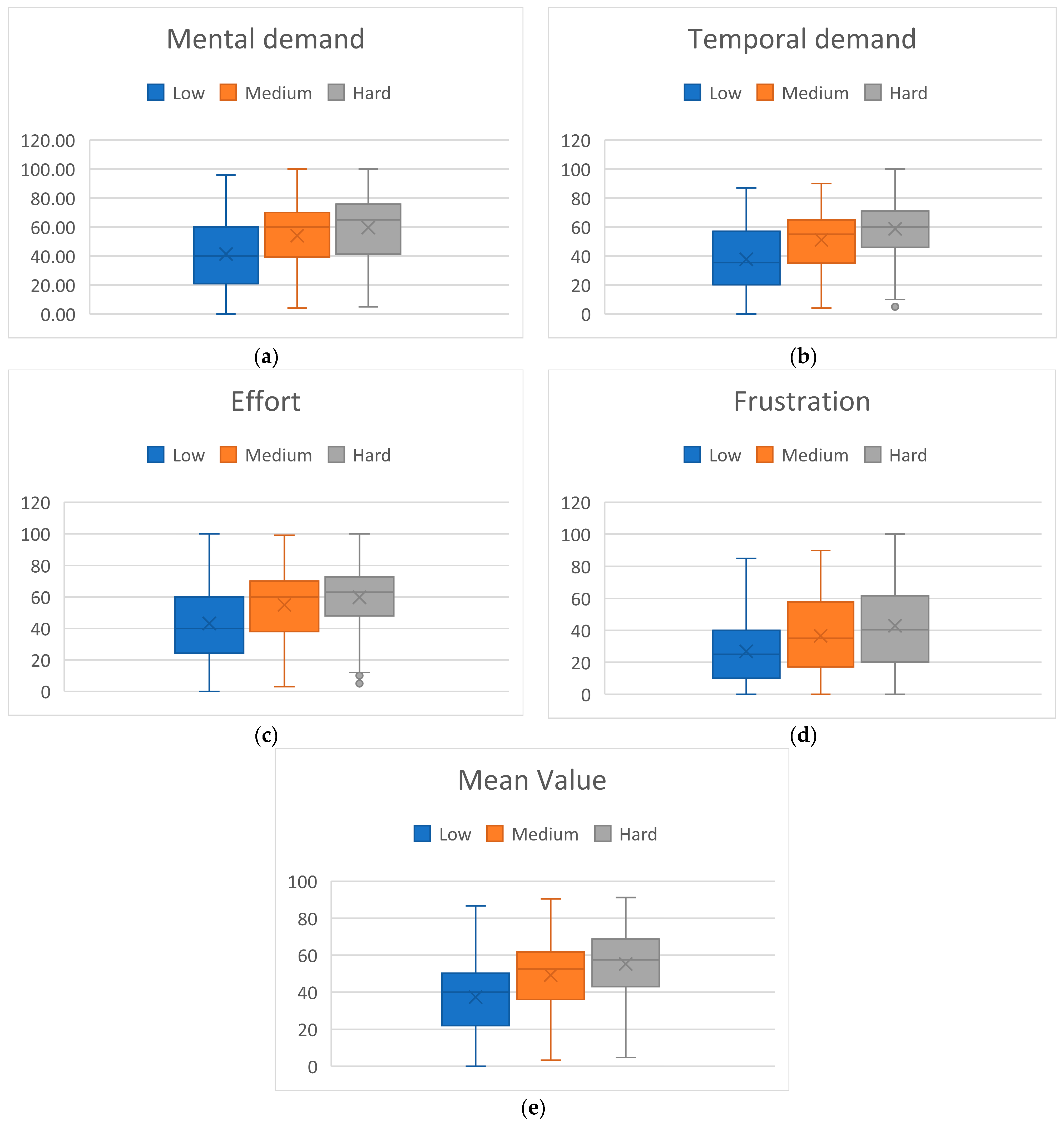

3.1. Subjective MWL Assessment and Task Error Rate

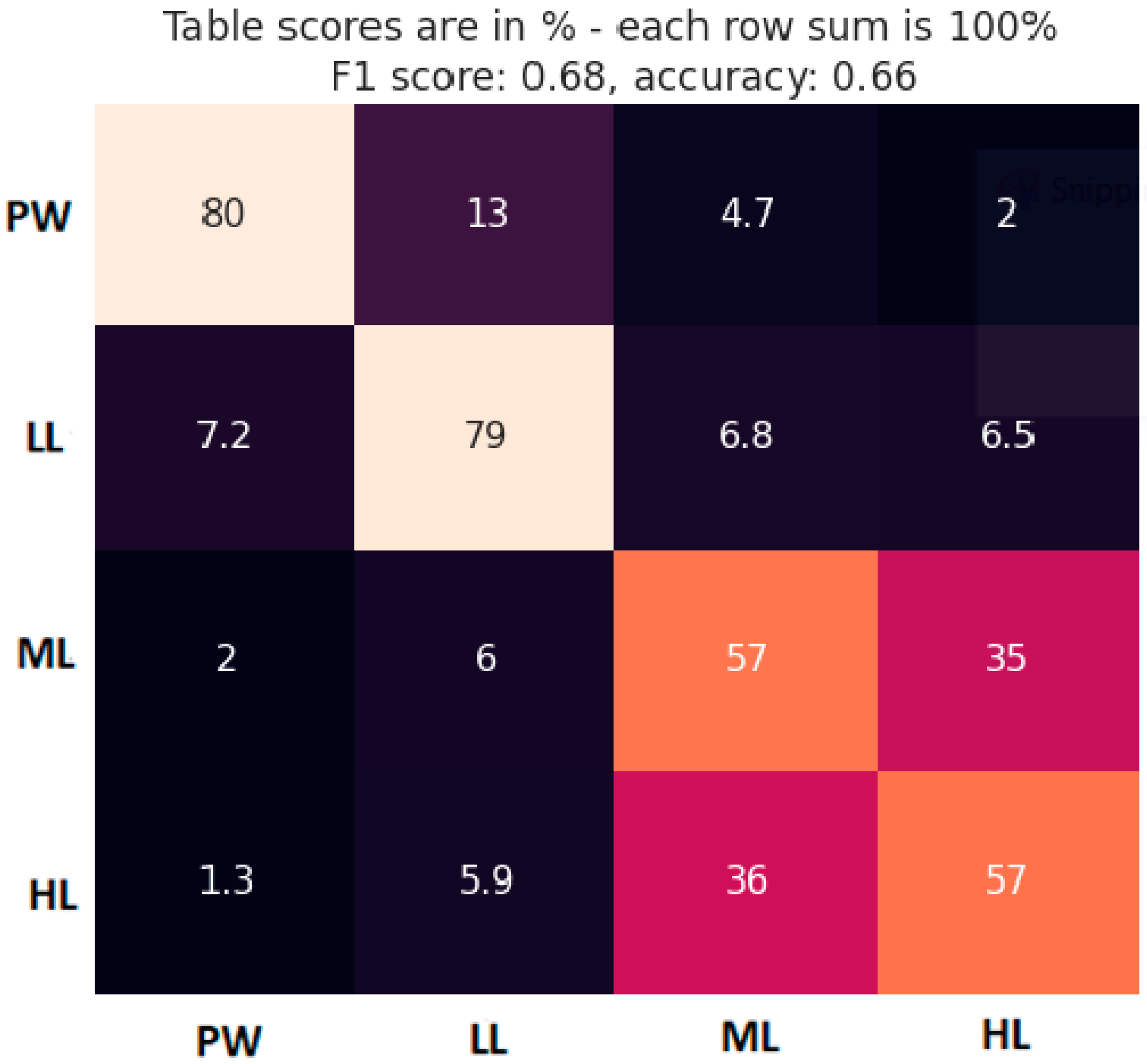

3.2. TL Level Classification

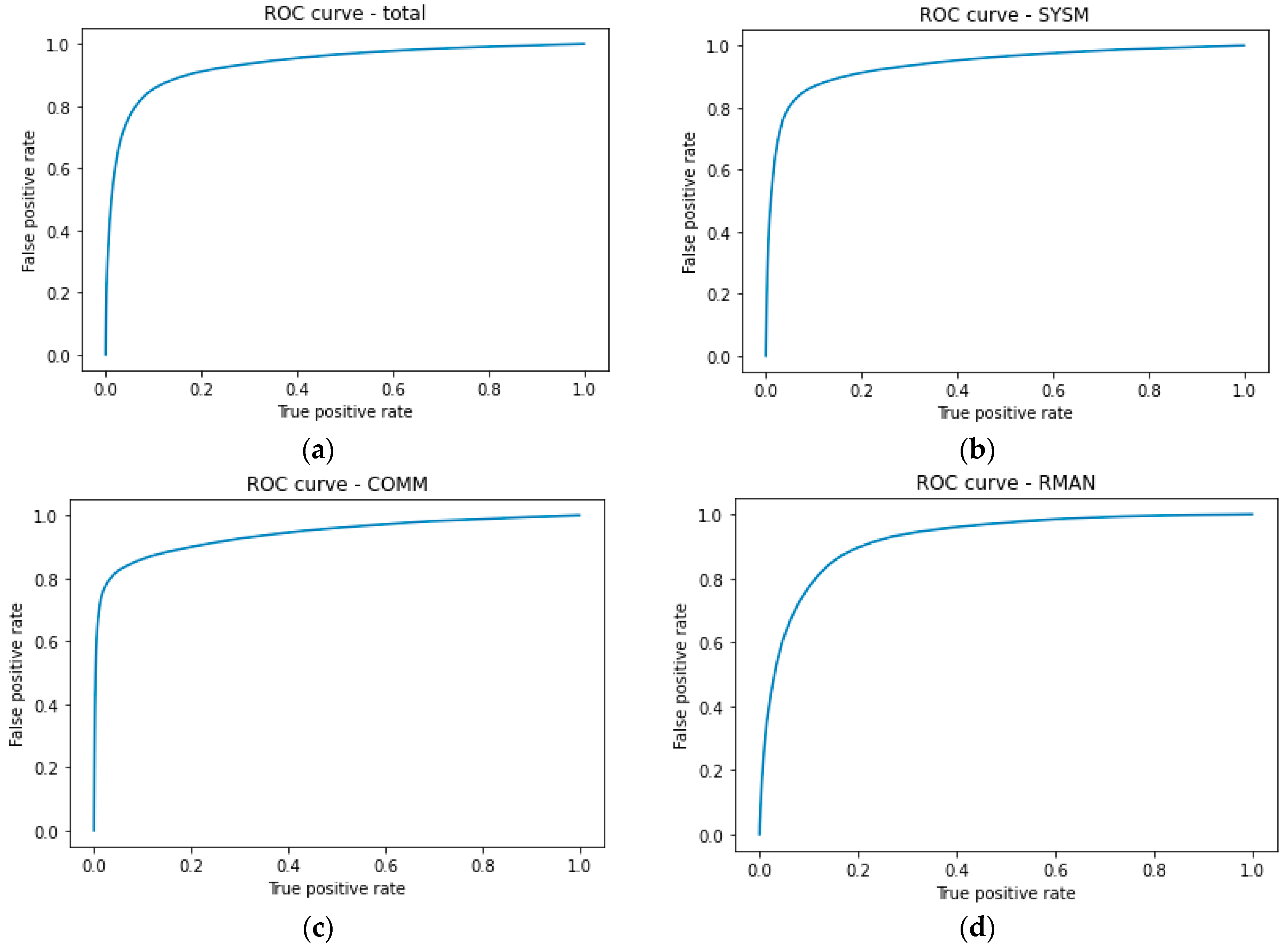

3.3. MATB-II Subtasks Detection

4. Discussion

4.1. Subjective MWL Assessment and Task Error Rate

4.2. TL Level Classification

4.3. MATB-II Subtasks Detection

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Hogervorst, M.A.; Brouwer, A.M.; Van Erp, J.B. Combining and comparing EEG, peripheral physiology and eye-related measures for the assessment of mental workload. Front. Neurosci. 2014, 8, 322.

2. Wilson, G.F.; Russell, C.A. Real-time assessment of mental workload using psychophysiological measures and artificial neural networks. Hum. Factors 2003, 45, 635–644.

3. Tao, D.; Tan, H.; Wang, H.; Zhang, X.; Qu, X.; Zhang, T. A systematic review of physiological measures of mental workload. Int. J. Environ. Res. Public Health 2019, 16, 2716.

4. Borghini, G.; Astolfi, L.; Vecchiato, G.; Mattia, D.; Babiloni, F. Measuring neurophysiological signals in aircraft pilots and car drivers for the assessment of mental workload, fatigue and drowsiness. Neurosci. Biobehav. Rev. 2014, 44, 58–75.

5. Chikhi, S.; Matton, N.; Blanchet, S. EEG power spectral measures of cognitive workload: A meta-analysis. Psychophysiology 2022, 59, e14009.

6. Klimesch, W. EEG alpha and theta oscillations reflect cognitive and memory performance: A review and analysis. Brain Res. Rev. 1999, 29, 169–195.

7. Başar, E.; Başar-Eroglu, C.; Karakaş, S.; Schürmann, M. Gamma, alpha, delta, and theta oscillations govern cognitive processes. Int. J. Psychophysiol. 2001, 39, 241–248.

8. Campisi, P.; La Rocca, D. Brain waves for automatic biometric-based user recognition. IEEE Trans. Inf. Forensics Secur. 2014, 9, 782–800.

9. So, W.K.; Wong, S.W.; Mak, J.N.; Chan, R.H. An evaluation of mental workload with frontal EEG. PLoS ONE 2017, 12, e0174949.

10. Pergher, V.; Wittevrongel, B.; Tournoy, J.; Schoenmakers, B.; Van Hulle, M.M. Mental workload of young and older adults gauged with ERPs and spectral power during N-Back task performance. Biol. Psychol. 2019, 146, 107726.

11. Raufi, B.; Longo, L. An Evaluation of the EEG alpha-to-theta and theta-to-alpha band Ratios as Indexes of Mental Workload. Front. Neuroinformatics 2022, 16, 44.

12. Dan, A.; Reiner, M. Real time EEG based measurements of cognitive load indicates mental states during learning. J. Educ. Data Min. 2017, 9, 31–44.

13. Pušica, M.; Caiazzo, C.; Djapan, M.; Savković, M.; Leva, M.C. Visual Mental Workload Assessment from EEG in Manual Assembly Task. In Proceedings of the 33rd European Safety and Reliability Conference, Southampton, UK, 3–7 September 2023.

14. Kartali, A.; Janković, M.M.; Gligorijević, I.; Mijović, P.; Mijović, B.; Leva, M.C. Real-time mental workload estimation using EEG. In Human Mental Workload: Models and Applications, Proceedings of the Third International Symposium, H-WORKLOAD 2019, Rome, Italy, 14–15 November 2019; Proceedings 3; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 20–34.

15. Borghini, G.; Vecchiato, G.; Toppi, J.; Astolfi, L.; Maglione, A.; Isabella, R.; Caltagirone, C.; Kong, W.; Wei, D.; Zhou, Z.; et al. Assessment of mental fatigue during car driving by using high resolution EEG activity and neurophysiologic indices. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; IEEE: New York, NY, USA; pp. 6442–6445.

16. Fernandez Rojas, R.; Debie, E.; Fidock, J.; Barlow, M.; Kasmarik, K.; Anavatti, S.; Garratt, M.; Abbass, H. Electroencephalographic workload indicators during teleoperation of an unmanned aerial vehicle shepherding a swarm of unmanned ground vehicles in contested environments. Front. Neurosci. 2020, 14, 40.

17. Caiazzo, C.; Savkovic, M.; Pusica, M.; Milojevic, D.; Leva, M.C.; Djapan, M. Development of a Neuroergonomic Assessment for the Evaluation of Mental Workload in an Industrial Human–Robot Interaction Assembly Task: A Comparative Case Study. Machines 2023, 11, 995.

18. Berka, C.; Levendowski, D.J.; Lumicao, M.N.; Yau, A.; Davis, G.; Zivkovic, V.T.; Olmstead, R.E.; Tremoulet, P.D.; Craven, P.L. EEG correlates of task engagement and mental workload in vigilance, learning, and memory tasks. Aviat. Space Environ. Med. 2007, 78, B231–B244.

19. Kosti, M.V.; Georgiadis, K.; Adamos, D.A.; Laskaris, N.; Spinellis, D.; Angelis, L. Towards an affordable brain computer interface for the assessment of programmers’ mental workload. Int. J. Hum.-Comput. Stud. 2018, 115, 52–66.

20. Choi, M.K.; Lee, S.M.; Ha, J.S.; Seong, P.H. Development of an EEG-based workload measurement method in nuclear power plants. Ann. Nucl. Energy 2018, 111, 595–607.

21. Dussault, C.; Jouanin, J.C.; Philippe, M.; Guezennec, C.Y. EEG and ECG changes during simulator operation reflect mental workload and vigilance. Aviat. Space Environ. Med. 2005, 76, 344–351.

22. Liu, Y.; Yu, Y.; Ye, Z.; Li, M.; Zhang, Y.; Zhou, Z.; Hu, D.; Zeng, L.L. Fusion of Spatial, Temporal, and Spectral EEG Signatures Improves Multilevel Cognitive Load Prediction. IEEE Trans. Hum.-Mach. Syst. 2023, 53, 357–366.

23. Li, D.; Mattsson, S.; Salunkhe, O.; Fast-Berglund, Å.; Skoogh, A.; Broberg, J. Effects of information content in work instructions for operator performance. Procedia Manuf. 2018, 25, 628–635.

24. Bläsing, D.; Bornewasser, M. Influence of complexity and noise on mental workload during a manual assembly task. In Human Mental Workload: Models and Applications, Proceedings of the 4th International Symposium, H-WORKLOAD 2020, Granada, Spain, 3–5 December 2020; Proceedings 4; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 147–174.

25. Van Acker, B.B.; Bombeke, K.; Durnez, W.; Parmentier, D.D.; Mateus, J.C.; Biondi, A.; Saldien, J.; Vlerick, P. Mobile pupillometry in manual assembly: A pilot study exploring the wearability and external validity of a renowned mental workload lab measure. Int. J. Ind. Ergon. 2020, 75, 102891.

26. Sciaraffa, N.; Borghini, G.; Aricò, P.; Di Flumeri, G.; Colosimo, A.; Bezerianos, A.; Thakor, N.V.; Babiloni, F. Brain interaction during cooperation: Evaluating local properties of multiple-brain network. Brain Sci. 2017, 7, 90.

27. Hsu, B.W.; Wang, M.J.; Chen, C.Y.; Chen, F. Effective indices for monitoring mental workload while performing multiple tasks. Percept. Mot. Ski. 2015, 121, 94–117.

28. Smith, M.E.; Gevins, A.; Brown, H.; Karnik, A.; Du, R. Monitoring task loading with multivariate EEG measures during complex forms of human-computer interaction. Hum. Factors 2001, 43, 366–380.

29. Kosch, T.; Funk, M.; Schmidt, A.; Chuang, L.L. Identifying cognitive assistance with mobile electroencephalography: A case study with in-situ projections for manual assembly. Proc. ACM Hum. Comput. Interact. 2018, 2, 1–20.

30. Comstock, J.R., Jr.; Arnegard, R.J. The Multi-Attribute Task Battery for Human Operator Workload and Strategic Behavior Research; NASA: Washington, DC, USA, 1992.

31. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Advances in Psychology; North-Holland: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

32. Craik, A.; He, Y.; Contreras-Vidal, J.L. Deep learning for electroencephalogram (EEG) classification tasks: A review. J. Neural Eng. 2019, 16, 031001.

33. Sun, J.; Xie, J.; Zhou, H. EEG classification with transformer-based models. In Proceedings of the 2021 IEEE 3rd Global Conference on Life Sciences and Technologies (Lifetech), Nara, Japan, 9–11 March 2021; IEEE: New York, NY, USA; pp. 92–93.

34. Özdenizci, O.; Wang, Y.; Koike-Akino, T.; Erdoğmuş, D. Learning invariant representations from EEG via adversarial inference. IEEE Access 2020, 8, 27074–27085.

35. Li, G.; Lee, C.H.; Jung, J.J.; Youn, Y.C.; Camacho, D. Deep learning for EEG data analytics: A survey. Concurr. Comput. Pract. Exp. 2020, 32, e5199.

36. Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 2017, 38, 5391–5420.

37. Roy, Y.; Banville, H.; Albuquerque, I.; Gramfort, A.; Falk, T.H.; Faubert, J. Deep learning-based electroencephalography analysis: A systematic review. J. Neural Eng. 2019, 16, 051001.

38. Gao, Z.; Dang, W.; Wang, X.; Hong, X.; Hou, L.; Ma, K.; Perc, M. Complex networks and deep learning for EEG signal analysis. Cognitive Neurodynamics 2021, 15, 369–388.

39. Santiago-Espada, Y.; Myer, R.R.; Latorella, K.A.; Comstock, J.R., Jr. The Multi-Attribute Task Battery II (MATB-II) Software for Human Performance and Workload Research: A User’s Guide; NASA: Washington, DC, USA, 2011.

40. Lee, J.C.; Tan, D.S. Using a low-cost electroencephalograph for task classification in HCI research. In Proceedings of the 19th Annual ACM Symposium on User Interface Software and Technology, Montreux, Switzerland, 15–18 October 2006; pp. 81–90.

41. Millan, J.R. On the need for on-line learning in brain-computer interfaces. In Proceedings of the 2004 IEEE International Joint Conference on Neural Networks (IEEE Cat. No. 04CH37541), Budapest, Hungary, 25–29 July 2004; IEEE: New York, NY, USA; Volume 4, pp. 2877–2882.

42. Gysels, E.; Celka, P. Phase synchronization for the recognition of mental tasks in a brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2004, 12, 406–415.

43. Tavakolian, K.; Vasefi, F.; Naziripour, K.; Rezaei, S. Mental task classification for brain computer interface applications. In Proceedings of the Canadian Student Conference on Biomedical Computing, Waterloo, Belgium, 20–22 May 2006.

44. Available online: https://matb.larc.nasa.gov/ (accessed on 15 October 2023).

45. Arnegard, R.J. Operator Strategies under Varying Conditions of Workload. Ph.D. Thesis, Old Dominion University, Norfolk, VA, USA, 1991.

46. Available online: https://mbraintrain.com/smarting-wireless-eeg/ (accessed on 15 October 2023).

47. Lab Streaming Layer. Available online: https://github.com/sccn/labstreaminglayer (accessed on 5 September 2023).

48. Varone, G.; Boulila, W.; Driss, M.; Kumari, S.; Khan, M.K.; Gadekallu, T.R.; Hussain, A. Finger pinching and imagination classification: A fusion of CNN architectures for IoMT-enabled BCI applications. Inf. Fusion 2024, 101, 102006.

49. Makoto’s Preprocessing Pipeline. Available online: https://sccn.ucsd.edu/wiki/Makoto%27s_preprocessing_pipeline (accessed on 10 November 2023).

50. Schneider, S.; Baevski, A.; Collobert, R.; Auli, M. wav2vec: Unsupervised pre-training for speech recognition. arXiv 2019, arXiv:1904.05862.

51. Smith, M.E.; Gevins, A. Neurophysiologic monitoring of mental workload and fatigue during operation of a flight simulator. In Biomonitoring for Physiological and Cognitive Performance during Military Operations; SPIE: Bellingham, WA, USA, 2005; Volume 5797, pp. 116–126.

52. Wilson, G.F.; Russell, C.A. Operator functional state classification using multiple psychophysiological features in an air traffic control task. Hum. Factors 2003, 45, 381–389.

53. Wang, S.; Gwizdka, J.; Chaovalitwongse, W.A. Using wireless EEG signals to assess memory workload in the n-back task. IEEE Trans. Hum.-Mach. Syst. 2015, 46, 424–435.

54. Holm, A.; Lukander, K.; Korpela, J.; Sallinen, M.; Müller, K.M. Estimating brain load from the EEG. Sci. World J. 2009, 9, 639–651.

Table 1. Number of occurrences of each MATB-II subtask in a 5 min block, per TL level.

| Subtask | PW | LL | ML | HL |
|---|---|---|---|---|
| SYSM | - | 10 | 10 | 20 |
| TRCK | - | - | Active | Active, faster |
| COMM | - | 6 | 10 | 14 |
| RMAN | - | 5 | 10 | 20 |

| Metric | Overall | SYSM | COMM | RMAN |
|---|---|---|---|---|
| F1 score | 0.87 | 0.88 | 0.87 | 0.86 |
| Precision | 0.87 | 0.88 | 0.90 | 0.85 |
| Recall | 0.87 | 0.88 | 0.84 | 0.88 |
| Accuracy | 0.87 | 0.87 | 0.90 | 0.84 |
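As a reading aid for the metrics table, the F1 score is the harmonic mean of precision and recall, so the F1 row can be approximately recomputed from the other two rows. The sketch below does this with the tabulated values. Because those values are rounded to two decimals, and because the way the "Overall" column aggregates the three subtasks is not stated here, the check is only approximate.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) per column, copied from the table above.
REPORTED = {
    "Overall": (0.87, 0.87, 0.87),
    "SYSM": (0.88, 0.88, 0.88),
    "COMM": (0.90, 0.84, 0.87),
    "RMAN": (0.85, 0.88, 0.86),
}

# Recompute F1 from the rounded precision and recall, to two decimals.
recomputed = {name: round(f1_score(p, r), 2) for name, (p, r, _) in REPORTED.items()}
```

For all four columns the recomputed value agrees with the reported F1 score at two-decimal precision.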

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pušica, M.; Kartali, A.; Bojović, L.; Gligorijević, I.; Jovanović, J.; Leva, M.C.; Mijović, B. Mental Workload Classification and Tasks Detection in Multitasking: Deep Learning Insights from EEG Study. Brain Sci. 2024, 14, 149. https://doi.org/10.3390/brainsci14020149
