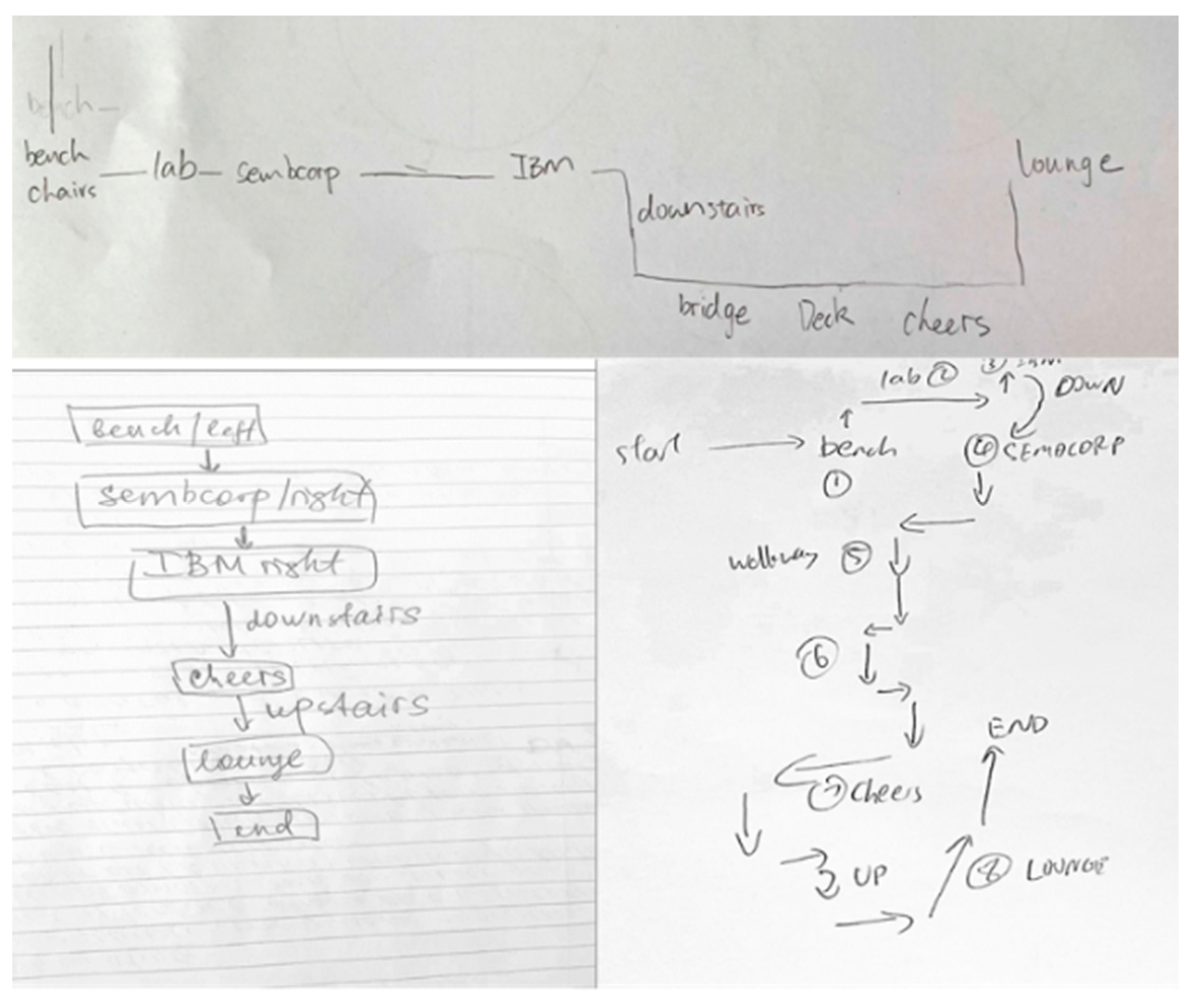

Different Types of Survey-Based Environmental Representations: Egocentric vs. Allocentric Cognitive Maps

Abstract

1. Introduction

2. Experiment 1

2.1. Methods

2.1.1. Participants

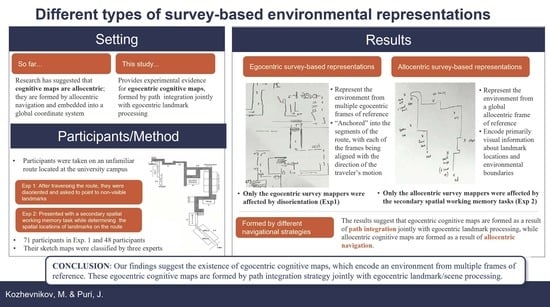

2.1.2. Route Traversal

2.2. Tasks and Materials

2.2.1. Triangle Completion Task

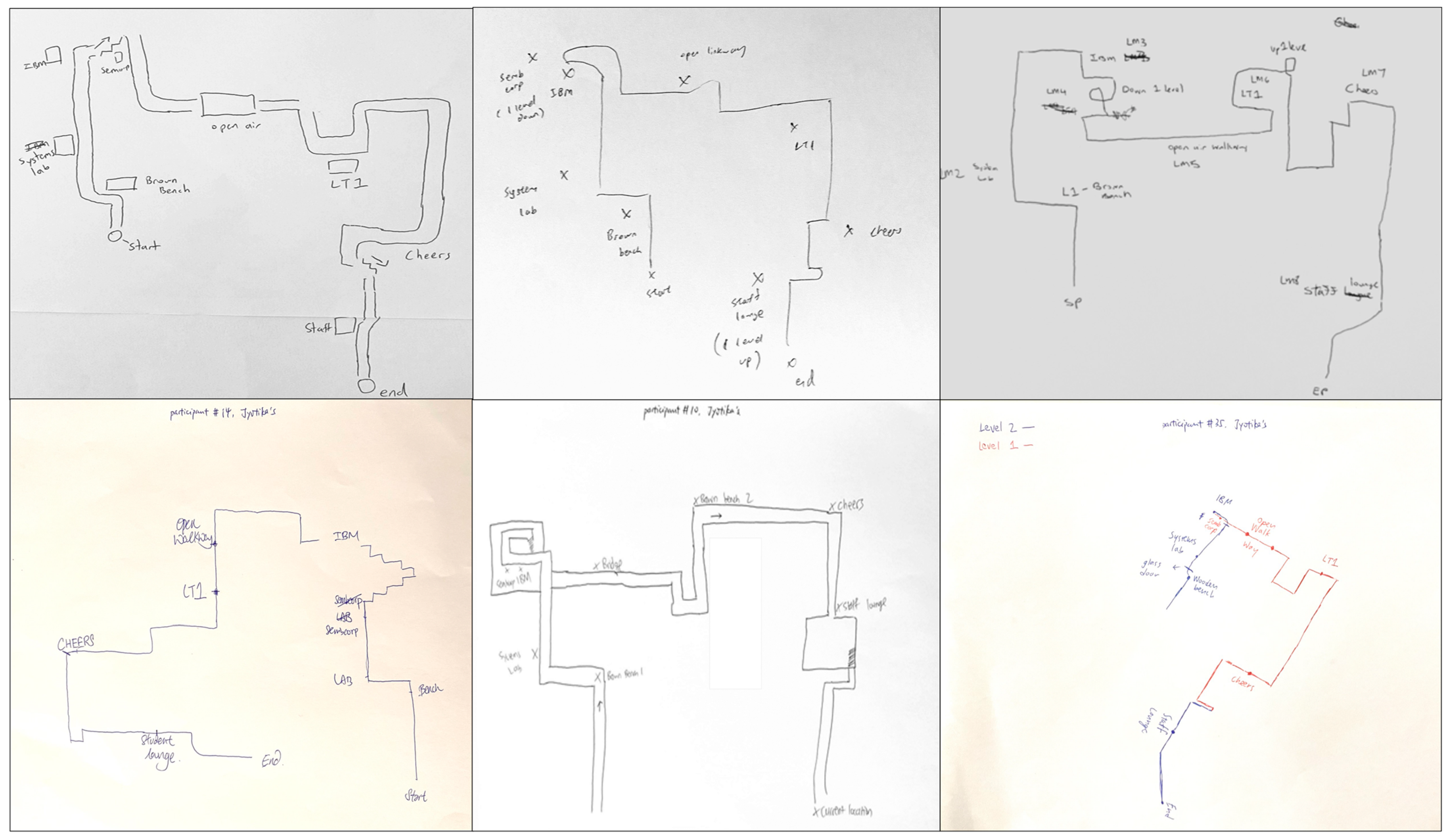

2.2.2. Sketch Map Task

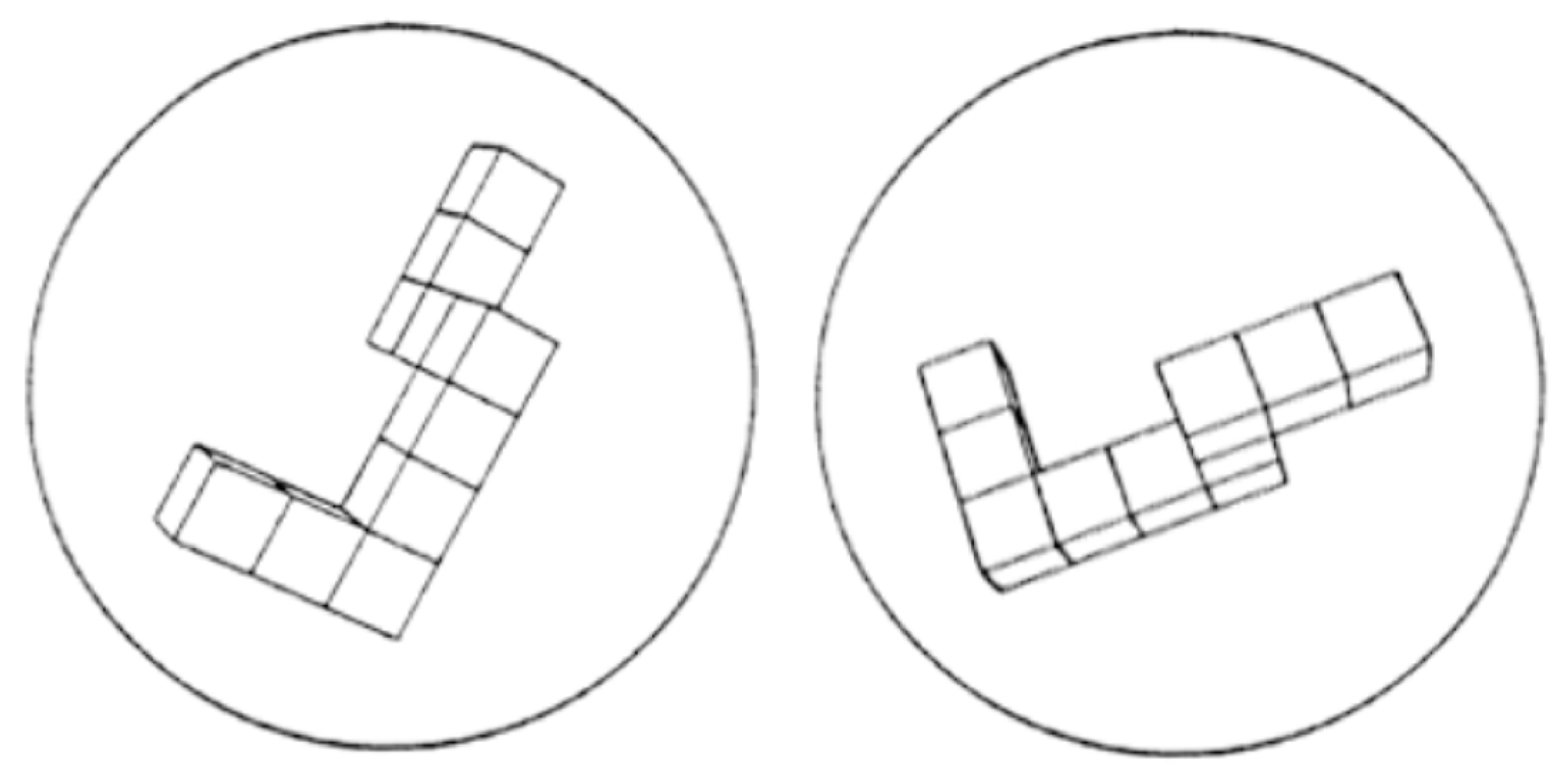

2.2.3. Route-Pointing Direction Task (R-PDT)

2.2.4. Perspective-Taking Ability (PTA) Task

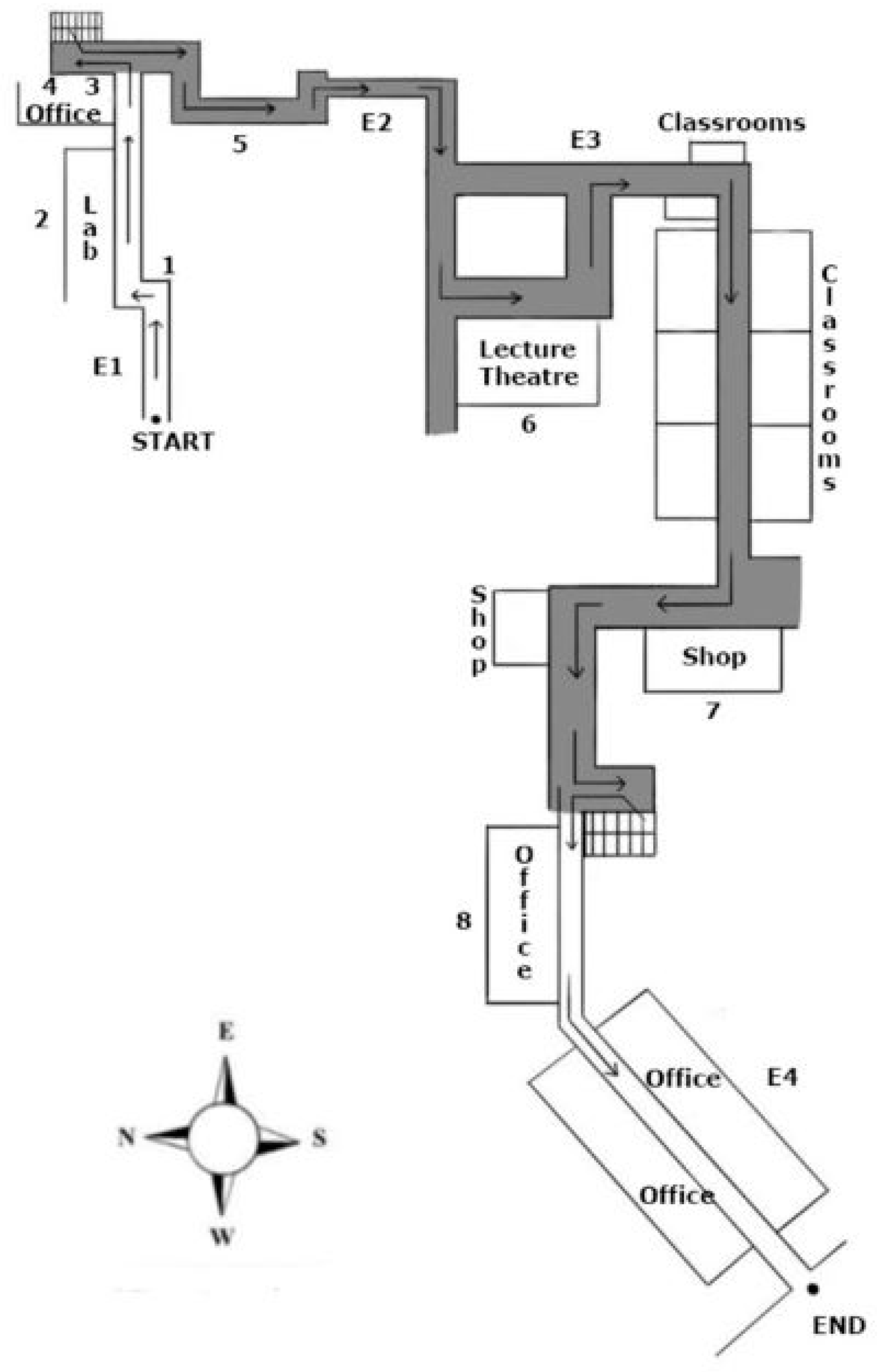

2.2.5. Mental Rotation (MR) Task

2.2.6. Procedure

2.3. Results

2.3.1. Sketch-Map Categorization

2.3.2. Descriptive Statistics

2.3.3. Effects of the Disorientation Paradigm

2.3.4. Survey Responses

2.4. Discussion

3. Experiment 2

3.1. Methods

3.1.1. Participants

3.1.2. Route Traversal

3.1.3. Tasks and Materials

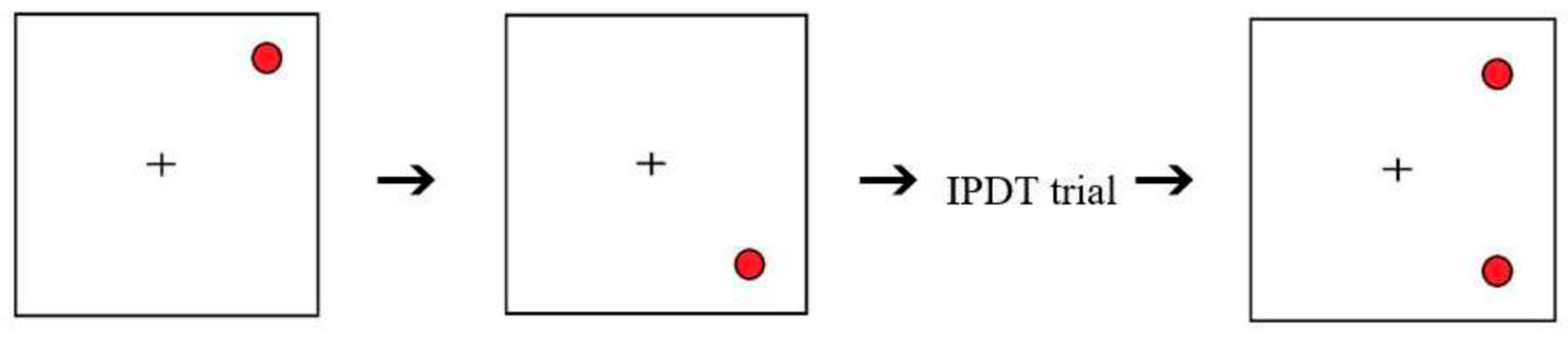

Imagined-Pointing Direction Task (I-PDT)

Spatial Working Memory (SWM) Distractor Task

Procedure

3.2. Results

3.2.1. Descriptive Statistics

3.2.2. Effects of Concurrent SWM Tasks

3.3. Discussion

4. General Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Time 1 | Deviation from the Correct Angle (Mean) | Deviation from the Correct Angle (SD) | Time 2 | Deviation from the Correct PD (Mean) | Deviation from the Correct PD (SD) |
|---|---|---|---|---|---|
| LDMK #2 | 18° | 23° | LDMK #5 | 30° | 29° |
| LDMK #4 | 108° | 48° | LDMK #3 | 105° | 55° |
| LDMK #6 | 81° | 36° | LDMK #1 | 80° | 32° |
| LDMK #8 | 78° | 30° | LDMK #7 | 74° | 35° |
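The deviations tabulated above are angular pointing errors summarized as a mean and SD per landmark. The scoring procedure itself is not reproduced in this section, so the following is only a minimal sketch, assuming the error is the absolute difference between the pointed and correct directions, wrapped to the 0–180° range. The function name `angular_deviation` and the response values are hypothetical and are not data from the study.

```python
from statistics import mean, stdev


def angular_deviation(pointed_deg: float, correct_deg: float) -> float:
    """Absolute angular difference in degrees, wrapped to [0, 180].

    Assumption: pointing error is unsigned, so 350° vs. 20° counts as 30°.
    """
    diff = abs(pointed_deg - correct_deg) % 360.0
    return 360.0 - diff if diff > 180.0 else diff


# Hypothetical pointing responses (degrees) for one landmark; not study data.
responses = [10.0, 350.0, 45.0, 200.0]
correct = 20.0

errors = [angular_deviation(r, correct) for r in responses]
print(errors)        # per-participant unsigned errors
print(mean(errors))  # group mean deviation
print(stdev(errors)) # sample standard deviation
```

Wrapping matters because a raw difference such as 330° would otherwise inflate the mean; with wrapping, the largest possible error is 180° (pointing directly away from the target).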

| Task | Procedural/Route Map Sketchers (Mean) | Procedural/Route Map Sketchers (SD) | Allocentric-Survey Map Sketchers (Mean) | Allocentric-Survey Map Sketchers (SD) | Egocentric-Survey Map Sketchers (Mean) | Egocentric-Survey Map Sketchers (SD) | ANOVA Results, F(2, 63) |
|---|---|---|---|---|---|---|---|
| (i) R-PDT Time 1 | 87.09 | 27.93 | 67.17 | 27.89 | 64.14 | 29.26 | 3.98 * |
| (ii) R-PDT Time 2 | 82.98 | 25.45 | 71.56.44 | 34.84 | 81.66 | 30.50 | 0.89 |
| (iii) MR efficiency | 0.42 | 0.09 | 0.47 | 0.11 | 0.49 | 0.08 | 3.56 * |
| (iv) PTA efficiency | 0.70 | 0.33 | 0.93 | 0.34 | 1.11 | 0.63 | 4.21 * |
| (v) Triangle Completion | 21.95 | 11.95 | 25.55 | 12.79 | 13.31 | 7.98 | 7.08 ** |

| Assessment | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1. R-PDT Time 1 | -- | | | |
| 2. Triangle completion task | 0.22 | -- | | |
| 3. PTA efficiency | 0.29 * | 0.21 | -- | |
| 4. MR efficiency | 0.08 | 0.20 | 0.34 ** | -- |

| Questions Asked by the Interviewer | Allocentric-Survey Mappers | Egocentric-Survey Mappers |
|---|---|---|
| What cues did you use to remember this route? | “I remember recalling the corridor first, and then from the starting point, I recall the relative position on one floor with respect to another.” | “I considered the distance between landmarks, sequence and location with respect to where I was.” “The sequence of landmarks and right and left turns. Used bigger landmarks for orientation and the general landscape as well.” |
| What do you remember from navigating the route? | “I remember it was like…not a straight corridor…it turned…it’s slanted. … I think it slanted to the…slanted right.” “We walked a little, and then we took a 45-degree turn to the left.” “I was basically making a round by the corner of the building so you go back so you turn right, turn right again so you pass by Sembcorp, which should be on top of the IBM then.” “Straight path and then made a 45-degree angled turn to the left, walked straight all the way to the endpoint.” “Long corridor, and then the bench was on the right side of it.” | “Facing the Sembcorp lab, we turned left. In front of me, on the right, was LT1. The staff lounge was on my right.” “Before we took the stairs, I could see the orange board, with words. After that, the orange board was like right in front… and then the staff lounge was on the right.” “When we came out of the stairs, I could see Sembcorp in front of me.” “From the link, you can see that the greenery that was there earlier and above, when we took the stairs, it became closer and at eye level.” “As we faced Cheers, we turned right… The unsheltered pathway was on my left. I saw LT1 on my right.” |
| How do you picture the route when you think about it? Do you imagine it from the top-down (bird’s-eye view) or first-person (as you were on the route) perspective? | “Definitely tried to create a top-down perspective. I was picturing myself on the route when I was navigating but not when I try to recall the route. I can’t picture this during recall.” “When I think about it, it looks the same to me as it would have on google maps.” | “No top-down perspective. I use a first-person perspective but only at landmarks. I mean I remember how the landmarks looked like from my viewpoint.” |

| Trial | I-PDT without a Distractor: Imagined Heading | I-PDT without a Distractor: Pointing Direction | I-PDT with a Distractor: Imagined Heading | I-PDT with a Distractor: Pointing Direction |
|---|---|---|---|---|
| 1 | 100° | BL | 100° | BL |
| 2 | 100° | BR | 100° | FL |
| 3 | 100° | BL | 120° | BR |
| 4 | 120° | BL | 120° | FR |
| 5 | 120° | FL | 120° | BR |
| 6 | 140° | BL | 140° | BL |
| 7 | 140° | FR | 140° | FL |
| 8 | 140° | FR | 160° | FL |
| 9 | 160° | FL | 160° | BR |
| 10 | 180° | BR | 160° | FR |
| 11 | 180° | FR | 180° | BL |
| 12 | 180° | BR | 180° | FL |

| Task | Procedural/Route Map Sketchers (Mean) | Procedural/Route Map Sketchers (SD) | Allocentric-Survey Map Sketchers (Mean) | Allocentric-Survey Map Sketchers (SD) | Egocentric-Survey Map Sketchers (Mean) | Egocentric-Survey Map Sketchers (SD) | ANOVA Results, F(2, 44) |
|---|---|---|---|---|---|---|---|
| (i) I-PDT-1 | 0.30 | 0.18 | 0.51 | 0.18 | 0.53 | 0.24 | 5.69 ** |
| (ii) I-PDT-2 | 0.35 | 0.16 | 0.50 | 0.14 | 0.56 | 0.22 | 5.65 ** |
| (iii) I-PDT-1 RT (s) | 14.80 | 6.28 | 12.86 | 2.91 | 10.95 | 2.28 | 1.47 |
| (iv) I-PDT-2 RT (s) | 15.20 | 5.48 | 16.53 | 3.85 | 11.94 | 2.60 | 4.59 * |
| (vi) MR efficiency | 0.36 | 0.08 | 0.44 | 0.04 | 0.40 | 0.06 | 7.04 ** |
| (vii) PTA efficiency | 0.59 | 0.21 | 0.66 | 0.14 | 0.81 | 0.31 | 3.49 * |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kozhevnikov, M.; Puri, J. Different Types of Survey-Based Environmental Representations: Egocentric vs. Allocentric Cognitive Maps. Brain Sci. 2023, 13, 834. https://doi.org/10.3390/brainsci13050834
