Abstract

The eye region conveys considerable information regarding an individual’s emotions, motivations, and intentions during interpersonal communication. Evidence suggests that the eye regions of an individual expressing emotions can capture attention more rapidly than the eye regions of an individual in a neutral affective state. However, how attentional resources affect the processing of emotions conveyed by the eye regions remains unclear. Accordingly, the present study employed a dual-target rapid serial visual presentation task: happy, neutral, or fearful eye regions were presented as the second target, with a temporal lag between two targets of 232 or 696 ms. Participants completed two tasks successively: Task 1 was to identify which species the upright eye region they had seen belonged to, and Task 2 was to identify what emotion was conveyed in the upright eye region. The behavioral results showed that the accuracy for fearful eye regions was lower than that for neutral eye regions under the condition of limited attentional resources; however, accuracy differences across the three types of eye regions did not reach significance under the condition of adequate attentional resources. These findings indicate that preferential processing of fearful expressions is not automatic but is modulated by available attentional resources.

1. Introduction

During the COVID-19 pandemic, face masks emerged as one of the most economically practical protective measures employed worldwide to mitigate viral transmission, becoming an essential item for daily travel. Notably, because wearing a mask can block the nose and mouth regions of the face, existing evidence suggests, on the one hand, that wearing face masks restrains the extraction and processing of facial information, leading to a decrease in the speed and accuracy of facial expression recognition [1,2,3]. On the other hand, wearing a face mask makes people pay more attention to information in the eye region, resulting in increased activity of the orbicularis oculi muscle and an expanded range of direct gaze perception [4,5]. Regarding the eye region, much popular literature refers to the eyes as “a window into the soul”. Empirical research in psychology has consistently revealed that during face perception tasks, the eye region tends to be prioritized for attention and receives the longest fixations within the facial structure [6,7]. Furthermore, the eye region represents one of the most informative sources for facial expression recognition, and people can accurately identify others’ current emotions and motivations based on isolated eye region cues. For instance, numerous studies have shown that basic facial expressions and a wide range of complex emotions can be recognized quickly and efficiently from images of isolated eye regions [8,9,10,11]. In particular, individuals from Eastern cultures exhibit a higher degree of reliance on the eye region to express and recognize emotional states from the face than do their Western counterparts [12,13]. Therefore, the eye region plays a crucial role in the perception of facial expressions. However, the perceptual and encoding processes underlying the perception of emotional cues in the eyes remain unclear.

It has long been known that people have evolved to prioritize perceptual analysis and elaborate encoding of visual stimuli with high-value signals while ignoring task-irrelevant stimuli because of the limited capacity of the attention system. This process is referred to as selective attention [14]. Facial expressions, which are highly prevalent expressive nonverbal social cues in daily life, have been shown to influence selective attention [15,16,17,18]. More specifically, happy expressions as well as threat-related (fearful and angry) expressions can rapidly capture attention and obtain prioritized processing compared to neutral expressions, as reflected by higher recognition accuracies and shorter reaction times [8,19,20,21]. Even when presented using isolated eye region cues, angry and fearful expressions can still be detected and recognized quickly and accurately, triggering a pronounced threat detection advantage and increased neural activity [10,22,23,24]. Using the classical dot-probe paradigm of spatial attention, Carlson and colleagues found that, regardless of conscious awareness of the eye region stimuli, fearful eye regions modulate spatial attention by facilitating attentional orienting and delaying attentional disengagement [25,26], supporting the notion that the human brain is highly sensitive to emotional cues in the eye region [27,28].

In addition to selective attention in the spatial domain, selective attention in the temporal domain focuses on the attentional processing of sequentially presented stimulus streams. A classical task for investigating this issue is the dual-target rapid serial visual presentation (RSVP), which permits a detailed exploration of the impact of available attentional resources on the processing of predefined targets [29,30]. In this task, a widely acknowledged phenomenon is the attentional blink (AB), in which the recognition accuracy of the second target (T2) significantly decreases when it appears within 200–500 ms after the presentation of the first target (T1) [31,32]. When emotional words [33,34,35], scene pictures [36,37], or facial expressions [38,39,40,41] are used as T2 and neutral stimuli as T1, an increasing number of studies have revealed that positively and negatively valenced T2 stimuli presented within the AB period are identified with significantly higher accuracy than neutral T2 stimuli. Considering the essential importance of the eye region in social interactions [42], we recently presented happy, neutral, or fearful eye regions as T2 and observed reduced AB effects induced by happy and fearful eye regions [23,24]. These findings strongly support the notion that the emotional significance of T2 stimuli facilitates prioritized access to the capacity-limited attentional system, allowing for fine-grained encoding and representation at the working memory stage [16,43].

Beyond the influence of the emotional valence of T2 stimuli, several studies have revealed that the perceptual relevance between two targets or between targets and distractors affects AB [30,44,45,46]. That is, the more similar the perceptual attributes of T2 are to those of the preceding T1 or distractors, the stronger the AB effect. Based on multiple channels for configural and featural processing, the AB effect is assumed to occur when the processing channels or routes involved in the discrimination of T1 and T2 stimuli overlap. Importantly, in addition to the feature-based channel used in object discrimination, a configural-based channel is available for the discrimination of facial stimuli. Following this logic, non-face T1 and face T2 stimuli do not compete for a single capacity-limited resource pool, which might lead to attenuation or absence of the AB effect for face T2 presented during the AB period [47]. Our recent studies that focused on the relationship between temporal attention and the processing of eye region expressions repeatedly showed that happy and fearful eye regions could reduce the AB effect, regardless of whether the emotional eye region served as the first or the second target, with one of three neutral house images displayed as the other target [23,48]. The differences in perceptual attributes and processing channels between the two targets may partially account for the attenuated AB effect. Nevertheless, little is known about the influence of the emotional content of T2 on AB when the same processing channel is recruited.

The present study aimed to address the concerns raised above and to investigate whether emotional eye regions serving as T2 can still reduce the AB effect when T1 and T2 engage the same face processing channel. To this end, we carried out a dual-target RSVP task in which upright eye region images of humans, apes, and dogs with similar physical features were presented as T1 stimuli, upright happy, neutral, or fearful eye region images of humans were presented as T2 stimuli, and 12 images of inverted neutral eye regions were presented as distractors. The stimulus onset asynchrony (SOA) between T1 and T2 was set to either 232 or 696 ms so that T2 appeared at lag2 or lag6. This experimental protocol was similar to that used in our previous work [23,40], which allowed us to elucidate, to some degree, the effect of perceptual similarity between the two targets on the AB. After the presentation of an RSVP stream, participants were asked to report the source of the T1 eye region and then the emotional category of the T2 eye region. Increasing evidence supports the idea that T2 accuracy within the AB period is significantly lower than that outside the AB period [30,31,49]; the same holds true for emotionally valenced eye regions acting as T2 [23]. Consistent with these observations, we hypothesized that the emotions conveyed by T2 eye regions would be recognized more accurately in the lag6 condition than in the lag2 condition. Furthermore, several studies have reported that an increase in the perceptual load of T1 can reduce the detection rate of fearful T2 faces [50,51], which suggests that the privileged processing of fearful faces does not occur automatically but relies on available attentional resources. Since both T1 and T2 in the present study were eye region stimuli, they would occupy the same processing channels and compete for limited central cognitive resources. Thus, reducing attentional resources would be expected to inhibit the prioritized processing of fearful eye regions in the lag2 condition and result in lower accuracy.

2. Materials and Methods

2.1. Participants

Twenty-eight college students (20 female) were recruited from Liaoning Normal University. Participants' ages ranged from 18 to 25 years (M = 20.46 years). This sample size was sufficient to test the effect of the emotional valence of eye regions on the AB at a medium effect size (Cohen's f = 0.25, power = 0.95, α = 0.05). All participants were right-handed, had normal or corrected-to-normal vision, and had no history of brain or psychological disorders. They had not previously participated in similar experiments and received appropriate monetary compensation after the experiment.

2.2. Stimuli

From the Chinese Facial Affective Picture System [52], 15 neutral faces (8 female), 2 happy faces, and 2 fearful faces (half female) were selected as the original materials. Furthermore, high-definition images of 1 ape and 1 dog were selected from the internet. Adobe Photoshop 8.0 software was used to crop and grayscale the human and non-human images mentioned above, transforming them into elliptical images showing only the eye region, 252 pixels wide and 100 pixels high. Among these eye region pictures, 2 happy, 2 neutral, and 2 fearful upright human pictures were used as T2 stimuli, 12 neutral pictures (6 female) from human face models were inverted and used as distractors, and the remaining 3 upright pictures (1 ape, 1 dog, and 1 female human) were used as the T1 stimuli. When participants viewed these images from a chair located 65 cm away from the display screen, the visual angle was 5.9° × 2.3°. The brightness, contrast, and other low-level physical features of all stimulus images were standardized.
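The relation between viewing distance, visual angle, and on-screen size can be sketched as follows. This is only an illustration of the standard geometry: the function names are hypothetical, and the physical size is inferred from the reported 5.9° × 2.3° at 65 cm rather than taken from the original materials.

```python
import math


def size_from_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """Physical extent (cm) that subtends angle_deg at a viewing distance of distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def visual_angle(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an object of size_cm at distance_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


# Values reported in the text: 5.9 x 2.3 degrees at 65 cm.
width_cm = size_from_visual_angle(5.9, 65.0)
height_cm = size_from_visual_angle(2.3, 65.0)
print(f"implied on-screen size: {width_cm:.2f} cm x {height_cm:.2f} cm")
```

Under these assumptions the stimuli would occupy roughly 6.7 cm × 2.6 cm on the screen.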

In our pilot study, twenty-two participants (15 female, mean age = 21.68 years) from Liaoning Normal University were recruited to rate the valence and arousal of the 6 eye region images (T2) using a 9-point scale. Rating results showed that the three types of eye regions (happy, neutral, and fearful) differed significantly from one another in valence [F(2, 42) = 259.21, p < 0.001, ηp² = 0.92]. As shown in Table 1, the happy eye regions were assessed more positively than the neutral (p < 0.001) and fearful (p < 0.001) eye regions, and the fearful eye regions were assessed more negatively than the neutral eye regions (p < 0.001). Regarding arousal, a significant main effect of the emotional category was observed [F(2, 42) = 76.60, p < 0.001, ηp² = 0.78]. Further pairwise comparisons revealed that the happy and fearful eye regions were rated as more arousing than the neutral eye regions (ps < 0.001), while the difference between the former two conditions was not significant (p = 0.17).

Table 1.

Mean valence and arousal scores for happy, neutral, and fearful eye regions. Standard deviations are in parentheses.

2.3. Procedure

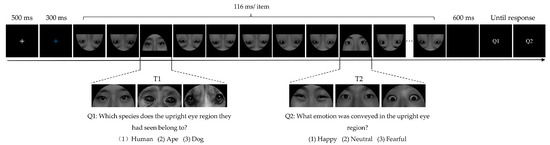

The experiment was conducted in a quiet cognitive-behavioral laboratory. Participants were seated in a comfortable chair positioned 65 cm away from a 19-inch computer monitor. The screen resolution was 1440 × 900 pixels with a 60 Hz refresh rate. The experimental procedure was programmed using E-Prime 2.0 software (Psychology Software Tools, Inc., Pittsburgh, PA, USA). As displayed in Figure 1, at the beginning of each trial, a white fixation cross was presented at the center of the screen for 500 ms, followed by a blue fixation cross for 300 ms, indicating the imminent appearance of the stimuli that needed attention. Next, an RSVP stream consisting of 12 inverted distractor images and 2 target images was presented successively at the same spatial location. Each image was presented for 116 ms. Three eye regions from different species (human, ape, and dog) were presented as T1, and happy, neutral, or fearful eye regions from human models were presented as T2. To avoid any preparation effect on T1, the stimulus appeared randomly and equiprobably at the 4th, 5th, or 6th position within the stimulus stream. T2 randomly and equiprobably appeared in the second or sixth position after T1. After a blank interval of 600 ms, two question screens appeared, and participants were required to report the specific source of T1 (Question 1: Which species did the upright eye region you saw belong to? 1: Human; 2: Ape; 3: Dog) and the emotional category of T2 (Question 2: What emotion was conveyed in the upright eye region? 1: Happy; 2: Neutral; 3: Fearful) by pressing the corresponding digit on the keyboard without time constraint. The participants were informed that both tasks were equally important and that they had to report T2 as accurately as possible while prioritizing the correct identification of T1. No feedback was given. The inter-trial interval was 600 ms.
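The structure of a single RSVP stream described above can be sketched in a few lines. This is a minimal illustration and not the E-Prime implementation used in the study: the labels, the function names, and the random ordering of the 12 distractors are assumptions made for the example.

```python
import random

ITEM_MS = 116            # nominal duration of each image, as reported
N_DISTRACTORS = 12
T1_POSITIONS = (4, 5, 6)  # 1-based serial positions at which T1 may appear
LAGS = (2, 6)             # T2 appears 2 or 6 positions after T1


def soa_ms(lag: int) -> int:
    """T1-T2 stimulus onset asynchrony implied by a lag (no blank between items)."""
    return lag * ITEM_MS


def build_stream(t1_pos: int, lag: int, rng: random.Random) -> list[str]:
    """Return the 14 item labels of one trial in presentation order."""
    t2_pos = t1_pos + lag
    # Assumption: the 12 distractors are shown once each, in random order.
    distractors = [f"distractor_{i:02d}" for i in range(1, N_DISTRACTORS + 1)]
    rng.shuffle(distractors)
    remaining = iter(distractors)
    stream = []
    for pos in range(1, N_DISTRACTORS + 3):  # 12 distractors + 2 targets
        if pos == t1_pos:
            stream.append("T1")
        elif pos == t2_pos:
            stream.append("T2")
        else:
            stream.append(next(remaining))
    return stream


rng = random.Random(0)
example = build_stream(rng.choice(T1_POSITIONS), rng.choice(LAGS), rng)
print(example, soa_ms(2), soa_ms(6))
```

With 116 ms per item, lag2 and lag6 correspond to the reported SOAs of 232 ms and 696 ms, and even the latest T1 position (6th) combined with lag6 places T2 at the 12th of 14 positions.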

Figure 1.

Illustration of a typical experimental trial sequence.

Participants were instructed to practice 24 trials before the formal experiment to ensure that they clearly understood and were familiar with the experimental procedure. During the practice stage, the participants were given timely feedback on correct or incorrect responses. The formal experiment was divided into 4 blocks, resulting in 288 trials in total, and there were 48 trials for each condition. In each block of 72 trials, the number of conditions was equal, and the order was randomized. After completing a block, the participants were allowed to take a break for an appropriate amount of time before proceeding to the next block.
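The trial counts follow from the 2 (lag) × 3 (emotion) design: 6 conditions × 12 trials per block × 4 blocks gives 288 trials, or 48 per condition. A minimal sketch of such a block structure is given below; the function name and the seeding are illustrative assumptions, not the original randomization procedure.

```python
import itertools
import random
from collections import Counter

LAG_LEVELS = ("lag2", "lag6")
EMOTIONS = ("happy", "neutral", "fearful")
N_BLOCKS = 4
TRIALS_PER_BLOCK = 72


def make_blocks(seed: int = 0) -> list[list[tuple[str, str]]]:
    """Build 4 blocks of 72 trials with equal numbers of each condition per block."""
    rng = random.Random(seed)
    conditions = list(itertools.product(LAG_LEVELS, EMOTIONS))  # 6 conditions
    reps = TRIALS_PER_BLOCK // len(conditions)                  # 12 per block
    blocks = []
    for _block in range(N_BLOCKS):
        block = [cond for cond in conditions for _rep in range(reps)]
        rng.shuffle(block)  # randomize trial order within the block
        blocks.append(block)
    return blocks


blocks = make_blocks()
totals = Counter(trial for block in blocks for trial in block)
print(sum(totals.values()), dict(totals))
```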

3. Results

3.1. Analysis of T1 Accuracy

The mean T1 accuracy (proportion correct) across all conditions was 0.96 ± 0.01 (M ± SE). A two-way repeated measures analysis of variance (ANOVA) containing the within-subject factors of lag (2 levels: lag2, lag6) and emotional valence of the eye region (3 levels: happy, neutral, fearful) was run on the T1 accuracy. Neither the main effect of lag [F(1, 27) = 0.63, p = 0.43, ηp² = 0.02] nor the main effect of emotional valence [F(2, 54) = 0.75, p = 0.47, ηp² = 0.03] was significant, nor was there a significant interaction effect [F(2, 54) = 1.05, p = 0.36, ηp² = 0.04]. These results indicate that T1 accuracy was not affected by the T2 condition.
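For readers who wish to check the reported effect sizes, partial eta squared can be recovered from an F value and its degrees of freedom with the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it to the T1 statistics above; the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and degrees of freedom as reported for T1 accuracy.
t1_effects = {
    "lag": (0.63, 1, 27),
    "emotional valence": (0.75, 2, 54),
    "lag x valence": (1.05, 2, 54),
}
for name, (f_value, df1, df2) in t1_effects.items():
    print(name, round(partial_eta_squared(f_value, df1, df2), 2))
```

Because the published F values are themselves rounded, values recomputed this way can differ from the reported ones in the last decimal place.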

3.2. Analysis of T2 Accuracy

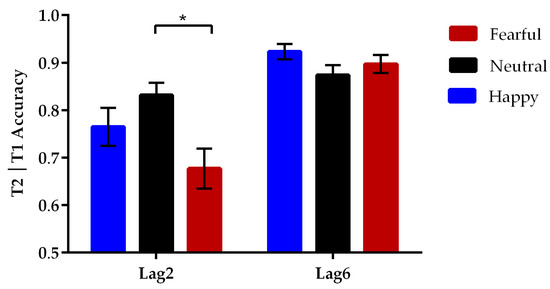

Since the source of T2 identification errors in incorrect T1 trials was unknown, the analysis of T2 accuracy focused only on trials wherein T1 was correctly reported [23,30]; see Table 2. A two-way repeated measures ANOVA on T2 accuracy was conducted with lag (2 levels: lag2, lag6) and emotional valence of the eye region (3 levels: happy, neutral, fearful) as within-subject factors. A significant main effect of lag was observed [F(1, 27) = 56.55, p < 0.001, ηp² = 0.68]. Further pairwise comparisons with Bonferroni correction revealed that the T2 eye regions in the lag6 condition (M ± SE, 0.90 ± 0.01) were identified more accurately than those in the lag2 condition (0.76 ± 0.02, p < 0.001). More importantly, there was a significant interaction between lag and emotional valence [F(2, 54) = 11.76, p < 0.001, ηp² = 0.30], as seen in Figure 2. Post-hoc tests showed that, in the condition of limited attentional resources (lag2), the identification accuracy of neutral T2 (0.83 ± 0.03) was significantly higher than that of fearful T2 (0.68 ± 0.04, p = 0.012), whereas the accuracy differences between the neutral and happy T2 trials (0.76 ± 0.04, p = 0.51) and between the fearful and happy T2 trials (p = 0.23) were not significant. In the lag6 condition of adequate attentional resources, the accuracies of identifying happy (0.92 ± 0.02), neutral (0.87 ± 0.02), and fearful T2 (0.90 ± 0.02) did not differ significantly from one another (ps > 0.14).
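The conditional scoring rule described above (T2 accuracy computed only over trials with a correct T1 report) can be sketched as follows. The trial records and field names are invented for illustration and are not data from the study.

```python
from collections import defaultdict


def t2_accuracy_given_t1(trials):
    """Proportion of correct T2 reports per (lag, emotion), using only T1-correct trials."""
    valid = defaultdict(int)  # T1-correct trials per condition
    hits = defaultdict(int)   # of those, trials with a correct T2 report
    for trial in trials:
        if not trial["t1_correct"]:
            continue  # the source of a T2 error is ambiguous when T1 was missed
        cell = (trial["lag"], trial["emotion"])
        valid[cell] += 1
        hits[cell] += int(trial["t2_correct"])
    return {cell: hits[cell] / valid[cell] for cell in valid}


# Hypothetical toy records, for illustration only.
toy_trials = [
    {"lag": "lag2", "emotion": "fearful", "t1_correct": True, "t2_correct": True},
    {"lag": "lag2", "emotion": "fearful", "t1_correct": True, "t2_correct": False},
    {"lag": "lag2", "emotion": "fearful", "t1_correct": False, "t2_correct": True},
    {"lag": "lag6", "emotion": "fearful", "t1_correct": True, "t2_correct": True},
]
acc = t2_accuracy_given_t1(toy_trials)
print(acc)
```

The per-condition denominators produced this way correspond to the numbers of valid trials summarized in Table 2.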

Table 2.

Summary of the mean number of valid trials for different conditions. Standard deviations are in parentheses.

Figure 2.

Mean percentage of correct identification of happy, neutral, and fearful T2 eye regions at each T1–T2 lag. Error bars represent standard errors of the means (* p < 0.05). The report of the fearful eye region was less accurate than that of the neutral eye region at the lag2 condition.

Furthermore, to explore the effect of response bias, we analyzed the types of errors made when the emotional T2 was reported incorrectly. First, we calculated the percentage of trials on which T1 was correctly reported and T2 was incorrectly judged as either the neutral (Eneu) or the emotional (Eemo) eye region. Then, a three-way repeated measures ANOVA was performed with error type (2 levels: Eemo, Eneu), lag (2 levels: lag2, lag6), and emotional valence of the eye region (2 levels: happy, fearful) as within-subject factors. As illustrated in Table 3, a significant main effect of lag was observed [F(1, 27) = 46.04, p < 0.001, ηp² = 0.63]. Further pairwise comparisons revealed that the rate of incorrect judgment for the T2 eye regions in the lag2 condition (M ± SE, 0.02 ± 0.003) was significantly higher than that in the lag6 condition (0.004 ± 0.001, p < 0.001). Additionally, a significant main effect of error type was observed [F(1, 27) = 26.22, p < 0.001, ηp² = 0.49]; incorrect judgments were more likely to be neutral (0.02 ± 0.003) than emotional (0.004 ± 0.001, p < 0.001). Moreover, there was a significant interaction between lag and error type [F(1, 27) = 24.32, p < 0.001, ηp² = 0.47]. Post-hoc tests showed that in the lag2 condition, the rate of T2 being incorrectly judged as neutral (0.033 ± 0.005) was significantly higher than the rate of T2 being incorrectly judged as emotional (0.006 ± 0.001, p < 0.001). Similarly, in the lag6 condition, the rate of T2 being incorrectly judged as neutral (0.007 ± 0.002) was also significantly higher than the rate of T2 being incorrectly judged as emotional (0.001 ± 0.000, p = 0.001).
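The classification of errors into Eneu and Eemo can be illustrated with a small sketch. The denominator used here (all T1-correct trials in a cell) is an assumption, since the text does not state how the rates were normalized, and the toy trials are invented for the example.

```python
from collections import Counter

EMOTIONAL = {"happy", "fearful"}


def classify_error(shown: str, reported: str):
    """Return 'Eneu' or 'Eemo' for a wrong report of an emotional T2, else None."""
    if shown not in EMOTIONAL or reported == shown:
        return None
    return "Eneu" if reported == "neutral" else "Eemo"


def error_rates(trials):
    """Error rate per (lag, shown emotion, error type) over T1-correct trials.

    Each trial is a tuple (lag, shown, reported, t1_correct).
    Assumption: the denominator is the number of T1-correct trials in the cell.
    """
    counts, valid = Counter(), Counter()
    for lag, shown, reported, t1_correct in trials:
        if not t1_correct or shown not in EMOTIONAL:
            continue
        valid[(lag, shown)] += 1
        kind = classify_error(shown, reported)
        if kind is not None:
            counts[(lag, shown, kind)] += 1
    return {
        (lag, shown, kind): counts[(lag, shown, kind)] / n
        for (lag, shown), n in valid.items()
        for kind in ("Eneu", "Eemo")
    }


# Hypothetical toy trials, for illustration only.
toy = [
    ("lag2", "fearful", "neutral", True),
    ("lag2", "fearful", "happy", True),
    ("lag2", "fearful", "fearful", True),
    ("lag2", "fearful", "neutral", True),
    ("lag2", "fearful", "neutral", False),  # excluded: T1 incorrect
]
rates = error_rates(toy)
print(rates)
```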

Table 3.

Summary of the mean error rates for different conditions. Standard deviations are in parentheses.

4. Discussion

The human eye region has been found to transmit various emotional and social signals and play an important role in a large range of cognitive processes and adaptive behavior [42,53]. For instance, numerous studies indicated that not only fearful and angry expressions but also happy expressions projected in the eye region can attract attention rapidly and be processed efficiently [23,25,26,48]. However, it remains unclear whether the prioritized processing of fearful eye regions depends on available attentional resources. In this study, we selected neutral eye region images derived from three different species as T1 stimuli and emotionally valenced eye region images from humans as T2 stimuli. This was designed to enhance the perceptual similarity between two targets and maintain consistency in the processing channels. Furthermore, we manipulated the temporal interval between T1 and T2 as well as the emotionality of the T2 eye region to examine the influence of the affective valence of the eye region on AB. Here, we found a significant main effect of lag. Compared with the limited attentional resources condition of lag2, T2 accuracy in the adequate attentional resources condition of lag6 was significantly higher, indicating a close relationship between T2 accuracy and available attentional resources. Previous studies that used digits, letters, words, or facial expressions as T2 stimuli also systematically manipulated the temporal interval between T1 and T2 and consistently found a significant decrease in T2 recognition accuracy when T2 appeared 100–500 ms after the presentation of T1 [30,38,40,50,54]. 
In the present study, the lag2 condition corresponded to a T1–T2 interval of 232 ms and the lag6 condition to a T1–T2 interval of 696 ms. The higher recognition accuracy in the lag6 condition than in the lag2 condition indicates that our manipulation of the T1–T2 temporal lag was appropriate and successfully induced an AB effect, providing new insight into the idea that the availability of attentional resources is a critical factor influencing the perception of visual stimuli.

Most importantly, there was a significant interaction between lag and emotional valence. Specifically, in the lag2 condition, the recognition accuracy for the neutral eye region was significantly higher than that for the fearful eye region, whereas no significant differences in recognition accuracy were observed between the happy and neutral eye regions or between the happy and fearful eye regions. In the lag6 condition, there were no significant differences in recognition accuracy among the happy, neutral, and fearful eye regions. In previous AB studies that used non-facial stimuli such as architectural images or letters as T1 and emotional facial expressions as T2, a widely accepted view was that emotional facial expressions, particularly threat-related expressions such as anger and fear, can rapidly capture attention and gain privileged access to awareness, leading to reduced AB effects [38,40]. Similarly, in two of our recent studies, in which neutral house pictures or neutral eye regions overlaid with five arrow pictures were used as T1 and emotionally valenced eye regions as T2, we also found that the recognition accuracies for happy and fearful eye regions in the lag2 condition were significantly higher than that for neutral eye regions [23,24]. These observations indicate that the emotional saliency of the T2 stimulus weakened the interference effect of T1. The results of the present study, which are contrary to previous findings, suggest that the prioritized processing of emotional stimuli may not be entirely automatic and may be influenced by the availability of attentional resources and the congruency of processing channels for the two targets.

Previously, researchers systematically manipulated the perceptual load level of T1 stimuli and the emotional valence of T2 faces. They found that the detection advantage of fearful faces diminished or even disappeared under T1 high-load conditions [50,51], indicating that prioritized access of fearful faces to the limited-capacity cognitive system is not automatic and is sensitive to available attentional resources. Since the eye region is considered a diagnostic area for the recognition of fearful expressions [8,55], it has been shown that fearful eye regions can induce a threat detection advantage, similar to intact fearful faces [10,25]. In this case, the lower accuracy for fearful relative to neutral eye regions in the condition of limited attentional resources in this study might suggest an inhibited processing advantage for fearful expressions, supporting the view that the processing of threatening stimuli requires a certain amount of attentional resources [50]. Furthermore, this result provides an important update to our understanding of how we perceive and encode the social signals derived from the eye region [53]. At the same time, the perceptual similarities between two targets and between targets and distractors can influence the magnitude of AB. In general, the AB effect increases with higher perceptual similarity between stimuli [30,56]. Unlike prior studies that used houses and intact facial expressions as T1 and T2 stimuli, respectively [38,40,56], both T1 and T2 were eye-region stimuli in our study, resulting in higher perceptual similarity and shared processing channels. This setting may reduce the emotional significance of fearful eye regions and trigger a psychological refractory period [57], making it difficult for the perceptual representation of T2 to enter the central working memory consolidation stage successfully.

Furthermore, task switching between the two targets and a response bias toward reporting a neutral target are potential factors that could influence the AB effect. Many studies have found that task switching involves time-consuming visual system representation reconstruction processes, during which the representation of T2 is delayed and attenuated to an unrepresentative level, leading to AB [58,59]. Moreover, the extent of system reconfiguration and the cognitive resources consumed are closely linked to the relationship between the two targets. When two targets belong to the same category of stimuli, the cognitive system does not require reconfiguration. In contrast, when they belong to different categories, the cognitive system needs to expend cognitive resources to reconfigure itself for efficient processing of the second target stimulus [60]. Thus, although eye region-eye region targets have a higher degree of perceptual similarity, the sources and task demands for these two targets are different. More cognitive resources of the same processing channel may be consumed during task switching, resulting in minimal cognitive resources being allocated to the T2 eye region, especially under conditions of limited attentional resources. With respect to the influence of response bias, Anderson, using a variety of dual-target RSVP tasks, demonstrated that participants were more likely to guess that T2 was a neutral word, especially when T2 appeared within the AB period [33]. Similarly, our analysis of error types showed a pronounced response bias toward reporting a neutral expression. Considering our recent findings related to emotional eye region processing [23,24] and the absence of significant accuracy differences between neutral and happy conditions, it is possible that the neutral-oriented guessing strategy is not the main driver of our results. Since the present study cannot determine the exact contributions of these factors to the AB, future investigations should test whether the present findings can be replicated.

Two limitations of the present study need to be mentioned. First, considering the obvious individual differences in low-level physical features of the eye region, only two images for each kind of T2 stimuli were included. In this case, each picture was repeated 24 times in this study, which undoubtedly increased the familiarity of the stimuli and probably reduced the difficulty of the task. Second, it has been found that configural information in a face plays a crucial role in capturing attention and in emotion recognition [61,62]. Previous studies often investigated the preferential processing advantage of fearful expressions with intact face images [17,40,50], but we only used isolated eye regions as experimental stimuli. In comparison with intact fearful faces, the perception of isolated fearful eye regions is associated with reduced emotional saliency and inadequate configural encoding, which might hinder attentional capture and efficient recognition. Future studies with a larger number of intact facial expression stimuli are necessary to replicate the current findings and further illustrate the relationship between emotional expression processing and temporal attention.

5. Conclusions

In conclusion, this study combined a dual-target RSVP task with emotionally valenced eye regions and found that the fearful eye region induced an increased AB effect compared with the neutral eye region under the condition of limited attentional resources, providing novel evidence for the prioritized processing of emotionally salient stimuli. Importantly, the preferential processing of fearful faces is not entirely automatic and depends on the availability of attentional resources and the congruency of processing channels.

Author Contributions

S.L., W.H. and W.L. designed the study. B.H. and W.D. collected the data. S.L. and B.H. analyzed the data and wrote the manuscript. S.L. and B.H. contributed equally to this research. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (grant no. 32200852, 31970991), Liaoning Revitalization Talents Program (grant no. XLYC2007106), Liaoning Natural Science Foundation of China (grant no. 2023-BS-153, 2023-MS-252).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of Liaoning Normal University (LL2023052).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Carbon, C.-C. Wearing face masks strongly confuses counterparts in reading emotions. Front. Psychol. 2020, 11, 566886. [Google Scholar] [CrossRef]

- Grundmann, F.; Epstude, K.; Scheibe, S. Face masks reduce emotion-recognition accuracy and perceived closeness. PLoS ONE 2021, 16, e0249792. [Google Scholar] [CrossRef]

- Marini, M.; Ansani, A.; Paglieri, F.; Caruana, F.; Viola, M. The impact of facemasks on emotion recognition, trust attribution and re-identification. Sci. Rep. 2021, 11, 5577. [Google Scholar] [CrossRef]

- Liu, J.K.; Yang, J.J.; Huang, L.H.; Zhou, L.; Xie, J.X.; Hu, Z.H. Masked face is looking at me: Face mask increases the feeling of being looked at during the COVID-19 pandemic. Front. Neurosci. 2022, 16, 1056793. [Google Scholar] [CrossRef]

- Okazaki, S.; Yamanami, H.; Nakagawa, F.; Takuwa, N.; Kawabata Duncan, K.J. Mask wearing increases eye involvement during smiling: A facial EMG study. Sci. Rep. 2021, 11, 20370. [Google Scholar] [CrossRef]

- Guo, K.; Shaw, H. Face in profile view reduces perceived facial expression intensity: An eye-tracking study. Acta Psychol. 2015, 155, 19–28. [Google Scholar] [CrossRef]

- Li, S.X.; Li, P.; Wang, W.; He, W.Q.; Luo, W.B. The neural mechanisms of the processing of facial expressions based on cues from eye region. Adv. Psychol. Sci. 2017, 25, 221–229. [Google Scholar] [CrossRef]

- Calvo, M.G.; Fernández-Martín, A.; Nummenmaa, L. Facial expression recognition in peripheral versus central vision: Role of the eyes and the mouth. Psychol. Res. 2014, 78, 180–195. [Google Scholar] [CrossRef]

- Calvo, M.G.; Gutierrez-Garcia, A.; Avero, P.; Lundqvist, D. Attentional mechanisms in judging genuine and fake smiles: Eye-movement patterns. Emotion 2013, 13, 792–802. [Google Scholar] [CrossRef]

- Fox, E.; Damjanovic, L. The eyes are sufficient to produce a threat superiority effect. Emotion 2006, 6, 534–539. [Google Scholar] [CrossRef]

- Pavlova, M.A.; Sokolov, A.A. Reading language of the eyes. Neurosci. Biobehav. Rev. 2022, 140, 104755.

- Jack, R.E.; Garrod, O.G.B.; Yu, H.; Caldara, R.; Schyns, P.G. Facial expressions of emotion are not culturally universal. Proc. Natl. Acad. Sci. USA 2012, 109, 7241.

- Yuki, M.; Maddux, W.W.; Masuda, T. Are the windows to the soul the same in the East and West? Cultural differences in using the eyes and mouth as cues to recognize emotions in Japan and the United States. J. Exp. Soc. Psychol. 2007, 43, 303–311.

- Mangun, G.R. Neural mechanisms of visual selective attention. Psychophysiology 1995, 32, 4–18.

- Fox, E.; Russo, R.; Dutton, K. Attentional bias for threat: Evidence for delayed disengagement from emotional faces. Cogn. Emot. 2002, 16, 355–379.

- Mishra, M.V.; Ray, S.B.; Srinivasan, N. Effect of emotions on temporal attention. Prog. Brain Res. 2017, 236, 287–309.

- Pourtois, G.; Grandjean, D.; Sander, D.; Vuilleumier, P. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cereb. Cortex 2004, 14, 619–633.

- Schindler, S.; Bublatzky, F. Attention and emotion: An integrative review of emotional face processing as a function of attention. Cortex 2020, 130, 362–386.

- Calvo, M.G.; Lundqvist, D. Facial expressions of emotion (KDEF): Identification under different display-duration conditions. Behav. Res. Methods 2008, 40, 109–115.

- Frischen, A.; Eastwood, J.D.; Smilek, D. Visual search for faces with emotional expressions. Psychol. Bull. 2008, 134, 662–676.

- Carlson, J.M.; Mujica-Parodi, L.R. Facilitated attentional orienting and delayed disengagement to conscious and nonconscious fearful faces. J. Nonverbal Behav. 2015, 39, 69–77.

- Hardee, J.E.; Thompson, J.C.; Puce, A. The left amygdala knows fear: Laterality in the amygdala response to fearful eyes. Soc. Cogn. Affect. Neurosci. 2008, 3, 47–54.

- Li, S.X.; Ding, R.; Zhao, D.F.; Zhou, X.; Zhan, B.; Luo, W.B. Processing of emotions expressed through eye regions attenuates attentional blink. Int. J. Psychophysiol. 2022, 182, 1–11.

- Li, S.X.; Yang, L.; Hao, B.; He, W.Q.; Luo, W.B. Perceptual load-independent modulation of the facilitated processing of emotional eye regions. Int. J. Psychophysiol. 2023, 190, 8–19.

- Carlson, J.M.; Reinke, K.S. Attending to the fear in your eyes: Facilitated orienting and delayed disengagement. Cogn. Emot. 2014, 28, 1398–1406.

- Carlson, J.M.; Torrence, R.D.; Vander Hyde, M.R. Beware the eyes behind the mask: The capture and hold of selective attention by backward masked fearful eyes. Motiv. Emot. 2016, 40, 498–505.

- Feng, W.; Luo, W.; Liao, Y.; Wang, N.; Gan, T.; Luo, Y. Human brain responsivity to different intensities of masked fearful eye whites: An ERP study. Brain Res. 2009, 1286, 147–154.

- Whalen, P.J.; Kagan, J.; Cook, R.G.; Davis, F.C.; Kim, H.; Polis, S.; McLaren, D.G.; Somerville, L.H.; McLean, A.A.; Maxwell, J.S. Human amygdala responsivity to masked fearful eye whites. Science 2004, 306, 2061.

- Broadbent, D.E.; Broadbent, M.H. From detection to identification: Response to multiple targets in rapid serial visual presentation. Percept. Psychophys. 1987, 42, 105–113.

- Chun, M.M.; Potter, M.C. A two-stage model for multiple target detection in rapid serial visual presentation. J. Exp. Psychol. Hum. Percept. Perform. 1995, 21, 109–127.

- Dux, P.E.; Marois, R. The attentional blink: A review of data and theory. Atten. Percept. Psychophys. 2009, 71, 1683–1700.

- Shapiro, K.L.; Raymond, J.E.; Arnell, K.M. The attentional blink. Trends Cogn. Sci. 1997, 1, 291–296.

- Anderson, A.K. Affective influences on the attentional dynamics supporting awareness. J. Exp. Psychol. Gen. 2005, 134, 258–281.

- Arend, I.; Botella, J. Emotional stimuli reduce the attentional blink in sub-clinical anxious subjects. Psicothema 2002, 14, 209–214.

- Zhang, D.; He, W.; Wang, T.; Luo, W.; Zhu, X.; Gu, R.; Li, H.; Luo, Y.J. Three stages of emotional word processing: An ERP study with rapid serial visual presentation. Soc. Cogn. Affect. Neurosci. 2014, 9, 1897–1903.

- Zhu, C.; He, W.; Qi, Z.; Wang, L.; Song, D.; Zhan, L.; Yi, S.; Luo, Y.; Luo, W. The time course of emotional picture processing: An event-related potential study using a rapid serial visual presentation paradigm. Front. Psychol. 2015, 6, 954.

- de Oca, B.M.; Villa, M.; Cervantes, M.; Welbourne, T. Emotional modulation of the attentional blink by pleasant and unpleasant pictures. J. Gen. Psychol. 2012, 139, 289–314.

- de Jong, P.J.; Koster, E.H.W.; van Wees, R.; Martens, S. Emotional facial expressions and the attentional blink: Attenuated blink for angry and happy faces irrespective of social anxiety. Cogn. Emot. 2009, 23, 1640–1652.

- De Martino, B.; Kalisch, R.; Rees, G.; Dolan, R.J. Enhanced processing of threat stimuli under limited attentional resources. Cereb. Cortex 2009, 19, 127–133.

- Luo, W.; Feng, W.; He, W.; Wang, N.Y.; Luo, Y.J. Three stages of facial expression processing: ERP study with rapid serial visual presentation. NeuroImage 2010, 49, 1857–1867.

- Miyazawa, S.; Iwasaki, S. Do happy faces capture attention? The happiness superiority effect in attentional blink. Emotion 2010, 10, 712–716.

- Conty, L.; George, N.; Hietanen, J.K. Watching eyes effects: When others meet the self. Conscious. Cogn. 2016, 45, 184–197.

- Zivony, A.; Lamy, D. What processes are disrupted during the attentional blink? An integrative review of event-related potential research. Psychon. Bull. Rev. 2022, 29, 394–414.

- Ghorashi, S.M.S.; Zuvic, S.M.; Visser, T.A.W.; Di Lollo, V. Focal distraction: Spatial shifts of attentional focus are not required for contingent capture. J. Exp. Psychol. Hum. Percept. Perform. 2003, 29, 78–91.

- Maki, W.S.; Mebane, M.W. Attentional capture triggers an attentional blink. Psychon. Bull. Rev. 2006, 13, 125–131.

- Stein, T.; Zwickel, J.; Kitzmantel, M.; Ritter, J.; Schneider, W.X. Irrelevant words trigger an attentional blink. Exp. Psychol. 2010, 57, 301–307.

- Awh, E.; Serences, J.; Laurey, P.; Dhaliwal, H.; van der Jagt, T.; Dassonville, P. Evidence against a central bottleneck during the attentional blink: Multiple channels for configural and featural processing. Cogn. Psychol. 2004, 48, 95–126.

- Li, S.X.; Li, Y.W.; Liu, S.C.; He, W.Q.; Luo, W.B. Emotional valence in the eye region modulates the attentional blink in a task-dependent manner: Evidence from event-related potentials. Brain Sci. 2022, 12, 1665.

- Arnell, K.M.; Howe, A.E.; Joanisse, M.F.; Klein, R.M. Relationships between attentional blink magnitude, RSVP target accuracy, and performance on other cognitive tasks. Mem. Cogn. 2006, 34, 1472–1483.

- Stein, T.; Peelen, M.V.; Funk, J.; Seidl, K.N. The fearful-face advantage is modulated by task demands: Evidence from the attentional blink. Emotion 2010, 10, 136–140.

- Ye, R.; Yu, F.Q.; Jiang, Y.B.; Wang, K. The impact of perceptual load on emotional face processing in attentional blink paradigm. Acta Psychol. Sin. 2011, 43, 483–493.

- Gong, X.; Huang, Y.X.; Wang, Y.; Luo, Y.J. Revision of the Chinese facial affective picture system. Chin. Ment. Health J. 2011, 25, 40–46.

- Grossmann, T. The eyes as windows into other minds: An integrative perspective. Perspect. Psychol. Sci. J. Assoc. Psychol. Sci. 2017, 12, 107–121.

- Raymond, J.E.; Shapiro, K.L.; Arnell, K.M. Temporary suppression of visual processing in an RSVP task: An attentional blink? J. Exp. Psychol. Hum. Percept. Perform. 1992, 18, 849–860.

- Calvo, M.G.; Nummenmaa, L.; Avero, P. Recognition advantage of happy faces in extrafoveal vision: Featural and affective processing. Vis. Cogn. 2010, 18, 1274–1297.

- Müsch, K.; Engel, A.K.; Schneider, T.R. On the blink: The importance of target-distractor similarity in eliciting an attentional blink with faces. PLoS ONE 2012, 7, e41257.

- Wong, K.F.E. The relationship between attentional blink and psychological refractory period. J. Exp. Psychol. Hum. Percept. Perform. 2002, 28, 54–71.

- Kawahara, J.I.; Zuvic, S.M.; Enns, J.T.; Di Lollo, V. Task switching mediates the attentional blink even without backward masking. Percept. Psychophys. 2003, 65, 339–351.

- Visser, T.A.W.; Bischof, W.F.; Di Lollo, V. Attentional switching in spatial and nonspatial domains: Evidence from the attentional blink. Psychol. Bull. 1999, 125, 458–469.

- Pecchinenda, A.; Monachesi, B.; Laeng, B. Fearful expressions of rapidly presented hybrid-faces modulate the lag 1 sparing in the attentional blink. Acta Psychol. 2020, 209, 103124.

- Bombari, D.; Schmid, P.C.; Schmid Mast, M.; Birri, S.; Mast, F.W.; Lobmaier, J.S. Emotion recognition: The role of featural and configural face information. Q. J. Exp. Psychol. 2013, 66, 2426–2442.

- Song, J.; Liu, M.; Yao, S.; Yan, Y.; Ding, H.; Yan, T.; Zhao, L.; Xu, G. Classification of emotional expressions is affected by inversion: Behavioral and electrophysiological evidence. Front. Behav. Neurosci. 2017, 11, 21.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).