The Wisconsin Card Sorting Test: Split-Half Reliability Estimates for a Self-Administered Computerized Variant

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

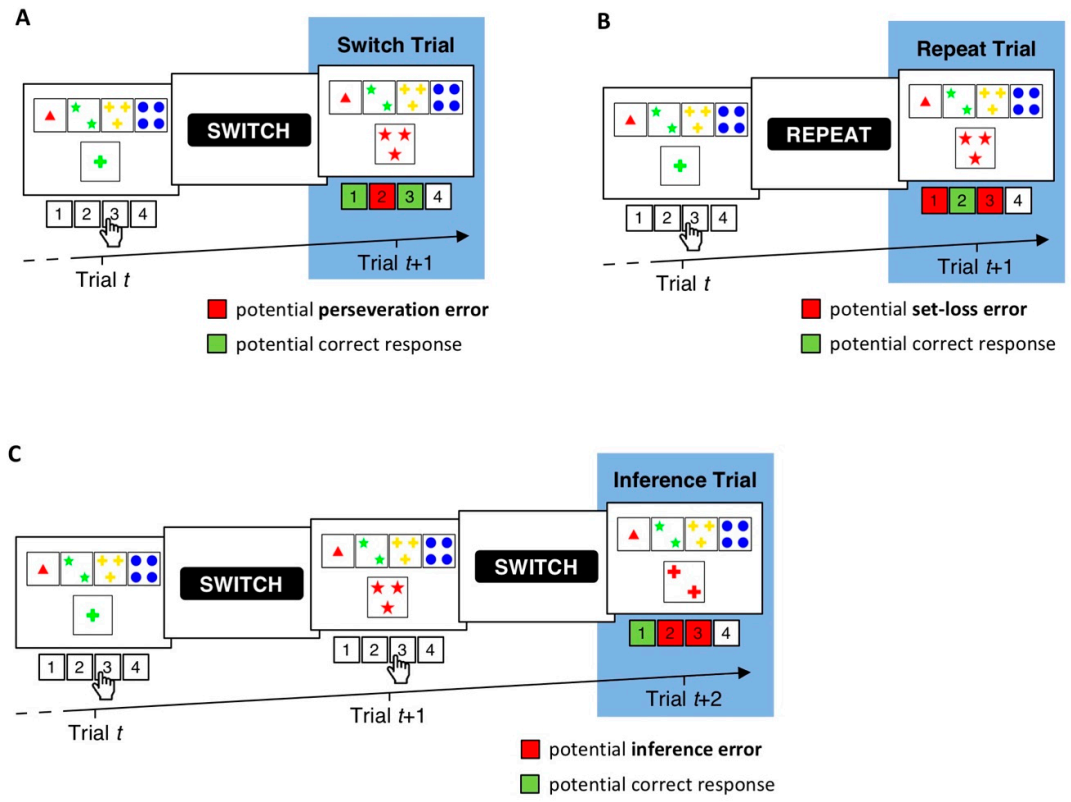

2.2. cWCST

2.3. Split-Half Reliability Estimation
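Split-half coefficients are conventionally stepped up to full test length with the Spearman-Brown formula. Assuming that convention applies to the estimates reported here (this section does not spell it out), and writing r_hh for the Pearson correlation between participants' scores on the two test halves, the corrected full-length reliability would be:

```latex
r_{SB} = \frac{2\,r_{hh}}{1 + r_{hh}}
```

For example, a half-test correlation of r_hh = 0.80 yields r_SB = 1.60 / 1.80 ≈ 0.889.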

3. Results

3.1. Descriptive Statistics

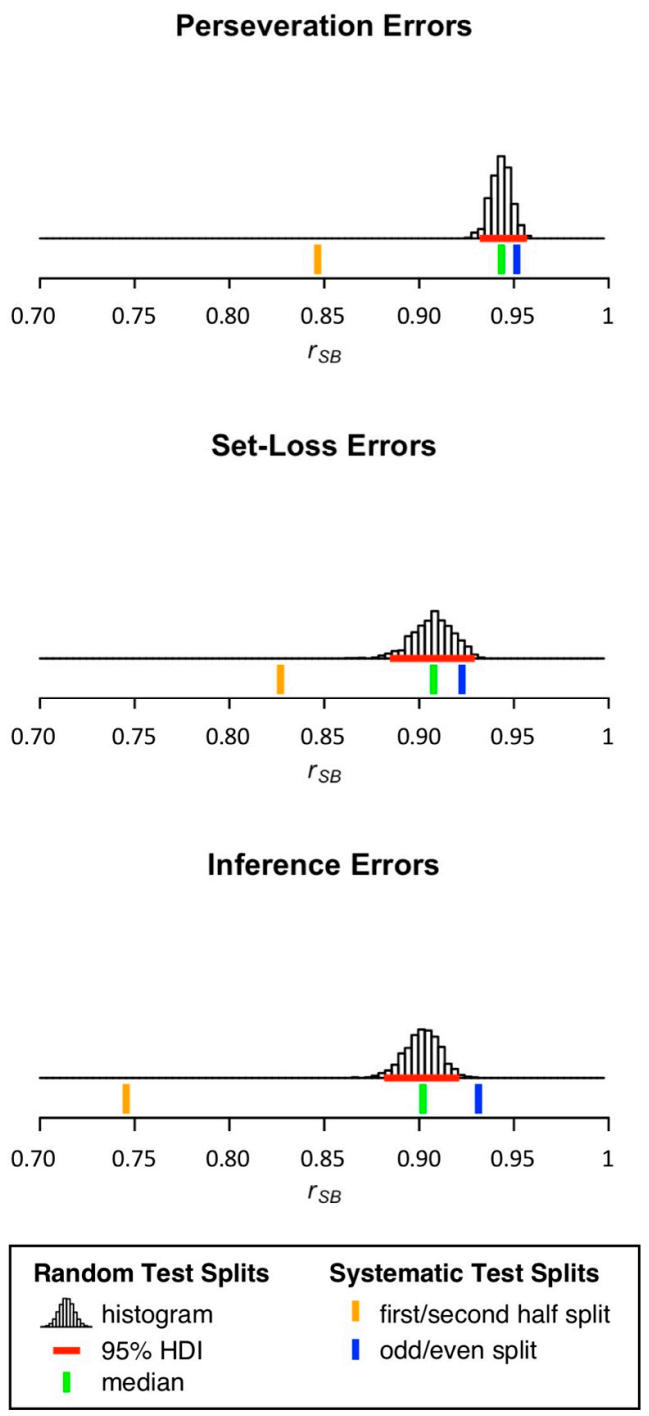

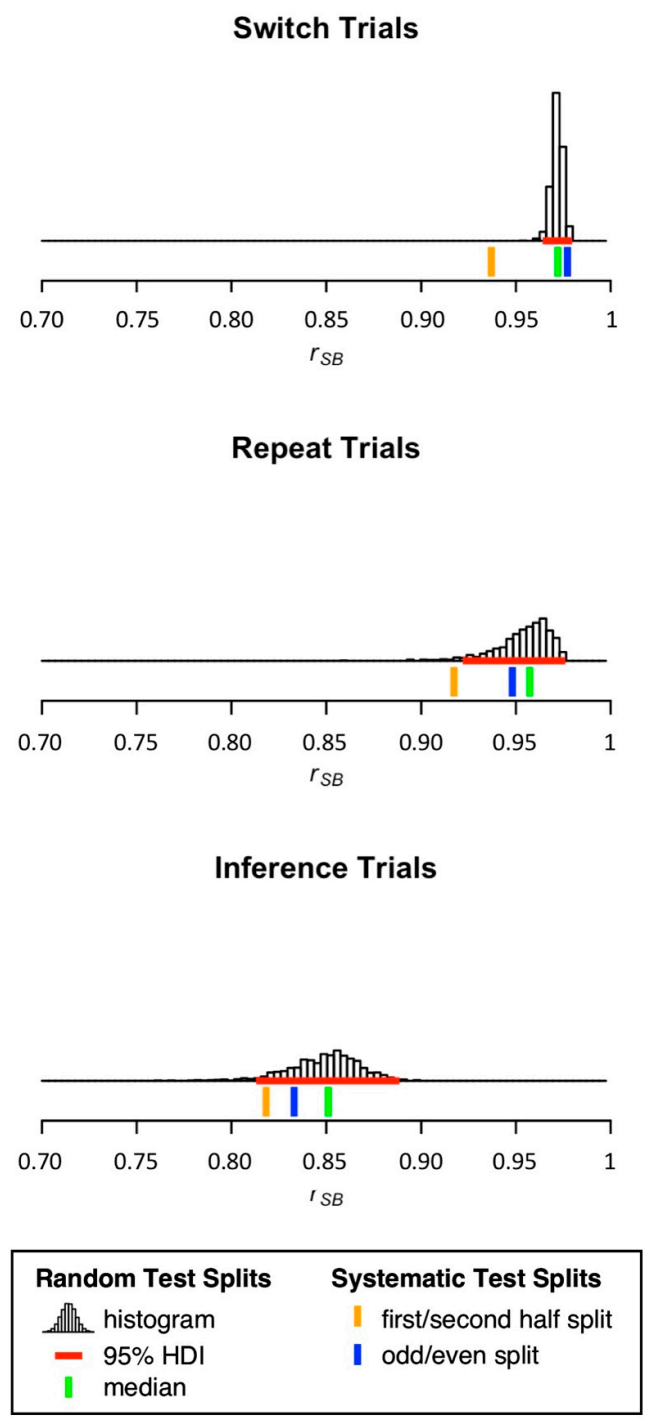

3.2. Split-Half Reliability Estimation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Number of committed errors (mean, SD) and split-half reliability estimates by error type (HDI = highest density interval).

| Error Type | Errors: Mean | Errors: SD | First/Second Half Split | Odd/Even Split | Random Test Splits: Median | Random Test Splits: 95% HDI Lower | Random Test Splits: 95% HDI Upper |
|---|---|---|---|---|---|---|---|
| Perseveration Errors | 12.16 | 13.65 | 0.8465 | 0.9515 | 0.9434 | 0.9318 | 0.9534 |
| Set-Loss Errors | 5.03 | 7.07 | 0.8269 | 0.9226 | 0.9076 | 0.8843 | 0.9259 |
| Inference Errors | 8.46 | 8.82 | 0.7455 | 0.9313 | 0.9020 | 0.8819 | 0.9185 |
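The three split types reported for the error counts can be illustrated with a minimal Python sketch. It assumes (hypothetically) that each participant's responses are coded as a 0/1 indicator per trial for one error type, that all participants completed the same number of trials, and that the two half-scores are error counts correlated across participants and then corrected with the Spearman-Brown formula. The function names, the synthetic data, and the number of random splits are illustrative choices, not the authors' procedure.

```python
import random
import statistics


def pearson(x, y):
    """Pearson correlation between two equally long score lists."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman_brown(r_half):
    """Step a half-test correlation up to full test length."""
    return 2 * r_half / (1 + r_half)


def split_half(errors, half_a):
    """Spearman-Brown corrected reliability of per-half error counts.

    errors[p][t] is 1 if participant p committed the error type on trial t
    (hypothetical coding); half_a holds the trial indices of one half and
    the remaining trials form the other half.
    """
    in_a = set(half_a)
    half_b = [t for t in range(len(errors[0])) if t not in in_a]
    score_a = [sum(row[t] for t in half_a) for row in errors]
    score_b = [sum(row[t] for t in half_b) for row in errors]
    return spearman_brown(pearson(score_a, score_b))


def first_second(errors):
    """First half of the trial sequence versus the second half."""
    n = len(errors[0])
    return split_half(errors, range(n // 2))


def odd_even(errors):
    """Odd-numbered trials (1st, 3rd, ...) versus even-numbered trials."""
    return split_half(errors, range(0, len(errors[0]), 2))


def random_splits(errors, n_splits=1000, seed=0):
    """Coefficients from many random, equally sized trial partitions."""
    n = len(errors[0])
    rng = random.Random(seed)
    return [split_half(errors, rng.sample(range(n), n // 2))
            for _ in range(n_splits)]


# Synthetic data (hypothetical, not the study's data): 200 participants,
# 100 trials, each participant with an individual error rate.
rng = random.Random(42)
data = []
for _ in range(200):
    p = rng.uniform(0.02, 0.4)
    data.append([1 if rng.random() < p else 0 for _ in range(100)])

coeffs = random_splits(data, n_splits=200)
print(f"first/second: {first_second(data):.3f}")
print(f"odd/even:     {odd_even(data):.3f}")
print(f"random (median of {len(coeffs)}): {statistics.median(coeffs):.3f}")
```

Averaging over many arbitrary partitions makes the random-split estimate less dependent on any single way of halving the test, whereas a first/second split can be affected by practice or fatigue effects that differ between the halves.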

Descriptive statistics (number of valid trials and response time, mean and SD) and split-half reliability estimates by trial type (HDI = highest density interval).

| Trial Type | Valid Trials: Mean | Valid Trials: SD | Response Time (ms): Mean | Response Time (ms): SD | First/Second Half Split | Odd/Even Split | Random Test Splits: Median | Random Test Splits: 95% HDI Lower | Random Test Splits: 95% HDI Upper |
|---|---|---|---|---|---|---|---|---|---|
| Switch Trial | 68.76 | 8.94 | 1835 | 694 | 0.9370 | 0.9772 | 0.9721 | 0.9653 | 0.9772 |
| Repeat Trial | 77.41 | 13.18 | 1241 | 441 | 0.9173 | 0.9481 | 0.9573 | 0.9190 | 0.9731 |
| Inference Trial | 18.98 | 3.74 | 1700 | 636 | 0.8183 | 0.8330 | 0.8510 | 0.8059 | 0.8802 |
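The random-split columns summarize a distribution of coefficients by its median and a 95% highest density interval (HDI). One common way to obtain an HDI from samples is the shortest interval that contains 95% of them; the sketch below assumes that definition, which may differ from the authors' exact computation, and uses synthetic coefficients rather than study data.

```python
import math
import random
import statistics


def hdi(samples, mass=0.95):
    """Empirical highest density interval: the narrowest interval
    that contains at least `mass` of the sorted samples."""
    xs = sorted(samples)
    n = len(xs)
    # Number of samples the interval must cover; rounding guards
    # against floating-point noise in mass * n before taking the ceiling.
    k = max(1, math.ceil(round(mass * n, 9)))
    # Slide a window of k consecutive sorted samples and keep the narrowest.
    start = min(range(n - k + 1), key=lambda i: xs[i + k - 1] - xs[i])
    return xs[start], xs[start + k - 1]


# Synthetic, left-skewed coefficients bunched below 1 (hypothetical data):
rng = random.Random(1)
coeffs = [1 - abs(rng.gauss(0, 0.03)) for _ in range(1000)]
lo, hi = hdi(coeffs)
print(f"median={statistics.median(coeffs):.4f}, 95% HDI=[{lo:.4f}, {hi:.4f}]")
```

Unlike an equal-tailed percentile interval, the shortest interval shifts toward the dense end of a skewed distribution, which matters for reliability coefficients that pile up near 1.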

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Steinke, A.; Kopp, B.; Lange, F. The Wisconsin Card Sorting Test: Split-Half Reliability Estimates for a Self-Administered Computerized Variant. Brain Sci. 2021, 11, 529. https://doi.org/10.3390/brainsci11050529
