Differentiating Real-World Autobiographical Experiences without Recourse to Behaviour

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

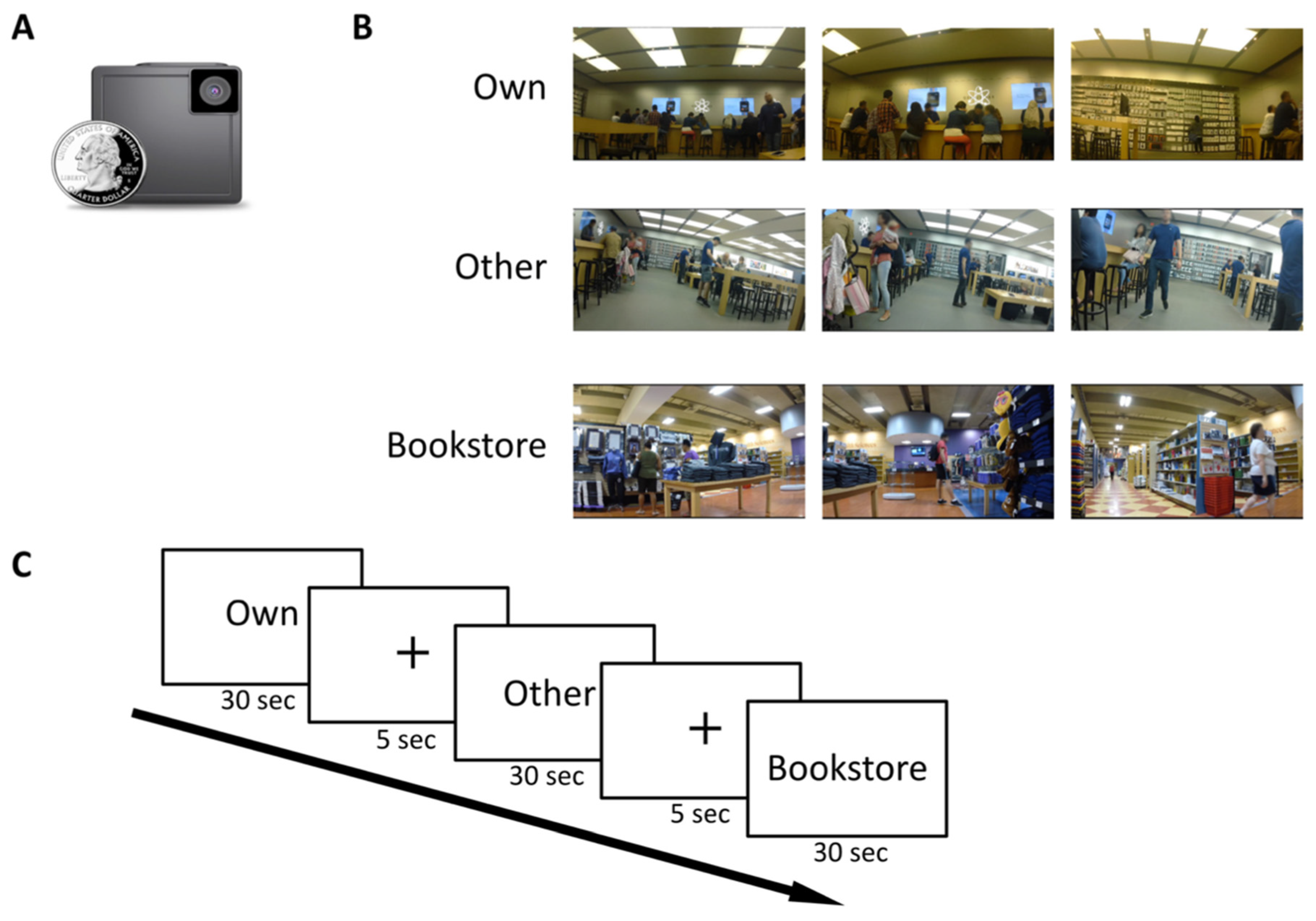

2.2. Procedure and Design

2.3. Mall-Visit Phase

2.4. fMRI Scan Phase

2.5. fMRI Data Acquisition

2.6. fMRI Data Preprocessing and Analysis

2.7. Feature Selection and Classification

2.7.1. Leave One Participant Out Cross-Validation (LOPOCV)
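A minimal sketch of generic leave-one-participant-out splitting follows. It is not the authors' pipeline; the classifier and feature-selection details of this study are not reproduced here. Each participant is held out once as the test set, and the model is trained on all remaining participants. The helper name `lopo_splits` and the data layout (one participant label per sample) are hypothetical.

```python
def lopo_splits(participant_ids):
    """Yield (held_out, train_idx, test_idx) for leave-one-participant-out CV.

    participant_ids: sequence giving the participant label of each sample
    (hypothetical layout: one entry per trial or activation pattern).
    """
    # dict.fromkeys keeps first-appearance order, so folds are deterministic.
    for held_out in dict.fromkeys(participant_ids):
        # Every sample from the held-out participant goes to the test set.
        test_idx = [i for i, p in enumerate(participant_ids) if p == held_out]
        # All samples from the other participants form the training set.
        train_idx = [i for i, p in enumerate(participant_ids) if p != held_out]
        yield held_out, train_idx, test_idx


# Usage: five samples from three participants give three folds.
for held_out, train_idx, test_idx in lopo_splits(["s1", "s1", "s2", "s2", "s3"]):
    print(held_out, train_idx, test_idx)
```

Because no test participant ever contributes training data, above-chance accuracy under this scheme suggests that the discriminating pattern generalizes across individuals. scikit-learn's `LeaveOneGroupOut` produces the same kind of split when participant labels are passed as `groups`.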

2.7.2. Within-Participant Cross-Validation (WPCV)
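Within-participant cross-validation differs in that training and testing never mix participants: a separate model is evaluated inside each participant's own data. The sketch below assumes, as a hypothetical choice not stated in this section, that folds are defined by scanning run (leave-one-run-out within each participant). The helper name `within_participant_run_splits` and its arguments are illustrative only.

```python
def within_participant_run_splits(participant_ids, run_ids):
    """Yield (participant, held_out_run, train_idx, test_idx).

    Assumed scheme: for each participant separately, each of that
    participant's runs is held out once, and the remaining runs of the
    SAME participant form the training set.
    """
    for participant in dict.fromkeys(participant_ids):
        # Restrict to this participant's samples only.
        own = [i for i, p in enumerate(participant_ids) if p == participant]
        # Runs in first-appearance order for this participant.
        for held_out_run in dict.fromkeys(run_ids[i] for i in own):
            test_idx = [i for i in own if run_ids[i] == held_out_run]
            train_idx = [i for i in own if run_ids[i] != held_out_run]
            yield participant, held_out_run, train_idx, test_idx


# Usage: participant "a" has two runs, participant "b" has two runs.
pids = ["a", "a", "a", "b", "b"]
runs = [1, 2, 2, 1, 2]
for fold in within_participant_run_splits(pids, runs):
    print(fold)
```

Holding out whole runs, rather than individual samples, is a common precaution against temporally adjacent fMRI samples leaking between training and test sets.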

3. Results

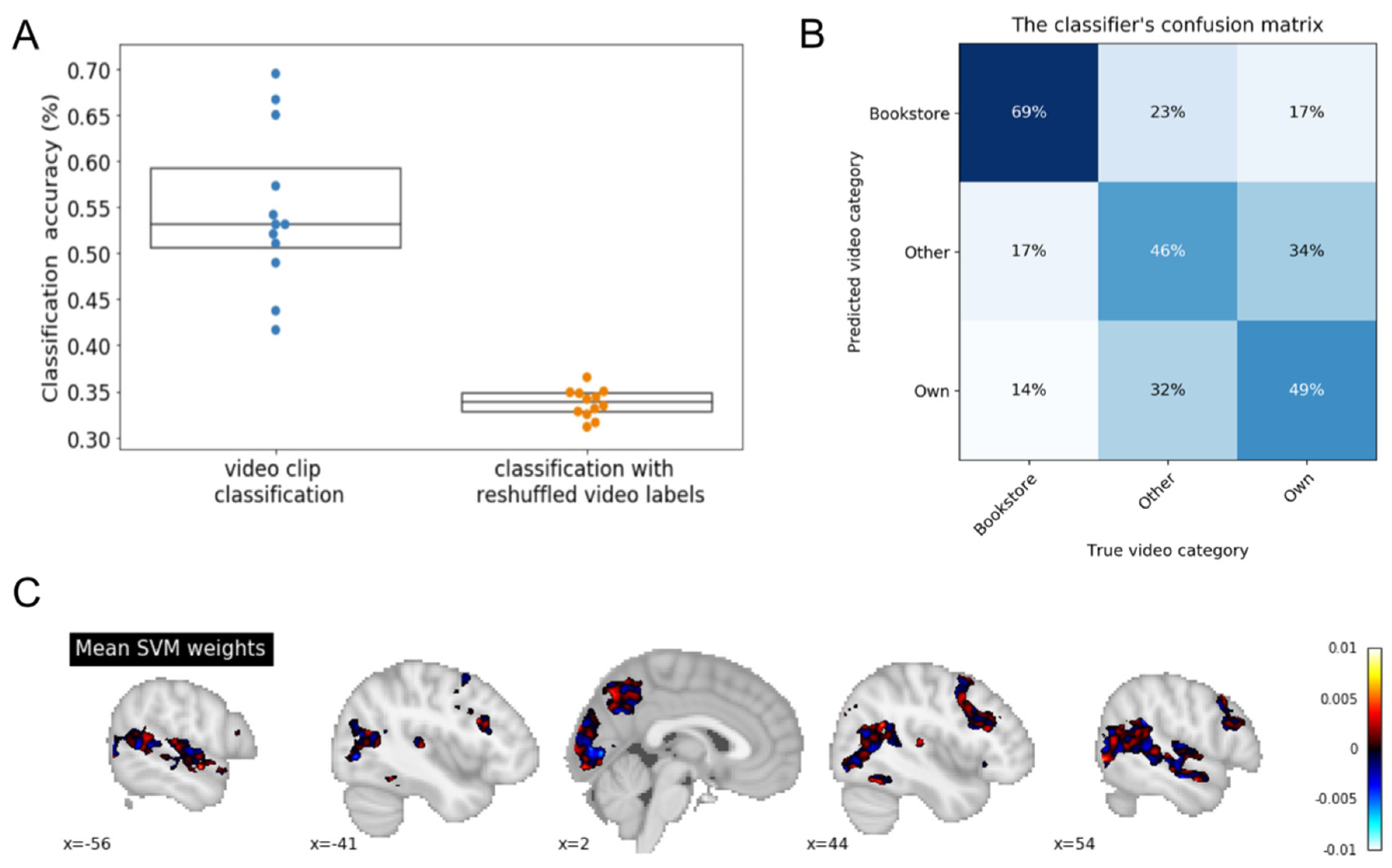

3.1. Leave One Participant Out Cross-Validation (LOPOCV)

3.2. LOPOCV—Last Three Runs (Repeated Presentation of Each Video)

3.3. LOPOCV—Own vs. Other Videos Only

3.4. An Analysis Restricted to Voxels from a Brain Mask Derived from a Meta-Analysis of Many Autobiographical Studies

3.5. Within-Participant Cross-Validation (WPCV)

3.6. WPCV—Last Three Runs (Repeated Presentation of Each Video)

3.7. WPCV—Own vs. Other Videos Only

3.8. An Analysis Restricted to Voxels from a Brain Mask Derived from a Meta-Analysis of Several Autobiographical Studies

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Erez, J.; Gagnon, M.-E.; Owen, A.M. Differentiating Real-World Autobiographical Experiences without Recourse to Behaviour. Brain Sci. 2021, 11, 521. https://doi.org/10.3390/brainsci11040521

Erez J, Gagnon M-E, Owen AM. Differentiating Real-World Autobiographical Experiences without Recourse to Behaviour. Brain Sciences. 2021; 11(4):521. https://doi.org/10.3390/brainsci11040521

Chicago/Turabian Style: Erez, Jonathan, Marie-Eve Gagnon, and Adrian M. Owen. 2021. "Differentiating Real-World Autobiographical Experiences without Recourse to Behaviour" Brain Sciences 11, no. 4: 521. https://doi.org/10.3390/brainsci11040521

APA Style: Erez, J., Gagnon, M.-E., & Owen, A. M. (2021). Differentiating Real-World Autobiographical Experiences without Recourse to Behaviour. Brain Sciences, 11(4), 521. https://doi.org/10.3390/brainsci11040521