Exploring EEG Characteristics to Identify Emotional Reactions under Videogame Scenarios

Abstract

1. Introduction

- The EEG traits that correlated with the emotional self-assessment responses after playing a videogame level, analyzed individually for every participant.

- The performance of machine learning regression methods in predicting emotional self-assessment responses.

- The relation between the number of game events in the different game levels and the participants’ arousal/valence responses.

- The characteristics of the EEG signal in the presence of in-game time events related to emotional reactions.

- The possibility of classifying those game events using only EEG traits, in order to assess emotional reactions within a gameplay time window.

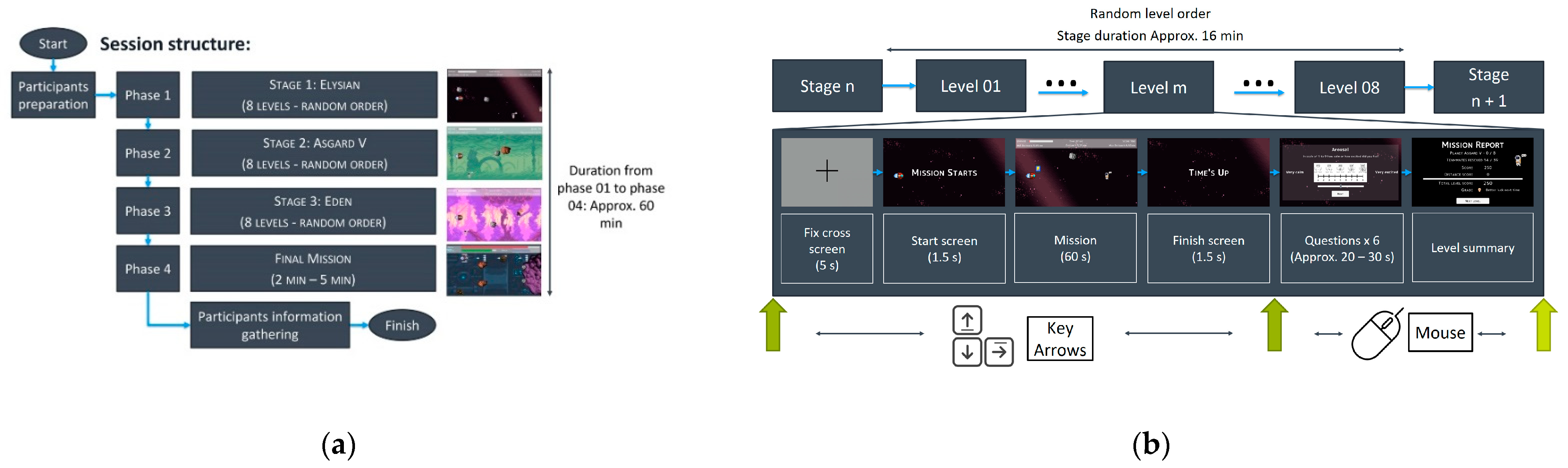

2. Materials and Methods

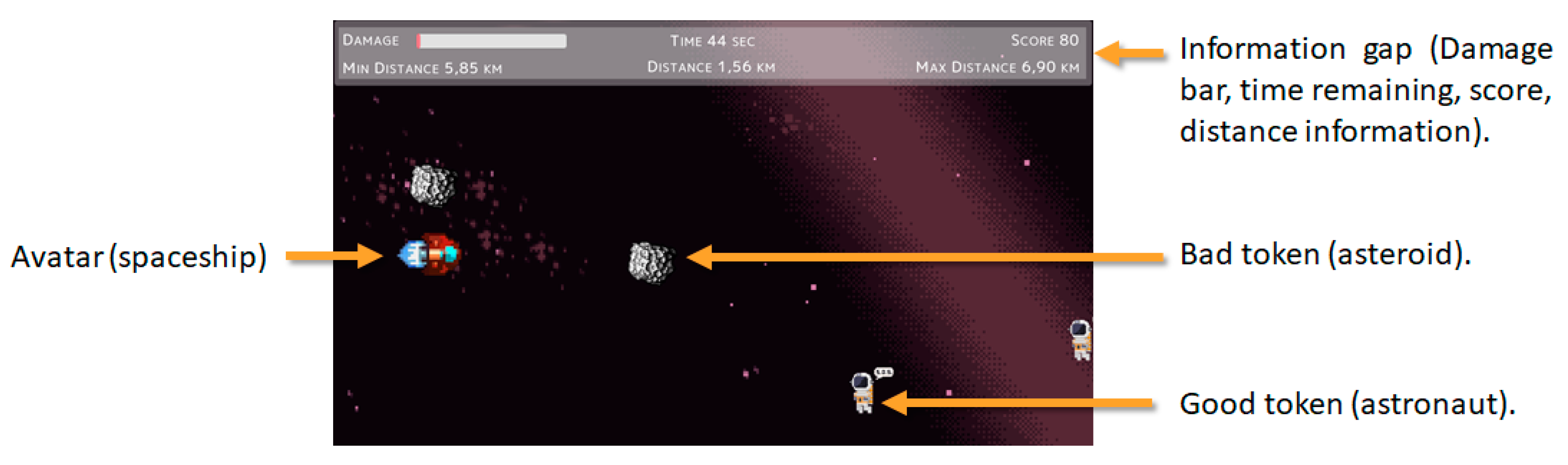

2.1. Emotional Stimuli Tool

2.2. Types of Acquired Information

2.2.1. Emotional Questionnaires

2.2.2. Game Events

2.2.3. Electroencephalography

Signal Preprocessing

Signal Traits

- Statistical (time-domain) features: statistical parameters of the physiological signal time series, computed over a relatively long time window.

- Frequency-domain features: features derived from the frequency spectrum, in frequency bands related to the signal activation produced by a specific stimulus.
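The time-domain traits named in the feature table (mean, standard deviation, max/min ratio, skewness, kurtosis and the Hjorth parameters) can be sketched for a single EEG channel as below. This is a minimal illustration assuming NumPy/SciPy and a 1-D window of samples; the helper names are hypothetical, derivatives are approximated by finite differences, and it is not the authors’ implementation.

```python
import numpy as np
from scipy.stats import kurtosis, skew


def hjorth_parameters(x):
    """Hjorth activity, mobility and complexity of a 1-D signal window."""
    dx = np.diff(x)    # first derivative approximated by finite differences
    ddx = np.diff(dx)  # second derivative
    activity = np.var(x)                                        # signal power
    mobility = np.sqrt(np.var(dx) / activity)                   # mean-frequency proxy
    complexity = np.sqrt(np.var(ddx) / np.var(dx)) / mobility   # ~1 for a pure sine
    return float(activity), float(mobility), float(complexity)


def time_domain_features(x):
    """Statistical features of one EEG channel (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    activity, mobility, complexity = hjorth_parameters(x)
    return {
        "mean": float(np.mean(x)),
        "std": float(np.std(x)),
        # Literal max/min ratio; its sign depends on the signal's polarity.
        "max_min_ratio": float(np.max(x) / np.min(x)),
        "skewness": float(skew(x)),
        # Fisher (excess) kurtosis: 0 for a normal distribution.
        "kurtosis": float(kurtosis(x)),
        "hjorth_activity": activity,
        "hjorth_mobility": mobility,
        "hjorth_complexity": complexity,
    }


if __name__ == "__main__":
    t = np.arange(1024) / 512        # 2 s at an assumed 512 Hz sampling rate
    x = np.sin(2 * np.pi * 10 * t)   # synthetic 10 Hz test signal
    print(time_domain_features(x))
```

A pure sine is a convenient sanity check: its Hjorth complexity is close to 1 and its excess kurtosis is -1.5.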

2.2.4. Participants

3. Results

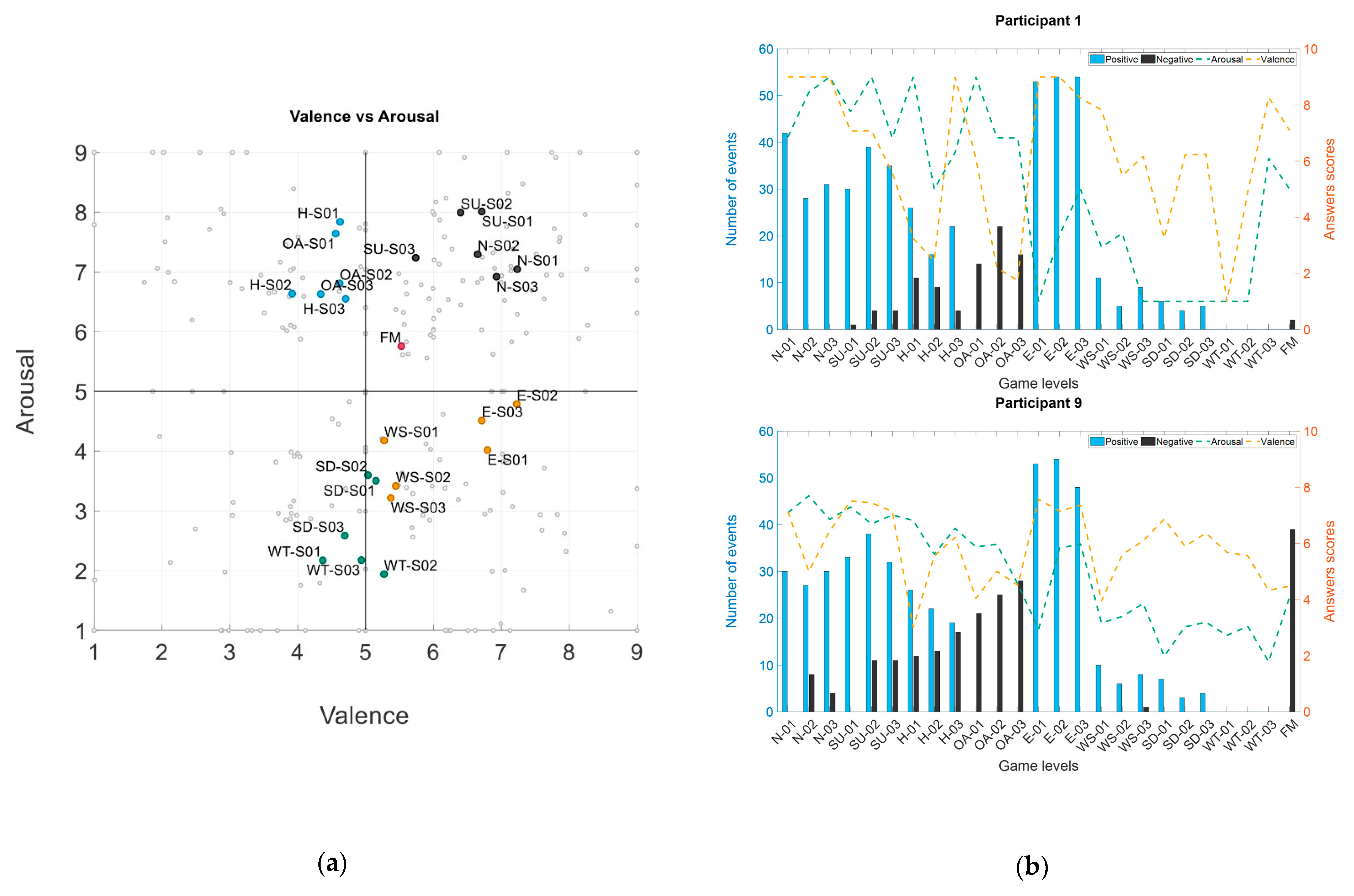

3.1. Self-Assessment Responses and Game Events

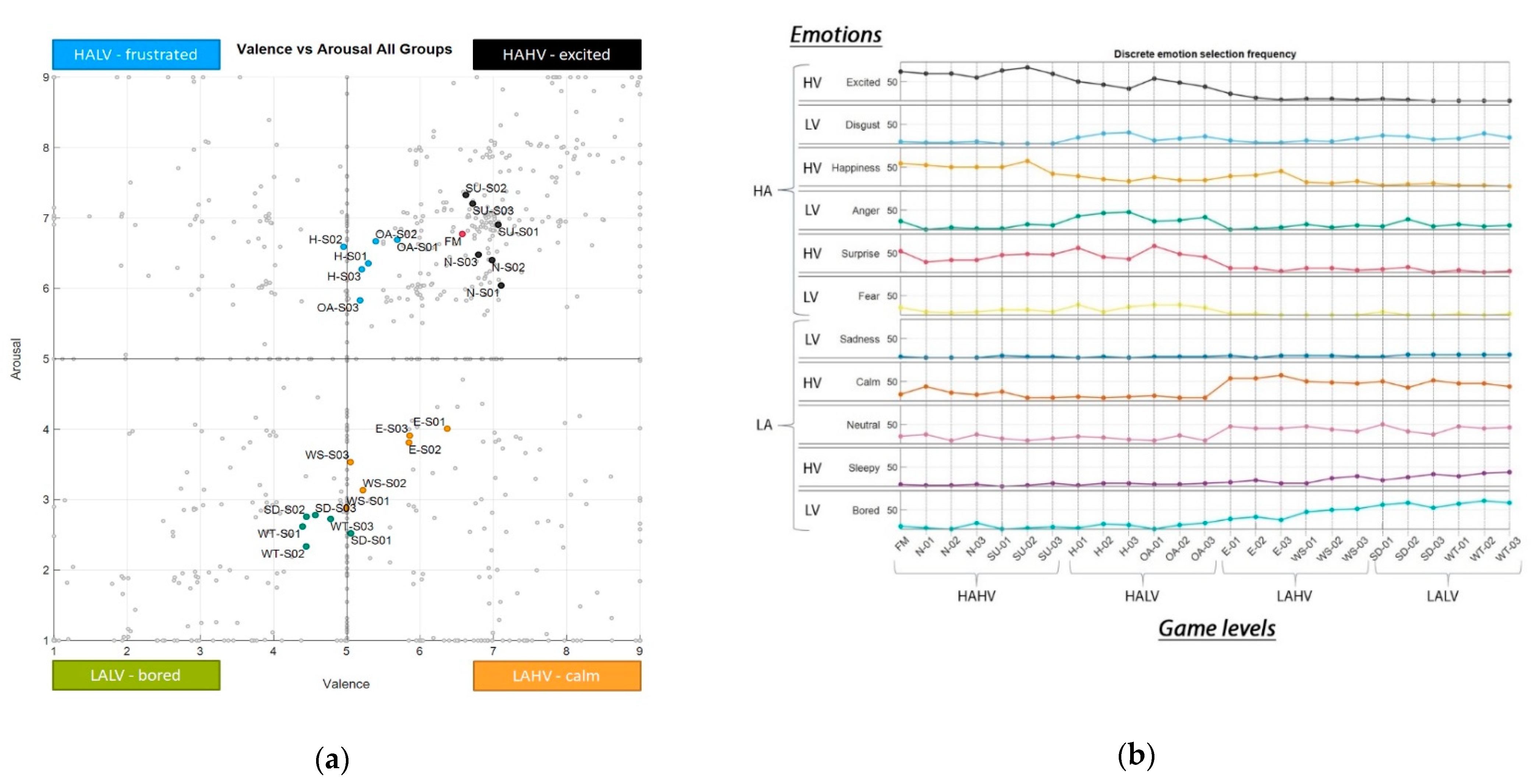

3.1.1. Arousal–Valence Dispersion

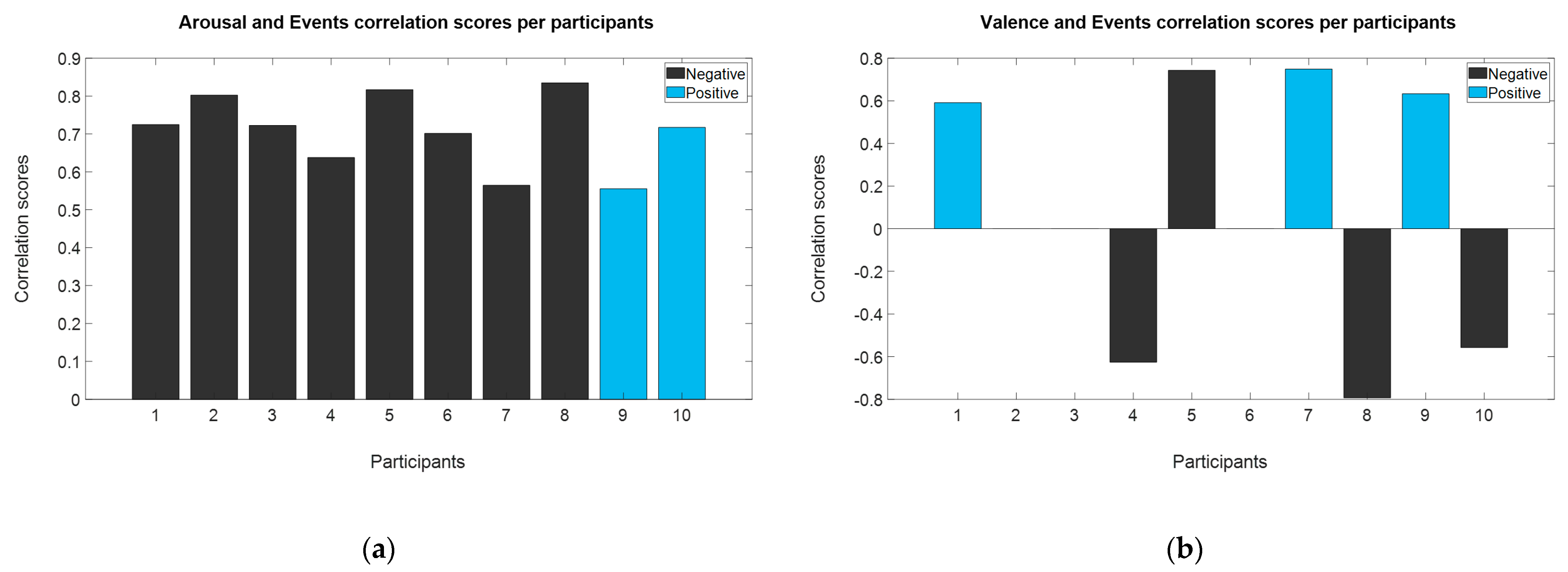

3.1.2. Game Events

3.2. Analysis of Self-Assessment Responses and EEG Traits

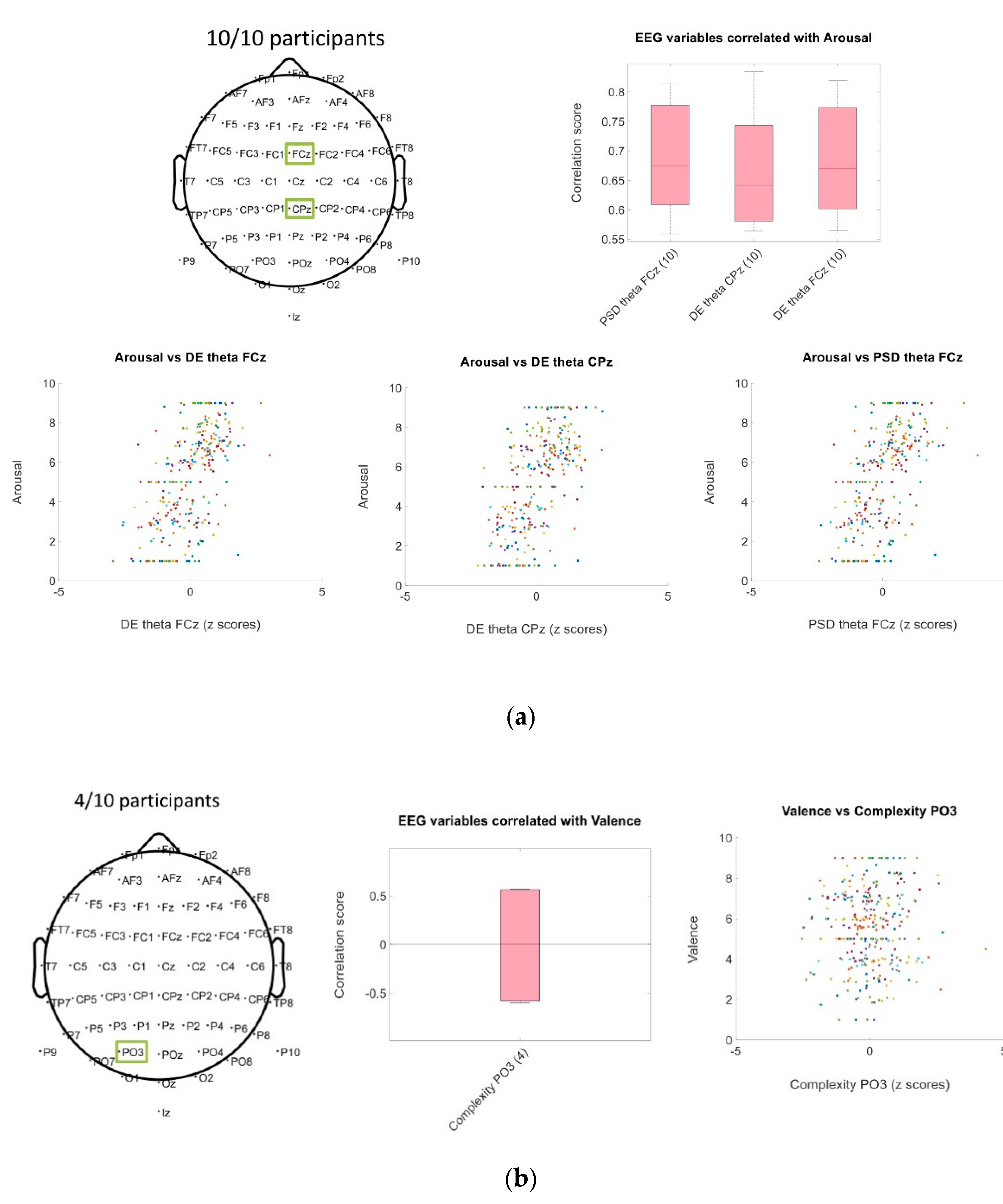

3.2.1. Spearman’s Correlation of EEG Traits with Arousal and Valence Scores

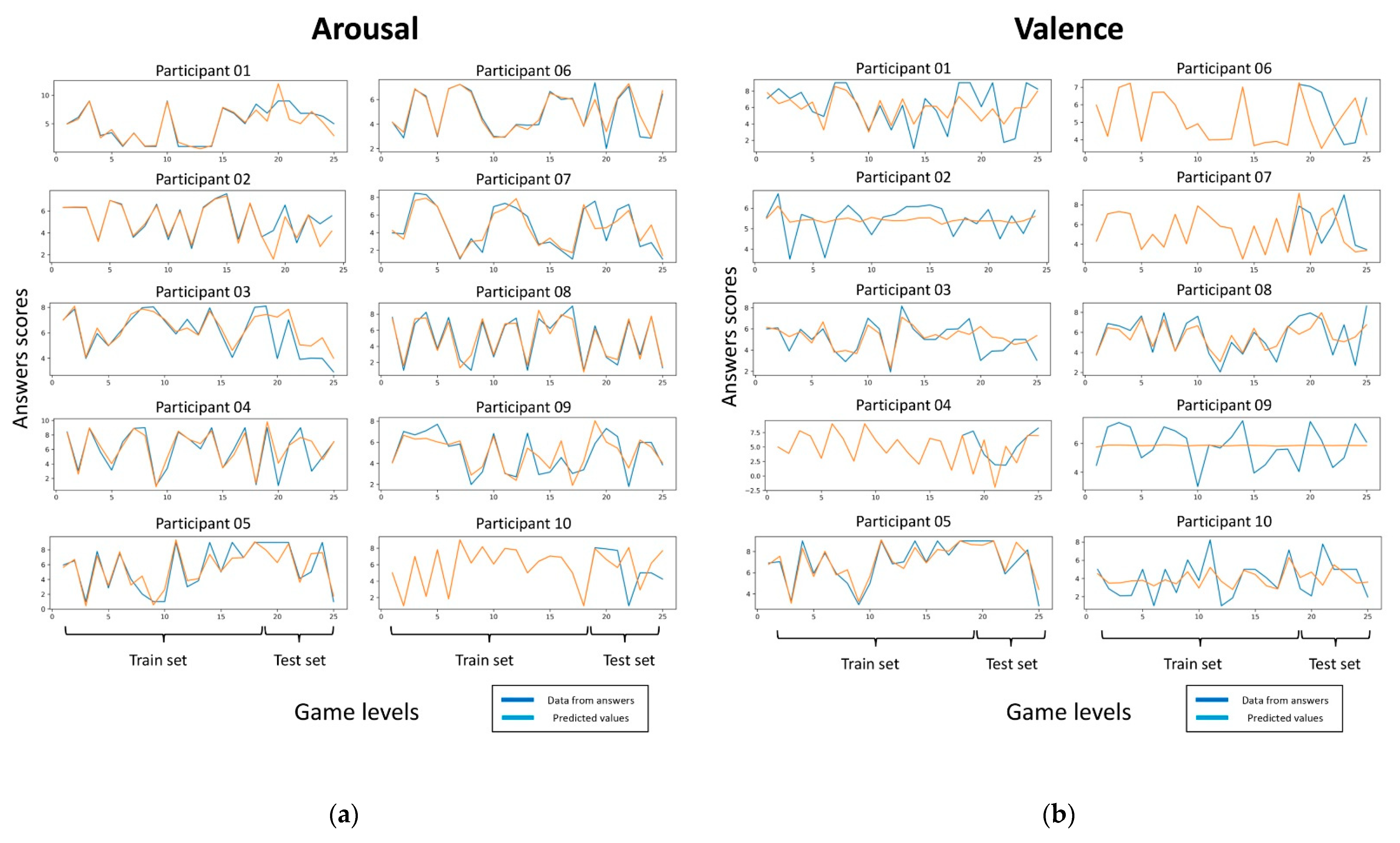

3.2.2. Arousal and Valence Prediction Using Bayesian Ridge Regression Model
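A minimal sketch of predicting arousal or valence scores from EEG traits with scikit-learn’s `BayesianRidge` is shown below. The standardization step, the 5-fold out-of-fold scheme and the clipping of predictions to a 1–9 SAM scale are assumptions for illustration, and `predict_sam_scores` is a hypothetical helper, not the authors’ pipeline.

```python
import numpy as np
from sklearn.linear_model import BayesianRidge
from sklearn.model_selection import cross_val_predict
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def predict_sam_scores(features, scores, n_splits=5):
    """Out-of-fold predictions of SAM scores from EEG traits (hypothetical helper).

    features: (n_samples, n_traits) array of EEG traits.
    scores:   (n_samples,) arousal or valence ratings.
    """
    # Standardize traits, then fit a Bayesian ridge regressor in each fold.
    model = make_pipeline(StandardScaler(), BayesianRidge())
    predictions = cross_val_predict(model, features, scores, cv=n_splits)
    # Assumption: ratings use a 1-9 SAM scale, so predictions are clipped to it.
    return np.clip(predictions, 1, 9)
```

Out-of-fold predictions keep every prediction on data the model did not see during fitting, which gives a less optimistic estimate than scoring on the training set.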

3.3. Analysis of Time-Related Events and EEG Traits

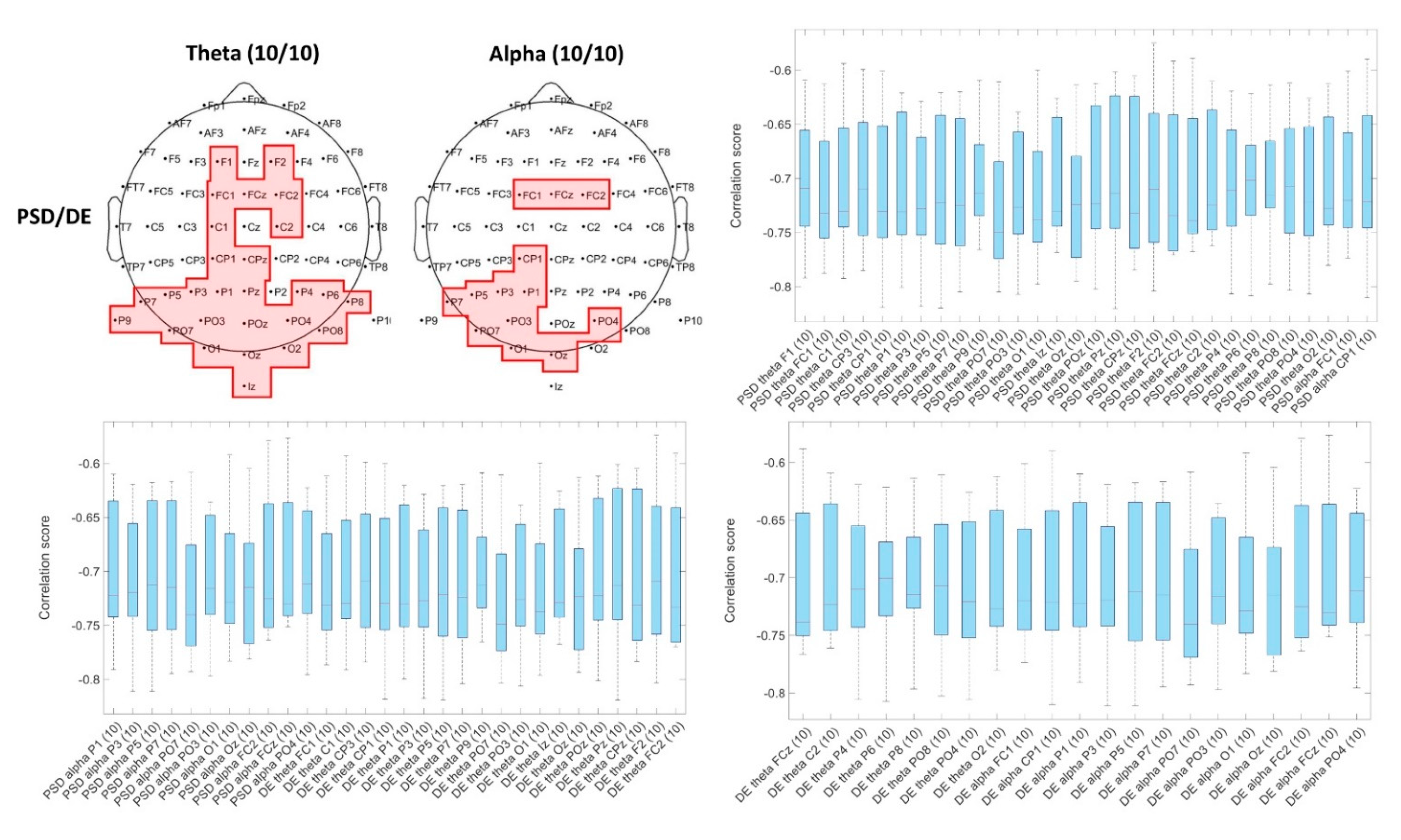

3.3.1. Spearman’s Correlation of EEG Traits with Game Events

3.3.2. Game Event Classification Using Ensemble Methods

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Selection of Target Emotions

Appendix A.2. Videogame Frameworks

| Level | Emotions | Ships Characteristics | Tokens Characteristics | Tokens Stage 1 | Tokens Stage 2 | Tokens Stage 3 |
|---|---|---|---|---|---|---|
| Normal | HAHV Excitement | Normal Speed; Normal Controls; Accelerate Option | Normal Speed | Astronauts; Asteroids | Astronauts; Asteroids; Special Asteroid | Astronauts; Asteroids; Enemies |
| Speed Up | HAHV Excitement | Normal Speed; Normal Controls; Accelerate Option | Speed Gradually Increases | Astronauts; Asteroids | Astronauts; Asteroids; Special Asteroid | Astronauts; Asteroids; Enemies |
| Hard | HALV Frustration | Speed Gradually Decreases; Inverted Controls every 10 s; Decelerate Option | Normal Speed; Larger Size of Asteroids; Smaller Astronaut’s Collision Region; If Collision, the Size of the Negative Tokens Increases | Astronauts; Big Asteroids; Asteroids | Astronauts; Big Asteroids; Special Asteroid | Astronauts; Big Asteroids; Enemies |
| Only Asteroids | HALV Frustration | Normal Speed; Normal Controls; FOV Gradually Decreases; Accelerate Option | Speed Gradually Increases; Respawn Time Gradually Decreases; No Good Tokens; Inclusion of Larger Sized Asteroid; If Collision, the Size of FOV Decreases | Big Asteroids; Asteroids | Big Asteroids; Asteroids; Special Asteroid | Big Asteroids; Asteroids; Enemies |
| Easy | LAHV Calm | Normal Speed; Normal Controls; Accelerate Option | Decrease Speed *; No Negative Tokens | Astronauts; Small Coins; Big Coins | Astronauts; Small Coins; Big Coins | Astronauts; Small Coins; Big Coins |
| Without Speed | LAHV Calm | Normal Speed; Normal Controls; No Accelerate Option | Decrease Speed * | Astronauts; Asteroids | Astronauts; Asteroids; Special Asteroid | Astronauts; Asteroids; Enemies |
| Speed Down | LALV Bored | Higher Decrease Speed; Normal Controls; Accelerate Option | Higher Decrease Speed *; Spawn from the Middle of the Screen | Astronauts; Asteroids | Astronauts; Asteroids; Special Asteroid | Astronauts; Asteroids; Enemies |
| Without Tokens | LALV Bored | Higher Decrease Speed; Normal Controls; Accelerate Option | None | None | None | None |
| Final Mission | – | Speed Depends on Power Ups; Normal Movement Controls; Shooting Option | Normal Speed; Different Trajectories; Respawn in Pair | Asteroids; Special Asteroids; Enemy Type 01; Enemy Type 02 | | |

Appendix A.3. Final Mission

Appendix A.4. Videogame Evaluation

Appendix A.5. Arousal–Valence Dispersion

Appendix A.6. Discrete Emotion Selection

Appendix A.7. Repeated-Measures ANOVA between Level Stages of Each Group

References

1. Al-Nafjan, A.; Hosny, M.; Al-Ohali, Y.; Al-Wabil, A. Review and Classification of Emotion Recognition Based on EEG Brain-Computer Interface System Research: A Systematic Review. Appl. Sci. 2017, 7, 1239.

2. Alarcao, S.M.; Fonseca, M.J. Emotions Recognition Using EEG Signals: A Survey. IEEE Trans. Affect. Comput. 2019, 10, 374–393.

3. Yeh, C.S.-H. Exploring the effects of videogame play on creativity performance and emotional responses. Comput. Hum. Behav. 2015, 53, 396–407.

4. Ravaja, N.; Saari, T.; Salminen, M.; Laarni, J.; Kallinen, K. Phasic Emotional Reactions to Video Game Events: A Psychophysiological Investigation. Media Psychol. 2006, 8, 343–367.

5. Perez Martínez, H.; Garbarino, M.; Yannakakis, G.N. Generic Physiological Features as Predictors of Player Experience. In Affective Computing and Intelligent Interaction. ACII 2011. Lecture Notes in Computer Science; D’Mello, S., Graesser, A., Schuller, B., Martin, J.C., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6974, pp. 267–276.

6. Alhargan, A.; Cooke, N.; Binjammaz, T. Multimodal affect recognition in an interactive gaming environment using eye tracking and speech signals. In Proceedings of the 19th ACM International Conference on Multimodal Interaction (ICMI 2017), Glasgow, UK, 13–17 November 2017; pp. 479–486.

7. Fortin-Côté, A.; Beaudoin-Gagnon, N.; Chamberland, C.; Desbiens, F.; Lefebvre, L.; Bergeron, J.; Campeau-Lecours, A.; Tremblay, S.; Jackson, P.L. FUNii: The Physio-Behavioural Adaptive Video Game. In Augmented Cognition. HCII 2019. Lecture Notes in Computer Science; Schmorrow, D., Fidopiastis, C., Eds.; Springer: Cham, Switzerland, 2019; Volume 11580, pp. 14–28.

8. Bevilacqua, F.; Engström, H.; Backlund, P. Game-Calibrated and User-Tailored Remote Detection of Stress and Boredom in Games. Sensors 2019, 19, 2877.

9. Pallavicini, F.; Ferrari, A.; Mantovani, F. Video games for well-being: A systematic review on the application of computer games for cognitive and emotional training in the adult population. Front. Psychol. 2018, 9, 2127.

10. Kivikangas, J.M.; Chanel, G.; Cowley, B.; Ekman, I.; Salminen, M.; Järvelä, S.; Ravaja, N. A review of the use of psychophysiological methods in game research. J. Gaming Virtual Worlds 2011, 3, 181–199.

11. Sawangjai, P.; Hompoonsup, S.; Leelaarporn, P.; Kongwudhikunakorn, S.; Wilaiprasitporn, T. Consumer Grade EEG Measuring Sensors as Research Tools: A Review. IEEE Sens. J. 2020, 20, 3996–4024.

12. Cavanagh, J.F.; Castellanos, J. Identification of canonical neural events during continuous gameplay of an 8-bit style video game. NeuroImage 2016, 133, 1–13.

13. Kerous, B.; Skola, F.; Liarokapis, F. EEG-based BCI and video games: A progress report. Virtual Real. 2018, 22, 119–135.

14. Alakus, T.B.; Gonen, M.; Turkoglu, I. Database for an emotion recognition system based on EEG signals and various computer games—GAMEEMO. Biomed. Signal Process. Control. 2020, 60, 101951.

15. Marín-Morales, J.; Higuera-Trujillo, J.L.; Greco, A.; Guixeres, J.; Llinares, C.; Scilingo, E.P.; Alcañiz, M.; Valenza, G. Affective computing in virtual reality: Emotion recognition from brain and heartbeat dynamics using wearable sensors. Sci. Rep. 2018, 8, 1–15.

16. Ghosh, L.; Saha, S.; Konar, A. Decoding emotional changes of android-gamers using a fused Type-2 fuzzy deep neural network. Comput. Hum. Behav. 2021, 116, 106640.

17. Chanel, G.; Rebetez, C.; Bétrancourt, M.; Pun, T. Emotion Assessment from Physiological Signals for Adaptation of Game Difficulty. IEEE Trans. Syst. Man, Cybern. Part A Syst. Hum. 2011, 41, 1052–1063.

18. Russell, J.A. A circumplex model of affect. J. Pers. Soc. Psychol. 1980, 39, 1161–1178.

19. Kim, J.; André, E. Emotion recognition based on physiological changes in music listening. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 2067–2083.

20. Yang, Y.-H.; Lin, Y.-C.; Su, Y.-F.; Chen, H.H. A Regression Approach to Music Emotion Recognition. IEEE Trans. Audio, Speech, Lang. Process. 2008, 16, 448–457.

21. Kim, Y.; Schmidt, E.M.; Migneco, R.; Morton, B.G.; Richardson, P.; Scott, J.; Speck, J.A.; Turnbull, D. Music emotion recognition: A state of the art review. In Proceedings of the 11th International Society for Music Information Retrieval Conference, ISMIR 2010, Utrecht, The Netherlands, 9–13 August 2010; pp. 255–266.

22. Yang, Y.-H.; Chen, H.H. Music Emotion Recognition; CRC Press: Boca Raton, FL, USA, 2011.

23. Bradley, M.M.; Lang, P.J. Measuring emotion: The self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 1994, 25, 49–59.

24. Zhao, G.; Ge, Y.; Shen, B.; Wei, X.; Wang, H. Emotion Analysis for Personality Inference from EEG Signals. IEEE Trans. Affect. Comput. 2017, 9, 362–371.

25. Jeon, M. Emotions and Affect in Human Factors and Human-Computer Interaction; Academic Press: Cambridge, MA, USA, 2017.

26. Urigüen, J.A.; Garcia-Zapirain, B. EEG artifact removal—State-of-the-art and guidelines. J. Neural Eng. 2015, 12, 031001.

27. Jung, T.P.; Makeig, S.; Humphries, C.; Lee, T.W.; McKeown, M.J.; Iragui, V.; Sejnowski, T.J. Removing electroencephalographic artifacts by blind source separation. Psychophysiology 2000, 37, 163–178.

28. Jung, T.P.; Makeig, S.; Westerfield, M.; Townsend, J.; Courchesne, E.; Sejnowski, T.J. Removal of eye activity artifacts from visual event-related potentials in normal and clinical subjects. Clin. Neurophysiol. 2000, 111, 1745–1758.

29. Chandra, S.; Sharma, G.; Salam, A.A.; Jha, D.; Mittal, A.P. Playing Action Video Games a Key to Cognitive Enhancement. Procedia Comput. Sci. 2016, 84, 115–122.

30. Singh, A.K.; Wang, Y.K.; King, J.T.; Lin, C.T. Extended interaction with a bci video game changes resting-state brain activity. IEEE Trans. Cogn. Dev. Syst. 2020, 12, 809–823.

31. Picard, R.W.; Vyzas, E.; Healey, J. Toward Machine Emotional Intelligence: Analysis of Affective Physiological State. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1175–1191.

32. Gouizi, K.; Reguig, F.B.; Maaoui, C. Analysis physiological signals for emotion recognition. In Proceedings of the International Workshop on Systems, Signal Processing and their Applications, WOSSPA, Tipaza, Algeria, 9–11 May 2011; pp. 147–150.

33. Jerritta, S.; Murugappan, M.; Wan, K.; Yaacob, S. Emotion recognition from facial EMG signals using higher order statistics and principal component analysis. J. Chin. Inst. Eng. 2014, 37, 385–394.

34. Li, X.; Song, D.; Zhang, P.; Zhang, Y.; Hou, Y.; Hu, B. Exploring EEG Features in Cross-Subject Emotion Recognition. Front. Neurosci. 2018, 12, 162.

35. Patil, A.; Deshmukh, C.; Panat, A.R. Feature extraction of EEG for emotion recognition using Hjorth features and higher order crossings. In Proceedings of the 2016 Conference on Advances in Signal Processing (CASP), Pune, India, 9–11 June 2016; pp. 429–434.

36. Correa, J.A.M.; Abadi, M.K.; Sebe, N.; Patras, I. AMIGOS: A Dataset for Affect, Personality and Mood Research on Individuals and Groups. IEEE Trans. Affect. Comput. 2018, 1.

37. Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

38. Zheng, W.-L.; Liu, W.; Lu, Y.; Lu, B.-L.; Cichocki, A. EmotionMeter: A Multimodal Framework for Recognizing Human Emotions. IEEE Trans. Cybern. 2019, 49, 1110–1122.

39. Ping, H.Y.; Abdullah, L.N.; Halin, A.A.; Sulaiman, P.S. A Study of Physiological Signals-based Emotion Recognition Systems. Int. J. Comput. Technol. 2013, 11, 2189–2196.

40. Chen, D.-W.; Miao, R.; Yang, W.-Q.; Liang, Y.; Chen, H.-H.; Huang, L.; Deng, C.-J.; Han, N. A Feature Extraction Method Based on Differential Entropy and Linear Discriminant Analysis for Emotion Recognition. Sensors 2019, 19, 1631.

41. Duan, R.-N.; Zhu, J.-Y.; Lu, B.-L. Differential entropy feature for EEG-based emotion classification. In Proceedings of the 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER), San Diego, CA, USA, 6–8 November 2013; pp. 81–84.

42. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

43. Vergara, J.R.; Estévez, P.A. A review of feature selection methods based on mutual information. Neural Comput. Appl. 2014, 24, 175–186.

44. Lemaitre, G.; Nogueira, F.; Oliveira, D.; Aridas, C. Ensemble of Samplers—Imbalanced-Learn 0.7.0 Documentation. 2016. Available online: https://imbalanced-learn.org/stable/ensemble.html (accessed on 2 February 2021).

45. Kostyunina, M.B.; Kulikov, M.A. Frequency characteristics of EEG spectra in the emotions. Neurosci. Behav. Physiol. 1996, 26, 340–343.

46. Wang, X.-W.; Nie, D.; Lu, B.-L. EEG-Based Emotion Recognition Using Frequency Domain Features and Support Vector Machines. In Proceedings of the International Conference on Neural Information Processing, Shanghai, China, 13–17 November 2011; pp. 734–743.

47. Zheng, W. Multichannel EEG-Based Emotion Recognition via Group Sparse Canonical Correlation Analysis. IEEE Trans. Cogn. Dev. Syst. 2017, 9, 281–290.

48. Zheng, W.-L.; Lu, B.-L. Investigating Critical Frequency Bands and Channels for EEG-Based Emotion Recognition with Deep Neural Networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175.

49. Jatupaiboon, N.; Pan-Ngum, S.; Israsena, P. Emotion classification using minimal EEG channels and frequency bands. In Proceedings of the 2013 10th International Joint Conference on Computer Science and Software Engineering (JCSSE), Khon Kaen, Thailand, 29–31 May 2013; pp. 21–24.

50. Zhang, J.; Chen, P.; Nichele, S.; Yazidi, A. Emotion Recognition Using Time-frequency Analysis of EEG Signals and Machine Learning. In Proceedings of the 2019 IEEE Symposium Series on Computational Intelligence (SSCI), Xiamen, China, 6–9 December 2019; pp. 404–409.

51. Soleymani, M.; Lichtenauer, J.; Pun, T.; Pantic, M. A Multimodal Database for Affect Recognition and Implicit Tagging. IEEE Trans. Affect. Comput. 2012, 3, 42–55.

52. Subramanian, R.; Wache, J.; Abadi, M.K.; Vieriu, R.L.; Winkler, S.; Sebe, N. ASCERTAIN: Emotion and Personality Recognition Using Commercial Sensors. IEEE Trans. Affect. Comput. 2016, 9, 147–160.

53. Stein, A.; Yotam, Y.; Puzis, R.; Shani, G.; Taieb-Maimon, M. EEG-triggered dynamic difficulty adjustment for multiplayer games. Entertain. Comput. 2018, 25, 14–25.

54. Chen, D.; James, J.; Bao, F.S.; Ling, C.; Fan, T. Relationship between Video Game Events and Player Emotion Based on EEG. In Proceedings of the International Conference on Human-Computer Interaction, Toronto, ON, Canada, 17–22 July 2016; Volume 9733, pp. 377–384.

55. Hunicke, R.; Leblanc, M.; Zubek, R. MDA: A formal approach to game design and game research. In AAAI Workshop—Technical Report; AAAI Press: Menlo Park, CA, USA, 2004; Volume WS-04-04, pp. 1–5.

56. Dillon, R. On the Way to Fun: An Emotion-Based Approach to Successful Game Design; A K Peters/CRC Press: Boca Raton, FL, USA, 2010.

57. Isbister, K. How Games Move Us: Emotion by Design; MIT Press: Cambridge, MA, USA, 2017.

58. Martinez-Tejada, L.A.; Gonzalez, A.P.; Yoshimura, N.; Koike, Y. Videogame design as a elicit tool for emotion recognition experiments. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 4320–4326.

| Article | EEG Characteristics | Video Game and Measured Emotions | Game Play Time Window | Emotional Reference Information | Game Time Event Analysis | Participants |
|---|---|---|---|---|---|---|
| [14] | 14 channels | Train Sim World (boring), Unravel (calm), Slender: The Arrival (horror), and Goat Simulator (funny) | 5 min | Arousal/Valence Self-Assessment Manikins (SAM) | No | 28 |
| [15] | 9 channels | Four architectural environments designed after Kazuyo Sejima’s “Villa in the Forest”, modifying illumination, color, and geometry; high and low arousal and valence states | 1.5 min | Arousal/Valence Self-Assessment Manikins (SAM) | No | 38 |
| [16] | 24 channels | Candy Crush and Stickman Archers; happiness, sadness, surprise, anger, disgust and neutral | 10 min | Visual inspection of facial expressions | No | 35 |
| [17] | 19 channels | Tetris: medium, easy, and hard conditions | 5 min | Arousal/Valence Self-Assessment Manikins (SAM) | No | 14 |

| Type of Feature | Feature Name |
|---|---|
| Time-domain features | Picard parameters [31,32]: mean and standard deviation of the physiological signal, and max/min ratio of the EEG signal. Higher order statistics [33]: skewness measures the degree of asymmetry of a distribution around the signal’s mean; kurtosis measures the relative heaviness of the tails of a distribution with respect to the normal distribution. Hjorth variables [34,35]: activity represents the signal power as the variance of the time function; mobility represents the mean frequency, i.e., the proportion of standard deviation of the power spectrum; complexity represents the change in frequency by comparing the signal’s similarity to a pure sine wave, and its value converges to 1 the more similar the signal is to a sine wave. |
| Frequency-domain features | Power spectral density (PSD) [31,36,37,38] computed with Welch’s method (time window = 512 samples, corresponding to 1 s) in the theta (4–8 Hz), alpha (8–12 Hz), beta (12–30 Hz) and gamma (30–47 Hz) bands for each electrode [36]. PS-ASM between the 27 pairs of electrodes in the five bands [36]. Differential entropy (DE) [39,40]: the entropy of a continuous random variable, used to measure its complexity; for a fixed-length EEG sequence it is equivalent to the logarithm of the energy spectrum in a given frequency band [41]. DASM and RASM: the differences and ratios between the DE of the 27 pairs of asymmetric electrodes [36]. |
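The frequency-domain traits in the table above can be sketched with SciPy’s `welch`. This is a minimal illustration, not the authors’ code: it assumes a 512 Hz sampling rate (inferred from “512 samples corresponding to 1 s”), half-open band edges, and a Gaussian assumption under which the DE of a band-limited signal is 0.5·log(2πe·P), with P the band power; that expression differs from the logarithm of the band energy only by a scale factor and a constant. All helper names are hypothetical.

```python
import numpy as np
from scipy.signal import welch

FS = 512  # Hz; assumed from "512 samples corresponding to 1 s"
# Half-open [low, high) band edges in Hz, as listed in the feature table.
BANDS = {"theta": (4, 8), "alpha": (8, 12), "beta": (12, 30), "gamma": (30, 47)}


def band_powers(x, fs=FS):
    """Absolute power per band from Welch's PSD with 1 s (fs-sample) segments."""
    freqs, psd = welch(x, fs=fs, nperseg=fs)  # 1 Hz frequency resolution
    df = freqs[1] - freqs[0]
    return {
        name: float(psd[(freqs >= lo) & (freqs < hi)].sum() * df)
        for name, (lo, hi) in BANDS.items()
    }


def differential_entropy(power):
    """DE of a Gaussian band-limited signal whose variance is `power`."""
    return 0.5 * np.log(2 * np.pi * np.e * power)


def asymmetry_features(left, right, fs=FS):
    """DASM (difference) and RASM (ratio) of DE for one left/right electrode pair."""
    de_left = {b: differential_entropy(p) for b, p in band_powers(left, fs).items()}
    de_right = {b: differential_entropy(p) for b, p in band_powers(right, fs).items()}
    dasm = {b: de_left[b] - de_right[b] for b in BANDS}
    rasm = {b: de_left[b] / de_right[b] for b in BANDS}
    return dasm, rasm
```

In this sketch `asymmetry_features` would be called once per symmetric electrode pair; the 27 pairs used in the study are not enumerated here, so no electrode names are assumed.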

| Group | Game Level | Arousal Mean | Arousal Std | Valence Mean | Valence Std |
|---|---|---|---|---|---|
| HAHV | N–01 | 7.05 | 0.45 | 7.23 | 1.24 |
| HAHV | N–02 | 7.29 | 1.03 | 6.65 | 1.30 |
| HAHV | N–03 | 6.92 | 0.99 | 6.93 | 1.19 |
| HAHV | SU–01 | 8.01 | 0.68 | 6.71 | 1.64 |
| HAHV | SU–02 | 7.99 | 1.22 | 6.40 | 1.60 |
| HAHV | SU–03 | 7.24 | 0.75 | 5.74 | 1.59 |
| HALV | H–01 | 7.84 | 0.89 | 4.62 | 2.52 |
| HALV | H–02 | 6.64 | 1.06 | 3.92 | 1.94 |
| HALV | H–03 | 6.55 | 1.94 | 4.71 | 2.56 |
| HALV | OA–01 | 7.64 | 1.13 | 4.56 | 2.33 |
| HALV | OA–02 | 6.81 | 1.97 | 4.62 | 2.31 |
| HALV | OA–03 | 6.63 | 1.34 | 4.34 | 2.10 |
| LAHV | E–01 | 4.02 | 1.78 | 6.80 | 1.23 |
| LAHV | E–02 | 4.79 | 1.86 | 7.23 | 1.75 |
| LAHV | E–03 | 4.51 | 1.76 | 6.71 | 1.45 |
| LAHV | WS–01 | 4.18 | 1.71 | 5.27 | 1.73 |
| LAHV | WS–02 | 3.42 | 1.12 | 5.44 | 1.24 |
| LAHV | WS–03 | 3.22 | 1.48 | 5.37 | 1.25 |
| LALV | SD–01 | 3.50 | 1.85 | 5.15 | 1.59 |
| LALV | SD–02 | 3.60 | 1.46 | 5.03 | 1.32 |
| LALV | SD–03 | 2.59 | 1.49 | 4.69 | 1.51 |
| LALV | WT–01 | 2.17 | 0.96 | 4.37 | 2.26 |
| LALV | WT–02 | 1.94 | 1.22 | 5.27 | 1.35 |
| LALV | WT–03 | 2.18 | 1.64 | 4.94 | 2.08 |
| – | Final Mission | 7.05 | 0.45 | 7.23 | 1.24 |

(a) Individual Traits Correlated for Each Participant

| Participant | Gender | Arousal (Num. of Traits) | Valence (Num. of Traits) |
|---|---|---|---|
| 1 | Male | 223 | 200 |
| 2 | Male | 245 | 9 |
| 3 | Male | 207 | 10 |
| 4 | Female | 265 | 8 |
| 5 | Male | 287 | 323 |
| 6 | Male | 272 | 16 |
| 9 | Male | 146 | 13 |
| 10 | Female | 254 | 60 |
| 11 | Male | 140 | 7 |
| 12 | Female | 106 | 2 |
| Total | | 2145 | 648 |

(b) Number of Traits Correlated in Common among Participants

| Number of Participants | Arousal (Num. of Traits) | Valence (Num. of Traits) |
|---|---|---|
| 1/10 | 461 | 480 |
| 2/10 | 260 | 82 |
| 3/10 | 155 | 0 |
| 4/10 | 79 | 1 |
| 5/10 | 35 | 0 |
| 6/10 | 10 | 0 |
| 7/10 | 4 | 0 |
| 8/10 | 9 | 0 |
| 9/10 | 2 | 0 |
| 10/10 | 3 | 0 |
| Total | 2145 | 648 |
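Counts like those in the table above could be produced by testing each EEG trait against one participant’s SAM scores with Spearman’s rank correlation, then tallying how many participants share each selected trait. The sketch below is a hypothetical illustration: it assumes a significance threshold of p < 0.05 and one levels-by-traits matrix per participant, and neither the threshold nor the helper names come from the source.

```python
from collections import Counter

import numpy as np
from scipy.stats import spearmanr


def correlated_traits(features, scores, alpha=0.05):
    """Column indices of `features` significantly rank-correlated with `scores`.

    features: (n_levels, n_traits) array for ONE participant.
    scores:   (n_levels,) SAM arousal or valence ratings.
    alpha:    assumed significance threshold (not taken from the source).
    """
    selected = []
    for j in range(features.shape[1]):
        rho, p_value = spearmanr(features[:, j], scores)
        if p_value < alpha:  # NaN p-values (constant columns) are never selected
            selected.append(j)
    return selected


def sharing_histogram(per_participant, n_participants):
    """Number of distinct traits selected in exactly k participants, k = 1..n."""
    share_counts = Counter(j for traits in per_participant for j in set(traits))
    hist = Counter(share_counts.values())
    return {k: hist.get(k, 0) for k in range(1, n_participants + 1)}
```

Spearman’s coefficient only assumes a monotonic relation between a trait and the ordinal SAM ratings, which suits self-assessment scales better than a linear (Pearson) correlation.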

| Events (by participant) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Positive | 470 | 428 | 437 | 409 | 465 | 478 | 467 | 445 | 450 | 320 |
| Negative | 85 | 121 | 94 | 130 | 114 | 91 | 117 | 114 | 151 | 171 |

| Participant | Gender | N. Traits | Training Acc (Mean) | Training Acc (Std) | Training F1 (Mean) | Training F1 (Std) | Training AUC (Mean) | Training AUC (Std) | Test Acc | Test F1 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Male | 21 | 0.97 | 0.05 | 0.98 | 0.03 | 0.99 | 0.01 | 0.91 | 0.94 |
| 2 | Male | 2 (Pz) | 0.99 | 0.03 | 0.99 | 0.02 | 0.99 | 0.0 | 0.99 | 1.00 |
| 3 | Male | 2 (PO3) | 0.99 | 0.04 | 0.99 | 0.04 | 0.99 | 0.01 | 0.97 | 0.98 |
| 4 | Female | 2 (Pz) | 0.99 | 0.02 | 1.00 | 0.01 | 0.99 | 0.0 | 1.0 | 1.0 |
| 5 | Male | 2 (Oz) | 0.99 | 0.03 | 0.99 | 0.02 | 0.99 | 0.0 | 0.98 | 0.98 |
| 6 | Male | 2 (POz) | 0.99 | 0.02 | 0.99 | 0.01 | 0.99 | 0.01 | 0.98 | 0.99 |
| 9 | Male | 2 (Pz) | 1.0 | 0.01 | 1.0 | 0.01 | 1.0 | 1.0 | 1.0 | 1.0 |
| 10 | Female | 2 (P8) | 1.0 | 0.01 | 1.0 | 0.01 | 0.99 | 0.0 | 1.0 | 1.0 |
| 11 | Male | 2 (PO4) | 0.99 | 0.02 | 1.0 | 0.02 | 0.99 | 0.0 | 0.98 | 0.98 |
| 12 | Female | 2 (P3) | 0.99 | 0.05 | 0.99 | 0.05 | 0.99 | 0.0 | 1.0 | 1.0 |
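Positive game events outnumber negative ones for every participant in the event table above, so a classifier for them has to cope with class imbalance. The source refers to the ensemble-of-samplers methods of imbalanced-learn [44]; to keep the example self-contained, the sketch below swaps in scikit-learn’s `RandomForestClassifier` with `class_weight="balanced"` instead. The 80/20 stratified split, the label encoding (1 = positive event, 0 = negative event) and the helper name are assumptions, not the authors’ setup.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, f1_score, roc_auc_score
from sklearn.model_selection import train_test_split


def classify_events(features, labels, seed=0):
    """Train/test an ensemble classifier of game events (hypothetical helper).

    features: (n_events, n_traits) EEG traits per event window.
    labels:   (n_events,) with 1 = positive event, 0 = negative (assumed encoding).
    """
    # Stratified split keeps the positive/negative ratio in both sets.
    X_tr, X_te, y_tr, y_te = train_test_split(
        features, labels, test_size=0.2, stratify=labels, random_state=seed
    )
    # Class weights inversely proportional to class frequency offset the imbalance.
    clf = RandomForestClassifier(
        n_estimators=100, class_weight="balanced", random_state=seed
    )
    clf.fit(X_tr, y_tr)
    pred = clf.predict(X_te)
    proba = clf.predict_proba(X_te)[:, 1]  # probability of class 1 (positive event)
    return {
        "acc": accuracy_score(y_te, pred),
        "f1": f1_score(y_te, pred),
        "auc": roc_auc_score(y_te, proba),
    }
```

Reporting F1 and AUC next to accuracy matters here, because a model that always predicts “positive” would already reach high accuracy on such skewed event counts.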

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Martínez-Tejada, L.A.; Puertas-González, A.; Yoshimura, N.; Koike, Y. Exploring EEG Characteristics to Identify Emotional Reactions under Videogame Scenarios. Brain Sci. 2021, 11, 378. https://doi.org/10.3390/brainsci11030378

Martínez-Tejada LA, Puertas-González A, Yoshimura N, Koike Y. Exploring EEG Characteristics to Identify Emotional Reactions under Videogame Scenarios. Brain Sciences. 2021; 11(3):378. https://doi.org/10.3390/brainsci11030378

Chicago/Turabian Style: Martínez-Tejada, Laura Alejandra, Alex Puertas-González, Natsue Yoshimura, and Yasuharu Koike. 2021. "Exploring EEG Characteristics to Identify Emotional Reactions under Videogame Scenarios" Brain Sciences 11, no. 3: 378. https://doi.org/10.3390/brainsci11030378

APA Style: Martínez-Tejada, L. A., Puertas-González, A., Yoshimura, N., & Koike, Y. (2021). Exploring EEG Characteristics to Identify Emotional Reactions under Videogame Scenarios. Brain Sciences, 11(3), 378. https://doi.org/10.3390/brainsci11030378