The N-Grams Based Text Similarity Detection Approach Using Self-Organizing Maps and Similarity Measures

Abstract

1. Introduction

2. Text Similarity Detection

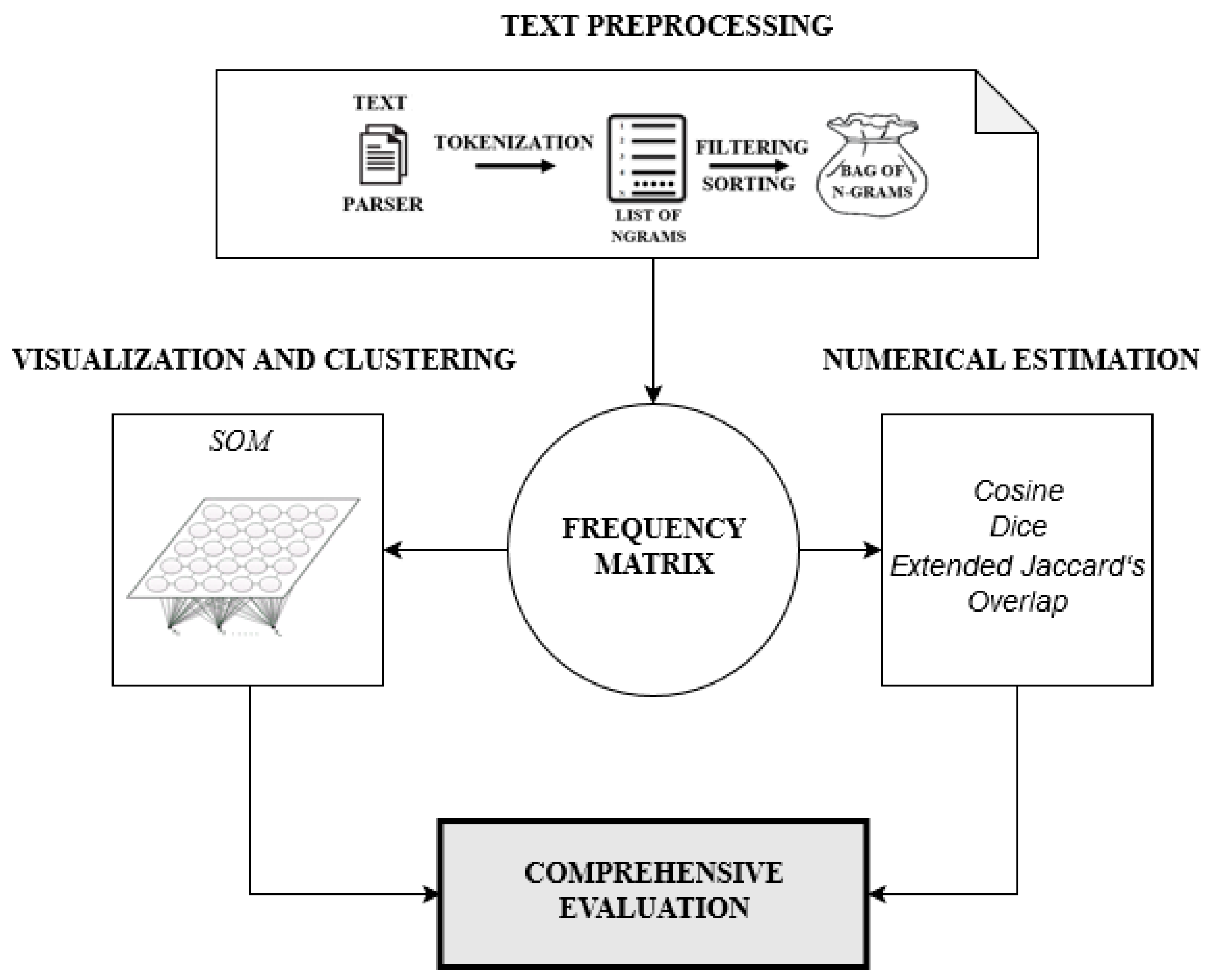

2.1. Proposed Approach to Evaluate Text Similarity

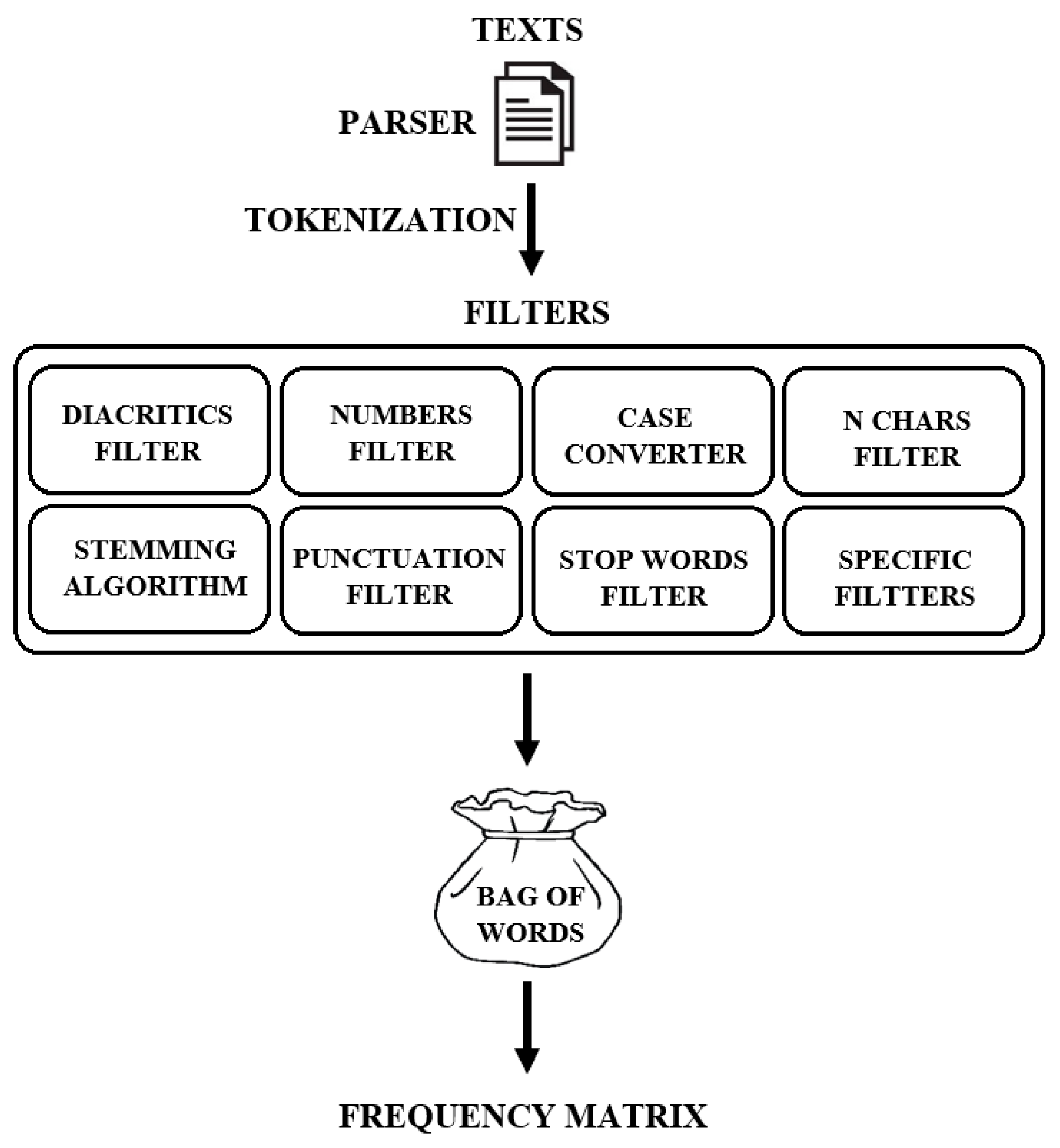

2.2. Preparation of Frequency Matrix

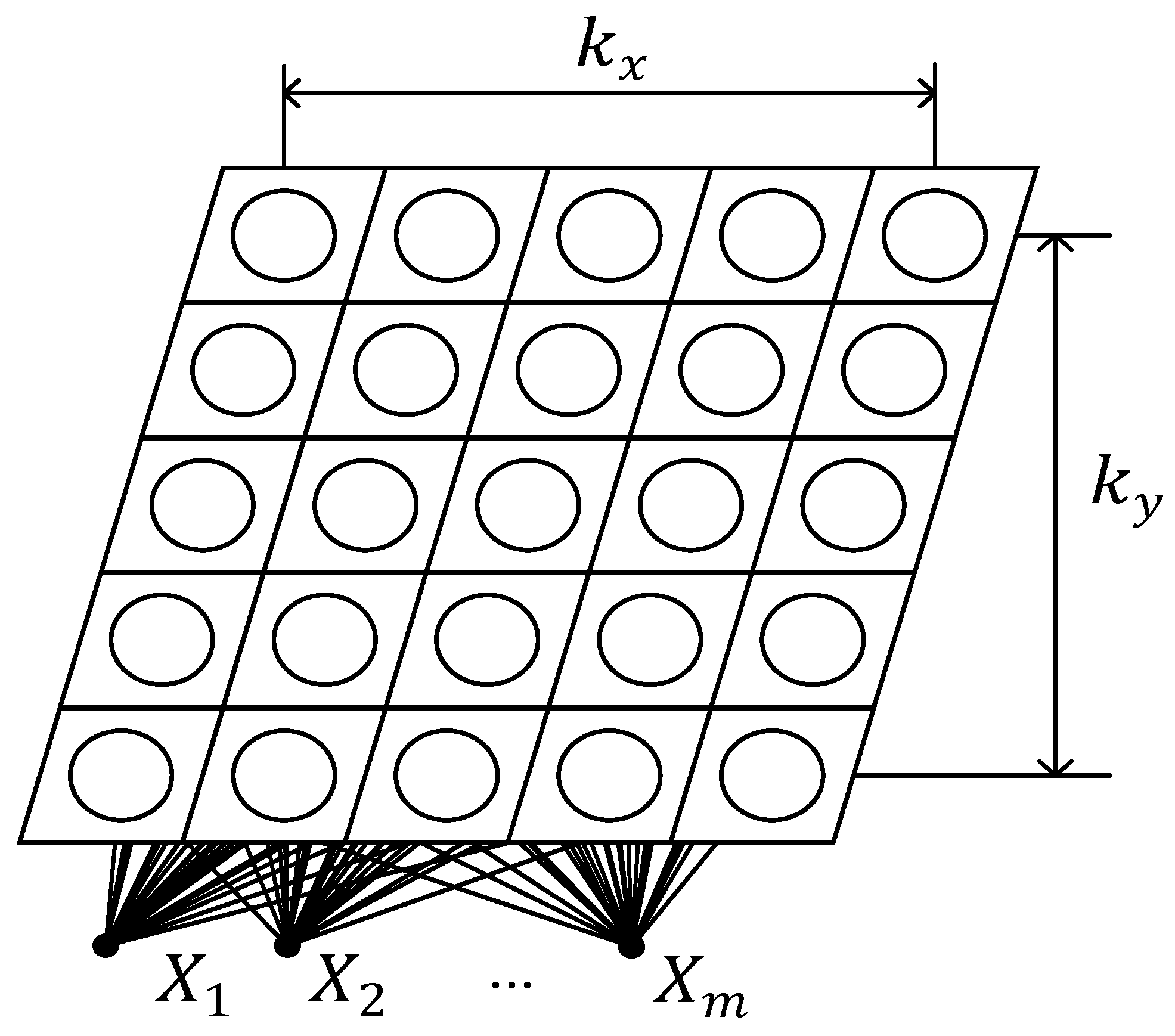

2.3. Self-Organizing Maps

2.4. Measures for Text Similarity Detection

3. Experimental Investigation

3.1. Dataset

The dataset covers five questions:

- Q1: ‘What is inheritance in object oriented programming?’

- Q2: ‘Explain the PageRank algorithm that is used by the Google search engine’

- Q3: ‘Explain the vector space model for Information Retrieval’

- Q4: ‘Explain Bayes Theorem from probability theory’

- Q5: ‘What is dynamic programming?’

Each answer falls into one of four categories:

- Near copy (cut): participants were asked to answer the question by simply copying the text from the relevant Wikipedia article.

- Light revision (light): participants were asked to base their answer on the text found in the Wikipedia article and were given no instructions about which parts of the article to copy.

- Heavy revision (heavy): participants were again asked to base their answer on the relevant Wikipedia article but were instructed to rephrase the text so that the answer had the same meaning as the source text but was expressed using different words and structure.

- Non-plagiarism (non): participants were provided with learning materials in the form of either lecture notes or sections from textbooks that could be used to answer the relevant question.

3.2. Steps of the Experiment

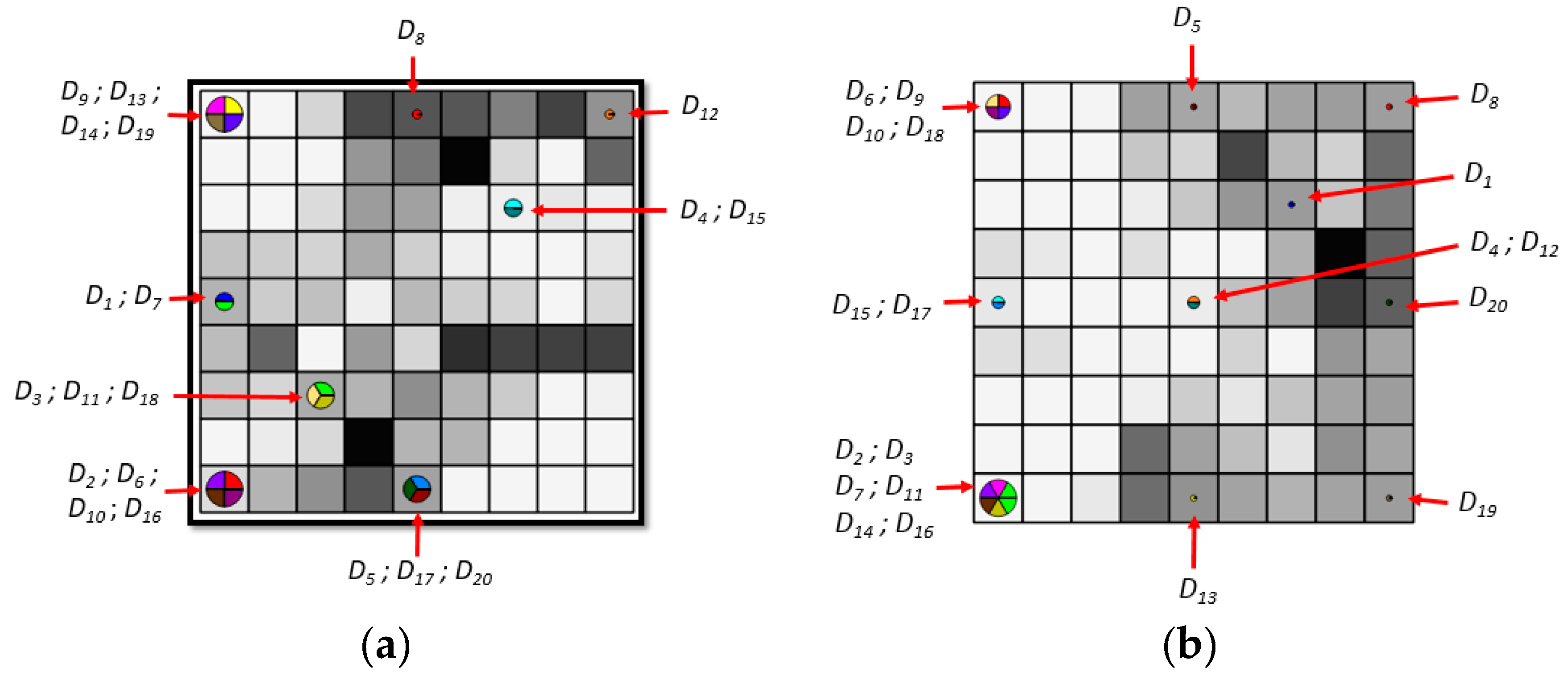

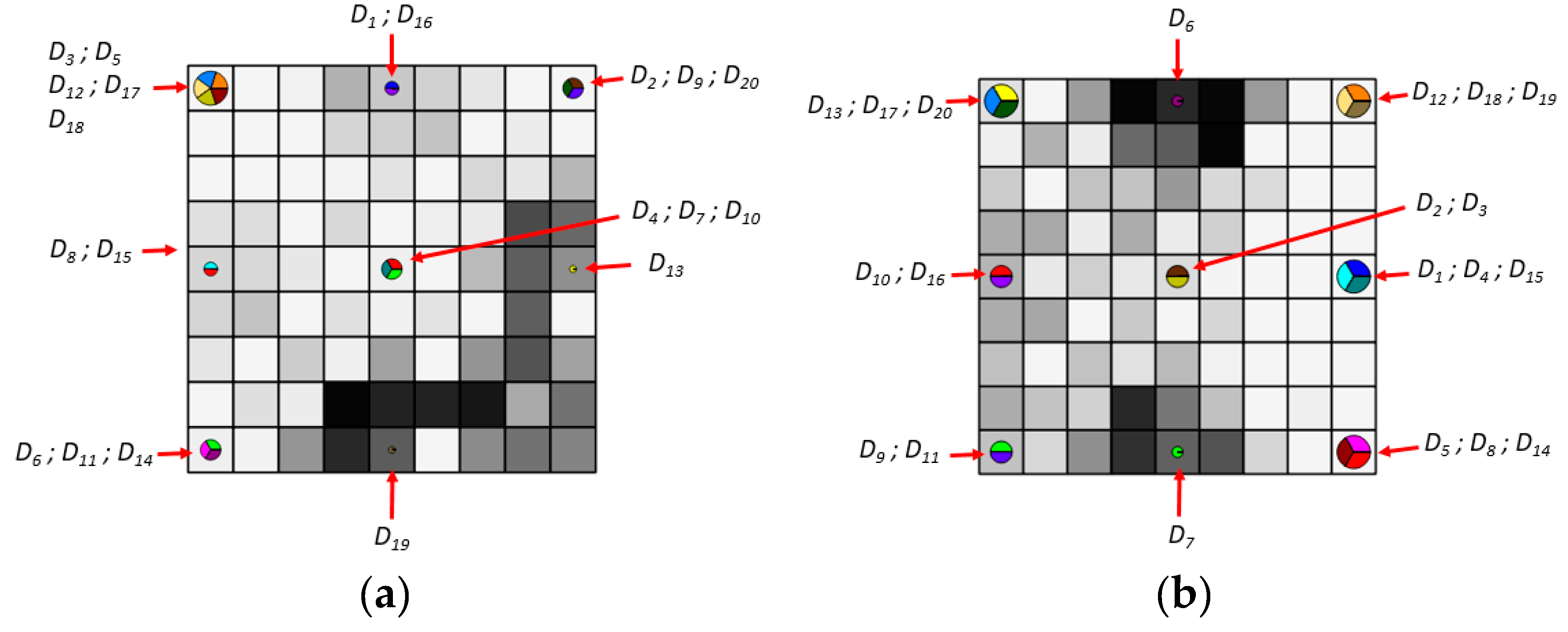

3.3. Experimental Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Miner, G.; Elder, J.; Fast, A.; Hill, T.; Nisbet, R.; Delen, D. Practical Text Mining and Statistical Analysis for Non-Structured Text Data Applications; Elsevier Inc.: Orlando, FL, USA, 2012.

2. Kanaris, I.; Kanaris, K.; Houvardas, I.; Stamatatos, E. Words versus character n-grams for anti-spam filtering. Int. J. Artif. Intell. Tools 2007, 16, 1047–1067.

3. Mohammadi, H.; Khasteh, S.H. A Fast Text Similarity Measure for Large Document Collections using Multi-reference Cosine and Genetic Algorithm. arXiv 2018, arXiv:1810.03102.

4. Camacho, J.E.P.; Ledeneva, Y.; García-Hernandez, R.A. Comparison of Automatic Keyphrase Extraction Systems in Scientific Papers. Res. Comput. Sci. 2016, 115, 181–191.

5. Kundu, R.; Karthik, K. Contextual plagiarism detection using latent semantic analysis. Int. Res. J. Adv. Eng. Sci. 2017, 2, 214–217.

6. Lopez-Gazpio, I.; Maritxalar, M.; Lapata, M.; Agirre, E. Word n-gram attention models for sentence similarity and inference. Expert Syst. Appl. 2019, 132, 1–11.

7. Bao, J.; Lyon, C.; Lane, P.C.R.; Ji, W.; Malcolm, J. Comparing Different Text Similarity Methods; Technical Report; University of Hertfordshire: Hatfield, UK, 2007; p. 461.

8. Arora, S.; Khodak, M.; Saunshi, N.; Vodrahalli, K. A compressed sensing view of unsupervised text embeddings, bag-of-n-grams, and LSTMs. In Proceedings of the ICLR 2018, Vancouver, BC, Canada, 30 April–3 May 2018.

9. Gali, N.; Mariescu-Istodor, R.; Hostettler, D.; Fränti, P. Framework for syntactic string similarity measures. Expert Syst. Appl. 2019, 129, 169–185.

10. Nguyen, H.V.; Bai, L. Cosine Similarity Metric Learning for Face Verification. In Proceedings of the Computer Vision (ACCV 2010), Queenstown, New Zealand, 8–12 November 2010; Lecture Notes in Computer Science. Kimmel, R., Klette, R., Sugimoto, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6493.

11. Niwattanakul, S.; Singthongchai, J.; Naenudorn, E.; Wanapu, S. Using of jaccard coefficient for keywords similarity. In Proceedings of the International Multi Conference of Engineers and Computer Scientists, Hong Kong, China, 13–15 March 2013.

12. Zeimpekis, D.; Gallopoulos, E. TMG: A Matlab Toolbox for Generating Term-Text document Matrices from Text Collections; Technical Report HPCLAB-SCG 1/01-05; University of Patras: Patras, Greece, 2005.

13. Berthold, M.R.; Cebron, N.; Dill, F.; Gabriel, T.R.; Kötter, T.; Meinl, T.; Ohl, P.; Sieb, C.; Thiel, K.; Wiswedel, B. Data Analysis, Machine Learning and Applications. In Studies in Classification, Data Analysis, and Knowledge Organization; Springer: Berlin, Germany, 2017.

14. Ritthoff, O.; Klinkenberg, R.; Fisher, S.; Mierswa, I.; Felske, S. YALE: Yet Another Learning Environment; Technical Report 763; University of Dortmund: Dortmund, Germany, 2001; pp. 84–92.

15. Stefanovič, P.; Kurasova, O. Creation of Text document Matrices and Visualization by Self-Organizing Map. Inf. Technol. Control 2014, 43, 37–46.

16. Porter, M.F. An algorithm for suffix stripping. Program 1980, 14, 130–137.

17. Li, B.; Liu, T.; Zhao, Z.; Wang, P.; Du, X. Neural Bag-of-Ngrams. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 3067–3074.

18. Balabantaray, R.C.; Sarma, C.; Jha, M. Document Clustering using K-Means and K-Medoids. arXiv 2015, arXiv:1502.07938.

19. Shah, N.; Mahajan, S. Document Clustering: A Detailed Review. Int. J. Appl. Inf. Syst. 2012, 4, 30–38.

20. Aggarwal, C.C.; Zhai, C. A Survey of Text Clustering Algorithms. In Mining Text Data; Springer: Boston, MA, USA, 2012; pp. 77–128.

21. Kohonen, T. Self-Organizing Maps, 3rd ed.; Springer Series in Information Sciences; Springer: Berlin, Germany, 2001.

22. Stefanovič, P.; Kurasova, O. Visual analysis of self-organizing maps. Nonlinear Anal. Model. Control. 2011, 16, 488–504.

23. Liu, Y.C.; Liu, M.; Wang, X.L. Application of Self-Organizing Maps in Text Clustering: A Review. In Applications of Self-Organizing Maps; Johnsson, M., Ed.; InTech: London, UK, 2012.

24. Sharma, A.; Dey, S. Using Self-Organizing Maps for Sentiment Analysis. arXiv 2013, arXiv:1309.3946.

25. Metzler, D.; Dumais, S.T.; Meek, C. Similarity Measures for Short Segments of Text. In Proceedings of the European Conference on Information Retrieval 2007, Rome, Italy, 2–5 April 2007; pp. 16–27.

26. Rokach, L.; Maimon, O. Clustering Methods. In The Data Mining and Knowledge Discovery Handbook 2005; Springer: Boston, MA, USA, 2005; pp. 321–352.

27. Clough, P.; Stevenson, M. Developing a Corpus of Plagiarized Short Answers. In Language Resources and Evaluation; Special Issue on Plagiarism and Authorship Analysis; Springer: Berlin, Germany, 2011.

28. Demšar, J.; Curk, T.; Erjavec, A. Orange: Data Mining Toolbox in Python. J. Mach. Learn. Res. 2013, 14, 2349–2353.

| Filters | Description |
|---|---|
| Diacritics filter | Removes all diacritical marks, the signs attached to a character, usually to indicate a distinct sound or special pronunciation. Examples of words (terms) containing diacritical marks are naïve, jäger, and réclame. When texts in certain languages are analyzed, the diacritical marks must be kept because removing them can change the meaning of a word, for example, törn/torn (Swedish) and sääri/saari (Finnish). |
| Number filter | Filters all terms that consist of digits, including the decimal separators ‘,’ or ‘.’ and the possible signs ‘+’ or ‘-’. |
| N chars filter | Filters all terms with fewer than the specified number N of characters. |
| Case converter | Converts all words to lower or upper case. |
| Punctuation filter | Removes all punctuation characters from terms. |
| Stop words filter | Removes all words contained in the specified stop word list. The list is often composed of words such as ‘there’, ‘where’, ‘that’, and ‘when’, which are usually not important for text analysis. The list of common words can also depend on the domain of the texts. For example, when scientific papers are analyzed, words such as ‘describe’, ‘present’, ‘new’, ‘propose’, and ‘method’ do not characterize the papers either, so there is no point in including them in the text dictionary. The stop word list can be adapted for any language. |
| Stemming algorithm | Separates the stem from the word [16]. For example, the four words ‘accepted’, ‘acceptation’, ‘acceptance’, and ‘acceptably’ share the stem ‘accept’, so only this stem is analyzed and the other word forms are ignored. |
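The filters in the table above can be chained into a small preprocessing routine. The following is a minimal sketch using only the Python standard library, not the tool chain used in the article. The function names (`strip_diacritics`, `is_number`, `preprocess`), the filter order, the default `min_chars=3`, and the four-word `STOP_WORDS` set are all assumptions made for illustration. Stemming is left out because it needs an external stemmer such as a Porter implementation [16].

```python
import string
import unicodedata

# Hypothetical stop word list for illustration; a real list is much longer
# and, as the table notes, depends on the language and domain.
STOP_WORDS = {"there", "where", "that", "when"}


def strip_diacritics(term: str) -> str:
    """Diacritics filter: decompose characters, then drop combining marks."""
    decomposed = unicodedata.normalize("NFD", term)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def is_number(term: str) -> bool:
    """Number filter test: digits with ',' or '.' separators and an optional sign."""
    body = term.lstrip("+-")
    has_digit = any(ch.isdigit() for ch in body)
    return has_digit and all(ch.isdigit() or ch in ",." for ch in body)


def preprocess(text: str, min_chars: int = 3) -> list[str]:
    """Apply the filters to whitespace-separated terms (the order is an assumption)."""
    remove_punctuation = str.maketrans("", "", string.punctuation)  # ASCII punctuation only
    terms = []
    for raw in text.split():
        if is_number(raw):                           # number filter
            continue
        term = strip_diacritics(raw).lower()         # diacritics filter, case converter
        term = term.translate(remove_punctuation)    # punctuation filter
        if len(term) < min_chars:                    # N chars filter
            continue
        if term in STOP_WORDS:                       # stop words filter
            continue
        terms.append(term)
    return terms


if __name__ == "__main__":
    print(preprocess("The naïve method, when tested, scored +3.5 points!"))
```

For languages where diacritics carry meaning (the Swedish and Finnish examples in the table), the `strip_diacritics` step would simply be skipped.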

| Text content | Text ID |
|---|---|
| text message | D1 |
| computer science | D2 |
| data mining and text mining | D3 |
| methods of text data mining | D4 |

| Text ID | text | message | computer | science | data | mining | and | methods | of |
|---|---|---|---|---|---|---|---|---|---|
| D1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| D2 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 |
| D3 | 1 | 0 | 0 | 0 | 1 | 2 | 1 | 0 | 0 |
| D4 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 |
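The frequency matrix above can be reproduced from the four example texts with a few lines of Python. This is a minimal sketch, assuming whitespace tokenization, no filtering, and a vocabulary ordered by first occurrence; the name `build_frequency_matrix` is hypothetical and not taken from the article.

```python
# The four example texts, keyed by their text IDs.
texts = {
    "D1": "text message",
    "D2": "computer science",
    "D3": "data mining and text mining",
    "D4": "methods of text data mining",
}


def build_frequency_matrix(texts: dict[str, str]) -> tuple[list[str], dict[str, list[int]]]:
    """Return the vocabulary and, for each text, the count of every vocabulary word."""
    vocabulary: list[str] = []
    for text in texts.values():
        for word in text.split():
            if word not in vocabulary:   # keep first-occurrence order
                vocabulary.append(word)
    matrix = {
        doc_id: [text.split().count(word) for word in vocabulary]
        for doc_id, text in texts.items()
    }
    return vocabulary, matrix


vocabulary, matrix = build_frequency_matrix(texts)

if __name__ == "__main__":
    print(vocabulary)
    for doc_id, row in matrix.items():
        print(doc_id, row)
```

Each row of `matrix` corresponds to one row of the table, with columns in the order given by `vocabulary`.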

| Cosine measure (%) | D1 | D2 | D3 | D4 |
|---|---|---|---|---|
| D1 | 100 | 0 | 26 | 31 |
| D2 | 0 | 100 | 0 | 0 |
| D3 | 26 | 0 | 100 | 67 |
| D4 | 31 | 0 | 67 | 100 |

| Extended Jaccard’s measure (%) | D1 | D2 | D3 | D4 |
|---|---|---|---|---|
| D1 | 100 | 0 | 13 | 17 |
| D2 | 0 | 100 | 0 | 0 |
| D3 | 13 | 0 | 100 | 50 |
| D4 | 17 | 0 | 50 | 100 |

| Dice measure (%) | D1 | D2 | D3 | D4 |
|---|---|---|---|---|
| D1 | 100 | 0 | 22 | 29 |
| D2 | 0 | 100 | 0 | 0 |
| D3 | 22 | 0 | 100 | 67 |
| D4 | 29 | 0 | 67 | 100 |

| Overlap measure (%) | D1 | D2 | D3 | D4 |
|---|---|---|---|---|
| D1 | 100 | 0 | 50 | 50 |
| D2 | 0 | 100 | 0 | 0 |
| D3 | 50 | 0 | 100 | 80 |
| D4 | 50 | 0 | 80 | 100 |
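The four similarity tables can be approximated from the frequency vectors of D1 to D4 with the common vector forms of these measures. The sketch below assumes those standard definitions (dot product normalized by vector norms or squared norms) and nonzero vectors; the function names are illustrative. With these definitions the Dice, extended Jaccard, and overlap values match the tables after rounding to whole percentages, while the cosine values come out slightly higher than the tabulated ones (for example about 26.7 against 26 for D1 and D3), which would be consistent with truncation in the table.

```python
import math

# Frequency vectors of the four example texts over the vocabulary
# (text, message, computer, science, data, mining, and, methods, of).
freq = {
    "D1": [1, 1, 0, 0, 0, 0, 0, 0, 0],
    "D2": [0, 0, 1, 1, 0, 0, 0, 0, 0],
    "D3": [1, 0, 0, 0, 1, 2, 1, 0, 0],
    "D4": [1, 0, 0, 0, 1, 1, 0, 1, 1],
}


def dot(a: list[int], b: list[int]) -> int:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cosine(a, b) -> float:
    return dot(a, b) / math.sqrt(dot(a, a) * dot(b, b))


def extended_jaccard(a, b) -> float:
    return dot(a, b) / (dot(a, a) + dot(b, b) - dot(a, b))


def dice(a, b) -> float:
    return 2 * dot(a, b) / (dot(a, a) + dot(b, b))


def overlap(a, b) -> float:
    return dot(a, b) / min(dot(a, a), dot(b, b))


if __name__ == "__main__":
    measures = [cosine, extended_jaccard, dice, overlap]
    for measure in measures:
        print(measure.__name__)
        for i in freq:
            # One row of the similarity matrix, expressed in percent.
            row = [f"{100 * measure(freq[i], freq[j]):6.1f}" for j in freq]
            print(f"  {i}", *row)
```

Note that with count vectors the overlap measure defined this way is not bounded by 100%: if one text repeats the words of a shorter text several times, the dot product can exceed the smaller squared norm.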

| Text ID | Q1 category | Q2 category | Q3 category | Q4 category | Q5 category |
|---|---|---|---|---|---|
| D1 | non | cut | light | heavy | non |
| D2 | non | non | cut | light | heavy |
| D3 | heavy | non | non | cut | light |
| D4 | cut | light | heavy | non | non |
| D5 | light | heavy | non | non | cut |
| D6 | non | heavy | light | cut | non |
| D7 | non | non | heavy | light | cut |
| D8 | light | cut | non | non | heavy |
| D9 | non | heavy | light | cut | non |
| D10 | non | non | heavy | light | cut |
| D11 | cut | non | non | heavy | light |
| D12 | heavy | light | cut | non | non |
| D13 | non | heavy | light | cut | non |
| D14 | non | non | heavy | light | cut |
| D15 | cut | non | non | heavy | light |
| D16 | non | non | heavy | light | cut |
| D17 | cut | non | non | heavy | light |
| D18 | light | cut | non | non | heavy |
| D19 | heavy | light | cut | non | non |
| D20 | Original | Original | Original | Original | Original |

| Q1 | Cut | Cut | Cut | Cut | Light | Light | Light | Heavy | Heavy | Heavy | Non | Non | Non | Non | Non | Non | Non | Non | Non |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cosine | 61 | 51 | 55 | 96 | 94 | 19 | 21 | 7 | 53 | 0 | 1 | 0 | 0 | 5 | 0 | 0 | 4 | 0 | 0 |
| Dice | 59 | 50 | 44 | 96 | 94 | 19 | 20 | 6 | 53 | 0 | 1 | 0 | 0 | 4 | 0 | 0 | 3 | 0 | 0 |
| Extended Jaccard’s | 42 | 33 | 28 | 92 | 88 | 10 | 11 | 3 | 36 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 2 | 0 | 0 |
| Overlap | 80 | 66 | 113 | 99 | 97 | 26 | 30 | 10 | 54 | 0 | 2 | 0 | 0 | 8 | 0 | 0 | 5 | 0 | 0 |

| Q2 | Cut | Cut | Cut | Light | Light | Light | Heavy | Heavy | Heavy | Heavy | Non | Non | Non | Non | Non | Non | Non | Non | Non |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cosine | 64 | 28 | 0 | 16 | 29 | 54 | 25 | 9 | 8 | 7 | 0 | 1 | 0 | 7 | 0 | 1 | 0 | 0 | 0 |
| Dice | 58 | 27 | 0 | 11 | 23 | 50 | 18 | 8 | 7 | 6 | 0 | 1 | 0 | 6 | 0 | 1 | 0 | 0 | 0 |
| Extended Jaccard’s | 41 | 15 | 0 | 6 | 13 | 33 | 10 | 4 | 4 | 3 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 |
| Overlap | 100 | 39 | 0 | 42 | 58 | 81 | 56 | 14 | 12 | 12 | 0 | 1 | 0 | 11 | 0 | 1 | 0 | 0 | 0 |

| Q3 | Cut | Cut | Cut | Light | Light | Light | Light | Heavy | Heavy | Heavy | Heavy | Heavy | Non | Non | Non | Non | Non | Non | Non |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cosine | 79 | 0 | 24 | 51 | 18 | 77 | 46 | 36 | 32 | 14 | 17 | 45 | 3 | 3 | 6 | 6 | 4 | 2 | 5 |
| Dice | 78 | 0 | 22 | 51 | 17 | 77 | 45 | 36 | 32 | 14 | 17 | 44 | 2 | 3 | 6 | 6 | 3 | 2 | 5 |
| Extended Jaccard’s | 64 | 0 | 12 | 34 | 10 | 63 | 29 | 22 | 19 | 8 | 9 | 28 | 1 | 1 | 3 | 3 | 2 | 1 | 3 |
| Overlap | 92 | 0 | 36 | 54 | 20 | 79 | 57 | 39 | 33 | 15 | 17 | 51 | 3 | 4 | 8 | 6 | 6 | 2 | 5 |

| Q4 | Cut | Cut | Cut | Cut | Light | Light | Light | Light | Light | Heavy | Heavy | Heavy | Heavy | Non | Non | Non | Non | Non | Non |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cosine | 60 | 36 | 36 | 98 | 24 | 13 | 59 | 18 | 43 | 14 | 27 | 6 | 93 | 0 | 0 | 0 | 0 | 1 | 0 |
| Dice | 52 | 36 | 36 | 98 | 21 | 13 | 58 | 17 | 42 | 13 | 27 | 4 | 93 | 0 | 0 | 0 | 0 | 1 | 0 |
| Extended Jaccard’s | 35 | 22 | 22 | 97 | 12 | 7 | 41 | 9 | 27 | 7 | 15 | 2 | 87 | 0 | 0 | 0 | 0 | 0 | 0 |
| Overlap | 105 | 39 | 39 | 99 | 39 | 17 | 66 | 25 | 56 | 22 | 28 | 16 | 96 | 0 | 0 | 0 | 0 | 1 | 0 |

| Q5 | Cut | Cut | Cut | Cut | Cut | Light | Light | Light | Light | Heavy | Heavy | Heavy | Non | Non | Non | Non | Non | Non | Non |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cosine | 42 | 36 | 77 | 50 | 82 | 35 | 9 | 21 | 62 | 38 | 3 | 31 | 1 | 0 | 0 | 0 | 0 | 1 | 1 |
| Dice | 30 | 35 | 75 | 40 | 81 | 28 | 9 | 12 | 58 | 36 | 3 | 28 | 1 | 0 | 0 | 0 | 0 | 1 | 1 |
| Extended Jaccard’s | 17 | 21 | 59 | 25 | 69 | 16 | 5 | 7 | 40 | 22 | 1 | 16 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Overlap | 100 | 46 | 97 | 100 | 97 | 67 | 13 | 65 | 93 | 54 | 6 | 50 | 1 | 0 | 0 | 0 | 0 | 1 | 1 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Stefanovič, P.; Kurasova, O.; Štrimaitis, R. The N-Grams Based Text Similarity Detection Approach Using Self-Organizing Maps and Similarity Measures. Appl. Sci. 2019, 9, 1870. https://doi.org/10.3390/app9091870
