Selectively Connected Self-Attentions for Semantic Role Labeling

Abstract

1. Introduction

2. Backgrounds

2.1. Semantic Role Labeling

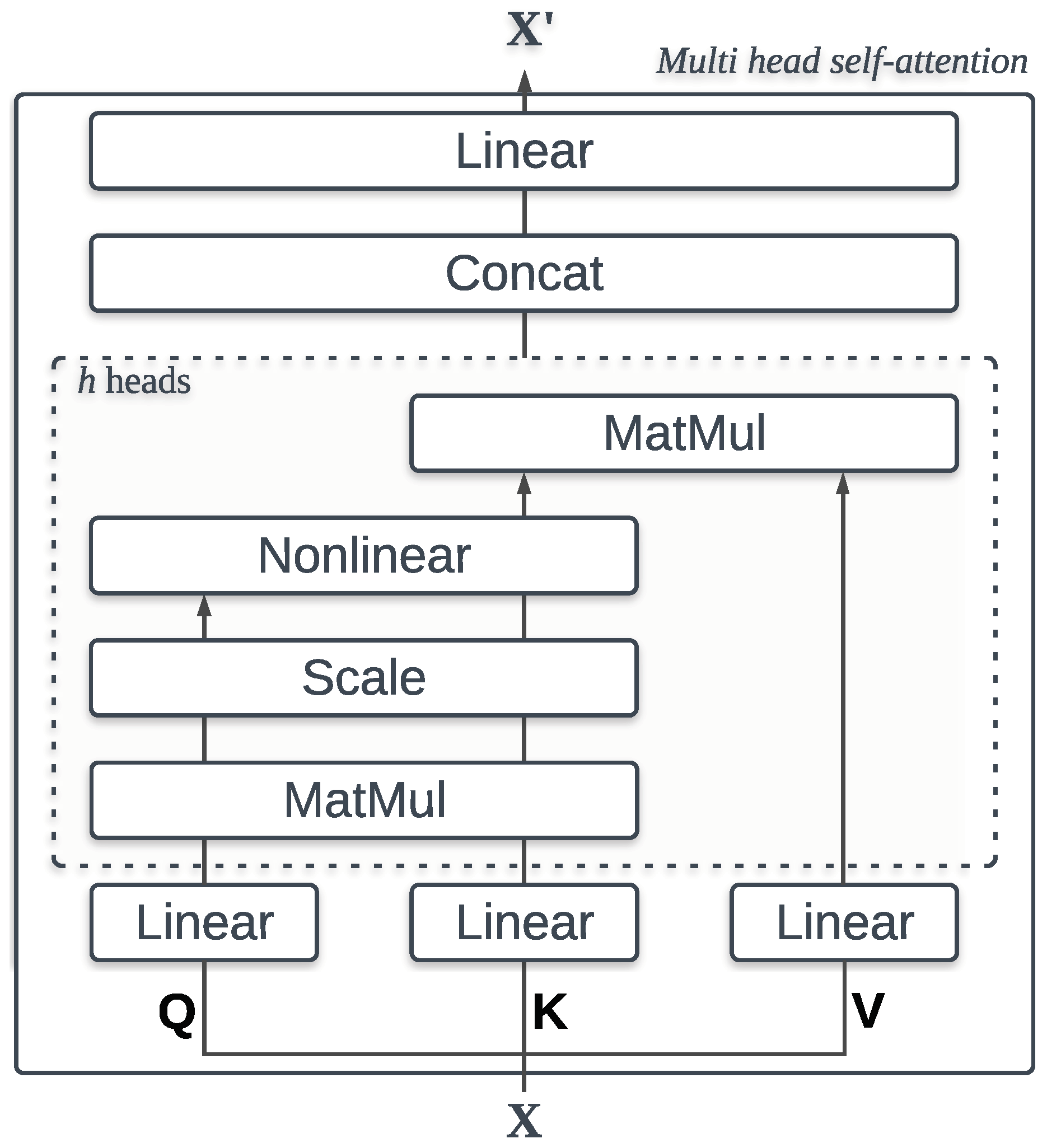

2.2. Attention Mechanisms

3. Selectively Connected Attentive Representation

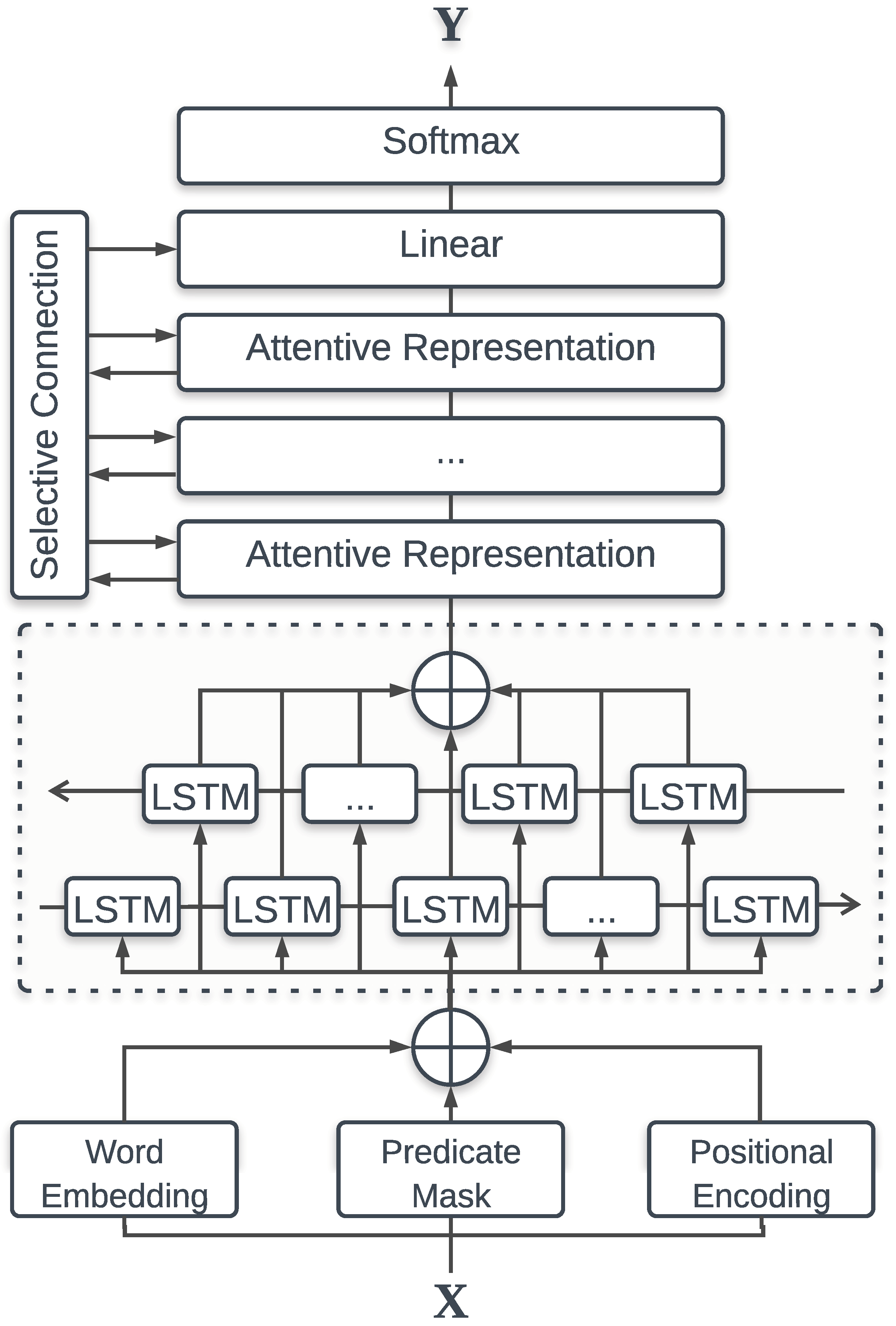

3.1. Embedding

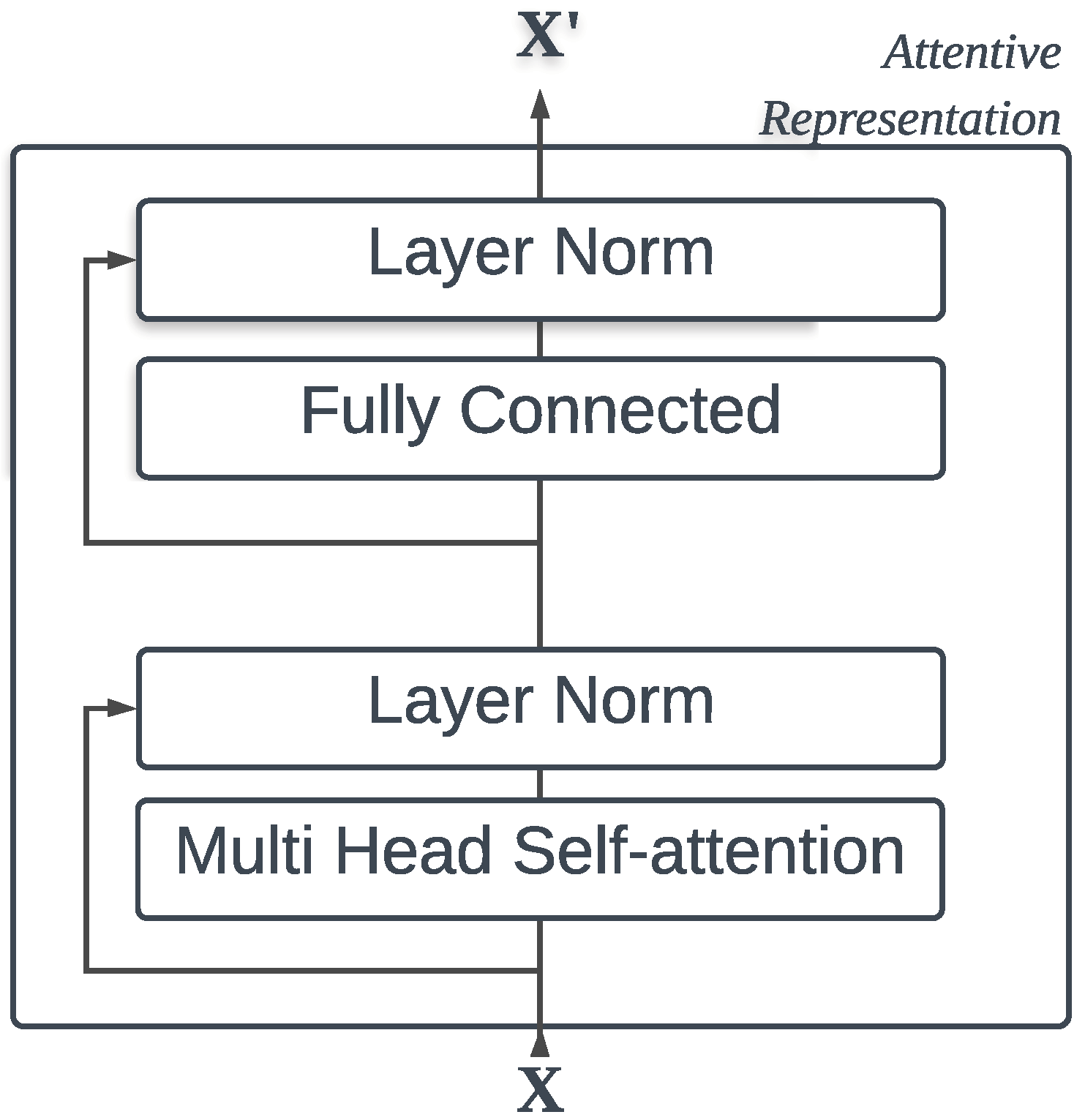

3.2. Attentive Representation

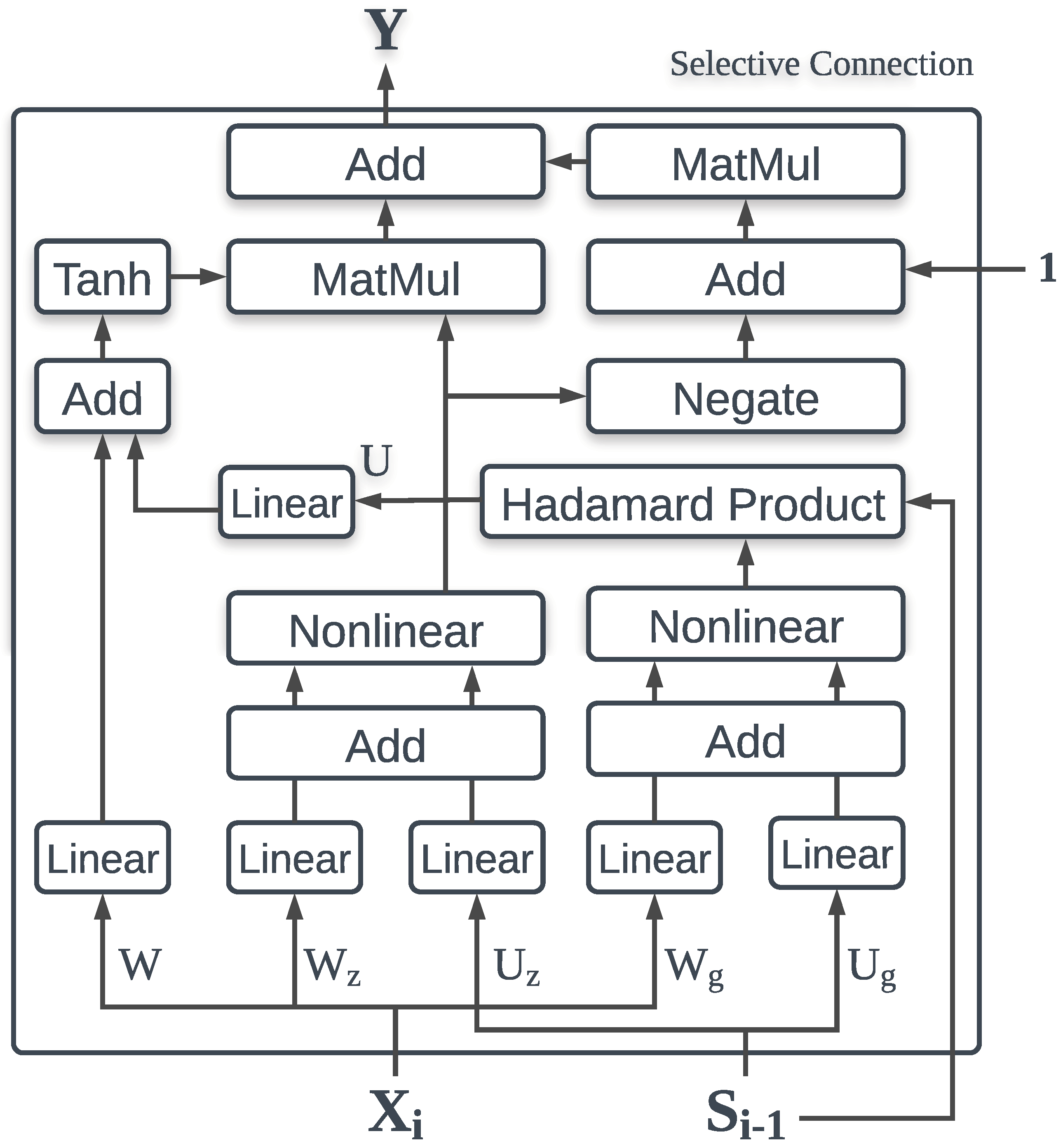

3.3. Selective Connection

3.4. Overall Architecture

3.5. Training

4. Experiments

4.1. Datasets

4.2. Experimental Setup

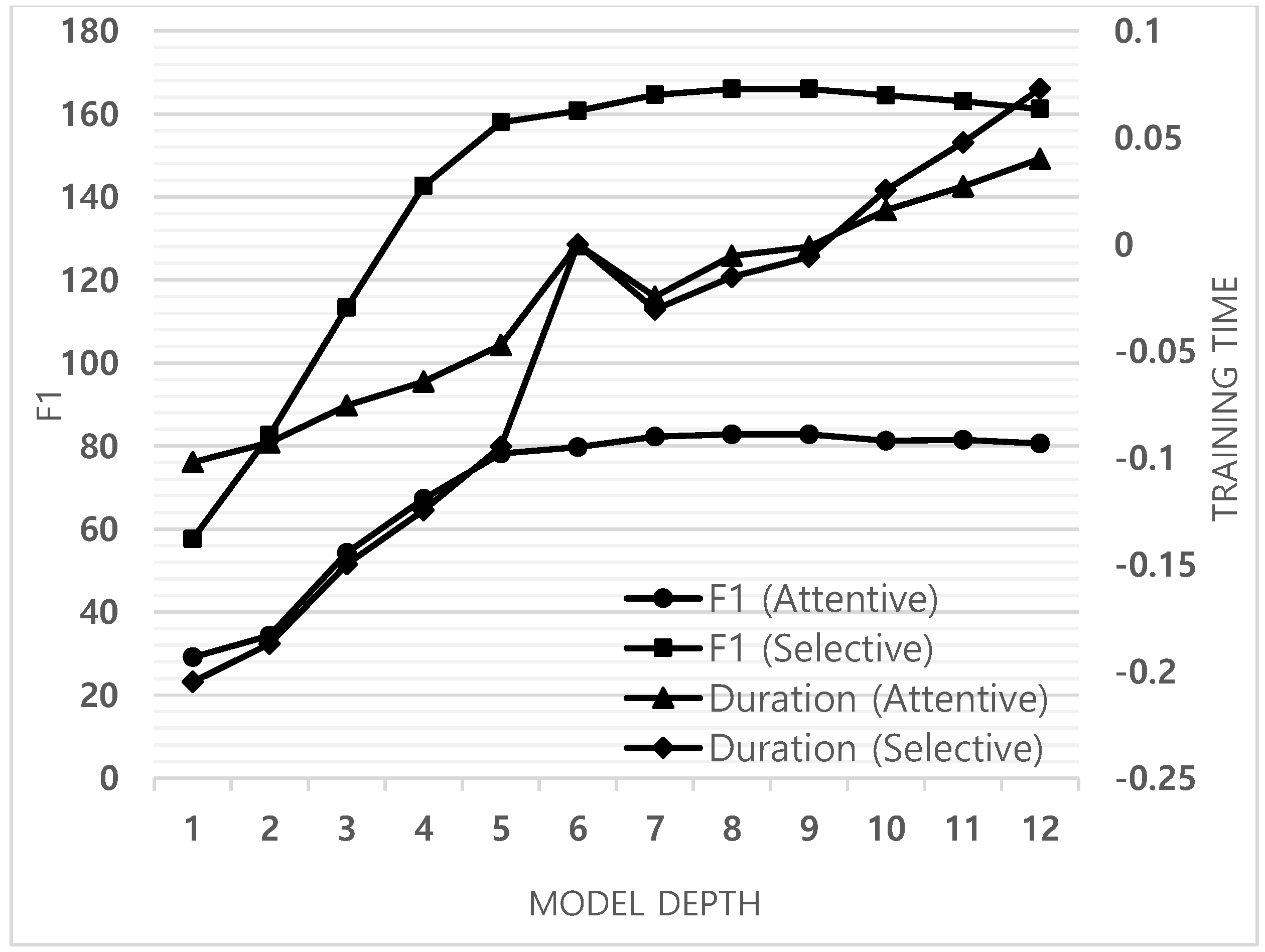

4.3. Experimental Results

5. Related Work

6. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

- Dowty, D. Thematic proto-roles and argument selection. Language 1991, 67, 547–619.

- Fillmore, C.J. Frame semantics and the nature of language. Ann. N. Y. Acad. Sci. 1976, 280, 20–32.

- He, L.; Lewis, M.; Zettlemoyer, L. Question-answer driven semantic role labeling: Using natural language to annotate natural language. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 643–653.

- Liu, D.; Gildea, D. Semantic role features for machine translation. In Proceedings of the 23rd International Conference on Computational Linguistics, Beijing, China, 23–27 August 2010; pp. 716–724.

- Collobert, R.; Weston, J. A unified architecture for natural language processing: Deep neural networks with multitask learning. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; pp. 160–167.

- Young, T.; Hazarika, D.; Poria, S.; Cambria, E. Recent trends in deep learning based natural language processing. IEEE Comput. Intell. Mag. 2018, 13, 55–75.

- Zhou, J.; Xu, W. End-to-end learning of semantic role labeling using recurrent neural networks. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics, Beijing, China, 26–31 July 2015; pp. 1127–1137.

- Cho, K.; Van Merriënboer, B.; Bahdanau, D.; Bengio, Y. On the properties of neural machine translation: Encoder-decoder approaches. arXiv, 2014; arXiv:1409.1259.

- He, L.; Lee, K.; Lewis, M.; Zettlemoyer, L. Deep Semantic Role Labeling: What Works and What’s Next. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 473–483.

- Marcheggiani, D.; Frolov, A.; Titov, I. A Simple and Accurate Syntax-Agnostic Neural Model for Dependency-based Semantic Role Labeling. In Proceedings of the 21st Conference on Computational Natural Language Learning (CoNLL 2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 411–420.

- He, L.; Lee, K.; Levy, O.; Zettlemoyer, L. Jointly predicting predicates and arguments in neural semantic role labeling. arXiv, 2018; arXiv:1805.04787.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv, 2014; arXiv:1409.0473.

- Lin, Z.; Feng, M.; Santos, C.N.d.; Yu, M.; Xiang, B.; Zhou, B.; Bengio, Y. A structured self-attentive sentence embedding. arXiv, 2017; arXiv:1703.03130.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv, 2017; arXiv:1706.03762.

- Tan, Z.; Wang, M.; Xie, J.; Chen, Y.; Shi, X. Deep Semantic Role Labeling with Self-Attention. arXiv, 2017; arXiv:1712.01586.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 3104–3112.

- Le, Q.; Mikolov, T. Distributed representations of sentences and documents. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 1188–1196.

- Peters, M.E.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep contextualized word representations. arXiv, 2018; arXiv:1802.05365.

- Strubell, E.; Verga, P.; Andor, D.; Weiss, D.; McCallum, A. Linguistically-Informed Self-Attention for Semantic Role Labeling. arXiv, 2018; arXiv:1804.08199.

- Socher, R.; Lin, C.C.; Manning, C.; Ng, A.Y. Parsing natural scenes and natural language with recursive neural networks. In Proceedings of the 28th International Conference on Machine Learning (ICML-11), Bellevue, WA, USA, 28 June–2 July 2011; pp. 129–136.

- Yang, Z.; Yang, D.; Dyer, C.; He, X.; Smola, A.; Hovy, E. Hierarchical attention networks for document classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1480–1489.

- Carreras, X.; Màrquez, L. Introduction to the CoNLL-2005 shared task: Semantic role labeling. In Proceedings of the Ninth Conference on Computational Natural Language Learning (CoNLL-2005), Ann Arbor, MI, USA, 29–30 June 2005; pp. 152–164.

- Pradhan, S.; Moschitti, A.; Xue, N.; Uryupina, O.; Zhang, Y. CoNLL-2012 shared task: Modeling multilingual unrestricted coreference in OntoNotes. In Proceedings of the Joint Conference on EMNLP and CoNLL-Shared Task, Association for Computational Linguistics, Jeju Island, Korea, 13 July 2012; pp. 1–40.

- Saxe, A.M.; McClelland, J.L.; Ganguli, S. Exact solutions to the nonlinear dynamics of learning in deep linear neural networks. arXiv, 2013; arXiv:1312.6120.

- FitzGerald, N.; Täckström, O.; Ganchev, K.; Das, D. Semantic Role Labeling with Neural Network Factors. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing (EMNLP ’15), Lisbon, Portugal, 17–21 September 2015; pp. 960–970.

- Pradhan, S.; Ward, W.; Hacioglu, K.; Martin, J.H.; Jurafsky, D. Semantic role labeling using different syntactic views. In Proceedings of the 43rd Annual Meeting on Association for Computational Linguistics, Ann Arbor, MI, USA, 25–30 June 2005; pp. 581–588.

- Surdeanu, M.; Turmo, J. Semantic role labeling using complete syntactic analysis. In Proceedings of the Ninth Conference on Computational Natural Language Learning (CoNLL-2005), Ann Arbor, MI, USA, 29–30 June 2005; pp. 221–224.

- Palmer, M.; Gildea, D.; Xue, N. Semantic role labeling. Synth. Lect. Hum. Lang. Technol. 2010, 3, 1–103.

- Collobert, R.; Weston, J.; Bottou, L.; Karlen, M.; Kavukcuoglu, K.; Kuksa, P.P. Natural Language Processing (Almost) from Scratch. J. Mach. Learn. Res. 2011, 12, 2493–2537.

- Fonseca, E.R.; Rosa, J.L.G. A two-step convolutional neural network approach for semantic role labeling. In Proceedings of the 2013 International Joint Conference on Neural Networks (IJCNN), Dallas, TX, USA, 4–9 August 2013; pp. 1–7.

- Marcheggiani, D.; Titov, I. Encoding Sentences with Graph Convolutional Networks for Semantic Role Labeling. arXiv, 2017; arXiv:1703.04826.

- Srivastava, R.K.; Greff, K.; Schmidhuber, J. Training very deep networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 2377–2385.

- Cheng, J.; Dong, L.; Lapata, M. Long short-term memory-networks for machine reading. arXiv, 2016; arXiv:1601.06733.

- Roth, M.; Lapata, M. Neural Semantic Role Labeling with Dependency Path Embeddings. arXiv, 2016; arXiv:1605.07515.

- Perozzi, B.; Al-Rfou, R.; Skiena, S. DeepWalk: Online learning of social representations. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York City, NY, USA, 24–27 August 2014; pp. 701–710.

- Parikh, A.P.; Täckström, O.; Das, D.; Uszkoreit, J. A decomposable attention model for natural language inference. arXiv, 2016; arXiv:1606.01933.

- Paulus, R.; Xiong, C.; Socher, R. A deep reinforced model for abstractive summarization. arXiv, 2017; arXiv:1705.04304.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv, 2018; arXiv:1810.04805.

- Zhou, L.; Zhou, Y.; Corso, J.J.; Socher, R.; Xiong, C. End-to-end dense video captioning with masked transformer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8739–8748.

| Model | Dev. P | Dev. R | Dev. F1 | WSJ Test P | WSJ Test R | WSJ Test F1 | Brown Test P | Brown Test R | Brown Test F1 |
|---|---|---|---|---|---|---|---|---|---|
| Ours+ELMo | 83.1 | 85.6 | 85.5 | 86.4 | 81.0 | 86.6 | 75.8 | 77.6 | 77.2 |
| Ours | 82.8 | 82.6 | 82.2 | 83.6 | 80.1 | 83.3 | 73.5 | 74.0 | 74.9 |
| He et al. (2018) | - | - | 85.3 | 84.8 | 87.2 | 86.0 | 73.9 | 78.4 | 76.1 |
| Tan et al. | 82.6 | 83.6 | 83.1 | 84.5 | 85.2 | 84.8 | 73.5 | 74.6 | 74.1 |
| He et al. (2017) | 81.6 | 81.6 | 81.6 | 83.1 | 83.0 | 83.1 | 72.9 | 71.4 | 72.1 |
| Zhou and Xu | 79.7 | 79.4 | 79.6 | 82.9 | 82.8 | 82.8 | 70.7 | 68.2 | 69.4 |
| FitzGerald et al. | 81.2 | 76.7 | 78.9 | 82.5 | 78.2 | 80.3 | 74.5 | 70.0 | 72.2 |
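The P, R, and F1 columns are related by the standard definition of F1 as the harmonic mean of precision and recall. A minimal sketch, assuming scores are given as percentages; the function name `f1_score` is illustrative and does not come from the paper:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, on the same scale as the inputs."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both scores are zero
    return 2 * precision * recall / (precision + recall)


# Tan et al., development set: P = 82.6, R = 83.6
print(round(f1_score(82.6, 83.6), 1))  # prints 83.1, the F1 reported in that row
```

Because the published P and R values are themselves rounded to one decimal place, a recomputed F1 can differ from a reported one in the last digit.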

| Model | Dev. P | Dev. R | Dev. F1 | OntoNotes Test P | OntoNotes Test R | OntoNotes Test F1 |
|---|---|---|---|---|---|---|
| Ours+ELMo | 82.9 | 81.6 | 81.9 | 83.2 | 83.8 | 83.6 |
| Ours | 81.8 | 81.2 | 81.3 | 81.4 | 82.4 | 82.8 |
| He et al. (2018) | 81.8 | 81.4 | 81.5 | 81.7 | 83.3 | 83.4 |
| Tan et al. | 82.2 | 83.6 | 82.9 | 81.9 | 83.6 | 82.7 |
| He et al. (2017) | 81.7 | 81.4 | 81.5 | 81.8 | 81.6 | 81.7 |
| Zhou and Xu | - | - | 81.1 | - | - | 81.3 |
| FitzGerald et al. | 81.0 | 78.5 | 78.9 | 81.2 | 79.0 | 80.1 |

| | Emb | Seq | Depth | SC | F1 |
|---|---|---|---|---|---|
| 1 | ELMo | BiLSTMs | 4 | Use | 72.5 |
| | ELMo | BiLSTMs | 8 | Use | 85.2 |
| | ELMo | BiLSTMs | 12 | Use | 84.8 |
| 2 | Word2Vec | BiLSTMs | 4 | Use | 66.0 |
| | Word2Vec | BiLSTMs | 8 | Use | 83.1 |
| | Word2Vec | BiLSTMs | 12 | Use | 81.7 |
| 3 | ELMo | FC | 4 | Use | 64.2 |
| | ELMo | FC | 8 | Use | 79.7 |
| | ELMo | FC | 12 | Use | 75.3 |
| 4 | ELMo | FC | 4 | N/A | 71.3 |
| | ELMo | FC | 8 | N/A | 82.2 |
| | ELMo | FC | 12 | N/A | 82.2 |
| 5 | Word2Vec | FC | 4 | N/A | 68.9 |
| | Word2Vec | FC | 8 | N/A | 80.7 |
| | Word2Vec | FC | 12 | N/A | 80.3 |

| N-th Layer | Inferred Labels |
|---|---|
| 4 | rat] chased] |
| 5 | the rat] chased] the birds] |
| 6 | the rat] chased] the birds] |
| 7 | the rat] chased] the bird] that saw the cat] |
| 8 | the rat] chased] the bird] that saw] the cat] |
| 9 | the rat] chased] the bird that saw the cat] that ate] the starling] |
| 10 | the rat] chased] the bird] that saw] the cat] that ate] the starling] |

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Park, J. Selectively Connected Self-Attentions for Semantic Role Labeling. Appl. Sci. 2019, 9, 1716. https://doi.org/10.3390/app9081716