Numerical Evaluation of Sample Gathering Solutions for Mobile Robots

Abstract

1. Introduction

2. Problem Formulation

- the team of robots gathers (collects) all samples from graph G in the storage node within minimum time;

- each robot can carry at most one sample at any moment;

- the total amount of energy spent by each robot is at most its initially available energy.

3. Mathematical Model and Optimal Solution

- (i)

- Determine optimal paths in graph G from storage node to all nodes containing samples.

- (ii)

- Formulate linear constraints for correctly picking samples and for not exceeding robot’s available energy based on a given allocation of each robot to pick specific samples.

- (iii)

- Create a cost function based on robot-to-sample allocation and on necessary time for gathering all samples.

- (iv)

- Cast the above steps in a form suitable for applying existing optimization algorithms and thus find the desired robot-to-sample allocations.

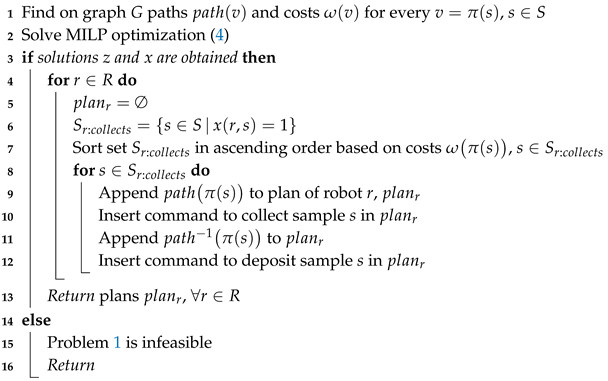

| Algorithm 1: Optimal solution |

| Input: Output: Robot movement plans  |

4. Sub-Optimal Planning Methods

4.1. Quadratic Programming Relaxation

4.2. Iterative Solution

| Algorithm 2: Iterative heuristic solution |

| Input: Output: Robot-to-sample assignments  |

5. Numerical Evaluation and Comparative Analysis

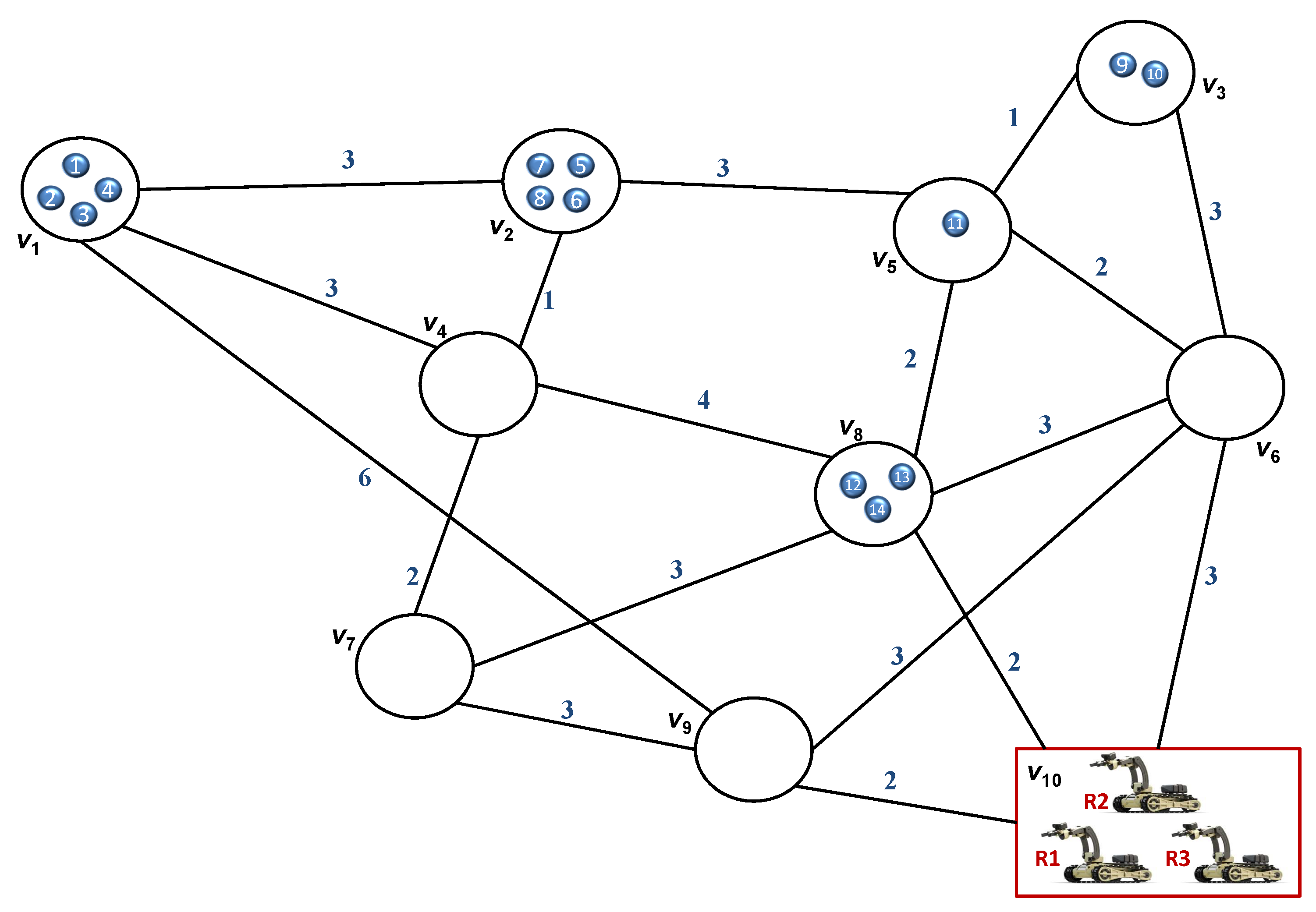

5.1. Additional Examples

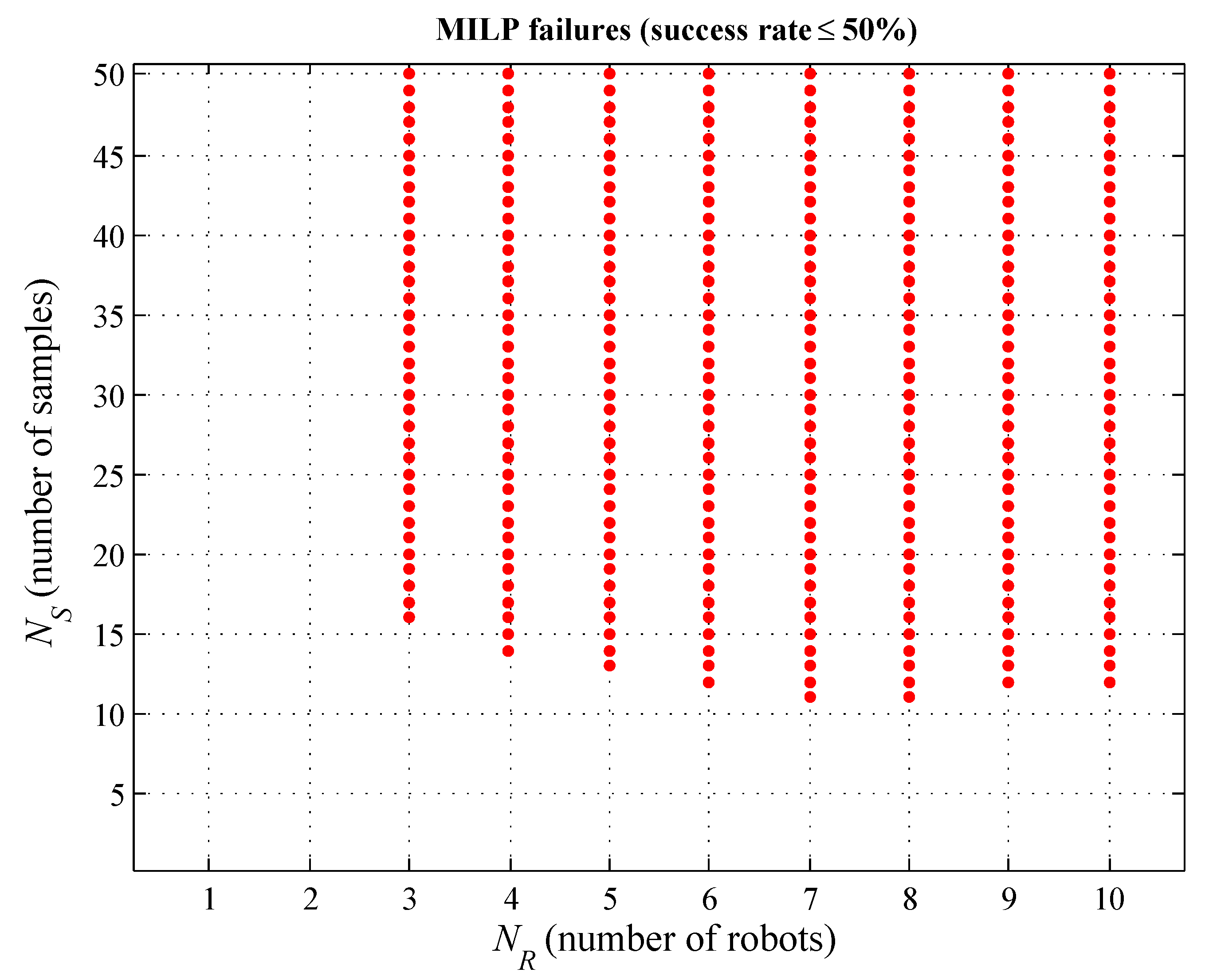

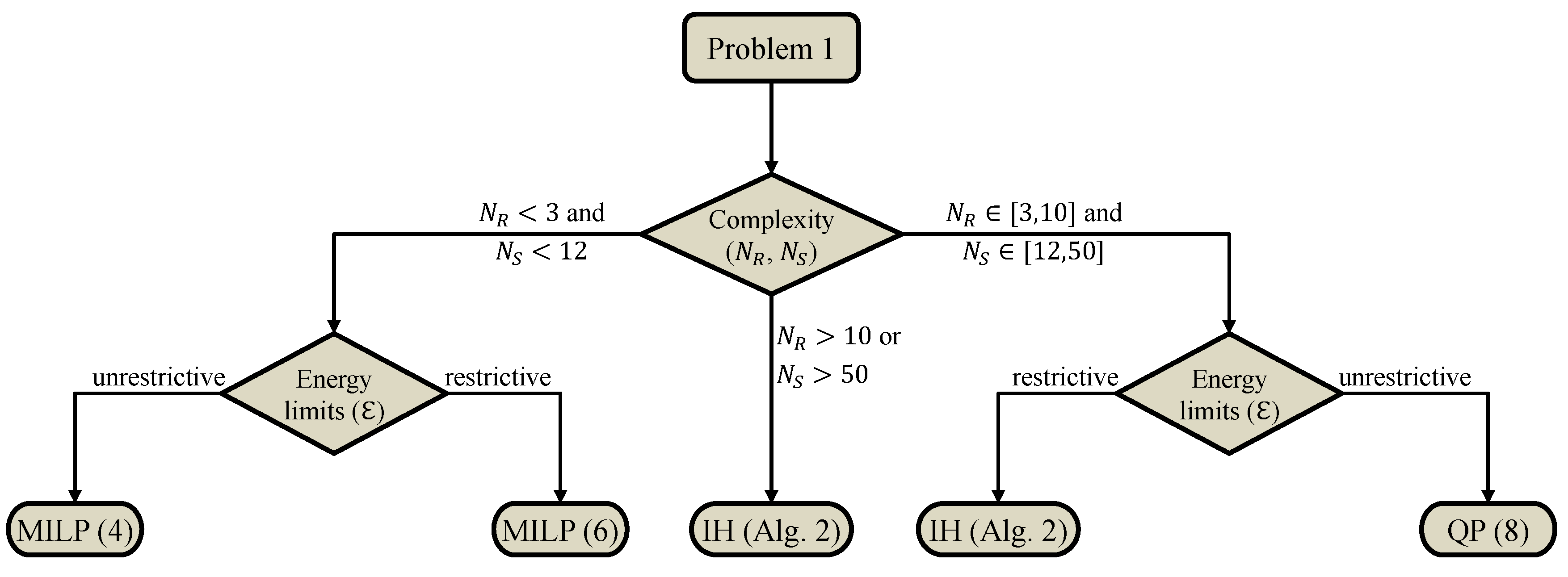

- The running time of the MILP optimization may exhibit unpredictable behaviors with respect to the team size and to the number and position of samples, leading to impossibility of obtaining a solution in some cases;

- In contrast to MILP, the running times of the QP and IH solutions have insignificant variations when small changes are made in the environment;

- When MILP optimization finishes, the sub-optimal costs obtained by solutions from Section 4 are generally acceptable when compared to the optimal cost;

- In some cases the QP cost was better than the one obtained by IH, while in other cases the vice versa, but again the observed differences were fairly small.

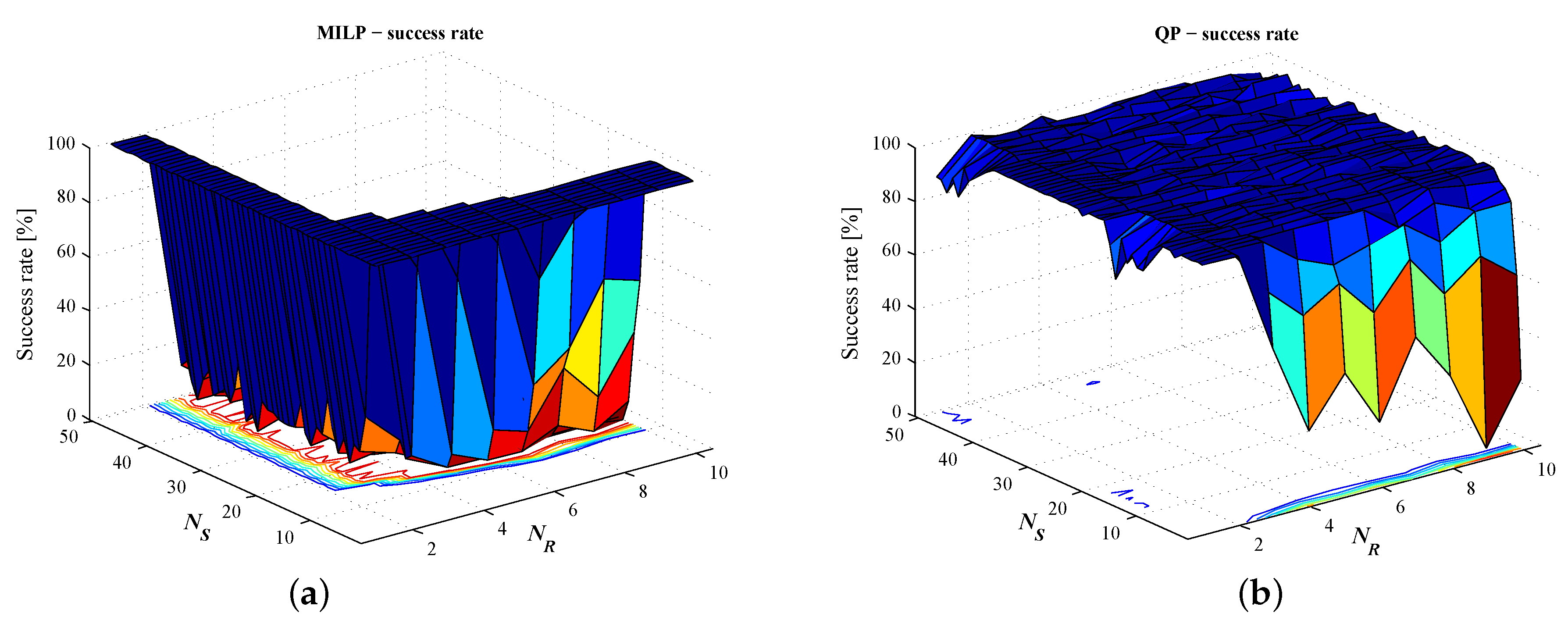

5.2. Numerical Experiments

- (i)

- Time complexity orders cannot be a priori given for MILP or for non-convex QP optimization problems. Thus, the time for obtaining a planning solution solving Problem 1 is to be recorded as an important comparison criterion.

- (ii)

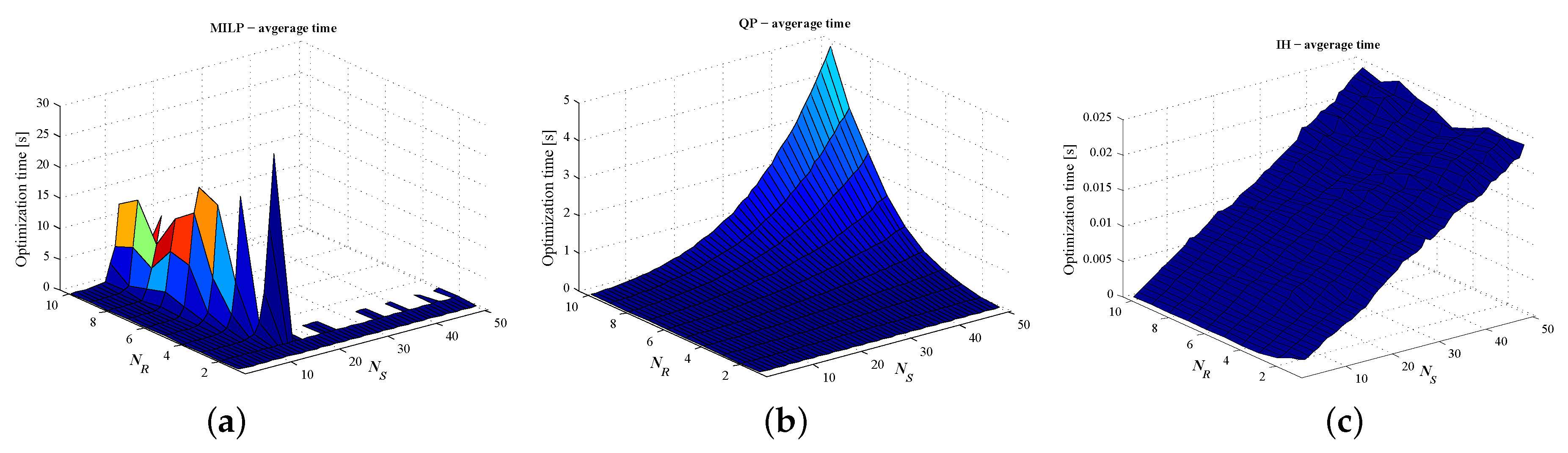

- The complexity of MILP, QP, and IH solutions does not directly depend on the size of environment graph G, except for the initial computation of map that further embeds the necessary information from the environment structure. Therefore, the running time of either solution is influenced by two parameters: the number of robots () and the number of samples ().

- (iii)

- Based on item (ii), we consider variation ranges , with unit increment steps for and . Please note that the cases of 1 robot and/or 1 sample are trivial, and therefore are not included in the above parameter intervals.

- (iv)

- To obtain reliable results for item (i), for each pair we have run a set of 50 trials. For each trial we generated a random distribution of samples in a 50-node graph. To maintain focus on time complexity, we assumed sufficiently large amounts of robot energy , such that Problem 1 is not infeasible due to these limitations.

- (v)

- (vi)

- For each trial from item (iv), the QP solution was deemed failed whenever it yielded non-binary outcomes of map x (see Remark 3). The IH solution is always successful.

- (vii)

- For each proposed solution, for each pair from (iii) and based on trials from (iv), we computed the following comparison criteria:

- success rate, showing the percentage of trials when the solution succeeded in outputting feasible plans;

- computation time, averaged over the successful trials of a given instance;

- solution cost, i.e., time for gathering all samples, for each successful situation.

5.3. Results

- For and , when the success rate of each optimization exceeds , the standard deviations of optimization times are: 15 for MILP, 0.01 for QP, 0.002 for IH;

- For and , when QP has success rate, the standard deviations of optimization times are: 0.23 for QP and 0.003 for IH.

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Choset, H.; Lynch, K.; Hutchinson, S.; Kantor, G.; Burgard, W.; Kavraki, L.; Thrun, S. Principles of Robot Motion: Theory, Algorithms and Implementation; MIT Press: Cambridge, MA, USA, 2005. [Google Scholar]

- LaValle, S.M. Planning Algorithms; Cambridge University Press: Cambridge, UK, 2006. [Google Scholar]

- Siciliano, B.; Khatib, O. Springer Handbook of Robotics; Springer: Berlin, Germany, 2008. [Google Scholar]

- Belta, C.; Bicchi, A.; Egerstedt, M.; Frazzoli, E.; Klavins, E.; Pappas, G.J. Symbolic Planning and Control of Robot Motion. IEEE Robot. Autom. Mag. 2007, 14, 61–71. [Google Scholar] [CrossRef]

- Imeson, F.; Smith, S.L. A Language For Robot Path Planning in Discrete Environments: The TSP with Boolean Satisfiability Constraints. In Proceedings of the IEEE Conference on Robotics and Automation, Hong Kong, China, 31 May–7 June 2014; pp. 5772–5777. [Google Scholar]

- Mahulea, C.; Kloetzer, M. Robot Planning based on Boolean Specifications using Petri Net Models. IEEE Trans. Autom. Control 2018, 63, 2218–2225. [Google Scholar] [CrossRef]

- Fainekos, G.; Girard, A.; Kress-Gazit, H.; Pappas, G. Temporal logic motion planning for dynamic robot. Automatica 2009, 45, 343–352. [Google Scholar] [CrossRef]

- Ding, X.; Lazar, M.; Belta, C. {LTL} receding horizon control for finite deterministic systems. Automatica 2014, 50, 399–408. [Google Scholar] [CrossRef]

- Schillinger, P.; Bürger, M.; Dimarogonas, D. Simultaneous task allocation and planning for temporal logic goals in heterogeneous multi-robot systems. Int. J. Robot. Res. 2018, 37, 818–838. [Google Scholar] [CrossRef]

- Kloetzer, M.; Mahulea, C. LTL-Based Planning in Environments With Probabilistic Observations. IEEE Trans. Autom. Sci. Eng. 2015, 12, 1407–1420. [Google Scholar] [CrossRef]

- Berg, M.D.; Cheong, O.; van Kreveld, M. Computational Geometry: Algorithms and Applications, 3rd ed.; Springer: Berlin, Germany, 2008. [Google Scholar]

- Kloetzer, M.; Mahulea, C. A Petri net based approach for multi-robot path planning. Discret. Event Dyn. Syst. 2014, 24, 417–445. [Google Scholar] [CrossRef]

- Cassandras, C.; Lafortune, S. Introduction to Discrete Event Systems; Springer: Berlin, Germany, 2008. [Google Scholar]

- Silva, M. Introducing Petri nets. In Practice of Petri Nets in Manufacturing; Springer: Berlin, Germany, 1993; pp. 1–62. [Google Scholar]

- Burkard, R.; Dell’Amico, M.; Martello, S. Assignment Problems; SIAM e-Books; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 2009. [Google Scholar]

- Mosteo, A.; Montano, L. A Survey of Multi-Robot Task Allocation; Technical Report AMI-009-10-TEC; Instituto de Investigación en Ingenierıa de Aragón, University of Zaragoza: Zaragoza, Spain, 2010. [Google Scholar]

- Gerkey, B.; Matarić, M. A formal analysis and taxonomy of task allocation in multi-robot systems. Int. J. Robot. Res. 2004, 23, 939–954. [Google Scholar] [CrossRef]

- Korsah, G.; Stentz, A.; Dias, M. A comprehensive taxonomy for multi-robot task allocation. Int. J. Robot. Res. 2013, 32, 1495–1512. [Google Scholar] [CrossRef]

- Shmoys, D.; Tardos, É. An approximation algorithm for the generalized assignment problem. Math. Program. 1993, 62, 461–474. [Google Scholar] [CrossRef]

- Atay, N.; Bayazit, B. Emergent task allocation for mobile robots. In Proceedings of the Robotics: Science and Systems, Atlanta, GA, USA, 27–30 June 2007. [Google Scholar]

- Cardema, J.; Wang, P. Optimal Path Planning of Multiple Mobile Robots for Sample Collection on a Planetary Surface. In Mobile Robots: Perception & Navigation; Kolski, S., Ed.; IntechOpen: London, UK, 2007; pp. 605–636. [Google Scholar]

- Chen, J.; Sun, D. Coalition-Based Approach to Task Allocation of Multiple Robots with Resource Constraints. IEEE Trans. Autom. Sci. Eng. 2012, 9, 516–528. [Google Scholar] [CrossRef]

- Das, G.; McGinnity, T.; Coleman, S.; Behera, L. A Distributed Task Allocation Algorithm for a Multi-Robot System in Healthcare Facilities. J. Intell. Robot. Syst. 2015, 80, 33–58. [Google Scholar] [CrossRef]

- Burger, M.; Notarstefano, G.; Allgower, F.; Bullo, F. A distributed simplex algorithm and the multi-agent assignment problem. In Proceedings of the American Control Conference (ACC), San Francisco, CA, USA, 29 June–1 July 2011; pp. 2639–2644. [Google Scholar]

- Luo, L.; Chakraborty, N.; Sycara, K. Multi-robot assignment algorithm for tasks with set precedence constraints. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 2526–2533. [Google Scholar]

- Toth, P.; Vigo, D. (Eds.) The Vehicle Routing Problem; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2001. [Google Scholar]

- Laporte, G.; Nobert, Y. Exact Algorithms for the Vehicle Routing Problem. In Surveys in Combinatorial Optimization; Martello, S., Minoux, M., Ribeiro, C., Laporte, G., Eds.; North-Holland Mathematics Studies; North-Holland: Amsterdam, The Netherlands, 1987; Volume 132, pp. 147–184. [Google Scholar]

- Toth, P.; Vigo, D. Models, relaxations and exact approaches for the capacitated vehicle routing problem. Discret. Appl. Math. 2002, 123, 487–512. [Google Scholar] [CrossRef]

- Raff, S. Routing and scheduling of vehicles and crews: The state of the art. Comput. Oper. Res. 1983, 10, 63–211. [Google Scholar] [CrossRef]

- Christofides, N. Vehicle routing. In The Traveling Salesman Problem: A guided Tour of Combinatorial Optimization; Wiley: New York, NY, USA, 1985; pp. 410–431. [Google Scholar]

- Laporte, G. The vehicle routing problem: An overview of exact and approximate algorithms. Eur. J. Oper. Res. 1992, 59, 345–358. [Google Scholar] [CrossRef]

- Chen, J.F.; Wu, T.H. Vehicle routing problem with simultaneous deliveries and pickups. J. Oper. Res. Soc. 2006, 57, 579–587. [Google Scholar] [CrossRef]

- Kloetzer, M.; Burlacu, A.; Panescu, D. Optimal multi-agent planning solution for a sample gathering problem. In Proceedings of the IEEE International Conference on Automation, Quality and Testing, Robotics (AQTR), Cluj-Napoca, Romania, 22–24 May 2014. [Google Scholar]

- Kloetzer, M.; Ostafi, F.; Burlacu, A. Trading optimality for computational feasibility in a sample gathering problem. In Proceedings of the International Conference on System Theory, Control and Computing, Sinaia, Romania, 17–19 October 2014; pp. 151–156. [Google Scholar]

- Kloetzer, M.; Mahulea, C. An Assembly Problem with Mobile Robots. In Proceedings of the IEEE 19th Conference on Emerging Technologies Factory Automation, Barcelona, Spain, 16–19 September 2014. [Google Scholar]

- Panescu, D.; Kloetzer, M.; Burlacu, A.; Pascal, C. Artificial Intelligence based Solutions for Cooperative Mobile Robots. J. Control Eng. Appl. Inform. 2012, 14, 74–82. [Google Scholar]

- Kloetzer, M.; Mahulea, C.; Burlacu, A. Sample gathering problem for different robots with limited capacity. In Proceedings of the International Conference on System Theory, Control and Computing, Sinaia, Romania, 13–15 October 2016; pp. 490–495. [Google Scholar]

- Tumova, J.; Dimarogonas, D. Multi-agent planning under local LTL specifications and event-based synchronization. Automatica 2016, 70, 239–248. [Google Scholar] [CrossRef]

- Belta, C.; Habets, L. Constructing decidable hybrid systems with velocity bounds. In Proceedings of the 43rd IEEE Conference on Decision and Control, Nassau, Bahamas, 14–17 December 2004; pp. 467–472. [Google Scholar]

- Habets, L.C.G.J.M.; Collins, P.J.; van Schuppen, J.H. Reachability and control synthesis for piecewise-affine hybrid systems on simplices. IEEE Trans. Autom. Control 2006, 51, 938–948. [Google Scholar] [CrossRef]

- Garey, M.; Johnson, D. “Strong” NP-Completeness Results: Motivation, Examples, and Implications. J. ACM 1978, 25, 499–508. [Google Scholar] [CrossRef]

- Cormen, T.; Leiserson, C.; Rivest, R.; Stein, C. Introduction to Algorithms, 2nd ed.; MIT Press: Cambridge, MA, USA, 2001. [Google Scholar]

- Ding-Zhu, D.; Pardolos, P. Nonconvex Optimization and Applications: Minimax and Applications; Spinger: New York, NY, USA, 1995. [Google Scholar]

- Polak, E. Optimization: Algorithms and Consistent Approximations; Spinger: New York, NY, USA, 1997. [Google Scholar]

- Floudas, C.; Pardolos, P. Encyclopedia of Optimization, 2nd ed.; Spinger: New York, NY, USA, 2009; Volume 2. [Google Scholar]

- Makhorin, A. GNU Linear Programming Kit. 2012. Available online: http://www.gnu.org/software/glpk/ (accessed on 4 January 2019).

- SAS Institute. The Mixed-Integer Linear Programming Solver. 2014. Available online: http://support.sas.com/rnd/app/or/mp/MILPsolver.html (accessed on 4 January 2019).

- The MathWorks. MATLAB®R2014a (v. 8.3); The MathWorks: Natick, MA, USA, 2006. [Google Scholar]

- Wolsey, L.; Nemhauser, G. Integer and Combinatorial Optimization; Wiley: New York, NY, USA, 1999. [Google Scholar]

- Vazirani, V.V. Approximation Algorithms; Springer: New York, NY, USA, 2001. [Google Scholar]

- Earl, M.; D’Andrea, R. Iterative MILP Methods for Vehicle-Control Problems. IEEE Trans. Robot. 2005, 21, 1158–1167. [Google Scholar] [CrossRef]

- Murray, W.; Ng, K. An Algorithm for Nonlinear Optimization Problems with Binary Variables. Comput. Optim. Appl. 2010, 47, 257–288. [Google Scholar] [CrossRef]

- Tiganas, V.; Kloetzer, M.; Burlacu, A. Multi-Robot based Implementation for a Sample Gathering Problem. In Proceedings of the International Conference on System Theory, Control and Computing, Sinaia, Romania, 11–13 October 2013; pp. 545–550. [Google Scholar]

- Kloetzer, M.; Mahulea, C.; Colom, J.M. Petri net approach for deadlock prevention in robot planning. In Proceedings of the IEEE Conference on Emerging Technologies Factory Automation (ETFA), Cagliari, Italy, 10–13 September 2013; pp. 1–4. [Google Scholar]

- Roszkowska, E.; Reveliotis, S. A Distributed Protocol for Motion Coordination in Free-Range Vehicular Systems. Automatica 2013, 49, 1639–1653. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Burlacu, A.; Kloetzer, M.; Mahulea, C. Numerical Evaluation of Sample Gathering Solutions for Mobile Robots. Appl. Sci. 2019, 9, 791. https://doi.org/10.3390/app9040791

Burlacu A, Kloetzer M, Mahulea C. Numerical Evaluation of Sample Gathering Solutions for Mobile Robots. Applied Sciences. 2019; 9(4):791. https://doi.org/10.3390/app9040791

Chicago/Turabian StyleBurlacu, Adrian, Marius Kloetzer, and Cristian Mahulea. 2019. "Numerical Evaluation of Sample Gathering Solutions for Mobile Robots" Applied Sciences 9, no. 4: 791. https://doi.org/10.3390/app9040791

APA StyleBurlacu, A., Kloetzer, M., & Mahulea, C. (2019). Numerical Evaluation of Sample Gathering Solutions for Mobile Robots. Applied Sciences, 9(4), 791. https://doi.org/10.3390/app9040791