Construction of the Uyghur Noun Morphological Re-Inflection Model Based on Hybrid Strategy

Abstract

1. Introduction

2. The Nominal Paradigm of Uyghur Language

2.1. The Singular and Plural Forms of the Uyghur Noun

… are chosen based on the phonetic harmony rule between stem and suffixes.

2.2. The Ownership-Dependent Forms of the Uyghur Noun

2.3. The Case Category of the Uyghur Noun

3. Rules for Connecting Uyghur Noun Stems and Suffixes

4. Description of Morphological Re-Inflection Model

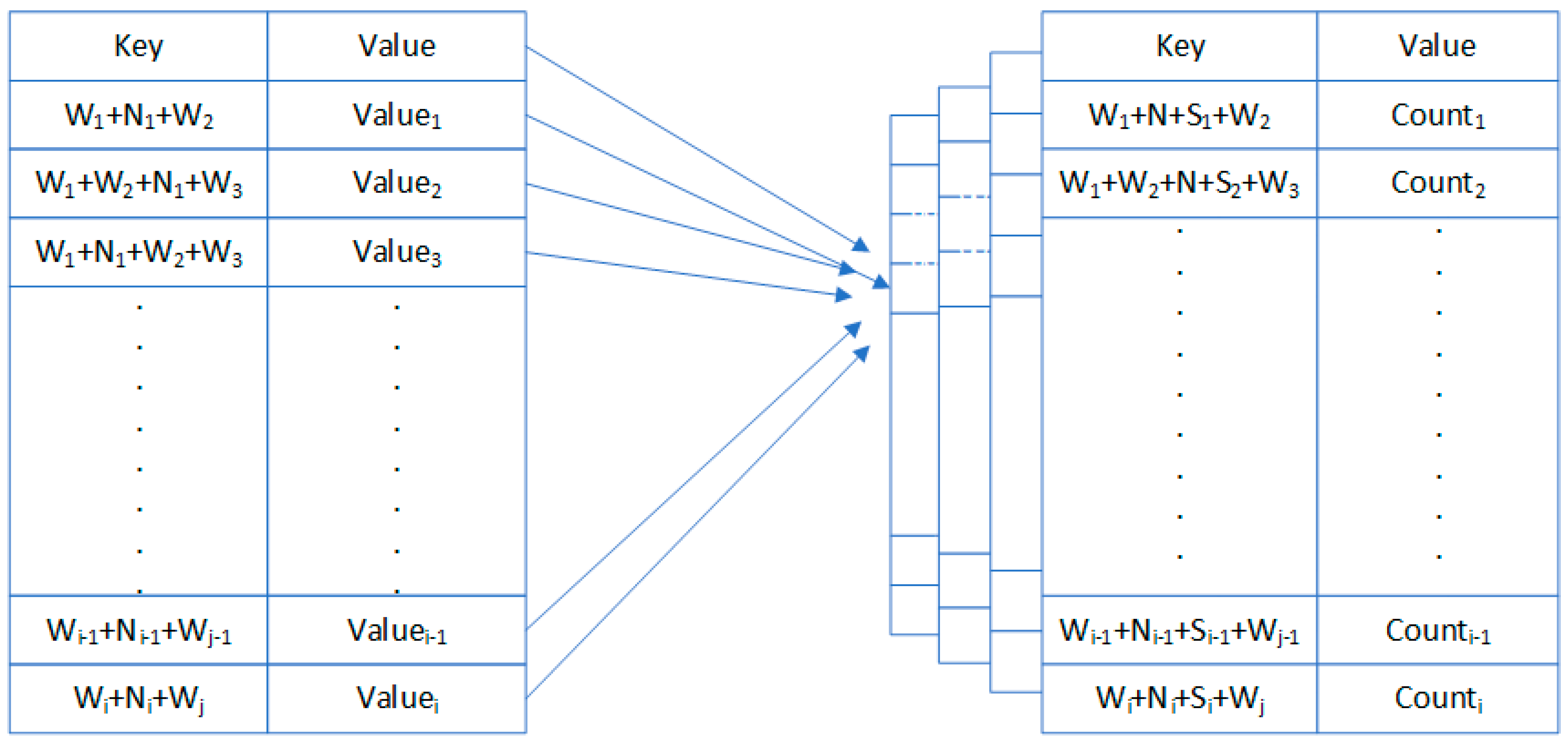

4.1. Data Structure Design

4.2. Data Preprocessing

4.3. Training

4.4. Testing

4.4.1. Obtaining the Test Data Combinations

4.4.2. Suffix Selection

5. Matching of Uyghur Stem and Suffix

5.1. Uyghur Noun Suffix Variants

5.2. Uyghur Alphabet Classification

5.3. Construction of Variant Matching Rules Repository

5.3.1. Voiced-Consonant Stems and Noun Suffixes

5.3.2. Voiceless Consonant Stems and Noun Suffixes

5.3.3. Front Vowel and Noun Suffixes

5.3.4. Back Vowel and Noun Suffixes

5.3.5. Middle Vowel and Noun Suffixes

5.4. Uyghur Phonological Phenomena

6. Experiment

6.1. Design of the Experiment

6.2. Experiment and Analysis

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Person | Type | Suffix | Examples | In English |
|---|---|---|---|---|
| 1st person | Singular | م، ىم، ۇم، ۈم | بالام، قەلىمىم، قولۇم، كۆزۈم | My child, my pen |
| 1st person | Plural | مىز، ىمىز | بالىمىز، قەلىمىمىز | Our children |
| 2nd person | Singular ordinary | ڭ، ىڭ، ۇڭ، ۈڭ | بالاڭ، قەلىمىڭ، قولۇڭ | Your child |
| 2nd person | Plural ordinary | ڭلار، ىڭلار، ۇڭلار، ۈڭلار | بالاڭلار، قەلىمىڭلار | Your children |
| 2nd person | Singular refined | ڭىز، ىڭىز | بالىڭىز، قەلىمىڭىز | Your child |
| 3rd person | Singular and plural respectful | لىرى | بالىلىرى | His/their children |
| 3rd person | | سى، ى | بالىسى، قەلىمى | His/their pen |
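The first-person singular row suggests a simple selection pattern. A vowel-final stem takes م. A consonant-final stem takes ىم, ۇم or ۈم, depending on the stem's vowel. The Python sketch below encodes that reading of the table. It has four limits. First, it is an assumption, not the authors' rule set. Second, `first_person_singular_suffix` is a hypothetical name. Third, it assumes the stem's last vowel decides between ۇم and ۈم. Fourth, it only picks the variant and does not apply phonological changes such as vowel weakening. As a result, قەلەم yields the variant ىم and not the surface form قەلىمىم.

```python
from typing import Optional

# Hypothetical sketch: choose the 1st-person singular possessive variant
# (م / ىم / ۇم / ۈم) from the examples in the table.
# Assumption: the last vowel of the stem decides between ۇم and ۈم.
# Vowel weakening and other sound changes are NOT modelled.

VOWELS = set("اەېىوۇۆۈ")
BACK_ROUNDED = set("وۇ")   # ئو ئۇ
FRONT_ROUNDED = set("ۆۈ")  # ئۆ ئۈ


def last_vowel(stem: str) -> Optional[str]:
    """Return the last vowel letter of the stem, or None if there is none."""
    for ch in reversed(stem):
        if ch in VOWELS:
            return ch
    return None


def first_person_singular_suffix(stem: str) -> str:
    if stem[-1] in VOWELS:
        return "م"       # vowel-final stem, e.g. بالا -> بالام
    vowel = last_vowel(stem)
    if vowel in BACK_ROUNDED:
        return "ۇم"      # e.g. قول -> قولۇم
    if vowel in FRONT_ROUNDED:
        return "ۈم"      # e.g. كۆز -> كۆزۈم
    return "ىم"          # e.g. قەلەم -> variant ىم (surface form قەلىمىم)


for stem in ["بالا", "قول", "كۆز", "قەلەم"]:
    print(stem, "+", first_person_singular_suffix(stem))
```

Simple concatenation reproduces قولۇم and كۆزۈم exactly. For بالا and قەلەم, a weakening step like those listed in Section 5.4 would still be needed for the other persons' forms.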

| Case Name | Case Suffixes | Examples | In English |
|---|---|---|---|
| Nominative case | None | مەيدان، كىتاب، ئۆي، دەرس | Square, book, house, lesson |
| Possessive case | نىڭ | كىتابنىڭ | Book’s |
| Dative case | غا، قا، گە، كە | مەيدانغا، سىرىتقا، ئۆيگە، دەرسكە | To the square, to the outside, to the house, to the lesson |
| Accusative case | نى | كىتابنى | The book (as direct object) |
| Locative case | دا، تا، دە، تە | مەيداندا، كىتابتا، ئۆيدە، دەرستە | On the square / … book |
| Ablative case | دىن، تىن | مەيداندىن، كىتابتىن | From the square, from the book |
| Locative-qualitative case | دىكى، تىكى | باغدىكى، شەھەردىكى | Flower (grows in gardens) |
| Limitative case | غىچە، قىچە، كىچە، گىچە | سىنتەبىرگىچە، قۇلاقلىرىغىچە | Until September |
| Similitude case | دەك، تەك | بالاڭدەك، كىتابتەك | Like your child, like a book |
| Equivalence case | چىلىك، چە | مەيدانچىلىك، كىتابچە | As a square |

Example sentence, tokenized (nouns tagged N):

| Position | Token | Tag | After noun replacement | Gloss |
|---|---|---|---|---|
| 0 | بۇ | | بۇ | this |
| 1 | شياۋمېي | N | N | XiaoMei |
| 2 | ئىسىملىك | N | N | named |
| 3 | بىر | | بىر | one |
| 4 | مېيىپ | | مېيىپ | handicapped |
| 5 | قىزچاق | N | N | girl |
| 6 | يازغان | | يازغان | wrote |
| 7 | ماقالە | N | N | article |
| 8 | ، | | ، | (comma) |
| 9 | ماقالە | N | N | article |
| 10 | ئۇنىڭ | | ئۇنىڭ | his/her |
| 11 | سودىيە | N | N | judge |
| 12 | ئاپا | N | N | mother |
| 13 | دەپ | | دەپ | say |
| 14 | ئاتىغىنى | | ئاتىغىنى | called |
| 15 | پۇجياڭ | N | N | PuJiang |
| 16 | سوت | N | N | court |
| 17 | مەھكىمە | N | N | administration |
| 18 | سودىيە | N | N | judge |
| 19 | چېن | N | N | Qin |
| 20 | يەنپىڭ | | يەنپىڭ | YanPing |
| 21 | . | | . | (full stop) |

Noun positions: [1, 2, 5, 7, 9, 11, 12, 15, 16, 17, 18, 19]
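The noun-replacement step in the example can be reproduced in a few lines. Each token tagged `|N` becomes the placeholder `N`, and its index is recorded. This is a minimal Python sketch under two assumptions: the `|N` tag format is taken from the example sentence, and the function name `replace_nouns` is hypothetical.

```python
# Sketch: replace tagged nouns with a placeholder and record their indices.
# Tag format "|N" follows the example sentence; the function name is hypothetical.

def replace_nouns(tokens, tag="|N", placeholder="N"):
    """Return (tokens with nouns replaced by the placeholder, noun positions)."""
    replaced, positions = [], []
    for i, token in enumerate(tokens):
        if token.endswith(tag):
            replaced.append(placeholder)
            positions.append(i)
        else:
            replaced.append(token)
    return replaced, positions


sentence = [
    "بۇ", "شياۋمېي|N", "ئىسىملىك|N", "بىر", "مېيىپ", "قىزچاق|N", "يازغان",
    "ماقالە|N", "،", "ماقالە|N", "ئۇنىڭ", "سودىيە|N", "ئاپا|N", "دەپ",
    "ئاتىغىنى", "پۇجياڭ|N", "سوت|N", "مەھكىمە|N", "سودىيە|N", "چېن|N",
    "يەنپىڭ", ".",
]

replaced, positions = replace_nouns(sentence)
print(positions)  # [1, 2, 5, 7, 9, 11, 12, 15, 16, 17, 18, 19]
```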

| شياۋمېي (XiaoMei) | 2-gram | 3-gram | 4-gram |
|---|---|---|---|
| Probability | 0.0093 | 2.648 | 4.56 |
| Combination | ['N', 'N'] | ['N', 'N+ى', 'بىر'] (N, N+suffix, one) | ['N+دا', 'N+دىن', 'بىر', 'مېيىپ'] (N+suffix, N+suffix, one, handicapped) |
| Position | 0 | 0 | 0 |

| ئىسىملىك (named) | 2-gram | 3-gram | 4-gram |
|---|---|---|---|
| Probability | 0.00015 | 4.5617 | 4.56173 |
| Combination | ['N', 'بىر'] (N, one) | ['N+دىن', 'بىر', 'مېيىپ'] (N+suffix, one, handicapped) | ['N+دىن', 'بىر', 'مېيىپ', 'N'] (N+suffix, one, handicapped, N) |
| Position | 0 | 0 | 0 |

| قىزچاق (girl) | 2-gram | 3-gram | 4-gram |
|---|---|---|---|
| Probability | 1.80188 | 6.64873 | 4.56173 |
| Combination | ['N', 'يازغان'] (N, wrote) | ['N+لىرى+غا', 'يازغان', 'N'] (N+suffix+suffix, wrote, N) | ['N+لەر+نى', 'يازغان', 'N', '،'] (N+suffix+suffix, wrote, N, comma) |
| Position | 0 | 0 | 1 |
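If the suffixes are stripped from the combinations in the three tables above, each 2-, 3- and 4-gram is simply the run of tokens that starts at the noun in the noun-replaced sentence. The Python sketch below collects those windows. It is a hypothetical helper (`noun_windows` is not from the paper). It does not model how the suffixed candidates are scored, or how one of them is selected.

```python
# Hypothetical sketch: collect the 2-, 3- and 4-token windows that start at
# each noun placeholder in the noun-replaced sentence. Scoring and selecting
# suffixed candidates for these windows is NOT modelled here.

def noun_windows(tokens, placeholder="N", sizes=(2, 3, 4)):
    """Map each noun position to {n: tokens[pos:pos + n]} for every n that fits."""
    windows = {}
    for pos, token in enumerate(tokens):
        if token != placeholder:
            continue
        windows[pos] = {n: tokens[pos:pos + n] for n in sizes if pos + n <= len(tokens)}
    return windows


replaced = [
    "بۇ", "N", "N", "بىر", "مېيىپ", "N", "يازغان", "N", "،", "N", "ئۇنىڭ",
    "N", "N", "دەپ", "ئاتىغىنى", "N", "N", "N", "N", "N", "يەنپىڭ", ".",
]

windows = noun_windows(replaced)
print(windows[5][4])  # ['N', 'يازغان', 'N', '،']
```

The noun at position 19 is too close to the end of the sentence for a 4-token window. It therefore receives only its 2- and 3-token windows.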

| Vowel Type | Front Vowel | Middle Vowel | Back Vowel |
|---|---|---|---|
| Rounded vowel | ئۈ ئۆ | | ئۇ ئو |
| Unrounded vowel | ئە | ئې ئى | ئا |

| Consonant Type | Letters |
|---|---|
| Voiced consonants | ۋ، ڭ، گ، ژ، ي، ن، م، ل، غ، ز، ر، د، ج، ب، ھ |
| Voiceless consonants | ق، چ، پ، ك، ف، ش، س، خ، ت |

| N+Suffix | With the Actual Stem | Suffix Variants | Correct Stem+Suffix |
|---|---|---|---|
| N+دىن | مەكتەپ+دىن | دىن، تىن | مەكتەپ+تىن |
| N+غا | سىرت+غا | گە، غا، قا، كە | سىرت+قا |
| N+غىچە | سىنتەبىر+غىچە | قىچە، غىچە، گىچە، كىچە | سىنتەبىر+گىچە |
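The variant table picks the correct suffix from two properties of the stem. The first is its final sound: voiced or voiceless, per the consonant table. The second is its vowel class, per the vowel table. The Python sketch below is one simplified reading of that idea, not the paper's rule repository. The names `CASE_VARIANTS`, `is_back` and `attach_case` are hypothetical. The sketch makes three assumptions:

- Vowel-final stems count as voiced.
- Backness is decided by the last front or back vowel.
- A stem whose vowels are all middle vowels (ئې، ئى) defaults to the back variants. This default was chosen so that سىرت+قا comes out as in the table.

No sound changes are applied.

```python
# Simplified, hypothetical sketch of stem/suffix variant matching.
# Assumptions (not the paper's rule repository):
#   * voicing is decided by the stem's last letter (vowels count as voiced);
#   * backness is decided by the last front or back vowel, skipping the
#     middle vowels ې and ى; a stem with only middle vowels defaults to back;
#   * sound changes such as vowel weakening are not applied.

BACK_VOWELS = set("اوۇ")       # ئا ئو ئۇ
FRONT_VOWELS = set("ەۆۈ")      # ئە ئۆ ئۈ
VOICELESS = set("قچپكفشسخت")   # voiceless consonants from the table

# Four-way: (back+voiced, back+voiceless, front+voiced, front+voiceless)
# Two-way:  (voiced, voiceless)
CASE_VARIANTS = {
    "dative": ("غا", "قا", "گە", "كە"),
    "locative": ("دا", "تا", "دە", "تە"),
    "limitative": ("غىچە", "قىچە", "گىچە", "كىچە"),
    "ablative": ("دىن", "تىن"),
    "locative_qualitative": ("دىكى", "تىكى"),
    "similitude": ("دەك", "تەك"),
}


def is_back(stem: str) -> bool:
    for ch in reversed(stem):
        if ch in BACK_VOWELS:
            return True
        if ch in FRONT_VOWELS:
            return False
    return True  # assumption: only middle vowels -> back variants


def attach_case(stem: str, case: str) -> str:
    variants = CASE_VARIANTS[case]
    voiceless = stem[-1] in VOICELESS
    if len(variants) == 2:
        suffix = variants[1] if voiceless else variants[0]
    else:
        offset = 0 if is_back(stem) else 2
        suffix = variants[offset + (1 if voiceless else 0)]
    return stem + suffix


print(attach_case("مەكتەپ", "ablative"))      # مەكتەپتىن
print(attach_case("سىرت", "dative"))          # سىرتقا
print(attach_case("سىنتەبىر", "limitative"))  # سىنتەبىرگىچە
```

The case table shows one limitation. كىتاب ends in ب, which the consonant table lists as voiced, yet its case forms use the voiceless variants (كىتابتا، كىتابتىن). This sketch would produce كىتابدا instead, so stems like this need an exception list or a rule beyond the two consonant classes.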

| Phenomenon | Example | Description |
|---|---|---|
| Weakening (vowel) | قەلەم+ىم=قەلىمىم | The vowel ئە turns into ئى |
| Weakening (consonant) | كەل+ىپ+ىدىم=كېلىۋىدىم | The consonant پ turns into ۋ |
| Insertion | ئارزۇ+ۇم=ئارزۇيۇم | The consonant ي is added after the stem |
| Deletion | بۇرۇن+ى=بۇرنى | The second vowel ئۇ of the stem is deleted |
| Weakening/Deletion | چال+ىپ+تۇ+كەن=چېلىپتىكەن | ئا turns into ئې, and the first ئى and ئۇ are deleted |

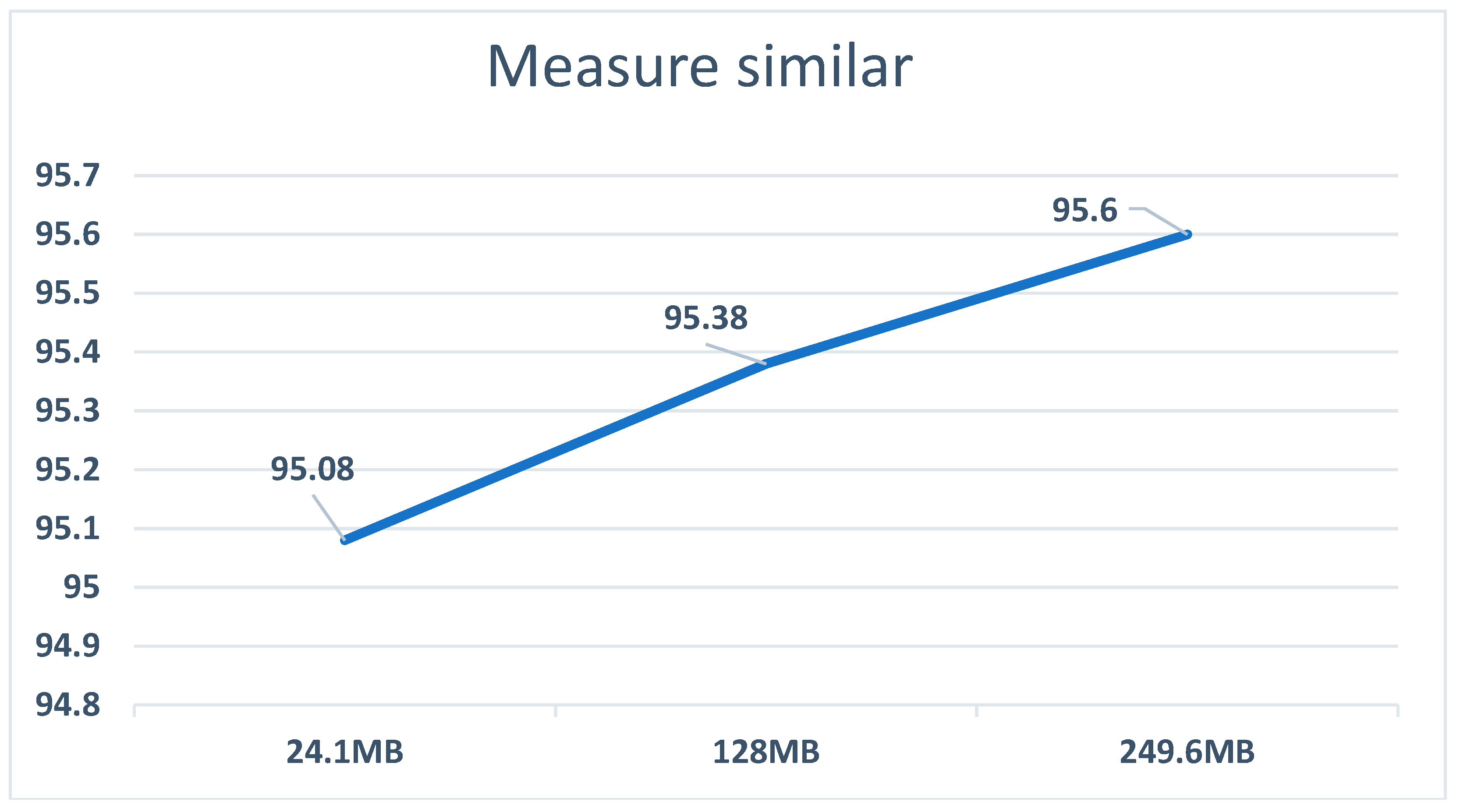

| Train Set (Data Amount) | Test Set (Data Amount) | Similarity |
|---|---|---|
| 24.1 MB | 66 KB | 95.08 |
| 128 MB | 66 KB | 95.38 |
| 249.6 MB | 66 KB | 95.60 |

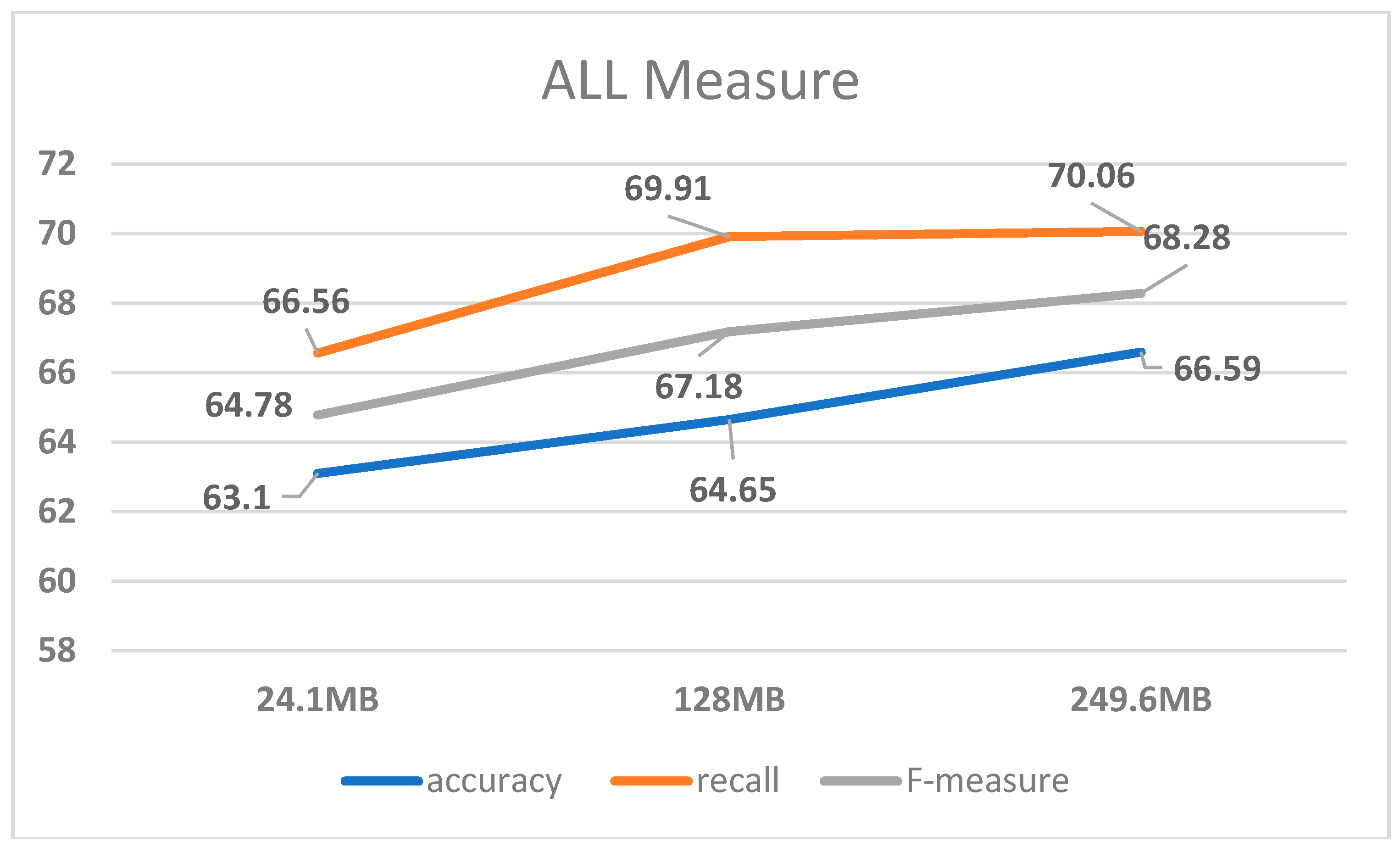

| Train Set (Data Amount) | Test Set (Data Amount) | Accuracy (Precision) | Recall | F-measure |
|---|---|---|---|---|
| 24.1 MB | 66 KB | 63.10 | 66.56 | 64.78 |
| 128 MB | 66 KB | 64.65 | 69.91 | 67.18 |
| 249.6 MB | 66 KB | 66.59 | 70.06 | 68.28 |
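The F-measure column can be checked against the other two columns. Take the accuracy column as precision P and the recall column as R. The harmonic mean F = 2PR / (P + R) then reproduces every reported F-measure to two decimal places, which indicates that "accuracy" here plays the role of precision. The short Python check below performs that computation on the numbers in the table and adds no new results.

```python
# Check that each reported F-measure equals the harmonic mean of the
# accuracy and recall columns: F = 2PR / (P + R), with P = accuracy.

def f_measure(precision: float, recall: float) -> float:
    return 2 * precision * recall / (precision + recall)


# (training-set size, accuracy, recall, reported F-measure) from the table
RESULTS = [
    ("24.1 MB", 63.10, 66.56, 64.78),
    ("128 MB", 64.65, 69.91, 67.18),
    ("249.6 MB", 66.59, 70.06, 68.28),
]

for size, acc, rec, reported in RESULTS:
    computed = f_measure(acc, rec)
    print(f"{size}: computed {computed:.2f}, reported {reported:.2f}")
```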

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Munire, M.; Li, X.; Yang, Y. Construction of the Uyghur Noun Morphological Re-Inflection Model Based on Hybrid Strategy. Appl. Sci. 2019, 9, 722. https://doi.org/10.3390/app9040722
