1. Introduction

This paper introduces a single-camera trilateration scheme that determines the instantaneous 3D pose (position and orientation) of a regular forward-looking camera, which can be moving, based on a single image of landmarks taken by the camera. It provides a convenient self-localization tool for mobile robots and other vehicles with onboard cameras.

Self-localization, i.e., how a mobile robot determines its own pose within its environment, is a fundamental problem in mobile robotics and has been studied extensively. The approach of this paper is inspired by the principle of trilateration which, given the correspondence of external references, is the most widely adopted external reference-based localization technique for mobile robots.

Trilateration refers to positioning an object based on the measured distances between the object and multiple references at known positions [1,2], i.e., locating an object by solving a system of equations in the form of:

$$\|p_i - p_0\| = r_i, \quad i = 1, 2, \ldots, N \tag{1}$$

where $p_0$ denotes the unknown position of the object, $p_i$ the known position of the $i$th reference point, and $r_i$ the measured distance between $p_0$ and $p_i$. (Even when more than three references are involved in positioning, we still call it “trilateration” instead of “multilateration”, because “multilateration” has been used to name the positioning process based on the difference in the distances from three or more references [3].)

Equation (1) represents the classical ranging-based trilateration problem, which depends on measuring the distances between the target object and the references. Existing algorithms include closed-form solutions, which have low time complexity, such as the algebraic algorithms proposed by Fang [4] and by Ziegert and Mize [5], which refer to the base plane, that by Manolakis [6], which adapts to an arbitrary frame of reference (a few typos in [6] were fixed by Rao [7]), that by Coope [8], which finds the intersection points of $n$ spheres in $\mathbb{R}^n$ based on Gaussian elimination, the line intersection algorithm proposed by Pradhan et al. [9], and the geometric solution developed by Thomas and Ros using Cayley–Menger determinants [2]; and numerical methods, which provide optimal estimation by minimizing the residue of Equation (1) in some form, such as least-squares estimation [8,10,11], Taylor-series methods [12], extended Kalman filtering (EKF) [13,14,15], linear algebraic techniques [8,16], and particle filtering [17,18]. Our work in [19] proposed a closed-form algorithm for the non-linear least-squares trilateration formulation, which is predominantly addressed with numerical methods; it provides near-optimal estimation with low time complexity.

Correspondingly, range sensors, which provide direct distance measurements based on the time of flight of the signals, have been predominantly used in trilateration systems, such as those based on ultrasonic [20,21,22,23,24,25,26,27,28,29,30,31,32,33,34], radio frequency (RF) [4,14,15,17,18,35,36,37,38,39,40,41,42,43,44,45], laser [5], and infrared [46] signals, just to name a few. Existing ranging-based trilateration systems mostly adopt a GPS (Global Positioning System)-like configuration with either “fixed transmitters + onboard receiver” or “onboard transmitter + fixed receivers”. They require installing and maintaining the relevant signaling devices in the target environment. The accuracy of the time-of-flight measurement depends on the accuracy of synchronization among the transmitters and receivers. Trilateration for moving objects needs to accommodate the time difference among sequentially received ranging signals and the effect of movement. The signal delay caused by obstacles, which is not easy to detect and filter, is a common source of localization inaccuracy. An onboard ranging-based self-trilateration system, which transmits ranging signals and positions itself based on the reflected signals, is highly challenging to realize, due to the difficulty in identifying references from only the reflected signals.

Meanwhile, cameras have become major onboard sensors for autonomous mobile robots in various navigation tasks, due to the rich information contained in images. Correspondingly, vision-based trilateration is considered a natural extension of the classical ranging-based trilateration. Stereovision-based trilateration systems have been proposed [47,48,49]. Compared with ranging-based trilateration, which depends on signaling between a separate transmitter and receiver, vision-based trilateration determines the ranges to landmarks from images taken onboard, and thus achieves self-trilateration given the correspondence of landmarks. Vision-based trilateration provides a complementary scheme which naturally avoids some of the aforementioned common issues with ranging-based trilateration, such as synchronization among signaling devices and obstacle-caused signal delay. Because a camera can capture multiple landmarks at the same time, a camera-based trilateration system can obtain simultaneous distance measurements from multiple landmarks. One intrinsic challenge for stereovision is to match the landmark projections in the two simultaneous images, in particular when the numbers of observed landmarks are different in the two images, when the two cameras view different subsets of landmarks (which only partly overlap), or when landmarks are projected close to each other on the same image. Mismatching of landmark projections results in invalid landmark reconstruction, and hence may cause serious errors in localization. This problem can be avoided if trilateration can be achieved using one camera.

Thus, by comparing it with the ranging-based and stereovision-based trilateration schemes, we believe that single-camera trilateration has certain advantages in the category of trilateration techniques for mobile robots and vehicles. However, there has been a lack of systematic discussion on single-camera trilateration so far, with very limited work using single-camera ranging to assist localization, e.g., as a constraint to correct the odometry for 2D robot self-localization [50]. With the intention to fully explore the capability of single-camera trilateration as an independent 3D self-localization scheme, in this paper we propose a general algorithm for single-camera trilateration that can determine the instantaneous 3D pose of a moving camera from a single image of three or more landmarks at known positions, and give a systematic discussion on the formulation and performance analysis of single-camera trilateration. To minimize the requirements on the hardware system, the proposed algorithm is based on the classical pinhole model of the regular forward-looking camera. When the camera is fixed on a mobile robot, the self-localization of the camera is equivalent to the self-localization of the robot, where the pose of the camera and that of the robot differ by a fixed camera-robot transformation which can be obtained by robot hand-eye calibration [51,52]; when the camera is movable on the robot, e.g., by pan and tilt, the robot pose can be calculated from the camera pose by incorporating the camera-robot transformation. The proposed scheme extends the classical ranging-based trilateration formulation to encompass the geometry of single-camera imaging and defines an integrated trilateration procedure that fits the nature of single-camera imaging. It also makes the estimation of the camera orientation a natural part of the complete trilateration process, by taking advantage of the geometric information contained in the image.

Just as ranging-based trilateration requires the global positions of the reference objects as input, camera-based trilateration requires the correspondence between observed landmarks and a map of known landmarks. Since this paper focuses on the proposed single-camera trilateration algorithm itself, we refer to the approaches in the literature [53,54,55,56,57] for solutions to this correspondence problem. At a minimum, the proposed single-camera trilateration algorithm is applicable to indoor environments with identity-encoded artificial landmarks, such as shape patterns and color patterns, and outdoor environments with salient natural landmarks, such as uniquely-shaped mountain peaks and buildings. It can also be incorporated into a simultaneous localization and mapping (SLAM) process which generates a map of those distinct landmarks while locating the mobile robot with respect to the map.

We initially came up with the concept of single-camera trilateration and carried out some preliminary simulation-based work in [58]. The current paper presents a fully developed single-camera trilateration algorithm with a comprehensive performance analysis, and substantially extends that preliminary work. The specific contributions on top of our preliminary work include:

- (1) The proposed single-camera trilateration algorithm is fully developed with a more systematic derivation and well-adopted notations.

- (2) The algorithm is developed to a high level of completeness. In particular, in the preliminary work, the camera orientation estimation algorithm only estimated the directional vector of the camera optical axis; the current work largely enhances the camera orientation estimation by providing a complete estimation of the 3D rotation matrix of the camera frame relative to the environment frame.

- (3) A comprehensive performance analysis of the proposed single-camera trilateration algorithm is carried out. In the preliminary work, the performance analysis considered mainly the effect of the landmark position errors in the environment and image; in the current work, a much more extensive performance analysis is carried out, considering not only the effect of the landmark errors but also that of the errors in the camera parameters. Moreover, along with the new camera orientation estimation algorithm, the corresponding performance indices are re-defined, and an enhanced performance analysis is carried out. In addition, the performance analysis in the current work uses a much larger amount of simulation data.

- (4) An experiment is carefully set up and carried out to further verify the effectiveness of the proposed single-camera trilateration algorithm, and to check the estimation error under the combined effect of various input errors. Our preliminary work was simulation-based. The experimental work tests the proposed algorithm in a physical environment, which is a significant addition to the simulation-based performance analysis.

The rest of this paper is laid out as follows.

Section 2 will present the derivation of the proposed algorithm.

Section 3 will present the error analysis of the proposed algorithm.

Section 4 will present the experimental verification.

Section 5 will summarize this work.

2. Single-Camera Trilateration Algorithm

2.1. Notation and Assumptions

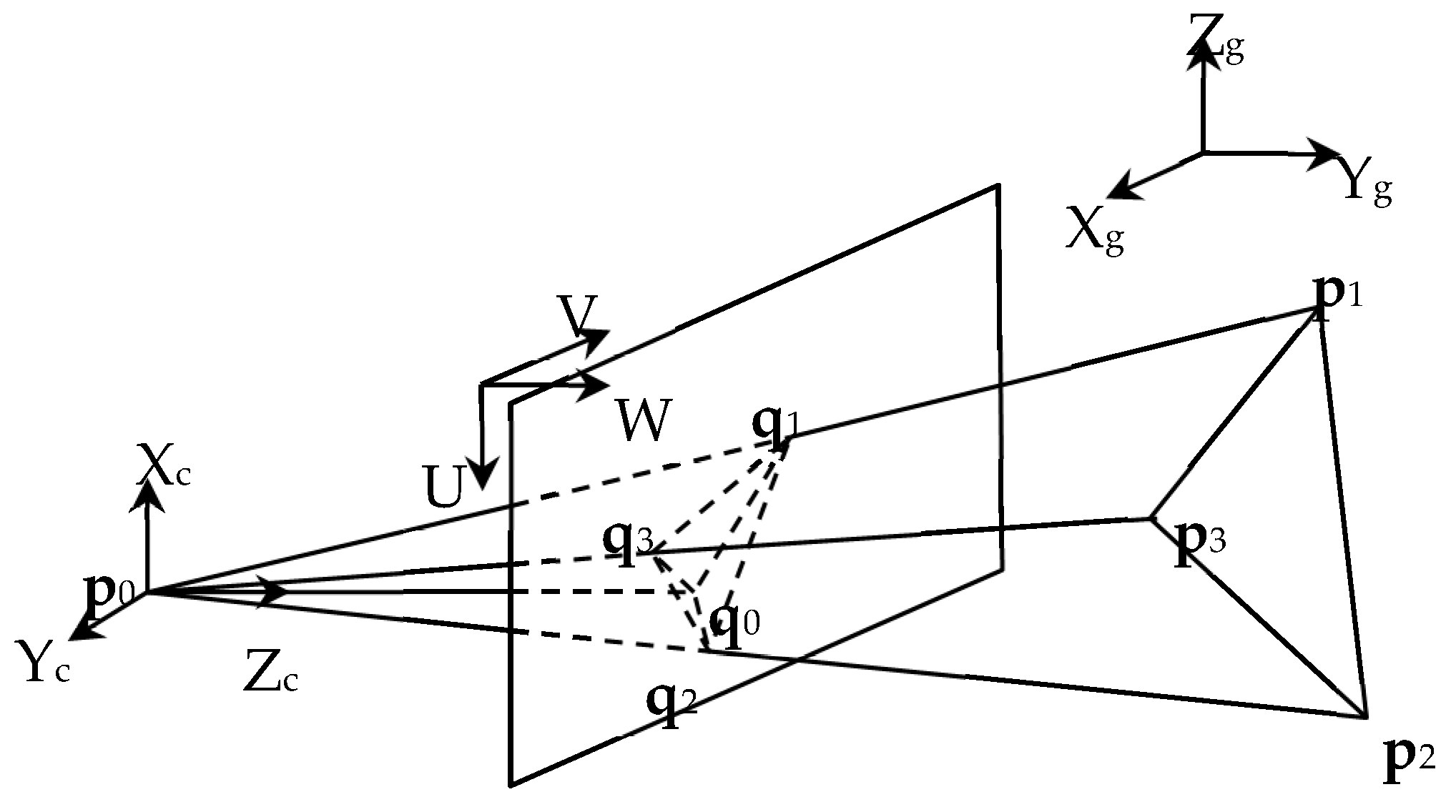

The proposed single-camera trilateration algorithm is derived on the basis of the classical pinhole camera model (Figure 1). Here, $X_gY_gZ_g$ denotes the global frame of reference attached to the environment in which the camera is moving, and a vector defined in $X_gY_gZ_g$ is labeled with a superscript “g”, e.g., ${}^g v$; $X_cY_cZ_c$ denotes the camera frame with the origin at the optical center $p_0$ and the $Z_c$ axis pointing along the optical axis from $p_0$ towards the image center $q_0$, and a vector defined in $X_cY_cZ_c$ is labeled with a superscript “c”, e.g., ${}^c v$; $UV$ denotes the image frame with the origin at the upper left corner of the image, and a vector defined in $UV$ is labeled with a superscript “m”, e.g., ${}^m v$; $UVW$ is the corresponding 3D extension of $UV$. The vectors in $X_gY_gZ_g$, $X_cY_cZ_c$ and $UVW$ are 3-dimensional, while those in $UV$ are 2-dimensional. A position defined in $X_gY_gZ_g$ or $X_cY_cZ_c$ is in the unit of millimeters, while that defined in $UV$ or $UVW$ is in the unit of pixels.

In particular, $p_0$ denotes the optical center of the camera, and its positions defined in $X_gY_gZ_g$ and $X_cY_cZ_c$ are denoted by ${}^g p_0$ and ${}^c p_0$, respectively. $q_0$ denotes the image center (also known as the principal point), i.e., the intersection between the optical axis and the image plane (which are perpendicular to each other), and its position defined in $UV$ is denoted by ${}^m q_0$. The distance between $p_0$ and $q_0$ is known as the focal length $f$. Assuming that there are $N$ landmarks captured by the camera (where $N \ge 3$ for 3D trilateration according to the principle of trilateration), we denote the reference point of the $i$th landmark as $p_i$, where $i \in \{1, 2, \ldots, N\}$, and its positions defined in $X_gY_gZ_g$ and $X_cY_cZ_c$ are denoted by ${}^g p_i$ and ${}^c p_i$, respectively. Moreover, $q_i$ denotes the projection of $p_i$ on the image plane, and its positions defined in $X_cY_cZ_c$ and $UV$ are denoted by ${}^c q_i$ and ${}^m q_i$, respectively.

As a necessary input to the proposed single-camera trilateration algorithm, the set of intrinsic parameters of the involved camera can be retrieved from an off-line camera calibration process, a classic topic which has been addressed extensively in the computer vision literature, such as [59,60,61]. A well-adopted camera calibration toolbox can also be found at [62]. Using the calibration toolbox, we can determine the set of intrinsic camera parameters, including the focal length ${}^m f = [f_u, f_v]^T$, the image center ${}^m q_0 = [u_0, v_0]^T$, and the distortion coefficients, as well as their estimation uncertainty. In particular, $f_u = f D_u s_u$ and $f_v = f D_v$, where $f$ is the physical focal length (mm), $D_u$ the pixel size in the $U$ direction (pixel/mm), $D_v$ the pixel size in the $V$ direction, and $s_u$ the scale factor. It is known that $f$, $D_u$, $D_v$ and $s_u$ are in general impossible to calibrate separately [60,61,62]. Therefore, instead of using $f$, $D_u$, $D_v$ and $s_u$ separately, we use $f_u$ and $f_v$ in our algorithm.

The pinhole model is established on the basis of the calibrated camera parameters and the corrected image. In the following derivation, we assume that the image distortions have been corrected according to the calibrated distortion coefficients. We also assume that at least three landmarks have been captured, segmented, identified and located at ${}^m q_i$ in $UV$. Relevant image processing techniques can be found in the existing literature [59,63,64]. Moreover, we assume that the positions of the involved landmarks in the global frame $X_gY_gZ_g$, ${}^g p_i$, have been retrieved from a map of known landmarks using existing methods [53,54,55,56,57].

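The camera position is defined by the position of its optical center $p_0$ in $X_gY_gZ_g$, ${}^g p_0$, and the camera orientation is defined by the rotation matrix of $X_cY_cZ_c$ with respect to $X_gY_gZ_g$, ${}^g R_c$. The proposed algorithm will estimate ${}^g p_0$ and ${}^g R_c$, which will be discussed in the following two subsections respectively. The following relationships obtained from the pinhole model will be used in the derivation:

$${}^g p_i = {}^g p_0 + {}^g R_c\,{}^c p_i \tag{2}$$

$${}^c p_i = \frac{{}^c z_i}{f}\,{}^c q_i \tag{3}$$

$${}^c q_i = \left[\frac{u_i - u_0}{D_u s_u},\ \frac{v_i - v_0}{D_v},\ f\right]^T \tag{4}$$

where $u_i$ and $v_i$ denote the $U$ and $V$ components of ${}^m q_i$ respectively, and ${}^c z_i$ denotes the $Z_c$ component of ${}^c p_i$.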

2.2. Camera Position Estimation

Assuming that $N \ge 3$ landmarks are chosen for localizing the camera, we have a system of $N$ independent trilateration equations in $X_gY_gZ_g$ in the form of:

$$\|{}^g p_i - {}^g p_0\| = r_i, \quad i = 1, 2, \ldots, N \tag{5}$$

which is in fact Equation (1) defined in $X_gY_gZ_g$. In principle, given ${}^g p_i$ and $r_i$, one can estimate ${}^g p_0$ by solving Equation (5). Representing the standard ranging-based trilateration problem, Equation (5) can be solved using one of the existing closed-form or numerical algorithms in the literature [2,4,5,6,7,8,9,10,11,12,13,14,15,16,19]. Here, ${}^g p_i$ are known as the pre-mapped landmark positions, but $r_i$ are yet to be determined.
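As a minimal sketch of solving Equation (5) once the ranges are available, the following Python code implements the standard closed-form three-sphere intersection for $N = 3$. It is an illustration only, not necessarily any of the algorithms cited above, and the function name `trilaterate3` and its helpers are assumptions introduced here.

```python
import math

def _sub(a, b): return [a[k] - b[k] for k in range(3)]
def _add(a, b): return [a[k] + b[k] for k in range(3)]
def _scale(a, s): return [s * x for x in a]
def _dot(a, b): return sum(x * y for x, y in zip(a, b))
def _norm(a): return math.sqrt(_dot(a, a))
def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def trilaterate3(p1, p2, p3, r1, r2, r3):
    """Return both points p0 satisfying |p0 - pi| = ri for three references."""
    # Local orthonormal frame: p1 at the origin, p2 on the local x axis,
    # p3 in the local xy plane.
    d = _norm(_sub(p2, p1))
    ex = _scale(_sub(p2, p1), 1.0 / d)
    i = _dot(ex, _sub(p3, p1))
    ey_raw = _sub(_sub(p3, p1), _scale(ex, i))
    ey = _scale(ey_raw, 1.0 / _norm(ey_raw))
    ez = _cross(ex, ey)
    j = _dot(ey, _sub(p3, p1))
    # Subtracting pairs of sphere equations gives x and y linearly.
    x = (r1 ** 2 - r2 ** 2 + d ** 2) / (2.0 * d)
    y = (r1 ** 2 - r3 ** 2 + i ** 2 + j ** 2 - 2.0 * i * x) / (2.0 * j)
    # Clamp at zero so that slightly inconsistent ranges still give a result.
    z = math.sqrt(max(r1 ** 2 - x ** 2 - y ** 2, 0.0))
    base = _add(p1, _add(_scale(ex, x), _scale(ey, y)))
    return _add(base, _scale(ez, z)), _sub(base, _scale(ez, z))
```

With only three references the two returned points are mirror images about the plane of the references, so an extra condition (for example, knowing on which side of the landmark plane the camera moves) is needed to select one of them.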

In order to determine $r_i$, we can write down a system of $N(N-1)/2$ equations in total:

$$r_i^2 + r_j^2 - 2 r_i r_j \cos\theta_{ij} = r_{ij}^2, \quad 1 \le i < j \le N \tag{6}$$

each of which is defined in a triangle $\Delta p_0 p_i p_j$ in $X_gY_gZ_g$ according to the law of cosines. Here, $\theta_{ij}$ denotes the vertex angle associated with $p_0$ in $\Delta p_0 p_i p_j$ (which is also known as the visual angle that $p_i p_j$ subtends at $p_0$), and $r_{ij}$ the distance between $p_i$ and $p_j$. In principle, given $r_{ij}$ and $\cos\theta_{ij}$, one can estimate $r_i$ by solving Equation (6). At least two general schemes can be adopted to obtain $r_i$:

- (1) Solving $N$ equations for $N$ variables: first, choose a subset of $N$ independent equations from Equation (6) which contain the $N$ variables $\{r_i \mid i = 1, \ldots, N\}$; next, by combining the $N$ equations and eliminating the $N - 1$ variables $\{r_i \mid i = 2, \ldots, N\}$, obtain a polynomial equation of degree $2^{N-1}$ in $r_1^2$, i.e.,

$$\sum_{k=0}^{2^{N-1}} a_k \left(r_1^2\right)^k = 0 \tag{7}$$

where the coefficients $a_k$ depend on $r_{ij}$ and $\cos\theta_{ij}$; then, solve Equation (7) for $r_1$, and substitute $r_1$ into the chosen $N$ equations to solve for $\{r_i \mid i = 2, \ldots, N\}$ successively. When $N = 3$, Equation (7) is a 4-degree polynomial equation which can be solved in closed form. When $N > 3$, Equation (7) is a polynomial equation of degree 8 or greater. Although it has been proven (by Niels Henrik Abel in 1824) that no general closed-form solution in radicals exists for polynomial equations of degree five or higher, a number of numerical algorithms are available, such as Laguerre’s method [65], the Jenkins–Traub method [66], the Durand–Kerner method [67], the Aberth method [68], the splitting circle method [69] and the Dandelin–Gräffe method [70]. The roots of a polynomial can also be found as the eigenvalues of its companion matrix [71,72,73].

- (2) Solving an associated optimization problem: a nonlinear least-squares problem can be formulated to estimate the optimal $r_i$ that minimizes the residue of Equation (6), i.e.,

$$\hat{r} = \arg\min_{r} \sum_{1 \le i < j \le N} \left(r_i^2 + r_j^2 - 2 r_i r_j \cos\theta_{ij} - r_{ij}^2\right)^2 \tag{8}$$

where $r$ denotes the vector of $\{r_i \mid i = 1, \ldots, N\}$. Search-based optimization algorithms are commonly used to solve this category of problems, including both local optimization algorithms, e.g., the steepest descent method and the Newton–Raphson method (also known as Newton’s method), and global optimization algorithms, e.g., simulated annealing and the genetic algorithm [74,75,76,77,78].
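To make the numerical route concrete, the sketch below applies Newton–Raphson iterations directly to the three law-of-cosines equations of Equation (6) for $N = 3$ (three equations, three unknown ranges). It is a simplified illustration under stated assumptions rather than the exact least-squares solver used later in the paper: the names `PAIRS`, `solve3` and `solve_ranges` are hypothetical, landmarks are indexed from 0, and an initial guess close to the true ranges is assumed.

```python
import math

PAIRS = [(0, 1), (0, 2), (1, 2)]  # landmark index pairs (i, j) with i < j

def residuals(r, cos_t, d):
    """Left side minus right side of Equation (6) for every pair."""
    return [r[i] ** 2 + r[j] ** 2 - 2.0 * r[i] * r[j] * cos_t[(i, j)] - d[(i, j)] ** 2
            for i, j in PAIRS]

def jacobian(r, cos_t):
    """Partial derivatives of the residuals with respect to r."""
    rows = []
    for i, j in PAIRS:
        row = [0.0, 0.0, 0.0]
        row[i] = 2.0 * r[i] - 2.0 * r[j] * cos_t[(i, j)]
        row[j] = 2.0 * r[j] - 2.0 * r[i] * cos_t[(i, j)]
        rows.append(row)
    return rows

def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [A[k][:] + [b[k]] for k in range(3)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda k: abs(M[k][col]))
        M[col], M[piv] = M[piv], M[col]
        for row in range(col + 1, 3):
            factor = M[row][col] / M[col][col]
            for k in range(col, 4):
                M[row][k] -= factor * M[col][k]
    x = [0.0, 0.0, 0.0]
    for row in (2, 1, 0):
        acc = M[row][3] - sum(M[row][k] * x[k] for k in range(row + 1, 3))
        x[row] = acc / M[row][row]
    return x

def solve_ranges(cos_t, d, r_init, tol=1e-9, max_iter=50):
    """Newton-Raphson iterations on Equation (6), started from r_init."""
    r = list(r_init)
    for _ in range(max_iter):
        F = residuals(r, cos_t, d)
        step = solve3(jacobian(r, cos_t), [-f for f in F])
        r = [r[k] + step[k] for k in range(3)]
        if max(abs(s) for s in step) < tol:
            break
    return r
```

Equation (6) generally admits more than one solution, so the result depends on the initial guess; in a tracking setting the ranges from the previous frame are a natural choice.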

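To solve Equations (6)–(8), we need to know $r_{ij}$ and $\cos\theta_{ij}$. Here, $r_{ij} = \|{}^g p_i - {}^g p_j\|$ follows directly from the known landmark positions, but $\cos\theta_{ij}$ are yet to be determined.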

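In order to determine $\cos\theta_{ij}$, we have the following equation in the triangle $\Delta p_0 q_i q_j$ in $X_cY_cZ_c$ according to the law of cosines:

$$\cos\theta_{ij} = \frac{\rho_i^2 + \rho_j^2 - \rho_{ij}^2}{2 \rho_i \rho_j} \tag{9}$$

where $\rho_i = \|{}^c q_i\|$ denotes the distance between $p_0$ and $q_i$, and $\rho_{ij} = \|{}^c q_i - {}^c q_j\|$ denotes the distance between $q_i$ and $q_j$. Substituting Equation (4) into Equation (9), we obtain:

$$\cos\theta_{ij} = \frac{\bar{u}_i \bar{u}_j + \bar{v}_i \bar{v}_j + 1}{\sqrt{\bar{u}_i^2 + \bar{v}_i^2 + 1}\,\sqrt{\bar{u}_j^2 + \bar{v}_j^2 + 1}} \tag{10}$$

where $\bar{u}_i = (u_i - u_0)/f_u$ and $\bar{v}_i = (v_i - v_0)/f_v$.

Since ${}^m q_0 = [u_0, v_0]^T$ and ${}^m f = [f_u, f_v]^T$ are determined through camera calibration and ${}^m q_i$ are obtained by segmenting the visual landmarks from the image, $\cos\theta_{ij}$ can be calculated from Equation (10) directly.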
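The calculation of $\cos\theta_{ij}$ from calibrated pixel coordinates can be sketched as follows. The code assumes the usual pinhole convention in which the viewing ray of a pixel $(u, v)$ is proportional to $[(u - u_0)/f_u, (v - v_0)/f_v, 1]^T$ in the camera frame; the function names `bearing` and `cos_visual_angle` are illustrative.

```python
import math

def bearing(q, q0, f):
    """Unnormalized viewing ray of pixel q = (u, v) in the camera frame."""
    return [(q[0] - q0[0]) / f[0], (q[1] - q0[1]) / f[1], 1.0]

def cos_visual_angle(qi, qj, q0, f):
    """Cosine of the angle that two image points subtend at the optical center."""
    a = bearing(qi, q0, f)
    b = bearing(qj, q0, f)
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)
```

Because only the ratios $(u - u_0)/f_u$ and $(v - v_0)/f_v$ enter the computation, the physical focal length and pixel sizes never need to be known separately.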

Based on the above derivation, it is clear that the camera can be positioned by reversing the above steps. That is, first calculate $\cos\theta_{ij}$ from Equation (10), next estimate $r_i$ from Equation (6), and then estimate ${}^g p_0$ from Equation (5).

2.3. Camera Orientation Estimation

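Knowing ${}^g p_0$ and the set of ${}^g p_i$, we obtain from Equation (2):

$${}^g\Delta P = {}^g R_c\,{}^c P \tag{11}$$

where ${}^g\Delta P$ is a 3 × $N$ matrix with the $i$th column as ${}^g\Delta p_i = {}^g p_i - {}^g p_0$, and ${}^c P$ is a 3 × $N$ matrix with the $i$th column as ${}^c p_i$. Here, ${}^c p_i$ can be determined by substituting Equation (4) into Equation (3):

$${}^c p_i = {}^c z_i \left[\frac{u_i - u_0}{f_u},\ \frac{v_i - v_0}{f_v},\ 1\right]^T \tag{12}$$

where ${}^c z_i$ can be calculated as

$${}^c z_i = \frac{r_i}{\sqrt{\left(\dfrac{u_i - u_0}{f_u}\right)^2 + \left(\dfrac{v_i - v_0}{f_v}\right)^2 + 1}} \tag{13}$$

Given ${}^g\Delta P$ and ${}^c P$, ${}^g R_c$ can in principle be calculated from Equation (11) as

$${}^g R_c = {}^g\Delta P\,{}^c P^T \left({}^c P\,{}^c P^T\right)^{-1} \tag{14}$$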

However, due to input errors, such as those in reference positioning, camera calibration and image segmentation, the resulting ${}^g R_c$ may not strictly be a rotation matrix. In particular, the orthonormality of the matrix may not be guaranteed. Thus, instead of using Equation (14), we go through the following process to obtain a valid rotation matrix.

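We define an optimal approximation of ${}^g R_c$ as the orthogonal matrix that minimizes the residue of Equation (11), i.e.,

$${}^g R_c = \arg\min_{R} \mathrm{Tr}\left[\left({}^g\Delta P - R\,{}^c P\right)^T \left({}^g\Delta P - R\,{}^c P\right)\right] = \arg\max_{R} \mathrm{Tr}\left({}^g\Delta P^T R\,{}^c P\right) \tag{15}$$

where $\mathrm{Tr}(M)$ denotes the trace of the matrix $M$. Since $\mathrm{Tr}(M_1 M_2) = \mathrm{Tr}(M_2 M_1)$ for two matrices $M_1$ and $M_2$, we have:

$$\mathrm{Tr}\left({}^g\Delta P^T\,{}^g R_c\,{}^c P\right) = \mathrm{Tr}\left({}^g R_c\,{}^c P\,{}^g\Delta P^T\right) = \mathrm{Tr}\left({}^g R_c\,A D B^T\right) = \mathrm{Tr}\left(B^T\,{}^g R_c\,A D\right) \tag{16}$$

where $A D B^T$ is the singular value decomposition of the 3 × 3 matrix ${}^c P\,{}^g\Delta P^T$, in which $A$ and $B$ are orthogonal matrices and $D$ is a diagonal matrix with non-negative real numbers on the diagonal. Since $B^T\,{}^g R_c\,A$ is an orthogonal matrix and $D$ is a non-negative diagonal matrix, we must have:

$$\mathrm{Tr}\left(B^T\,{}^g R_c\,A D\right) \le \mathrm{Tr}(D) \tag{17}$$

and correspondingly the maximum is attained when

$$B^T\,{}^g R_c\,A = I \tag{18}$$

Then, we obtain from Equation (18)

$${}^g R_c = B A^T \tag{19}$$

which is guaranteed to be an orthogonal matrix.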

Based on the above derivation, it is clear that the camera orientation can be determined by first calculating ${}^c p_i$ from Equation (12), next conducting the singular value decomposition of the matrix ${}^c P\,{}^g\Delta P^T$, and then getting ${}^g R_c$ from Equation (19).
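The orientation step can be sketched in Python without a linear algebra library. The sketch makes two assumptions that should be read as such. First, `back_project` recovers ${}^c p_i$ by scaling the pixel's viewing ray $[(u_i - u_0)/f_u, (v_i - v_0)/f_v, 1]^T$ to length $r_i$. Second, instead of an explicit singular value decomposition, `rotation_from_pairs` uses the Newton iteration for the polar decomposition, a swapped-in technique: for a nonsingular matrix $M = {}^g\Delta P\,{}^c P^T = B D A^T$, its orthogonal polar factor equals $B A^T$. All function names are hypothetical.

```python
import math

def back_project(q, r, q0, f):
    """Camera-frame position of a landmark from its pixel q and range r."""
    a = [(q[0] - q0[0]) / f[0], (q[1] - q0[1]) / f[1], 1.0]
    z = r / math.sqrt(sum(x * x for x in a))  # depth along the optical axis
    return [z * x for x in a]

def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [[(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
            [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
            [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det]]

def rotation_from_pairs(dps, cps, max_iter=100, tol=1e-13):
    """Orthogonal matrix R that best maps camera-frame vectors cps onto dps.

    dps[i] plays the role of gp_i - gp_0 and cps[i] the role of cp_i.
    """
    # M = sum_i dp_i cp_i^T, i.e. the product of the two 3xN matrices.
    M = [[sum(dp[row] * cp[col] for dp, cp in zip(dps, cps)) for col in range(3)]
         for row in range(3)]
    scale = math.sqrt(sum(x * x for line in M for x in line))
    X = [[x / scale for x in line] for line in M]
    for _ in range(max_iter):
        Y = inv3(X)
        # Newton step for the polar factor: X <- (X + X^{-T}) / 2.
        X_new = [[0.5 * (X[row][col] + Y[col][row]) for col in range(3)]
                 for row in range(3)]
        change = max(abs(X_new[row][col] - X[row][col])
                     for row in range(3) for col in range(3))
        X = X_new
        if change < tol:
            break
    return X
```

As with $B A^T$ itself, the result is orthogonal but is a proper rotation only if the determinant of $M$ is positive, and the iteration requires $M$ to be nonsingular, which excludes configurations where the vectors ${}^c p_i$ are linearly dependent.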

2.4. Algorithm Summary

Following the discussions in the above two subsections, we summarize the proposed single-camera trilateration algorithm as Algorithm 1. It estimates the instantaneous 3D pose of a camera, which can be moving, from a single image of landmarks at known positions. Strictly speaking, Algorithm 1 provides a general framework. In particular, Equation (6) in Step (2) and Equation (5) in Step (3) can be solved by a variety of algorithms specific for those steps, as we pointed out.

Algorithm 1: Single-Camera Trilateration in $\mathbb{R}^3$

Input: The camera parameters ${}^m q_0$ (image center) and ${}^m f$ (focal length), the positions of a set of $N \ge 3$ landmarks in $X_gY_gZ_g$, $\{{}^g p_i \mid i \in \mathbb{Z}^+, 1 \le i \le N\}$, and their corresponding projections in $UV$, $\{{}^m q_i \mid i \in \mathbb{Z}^+, 1 \le i \le N\}$.

Output: The position ${}^g p_0$ and orientation ${}^g R_c$ of the camera in $X_gY_gZ_g$.

- (1) Calculate $\cos\theta_{ij}$ from Equation (10);
- (2) Solve Equation (6) for $\{r_i \mid i \in \mathbb{Z}^+, 1 \le i \le N\}$;
- (3) Solve Equation (5) for ${}^g p_0$;
- (4) Calculate $\{{}^c p_i \mid i \in \mathbb{Z}^+, 1 \le i \le N\}$ from Equation (12);
- (5) Conduct the singular value decomposition of the matrix ${}^c P\,{}^g\Delta P^T$;
- (6) Calculate ${}^g R_c$ from Equation (19);
- (7) Return ${}^g p_0$ and ${}^g R_c$.

3. Performance Analysis

The input to the proposed single-camera trilateration algorithm consists of the global positions of the involved landmarks ${}^g p_i$, their positions in the image ${}^m q_i$, the camera focal length ${}^m f$ and the image center ${}^m q_0$. In practice, the uncertainty in camera calibration and the inaccuracy of image segmentation cause errors in ${}^m f$, ${}^m q_0$ and ${}^m q_i$, and imperfect mapping brings errors to ${}^g p_i$. In this section, we analyze the effect of these input errors on the accuracy of the estimated camera position ${}^g p_0$ and orientation ${}^g R_c$.

3.1. Performance Indices

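We define an input vector $x$ which contains all the above input parameters, i.e., $x = [{}^g p_i^T\ {}^m q_i^T\ {}^m f^T\ {}^m q_0^T]^T$. Then ${}^g p_0$ is a function of $x$, i.e., ${}^g p_0 = {}^g p_0(x)$. Denoting the actual value and random error of $x$ as $\bar{x}$ and $\delta x$ respectively, we have $x = \bar{x} + \delta x$. Correspondingly, the actual value and output estimate of ${}^g p_0$ can be written as ${}^g p_0(\bar{x})$ and ${}^g p_0(\bar{x} + \delta x)$, respectively, and the estimation error of ${}^g p_0$ is $\delta\,{}^g p_0 = {}^g p_0(\bar{x} + \delta x) - {}^g p_0(\bar{x})$.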

We denote the errors of the input quantities ${}^g p_i$, ${}^m q_i$, ${}^m f$ and ${}^m q_0$ as $\delta\,{}^g p_i$, $\delta\,{}^m q_i$, $\delta\,{}^m f$ and $\delta\,{}^m q_0$ respectively. Adopting the classical scheme of error analysis used in [2,6], we evaluate the effects of $\delta\,{}^g p_i$, $\delta\,{}^m q_i$, $\delta\,{}^m f$ and $\delta\,{}^m q_0$ on $\delta\,{}^g p_0$, respectively. For any of these input error vectors (generally denoted as $\delta v$), we assume that the vector components are zero-mean random variables, uncorrelated with one another, with a common standard deviation $\sigma_v$. We adopt two well-accepted performance indices [2,6], the normalized total bias $B_p$, which represents the sensitivity of the systematic position estimation error to the input error, and the normalized total standard deviation error $S_p$, which represents the sensitivity of the position estimation uncertainty to the input error:

$$B_p = \frac{\left|E\left(\delta\,{}^g p_0\right)\right|}{\sigma_v} \tag{20}$$

$$S_p = \frac{\sqrt{\mathrm{Tr}\left(\mathrm{var}\left(\delta\,{}^g p_0\right)\right)}}{\sigma_v} \tag{21}$$

where $|a|$ denotes the norm of a vector $a$, $\mathrm{Tr}(M)$ denotes the trace of a matrix $M$, $E(\cdot)$ denotes the mean of a random variable, and $\mathrm{var}(\cdot)$ denotes the variance of a random variable.

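Similar to ${}^g p_0$, ${}^g R_c$ is also a function of $x$, i.e., ${}^g R_c = {}^g R_c(x)$. To make the presentation consistent, we discuss the orientation estimation error in the vector form, and correspondingly define an error metric of ${}^g R_c$ as:

$$\delta\,{}^g\theta_c = \left[\delta\theta_1,\ \delta\theta_2,\ \delta\theta_3\right]^T \tag{22}$$

where $\delta\theta_i$ is the angle between the actual and estimated $n_i$, in the unit of degrees. Here $n_i$ denotes the $i$th column of ${}^g R_c$, which corresponds to the x, y or z directional vector of the camera frame with respect to the global frame. As a result, $\delta\,{}^g\theta_c$ shows the angular difference between the actual and erroneous camera orientations. Similar to Equations (20) and (21), we define the corresponding performance indices, namely the normalized total bias $B_\theta$ and the normalized total standard deviation error $S_\theta$, as:

$$B_\theta = \frac{\left|E\left(\delta\,{}^g\theta_c\right)\right|}{\sigma_v} \tag{23}$$

$$S_\theta = \frac{\sqrt{\mathrm{Tr}\left(\mathrm{var}\left(\delta\,{}^g\theta_c\right)\right)}}{\sigma_v} \tag{24}$$

where $B_\theta$ represents the sensitivity of the systematic orientation estimation error to the input error, and $S_\theta$ represents the sensitivity of the orientation estimation uncertainty to the input error.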

In the following performance analysis, we will report the variation of $B_p$, $S_p$, $B_\theta$ and $S_\theta$ across the simulated spaces of ${}^g p_0$ under the representative $\sigma_v$ values of $\delta\,{}^g p_i$, $\delta\,{}^m q_i$, $\delta\,{}^m f$ and $\delta\,{}^m q_0$.

3.2. Simulation Settings

A performance analysis has been conducted on the proposed single-camera trilateration algorithm with representative examples in which a moving camera is localized in $\mathbb{R}^3$ based on three landmarks, simulated in Matlab.

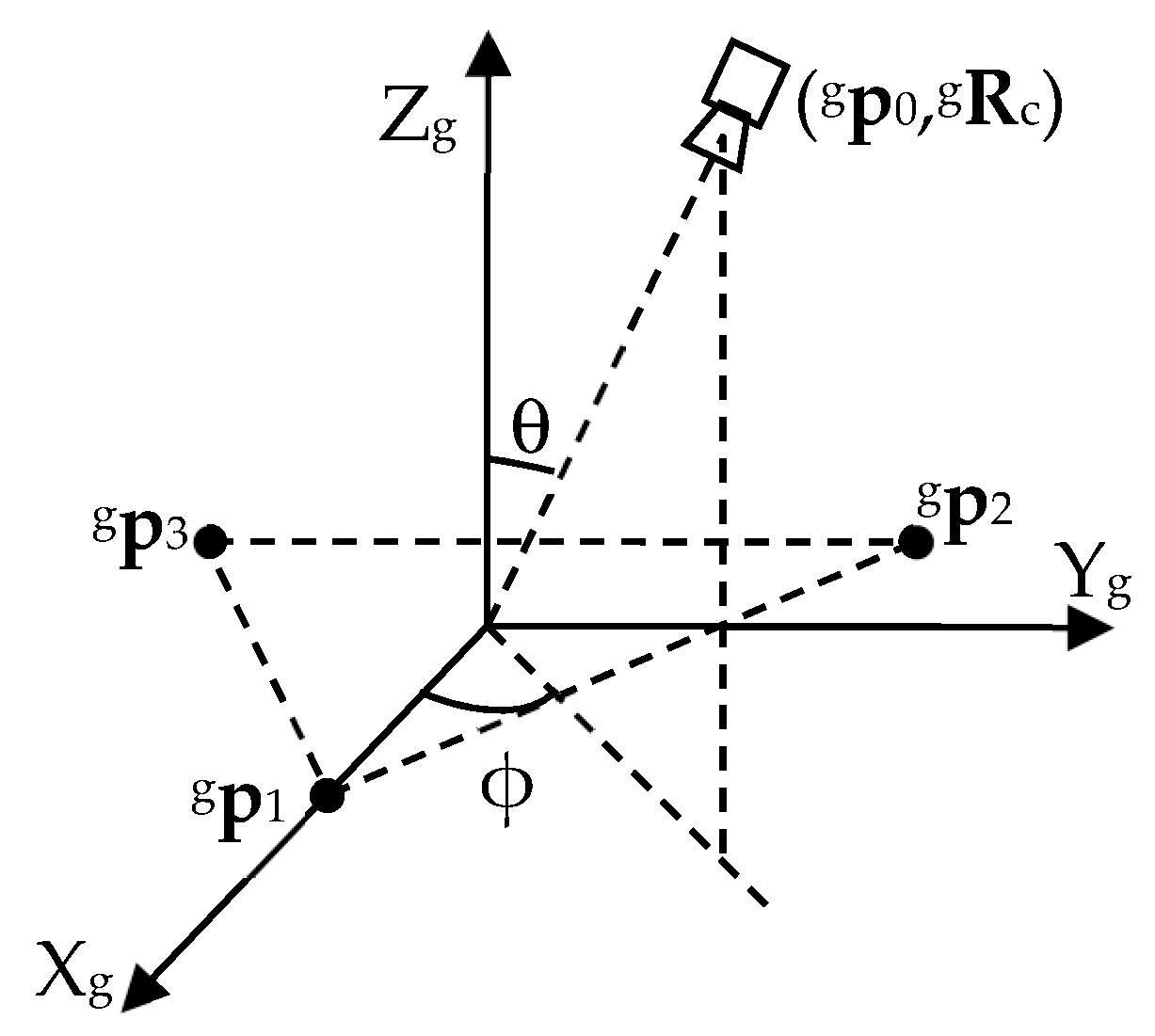

In the simulated global frame of reference, the three landmarks (reference points) form an equilateral triangle on the XY plane, inscribed in a circle centered at the origin of the global frame with a radius of $r$ = 500 length units (Figure 2).

To study the effect of the geometric arrangement of the camera relative to the landmarks on the performance indices, we let the camera move on a data acquisition sphere $S$ centered at the origin of the global frame with a radius of $R$, and keep the optical axis of the camera pointing towards the origin. We define a tilt angle $\theta$ as the angle between $Z_g$ and $-n_3$, and a pan angle $\phi$ as the angle between $X_g$ and the orthogonal projection of $-n_3$ on the XY plane (Figure 2). To study the effect of the observation distance on the performance indices, we test the algorithm with different values of $R$. To keep the circle circumscribing the landmarks in the camera field of view all the time, we use $R \ge 3000$. This means that the required maximal angle of view is about 19° (corresponding to $R = 3000$ and $\theta$ = 0°), which is a mild requirement met by a large number of lenses. For a specific $R$, by changing the tilt angle $\theta$ and pan angle $\phi$, we move the camera on the sphere, and check the variation of the performance indices on the sphere.
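The geometry of the data acquisition sphere can be checked with a few lines of Python. The sketch assumes that the camera position is the point at distance $R$ from the origin in the direction of $-n_3$, so that the tilt and pan angles act as ordinary spherical coordinates; the function names are illustrative.

```python
import math

def camera_position(R, tilt_deg, pan_deg):
    """Camera position on the sphere of radius R for given tilt and pan angles."""
    t = math.radians(tilt_deg)
    p = math.radians(pan_deg)
    return [R * math.sin(t) * math.cos(p),
            R * math.sin(t) * math.sin(p),
            R * math.cos(t)]

def required_view_angle_deg(R, r=500.0):
    """Full angle subtended by the landmark circle of radius r seen from tilt 0."""
    return 2.0 * math.degrees(math.atan(r / R))
```

For $R = 3000$ and $r = 500$ the second function evaluates $2\arctan(1/6) \approx 18.9°$, consistent with the stated requirement of about 19°.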

The intrinsic camera parameters used in the simulation are obtained by calibrating a real camera (a MatrixVision BlueFox-120 640 × 480 CCD (Charge-Coupled Device) grayscale camera with a Kowa 12 mm lens) using the camera calibration toolbox in [62] and a printed black/white checkerboard pattern. In particular, ${}^m f = [1627.5609, 1629.9348]^T$ and ${}^m q_0 = [333.9088, 246.3799]^T$.

The standard deviations of the input errors are chosen mainly according to the calibration results of the above camera and lens. Specifically, using the camera calibration toolbox in [62] to calibrate the camera and lens, we obtained the estimation error for ${}^m f$ as [1.1486, 1.1069]. Since the estimation errors are approximately three times the standard deviation of $\delta\,{}^m f$ [62], a representative standard deviation of the $\delta\,{}^m f$ components can be taken as $\sigma_f = 0.4$. Meanwhile, the estimation error for ${}^m q_0$ was obtained as [1.9374, 1.5782], which means that a representative standard deviation of the $\delta\,{}^m q_0$ components can be taken as $\sigma_c = 0.6$. In addition, we take $\sigma_p = 25$ as a representative standard deviation of the $\delta\,{}^g p_i$ components, which corresponds to 5% of the base radius $r$. Besides, $\sigma_q = 0.5$ is taken as a representative standard deviation of the $\delta\,{}^m q_i$ components based on a conservative estimation of the average image segmentation operation.

For performance analysis, we check the effect of $\delta\,{}^g p_i$, $\delta\,{}^m q_i$, $\delta\,{}^m f$ and $\delta\,{}^m q_0$ separately on $\delta\,{}^g p_0$ and $\delta\,{}^g\theta_c$. For each of these input error vectors, we assume that the vector components are zero-mean random variables following the corresponding Gaussian distribution, uncorrelated with one another, with the same standard deviation; we discretize the data acquisition sphere $S$ with a set of equally spaced $\theta$ and $\phi$; for each point $(\theta, \phi)$ on $S$, 10,000 samples of the erroneous input are generated according to the random distribution; the corresponding erroneous output estimates are obtained using the proposed single-camera trilateration algorithm; by comparing the erroneous estimates with the correct camera pose corresponding to $(\theta, \phi)$ on $S$, the statistics of the output error are generated, and then the performance indices are calculated.
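The sampling procedure described above can be sketched for a generic estimator as follows. The sketch assumes that the normalized total bias is the norm of the mean output error divided by the input standard deviation, and that the normalized total standard deviation error is the square root of the summed component variances divided by the same quantity; the function `performance_indices` and its `estimate` argument (any function mapping an input vector to an output vector) are hypothetical names.

```python
import math
import random

def performance_indices(estimate, x_true, sigma, n_samples=10000, seed=0):
    """Monte Carlo estimate of the normalized total bias and spread."""
    rng = random.Random(seed)  # fixed seed so that runs are repeatable
    y_true = estimate(x_true)
    m = len(y_true)
    errors = []
    for _ in range(n_samples):
        # Perturb every input component with independent zero-mean Gaussian noise.
        x = [xi + rng.gauss(0.0, sigma) for xi in x_true]
        y = estimate(x)
        errors.append([y[k] - y_true[k] for k in range(m)])
    mean = [sum(e[k] for e in errors) / n_samples for k in range(m)]
    var = [sum((e[k] - mean[k]) ** 2 for e in errors) / (n_samples - 1)
           for k in range(m)]
    bias = math.sqrt(sum(mu * mu for mu in mean)) / sigma
    spread = math.sqrt(sum(var)) / sigma
    return bias, spread
```

For an estimator that simply returns its three-dimensional input, the bias index is close to zero and the spread index is close to $\sqrt{3}$, which gives a quick sanity check before plugging in the full trilateration pipeline.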

In order to optimally estimate ${}^g p_0$ and $r_i$, we carry out Steps (2) and (3) of Algorithm 1 by solving the corresponding least-squares problems of Equations (6) and (5) respectively. The Newton–Raphson method [74], which has a sufficient rate of convergence, is used to search for the solutions. To avoid being trapped in local minima, for continuous camera position estimation, the previous camera position is used as the initial guess to estimate the current camera position, which guarantees the convergence of the solution to the actual camera position.

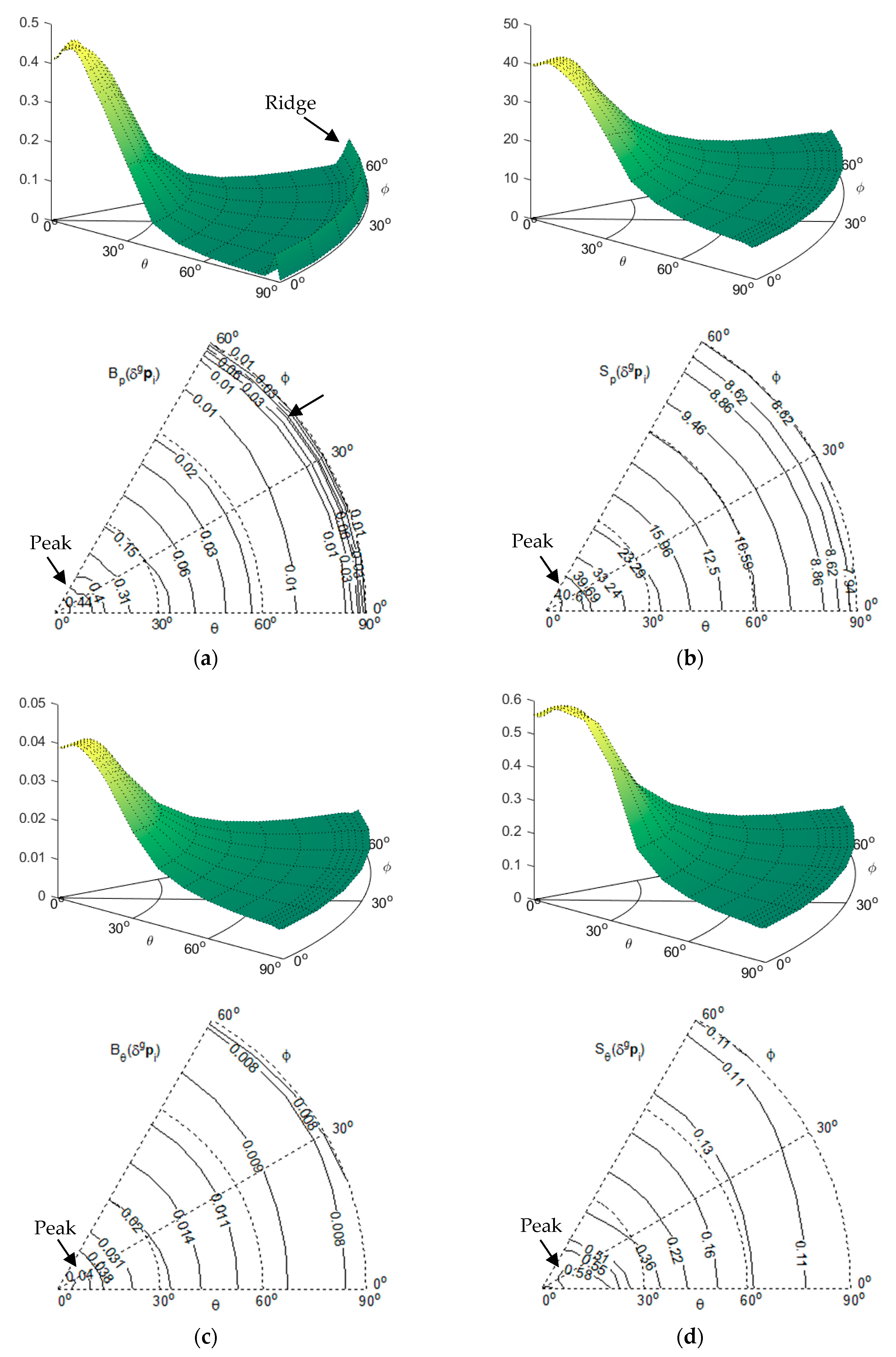

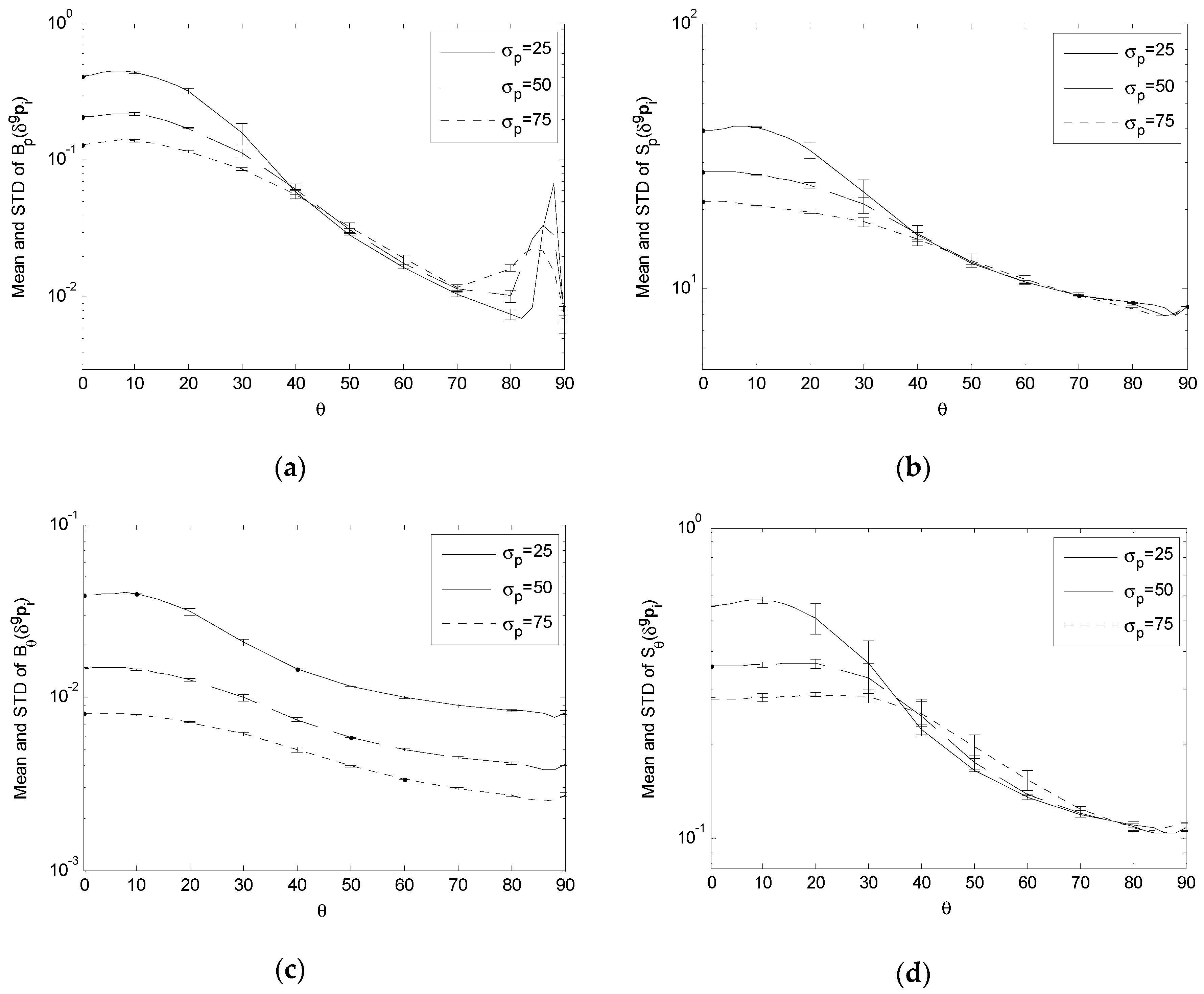

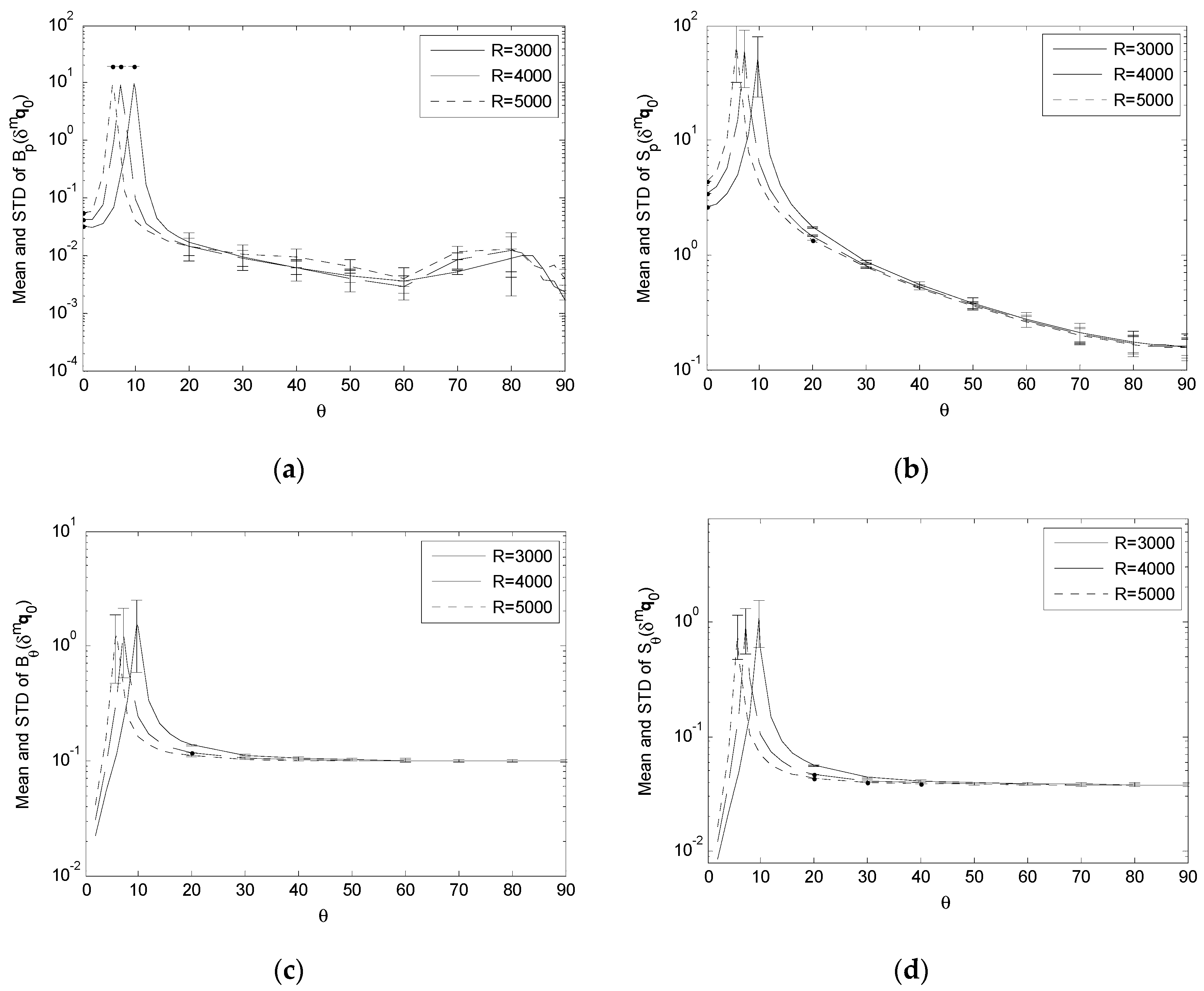

3.3. Trends of Performance Indices under Representative Input Error Standard Deviations

To evaluate the impact of

δgpi,

δmqi,

δmf and

δmq0 on

δgp0 and

δgθc, B

p, S

p, B

θ and S

θ are at first estimated with representative input error standard deviations across the data acquisition sphere S with R = 3000. Specifically, B

p(

δgpi), S

p(

δgpi), B

θ(

δgpi) and S

θ(

δgpi) are estimated with

σp = 25 (

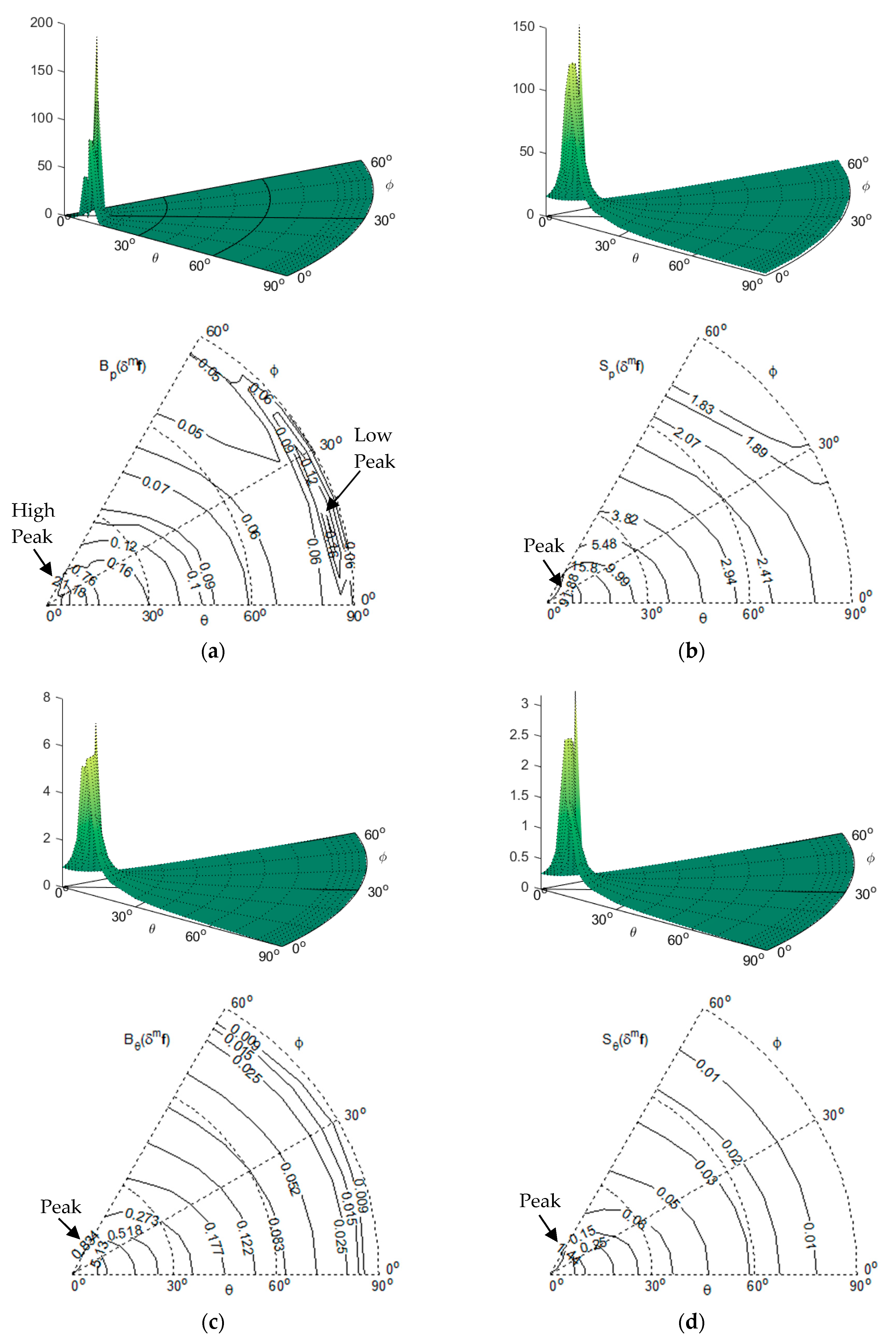

Figure 3); B

p(

δmf), S

p(

δmf), B

θ(

δmf) and S

θ(

δmf) are estimated with

σf = 0.4 (

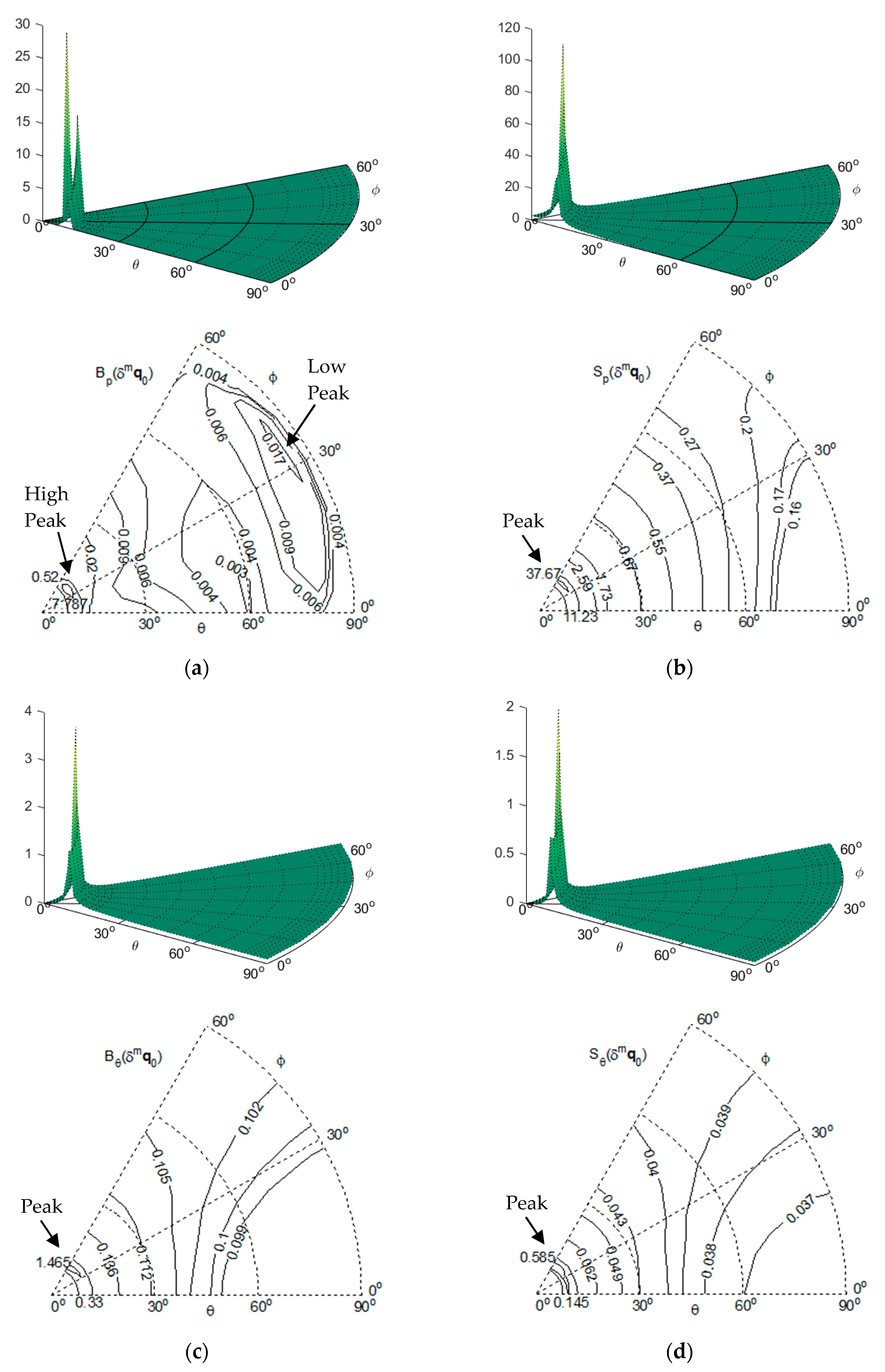

Figure 4); B

p(

δmq0), S

p(

δmq0), B

θ(

δmq0) and S

θ(

δmq0) are estimated with

σc = 0.6 (

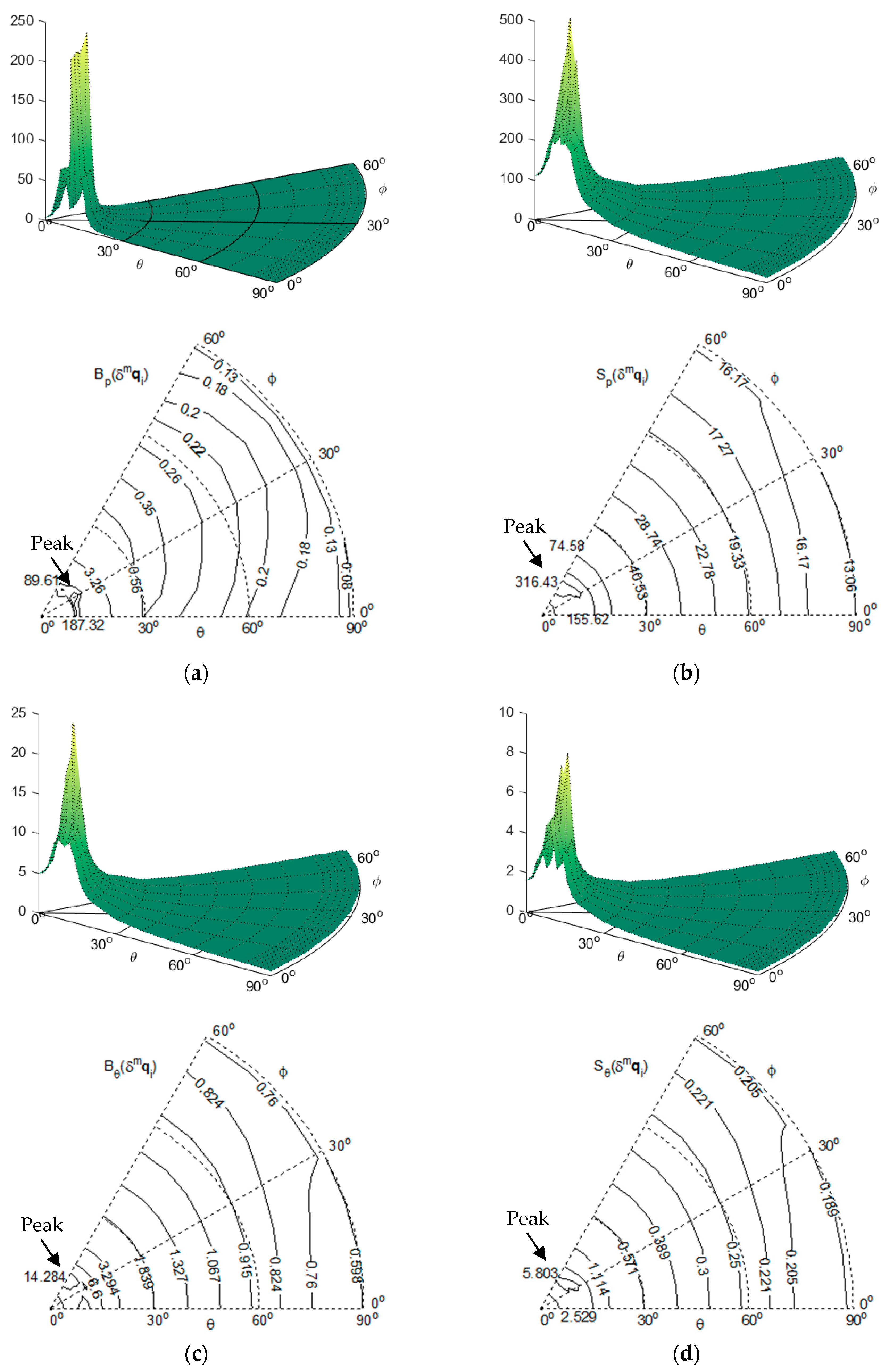

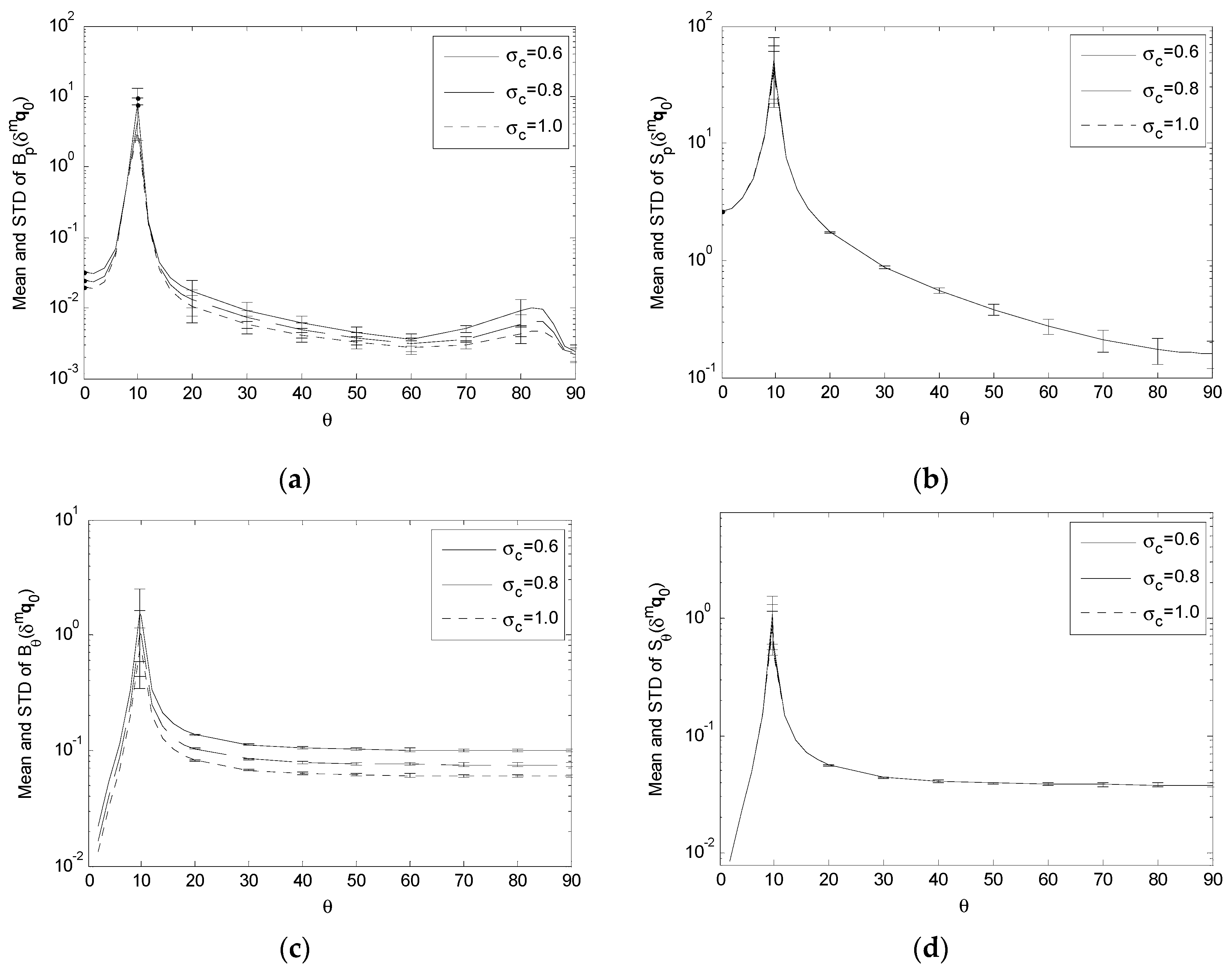

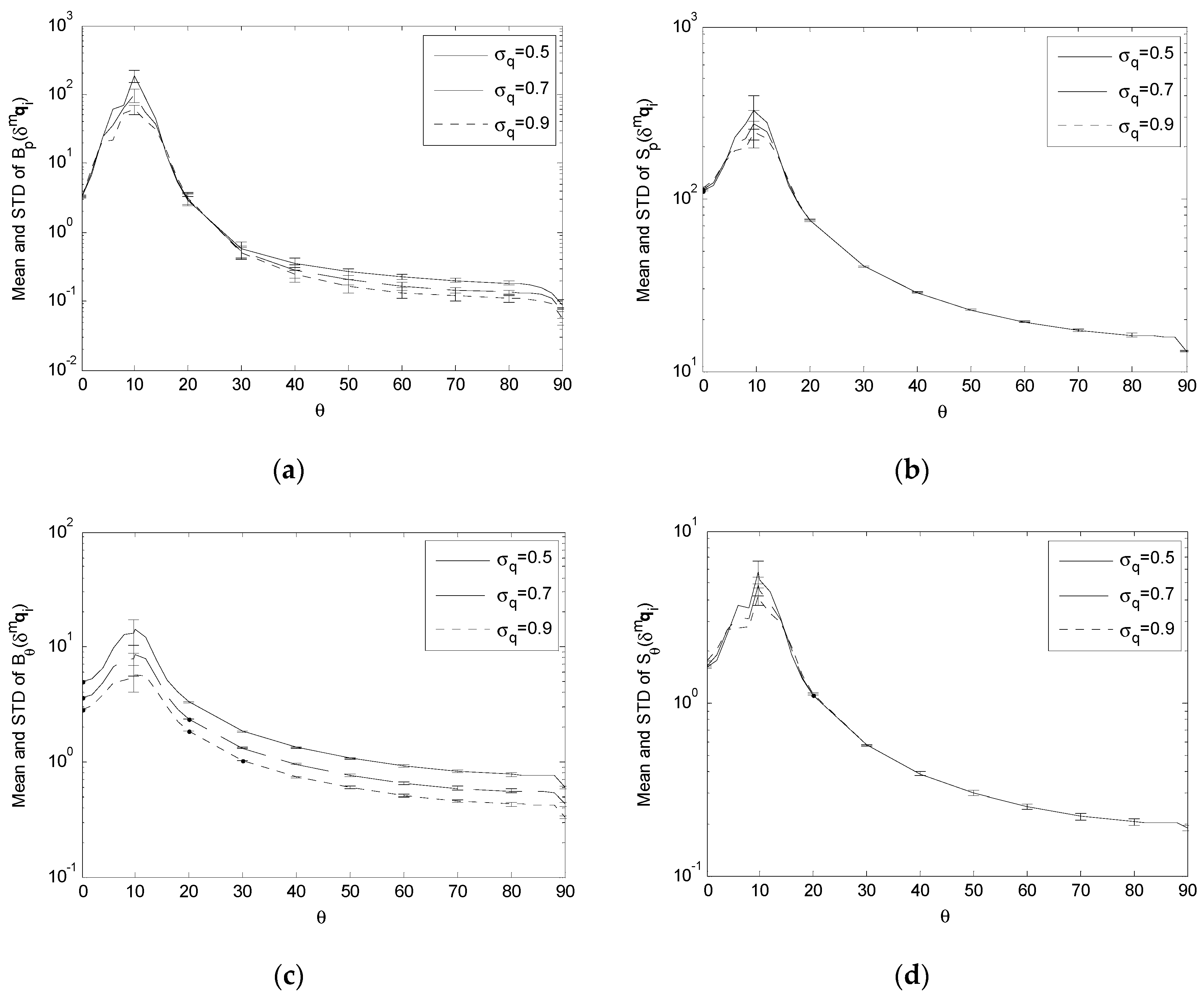

Figure 5); and B

p(

δmqi), S

p(

δmqi), B

θ(

δmqi) and S

θ(

δmqi) are estimated with

σq = 0.5 (

Figure 6). Since S is vertically symmetrical to the base plane (XY plane) and horizontally symmetrical to the vertical planes containing the medians of the equilateral base triangle, we only need to display the variation of B

p, S

p, B

θ and S

θ across a patch constrained by

θ∈[0°, 90°] and ϕ∈[0°, 60°], while the values of these performance indices at any point on S outside the patch can be obtained according to the symmetry. Each sub-figure in

Figure 3,

Figure 4,

Figure 5 and

Figure 6 presents the variation of a performance index, under a specific input error standard deviation, with respect to the observation direction (defined by the tilt angle

θ and pan angle ϕ) at a specific distance. Each sub-figure includes one surface plot and one contour plot, both generated using Matlab. The surface plot presents a 3D surface which visualizes the values of the corresponding performance index as heights and colors above a horizontal grid defined by

θ and ϕ. The general trend of the performance index’s variation is reflected by the variation in the height and color of the surface. Meanwhile, the contour plot presents a set of isolines (contour lines) at different value levels of the corresponding performance index which varies across

θ and ϕ. The contour lines are labeled with the associated values of the performance index in order to provide more numerical details of the variation. To match with the aforementioned data acquisition patch on S, the

θ − ϕ grid in each plot is presented in the form of a 2D sector, where the tilt angle of the observation direction

θ varies in the radial direction of the sector from 0° to 90° and the pan angle ϕ varies along the arc from 0° to 60°.

For the effect of the landmark position error δgpi:

- (1) As shown in Figure 3a, $B_p(\delta\,{}^g p_i)$ in general decreases as $\theta$ increases from 0° towards 90°, although its maximum may not take place exactly at $\theta$ = 0°. However, it forms a ridge when $\theta$ approaches 90°, which is lower than the crest near $\theta$ = 0°. When $\sigma_p = 25$, the ratio between the two peaks is about 6.73.

- (2) As shown in Figure 3b–d respectively, $S_p(\delta\,{}^g p_i)$, $B_\theta(\delta\,{}^g p_i)$ and $S_\theta(\delta\,{}^g p_i)$ in general decrease as $\theta$ increases from 0° to 90°, although their maxima may not be exactly at $\theta$ = 0°.

For the effect of the imaging-related errors δmqi, δmf and δmq0:

- (1)

As shown in Figure 4a and Figure 5a, as θ increases from 0°, Bp(δmf) and Bp(δmq0) increase at first to form a high peak, and then decrease generally as θ increases further towards 90°. However, they reach a second, local peak when θ approaches 90°, which is much lower than the first peak: when σf = 0.4, the ratio between the peaks of Bp(δmf) is about 1095.84; when σc = 0.6, the ratio between the peaks of Bp(δmq0) is about 1525.50.

- (2)

As shown in Figure 4b–d, Figure 5b–d and Figure 6, as θ increases from 0°, Bp(δmqi), Sp(δmf), Sp(δmq0), Sp(δmqi), Bθ(δmf), Bθ(δmq0), Bθ(δmqi), Sθ(δmf), Sθ(δmq0) and Sθ(δmqi) all increase at first to form a high peak, and then decrease as θ increases further towards 90°.

The general decreasing trend of these performance indices towards θ = 90° implies that the camera pose estimation is less sensitive to the input errors as the observations of landmarks are made more parallel and closer to the base plane. Moreover, the non-circular patterns from the contour plots in Figure 3, Figure 4, Figure 5 and Figure 6 reflect the influence of the geometric arrangement of the landmarks.

Figure 3.

Bp(δgpi), Sp(δgpi), Bθ(δgpi) and Sθ(δgpi) with σp = 25 and R = 3000. (a) Surface plot and contour plot of Bp(δgpi); (b) Surface plot and contour plot of Sp(δgpi); (c) Surface plot and contour plot of Bθ(δgpi); (d) Surface plot and contour plot of Sθ(δgpi).


Figure 4.

Bp(δmf), Sp(δmf), Bθ(δmf) and Sθ(δmf) with σf = 0.4 and R = 3000. (a) Surface plot and contour plot of Bp(δmf); (b) Surface plot and contour plot of Sp(δmf); (c) Surface plot and contour plot of Bθ(δmf); (d) Surface plot and contour plot of Sθ(δmf).


Figure 5.

Bp(δmq0), Sp(δmq0), Bθ(δmq0) and Sθ(δmq0) with σc = 0.6 and R = 3000. (a) Surface plot and contour plot of Bp(δmq0); (b) Surface plot and contour plot of Sp(δmq0); (c) Surface plot and contour plot of Bθ(δmq0); (d) Surface plot and contour plot of Sθ(δmq0).


Figure 6.

Bp(δmqi), Sp(δmqi), Bθ(δmqi) and Sθ(δmqi) with σq = 0.5 and R = 3000. (a) Surface plot and contour plot of Bp(δmqi); (b) Surface plot and contour plot of Sp(δmqi); (c) Surface plot and contour plot of Bθ(δmqi); (d) Surface plot and contour plot of Sθ(δmqi).


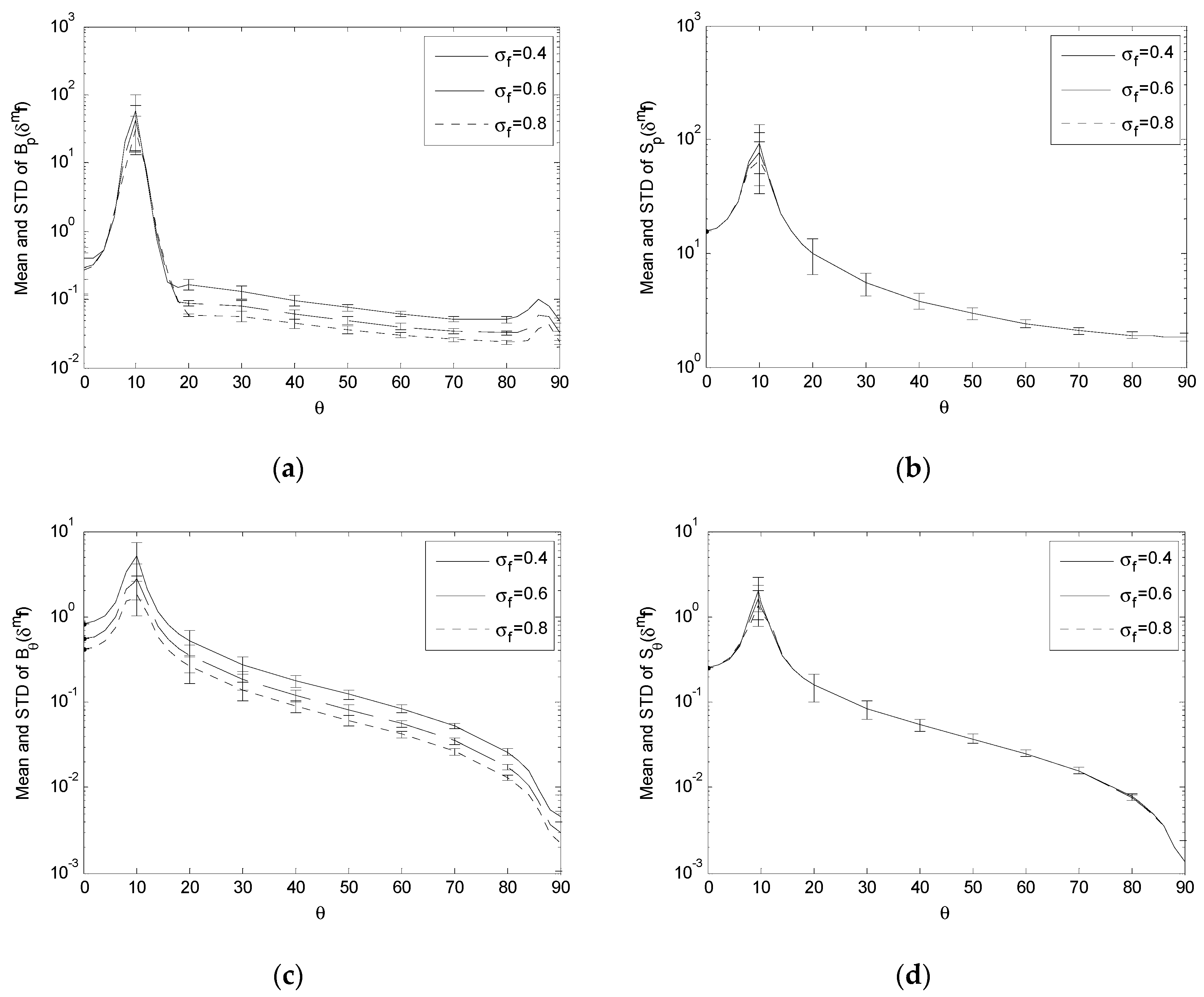

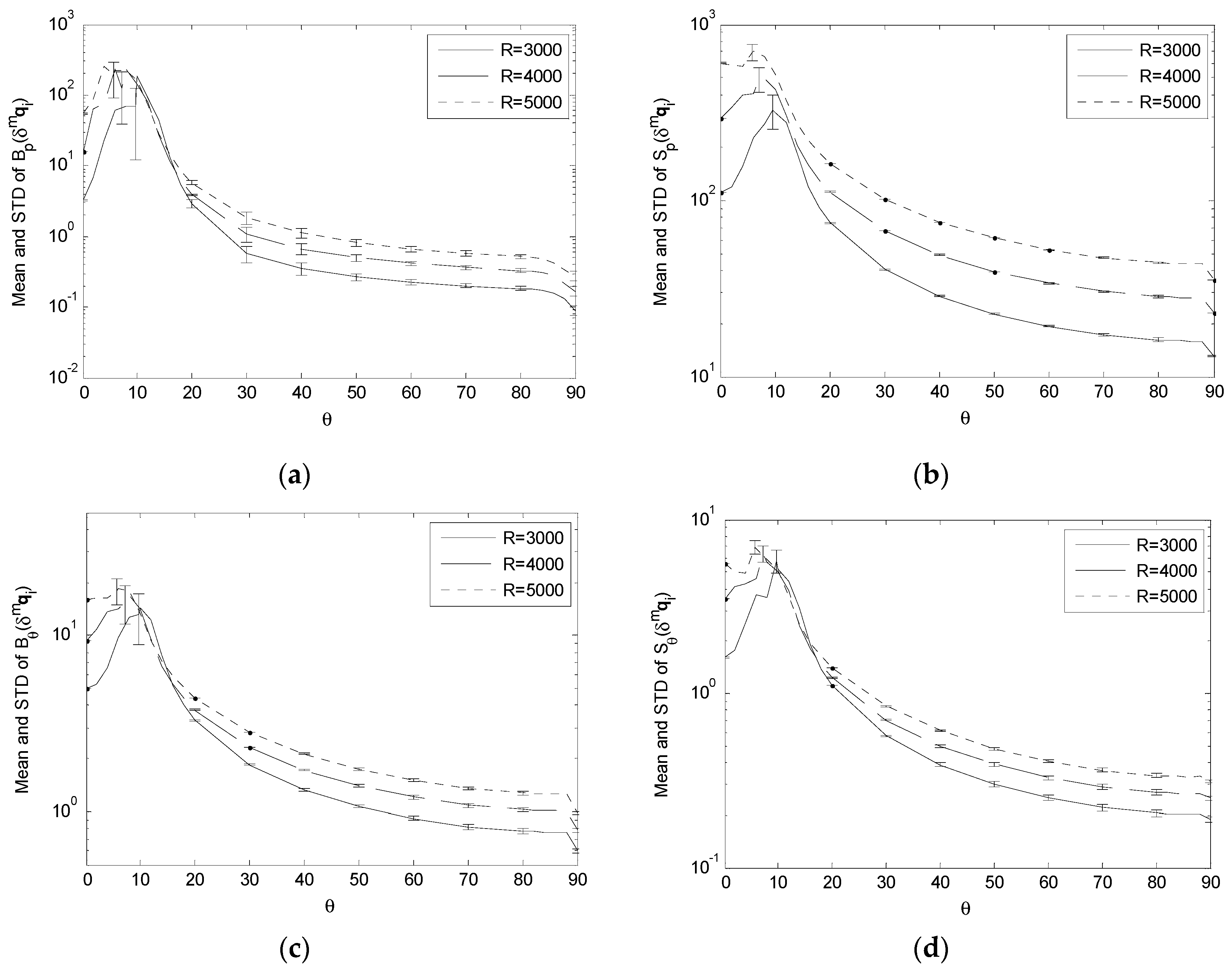

3.4. Variation of Performance Indices under Variation of Input Error Standard Deviations

To examine the variation of Bp, Sp, Bθ and Sθ under the variation of the input error standard deviations, we compare the values of these performance indices obtained with a set of different values of the input error standard deviations on the data acquisition sphere S with R = 3000. Specifically, Bp(δgpi), Sp(δgpi), Bθ(δgpi) and Sθ(δgpi) are estimated with σp = {25, 50, 75} (Figure 7); Bp(δmf), Sp(δmf), Bθ(δmf) and Sθ(δmf) are estimated with σf = {0.4, 0.6, 0.8} (Figure 8); Bp(δmq0), Sp(δmq0), Bθ(δmq0) and Sθ(δmq0) are estimated with σc = {0.6, 0.8, 1.0} (Figure 9); and Bp(δmqi), Sp(δmqi), Bθ(δmqi) and Sθ(δmqi) are estimated with σq = {0.5, 0.7, 0.9} (Figure 10). For the convenience of visualization, we compute the mean of each performance index across ϕ at each θ, plot the curve of this mean value with respect to θ, and include the standard deviation across ϕ as the vertical error bar at each θ. Each vertical error bar extends one standard deviation above and below the mean value at the corresponding θ. Moreover, to accommodate the large span between the maxima and minima of these performance indices, we use a logarithmic scale for the vertical axis.
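The plotting scheme described above (mean across ϕ at each θ, with one standard deviation as the error bar) can be sketched as follows. The grid values are made up for illustration, the function name is hypothetical, and the use of the population standard deviation is an assumption, since the text does not say which estimator was used.

```python
from statistics import mean, pstdev


def summarize_across_phi(index_grid):
    """Return (mean, std) across phi for each theta row of index_grid.

    index_grid[i][j] holds one performance index at the i-th theta sample and
    the j-th phi sample. The population standard deviation is an assumption.
    """
    return [(mean(row), pstdev(row)) for row in index_grid]


# Hypothetical values of one index on a 3 (theta) x 4 (phi) grid.
grid = [
    [2.0, 4.0, 4.0, 6.0],
    [10.0, 10.0, 10.0, 10.0],
    [1.0, 3.0, 1.0, 3.0],
]
summary = summarize_across_phi(grid)
for m, s in summary:
    # The error bar spans [m - s, m + s]; on a logarithmic axis the lower
    # end has to stay positive to be drawable.
    print(f"mean={m:.3f}  bar=[{m - s:.3f}, {m + s:.3f}]")
```

On a logarithmic vertical axis the bars appear asymmetric about the mean even though they are symmetric in value, which is worth keeping in mind when reading the curves.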

For the effect of δgpi, the simulation results show that, as σp increases,

- (1)

Bp(δgpi), Sp(δgpi) and Sθ(δgpi) have lower peaks, resulting in flatter variation across S (Figure 7a,b,d);

- (2)

Bθ(δgpi) decreases across S (Figure 7c).

For the effect of δmqi, δmf and δmq0, the simulation results show that, as σq, σf and σc increase,

- (1)

Bp(δmf), Bp(δmq0), Bp(δmqi), Bθ(δmf), Bθ(δmq0) and Bθ(δmqi) tend to have lower crests and decrease across the rest of S (Figure 8a,c, Figure 9a,c and Figure 10a,c);

- (2)

Sp(δmf), Sp(δmq0), Sp(δmqi), Sθ(δmf), Sθ(δmq0) and Sθ(δmqi) tend to have lower crests and are essentially independent of the variation of σf, σc and σq for the rest of S (Figure 8b,d, Figure 9b,d and Figure 10b,d).

The decrease in these performance indices across the whole or a portion of S as the input error standard deviations increase means that the proposed single-camera trilateration algorithm is less sensitive to higher input uncertainty under the corresponding geometric arrangements of the camera and landmarks.

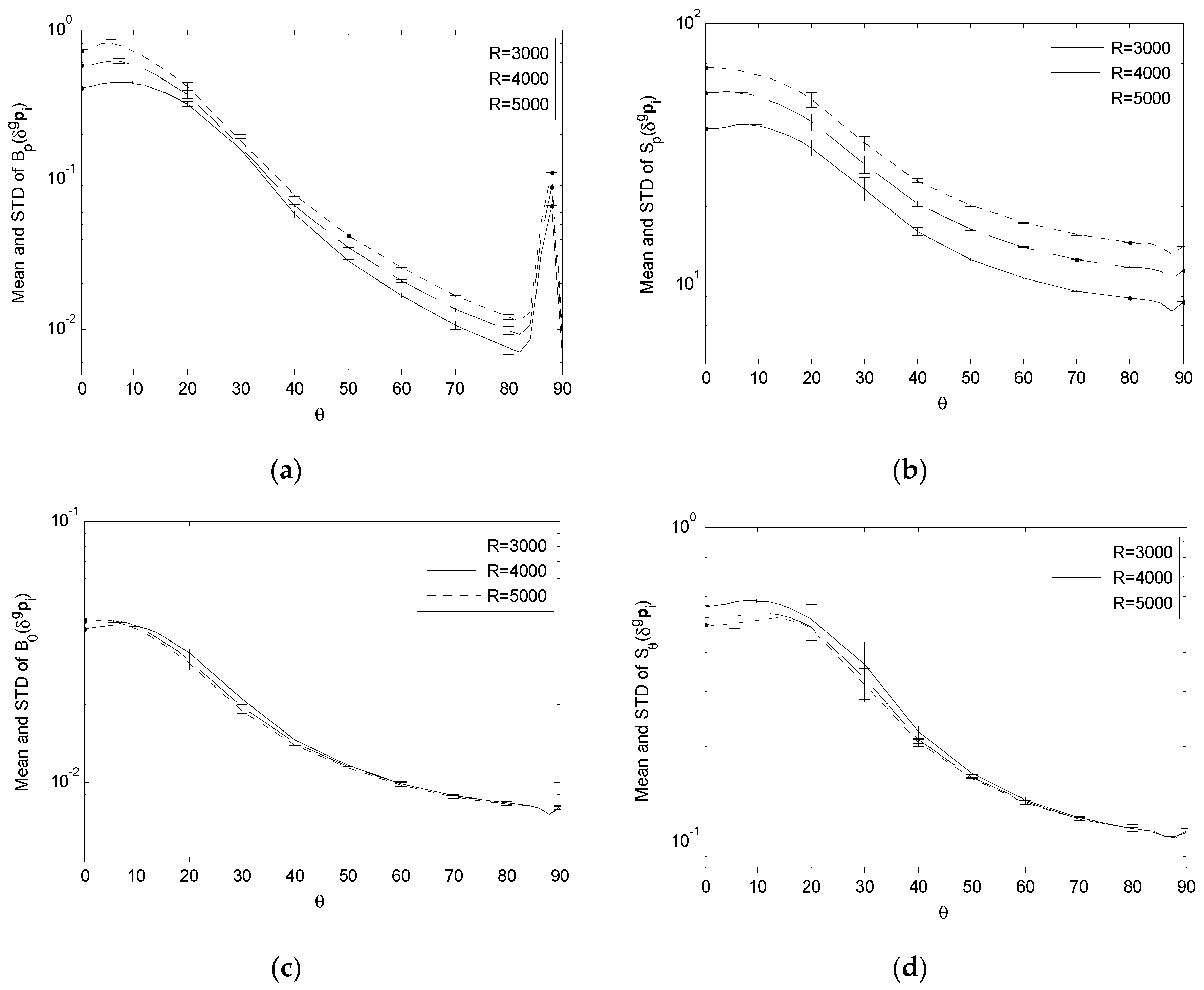

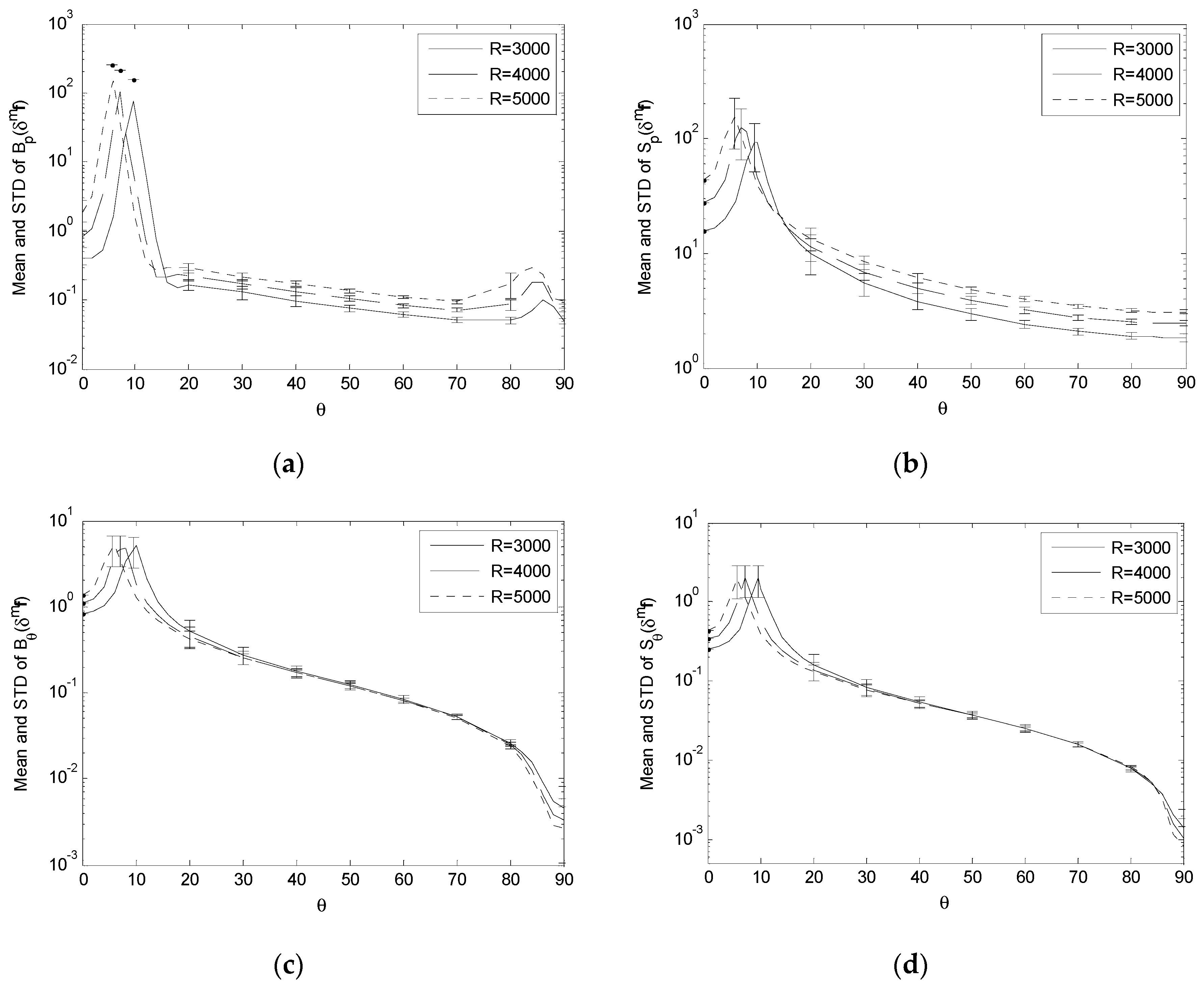

3.5. Variation of Performance Indices under Variation of Observation Distance

To examine the variation of Bp, Sp, Bθ and Sθ under the variation of R, we compare the values of these performance indices obtained with representative input error standard deviations on different data acquisition spheres with different radii R = {3000, 4000, 5000} (Figure 11, Figure 12, Figure 13 and Figure 14). For the convenience of comparison, we adopt the same scheme of data plotting as in Figure 7, Figure 8, Figure 9 and Figure 10.

For the effect of δgpi, the simulation results show that, as R increases,

- (1)

Bp(δgpi) and Sp(δgpi) increase across S (Figure 11a,b);

- (2)

Bθ(δgpi) and Sθ(δgpi) have very small changes and can be considered essentially independent of the variation of R (Figure 11c,d).

It means that a longer observation distance tends to increase the sensitivity of the positioning bias and uncertainty to the landmark position error, while it has little impact on the corresponding estimation bias and uncertainty of the camera orientation.

For the effect of δmqi, δmf and δmq0, the simulation results show that, as R increases,

- (1)

Bp(δmf), Sp(δmf), Bp(δmqi), Sp(δmqi), Bθ(δmqi) and Sθ(δmqi) in general have an “increasing and shifting” trend, i.e., their variation can be considered as increasing across θ with the peaks shifting towards θ = 0° (Figure 12a,b and Figure 14a–d);

- (2)

Bθ(δmf), Sθ(δmf), Bp(δmq0), Sp(δmq0), Bθ(δmq0) and Sθ(δmq0) essentially have their peaks shift towards θ = 0° (Figure 12c,d and Figure 13a–d).

This means that a longer observation distance tends to increase the sensitivity of the positioning bias and uncertainty to the focal length error, and the sensitivity of both the positioning and orienting bias and uncertainty to the landmark segmentation error. Apart from causing the peaks to shift, however, it has little impact on the orienting bias and uncertainty due to the focal length error, or on the positioning and orienting bias and uncertainty due to the image center error.

3.6. Error-Prone Regions in Performance Indices

Figure 4, Figure 5, Figure 6, Figure 8, Figure 9, Figure 10, Figure 12, Figure 13 and Figure 14 indicate that a sharp high peak consistently appears in Bp, Sp, Bθ and Sθ of δmf, δmqi and δmq0. Each performance index increases to form a high peak as θ increases from 0°, and in general decreases as θ further increases towards 90°. (Though Bp(δmf) and Bp(δmq0) have a second peak later on, it is not as significant as the first peak.) The high peak stands out sharply from its neighborhood. On the other hand, the crests and transitions in Figure 3, Figure 7 and Figure 11, which show the effect of landmark position errors on the performance indices, are relatively mild.

This high peak is of particular interest. It reflects:

- (1)

The camera localization algorithm is more sensitive to the errors from the calibration of the camera parameters and the segmentation of the landmarks from the image than to the errors in positioning the landmarks in the environment.

- (2)

The neighborhood of a high peak represents a range of camera orientations (relative to the base plane) from which the camera localization is highly sensitive to the errors in camera parameters and image segmentation, resulting in high estimation bias and uncertainty.

Thus, improving the accuracy of camera calibration and image segmentation will help to improve the accuracy of camera localization.

Moreover, knowing the values of θ corresponding to the peaks will help to avoid those error-prone regions in practice. The simulation results reveal that:

- (1)

Given a specific geometric arrangement of the landmarks and a specific observation distance, the peaks of all these performance indices appear at the same θ value, as shown in Figure 8, Figure 9 and Figure 10;

- (2)

This means that the θ value corresponding to the peaks in the above performance indices is mostly determined by the geometric arrangement of the landmarks and camera.

Although a closed-form estimate of the peak θ value is not yet available, a close approximation can be found through simulations. Specifically, in our example where the three reference points define an equilateral triangle, the simulation results show that the peaks occur when the camera is located above the inscribed circle of the base triangle. That is, the θ value corresponding to the peaks is well approximated as:

where r denotes the base radius. This relationship has been verified by comparing the numerically estimated peak locations in these performance indices with θ calculated from Equation (26) (Table 1). Since the θ value at which the peaks appear is consistent across Bp, Sp, Bθ and Sθ of δmf, δmqi and δmq0, only Bp(δmf) is reported here as a representative.

Table 1 shows a high consistency between the numerically estimated θ and that calculated from Equation (26). Since the high peaks in these performance indices mean high bias and uncertainty in camera pose estimation, tracking the θ at which an observation is made can alert the localization process to the error-prone region in practice.
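The geometric statement above (the peaks occur when the camera is above the inscribed circle of the base triangle) can be turned into a small numerical check. The sketch below is an illustration under assumptions that this section does not spell out, and it should not be read as a transcription of Equation (26): θ is taken to be measured from the normal of the base plane, R is the distance from the center of the base triangle to the camera, and the base radius r is taken to be the circumradius of the equilateral triangle, so that the inscribed circle has radius r/2. Under those assumptions the camera's horizontal offset R sin θ equals r/2 at the peak. The function names, the value r = 1000 and the 5° margin are hypothetical.

```python
import math


def predicted_peak_theta_deg(r, R):
    """Tilt angle (deg) at which the camera sits above the inscribed circle.

    Assumes r is the circumradius of the equilateral base triangle (inscribed
    radius r / 2) and theta is measured from the base-plane normal, so the
    horizontal offset of the camera is R * sin(theta).
    """
    if R <= 0 or r <= 0 or r / 2.0 > R:
        raise ValueError("need 0 < r / 2 <= R")
    return math.degrees(math.asin(r / (2.0 * R)))


def in_error_prone_region(theta_deg, r, R, margin_deg=5.0):
    """Flag observations whose tilt angle is within margin_deg of the peak."""
    return abs(theta_deg - predicted_peak_theta_deg(r, R)) <= margin_deg


# Hypothetical base radius; the R values match the simulated sphere radii.
for R in (3000.0, 4000.0, 5000.0):
    print(R, round(predicted_peak_theta_deg(1000.0, R), 2))
```

Under these assumptions the predicted angle decreases as R grows, which agrees qualitatively with the peaks shifting towards θ = 0° reported in Section 3.5.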

The above discussion shows that the singular cases represented by the sharp high peaks in the performance analysis plots are related to both the spatial relationship between the camera and landmarks and the use of camera imaging as the input. Equation (26) provides an effective tool for predicting potentially singular observation positions. Ultimately, a closed-form representation of the performance indices would allow an analytical treatment of the singular cases. This will be explored in our future work.

4. Experimental Test

An experiment was also carried out to further verify the effectiveness of the proposed single-camera trilateration algorithm, and check the estimation error under the combined effect of various input errors.

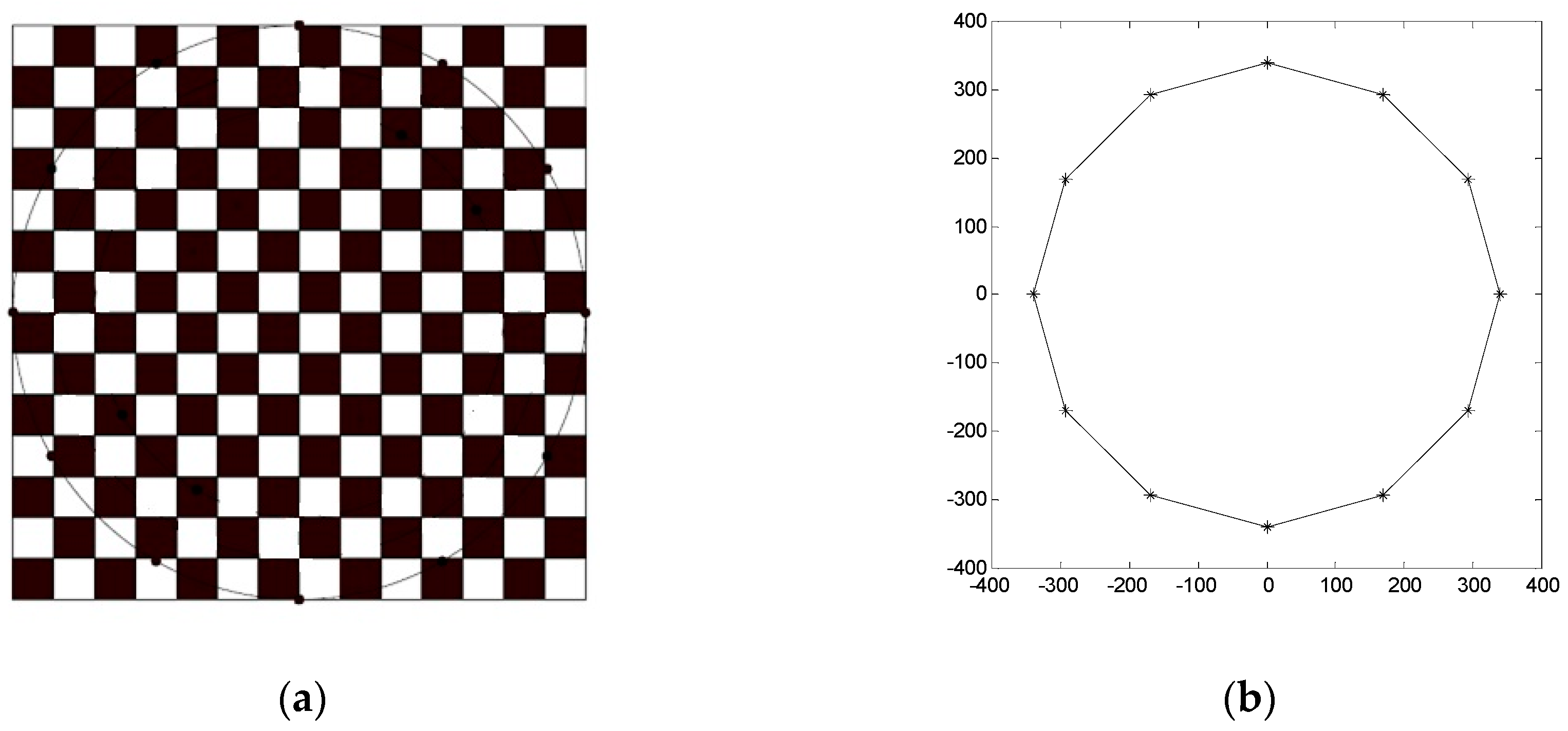

A landmark pattern was designed as shown in Figure 15a. It consists of a 679 mm × 679 mm square-shaped checker board pattern and a circle co-centered with the checker board and with a diameter of 679 mm. There are 12 landmark dots equally spaced on the circle, as shown in Figure 15b. The pattern was hung under the ceiling of an indoor experimental space with the base plane parallel to the flat floor. We used the base plane as the XY plane of the global frame of reference, with the Z axis pointing downwards and the center of the pattern as the origin of the frame.

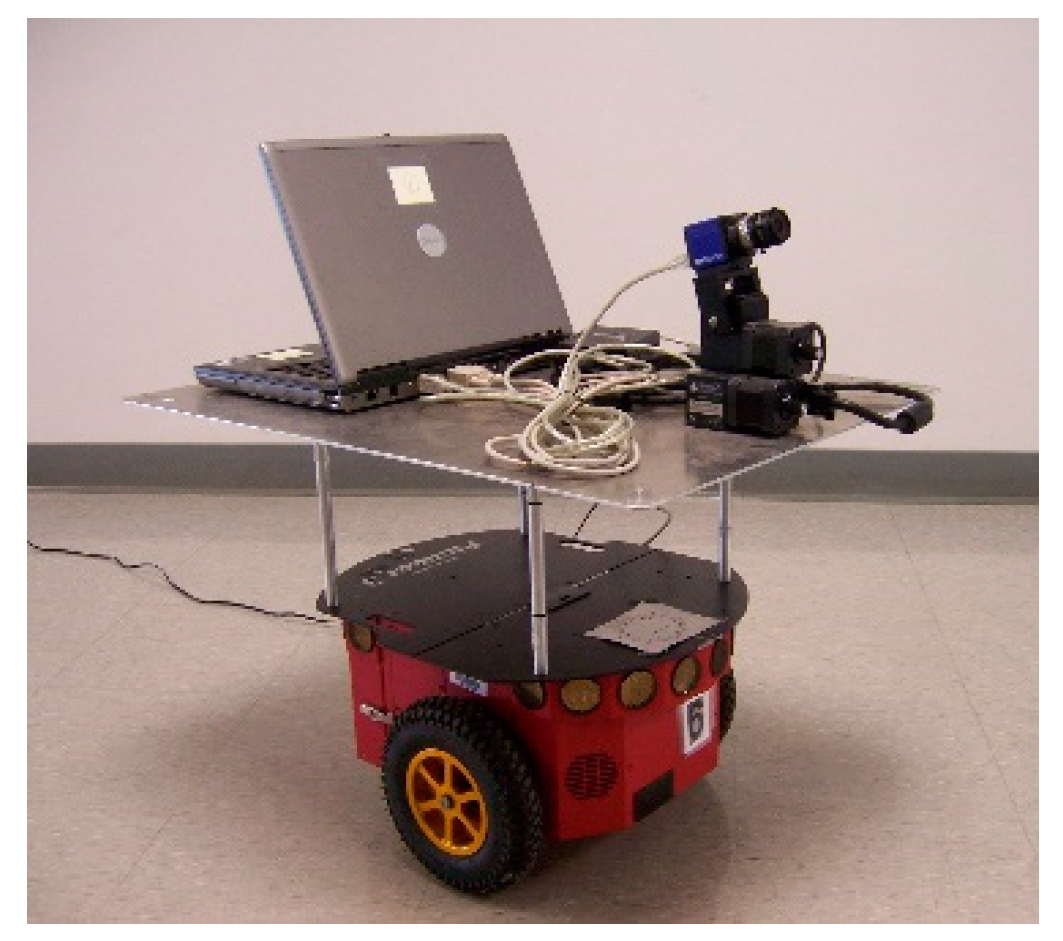

The same camera that was calibrated to provide realistic camera parameter values for the above simulations was installed on a Directed Perception PTU-D46-17 controllable pan-tilt unit on top of a Pioneer 3-DX mobile robot (Figure 16), such that the camera was kept on a plane parallel to the base plane at a distance of 1600 mm.

We moved the camera (by moving the mobile robot and adjusting the pan-tilt unit) to a number of locations at different observation distances R (defined in Section 3.2), one location per distance. At each location (each R), we adjusted the tilt angle θ and pan angle ϕ (defined in Section 3.2) of the camera using the pan-tilt unit such that the center of the landmark pattern fell on the image center of the camera, which kept the camera oriented towards the landmark pattern. At each location, an image of the landmark pattern was taken by the camera and stored in the onboard laptop computer.

The collected landmark images were processed after the data acquisition process. To focus on evaluating the proposed single-camera trilateration algorithm itself, we segmented the landmarks from each image manually after correcting the image distortion. Because the camera optical center is not a physical point on the camera, it is highly difficult, if not impossible, to directly measure the position of the camera optical center and the orientation of the camera optical axis. Thus it is highly difficult to evaluate the localization accuracy of the proposed algorithm against the ground truth of the camera poses in the global frame. Instead, we chose to compare the camera localization results from the proposed single-camera trilateration algorithm with those obtained from camera calibration (using the camera calibration toolbox in [62]). The camera calibration process calibrated both the intrinsic parameters of the camera, which were input to the proposed algorithm for camera pose estimation, and the extrinsic parameters associated with each calibration image of the checker board pattern, which were used as the reference camera pose estimate to reflect the localization accuracy of the proposed algorithm.

To evaluate the accuracy of the proposed single-camera localization algorithm at different observation distances, we would like to obtain a collection of camera localization results corresponding to different pan angles ϕ at each observation distance R, so that we can derive the statistics of localization accuracy at each R. Because we adjusted the pan-tilt unit to align the camera optical axis with the center of the landmark pattern at each R, the tilt angle θ is fixed at each R. Due to the size limitation of the experimental space, it was difficult for us to pan the camera all the way around the Z axis of the global frame as we kept increasing R. Instead, we took advantage of the design of our landmark pattern. With 12 landmark dots equally spaced on the circle as shown in Figure 15b, we have 12 equally spaced landmark sets labeled as (1, 5, 9), (2, 6, 10), (3, 7, 11), (4, 8, 12), (5, 9, 1), (6, 10, 2), (7, 11, 3), (8, 12, 4), (9, 1, 5), (10, 2, 6), (11, 3, 7) and (12, 4, 8). As mentioned above, at each R (and θ), we took one camera image of the landmark pattern. By using different 3-landmark sets from the same image, we in effect localize the camera at different ϕ. For example, using landmark set (2, 6, 10) is equivalent to panning the camera from landmark set (1, 5, 9) about the global Z direction by ∆ϕ = 30°. In this way, instead of physically panning the camera, we obtained camera localization results corresponding to different ϕ by using different landmark sets. On the other hand, at each R (and θ), the reference camera pose estimate corresponding to each landmark set was obtained by rotating the calibrated camera pose about the global Z axis by the proper ∆ϕ; the calibrated pose was calculated from the image of the checker board pattern at R (and θ) using the camera calibration toolbox and corresponds to the landmark set (1, 5, 9).
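The landmark-set bookkeeping described above can be sketched in a few lines. The function names are hypothetical, and the sign convention of the rotation about Z (counter-clockwise when viewed from +Z) is an assumption; the text only states that consecutive sets differ by ∆ϕ = 30°.

```python
import math


def landmark_sets(n_dots=12, step=4):
    """List the 3-dot sets (k, k+4, k+8) with 1-based, wrap-around labels."""
    return [
        tuple((k - 1 + j * step) % n_dots + 1 for j in range(3))
        for k in range(1, n_dots + 1)
    ]


def equivalent_pan_deg(set_index, n_dots=12):
    """Pan offset of the set_index-th set (0-based) relative to set (1, 5, 9)."""
    return set_index * 360.0 / n_dots


def rotate_about_z(point, angle_deg):
    """Rotate a 3D point about the Z axis (assumed sign: CCW seen from +Z)."""
    a = math.radians(angle_deg)
    x, y, z = point
    return (x * math.cos(a) - y * math.sin(a),
            x * math.sin(a) + y * math.cos(a),
            z)


sets = landmark_sets()
print(sets[1], equivalent_pan_deg(1))  # (2, 6, 10) 30.0
```

A reference position for set k could then be obtained by applying `rotate_about_z` with the corresponding offset to the calibrated position for set (1, 5, 9); the orientation would need the same rotation applied.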

The localization results from the proposed single-camera localization algorithm are compared with those from the camera calibration process in Table 2. The calibrated camera pose is used as the reference estimate, and the corresponding calibration error reported by the camera calibration toolbox is presented as the standard deviation of the camera pose calibration. The difference between the camera pose estimated using the proposed algorithm and the calibrated camera pose is recorded as ∆p0 (position estimation difference) and ∆θc (orientation estimation difference), and the mean and standard deviation of these differences are reported. The results in Table 2 show that on average the proposed algorithm attains a localization estimate very close to that attained with camera calibration. The average difference in position estimation is only about 6 mm at an observation distance of R = 4.3 m (corresponding to Rh = 4 m in Table 2), and is smaller at shorter observation distances; the average difference in orientation estimation remains lower than 0.3° for observation distances R ≤ 4.3 m. While camera calibration cannot be used for real-time localization of a moving camera, the proposed algorithm, which provides a comparable estimate of the camera pose on the go, presents an effective online localization approach for many mobile robot applications.

We also notice that the difference in camera position estimation between the two compared methods and the associated standard deviation increase as the observation distance increases. This is mainly because the effective size of the pixels increases as the distance increases. Since the proposed algorithm depends on the landmarks segmented from a single image, its camera positioning error clearly increases with the observation distance and thus with the effective pixel size, whereas the camera position estimation error with camera calibration increases much more slowly, because the calibration process takes advantage of all the images taken at different distances and all the grid corners of the checker board pattern to average out the effect of the increasing effective pixel size. On the other hand, the difference in camera orientation estimation between the two compared methods and the associated standard deviation do not appear to be affected as the observation distance increases, due to the cancellation between the effect of the observation distance and that of the effective pixel size. Other factors contributing to the localization error of both methods include the calibration error of the camera intrinsic parameters, and the positioning errors of the landmarks and grid corners due to the printing inaccuracy of the landmark pattern and manual measurement inaccuracy.
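The correspondence between R = 4.3 m and Rh = 4 m quoted in the comparison above is consistent with reading Rh as the horizontal distance from the pattern center to the camera, combined with the 1600 mm separation between the camera plane and the base plane. That reading is an assumption (Table 2 is not reproduced here), as is the convention that θ is measured from the base-plane normal; the function name is hypothetical.

```python
import math


def observation_geometry(r_h_mm, height_mm=1600.0):
    """Return (R in mm, tilt angle in degrees) for a horizontal offset r_h_mm.

    Assumes R_h is the horizontal distance between the pattern center and the
    camera, and that theta is measured from the base-plane normal.
    """
    R = math.hypot(r_h_mm, height_mm)
    theta_deg = math.degrees(math.atan2(r_h_mm, height_mm))
    return R, theta_deg


R, theta = observation_geometry(4000.0)
print(f"R = {R / 1000:.2f} m, theta = {theta:.1f} deg")
```

With Rh = 4000 mm this gives R of roughly 4308 mm, which rounds to the 4.3 m quoted in the text.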

In addition, to test the speed of the proposed single-camera trilateration algorithm, we ran a compiled standalone executable (exe file) of the algorithm on a laptop PC with a 1.66 GHz Intel Core 2 CPU to process the experimental data. The average estimation time was about 0.06762 s. In our implementation, the main time-consuming steps were solving Equations (5) and (6), where the Newton–Raphson method is used to search for the solutions of the associated least-squares optimization problems in order to obtain an optimal camera pose estimate.
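To give a feel for the kind of iterative least-squares search mentioned above, the following sketch solves the classical ranging form of trilateration from Equation (1), i.e., it finds p0 from known reference positions pi and distances ri. It is not the paper's implementation: Equations (5) and (6) are not reproduced here, and the sketch swaps in a plain Gauss-Newton iteration (a common simplification of Newton-type methods for least squares) rather than the Newton–Raphson scheme the authors used. All function names and test values are hypothetical.

```python
import math


def solve3(A, b):
    """Solve a 3x3 linear system A x = b by Gaussian elimination with pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for c in range(3):
        pivot = max(range(c, 3), key=lambda i: abs(M[i][c]))
        M[c], M[pivot] = M[pivot], M[c]
        for i in range(c + 1, 3):
            f = M[i][c] / M[c][c]
            for j in range(c, 4):
                M[i][j] -= f * M[c][j]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        s = sum(M[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = (M[i][3] - s) / M[i][i]
    return x


def trilaterate(refs, ranges, guess, iters=50, tol=1e-12):
    """Gauss-Newton minimization of sum_i (||p - p_i|| - r_i)^2 over p."""
    p = list(guess)
    for _ in range(iters):
        JTJ = [[0.0] * 3 for _ in range(3)]
        JTf = [0.0] * 3
        for ref, r in zip(refs, ranges):
            d = [p[k] - ref[k] for k in range(3)]
            dist = math.sqrt(sum(v * v for v in d))  # assumes p is not on a reference
            f = dist - r                 # range residual for this reference
            g = [v / dist for v in d]    # gradient of the residual w.r.t. p
            for a in range(3):
                JTf[a] += g[a] * f
                for c in range(3):
                    JTJ[a][c] += g[a] * g[c]
        step = solve3(JTJ, [-v for v in JTf])
        p = [p[k] + step[k] for k in range(3)]
        if sum(s * s for s in step) < tol:
            break
    return p


refs = [(0, 0, 0), (1000, 0, 0), (0, 1000, 0), (0, 0, 1000)]
truth = (300.0, 400.0, 500.0)
ranges = [math.dist(truth, ref) for ref in refs]
estimate = trilaterate(refs, ranges, guess=(250.0, 350.0, 450.0))
print(estimate)
```

Each iteration costs one small linear solve, so the run time is dominated by the number of iterations needed, which in turn depends on the quality of the initial guess.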

5. Conclusions and Future Work

This paper has introduced an effective single-camera trilateration scheme for regular forward-looking cameras. Based on the classical pin-hole model and principles of perspective geometry, the position and orientation of such a camera can be calculated successively from a single image of a few landmarks at known positions. The proposed scheme is an advantageous addition to the category of trilateration techniques:

- (1)

The camera position is trilaterated from multiple simultaneously captured landmarks, which naturally resolves the common concern of the synchronization among multiple distance measurements in ranging-based trilateration systems.

- (2)

The estimation of the camera orientation becomes an integrated part of the proposed trilateration scheme, taking advantage of the geometrical information contained in a single image of multiple landmarks.

- (3)

The instantaneous camera pose is estimated from only one single image, which naturally resolves the issue of mismatching among a pair of images in the stereovision-based trilateration.

The proposed single-camera trilateration scheme provides a convenient tool for the self-localization of mobile robots and other vehicles which are equipped with cameras. Because it depends on identifying the landmarks involved, the proposed algorithm targets environments with identifiable landmarks, such as indoor and outdoor environments with artificial landmarks, metropolitan environments, and natural environments with distinct landmarks.

The performance of the proposed algorithm has been analyzed in this work based on extensive simulations with a representative set of geometric arrangements among the landmarks and camera and a representative set of input errors. In practice, simulations with the settings of a targeted environment and the input errors of a targeted camera will provide an effective way to predict the algorithm performance in the specific environment and system. The simulation results will provide valuable guidance for the implementation of the proposed algorithm, and help mobile robots achieve accurate self-localization by avoiding sensitive regions of the performance indices.

Nevertheless, a closed-form solution will further reduce the time complexity of the proposed algorithm, and a closed-form estimation of the performance indices will enable more efficient performance analysis and prediction of the proposed algorithm. As pointed out, existing closed-form solutions can be adopted in steps (2) and (3) of the proposed framework (Algorithm 1) to solve the 3-landmark case. However, it is challenging to find a closed-form solution which is globally optimal and applies to the general case of 3D trilateration based on more than 3 landmarks. This will be considered in our future work.

Moreover, we target the development of an automatic localization system for mobile robot self-localization based on the proposed single-camera trilateration scheme. The experimental system shown in Figure 16 has provided the necessary onboard hardware for the proposed scheme. Our future work will focus on the development of the software system and supporting vision algorithms.

In addition, over the years of research and development, numerous localization algorithms and approaches in different categories have been proposed in the literature, from deterministic to probabilistic, and from ranging-based to image-based. The work of this paper is carried out within the scope of trilateration, with the intention of providing a convenient addition to this category of localization approaches. Meanwhile, developments in other categories of localization approaches are highly significant, e.g., the approaches in the broad category of computer vision-based localization [79, 80, 81]. Our future work on localization may expand into those categories, and comparative studies among related approaches are expected.