Using a GPU to Accelerate a Longwave Radiative Transfer Model with Efficient CUDA-Based Methods

Abstract

1. Introduction

2. Related Work

3. Model Description and Experiment Platform

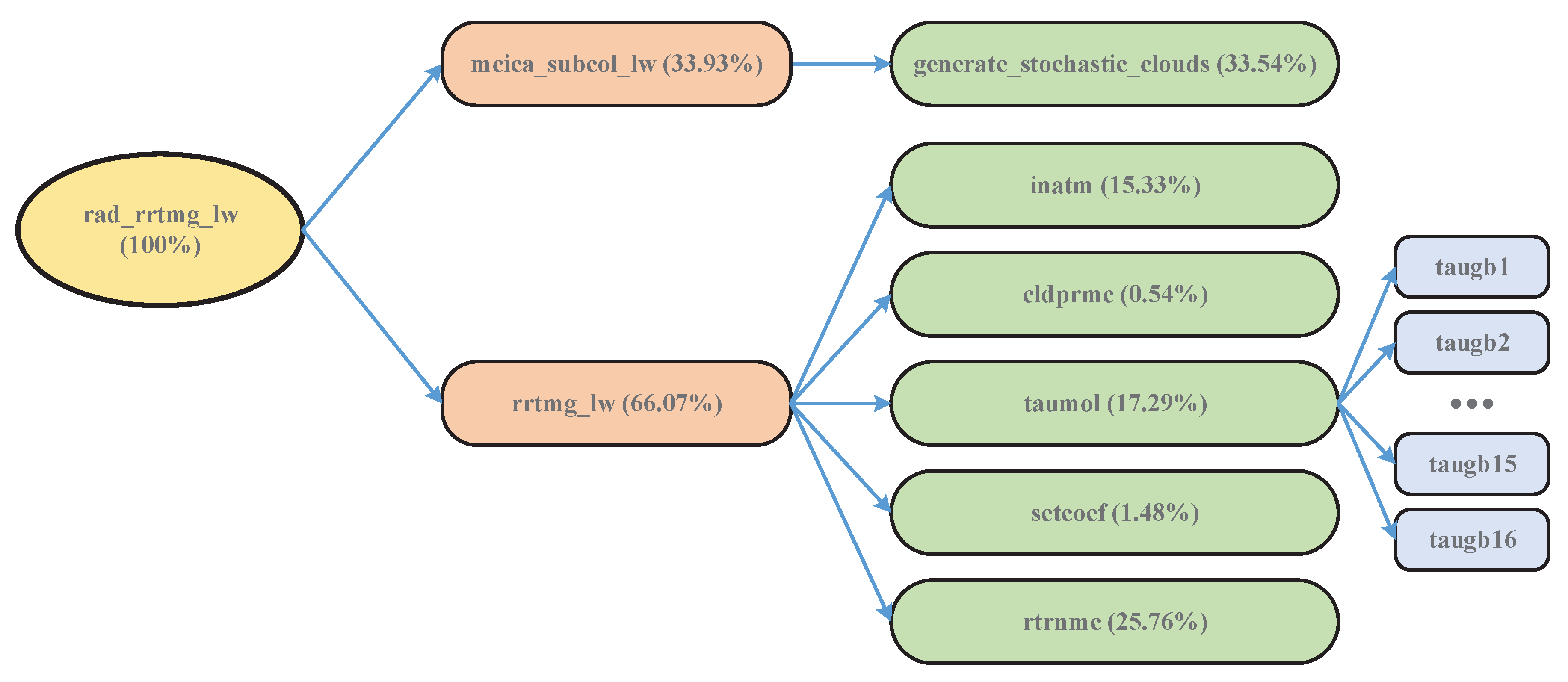

3.1. RRTMG Radiation Scheme

3.2. RRTMG_LW Code Structure

| Algorithm 1: Computing procedure of original rrtmg_lw. |

| subroutine rrtmg_lw(parameters) //ncol is the number of horizontal columns |

| 1. do iplon=1, ncol |

| 2. call inatm(parameters) |

| 3. call cldprmc(parameters) |

| 4. call setcoef(parameters) |

| 5. call taumol(parameters) |

| 6. if aerosol is active then //Combine gaseous and aerosol optical depths |

| 7. taut(k, ig) = taug(k, ig) + taua(k, ngb(ig)) |

| 8. else |

| 9. taut(k, ig) = taug(k, ig) |

| 10. end if |

| 11. call rtrnmc(parameters) |

| 12. Transfer fluxes and heating rate to output arrays |

| 13. end do |

| end subroutine |
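Steps 6 to 10 of Algorithm 1 can be illustrated with a small host-side sketch. It is written in plain Python rather than the Fortran of the model, uses 0-based indices, and runs on made-up toy values; the function name `combine_optical_depths` and the array shapes are assumptions made only for this illustration.

```python
def combine_optical_depths(taug, taua, ngb, aerosol_active):
    """Return the total optical depth taut[k][ig] for one column.

    taug: gaseous optical depth, indexed [layer][g-point]
    taua: aerosol optical depth, indexed [layer][band]
    ngb:  band index (0-based in this sketch) of each g-point
    """
    nlayers = len(taug)
    ngpt = len(ngb)
    taut = [[0.0] * ngpt for _ in range(nlayers)]
    for k in range(nlayers):
        for ig in range(ngpt):
            # Gaseous contribution is always present.
            taut[k][ig] = taug[k][ig]
            # Aerosol contribution is looked up per band, not per g-point.
            if aerosol_active:
                taut[k][ig] += taua[k][ngb[ig]]
    return taut


# Toy data (illustrative only): 2 layers, 3 g-points spread over 2 bands.
taug = [[0.5, 0.25, 0.125], [1.0, 2.0, 4.0]]
taua = [[0.5, 0.25], [1.0, 0.5]]
ngb = [0, 0, 1]

taut_on = combine_optical_depths(taug, taua, ngb, aerosol_active=True)
taut_off = combine_optical_depths(taug, taua, ngb, aerosol_active=False)
```

With aerosol switched off, the result is simply a copy of `taug`, which corresponds to step 9 of the algorithm.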

3.3. Experimental Platform

4. GPU-Enabled Acceleration Algorithms

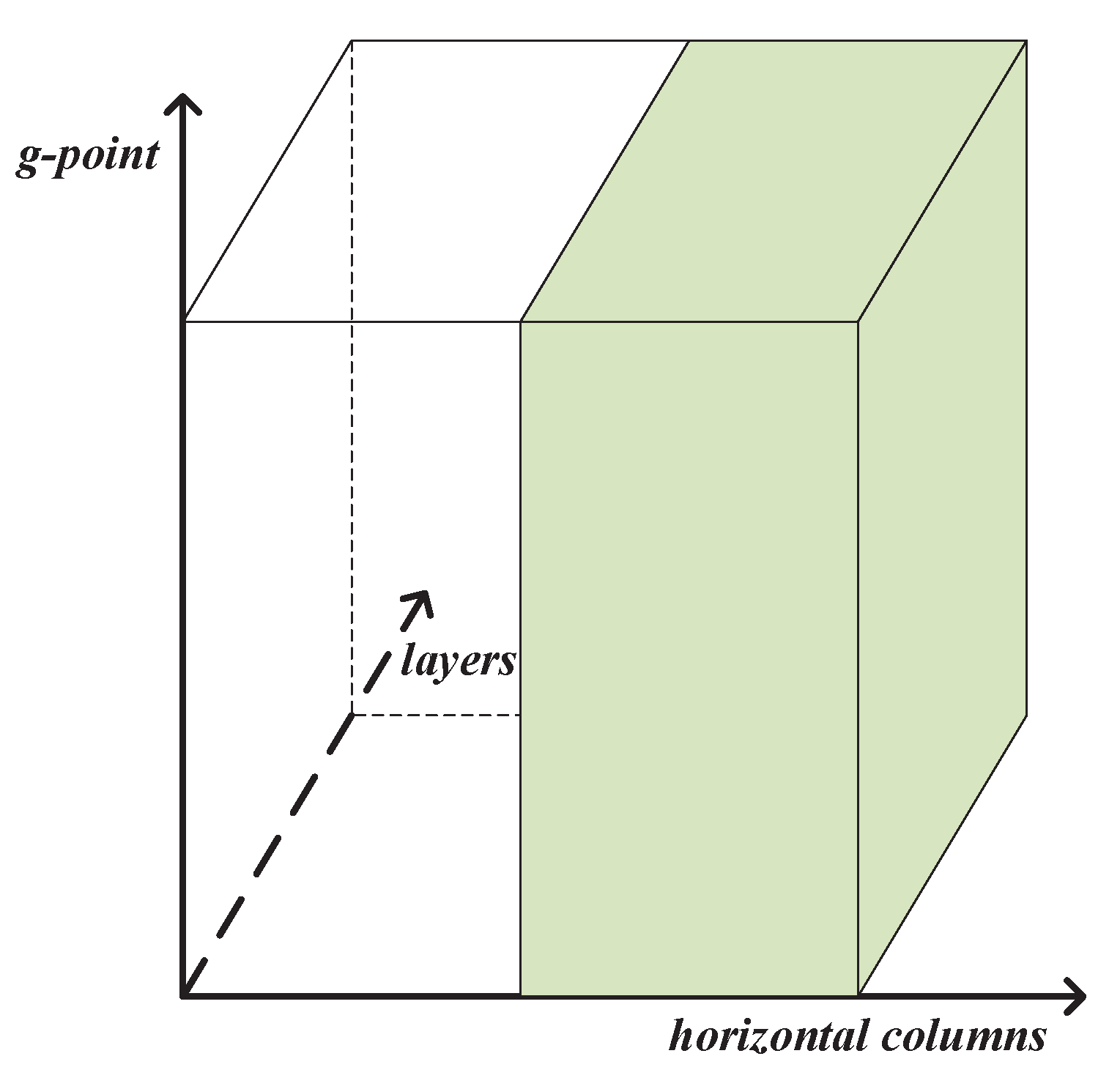

4.1. Parallel Strategy

4.2. Acceleration Algorithm with One-Dimensional Domain Decomposition

- (1) The implementation of the kernel inatm_d based on CUDA Fortran is illustrated in Algorithm 3. Within it, threadIdx%x identifies a unique thread inside a thread block, blockIdx%x identifies a unique thread block inside a kernel grid, and blockDim%x identifies the number of threads in a thread block. iplon, the coordinate of the horizontal grid points, also represents the ID of a global thread in CUDA and can be expressed as iplon = (blockIdx%x − 1) × blockDim%x + threadIdx%x. According to the design shown in Algorithm 3, ncol threads can execute concurrently.

- (2) The other kernels are designed in the same way, as shown in Algorithms A1–A4 of Appendix A.1. To avoid needless repetition, these algorithms are described only in rough form.

- (3) taumol_d needs to call 16 subroutines (taugb1_d∼taugb16_d). In Algorithm A3 of Appendix A.1, taugb1_d with the device attribute is described. With respect to the algorithm, the other 15 subroutines (taugb2_d∼taugb16_d) are similar to taugb1_d, so they are not described further here.
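The 1-D thread-to-column mapping of Algorithm 3 can be emulated on the host to check that every column is visited exactly once. The sketch below is plain Python, not CUDA Fortran; it keeps the 1-based indices of CUDA Fortran, and the helper names `global_index_1d`, `blocks_needed`, and `active_columns` are hypothetical.

```python
def global_index_1d(block_idx, block_dim, thread_idx):
    """1-based global thread ID: (blockIdx%x - 1) * blockDim%x + threadIdx%x."""
    return (block_idx - 1) * block_dim + thread_idx


def blocks_needed(ncol, block_dim):
    """Number of thread blocks that covers ncol columns (ceiling division)."""
    return (ncol + block_dim - 1) // block_dim


def active_columns(ncol, block_dim):
    """Columns processed by threads that pass the bounds check of the kernel."""
    cols = []
    for b in range(1, blocks_needed(ncol, block_dim) + 1):
        for t in range(1, block_dim + 1):
            iplon = global_index_1d(b, block_dim, t)
            # Threads of the last block that fall beyond ncol stay idle.
            if 1 <= iplon <= ncol:
                cols.append(iplon)
    return cols


cols = active_columns(ncol=10, block_dim=4)
```

Here three blocks of four threads are launched for ten columns, and the two surplus threads of the last block are masked out by the `if` guard, which is the role of step 2 in Algorithm 3.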

| Algorithm 2: CUDA-based acceleration algorithm of RRTMG_LW with 1-D domain decomposition and the implementation of 1-D GPU version of the RRTMG_LW (G-RRTMG_LW). |

| subroutine rrtmg_lw_d1(parameters) |

| 1. Copy input data to GPU device |

| 2. call inatm_d(parameters) //Call the kernel inatm_d |

| 3. call cldprmc_d(parameters) //Call the kernel cldprmc_d |

| 4. call setcoef_d(parameters) //Call the kernel setcoef_d |

| 5. call taumol_d(parameters) //Call the kernel taumol_d |

| 6. call rtrnmc_d(parameters) //Call the kernel rtrnmc_d |

| 7. Copy result to host |

| 8. if it is not completed goto 1 //Judge whether atmospheric horizontal profile data is completed |

| end subroutine |

| Algorithm 3: Implementation of inatm_d based on CUDA Fortran in 1-D decomposition. |

| attributes(global) subroutine inatm_d(parameters) |

| 1. iplon=(blockIdx%x−1) × blockDim%x+threadIdx%x |

| 2. if (iplon≥1 .and. iplon≤ncol) then |

| 3. Initialize variable arrays |

| 4. do lay = 1, nlayers-1 //nlayers=nlay + 1, nlay is number of model layers |

| 5. pavel(iplon,lay) = play_d(iplon,nlayers-lay) |

| 6. tavel(iplon,lay) = tlay_d(iplon,nlayers-lay) |

| 7. pz(iplon,lay) = plev_d(iplon,nlayers-lay) |

| 8. tz(iplon,lay) = tlev_d(iplon,nlayers-lay) |

| 9. wkl(iplon,1,lay) = h2ovmr_d(iplon,nlayers-lay) |

| 10. wkl(iplon,2,lay) = co2vmr_d(iplon,nlayers-lay) |

| 11. wkl(iplon,3,lay) = o3vmr_d(iplon,nlayers-lay) |

| 12. wkl(iplon,4,lay) = n2ovmr_d(iplon,nlayers-lay) |

| 13. wkl(iplon,6,lay) = ch4vmr_d(iplon,nlayers-lay) |

| 14. wkl(iplon,7,lay) = o2vmr_d(iplon,nlayers-lay) |

| 15. amm = (1._r8 - wkl(iplon,1,lay)) * amd + wkl(iplon,1,lay) * amw |

| 16. coldry(iplon,lay) = (pz(iplon,lay-1)-pz(iplon,lay)) * 1.e3_r8 * avogad / (1.e2_r8 * grav * amm * (1._r8 + wkl(iplon,1,lay))) |

| 17. end do |

| 18. do lay = 1, nlayers-1 |

| 19. wx(iplon,1,lay) = ccl4vmr_d(iplon,nlayers-lay) |

| 20. wx(iplon,2,lay) = cfc11vmr_d(iplon,nlayers-lay) |

| 21. wx(iplon,3,lay) = cfc12vmr_d(iplon,nlayers-lay) |

| 22. wx(iplon,4,lay) = cfc22vmr_d(iplon,nlayers-lay) |

| 23. end do |

| 24. do lay = 1, nlayers-1 |

| 25. do ig = 1, ngptlw //ngptlw is total number of reduced g-intervals |

| 26. cldfmc(ig,iplon,lay) = cldfmcl_d(ig,iplon,nlayers-lay) |

| 27. taucmc(ig,iplon,lay) = taucmcl_d(ig,iplon,nlayers-lay) |

| 28. ciwpmc(ig,iplon,lay) = ciwpmcl_d(ig,iplon,nlayers-lay) |

| 29. clwpmc(ig,iplon,lay) = clwpmcl_d(ig,iplon,nlayers-lay) |

| 30. end do |

| 31. end do |

| 32. end if |

| end subroutine |

4.3. Acceleration Algorithm with Two-Dimensional Domain Decomposition

| Algorithm 4: CUDA-based acceleration algorithm of RRTMG_LW with 2-D domain decomposition and the implementation of 2-D G-RRTMG_LW. |

| subroutine rrtmg_lw_d2(parameters) |

| 1. Copy input data to GPU device |

| 2. call inatm_d1(parameters) //Call inatm_d1 with 2-D decomposition |

| 3. call inatm_d2(parameters) //Call inatm_d2 with 2-D decomposition |

| 4. call inatm_d3(parameters) //Call inatm_d3 with 1-D decomposition |

| 5. call inatm_d4(parameters) //Call inatm_d4 with 2-D decomposition |

| 6. call cldprmc_d(parameters) //Call cldprmc_d with 1-D decomposition |

| 7. call setcoef_d1(parameters) //Call setcoef_d1 with 2-D decomposition |

| 8. call setcoef_d2(parameters) //Call setcoef_d2 with 1-D decomposition |

| 9. call taumol_d(parameters) //Call taumol_d with 2-D decomposition |

| 10. call rtrnmc_d(parameters) //Call rtrnmc_d with 1-D decomposition |

| 11. Copy result to host |

| 12. if it is not completed goto 1 //Judge whether atmospheric horizontal profile data is completed |

| end subroutine |

| Algorithm 5: Implementation of inatm_d based on CUDA Fortran in 2-D decomposition. |

| attributes(global) subroutine inatm_d(parameters) |

| 1. iplon = (blockIdx%x − 1) × blockDim%x + threadIdx%x |

| 2. lay = (blockIdx%y − 1) × blockDim%y + threadIdx%y |

| 3. if ((iplon≥1 .and. iplon≤ncol) .and. (lay≥1 .and. lay≤nlayers-1)) then |

| 4. Initialize variable arrays |

| 5. do ig = 1, ngptlw |

| 6. cldfmc(ig,iplon,lay) = cldfmcl_d(ig,iplon,nlayers-lay) |

| 7. taucmc(ig,iplon,lay) = taucmcl_d(ig,iplon,nlayers-lay) |

| 8. ciwpmc(ig,iplon,lay) = ciwpmcl_d(ig,iplon,nlayers-lay) |

| 9. clwpmc(ig,iplon,lay) = clwpmcl_d(ig,iplon,nlayers-lay) |

| 10. end do |

| 11. end if |

| end subroutine |
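The 2-D decomposition of Algorithm 5 assigns one thread to each (iplon, lay) pair instead of one thread to each column. As a rough host-side check, written in plain Python with hypothetical helper names and toy sizes, the sketch below enumerates a 2-D launch and collects the pairs that pass the bounds test of step 3.

```python
def launch_grid_2d(ncol, nlay, block_x, block_y):
    """Blocks needed along each axis (ceiling division)."""
    return ((ncol + block_x - 1) // block_x,
            (nlay + block_y - 1) // block_y)


def covered_pairs(ncol, nlay, block_x, block_y):
    """List every (iplon, lay) pair that passes the bounds check."""
    grid_x, grid_y = launch_grid_2d(ncol, nlay, block_x, block_y)
    pairs = []
    for bx in range(1, grid_x + 1):
        for by in range(1, grid_y + 1):
            for tx in range(1, block_x + 1):
                for ty in range(1, block_y + 1):
                    # Same 1-based index formulas as steps 1 and 2 of the kernel.
                    iplon = (bx - 1) * block_x + tx
                    lay = (by - 1) * block_y + ty
                    if 1 <= iplon <= ncol and 1 <= lay <= nlay:
                        pairs.append((iplon, lay))
    return pairs


# Toy sizes chosen for readability; nlay stands for the loop bound nlayers-1.
pairs = covered_pairs(ncol=10, nlay=5, block_x=4, block_y=2)
```

For these toy sizes the launch uses a 3 × 3 grid of 4 × 2 blocks, that is 72 threads, of which 50 do useful work. The loop over `ig` in Algorithm 5 is still executed serially inside each thread.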

5. Result and Discussion

5.1. Performance of 1-D G-RRTMG_LW

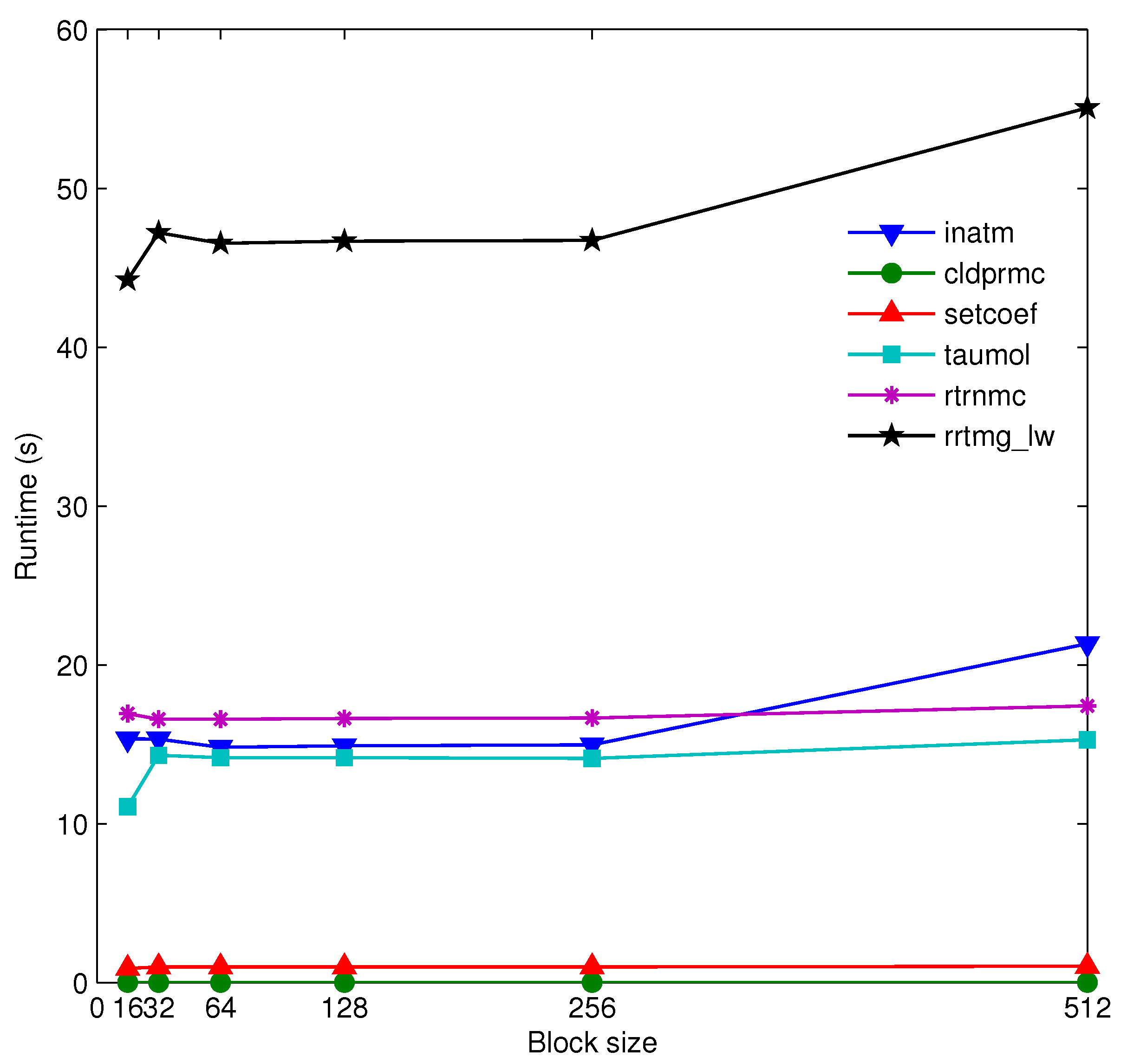

5.1.1. Influence of Block Size

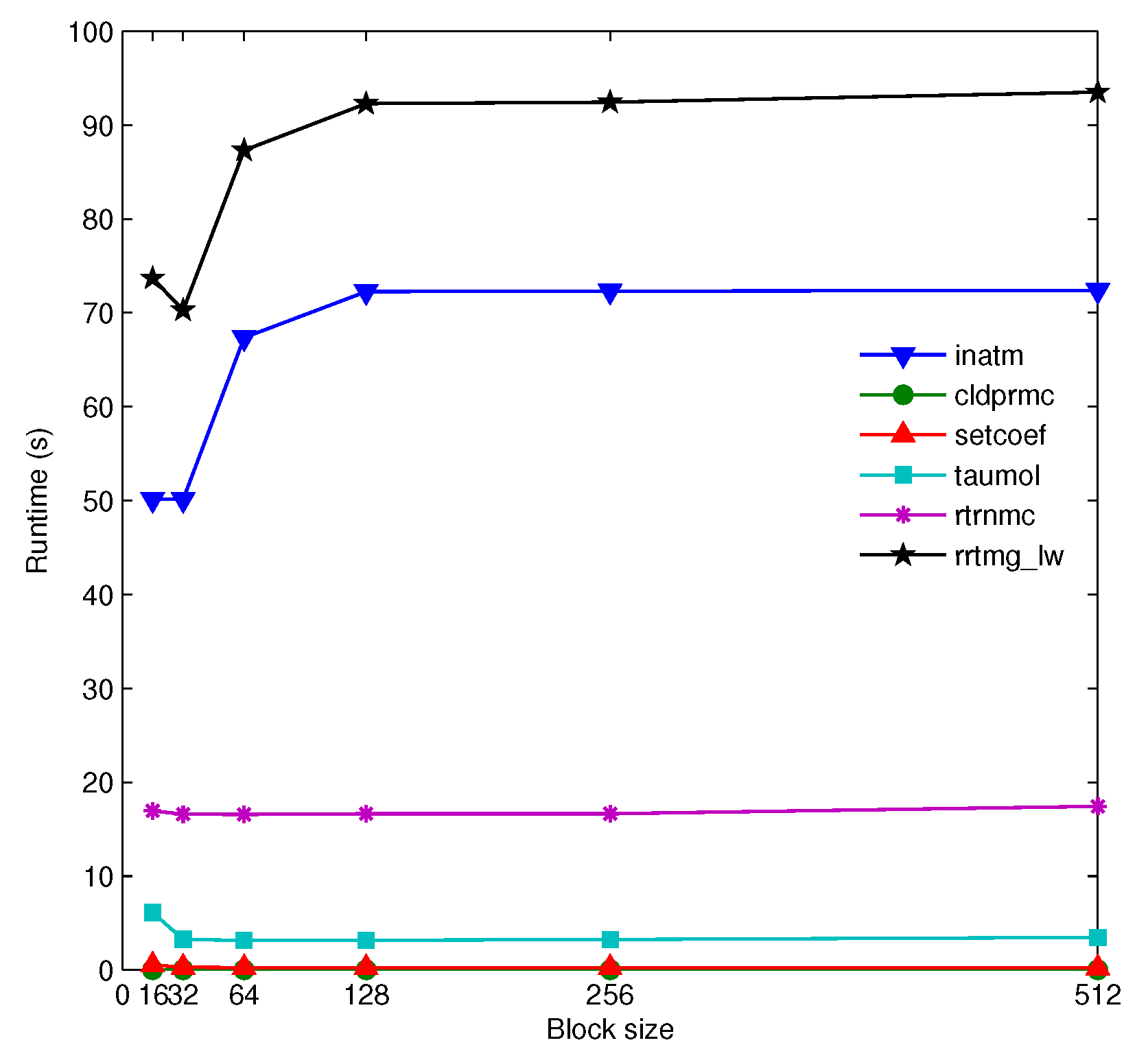

- (1) Generally speaking, increasing the block size can hide memory access latency to some extent and can improve the computational performance of parallel algorithms. Therefore, kernels with simple computations usually show optimal performance when the block size is 128 or 256.

- (2) The kernel with a large amount of calculation achieved optimal performance when the block size was 16. As the block size increased, its computational time increased overall. During the 1-D acceleration of this kernel, each thread performed a large amount of calculation and produced numerous temporary and intermediate values, which consumed a great deal of hardware resources. Because hardware resources are limited, each thread has fewer resources available when the block size is larger. Therefore, this kernel becomes slower as the block size increases.

5.1.2. Evaluations on Different GPUs

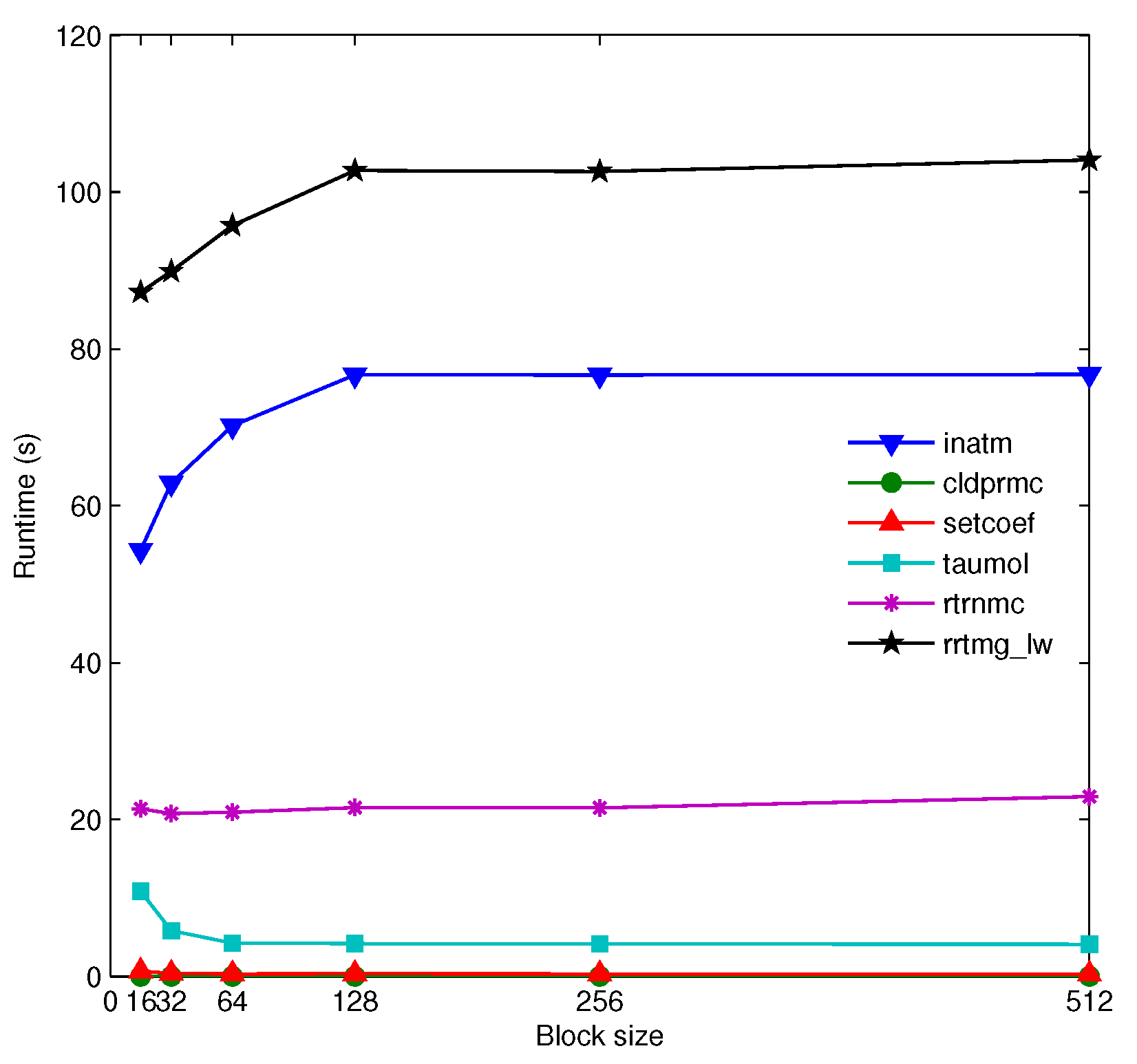

5.2. Performance of 2-D G-RRTMG_LW

5.2.1. Influence of Block Size

- (1) The 2-D inatm costs more computational time than its 1-D version. This indicates that inatm is not well suited to 2-D acceleration. Testing showed that the assignment of four three-dimensional arrays in the 2-D inatm required about 95% of the computational time. In the 2-D acceleration, more CUDA threads were started, and each CUDA thread had to complete the assignment of the four arrays in a do-loop, as shown in Algorithm 5, so the computation took too much time.

- (2)

- The 2-D achieved optimal performance when the block size was 256 or 512. During the 2-D acceleration, a larger number of threads were assigned, so each thread had fewer calculations, requiring fewer hardware resources. When the block size was 256 or 512, the assigned hardware resources of each thread were in a state of equilibrium, so in this case, the 2-D showed better performance.

5.2.2. Evaluations on Different GPUs

- (1) Likewise, because the K40 GPU has stronger computing power than the K20 GPU, the 2-D G-RRTMG_LW on the K40 GPU showed better performance.

- (2) The 2-D setcoef and taumol showed excellent performance improvements compared to their 1-D versions, especially taumol. There was no data dependence in taumol, which is computationally intensive, so a finer-grained parallelization of taumol was executed when more threads were used.

5.3. I/O Transfer

- (1) In the simulation of one model day, the RRTMG_LW performed 24 integrations. During each integration over all 128 × 256 grid points, the subroutine rrtmg_lw_d2 must be invoked 16 (128 × 256/ncol) times when ncol is 2048. Due to the memory limitation of the GPU, the maximum value of ncol on the K40 GPU was 2048. This means that the 2-D G-RRTMG_LW was invoked 16 × 24 = 384 times in the experiment. For each invocation, the input and output three-dimensional arrays must be transferred between the CPU and GPU, so the I/O transfer incurs a high communication cost.

- (2) After the 2-D acceleration of the RRTMG_LW, its computational time without I/O transfer was considerably shorter, so the I/O transfer cost became the main bottleneck limiting the performance improvement of the G-RRTMG_LW. In the future, compressing data and improving network bandwidth will help to reduce this I/O transfer cost.
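The invocation count in item (1) and the weight of the I/O transfer can be worked through numerically. The sketch below is plain Python and uses only figures quoted in this paper; pairing the K40 timing with I/O transfer (94.24 s) against the 2-D K40 timing without I/O transfer (34.9384 s) is an assumption made for this illustration, and the helper name `io_overhead` is hypothetical.

```python
# Invocation count for one model day.
grid_points = 128 * 256          # horizontal grid points
ncol = 2048                      # columns processed per invocation on the K40
integrations_per_day = 24

calls_per_integration = grid_points // ncol
total_calls = calls_per_integration * integrations_per_day


def io_overhead(total_time_s, compute_time_s):
    """Return (transfer time in s, transfer share of the total run time)."""
    transfer = total_time_s - compute_time_s
    return transfer, transfer / total_time_s


# Assumed pairing: rrtmg_lw on the K40 with and without I/O transfer.
k40_transfer_s, k40_fraction = io_overhead(94.24, 34.9384)
```

Under that assumption, roughly 59.3 s of the 94.24 s, or a little under two thirds of the run time, is spent moving data rather than computing.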

5.4. Discussion

- (1) The WRF RRTMG_LW on eight CPU cores achieved a speedup of 7.58× compared to its counterpart running on one CPU core [28]. Using one K40 GPU in the case without I/O transfer, the 2-D G-RRTMG_LW achieved a speedup of 18.52×. This shows that the RRTMG_LW obtains a greater performance improvement on one GPU than on one CPU with eight cores.

- (2) The RRTM_LW on the C2050 obtained an 18.2× speedup [13]. This shows that the 2-D G-RRTMG_LW obtained a speedup similar to that of the RRTM_LW accelerated in CUDA Fortran.

- (3) The CUDA Fortran version of the RRTM_LW in the GRAPES_Meso model obtained a 14.3× speedup [27], but the CUDA C version of the RRTMG_SW obtained a 202× speedup [31]. The reasons are as follows. (a) Zheng et al. ran the original serial RRTM_LW on an Intel Xeon 5500 and its GPU version on an NVIDIA Tesla C1060. Mielikainen et al. ran the original serial RRTMG_SW on an Intel Xeon E5-2603 and its GPU version on an NVIDIA K40. The Xeon 5500 has higher computing performance than the Xeon E5-2603, and the K40 has higher computing performance than the Tesla C1060. A slower CPU baseline and a faster GPU both raise the measured speedup, so Mielikainen et al. obtained a higher speedup. (b) Zheng et al. wrote the GPU code in CUDA Fortran, whereas Mielikainen et al. wrote it in CUDA C. CUDA C, with more mature techniques, usually performs better than CUDA Fortran.

- (4) If there is no I/O transfer between the CPU and GPU, the highest theoretical speedup of a numerical scheme under GPU parallelization is equal to the number of CUDA threads started during the acceleration. One K40 GPU has 2880 CUDA cores, so the highest speedup of the 2-D G-RRTMG_LW on one K40 GPU should be 2880×. Due to the limitation of GPU memory and the inevitable I/O transfer cost, it is very hard to reach this theoretical speedup. However, the acceleration algorithm can be optimized further, and the I/O transfer cost between the CPU and GPU can be minimized.
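The comparison in item (1) and the bound in item (4) can be reproduced from the reported timings. The sketch below is plain Python; it takes the rrtmg_lw CPU time (647.12 s) and the 2-D K40 time without I/O transfer (34.9384 s) from the result tables, and the helper name `speedup` is hypothetical.

```python
def speedup(cpu_time_s, gpu_time_s):
    """Speedup of the GPU run relative to the serial CPU run."""
    return cpu_time_s / gpu_time_s


cpu_time_s = 647.12       # rrtmg_lw on one CPU core
k40_2d_time_s = 34.9384   # 2-D G-RRTMG_LW on one K40, without I/O transfer

s_2d_k40 = speedup(cpu_time_s, k40_2d_time_s)

# How the GPU result compares with the eight-core CPU speedup of 7.58x [28].
ratio_vs_eight_cores = s_2d_k40 / 7.58

# Share of the 2880x bound discussed in item (4) that is actually reached.
fraction_of_bound = s_2d_k40 / 2880
```

The measured speedup is about 2.4 times the eight-core CPU speedup, but it amounts to well under 1% of the 2880× bound, which indicates how much room the text leaves for further optimization.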

6. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| HPC | high-performance computing |

| ESM | earth system model |

| GPU | graphics processing unit |

| CPU | central processing unit |

| CUDA | compute unified device architecture |

| GCM | general circulation model |

| LBLRTM | line-by-line radiative transfer model |

| RRTM | rapid radiative transfer model |

| RRTMG | rapid radiative transfer model for general circulation models |

| CAS–ESM | Chinese Academy of Sciences–Earth System Model |

| IAP | Institute of Atmospheric Physics |

| IAP AGCM4.0 | IAP Atmospheric General Circulation Model Version 4.0 |

| RRTMG_LW | RRTMG long-wave radiation scheme |

| G-RRTMG_LW | GPU version of the RRTMG_LW |

| WRF | Weather Research and Forecasting model |

| RRTM_LW | RRTM long-wave radiation scheme |

| RRTMG_SW | RRTMG shortwave radiation scheme |

| HOMME | High-Order Methods Modeling Environment |

| AER | Atmospheric and Environmental Research |

| RRTM_SW | RRTM shortwave radiation scheme |

| McICA | Monte–Carlo Independent Column Approximation |

| SM | streaming multiprocessor |

| SP | streaming processors |

| 1-D | one-dimensional |

| 2-D | two-dimensional |

Appendix A

Appendix A.1. Implementation of the Other Kernels Based on CUDA Fortran in 1-D Decomposition

| Algorithm A1: Implementation of cldprmc_d based on CUDA Fortran in 1-D decomposition. |

| attributes(global) subroutine cldprmc_d(parameters) |

| 1. iplon = (blockIdx%x−1) × blockDim%x + threadIdx%x |

| 2. if (iplon≥1 .and. iplon≤ncol) then |

| 3. Initialize variable arrays |

| 4. do lay = 1, nlayers |

| 5. do ig = 1, ngptlw |

| 6. do some corresponding work |

| 7. end do |

| 8. end do |

| 9. end if |

| end subroutine |

| Algorithm A2: Implementation of setcoef_d based on CUDA Fortran in 1-D decomposition. |

| attributes(global) subroutine setcoef_d(parameters) |

| 1. iplon=(blockIdx%x−1) × blockDim%x+threadIdx%x |

| 2. if (iplon≥1 .and. iplon≤ncol) then |

| 3. Initialize variable arrays |

| 4. do lay = 1, nlayers //Calculate the integrated Planck functions for each band at the surface, level, and layer temperatures |

| 5. do iband = 1, 16 |

| 6. do some corresponding work |

| 7. end do |

| 8. end do |

| 9. end if |

| end subroutine |

| Algorithm A3: Implementation of taumol_d based on CUDA Fortran in 1-D decomposition. |

| attributes(global) subroutine taumol_d(parameters) |

| 1. iplon=(blockIdx%x−1) × blockDim%x+threadIdx%x |

| 2. if (iplon≥1 .and. iplon≤ncol) then //Calculate gaseous optical depth and Planck fractions for each spectral band |

| 3. call taugb1_d(parameters) |

| 4. call taugb2_d(parameters) |

| 5. call taugb3_d(parameters) |

| 6. call taugb4_d(parameters) |

| 7. call taugb5_d(parameters) |

| 8. call taugb6_d(parameters) |

| 9. call taugb7_d(parameters) |

| 10. call taugb8_d(parameters) |

| 11. call taugb9_d(parameters) |

| 12. call taugb10_d(parameters) |

| 13. call taugb11_d(parameters) |

| 14. call taugb12_d(parameters) |

| 15. call taugb13_d(parameters) |

| 16. call taugb14_d(parameters) |

| 17. call taugb15_d(parameters) |

| 18. call taugb16_d(parameters) |

| 19. end if |

| end subroutine |

| attributes(device) subroutine taugb1_d(parameters) |

| 1. do lay = 1, laytrop(iplon) //Lower atmosphere loop; laytrop is tropopause layer index |

| 2. do ig = 1, ng1 //ng1 is an integer parameter used for 140 g-point model |

| 3. do some corresponding work |

| 4. end do |

| 5. end do |

| 6. do lay = laytrop(iplon)+1, nlayers //Upper atmosphere loop |

| 7. do ig = 1, ng1 |

| 8. do some corresponding work |

| 9. end do |

| 10. end do |

| end subroutine |

| Algorithm A4: Implementation of rtrnmc_d based on CUDA Fortran in 1-D decomposition. |

| attributes(global) subroutine rtrnmc_d(parameters) |

| 1. iplon=(blockIdx%x−1) × blockDim%x+threadIdx%x |

| 2. if (iplon≥1 .and. iplon≤ncol) then |

| 3. Initialize variable arrays |

| 4. do lay = 1, nlayers |

| 5. do ig = 1, ngptlw |

| 6. do some corresponding work |

| 7. end do |

| 8. end do |

| 9. do iband = istart, iend //Loop over frequency bands; istart is beginning band of calculation, iend is ending band of calculation |

| 10. do lay = nlayers, 1, −1 //Downward radiative transfer loop |

| 11. do some corresponding work |

| 12. end do |

| 13. end do |

| 14. end if |

| end subroutine |

Appendix A.2. Implementation of the Other Kernels Based on CUDA Fortran in 2-D Decomposition

| Algorithm A5: Implementation of setcoef_d based on CUDA Fortran in 2-D decomposition. |

| attributes(global) subroutine setcoef_d(parameters) |

| 1. iplon=(blockIdx%x−1) × blockDim%x+threadIdx%x |

| 2. lay=(blockIdx%y−1) × blockDim%y+threadIdx%y |

| 3. if ((iplon≥1 .and. iplon≤ncol) .and. (lay≥1 .and. lay≤nlayers)) then |

| 4. Initialize variable arrays |

| 5. do iband = 1, 16 |

| 6. do some corresponding work |

| 7. end do |

| 8. end if |

| end subroutine |

| Algorithm A6: Implementation of taumol_d based on CUDA Fortran in 2-D decomposition. |

| attributes(global) subroutine taumol_d(parameters) |

| 1. iplon=(blockIdx%x−1) × blockDim%x+threadIdx%x |

| 2. lay=(blockIdx%y−1) × blockDim%y+threadIdx%y |

| 3. if ((iplon≥1 .and. iplon≤ncol) .and. (lay≥1 .and. lay≤nlayers)) then |

| 4. call taugb1_d(parameters) |

| 5. call taugb2_d(parameters) |

| 6. call taugb3_d(parameters) |

| 7. call taugb4_d(parameters) |

| 8. call taugb5_d(parameters) |

| 9. call taugb6_d(parameters) |

| 10. call taugb7_d(parameters) |

| 11. call taugb8_d(parameters) |

| 12. call taugb9_d(parameters) |

| 13. call taugb10_d(parameters) |

| 14. call taugb11_d(parameters) |

| 15. call taugb12_d(parameters) |

| 16. call taugb13_d(parameters) |

| 17. call taugb14_d(parameters) |

| 18. call taugb15_d(parameters) |

| 19. call taugb16_d(parameters) |

| 20. end if |

| end subroutine |

| attributes(device) subroutine taugb1_d(parameters) |

| 1. if ((iplon≥1 .and. iplon≤ncol) .and. (lay≥1 .and. lay≤laytrop(iplon))) then |

| 2. do ig = 1, ng1 |

| 3. do some corresponding work |

| 4. end do |

| 5. end if |

| 6. if ((iplon≥1 .and. iplon≤ncol) .and. (lay≥laytrop(iplon)+1 .and. lay≤nlayers)) then |

| 7. do ig = 1, ng1 |

| 8. do some corresponding work |

| 9. end do |

| 10. end if |

| end subroutine |

References

- [1] Xue, W.; Yang, C.; Fu, H.; Wang, X.; Xu, Y.; Liao, J.; Gan, L.; Lu, Y.; Ranjan, R.; Wang, L. Ultra-scalable CPU-MIC acceleration of mesoscale atmospheric modeling on Tianhe-2. IEEE Trans. Comput. 2014, 64, 2382–2393.

- [2] Imbernon, B.; Prades, J.; Gimenez, D.; Cecilia, J.M.; Silla, F. Enhancing large-scale docking simulation on heterogeneous systems: An MPI vs. rCUDA study. Future Gener. Comput. Syst. 2018, 79, 26–37.

- [3] Lu, G.; Zhang, W.; He, H.; Yang, L.T. Performance modeling for MPI applications with low overhead fine-grained profiling. Future Gener. Comput. Syst. 2019, 90, 317–326.

- [4] Wang, Y.; Jiang, J.; Zhang, J.; He, J.; Zhang, H.; Chi, X.; Yue, T. An efficient parallel algorithm for the coupling of global climate models and regional climate models on a large-scale multi-core cluster. J. Supercomput. 2018, 74, 3999–4018.

- [5] Nickolls, J.; Dally, W.J. The GPU computing era. IEEE Micro 2010, 30, 56–69.

- [6] Deng, Z.; Chen, D.; Hu, Y.; Wu, X.; Peng, W.; Li, X. Massively parallel non-stationary EEG data processing on GPGPU platforms with Morlet continuous wavelet transform. J. Internet Serv. Appl. 2012, 3, 347–357.

- [7] Chen, D.; Wang, L.; Tian, M.; Tian, J.; Wang, S.; Bian, C.; Li, X. Massively parallel modelling & simulation of large crowd with GPGPU. J. Supercomput. 2013, 63, 675–690.

- [8] Chen, D.; Li, X.; Wang, L.; Khan, S.U.; Wang, J.; Zeng, K.; Cai, C. Fast and scalable multi-way analysis of massive neural data. IEEE Trans. Comput. 2015, 64, 707–719.

- [9] Candel, F.; Petit, S.; Sahuquillo, J.; Duato, J. Accurately modeling the on-chip and off-chip GPU memory subsystem. Future Gener. Comput. Syst. 2018, 82, 510–519.

- [10] Norman, M.; Larkin, J.; Vose, A.; Evans, K. A case study of CUDA FORTRAN and OpenACC for an atmospheric climate kernel. J. Comput. Sci. 2015, 9, 1–6.

- [11] Schalkwijk, J.; Jonker, H.J.; Siebesma, A.P.; Van Meijgaard, E. Weather forecasting using GPU-based large-eddy simulations. Bull. Am. Meteorol. Soc. 2015, 96, 715–723.

- [12] NVIDIA. CUDA C Programming Guide v10.0. Technical Document. 2019. Available online: https://docs.nvidia.com/pdf/CUDA_C_Programming_Guide.pdf (accessed on 26 September 2019).

- [13] Lu, F.; Cao, X.; Song, J.; Zhu, X. GPU computing for long-wave radiation physics: A RRTM_LW scheme case study. In Proceedings of the IEEE 9th International Symposium on Parallel and Distributed Processing with Applications Workshops (ISPAW), Busan, Korea, 26–28 May 2011; pp. 71–76.

- [14] Clough, S.A.; Iacono, M.J.; Moncet, J.L. Line-by-line calculations of atmospheric fluxes and cooling rates: Application to water vapor. J. Geophys. Res. Atmos. 1992, 97, 15761–15785.

- [15] Clough, S.A.; Iacono, M.J. Line-by-line calculation of atmospheric fluxes and cooling rates II: Application to carbon dioxide, ozone, methane, nitrous oxide and the halocarbons. J. Geophys. Res. Atmos. 1995, 100, 16519–16535.

- [16] Lu, F.; Song, J.; Cao, X.; Zhu, X. CPU/GPU computing for long-wave radiation physics on large GPU clusters. Comput. Geosci. 2012, 41, 47–55.

- [17] Mlawer, E.J.; Taubman, S.J.; Brown, P.D.; Iacono, M.J.; Clough, S.A. Radiative transfer for inhomogeneous atmospheres: RRTM, a validated correlated-k model for the long-wave. J. Geophys. Res. Atmos. 1997, 102, 16663–16682.

- [18] Clough, S.A.; Shephard, M.W.; Mlawer, E.J.; Delamere, J.S.; Iacono, M.J.; Cady-Pereira, K.; Boukabara, S.; Brown, P.D. Atmospheric radiative transfer modeling: A summary of the AER codes. J. Quant. Spectrosc. Radiat. Transf. 2005, 91, 233–244.

- [19] Iacono, M.J.; Mlawer, E.J.; Clough, S.A.; Morcrette, J.J. Impact of an improved long-wave radiation model, RRTM, on the energy budget and thermodynamic properties of the NCAR community climate model, CCM3. J. Geophys. Res. Atmos. 2000, 105, 14873–14890.

- [20] Iacono, M.J.; Delamere, J.S.; Mlawer, E.J.; Shephard, M.W.; Clough, S.A.; Collins, W.D. Radiative forcing by long-lived greenhouse gases: Calculations with the AER radiative transfer models. J. Geophys. Res. Atmos. 2008, 113.

- [21] Xiao, D.; Tong-Hua, S.; Jun, W.; Ren-Ping, L. Decadal variation of the Aleutian Low-Icelandic Low seesaw simulated by a climate system model (CAS–ESM-C). Atmos. Ocean. Sci. Lett. 2014, 7, 110–114.

- [22] Wang, Y.; Jiang, J.; Ye, H.; He, J. A distributed load balancing algorithm for climate big data processing over a multi-core CPU cluster. Concurr. Comput. Pract. Exp. 2016, 28, 4144–4160.

- [23] Wang, Y.; Hao, H.; Zhang, J.; Jiang, J.; He, J.; Ma, Y. Performance optimization and evaluation for parallel processing of big data in earth system models. Clust. Comput. 2017.

- [24] Zhang, H.; Zhang, M.; Zeng, Q.C. Sensitivity of simulated climate to two atmospheric models: Interpretation of differences between dry models and moist models. Mon. Weather Rev. 2013, 141, 1558–1576.

- [25] Wang, Y.; Jiang, J.; Zhang, H.; Dong, X.; Wang, L.; Ranjan, R.; Zomaya, A.Y. A scalable parallel algorithm for atmospheric general circulation models on a multi-core cluster. Future Gener. Comput. Syst. 2017, 72, 1–10.

- [26] Morcrette, J.J.; Mozdzynski, G.; Leutbecher, M. A reduced radiation grid for the ECMWF Integrated Forecasting System. Mon. Weather Rev. 2008, 136, 4760–4772.

- [27] Zheng, F.; Xu, X.; Xiang, D.; Wang, Z.; Xu, M.; He, S. GPU-based parallel researches on RRTM module of GRAPES numerical prediction system. J. Comput. 2013, 8, 550–558.

- [28] Iacono, M.J. Enhancing Cloud Radiative Processes and Radiation Efficiency in the Advanced Research Weather Research and Forecasting (WRF) Model; Atmospheric and Environmental Research: Lexington, MA, USA, 2015.

- [29] NVIDIA. CUDA Fortran Programming Guide and Reference. Technical Document. 2019. Available online: https://www.pgroup.com/resources/docs/19.1/pdf/pgi19cudaforug.pdf (accessed on 26 September 2019).

- [30] Ruetsch, G.; Phillips, E.; Fatica, M. GPU acceleration of the long-wave rapid radiative transfer model in WRF using CUDA Fortran. In Proceedings of the Many-Core and Reconfigurable Supercomputing Conference, Roma, Italy, 22–24 March 2010.

- [31] Mielikainen, J.; Price, E.; Huang, B.; Huang, H.L.; Lee, T. GPU compute unified device architecture (CUDA)-based parallelization of the RRTMG shortwave rapid radiative transfer model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 921–931.

- [32] Bertagna, L.; Deakin, M.; Guba, O.; Sunderland, D.; Bradley, A.M.; Tezaur, I.K.; Taylor, M.A.; Salinger, A.G. HOMMEXX 1.0: A performance portable atmospheric dynamical core for the energy exascale earth system model. Geosci. Model Dev. 2018, 12, 1423–1441.

- [33] Iacono, M.J.; Delamere, J.S.; Mlawer, E.J.; Clough, S.A. Evaluation of upper tropospheric water vapor in the NCAR Community Climate Model (CCM3) using modeled and observed HIRS radiances. J. Geophys. Res. Atmos. 2003, 108, ACL 1-1–ACL 1-19.

- [34] Morcrette, J.J.; Barker, H.W.; Cole, J.N.; Iacono, M.J.; Pincus, R. Impact of a new radiation package, McRad, in the ECMWF Integrated Forecasting System. Mon. Weather Rev. 2008, 136, 4773–4798.

- [35] Mlawer, E.J.; Iacono, M.J.; Pincus, R.; Barker, H.W.; Oreopoulos, L.; Mitchell, D.L. Contributions of the ARM program to radiative transfer modeling for climate and weather applications. Meteorol. Monogr. 2016, 57, 15.1–15.19.

- [36] Pincus, R.; Barker, H.W.; Morcrette, J.J. A fast, flexible, approximate technique for computing radiative transfer in inhomogeneous cloud fields. J. Geophys. Res. Atmos. 2003, 108.

- [37] Price, E.; Mielikainen, J.; Huang, M.; Huang, B.; Huang, H.L.; Lee, T. GPU-accelerated long-wave radiation scheme of the Rapid Radiative Transfer Model for General Circulation Models (RRTMG). IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 3660–3667.

- [38] D’Azevedo, E.F.; Lang, J.; Worley, P.H.; Ethier, S.A.; Ku, S.H.; Chang, C. Hybrid MPI/OpenMP/GPU parallelization of XGC1 fusion simulation code. In Proceedings of the Supercomputing Conference 2013, Denver, CO, USA, 17–22 November 2013.

| Specification of Central Processing Unit (CPU) | K20 Cluster | K40 Cluster |
|---|---|---|
| CPU | E5-2680 v2 at 2.8 GHz | E5-2695 v2 at 2.4 GHz |
| Operating System | CentOS 6.4 | Red Hat Enterprise Linux Server 7.1 |

| Specification of GPU | K20 Cluster | K40 Cluster |
|---|---|---|
| GPU | Tesla K20 | Tesla K40 |
| Compute Unified Device Architecture (CUDA) Cores | 2496 | 2880 |
| Standard Memory | 5 GB | 12 GB |
| Memory Bandwidth | 208 GB/s | 288 GB/s |
| CUDA Version | 6.5 | 9.0 |

| Subroutines | CPU Time (s) | K20 Time (s) | Speedup |
|---|---|---|---|
| inatm | 150.48 | 20.4440 | 7.36 |
| cldprmc | 5.27 | 0.0020 | 2635.00 |
| setcoef | 14.52 | 0.9908 | 14.65 |
| taumol | 169.68 | 18.3980 | 9.22 |
| rtrnmc | 252.80 | 21.3712 | 11.83 |
| rrtmg_lw | 647.12 | 61.206 | 10.57 |

| Subroutines | CPU Time (s) | K40 Time (s) | Speedup |
|---|---|---|---|
| inatm | 150.48 | 15.3492 | 9.80 |
| cldprmc | 5.27 | 0.0016 | 3293.75 |
| setcoef | 14.52 | 0.8920 | 16.28 |
| taumol | 169.68 | 11.0720 | 15.33 |
| rtrnmc | 252.80 | 16.9344 | 14.93 |
| rrtmg_lw | 647.12 | 44.2492 | 14.62 |

| Subroutines | CPU Time (s) | K20 Time (s) | Speedup |
|---|---|---|---|
| inatm | 150.48 | 18.2060 | 8.27 |
| cldprmc | 5.27 | 0.0020 | 2635.00 |
| setcoef | 14.52 | 0.3360 | 43.21 |
| taumol | 169.68 | 4.1852 | 40.54 |
| rtrnmc | 252.80 | 21.5148 | 11.75 |
| rrtmg_lw | 647.12 | 44.2440 | 14.63 |

| Subroutines | CPU Time (s) | K40 Time (s) | Speedup |
|---|---|---|---|
| inatm | 150.48 | 14.9068 | 10.09 |
| cldprmc | 5.27 | 0.0020 | 2635.00 |
| setcoef | 14.52 | 0.2480 | 58.55 |
| taumol | 169.68 | 3.1524 | 53.83 |
| rtrnmc | 252.80 | 16.6292 | 15.20 |
| rrtmg_lw | 647.12 | 34.9384 | 18.52 |

| Subroutines | CPU Time (s) | K20 Time (s) | Speedup |
|---|---|---|---|
| rrtmg_lw | 647.12 | 105.63 | 6.13 |

| Subroutines | CPU Time (s) | K40 Time (s) | Speedup |
|---|---|---|---|
| rrtmg_lw | 647.12 | 94.24 | 6.87 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Zhao, Y.; Li, W.; Jiang, J.; Ji, X.; Zomaya, A.Y. Using a GPU to Accelerate a Longwave Radiative Transfer Model with Efficient CUDA-Based Methods. Appl. Sci. 2019, 9, 4039. https://doi.org/10.3390/app9194039
