Automatic Object-Detection of School Building Elements in Visual Data: A Gray-Level Histogram Statistical Feature-Based Method

Abstract

1. Introduction

2. Background

2.1. Color/Texture-Based Object Detection Methods

2.2. Shape-Based Object Detection Methods

2.3. Grayscale Statistical Feature-Based Object Detection Methods

3. Methodology

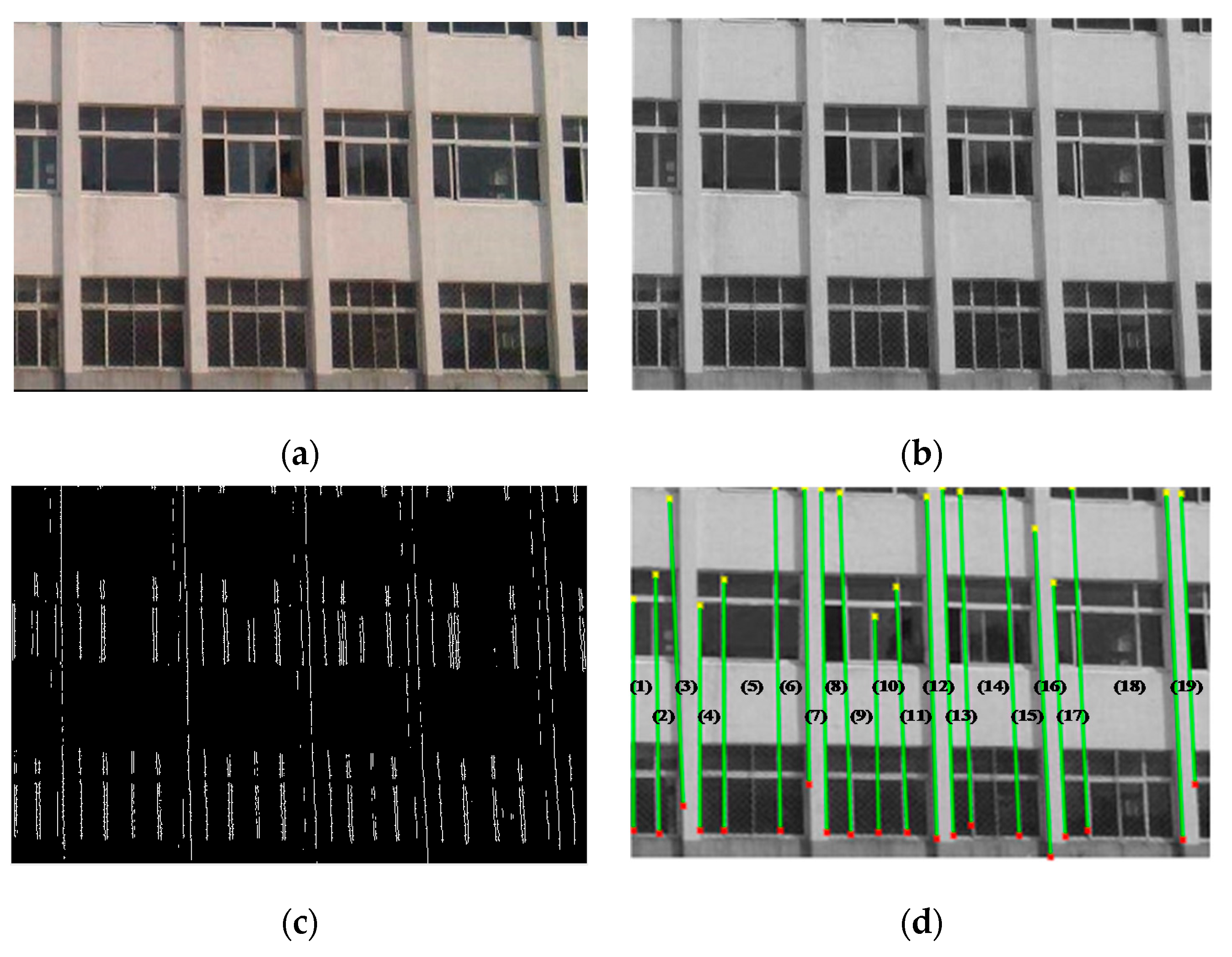

3.1. Image Preprocessing

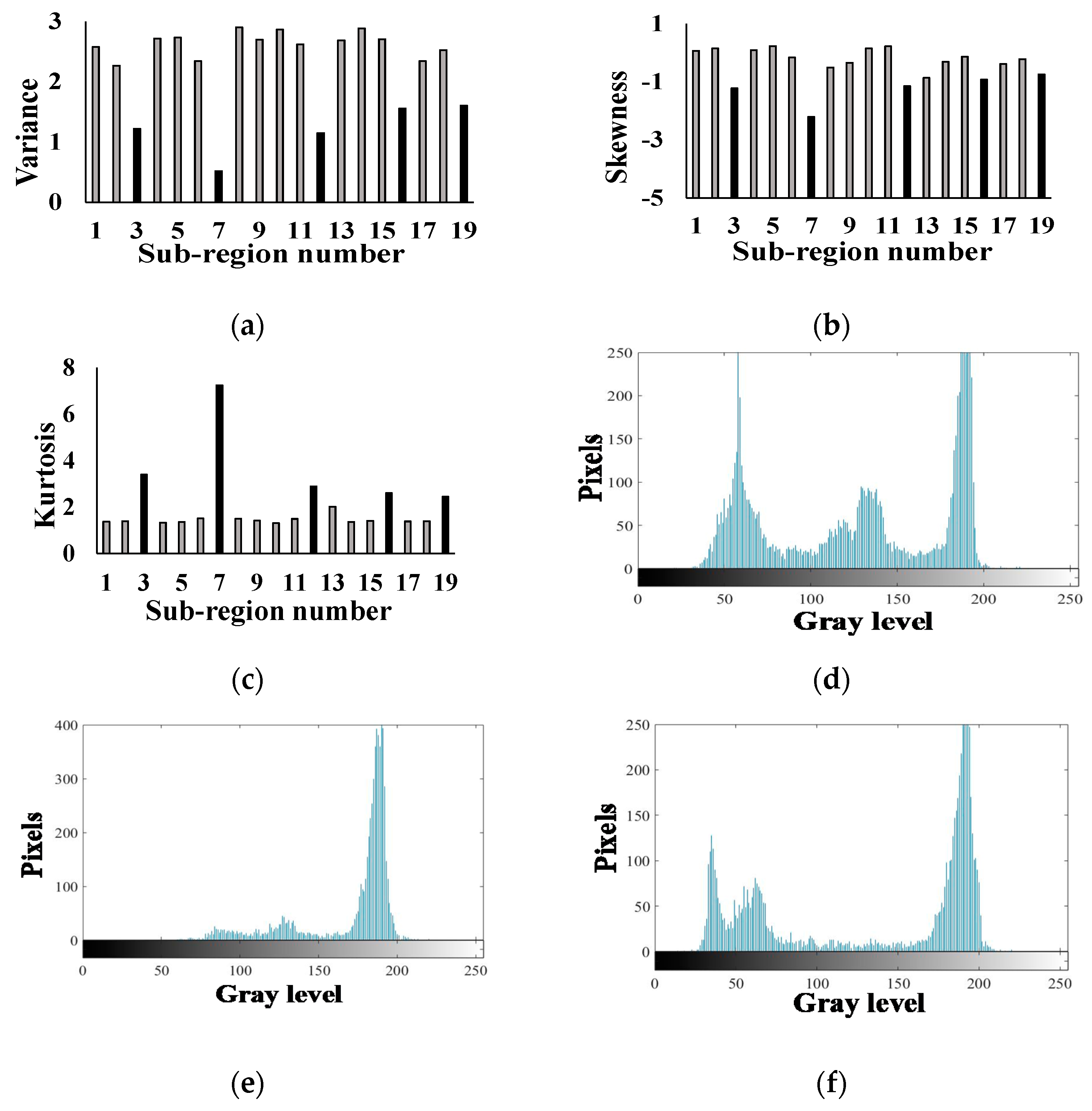

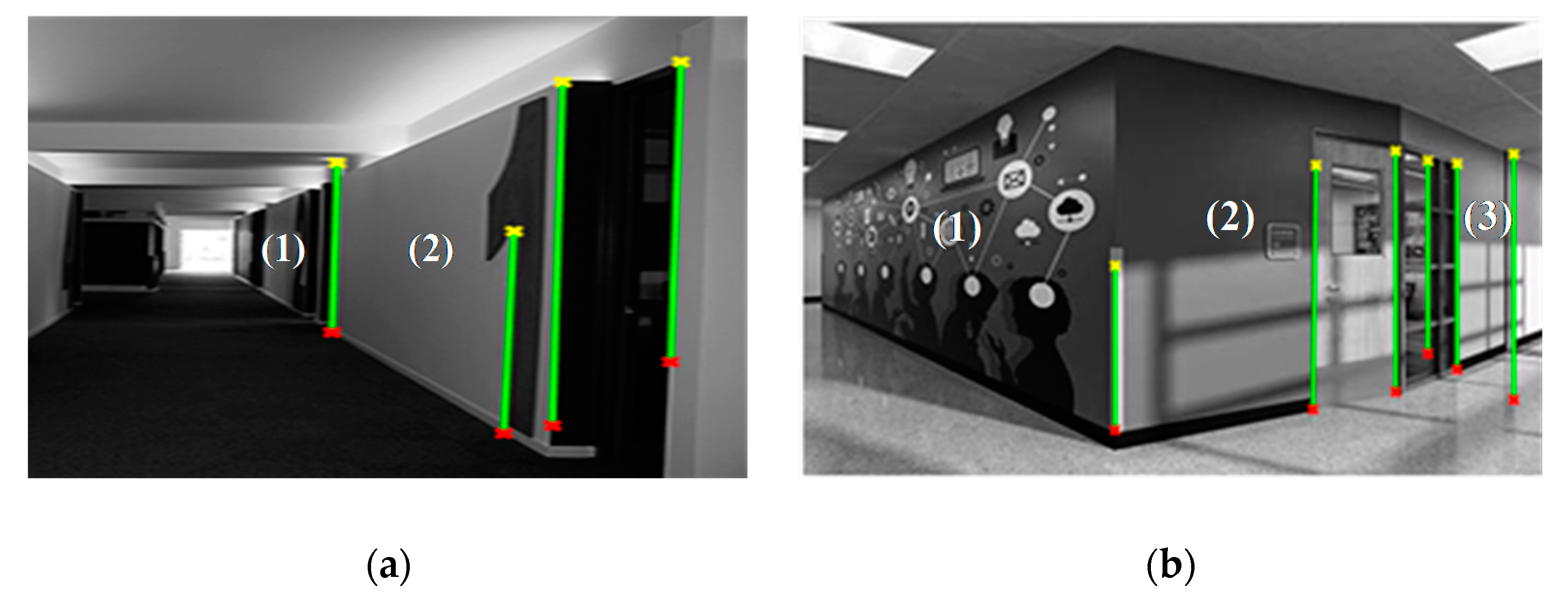

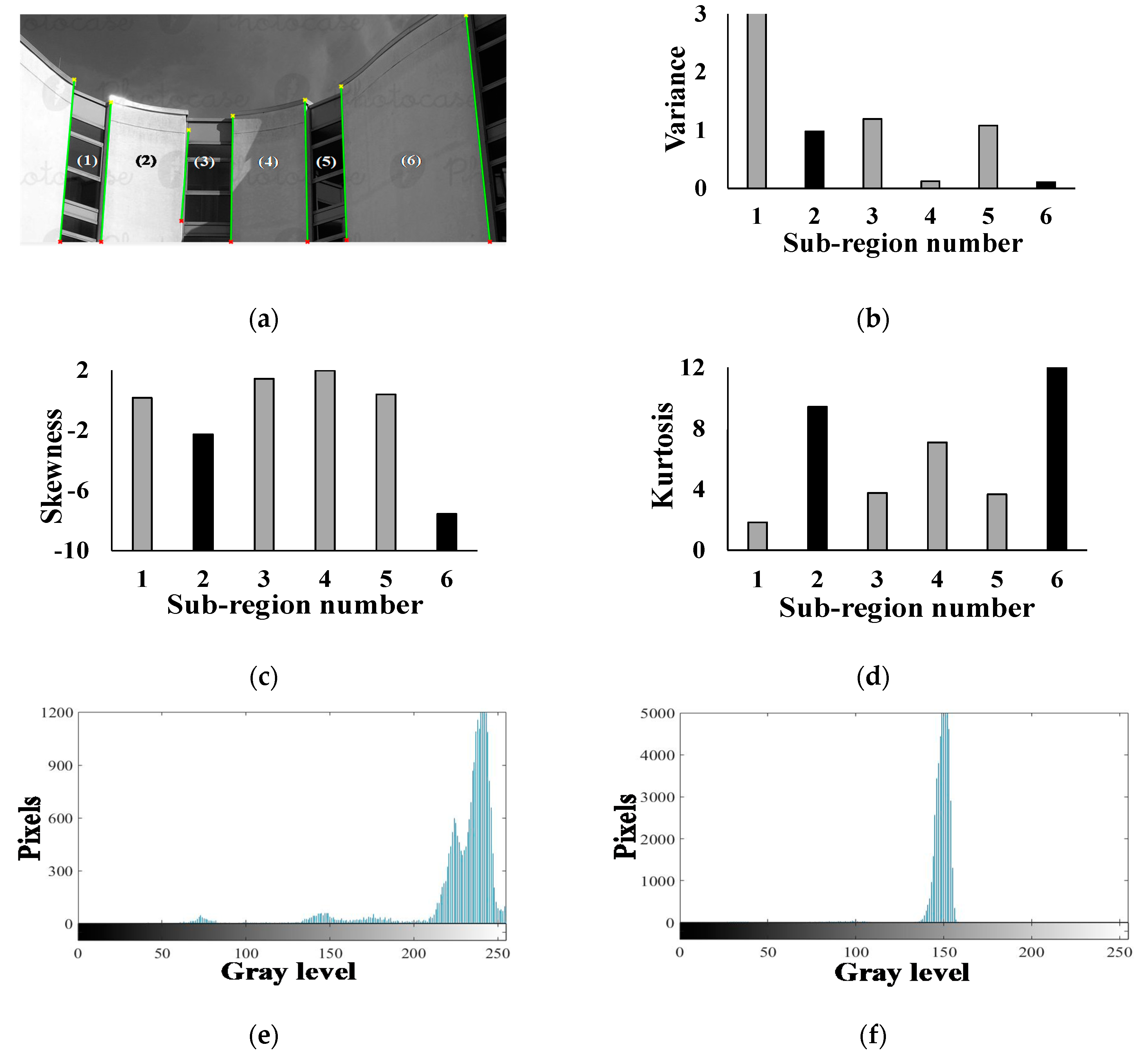

3.2. Column and Wall Recognition Using Gray-Level Histogram (GLH) Statistical Parameters

- (1) the intersection angle between a sub-region’s two adjacent boundary lines is less than 5°;

- (2) the variance and skewness of the sub-region’s GLH are local minima, and its kurtosis is a local maximum, among the adjacent sub-regions;

- (3) the length-to-width ratio of a sub-region is no less than 2 for a column and less than 2 for a wall, where the length is that of the sub-region’s longer boundary line and the width is the distance between its two boundary lines [51].
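The three criteria can be sketched in code as follows. This is a minimal illustration under stated assumptions, not the authors' implementation, and every function name in it is hypothetical. It assumes that the GLH statistics are the standard central-moment definitions (variance σ², skewness μ₃/σ³, kurtosis μ₄/σ⁴ without the −3 offset, which does not change a local-maximum comparison), that the 5 threshold is in degrees, that each sub-region is bounded by two line segments given in pixel coordinates, that the width is measured from the midpoint of the shorter segment to the line through the longer one, and that "local minimum/maximum" means a strict comparison against each adjacent sub-region's statistics.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]          # a boundary line as two end points (pixels)
Stats = Tuple[float, float, float]     # (variance, skewness, kurtosis)


def glh_statistics(pixels: Sequence[int], levels: int = 256) -> Stats:
    """Variance, skewness and kurtosis of a sub-region's gray-level histogram."""
    hist = [0] * levels
    for g in pixels:                     # GLH: number of pixels at each gray level
        hist[g] += 1
    p = [h / len(pixels) for h in hist]  # normalized histogram p(i)
    mean = sum(i * pi for i, pi in enumerate(p))
    var = sum((i - mean) ** 2 * pi for i, pi in enumerate(p))
    if var == 0:                         # flat region: higher moments are undefined
        return 0.0, math.nan, math.nan
    sd = math.sqrt(var)
    skew = sum((i - mean) ** 3 * pi for i, pi in enumerate(p)) / sd ** 3
    kurt = sum((i - mean) ** 4 * pi for i, pi in enumerate(p)) / sd ** 4
    return var, skew, kurt


def _length(seg: Segment) -> float:
    (x1, y1), (x2, y2) = seg
    return math.hypot(x2 - x1, y2 - y1)


def intersection_angle_deg(a: Segment, b: Segment) -> float:
    """Acute angle (0-90 degrees) between the lines carrying segments a and b."""
    def orientation(seg: Segment) -> float:
        (x1, y1), (x2, y2) = seg
        return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0
    d = abs(orientation(a) - orientation(b))
    return min(d, 180.0 - d)


def aspect_ratio(a: Segment, b: Segment) -> float:
    """Length of the longer boundary line divided by the distance between the lines."""
    long_seg, short_seg = (a, b) if _length(a) >= _length(b) else (b, a)
    (x1, y1), (x2, y2) = long_seg
    # Assumed width: distance from the shorter segment's midpoint to the
    # infinite line through the longer segment.
    mx = (short_seg[0][0] + short_seg[1][0]) / 2
    my = (short_seg[0][1] + short_seg[1][1]) / 2
    width = abs((x2 - x1) * (y1 - my) - (x1 - mx) * (y2 - y1)) / _length(long_seg)
    if width == 0:
        raise ValueError("boundary lines are collinear")
    return _length(long_seg) / width


def classify(a: Segment, b: Segment, stats: Stats, neighbour_stats: Sequence[Stats],
             max_angle_deg: float = 5.0, min_ratio: float = 2.0) -> Optional[str]:
    """Return 'column', 'wall', or None if criterion (1) or (2) fails."""
    if intersection_angle_deg(a, b) >= max_angle_deg:               # criterion (1)
        return None
    var, skew, kurt = stats
    # Criterion (2): variance and skewness below, kurtosis above, every neighbour.
    # NaN statistics (flat regions) make every comparison False; with no
    # neighbours the check passes trivially.
    if not all(var < v and skew < s and kurt > k for v, s, k in neighbour_stats):
        return None
    return "column" if aspect_ratio(a, b) >= min_ratio else "wall"  # criterion (3)
```

For example, two vertical boundary lines 100 px long and 20 px apart give a ratio of 5 and are labelled a column once criteria (1) and (2) hold; moving them 80 px apart gives a ratio of 1.25, which is labelled a wall.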

4. Implementation and Results

4.1. Validation

4.2. Implementation and Discussion

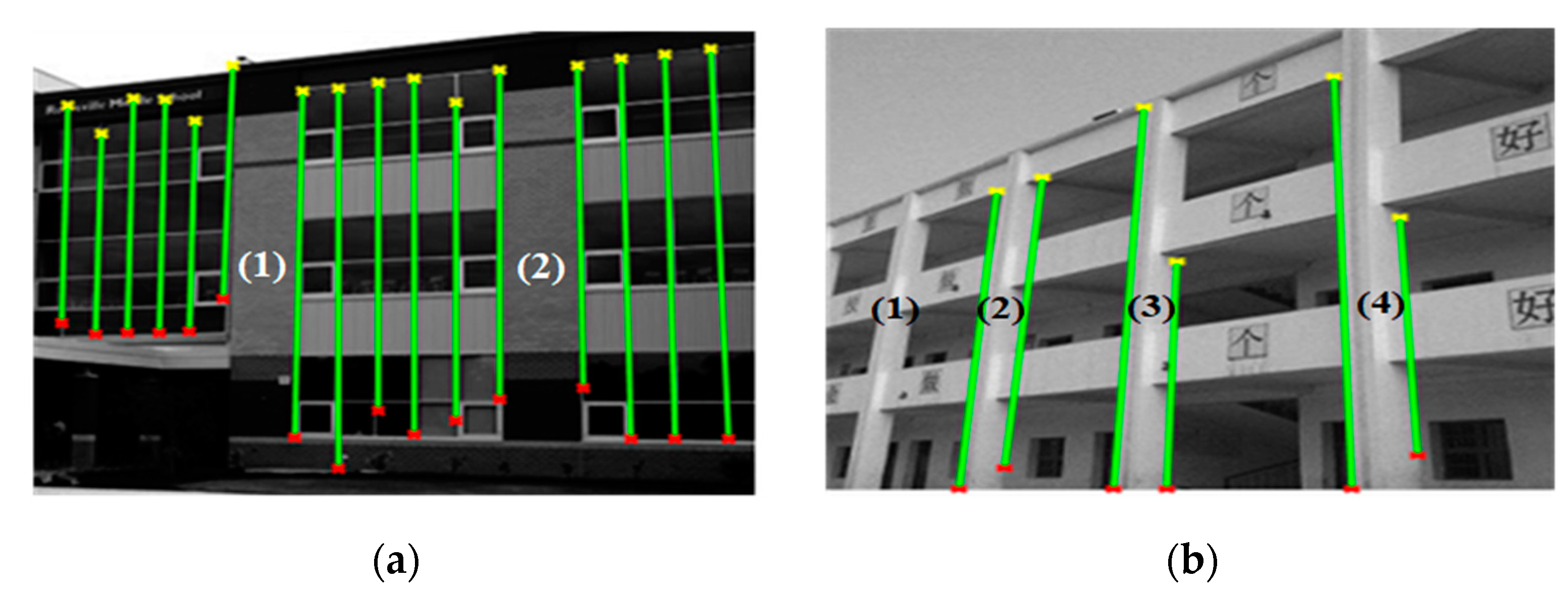

4.2.1. Column Detection Results and Discussion

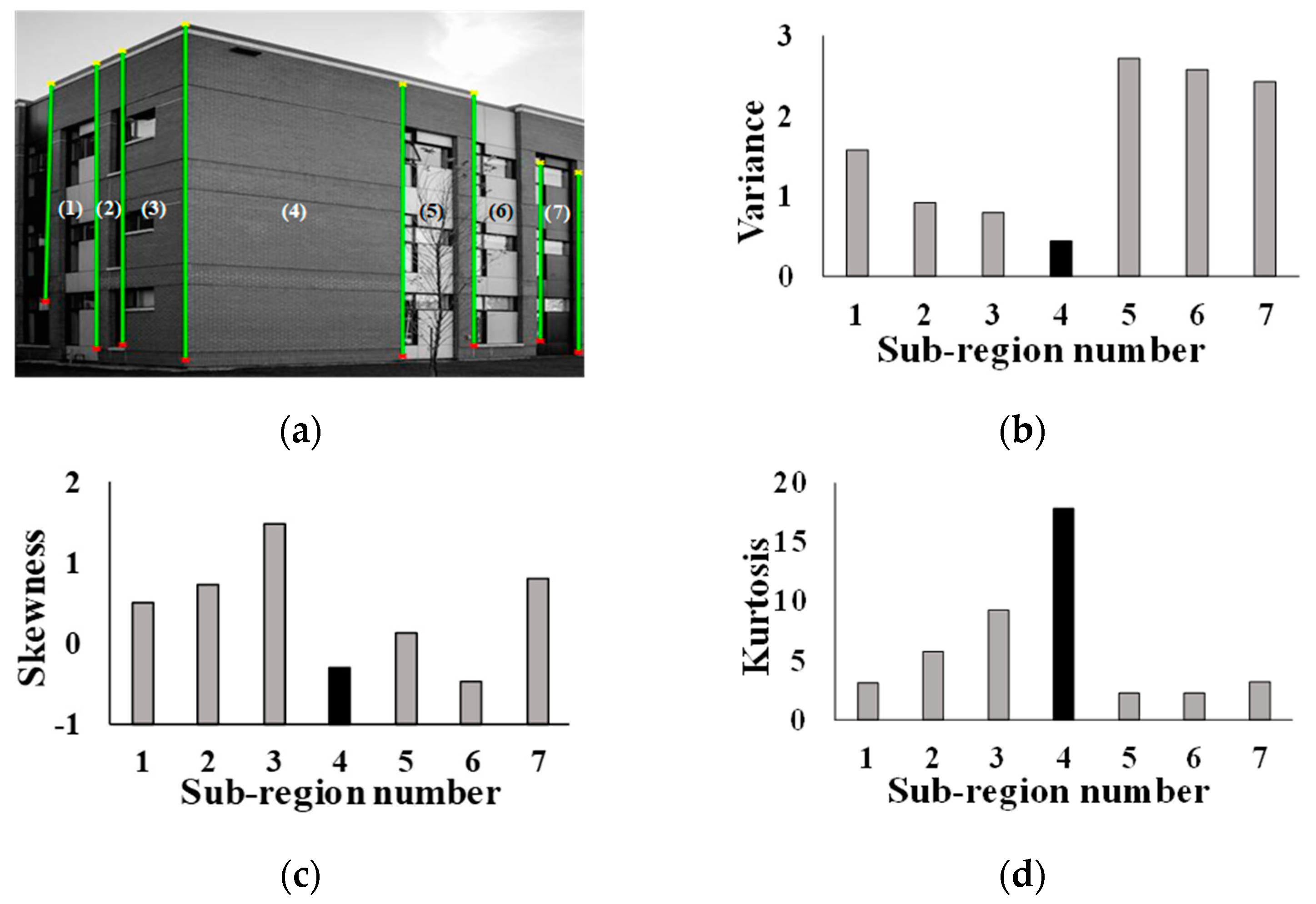

4.2.2. Wall Detection Results and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- [1] Zhang, Z.; Hsu, T.-Y.; Wei, H.-H.; Chen, J.-H. Development of a Data-Mining Technique for Regional-Scale Evaluation of Building Seismic Vulnerability. Appl. Sci. 2019, 9, 1502.

- [2] Zhong, L.-L.; Lin, K.-W.; Su, G.-L.; Huang, S.-J.; Wu, L.-Y. Primary assessment and statistical analysis for the seismic resistance ability of middle school buildings. Struct. Eng. 2012, 27, 61–81.

- [3] Ploeger, S.; Sawada, M.; Elsabbagh, A.; Saatcioglu, M.; Nastev, M.; Rosetti, E. Urban RAT: New tool for virtual and site-specific mobile rapid data collection for seismic risk assessment. J. Comput. Civ. Eng. 2015, 30, 04015006.

- [4] Ye, S.; Zhu, D.; Yao, X.; Zhang, X.; Li, L. Developing a mobile GIS-based component to collect field data. In Proceedings of the International Conference on Agro-Geoinformatics, Tianjin, China, 18–20 July 2016; pp. 1–6.

- [5] Mayer, H. Automatic object extraction from aerial imagery—A survey focusing on buildings. Comput. Vis. Image Underst. 1999, 74, 138–149.

- [6] Lee, S.C.; Nevatia, R. Extraction and integration of window in a 3D building model from ground view images. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004.

- [7] Zhu, Z.; Brilakis, I. Automated detection of concrete columns from visual data. In Proceedings of the Computing in Civil Engineering, Austin, TX, USA, 24–27 June 2009; pp. 135–145.

- [8] Li, Z.; Liu, Z.; Shi, W. A fast level set algorithm for building roof recognition from high spatial resolution panchromatic images. Geosci. Remote Sens. Lett. 2014, 11, 743–747.

- [9] Wu, H.; Cheng, Z.; Shi, W.; Miao, Z.; Xu, C. An object-based image analysis for building seismic vulnerability assessment using high-resolution remote sensing imagery. Nat. Hazards 2014, 71, 151–174.

- [10] Koch, C.; Georgieva, K.; Kasireddy, V.; Akinci, B.; Fieguth, P. A review on computer vision based defect detection and condition assessment of concrete and asphalt civil infrastructure. Adv. Eng. Inform. 2015, 29, 196–210.

- [11] Liang, X. Image-based post-disaster inspection of reinforced concrete bridge systems using deep learning with Bayesian optimization. Comput.-Aided Civ. Infrastruct. Eng. 2019, 34, 415–430.

- [12] Koch, C.; Paal, S.G.; Rashidi, A.; Zhu, Z.; König, M.; Brilakis, I. Achievements and challenges in machine vision-based inspection of large concrete structures. Adv. Struct. Eng. 2014, 17, 303–318.

- [13] German, S.; Jeon, J.-S.; Zhu, Z.; Bearman, C.; Brilakis, I.; DesRoches, R.; Lowes, L. Machine vision-enhanced postearthquake inspection. J. Comput. Civ. Eng. 2013, 27, 622–634.

- [14] Mansour, A.K.; Romdhane, N.B.; Boukadi, N. An inventory of buildings in the city of Tunis and an assessment of their vulnerability. Bull. Earthq. Eng. 2013, 11, 1563–1583.

- [15] Riedel, I.; Guéguen, P.; Dalla Mura, M.; Pathier, E.; Leduc, T.; Chanussot, J. Seismic vulnerability assessment of urban environments in moderate-to-low seismic hazard regions using association rule learning and support vector machine methods. Nat. Hazards 2015, 76, 1111–1141.

- [16] Dimitrov, A.; Golparvar-Fard, M. Vision-based material recognition for automated monitoring of construction progress and generating building information modeling from unordered site image collections. Adv. Eng. Inform. 2014, 28, 37–49.

- [17] Abeid Neto, J.; Arditi, D.; Evens, M.W. Using colors to detect structural components in digital pictures. Comput.-Aided Civ. Infrastruct. Eng. 2002, 17, 61–67.

- [18] Brilakis, I.K.; Soibelman, L. Shape-based retrieval of construction site photographs. J. Comput. Civ. Eng. 2008, 22, 14–20.

- [19] Luo, H.; Paal, S.G. Machine learning–based backbone curve model of reinforced concrete columns subjected to cyclic loading reversals. J. Comput. Civ. Eng. 2018, 32, 04018042.

- [20] DeGol, J.; Golparvar-Fard, M.; Hoiem, D. Geometry-Informed Material Recognition. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1554–1562.

- [21] Rashidi, A.; Sigari, M.H.; Maghiar, M.; Citrin, D. An analogy between various machine-learning techniques for detecting construction materials in digital images. KSCE J. Civ. Eng. 2016, 20, 1178–1188.

- [22] Han, K.; Degol, J.; Golparvar-Fard, M. Geometry- and Appearance-Based Reasoning of Construction Progress Monitoring. J. Constr. Eng. Manag. 2017, 144, 04017110.

- [23] Park, M.-W.; Brilakis, I. Construction worker detection in video frames for initializing vision trackers. Autom. Constr. 2012, 28, 15–25.

- [24] Wiebel, C.B.; Valsecchi, M.; Gegenfurtner, K.R. The speed and accuracy of material recognition in natural images. Atten. Percept. Psychophys. 2013, 75, 954–966.

- [25] Cimpoi, M.; Maji, S.; Vedaldi, A. Deep filter banks for texture recognition and segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3828–3836.

- [26] Jung, C.R.; Schramm, R. Rectangle detection based on a windowed Hough transform. In Proceedings of the Brazilian Symposium on Computer Graphics and Image, Curitiba, Brazil, 20 October 2004; pp. 113–120.

- [27] Zingman, I.; Saupe, D.; Lambers, K. Automated search for livestock enclosures of rectangular shape in remotely sensed imagery. In Proceedings of the SPIE Remote Sensing, Dresden, Germany, 23–26 September 2013; p. 88920F.

- [28] Zhu, Z.; Brilakis, I. Concrete column recognition in images and videos. J. Comput. Civ. Eng. 2010, 24, 478–487.

- [29] Zhang, Y.; Huo, L.; Li, H. Automated Recognition of a Wall between Windows from a Single Image. J. Sens. 2017, 2017, 1–8.

- [30] Hamledari, H.; McCabe, B.; Davari, S. Automated computer vision-based detection of components of under-construction indoor partitions. Autom. Constr. 2017, 74, 78–94.

- [31] Haralick, R.M.; Shanmugam, K. Textural features for image classification. Trans. Syst. Man Cybern. 1973, 6, 610–621.

- [32] Jobanputra, R.; Clausi, D.A. Texture analysis using Gaussian weighted grey level co-occurrence probabilities. In Proceedings of the Conference on Computer and Robot Vision, London, ON, Canada, 17–19 May 2004; pp. 51–57.

- [33] Liu, H.; Guo, H.; Zhang, L. SVM-based sea ice classification using textural features and concentration from RADARSAT-2 Dual-Pol ScanSAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1601–1613.

- [34] Zhu, T.; Li, F.; Heygster, G.; Zhang, S. Antarctic Sea-Ice Classification Based on Conditional Random Fields From RADARSAT-2 Dual-Polarization Satellite Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 2451–2467.

- [35] Paneque-Gálvez, J.; Mas, J.-F.; Moré, G.; Cristóbal, J.; Orta-Martínez, M.; Luz, A.C.; Guèze, M.; Macía, M.J.; Reyes-García, V. Enhanced land use/cover classification of heterogeneous tropical landscapes using support vector machines and textural homogeneity. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 372–383.

- [36] Nawaz, S.; Dar, A.H. Hepatic lesions classification by ensemble of SVMs using statistical features based on co-occurrence matrix. In Proceedings of the International Conference on Emerging Technologies, Rawalpindi, Pakistan, 18–19 October 2008; pp. 21–26.

- [37] Patil, R.; Sannakki, S.; Rajpurohit, V. A Survey on Classification of Liver Diseases using Image Processing and Data Mining Techniques. Int. J. Comput. Sci. Eng. 2017, 5, 29–34.

- [38] Liu, Y.X.; Guo, Y.Z. Grayscale Histograms Features Extraction Using Matlab. Comput. Knowl. Technol. 2009, 5, 9032–9034.

- [39] Sharma, B.; Venugopalan, K. Classification of hematomas in brain CT images using neural network. In Proceedings of the International Conference on Issues and Challenges in Intelligent Computing Techniques, Ghaziabad, India, 7–8 February 2014; pp. 41–46.

- [40] Ozkan, E.; West, A.; Dedelow, J.A.; Chu, B.F.; Zhao, W.; Yildiz, V.O.; Otterson, G.A.; Shilo, K.; Ghosh, S.; King, M. CT gray-level texture analysis as a quantitative imaging biomarker of epidermal growth factor receptor mutation status in adenocarcinoma of the lung. Am. J. Roentgenol. 2015, 205, 1016–1025.

- [41] Zhang, W.L.; Wang, X.Z. Feature extraction and classification for human brain CT images. In Proceedings of the International Conference on Machine Learning and Cybernetics, Hong Kong, China, 19–22 August 2007; pp. 1155–1159.

- [42] Fallahi, A.R.; Pooyan, M.; Khotanlou, H. A new approach for classification of human brain CT images based on morphological operations. J. Biomed. Sci. Eng. 2010, 3, 78–82.

- [43] An, J.; Liu, H.; Pan, L.; Zhang, K. Classification of traffic signs based on fusion of PCA and gray level histogram. Highway 2017, 62, 185–197.

- [44] Peli, T.; Malah, D. A study of edge detection algorithms. Comput. Graph. Image Process. 1982, 20, 1–21.

- [45] Prewitt, J.M. Object enhancement and extraction. In Picture Processing and Psychopictorics; Academic Press: London, UK, 1970; Volume 10, pp. 15–19.

- [46] Kanopoulos, N.; Vasanthavada, N.; Baker, R.L. Design of an image edge detection filter using the Sobel operator. J. Solid State Circuits 1988, 23, 358–367.

- [47] Jain, R.; Kasturi, R.; Schunck, B.G. Machine Vision; McGraw-Hill: New York, NY, USA, 1995; Volume 5.

- [48] Canny, J. A computational approach to edge detection. Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

- [49] Chaple, G.N.; Daruwala, R.; Gofane, M.S. Comparisions of Robert, Prewitt, Sobel operator based edge detection methods for real time uses on FPGA. In Proceedings of the International Conference on Technologies for Sustainable Development, Mumbai, India, 4–6 February 2015; pp. 1–4.

- [50] Pratt, W.K. Digital Image Processing, 3rd ed.; John Wiley & Sons, Inc.: New York, NY, USA, 2001.

- [51] Japan Building Disaster Prevention Association. Standard for Seismic Evaluation of Existing Reinforced Concrete Buildings; Japan Building Disaster Prevention Association: Tokyo, Japan, 2001.

- [52] Bedon, C.; Zhang, X.; Santos, F.; Honfi, D.; Kozłowski, M.; Arrigoni, M.; Figuli, L.; Lange, D. Performance of structural glass facades under extreme loads—Design methods, existing research, current issues and trends. Constr. Build. Mater. 2018, 163, 921–937.

| Image No. | TP | FP | FN | Precision, TP/(TP+FP) | Recall, TP/(TP+FN) |
|---|---|---|---|---|---|
| 1 | 5 | 0 | 0 | 100.0% | 100.0% |
| 2 | 2 | 0 | 2 | 100.0% | 50.0% |
| 3 | 2 | 0 | 0 | 100.0% | 100.0% |
| 4 | 5 | 0 | 1 | 100.0% | 83.3% |
| 5 | 5 | 0 | 2 | 100.0% | 71.4% |
| 6 | 1 | 0 | 1 | 100.0% | 50.0% |
| 7 | 4 | 0 | 1 | 100.0% | 80.0% |
| 8 | 4 | 0 | 2 | 100.0% | 66.7% |
| 9 | 4 | 0 | 1 | 100.0% | 80.0% |
| 10 | 6 | 0 | 0 | 100.0% | 100.0% |
| 11 | 4 | 0 | 0 | 100.0% | 100.0% |
| 12 | 6 | 0 | 0 | 100.0% | 100.0% |
| 13 | 6 | 0 | 3 | 100.0% | 66.7% |
| 14 | 4 | 0 | 4 | 100.0% | 50.0% |
| 15 | 3 | 0 | 1 | 100.0% | 75.0% |
| 16 | 3 | 0 | 1 | 100.0% | 75.0% |
| 17 | 2 | 0 | 0 | 100.0% | 100.0% |
| 18 | 2 | 0 | 2 | 100.0% | 50.0% |
| 19 | 6 | 0 | 0 | 100.0% | 100.0% |
| 20 | 2 | 0 | 0 | 100.0% | 100.0% |
| Total | 76 | 0 | 21 | 100.0% | 78.4% |

| Image No. | TP | FP | FN | Precision, TP/(TP+FP) | Recall, TP/(TP+FN) |
|---|---|---|---|---|---|
| 1 | 1 | 0 | 0 | 100.0% | 100.0% |
| 2 | 2 | 0 | 1 | 100.0% | 66.7% |
| 3 | 1 | 0 | 1 | 100.0% | 50.0% |
| 4 | 2 | 0 | 0 | 100.0% | 100.0% |
| 5 | 1 | 0 | 1 | 100.0% | 50.0% |
| 6 | 1 | 0 | 0 | 100.0% | 100.0% |
| 7 | 2 | 0 | 1 | 100.0% | 66.7% |
| 8 | 1 | 0 | 0 | 100.0% | 100.0% |
| 9 | 1 | 0 | 1 | 100.0% | 50.0% |
| 10 | 1 | 0 | 0 | 100.0% | 100.0% |
| 11 | 1 | 0 | 1 | 100.0% | 50.0% |
| 12 | 1 | 0 | 0 | 100.0% | 100.0% |
| 13 | 2 | 0 | 1 | 100.0% | 66.7% |
| 14 | 1 | 0 | 1 | 100.0% | 50.0% |
| 15 | 3 | 0 | 1 | 100.0% | 75.0% |
| 16 | 2 | 0 | 0 | 100.0% | 100.0% |
| 17 | 1 | 0 | 0 | 100.0% | 100.0% |
| 18 | 1 | 0 | 1 | 100.0% | 50.0% |
| 19 | 3 | 0 | 0 | 100.0% | 100.0% |
| 20 | 2 | 0 | 1 | 100.0% | 66.7% |
| Total | 30 | 0 | 11 | 100.0% | 73.2% |
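In both tables the Total row pools the counts over all 20 images before dividing (76/(76+21) = 78.4% and 30/(30+11) = 73.2%), rather than averaging the per-image percentages. The short sketch below recomputes both Total rows from the per-image counts transcribed from the tables; the function and variable names are illustrative only and not part of the original work.

```python
from typing import List, Sequence, Tuple

Counts = Tuple[int, int, int]  # (TP, FP, FN) for one image

# Per-image counts transcribed from the two results tables (images 1-20).
FIRST_TABLE: List[Counts] = [
    (5, 0, 0), (2, 0, 2), (2, 0, 0), (5, 0, 1), (5, 0, 2),
    (1, 0, 1), (4, 0, 1), (4, 0, 2), (4, 0, 1), (6, 0, 0),
    (4, 0, 0), (6, 0, 0), (6, 0, 3), (4, 0, 4), (3, 0, 1),
    (3, 0, 1), (2, 0, 0), (2, 0, 2), (6, 0, 0), (2, 0, 0),
]
SECOND_TABLE: List[Counts] = [
    (1, 0, 0), (2, 0, 1), (1, 0, 1), (2, 0, 0), (1, 0, 1),
    (1, 0, 0), (2, 0, 1), (1, 0, 0), (1, 0, 1), (1, 0, 0),
    (1, 0, 1), (1, 0, 0), (2, 0, 1), (1, 0, 1), (3, 0, 1),
    (2, 0, 0), (1, 0, 0), (1, 0, 1), (3, 0, 0), (2, 0, 1),
]


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP/(TP+FP), Recall = TP/(TP+FN); 0.0 when a denominator is zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def pooled(counts: Sequence[Counts]) -> Tuple[Counts, Tuple[float, float]]:
    """Sum TP, FP and FN over all images first, then compute the ratios."""
    tp = sum(c[0] for c in counts)
    fp = sum(c[1] for c in counts)
    fn = sum(c[2] for c in counts)
    return (tp, fp, fn), precision_recall(tp, fp, fn)


def per_image_mean_recall(counts: Sequence[Counts]) -> float:
    """Average of the per-image recalls, shown only for comparison."""
    return sum(precision_recall(*c)[1] for c in counts) / len(counts)


if __name__ == "__main__":
    for name, table in (("first", FIRST_TABLE), ("second", SECOND_TABLE)):
        (tp, fp, fn), (p, r) = pooled(table)
        print(f"{name}: TP={tp} FP={fp} FN={fn} precision={p:.1%} recall={r:.1%} "
              f"(mean per-image recall {per_image_mean_recall(table):.1%})")
```

Averaging the per-image recalls instead would give about 79.9% and 77.1%, so the reported 78.4% and 73.2% correspond to the pooled counts.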

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, Z.; Wei, H.-H.; Yum, S.G.; Chen, J.-H. Automatic Object-Detection of School Building Elements in Visual Data: A Gray-Level Histogram Statistical Feature-Based Method. Appl. Sci. 2019, 9, 3915. https://doi.org/10.3390/app9183915
