Featured Application

The method proposed in this paper can be extended to the classification of vegetable and flower seedlings cultivated in plug trays.

Abstract

Classifying plug seedlings is an important task in the replanting process. This paper proposes a classification method for plug seedlings based on transfer learning. First, the region of interest is extracted from the acquired image and converted to grayscale, and a regional grayscale cumulative distribution curve is obtained. The number of peak points of this curve is counted to identify the plug tray specification. Second, transfer learning based on convolutional neural networks is used to construct the seedling classification model. According to the growth characteristics of the seedlings, 2286 seedling samples were collected at the two-leaf and one-heart stage to train the model. Finally, the region-of-interest image is divided into cell images according to the tray specification, and the cell images are fed into the classification model, which classifies each cell as a qualified seedling, an unqualified seedling, or a lack of seedling. In testing, the tray specification identification method achieved an average accuracy of 100% for the three specifications (50 cells, 72 cells, 105 cells) with 20-day and 25-day pepper seedlings. Seedling classification models were constructed and tested using transfer learning with four convolutional neural networks (Alexnet, Inception-v3, Resnet-18, VGG16). The VGG16-based model had the highest classification accuracy, 95.50%, and the Alexnet-based model had the shortest training time, 6 min and 8 s. This research provides a theoretical reference for intelligent replanting classification.

1. Introduction

In greenhouse plug seedling cultivation, unqualified seedlings and empty cells in plug trays must be replanted to improve tray utilization and to promote the uniform mechanization of greenhouse seedling production. Classifying unqualified seedlings, qualified seedlings and lack of seedlings is therefore an important step in the replanting process. At present, researchers mostly use image segmentation to measure the leaf area or other features of seedlings in order to identify unqualified seedlings and lack of seedlings. Jiang et al. used tomato seedlings as test samples and applied a morphology-based watershed algorithm to segment leaf edges; the leaf area and leaf circumference of each seedling in a plug tray were extracted and used to identify the seedlings, with a recognition accuracy of 98% [1]. To address leaf overlap among plug seedlings, Tong et al. proposed a decision-making method that combines the central information of the leaflet area with an improved watershed method to segment the seedlings, and then calculated the seedling leaf area to identify the seedlings; the recognition accuracy was 95% [2,3]. Qingchun et al. used structured light and an industrial camera to identify seedlings by detecting leaf area and stem height, with a recognition accuracy of over 90% [4]. Tian et al. measured scion and rootstock stem diameters to grade scion and rootstock seedlings [5]. Wang et al. compared the pixel value of the seedling in binary images of the plug tray with a threshold value to determine whether a cell lacked a seedling; the accuracy in distinguishing empty cells from seedling cells was 100% for both 25-day and 35-day Arabidopsis plug seedlings [6].
When seedlings are classified with traditional image processing methods, some of the information carried by the features is inevitably lost during processing; hand-crafted feature extraction algorithms are complex, and the recognition accuracy depends largely on which features are selected. During image segmentation and feature extraction, the classification accuracy is easily reduced by uncertain factors such as changes in the light intensity of the working environment, differences in the matrix of the plug tray, and differences in the growth morphology of the seedlings. The robustness of these methods for classifying seedlings therefore needs further improvement.

Convolutional neural networks are a robust classification method [7,8,9] that has been widely verified in agricultural product classification and agricultural disease identification [10,11,12,13]. Their advantage is that the seedling image can be used directly as the network input, avoiding the complicated feature extraction and data reconstruction of traditional recognition algorithms. In addition, with data augmentation before training, the method is robust in classification. Transfer learning is a machine learning method based on pre-trained models, which can effectively address the problem of a small training sample size [14,15,16,17]. Because the number of plug seedling samples for different types of plug trays is limited and samples are costly to acquire, this paper uses transfer learning based on convolutional neural networks to classify unqualified seedlings, qualified seedlings and lack of seedlings. The proposed method uses pepper seedlings as the test object and distinguishes qualified from unqualified seedlings according to the growth characteristics of pepper seedlings. First, image processing is used to identify the plug tray specification before seedling classification. Second, the plug seedling classification model is established by transfer learning based on a convolutional neural network; seedling samples are collected, and the model is trained and tested. Finally, a replanting classification prototype and its software are developed to realize intelligent classification control for greenhouse seedling replanting.

2. Materials and Methods

2.1. Configuration and Main Components of the Classification System

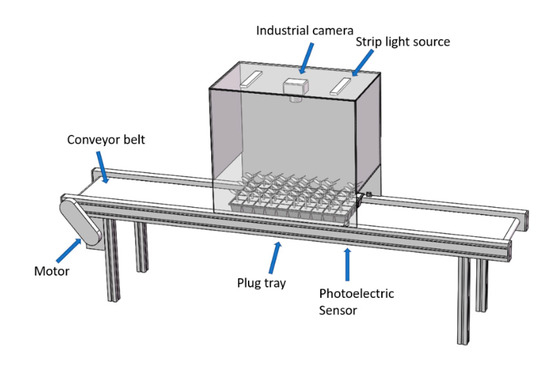

The classification system is composed of a computer, a motor, a conveyor belt, a photoelectric sensor, an industrial camera and a strip light source. The samples were collected using a MER-500-7UC industrial camera manufactured by Daheng Image, with a resolution of 2592 × 1944 pixels. The lens is an 8 mm lens produced by Computar, a Japanese lens company. The computer used for image processing, classification model training and testing has an 8th-generation Intel Core i7 CPU and a GTX1060 graphics card, and model training uses single-GPU acceleration. The main components of the classification system are shown in Figure 1.

Figure 1. Main components of the classification system.

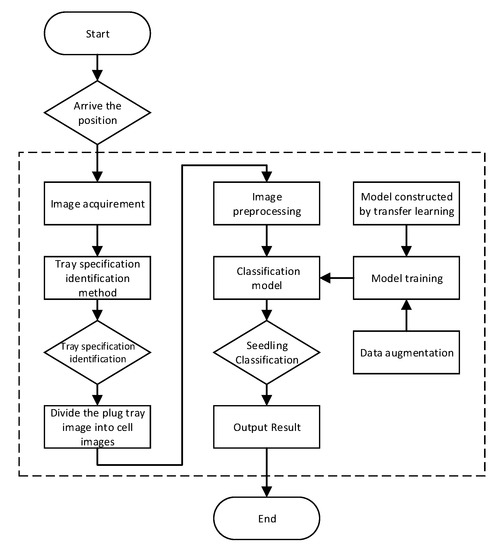

The classification method consists of two parts: identification of the plug tray specification, and classification of the plug seedlings. The main steps are shown in Figure 2. After the classification system starts, the industrial camera captures the original image when the plug tray reaches the designated location. The tray specification identification method is then used to identify the plug tray specification, and the image of the plug tray is divided into cell images accordingly. After image preprocessing, the cell images are fed into the trained convolutional neural network model, which outputs the classification results: unqualified seedlings, qualified seedlings and lack of seedlings. The classification model is constructed by transfer learning and trained on the training samples after data augmentation.

Figure 2. Main steps involved in the classification of plug seedlings.

2.2. Sample Preparation

2.2.1. Unqualified Seedling Standard

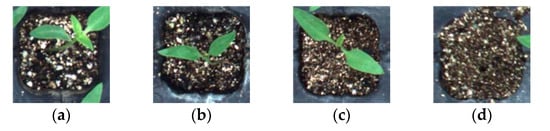

The Agricultural and Rural Department of Qinghai Province of China suggested that pepper seedlings should be replanted at the two-leaf and one-heart stage [18]. Tang et al. proposed that pepper seedlings be replanted when 1 to 2 true leaves have grown, to improve the effective utilization rate of the greenhouse and reduce energy consumption [19]. Zhang et al. reported that the survival rate of seedlings could reach 95% when they were transplanted with 2 to 3 true leaves [20]. Taking these recommendations together, we chose the two-leaf and one-heart stage (with 2 true leaves) for transplanting pepper seedlings in plug trays. The two-leaf and one-heart stage is the period in which the pepper seedling has grown two true leaves and one top bud in addition to the two cotyledons. The standard for qualified and unqualified seedlings in this study was set accordingly. A qualified seedling is a pepper seedling that has clearly grown two true leaves and one top bud at the two-leaf and one-heart stage, as shown in Figure 3a. An unqualified seedling is one that has not yet grown true leaves, as shown in Figure 3b, or that has only one true leaf, as shown in Figure 3c. Lack of seedling means that no seedling has grown in the cell, as shown in Figure 3d. Two types of cells in the plug tray need to be replanted: cells with unqualified seedlings and cells lacking seedlings.

Figure 3. Different classes of plug seedlings. (a) Example of seedlings with two true leaves. (b) Examples of seedlings with no true leaf. (c) Example of seedling with one true leaf. (d) Example of lack of seedlings.

2.2.2. Sample Collection

Sample collection was carried out on the prototype platform. The seedlings were cultivated in March 2019 by Beijing Zhongnong Futong Horticulture Co., Ltd. The pepper belongs to the genus Capsicum of the family Solanaceae. A total of 2286 sample images were collected in this research, of which 1243 were qualified seedling samples, 256 were unqualified seedling samples, and 787 were lack-of-seedling samples. Of these samples, 70% were used as the training set and 30% as the validation set. The sample collection data are shown in Table 1.

Table 1. Sample data distribution.
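As a rough illustration of the 70/30 split, the sketch below partitions each class separately so that the class proportions are preserved. The text does not say whether the split was made per class or over the pooled set, so the per-class (stratified) split, the helper name `split_class`, and the fixed random seed are all assumptions made for this example.

```python
import random

# Class counts reported in the text (2286 images in total).
CLASS_COUNTS = {"qualified": 1243, "unqualified": 256, "lack": 787}


def split_class(indices, train_fraction=0.7, seed=0):
    """Shuffle one class's sample indices and split them into train/validation."""
    rng = random.Random(seed)  # fixed seed (assumption) keeps the split repeatable
    shuffled = list(indices)
    rng.shuffle(shuffled)
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical sample indices 0..count-1 stand in for the image files.
splits = {name: split_class(range(count)) for name, count in CLASS_COUNTS.items()}
for name, (train, val) in splits.items():
    print(f"{name}: {len(train)} training, {len(val)} validation")
```

With these counts, a per-class split gives about 1600 training images and 686 validation images in total.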

2.3. Identification of Plug Tray Specification

Before the plug seedlings are classified, the ROI (region of interest) image must be divided into cell images according to the specification of the plug tray, and the cell images are then fed into the seedling classification model. Identifying the plug tray specification is therefore essential. The length and width of the plug trays used in greenhouse transplanting are fixed, but the number of cells differs. The commonly used specifications are 50 cells (5 rows × 10 columns), 72 cells (6 rows × 12 columns), and 105 cells (7 rows × 15 columns). Therefore, once the number of rows of a tray is identified, its specification can be determined.
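The division of the ROI into cell images can be sketched as follows. This is a minimal illustration, assuming the ROI is cropped exactly to the tray boundary and the cells form an even grid; the function name `split_into_cells` and the nested-list image representation are choices made for this example, not the authors' implementation.

```python
# Rows x columns for the three tray specifications named in the text.
TRAY_LAYOUTS = {50: (5, 10), 72: (6, 12), 105: (7, 15)}


def split_into_cells(roi, cell_count):
    """Cut a ROI (list of pixel rows) into a row-major list of equal cell images."""
    n_rows, n_cols = TRAY_LAYOUTS[cell_count]
    height, width = len(roi), len(roi[0])
    cells = []
    for r in range(n_rows):
        top, bottom = r * height // n_rows, (r + 1) * height // n_rows
        for c in range(n_cols):
            left, right = c * width // n_cols, (c + 1) * width // n_cols
            cells.append([row[left:right] for row in roi[top:bottom]])
    return cells


# Tiny synthetic ROI: 10 x 20 pixels, each pixel stores its own (row, col).
roi = [[(y, x) for x in range(20)] for y in range(10)]
cells = split_into_cells(roi, 50)
print(len(cells), len(cells[0]), len(cells[0][0]))
```

For the 10 × 20 synthetic ROI and the 50-cell layout, this yields 50 cells of 2 × 2 pixels each. Integer division of the boundaries keeps the cells contiguous even when the ROI size is not an exact multiple of the grid.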

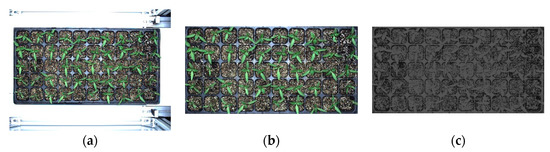

The original image acquired by the classification system is shown in Figure 4a, and the ROI image is extracted from it according to the calibrated position, as shown in Figure 4b.

Figure 4. (a) Original image. (b) ROI RGB image. (c) ROI grayscale image.

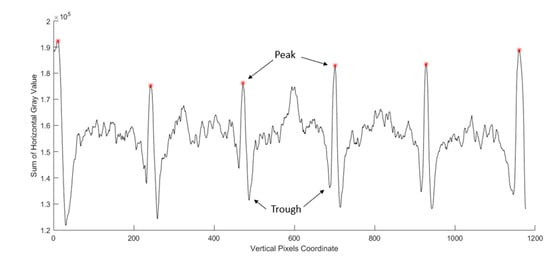

The ROI RGB image is converted to grayscale using (R + G + B)/3, as shown in Figure 4c. The gray values along each horizontal line of the grayscale image are then summed to obtain a one-dimensional array, which is plotted as a curve, as shown in Figure 5.

Figure 5. Grayscale horizontal distribution curve of the 50 cells plug tray.
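The grayscale conversion and the horizontal summation can be sketched in a few lines. The example below assumes that "horizontal" means summing the gray values along each image row, producing one value per row; the function names and the tiny synthetic image are hypothetical, and a real implementation would more likely use an array library.

```python
def to_gray(rgb_image):
    """Average the R, G and B channels of every pixel: (R + G + B) / 3."""
    return [[(r + g + b) / 3 for (r, g, b) in row] for row in rgb_image]


def row_profile(gray_image):
    """Sum the gray values along each horizontal line, one value per image row."""
    return [sum(row) for row in gray_image]


# Synthetic 5 x 4 RGB image (assumption): bright rows stand in for cell edges,
# dark rows stand in for the matrix and leaves inside the cells.
bright, dark = (240, 240, 240), (30, 60, 30)
image = [[bright] * 4, [dark] * 4, [dark] * 4, [bright] * 4, [dark] * 4]
profile = row_profile(to_gray(image))
print(profile)
```

In this toy image the bright rows give large sums and the dark rows give small sums, which is the contrast the peak counting relies on.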

By analyzing the ROI grayscale image, we found that the edges of the cells have large gray values, so they produce wave peaks in the curve, whereas the matrix and seedling leaves in the middle of the cells have small gray values, so they produce wave troughs. In the grayscale cumulative curve, each row of cells is therefore bounded by a peak on each of its two sides, and a tray with M rows produces M + 1 peaks. By counting the number of peak points of the grayscale cumulative curve, the specification of the plug tray can be determined. The calculation is as follows.

M = N − 1

where M is the number of rows of the plug tray and N is the number of peak points.

The peak-finding algorithm for the grayscale cumulative curve proceeds as follows.

- Step 1: Find all local maxima in the array. A point A at index a is a local maximum if it satisfies (data(a) > data(a − 1)) && (data(a) ≥ data(a + 1)), where data(a) is the grayscale cumulative value at point A.

- Step 2: Among the remaining maxima, find the largest one and archive its value and position.

- Step 3: Delete every remaining maximum whose distance from the archived maximum is less than or equal to the minimum distance.

- Step 4: Repeat steps 2 and 3 for the remaining maxima until none are left.

- Step 5: Sort the archived maxima by position.

As shown in Figure 5, the method gives 5 rows for this tray, so the tray specification is 50 cells.
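The peak-finding steps and the row formula M = N − 1 can be sketched as below. This is an illustrative reading of the listed steps, not the authors' code: the function name `find_peaks`, the value of `min_distance`, and the synthetic profile are assumptions chosen so that the example is small enough to check by hand.

```python
def find_peaks(data, min_distance):
    """Greedy peak picking: keep the tallest maxima more than min_distance apart."""
    # Step 1: local maxima, data[a] > data[a - 1] and data[a] >= data[a + 1].
    candidates = [a for a in range(1, len(data) - 1)
                  if data[a] > data[a - 1] and data[a] >= data[a + 1]]
    kept = []
    while candidates:  # Step 4: repeat until no candidate is left
        # Step 2: archive the tallest remaining candidate.
        best = max(candidates, key=lambda a: data[a])
        kept.append(best)
        # Step 3: drop candidates within min_distance of it (including itself).
        candidates = [a for a in candidates if abs(a - best) > min_distance]
    # Step 5: report the archived peaks in positional order.
    return sorted(kept)


SPEC_BY_ROWS = {5: 50, 6: 72, 7: 105}  # rows -> cells, from the layouts in the text

# Synthetic profile (assumption): six dominant peaks plus a small bump at index 7.
profile = [0, 9, 1, 1, 1, 8, 1, 3, 1, 9, 1, 1, 1, 9, 1, 1, 1, 8, 1, 1, 1, 9, 0]
peaks = find_peaks(profile, min_distance=2)
rows = len(peaks) - 1            # M = N - 1
spec = SPEC_BY_ROWS.get(rows)    # None if the row count matches no known tray
print(peaks, rows, spec)
```

The small bump at index 7 passes the local-maximum test but is removed in step 3 because it lies within the minimum distance of the taller peak at index 9. This is why the minimum distance matters: without it, leaf or matrix texture inside a cell could be counted as an extra row boundary.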

2.4. Classification Method of Plug Seedlings

2.4.1. Convolutional Neural Networks

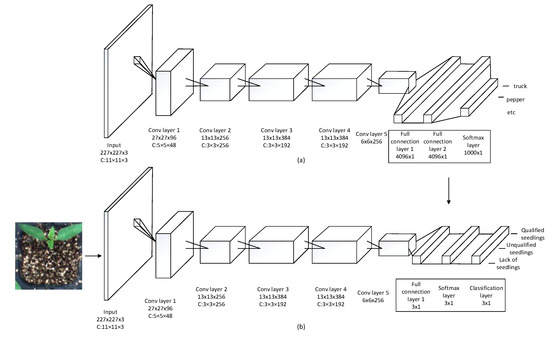

A convolutional neural network (CNN) is a neural network with a convolutional structure. The convolutional structure reduces the memory occupied by deep networks, reduces the number of network parameters, and alleviates over-fitting. These advantages are more pronounced when the network input is a multi-dimensional image. The image can be used directly as the network input, avoiding the complicated feature extraction and data reconstruction of traditional recognition algorithms. Convolutional neural networks are now widely used in agriculture. This study is based on four convolutional neural networks (Alexnet, Inception-v3, VGG16, Resnet-18) [21,22,23,24], and transfer learning is used to construct the seedling classification model. The main parameters of the four network structures are shown in Table 2. Taking Alexnet as an example, the network structure is shown in Figure 6a. The network has eight layers: the first five are convolutional layers, and the last three are two fully connected layers and one softmax layer.

Table 2. Main parameters of 4 convolutional neural networks.

Figure 6. Alexnet convolutional neural network and transfer learning model structure. (a) Alexnet convolutional neural network structure. (b) Transfer learning model structure based on Alexnet.

2.4.2. Transfer Learning

Transfer learning refers to using pre-trained models to extract features automatically from new data sets. It is a convenient way to apply deep learning without large data sets or time-consuming computation and training. When the sample size is small, transfer learning is an effective machine learning method [25,26,27]. This study uses pre-trained convolutional neural network models. These models were trained on the ImageNet dataset, with 1000 classes and 1.2 million samples, and have strong feature extraction capabilities.

This study is based on four pre-trained convolutional neural network models (Alexnet, Inception-v3, Resnet-18, VGG16), and the last three layers of each network are modified to suit the intelligent plug seedling classification application. Taking Alexnet as an example, the last three layers of the network are discarded, and the output of the bottleneck layer is taken as the feature extraction result of the new model. A fully connected layer, a softmax layer and a classification output layer are then added to form the new classification model, as shown in Figure 6b.

- A fully connected layer is added to map multidimensional features to flat features. It plays the same role as in a shallow neural network and is responsible for logical inference. All parameters in this layer need to be learned. This layer links to the output of the convolutional layers and removes the spatial information (the number of channels), turning a three-dimensional matrix into a vector.

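- A softmax layer is added to calculate the probability that each sample belongs to each class. The softmax function is defined as follows.

$$y_r = \frac{\exp(a_r)}{\sum_{j=1}^{K} \exp(a_j)}$$

where $a_r$ is the rth output of the fully connected layer, $0 \le y_r \le 1$, $\sum_{j=1}^{K} y_j = 1$, and K = 3.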

- A classification layer is added to calculate the final classification probability of the sample and the cross entropy [28]. The cross entropy loss function is defined as follows.

$$E = -\sum_{i=1}^{N} \sum_{j=1}^{K} t_{ij} \ln y_{ij}$$

where N is the number of samples, K is the number of classes, $t_{ij}$ is the indicator that the ith sample belongs to the jth class, and $y_{ij}$ is the output for sample i for class j, which in this case is the value from the softmax function. That is, it is the probability that the network associates the ith input with class j.
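The softmax and cross entropy computations performed by the two added layers can be illustrated numerically. The sketch below assumes the cross entropy is summed over samples without averaging (some frameworks divide by the number of samples); the raw scores and one-hot targets are made-up values for three classes, not outputs of the trained models.

```python
import math


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probabilities, targets):
    """Sum of -t_ij * ln(y_ij) over all samples i and classes j."""
    return -sum(t * math.log(y)
                for probs, one_hot in zip(probabilities, targets)
                for y, t in zip(probs, one_hot) if t)


# Hypothetical fully connected outputs for two cells; K = 3 classes in the order
# (qualified, unqualified, lack of seedling).
scores = [[2.0, 1.0, 0.1], [0.2, 0.3, 3.0]]
targets = [[1, 0, 0], [0, 0, 1]]  # one-hot indicators t_ij
probs = [softmax(s) for s in scores]
loss = cross_entropy(probs, targets)
print(probs, loss)
```

Because the targets are one-hot, only the probability assigned to the true class of each sample contributes to the loss, so the loss approaches zero as those probabilities approach 1.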

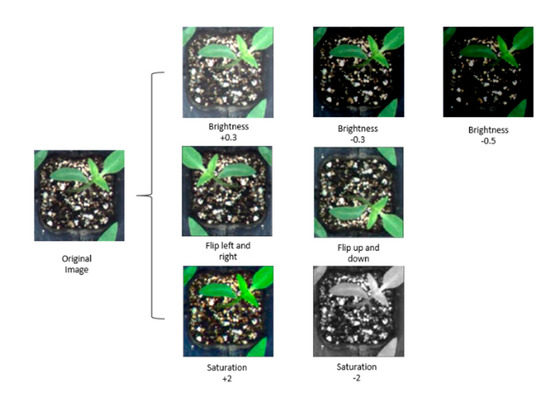

2.4.3. Data Augmentation

The three classes of seedlings have relatively few samples. In this study, the brightness of the sample images is randomly changed within −0.5 to 0.5, the images are randomly flipped vertically and horizontally, and the saturation is randomly changed within −2 to +2 to augment the sample data and thereby enhance the robustness of the classification model, as shown in Figure 7.

Figure 7. Data augmentation examples.
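A simplified version of the brightness and flip augmentation might look like the sketch below. It assumes pixel values scaled to [0, 1], treats the brightness change as an additive offset drawn from −0.5 to 0.5, and applies each flip with probability 0.5; none of these details are stated in the text. The saturation change is left out because the text does not specify how the −2 to +2 range is applied.

```python
import random


def adjust_brightness(image, delta):
    """Add delta to every channel and clip the result to [0, 1]."""
    return [[tuple(min(1.0, max(0.0, ch + delta)) for ch in px) for px in row]
            for row in image]


def augment(image, rng):
    """Apply a random brightness shift and random up-down / left-right flips."""
    out = adjust_brightness(image, rng.uniform(-0.5, 0.5))
    if rng.random() < 0.5:
        out = out[::-1]                    # flip up-down
    if rng.random() < 0.5:
        out = [row[::-1] for row in out]   # flip left-right
    return out


rng = random.Random(42)  # fixed seed (assumption) for a repeatable example
# Hypothetical 2 x 2 RGB cell image with channel values in [0, 1].
image = [[(0.2, 0.6, 0.1), (0.3, 0.7, 0.2)],
         [(0.5, 0.4, 0.3), (0.1, 0.1, 0.1)]]
augmented = [augment(image, rng) for _ in range(4)]
```

Each call produces a new variant of the same cell image, so the labels of the original samples carry over unchanged to the augmented ones.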

3. Results and Discussion

3.1. Plug Tray Specification Test Result

Three types of plug trays (50 cells, 72 cells, 105 cells) were used to cultivate 20-day and 25-day pepper seedlings, and the test was carried out by using the tray specification identification method. Plug tray specification test result data are shown in Table 3.

Table 3. Plug tray specification test result data.

It can be seen from the test results that the accuracy of the tray specification identification method proposed in this paper is 100% for the three tray specifications of 50 cells, 72 cells and 105 cells. This method can effectively identify the specifications of plug trays.

3.2. Plug Seedlings Classification Test Result

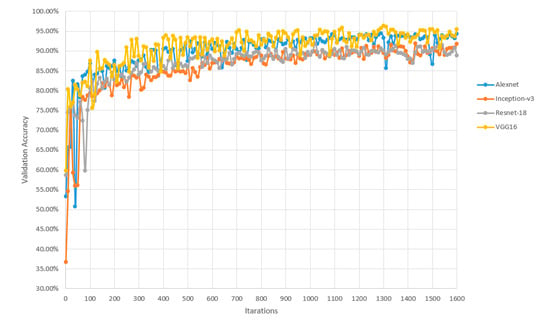

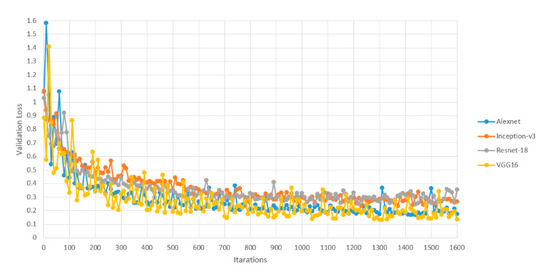

Transfer learning classification models based on four convolutional neural networks (Alexnet, Inception-v3, Resnet-18, VGG16) were constructed, and the collected samples were used to train and validate each model. The number of iterations was set to 1600, the minibatch size to 10, and the learning rate to 0.0001. The test results of the four classification models are shown in Table 4.

Table 4. Four classification models test result.
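To put the training settings in perspective, the short calculation below estimates how many passes over the training set the 1600 iterations represent. It assumes that one iteration processes one minibatch and that the training set is 70% of the 2286 samples; the rounding of the training set size is also an assumption.

```python
total_samples = 2286     # total images, from the sample collection section
train_fraction = 0.7     # 70% of the samples are used for training
batch_size = 10          # minibatch size
iterations = 1600        # number of training iterations

train_samples = round(total_samples * train_fraction)
iterations_per_epoch = train_samples / batch_size
epochs = iterations / iterations_per_epoch
print(train_samples, iterations_per_epoch, epochs)
```

Under these assumptions, the training set holds about 1600 images, one pass takes 160 iterations, and the 1600 iterations correspond to roughly 10 passes over the training data.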

The test results show that the classification accuracy of all four transfer learning models based on convolutional neural networks is more than 92%. Among them, the VGG16-based model has the highest classification accuracy (95.5%) and the Alexnet-based model has the shortest training time (6 min and 8 s).

The change in validation accuracy over iterations is shown in Figure 8. The validation accuracy increased very quickly during training of all four network models. After about 200 iterations, it had reached more than 80%. At 400 iterations, the validation accuracy of Alexnet and VGG16 reached 90%, while that of Resnet-18 and Inception-v3 reached 85%. After 1400 iterations, the validation accuracy of Alexnet and VGG16 remained at about 95%, and that of Resnet-18 and Inception-v3 at about 90%. The change in validation loss over iterations is shown in Figure 9. The validation loss of the four network models dropped rapidly during training. After 200 iterations, the loss had decreased to 0.5. After 1400 iterations, the loss of Alexnet and VGG16 remained at about 0.2, and that of Resnet-18 and Inception-v3 at around 0.3.

Figure 8. Validation accuracy rate iteration change graph.

Figure 9. Validation loss iteration change graph.

According to the test results, the transfer learning algorithm based on convolutional neural networks classified seedlings well. With this method, the cell RGB images are used directly as the input to the classification model, which avoids manual feature extraction and reduces information loss. Data augmentation (flipping and changes in brightness and saturation) enlarged the training data and created samples under different conditions, and the classification method still achieved high accuracy. It is therefore more robust than traditional image processing methods [1,2,3,4,5,6], in which differences in the brightness or saturation of image samples may affect the segmentation result or other algorithm steps and so reduce accuracy. After being trained and tested with 2286 image samples, the transfer learning method achieved a maximum accuracy of 95.50%.

Through the analysis of misjudged samples, we found that there were two frequently made mistakes in classification, and the four models shared the same problems. The first one was that the lack of seedlings was recognized as unqualified seedlings when the cell without seedlings was intruded by several leaves from adjacent seedlings. The second one was that the qualified seedlings were recognized as unqualified seedlings when the qualified seedlings’ leaves intruded into the adjacent cells. The improvement mainly includes two aspects: One is the further optimization of the network parameters, so that the recognition network could have better recognition ability; the other is to increase the number of samples, especially including the above two kinds of samples which may easily lead to misjudgment, so that the network can learn more comprehensive and effective information, and further improve the classification accuracy.

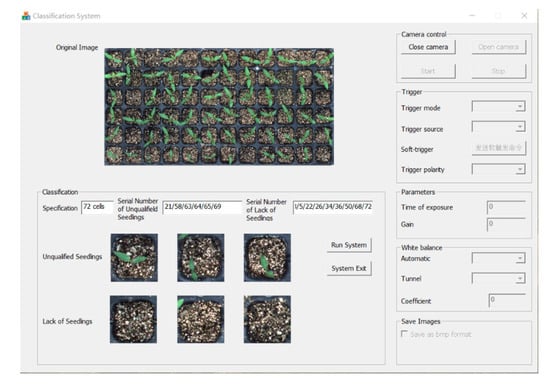

3.3. Prototype and Software Design

In this research, a prototype of the plug seedling classification system and its classification software were designed, as shown in Figure 10 and Figure 11. The classification system software was written in C++ using the Visual Studio framework. The plug tray specification identification method was implemented with the OpenCV development kit. The seedling classification model based on transfer learning was built in the TensorFlow environment using Python.

Figure 10. Classification system prototype.

Figure 11. Classification system software.

4. Conclusions

This paper proposed a seedling classification method based on transfer learning. Four classification models were constructed and tested using transfer learning with four different convolutional neural networks (Alexnet, Inception-v3, Resnet-18, VGG16). The VGG16-based model had the highest classification accuracy, 95.50%, and the Alexnet-based model had the shortest training time, 6 min and 8 s. As a preprocessing step for seedling classification, we proposed a tray specification identification method that effectively identifies the specifications of plug trays. We designed the prototype and the classification software based on the Visual Studio 2010 framework and the TensorFlow environment.

Author Contributions

Conceptualization, Z.X. and Y.T.; data curation, X.L.; methodology, Z.X. and S.Y.; validation, X.L. and S.Y.; writing—original draft, Z.X.; writing—review and editing, Y.T.

Funding

This research was funded by The National Key Research and Development Program of China (grant number: No. 2016YFD0700302) from China Ministry of Science and Technology.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jiang, H.Y.; Shi, J.H.; Ren, Y.; Ying, Y.B. Application of machine vision on automatic seedling transplanting. Trans. Chin. Soc. Agric. Eng. 2009, 25, 127–131.

- Tong, J.H.; Li, J.B.; Jiang, H.Y. Machine vision techniques for the evaluation of seedling quality based on leaf area. Biosyst. Eng. 2013, 115, 369–379.

- Tong, J.H.; Shi, H.F.; Wu, C.Y.; Jiang, H.Y.; Yang, T.W. Skewness correction and quality evaluation of plug seedling images based on Canny operator and Hough transform. Comput. Electron. Agric. 2018, 155, 461–472.

- Qingchun, F.; Chunjiang, Z.; Kai, J.; Pengfei, F.; Xiu, W. Design and test of tray-seedling sorting transplanter. Int. J. Agric. Biol. Eng. 2015, 8, 14–20.

- Tian, S.; Wang, Z.; Yang, J.; Huang, Z.; Wang, R.; Wang, L.; Dong, J. Development of an automatic visual grading system for grafting seedlings. Adv. Mech. Eng. 2017, 9, 1–12.

- Wang, Y.W.; Xiao, X.Z.; Liang, X.F.; Wang, J.; Wu, C.Y.; Xu, J.K. Plug cell positioning and seedling shortage detecting system on automatic seedling supplementing test-bed for vegetable plug seedlings. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2018, 34, 35–41.

- Zhou, F.Y.; Jin, L.P.; Dong, J. Review of Convolutional Neural Network. Chin. J. Comput. 2017, 40, 1229–1251.

- Koray, K.; Pierre, S.; Y.-Lan, B.; Karol, G.; Michael, M.; Yann, L. Learning convolutional feature hierarchies for visual recognition. In Proceedings of the 23rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–9 December 2010; pp. 1090–1098.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. Deepfruits: A fruit detection system using deep neural networks. Sensors 2016, 16, 1222.

- Bhatt, P.; Sarangi, S.; Pappula, S. Comparison of CNN models for application in crop health assessment with participatory sensing. In Proceedings of the 2017 IEEE Global Humanitarian Technology Conference (GHTC), San Jose, CA, USA, 19–22 October 2017; pp. 1–7.

- Guan, W.; Yu, S.; Jianxin, W. Automatic image-based plant disease severity estimation using deep learning. Comput. Intell. Neurosci. 2017, 2017, 2917536.

- Ng, H.W.; Nguyen, V.D.; Vonikakis, V.; Winkler, S. Deep learning for emotion recognition on small datasets using transfer learning. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 13 November 2015; pp. 443–449.

- Torrey, L.; Shavlik, J. Transfer learning. In Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques; IGI Global: Hershey, PA, USA, 2009; pp. 242–264.

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? In Proceedings of the 27th International Conference on Neural Information Processing Systems 2014, Montreal, QC, Canada, 8–13 December 2014; pp. 3320–3328.

- Christian, S.; Wei, L.; Yangqing, J.; Pierre, S.; Scott, R.; Dragomir, A.; Dumitru, E.; Vincent, V.; Andrew, R. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Main Vegetable Varieties and Promotion Techniques in Qinghai Province in 2018. Available online: http://nynct.qinghai.gov.cn/Html/2018_03_30/2_211390_2018_03_30_226663.html (accessed on 21 June 2019).

- Tang, Y.X.; Qu, P.; Liu, X.H.; Xu, H.C.; Gao, J.; Zhang, D.X.; Li, S. Technical Regulations of Raising Pepper Plug Seedling for Mechanized Transplantation. Jiangsu Agric. Sci. 2017, 45, 112–115.

- Zhang, J.J.; Zhang, X.Q.; Peng, F.Z.; Chen, J.Z. The Vegetable Industralized Sowing Seedling Technology and Its Application Prospect. J. Hebei Agric. Sci. 2013, 17, 20–23.

- Alex, K.; Ilya, S.; Geoffrey, E.H. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 2012, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1106–1114.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Gogul, I.; Kumar, V.S. Flower species recognition system using convolution neural networks and transfer learning. In Proceedings of the 2017 Fourth International Conference on Signal Processing, Communication and Networking (ICSCN), Chennai, India, 16–18 March 2017; pp. 1–6.

- Ghazi, M.M.; Yanikoglu, B.; Aptoula, E. Plant identification using deep neural networks via optimization of transfer learning parameters. Neurocomputing 2017, 235, 228–235.

- Suh, H.K.; IJsselmuiden, J.; Hofstee, J.W.; van Henten, E.J. Transfer learning for the classification of sugar beet and volunteer potato under field conditions. Biosyst. Eng. 2018, 174, 50–65.

- Bishop, C.M. Pattern Recognition and Machine Learning (Information Science and Statistics); Springer-Verlag New York, Inc.: New York, NY, USA, 2006.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).