Abstract

The extraction of buildings from remote sensing images is of crucial significance for urban management and planning, but automatically extracting buildings with precise boundaries remains difficult. In this paper, we propose FEPA-Net, a network that integrates a feature extraction module and a position attention module to extract buildings from remote sensing images, using U-Net as the base model. First, the number of convolutional operations in the model is increased to extract more abstract features of ground objects. Second, ordinary convolution is replaced with dilated convolution to broaden the receptive field, so that the output of each convolution layer incorporates information from a wider area. Third, a feature extraction module is added to mitigate the loss of detailed features. Finally, a position attention module is introduced to capture more contextual information. The model is validated on the Massachusetts dataset and the WHU dataset. The experimental results show that FEPA-Net outperforms the comparison methods in quantitative evaluation; compared with U-Net, the mean intersection over union (MIoU) on the two datasets improves by 1.41% and 1.43%, respectively. Overall, FEPA-Net effectively improves the accuracy of building extraction, reduces false and missed detections, and delineates building outlines more clearly.

1. Introduction

Buildings are essential components of cities and among the most frequently changing types of basic geographic data. Accurate extraction of building information has significant application value in urban planning, urban management, urban digitization, and geodatabase updating [1,2,3]. As the quality and resolution of remote sensing images continue to improve, buildings in these images carry richer detail. However, higher resolution also brings more noise, which complicates the separation of building areas from non-building areas [4]. In addition, buildings vary widely in scale and often have complex structures. Accurate and efficient building extraction from high-resolution remote sensing images is therefore a key research area.

In large-scale practical applications, manual extraction consumes substantial human and material resources, and its accuracy cannot be guaranteed. In recent years, researchers have proposed many building extraction methods, which fall into traditional methods and deep learning methods. Traditional methods include maximum likelihood classification [5,6], support vector machines [7,8,9,10], decision trees [11,12], and random forests [13,14,15,16]. Because high-quality remote sensing images contain diverse and detailed features, these algorithms rely on a priori knowledge: they derive feature parameters from the shape, color, and contour of the target objects and use them to establish decision rules. However, such rules tend to be restricted to buildings with specific shapes, and the extraction results exhibit the “salt-and-pepper” phenomenon. Moreover, differences in spectral brightness lead to “the same object with different spectra” and “different objects with the same spectrum”, which ultimately result in low extraction accuracy and blurred boundaries. Object-oriented classification methods can effectively prevent classification errors caused by spectral differences. These methods mainly include region segmentation [17] and texture-based segmentation [18], among other techniques. However, the segmentation parameters largely determine the segmentation accuracy, and they also add to the complexity of the classification task. Because buildings are intricate and densely distributed and are often occluded by trees, extracting useful information from the images remains difficult.

With the rapid advancement of computer vision, deep learning models have attracted increasing attention. In particular, the ability of convolutional neural networks (CNNs) to learn features automatically has been widely exploited in image recognition. Prominent CNN architectures include VGG, GoogLeNet, and ResNet [19,20,21]. Mnih [22] adopted a CNN-based method for building extraction. However, its relatively high computational demands reduced segmentation efficiency and affected the quality of the results. Shelhamer et al. [23] addressed this problem with the fully convolutional network (FCN), which replaces fully connected layers with convolutional layers to obtain the first end-to-end trained segmentation network and effectively improves training efficiency. The FCN has since become the mainstream semantic segmentation framework.

Generic CNN models cannot accurately extract building contours. To further improve the precision of building extraction, researchers have proposed improved FCN-based networks, including PSPNet [24], DeepLabV3+ [25], and U-Net [26]. The core component of PSPNet is the pyramid pooling module, which aggregates contextual information from different regions and thereby strengthens the network's ability to capture global information. DeepLabV3+ balances segmentation accuracy against training time by using atrous (dilated) convolution. This operation extracts features at different resolutions and adjusts the receptive field while keeping the feature map size constant, so that multi-scale information is captured. U-Net, an improved fully convolutional network, inherits the properties of FCNs and makes full use of the connections between high-level and low-level features to produce accurate outputs. Among deep learning models, U-Net offers stable performance, strong learning capacity, and high robustness. It was initially developed for medical image segmentation and was later widely adopted for building extraction. For complex remote sensing images, however, detailed information is lost during max-pooling down-sampling, so researchers have proposed improved algorithms.

Li et al. [27] proposed a fully convolutional U-shaped network with closely connected layers to fully exploit contextual information. It improves building extraction accuracy through changes to the model structure and post-processing, but at the cost of greater complexity. Lei et al. [28] introduced a U-Net based on a dual hybrid attention mechanism (DHAU-Net), which enhances feature integration and uses attention to focus on relevant image details. Qiu et al. [29] proposed a refined U-Net (Refined-UNET) that extracts buildings more accurately by introducing an atrous spatial pyramid pooling (ASPP) module and an improved depthwise separable convolution (IDSC) module. Ye et al. [30] incorporated spatial and channel attention mechanisms into U-Net to reduce the information gap between features at different hierarchical levels. Guo et al. [31] proposed a U-Net-based multi-loss neural network with an attention module that increases model sensitivity and suppresses irrelevant feature regions. To address low computational efficiency, Liu et al. [32] proposed an asymmetric convolutional residual block network that reduces model size. Although these approaches have advantages for building extraction, the following drawbacks remain:

(1) Buildings in high-resolution remote sensing images vary in scale and are structurally complex, so small or irregular buildings are extracted poorly. This leads to false detections, missed detections, and unclear building contours.

(2) The models do not represent global features sufficiently, so voids appear inside buildings.

(3) The inter-class differences between buildings and the background are small.

To address these problems, this paper proposes FEPA-Net, a building extraction network for high-resolution remote sensing images that integrates a feature extraction module and a position attention module into the U-Net architecture. The model increases the number of convolutions and applies parallel dilated convolutions with different receptive fields to the input feature maps, then fuses their outputs. This expands the receptive field while limiting computation. It also avoids the convolution degradation caused by overly large dilation rates. In the feature extraction module, global average pooling is also applied to the final feature map, followed by a 1 × 1 convolution and bilinear up-sampling to restore the required spatial dimensions. This design addresses the unclear outlines of extracted buildings. A position attention module is further added to obtain multi-scale contextual information. This increases the inter-class differences between buildings and the background, reduces false and missed detections, and improves building extraction performance.

The model is validated on the Massachusetts dataset [22] and the WHU aerial image dataset [33]. The results indicate that the proposed approach extracts buildings more completely, with higher accuracy and clearer building contours.

2. Materials and Methods

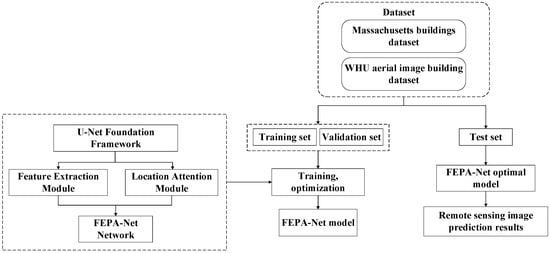

Because the classical U-Net, built on an encoder–decoder structure, is prone to losing spatial accuracy, this study centers on the improved FEPA-Net model. FEPA-Net obtains deep multi-scale features with large receptive fields through the feature extraction module. It also increases the number of convolutions in the network and adds a position attention module. Together, these changes effectively improve the segmentation precision of buildings in remote sensing images. The technical roadmap of this paper is shown in Figure 1. Two datasets are used: the Massachusetts dataset and the WHU aerial imagery dataset. The training and validation sets are input into FEPA-Net, and its parameters are adjusted through training and optimization to improve performance. Finally, the optimal model is used to evaluate accuracy on the test set and to produce predictions for the remote sensing images.

Figure 1.

Technical route of this article.

2.1. Dilated Convolution

To enlarge the receptive field, networks usually apply successive convolution and pooling, or other down-sampling operations, to obtain multi-scale contextual information. However, these operations reduce image resolution and lose detail. In this study, dilated convolution is incorporated into the U-Net network. It enlarges the receptive field of the convolution kernel without changing the number of parameters. As a result, each convolution output covers information from a broader area, and with appropriate padding the size of the output feature map remains unchanged.
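The following minimal sketch illustrates the two properties claimed above: an unchanged parameter count and an unchanged output size. It implements a one-dimensional dilated convolution in plain Python and is not the paper's implementation. The function name `dilated_conv1d`, the toy signal, and the zero "same" padding of `dilation * (k - 1) / 2` samples on each side are assumptions chosen for clarity. The same three kernel weights are reused for every dilation rate; only the spacing between the taps changes.

```python
def dilated_conv1d(signal, kernel, dilation):
    """1-D dilated convolution (cross-correlation, as in CNN layers).

    Zero "same" padding of dilation * (k - 1) // 2 samples on each side keeps
    the output length equal to the input length.
    """
    k = len(kernel)
    if k % 2 == 0:
        raise ValueError("an odd kernel size is assumed for symmetric padding")
    pad = dilation * (k - 1) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    out = []
    for i in range(len(signal)):
        # The k taps are spaced `dilation` samples apart.
        out.append(sum(w * padded[i + j * dilation] for j, w in enumerate(kernel)))
    return out


x = [float(v) for v in range(1, 10)]   # toy signal 1.0 ... 9.0
kernel = [1.0, 0.0, -1.0]              # the same 3 weights for every rate
for d in (1, 2, 3):
    y = dilated_conv1d(x, kernel, d)
    print(f"dilation={d}: len={len(y)}, output={y}")
```

In two dimensions the same reasoning applies independently along each axis.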

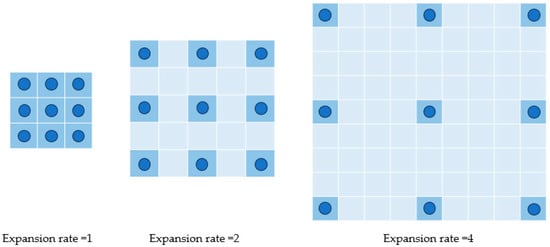

The dilation rate of a dilated convolution defines the spacing between kernel elements when the kernel processes the data, and it therefore determines the size of the receptive field, as shown in Figure 2. Feature maps obtained with different dilation rates can be fused to capture broader, image-level context and enrich the image features without increasing the number of parameters.

Figure 2.

Dilated convolution.
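Two standard formulas make the relation between dilation rate and receptive field concrete. A dilated kernel with k taps and dilation d spans k + (k − 1)(d − 1) pixels. For stacked stride-1 layers, the receptive field is 1 plus the sum of each layer's span minus one. The helper names below are illustrative. The rates 6, 12, and 18 are those used by the feature extraction module in Section 2.2, and the mixed-rate stack (1, 2, 3) is a hypothetical example.

```python
def effective_kernel_size(k, d):
    """Extent (in pixels, per axis) covered by a k-tap kernel with dilation d."""
    return k + (k - 1) * (d - 1)


def stacked_receptive_field(layers):
    """Receptive field of stacked stride-1 convolutions.

    `layers` is a sequence of (kernel_size, dilation) pairs.
    """
    rf = 1
    for k, d in layers:
        rf += effective_kernel_size(k, d) - 1
    return rf


for d in (1, 2, 6, 12, 18):
    size = effective_kernel_size(3, d)
    print(f"3x3 kernel, dilation {d}: covers {size}x{size} pixels with 9 weights")

print("three plain 3x3 layers:", stacked_receptive_field([(3, 1)] * 3))
print("3x3 layers with rates 1, 2, 3:", stacked_receptive_field([(3, 1), (3, 2), (3, 3)]))
```

Varying the rates across stacked layers, rather than repeating one large rate, is a common way to enlarge the receptive field without leaving regularly spaced pixels unsampled.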

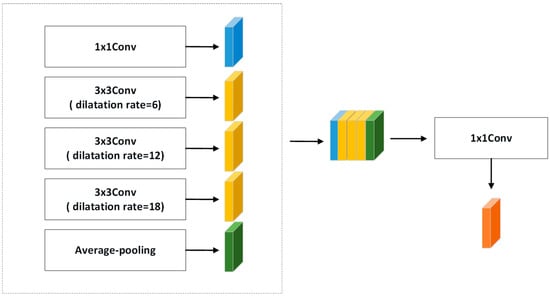

2.2. Feature Extraction Module

Stacking dilated convolutions can produce a gridding effect on the target, and large dilation rates cannot segment small objects completely. To address this issue, we use a feature extraction module that obtains multi-scale information from dilated convolutions with different dilation rates, as shown in Figure 3. The input features are first processed by a 1 × 1 convolution. They are then passed through three parallel 3 × 3 dilated convolutions with dilation rates of 6, 12, and 18, each with 512 filters. Global average pooling is also applied to derive image-level features. Finally, the feature maps are fused, and a 1 × 1 convolution sets the channel number of the new feature map to 512, which limits the model size and computational cost.

Figure 3.

Feature extraction module.

Combining dilated convolutions with different dilation rates progressively enlarges the receptive field and avoids the convolution degradation caused by an overly large dilation rate. The intermediate feature maps encode semantic information at different scales, so the final output features cover a broad range of semantic information.
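The following sketch does the bookkeeping for a module of this kind. It counts the weights of each branch and checks that padding by the dilation rate preserves the spatial size. It assumes a hypothetical 1024-channel, 32 × 32 input and an ASPP-style layout. In that layout, the 1 × 1 convolution, the three dilated convolutions (rates 6, 12, 18, 512 filters each), and the pooled branch run in parallel before the 1 × 1 fusion to 512 channels. The exact wiring in FEPA-Net may differ. Biases are counted, although a following BN layer would normally make them redundant.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Number of weights in a k x k convolution; the dilation rate does not appear."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def same_output_size(h, k, d, stride=1):
    """Output size of a k x k convolution with dilation d and padding d * (k - 1) // 2."""
    pad = d * (k - 1) // 2
    return (h + 2 * pad - d * (k - 1) - 1) // stride + 1


C_IN, C_BRANCH, C_OUT, H = 1024, 512, 512, 32  # hypothetical input size; 512 from the text

branches = {
    "1x1 conv": conv_params(1, C_IN, C_BRANCH),
    "3x3, rate 6": conv_params(3, C_IN, C_BRANCH),
    "3x3, rate 12": conv_params(3, C_IN, C_BRANCH),
    "3x3, rate 18": conv_params(3, C_IN, C_BRANCH),
    "GAP + 1x1": conv_params(1, C_IN, C_BRANCH),  # bilinear up-sampling adds no weights
}
concat_channels = C_BRANCH * len(branches)
fuse_params = conv_params(1, concat_channels, C_OUT)

for name, p in branches.items():
    print(f"{name:13s}{p:>12,d} weights")
print(f"concatenated channels: {concat_channels}, 1x1 fusion to {C_OUT}: {fuse_params:,d} weights")
for d in (6, 12, 18):
    print(f"rate {d}: {H}x{H} input -> {same_output_size(H, 3, d)}x{same_output_size(H, 3, d)} output")
```

Note that with rate 18 the kernel spans 37 pixels, which exceeds a 32 × 32 map. Most taps then fall on padding, and the filter behaves almost like a 1 × 1 convolution. This is one reason modules of this type add an image-level pooled branch.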

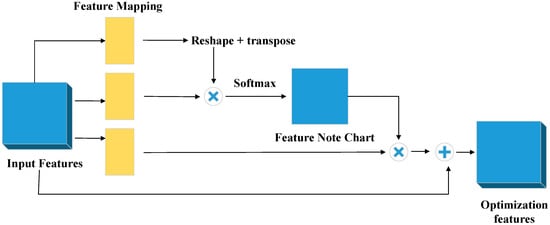

2.3. Position Attention Module

In this study, the position attention module [34] is introduced to establish rich contextual relationships among local features. The module exploits the correlation between any two positions, so that the features at those positions enhance each other's representations. In effect, it encodes wider contextual information into local features and strengthens their representational capability. The working principle of the position attention module is shown in Figure 4.

Figure 4.

Position attention module.
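The computation in Figure 4 follows the position attention module of the dual attention network [34]. First, 1 × 1 convolutions produce features B, C, and D from the input A. A softmax over the products of B and C then gives a spatial attention map S. The output is E_j = α Σ_i s_ji D_i + A_j, where α is a learnable scale initialized to 0 in the original module. The plain-Python sketch below reproduces that arithmetic on a toy map with 2 channels and 3 positions. The weight matrices stand in for the 1 × 1 convolutions and hold arbitrary illustrative values rather than trained weights. A real implementation would use batched tensor operations.

```python
import math


def matmul(X, Y):
    """Matrix product of two nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def softmax(values):
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def column(M, i):
    return [row[i] for row in M]


def position_attention(A, Wb, Wc, Wd, alpha):
    """A is a C x N feature map (N = H * W flattened positions).

    Wb and Wc (C' x C) and Wd (C x C) play the role of the 1 x 1 convolutions.
    Returns the output E (C x N) and the attention map S (N x N).
    """
    B, Cq, D = matmul(Wb, A), matmul(Wc, A), matmul(Wd, A)
    n = len(A[0])
    # S[j][i] = exp(B_i . C_j) / sum_i exp(B_i . C_j): influence of position i on j
    S = [softmax([sum(b * c for b, c in zip(column(B, i), column(Cq, j)))
                  for i in range(n)])
         for j in range(n)]
    # E_j = alpha * sum_i S[j][i] * D_i + A_j  (residual connection)
    E = [[alpha * sum(S[j][i] * D[ch][i] for i in range(n)) + A[ch][j]
          for j in range(n)]
         for ch in range(len(A))]
    return E, S


A = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 1.0]]          # C = 2 channels, N = 3 positions
Wb = [[1.0, 0.5]]              # C' = 1 (illustrative values)
Wc = [[0.5, 1.0]]
Wd = [[1.0, 0.0], [0.0, 1.0]]  # identity value projection, so D == A
E, S = position_attention(A, Wb, Wc, Wd, alpha=0.5)
print("attention rows:", [[round(s, 3) for s in row] for row in S])
print("output:", [[round(e, 3) for e in row] for row in E])
```

Each row of S sums to 1, so every output position receives a weighted average of value features from all positions. With α = 0 the module reduces to the identity, so it can be inserted into a network without disturbing it at initialization.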

2.4. Loss Function

The loss function quantifies the divergence between the model's predictions and the ground truth, which makes it central to training a convolutional neural network. It is formulated as a function of the segmentation error. This error is backpropagated through the preceding layers of the network to update and optimize the weights, which is how the model learns. The cross-entropy loss (CEL) is widely used to assess classification and segmentation models. It measures the difference between the true probability distribution of the data and the distribution predicted by the model. Segmentation is treated as a per-pixel classification problem, and each pixel is evaluated from the output of the softmax layer; the lower the cross-entropy, the better the prediction. For binary classification, the cross-entropy loss reduces to the binary cross-entropy (BCE) loss:

$$L_{BCE} = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right]$$

where $N$ is the number of samples (pixels), $y_i$ is the true label of the $i$-th sample, and $p_i$ is the predicted probability that the $i$-th sample belongs to the positive class.

The Dice coefficient (DC) was previously used mainly as a metric for assessing segmentation quality. More recently, it has also performed well as a loss function for training. DC measures the overlap between the labeled image and the predicted image on the range [0, 1], where a value of 1 indicates complete overlap. It can be defined as follows:

$$DC = \frac{2\sum_{i=1}^{N} y_i p_i}{\sum_{i=1}^{N} y_i + \sum_{i=1}^{N} p_i}$$

In this paper, the network is trained with a combination of the cross-entropy loss and DC, which helps mitigate the sample imbalance problem and prevent overfitting.
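The combined objective can be sketched as follows. The sketch assumes that the soft Dice coefficient is turned into a loss as 1 − DC and that the two terms are summed with equal weights; the text does not state the weighting. The clipping constant `eps` and the smoothing term `smooth` are common conventions, added here as assumptions. Inputs are flattened per-pixel labels and predicted foreground probabilities.

```python
import math


def bce_loss(y_true, y_prob, eps=1e-7):
    """Binary cross-entropy averaged over pixels."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)


def dice_coefficient(y_true, y_prob, smooth=1.0):
    """Soft Dice coefficient; `smooth` avoids 0/0 on empty masks."""
    intersection = sum(y * p for y, p in zip(y_true, y_prob))
    return (2.0 * intersection + smooth) / (sum(y_true) + sum(y_prob) + smooth)


def combined_loss(y_true, y_prob, w_bce=1.0, w_dice=1.0):
    """Hypothetical weighted sum of BCE and Dice loss (1 - DC)."""
    return w_bce * bce_loss(y_true, y_prob) + w_dice * (1.0 - dice_coefficient(y_true, y_prob))


labels = [1, 1, 0, 0, 0, 0, 0, 0]                  # a small, imbalanced foreground
good = [0.9, 0.8, 0.1, 0.1, 0.0, 0.1, 0.0, 0.1]
missed = [0.1, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]  # background right, buildings missed
print("good prediction:  ", round(combined_loss(labels, good), 4))
print("missed buildings: ", round(combined_loss(labels, missed), 4))
```

The weights `w_bce` and `w_dice` are exposed as parameters because the balance between the two terms is a tuning choice that the text leaves open.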

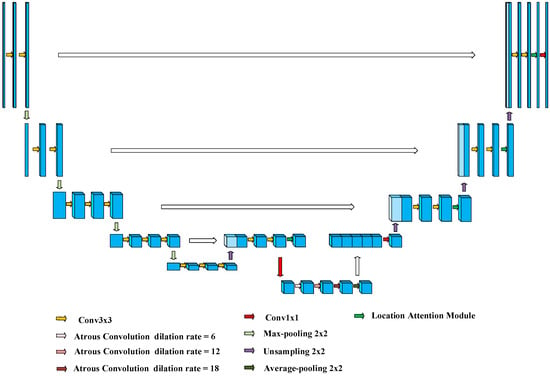

2.5. FEPA-Net Network Model

The network structure in this study follows the encoder–decoder structure of the classical U-Net. The encoder on the left serves as the backbone feature extraction network. It consists of convolutional and max-pooling layers and captures low-level spatial features. The decoder on the right serves as the enhanced feature extraction network. It consists of up-sampling and convolutional layers, which restore the spatial detail of the feature maps lost during down-sampling. Features from different levels are stacked (concatenated) to give a more comprehensive feature representation, and the final feature map has the same height and width as the input image.

The structure of FEPA-Net is shown in Figure 5; like U-Net, it adopts a symmetric encoder–decoder structure. The left half of the network is an ordinary convolutional network with five groups of convolutions and four max-pooling layers. The first two groups each contain two 3 × 3 convolutions, and the last three groups each contain three 3 × 3 convolutions. Each convolution is followed by a ReLU activation. The numbers of channels in the five groups are 64, 128, 256, 512, and 1024. The pooling layers reduce the size of the feature maps, which lowers the model's computational burden. The right half of the network up-samples the deep abstract features and down-samples the shallow local features so that the two can be fused. Here, the feature extraction module replaces ordinary convolution to acquire multi-scale feature information. In addition, a position attention module fuses feature maps from different levels to implement the skip connections. Contextual information is thus drawn from feature maps at multiple levels, which improves the network's feature representation. To avoid exploding and vanishing gradients, a batch normalization (BN) layer and a ReLU activation are added after the convolutional layers of the feature extraction module. Finally, dropout regularization is applied after the 1 × 1 convolutional compression to prevent overfitting.

Figure 5.

FEPA-Net network model.
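To make the encoder description concrete, the sketch below passes a 512 × 512 RGB tile (the crop size used in Section 2.6) through the five convolution groups and four max-pooling layers and counts the convolution weights. It assumes "same" padding, 2 × 2 pooling with stride 2, and a bias on every convolution; the text does not state these details. The per-group convolution counts (2, 2, 3, 3, 3) match VGG16, with the fifth group widened to 1024 channels.

```python
# (number of 3x3 convolutions, output channels) for the five encoder groups
ENCODER_GROUPS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 1024)]


def encoder_walkthrough(height, width, in_channels=3):
    """Return the (H, W, C) output of each group and the total conv weight count."""
    shapes, params, c = [], 0, in_channels
    for group, (n_convs, out_c) in enumerate(ENCODER_GROUPS):
        for _ in range(n_convs):
            params += 3 * 3 * c * out_c + out_c  # 'same' padding keeps H and W
            c = out_c
        shapes.append((height, width, c))
        if group < len(ENCODER_GROUPS) - 1:      # four 2x2 max-pooling layers
            height, width = height // 2, width // 2
    return shapes, params


shapes, params = encoder_walkthrough(512, 512)
for i, shape in enumerate(shapes, start=1):
    print(f"group {i}: {shape[0]}x{shape[1]}x{shape[2]}")
print(f"encoder convolution weights: {params:,d}")
```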

2.6. Experimental Dataset

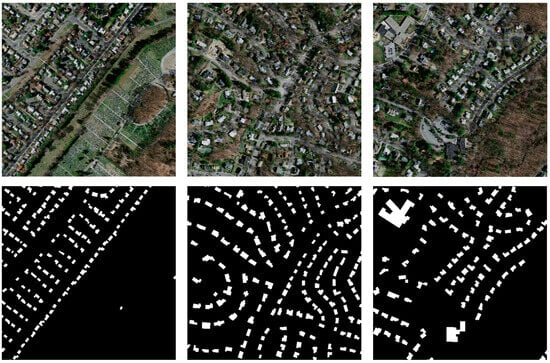

The Massachusetts dataset [22] (https://www.cs.toronto.edu/~vmnih/data, accessed on 15 October 2023) was released by Mnih of the University of Toronto in 2013. It consists of 151 remote sensing images, each 1500 × 1500 pixels with a spatial resolution of 1 m. The ground truth was obtained by combining high-resolution aerial imagery with professional geospatial analysis software. The dataset is divided into 137 training images, 4 validation images, and 10 test images. In this study, the images are cropped to 512 × 512 pixels. Because the dataset is small, the cropped images are rotated and flipped horizontally and vertically, which expands the dataset to four times its original size. An example image from the Massachusetts dataset is shown in Figure 6.

Figure 6.

Example of the Massachusetts dataset.
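Because 1500 is not a multiple of 512, the text leaves open how the tiles are placed. One common option, assumed here purely for illustration, is to step by 512 pixels and shift the last tile back so that it ends at the image border. This gives 3 × 3 overlapping tiles per image. The augmentation helpers assume a 90° clockwise rotation, since the text does not specify the rotation angle. Images are plain nested lists to keep the sketch dependency-free, and all function names are hypothetical.

```python
def tile_offsets(size, tile):
    """Top-left offsets of tiles covering [0, size); the last tile is shifted
    back to end at the border, so it may overlap its neighbour."""
    if size <= tile:
        return [0]
    offsets = list(range(0, size - tile, tile))
    offsets.append(size - tile)
    return offsets


def crop(img, top, left, tile):
    return [row[left:left + tile] for row in img[top:top + tile]]


def rotate90(img):
    """Rotate 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def hflip(img):
    return [row[::-1] for row in img]


def vflip(img):
    return [list(row) for row in img[::-1]]


def augment(tile_img):
    """Original plus three transformed copies: four samples per tile."""
    return [tile_img, rotate90(tile_img), hflip(tile_img), vflip(tile_img)]


offsets = tile_offsets(1500, 512)
print("offsets along each axis:", offsets)
print("tiles per 1500x1500 image:", len(offsets) ** 2)

toy = [[r * 5 + c for c in range(5)] for r in range(5)]   # 5x5 stand-in image
tiles = [crop(toy, t, l, 2) for t in tile_offsets(5, 2) for l in tile_offsets(5, 2)]
samples = [s for t in tiles for s in augment(t)]
print(len(tiles), "tiles ->", len(samples), "training samples")
```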

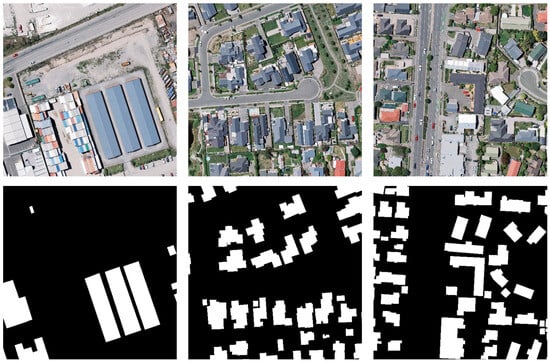

The WHU building dataset (https://gpcv.whu.edu.cn/data/building_dataset.html, accessed on 15 October 2023) from Wuhan University [33] includes an aerial dataset and a satellite dataset. This study uses the aerial image dataset, which covers about 450 km² in New Zealand with a spatial resolution of 0.3 m. The ground truth data were created using advanced photogrammetric techniques and multi-spectral remote sensing data processing. The images are 512 × 512 pixels and comprise 4736 training, 1036 validation, and 2416 test images. An example image from the WHU aerial imagery dataset is shown in Figure 7.

Figure 7.

Example of the WHU aerial imagery dataset.

2.7. Experimental Conditions and Configuration

The experiments were run on Ubuntu 20.04 with an NVIDIA GeForce RTX 3080 Ti GPU (NVIDIA, Santa Clara, CA, USA) with 12 GB of memory; the CUDA driver and runtime version is 10.0. The experiments were implemented in Python 3.10. The main parameters of the network model are set before training, and the Adam optimizer is used to update the network parameters. Training runs for 100 epochs and uses freeze training, which speeds up training and prevents the weights from being destroyed early in training. The initial learning rate is 0.0001 for the first 50 epochs and 1 × 10−5 for the remaining 50. Within each phase, the learning rate is adjusted automatically by step decay. The batch size is set to 8 and 4 for the two phases, respectively.
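The two-phase schedule can be written as a small configuration function. Two details are our interpretation of the text: that "freeze training" keeps the encoder (backbone) weights fixed during the first 50 epochs, and that the batch sizes 8 and 4 belong to the first and second phases. The step-decay rule within each phase is not specified and is therefore omitted. `PhaseConfig` and `phase_for_epoch` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhaseConfig:
    learning_rate: float
    batch_size: int
    backbone_frozen: bool  # assumption: encoder weights fixed in phase 1


TOTAL_EPOCHS = 100
FREEZE_EPOCHS = 50


def phase_for_epoch(epoch):
    """Configuration for a 0-based epoch index."""
    if not 0 <= epoch < TOTAL_EPOCHS:
        raise ValueError(f"epoch must be in [0, {TOTAL_EPOCHS})")
    if epoch < FREEZE_EPOCHS:
        return PhaseConfig(learning_rate=1e-4, batch_size=8, backbone_frozen=True)
    return PhaseConfig(learning_rate=1e-5, batch_size=4, backbone_frozen=False)


for epoch in (0, 49, 50, 99):
    print(epoch, phase_for_epoch(epoch))
```

Memory limits often explain the smaller batch in the unfrozen phase. Once the encoder is trainable, its gradients and optimizer state also occupy GPU memory.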

2.8. Evaluation Index

In this paper, the model is evaluated with the confusion matrix approach, using the following semantic segmentation metrics: overall accuracy (OA), precision, recall, and mean intersection over union (MIoU):

$$OA = \frac{TP + TN}{TP + TN + FP + FN}$$

$$Precision = \frac{TP}{TP + FP}$$

$$Recall = \frac{TP}{TP + FN}$$

$$MIoU = \frac{1}{2}\left(\frac{TP}{TP + FP + FN} + \frac{TN}{TN + FN + FP}\right)$$

where TP (true positive) denotes samples that are positive and that the model classifies as positive; FN (false negative) denotes samples that are positive but that the model classifies as negative; FP (false positive) denotes samples that are negative but that the model classifies as positive; and TN (true negative) denotes samples that are negative and that the model correctly classifies as negative.
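The four indexes can be computed directly from the confusion-matrix counts. The sketch below assumes binary masks (1 = building, 0 = background) and, for MIoU, averages the IoU of the two classes. The counts in the example are invented for illustration and are not results from this study.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, FP, FN, TN) for flattened binary masks (1 = building)."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 1 and p == 0:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def segmentation_metrics(tp, fp, fn, tn):
    iou_building = tp / (tp + fp + fn)
    iou_background = tn / (tn + fn + fp)  # background's own TP is TN
    return {
        "OA": (tp + tn) / (tp + fp + fn + tn),
        "Precision": tp / (tp + fp),
        "Recall": tp / (tp + fn),
        "MIoU": (iou_building + iou_background) / 2,
    }


counts = confusion_counts([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
print("TP, FP, FN, TN =", counts)
for name, value in segmentation_metrics(50, 10, 10, 30).items():
    print(f"{name}: {value:.4f}")
```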

3. Results

3.1. Experimental Results and Analysis

To assess the performance of the proposed FEPA-Net model, it is compared with U-Net, DeepLabV3+, and PSPNet on the test sets of the Massachusetts dataset and the WHU aerial image dataset. All models in the experiments use the same operating environment and the same network optimization parameters.

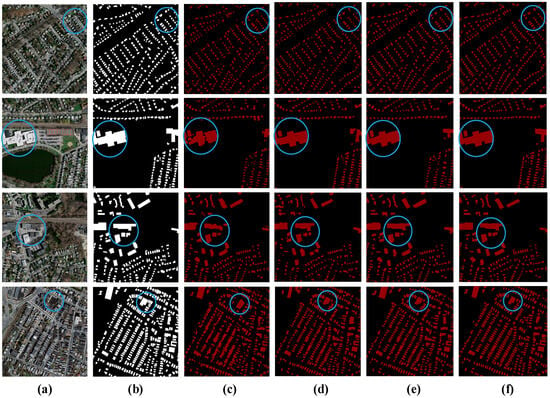

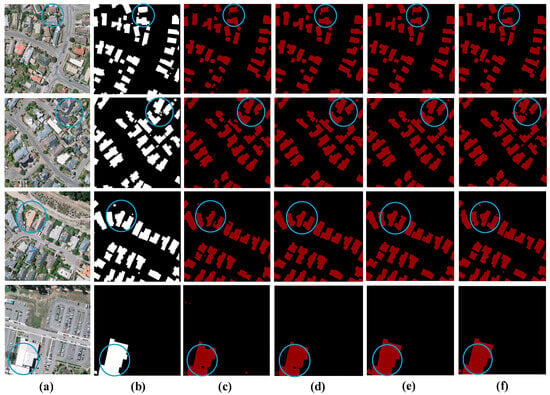

The Massachusetts dataset is used to verify the validity of the model over large, complex regions. Its many morphologically complex buildings provide a demanding test of the model. The test results are presented in Figure 8. FEPA-Net accurately extracts buildings at different scales by using contextual information to discriminate features, and it preserves building edges well. In particular, FEPA-Net precisely recognizes adjacent small buildings and delineates their edges more clearly. When large buildings are present, those extracted by PSPNet and DeepLabV3+ are incomplete, with internal voids and blurred edges. U-Net alleviates these problems to some extent but still cannot extract large buildings accurately. In comparison, FEPA-Net shows the best extraction performance: it preserves building edges and alleviates missed extractions.

Figure 8.

Comparison results of different models on the Massachusetts building dataset. (a) Original image. (b) Ground truth. (c) PSPNet. (d) DeepLabV3+. (e) U-Net. (f) FEPA-Net.

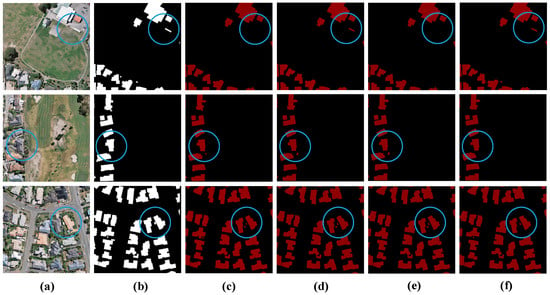

Buildings in the WHU aerial imagery dataset have relatively regular shapes. To further verify the validity of FEPA-Net, Figure 9 compares the building extraction results of PSPNet, DeepLabV3+, U-Net, and FEPA-Net on the WHU dataset. Overall, FEPA-Net extracts buildings better than the other methods. U-Net and FEPA-Net extract more complete buildings than PSPNet and DeepLabV3+. In particular, FEPA-Net extracts building edges precisely and reduces misclassification and omission of small buildings. It also separates adjacent buildings finely and reduces internal voids in the extracted buildings. For large buildings, every method extracts the main structure, but FEPA-Net extracts the building edges more clearly than the other methods.

Figure 9.

Comparison results of different models on the WHU aerial imagery dataset. (a) Original image. (b) Ground truth. (c) PSPNet. (d) DeepLabV3+. (e) U-Net. (f) FEPA-Net.

Table 1 compares the accuracy of the different models on the two building datasets. PSPNet has the lowest extraction accuracy, and FEPA-Net achieves the best values on all four accuracy indexes. On the Massachusetts dataset, the overall accuracy and MIoU of FEPA-Net reach 94.25% and 81.47%, respectively, which are 1.29% and 1.41% higher than those of U-Net. On the WHU building dataset, FEPA-Net again attains the highest accuracy of all methods. Its overall accuracy and MIoU reach 98.93% and 94.83%, respectively, which are 1.6% and 1.43% higher than those of U-Net.

Table 1.

Comparison of the accuracy of different models on the two datasets.

3.2. Ablation Experiments

To further examine the validity of FEPA-Net and verify the influence of each module on model performance, ablation experiments with accuracy analysis were conducted on four models: (1) U-Net; (2) U-Net + Feature Extraction Module; (3) U-Net + Position Attention; and (4) U-Net + Feature Extraction–Position Attention. Each model was trained for 100 epochs on the WHU building dataset under identical hardware, software, and model parameters; the results are presented in Table 2. Compared with U-Net, adding the feature extraction module improves the precision of building boundary extraction and increases the recall by 1.08%. Adding the position attention module notably reduces misclassification during building extraction and increases the recall by 0.95%. In terms of MIoU, the full model improves by 1.43%, 1.07%, and 0.79% over the U-Net baseline, the model with only the feature extraction module, and the model with only the position attention module, respectively. These results indicate that both the feature extraction module and the position attention module improve the extraction results. The U-Net + Feature Extraction–Position Attention (FEPA-Net) model achieves the highest accuracy.

Table 2.

Results of ablation experiments.

Figure 10 shows that the buildings extracted by U-Net contain missed and false detections. In contrast, the U-Net + Feature Extraction–Position Attention method extracts all of the buildings in the image and gives the best building extraction among the compared methods.

Figure 10.

Comparison results of different modules on the WHU building dataset. (a) Original image. (b) Ground truth. (c) U-Net. (d) U-Net + Feature Extraction Module. (e) U-Net + Position Attention. (f) U-Net + Feature Extraction–Position Attention.

4. Discussion

4.1. Sensitivity Analysis

The experimental results indicate that integrating the feature extraction and position attention modules allows the proposed method to achieve superior performance on different datasets. Its effectiveness stems mainly from feature selection in both the spatial and channel dimensions and from label refinement through learning higher-order structural features. For building edge details, FEPA-Net captures features from local to global scales, which lets it model multi-scale spatial correlations among pixels more effectively. In particular, the spatial and channel attention mechanisms enhance useful features. They enable the model to segment buildings with high precision across different terrains while filtering out background information. Moreover, the model is readily extensible; for example, its performance could be further improved by strengthening the encoder structure.

The ablation experiments in this paper are mainly intended to assess the influence of each module on model performance. However, they also provide some basis for analyzing the influence of the factors discussed above. A comparison of U-Net with U-Net + Feature Extraction Module shows that adding the feature extraction module raises the recall of the model on complex features. This suggests that the feature extraction module identifies the multi-scale features of buildings more effectively. It also mitigates, to some degree, interference from factors such as lighting, color, image resolution, and texture.

4.2. Computational Footprint

Because FEPA-Net adopts dilated convolution, a feature extraction module, and a position attention module, it requires more computing resources than some basic neural network models. In experiments on an NVIDIA GeForce RTX 3080 Ti GPU, training and inference complete successfully, but memory usage and computational load are higher than those of the plain U-Net model. There are three reasons. First, dilated convolution expands the receptive field at the cost of more complex convolution operations. Second, the feature extraction module gathers information through several parallel multi-scale dilated convolutions, which further increases computation. Third, the position attention module computes the correlation between every pair of spatial positions, so its cost grows quadratically with the number of positions.
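The quadratic cost of the position attention module is easy to quantify. An H × W feature map has N = H·W positions, so the attention map has N² entries. The sketch below estimates the float32 memory of that single matrix per image. It ignores all other activations and gradients. The text does not specify which decoder levels carry the module in FEPA-Net, so the resolutions are illustrative.

```python
def attention_map_bytes(height, width, batch_size=1, bytes_per_value=4):
    """Memory for the N x N position-attention map, N = height * width."""
    n = height * width
    return batch_size * n * n * bytes_per_value


for side in (16, 32, 64, 128):
    mib = attention_map_bytes(side, side) / 2**20
    print(f"{side:>3d}x{side:<3d} map: N = {side * side:>5d}, attention map = {mib:8.2f} MiB per image")
print(f"128x128 map, batch of 8: {attention_map_bytes(128, 128, batch_size=8) / 2**30:.0f} GiB")
```

Doubling the side length of the feature map multiplies this cost by 16, which is why attention of this kind is typically applied to reduced-resolution feature maps.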

4.3. Application of the Actual Results

In the domain of urban planning, precise building extraction outcomes can offer essential foundations for urban zoning and land use planning. By accurately grasping the distribution information of buildings, planners can rationally optimize the urban spatial structure. Regarding disaster management, by contrasting the building extraction results prior to and following a disaster, the damage state of buildings can be swiftly assessed. This assessment offers support for the reasonable distribution of rescue resources and expedites the post-disaster reconstruction process. In the context of urban digitization and the updating of geographical databases, accurate building information serves as the basis for creating high-precision digital city models. Whether the building data in the geographical database are accurate directly affects the accuracy and practicality of map navigation, urban information management systems, and the like.

4.4. Limitations of the Study

Although the FEPA-Net model achieves favorable results in building segmentation, some aspects still require improvement. In practice, buildings are affected by factors such as occlusion by vegetation and shadows, which makes it difficult to extract the features of some buildings precisely. Future work should further optimize the model structure so that it handles these complex scenarios more effectively and accurately recognizes buildings that are occluded or lie in shadow. In addition, this study relies on a large amount of labeled sample data; in future work, self-supervised signals generated from unlabeled data could be used to improve the segmentation network. The application of FEPA-Net to other tasks, such as road extraction and water body extraction, should also be explored.

5. Conclusions

Applying the FEPA-Net model to the precise extraction of buildings from high-resolution remote sensing images leads to the following conclusions:

(1) The FEPA-Net model increases the number of convolutional operations in the feature extraction backbone, applies dilated convolutions with different dilation rates to the input feature maps, and integrates a feature extraction module to capture global and comprehensive information from high-resolution images. This expands the receptive field and helps prevent holes in the extracted buildings.

(2) The FEPA-Net model incorporates the position attention module to capture richer contextual information and thereby reduce the loss of building detail features. This improves both the generalization ability of the network and the completeness of the extracted buildings.

(3) To validate the effectiveness of the FEPA-Net model, it was trained and optimized on the Massachusetts dataset and the WHU aerial image dataset, respectively, and its building extraction results were compared with those of PSPNet, DeepLabv3+, U-Net, and other networks. Both qualitative and quantitative analyses show that the FEPA-Net model achieves better results than the other methods.
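The receptive-field claim in conclusion (1) can be checked with standard arithmetic. A k × k kernel with dilation rate d spans k + (k - 1)(d - 1) pixels per side while keeping only k × k weights, and each stride-1 layer enlarges the receptive field by that span minus one. The minimal sketch below assumes stride-1 3 × 3 layers; the function names and dilation sequences are hypothetical illustrations, not the rates used in FEPA-Net. It also lists which input offsets actually reach one output pixel, because repeating a single rate leaves regular gaps (often called the gridding effect), whereas mixing rates can cover the field densely.

```python
from itertools import product


def effective_kernel(k: int, d: int) -> int:
    """Side length spanned by a k x k kernel with dilation rate d (weights stay k x k)."""
    return k + (k - 1) * (d - 1)


def receptive_field(k: int, dilations: list[int]) -> int:
    """Receptive field (pixels per side) of stacked stride-1 k x k convolutions."""
    rf = 1
    for d in dilations:
        rf += effective_kernel(k, d) - 1
    return rf


def covered_offsets(k: int, dilations: list[int]) -> list[int]:
    """1-D input offsets that contribute to a single output pixel after the stack."""
    half = k // 2
    taps = [[d * t for t in range(-half, half + 1)] for d in dilations]
    return sorted({sum(combo) for combo in product(*taps)})


print(effective_kernel(3, 2))                 # 5
print(receptive_field(3, [1, 1, 1]))          # 7: three ordinary 3x3 convolutions
print(receptive_field(3, [1, 2, 4]))          # 15: same weights, larger field
print(receptive_field(3, [2, 2, 2]), len(covered_offsets(3, [2, 2, 2])))  # 13 7: gaps
print(len(covered_offsets(3, [1, 2, 4])))     # 15: every offset covered
```

With rates [1, 2, 4], three layers reach the same 15-pixel field that would otherwise require seven ordinary 3 × 3 layers, without adding weights.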
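The position attention module named in conclusion (2) can be illustrated with the formulation of the dual attention network (DANet) of Fu et al., listed in the references. The NumPy sketch below is an illustration under assumptions, not the FEPA-Net implementation: it assumes the DANet form, with query and key projections reduced to C/8 channels, a value projection, a softmax over all spatial positions, and a residual connection scaled by a learnable gamma. The weights are random placeholders and biases are omitted. The (N × N) attention matrix, with N = H × W, is what lets every pixel draw on context from the whole feature map, and it is also the main source of the memory cost discussed in Section 4.2.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max to avoid overflow in exp
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def position_attention(a: np.ndarray, w_q: np.ndarray, w_k: np.ndarray,
                       w_v: np.ndarray, gamma: float) -> np.ndarray:
    """DANet-style position attention on one feature map `a` of shape (C, H, W).

    w_q, w_k: (C_r, C) weights of the 1x1 query/key convolutions (C_r = C // 8 in DANet)
    w_v:      (C, C) weights of the 1x1 value convolution
    gamma:    learnable scale of the attention branch (initialised to 0 in DANet)
    """
    c, h, w = a.shape
    x = a.reshape(c, h * w)          # (C, N): one column per spatial position, N = H*W
    q = w_q @ x                      # (C_r, N) queries
    k = w_k @ x                      # (C_r, N) keys
    v = w_v @ x                      # (C, N) values
    energy = q.T @ k                 # (N, N): similarity of every pair of positions
    attn = softmax(energy, axis=-1)  # row i: how much position i attends to each position j
    out = v @ attn.T                 # (C, N): position i = weighted sum of all values
    return (gamma * out + x).reshape(c, h, w)  # residual connection keeps the input


rng = np.random.default_rng(0)
C, H, W = 16, 8, 8                               # hypothetical feature-map size
a = rng.standard_normal((C, H, W))
w_q = 0.1 * rng.standard_normal((C // 8, C))     # random placeholder weights
w_k = 0.1 * rng.standard_normal((C // 8, C))
w_v = 0.1 * rng.standard_normal((C, C))
e = position_attention(a, w_q, w_k, w_v, gamma=0.5)
print(e.shape)  # (16, 8, 8)
```

Because gamma starts at 0 in DANet, the module initially passes its input through unchanged and learns during training how much aggregated context to add.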

Although the proposed method extracts building information effectively, its prediction accuracy can still be improved. Future work could incorporate other algorithms into the model structure to broaden the applicability of the building extraction method and to further improve extraction performance.

Author Contributions

Y.L., W.Z. and Y.D. conceived the project, formulated the key conceptual notions, and produced the proof framework. Y.L. carried out the neural network training and wrote the initial draft. Y.L. and Y.D. conducted an analysis of the background literature. W.Z., X.Z. and C.W. took charge of timely communication and coordination with all co-authors. W.Z., X.Z. and C.W. made critical revisions to the article. Y.L., Y.D., X.Z., W.Z. and C.W. participated in the preparation of figure items at different stages. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Education Bureau of Liaoning Province, China (LJKMZ20220638), and the National Natural Science Foundation of China (42471371).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data presented in the study are openly available at https://www.cs.toronto.edu/~vmnih/data (Massachusetts dataset; accessed on 15 October 2023) and https://gpcv.whu.edu.cn/data/building_dataset.html (WHU dataset; accessed on 15 October 2023).

Acknowledgments

We deeply appreciate everyone who helped us during the research and writing of this paper, and we sincerely thank those who devoted considerable time to reading it and provided many valuable suggestions that will benefit our future studies.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chen, Q.; Wang, L.; Waslander, S.L.; Liu, X. An end-to-end shape modeling framework for vectorized building outline generation from aerial images. ISPRS J. Photogramm. Remote Sens. 2020, 170, 114–126.

- Janalipour, M.; Mohammadzadeh, A. Evaluation of effectiveness of three fuzzy systems and three texture extraction methods for building damage detection from post-event LiDAR data. Int. J. Digit. Earth 2018, 11, 1241–1268.

- Wang, H.; Miao, F. Building extraction from remote sensing images using deep residual U-Net. Eur. J. Remote Sens. 2022, 55, 71–85.

- Xiao, X.; Guo, W.; Chen, R.; Hui, Y.; Wang, J.; Zhao, H. A swin transformer-based encoding booster integrated in u-shaped network for building extraction. Remote Sens. 2022, 14, 2611.

- Mitomi, H.; Yamazaki, F.; Matsuoka, M. Development of automated extraction method for building damage area based on maximum likelihood classifier. In Proceedings of the 8th International Conference on Structural Safety and Reliability, Newport Beach, CA, USA, 17–22 June 2001; p. 8.

- Ha, N.T.; Manley-Harris, M.; Pham, T.D.; Hawes, I. A comparative assessment of ensemble-based machine learning and maximum likelihood methods for mapping seagrass using sentinel-2 imagery in Tauranga Harbor, New Zealand. Remote Sens. 2020, 12, 355.

- Turker, M.; Koc-San, D. Building extraction from high-resolution optical spaceborne images using the integration of support vector machine (SVM) classification, Hough transformation and perceptual grouping. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 58–69.

- Avudaiammal, R.; Elaveni, P.; Selvan, S.; Rajangam, V. Extraction of buildings in urban area for surface area assessment from satellite imagery based on morphological building index using SVM classifier. J. Indian Soc. Remote Sens. 2020, 48, 1325–1344.

- Aslani, M.; Seipel, S. A fast instance selection method for support vector machines in building extraction. Appl. Soft Comput. 2020, 97, 106716.

- Bachofer, F.; Hochschild, V. A SVM-based approach to extract building footprints from Pléiades satellite imagery. Geotech Rwanda 2015, 2015, 1–4.

- Song, Y.Y.; Lu, Y. Decision tree methods: Applications for classification and prediction. Shanghai Arch. Psychiatry 2015, 27, 130.

- Liu, Z.J.; Wang, J.; Liu, W.P. Building extraction from high resolution imagery based on multi-scale object oriented classification and probabilistic Hough transform. In Proceedings of the 2005 IEEE International Geoscience and Remote Sensing Symposium, IGARSS’05, Seoul, Republic of Korea, 25–29 July 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 4, pp. 2250–2253.

- Du, S.; Zhang, F.; Zhang, X. Semantic classification of urban buildings combining VHR image and GIS data: An improved random forest approach. ISPRS J. Photogramm. Remote Sens. 2015, 105, 107–119.

- Parsian, S.; Amani, M. Building extraction from fused LiDAR and hyperspectral data using Random Forest Algorithm. Geomatica 2017, 71, 185–193.

- Li, X.; Chen, W.; Zhang, Q.; Wu, L. Building auto-encoder intrusion detection system based on random forest feature selection. Comput. Secur. 2020, 95, 101851.

- Thottolil, R.; Kumar, U. Automatic Building Footprint Extraction using Random Forest Algorithm from High Resolution Google Earth Images: A Feature-Based Approach. In Proceedings of the 2022 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 8–10 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

- Masaharu, H.; Hasegawa, H. Three-dimensional city modeling from laser scanner data by extracting building polygons using region segmentation method. Int. Arch. Photogramm. Remote Sens. 2000, 33 Pt 3, 556–562.

- Gaetano, R.; Scarpa, G.; Poggi, G. Hierarchical texture-based segmentation of multiresolution remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2009, 47, 2129–2141.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 770–778.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-ResNet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence (AAAI’17), San Francisco, CA, USA, 4–9 February 2017; pp. 4278–4284.

- Mnih, V. Machine Learning for Aerial Image Labeling. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 2013.

- Shelhamer, E.; Long, J.; Darrell, T. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Li, L.; Liang, J.; Weng, M.; Zhu, H. A multiple-feature reuse network to extract buildings from remote sensing imagery. Remote Sens. 2018, 10, 1350.

- Lei, J.; Liu, X.; Yang, H.; Zeng, Z.; Feng, J. Dual Hybrid Attention Mechanism-Based U-Net for Building Segmentation in Remote Sensing Images. Appl. Sci. 2024, 14, 1293.

- Qiu, W.; Gu, L.; Gao, F.; Jiang, T. Building extraction from very high-resolution remote sensing images using refine-UNet. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- Ye, Z.; Fu, Y.; Gan, M.; Deng, J.; Comber, A.; Wang, K. Building extraction from very high resolution aerial imagery using joint attention deep neural network. Remote Sens. 2019, 11, 2970.

- Guo, M.; Liu, H.; Xu, Y.; Huang, Y. Building extraction based on U-Net with an attention block and multiple losses. Remote Sens. 2020, 12, 1400.

- Liu, Y.; Zhou, J.; Qi, W.; Li, X.; Gross, L.; Shao, Q.; Zhao, Z.; Ni, L.; Fan, X.; Li, Z. ARC-net: An efficient network for building extraction from high-resolution aerial images. IEEE Access 2020, 8, 154997–155010.

- Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multi-Source Building Extraction from An Open Aerial and Satellite Imagery Dataset. IEEE Trans. Geosci. Remote Sens. 2018, 57, 574–586.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).