A Comparative Study of Convolutional Neural Network and Recurrent Neural Network Models for the Analysis of Cardiac Arrest Rhythms During Cardiopulmonary Resuscitation

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection and Processing

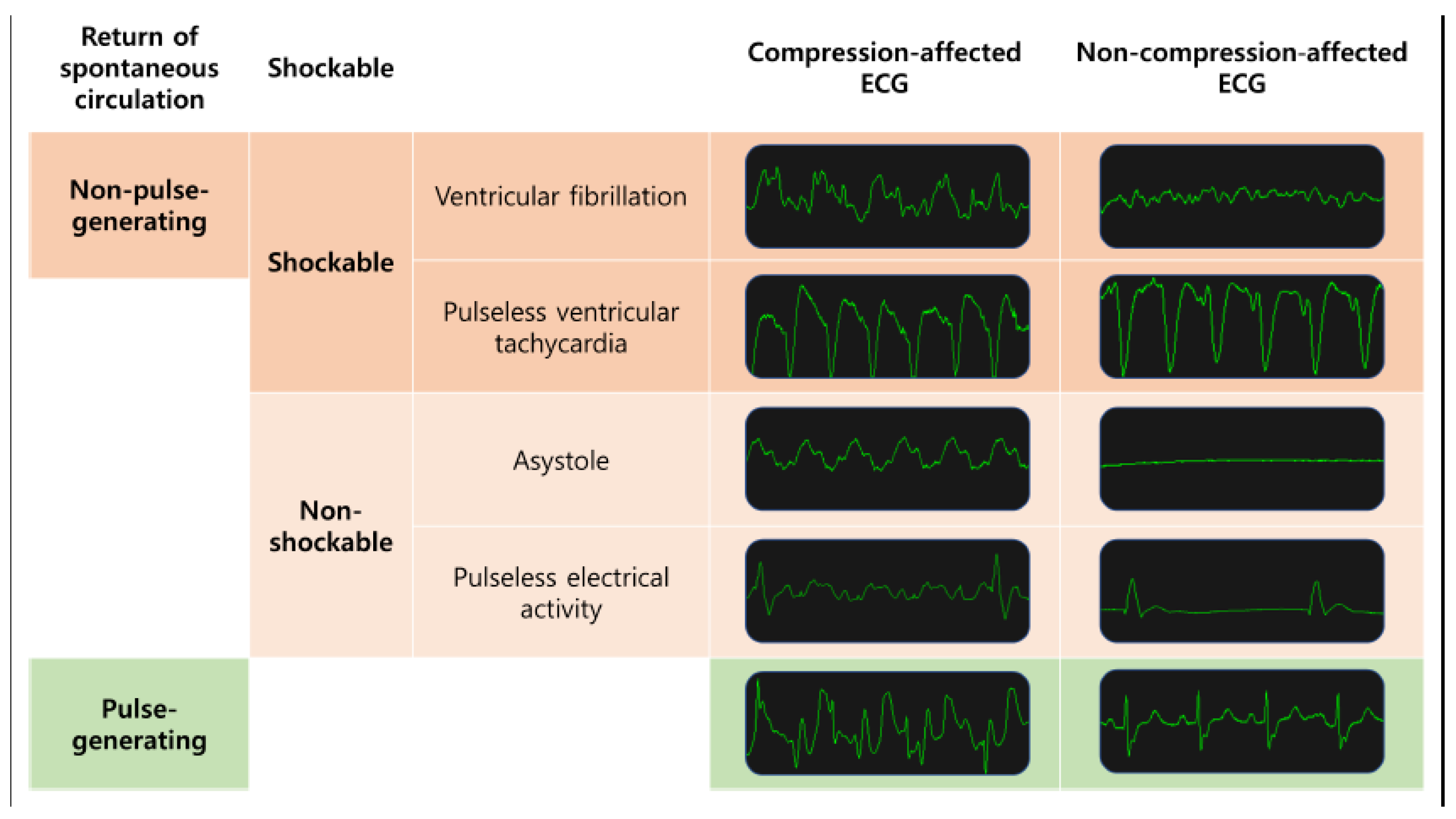

2.2. Classification Tasks

- Shockable rhythms (VF and pVT) versus non-shockable rhythms (asystole and PEA) in all ECG segments;

- Shockable rhythms (VF and pVT) versus non-shockable rhythms (asystole and PEA) in compression-affected ECG segments;

- Pulse-generating rhythms (ROSC rhythm) vs. non-pulse-generating rhythms (asystole, PEA, VF and pVT) in all ECG segments;

- Pulse-generating rhythms (ROSC rhythm) vs. non-pulse-generating rhythms (asystole, PEA, VF and pVT) in compression-affected ECG segments.
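
The four tasks listed above can be expressed as label mappings over annotated ECG segments. The following is a minimal sketch, not the authors' pipeline: the `Segment` class, the `build_task` helper, the rhythm label strings, and the `compression` flag are all hypothetical names introduced for illustration. It also assumes that segments whose rhythm belongs to neither group of a task (for example, ROSC rhythm in the shockable task) are simply left out of that task.

```python
from dataclasses import dataclass

# Rhythm groups taken from the task definitions in the list above.
SHOCKABLE = {"VF", "pVT"}
NON_SHOCKABLE = {"asystole", "PEA"}
PULSE_GENERATING = {"ROSC"}
NON_PULSE_GENERATING = {"asystole", "PEA", "VF", "pVT"}


@dataclass(frozen=True)
class Segment:
    """Hypothetical container for one annotated ECG segment."""
    rhythm: str
    compression: bool  # True if chest compressions affect the segment


def build_task(segments, positive, negative, compression_only=False):
    """Return (segment, label) pairs for one binary task.

    label is 1 for rhythms in `positive`, 0 for rhythms in `negative`.
    Segments with a rhythm in neither set are skipped (an assumption).
    """
    pairs = []
    for seg in segments:
        if compression_only and not seg.compression:
            continue
        if seg.rhythm in positive:
            pairs.append((seg, 1))
        elif seg.rhythm in negative:
            pairs.append((seg, 0))
    return pairs


# Toy input, invented for illustration only.
segments = [
    Segment("VF", True),
    Segment("PEA", False),
    Segment("ROSC", False),
    Segment("asystole", True),
]

shock_all = build_task(segments, SHOCKABLE, NON_SHOCKABLE)
shock_cpr = build_task(segments, SHOCKABLE, NON_SHOCKABLE, compression_only=True)
pulse_all = build_task(segments, PULSE_GENERATING, NON_PULSE_GENERATING)
pulse_cpr = build_task(segments, PULSE_GENERATING, NON_PULSE_GENERATING,
                       compression_only=True)
```

Keeping the rhythm groups as sets makes each task a one-line call, so the same segment pool can feed all four comparisons without relabeling the data.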

2.3. Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| CPR | Cardiopulmonary resuscitation |
| ECG | Electrocardiogram |
| ROSC | Return of spontaneous circulation |
| PEA | Pulseless electrical activity |
| VF | Ventricular fibrillation |
| pVT | Pulseless ventricular tachycardia |
| 1D-CNN | 1-dimensional convolutional neural network |
| RNN | Recurrent neural network |
| OHCA | Out-of-hospital cardiac arrest |
| AED | Automated external defibrillator |
| EMR | Electronic medical record |
| Grad-CAM | Gradient-weighted class activation map |

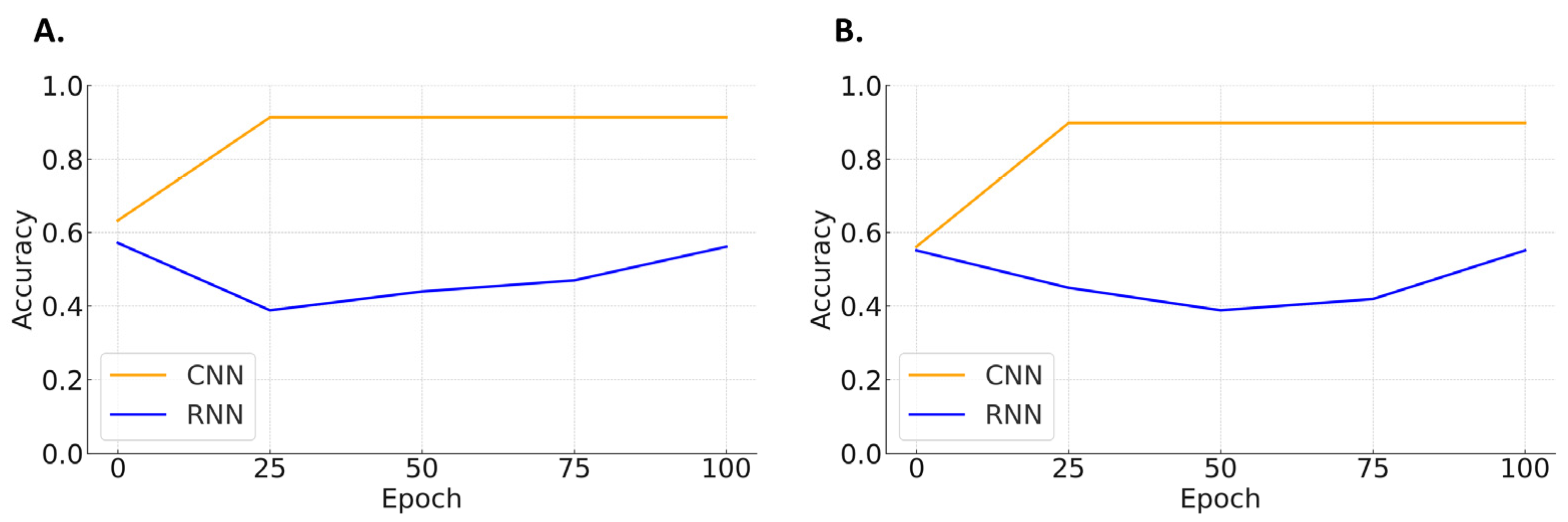

| Model | Sensitivity (%) | Specificity (%) | F1-Score (%) | Accuracy (%) |
| --- | --- | --- | --- | --- |
| Shockable Rhythms vs. Non-Shockable Rhythms | | | | |
| CNN | 98.0 | 84.5 | 90.8 | 91.3 |
| RNN | 22.2 | 75.8 | 34.3 | 50.6 |
| Performance in compression-affected ECG segments | | | | |
| CNN | 88.6 | 90.7 | 89.6 | 89.8 |
| RNN | 40.9 | 66.7 | 50.7 | 54.4 |
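
The four metrics reported in the table can be derived from a binary confusion matrix. The sketch below uses the standard textbook definitions, which this extract does not spell out, so treating them as the definitions used in the study is an assumption. The function name `binary_metrics` and the counts in the example are hypothetical and are not taken from the study data.

```python
def binary_metrics(tp, fn, tn, fp):
    """Compute percentage metrics from confusion-matrix counts.

    tp/fn: positive-class segments classified correctly/incorrectly.
    tn/fp: negative-class segments classified correctly/incorrectly.
    Assumes both classes are present (no zero denominators).
    """
    sensitivity = tp / (tp + fn)          # recall of the positive class
    specificity = tn / (tn + fp)          # recall of the negative class
    f1 = 2 * tp / (2 * tp + fp + fn)      # harmonic mean of precision and recall
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return {
        "sensitivity": round(100 * sensitivity, 1),
        "specificity": round(100 * specificity, 1),
        "f1": round(100 * f1, 1),
        "accuracy": round(100 * accuracy, 1),
    }


# Hypothetical counts, chosen only to make the arithmetic easy to follow.
example = binary_metrics(tp=90, fn=10, tn=80, fp=20)
print(example)
```

Because the F1-score ignores true negatives, a model can score well on specificity and still have a low F1-score when its sensitivity is poor, which is the pattern to look for when comparing rows of the table.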

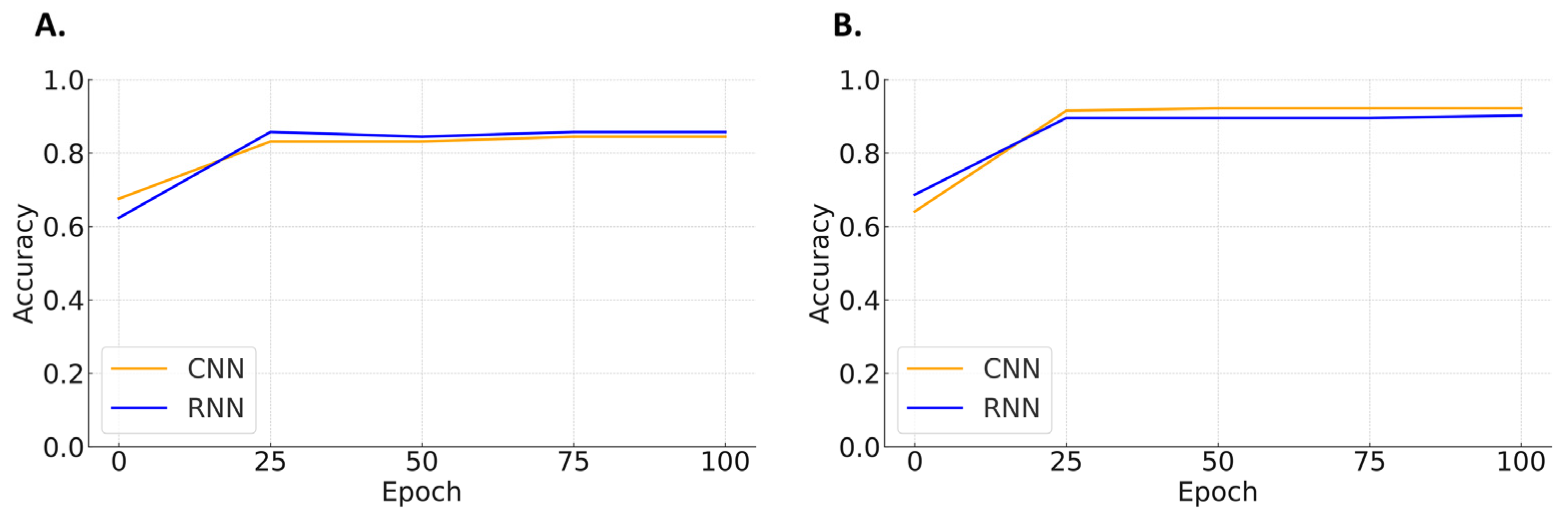

| Model | Specificity (%) | Sensitivity (%) | F1-Score (%) | Accuracy (%) |
| --- | --- | --- | --- | --- |
| Pulse-Generating Rhythms vs. Non-Pulse-Generating Rhythms | | | | |
| CNN | 100 | 80.3 | 89.1 | 90.9 |
| RNN | 97.4 | 86.8 | 91.8 | 92.2 |
| Performance in compression-affected ECG segments | | | | |
| CNN | 90.2 | 80.6 | 85.1 | 85.7 |
| RNN | 92.7 | 75.0 | 82.9 | 84.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Lee, S.; Lee, K.-S.; Park, H.-J.; Han, K.S.; Song, J.; Lee, S.W.; Kim, S.J. A Comparative Study of Convolutional Neural Network and Recurrent Neural Network Models for the Analysis of Cardiac Arrest Rhythms During Cardiopulmonary Resuscitation. Appl. Sci. 2025, 15, 4148. https://doi.org/10.3390/app15084148
