Semi-Supervised Method for Underwater Object Detection Algorithm Based on Improved YOLOv8

Abstract

1. Introduction

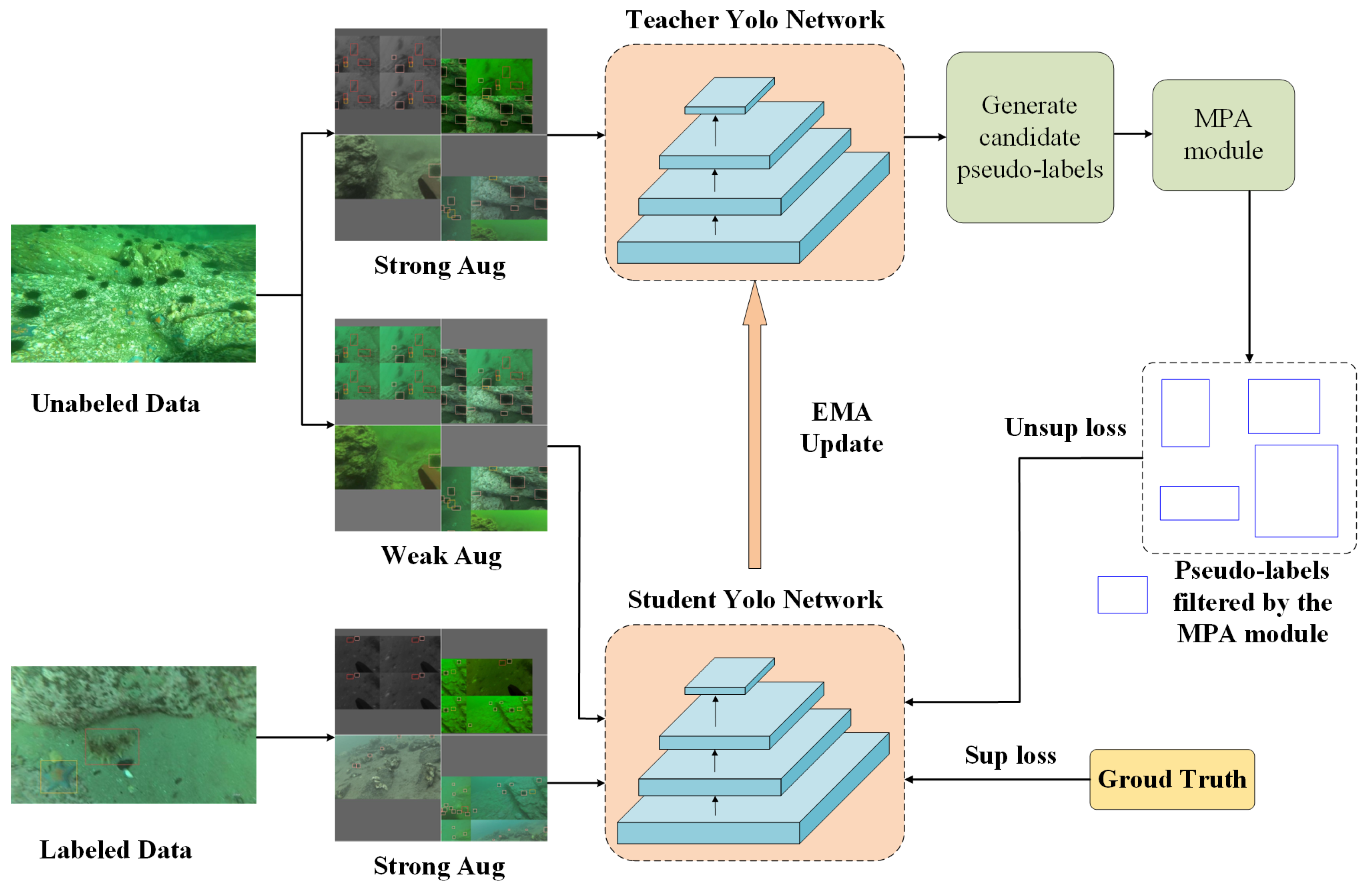

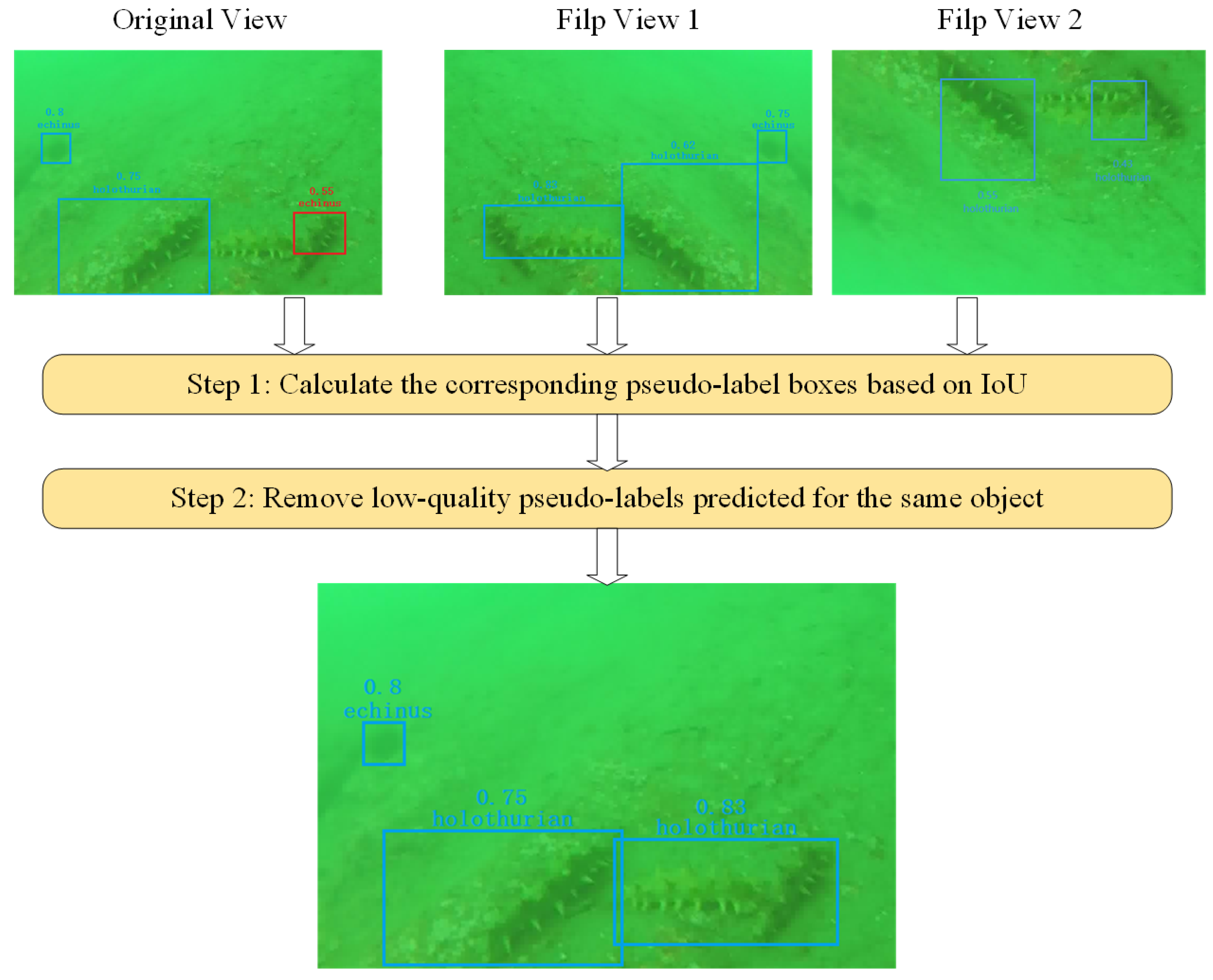

- This study proposes a novel underwater object detection method based on the Mean Teacher semi-supervised learning method, which combines a limited number of labeled samples with a large number of unlabeled samples. The teacher model is used to guide the generation of pseudo-labels, and a multi-scale pseudo-label enhancement module is specially developed to address the issue of low-quality pseudo-labels.

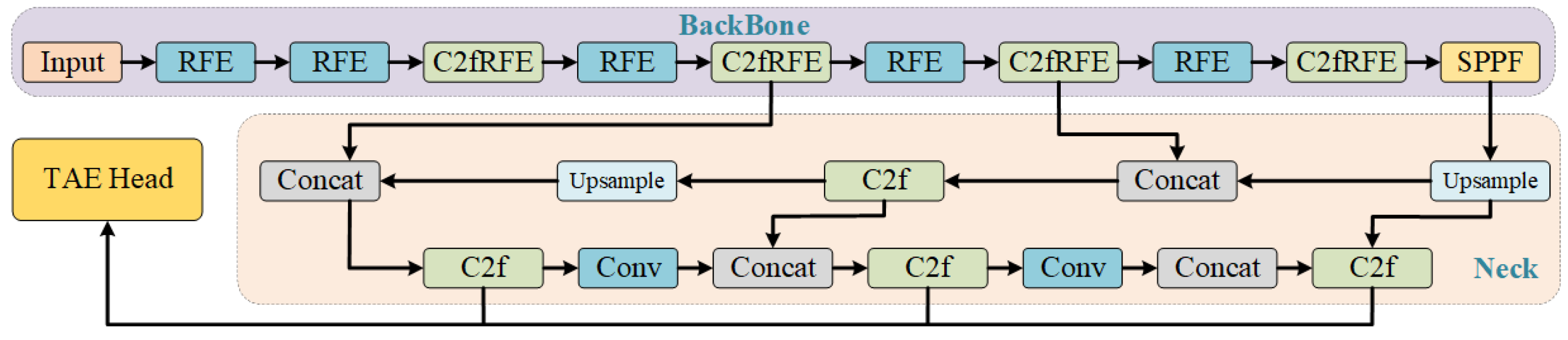

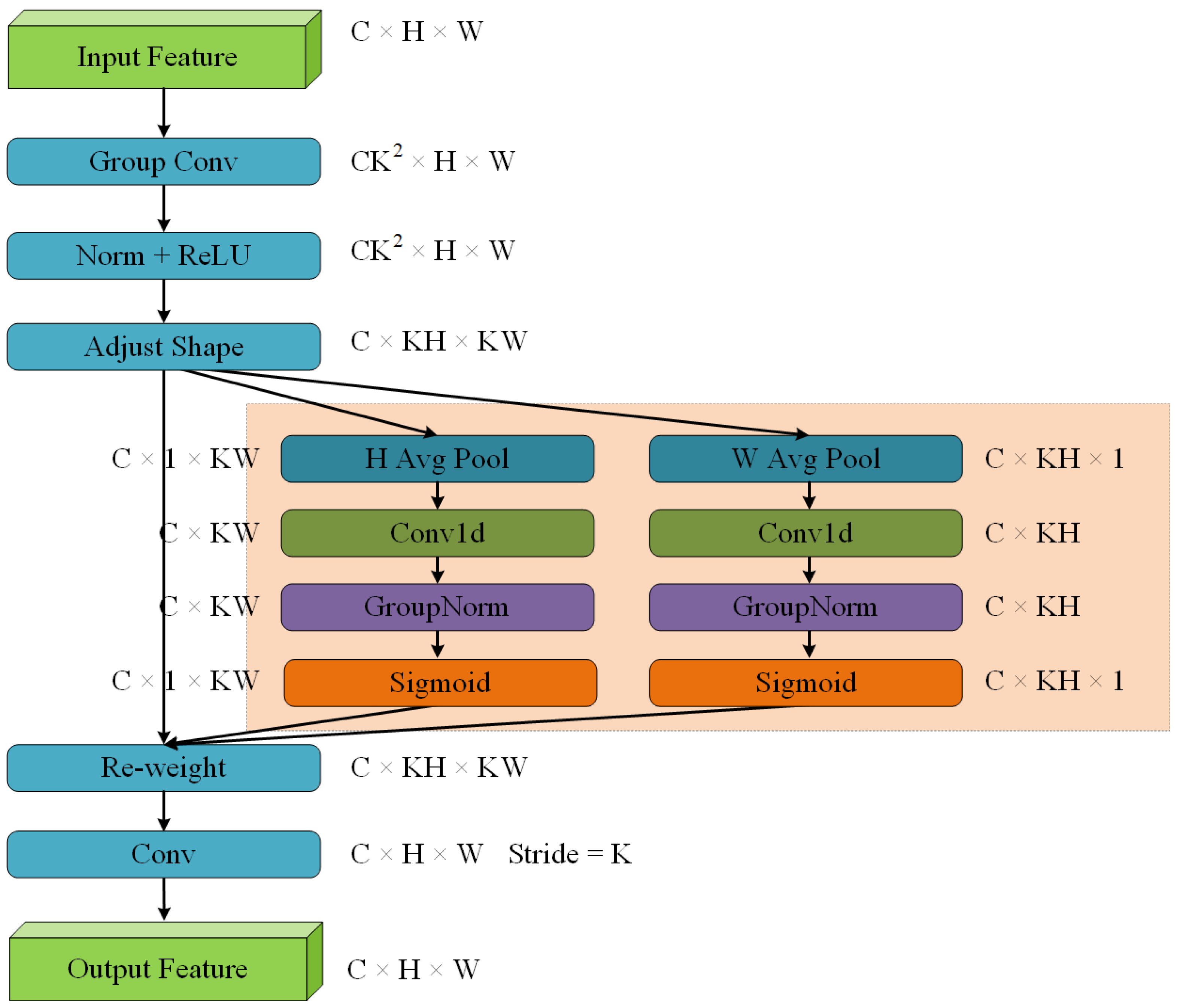

- This study proposes a module that integrates the receptive-field attention mechanism with local spatial features, significantly improving the feature extraction capability in underwater images.

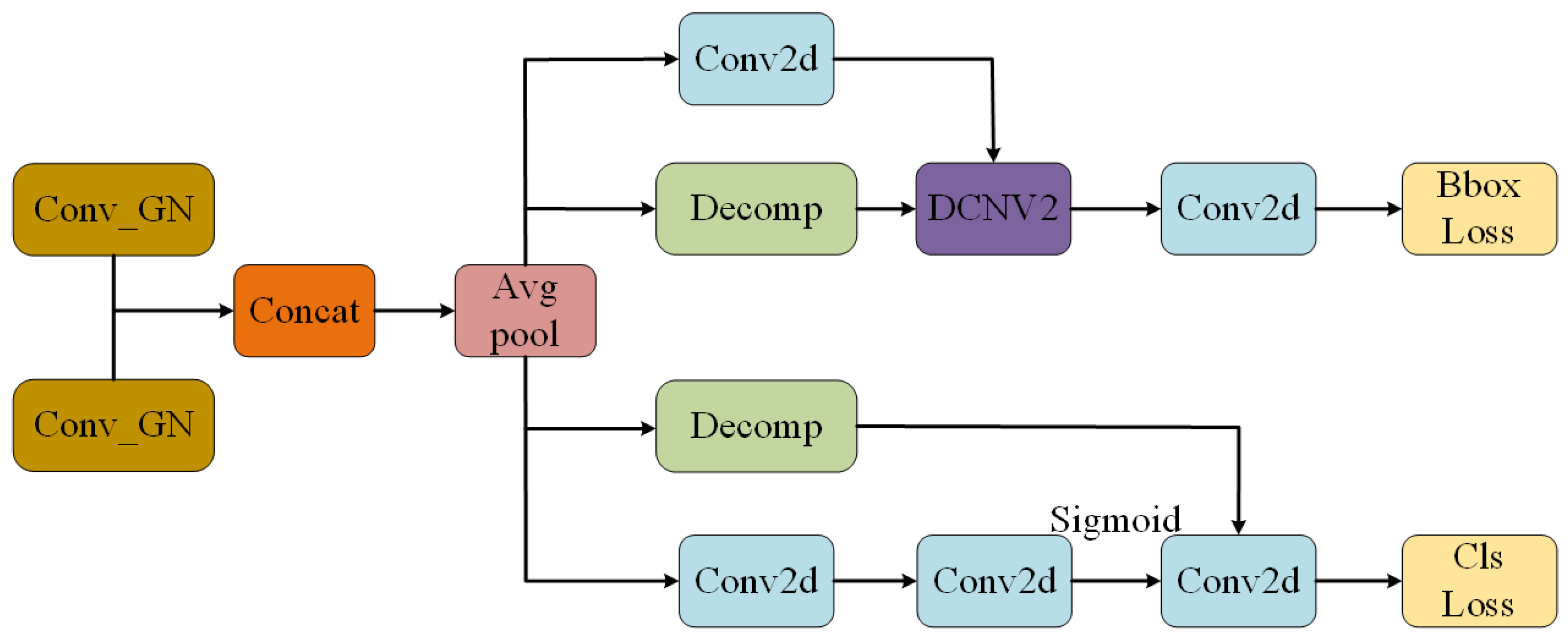

- This study proposes a lightweight detection head based on task alignment, which introduces shared convolution to reduce parameters while producing finer-grained features in the small-target detection layers, allowing the model to capture more detail.
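
To illustrate why a shared convolution reduces parameters, the sketch below counts the weights of a standard k × k convolution and compares one convolution per detection scale against a single convolution reused by all scales. The channel width (256), kernel size (3), and number of scales (3) are hypothetical values chosen for illustration; they are not taken from this paper.

```python
def conv_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Number of learnable parameters in a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


# Hypothetical head configuration (assumed, not from the paper).
NUM_SCALES = 3
CHANNELS = 256
KERNEL = 3

per_scale = conv_params(CHANNELS, CHANNELS, KERNEL)
separate_total = NUM_SCALES * per_scale  # one convolution per scale
shared_total = per_scale                 # one convolution reused by all scales

print(f"separate: {separate_total:,} parameters")
print(f"shared:   {shared_total:,} parameters")
```

Weight sharing lowers the parameter count only; the shared convolution still runs once on each scale's feature map, so the computation is not reduced by sharing alone.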

2. Related Works

2.1. Semi-Supervised Object Detection

2.2. Underwater Object Detection

3. Materials and Methods

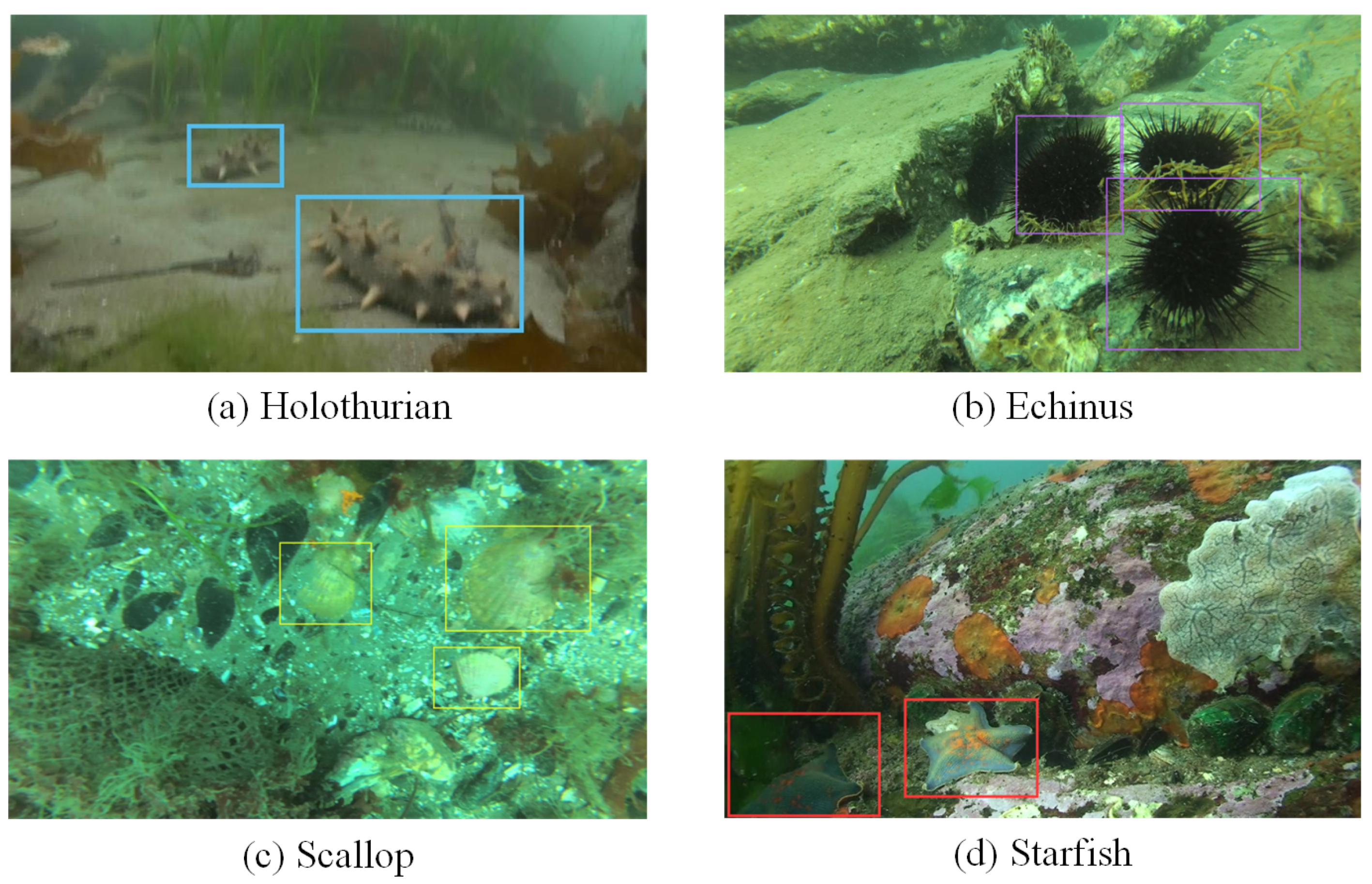

3.1. Dataset Information

3.2. The Proposed Detection Model, SUD-YOLO

3.2.1. The Proposed Semi-Supervised Learning Method for Training SUD-YOLO

3.2.2. Receptive-Field Attention and Local Feature Fusion Module

3.2.3. Task-Aligned Lightweight Detection Head Module (TAE)

Algorithm 1. The semi-supervised algorithm for training SUD-YOLO.

1. Data Preparation: The underwater object detection dataset consists of both labeled and unlabeled data, with a total of $I + J$ samples, where $I$ is the number of labeled samples and $J$ is the number of unlabeled samples, with typically $J \gg I$. Each labeled sample pairs an image $x$ with its annotation boxes $y$; each unlabeled sample is an image $x$ only.
2. Pre-training: Use the small labeled dataset to train the model and obtain the initial supervised model weights $M$.
3. Input: The labeled dataset, the unlabeled dataset, and the initial weights $M$.
4. Output: The final weights file $F$ of the underwater object detection model.
5. Repeat:
6. Until: The model training converges.
7. Return: Weights $F$.
8. End.
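
Algorithm 1 builds on Mean Teacher, in which the teacher's weights are usually kept as an exponential moving average (EMA) of the student's weights. The sketch below shows that update on toy scalar weights. The decay value, the dict-of-floats representation, and the function name `ema_update` are illustrative assumptions; the listing above does not specify how SUD-YOLO updates its teacher.

```python
from typing import Dict


def ema_update(teacher: Dict[str, float],
               student: Dict[str, float],
               decay: float = 0.999) -> None:
    """In-place EMA: teacher <- decay * teacher + (1 - decay) * student."""
    for name, student_value in student.items():
        teacher[name] = decay * teacher[name] + (1.0 - decay) * student_value


# Both models start from the same pre-trained weights (toy values here).
student = {"w": 1.0, "b": 0.0}
teacher = dict(student)

# Pretend one gradient step moved the student, then refresh the teacher.
student["w"] = 2.0
ema_update(teacher, student, decay=0.9)  # decay chosen only for illustration
print(teacher)
```

A decay close to 1 makes the teacher change slowly, which tends to stabilise the pseudo-labels it produces for the student.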

4. Results

4.1. Evaluation Criteria

4.2. Implementation Details

- Experimental Environment: The hardware configuration for this experiment includes an Intel(R) Xeon(R) Platinum 8457C processor and a single L20 GPU. The operating system is Linux, and PyTorch 2.0.1 is used as the deep learning framework, with CUDA version 11.8.

- Experimental Parameter Settings: The model’s input size is 640 × 640, and the batch size is set to 64. The optimizer is SGD, with an initial learning rate of 0.01, a momentum of 0.937, and a weight decay of 0.0005. The hyper-parameters for the semi-supervised learning framework are set as follows: semi-supervised loss weight, 0.5; pseudo-label confidence threshold, 0.5.
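
The two semi-supervised hyper-parameters can be read as follows: teacher predictions below the confidence threshold are discarded, and the loss on the remaining pseudo-labels is scaled by the loss weight before being added to the supervised loss. The sketch below assumes this common form (total = supervised + weight × unsupervised) and a "greater than or equal" comparison; neither is confirmed by the text above, and the detections and loss values are made-up toy numbers.

```python
from typing import List, Tuple

Detection = Tuple[str, float]  # (class name, confidence); boxes omitted for brevity

CONF_THRESHOLD = 0.5  # pseudo-label confidence threshold (from the settings above)
UNSUP_WEIGHT = 0.5    # semi-supervised loss weight (from the settings above)


def filter_pseudo_labels(preds: List[Detection],
                         threshold: float = CONF_THRESHOLD) -> List[Detection]:
    """Keep teacher predictions whose confidence reaches the threshold."""
    return [p for p in preds if p[1] >= threshold]


def total_loss(sup_loss: float, unsup_loss: float,
               weight: float = UNSUP_WEIGHT) -> float:
    """Assumed combination: supervised loss plus weighted unsupervised loss."""
    return sup_loss + weight * unsup_loss


# Toy teacher output on one unlabeled image (hypothetical values).
teacher_preds = [("echinus", 0.91), ("starfish", 0.48), ("scallop", 0.50)]
kept = filter_pseudo_labels(teacher_preds)
loss = total_loss(sup_loss=1.2, unsup_loss=0.8)
print(kept, loss)
```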

4.3. Experimental Results

4.4. Visual Analysis

4.5. Ablation Studies

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Category | Instances (Train) | Instances (Test) |
|---|---|---|
| Holothurian | 6808 | 1079 |
| Echinus | 45,954 | 7201 |
| Scallop | 1707 | 217 |
| Starfish | 12,528 | 2020 |

| Algorithm | Params | GFLOPs | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Supervised algorithms | | | | | | | | |
| Faster R-CNN | 41.36 | 208 | 29.3 | 49.5 | 30.6 | 20.8 | 29.6 | 29.6 |
| Cascade R-CNN | 69.2 | 236 | 30.7 | 51.2 | 32.0 | 27.1 | 31.0 | 30.7 |
| ATSS | 19.1 | 153 | 37.7 | 61.1 | 40.1 | 35.0 | 38.5 | 37.8 |
| YOLOv5 | 7.06 | 16.3 | 35.9 | 58.4 | 38.8 | 23.9 | 37.1 | 35.1 |
| YOLOv7 | 6.02 | 13.0 | 33.4 | 55.8 | 25.4 | 22.6 | 35.5 | 31.6 |
| YOLOv8 | 11.1 | 28.7 | 39.8 | 59.3 | 44.5 | 25.8 | 41.2 | 39.3 |
| YOLOv9 | 9.6 | 38.8 | 38.8 | 58.8 | 43.5 | 26.3 | 41.8 | 36.1 |
| YOLOv10 | 8.0 | 24.5 | 36.0 | 53.8 | 39.5 | 30.8 | 37.7 | 34.6 |
| YOLOv11 | 9.4 | 21.3 | 39.5 | 59.7 | 43.3 | 27.5 | 40.9 | 38.5 |
| Semi-supervised algorithms | | | | | | | | |
| Unbiased Teacher | 41.3 | 173.6 | 47.4 | 71.0 | 52.6 | 34.6 | 48.2 | 47.7 |
| Efficient Teacher | 7.38 | 16.5 | 41.5 | 63.2 | 45.9 | 26.7 | 42.6 | 41.1 |
| Ours | 9.05 | 34.1 | 50.8 | 71.8 | 58.0 | 35.5 | 53.1 | 48.9 |

| Algorithm | Params | GFLOPs | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Supervised algorithms | | | | | | | | |
| Faster R-CNN | 41.36 | 208 | 22.3 | 52.5 | 13.6 | 9.7 | 20.9 | 24.6 |
| Cascade R-CNN | 69.2 | 236 | 25.3 | 57.3 | 17.0 | 11.4 | 23.3 | 28.1 |
| ATSS | 19.1 | 153 | 22.7 | 52.0 | 14.9 | 10.3 | 21.7 | 24.5 |
| YOLOv5 | 7.06 | 16.3 | 24.8 | 57.0 | 15.9 | 10.5 | 23.5 | 26.6 |
| YOLOv8 | 11.1 | 28.7 | 29.6 | 61.1 | 24.3 | 12.3 | 27.2 | 32.5 |
| YOLOv9 | 9.6 | 38.8 | 31.1 | 62.3 | 26.9 | 13.6 | 28.9 | 34.1 |
| YOLOv10 | 8.0 | 24.5 | 25.6 | 53.2 | 21.4 | 10.7 | 23.4 | 28.6 |
| YOLOv11 | 9.4 | 21.3 | 29.1 | 60.0 | 24.1 | 12.2 | 26.6 | 32.2 |
| Semi-supervised algorithms | | | | | | | | |
| Unbiased Teacher | 41.3 | 173.6 | 36.6 | 73.1 | 31.6 | 18.0 | 34.0 | 40.2 |
| Efficient Teacher | 7.38 | 16.5 | 25.9 | 57.6 | 19.0 | 9.2 | 23.5 | 29.5 |
| Ours | 9.05 | 34.1 | 34.5 | 67.5 | 31.0 | 14.2 | 31.5 | 38.9 |

| Algorithm | Params | GFLOPs | | | | | | |
|---|---|---|---|---|---|---|---|---|
| YOLOv8 | 11.1 | 28.7 | 43.4 | 63.1 | 49.7 | 37.3 | 45.7 | 41.9 |
| YOLOv8 + RFE | 11.3 | 29.5 | 45.3 | 65.6 | 52.4 | 33.7 | 46.8 | 44.5 |
| YOLOv8 + RFE + TAE | 9.05 | 34.1 | 48.6 | 69.3 | 56.2 | 38.0 | 51.0 | 46.9 |
| YOLOv8 + RFE + TAE + MPA | 9.05 | 34.1 | 50.8 | 71.8 | 58.0 | 35.5 | 53.1 | 48.9 |

| Thresholds | | |
|---|---|---|
| 0.20 | 45.5 | 64.1 |
| 0.30 | 47.1 | 66.3 |
| 0.40 | 49.6 | 70.9 |
| 0.50 | 50.8 | 71.8 |
| 0.60 | 49.8 | 70.7 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, S.; Wang, J.; Sang, Q. Semi-Supervised Method for Underwater Object Detection Algorithm Based on Improved YOLOv8. Appl. Sci. 2025, 15, 1065. https://doi.org/10.3390/app15031065
