1. Introduction

Augmented reality (AR) is an increasingly adopted technology that superimposes digital, computer-generated elements onto the real-world environment. In medicine, it has gained popularity because it provides clinicians with enhanced 3D guidance during surgical and operative procedures [1,2]. Recent advances in mobile devices and high-definition visualization have expanded the use of AR in dentistry, with applications including implant planning, endodontics, and maxillofacial interventions [3,4,5,6]. Among extended reality (XR) technologies, AR can merge real-time patient imaging with digitally rendered models or guides while still allowing the operator to see the physical operative field [7,8].

Guided surgery can be performed with different approaches, such as 3D-printed guides or robot-driven surgery. Previous studies of 3D-printed guides in guided endodontics found that they can achieve highly precise access cavity preparation, especially in cases of severe pulp canal obliteration. By virtually planning the drilling path on CBCT data and fabricating custom guides, researchers reported minimal coronal and apical deviations, making this a safe and predictable treatment option [9,10,11]. However, despite its considerable advantages and its role in redefining the gold standard, the method requires advanced 3D technical skills or the support of a specialized laboratory, and the workflow usually requires an additional visit for guide design and printing, which adds both cost and time to treatment. Moreover, the intraoral space available for a 3D-printed guide is not always adequate and is difficult to estimate in advance: in posterior teeth, or when patients have limited mouth opening, the physical guide may not seat properly, complicating its adaptation. Similarly, recently proposed robotic surgery has been associated with high initial costs [12,13,14], and only a few case reports are available in the literature to date.

Apicectomy (root-end resection) remains a core endodontic surgical procedure for treating persistent periapical pathologies that fail to resolve with conventional root canal therapy or retreatment. Despite considerable advances in magnification, ultrasonic instruments, and endodontics in general, variations in root canal morphology, restricted surgical access, and the close proximity of critical anatomical structures can complicate the procedure and lead to failure [15].

Recent studies found that three-dimensional (3D) imaging and computer-aided navigation can substantially improve therapeutic outcomes by allowing precise localization of the root apex [11]. However, the practical usability of some image-guided modalities has been questioned, and their reliance on additional hardware or time-consuming planning procedures can hinder routine clinical use or urgent care.

Previously, a novel AR approach based on a visual–inertial odometry (VIO) algorithm proved effective in guiding endodontic access cavities and maxillofacial interventions by stabilizing patient-specific 3D data in real time over the rigid surgical scene [3,4,5]. This technique does not require physical markers; instead, it maps the clinical environment in real time to anchor the digital objects (i.e., segmented CBCT scans) to the operative field.

Building on these results, this report describes a preliminary case series exploring the feasibility of the same AR-based VIO approach for guided apicectomy. Specifically, we aimed to assess feasibility, overall procedure time, patient-reported pain, clinical signs over time, and the general operator experience. A rapidly available guidance system could enable minimally invasive guided interventions with safer clinical guidance, without increasing treatment time or costs for the patient.

2. Materials and Methods

2.1. Study Design

The present study was conducted at the Department of Dentistry, Ospedale Maggiore Policlinico di Milano. The study adhered to the principles of the Declaration of Helsinki. All patients gave written informed consent to the treatment procedures and to the use of their anonymized data for research purposes.

2.2. Participants and Inclusion Criteria

Three patients (two females and one male; aged 18–55 years) presenting with persistent periapical lesions in anterior or posterior teeth were included over a six-month recruitment period after meeting the inclusion and exclusion criteria. The lesions were confirmed with periapical radiographs and CBCT imaging. The inclusion criteria were as follows:

- Radiographic evidence of persistent apical periodontitis despite conventional root canal therapy;
- Adequate bone support and root length for apicectomy;
- Absence of active suppuration or signs of abscess;
- No history of allergy to the local anesthetics or sedation agents used in the procedure.

Exclusion criteria were as follows: systemic conditions such as diabetes; use of medications; refusal of the proposed therapy; risk or suspicion of fracture of the target tooth; uncertainty regarding the apical target; minimal apical radiolucency; adjacent implants; and low-quality CBCT scans.

2.3. Digital Images Preparation Protocol

All patients underwent CBCT scanning to capture the target root apex and anatomical landmarks. The DICOM files were segmented in Mimics 22.0 (Materialise, Leuven, Belgium) by two expert operators, each with more than 5 years of research experience in CBCT segmentation. A threshold-based segmentation protocol was performed according to the manufacturer's instructions and a previously described methodology [4], isolating the apex and relevant bony structures. The periapical lesion was segmented with a preset threshold (Bone—Adult and Soft Tissues) covering the radiolucent and soft-tissue density range. Teeth were segmented at the corresponding HU levels using the suggested threshold preset (Teeth—Permanent) and rigidly superimposed on the patient's intraoral scans with the ICP (Iterative Closest Point) function of the open-source software MeshLab 2023.12, following a previously described technique [16]. Correct superimposition was assessed by two different operators until a global superimposition precision of less than 0.1 mm was reached; this value can be read directly from the software once the alignment has been computed. When the superimposition was judged satisfactory, all objects were exported separately in .stl (stereolithography) format while maintaining their reciprocal positions.
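MeshLab performs the ICP alignment internally. To illustrate the general idea behind a rigid ICP superimposition and the kind of residual that a sub-0.1 mm criterion is checked against, the sketch below implements textbook point-to-point ICP: nearest-neighbour matching followed by a least-squares rigid fit via the Kabsch (SVD) method, reporting an RMS residual in mm. It is a minimal sketch assuming NumPy and small point clouds; the function names, the brute-force neighbour search, and the stopping tolerance are illustrative assumptions, not MeshLab's implementation.

```python
import numpy as np


def best_rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares rotation R and translation t such that R @ src_i + t ≈ dst_i (Kabsch/SVD)."""
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)        # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # flip sign if SVD returned a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(moving: np.ndarray, fixed: np.ndarray, max_iter: int = 50, tol: float = 1e-10):
    """Point-to-point rigid ICP aligning `moving` onto `fixed` (both N x 3, in mm).

    Returns the accumulated rotation, translation, and the RMS distance (mm)
    between the aligned points and their last nearest-neighbour matches.
    """
    R_total, t_total = np.eye(3), np.zeros(3)
    current = moving.copy()
    prev_rms = np.inf
    rms = np.inf
    for _ in range(max_iter):
        # Brute-force nearest neighbours; fine for a few hundred points (real tools use k-d trees).
        dists = np.linalg.norm(current[:, None, :] - fixed[None, :, :], axis=2)
        matches = fixed[dists.argmin(axis=1)]
        R, t = best_rigid_transform(current, matches)
        current = current @ R.T + t
        # Compose the incremental transform with the running total.
        R_total, t_total = R @ R_total, R @ t_total + t
        rms = float(np.sqrt(np.mean(np.sum((current - matches) ** 2, axis=1))))
        if abs(prev_rms - rms) < tol:
            break
        prev_rms = rms
    return R_total, t_total, rms


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fixed = rng.uniform(-10.0, 10.0, size=(200, 3))       # synthetic surface points (mm)
    a = np.deg2rad(1.0)
    Rz = np.array([[np.cos(a), -np.sin(a), 0.0],
                   [np.sin(a), np.cos(a), 0.0],
                   [0.0, 0.0, 1.0]])
    moving = fixed @ Rz.T + np.array([0.1, -0.05, 0.05])  # slightly misplaced copy
    _, _, rms = icp(moving, fixed)
    print(f"RMS residual: {rms:.4f} mm; meets 0.1 mm criterion: {rms < 0.1}")
```

ICP only converges to the correct pose from a reasonable starting position, which is why a rough initial placement (manual or landmark-based) usually precedes it in practice.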

2.4. AR Software and Hardware

The obtained surface models (.stl format) were uploaded to a secure dedicated cloud storage, accessible through personal encrypted credentials given to each operator.

The cloud server was remotely linked to the hand-held touch-screen device used in the study (iPad, Apple, Cupertino, CA, USA). A custom AR software application previously developed by the authors was installed on the device via Apple's TestFlight app for developers (Apple, Cupertino, CA, USA). The custom software integrates a visual–inertial odometry (VIO) algorithm and a multilayered system that allows the operator to selectively display layers of the segmented files; for example, the operator can display the roots and hide the crowns, or keep only the periapical lesion visible [5].

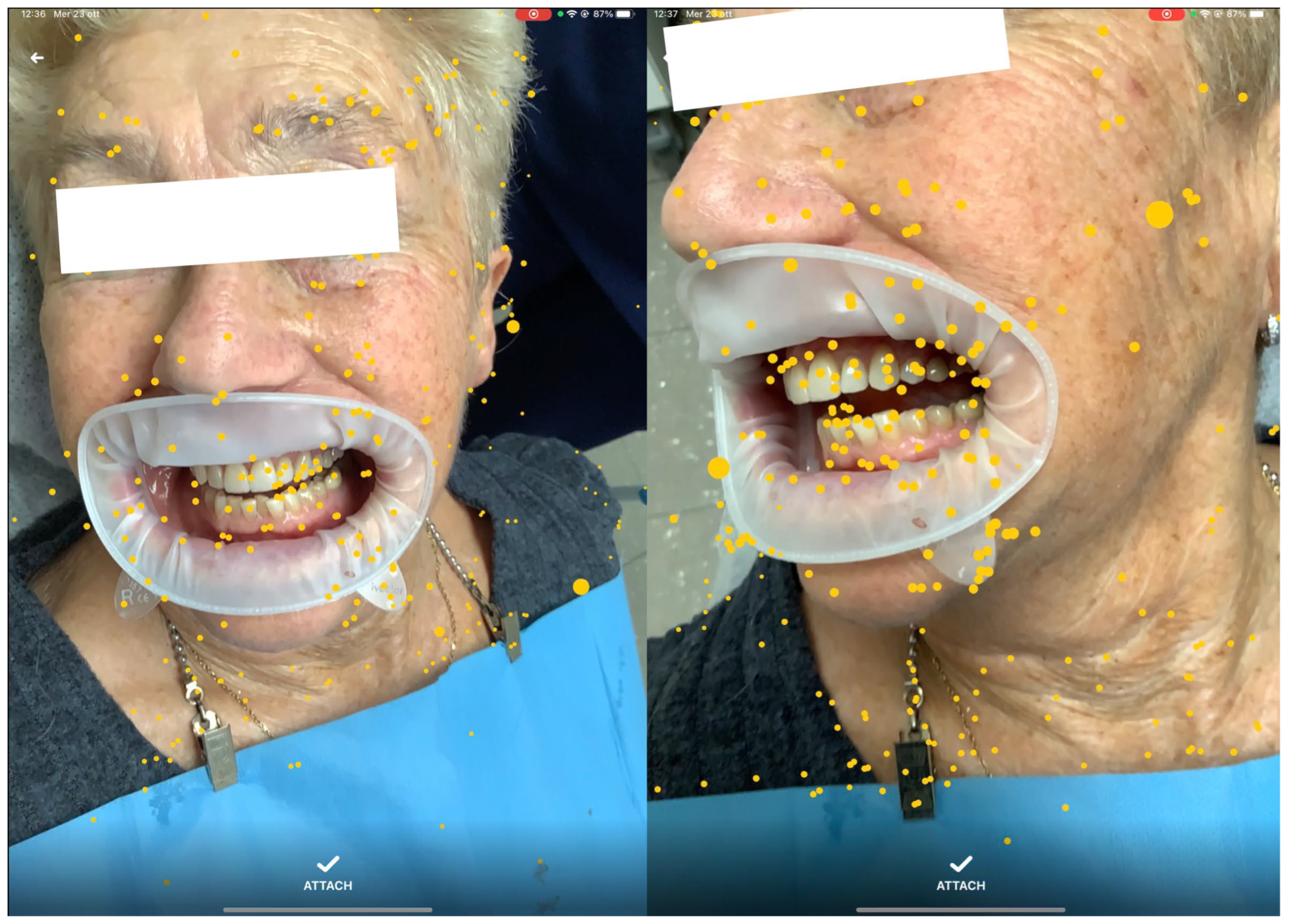

The software was installed on an iPad Pro 11 (iPadOS 14.3; Apple, Cupertino, CA, USA), a portable device equipped with a dual-camera system and a LiDAR scanner for precise real-time mapping. By moving the device around the patient's face, the operator allowed the software to anchor a series of automatically generated points of interest (POIs) to the environment (Figure 1). Once enough POIs were established, the segmented 3D models were manually aligned with the patient's visible anatomy and locked in position, maintaining alignment despite device or patient movement (Figure 2). The operators responsible for overlapping the digital images had previously been trained extensively by the software's developers in three 30 min sessions. During training, digital 3D models of LEGO™ bricks were first overlapped onto their physical counterparts; once a successful overlap was achieved, 3D-printed replicas of the intraoral scans (IOS) were overlapped.
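Conceptually, "locking" a model means storing its pose in the world frame estimated by the tracking system, then re-expressing that fixed pose in the current camera frame at every frame, so the overlay stays put as the device moves. The NumPy sketch below illustrates this principle with 4×4 homogeneous transforms; the helper names (`make_pose`, `lock_model`, `model_in_camera`) are hypothetical, and the code does not reproduce the authors' application or the API of any specific AR framework.

```python
import numpy as np


def make_pose(yaw_deg: float, translation) -> np.ndarray:
    """4x4 homogeneous transform: rotation about z by yaw_deg, then translation (metres)."""
    a = np.deg2rad(yaw_deg)
    T = np.eye(4)
    T[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                 [np.sin(a), np.cos(a), 0.0],
                 [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def lock_model(T_world_camera: np.ndarray, T_camera_model: np.ndarray) -> np.ndarray:
    """After manual alignment, store the model pose in the world frame (hypothetical helper)."""
    return T_world_camera @ T_camera_model


def model_in_camera(T_world_camera: np.ndarray, T_world_model: np.ndarray) -> np.ndarray:
    """Re-express the locked model in the current camera frame for rendering."""
    return np.linalg.inv(T_world_camera) @ T_world_model


if __name__ == "__main__":
    T_world_cam_at_alignment = make_pose(0.0, [0.0, 0.0, 0.0])
    T_cam_model_aligned = make_pose(0.0, [0.0, 0.0, 0.3])   # model placed 30 cm in front
    T_world_model = lock_model(T_world_cam_at_alignment, T_cam_model_aligned)
    T_world_cam_now = make_pose(15.0, [0.04, 0.0, 0.0])      # operator moved the device
    print(model_in_camera(T_world_cam_now, T_world_model).round(3))
```

Only the camera pose changes from frame to frame; the world pose of the model is computed once at locking time, which is why the accuracy of the overlay depends on how well the tracking keeps estimating the camera pose.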

2.5. Surgical Procedure

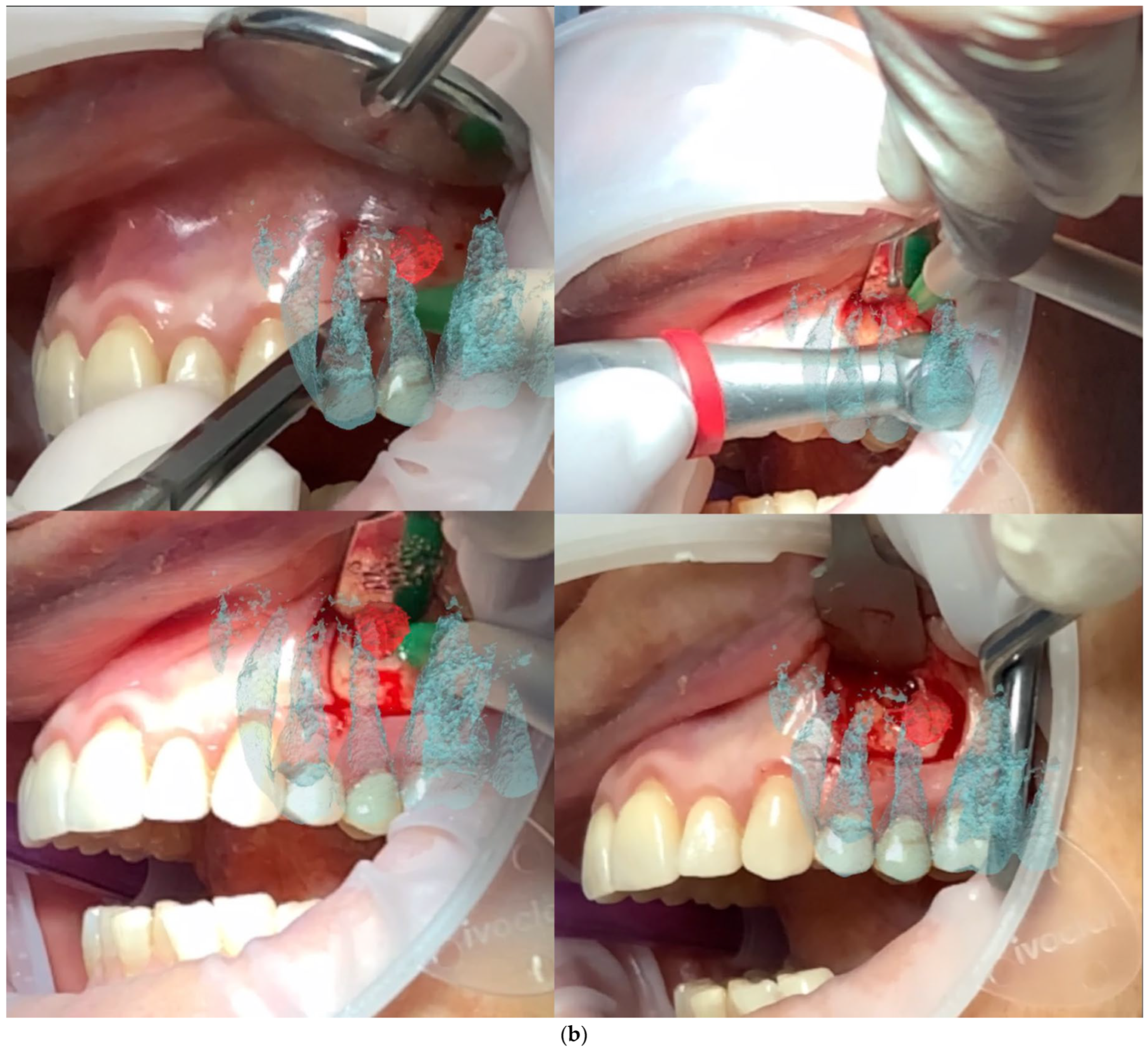

All patients were treated under local anesthesia (4% articaine with adrenaline 1:100,000, or 1:50,000 for better bleeding control). The iPad was positioned in front of the surgical field, and the operator visualized the overlapped digital apex and root canal. Correct overlap was checked in all six degrees of freedom by moving the device around the clinical scene to obtain different projections (Figures 3 and 4). A semilunar flap was elevated to expose the surgical site closest to the root apex, as indicated by the AR guide. The alveolar bone was carefully removed using a low-speed round bur under copious irrigation, guided by the superimposed 3D models. The apical portion of the root was resected at least 3 mm coronal to the apex, with a buccal bevel to ensure clinical visibility and access. After root resection, methylene blue was applied with a micro-brush to the surgical site and the cut root surface to highlight the root contour and the main and accessory canals for retrograde preparation. Throughout, bone removal was kept to a minimum and trauma to neighboring roots and apices was avoided (Figure 5). The intrabony lesion was carefully removed, and the residual root was cleaned with curettes and an ultrasonic scaler. Retrograde preparation was carried out with ultrasonic diamond tips, and a bioceramic root-end filling material was placed. The flap was closed with 6-0 sutures, and a postoperative periapical radiograph was taken to confirm the resection margin and the location of the retrograde filling material.

2.6. Outcome Measures

Operative time: The total procedure time was measured from initial flap elevation to suture placement.

AR overlap time: The time required to calibrate and lock the 3D model onto the patient's anatomy.

Pain assessment: Patients rated their pain levels on a Visual Analogue Scale (VAS; 0 = no pain, 10 = worst pain imaginable) at baseline (T0) and at 6 months (T2) postoperatively.

Healing: Follow-up radiographs/CBCT scans were taken at 6 weeks (T1) and at 6 months (T2) to evaluate periapical healing. Lesion size (mm) and presence of radiopaque healing were recorded.

Complications: Any adverse events (e.g., bleeding, swelling, infection, and vascular involvement) were recorded, as was any iatrogenic damage to the roots of adjacent non-target teeth. Outcomes were measured according to previous studies [17,18].

2.7. Statistical Analysis

Given the small sample size, statistical analysis was limited to descriptive measures (mean ± standard deviation).
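With only three patients, the choice between the sample standard deviation (denominator n − 1) and the population standard deviation (denominator n) noticeably changes the reported spread, and the text does not state which was used. The minimal sketch below uses Python's standard-library `statistics` module and assumes the sample SD by default; the helper name `describe` and any inputs passed to it are hypothetical, not the study's data.

```python
import statistics


def describe(values, sample: bool = True) -> str:
    """Format values as 'mean ± SD' with two decimals.

    sample=True uses statistics.stdev (n - 1 denominator);
    sample=False uses statistics.pstdev (n denominator).
    """
    mean = statistics.mean(values)
    sd = statistics.stdev(values) if sample else statistics.pstdev(values)
    return f"{mean:.2f} ± {sd:.2f}"
```

For example, `describe([1.0, 2.0, 3.0])` returns `"2.00 ± 1.00"`, whereas `describe([1.0, 2.0, 3.0], sample=False)` returns `"2.00 ± 0.82"`, so stating the convention matters at this sample size.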

3. Results

All three patients completed the study without intraoperative or postoperative complications. The AR overlap required an average of 1.49 ± 0.34 min (Figures 3–5). The overall surgical time ranged from 27 to 42 min.

Pain decreased markedly in all patients, from a mean VAS score of 4 ± 2 at baseline (T0) to 1 ± 1 at T2. Radiographic examinations revealed progressive healing of the periapical areas, with a clear reduction in lesion size in all cases (Figure 6). None of the patients reported persistent swelling or adverse effects. A summary of the main findings is presented in Table 1, including individual case data on apex resection length, final retrograde filling, and healing status at T2.

4. Discussion

This case series illustrates the potential of a visual–inertial odometry (VIO)-based AR approach to enhance apicectomy procedures. Previous studies have applied augmented reality to apicectomy, but only in vitro on models [19] or ex vivo on animal cadavers [20]. To our knowledge, this may therefore be the first in vivo application of AR for apical surgery.

Although the overall technique is reminiscent of existing image-guided techniques, its advantages lie in markerless tracking of the anatomy via LiDAR scanning and real-time point cloud generation. The ability to calibrate and lock the 3D data in under two minutes could potentially reduce overall chair-side time compared with other navigation or static guide protocols, as well as reduce the number of appointments, the need for separate planning, and the costs of the intervention. Our findings are consistent with prior AR-guided endodontic and maxillofacial interventions that showed improved surgical accuracy and reduced operative variability.

A few technical clarifications about the system are needed. Although it was tested by expert operators specifically trained in its use, further development may be required to extend its usability to a wider range of operators and to reduce residual errors. Regarding depth perception of the apical portion, the AR system provides a three-dimensional alignment in six degrees of freedom that does not rely only on the two planes shown in the figures; the operator can freely move their viewpoint around the clinical scene in 3D. Therefore, once the digital model is stabilized, the operator can visualize the depth of the root apex by slightly changing the viewing angle of the device. This preserves the overlap while revealing additional spatial information. The VIO algorithm maintains the registration through inertial and LiDAR-based distance estimation, allowing the operator to perceive the depth of the target structure when the camera is tilted.

In this way, the technique enables real-time understanding of the three-dimensional position of the apex, which helps to minimize unnecessary bone removal and reduce surgical trauma. The operator can therefore combine the projected guide with the natural depth cues provided by parallax movements, achieving a more comprehensive perception of the apical anatomy.
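Why moving the device reveals depth can be shown with an idealized pinhole camera: when the camera centre translates sideways by a baseline b, a point at depth Z shifts in the image by f·b/Z pixels, so deeper structures (such as an apex within bone) shift less than superficial ones. The sketch below assumes a simple pinhole model with purely lateral translation and uses hypothetical numbers; it explains the geometric cue and is not the algorithm used by the application. Note that parallax comes from the translation of the camera centre that accompanies moving the device; a pure rotation about the optical centre would not produce it.

```python
def project_x(point_x: float, depth: float, camera_x: float, focal_px: float) -> float:
    """Horizontal image coordinate (px) of a point seen by a pinhole camera at camera_x looking along +z."""
    return focal_px * (point_x - camera_x) / depth


def parallax_shift(depth: float, baseline: float, focal_px: float) -> float:
    """Image shift (px) of a point at `depth` when the camera translates sideways by `baseline`.

    Equals focal_px * baseline / depth: nearer points shift more than deeper ones.
    """
    before = project_x(0.0, depth, 0.0, focal_px)
    after = project_x(0.0, depth, baseline, focal_px)
    return before - after


def depth_from_shift(shift_px: float, baseline: float, focal_px: float) -> float:
    """Invert the relation above to recover depth from an observed shift."""
    return focal_px * baseline / shift_px


if __name__ == "__main__":
    f, b = 1500.0, 0.02   # hypothetical focal length (px) and sideways device movement (m)
    for z in (0.30, 0.31):  # hypothetical bone surface vs. a target 1 cm deeper (m)
        print(f"depth {z:.2f} m -> shift {parallax_shift(z, b, f):.1f} px")
```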

The alignment procedure added little to the total operative time (1.49 min on average), and this may decrease further as operators gain experience and dexterity with the system. In our experience, the overlay is most useful in the early stages of treatment, especially for locating the correct entry point. During the procedure, however, the operator may introduce new visual elements into the clinical scene, such as the handpiece or their own hands, which may partially obstruct the cameras and limit the field of view. The software was specifically designed to maintain the original overlap under these conditions: visual–inertial odometry relies on the POIs generated during the pre-surgical phase, together with the iPad's internal gyroscope and the distance information provided by the LiDAR scanner. These components reduce the risk of displacing the 3D guide, which could otherwise occur if the camera becomes completely obscured. However, recalibration should be considered after prolonged periods in which instruments or hands cover the scene and obstruct the camera.

The technique also relies on segmentation, a well-established method for converting CBCT images into digital 3D models. Previous studies reported excellent results with 3D-printed guides; however, AR guidance is immediately available and does not require additional patient visits [21]. Furthermore, the improved intraoperative visualization enabled by AR may help preserve surrounding bone and vascular structures and enable minimally invasive procedures [22].

5. Limitations of the Study

This study was intended as a preliminary feasibility study of AR-driven apicectomy in teeth with previously failed endodontic retreatment. Its scope did not include measurements of the system's precision or other quantitative bone measurements. Although a previous in vitro study reported a precision of between 0.51 and 0.77 mm and a mean angular deviation of 8.5° for minimally invasive endodontic access cavities guided by the same system, we could not measure precision under the clinical conditions examined here. A CBCT scan taken immediately after surgery for each patient, together with a case–control design, would have allowed more precise measurement of the quantitative outcomes, but the required sample size and radiation protection concerns suggested a more cautious approach. In addition, patients were recruited over a six-month period, and given the unpredictable flow of patients requiring apicectomy and the relative rarity of endodontic failure, the sample remained small. Finally, although AR can improve visual guidance, the success of apicectomy also depends on operator skill, flap management, and the retrograde sealing technique.
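Deviations such as those cited above (in mm and degrees) are commonly obtained by comparing planned and achieved points and axes once the postoperative scan has been registered to the plan. The minimal sketch below shows those two computations under the assumption that all coordinates are already expressed in the same frame and in mm; the function names are hypothetical, and this is not necessarily the exact protocol of the cited study.

```python
import math

Vec3 = tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _norm(a: Vec3) -> float:
    return math.sqrt(_dot(a, a))


def point_deviation_mm(planned: Vec3, achieved: Vec3) -> float:
    """3D distance between a planned and an achieved point (e.g. entry or apex), in mm."""
    return _norm(_sub(achieved, planned))


def angular_deviation_deg(planned_entry: Vec3, planned_end: Vec3,
                          achieved_entry: Vec3, achieved_end: Vec3) -> float:
    """Angle (degrees) between the planned and achieved access axes."""
    u = _sub(planned_end, planned_entry)
    v = _sub(achieved_end, achieved_entry)
    cos_angle = _dot(u, v) / (_norm(u) * _norm(v))
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos_angle))
```

For instance, an achieved entry point displaced by (0.3, 0.4, 0) mm from the planned one gives a point deviation of 0.5 mm, and a planned axis along z compared with an achieved axis along (1, 0, 1) gives 45°.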

6. Conclusions

Within the limits of this study's design, AR-assisted apicectomy using visual–inertial odometry showed promising results. The technique provided enhanced surgical visualization, minimally invasive bone removal around the target apex, and good clinical outcomes for the patients. Future investigations with larger samples are needed to assess the outcomes quantitatively and to shorten the learning curve associated with AR-assisted apical surgery.

Author Contributions

Conceptualization: M.F. and G.R.; Methodology: M.F.; Surgical Procedures: D.F., G.R. and F.M.; Data Curation: M.F. and F.M.; Formal Analysis: D.F.; Writing—Original Draft: M.F.; Writing—Review and Editing: M.F. and D.F.; Supervision: F.M. All authors have read and agreed to the published version of the manuscript.

Funding

This study was partially funded by the Italian Ministry of Health (Current Research IRCCS). No other external funding sources were used.

Informed Consent Statement

All patients provided informed consent permitting the use of their anonymized data for research and publication. The study complied with the principles of the Declaration of Helsinki.

Data Availability Statement

The data are contained within the article.

Conflicts of Interest

One of the authors holds issued U.S. Patent No. 11,890,148. The other authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

1. Farronato, M.; Maspero, C.; Lanteri, V.; Fama, A.; Ferrati, F.; Pettenuzzo, A.; Farronato, D. Current state of the art in the use of augmented reality in dentistry: A systematic review of the literature. BMC Oral. Health 2019, 19, 135.

2. Mangano, F.G.; Admakin, O.; Lerner, H.; Mangano, C. Artificial intelligence and augmented reality for guided implant surgery planning: A proof of concept. J. Dent. 2023, 133, 104485.

3. Farronato, M.; Crispino, R.; Fabbro, M.D.; Tartaglia, G.M.; Cenzato, N. TMJ Pericapsular Guided Injection with Visual-Inertial Odometry (Augmented/Mixed Reality): A Novel Pilot Clinical Approach for Joint Osteoarthrosis Drug Delivery. J. Dent. 2025, 157, 105659.

4. Farronato, M.; Torres, A.; Pedano, M.S.; Jacobs, R. Novel method for augmented reality guided endodontics: An in vitro study. J. Dent. 2023, 132, 104476.

5. Farronato, M. System and Method for Dynamic Augmented Reality Imaging of an Anatomical Site. U.S. Patent No. 11,890,148, 6 February 2024.

6. Engelschalk, M.; Al Hamad, K.Q.; Mangano, R.; Smeets, R.; Molnar, T.F. Dental implant placement with immersive technologies: A preliminary clinical report of augmented and mixed reality applications. J. Prosthet. Dent. 2024, 133, 346–351.

7. Al Hamad, K.Q.; Said, K.N.; Engelschalk, M.; Matoug-Elwerfelli, M.; Gupta, N.; Eric, J.; Ali, S.A.; Ali, K.; Daas, H.; Alhaija, E.S.A. Taxonomic discordance of immersive realities in dentistry: A systematic scoping review. J. Dent. 2024, 146, 105058.

8. Pellegrino, G.; Mangano, C.; Mangano, R.; Ferri, A.; Taraschi, V.; Marchetti, C. Augmented reality for dental implantology: A pilot clinical report of two cases. BMC Oral. Health 2019, 19, 158.

9. Torres, A.; Dierickx, M.; Lerut, K.; Bleyen, S.; Shaheen, E.; Coucke, W.; Pedano, M.S.; Lambrechts, P.; Jacobs, R. Clinical outcome of guided endodontics versus freehand drilling: A controlled clinical trial, single arm with external control group. Int. Endod. J. 2025, 58, 209–224.

10. Torres, A.; Dierickx, M.; Coucke, W.; Pedano, M.S.; Lambrechts, P.; Jacobs, R. In vitro study on the accuracy of sleeveless guided endodontics and treatment of a complex upper lateral incisor. J. Dent. 2023, 131, 104466.

11. Moreno-Rabié, C.; Torres, A.; Lambrechts, P.; Jacobs, R. Clinical applications, accuracy and limitations of guided endodontics: A systematic review. Int. Endod. J. 2020, 53, 214–231.

12. Huang, X.; Mao, L.; Hou, B. Locating a Calcified Root Canal with Robotic Assistance: A Case Report. J. Endod. 2025, 51, 1294–1300.

13. Liu, C.; Liu, X.; Wang, X.; Liu, Y.; Bai, Y.; Bai, S.; Zhao, Y. Endodontic Microsurgery With an Autonomous Robotic System: A Clinical Report. J. Endod. 2024, 50, 859–864.

14. Alqutaibi, A.Y.; Hamadallah, H.H.; Aloufi, A.M.; Qurban, H.A.; Hakeem, M.M.; Alghauli, M.A. Contemporary Applications and Future Perspectives of Robots in Endodontics: A Scoping Review. Int. J. Med. Robot. Comput. Assist. Surg. 2024, 20, e70001.

15. Dioguardi, M.; Stellacci, C.; La Femina, L.; Spirito, F.; Sovereto, D.; Laneve, E.; Manfredonia, M.F.; D'Alessandro, A.; Ballini, A.; Cantore, S.; et al. Comparison of Endodontic Failures between Nonsurgical Retreatment and Endodontic Surgery: Systematic Review and Meta-Analysis with Trial Sequential Analysis. Medicina 2022, 58, 894.

16. Maggiordomo, A.; Farronato, M.; Tartaglia, G.; Tarini, M. A method based on 3D affine alignment for the quantification of palatal expansion. PLoS ONE 2022, 17, e0278301.

17. Abu Hasna, A.; Pereira Santos, D.; Gavlik de Oliveira, T.R.; Pinto, A.B.A.; Pucci, C.R.; Lage-Marques, J.L. Apicoectomy of Perforated Root Canal Using Bioceramic Cement and Photodynamic Therapy. Int. J. Dent. 2020, 2020, 6677588.

18. Torres, A.; Shaheen, E.; Lambrechts, P.; Politis, C.; Jacobs, R. Microguided Endodontics: A case report of a maxillary lateral incisor with pulp canal obliteration and apical periodontitis. Int. Endod. J. 2019, 52, 540–549.

19. Tamayo-Estebaranz, N.; Viñas, M.J.; Arrieta-Blanco, P.; Zubizarreta-Macho, Á.; Aragoneses-Lamas, J.M. Is Augmented Reality Technology Effective in Locating the Apex of Teeth Undergoing Apicoectomy Procedures? J. Pers. Med. 2024, 14, 73.

20. Bosshard, F.A.; Valdec, S.; Dehghani, N.; Wiedemeier, D.; Fürnstahl, P.; Stadlinger, B. Accuracy of augmented reality-assisted vs template-guided apicoectomy: An ex vivo comparative study. Int. J. Comput. Dent. 2023, 26, 11–18.

21. Sánchez-Herrera, G.; Facchera, M.; Palma-Carrió, C.; Pérez-Leal, M. Approaches in apical microsurgery: Conventional vs. guided. A systematic review. Oral. Maxillofac. Surg. 2025, 29, 76.

22. Kim, J.E.; Shim, J.S.; Shin, Y. A new minimally invasive guided endodontic microsurgery by cone beam computed tomography and 3-dimensional printing technology. Restor. Dent. Endod. 2019, 44, e29.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).