2. Background

Researchers of road traffic problems have pointed out that driving a car (or any other means of transport) relies primarily on the effective use of the organ of vision. To a certain extent this is a cliché, but several aspects of it apply to the problem at hand, as described below. The knowledge base regarding the role of vision in driving has been presented in [1], which also highlights the rehabilitation of visually impaired persons [1]. In the context addressed in this article, this is particularly important, because a certain group of persons with disabilities (PWD) suffer from vision problems in addition to musculoskeletal disorders. Consequently, this group is doubly excluded from riding cycles intended for PWSN. Regarding the driver's vision, researchers often focus on the factors that determine road traffic safety (RTS). Both in applied science and in relation to what is commonly referred to as the human factor, vision appears to be the dominant issue. Those who study this factor tend to highlight its complexity. Eye tracking (ET) techniques, applied in research on these problems over the last two decades, enable an effective analysis of the impact of vision problems on driving. One of the articles on this subject stated that the efficiency and nature of cognitive processes affect human activity in road traffic [2]. This seems to be a key hypothesis in the context of this article. The method proposed by the authors for controlling the movement of a vehicle for PWSN using the organ of vision is intended to enable exactly this kind of activity, which would otherwise often be impossible. That study involved a literature review and a synthesis of knowledge about cognitive processes and their relevance to drivers' behaviour. Its authors conclude that a significant share of road accidents results from inadequate perception of environmental stimuli and from inadequate storage and use of information [2].

In this context, researchers are often interested in stereoscopic vision, which is used to drive autonomous vehicles (AV) using cameras. Similarly to our method, other authors processed images obtained from cameras using vision techniques (VT). However, they used different software (Matlab), whereas ours is an original solution. The authors of that paper presented an algorithm for lane detection and motion angle calculation for controlling a vehicle along a path, employing known VT procedures. A test vehicle (P3-DX Pioneer) was examined under laboratory conditions, moving along a given trajectory with a maximum deviation error of 2 cm [3]. That study is similar to the one discussed in the present paper, but it involved different procedures, and the basis for vehicle control was a camera image rather than human vision. Another publication on the same subject addressed different directions of development of future vision systems for ground vehicles [4]. Its authors drew analogies to biological vision systems and criticised technical systems in this regard, arguing that biological (or pseudo-biological) vision is the optimal solution for driving a vehicle. They described compact n-camera systems with high performance in dynamic vision, assuming that such systems make it possible to understand the traffic scene (TS). They concluded that the real challenge in this area is software (AI algorithms, deep learning) rather than sensing and computing hardware [4]. The authors of the present study find this conclusion difficult to accept, since processing detailed FHD or 4K images involves handling gigabytes of data in a short time. In motion or in field conditions, this is difficult and often expensive.

This issue was also addressed in another article [5], which discusses deep learning (DL) problems in the context of image-derived data. Its authors point out that supervised methods are flawed: they require large volumes of training data and are unreliable, particularly when the contextual situations of traffic scenes do not match the training set. They proposed a solution that uses unlabelled image sequences. In this method, predictive control uses visualised trajectories to select vehicle control parameters, and images obtained along so-called deflected trajectories are used to increase the model's robustness. Experiments confirmed that the performance of such a neural network is comparable to that of methods requiring additional data collection or supervision [5]. As in our approach, vision is supported by certain additional data from outside the biological system, e.g., a GPS signal. Given the problems addressed in this article, interesting research was conducted under the EU HASTE project [6]. Vision was studied while drivers performed driving tasks with surrogate in-vehicle information systems (S-IVIS). Data were collected from over 100 people driving on a motorway, on a simulated motorway, and on a country road. The authors noted that when the assigned visual task was more difficult, drivers shifted their gaze away from the centre of the road more frequently to look at the displays. As the complexity of the driving task increased, so did the concentration of gaze on the centre of the road [6]. Researchers studying this problem generally agree that a vehicle equipped with a vision system can visualise a traffic scene (i.e., the situation it encounters) [7]. These objectives were pursued in the PROMETHEUS project, in which vehicles travelled in AV mode in heavy motorway traffic at speeds of up to 130 km/h. The tasks analysed during the tests were group driving, lane changing, and overtaking. The authors stated that, with the third-generation EMS-vision system in use, the behavioural capabilities of agents were represented at an abstract level to characterise their potential behaviour patterns. The capacity of vehicles to drive on a network of secondary roads and off-road, and to avoid obstacles, was demonstrated [7]. Note that driving a vehicle for PWSN is easier in this respect, because it involves less complex infrastructure.

Official manuals state that approximately 90 per cent of the information used by drivers is visual [8]. The referenced manual contains interesting information about night driving and about drivers' ability to distinguish details from afar, detect luminance differences, etc. It also notes that drivers with poor eyesight need to be closer to road signs or hazards to notice them, that drivers usually misjudge the distance at which pedestrians can be seen, and that lighting can increase contrast and make objects on the road more visible. The FHWA manual also addresses the degradation of visual function with age [8]. This is relevant to the method proposed in this article, as it is based on eye measurements without taking the associated cognitive processes into account; this matter is discussed further in this article. The low RTS level observed prompted other researchers to conduct literature studies on visual navigation, smart vehicles, and visual clarity improvement theories [9]. That research shows that a smart vehicle with improved visual clarity features outperforms a conventional one. The authors propose improving the steering algorithm by increasing the visual clarity of images through smart filtering [9].

Many researchers address the capacity of vehicles featuring VT to navigate effectively in difficult conditions. To this end, deep learning is often used to improve machine vision perception in AVs. In one article on this subject, the authors focused on the detection of small targets; tests conducted with a vehicle-mounted camera allowed them to verify the effectiveness of road vehicle identification [10]. Most studies analyse vehicle control based on the visual information provided by cameras. In our research, the camera was directed at the driver's eyes rather than at the TS itself, which is a reversed arrangement: the driver's eyes and mind determine the direction and parameters of movement, not the processed TS. However, the methods are similar in other respects, since VTs are used to determine the vehicle control parameters. Image processing procedures include binarization, edge detection, and transformations; these procedures are simplified by taking the configuration of the driving area (traffic scene) into account [11]. In the context of the problem at hand, the Vision Zero strategy should be mentioned. It is a set of rules applicable to the automation of the road transport system, according to which AVs will need to perform better than human drivers. Therefore, according to the authors of that study, the road transport system will have to be adapted to both unreliable humans and unreliable automated vehicles [12].

Road traffic scene acquisition is a basic visual–motor task required of every driver; however, researchers point out that the way this task is performed remains unclear [13], despite the widespread use of ET and brain imaging research methods. Such studies often measure the angular variables of the head and gaze of test participants, and in this way, hypotheses concerning the visual strategies of the people examined are verified. According to the authors of that study, intercept control is best described as a constant target-tracking strategy, with the gaze and head coordinated to continuously acquire the visual information that the strategy requires [13]. Other authors introduced models of what is referred to as active visual scanning [14]. This approach covers lateral control (position adaptation) and longitudinal control (speed adaptation) and is based on drivers' visual input. The authors used scan path analysis to examine visual scanning sequences over the course of driving and identified several stereotypical sequences: forward, steering, backward, landscape, and speed monitoring paths. According to them, these results shed new light on the gaze patterns used while driving [14]. The authors of another publication highlighted numerous challenges, such as real-time data processing, decision-making under uncertainty, and navigating complex environments [15]. In this regard, they reviewed the deep learning methodologies used in AVs, including convolutional neural networks (CNN) and recurrent neural networks, for tasks such as object detection, scene understanding, and path planning [15]. Importantly, their study identifies gaps in the efforts to achieve full autonomy, improve sensor fusion, and optimise costs [15].

Vision-based control systems were also studied under the C-ITS research project, in which increased safety requirements, computing power in embedded systems, and the introduction of AVs were all relevant. The authors of that project emphasised the interdisciplinary nature of their research, comprising computer vision, machine learning, robotic navigation, embedded systems, and automotive electronics [16]. They reviewed a list of advanced vision-based control systems [16] in which, in addition to control per se, the operation of on-board devices is also considered important. This problem was addressed in another article on the perception of vehicle interiors [17]. Its authors studied the operation of on-board controls using all human senses under various conditions of sensory deprivation affecting hearing, sight, and touch. Unlike in driving itself, touch plays a considerably larger role (three times greater) in operating the vehicle's controls [17].
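Scan path analysis of the kind used in [14] presupposes that raw gaze samples have already been segmented into fixations and saccades. A common way of doing this is a velocity-threshold (I-VT) classifier. The sketch below is illustrative only and is not taken from [14]: it assumes horizontal and vertical gaze angles in degrees sampled at a fixed rate, uses a hypothetical 30°/s threshold, and ignores the noise filtering and blink handling that a real pipeline would need.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    label: str  # "fixation" or "saccade"
    start: int  # index of the first sample in the segment
    end: int    # index of the last sample in the segment (inclusive)


def classify_ivt(x_deg, y_deg, rate_hz, threshold_deg_s=30.0):
    """Label gaze samples as fixation or saccade with a velocity threshold (I-VT).

    x_deg, y_deg: horizontal and vertical gaze angles in degrees.
    rate_hz: sampling rate of the eye tracker.
    threshold_deg_s: hypothetical velocity threshold in degrees per second.
    """
    if len(x_deg) != len(y_deg):
        raise ValueError("x_deg and y_deg must have the same length")
    labels = []
    for i in range(1, len(x_deg)):
        # Point-to-point angular velocity between consecutive samples.
        step = math.hypot(x_deg[i] - x_deg[i - 1], y_deg[i] - y_deg[i - 1])
        velocity = step * rate_hz
        labels.append("saccade" if velocity > threshold_deg_s else "fixation")
    if labels:
        # The first sample has no predecessor, so it inherits the next label.
        labels.insert(0, labels[0])
    elif x_deg:
        labels.append("fixation")  # a single sample cannot be a saccade
    return labels


def group_segments(labels):
    """Merge consecutive identical labels into fixation/saccade segments."""
    segments = []
    for i, label in enumerate(labels):
        if segments and segments[-1].label == label:
            segments[-1].end = i
        else:
            segments.append(Segment(label, i, i))
    return segments


if __name__ == "__main__":
    x = [0.0, 0.1, 0.2, 5.0, 10.0, 10.1, 10.2]  # degrees
    y = [0.0] * len(x)
    for seg in group_segments(classify_ivt(x, y, rate_hz=100)):
        print(seg)
```

The velocity threshold is the only tuning parameter here; dispersion-based (I-DT) or learning-based segmentation would be alternatives with different trade-offs.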

In the field of VT and AVs, the Defense Advanced Research Projects Agency (DARPA) programme is widely known. Another scheme, UGCV PerceptOR Integration, introduced a new approach to all aspects of the design of autonomous mobile robots (MR), i.e., machines whose main purpose is to provide transport between points within a limited time and in a complex environment [18]. The article discusses the capacity of these machines to detect terrain features and introduces various devices serving this purpose, analysing the differences between AVs [18]. Another important study concerned divided attention, analysed in terms of environmental events and driving-related tasks; drivers' eye movements were recorded using an eye tracker [19]. As could be expected, eye movements proved highly dependent on the situation, with the main areas of interest being the windscreen, mirrors, and dashboard. The tasks assigned to the participants affected the distribution of their attention in an interpretable manner [19]. A subject similar to our research was addressed in another study, in which an intelligent wireless car control system based on eye tracking was designed. A Tobii eye tracker was used to track and recognise human lines of sight, and ZigBee wireless communication technology provided connectivity [20]. According to the authors, this form of control can perform forward and reverse driving, left and right turns, parking, and obstacle avoidance with an accuracy of approximately 98% [20].

Problems with perception in traffic are reason enough to try to improve technology. To this end, the authors of another article proposed a cooperative visual perception model using 506 images of complex road traffic scenarios [21]. An improved object detection algorithm intended for AVs was applied, and the average perception accuracy for individual TS elements reached 75%. Using image fusion, drivers' points of view were combined with vehicle monitoring screens. This cooperative perception was able to cover the whole risk zone and predict the trajectory of a potential collision [21]. Regarding the foregoing, it should be noted that perception errors are a major factor in accidents, and few of them can be attributed to vision. According to the authors of that paper, the steep decline in visual acuity with increasing angular distance poses problems for all drivers [22]. The authors summarise these visibility limitations in the context of accidents with the statement "he was looking but did not see" [22]. This is because drivers often operate beyond their visual and perceptual capabilities, especially at night. The authors believe that errors in assessing the situation at hand are inevitable but do not necessarily lead to accidents, owing to the safety margins of drivers and other road users. An interesting conclusion they draw is that, despite these limitations and fallibility, an average driver is involved in few incidents [22]. The aspects generally addressed in research on vision-based driving are steering, visual cues, and human–vehicle interaction. Such analyses cover various modes of interaction between humans and vehicles in various scenarios, such as calling, stopping, and steering [23]. In this context, the researchers demonstrated vehicles' capacity to detect and understand human intentions and gestures, and concluded that safety can be improved through vision-controlled human–vehicle interaction [23].
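Systems such as the one in [20] map where the driver looks onto a small set of discrete commands. A minimal sketch of such a mapping follows, with a dwell-time requirement so that a passing glance does not trigger a command. The zone boundaries, the 0.5 s dwell time, the command set, and the names `gaze_to_zone` and `DwellSelector` are all hypothetical; the sketch does not reproduce the Tobii/ZigBee system described in [20].

```python
from enum import Enum


class Command(Enum):
    NONE = "none"
    FORWARD = "forward"
    LEFT = "left"
    RIGHT = "right"
    STOP = "stop"


def gaze_to_zone(h_deg, v_deg, side_deg=10.0, down_deg=-15.0):
    """Map a gaze direction (degrees) to a command zone.

    Hypothetical layout: looking well down means STOP, looking far left or
    right means LEFT/RIGHT, anything else means FORWARD.
    """
    if v_deg < down_deg:
        return Command.STOP
    if h_deg < -side_deg:
        return Command.LEFT
    if h_deg > side_deg:
        return Command.RIGHT
    return Command.FORWARD


class DwellSelector:
    """Emit a zone's command once the gaze has stayed in it for dwell_s seconds."""

    def __init__(self, dwell_s=0.5):
        self.dwell_s = dwell_s
        self._zone = None     # zone currently looked at
        self._since = 0.0     # time at which the gaze entered that zone
        self._issued = False  # whether this dwell has already produced a command

    def update(self, t, h_deg, v_deg):
        zone = gaze_to_zone(h_deg, v_deg)
        if zone != self._zone:
            # Gaze moved to a new zone: restart the dwell timer.
            self._zone, self._since, self._issued = zone, t, False
            return Command.NONE
        if not self._issued and t - self._since >= self.dwell_s:
            self._issued = True
            return zone
        return Command.NONE


if __name__ == "__main__":
    selector = DwellSelector(dwell_s=0.5)
    samples = [(0.0, -20, 0), (0.3, -20, 0), (0.6, -20, 0), (0.8, 0, 0), (1.4, 0, 0)]
    for t, h, v in samples:
        print(t, selector.update(t, h, v))
```

Issuing each command only once per dwell keeps the output event-like; a controller that needs a continuous signal could instead report the current zone while the dwell condition holds.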

From the opposite perspective (namely, that of pedestrians), road-crossing scenarios were studied, with spontaneous gaze behaviour among the problems investigated [24]. The tests consisted of distinguishing between running speed and the time of a car's arrival at a crossing. The role of saccadic eye movements, which make it possible to predict the time of a vehicle's arrival at a crossing, was emphasised [24]. Knowledge of the driver's role in the driver–vehicle system is very limited. There are many driver models (e.g., car-following models), but few of them take the driver's sensory dynamics into account [25]. Therefore, the authors of another study reviewed the literature on sensory dynamics, delays, thresholds, and the integration of sensory stimuli [25]. The use of vision in AVs has shown benefits in AV–pedestrian interactions. In another study of interest, the researchers analysed a method of intuitive, behaviour-based control and proposed three algorithms for the control of AVs [26]. In yet another study, the oculomotor system became the basis for developing a vision sensor system intended for AVs [27]. In this approach, a stereoscopic control system mimicked the human vision system, performing tracking and cooperative movement tasks; the project culminated in a field experiment at a pedestrian crossing [27].

According to the authors of another article, visual interactions play a central role in the performance of spatial tasks in a TS. Perception-based control is grounded in the idea that the visual and motor systems form a unified system in which the necessary information is naturally extracted by vision [28]. The sensory–motor response is limited by visual and motor mechanisms. This hypothesis was investigated in that article with reference to tasks involving indoor remote control of rotorcraft. The authors posed the following questions: what is the operator's general control and steering strategy, and how does the operator obtain information about the vehicle and the environment [28]? The proposed model enables profiling of the perception–action system; the purpose of this visual–motor model is to reduce the operator's workload [28]. Another literature review focuses on methods for modelling and detecting the spatio-temporal aspects of a driver's attention [29]. The paper covers machine learning-based approaches to modelling and detecting gaze for driver monitoring and lists publicly available datasets containing recordings of drivers' gaze [29]. Numerous studies on driver attention assume that attention is placed where the gaze rests. However, drivers can use peripheral vision to perform certain tasks. With this in mind, the authors of another study analysed the influence of peripheral vision on lane keeping. The performance of novice drivers deteriorated when the central task was located close to the periphery, whereas the performance of experienced drivers deteriorated only when the central task was located at the bottom of the console. The authors concluded that novice drivers learn to use peripheral vision as they gain experience in road traffic [30].

Research on the perception of the TS raises many questions, for instance, about the way in which active gaze-fixation patterns support effective steering [31]. According to the authors of that article, the role of the stereotypical gaze patterns used while driving remains unclear. The key question is whether enough information is available in the direction of travel to be used for steering. To answer these questions, the authors validated driving models against human gaze data [31]. The vision-based control model can be built on a so-called pure-pursuit controller, which calculates the trajectory towards the steering point, or on a proportional controller. These studies imply that "looking where one is headed" can provide information for steering. According to the authors, capturing the variability in steering requires more sophisticated models or additional sensory information [31]. Another literature review discusses gaze behaviour patterns and their implications for understanding vision-based steering. It provides a knowledge base for researchers investigating oculomotor behaviour and physiology. It raises the problem of the gaze-fixation strategies used while performing closed, self-paced tasks (with lower dynamics) and highlights the difficulty of separating (closed) vision-based steering strategies from open behaviour patterns [32]. A wide range of adaptation problems in the driver–vehicle system was discussed in another article [33].

A separate line of research, resulting from the implementation of AVs, concerns what is called situational awareness. It involves developing interfaces that enable drivers to stay up to date with, and participate in, a traffic scene. In this context, a systematic review of human–vehicle interaction (HVI) interfaces ensuring situation awareness in AVs was presented [34]. These interfaces were analysed in terms of modality, location, information transmitted, evaluation, and experimental conditions [34]. Another article also concerned a peripheral vision driving model, in which the AV predicts vehicle speed based on camera recordings and operates on information about the driver's gaze. According to the authors, adding high-resolution input data at predicted driver gaze-fixation locations improves driving accuracy [35]. Drivers' physical limitations are the subject of further studies [34]. Specific driver models comprising such limitations have been identified to enable researchers to predict the performance of a driver–vehicle system in lateral and longitudinal control tasks [36]. Eye tracking technologies are considered to play an important role in this context, clearly demonstrating how such solutions improve driving safety. The authors of one such study built a theoretical model of a human–machine system integrated with eye tracking technology and investigated its specific impact on driving safety and interaction efficiency [37].

In terms of road traffic safety, drivers rely heavily on visual perception. Its key elements contributing to safe driving include situational awareness, vehicle control, responsiveness, and the anticipation of potential hazards. In this context, AVs are predominantly based on two groups of technologies: LiDAR and other sensing technologies. In one article on this subject, an image classification model was proposed to evaluate AV safety, and an RTS framework was defined. The framework encompasses the application of both groups of technologies installed on board AVs under different weather conditions [38]. With reference to that study, it should be noted that the contribution of sensors to control efficiency depends on the data obtained. Sensors and cameras incorporated into a vehicle or tool can provide such information, and the number of sensors and their arrangement depend on the task at hand [39]. The experiments conducted in this context concerned monocular, stereoscopic, and enhanced stereoscopic (hyperstereoscopic) information. They showed that the efficiency of remote control depended on the specific driving task and revealed no significant difference in efficiency between monocular and stereoscopic conditions [39]. This is important for the proposed methodology, which focuses mainly on monocular measurements. Other researchers have highlighted the importance of predicting the temporal aspects of gaze-fixation patterns in natural multitasking situations. One solution they propose is to break down complex tasks into modules that require independent sources of visual information. To this end, a softmax barrier model was introduced, based on two key elements: a priority parameter, representing the importance of a given task, and a noise estimate, representing the uncertainty about the state of the visual information relevant to the task [40].

Another technique that has recently been growing in popularity in perception research is immersive virtual reality (VR). In this context, researchers have undertaken projects to assess the behavioural gap, defined as the disparity between a participant's behaviour in a VR experiment and their behaviour in an equivalent real-world situation. A digital twin of a pedestrian crossing was created for this purpose and used to study pedestrian interaction with AVs in both real and simulated conditions. The experimental results show that pedestrians are more cautious and curious in VR [41]. However, the outcome depended on the interface used, which highlights the role of visual information in these processes [41]. In terms of vehicle driving, vision impairments affect two areas. At the level of the eye, they include conditions such as glaucoma and macular degeneration [42]. At the brain level, stroke and Alzheimer's disease are the main issues, especially in older adults. Such disorders can increase the number of errors attributable to reduced visual acuity, contrast sensitivity, and field of vision [42]. Ageing and brain damage can also reduce the useful field of view, increase the frequency of blinking, impair the perception of structure and depth, and reduce the perception of direction [42].

Another relevant study concerns the relationship between drivers' eye movement patterns and driving performance in a dual-task driving paradigm. The first task consisted of following a car while maintaining a set headway from the preceding vehicle; the second task was used to examine the updating of traffic light signals. Performance in the first task was measured by distance, and in the second, by reaction time and accuracy. The authors concluded that the frequency, duration, and spatial distribution of fixations were significantly correlated with driving performance. Driving performance improved with fewer eye movements, longer fixation times, and a narrower spatial distribution of fixations [43]. These findings run counter to the goals and assumptions of our project, which focuses on maximising the use of eye movements to steer vehicles intended for PWSN; this issue will therefore be examined in detail in subsequent studies. The performance of a steering task is affected by visual field deficits [44]. Binocular and monocular visual field deficits have a negative impact on driving skills [44]. Both central and peripheral visual field deficits cause various difficulties, but their degree depends on the severity of the deficit and the driver's capacity to compensate [44]. In this context, the authors of one study discussed visual field deficit compensation: compensation for a central deficit includes reducing driving speed, whereas compensation for a peripheral deficit includes increased adaptive scanning [44].

Many researchers point out that eye movements are determined by space-based attention or, more specifically, by the distinctive features of a traffic scene. The authors noted that some research indicates that visual attention does not specifically select locations of high salience but operates on units of attention defined by the objects in the scene [45]. The authors of that study analysed the relevance of such objects in directing attention using spatial models, including low- and high-level salience models, object-based models, and a mixed model. They concluded that scan path models use object-based attention and selection, which implies that object-level units of attention play an important role in attention processing [45]. Other authors note that navigation and event prediction depend on the optimal allocation of attention through overt eye movements. They refer to two essential parameters: fixations and saccades. In their view, fixations are periods of relative stability during which the eyes focus on the TS, and a fixation indicates that the brain is processing the information fixated upon. Saccades direct the focus of the eyes from one point of interest to another, and no visual information is acquired during saccadic movements [46].

Another of the articles reviewed describes new elements in research on drivers' gaze. It introduces IV Gaze, a pioneering dataset of in-vehicle gaze recordings (from a sample of 125 persons) that covers a wide range of gaze directions and head positions observed in vehicles. The authors' research focuses on in-vehicle gaze estimation using IV Gaze, and they investigated a new strategy for gaze zone classification by extending the solution known as GazeDPTR [47]. This research is considered important because of the fixed datasets used in our method, which nevertheless caused more or less significant errors in identifying the driver's gaze or even head position, as discussed in more detail in the method validation section. In a highly specific study, eye tracking was used to examine the impact of display icon colours on the preferences and perceptions of elderly drivers in human–vehicle interfaces. The study analysed six foreground colours against two background colours. The results showed that elderly drivers find orange icons easiest to locate and yellow icons the most difficult to spot [48]. According to other researchers, continuous tracking of the fixation point enables smoother motion control, meaning that predicting road curvature by tracking a distant point contributes to driving stability. The authors support the hypothesis that drivers focus their gaze between the traffic lane boundaries to set the path of movement [49]. Yet another aspect of this discourse is research on the effect of cognitive load on visual attention, given that cognitive load can impair driving performance. Cognitive load combines with a deficit of external cues when a driver briefly takes their eyes off the road. This problem was studied using an auditory task. The performance measures included drivers' adaptation to changes and their confidence in detecting them. According to the authors, cognitive load reduced participants' sensitivity and self-confidence, regardless of the external cues [50].
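The pure-pursuit controller mentioned in connection with [31] steers the vehicle along a circular arc that passes through a look-ahead (steering) point. In its standard kinematic (bicycle-model) form, the steering angle is δ = arctan(2L sin α / l_d), where L is the wheelbase, l_d the distance to the look-ahead point, and α the angle between the vehicle heading and the line to that point. The sketch below is a generic textbook formulation under these assumptions, not the model validated in [31]; the proportional alternative simply sets δ = kα for some gain k.

```python
import math


def pure_pursuit_steering(x, y, heading_rad, target_x, target_y, wheelbase_m):
    """Steering angle (rad) that puts a kinematic bicycle on an arc through the target.

    (x, y): rear-axle position in metres; heading_rad: vehicle heading in a world
    frame (counter-clockwise positive); (target_x, target_y): look-ahead point.
    A positive result means steering to the left.
    """
    dx, dy = target_x - x, target_y - y
    lookahead = math.hypot(dx, dy)
    if lookahead == 0.0:
        raise ValueError("look-ahead point coincides with the vehicle")
    # Angle between the heading and the line to the look-ahead point, wrapped to [-pi, pi].
    alpha = math.atan2(dy, dx) - heading_rad
    alpha = math.atan2(math.sin(alpha), math.cos(alpha))
    # delta = atan(2 L sin(alpha) / l_d); atan2 is equivalent here because l_d > 0.
    return math.atan2(2.0 * wheelbase_m * math.sin(alpha), lookahead)


def proportional_steering(alpha_rad, gain):
    """Proportional alternative: steer in proportion to the heading error."""
    return gain * alpha_rad


if __name__ == "__main__":
    # Hypothetical example: 1.0 m wheelbase, target 3 m ahead and 3 m to the left.
    delta = pure_pursuit_steering(0.0, 0.0, 0.0, 3.0, 3.0, wheelbase_m=1.0)
    print(f"steering angle: {math.degrees(delta):.1f} deg")
```

In a gaze-driven setting, the look-ahead point would be wherever the fixation lands on the ground plane, which is one way of reading the "looking where one is headed" hypothesis.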

3. Materials and Methods

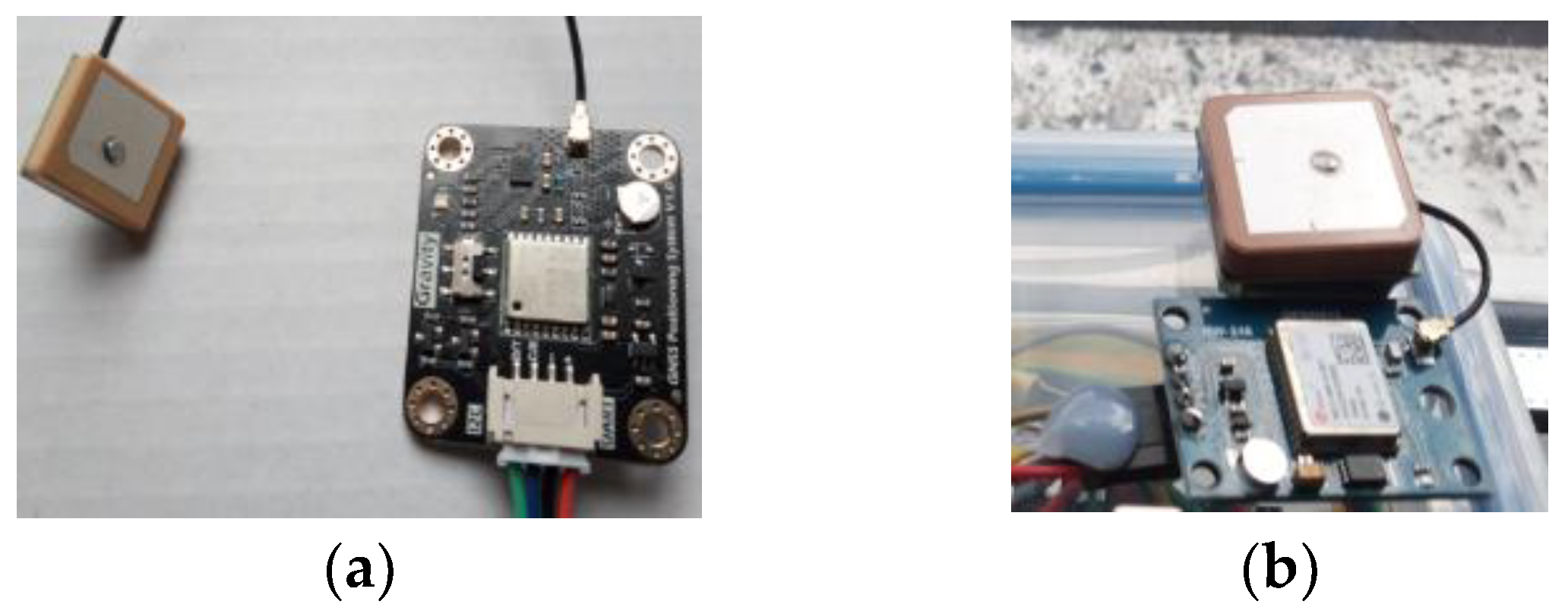

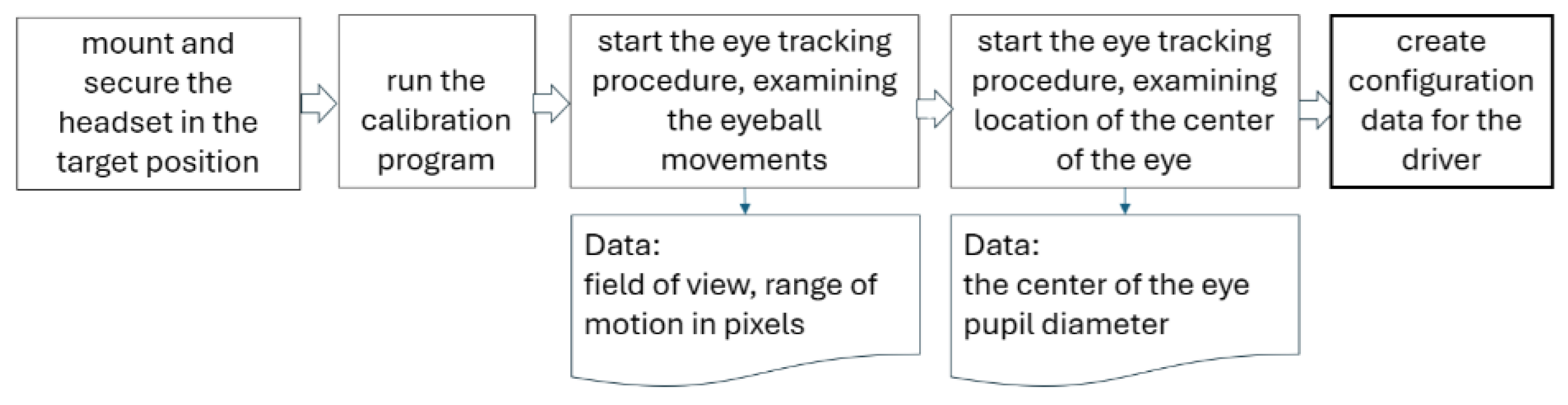

The problem of using the organ of vision to control vehicle movement is complex and requires analysing characteristics and data retrieved from many different information channels. One of the objectives of the study addressed in this paper was to develop a method for the safe control of a cycle intended for PWSN using real-time analysis of the characteristics of the organ of vision. At the same time, the solution was to be inexpensive, and the system was envisaged to be GPS-supported. The fundamental question is whether the vision data obtained with a measurement system are sufficient for the safe control of such a vehicle. Another problem is the application of an appropriate signal separation methodology to the vision characteristics acquired from a person driving an off-road vehicle designed for people with special needs. The very essence of the study is therefore to select adequate tools for tracking eye movements and to determine which movements, and what range thereof, can be effectively used to control a vehicle for PWSN. A further question is whether the organ of vision alone can be used to control the vehicle's movement without resorting to other information channels, i.e., data extracted from speech or gestures. GPS is necessary in this context: it is incorporated into the vehicle's steering system to increase its safety and is installed so as to take precedence over the designed system.
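The requirement that GPS take precedence over the vision-based controller can be pictured as a simple arbitration layer. The sketch below is purely illustrative and rests on assumptions that the text does not state: the vision channel is taken to output a speed and steering request, the GPS layer is taken to know a list of trail waypoints, and the 5 m corridor, the 2 m/s speed cap, and the names `DriveCommand` and `arbitrate` are hypothetical. Distance is measured to the nearest waypoint rather than to the trail polyline, which is a deliberate simplification.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


@dataclass
class DriveCommand:
    speed_mps: float     # forward speed request in metres per second
    steering_rad: float  # steering angle request in radians


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def arbitrate(vision_cmd, position, trail, corridor_m=5.0, max_speed_mps=2.0):
    """GPS safety layer that takes precedence over the vision-derived command.

    position: (lat, lon) from the GPS receiver; trail: list of (lat, lon) waypoints.
    Returns the command actually sent to the drive and a short status string.
    """
    if not trail:
        raise ValueError("trail must contain at least one waypoint")
    lat, lon = position
    nearest_m = min(haversine_m(lat, lon, t_lat, t_lon) for t_lat, t_lon in trail)
    if nearest_m > corridor_m:
        # Outside the permitted corridor: override the vision channel and stop.
        return DriveCommand(0.0, 0.0), "outside corridor"
    # Inside the corridor: pass the steering request through but cap the speed.
    capped = min(vision_cmd.speed_mps, max_speed_mps)
    return DriveCommand(capped, vision_cmd.steering_rad), "ok"


if __name__ == "__main__":
    trail = [(50.0000, 20.0000), (50.0001, 20.0000)]  # hypothetical waypoints
    cmd = DriveCommand(speed_mps=3.0, steering_rad=0.1)
    print(arbitrate(cmd, (50.00002, 20.0), trail))
    print(arbitrate(cmd, (50.0010, 20.0), trail))
```

Keeping the safety layer as a separate function that only ever restricts the vision command makes the precedence explicit: the eye-driven channel can propose, but never bypass, the GPS check.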

The methods presented in this article for tracking driver gaze have been established for approximately 20 years and have a rich citation base [51,52,53,54,55,56,57]. They have been tested in numerous practical applications. However, they will be extended to include current state-of-the-art object tracking methods based on neural networks. It is worth remembering that data about the visual system are not provided by the eye image alone; such data can also be obtained from the electrical potentials of the eye muscles. Furthermore, processes occurring in the eye, such as blinking, provide many other valuable clues about this organ. One of them, blinking, is presented below.

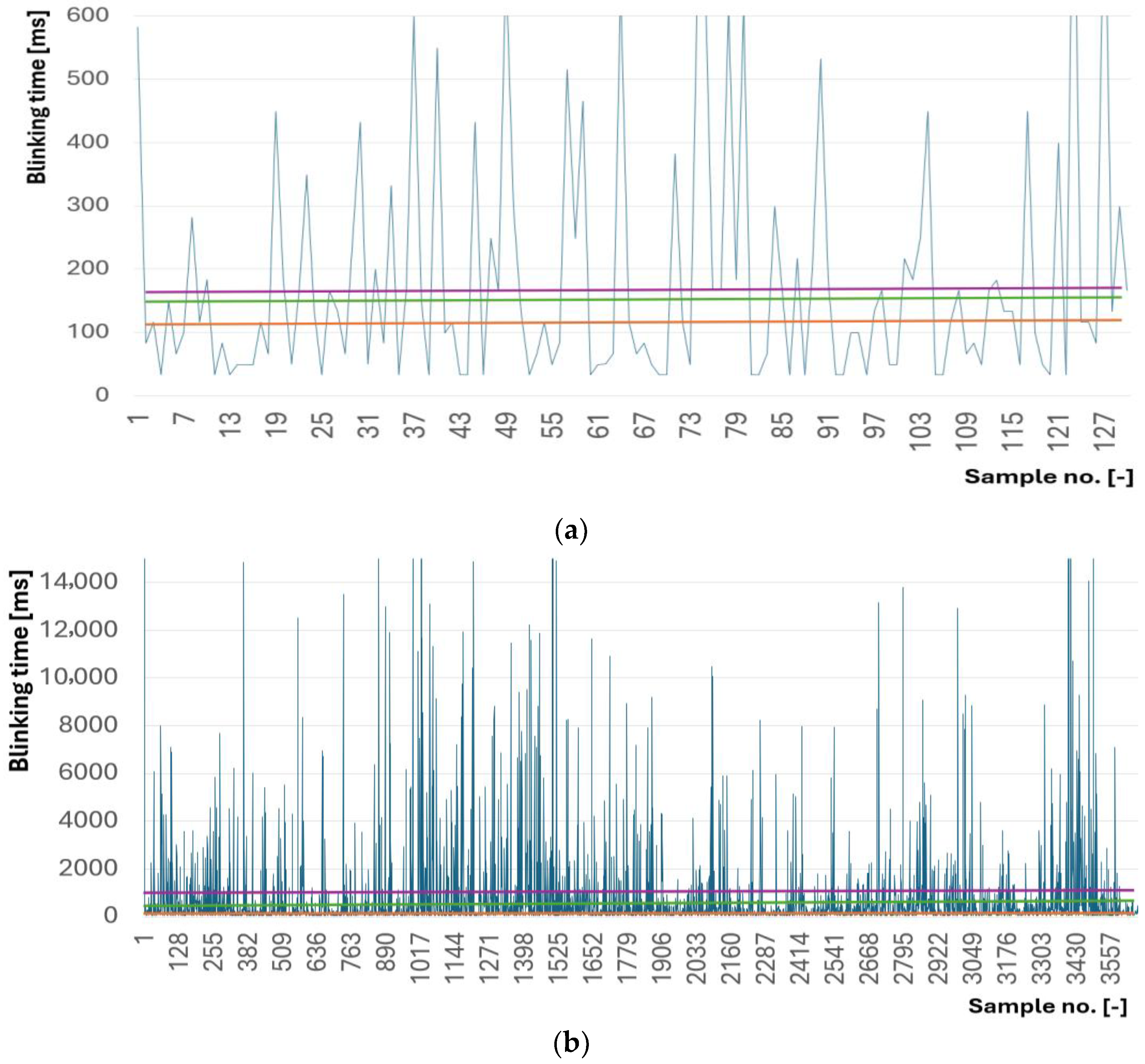

The authors have some experience in analysing microsleep and blinking in drivers [58,59]. In our view, data on driver microsleep and blinking can be analysed so that this behaviour can be used to start, steer, and brake vehicles intended for PWSN, as discussed later in the paper. This phenomenon (closing the eyes for a shorter or longer period) has the potential to be developed for use in vision control systems.

Although using vision techniques to process visual characteristics is not new in itself, applying them to control off-road vehicles for PWSN is. This approach analyses the possibility of using visual characteristics to steer an off-road vehicle intended for PWSN, which typically negotiates terrain that is unfavourable for motion control: uneven, with large elevation differences, often narrow, and effectively reducing the vehicle’s ground clearance. In contrast, studies in the literature mainly concern the movement of AVs on public roads, which are even and generally straight, the DARPA project being one exception. Unlike autonomous vehicle control on public roads, our study benefits from the typically low traffic density on the mountain trails used by PWSN.

Another important aspect of the problem is the development of an entire control system to be installed on board vehicles intended for PWSN at the lowest possible cost relative to the total vehicle price. A further novelty is defining the vehicle’s control logic using characteristics obtained from the organ of vision. The way these characteristics are processed, and used selectively and sequentially, is specifically defined to achieve effective and safe vehicle control. In a broader sense, controlling such a vehicle also entails operating its subsystems using the organ of sight, at least as far as this is possible. Throughout the process, however, one should keep in mind that the vehicle is driven and operated by people with special needs, who must be provided with a higher level of safety.

The total cost of the prototype devices engineered under the research project varies, but it is low in all cases, amounting to several hundred PLN (as of 29 September 2025, 1 EUR = 4.29 PLN). The cost of a reference device available on the market and intended for commercial use, e.g., Tobii Eye Glasses, also used in the study, is approx. PLN 130,000, which exceeds the price of even expensive cycles for PWSN [60]. The same applies to the ET solutions from the now-defunct company SMI (which for a dozen or so years supplied the most popular mobile ET solutions). To be used for controlling the movement of vehicles for PWSN, such glasses must additionally be programmed using an SDK. Furthermore, operating commercial ET glasses is no simpler than operating the devices we propose.
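For scale, the quoted prices can be converted at the stated rate of 1 EUR = 4.29 PLN. The 500 PLN prototype figure used below is an illustrative assumption standing in for "several hundred PLN", not a cost reported in the study:

$$
\frac{130\,000\ \text{PLN}}{4.29\ \text{PLN/EUR}} \approx 30\,300\ \text{EUR},
\qquad
\frac{500\ \text{PLN}}{4.29\ \text{PLN/EUR}} \approx 117\ \text{EUR},
\qquad
\frac{130\,000\ \text{PLN}}{500\ \text{PLN}} = 260.
$$

Under that assumption, the commercial reference device costs roughly 260 times as much as a single prototype.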

Several prototype variants were studied, all based on measuring vision characteristics with small cameras mounted opposite the right and/or left eye, or in front of the whole face. Our eye tracker is known as People GBB_eye_tracker (GBB_ET). The cameras and camera sub-systems presented in this paper are prototypes and are typically installed on jeweller’s goggles or in other systems that allow flexible mounting on the driver’s head. They can be incorporated into a GoPro-type set or mounted on a cap resembling a jeweller’s headpiece (or goggles) with a visor (including an add-on scene camera above the eye-monitoring camera). Jeweller’s goggles and headpieces are devices designed for precision work, with a frame to which a magnifying glass can be fitted. In the prototype version, a high-speed camera (120 fps) is often mounted on special glasses. The cameras used in the prototypes operate at frame rates of 15, 30, and 120 fps. The resolutions typically used in practice range from 1280 × 720 to 1920 × 1080 pixels (although the cameras used in this area are capable of much more, reaching 5–12 Mpx).
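The frame rates quoted above bound how finely short eye events, such as eyelid closures, can be resolved. The arithmetic can be sketched as follows; the 150 ms closure is an assumed example duration, not a value measured in the study.

```python
import math


def frame_period_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def frames_covering(event_ms: float, fps: float) -> int:
    """Number of whole frame periods that fit inside an event of the given duration."""
    return math.floor(event_ms * fps / 1000.0)


if __name__ == "__main__":
    # 150 ms is a hypothetical eyelid-closure duration used only for illustration.
    for fps in (15, 30, 120):
        print(f"{fps:>3} fps: {frame_period_ms(fps):5.1f} ms/frame, "
              f"{frames_covering(150, fps)} frames in a 150 ms closure")
```

At 15 fps such an event spans only two frame periods, whereas at 120 fps it spans eighteen, which is one reason the higher-speed cameras are attractive for blink-based control.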

The first of the prototypes is based on a single camera installed in front of the monitored eye of the PWSN vehicle driver (equivalent to a monocular). The idea is that the camera should obscure the driver’s field of view as little as possible. Ultimately, the cameras are to be built into the frames of the goggles, as in the commercial devices from SMI (originally a Berlin-based German company, acquired by Apple, Cupertino, in 2017) or Tobii (Danderyd, Sweden) [61]. A variant of this prototype is a group of devices built around a camera installed centrally in front of the driver’s eyes on a GoPro-type boom. This kind of prototype features one or two independent cameras covering the field of view of both eyeballs or of the entire face. Further variants are devices mounted on jeweller’s goggles or on a headpiece with a visor. The headpiece reduces reflections, while the visor is used to mount a separate scene-oriented camera that monitors the area in front of the vehicle. The device with a scene camera is functionally similar to commercial eye-tracking glasses: a headpiece fitted with two cameras at the same time, one tracking eye movements and the other observing what is in front of the vehicle, provides the functionality of a conventional eye tracker. Independently of the foregoing, a situational (silhouette-type) camera, as it is typically referred to, is mounted on board the vehicle for PWSN; it supports driver analysis by monitoring the driver’s entire silhouette or only the head. The PWSN vehicle itself may also be equipped with a scene camera independent of the driver’s scene camera, the two providing different, often disparate, perspectives of the traffic scene. Two independent scene cameras also make it possible to separate the visual data used for control purposes. For the prototypes presented in this article, the complete setup may seem complicated; in the commercial version, all the gear will be simplified as much as possible and integrated into the vehicle.

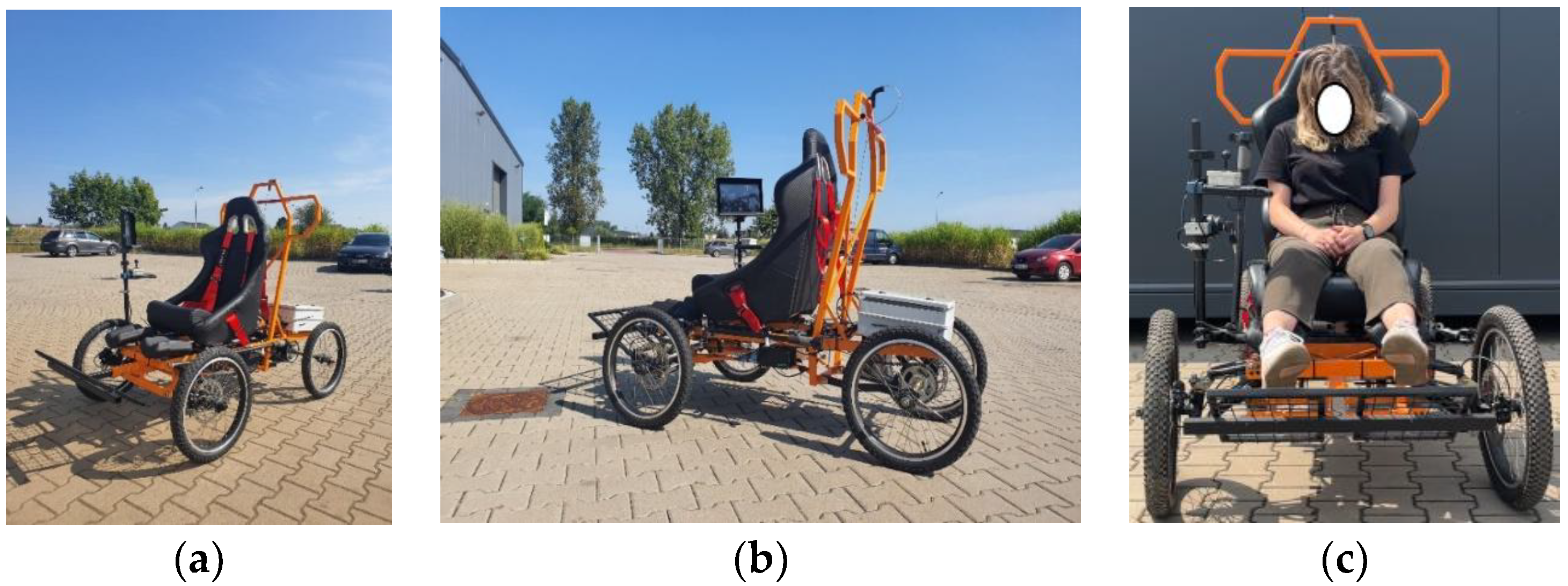

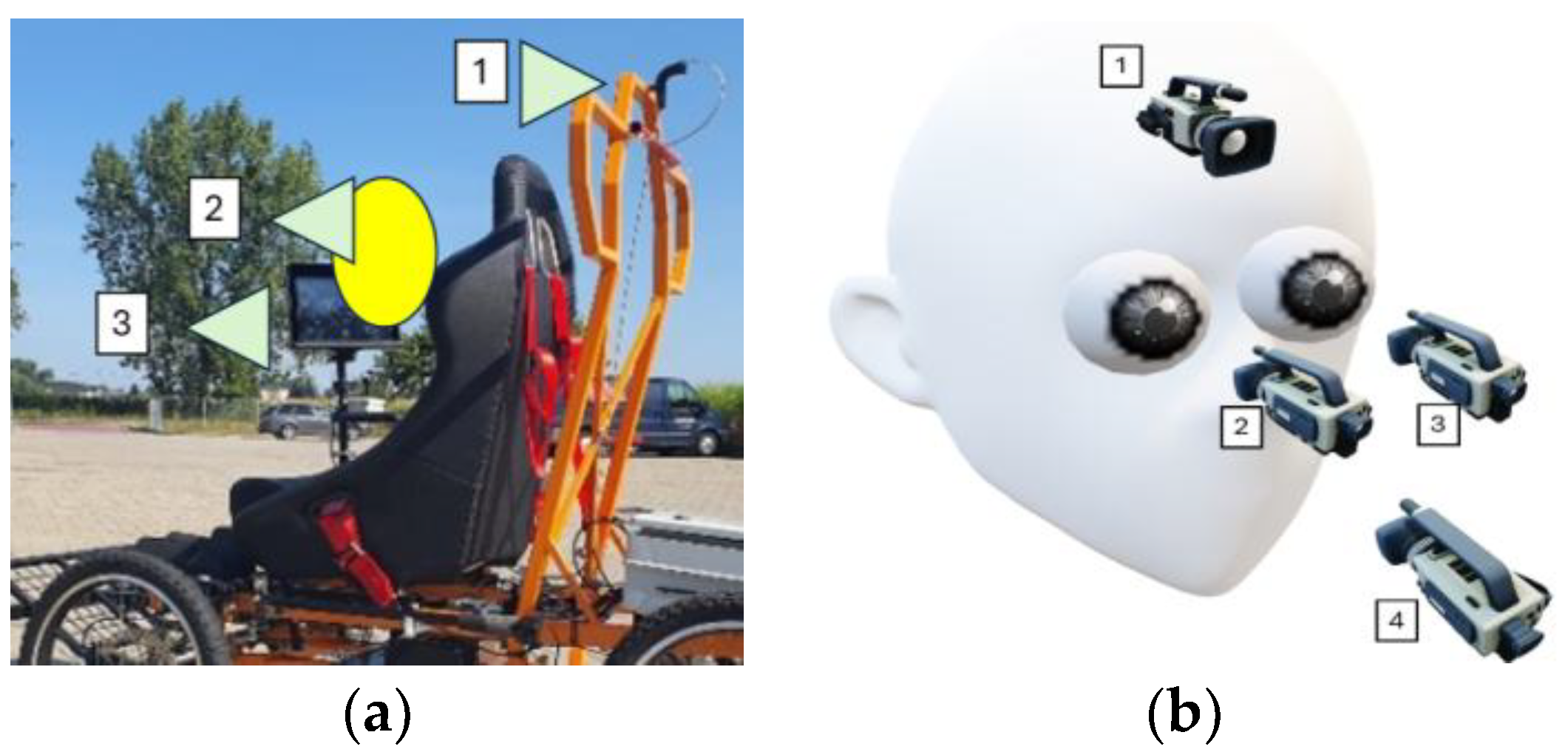

Figure 2 shows the general layout of the prototype measuring equipment in use against the background of the vehicle for PWSN and the driver’s face.

Figure 2a shows the layout of the vehicle’s cameras. The traffic scene camera, also known as the vehicle foreground camera (1), is mounted on the structural frame at the highest point of the vehicle. The vehicle frame dimensions can be adjusted to the driver’s height. The gaze-monitoring camera or camera system (2) is mounted on the driver. The silhouette camera (3) is installed on a special boom attached to the support frame.

Figure 2 also shows the seat belt system used to keep the driver stable in the vehicle.

Figure 2b presents the spatial arrangement of individual cameras in relation to the driver’s face. Driver monitoring can cover the organs of vision, head, silhouette, and traffic scene. Procedures for detecting the biological and behavioural characteristics of a vehicle driver can be divided into four main groups: eye motion, face motion, body motion, and scene object detection, only the first two of which have been discussed in this article. In terms of the control of the vehicle for PWSN, one should distinguish between steering—meant as the operation of setting the direction of the vehicle movement and changing its speed—and the handling of other vehicle sub-systems. In the future, vision-based operation of certain PWSN vehicle sub-systems is also envisaged (employing the concept proposed). Essentially, the concept in question involves controlling the vehicle movement without the use of limbs. In this context, several vehicle control scenarios can be defined. The first, and most difficult to accomplish, consists of using only the analysis of movement and the visible position of the eyes, or more precisely—the pupils. The data utilised according to this approach is the information about fixations, saccadic eye movements, and the pupil size. In other words, once the data have been adequately converted, one extracts information about the points in the traffic scene where the driver’s gaze is fixed for a certain time (fixations) and the movements between these points (saccades), although both kinds are of completely different nature. Additional control information is derived from whether or not the driver closes their eyelids (blinking). The fact that blinking is used for vehicle control has been described further on in the paper with reference to the authors’ empirical observations of this process [

62]. The second scenario concerns the support for the characteristics of the sight organ using additional information retrieved from the peribulbar area, where adequate temporary markers (e.g., direction arrows) are placed. In this case, the marker itself can be a pictogram and contain information used to control the vehicle, e.g., concerning the direction of movement or speed control (up or down). The marker can be placed both on the eyelid as well as elsewhere near the eyes. For example, eye squinting causes the marker to be placed in the camera’s field of view. The third scenario for the vehicle control involves the use of an additional camera to monitor the head area, where facial gestures and/or whole head movements are also used for vehicle control. The fourth case concerns a situation where a general/situational (silhouette) camera placed on the PWSN vehicle frame covers the driver’s torso. With such a system layout, the driver can use upper limb movements or hand gestures to control the vehicle. This article addresses the first three scenarios. However, all the four cases of control of the driver’s characteristics have been illustrated in

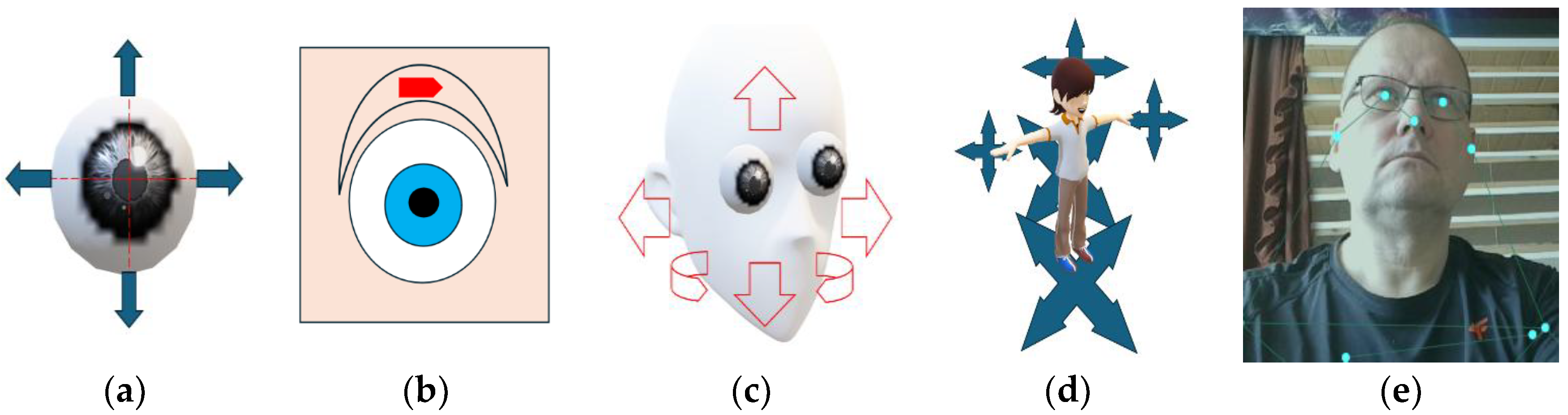

Figure 3.
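The first scenario depends on separating fixations from saccades. A common way of doing this is velocity-threshold identification (I-VT), sketched below under stated assumptions: pupil centres are given in frame pixels at a fixed frame rate, and the speed threshold and minimum run length are hypothetical tuning parameters, not values used in GBB_ET.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def classify_ivt(points: List[Point], fps: float, threshold_px_s: float) -> List[str]:
    """Label each interval between consecutive samples as 'fixation' or 'saccade'.

    Speed is measured in frame pixels per second (no mapping to visual angle);
    threshold_px_s is an assumed tuning parameter.
    """
    labels = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        speed = math.hypot(x1 - x0, y1 - y0) * fps
        labels.append("saccade" if speed > threshold_px_s else "fixation")
    return labels


def fixation_centroids(points: List[Point], labels: List[str],
                       min_points: int = 3) -> List[Point]:
    """Merge runs of fixation intervals into fixations and return their centroids."""
    centroids: List[Point] = []
    run: List[Point] = []

    def flush() -> None:
        # Keep the run only if it is long enough to count as a fixation.
        if len(run) >= min_points:
            xs, ys = zip(*run)
            centroids.append((sum(xs) / len(xs), sum(ys) / len(ys)))
        run.clear()

    for i, label in enumerate(labels):
        if label == "fixation":
            if not run:
                run.append(points[i])  # first sample of a new fixation
            run.append(points[i + 1])
        else:
            flush()  # a saccade ends the current fixation
    flush()
    return centroids
```

Velocity thresholds are simple and fast enough for a Raspberry Pi; a dispersion-based classifier (I-DT) is a common alternative when the pupil signal is noisy.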

Figure 3 shows the potential reference systems for the vision control system. The first (Figure 3a) is based solely on eye tracking; the eyeball is the only source of data. The second relies on additional markers in the eye socket area (Figure 3b), placed on the eyelids or around the eye socket to obtain additional control data. The third takes the driver’s head orientation into account, while the fourth considers the orientation of the driver’s entire body. Dedicated driver silhouette modelling systems are available on the market at prices starting at around EUR 100–200. These are called pose estimation systems, in which the head is represented by “five to seven joints”, and several dozen additional points on the rest of the body can serve as references for it. At this stage of the research, however, we have not yet used such stereoscopic camera systems.

The choice of the procedures used to control vehicle movement, and of the on-board devices to be installed, as discussed in this article, ultimately depends on the driver’s health condition, the algorithm’s efficiency, and, from a commercial perspective, the final price of the entire control system. The control system is intended to increase the accessibility of this form of (mountain) tourism for people with special needs. All the prototypes presented in the paper cost relatively little compared with the cycle itself, with an estimated price of at most several hundred PLN. Therefore, the cost of the most advanced PWSN vehicle control system will not exceed 5–10% of the market price of the available vehicles for PWSN, which will allow even the most excluded group of users to control such vehicles. Monitoring the driver’s eyes can follow several approaches. One involves controlling the vehicle using information retrieved from a single eye. Another uses data from both eyes, which is much easier and can be achieved with one or two cameras, depending on the mounting solution (Figure 4). A third approach recognises that separating the control signals is difficult in the first two cases and therefore uses additional information.

Figure 4 shows views from a camera monitoring the eyes of a PWSN vehicle driver. The choice of the optimal headset mounting method has not yet been investigated.

Figure 4 illustrates some real-life problems encountered when tracking the driver’s gaze. Firstly, the eye can move towards an extreme position with a large amplitude (Figure 4b). Moreover, this may be accompanied by a sudden turn of the entire head (Figure 4a). Observing only one eye ignores the differences between the two eyeballs; even if it is not visible, the driver may have a slight squint (Figure 4b). An individual’s facial features can make it difficult to record extreme eye positions (Figure 4c). As these three views, selected from a wide range of problems related to eye position monitoring, show, this is by no means a simple issue.

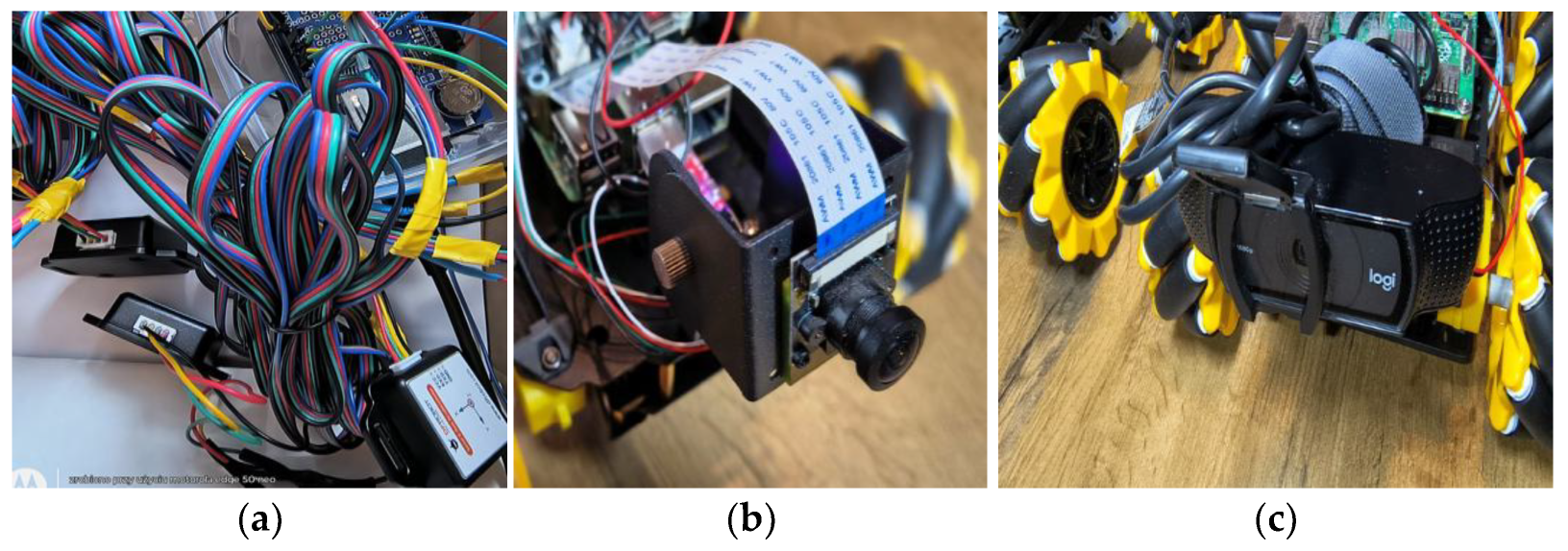

Different measuring device prototypes were used over the course of the study. The precursor of the measuring equipment was a device (a video game controller) from 2018, based on position measurements from three IR diodes. This device was used to control the position of a moving object in a 3D simulator game, a control method that was popular in the 1990s and early 2000s, especially in computer games and simulators. The next device was a prototype based on a HAM radio kit with a Logitech C270 camera (Logitech, Lausanne, Switzerland) (Figure 5a). This prototype was the first to apply computer vision procedures from the OpenCV library to determine the pupil position [63].

Figure 5b,c illustrate the successive stages of development of the measuring devices used for gaze monitoring in drivers of vehicles for PWSN. The prototypes are typically mounted using inexpensive off-the-shelf components; in the future, 3D-printed components will be used. The sets shown in Figure 5a run on Windows, while the others run on Raspbian, now Raspberry Pi OS (Raspberry Pi Holdings, Cambridge, UK). Various types of cameras were used in the prototypes, both with and without autofocus, for daytime and night-time operation (including cameras without an IR filter). Most of them belong to the so-called spy camera category, i.e., units without a PCB (printed circuit board), whose electronic circuits are mounted directly on the Camera Serial Interface (CSI) ribbon cable. This leaves the driver a wider field of view and obstructs less of the traffic scene. Additionally, the small size of the camera head (8 × 8 mm) will allow the device to be hidden in the frame of the glasses in the commercial version. High-speed cameras operating at frame rates above 100 fps (up to 300 fps, which is faster than the popular commercial eye trackers on the market) are currently being tested. The first (and largest) of the cameras, shown in Figure 5a, is a typical webcam: the Logitech C270 HD provides 720p resolution at 30 fps. The other cameras depicted in Figure 5 connect via various CSI connectors or via USB (the latter enabling operation under Windows, with native drivers only). Another camera, the 5 Mpx OV5693 manufactured by OmniVision Technologies, is compatible with Raspberry Pi (Raspberry Pi Holdings, Cambridge, UK) and Jetson Nano (Nvidia, Santa Clara, CA, USA) [64]. The remaining cameras are mainly intended for the Raspberry Pi Zero. These include the ZeroCam OV5647 5 Mpx night vision camera, the ZeroCam OV5647 5 Mpx with a fisheye lens and a field of view of up to 160 degrees, and finally the smallest model, the OV5647 5 Mpx spy cam. The latter features a flexible cable without a PCB, which makes it convenient to install in the field of view of the PWSN vehicle driver. The last unit tested, catalogue number OV5640 5 MP, is a USB camera. Such cameras can also be used under Windows, making it possible to process more complex video footage on PCs with greater computing power; in such cases, the images can be processed by the PWSN vehicle’s on-board computer. In some of the cases addressed in the study, the images of the eye(s) are processed by Raspberry Pi minicomputers; where these do not have enough capacity, the video footage is processed under Windows 11. The following hardware platforms were used in the research: the Raspberry Pi Zero 2 W (512 MB RAM, 4 × 1 GHz, WiFi, Bluetooth) and the Raspberry Pi 4 Model B with 8 GB of RAM. The latter is powered by the Broadcom (San Jose, CA, USA) BCM2711 quad-core 64-bit ARMv8 Cortex-A72 processor running at 1.8 GHz and features dual-band 2.4/5 GHz WiFi, Bluetooth 5/BLE, and an Ethernet port with speeds up to 1000 Mbps [65,66]. Minicomputers with wireless connectivity were deliberately chosen for communication within the PWSN cycle prototype system.

The Dell 5070 computer with an Intel® Core™ i3-8100 CPU (3.60 GHz) and 32 GB of memory, and the Microsoft Surface Laptop Go 2 with an Intel® Core™ i5-1135G7 processor, were used for video analysis under Windows. Although the OpenCV library can be installed on the Raspberry Pi Zero, its severely limited computing power (only a few GFLOPS, e.g., about five) meant that it was used mainly for real-time image acquisition. Models from the 4B upwards (8–35 GFLOPS) are better suited for processing, although their significantly larger dimensions limit their suitability for mobile projects [67]. However, further integration of the measuring system goes beyond the scope of this article.

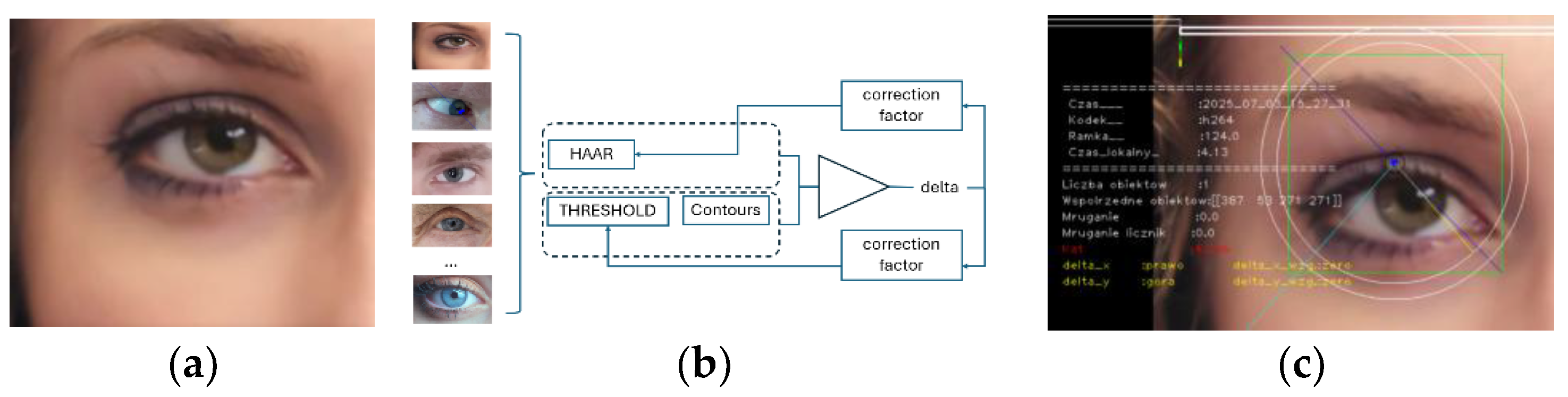

8. Software

In the GBB_ET software package, measurement applications designed for the analysis of eye characteristics can be fundamentally broken down by the camera’s field of view and the objects that can be analysed within that field. Accordingly, individual programmes can be divided into those which analyse eye movement (eye movement detector) and the entire face (face detector), the latter often being combined with eye analysis. This is because eye detection takes place in individual iterations related to face detection. Another application is a body detector, which analyses the movement of the entire body, and particularly that of the lower and upper limbs, including gestures made with the head or hands.

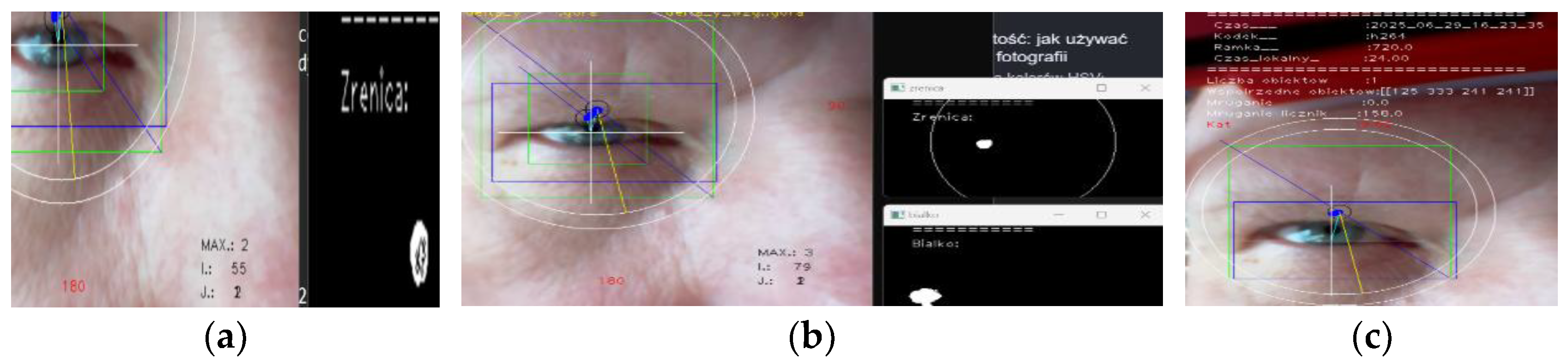

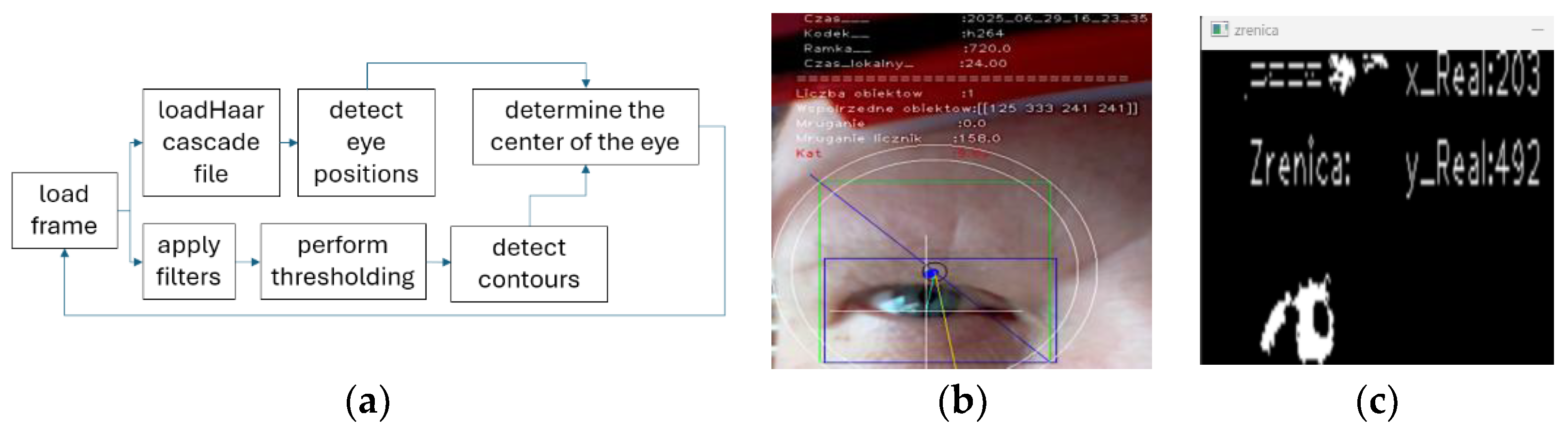

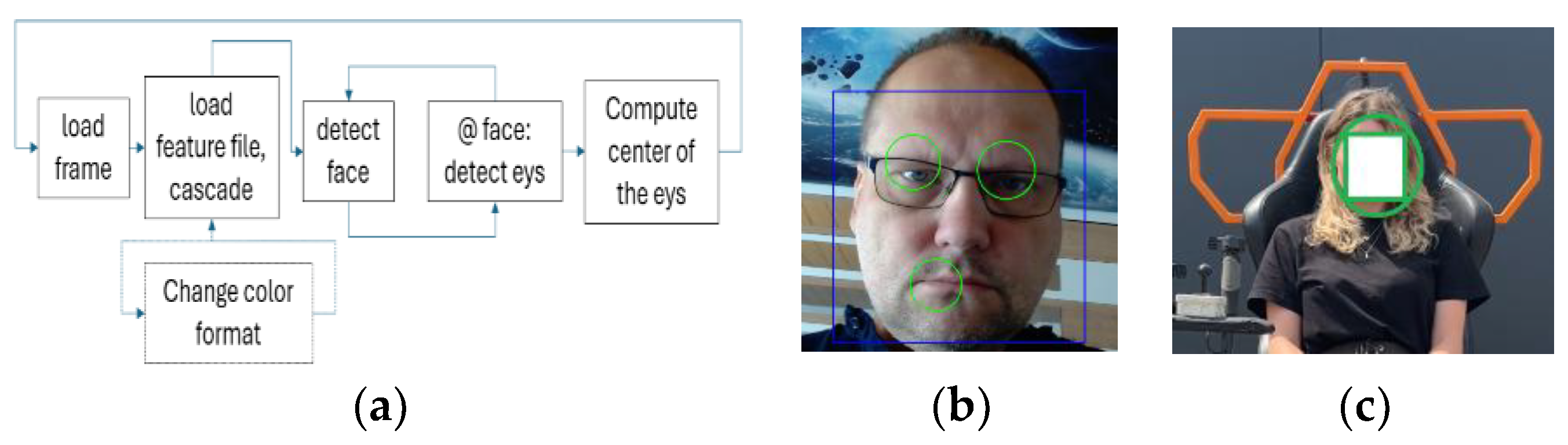

The eye position analysis programme comprises two procedures. The first is based on Haar-like features: it locates the eyes by comparing the image with pre-defined features, using standard OpenCV library procedures. Haar cascades, as they are commonly referred to, provide an effective way of detecting faces; they are fast but relatively inaccurate. The input data for the detection procedure are stored in XML files of the haarcascade_*.xml type, which determine the procedure’s accuracy. OpenCV and other providers supply ready-to-use files for various purposes, including the recognition of selected features. In this procedure, the features are grouped into stages, known as cascades, and compared step by step. In the first step, only a few of the most important features are checked. If there is a match, the procedure checks the next, larger group of features, then the subsequent one, and so on; because most non-matching image regions are rejected in the early stages, processing is fast. If all the feature comparisons succeed, the object is identified and returned as a rectangular area in which it was recognised. This procedure is also used to recognise road signage when a cycle for PWSN is used on the road network.

As the research has revealed, although very fast and not requiring advanced (time-consuming) processing of additional image frames, the Haar procedure is not accurate. Its precision can, however, be improved in many ways, e.g., by changing (updating) the *.xml feature files or by developing custom files based on the characteristics of known vehicle end users with special needs. A diagram of such a procedure is shown in Figure 12. In this approach, the features would be constructed from the PWSN cycle users themselves, thus increasing the algorithm’s precision. The accuracy of these methods is discussed at the end of the article. The second method requires more operations on the image but is much more accurate. In this procedure, the image is first colour-converted. Next, colour thresholding (elimination of colour variability) takes place in the colour space of a given format. The procedure looks for black in the frame, which corresponds to the pupil (ignoring health conditions that may affect this procedure, which concern ca. 1% of the population). Once the image has been processed accordingly, and assuming that the eye has been correctly framed, only the pupil remains. Next, the pupil contour is found using standard OpenCV library procedures, and the actual centre of the pupil is established, typically with much greater precision than the Haar procedure provides. Unfortunately, as tests have shown, the second procedure is susceptible to various types of artefacts in the frame image, such as reflections from car windows, which affect the recorded colour space. This undesirable phenomenon is eliminated by applying filters on the glasses (as in a conventional ET device) or by using goggles mounted on jeweller’s caps with visors (Figure 5b,c). The second procedure clearly requires more intermediate steps with the OpenCV library, and therefore more time, which can be critical when controlling a vehicle for PWSN.

Information about the driver’s intentions is conveyed not only by the eyes but also by head and body movements (this article only touches upon characteristics obtained in the visible light spectrum). For this purpose, detection is also conducted using a vehicle camera, known as a situational/silhouette camera, together with procedures for detecting the driver’s face and its elements. Eye detection procedures are generally applied in conjunction with face detection procedures, as the detected face provides a spatial reference for locating the eyes. In the GBB_ET software package, however, these procedures are combined in some modules and separated in others, depending on the system configuration, as illustrated schematically in Figure 13.

Figure 13b clearly shows that the procedure can misidentify many objects (in this case, the corner of the mouth is interpreted as a potential eye location). It therefore requires further modification, the results of which are shown in Figure 13c. In both cases, the detection scheme can be extended to the entire body of the PWSN vehicle driver or to individual limbs, separately or in pairs. From this perspective, it is also possible to detect hand gestures (sensors dedicated to microcontrollers can also be used for this purpose: Arduino, Monza, Italy; Raspberry Pi; STM, Plan-les-Ouates, Switzerland; etc.). The authors have some experience in using LoRa networks to navigate vehicles intended for PWSN. People who are incapable of operating wheelchairs should be offered the option of having them remotely controlled by pilots/operators via such a network (a kind of virtual guidance). Another option is to control the PWSN vehicle using a wireless LoRa network dedicated to PWSN and specifically designed by the research team. In such cases, the PWSN vehicles would be remotely controlled along a route by guides assigned to persons with special needs. Navigation using the LoRa network for PWSN has been described in a separate publication [72]. Such remote navigation can be conducted over distances of up to several kilometres, while the person using the PWSN vehicle always remains under the guide’s strict oversight.

The purpose of the software that processes recorded footage of eye movements is to generate steering and control signals for the PWSN vehicle, i.e., to determine when and where the vehicle should go left, right, or forward, start, stop, or reverse, etc. It is also to set specific objectives for the drive controller, which are transferred to and implemented as clear messages for the PWSN vehicle’s drive unit(s) (it has also been assumed that this measurement system will be adapted to other mountain cycles for PWSN). Messages such as go, stop, brake, accelerate, go left/right, or turn lights on/off are intended to reflect the driver’s intentions, communicated via the organ of vision and potentially supported by markers and gestures, including facial expressions.

Figure 14a,b shows the messages assigned to specific pupil positions read while driving (complemented by situational context, e.g., from GPS data and other sources). As the pictures demonstrate, the pupil position can be determined for each direction of movement within a certain range defined in the calibration procedure. Thus, a left turn can be graded as slight, medium, or full, and the same applies to moving to the right. In subsequent studies, we intend to develop a fuzzy logic system for this purpose. Upward and downward eye movements mean acceleration and deceleration, respectively (the vehicles are equipped with electric motors, which generate high torque instantaneously and therefore accelerate dynamically). Some of the tests were performed in infrared but, apart from accounting for the specific lighting conditions of the traffic scene, they have not contributed much to the research.
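The mapping from a calibrated pupil position to a graded command can be sketched as below. This is a crisp stand-in for the fuzzy system the authors plan, not their method. The `Calibration` fields, the 0.15 dead zone, the 0.4/0.75 grade boundaries, and the mirror-image convention for a camera facing the eye are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Calibration:
    """Hypothetical per-driver calibration result, in frame pixels."""
    cx: float                 # pupil centre when looking straight ahead
    cy: float
    max_dx: float             # largest comfortable horizontal excursion
    max_dy: float             # largest comfortable vertical excursion
    dead_zone: float = 0.15   # normalised offset treated as "no command"
    mirrored: bool = True     # a camera facing the eye sees a mirror image


def _level(offset: float) -> str:
    """Crisp grading of a normalised offset in [0, 1] (assumed boundaries)."""
    if offset < 0.4:
        return "slight"
    if offset < 0.75:
        return "medium"
    return "full"


def pupil_command(cal: Calibration, x: float, y: float) -> Tuple[str, Optional[str]]:
    """Map a pupil centre to (command, grade) using the calibrated ranges."""
    nx = max(-1.0, min(1.0, (x - cal.cx) / cal.max_dx))
    ny = max(-1.0, min(1.0, (y - cal.cy) / cal.max_dy))
    if cal.mirrored:
        nx = -nx  # image right corresponds to the driver's left
    if max(abs(nx), abs(ny)) < cal.dead_zone:
        return ("hold", None)
    if abs(nx) >= abs(ny):
        return ("right" if nx > 0 else "left", _level(abs(nx)))
    # Image y grows downwards, so a negative ny means the pupil moved up.
    return ("accelerate" if ny < 0 else "decelerate", _level(abs(ny)))
```

The dominant axis decides the command, so a diagonal glance is never read as simultaneous steering and acceleration; a fuzzy controller would instead blend both outputs.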

Figure 14 shows that during vision analysis, contextual information about the functioning of this organ is available.

One more problem to consider is that of torsional movements, which are best read using EMG electrodes installed on the temple.

Therefore, using only this form of PWSN vehicle control (i.e., eye steering) can be dangerous. Another issue is separating deliberate pupil movements from involuntary ones or from those related to observing the traffic scene (more broadly) in the natural environment; after all, observing nature is precisely why mountain trails are used. Consequently, the procedures have been modified by introducing additional information and a system of two cameras or a face camera that monitors both eyes (mounted on a GoPro-type support). A left turn is commanded by closing the right eye (as a precaution when changing direction) for a specific period of time, set relative to the driver’s current blinking characteristics measured on an ongoing basis, followed by a brief glance in the direction of movement or, when the vehicle’s situational camera is in use, a slight turn of the head to the left. To turn right, one closes the left eye and, if possible, performs a short head motion to the right. Forward movement is commanded by moving the eyes upwards, possibly accompanied by a head movement or with a specific eye closed (this is configured in the settings file for the adopted control mode). When steering the vehicle, one should hold the eyes in a given position for longer. The health implications of this approach have not been studied, but according to the literature, intensive activity in terms of mobility and visual acuity should also promote rehabilitation of the sight organ, which is an additional benefit of this solution. Slowing the vehicle down involves moving the eyes downwards, with the head also moving downwards if possible, and with one eye closed.
To separate these signals from movements related to observing nature, an additional gesture can be made, e.g., raising the free hand to communicate the intent in the field of view of the situational camera. The choice of the decision-making hand is determined by the functionality of the driver’s dominant hand and, correspondingly, by whether the movement manipulator is installed on the left or right side of the PWSN vehicle (Figure 1a,b).
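The single-eye closure rule described above (right eye closed for a left turn, left eye closed for a right turn) can be sketched as a run-length detector over per-frame eye states. The sketch assumes that an upstream detector already reports whether each eye is open; the 500 ms minimum closure is a hypothetical value chosen to be longer than a spontaneous blink, whereas the text ties it to the driver’s measured blinking characteristics.

```python
import math
from typing import List, Optional, Sequence, Tuple


def wink_commands(frames: Sequence[Tuple[bool, bool]], fps: float,
                  min_closed_ms: float = 500.0) -> List[Tuple[int, str]]:
    """Turn sustained single-eye closures into steering commands.

    frames holds one (left_open, right_open) pair per video frame. Frames
    with both eyes closed (ordinary blinks) or both open reset the run.
    min_closed_ms is an assumed threshold, not a value from the study.
    """
    min_frames = math.ceil(min_closed_ms * fps / 1000.0)
    commands: List[Tuple[int, str]] = []
    run_eye: Optional[str] = None
    run_len = 0
    for i, (left_open, right_open) in enumerate(frames):
        if left_open and not right_open:
            closed: Optional[str] = "right"
        elif right_open and not left_open:
            closed = "left"
        else:
            closed = None  # both open, or both shut during a blink
        if closed is not None and closed == run_eye:
            run_len += 1
        else:
            run_eye, run_len = closed, (1 if closed else 0)
        # Emit once, at the frame where the closure first reaches the threshold.
        if closed is not None and run_len == min_frames:
            commands.append((i, "turn_left" if closed == "right" else "turn_right"))
    return commands
```

Requiring one eye to stay open is what distinguishes a deliberate command from a natural blink; the confirming glance, head turn, or hand gesture would be checked as a further condition before the command reaches the drive.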

The diagram below represents a simplified control model of the vehicle in question. In this context, the vision control system can be divided into a camera-based part, which monitors the area in front of the vehicle, and a part based on a Lidar system. The data retrieved from the eye-based control subsystem are superimposed on this information, which limits the extent to which the vehicle can be steered solely on the basis of control data obtained from the organ of vision. This limitation ensures that the target direction of the vehicle’s motion does not cause traffic safety issues. It is additionally enforced by the accelerometer and GPS systems. The GPS and navigation subsystems also make use of GIS data; to this end, most of the mountain trails in two Polish provinces were surveyed for this project.

As of now, the least reliable subsystem is the one that uses data extracted from the driver’s blinking. Unfortunately, this problem requires further time-consuming and costly research. The drive control (CD) can be formulated as an ordered seven-tuple:

$$CD = \langle ACC,\ ET,\ GPS,\ VC,\ GIS,\ ENG,\ LID \rangle$$

where

ACC—data from the accelerometer system,

ET—data from the eye tracker system,

GPS—data from the GPS system,

VC—scene camera data, silhouette camera,

GIS—selected data from the GIS system,

ENG—current data from the drive system,

LID—data from the LIDAR system.
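One way to read the tuple CD is as the input to an arbitration step in which the safety channels may override the eye-derived command. The sketch below is a hypothetical illustration, not the authors’ controller (which they defer to a later article). The field encodings, the derivation of a lateral trail offset from GPS against GIS trail geometry, and all numerical limits are assumptions.

```python
from typing import NamedTuple


class DriveControl(NamedTuple):
    """Hypothetical encoding of CD = <ACC, ET, GPS, VC, GIS, ENG, LID>."""
    acc_tilt_deg: float      # ACC: vehicle tilt from the accelerometer
    et_command: str          # ET: command decoded from the eyes
    gps_offset_m: float      # GPS: signed lateral offset from trail centreline (+ = right)
    vc_obstacle: bool        # VC: obstacle or person flagged by the cameras
    gis_half_width_m: float  # GIS: half-width of the current trail segment
    eng_speed_kmh: float     # ENG: current speed reported by the drive
    lid_min_range_m: float   # LID: nearest Lidar return ahead


# Illustrative limits only; the study does not report these values.
STOP_RANGE_M = 2.0
MAX_TILT_DEG = 15.0
MAX_SPEED_KMH = 10.0
EDGE_MARGIN_M = 0.3


def arbitrate(cd: DriveControl) -> str:
    """Return the command actually sent to the drive."""
    # Obstacles detected by the cameras or the Lidar always win.
    if cd.vc_obstacle or cd.lid_min_range_m < STOP_RANGE_M:
        return "stop"
    cmd = cd.et_command
    # Refuse to accelerate on steep slopes or above the speed cap.
    if cmd == "accelerate" and (abs(cd.acc_tilt_deg) > MAX_TILT_DEG
                                or cd.eng_speed_kmh >= MAX_SPEED_KMH):
        return "hold"
    # Refuse to steer further towards a trail edge that is already close.
    edge = cd.gis_half_width_m - EDGE_MARGIN_M
    if cmd == "right" and cd.gps_offset_m >= edge:
        return "forward"
    if cmd == "left" and cd.gps_offset_m <= -edge:
        return "forward"
    return cmd
```

Placing the vetoes before the eye command reflects the precedence described in the text; a fuzzy controller could replace the hard thresholds with graded limits.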

A detailed description of the vehicle control system, including the application of fuzzy logic, will be presented in a subsequent article. The fuzzy approach is motivated by the different ranges of eye-position variation observed on a mountain trail.

Figure 15 shows a simplified control model.

9. Results

The article provides a discussion of the eye control device in question, its implementation and pilot test results. The results have been compared with the characteristics obtained using relatively more expensive eye-tracking solutions (three times more so). The results are presented in the context of laboratory tests of the prototype proposed. These results are continuously updated due to the magnitude and nature of the study.

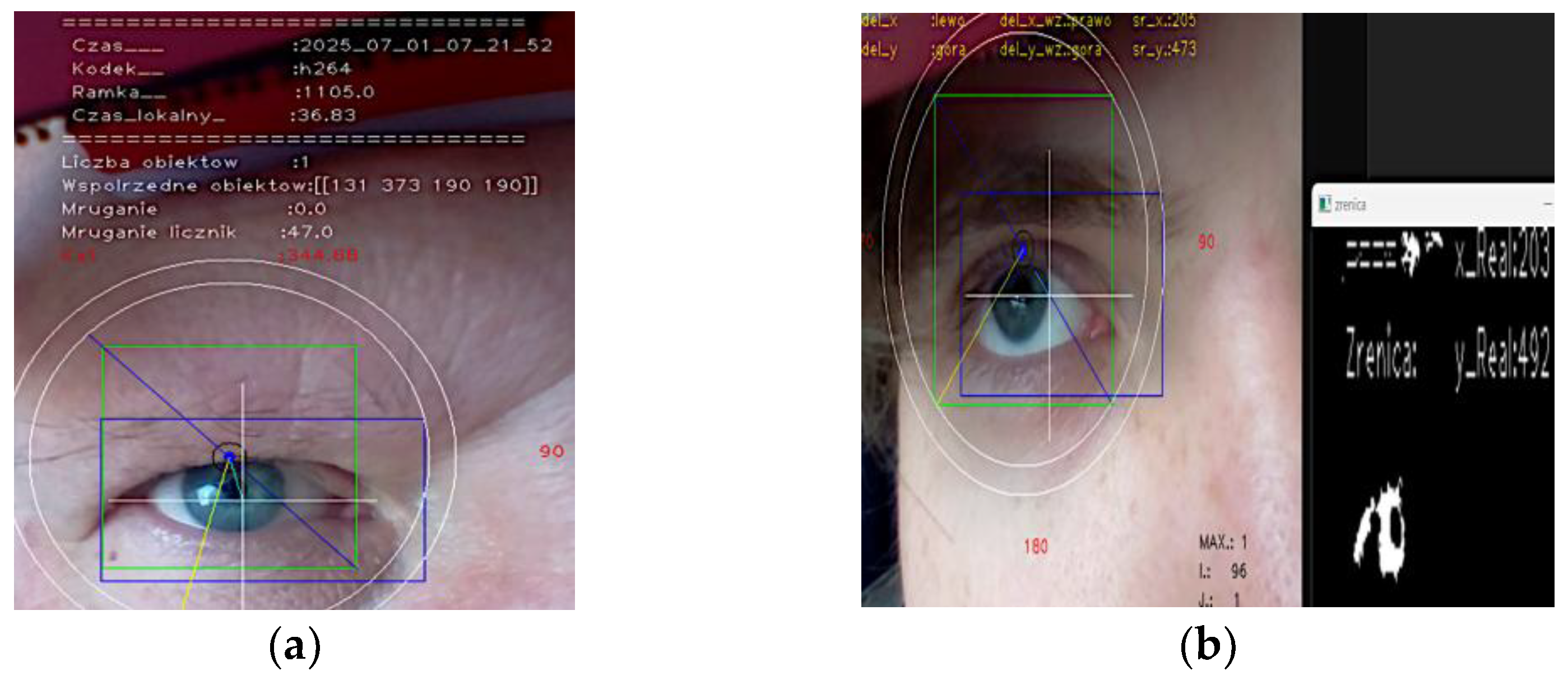

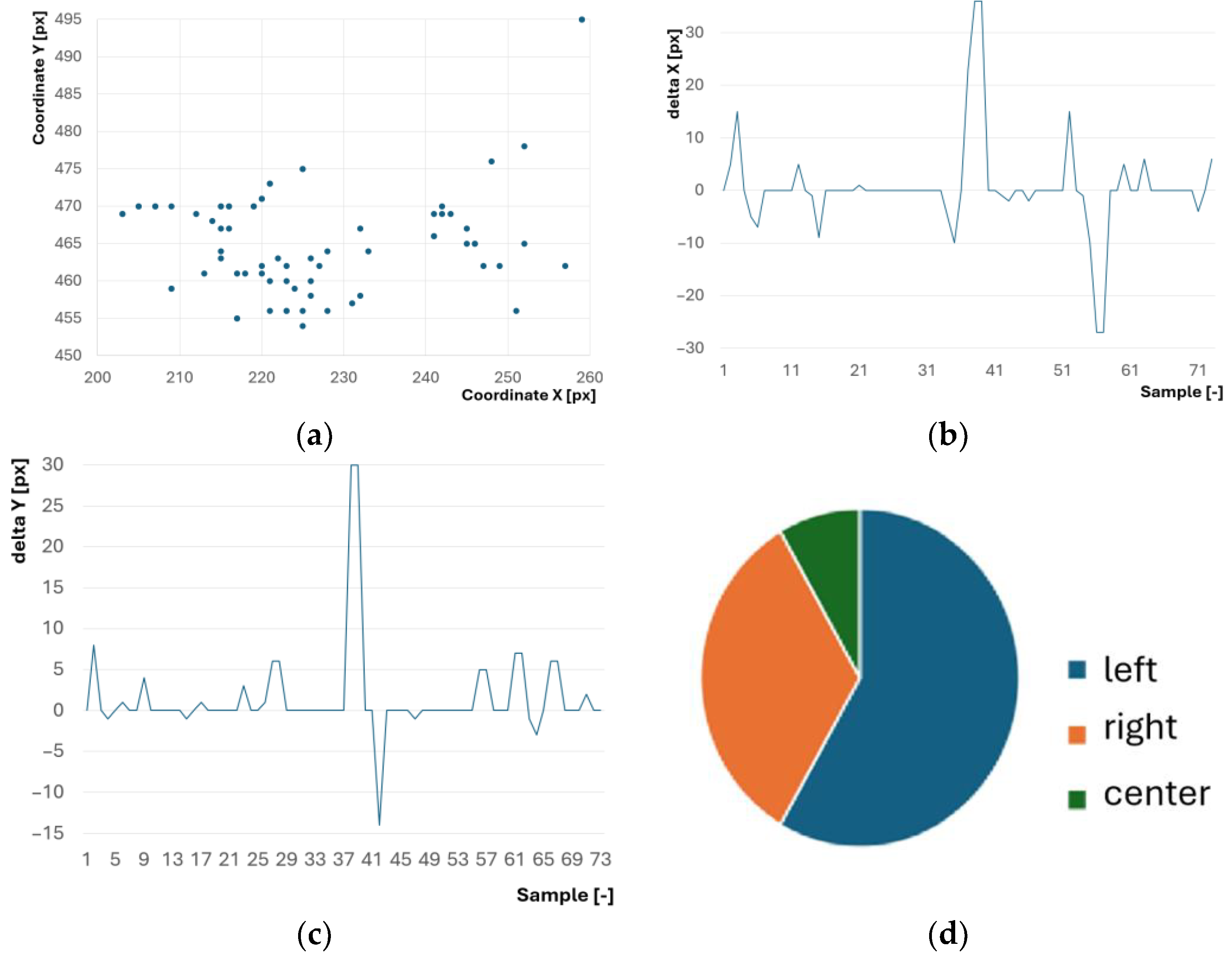

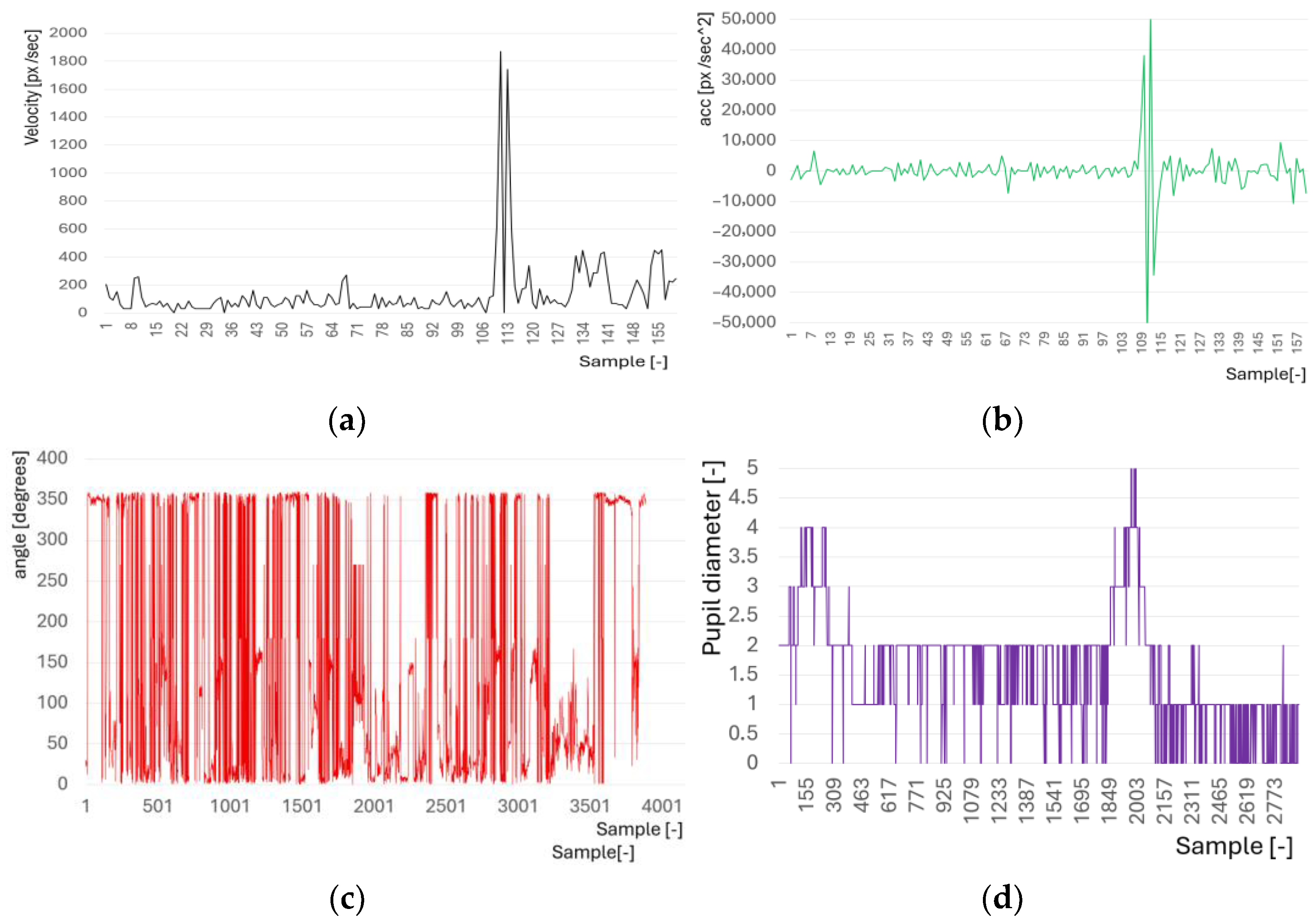

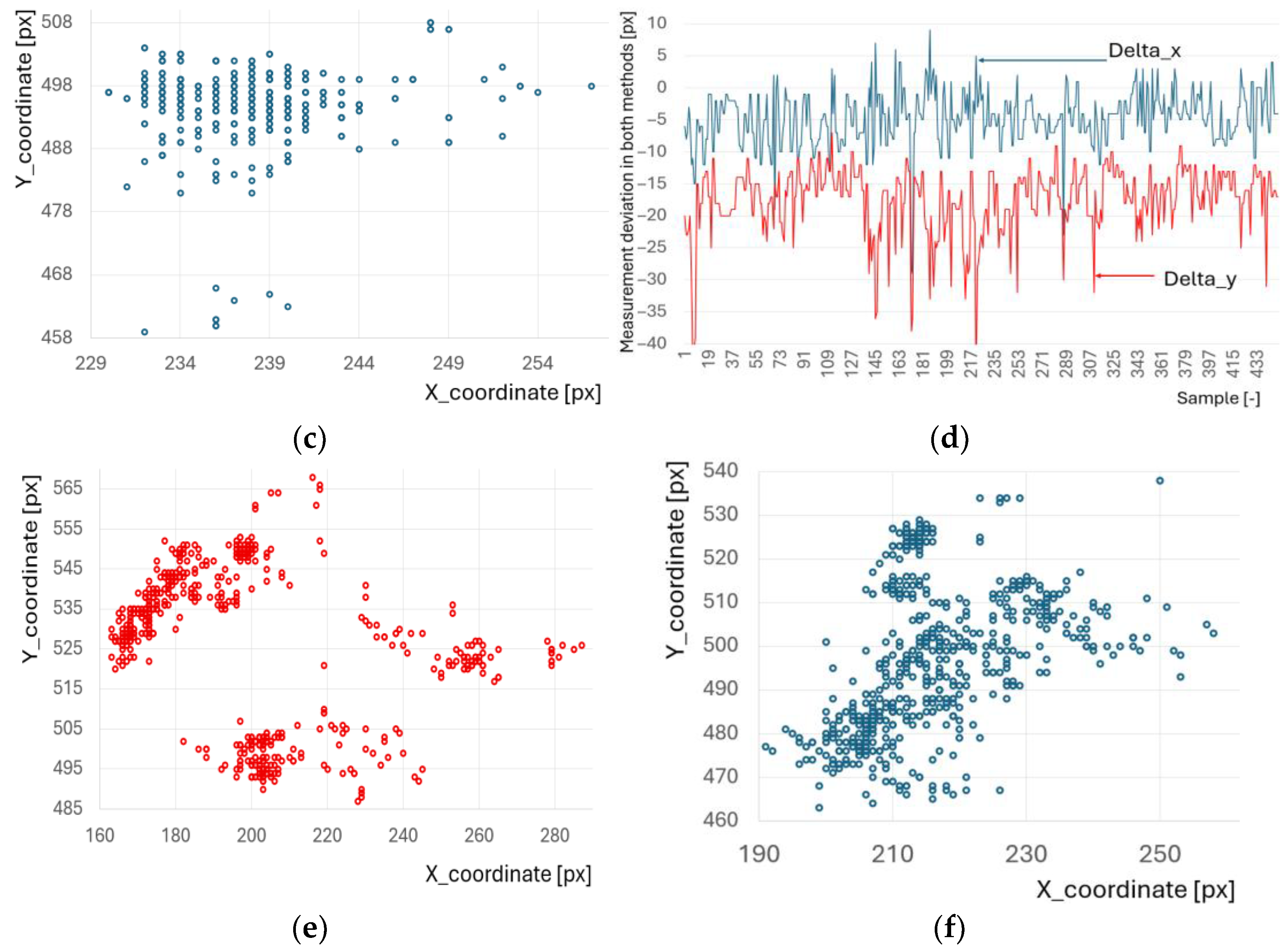

Figure 16 presents selected characteristics of the vehicle driver’s eye movements obtained using the procedures described above.

Figure 16a shows the distribution of fixation points on the traffic scene, which is typical of the ET technique. Each point in the plot corresponds to one measured position of the eye.
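Raw gaze samples such as those in Figure 16a are commonly grouped into fixations with the standard dispersion-threshold identification algorithm (I-DT). The sketch below is a minimal version of that general technique, not necessarily the procedure used by the authors; `idt_fixations` is a hypothetical name, and the dispersion threshold (pixels) and minimum window length (samples) are placeholder values.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def _dispersion(window: List[Point]) -> float:
    """I-DT dispersion: x-range plus y-range of the samples in the window."""
    xs = [p[0] for p in window]
    ys = [p[1] for p in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idt_fixations(points: List[Point], max_dispersion: float, min_samples: int):
    """Return (centre_x, centre_y, start_index, end_index_exclusive) per fixation."""
    fixations = []
    i, n = 0, len(points)
    while i + min_samples <= n:
        j = i + min_samples
        if _dispersion(points[i:j]) <= max_dispersion:
            # Grow the window while the samples stay within the dispersion limit.
            while j < n and _dispersion(points[i:j + 1]) <= max_dispersion:
                j += 1
            window = points[i:j]
            cx = sum(p[0] for p in window) / len(window)
            cy = sum(p[1] for p in window) / len(window)
            fixations.append((cx, cy, i, j))
            i = j
        else:
            i += 1  # slide past a sample that belongs to a saccade
    return fixations
```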

Figure 16b,c illustrate saccadic movements broken down into the two axes: successive leftward and rightward eye movements were recorded and accumulated between video frames.
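A per-axis breakdown of this kind can be obtained by differencing successive pupil positions and accumulating the displacements by direction. The sketch assumes one pupil centre per video frame in pixel coordinates and a hypothetical noise threshold below which a change is ignored; "left" and "right" refer to image coordinates, which may be mirrored relative to the driver depending on the camera setup. `accumulate_axis_movements` is a hypothetical name.

```python
from typing import Dict, List, Tuple


def accumulate_axis_movements(positions: List[Tuple[float, float]],
                              noise_px: float = 2.0) -> Dict[str, float]:
    """Accumulate frame-to-frame pupil displacements per axis and direction."""
    totals = {"left": 0.0, "right": 0.0, "up": 0.0, "down": 0.0}
    for (x0, y0), (x1, y1) in zip(positions, positions[1:]):
        dx, dy = x1 - x0, y1 - y0
        # Changes smaller than noise_px are treated as measurement jitter.
        if abs(dx) >= noise_px:
            totals["right" if dx > 0 else "left"] += abs(dx)
        if abs(dy) >= noise_px:
            # Image y grows downwards, so a positive dy is a downward movement.
            totals["down" if dy > 0 else "up"] += abs(dy)
    return totals
```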

Figure 16d contains the statistics of leftward and rightward movements, as well as of the zero (central) fixation position of the eye. The data provided in Figure 16d indicate how complex it is to obtain information about the direction of movement from data retrieved from the driver's gaze. Besides allowing the results to be applied to control the turning of a vehicle, such studies often also reveal which eye is dominant. However, these characteristics were not used to analyse the upward and downward deviation of the pupil (as shown in Figure 17c).
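Left/centre/right statistics like those in Figure 16d can be produced by classifying each horizontal pupil position relative to a calibrated centre, with a dead zone counted as the zero (central) position. The sketch below is illustrative only; `classify_horizontal`, the centre value and the dead-zone width are hypothetical, and in practice the parameters would come from a per-driver calibration.

```python
from collections import Counter
from typing import Iterable


def classify_horizontal(xs: Iterable[float], centre_x: float,
                        dead_zone_px: float) -> Counter:
    """Count left, centre and right pupil positions (image coordinates)."""
    counts = Counter({"left": 0, "centre": 0, "right": 0})
    for x in xs:
        offset = x - centre_x
        if abs(offset) <= dead_zone_px:
            counts["centre"] += 1  # inside the dead zone: zero position
        elif offset < 0:
            counts["left"] += 1
        else:
            counts["right"] += 1
    return counts
```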

Figure 17 illustrates data obtained from the authors' own measuring device, which is not a commercial eye tracker, and presents a selection of the eye movement characteristics obtained.

Figure 17a shows eye movement speed, expressed in the dimensions of the screen frame rather than converted to traffic scene dimensions.

Figure 17b, on the other hand, depicts eye acceleration.
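Speed and acceleration of this kind can be estimated by finite differences of successive pupil positions. The sketch keeps units in pixels of the camera frame, as in Figure 17a, and assumes a constant, hypothetical frame rate; the "acceleration" here is the rate of change of scalar speed, which is an assumption about what the figure shows. Real recordings would also need smoothing, since differentiation amplifies pixel noise. `speed_and_acceleration` is a hypothetical name.

```python
import math
from typing import List, Tuple


def speed_and_acceleration(positions: List[Tuple[float, float]], fps: float):
    """Return per-step speed (px/s) and rate of change of speed (px/s^2)."""
    dt = 1.0 / fps
    speeds = [
        math.hypot(x1 - x0, y1 - y0) / dt
        for (x0, y0), (x1, y1) in zip(positions, positions[1:])
    ]
    accels = [(v1 - v0) / dt for v0, v1 in zip(speeds, speeds[1:])]
    return speeds, accels
```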

Figure 17c shows the angle, within the contour circumscribed on the eye socket, that corresponds to the combined position of the eye on the x and y axes.
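One way to obtain such an angle is to take the pupil centre relative to the centre of the contour circumscribed on the eye socket and apply `atan2`. The sketch assumes both centres are known in image pixels and reports degrees in [0, 360), measured counter-clockwise from the positive x-axis with the image y-axis flipped so that "up" is positive; this convention and the name `gaze_angle_deg` are assumptions.

```python
import math


def gaze_angle_deg(pupil_xy, socket_centre_xy) -> float:
    """Angle of the pupil around the eye-socket centre, in degrees [0, 360)."""
    dx = pupil_xy[0] - socket_centre_xy[0]
    dy = socket_centre_xy[1] - pupil_xy[1]  # flip: image y grows downwards
    return math.degrees(math.atan2(dy, dx)) % 360.0
```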

Figure 17d shows the pupil diameter. As mentioned earlier, the pupil is extracted from the eye image through a series of colour transformations.
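As a rough illustration of a colour-based route (without reproducing the authors' exact transformations), the sketch converts an RGB eye image to grey, keeps the darkest pixels as pupil candidates, and reports their centroid and the diameter of a circle with the same area. The grey weights are the common ITU-R BT.601 luma coefficients; the threshold and the name `pupil_centre_and_diameter` are hypothetical, and a real pipeline would isolate the largest connected contour rather than pool all dark pixels.

```python
import math
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]


def pupil_centre_and_diameter(image: List[List[Pixel]],
                              threshold: float = 50.0
                              ) -> Optional[Tuple[float, float, float]]:
    """Return (cx, cy, diameter_px) of the dark region, or None if none is found."""
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            grey = 0.299 * r + 0.587 * g + 0.114 * b  # BT.601 luma
            if grey < threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    area = len(xs)  # pixel count of the dark region
    diameter = 2.0 * math.sqrt(area / math.pi)  # equivalent-circle diameter
    return sum(xs) / area, sum(ys) / area, diameter
```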

In the future, these characteristics will make it possible to identify the points of gaze fixation in the traffic scene, and thus the objects the driver is looking at. This can be achieved using the measurement system shown in Figure 9a,b, a device with synchronised cameras: one for the traffic scene, focused on the area in front of the PWSN vehicle, and one for monitoring the driver's eye movements. Integrating such subsystems makes it possible to create a prototype with functionality similar to that of typical commercially available ETs. Work on this problem is still ongoing. The dual-camera system was controlled from a single computer with a common time base, set using a tool of appropriate accuracy (which required synchronisation with the GPS control unit), and the cameras were synchronised frame by frame [73].

Over the course of the analyses, it was noticed that the Haar procedure was particularly inaccurate in locating the pupil. Significantly better results were obtained using image colour operations and contour detection. On the other hand, the former procedure is, to some extent, susceptible to external conditions during measurement. The accuracy of both procedures was therefore compared against the known centre position of the eye.

Figure 18a–c concern one test subject, a young (22-year-old) person with no vision impairments, while Figure 18d–f apply, for comparison, to a person over 50 years of age with significant vision impairments.

Figure 18a shows the deviations between the pupil positions measured by the two methods. It clearly shows that larger deviations occur on the y-axis (vertically in the frame) and smaller ones on the x-axis (horizontally in the frame). For the first person, most horizontal deviations between the methods did not exceed a few pixels (a result almost as good as that of professional eye trackers), while vertically the differences reached several dozen pixels. For the second person, most horizontal deviations between the methods reached several dozen pixels, with high variance. Vertically, the differences also reached several dozen pixels, but they were much smaller than the horizontal ones, again with high variance.
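The per-axis comparison above amounts to differencing the two pupil-centre estimates frame by frame and summarising each axis separately. A minimal sketch follows; the input format (paired lists of (x, y) pixel centres from the Haar and the colour/contour method) and the name `axis_deviation_stats` are assumptions, and the example values are synthetic, not the authors' data.

```python
import statistics
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def axis_deviation_stats(haar: List[Point],
                         contour: List[Point]) -> Dict[str, Dict[str, float]]:
    """Mean, sample standard deviation and maximum of |difference| per axis."""
    if len(haar) != len(contour) or len(haar) < 2:
        raise ValueError("need two equally long series with at least two frames")
    dx = [abs(h[0] - c[0]) for h, c in zip(haar, contour)]
    dy = [abs(h[1] - c[1]) for h, c in zip(haar, contour)]
    return {
        axis: {"mean": statistics.mean(d), "stdev": statistics.stdev(d), "max": max(d)}
        for axis, d in (("x", dx), ("y", dy))
    }
```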

Figure 18b,c,e,f show the distributions of the pupil centre locations calculated using both methods. The differences are significant, but it appears that correction factors could be applied to the Haar method. Alternatively, one could improve the feature description files available to the algorithm or develop better datasets.
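One simple form of such a correction, offered here only as an assumption, is a per-axis linear model fitted by ordinary least squares that maps Haar estimates onto reference centres (for example, from the contour method or from images with a known eye centre). The sketch fits the slope and intercept for one axis and would be applied separately to x and y; `fit_linear_correction` and `apply_correction` are hypothetical names.

```python
from typing import List, Tuple


def fit_linear_correction(estimates: List[float],
                          reference: List[float]) -> Tuple[float, float]:
    """Least-squares fit of reference ~ slope * estimate + intercept."""
    n = len(estimates)
    if n != len(reference) or n < 2:
        raise ValueError("need at least two paired samples")
    mean_e = sum(estimates) / n
    mean_r = sum(reference) / n
    sxx = sum((e - mean_e) ** 2 for e in estimates)
    if sxx == 0:
        raise ValueError("estimates must not all be equal")
    sxy = sum((e - mean_e) * (r - mean_r) for e, r in zip(estimates, reference))
    slope = sxy / sxx
    return slope, mean_r - slope * mean_e


def apply_correction(value: float, slope: float, intercept: float) -> float:
    """Correct a single Haar coordinate with the fitted model."""
    return slope * value + intercept
```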

Table 1 summarises the statistics obtained for both people and methods.

Table 1 is provided for reference purposes only; it was compiled from tests on members of the project team. It is intended to illustrate the high variability of the observed statistics. This variability cannot be explained solely by the accuracy of the device; it also results from other factors, such as the personal circumstances of the drivers, the weather, and lighting conditions. As the data in Table 1 show, the deviations on the Y axis are excessive. This is particularly problematic because the observed range of motion is smaller along this axis. According to the literature, the normal horizontal field of view is approximately 180 degrees and the vertical field approximately 130 degrees. These values may vary slightly with individual parameters such as the field of view of a single eye, visual defects, ambient lighting, and diseases. This matters for the present study because controlling the lateral axis of movement (via horizontal eye movement) is easier than controlling the forward axis (via vertical eye movement), unless the axes are swapped, which would be inconsistent with the intuitive sense of space observed in the population. This issue therefore requires further detailed research, including under trail conditions, where additional stimuli cause significant differences in the observed ranges of movement. Table 1 should thus be treated only as an illustration of a significant problem.

The accuracy of the methods can also be tested by checking whether they correctly identify the central eye position. Such tests use a static image of the eye or a dummy with a display that shows the eyes of different people in sequence. For this purpose, an eye database was developed and loaded into the pupil centre calculation procedures discussed in this article. This made it possible to measure the deviation between the actual eye position and the position determined by a given method [74].

The accuracy of the pupil centre identification algorithm was tested against a collection of static stock-type images in which a series of faces and eyes were combined into a video [74]. Each face contributed to the dataset a deviation between the actual eye centre (the images were aligned with one another in this respect) and the point at which the pupil was measured. Unfortunately, the measurement error reached several dozen pixels, approximately 2%. The accuracy of the calculations depends on the test databases used in relation to the feature file, such as haarcascade_righteye_2splits.xml, haarcascade_eye.xml, or a similar file. It is best to prepare and customise such a file based on a selection of actual vehicle users with special needs.
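The comparison against the eye database can be expressed as the Euclidean distance between each estimated pupil centre and the known eye centre, reported both in pixels and relative to a reference dimension. The text does not state which dimension the "approximately 2%" refers to, so the sketch takes it as a parameter (`reference_px`); `centre_errors` is a hypothetical name and the example values are synthetic.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def centre_errors(estimated: List[Point], actual: List[Point], reference_px: float):
    """Per-image Euclidean error in pixels and as a percentage of reference_px."""
    if len(estimated) != len(actual):
        raise ValueError("series must be paired")
    errors_px = [math.dist(e, a) for e, a in zip(estimated, actual)]
    errors_pct = [100.0 * err / reference_px for err in errors_px]
    return errors_px, errors_pct
```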

No two human eyes are identical; they are only similar. This problem is addressed by biometrics. Here, however, we are interested only in measures of eye position, which are themselves not trivial to determine. Eye position measurement can be affected by various anatomical features, such as eye folds, individual characteristics (congenital and acquired diseases), and even illumination, environmental humidity, etc. This is why we have been consistently building our own database of eye representations, covering diverse environmental conditions and aligned with the diagram provided in Figure 19. It will be somewhat easier to restrict this database to people who have consented to the processing of their personal data. An alternative solution is an additional calibration procedure initiated each time before the vehicle is started.
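A per-start calibration could, for instance, ask the driver to look at left, centre and right targets for a few frames each and derive the centre, the scaling and a dead zone from the recorded pupil positions. The sketch below is one such hypothetical procedure; `calibrate_horizontal`, `normalise`, the target names, the use of the median and the dead-zone fraction are assumptions, not the authors' design. It also assumes the left target yields smaller image x values, which would be reversed for a mirrored eye camera.

```python
import statistics
from typing import Dict, List


def calibrate_horizontal(samples: Dict[str, List[float]],
                         dead_zone_fraction: float = 0.2) -> Dict[str, float]:
    """Derive centre, half-ranges and dead zone from calibration fixations."""
    left = statistics.median(samples["left"])
    centre = statistics.median(samples["centre"])
    right = statistics.median(samples["right"])
    if not left < centre < right:
        raise ValueError("calibration targets not separated; repeat calibration")
    left_span, right_span = centre - left, right - centre
    return {
        "centre": centre,
        "left_span": left_span,
        "right_span": right_span,
        "dead_zone": dead_zone_fraction * min(left_span, right_span),
    }


def normalise(x: float, cal: Dict[str, float]) -> float:
    """Map a pupil x-position to [-1, 1]; 0 inside the dead zone."""
    offset = x - cal["centre"]
    if abs(offset) <= cal["dead_zone"]:
        return 0.0
    span = cal["left_span"] if offset < 0 else cal["right_span"]
    return max(-1.0, min(1.0, offset / span))
```

Separate spans for the two sides allow for asymmetric ranges of eye motion, which the measurements above suggest can differ between individuals.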

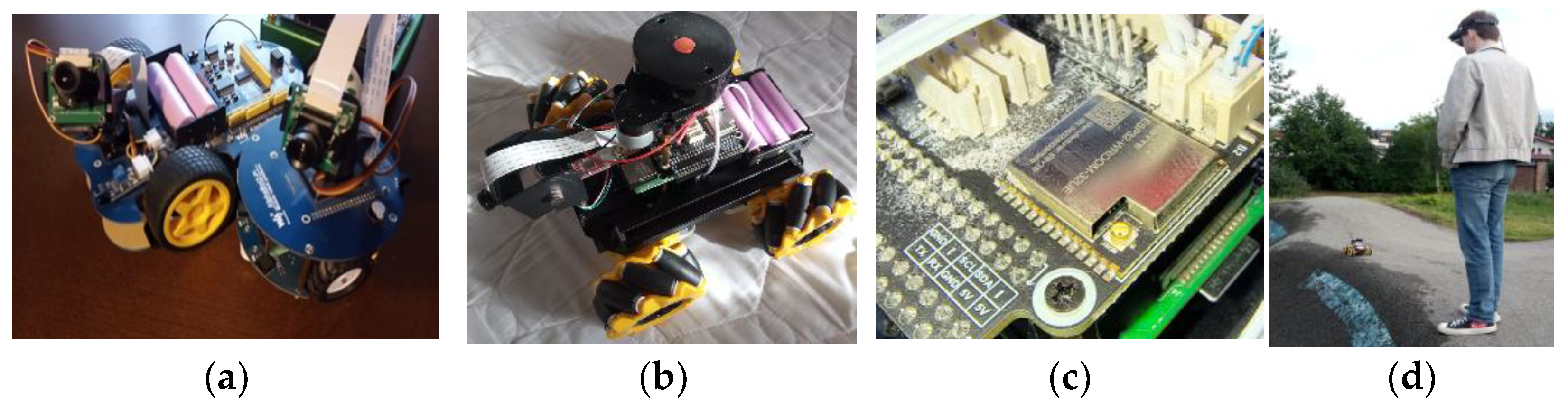

10. Method Validation

Four validation procedures were applied: tests with mobile robots, comparison with a commercial eye tracker, comparison of data from different cameras, and an analytical procedure. The validation of the proposed method, i.e., of its capacity to control a moving wheeled object in a designated environment, was conducted under laboratory conditions and on a test track. For this purpose, a group of mobile robots with wireless communication systems was prepared, enabling communication via each device's microcontroller. Some of the tested robots are shown in Figure 20a. Figure 20b depicts the robot selected for further testing, Figure 20c shows the robot drive controller, and Figure 20d shows the vision-controlled robot on the test track.

The selected robot is described below in terms of its chassis, its separately supplied control and communication systems, and its sensor system. This robot (Figure 20b) enables dynamic testing of changes in movement direction, which is why it was selected for further testing. It was built on an intelligent chassis from the Robot Chassis series by Waveshare, Shenzhen, China (Figure 20b). The advantage of this chassis is its shock-absorbing design, which is important for the measurement systems used in validation, especially the optical ones. The chassis uses Mecanum wheels, a special type of wheel that allows the vehicle to move in any direction, including diagonally. Such a wheel arrangement enables unconstrained movement in any direction within the plane of travel.

The chassis (Figure 20b) is compatible with the Raspberry Pi 4B, Raspberry Pi Zero, and Jetson Nano microcomputers, although it can also be connected to other development boards. A General Driver Board from the same company (Waveshare) was chosen as the controller. It is a multifunctional controller designed for mobile robots and based on the ESP32-WROOM-32 module (Espressif Systems, Shanghai, China). The board works with the Raspberry Pi and Jetson Nano platforms (a Raspberry Pi 4B was used in the validation process). This general-purpose controller can drive DC motors both with and without encoders (in this case, the distances covered were established in a different manner). The board features standard terminals for connecting many typical robot components and accessories, such as OLED displays, sensors, WiFi antennas, and environment-scanning subassemblies, e.g., Lidar. The Lidar used in this research project was also from Waveshare (Shenzhen, China), namely the D200 Developer Kit–LiDAR LD14P, which offers 360-degree scanning and an operating range of up to 8 m [75].
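The omnidirectional behaviour of Mecanum wheels follows from the widely used inverse-kinematics model of a four-wheel Mecanum platform, which converts a body velocity command (forward, sideways, yaw rate) into individual wheel speeds. In the sketch, `mecanum_wheel_speeds`, the wheel radius and the half-length and half-width are hypothetical placeholders, not the Waveshare chassis' specifications, and the signs assume the common roller layout; they may need flipping for a different roller orientation or motor wiring.

```python
from typing import Dict


def mecanum_wheel_speeds(vx: float, vy: float, wz: float,
                         wheel_radius: float = 0.04,
                         half_length: float = 0.08,
                         half_width: float = 0.09) -> Dict[str, float]:
    """Inverse kinematics: body velocity -> wheel angular speed (rad/s).

    vx: forward (m/s), vy: leftward (m/s), wz: counter-clockwise yaw rate (rad/s).
    Dimensions are placeholders, not the chassis' specifications.
    """
    k = half_length + half_width
    return {
        "front_left": (vx - vy - k * wz) / wheel_radius,
        "front_right": (vx + vy + k * wz) / wheel_radius,
        "rear_left": (vx + vy - k * wz) / wheel_radius,
        "rear_right": (vx - vy + k * wz) / wheel_radius,
    }
```

A pure sideways command (vx = 0, vy > 0) spins diagonal wheel pairs in opposite directions, which is what allows the chassis to strafe without turning.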

Figure 20d shows the robot together with the person controlling its movements on a test track designed for mountain bikes. On such a track, overdriving the robot typically causes it to capsize. This nevertheless makes the track a good testing ground for the problems at hand.

The robot controller supports 2.4 GHz WiFi, Bluetooth 4.2 BLE, and ESP-NOW wireless communication and features a 9-axis QMI8658C IMU. It also has a built-in 40-pin GPIO connector for attaching Raspberry Pi, Jetson Nano, and similar boards. Communication with the system is provided through the serial/I2C port, which will make it possible to connect additional robot tracking and positioning sensors in future research.

The results of the robot motion control tests performed under laboratory conditions and on the test track are presented and discussed below. They depend on the mobile robots used and on their sensory equipment. The robots operate with different spatial orientation systems, ranging from cameras, limit sensors, and line sensors to a 2D planar Lidar, as shown in Figure 20b (a 3D variant will be examined in further research). To assess whether the proposed method was correctly validated with respect to the capacity to move along a selected route, the number of contacts between the test robot and barriers resulting from erroneous control commands obtained from the eyes was counted. The number of misinterpreted messages is also reported. All these results are collated in Table 2.

The results are specific to the route driven on the test track and to the person conducting the tests, so further research on a large group of test drivers is required.
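Counting barrier contacts and misinterpreted commands, as reported in Table 2, can be automated from a run log that pairs the command the driver intended with the command decoded from the eye signal. The log format, the command labels, `LogEntry` and `summarise_run` are hypothetical; the sketch only shows the bookkeeping and does not reproduce the authors' results.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LogEntry:
    intended: str  # command the driver intended, e.g. "left" (hypothetical label)
    decoded: str   # command decoded from the eye signal
    contact: bool  # True if the robot touched a barrier after this command


def summarise_run(log: List[LogEntry]) -> dict:
    """Tally misinterpreted commands and barrier contacts for one test run."""
    misinterpreted = sum(1 for e in log if e.intended != e.decoded)
    contacts = sum(1 for e in log if e.contact)
    contacts_after_error = sum(1 for e in log if e.contact and e.intended != e.decoded)
    return {
        "commands": len(log),
        "misinterpreted": misinterpreted,
        "misinterpretation_rate": misinterpreted / len(log) if log else 0.0,
        "barrier_contacts": contacts,
        "contacts_after_misinterpretation": contacts_after_error,
    }
```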

11. Conclusions