A Lightweight Teaching Assessment Framework Using Facial Expression Recognition for Online Courses

Abstract

1. Introduction

- (1)

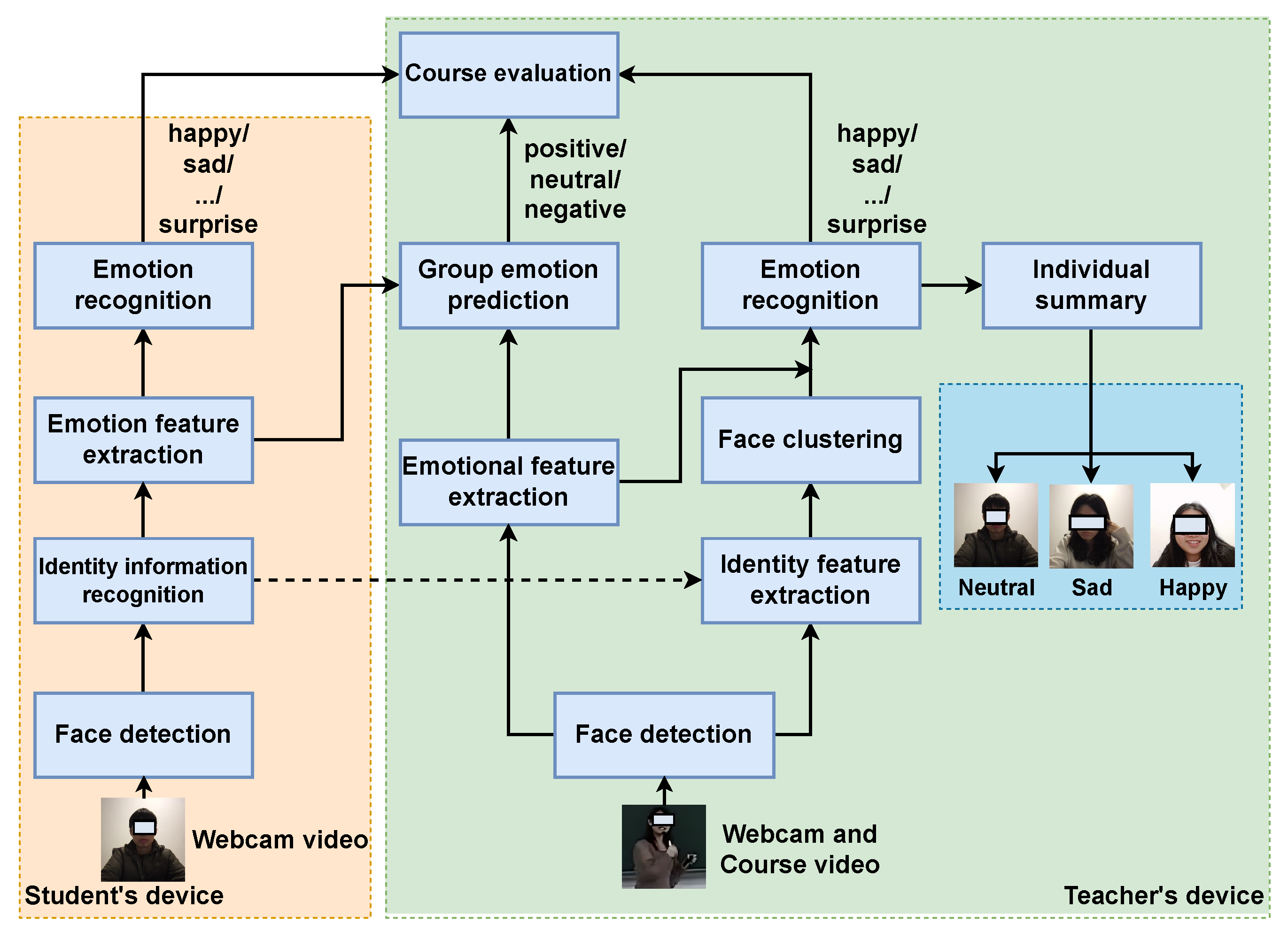

- A comprehensive, real-time emotion classification system is developed for online education, utilizing facial patterns for video-based analysis. The system predicts students’ emotions locally on their personal devices, thereby safeguarding privacy and autonomy. The resulting emotion feature vectors are then securely transmitted to the instructor’s device to facilitate classification of emotion trends across the entire student population. Moreover, the framework’s seamless integration features allow effortless incorporation into various online course platforms and conferencing software, enhancing emotional intelligence and interactivity in virtual learning environments.

- (2)

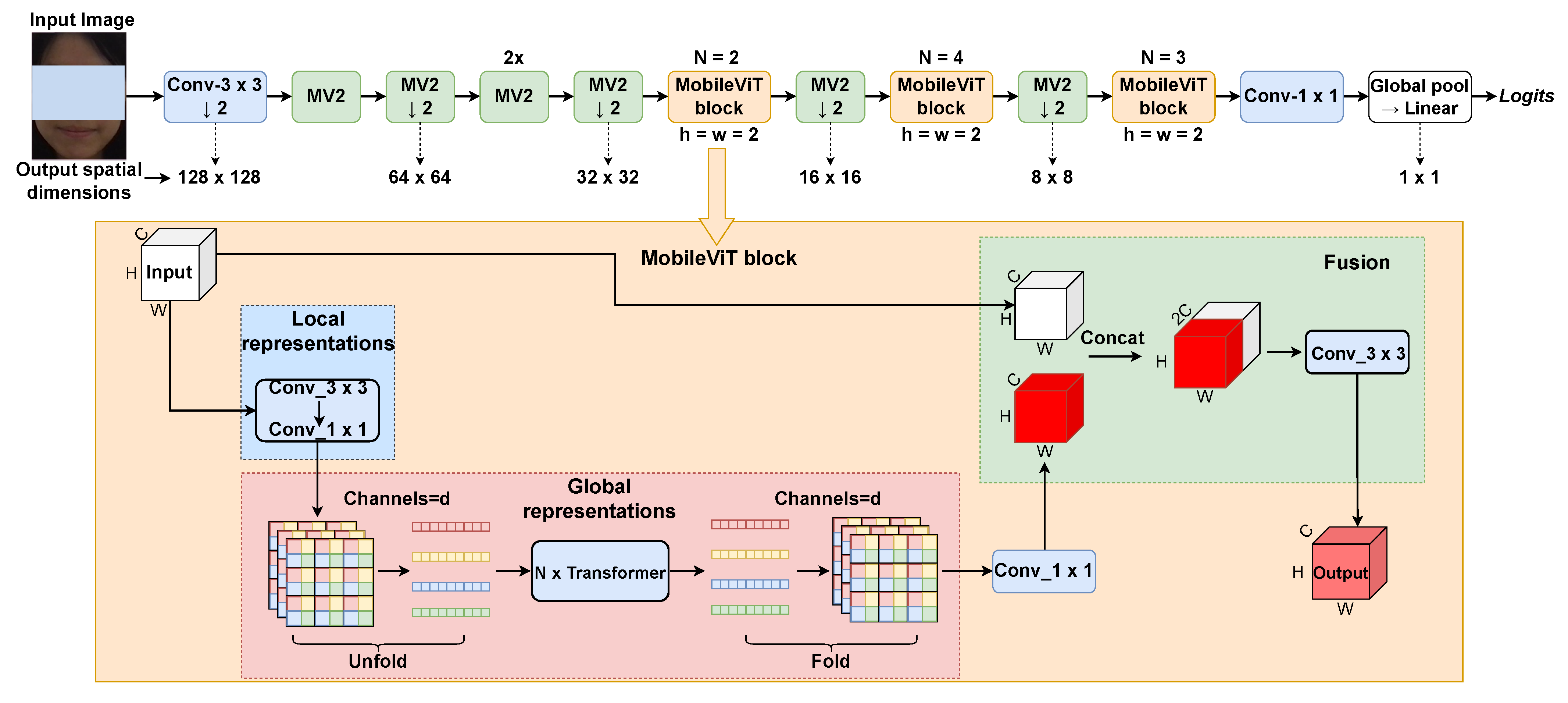

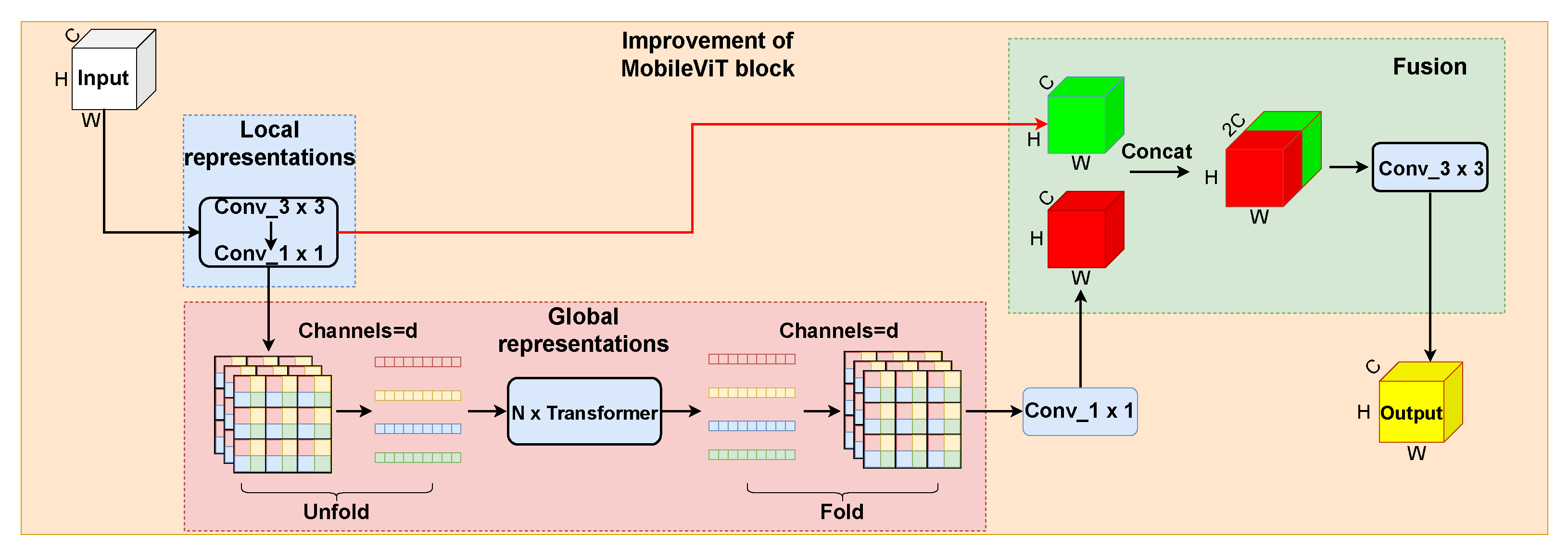

- To extract emotion features from facial images, this study proposes an enhanced lightweight facial expression recognition (FER) model based on the MobileViT architecture. By adjusting the input connections within the fusion module, the model acquires more representative features. Experimental results demonstrate a substantial improvement in prediction and classification accuracy on benchmark datasets.

- (3)

- To further improve prediction performance while minimally increasing memory usage and parameters, knowledge distillation techniques are incorporated into MobileViT-Local. Compared with mainstream baseline models, the proposed model achieves competitive results.

- (4)

- A customized emotion dataset is developed to support emotion recognition research in online learning environments. It includes facial recognition data collected from students during real learning sessions, followed by essential data preprocessing and augmentation.

2. Related Work

- (1) Face detection: A lightweight CNN-based detector identifies facial regions and generates bounding boxes around each face.

- (2) Alignment: Key facial landmarks (eyes, nose, and mouth) are detected, and an affine transformation aligns faces to a standardized frontal pose.

- (3) Rotation correction: Faces tilted beyond ±5° are rotated to ensure vertical alignment of the nasal bridge.

- (4) Resizing: Aligned faces are resized to a fixed resolution (e.g., 112 × 112 pixels) using bilinear interpolation, normalizing input dimensions for downstream modules.
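The rotation-correction and resizing steps above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes the tilt is estimated from the two eye landmarks (a level eye line standing in for a vertical nasal bridge), and the function names and the pure-Python bilinear resize are hypothetical.

```python
import math

ROLL_TOLERANCE_DEG = 5.0  # tilt threshold quoted in the text
TARGET_SIZE = 112         # example resolution quoted in the text


def roll_angle_deg(left_eye, right_eye):
    """Angle of the eye line relative to horizontal, in degrees (image coordinates)."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def needs_rotation(angle_deg, tolerance=ROLL_TOLERANCE_DEG):
    """Only faces tilted beyond the tolerance are rotated."""
    return abs(angle_deg) > tolerance


def resize_bilinear(img, out_h=TARGET_SIZE, out_w=TARGET_SIZE):
    """Bilinear resize of a 2-D grayscale image given as a list of rows.

    Assumption: corner pixels of input and output are aligned.
    """
    in_h, in_w = len(img), len(img[0])
    out = []
    for i in range(out_h):
        # Map the output row to a fractional source row.
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        wy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            wx = x - x0
            # Interpolate horizontally on both rows, then vertically.
            top = img[y0][x0] * (1 - wx) + img[y0][x1] * wx
            bottom = img[y1][x0] * (1 - wx) + img[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out
```

In practice an image library would perform the rotation and resize; the sketch only makes the threshold test and the interpolation rule explicit.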

- (1) Feature embedding: A lightweight face recognition model generates 128-dimensional feature vectors encoding identity-specific attributes.

- (2) Database matching: These features are compared with a pre-registered student database using cosine similarity, and matches exceeding a threshold authenticate identity.
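The database-matching step can be illustrated with a short sketch. The threshold value of 0.6, the dictionary layout of the student database, and the function names are assumptions made for illustration; the text only states that cosine similarity is compared against a threshold.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def match_identity(embedding, database, threshold=0.6):
    """Return (student_id, score) for the best match above the threshold.

    `database` maps a student id to a registered embedding (hypothetical layout).
    If no score exceeds the threshold, the id is None.
    """
    best_id, best_score = None, -1.0
    for student_id, reference in database.items():
        score = cosine_similarity(embedding, reference)
        if score > best_score:
            best_id, best_score = student_id, score
    if best_score > threshold:
        return best_id, best_score
    return None, best_score
```

The same code applies unchanged to 128-dimensional vectors; short vectors are enough to check the logic.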

- (1) Network architecture: Pre-trained on datasets, the network extracts spatiotemporal features. Bottleneck layers reduce dimensionality while preserving emotion-relevant patterns such as eyebrow furrows and lip curvature.

- (2) Feature representation: The network outputs feature vectors capturing probabilities of action unit activations.

- (1) Feature aggregation: Emotion features across frames are averaged or processed through a lightweight LSTM to model temporal dynamics.

- (2) Classification: A shallow fully connected layer maps aggregated features to seven emotion classes (anger, disgust, fear, happiness, sadness, surprise, and neutral) using a softmax function.
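A minimal sketch of the averaging variant of these two steps is given below. The weights and bias of the fully connected layer are placeholders supplied by the caller, and the function names are hypothetical; the LSTM alternative mentioned above is not shown.

```python
import math

EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"]


def mean_pool(frame_features):
    """Average per-frame feature vectors into a single vector."""
    n = len(frame_features)
    return [sum(column) / n for column in zip(*frame_features)]


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(frame_features, weights, bias):
    """Pool the frames, apply one linear layer, and return (label, probabilities).

    `weights` holds one row per emotion class; `bias` holds one value per class.
    """
    pooled = mean_pool(frame_features)
    logits = [sum(w * x for w, x in zip(row, pooled)) + b
              for row, b in zip(weights, bias)]
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs
```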

- (1) Data transmission: Packets containing emotion labels, timestamps, and confidence scores are transmitted to the teacher dashboard.

- (2) Edge optimization: Quantization and model pruning reduce the network’s size and compute cost for on-device inference.

- (3) Teacher interface: Real-time heatmaps display the emotion distribution across the class, with alerts triggered for persistent negative states.
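The packet format and the alert rule can be sketched as follows. The field names, the set of labels treated as negative, and the window length are assumptions for illustration; the text specifies only that packets carry labels, timestamps, and confidence scores and that persistent negative states trigger alerts.

```python
from collections import Counter, deque
from dataclasses import dataclass

# Assumption: which of the seven classes count as "negative" is not stated in the text.
NEGATIVE = {"anger", "disgust", "fear", "sadness"}


@dataclass
class EmotionPacket:
    student_id: str
    timestamp: float
    label: str
    confidence: float


def class_distribution(packets):
    """Share of each emotion label among the packets (input for a heatmap)."""
    counts = Counter(p.label for p in packets)
    total = sum(counts.values())
    return {label: c / total for label, c in counts.items()} if total else {}


class PersistenceAlert:
    """Flags a student whose last `window` packets were all negative."""

    def __init__(self, window=5):
        self.window = window
        self.history = {}

    def update(self, packet):
        recent = self.history.setdefault(
            packet.student_id, deque(maxlen=self.window))
        recent.append(packet.label in NEGATIVE)
        return len(recent) == self.window and all(recent)
```

A sliding window keeps a single negative frame from raising an alert, which matches the "persistent" wording above.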

- (1) Localization: Bounding-box regression identifies participants’ facial regions.

- (2) Feature encoding: High-dimensional embeddings are extracted through triplet-loss optimization, capturing invariant facial attributes across pose variations.

- (3) Computational workflow: Inference is parallelized on GPU-accelerated cloud instances to reduce per-frame latency.

- (1) Integrates sparse facial expression indicators (e.g., eyebrow movements, lip curvature) across temporal windows.

- (2) Applies attention mechanisms to weight salient behavioral cues.

- (3) Generates time-synchronized engagement metrics using recurrent neural architectures.

- (4) Produces continuous emotion-tendency estimates reflecting collective responses to instructional content.
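The attention-weighting step in this list can be illustrated with a small sketch. It assumes a softmax over one scalar relevance score per frame, a common form of attention pooling; the source does not specify the mechanism, and the function name is hypothetical.

```python
import math


def attention_pool(frame_features, scores):
    """Weight per-frame features by softmax-normalized scores and sum them.

    Returns (pooled_vector, weights). `scores` holds one relevance score per frame.
    """
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(frame_features[0])
    pooled = [sum(w * f[d] for w, f in zip(weights, frame_features))
              for d in range(dim)]
    return pooled, weights
```

With equal scores the result reduces to a plain average over the window; a frame with a much higher score dominates the pooled vector.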

- (1) Biometric matching: Facial embeddings are compared against registered identity databases using metric learning, with adaptive thresholds to accommodate occlusion scenarios.

- (2) Temporal clustering: Unsupervised association algorithms link discontinuous facial appearances into identity-continuous sequences.

- (3) Personalized baseline modeling: Individual neutral-expression benchmarks are established through historical analysis.

- (4) Adaptive recognition: Emotion classification adapts to individual expressiveness patterns via transfer learning approaches.

- (1) Macro-analysis: Correlates cohort engagement trajectories with pedagogical events.

- (2) Micro-analysis: Identifies learning anomalies through longitudinal emotion tracking.

- (3) Synthesis framework: Cross-references group-level engagement metrics with personalized emotion profiles to generate instructional-effectiveness indices, personalized intervention triggers, and content-optimization recommendations.

3. Proposed Model

3.1. Lightweight Vision Transformer

3.2. MobileViT-Local Through Distillation

3.3. Soft Distillation

4. Experimental Results

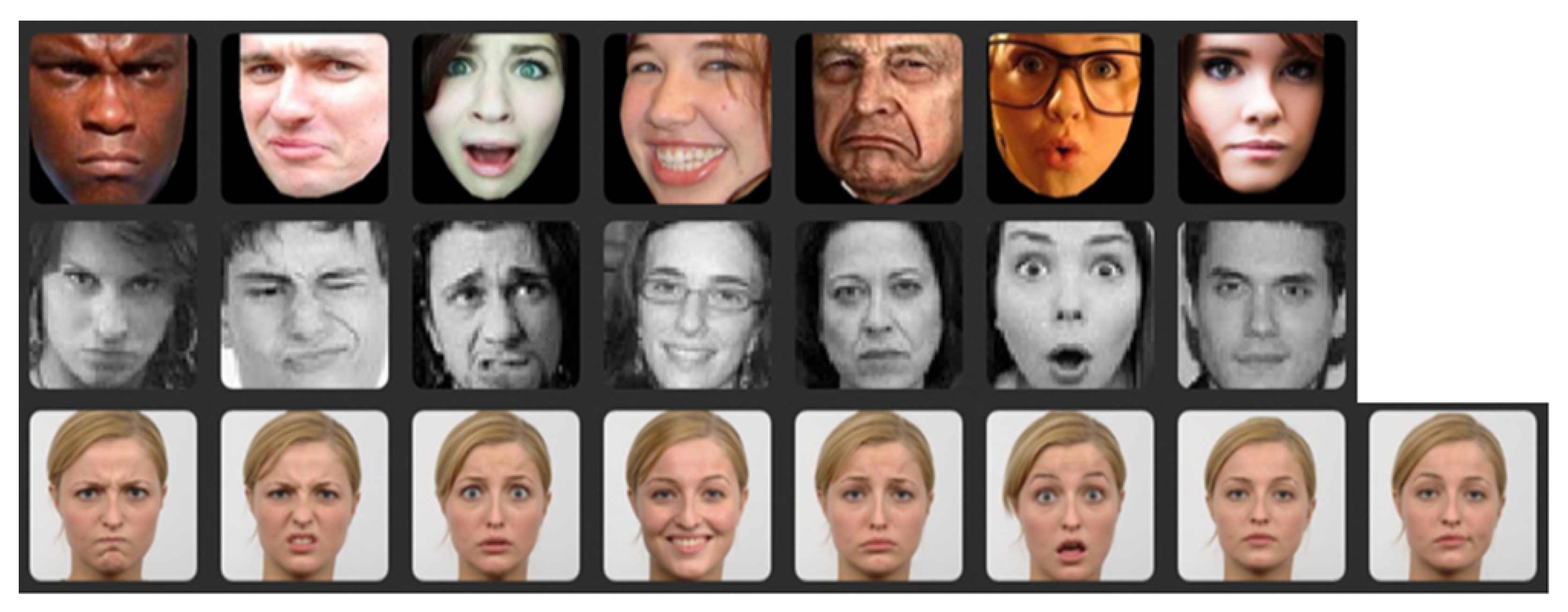

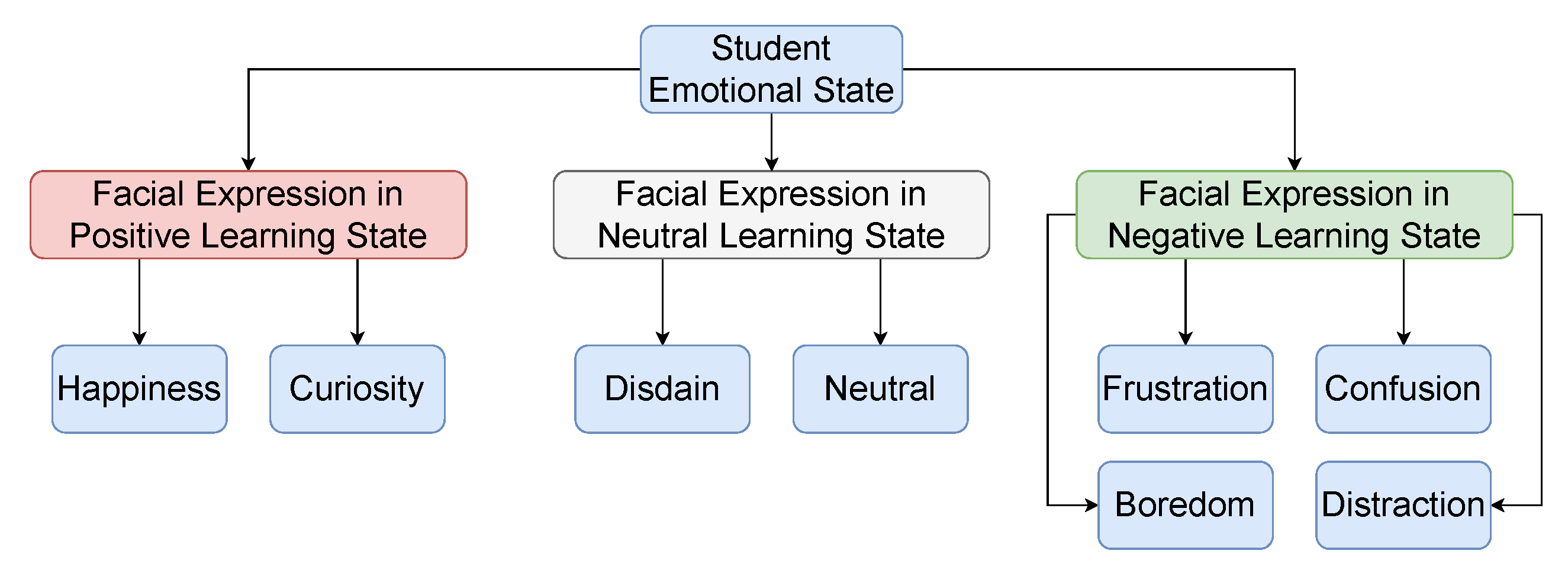

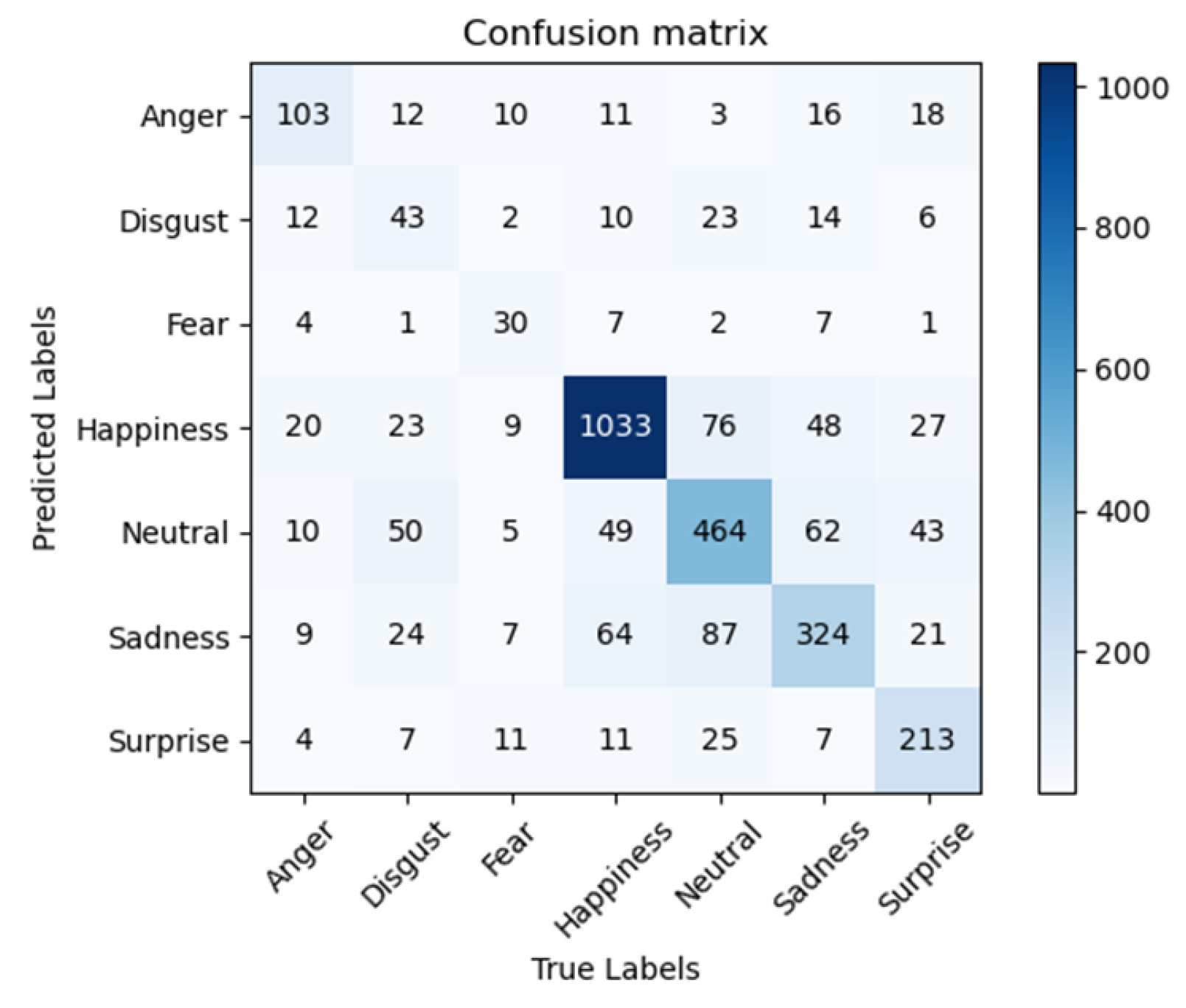

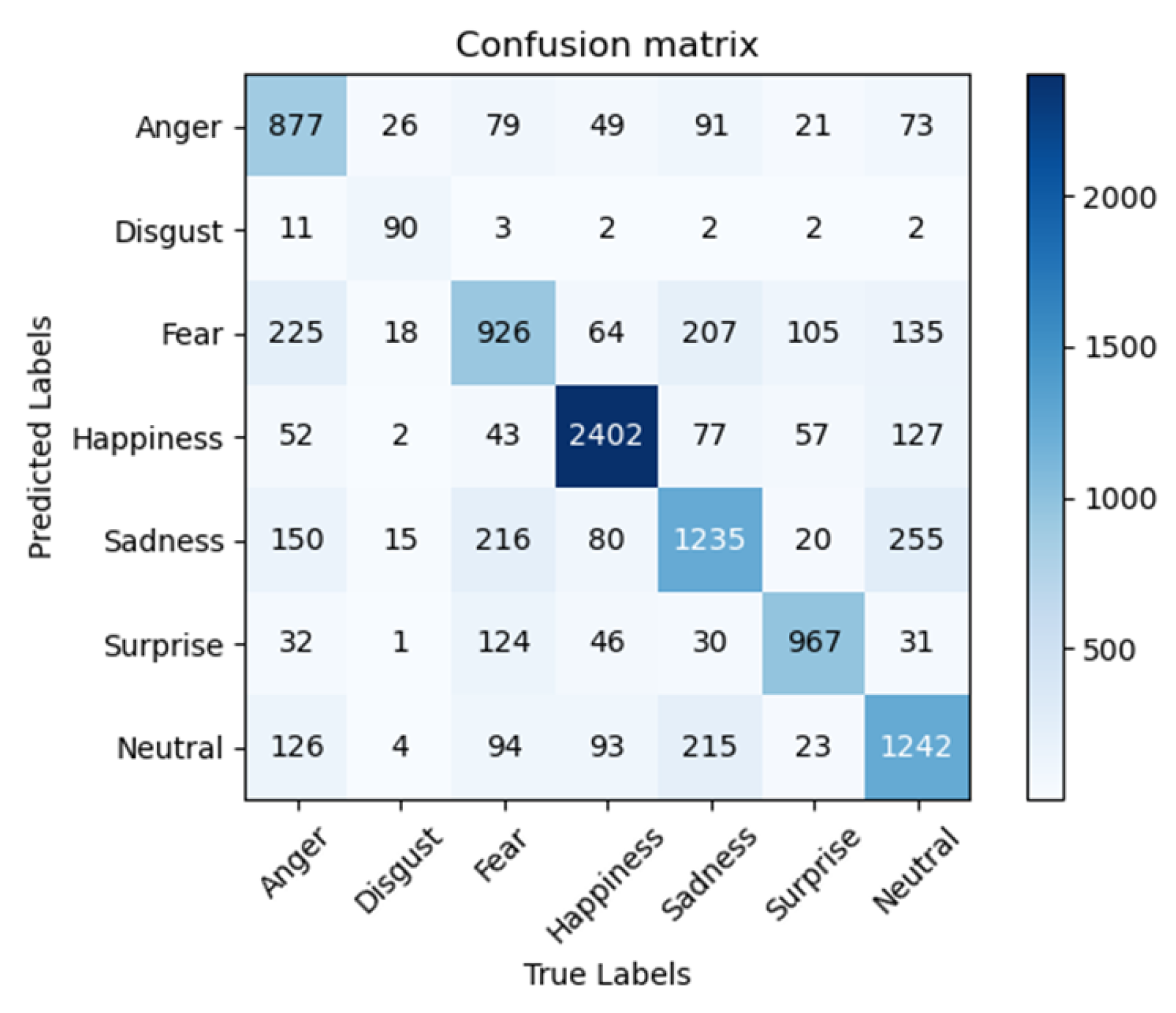

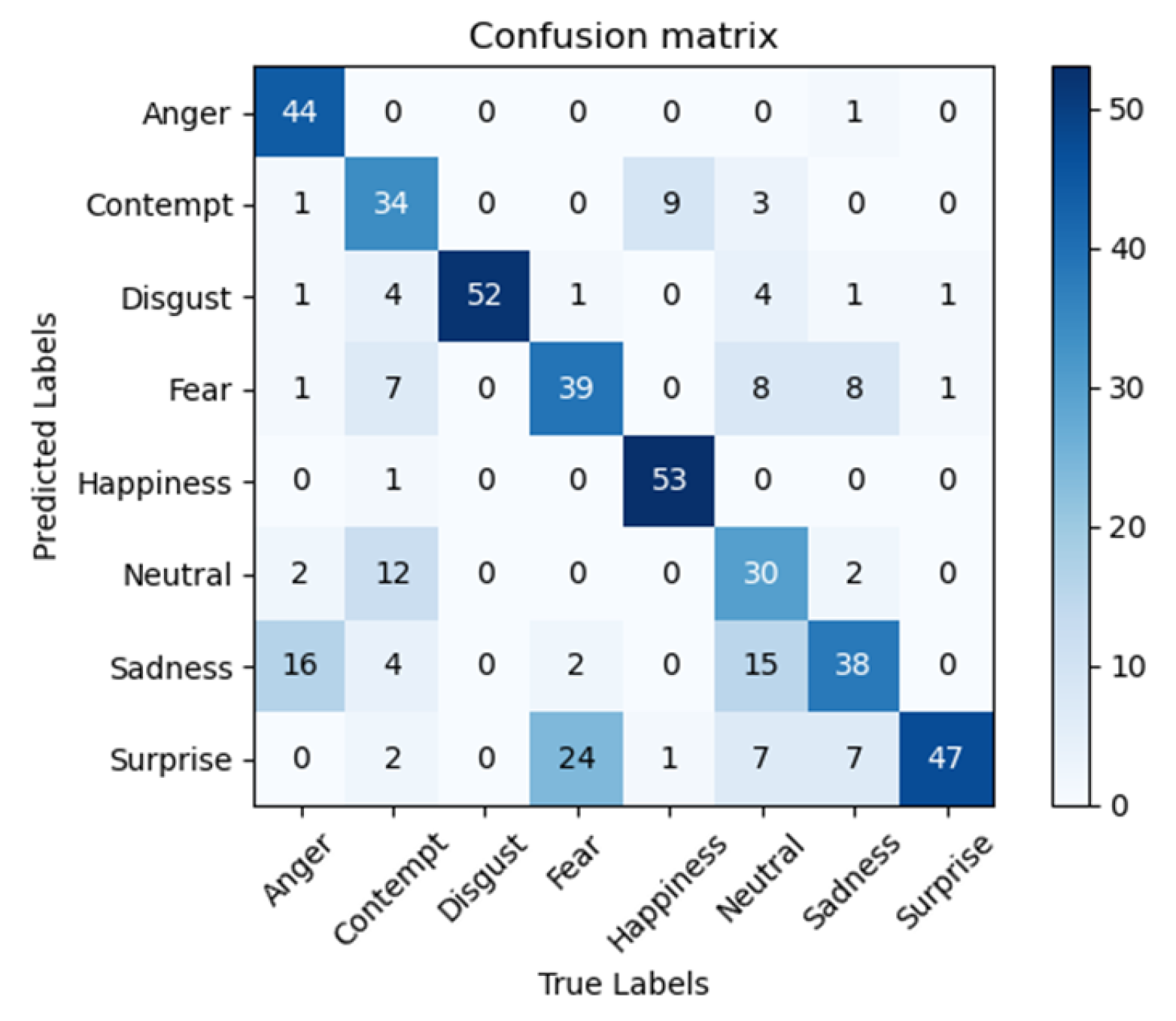

4.1. Dataset Description

- (1) FER2013 [30]: Introduced for the Representation Learning Challenge at ICML 2013, this large-scale, unconstrained dataset comprises 35,887 images. The images exhibit occlusion, partial faces caused by cropping errors, and low resolution, closely reflecting real-world conditions.

- (2) RAF-DB [31,32]: A widely used public facial emotion dataset of 15,339 internet-sourced images depicting seven basic emotions. The images cover a range of ethnicities, genders, and age groups under natural conditions and are accompanied by high-quality annotations. Emotion labels were determined by multiple independent annotators to ensure precision and consistency.

- (3) RAFD [33]: Compiled under controlled laboratory conditions, this dataset comprises 1607 high-resolution facial images from 67 subjects. Each subject’s expressions were captured from multiple angles, including frontal, lateral, and 45-degree oblique views, which makes it well suited to facial expression recognition and emotion analysis.

- (4) SCAUOL: This dataset comprises experimental data collected by our institution from 26 participants aged 19 to 25 years (18 males and 8 females). Eight learning emotions were identified: joy, inquisitiveness, contempt, neutral, irritation, perplexity, tedium, and diversion. Recordings were captured with computer cameras in participants’ natural, self-directed learning environments. After recording, FFmpeg was used from a Python 3.9 environment to extract every 48th frame of each video stream and store it as an image. Emotion annotation, object detection, and cropping then yielded 1519 facial expression images. To address the small size of the dataset, a StyleGAN-based data augmentation method [45] was applied, expanding the dataset to 2519 images.
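The frame-sampling step for SCAUOL can be sketched as below. This is an illustration under stated assumptions, not the authors' script: it assumes sampling starts at frame 0, builds an FFmpeg command that uses the `select` filter, and uses hypothetical function names and file paths. Newer FFmpeg releases may prefer `-fps_mode vfr` over `-vsync vfr`.

```python
def sampled_frame_indices(total_frames, step=48):
    """Indices of the frames kept when every `step`-th frame is stored."""
    return list(range(0, total_frames, step))


def build_ffmpeg_command(video_path, out_pattern, step=48):
    """Argument list for FFmpeg that keeps frames whose index is a multiple of `step`.

    The comma inside mod() is escaped so the filtergraph parser does not
    read it as a separator between filters.
    """
    select_expr = f"select=not(mod(n\\,{step}))"
    return [
        "ffmpeg", "-i", video_path,
        "-vf", select_expr,
        "-vsync", "vfr",  # emit only the selected frames
        out_pattern,
    ]
```

The command could then be run with `subprocess.run(build_ffmpeg_command("session.mp4", "frames/%05d.png"), check=True)`, where both paths are placeholders.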

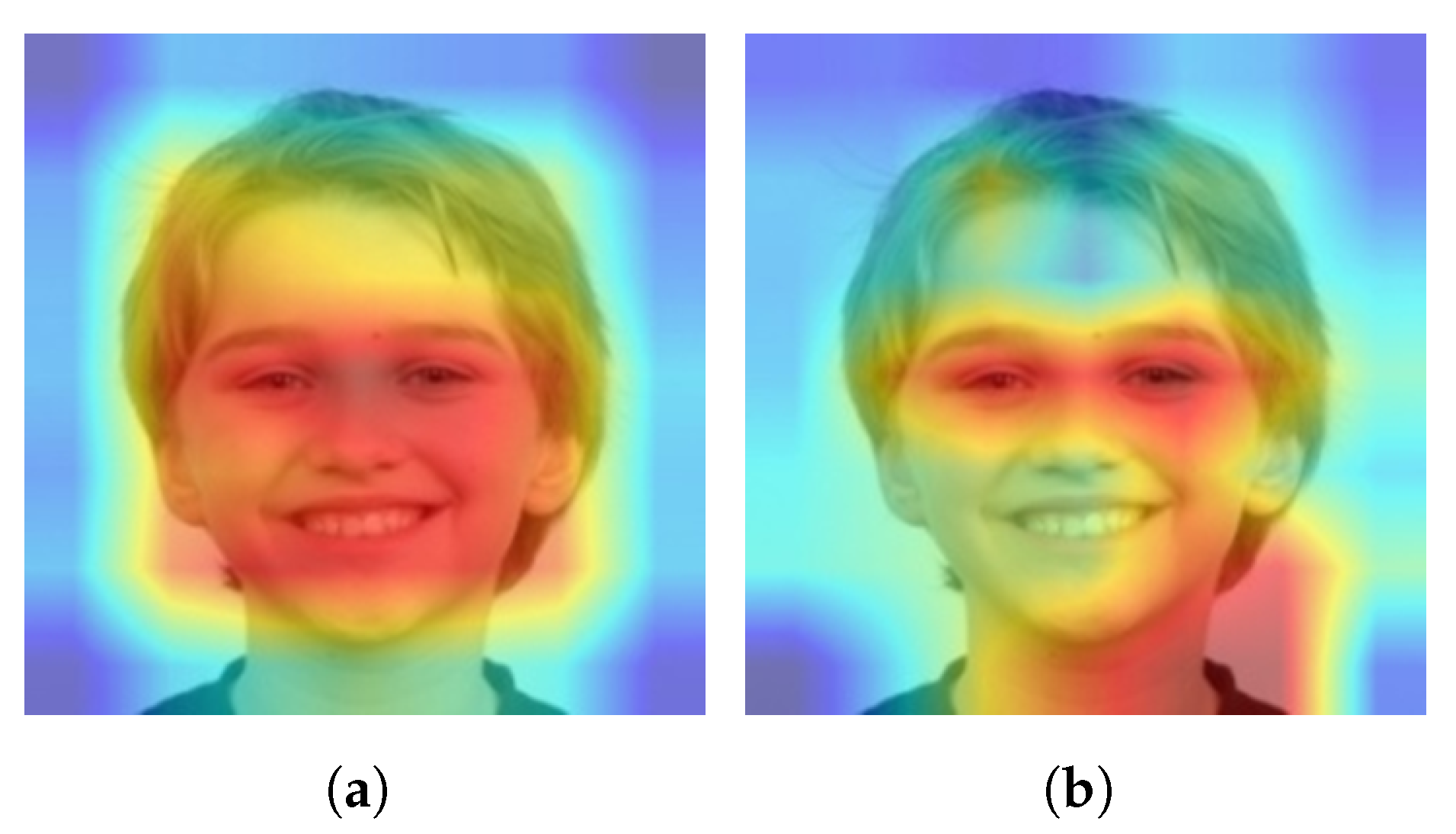

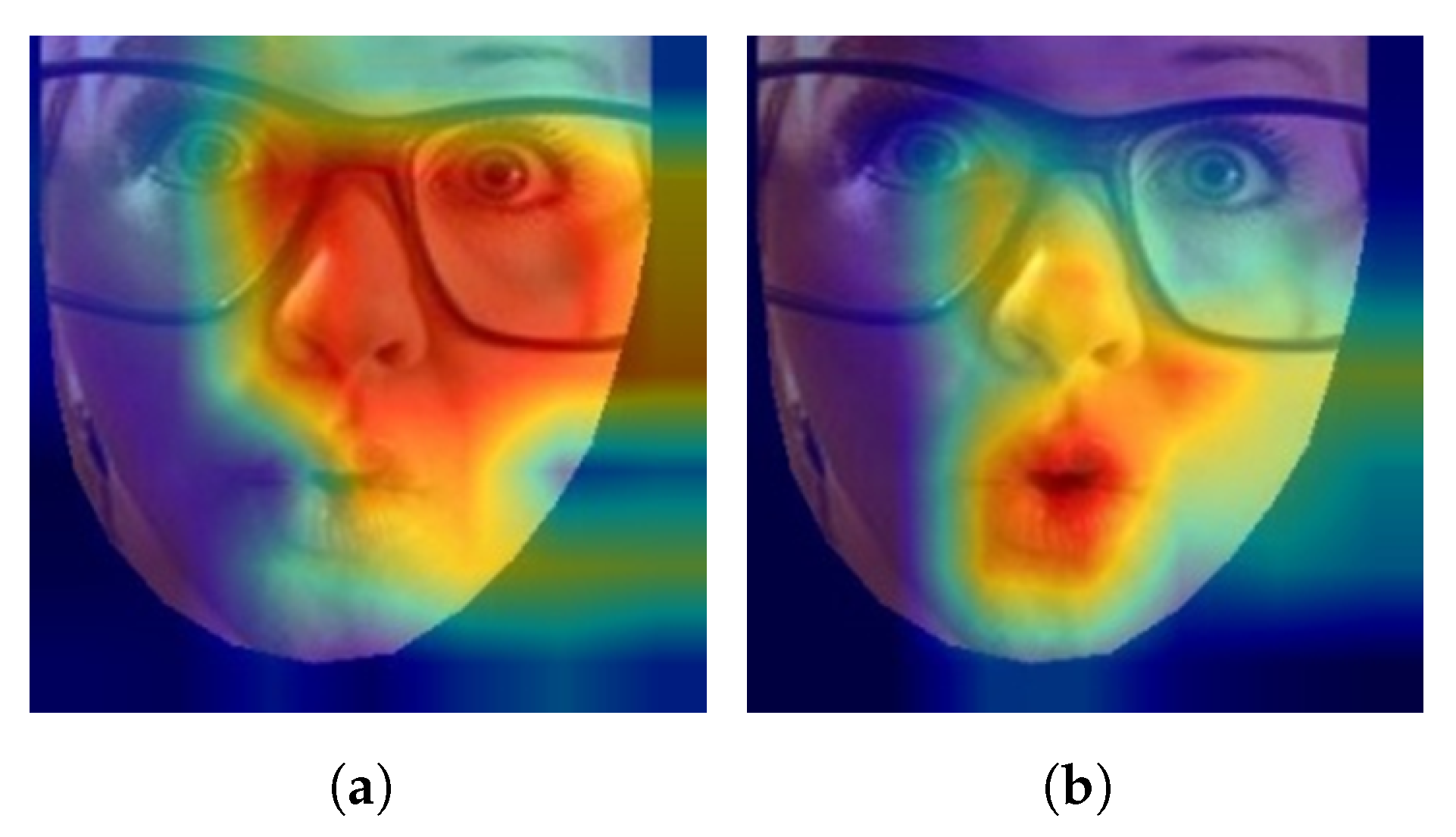

4.2. Emotion Feature Extraction

4.3. Ablation Study

Dataset: RAFD. Teacher model: VOLO-D2. Student model: MobileViT-Local-S.

| Distillation Temperature and Balance Coefficient | Top-1 Accuracy |
|---|---|
| temp = 10.0, alpha = 0.7 | 97.93% |
| temp = 9.0, alpha = 0.7 | 98.76% |
| temp = 8.0, alpha = 0.7 | 98.55% |
| temp = 7.0, alpha = 0.7 | 99.17% |
| temp = 6.0, alpha = 0.7 | 98.96% |
| temp = 5.0, alpha = 0.7 | 98.55% |
| temp = 4.0, alpha = 0.7 | 98.34% |
| temp = 7.0, alpha = 0.9 | 98.76% |
| temp = 7.0, alpha = 0.8 | 98.55% |
| temp = 7.0, alpha = 0.7 | 99.17% |
| temp = 7.0, alpha = 0.6 | 98.14% |
| temp = 7.0, alpha = 0.5 | 98.76% |
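To make the roles of the distillation temperature (`temp`) and balance coefficient (`alpha`) concrete, the sketch below implements a common soft-distillation loss. It is an assumption that `alpha` weights the distillation term and `1 - alpha` the hard-label term, and that the soft term is a temperature-scaled KL divergence multiplied by `temp` squared; the source does not show the exact formula, so this should be read as one plausible form rather than the authors' definition.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(student_logits, label):
    """Hard-label loss on the student's unscaled prediction."""
    return -math.log(softmax(student_logits)[label])


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def soft_distillation_loss(student_logits, teacher_logits, label,
                           temp=7.0, alpha=0.7):
    """(1 - alpha) * CE + alpha * temp^2 * KL(teacher_T || student_T).

    Assumed weighting; defaults match the best RAFD setting in the table.
    """
    hard = cross_entropy(student_logits, label)
    soft = kl_divergence(softmax(teacher_logits, temp),
                         softmax(student_logits, temp))
    return (1 - alpha) * hard + alpha * temp ** 2 * soft
```

A higher temperature flattens both distributions, so the student also learns from the teacher's relative scores on the non-target classes; `alpha` sets how much that signal counts against the ground-truth label.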

| Model | RAF-DB (Top-1 Accuracy) | FER2013 (Top-1 Accuracy) | RAFD (Top-1 Accuracy) |
|---|---|---|---|
| Teacher Model | | | |
| RegNetY-16GF | 79.24% | 62.68% | 97.52% |
| CoAtNet-2 | 82.89% | 64.76% | 98.96% |
| VOLO-D2 | 79.40% | 62.13% | 97.72% |
| Student Model with KD | | | |
| MobileViT-XXS | 73.89% | 57.51% | 95.45% |
| MobileViT-XXS with RegNetY-16GF | 76.17% | 58.94% | 96.89% |
| MobileViT-XXS with CoAtNet-2 | 77.05% | 61.47% | 96.07% |
| MobileViT-XXS with VOLO-D2 | 77.64% | 60.50% | 95.65% |
| MobileViT-Local-XXS | 74.51% | 57.94% | 95.86% |
| MobileViT-Local-XXS with RegNetY-16GF | 76.14% | 59.51% | 97.52% |
| MobileViT-Local-XXS with CoAtNet-2 | 77.31% | 61.49% | 97.10% |
| MobileViT-Local-XXS with VOLO-D2 | 78.75% | 63.20% | 96.69% |
| MobileViT-XS | 75.36% | 58.71% | 97.10% |
| MobileViT-XS with RegNetY-16GF | 77.41% | 60.60% | 97.31% |
| MobileViT-XS with CoAtNet-2 | 78.36% | 62.59% | 97.52% |
| MobileViT-XS with VOLO-D2 | 78.75% | 61.28% | 97.72% |
| MobileViT-Local-XS | 75.72% | 58.92% | 97.72% |
| MobileViT-Local-XS with RegNetY-16GF | 78.23% | 60.71% | 97.93% |
| MobileViT-Local-XS with CoAtNet-2 | 79.50% | 62.70% | 98.34% |
| MobileViT-Local-XS with VOLO-D2 | 79.79% | 63.91% | 98.34% |
| MobileViT-S | 77.05% | 60.30% | 97.93% |
| MobileViT-S with RegNetY-16GF | 78.78% | 62.13% | 98.14% |
| MobileViT-S with CoAtNet-2 | 79.34% | 63.90% | 98.14% |
| MobileViT-S with VOLO-D2 | 81.03% | 63.50% | 98.96% |
| MobileViT-Local-S | 77.61% | 60.41% | 98.55% |
| MobileViT-Local-S with RegNetY-16GF | 79.89% | 62.15% | 98.76% |
| MobileViT-Local-S with CoAtNet-2 | 80.64% | 64.08% | 98.55% |
| MobileViT-Local-S with VOLO-D2 | 81.42% | 65.19% | 99.17% |

5. Analysis and Discussion

5.1. Results Analysis

5.2. Limitations and Implications

- (1) The lightweight emotion recognition model based on visual self-attention and knowledge distillation addresses the challenge of running large networks on low-end devices, but it currently relies on a single modality, facial expressions. Real classroom environments are more complex, and multimodal information could improve emotion recognition. Future work should therefore explore lightweight multimodal recognition methods.

- (2) Generative adversarial data augmentation improved performance on the self-built dataset, but because the original data are limited, the generated samples may be of low quality, and low-quality samples could mislead model training. Future efforts should focus on collecting larger datasets and, for generative augmentation, on combining large public facial emotion datasets with online-learning-specific datasets to improve data diversity and authenticity.

5.3. Ethical Considerations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wang, X.; Liu, T.; Wang, J.; Tian, J. Understanding learner continuance intention: A comparison of live video learning, pre-recorded video learning and hybrid video learning in COVID-19 pandemic. Int. J. Hum.–Comput. Interact. 2022, 38, 263–281.

2. Shen, J.; Yang, H.; Li, J.; Cheng, Z. Assessing learning engagement based on facial expression recognition in MOOC’s scenario. Multimed. Syst. 2022, 28, 469–478.

3. Darwin, C.; Prodger, P. The Expression of the Emotions in Man and Animals; Oxford University Press: Cary, NC, USA, 1998.

4. Tian, Y.I.; Kanade, T.; Cohn, J.F. Recognizing action units for facial expression analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 97–115.

5. Walker, S.A.; Double, K.S.; Kunst, H.; Zhang, M.; MacCann, C. Emotional intelligence and attachment in adulthood: A meta-analysis. Personal. Individ. Differ. 2022, 184, 111174.

6. Whitehill, J.; Serpell, Z.; Lin, Y.C.; Foster, A.; Movellan, J.R. The faces of engagement: Automatic recognition of student engagement from facial expressions. IEEE Trans. Affect. Comput. 2014, 5, 86–98.

7. Dujaili, M.J.A. Survey on facial expressions recognition: Databases, features and classification schemes. Multimed. Tools Appl. 2023, 83, 7457–7478.

8. Sajjad, M.; Ullah, F.U.M.; Ullah, M.; Christodoulou, G.; Cheikh, F.A.; Hijji, M.; Muhammad, K.; Rodrigues, J.J. A comprehensive survey on deep facial expression recognition: Challenges, applications, and future guidelines. Alex. Eng. J. 2023, 68, 817–840.

9. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: London, UK, 2016.

10. Liu, T.; Wang, J.; Yang, B.; Wang, X. NGDNet: Nonuniform Gaussian-label distribution learning for infrared head pose estimation and on-task behavior understanding in the classroom. Neurocomputing 2021, 436, 210–220.

11. Zhu, B.; Lan, X.; Guo, X.; Barner, K.E.; Boncelet, C. Multi-rate attention based gru model for engagement prediction. In Proceedings of the 2020 International Conference on Multimodal Interaction, Utrecht, The Netherlands, 25–29 October 2020; pp. 841–848.

12. Liu, C.; Jiang, W.; Wang, M.; Tang, T. Group level audio-video emotion recognition using hybrid networks. In Proceedings of the 2020 International Conference on Multimodal Interaction, Online, 25–29 October 2020; pp. 807–812.

13. Liu, T.; Wang, J.; Yang, B.; Wang, X. Facial expression recognition method with multi-label distribution learning for non-verbal behavior understanding in the classroom. Infrared Phys. Technol. 2021, 112, 103594.

14. Savchenko, A.V.; Demochkin, K.V.; Grechikhin, I.S. Preference prediction based on a photo gallery analysis with scene recognition and object detection. Pattern Recognit. 2022, 121, 108248.

15. Ekman, P.; Friesen, W. Constants across cultures in the face and emotion. J. Personal. Soc. Psychol. 1971, 17, 124–129.

16. Ekman, P. Strong evidence for universals in facial expressions: A reply to Russell’s mistaken critique. Psychol. Bull. 1994, 115, 268–287.

17. Ekman, P.; Friesen, W.V. A new pan-cultural facial expression of emotion. Motiv. Emot. 1986, 10, 159–168.

18. Ekman, P.; Friesen, W.V. Who knows what about contempt: A reply to Izard and Haynes. Motiv. Emot. 1988, 12, 17–22.

19. Ekman, P.; Heider, K.G. The universality of a contempt expression: A replication. Motiv. Emot. 1988, 12, 303–308.

20. Matsumoto, D. More evidence for the universality of a contempt expression. Motiv. Emot. 1992, 16, 363–368.

21. Mercado-Diaz, L.R.; Veeranki, Y.R.; Marmolejo-Ramos, F.; Posada-Quintero, H.F. EDA-Graph: Graph Signal Processing of Electrodermal Activity for Emotional States Detection. J. Biomed. Health Inform. J-BHI 2024, 28, 14.

22. Veeranki, Y.R.; Posada-Quintero, H.F.; Swaminathan, R. Transition Network-Based Analysis of Electrodermal Activity Signals for Emotion Recognition. Innov. Res. Biomed. Eng. IRBM 2024, 45, 100849.

23. Veeranki, Y.R.; Mercado-Diaz, L.R.; Posada-Quintero, H.F. Autoencoder Based Nonlinear Feature Extraction from EDA Signals for Emotion Recognition. In Proceedings of the 2024 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Eindhoven, The Netherlands, 26–28 June 2024; pp. 1–5.

24. Allen, I.E.; Seaman, J. Digital Compass Learning: Distance Education Enrollment Report 2017; Babson Survey Research Group: Boston, MA, USA, 2017.

25. Shea, P.; Bidjerano, T.; Vickers, J. Faculty Attitudes Toward Online Learning: Failures and Successes; SUNY Research Network: Albany, NY, USA, 2016.

26. Lederman, D. Conflicted Views of Technology: A Survey of Faculty Attitudes; Inside Higher Ed: Washington, DC, USA, 2018.

27. Cason, C.L.; Stiller, J. Performance outcomes of an online first aid and CPR course for laypersons. Health Educ. J. 2011, 70, 458–467.

28. Maloney, S.; Storr, M.; Paynter, S.; Morgan, P.; Ilic, D. Investigating the efficacy of practical skill teaching: A pilot-study comparing three educational methods. Adv. Health Sci. Educ. 2013, 18, 71–80.

29. Dolan, E.; Hancock, E.; Wareing, A. An evaluation of online learning to teach practical competencies in undergraduate health science students. Internet High. Educ. 2015, 24, 21–25.

30. Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in representation learning: A report on three machine learning contests. In Proceedings of the Neural Information Processing: 20th International Conference, ICONIP 2013, Daegu, Republic of Korea, 3–7 November 2013; Proceedings, Part III; Springer: Berlin/Heidelberg, Germany, 2013; pp. 117–124.

31. Li, S.; Deng, W.; Du, J. Reliable Crowdsourcing and Deep Locality-Preserving Learning for Expression Recognition in the Wild. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE Computer Society: New York, NY, USA, 2017; pp. 2584–2593.

32. Li, S.; Deng, W. Reliable Crowdsourcing and Deep Locality-Preserving Learning for Unconstrained Facial Expression Recognition. IEEE Trans. Image Process. 2018, 28, 356–370.

33. Langner, O.; Dotsch, R.; Bijlstra, G.; Wigboldus, D.H.; Hawk, S.T.; Van Knippenberg, A. Presentation and validation of the Radboud Faces Database. Cogn. Emot. 2010, 24, 1377–1388.

34. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

35. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

36. Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 18–24 July 2021; pp. 10347–10357.

37. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 10–17 October 2021; pp. 10012–10022.

38. Yuan, L.; Hou, Q.; Jiang, Z.; Feng, J.; Yan, S. Volo: Vision outlooker for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 6575–6586.

39. Mehta, S.; Rastegari, M. Mobilevit: Light-weight, general-purpose, and mobile-friendly vision transformer. arXiv 2021, arXiv:2110.02178.

40. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

41. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

42. Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

43. Yu, J.; Markov, K.; Matsui, T. Articulatory and spectrum features integration using generalized distillation framework. In Proceedings of the 2016 IEEE 26th International Workshop on Machine Learning for Signal Processing (MLSP), Salerno, Italy, 13–16 September 2016; IEEE: New York, NY, USA, 2016; pp. 1–6.

44. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475.

45. Karras, T.; Laine, S.; Aila, T. A Style-Based Generator Architecture for Generative Adversarial Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4217–4228.

46. Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. arXiv 2020, arXiv:2012.12877.

| Model | Params | RAF-DB (Top-1 Accuracy) | FER2013 (Top-1 Accuracy) | RAFD (Top-1 Accuracy) |
|---|---|---|---|---|
| MobileViT-XXS | 1.268 M | 73.89% | 57.51% | 95.45% |
| MobileViT-Local-XXS | 1.296 M | 74.51% | 57.94% | 95.86% |
| MobileViT-XS | 2.309 M | 75.36% | 58.71% | 97.10% |
| MobileViT-Local-XS | 2.398 M | 75.72% | 58.92% | 97.72% |
| MobileViT-S | 5.566 M | 77.05% | 60.30% | 97.93% |
| MobileViT-Local-S | 5.797 M | 77.61% | 60.41% | 98.55% |

| Model | Params | FLOPs | Memory | Model Size | RAF-DB (Top-1 Accuracy) | FER2013 (Top-1 Accuracy) | RAFD (Top-1 Accuracy) | SCAUOL (Top-1 Accuracy) |
|---|---|---|---|---|---|---|---|---|
| EfficientNet-B0 | 5.247 M | 0.386 G | 77.99 MB | 20.17 MB | 74.28% | 57.37% | 95.45% | 73.24% |
| MobileNet v3-Large 1.0 | 5.459 M | 0.438 G | 55.03 MB | 20.92 MB | 72.62% | 57.57% | 93.79% | 74.55% |
| DeiT-T | 5.679 M | 1.079 G | 49.34 MB | 21.67 MB | 71.68% | - | - | 44.21% |
| ShuffleNet v2 2x | 7.394 M | 0.598 G | 39.51 MB | 28.21 MB | 75.13% | 58.30% | 96.07% | 75.43% |
| EfficientNet-B1 | 7.732 M | 0.570 G | 109.23 MB | 29.73 MB | 73.47% | 57.23% | 94.20% | 75.16% |
| MobileViT-XXS with VOLO-D2 | 1.268 M | 0.257 G | 53.65 MB | 4.85 MB | 77.64% | 60.50% | 95.65% | 74.60% |
| MobileViT-Local-XXS with VOLO-D2 | 1.296 M | 0.265 G | 53.65 MB | 4.96 MB | 78.75% | 63.20% | 96.69% | 76.36% |
| ResNet-18 | 11.690 M | 1.824 G | 34.27 MB | 44.59 MB | 79.40% | 61.19% | 98.14% | 78.16% |
| ResNet-50 | 25.557 M | 4.134 G | 132.76 MB | 97.49 MB | 80.15% | 61.35% | 97.72% | 82.97% |
| VOLO-D1 | 25.792 M | 6.442 G | 179.49 MB | 98.39 MB | 79.20% | 60.62% | 95.45% | 86.33% |
| Swin-T | 28.265 M | 4.372 G | 106.00 MB | 107.82 MB | 77.18% | - | 97.10% | 33.11% |
| ConvNeXt-T | 28.566 M | 4.456 G | 145.73 MB | 109.03 MB | 73.63% | 56.95% | 94.00% | 79.36% |
| EfficientNet-B5 | 30.217 M | 2.357 G | 274.87 MB | 115.93 MB | 75.07% | 58.90% | 94.82% | 79.96% |
| EfficientNet-B6 | 42.816 M | 3.360 G | 351.93 MB | 164.19 MB | 75.29% | 60.54% | 95.24% | 80.71% |
| ResNet-101 | 44.549 M | 7.866 G | 197.84 MB | 169.94 MB | 79.60% | 61.88% | 97.93% | 83.38% |
| MobileViT-XS with VOLO-D2 | 2.309 M | 0.706 G | 135.32 MB | 8.84 MB | 78.75% | 61.28% | 97.72% | 79.96% |
| MobileViT-Local-XS with VOLO-D2 | 2.398 M | 0.728 G | 135.32 MB | 9.18 MB | 79.79% | 63.91% | 98.34% | 80.43% |
| ConvNeXt-S | 50.180 M | 8.684 G | 233.58 MB | 191.54 MB | 74.97% | 58.74% | 92.75% | 86.58% |
| VOLO-D2 | 57.559 M | 13.508 G | 300.40 MB | 219.57 MB | 79.40% | 60.04% | 97.72% | 90.49% |
| ResNet-152 | 60.193 M | 11.604 G | 278.23 MB | 229.62 MB | 79.63% | 61.91% | 97.93% | 87.42% |
| CoAtNet-2 | 73.391 M | 15.866 G | 589.92 MB | 280.23 MB | 82.89% | 64.76% | 98.96% | 92.33% |
| RegNetY-16GF | 83.467 M | 15.912 G | 322.94 MB | 318.87 MB | 79.24% | 62.68% | 97.52% | 84.69% |
| MobileViT-S with VOLO-D2 | 5.566 M | 1.421 G | 162.18 MB | 21.28 MB | 81.03% | 63.50% | 98.96% | 83.78% |
| MobileViT-Local-S with VOLO-D2 | 5.797 M | 1.473 G | 162.18 MB | 22.16 MB | 81.42% | 65.19% | 99.17% | 85.33% |
| MobileViT-Local-S with VOLO-D2 * | 5.797 M | 1.473 G | 162.18 MB | 22.16 MB | 81.91% | 66.20% | 99.17% | 88.72% |

Dataset: RAF-DB. Teacher model: VOLO-D2. Student model: MobileViT-Local-S.

| Distillation Temperature and Balance Coefficient | Top-1 Accuracy |
|---|---|
| temp = 10.0, alpha = 0.7 | 81.58% |
| temp = 9.0, alpha = 0.7 | 81.00% |
| temp = 8.0, alpha = 0.7 | 80.64% |
| temp = 7.0, alpha = 0.7 | 81.91% |
| temp = 6.0, alpha = 0.7 | 81.39% |
| temp = 5.0, alpha = 0.7 | 81.68% |
| temp = 4.0, alpha = 0.7 | 80.77% |
| temp = 7.0, alpha = 0.9 | 81.16% |
| temp = 7.0, alpha = 0.8 | 81.16% |
| temp = 7.0, alpha = 0.7 | 81.91% |
| temp = 7.0, alpha = 0.6 | 81.03% |
| temp = 7.0, alpha = 0.5 | 79.73% |

Dataset: FER2013. Teacher model: VOLO-D2. Student model: MobileViT-Local-S.

| Distillation Temperature and Balance Coefficient | Top-1 Accuracy |
|---|---|
| temp = 10.0, alpha = 0.7 | 65.51% |
| temp = 9.0, alpha = 0.7 | 66.20% |
| temp = 8.0, alpha = 0.7 | 65.24% |
| temp = 7.0, alpha = 0.7 | 65.54% |
| temp = 6.0, alpha = 0.7 | 65.35% |
| temp = 5.0, alpha = 0.7 | 65.43% |
| temp = 4.0, alpha = 0.7 | 65.18% |
| temp = 9.0, alpha = 0.9 | 66.15% |
| temp = 9.0, alpha = 0.8 | 65.68% |
| temp = 9.0, alpha = 0.7 | 66.20% |
| temp = 9.0, alpha = 0.6 | 65.26% |
| temp = 9.0, alpha = 0.5 | 64.00% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, J.; Chen, X.; Zhang, Z. A Lightweight Teaching Assessment Framework Using Facial Expression Recognition for Online Courses. Appl. Sci. 2025, 15, 12461. https://doi.org/10.3390/app152312461
