1. Introduction

Mine rescue robots are indispensable for post-disaster environmental assessment, personnel localization, and hazard detection [1,2]. The stability and reliability of video data acquired at disaster sites directly determine the accuracy of rescue operations and command decisions [3,4]. However, the inherently complex underground environment often exposes these robots to severe vibration, uneven illumination, and dynamic disturbances, leading to image jitter, motion blur, and loss of critical visual information during data acquisition [5]. Therefore, developing robust visual stabilization techniques is essential to ensure reliable perception and decision-making for mine rescue robots operating under hazardous conditions.

Achieving stable video quality in complex post-disaster mining environments has become a central challenge in robotic-vision research. Conventional video-stabilization techniques are generally divided into mechanical, optical, and electronic approaches [6]. Mechanical stabilization is achieved through structural compensation mechanisms, whereas optical stabilization is realized by modifying the light path to reduce hardware latency and preserve image fidelity [7]. Although these approaches are effective, they require intricate hardware configurations, entail high implementation costs, and pose considerable challenges in installation and maintenance. In contrast, electronic stabilization compensates for motion by estimating inter-frame transformations and performing image resampling. Representative methods include feature-based matching algorithms such as ORB, SURF, and SIFT, as well as motion-modeling techniques based on Kalman and particle filtering [8]. A CLAHE–Kalman filter hybrid algorithm for onboard video stabilization in mining equipment was proposed [9], and a feature-region-based filtering approach for ground-based real-time telescopic imaging was investigated [10]. An electronic stabilization technique combining ORB features with sliding-mean filtering for flapping-wing robots was designed [11]. The SUSAN operator was applied to extract inter-frame correspondences, and a pyramid-structured optical-flow model was established [12]. Motion estimation was further enhanced through ORB-based feature matching [13], and an improved vehicle-mounted stabilization algorithm for mining scenarios was introduced by integrating ORB features with particle filtering [14]. Although these methods effectively reduce frame-to-frame jitter, their performance is still constrained by sparse feature distributions, unstable correspondences, and limited computational efficiency in highly dynamic and low-visibility mining environments.

Recent advances in deep learning have led to substantial breakthroughs in video stabilization. The integration of deep neural networks has improved inter-frame correspondence accuracy and enhanced adaptability in visually complex and dynamic conditions [15]. Deep-learning-based approaches achieve precise motion estimation at both global and local feature levels through convolutional and temporal neural architectures [16]. A Neural Video Depth Stabilizer (NVDS) framework was proposed for stabilizing water-surface imagery [17]. A depth-assisted stabilization method that jointly estimates camera trajectories and scene depth using PoseNet and DepthNet, followed by trajectory smoothing and viewpoint synthesis, was developed [18]. A deep stabilization framework fusing gyroscope sensor data with visual optical-flow cues to enhance motion-compensation accuracy was introduced [19]. In addition, a meta-learning-based adaptive testing scheme (MAML) was proposed to improve the generalization of pixel-level stabilization networks [20], while a self-supervised framework enabling interest-point detection and descriptor learning without manual annotation was presented [21]. Although these deep-learning-based techniques achieve high matching accuracy and robustness under complex visual conditions, their real-world applications remain restricted by heavy computational demands and limited adaptability to specific operational contexts.

To mitigate video jitter caused by equipment vibrations in disaster environments, this study proposes a configurable dual-channel deep video stabilization algorithm. The ORB channel and the SuperPoint channel can be individually activated according to computational resources or environmental complexity, with only one channel used at any given time. The framework extends the conventional ORB–RANSAC–Kalman and SuperPoint-based methods by introducing a coordinated integration of adaptive non-maximum suppression (NMS), boundary keypoint filtering, and Gaussian motion smoothing, which jointly optimize spatial keypoint uniformity and motion stability—an aspect rarely addressed simultaneously in previous studies.

Moreover, the algorithm is specifically designed for mining environments, addressing the limitations of traditional feature-based approaches in handling sparse keypoints and unstable motion trajectories. These improvements not only enhance visual stability but also provide reliable technical support for visual monitoring, disaster assessment, and intelligent decision-making of mine rescue robots operating under harsh underground conditions.

2. Related Works

In complex mining environments, video data collected by rescue robots are often affected by low illumination and frequent motion jitter, which pose significant challenges to reliable feature detection and matching. To ensure stable visual perception, representative and robust feature points must be extracted before inter-frame motion estimation. This section first introduces an improved ORB-based approach that establishes a reliable baseline for keypoint detection and matching. It then presents the SuperPoint framework, a network based on deep feature learning that serves as the core module for feature detection and description and further enhances the robustness of feature detection and the overall anti-shake performance.

2.1. Feature Matching Algorithm Based on Improved ORB

Conventional motion detection methods based on direct pixel-level comparison often fail in complex underground environments. To address this, a feature-based motion estimation method employing the Oriented FAST and Rotated BRIEF (ORB) descriptor is used to achieve reliable keypoint detection and matching between consecutive frames. Grayscale preprocessing removes redundant color information, while an improved FAST corner detector and centroid-based orientation correction enhance feature robustness. Feature matching is performed using the minimum Hamming distance, and mismatches are eliminated through the Random Sample Consensus (RANSAC) algorithm to ensure geometric consistency. A Kalman filter is then introduced to smooth and predict inter-frame motion trajectories, effectively reducing random jitter and local drift without additional hardware complexity.

- (1) Image Preprocessing: Input frames are converted to grayscale to suppress illumination variation and enhance salient regions. This step removes redundant color information while emphasizing intensity-based structural details for more stable feature extraction.

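- (2) Keypoint Detection and Orientation: Keypoints are detected using an improved FAST corner detector. For each keypoint, the raw moment of an image region $S$ is defined as

$$m_{pq} = \sum_{(x,y) \in S} x^{p} y^{q} I(x,y),$$

where $I(x,y)$ denotes the pixel intensity. The centroid and principal orientation are computed as

$$C = \left(\frac{m_{10}}{m_{00}}, \frac{m_{01}}{m_{00}}\right), \qquad \theta = \operatorname{atan2}(m_{01}, m_{10}),$$

ensuring rotation invariance and improved robustness under varying lighting conditions.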

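- (3) Descriptor Generation: A multi-scale image pyramid is constructed, and each BRIEF descriptor is rotated by $\theta$ to form a rotation-invariant binary vector. Descriptor similarity between consecutive frames is measured by the Hamming distance:

$$D(d_1, d_2) = \sum_{i=1}^{n} d_1(i) \oplus d_2(i),$$

where $d_1$ and $d_2$ are binary descriptors of length $n$ and $\oplus$ denotes the bitwise exclusive OR. This compact representation enables efficient and robust keypoint matching.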

- (4) Outlier Removal and Motion Estimation: To ensure geometric consistency, mismatched feature pairs are removed using the RANSAC algorithm. The affine transformation parameters between consecutive frames are estimated based on the remaining inlier matches, yielding stable inter-frame motion vectors.

- (5) Temporal Filtering and Motion Compensation: To reduce random jitter and local drift, a linear Kalman model predicts inter-frame motion:

$$x_k = A x_{k-1} + w_k, \qquad z_k = H x_k + v_k,$$

where $x_k$ is the motion state, $z_k$ is the measured inter-frame motion, $A$ and $H$ are the transition and observation matrices, and $w_k$, $v_k$ represent zero-mean Gaussian noise. This temporal filtering effectively smooths the estimated trajectory and stabilizes the video sequence without additional hardware cost.
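The temporal filtering in step (5) can be illustrated with a minimal scalar sketch. It assumes a random-walk motion model in which the transition and observation matrices both reduce to 1, and the noise variances `q` and `r` are hypothetical values chosen only for illustration; it is not the configuration used in this study.

```python
def kalman_smooth(measurements, q=1e-3, r=0.25):
    """Scalar Kalman filter with A = H = 1 (random-walk motion model).

    q and r are assumed process and measurement noise variances.
    """
    x, p = measurements[0], 1.0  # initial state estimate and its variance
    smoothed = [x]
    for z in measurements[1:]:
        # Predict: the state is carried over and its variance grows by q.
        p = p + q
        # Update: blend the prediction and the measurement via the gain.
        gain = p / (p + r)
        x = x + gain * (z - x)
        p = (1.0 - gain) * p
        smoothed.append(x)
    return smoothed


# Hypothetical jittery horizontal displacements (pixels).
jittery = [0.0, 2.0, -1.5, 1.8, -2.0, 1.6, -1.7, 2.1]
print([round(v, 3) for v in kalman_smooth(jittery)])
```

In a full pipeline the same filter would be applied to each motion parameter (for example horizontal shift, vertical shift, and rotation) independently; a larger `r` relative to `q` yields stronger smoothing at the cost of slower response to intentional camera motion.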

Compared with conventional ORB, the proposed improvements increase keypoint density and temporal stability while maintaining low computational cost, providing a solid foundation for subsequent deep-learning-based video stabilization methods.
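Steps (2) and (3) above can be sketched in a few lines. The example below is a simplified illustration rather than the implementation used in this study: the patch is a plain list of rows, coordinates are taken relative to the patch centre (an assumption), and binary descriptors are represented as Python integers.

```python
import math


def patch_orientation(patch):
    """Intensity-centroid orientation of a square patch (list of rows).

    Coordinates are measured from the patch centre, so the angle points
    from the centre towards the intensity centroid.
    """
    half = (len(patch) - 1) / 2.0
    m10 = m01 = 0.0
    for row_idx, row in enumerate(patch):
        for col_idx, intensity in enumerate(row):
            m10 += (col_idx - half) * intensity  # first moment along x
            m01 += (row_idx - half) * intensity  # first moment along y
    return math.atan2(m01, m10)


def hamming_distance(desc_a, desc_b):
    """Number of differing bits between two binary descriptors (as ints)."""
    return bin(desc_a ^ desc_b).count("1")


bright_right = [[0, 0, 9], [0, 0, 9], [0, 0, 9]]
print(patch_orientation(bright_right))     # centroid lies along +x
print(hamming_distance(0b1011, 0b0010))    # bits 0 and 3 differ
```

Matching then amounts to pairing each descriptor in one frame with the descriptor in the next frame that has the smallest Hamming distance.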

2.2. SuperPoint-Based Feature Extraction Framework for Mine Rescue Robots

The enhanced SuperPoint algorithm serves as the core module for feature detection and descriptor extraction in mine rescue robots, enabling high-precision motion estimation and compensation under complex post-disaster conditions. The keypoints and descriptors produced by SuperPoint exhibit strong robustness and maintain stable inter-frame correspondences, thereby suppressing high-frequency jitter induced by mechanical vibration, illumination variation, and structural deformation. Built upon a Fully Convolutional Network (FCN), SuperPoint integrates keypoint detection and description into a unified, end-to-end pipeline comprising an input module, a shared convolutional encoder, a detector head, and a descriptor head. This design removes the need for multi-stage “detector–descriptor” processing and yields dense, consistent feature representations that improve motion stability, visual clarity, and operational reliability in disaster monitoring.

Unlike conventional feature extraction pipelines that separate detection and description into two stages, the proposed unified formulation allows both processes to share the same learned feature space, thereby improving temporal coherence and reducing intermediate information loss. Although the original SuperPoint has verified the feasibility of such end-to-end learning, the present study extends this paradigm to low-light and vibration-prone mining environments, where multi-stage frameworks often suffer from latency and inconsistent keypoint–descriptor alignment. Consequently, the integration is not merely an implementation simplification but a necessary adaptation that enhances robustness, efficiency, and real-time performance in practical rescue robot vision systems.

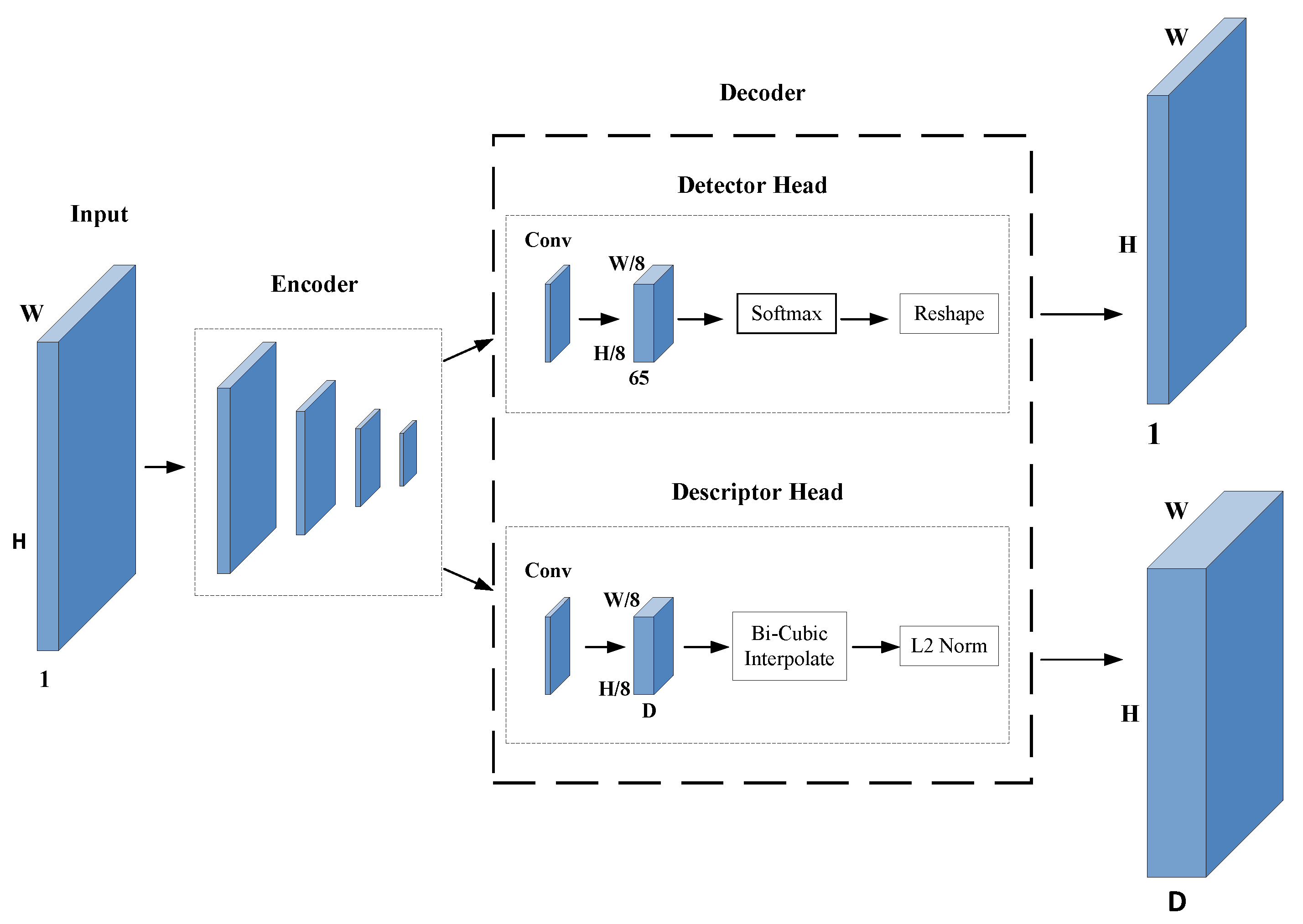

The overall network architecture is illustrated in Figure 1. As shown in the diagram, the proposed network adopts an encoder–decoder architecture consisting of a shared convolutional encoder and two task-specific heads, namely the Detector Head and the Descriptor Head. Each block in the encoder represents two consecutive $3 \times 3$ convolutions followed by a $2 \times 2$ max-pooling operation (stride = 2). The number of feature channels increases progressively from 64 to 128 and 256, while the spatial resolution is reduced to $H/8 \times W/8$. This hierarchical structure aggregates multiscale contextual information and enhances robustness under low-light and vibration conditions. The shared encoder output is then divided into two branches corresponding to the detector and descriptor heads.

A single-channel grayscale image $I$ of size $H \times W$ is fed into the encoder for multiscale feature extraction. The encoder follows a VGG-like configuration composed of four convolutional modules, each containing two convolution layers, with max-pooling (stride = 2) used for downsampling. The output feature map of size $H/8 \times W/8$, which matches the $8 \times 8$ cells decoded by the Detector Head, is shared by the two branches.

The Detector Head applies a $3 \times 3$ convolution (256 channels) and a $1 \times 1$ convolution (65 channels), corresponding to 64 spatial cells and 1 background channel. After softmax normalization, the background channel is removed, and the tensor is reshaped and upsampled to the original image size. Non-maximum suppression (NMS) is then applied to retain the most confident local maxima, yielding the final keypoint set.
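The decoding performed by the Detector Head can be sketched as follows. This is an illustrative sketch in plain Python, assuming that the 64 non-background channels index the pixel positions of an $8 \times 8$ cell in row-major order; the function name and the nested-list layout `logits[c][i][j]` are hypothetical.

```python
import math


def decode_heatmap(logits, cell=8):
    """Turn a (cell*cell + 1)-channel detector output into a full-size heatmap.

    logits[c][i][j] is the raw score of channel c at coarse location (i, j).
    The last channel is the "no keypoint" (background) bin.
    """
    channels = cell * cell + 1
    rows, cols = len(logits[0]), len(logits[0][0])
    heat = [[0.0] * (cols * cell) for _ in range(rows * cell)]
    for i in range(rows):
        for j in range(cols):
            scores = [logits[c][i][j] for c in range(channels)]
            peak = max(scores)
            exps = [math.exp(s - peak) for s in scores]  # stable softmax
            total = sum(exps)
            # Drop the background bin and scatter the remaining cell*cell
            # probabilities back to their pixel positions inside the cell.
            for c in range(cell * cell):
                heat[i * cell + c // cell][j * cell + c % cell] = exps[c] / total
    return heat


# One coarse location whose channel 10 carries a strong response.
demo_logits = [[[0.0]] for _ in range(65)]
demo_logits[10][0][0] = 5.0
demo_heat = decode_heatmap(demo_logits)
print(len(demo_heat), len(demo_heat[0]))
```

Because the background probability is discarded after the softmax, the values inside each cell sum to less than one; cells dominated by the background bin therefore contribute only weak responses to the subsequent NMS step.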

The Descriptor Head consists of a $3 \times 3$ convolution and a $1 \times 1$ convolution that generate $D$-dimensional descriptors ($D = 256$), followed by L2 normalization to enable cosine similarity in feature matching. Because this branch outputs a coarse $H/8 \times W/8$ descriptor map, bilinear interpolation is employed to sample 256-dimensional descriptors at the detected keypoint locations, forming a dense and consistent descriptor set that ensures strong correspondence between frames. Bilinear interpolation is adopted for descriptor upsampling because it preserves local spatial consistency and maintains smooth gradient transitions during dense descriptor generation. Compared with bicubic interpolation, it avoids excessive sharpening that could introduce spurious responses. Super-resolution-based upsampling methods, while capable of producing visually sharper textures, tend to alter feature distributions and significantly increase computational latency, which is undesirable for real-time deployment on embedded mine-rescue robotic platforms. Bilinear interpolation therefore offers a practical balance between feature fidelity and computational efficiency in this application scenario.
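Descriptor sampling at a keypoint can be sketched as below. The example assumes that the keypoint has already been mapped to coarse-grid coordinates (for instance by dividing pixel coordinates by 8, which is an assumption about the coordinate convention) and uses a hypothetical nested-list descriptor map `desc_map[row][col]`.

```python
import math


def sample_descriptor(desc_map, x, y):
    """Bilinearly sample a descriptor at continuous grid location (x, y).

    desc_map[r][c] is a list of D floats; the result is L2-normalised.
    """
    rows, cols = len(desc_map), len(desc_map[0])
    x = min(max(x, 0.0), cols - 1.0)  # clamp to the valid grid
    y = min(max(y, 0.0), rows - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    fx, fy = x - x0, y - y0
    out = []
    for d in range(len(desc_map[0][0])):
        top = (1 - fx) * desc_map[y0][x0][d] + fx * desc_map[y0][x1][d]
        bottom = (1 - fx) * desc_map[y1][x0][d] + fx * desc_map[y1][x1][d]
        out.append((1 - fy) * top + fy * bottom)
    norm = math.sqrt(sum(v * v for v in out)) or 1.0  # guard against zeros
    return [v / norm for v in out]


# 2 x 2 grid of 2-D descriptors; sample halfway between the two columns.
grid = [[[1.0, 0.0], [0.0, 1.0]],
        [[1.0, 0.0], [0.0, 1.0]]]
print(sample_descriptor(grid, 0.5, 0.0))
```

After normalization, the dot product of two sampled descriptors equals their cosine similarity, which is the quantity used for matching.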

During inference, SuperPoint directly outputs keypoint coordinates, confidence scores, and normalized descriptors, removing the need for separate detector–descriptor stages. This unified framework provides uniform keypoint distributions and robustness against illumination and motion variations. The NMS step further enhances stability and improves global motion estimation accuracy.

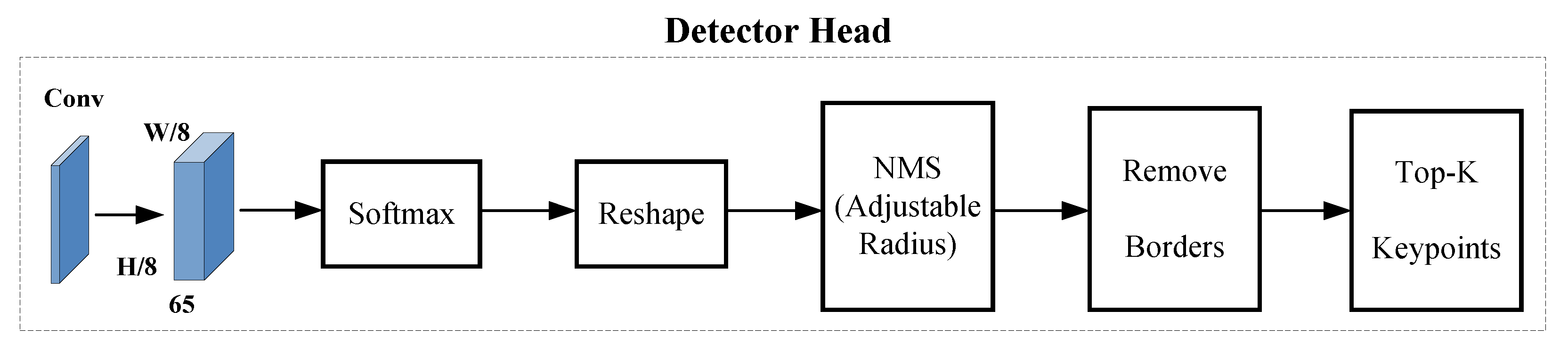

2.3. Optimized Detector Branch (Detector Head)

During mine rescue operations, visual systems often suffer from edge-point failures caused by vibrations or collapses in narrow tunnels, degrading feature-matching accuracy. A fixed NMS radius may produce overly dense detections in high-texture regions and insufficient detections in low-texture areas. To overcome this imbalance, an adaptive NMS radius and a dynamic threshold are introduced to adjust keypoint density according to local brightness and texture, enhancing feature stability for image stabilization and navigation tasks.

- (1) Edge Keypoint Removal: Keypoints located near image boundaries are excluded to avoid potential errors caused by cropping and field-of-view variations during stabilization. Let the set of all detected keypoints be defined as

$$P = \{(x_i, y_i, s_i)\}_{i=1}^{N},$$

where $(x_i, y_i)$ represent the pixel coordinates of the $i$-th keypoint, $s_i$ denotes its confidence score obtained from the detector response map, and $N$ is the number of detected keypoints. $W$ and $H$ are the image width and height, respectively, and $b$ is the boundary radius threshold. After removing keypoints within the boundary margin, the filtered valid keypoint set is expressed as

$$P_{\text{valid}} = \{(x_i, y_i, s_i) \in P \mid b \le x_i < W - b,\; b \le y_i < H - b\}.$$

- (2) Top-K High-Score Keypoint Selection: To enhance feature stability and reduce computational complexity, only the Top-$K$ keypoints with the highest confidence scores are retained. Let $S_{\text{valid}} = \{s_i\}$ denote the confidence score set. The Top-$K$ keypoints are selected as

$$P_{K} = \operatorname{TopK}(P_{\text{valid}}, S_{\text{valid}}, K),$$

where $P_{\text{valid}}$ represents keypoints after boundary filtering and $S_{\text{valid}}$ their corresponding confidence values.

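- (3) Parameterized NMS with Adaptive Threshold: A parameterized NMS mechanism with a dynamic detection threshold regulates keypoint density based on local brightness and texture. Let $M$ denote the score map and $\mathcal{N}_r(p)$ the neighborhood of pixel $p$ within Chebyshev distance $r$. The NMS response is

$$M_{\text{NMS}}(p) = \begin{cases} M(p), & M(p) = \max_{q \in \mathcal{N}_r(p)} M(q), \\ 0, & \text{otherwise}. \end{cases}$$

An adaptive keypoint threshold $\tau$ removes weak responses, and the final valid keypoint set is

$$P_{\text{final}} = \{p \mid M_{\text{NMS}}(p) > \tau\}.$$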

This approach removes unstable boundary keypoints and preserves uniformly distributed interior features, which reduces redundant computation and should ease real-time implementation on low-power platforms. Direct deployment on embedded devices was not conducted in this study, so this benefit is an expectation rather than a measured result. The architecture of the optimized detector branch is illustrated in Figure 2. The adaptive non-maximum suppression (NMS) ensures sufficient detections in low-texture regions while suppressing redundancy in bright areas, maintaining balanced keypoint distribution and stable motion estimation. On this basis, a SuperPoint-based video stabilization algorithm combining dual-channel feature matching and Gaussian smoothing is constructed to perform motion estimation and enhance visual stability.
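The three filtering operations of the optimized detector branch can be sketched as below, applied in the order used later in the stabilization workflow (NMS, border removal, Top-K). The rule that adapts the radius and threshold to local brightness and texture is not specified in closed form here, so `radius` and `threshold` are simply passed in by the caller; all function names are hypothetical.

```python
def nms_keypoints(score_map, radius, threshold):
    """Keep pixels that are the maximum of their (2r+1) x (2r+1)
    neighbourhood (Chebyshev distance <= radius) and exceed the threshold.
    Exact ties inside a neighbourhood are all kept."""
    height, width = len(score_map), len(score_map[0])
    kept = []
    for y in range(height):
        for x in range(width):
            score = score_map[y][x]
            if score <= threshold:
                continue
            neighbourhood = [
                score_map[v][u]
                for v in range(max(0, y - radius), min(height, y + radius + 1))
                for u in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            if score >= max(neighbourhood):
                kept.append((x, y, score))
    return kept


def remove_border(keypoints, width, height, border):
    """Drop keypoints closer than `border` pixels to any image edge."""
    return [
        (x, y, s) for x, y, s in keypoints
        if border <= x < width - border and border <= y < height - border
    ]


def top_k(keypoints, k):
    """Return the k keypoints with the highest confidence scores."""
    return sorted(keypoints, key=lambda kp: kp[2], reverse=True)[:k]


# Toy 6 x 6 score map with four responses.
scores = [[0.0] * 6 for _ in range(6)]
scores[0][0], scores[2][2], scores[2][3], scores[4][4] = 0.9, 0.8, 0.7, 0.6
candidates = nms_keypoints(scores, radius=1, threshold=0.1)
interior = remove_border(candidates, width=6, height=6, border=1)
print(top_k(interior, k=1))
```

In the toy example the response at (3, 2) is suppressed by its stronger neighbour, the corner response is discarded as a border point, and Top-K keeps the strongest remaining keypoint. An adaptive variant would compute `radius` and `threshold` per region instead of using one global value.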

3. Methodology

In the aftermath of mining accidents, rescue robots frequently operate in environments characterized by unstable support structures, insufficient illumination, and pervasive dust. Video sequences captured by onboard cameras in such conditions often exhibit substantial background displacement and motion blur. These distortions introduce motion noise into subsequent perception tasks, including environmental understanding, target detection, and path planning, thereby increasing the likelihood of false positives and false negatives and degrading both operational safety and task efficiency. To address these challenges, this study proposes a dual-channel video stabilization algorithm based on SuperPoint feature detection, capable of producing stabilized video outputs under varying vibration intensities. Here, “dual-channel” denotes two alternative feature branches, the traditional ORB features and the SuperPoint deep features, that can be switched according to environmental conditions; only one branch is active at a time within the unified stabilization pipeline. The SuperPoint-based video stabilization process consists of five integrated stages designed to enhance video stability for mine rescue robots operating under low-light and high-vibration conditions.

Step 1: Grayscale Conversion and Gaussian Smoothing removes color interference and suppresses high-frequency noise to ensure stable input for subsequent feature detection.

Step 2: Keypoint Detection and Refinement employs an enhanced SuperPoint detector to generate a probability heatmap, applies NMS to retain local maxima, removes unstable boundary points, and selects the Top-K keypoints with the highest confidence for robust inter-frame matching.

Step 3: Descriptor Sampling and Normalization uses bilinear interpolation to extract local feature descriptors from the convolutional feature map and applies L2 normalization to enhance robustness against illumination and scale variations, ensuring accurate and consistent correspondence between consecutive frames.

Step 4: Affine Transformation Estimation applies the RANSAC algorithm to compute affine parameters that describe inter-frame geometric relationships while filtering mismatches.

Step 5: Motion Filtering and Compensation performs Gaussian-weighted temporal smoothing to suppress high-frequency jitter while maintaining natural camera motion, and constructs a compensation matrix to warp each frame and produce a stabilized video sequence. The overall workflow is summarized in Algorithm 1.

| Algorithm 1: SuperPoint-based video stabilization process. |
| --- |
| Input: video frames. Output: stabilized video sequence. |
| 1. Convert each frame to grayscale. |
| 2. Apply Gaussian smoothing. |
| 3. Generate the probability heatmap with the enhanced SuperPoint detector; apply NMS. |
| 4. Remove border points; keep the Top-K high-confidence keypoints. |
| 5. Sample descriptors from feature map F at the keypoints (bilinear interpolation). |
| 6. Normalize each descriptor (L2 normalization). |
| 7. Estimate inter-frame motion from matched pairs (using RANSAC). |
| 8. Smooth the motion trajectories (Gaussian-weighted temporal filter). |
| 9. Compensate each frame with the resulting compensation matrix. |
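The trajectory smoothing and compensation at the end of the workflow can be illustrated for a single motion parameter. The sketch below assumes a symmetric Gaussian window with replicated end values; the window `radius` and `sigma` are hypothetical settings, and in practice the same procedure would be applied to each affine parameter separately.

```python
import math


def gaussian_kernel(radius, sigma):
    """Normalised 1-D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth_trajectory(motions, radius=2, sigma=1.0):
    """Accumulate per-frame motions, smooth the path, return corrections."""
    # Camera path = running sum of the inter-frame motions.
    path, position = [], 0.0
    for m in motions:
        position += m
        path.append(position)
    kernel = gaussian_kernel(radius, sigma)
    last = len(path) - 1
    smoothed = []
    for t in range(len(path)):
        # Replicate the end values so that the window is always full.
        smoothed.append(sum(
            w * path[min(max(t + k, 0), last)]
            for k, w in zip(range(-radius, radius + 1), kernel)
        ))
    # Correction to apply to each frame: smoothed path minus original path.
    corrections = [s - p for s, p in zip(smoothed, path)]
    return path, smoothed, corrections


# Hypothetical alternating horizontal jitter of +/- 1 pixel per frame.
path, smoothed, corrections = smooth_trajectory([1.0, -1.0] * 5)
print([round(c, 3) for c in corrections])
```

Each correction would then be assembled into the compensation transform used to warp the corresponding frame; larger corrections expose more of the black border visible after warping.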

Through the integration of deep feature extraction, adaptive motion modeling, and temporal filtering, the proposed method achieves superior matching precision and motion stability compared with traditional ORB-based approaches. Preliminary evaluation indicates promising robustness and applicability in complex underground environments, although further validation under real-world deployment conditions is needed.

4. Results and Discussions

To evaluate the effectiveness of the improved SuperPoint algorithm, both qualitative and quantitative assessments were conducted, benchmarking against traditional ORB-based methods and other deep-learning approaches. The implementation was carried out in PyTorch 1.8.1 and executed on an NVIDIA GeForce RTX 3090 GPU. The model was pre-trained on the FlyingThings3D dataset, which provides diverse synthetic motion and illumination variations suitable for initializing motion estimation networks, and subsequently fine-tuned on the KITTI dataset to improve real-scene generalization. In addition, real-world mining sequences collected under different vibration intensities were used for performance validation to ensure consistency with underground operational conditions. A stepwise learning-rate decay schedule was adopted based on convergence behavior during validation, balancing training stability and adaptability across domains. These configurations were selected to ensure that the network maintains robustness when transferring from generic motion datasets to low-light, vibration-prone mining environments.

The model’s robustness was further examined using video sequences from operational mining environments, categorized into three motion-intensity regimes (high, medium, and low) that reflect the diverse vibration conditions encountered by rescue robots in collapsed mines.

The corresponding training configuration is summarized in Table 1.

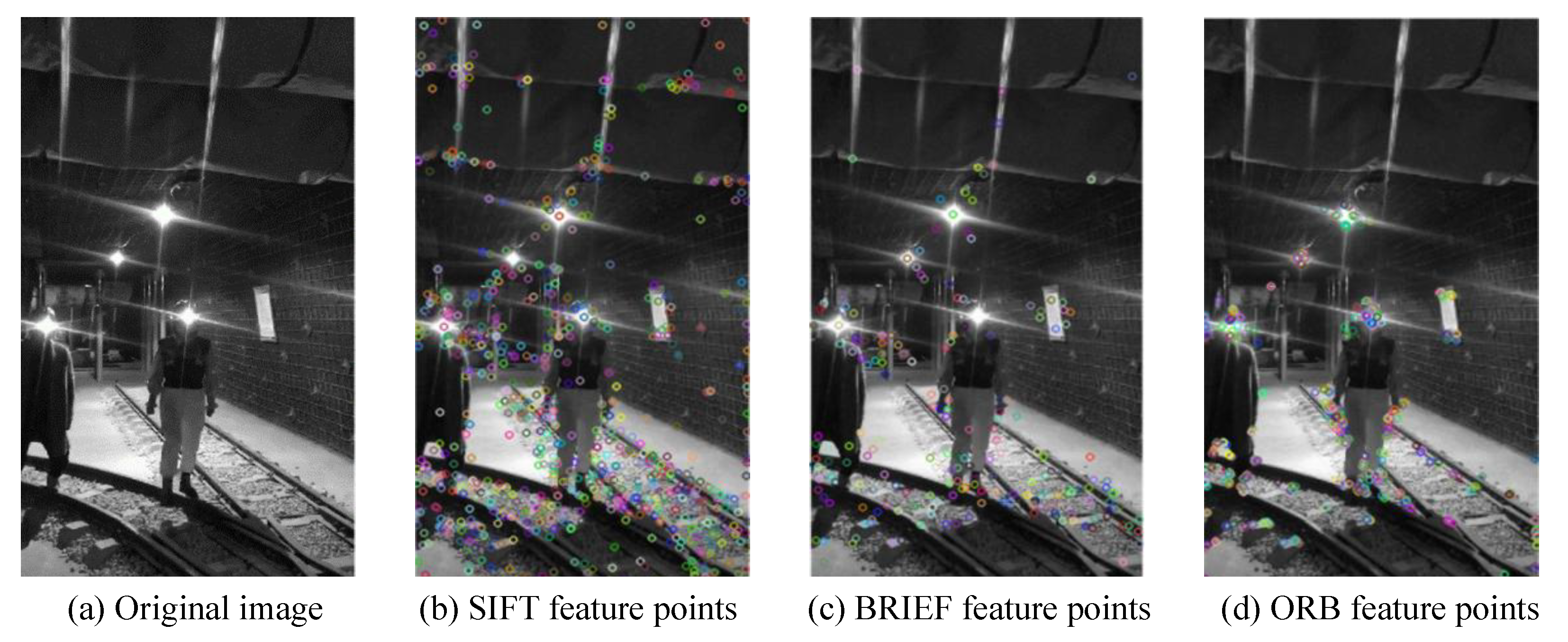

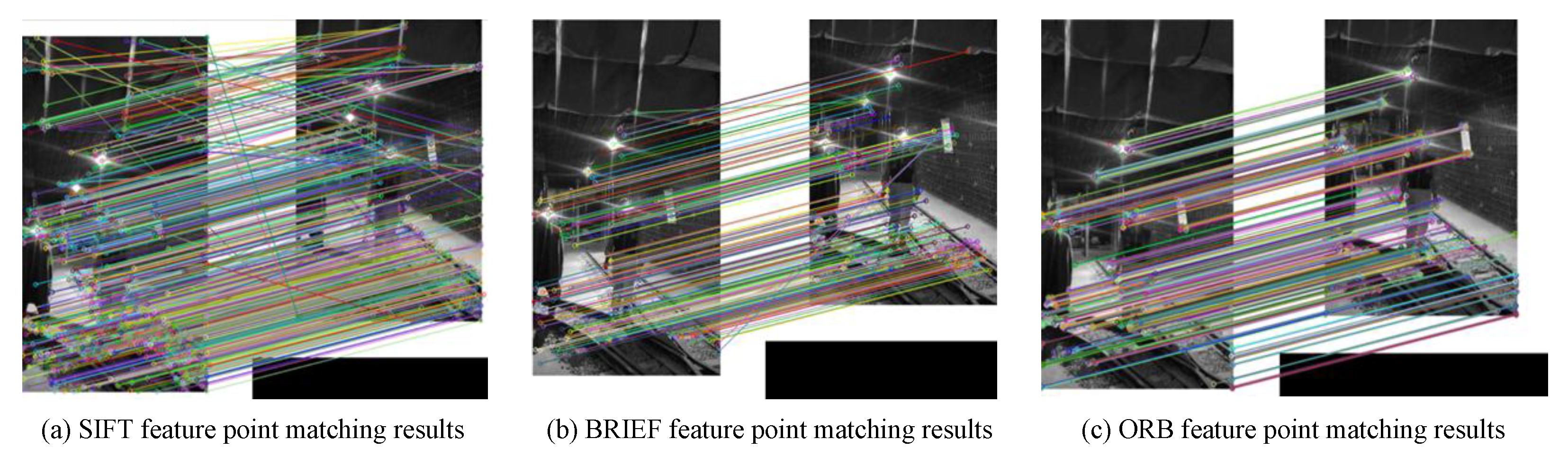

4.1. Feature Point Extraction Performance of Common Descriptors

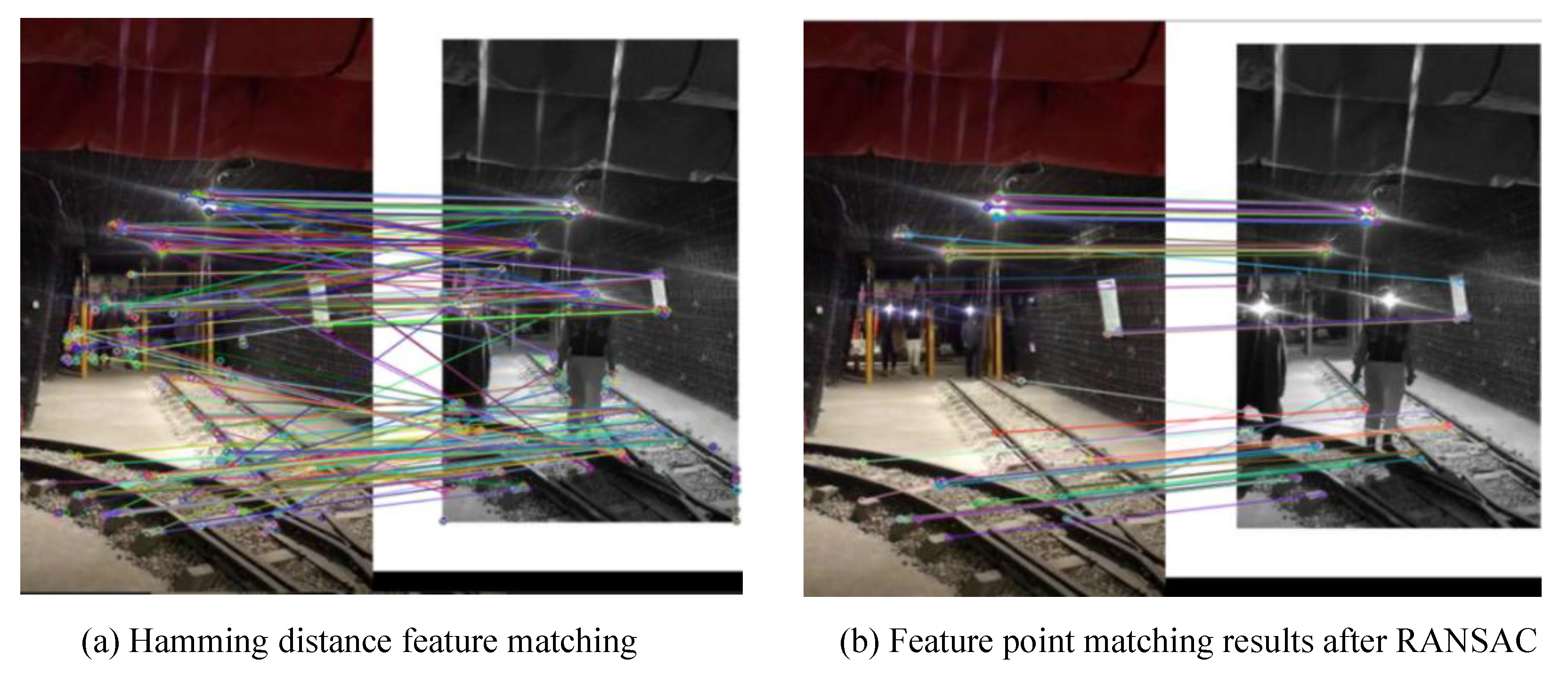

Following mine collapses, robotic vision systems frequently encounter challenges caused by mechanical walking vibrations, shaft oscillations, and falling rock impacts. To evaluate feature extraction robustness under such conditions, the performance of three commonly used descriptors (SIFT, BRIEF, and ORB) is illustrated in Figure 3, and their corresponding feature matching results are presented in Figure 4.

As shown in Figure 3 and Figure 4, binary descriptors demonstrate superior adaptability to low-illumination and uneven lighting conditions. Among them, the ORB descriptor maintains stable feature extraction under weak lighting and partial occlusion. However, in the absence of geometric constraints, ORB still produces a noticeable number of mismatches. In mine rescue scenarios, these mismatches can lead to inaccurate displacement estimation of collapsed areas, thereby increasing navigation risks and obstacle avoidance difficulties for robotic systems. To mitigate this problem, the RANSAC algorithm is employed to remove mismatches and refine geometric consistency, as illustrated in Figure 5.

As illustrated in Figure 5, RANSAC processing effectively eliminates mismatched correspondences, reducing the number of false matches and concentrating the valid feature distribution. This process substantially lowers motion estimation errors and enhances the stability of inter-frame matching. Quantitative results of the feature point extraction time for each algorithm are summarized in Table 2.
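The mismatch rejection discussed above can be sketched with a small, dependency-free RANSAC loop for an affine model. This is an illustrative sketch rather than the implementation used in the experiments: the iteration count, the 1-pixel tolerance, the fixed random seed, and the synthetic correspondences are all assumptions.

```python
import random


def solve3(m, rhs):
    """Solve a 3x3 linear system by Cramer's rule; None if singular."""
    def det(a):
        return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
                - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
                + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))
    d = det(m)
    if abs(d) < 1e-9:
        return None
    return [det([row[:c] + [rhs[i]] + row[c + 1:] for i, row in enumerate(m)]) / d
            for c in range(3)]


def affine_from_three(pairs):
    """Exact affine map (a, b, tx, c, d, ty) from three correspondences."""
    m = [[x, y, 1.0] for (x, y), _ in pairs]
    row_x = solve3(m, [dst[0] for _, dst in pairs])
    row_y = solve3(m, [dst[1] for _, dst in pairs])
    if row_x is None or row_y is None:
        return None
    return row_x + row_y


def apply_affine(model, point):
    a, b, tx, c, d, ty = model
    return a * point[0] + b * point[1] + tx, c * point[0] + d * point[1] + ty


def ransac_affine(pairs, iterations=200, tolerance=1.0, seed=0):
    """Return the affine model with the most inliers and those inliers."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iterations):
        model = affine_from_three(rng.sample(pairs, 3))
        if model is None:  # collinear sample, skip it
            continue
        inliers = []
        for src, dst in pairs:
            u, v = apply_affine(model, src)
            if (u - dst[0]) ** 2 + (v - dst[1]) ** 2 <= tolerance ** 2:
                inliers.append((src, dst))
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers


# Eight matches shifted by (2, -1) pixels plus two gross mismatches.
sources = [(0, 0), (10, 0), (0, 10), (10, 10), (5, 3), (7, 8), (2, 9), (8, 1)]
matches = [((x, y), (x + 2.0, y - 1.0)) for x, y in sources]
matches += [((3, 3), (40.0, 40.0)), ((6, 6), (-20.0, 5.0))]
model, inliers = ransac_affine(matches)
print(len(inliers), [round(v, 3) for v in model])
```

A production pipeline would normally refit the model on all inliers by least squares after the consensus step; the sketch stops at the consensus model to keep the example short.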

4.2. Stabilization Effect

To support both subjective and quantitative evaluation of stabilization performance, the test videos were categorized into three motion-intensity levels based on the mean inter-frame displacement and the robot’s motion state: low (<1 px), medium (1–3 px), and high (≥3 px). These levels correspond to three typical working conditions of the rescue robot: static monitoring, mobile operation, and impact interference, respectively. The frame counts for the three categories were 1823 for low, 532 for medium, and 380 for high motion intensity, as summarized in Table 3.
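The displacement thresholds above translate directly into a small classifier. The sketch below uses only the mean inter-frame displacement; the robot's motion state, which is also considered in the categorization, is not modeled, and the function names are hypothetical.

```python
def mean_displacement(displacements):
    """Average magnitude (pixels) of per-frame (dx, dy) displacements."""
    magnitudes = [(dx * dx + dy * dy) ** 0.5 for dx, dy in displacements]
    return sum(magnitudes) / len(magnitudes)


def motion_level(mean_displacement_px):
    """Map the mean inter-frame displacement to low / medium / high."""
    if mean_displacement_px < 1.0:
        return "low"
    if mean_displacement_px < 3.0:
        return "medium"
    return "high"


# Hypothetical per-frame displacements for a short clip.
clip = [(3.0, 4.0), (0.0, 0.0)]
print(motion_level(mean_displacement(clip)))  # mean is 2.5 px -> "medium"
```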

For comparison, two baseline algorithms were included: the traditional ORB feature-based stabilization pipeline and the deep-learning-based NeuFlow approach. All algorithms were tested under identical preprocessing and parameter configurations to ensure a fair comparison. The processing times for each algorithm under different motion-intensity levels are presented in Table 3, which illustrates the trade-off between computational efficiency and stabilization accuracy. Although the proposed method requires slightly more processing time than NeuFlow, the difference is minor and remains within an acceptable range for near-real-time applications. Given the notable improvements in video stability and feature robustness, the additional cost appears justified, suggesting that the method is suitable for deployment on mine rescue robot platforms.

To further analyze the contribution of each component within the proposed algorithm, an ablation study was conducted, as shown in Table 4. The experiment compares several configurations of the SuperPoint-based stabilization framework, including the baseline, border point removal, Top-K feature selection, and adaptive non-maximum suppression (NMS). The results demonstrate that each component contributes positively to performance improvement. Specifically, border point removal yields the largest increase in SSIM by effectively reducing unstable matches near image edges, while Top-K selection enhances PSNR by retaining only the most reliable keypoints. Adaptive NMS further refines keypoint distribution, leading to more stable motion estimation. When all three components are combined (Full configuration), the algorithm achieves the highest PSNR (40.06 dB) and SSIM (0.6776), confirming the complementary benefits of these modules.

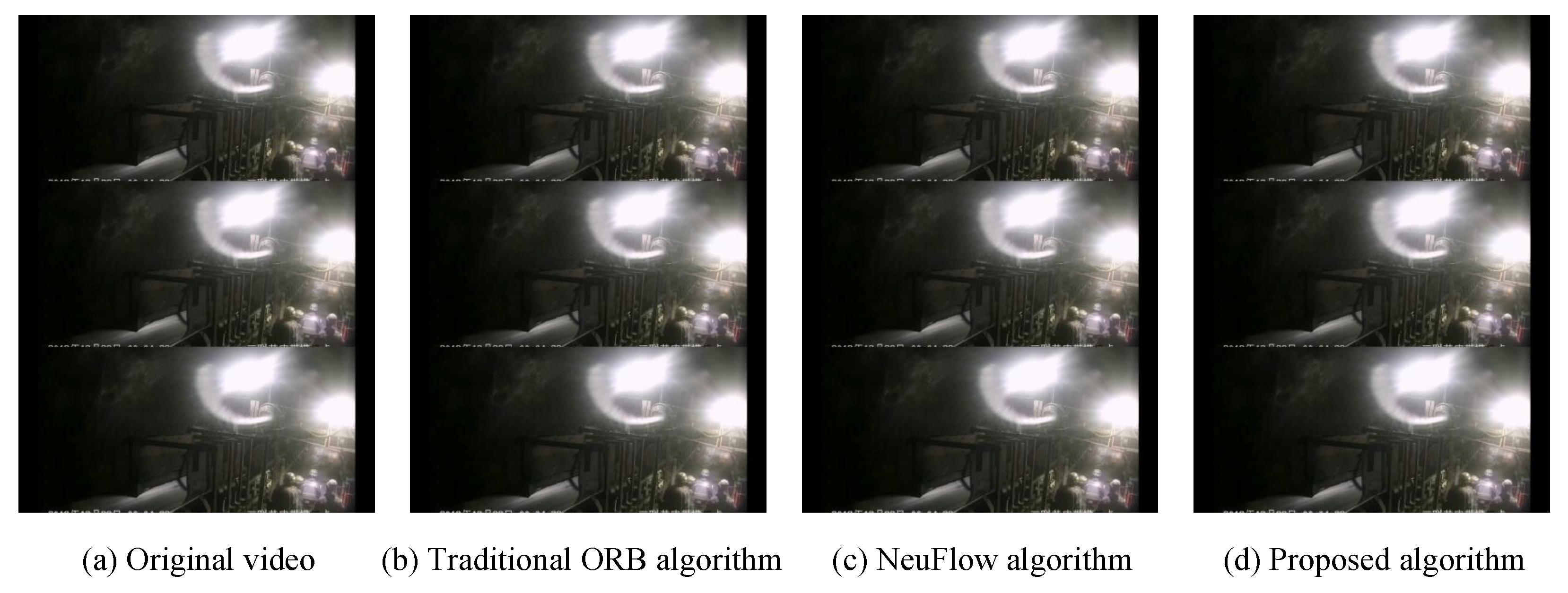

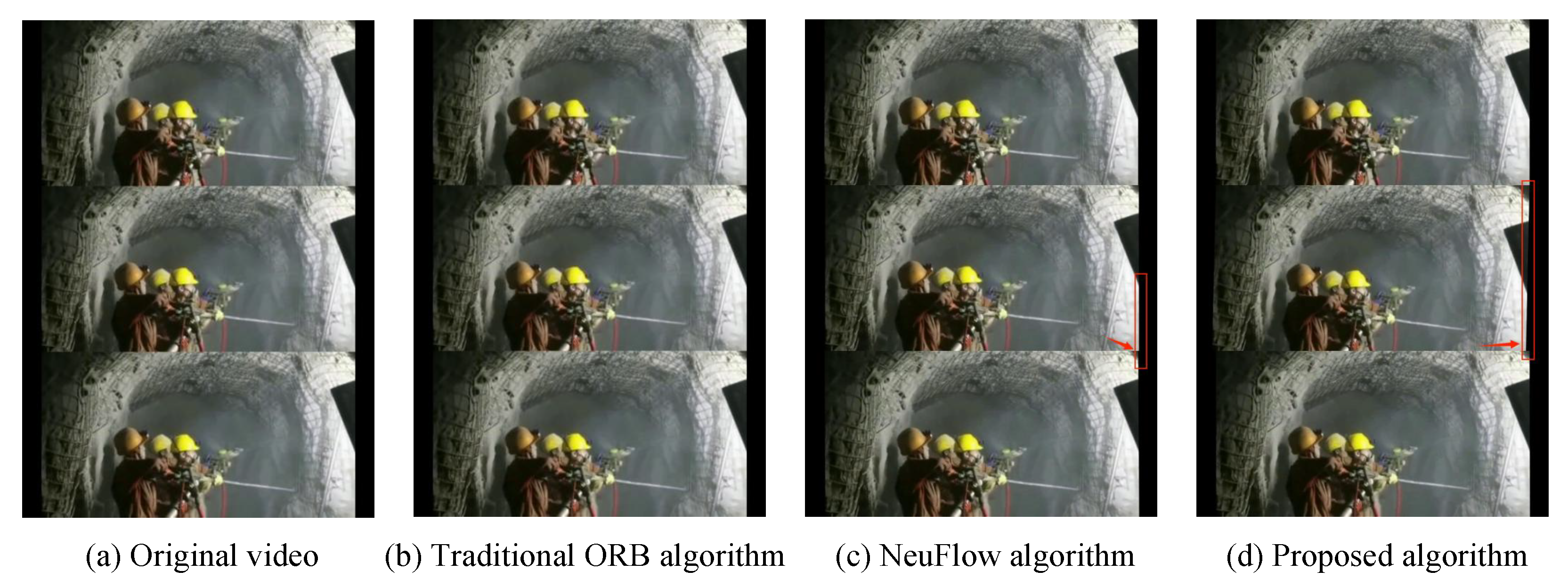

The following images illustrate the frame sequences before and after stabilization for three motion-intensity levels: low (frames 1336–1338), medium (frames 450–452), and high (frames 183–185). The leftmost frame corresponds to the original video, followed by the results from the traditional ORB-based algorithm, the NeuFlow-based algorithm, and the proposed algorithm, arranged from left to right.

After stabilization, the edges and details within the video frames remain clear, and the subject position is largely maintained. During rescue missions, this helps achieve more accurate recognition of well-wall conditions or equipment surface features, thereby reducing the likelihood of misjudgment. The proposed method maintains relatively consistent subject positioning across frame sequences while reducing edge fluctuations, which is beneficial for subsequent object detection.

Figure 6, Figure 7, and Figure 8 correspond to low-, medium-, and high-motion-intensity conditions, respectively. Videos recorded by robots during rapid maneuvers in collapsed areas or under rockfall impacts often show substantial shaking. The proposed stabilization approach alleviates subject deformation and retains key structural features across consecutive frames, even under high motion intensity.

At low motion intensity (Figure 6), the difference between original and stabilized frames is small, and only narrow black borders are visible after stabilization. At medium motion intensity (Figure 7), the red boxes highlight more apparent black-edge regions that arise from motion compensation and cropping. At high motion intensity (Figure 8), the red boxes indicate further expanded black borders, as stronger vibration leads to more pronounced motion filtering and compensation.

This trend shows that increasing motion intensity enlarges stabilization-related border areas while preserving essential structural information. Such stability enables robots to obtain steady and useful visual data for disaster assessment and path planning.

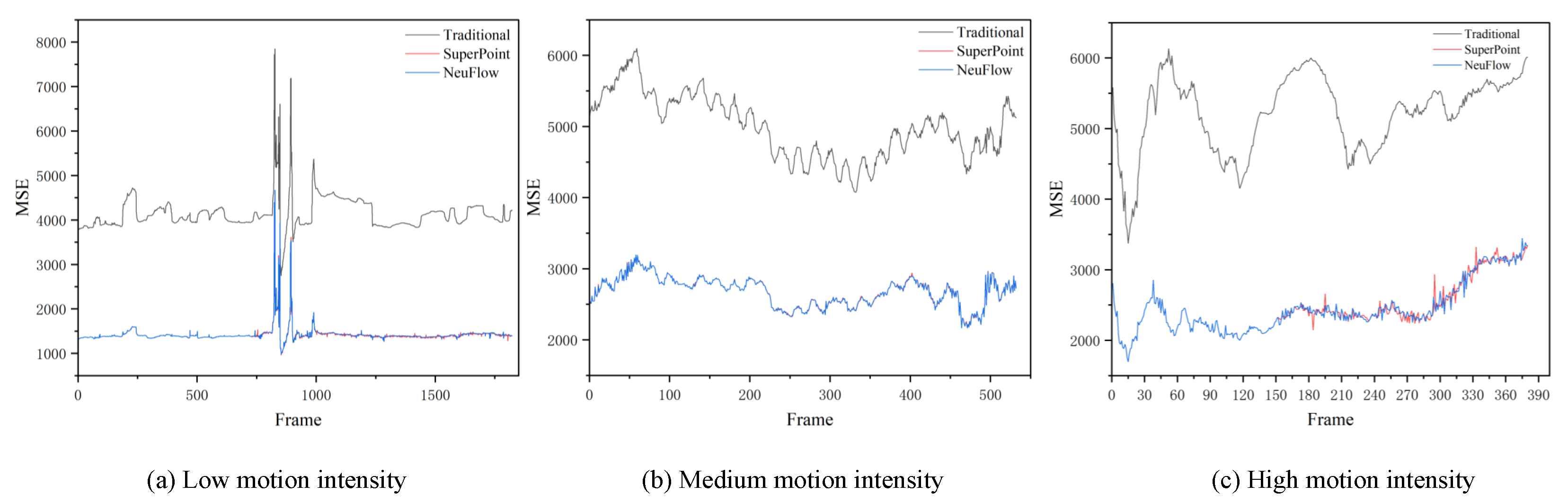

4.3. Stabilization Performance Analysis

To objectively evaluate the performance of the proposed stabilization algorithm, three quantitative metrics, Mean Squared Error (MSE), Peak Signal-to-Noise Ratio (PSNR), and Structural Similarity Index (SSIM), were analyzed from three corresponding perspectives: pixel error, signal distortion, and structural preservation.

- (1) Mean Squared Error (MSE)

MSE is the most fundamental pixel-difference metric for evaluating video quality. In essence, it quantifies the average squared deviation between the pixel values of the stabilized frame and the corresponding reference frame. The squaring operation amplifies the impact of pixels with large local errors, making MSE sensitive to abrupt visual variations. It therefore reflects whether the stabilization process introduces pixel-level noise, blur, or artifacts. A smaller MSE value indicates less degradation of the original image during stabilization, thus ensuring higher visual fidelity.

As illustrated in Figure 9, the proposed algorithm achieves a lower average MSE than the traditional ORB algorithm across all three motion-intensity scenarios, and slightly outperforms NeuFlow in specific cases. Its advantage becomes particularly evident under high-motion conditions, where MSE is reduced by approximately 65.7%, demonstrating the method’s capability to stabilize video sequences with severe shaking. The traditional ORB algorithm exhibits high overall error with frequent fluctuations and pronounced peaks, particularly under high-motion conditions, resulting in poor visual stability. By contrast, the proposed SuperPoint-based approach maintains a consistently low MSE curve with minimal oscillations, while NeuFlow shows slightly higher MSE values than the proposed algorithm.

- (2)

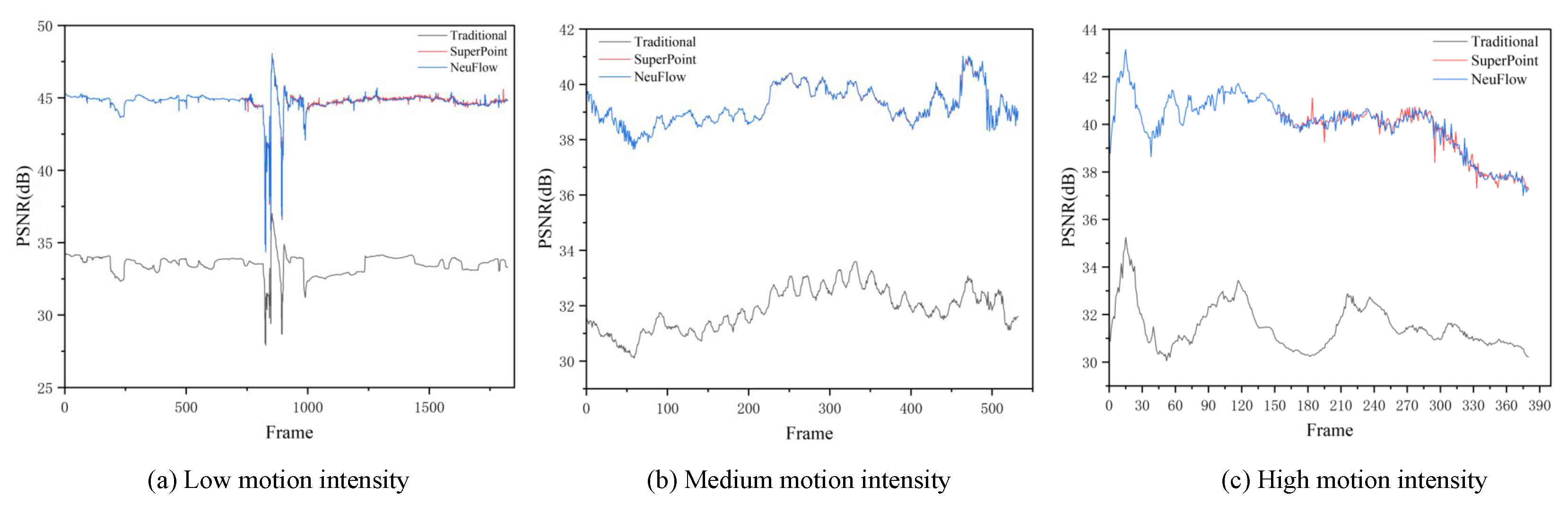

Peak Signal-to-Noise Ratio (PSNR)

PSNR quantifies the ratio between signal peaks and reconstruction error. By taking the logarithm of the error and expressing it in decibels (

), it aligns more closely with human visual perception of brightness differences. As illustrated in

Figure 10.

The PSNR curve of the traditional algorithm is generally low with significant fluctuations and no distinct peaks, indicating unstable reconstruction quality between frames and a tendency toward noticeable image distortion. The curve of the proposed algorithm maintains a high level with stable fluctuations, while NeuFlow is close but slightly lower. The average PSNR of the proposed algorithm exceeds that of the traditional algorithm across all three scenarios, and it slightly outperforms NeuFlow in some scenarios—particularly under severe shaking—where it achieves the best performance with an improvement of approximately .

- (3)

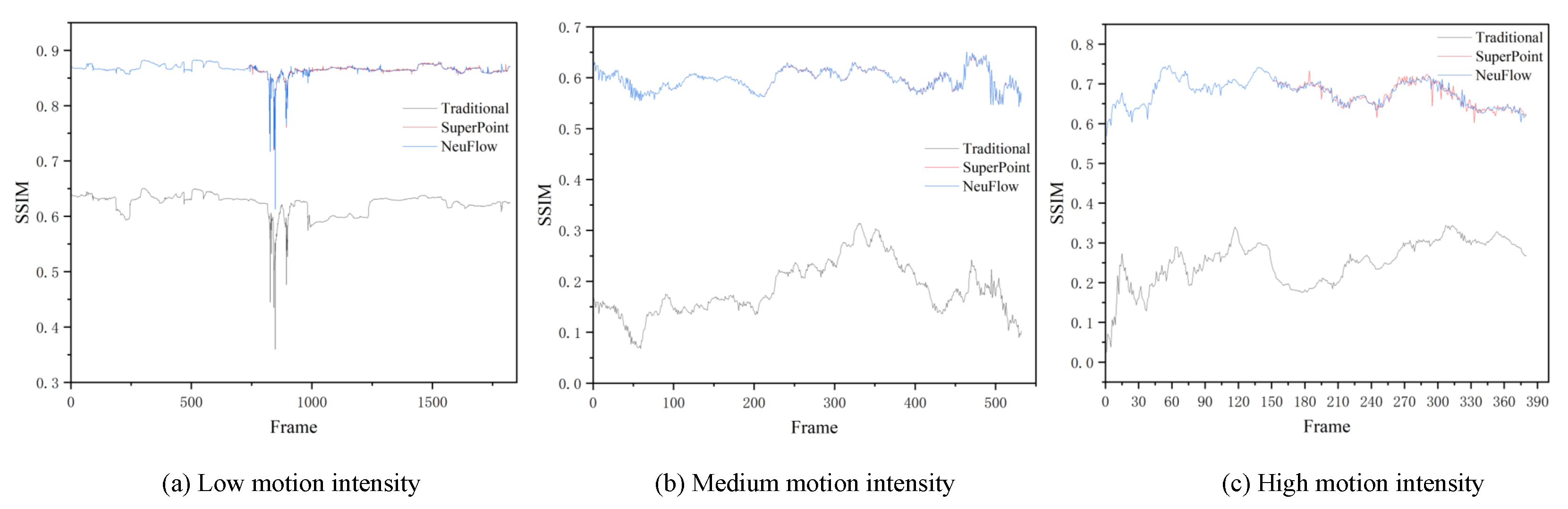

Structural Similarity Index (SSIM)

SSIM decomposes image similarity into luminance, contrast, and structure components, and synthesizes them to evaluate the similarity between two images, yielding values in the range . Values closer to 1 indicate greater similarity. This metric better reflects how well image stabilization preserves structural elements (edges, textures, etc.).

As illustrated in

Figure 11, Both the proposed algorithm and NeuFlow achieve significantly higher average SSIM values than traditional methods, effectively preserving video structural information under various shake conditions. Notably, performance improves over threefold in micro-shake scenarios, far surpassing traditional approaches. Traditional algorithms exhibit large fluctuations and significant drops in some frames, indicating unstable structural similarity. In contrast, the curves for the proposed algorithm and NeuFlow remain at high levels with minimal fluctuations and nearly overlap, demonstrating sustained structural similarity throughout the sequences. The SSIM values of the traditional algorithm cluster in a lower range, with a substantial proportion of low values, indicating loss of structural information in most frames. Conversely, the distributions for the proposed algorithm and NeuFlow shift toward higher values with strong concentration, effectively preserving structural consistency in the majority of frames.
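The three metrics can be computed with the short sketch below. MSE and PSNR follow their standard definitions for 8-bit images (peak value 255). The SSIM function is a deliberately simplified single-window variant evaluated once over the whole frame, with the commonly used constants 0.01 and 0.03; standard SSIM implementations average the index over local sliding windows, so the values produced here are only indicative and are not those reported in this section.

```python
import math


def mse(frame_a, frame_b):
    """Mean squared pixel difference between two equally sized frames."""
    a = [p for row in frame_a for p in row]
    b = [p for row in frame_b for p in row]
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def psnr(frame_a, frame_b, peak=255.0):
    """Peak signal-to-noise ratio in dB (infinite for identical frames)."""
    error = mse(frame_a, frame_b)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(peak * peak / error)


def global_ssim(frame_a, frame_b, peak=255.0):
    """SSIM computed once over the whole frame (no sliding window)."""
    a = [p for row in frame_a for p in row]
    b = [p for row in frame_b for p in row]
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((x - mu_a) ** 2 for x in a) / n
    var_b = sum((y - mu_b) ** 2 for y in b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2  # stabilising constants
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2))


# Tiny hypothetical frames: the second is the first brightened by 2 levels.
reference = [[10, 20], [30, 40]]
brighter = [[12, 22], [32, 42]]
print(mse(reference, brighter), round(psnr(reference, brighter), 2))
print(round(global_ssim(reference, brighter), 4))
```

A uniform brightness offset changes MSE and PSNR but leaves the structure term of SSIM intact, which illustrates why the three metrics are reported together.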

Although the primary objective of this study is to enhance video stabilization robustness in challenging subterranean environments, computational efficiency is also a critical consideration for practical deployment on embedded rescue robot platforms. Based on Table 3, the proposed SuperPoint-based method exhibits slightly higher processing time than traditional ORB- and NeuFlow-based approaches (e.g., 27.40 s vs. 20.21 s under high-motion intensity). This marginal increase, approximately 25–30%, stems mainly from the additional convolutional operations in the dual-head architecture. However, this cost is balanced by a substantial gain in stability and visual fidelity, as evidenced by the 27.91% PSNR improvement and over twofold SSIM enhancement. Moreover, the unified detector–descriptor framework avoids redundant computations between separate modules, partially offsetting the added complexity. Considering the overall accuracy–efficiency trade-off, the method achieves a favorable balance between computational load and stabilization quality, remaining suitable for real-time or near-real-time implementation through model compression and parallel GPU optimization.

5. Conclusions

To address stability challenges in complex subterranean environments, this study enhances the conventional ORB–RANSAC–Kalman pipeline by integrating deep feature detection based on the SuperPoint network. NMS and boundary-region filtering are introduced in the detector stage to improve keypoint localization, thereby enabling high-precision inter-frame motion estimation. During motion estimation, the framework selects the most appropriate channel according to the operating environment and supports two complementary keypoint sources (ORB or SuperPoint) to enhance matching stability and estimation accuracy. Coupled with smoothing filters, the proposed procedure effectively attenuates high-frequency, locomotion-induced jitter and reduces image drift and edge-region fluctuations.
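The detector-stage filtering summarized above can be sketched as follows. This is an illustrative sketch only: it assumes keypoints are given as (x, y) coordinates with confidence scores, uses a greedy square-neighborhood NMS, and discards points within a fixed margin of the image border. The function name `filter_keypoints` and the default values of `nms_radius` and `border` are hypothetical and are not taken from the experiments.

```python
import numpy as np


def filter_keypoints(points, scores, image_shape, nms_radius=4, border=4):
    """Greedy NMS on scored keypoints, then boundary-region removal.

    points: (N, 2) array of (x, y) coordinates; scores: (N,) confidences;
    image_shape: (height, width). All defaults are illustrative assumptions.
    """
    points = np.asarray(points, dtype=np.float64).reshape(-1, 2)
    scores = np.asarray(scores, dtype=np.float64)
    height, width = image_shape

    order = np.argsort(-scores)  # visit the strongest responses first
    suppressed = np.zeros(len(points), dtype=bool)
    keep = []
    for i in order:
        if suppressed[i]:
            continue
        keep.append(i)
        # Suppress every point inside the square neighborhood of the
        # kept point (Chebyshev distance <= nms_radius).
        close = np.max(np.abs(points - points[i]), axis=1) <= nms_radius
        suppressed |= close

    keep = np.array(keep, dtype=int)
    x, y = points[keep, 0], points[keep, 1]
    # Drop keypoints that lie in the boundary region of the frame.
    inside = (
        (x >= border) & (x < width - border)
        & (y >= border) & (y < height - border)
    )
    keep = keep[inside]
    return points[keep], scores[keep]


if __name__ == "__main__":
    pts = np.array([[10, 10], [11, 10], [30, 30], [1, 1]])
    conf = np.array([0.9, 0.8, 0.7, 0.95])
    kept_pts, kept_conf = filter_keypoints(pts, conf, image_shape=(40, 40))
    print(kept_pts, kept_conf)
```

In this example the point at (11, 10) is suppressed by its stronger neighbor at (10, 10), and the point at (1, 1) is removed because it lies in the border region, even though it has the highest score.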

Comprehensive experiments on mine-video sequences with low, medium, and high motion intensity demonstrate that the proposed algorithm consistently outperforms pre-stabilized inputs. On average, the PSNR increases by 27.91%, the MSE decreases by 55.04%, and the SSIM more than doubles. In the high-motion scenario, MSE decreases by approximately 65.7% and PSNR increases by around 33.58%, indicating significant improvement under severe shaking conditions. Furthermore, despite a moderate increase in inference time, the method maintains acceptable computational efficiency relative to traditional approaches, indicating that its accuracy improvements are achieved with a reasonable computational trade-off suitable for real-world rescue scenarios.

Overall, the evaluation results confirm that the proposed approach achieves both robust video stabilization and high perceptual quality while maintaining computational efficiency. Future work will focus on three directions: (i) real-time deployment on embedded platforms through model compression and adaptive feature selection; (ii) integrating the proposed algorithm into online stabilization and closed-loop control systems between underground and surface terminals; and (iii) further investigation of object detection and tracking algorithms, integrating them into the mine rescue robot vision system for joint testing and validation under real-world operational environments.

Author Contributions

Conceptualization, S.W. and Z.Z.; methodology, S.W. and Z.Z.; software, S.W. and Z.Z.; validation, S.W., Z.Z. and Y.J.; formal analysis, Z.Z. and Y.J.; investigation, S.W., Z.Z. and Y.J.; resources, S.W. and Z.Z.; data curation, S.W., Z.Z. and Y.J.; writing: original draft preparation, Z.Z.; writing: review and editing, S.W.; visualization, Z.Z. and Y.J.; supervision, S.W.; project administration, S.W.; funding acquisition, S.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article, and further inquiries can be directed to the corresponding author.

Acknowledgments

I would like to express special thanks to Shuqi Wang for his teaching and guidance, which has benefited me greatly.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhai, G.; Zhang, W.; Hu, W.; Ji, Z. Coal Mine Rescue Robots Based on Binocular Vision: A Review of the State of the Art. IEEE Access 2020, 8, 130561–130575.

- Zhang, M. Application Research on Safety Monitoring and Personnel Positioning Systems in Underground Coal Mines. Inn. Mong. Coal Econ. 2023, 18, 100–102.

- Yuan, L.; Zhang, P. Reconstruction and Reflections on Transparent Geological Conditions for Precision Coal Mining. Chin. J. Coal Sci. Eng. 2020, 45, 2346–2356.

- Liu, F.; Cao, W.; Zhang, J.; Cao, G.; Guo, L. Progress in Scientific and Technological Innovation in China’s Coal Industry and Development Directions for the 14th Five-Year Plan Period. Chin. J. Coal Sci. Eng. 2021, 46, 1–15.

- Cheng, D.; Kou, Q.; Jiang, H.; Xu, F.; Song, T. Review of Key Technologies for Intelligent Video Analysis in Entire Mines. Autom. Ind. Min. 2023, 49, 1–21.

- Grundmann, M.; Kwatra, V.; Essa, I. Auto-Directed Video Stabilization with Robust L1 Optimal Camera Paths. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011; pp. 225–232.

- Wang, Y.; Huang, Q.; Jiang, C.; Liu, J.; Shang, M.; Miao, Z. Video Stabilization: A Comprehensive Survey. Neurocomputing 2023, 516, 205–230.

- Guilluy, W.; Oudre, L.; Beghdadi, A. Video Stabilization: Overview, Challenges and Perspectives. Signal Process. Image Commun. 2021, 90, 116015.

- Li, C.; Ma, L.; Tian, Y.; Jia, Y.; Jia, Q.; Tian, W.; Zhang, K. On-Board Video Stabilization Algorithm for Roadheader Based on CLAHE and Kalman Filter. Autom. Ind. Min. 2023, 49, 66–73.

- Song, L.; Li, H.; Wang, M.; Yang, Q.; Zhang, S. Scene-Switching-Aware Electronic Image Stabilization for Foundation Imaging Systems. Opt. Precis. Eng. 2024, 32, 3383–3394.

- Liu, S.; Fu, Q.; Feng, N.; Zhang, C.; He, W. Design of Electronic Image Stabilization Algorithm for Flapping-Wing Flying Robots. J. Eng. Sci. 2024, 46, 1544–1553.

- Cheng, D.; Guo, Z.; Liu, J.; Qian, J.-S. An Electronic Image Stabilization Algorithm Based on an Improved Optical Flow Method. Chin. J. Coal Sci. Eng. 2015, 40, 707–712.

- Huang, X. Research on Electronic Image Stabilization Algorithm Based on ORB Feature Matching. Master’s Thesis, China University of Mining and Technology, Xuzhou, China, 2018.

- Cheng, D.; Huang, X.; Li, H.; Wang, H.; Li, S. Research on Image Stabilization Algorithm for Mine Vehicle-Mounted Video. Autom. Ind. Min. 2017, 43, 21–26.

- Kamble, S.; Dixit, M.; Nehete, D.; Joshi, D.; Potdar, G.P.; Mane, M. A Brief Survey on Video Stabilization Approaches. In Proceedings of the 2024 5th International Conference on Recent Trends in Computer Science and Technology (ICRTCST), Jamshedpur, India, 15–16 April 2024; pp. 71–81.

- Xu, S.Z.; Hu, J.; Wang, M.; Mu, T.J.; Hu, S.M. Deep Video Stabilization Using Adversarial Networks. Comput. Graph. Forum 2018, 37, 267–276.

- Yang, L.; Chen, Z.; Shi, J.; Pan, H.; Yang, J. Deep Learning-Based Water-Level Video Stabilization. Mob. Commun. 2024, 48, 86–91+129.

- Lee, Y.C.; Tseng, K.W.; Chen, Y.T.; Chen, C.C.; Chen, C.S.; Hung, Y.P. 3D Video Stabilization with Depth Estimation by CNN-Based Optimization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10621–10630.

- Shi, Z.; Shi, F.; Lai, W.S.; Liang, C.K.; Liang, Y. Deep Online Fused Video Stabilization. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022; pp. 1250–1258.

- Ali, M.K.; Im, E.W.; Kim, D.; Kim, T.H. Harnessing Meta-Learning for Improving Full-Frame Video Stabilization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 12605–12614.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-Supervised Interest Point Detection and Description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).