1. Introduction

Ontologies play a key role in the development of intelligent information systems, providing a structured and formalized representation of knowledge related to specific subject areas. They facilitate efficient data integration, support accurate and meaningful information retrieval, and enable logical inference and decision-making based on semantic relationships.

One of the most important tasks when using ontologies is their enrichment and updating, which includes adding new concepts, relationships, and axioms as new knowledge emerges. Keeping ontologies up to date is a complex and resource-intensive process that requires the involvement of subject-matter experts and a significant investment of time. Traditional enrichment methods include the use of lexicographic sources and terminology databases, the integration of concepts from real-world data, automatic ontology matching and merging, and crowdsourcing approaches [1,2,3]. Despite the effectiveness of these methods, their application in multilingual environments remains limited due to high labor intensity and poor scalability.

Modern developments in information technology demonstrate a steady trend toward integrating ontological modeling with natural language processing methods and large language models (LLMs). This approach allows for accelerating the processes of knowledge extraction and ontology construction, automating the formation of semantic structures, and increasing their flexibility [4,5,6,7,8]. In particular, Kollapally et al. (2025) proposed a method for placing concepts in an ontology based on lexical, semantic, and graph similarity using LLMs [6]. The integration of LLMs into ontology engineering builds upon the Transformer architecture introduced in 2017, which underpins most modern neural language models [7]. This architecture enables efficient contextual encoding of linguistic information and supports scalable pretraining on large corpora. Recent studies have demonstrated that LLMs can significantly contribute to semi-automatic ontology development by generating candidate classes, properties, and relations while preserving logical consistency within knowledge graphs [8]. Such advancements highlight the growing potential of combining symbolic reasoning with neural representations to enhance the automation and semantic accuracy of ontology-based systems.

In addition, hybrid architectures that combine ontological models and LLMs to implement retrieval-augmented generation (RAG) are being actively developed. The OG-RAG [9] and KROMA [10] approaches demonstrate that the integration of ontologically structured knowledge with the generative capabilities of LLMs improves the accuracy, interpretability, and robustness of results. The application of such solutions covers a wide range of tasks, from educational systems [11] to the processing of clinical data [12].

Several prior works have addressed Kazakh question-answering systems in limited domains, which motivates further research into hybrid and scalable solutions. Subsequent work proposed using LLMs to extract knowledge from unstructured texts and to populate ontologies automatically [5]. Mukanova et al. (2024) showed that ChatGPT 3.5 can efficiently analyze web page texts, convert their content into a machine-readable format, and automatically update ontological models describing the geographic features of Kazakhstan [5].

Thus, this paper proposes a hybrid method of semantic question-answering search in the Kazakh language based on the integration of ontologies, linguistic analysis and LLM.

2. Research Questions

With the rapid development of intelligent information systems and the expanding use of semantic technologies in natural language processing, it is important to determine how the integration of ontologies, linguistic analysis, and large language models impacts the quality and accuracy of information retrieval. Therefore, this study aims to answer the following research questions:

RQ1: How can the integration of ontological knowledge, syntactic-morphological analysis and large language models improve the accuracy and semantic relevance of information retrieval in the Kazakh language?

RQ2: To what extent can large language models be effectively used to enrich ontology, including extracting new entities, relations, and facts from unstructured texts?

RQ3: How can the results of syntactic-morphological analysis be transformed into ontologically based queries to support factual question-answering retrieval?

3. Literature Review

Modern intelligent systems rely heavily on methods for extracting data from unstructured sources. Solutions have been developed for automatically generating profiles based on web data [13] and methods for extracting disparate nodes from web pages [14]. A review of data compression methods is provided, considering aspects of quality, encoding schemes, and data types [15].

In the field of text recognition, an approach based on a sliding window and editing metrics has been proposed [16]. For Chinese news data, an extraction system using readability metrics has been created [17]. There are systematic reviews on the adaptation of data mining methodologies [18], as well as works on assessing the impact of Industry 4.0 on professional skills using text analysis [19]. Special attention is given to the fundamental literature on text mining [20] and rule-based associative methods for representation materialization [21].

NLP methods have undergone significant changes thanks to deep learning. Reviews systematize the achievements in this field [22] and also describe the role of pre-trained models for knowledge transfer [23]. The classical foundations of NLP are presented in the educational literature [24].

In medical informatics, methods for processing free clinical texts [25], machine learning for biomedical data [26], and algorithms for matching text fragments with ontologies [27,28] are being actively studied. Extracting events and relationships from texts has become a separate area [29]. Problems with the consistency of annotations [30] are noted, as well as methods for linking anatomical entities to body parts [31]. Seq2seq models are used to generate paths in knowledge graphs [32].

Ontologies play a key role in knowledge management. Research demonstrates the effectiveness of ontology-oriented management of data mining models [33] and the use of semantic technologies for industrial applications [34]. Frameworks for automatic ontology construction [35], methods for harmonizing heterogeneous data using word embeddings [36], and hybrid approaches to learning ontologies for Arabic texts have been proposed [37].

Entity linking is used to automatically populate skill ontologies based on ESCO and Wikidata [38]. For cultural heritage, a strategy for representing metadata on CIDOC-CRM is proposed [39]. A review of generative AI and GPT-series models is provided [40], emphasizing their importance in ontological analysis.

The advent of LLMs has accelerated semantic analysis and automation of ontological modeling. The possibility of classifying terms according to top-level ontologies using ChatGPT has been demonstrated [41]. Modern applications of semantic web technologies in the service industry have been considered [42]. The spatial “awareness” of LLMs [43] has been studied, as well as the integration of natural language into SQL for spatial databases [44].

Geographic QA systems are evolving towards the integration of ontologies, open knowledge bases, and LLMs. The GeoQAMap system uses maps and open knowledge graphs [45]. QA methods based on OpenStreetMap have been proposed [46]. Geoanalytical QA approaches using GIS are being developed [47]. A formal grammar has been developed for interpreting geoanalytical questions as sequences of conceptual transformations [48].

Semantic search methods in medicine [12] can be adapted for geodata, which opens up prospects for multi-industry application.

A comparative analysis reveals the key advantages and limitations of existing approaches in this area. Most previous research has focused on developing ontology enrichment and semantic search methods. However, their adaptation to multilingual environments and geographic domains remains limited. Most solutions are focused on English and do not fully take into account the specificities of the Kazakh and Russian languages, making them difficult to apply in local contexts. Recent work by Pawlik (2025) compared cloud-based and open-source NER tools across multiple languages, highlighting performance disparities that are also relevant when adapting entity extraction methods for low-resource languages like Kazakh [49].

In recent years, significant progress has been made in automated information extraction, natural language processing, and the application of large language models. However, most of these methods are not adapted for multilingual semantic search in the geographic domain. This creates difficulties in enriching ontologies and reduces the accuracy and recall of searches.

In this regard, Table 1 compares existing methods with the proposed system. The comparison is based on criteria such as language coverage, ontology integration, use of LLMs, focus on geographic topics, and level of automation. This analysis clarifies the scientific novelty of the presented work and its place among existing approaches.

4. Research Methods and Methodology

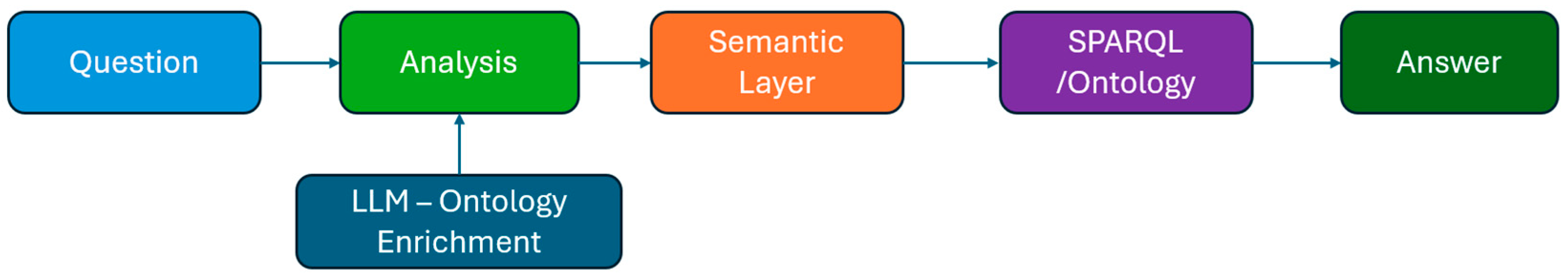

The research methods are built around the proposed hybrid method of semantic question-answering search in the Kazakh language, based on the integration of ontological models, linguistic analysis, and large language models (LLMs). The general scheme of the developed approach is presented in Figure 1 and includes the following sequence of stages.

At the first stage, the user’s query undergoes morphological and syntactic parsing, lemmatization, and semantic classification to determine its type and expected response. The query is then transformed into a formal structure containing entities, relations, and semantic roles, which are mapped to ontology concepts. Next, the system performs semantic interpretation by aligning question elements with ontology classes, properties, and SWRL rules. Logical inference enables the discovery of implicit relations and generation of new facts. A SPARQL query is then automatically generated and executed on the RDF knowledge graph to retrieve relevant information, with the reasoner ensuring logical consistency. The retrieved data are converted into a human-readable text or semantic graph. Additionally, an ontology enrichment module powered by LLMs extracts new entities and relations from unstructured text, converts them into RDF triples, and integrates them into the ontology. This enhances the completeness of the knowledge base and enables adaptive ontology enrichment.

Overall, this methodology provides a unified framework where linguistic processing ensures understanding, the ontology guarantees semantic accuracy, SPARQL enables formal retrieval, and the LLM supports continuous knowledge expansion.

4.1. System Architecture

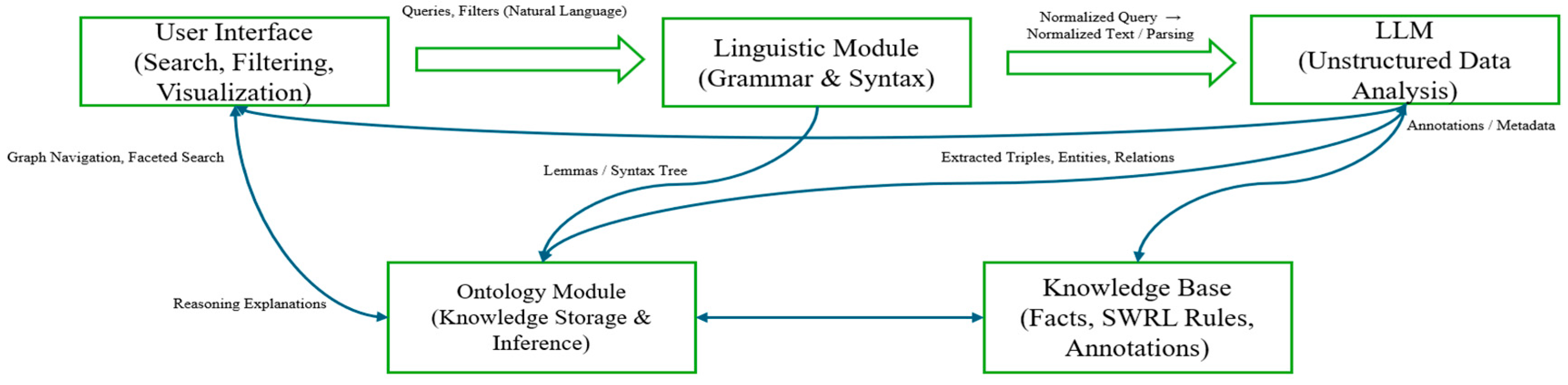

The architecture of the developed intelligent system includes five interconnected modules: a user interface, a linguistic module, a knowledge processing module based on LLM, an ontology module, and a knowledge base. The interaction of the modules is shown in Figure 2 and reflects the full processing cycle, from user input to response.

The user interface enables question entry and results display. The linguistic module handles morphological and syntactic analysis. The LLM module performs semantic interpretation, entity extraction, and knowledge enrichment. The ontology module includes a reasoner, a SPARQL engine, and inference rules, while the knowledge base stores ontological descriptions and semantic relationships. This modular structure ensures the flexibility, extensibility, and scalability of the system.

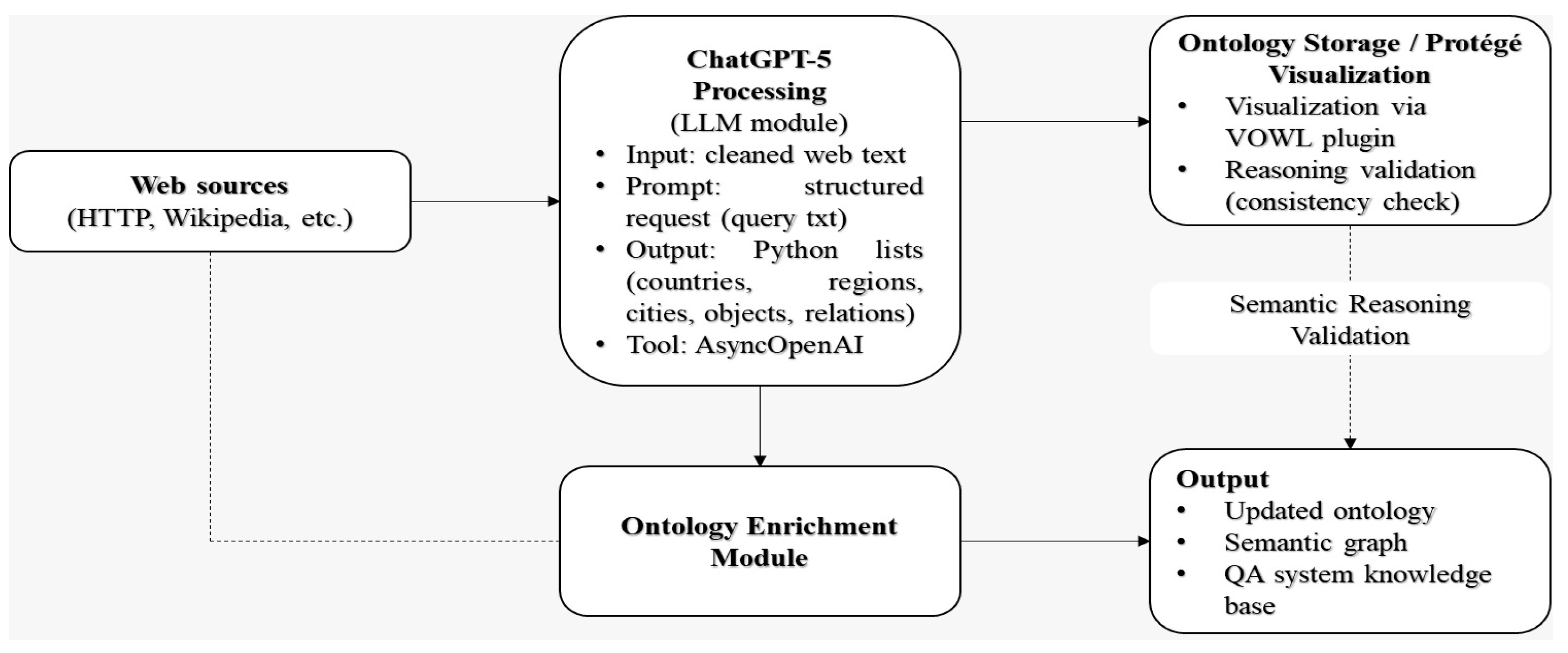

A separate part of the methodology focuses on ontology enrichment using large language models. The workflow is illustrated in Figure 3, which shows the process of retrieving and structuring web data through asynchronous HTTP requests and ChatGPT-5 (OpenAI, 2025) processing. The extracted structured data (entities, relations, locations) are automatically integrated into the ontology and validated for consistency.

4.2. Data Generation and Preparation

This study generated a comprehensive dataset for constructing an ontological model of the subject area related to the geography of the Republic of Kazakhstan. In the first stage, terms were collected, systematized, and analyzed, their semantic relationships were determined, and the corresponding properties and attributes of objects were identified. The subject area was the administrative-territorial structure of Kazakhstan: information was collected on 17 regions, more than 90 cities, and the districts and towns within them. Additionally, data on 414 rivers and 370 lakes were systematized, for which semantic relationships were defined and descriptive characteristics were established: 19 properties for rivers and 29 properties for lakes were added to the ontological model. From these data, a terminology base was compiled; it underlies the ontology and provides a holistic, formalized representation of geographic objects and the relationships between them.

To validate the system, a corpus of over 50,000 question-answer pairs was created. This corpus was used for training, testing, and evaluating the quality of semantic search. The results confirmed that the ontological representation of data improves the accuracy and relevance of the match between the query and the retrieved facts.

4.3. Formal Specification of Morphological–Ontological Mapping

The correspondence between morphological features and ontological roles is defined by a mapping function:

$$f: M \rightarrow R$$

where $M$ denotes the set of morphological features (root, suffix, case, etc.), and $R$ denotes the set of ontological roles (EntityType, Relation, Attribute).

This mapping establishes a deterministic link between morphological analysis and semantic representation used in SPARQL query generation.
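As a minimal sketch, such a deterministic mapping can be held in a lookup table. The feature labels and the role assigned to each of them below are hypothetical and chosen only for illustration; the actual rule set of the system is not reproduced here.

```python
from typing import Optional

# Hypothetical (feature kind, feature value) -> ontological role assignments.
FEATURE_TO_ROLE = {
    ("root", "noun"): "EntityType",
    ("case", "locative"): "Relation",
    ("case", "genitive"): "Relation",
    ("suffix", "possessive"): "Attribute",
}


def map_feature(kind: str, value: str) -> Optional[str]:
    """Return the ontological role for one morphological feature, or None."""
    return FEATURE_TO_ROLE.get((kind, value))


def map_token(features: list[tuple[str, str]]) -> list[str]:
    """Map every feature of a token, dropping features that have no role."""
    roles = (map_feature(kind, value) for kind, value in features)
    return [role for role in roles if role is not None]
```

Because the table is a plain dictionary, the same feature always yields the same role, which is the property the mapping relies on when SPARQL queries are generated.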

4.4. Question Typology and SPARQL Transformation Scheme

The system supports three main classes of factual questions: (i) entity-based, (ii) relational, and (iii) compositional. Each question type is associated with a transformation template that converts linguistic structures into SPARQL queries according to predefined semantic roles.
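The template idea can be sketched as follows, assuming one SPARQL pattern per question class. The `geo:` namespace, the template bodies, and the slot names (`cls`, `entity`, `prop`, `link`) are assumptions made for this example, not the templates used in the system.

```python
import re
from string import Template

# Hypothetical namespace; the IRIs of the actual ontology are not given.
PREFIX = "PREFIX geo: <http://example.org/geo#>\n"

TEMPLATES = {
    # Entity-based: list the instances of a class.
    "entity": Template("SELECT ?x WHERE { ?x a geo:$cls . }"),
    # Relational: value of one property of a known entity.
    "relational": Template("SELECT ?v WHERE { geo:$entity geo:$prop ?v . }"),
    # Compositional: instances of a class linked to an entity, with a property.
    "compositional": Template(
        "SELECT ?x ?v WHERE { ?x a geo:$cls ; geo:$link geo:$entity ; geo:$prop ?v . }"
    ),
}

# Only plain local names are accepted, so slot values cannot alter the query.
_LOCAL_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def build_query(question_type: str, **slots: str) -> str:
    """Fill the template for a question type with validated local names."""
    if question_type not in TEMPLATES:
        raise ValueError(f"unsupported question type: {question_type}")
    for name, value in slots.items():
        if not _LOCAL_NAME.fullmatch(value):
            raise ValueError(f"invalid local name for {name}: {value!r}")
    return PREFIX + TEMPLATES[question_type].substitute(slots)
```

For instance, `build_query("relational", entity="Lake_Balkhash", prop="area")` produces a query that asks for the `geo:area` value of `geo:Lake_Balkhash`; both identifiers are illustrative.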

4.5. Formal Validation of Mapping Rules

The correctness and completeness of mapping rules are defined as follows:

$$\forall q \in Q \;\; \exists t \in T : \; \mathrm{match}(q, t)$$

where $Q$ is the set of supported question types, $T$ is the set of generated triples, and $\mathrm{match} \subseteq Q \times T$ is the matching relation. This ensures that every valid question yields a consistent and semantically interpretable triple structure.

5. Ontology-Based Enrichment and Linguistic Integration for Semantic Information Retrieval

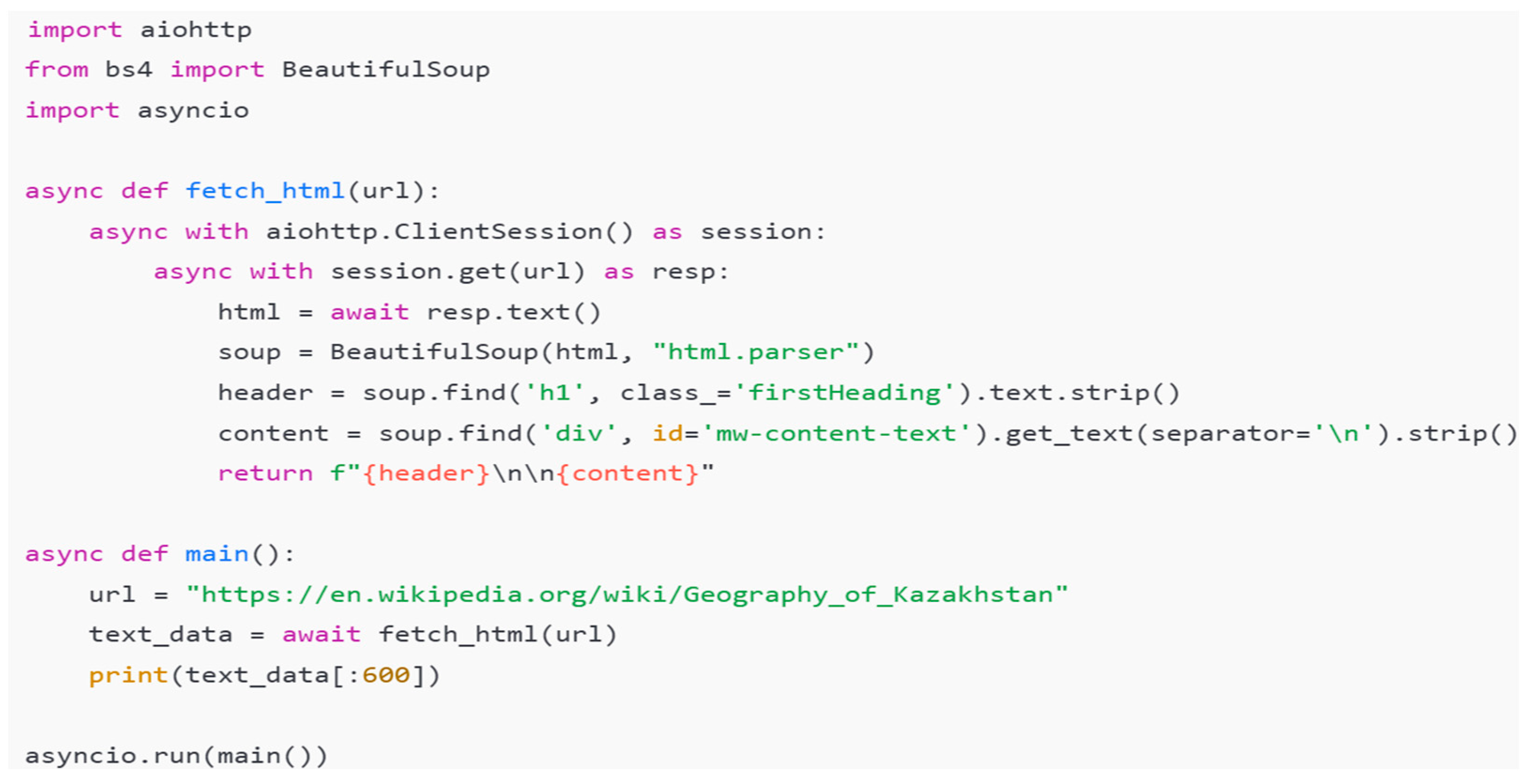

5.1. Data Extraction from the Internet via the HTTP Protocol

At this stage, data were retrieved from open web sources to support ontology enrichment. The aiohttp library was employed to perform asynchronous HTTP requests, enabling efficient parallel access to multiple online resources. The BeautifulSoup library was utilized to extract semantically relevant text from HTML documents. The data extraction workflow is illustrated in Figure 4. As an example, the Wikipedia page “Geography of Kazakhstan” (https://en.wikipedia.org/wiki/Geography_of_Kazakhstan, accessed on 25 June 2025) was used, from which the title and main content were obtained for subsequent semantic analysis.
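The retrieval step described above can be sketched as follows. This is an illustrative outline rather than the code used in the study: the function names are hypothetical, and taking the `<p>` elements as the main content is a simplifying assumption.

```python
import asyncio


async def fetch_html(session, url: str) -> str:
    """Download one page through an open session and return its HTML."""
    async with session.get(url) as response:
        response.raise_for_status()
        return await response.text()


async def fetch_all(urls: list[str]) -> list[str]:
    """Fetch several pages concurrently over one shared session."""
    import aiohttp  # third-party; imported here so the module loads without it

    async with aiohttp.ClientSession() as session:
        return await asyncio.gather(*(fetch_html(session, url) for url in urls))


def extract_title_and_text(html: str) -> tuple[str, str]:
    """Return the page title and the text of all non-empty paragraphs."""
    from bs4 import BeautifulSoup  # third-party

    soup = BeautifulSoup(html, "html.parser")
    title = soup.title.get_text(strip=True) if soup.title else ""
    paragraphs = [p.get_text(" ", strip=True) for p in soup.find_all("p")]
    return title, "\n".join(text for text in paragraphs if text)
```

A call such as `asyncio.run(fetch_all([url]))` returns the HTML of each page in the order of the input list, after which `extract_title_and_text` can be applied to every document.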

At this stage, a request is formulated in natural language and may include structured data. Its purpose is to obtain information within a specified domain and represent it in a machine-readable format.

Typically, the request includes the webpage code, a brief description of the extracted data, and the desired output format. For integration convenience, the Python 3.10 format is used. Since ChatGPT operates probabilistically, the results may vary slightly between runs. A stable query template that ensures consistent output is illustrated in

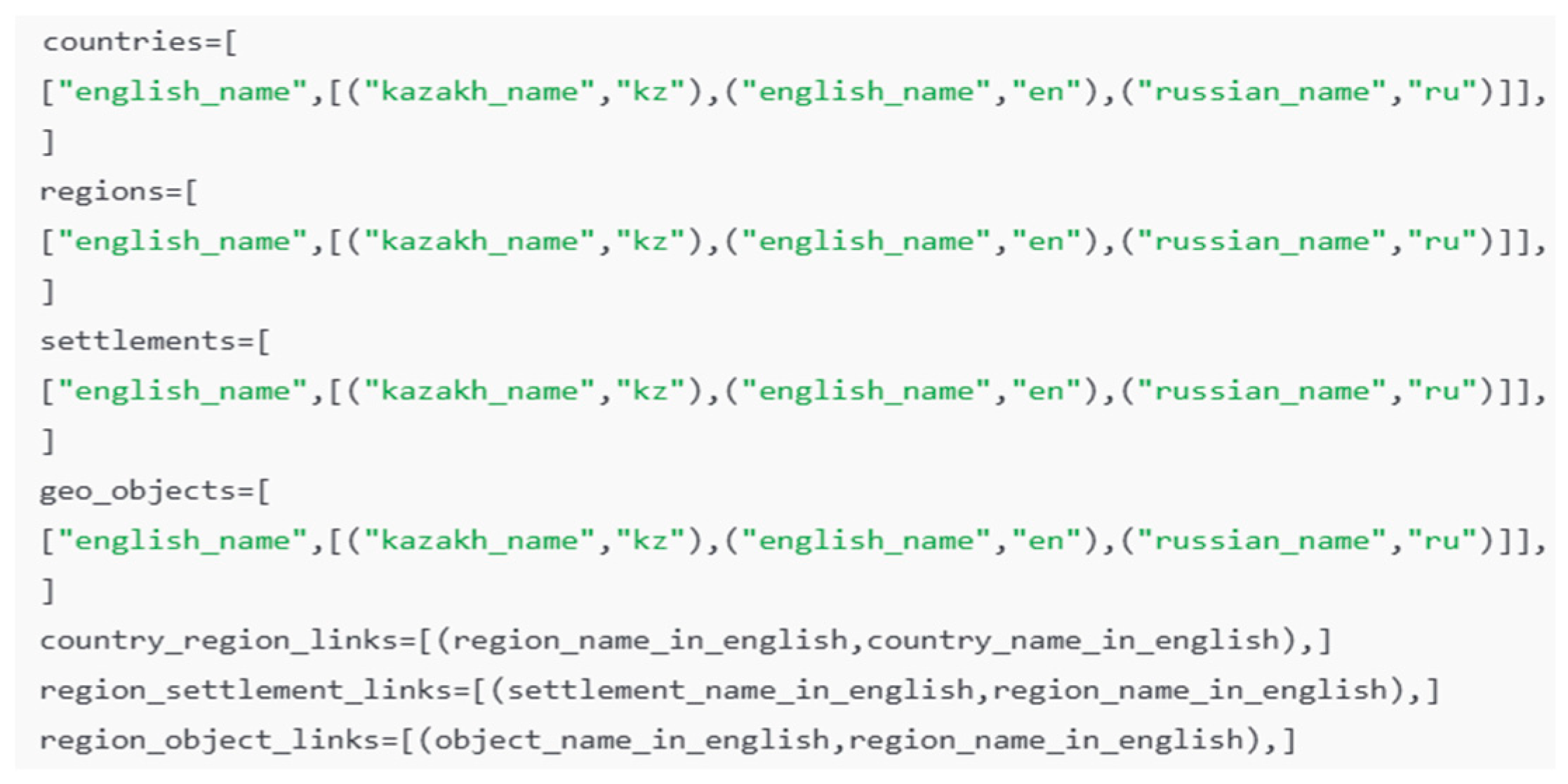

Figure 5.

In this query, a structure consisting of seven Python lists is defined. The first four lists contain instances of the classes Country, Region, Settlement, and Object. The remaining three lists describe hierarchical relationships between these entities: the association of a region with a country, a settlement with a region, and an object with a region.
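The shape of this seven-list structure can be illustrated as follows. The variable names and the sample values are assumptions made for this sketch; the template in Figure 5 may name them differently. A small helper checks that every relationship refers only to declared instances.

```python
# Four instance lists (illustrative values only).
countries = ["Kazakhstan"]
regions = ["Abai Region"]
settlements = ["Semey"]
objects = ["Irtysh River"]

# Three relationship lists, each holding (child, parent) pairs.
region_country = [("Abai Region", "Kazakhstan")]
settlement_region = [("Semey", "Abai Region")]
object_region = [("Irtysh River", "Abai Region")]


def dangling_links(pairs, children, parents):
    """Return the pairs whose child or parent is not a declared instance."""
    child_set, parent_set = set(children), set(parents)
    return [(c, p) for c, p in pairs if c not in child_set or p not in parent_set]
```

Running `dangling_links` on each relationship list before enrichment gives an inexpensive consistency check on the model output.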

Interaction with the ChatGPT language model is implemented using the asynchronous AsyncOpenAI library. Figure 6 presents a code fragment in which an instance of the openAIclient class sends a request to the model and processes the response. The query text, containing a detailed description of the data extraction and structuring task for the ontological model, is stored in an external file named query.txt.
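A minimal sketch of such a call is given below. It is not the fragment from Figure 6: the helper names are hypothetical, the split into a system and a user message is an assumption, and the model identifier is left as a parameter because the exact value is not stated in the text.

```python
from pathlib import Path


def load_task(path: str = "query.txt") -> str:
    """Read the task description that is stored in the external file."""
    return Path(path).read_text(encoding="utf-8")


def build_messages(task: str, page_text: str) -> list[dict[str, str]]:
    """Combine the task description and the page content into chat messages."""
    return [
        {"role": "system", "content": task},
        {"role": "user", "content": page_text},
    ]


async def ask_model(task: str, page_text: str, model: str) -> str:
    """Send one request and return the text of the first choice."""
    from openai import AsyncOpenAI  # third-party; reads OPENAI_API_KEY from the environment

    client = AsyncOpenAI()
    response = await client.chat.completions.create(
        model=model,
        messages=build_messages(task, page_text),
    )
    return response.choices[0].message.content
```

Since `ask_model` is a coroutine, several pages can be processed concurrently with `asyncio.gather`, in the same way as the HTTP requests.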

The response generated by the ChatGPT-5 model as a result of executing the specified query (a shortened version is shown in Figure 7) is represented as Python code. This data format is advantageous for subsequent processing and integration into the ontology enrichment module, as it does not require additional syntactic parsing or transformation.
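If the returned code is treated as untrusted input, one cautious way to load it is to read only top-level list assignments with the standard `ast` module instead of executing the text. The sketch below is an assumption about how this could be done, not a description of the implemented module.

```python
import ast


def parse_list_assignments(source: str) -> dict[str, list]:
    """Read top-level `name = [...]` assignments without executing the code."""
    result = {}
    for node in ast.parse(source).body:
        is_simple_assignment = (
            isinstance(node, ast.Assign)
            and len(node.targets) == 1
            and isinstance(node.targets[0], ast.Name)
        )
        if not is_simple_assignment:
            continue
        # literal_eval accepts literals only and raises ValueError otherwise.
        value = ast.literal_eval(node.value)
        if isinstance(value, list):
            result[node.targets[0].id] = value
    return result
```

Any assignment whose right-hand side is not a pure literal, such as a function call, makes `ast.literal_eval` raise `ValueError`, so the whole response is rejected rather than partially trusted.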

The resulting data structures can be directly utilized for the automatic population of ontology classes—Country, Region, Settlement, and Geographical Object—as well as for establishing hierarchical relationships among them.

The result of executing the query is a text transformed by the model in accordance with the specified Python data structure format, containing information about countries, regions, settlements, and geographical objects. The obtained data are automatically transferred to the ontology enrichment module for subsequent logical analysis and the addition of new knowledge triplets.

5.2. Enrichment of the Ontological Model with the Obtained Data

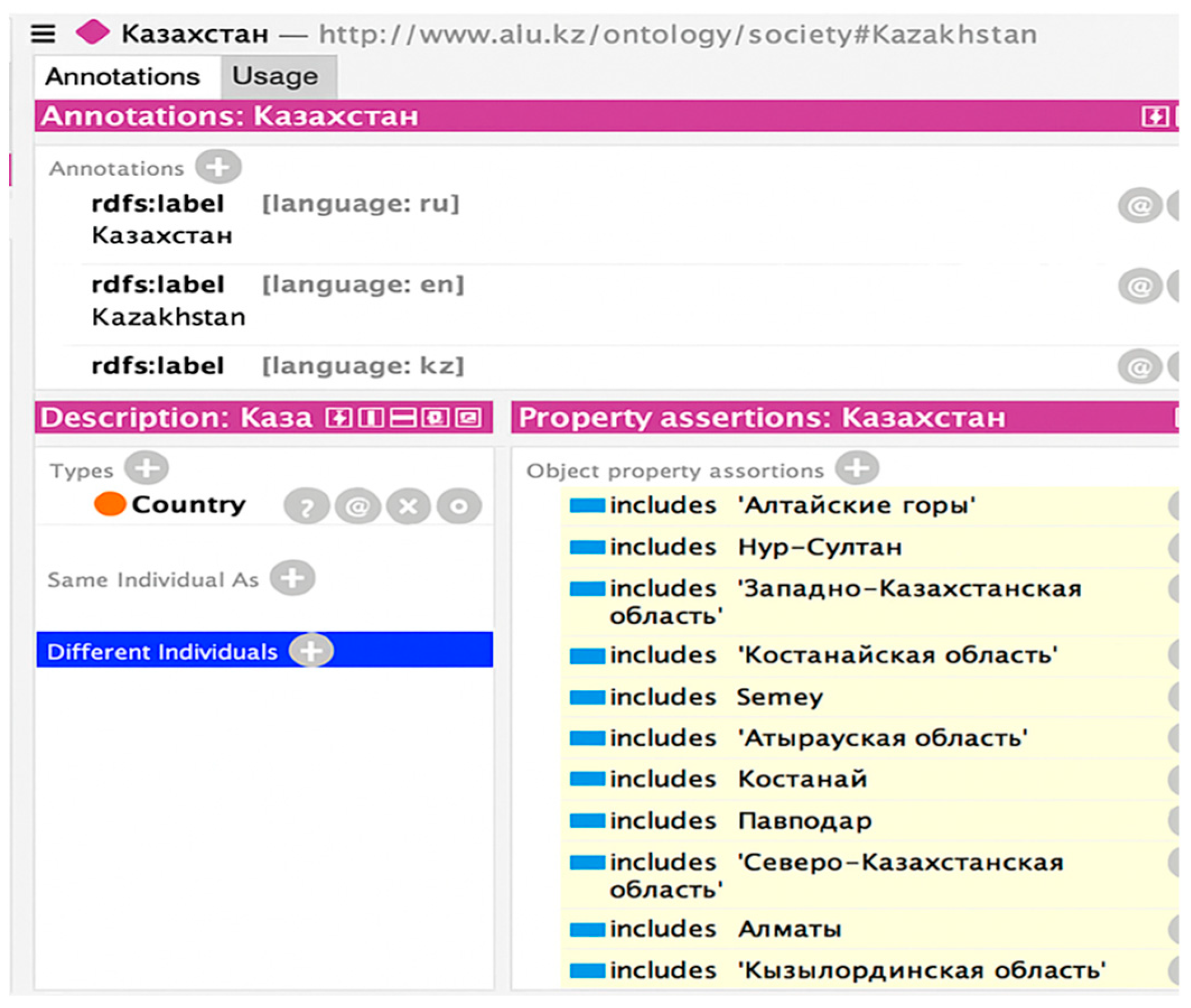

For the practical implementation of the proposed intelligent information retrieval technology, an ontological model of the geography and administrative-territorial structure of the Republic of Kazakhstan was developed.

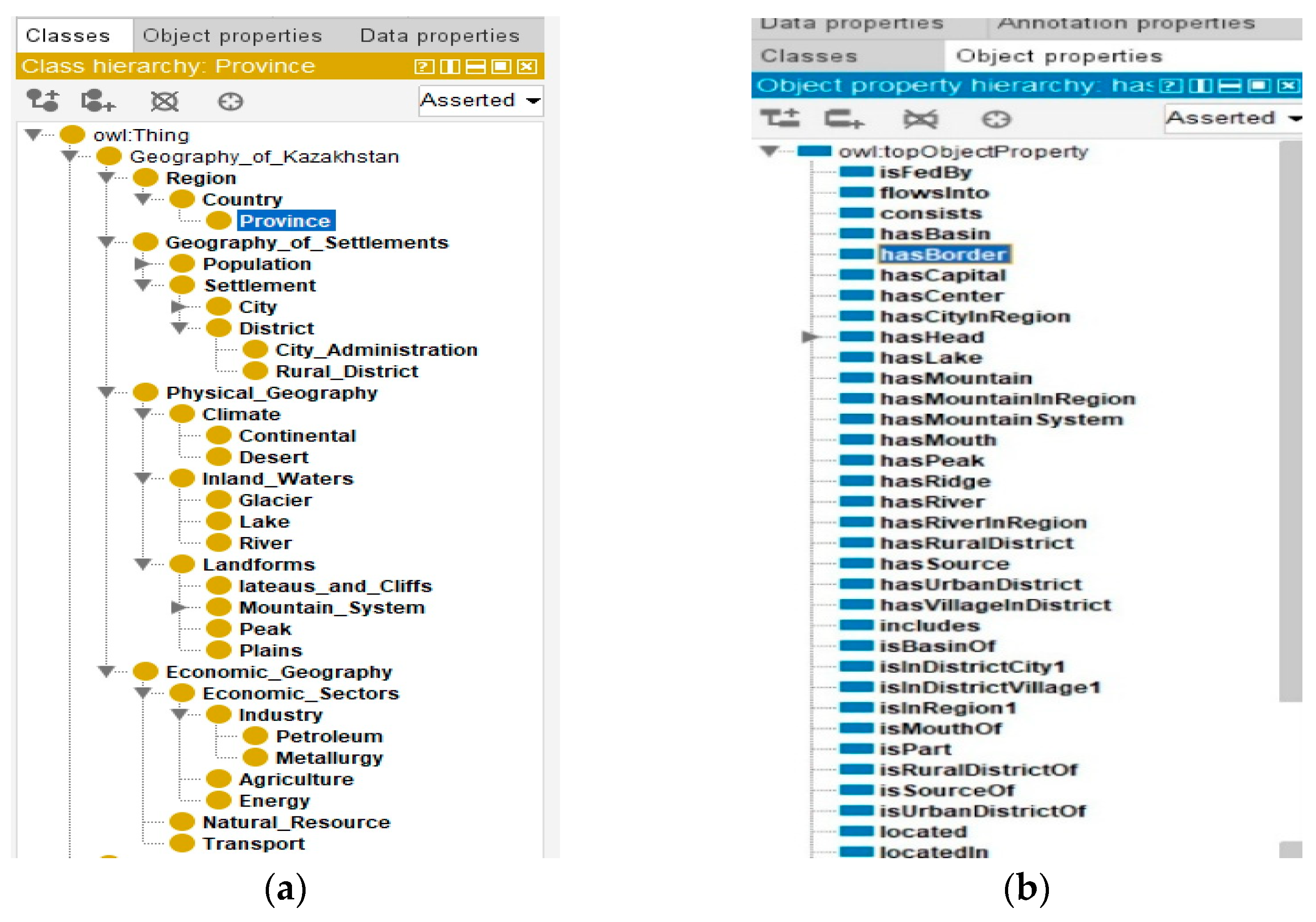

The model defines a hierarchy of classes and properties, including regions, cities, rivers, lakes, and other geographic features. This hierarchy is shown in Figure 8.

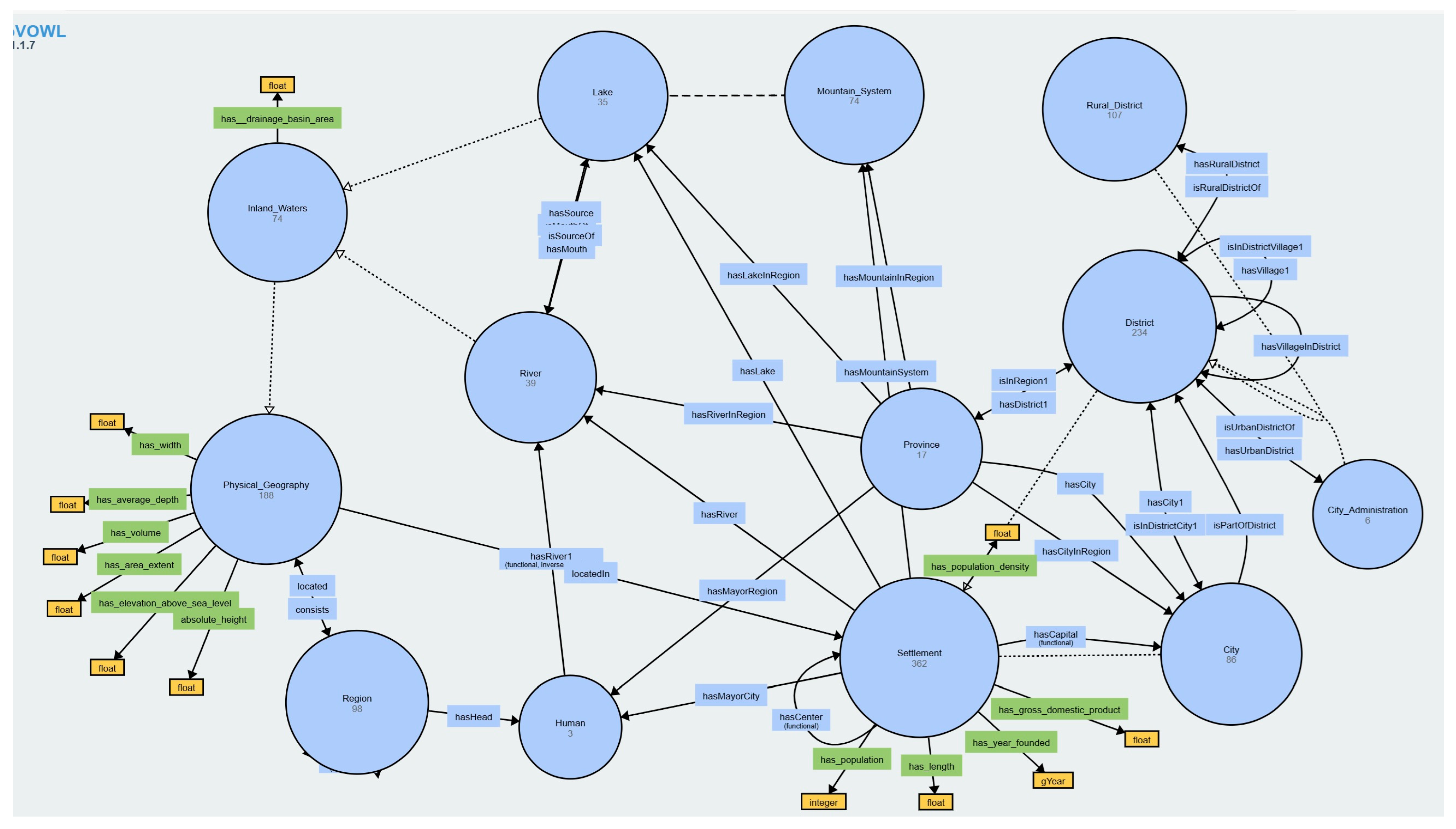

Furthermore, a semantic network graph was generated based on the ontology using the VOWL plugin in the Protégé environment, which clearly demonstrates the relationships between geographic concepts. The visualization shown in Figure 9 serves as the basis for testing and validating the mechanisms of the question-answering system developed in this study.

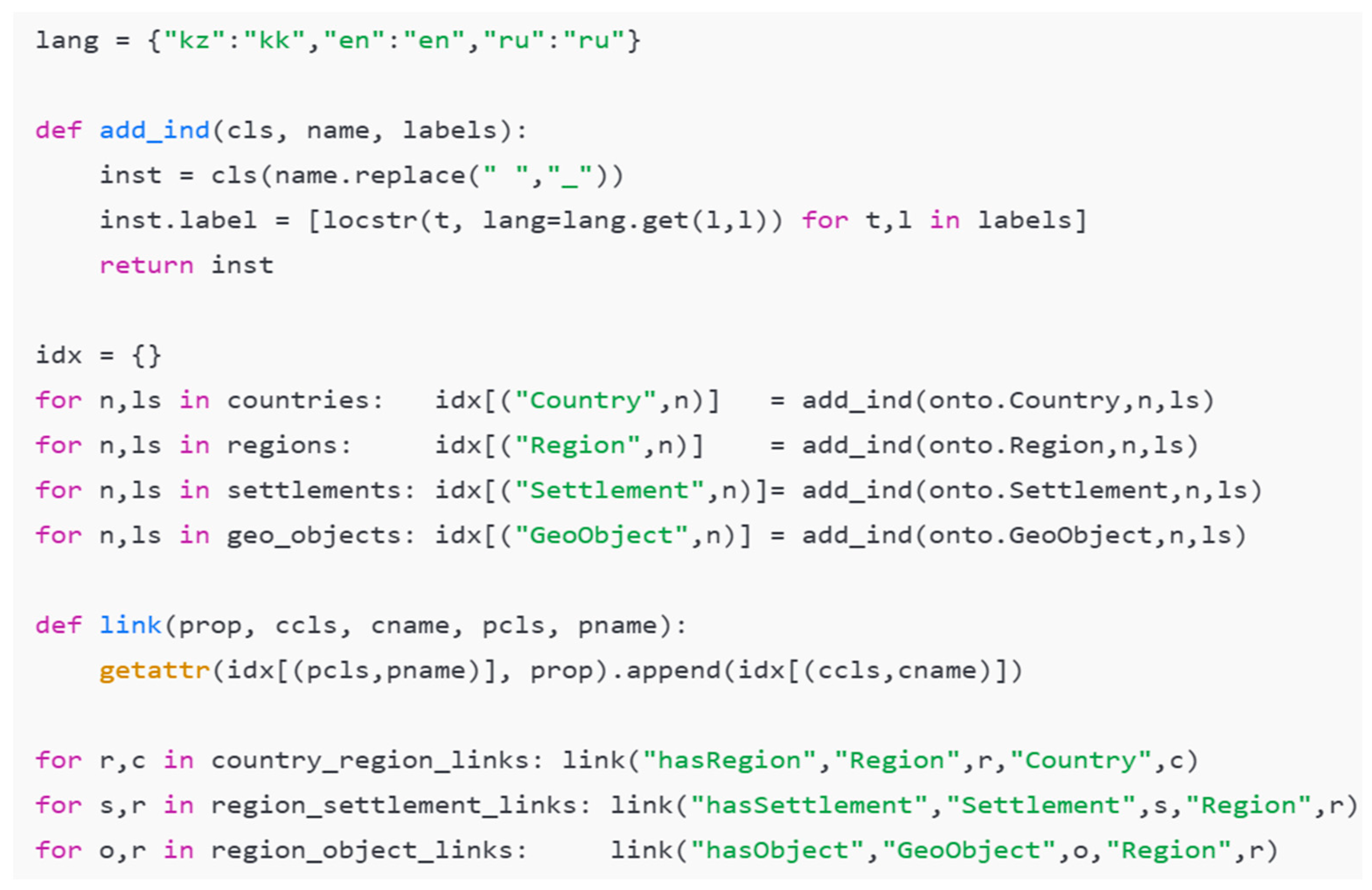

At the next stage, the ontology is enriched with data extracted from a web page and represented in Python format (Figure 10). Interaction with the ontology is implemented through the OWLready2 library, where labels are created for each entity in Kazakh, Russian, and English.
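The labelling step can be sketched with Owlready2 as follows. The helper names, the example class, and the ontology IRI in the comments are hypothetical; only `locstr`, `Thing`, `get_ontology`, and the `label` annotation are taken from the library itself.

```python
import re


def iri_safe_name(label: str) -> str:
    """Turn a display label into a name usable in an individual's IRI."""
    return re.sub(r"\W+", "_", label.strip()).strip("_")


def add_labelled_individual(cls, labels: dict[str, str]):
    """Create an individual of `cls` with one label per language code."""
    from owlready2 import locstr  # third-party

    individual = cls(iri_safe_name(labels["en"]))
    individual.label = [locstr(text, lang=code) for code, text in labels.items()]
    return individual


# Example use (assumes Owlready2 is installed; IRI and class are illustrative):
#   from owlready2 import Thing, get_ontology
#   onto = get_ontology("http://example.org/geo.owl")
#   with onto:
#       class Region(Thing):
#           pass
#   add_labelled_individual(
#       Region,
#       {"kk": "Абай облысы", "ru": "Абайская область", "en": "Abai Region"},
#   )
```

Deriving the individual name from the English label is a design choice of this sketch; any stable identifier scheme would serve equally well.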

The validation of the ontological model confirmed the correctness of its structure and the relationships between individuals. The ontology is consistent, and the reasoning mechanism operates without errors (Figure 11).

Recent studies have also emphasized the importance of structure-aware evaluation of large language models when handling semi-structured or formatted data, such as RDF or Markdown. The MDEval framework (Chen et al., 2025) specifically evaluates and enhances the ability of LLMs to interpret such structured text, which is essential for ontology enrichment and semantic reasoning [50].

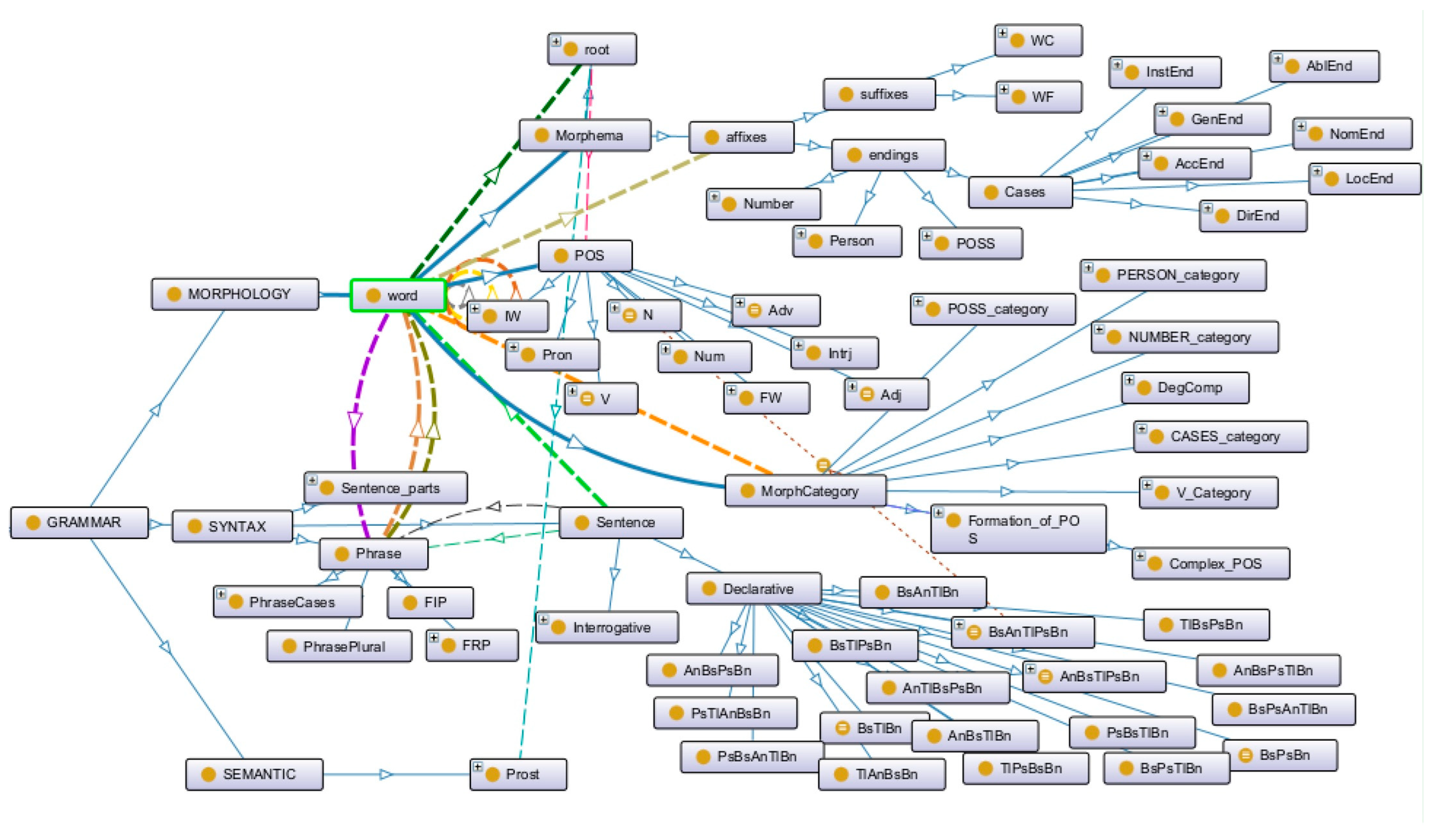

5.3. Development of Algorithms for Linguistic Processors

In the process of automatic natural language processing, the formalization of grammatical rules plays an important role, ensuring the correct morphological, syntactic, and semantic representation of linguistic structures. The Kazakh language is agglutinative and has a complex structure, so constructing a formal model of its grammar is of particular importance.

To solve this problem, an ontological model was used that describes morphological and syntactic categories, as well as their semantic relationships. Based on this model, a semantic model of the grammatical rules of the Kazakh language was developed, including:

hierarchy of basic linguistic categories (morphology, syntax, semantics, etc.);

formalization of morphological categories (number, case, person, voice, etc.);

description of syntactic relationships (combination, control, agreement, etc.);

integration of grammatical and semantic levels through ontological relationships.

The ontology of grammar rules acts as a formal metalanguage, allowing for the description of morphological and syntactic patterns in the form of semantic relations and logical axioms. The resulting model enables automatic analysis and generation of Kazakh sentences and serves as the basis for building intelligent question-and-answer systems (Figure 12).

Syntactic rules integrated with semantics allow for the analysis of sentence structure through their semantic connections, which significantly increases the efficiency of machine translation, automatic text analysis, and natural language processing.

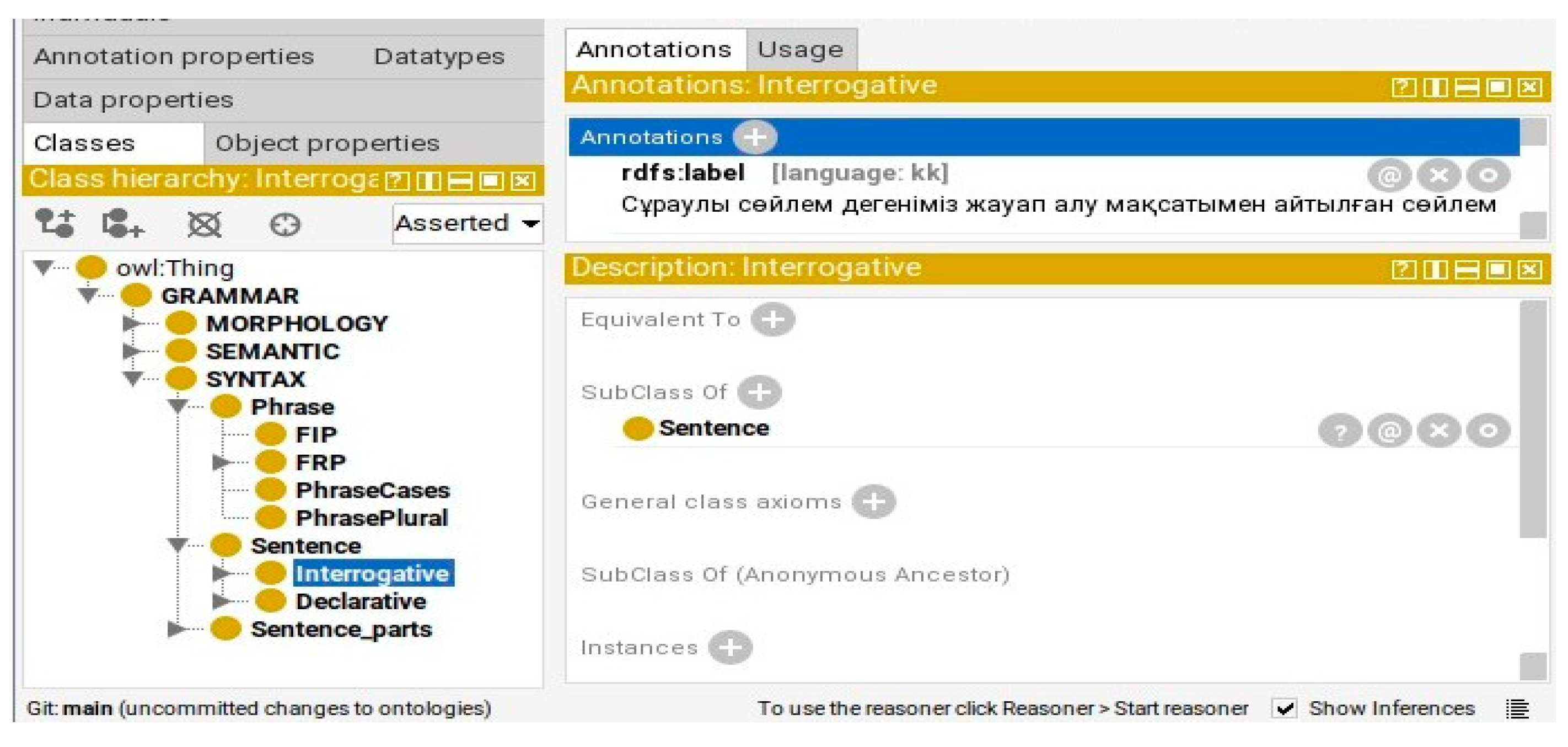

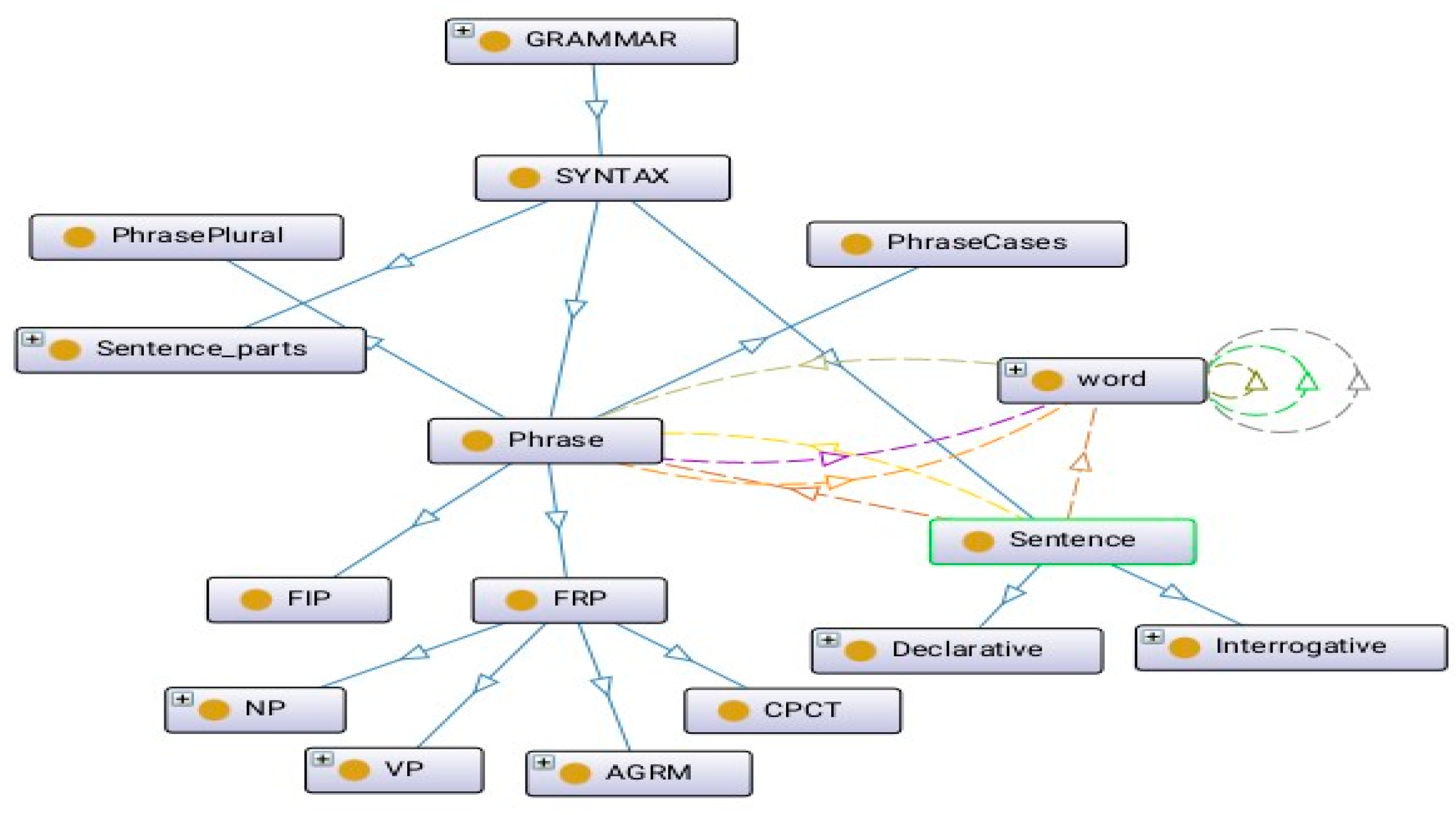

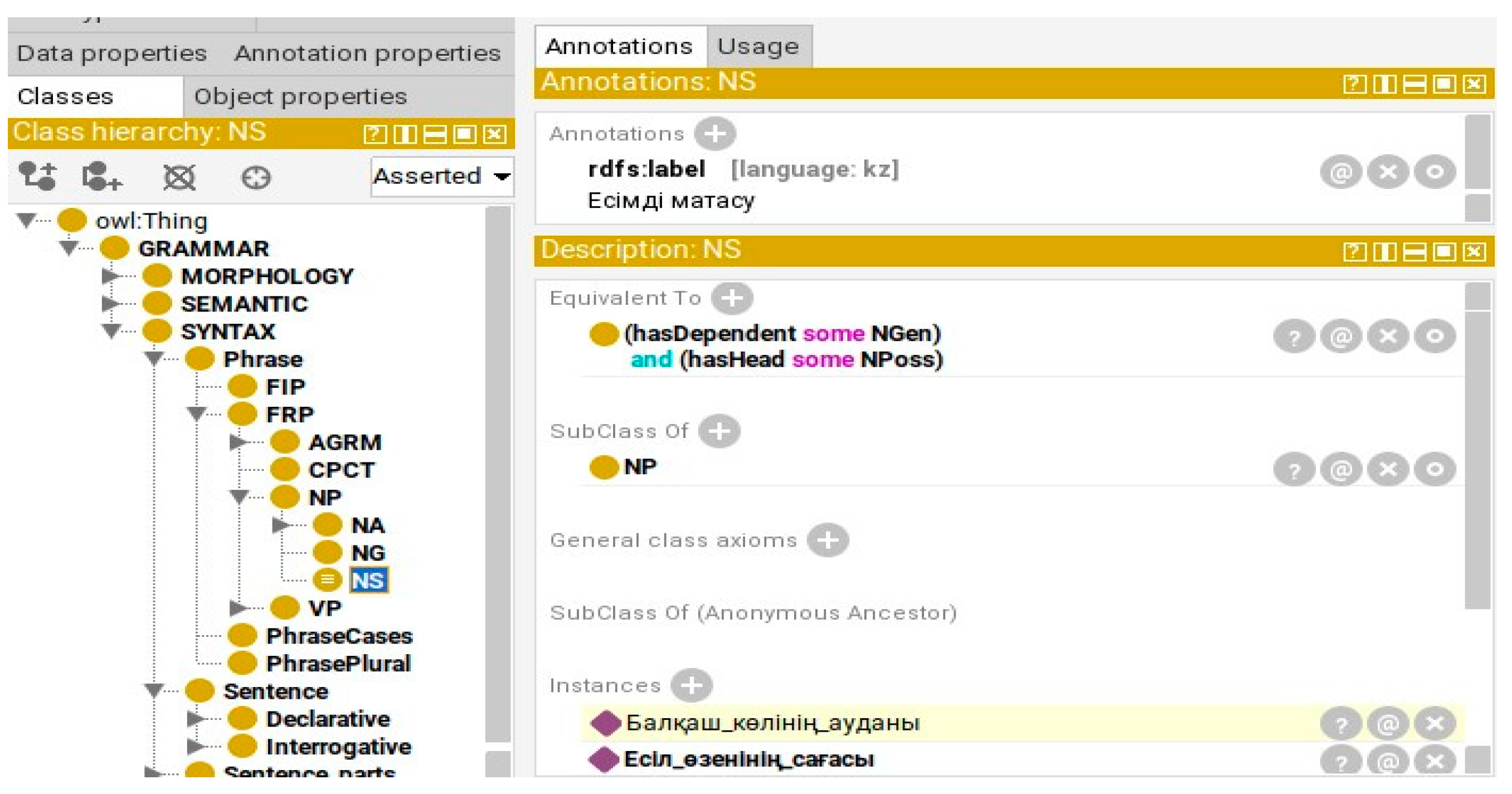

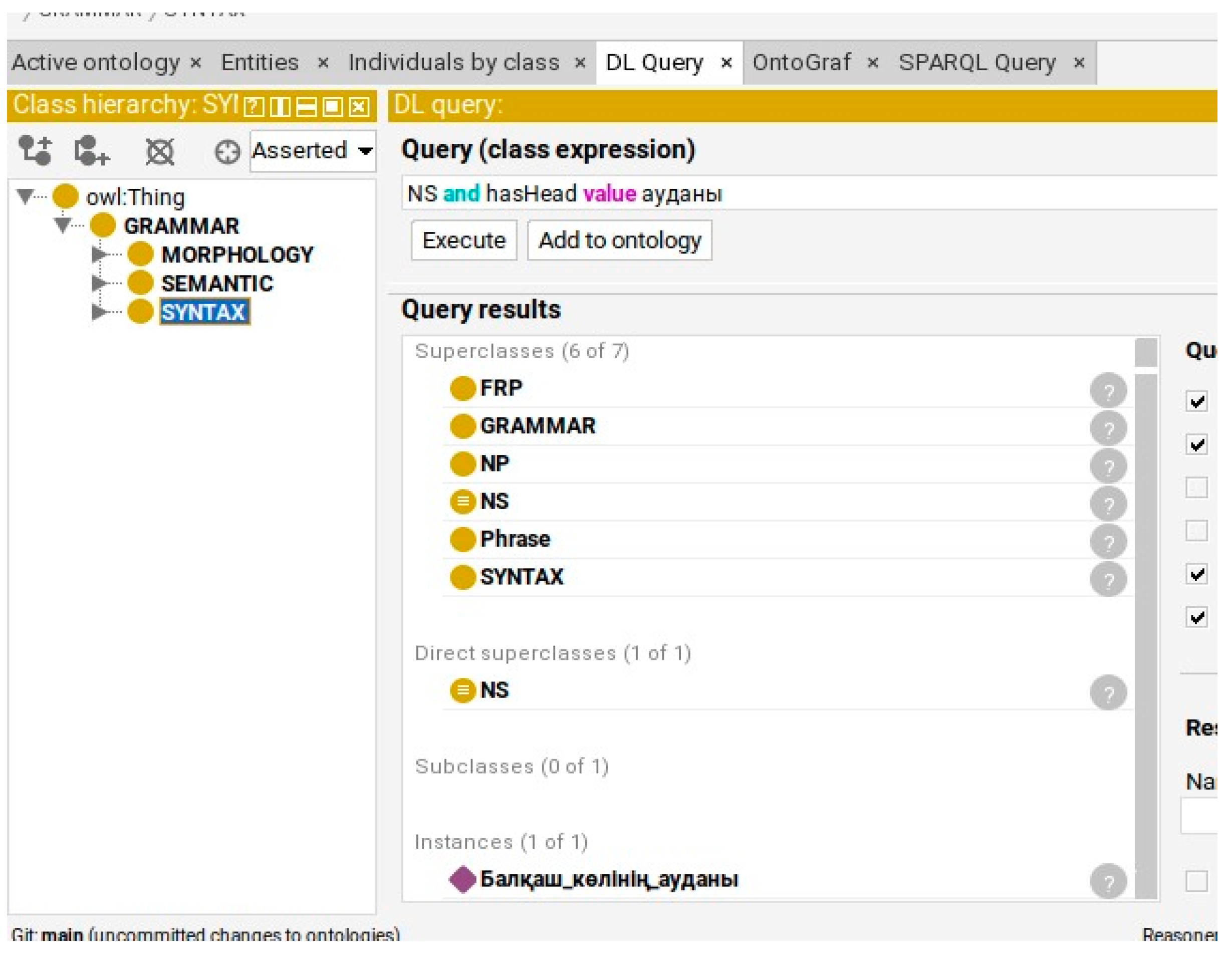

The ontological model defines the basic syntactic concepts of the Kazakh language: sentence (sentence), interrogative sentence (interrogative), phrase (phrase), free phrase (FRP), noun phrase (NP), and verb phrase (VP). These classes allow for the automatic analysis of the syntactic structure of questions and sentences (Figure 13).

5.4. Basic Ontology Structures and Knowledge Extraction

Figure 14 shows the main classes of the ontology and the relationships between them. These relationships allow formalizing the syntactic and semantic rules used in the system.

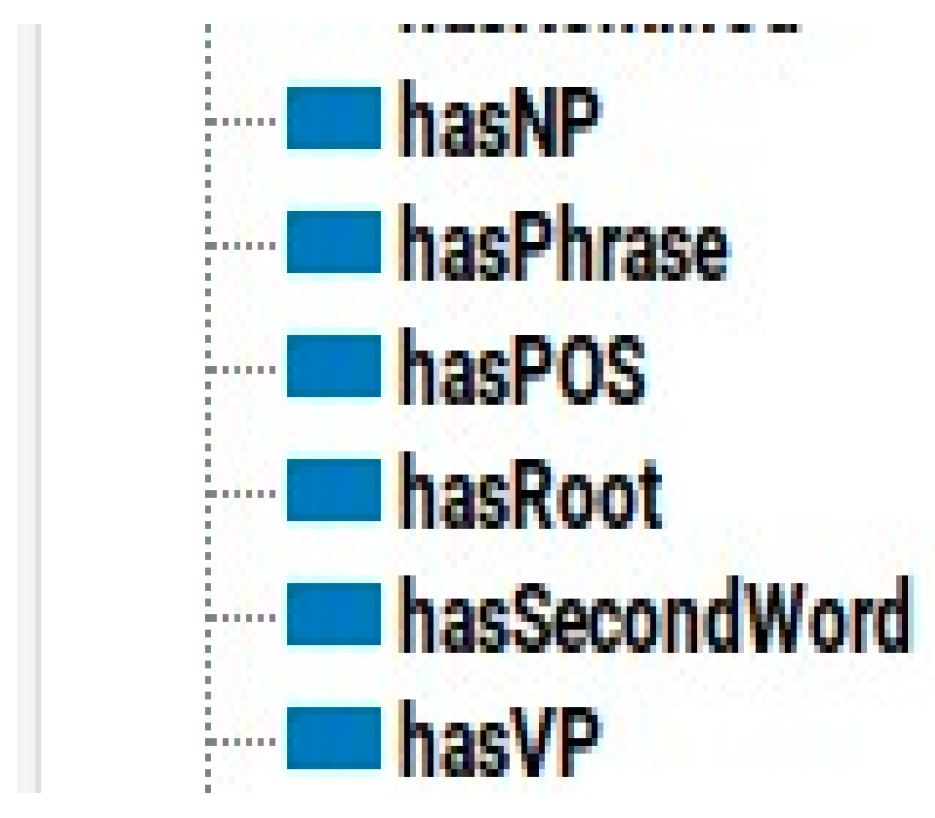

To establish grammatical relationships between classes, special object properties were defined. Figure 15 shows a fragment of object properties. For example, to indicate that a sentence contains noun and verb phrases, the properties hasNP (noun phrase) and hasVP (verb phrase) were introduced.

To ensure completeness of syntactic rule modeling, annotations, equivalence rules, and individuals were defined for classes (Figure 16). This allows the ontology not only to describe the structure but also to work with specific language elements.

5.5. Extraction of New Knowledge and Logical Inference

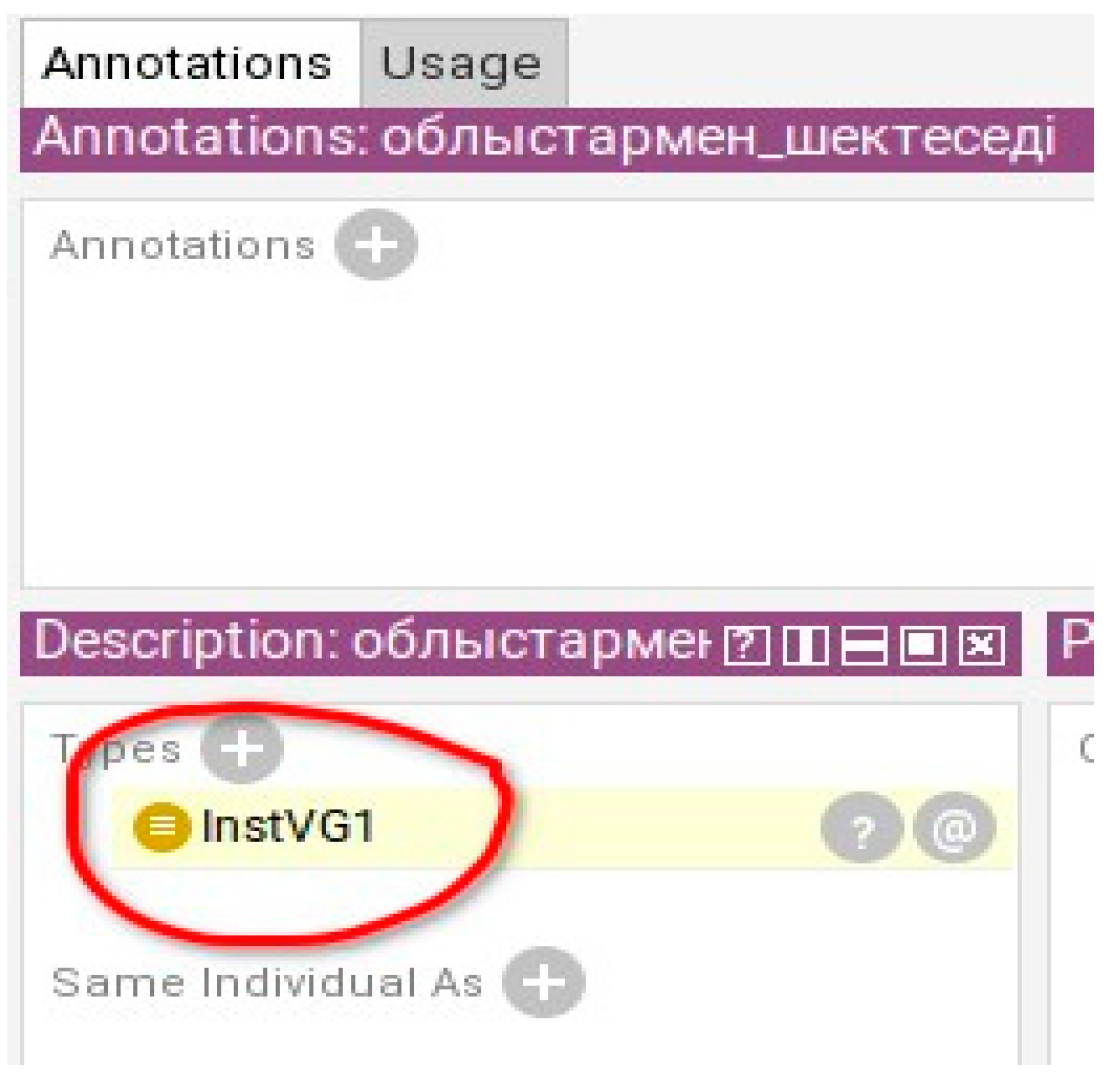

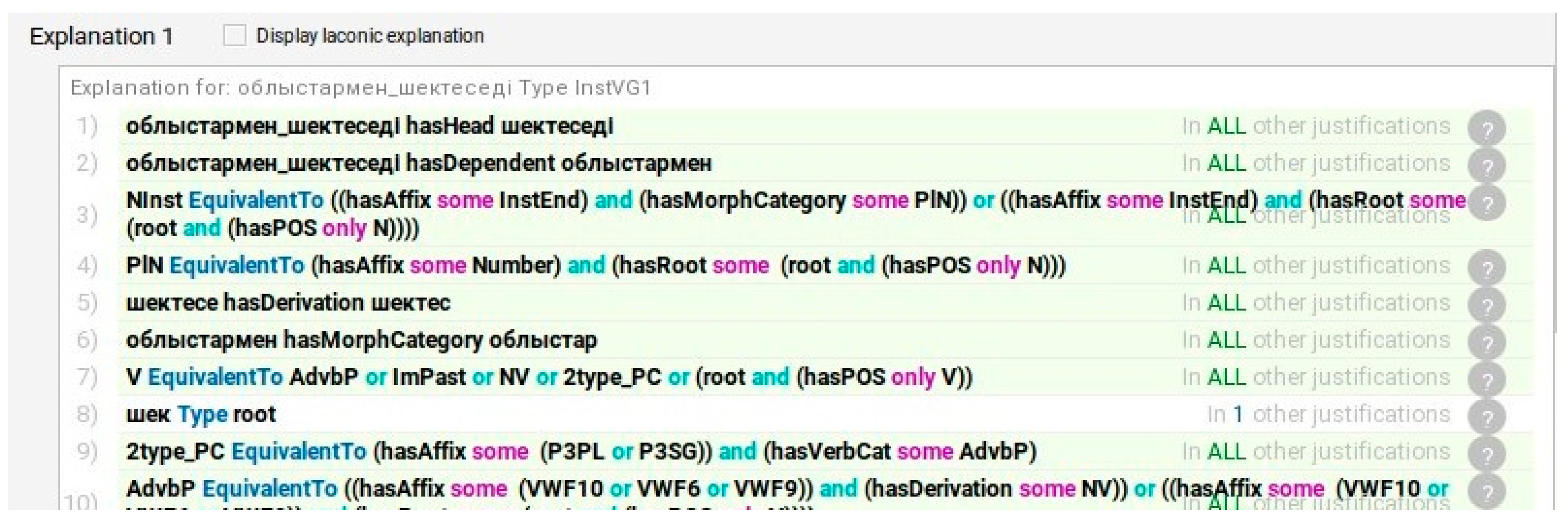

For a deeper understanding of the patterns and relationships of syntactic rules, the mechanism of logical inference (reasoning) is used. Figure 17 shows an example where, based on existing knowledge, the ontology automatically determines that the expression “oblystramen shetesedi” (“bordered by regions”) belongs to the control type of the verb with the instrumental case (InstVG1).

Figure 18 illustrates how the reasoner (the inference engine) performs semantic-aware analysis. In this case, new relationships were inferred from 29 items of syntactic and semantic knowledge. This demonstrates the ontology’s ability to expand the knowledge base without explicitly entering all the facts. The ontology reasoning component builds upon established SPARQL-based event and stream reasoning frameworks, such as EP-SPARQL (Anicic et al., 2011), enabling dynamic query generation for semantic question answering [51].

5.6. Semantic Search

The ontology is used not only for logical inference but also for semantic search, which reveals hidden connections and structured information based on specified properties and logical relationships. Figure 19 shows an example of a semantic search for the phrase “audany” (“area”), which results in the system finding information about the object “Area of Lake Balkhash.” This example demonstrates the effectiveness of using an ontological model in intelligent information retrieval systems.

6. Results

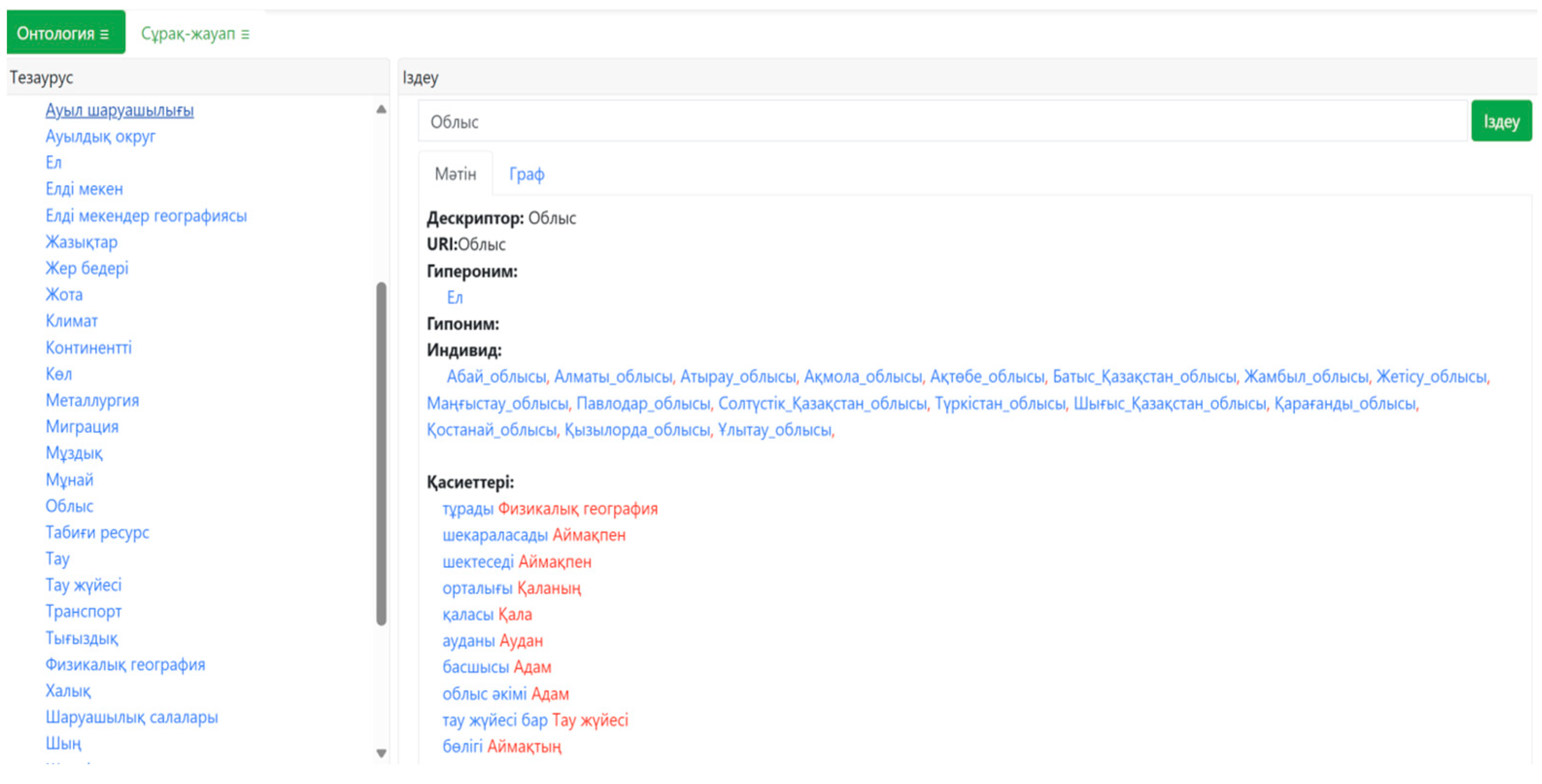

6.1. User Interface of the System

The developed system implements user and administrator interfaces, available at https://iqas.aiu.kz:5443/kk/ (accessed on 10 November 2025). If the browser displays a security warning, the user must click the ‘Proceed to iqas.aiu.kz (unsafe)’ link to access the system. The main components of the user interface are shown in Figure 20 and include:

list of thesaurus concepts;

search bar;

display of information in text format;

graphical representation of concepts.

When you select any concept, detailed information about it is displayed in the left panel: hypernyms, hyponyms, individuals, and related properties (Figure 21).

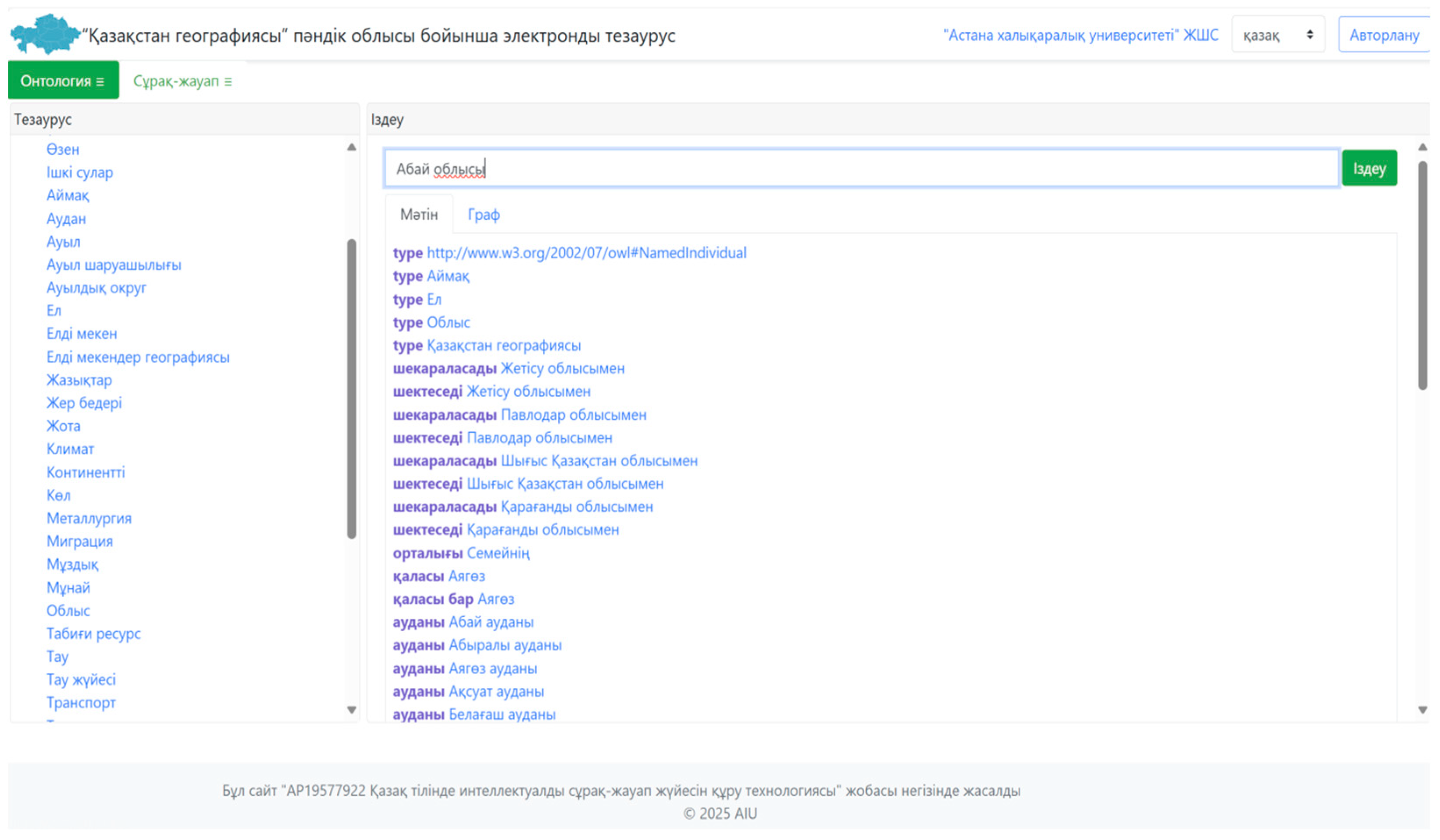

Using the search field, you can find the desired concept, for example, “Abai oblysy” (Abai region), and obtain all the related information (Figure 22).

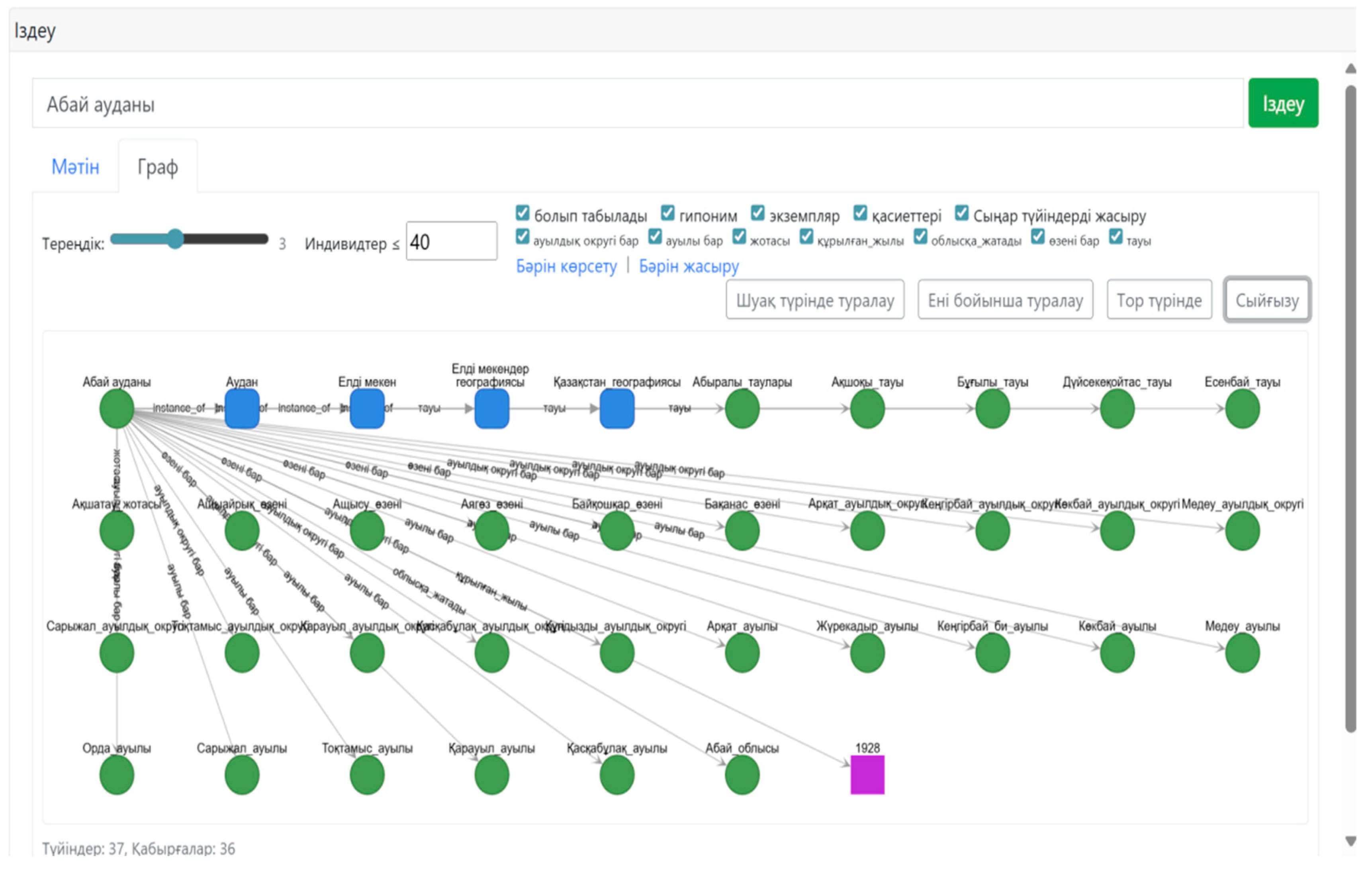

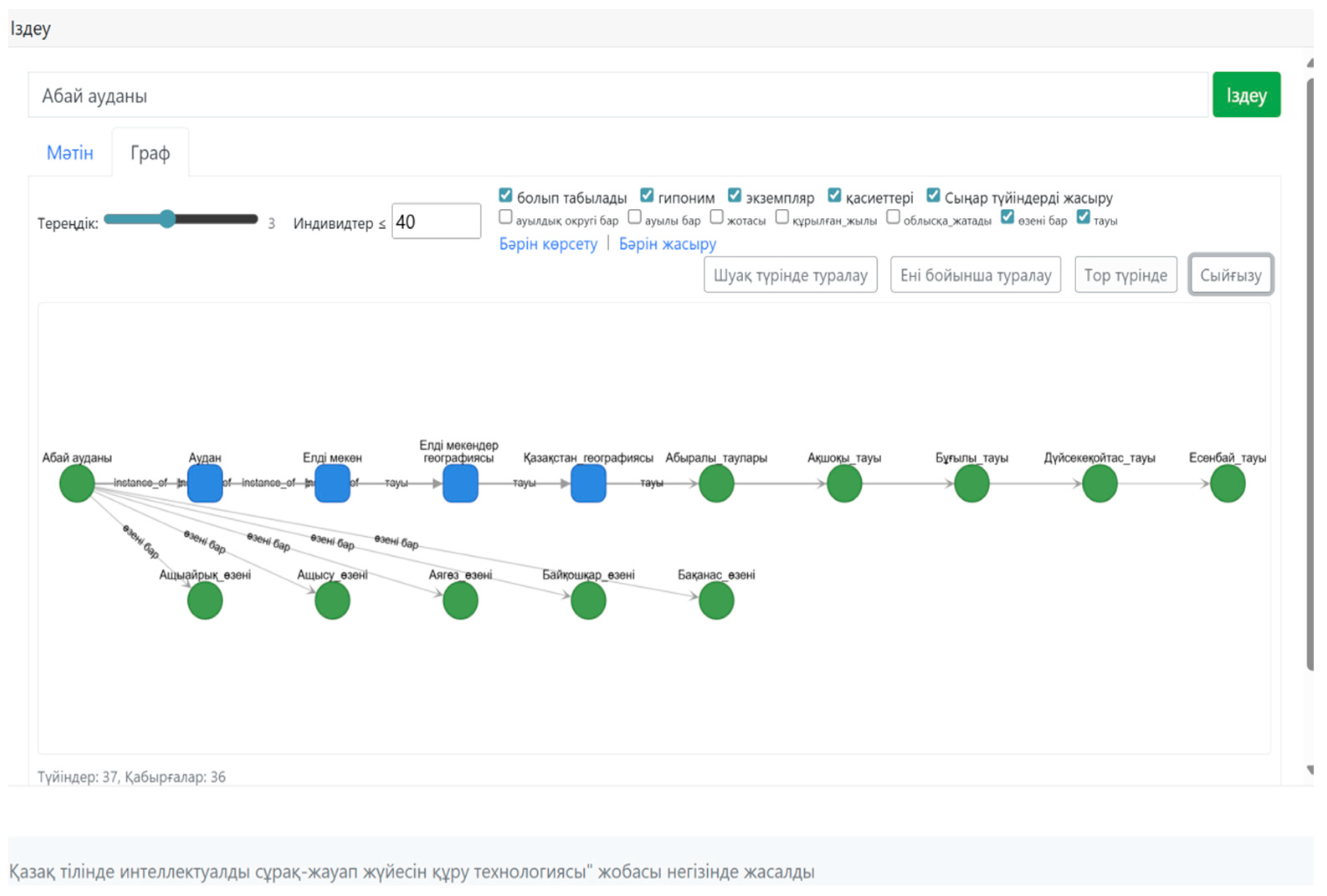

Figure 23 presents a graphical representation of information related to the Abai district. The number of nodes and edges in the graph is displayed, which conveys the complexity of the information presented. For user convenience, various graph layout options are provided: as rays, by width, and as a grid.

Properties can also be filtered so that only the required ones are shown. To do this, use the “Show All” or “Hide All” buttons, then select only the desired properties to obtain a summary. For example, to display data only on the mountains and rivers of the Abai region, simply select the corresponding properties (Figure 24).

The following

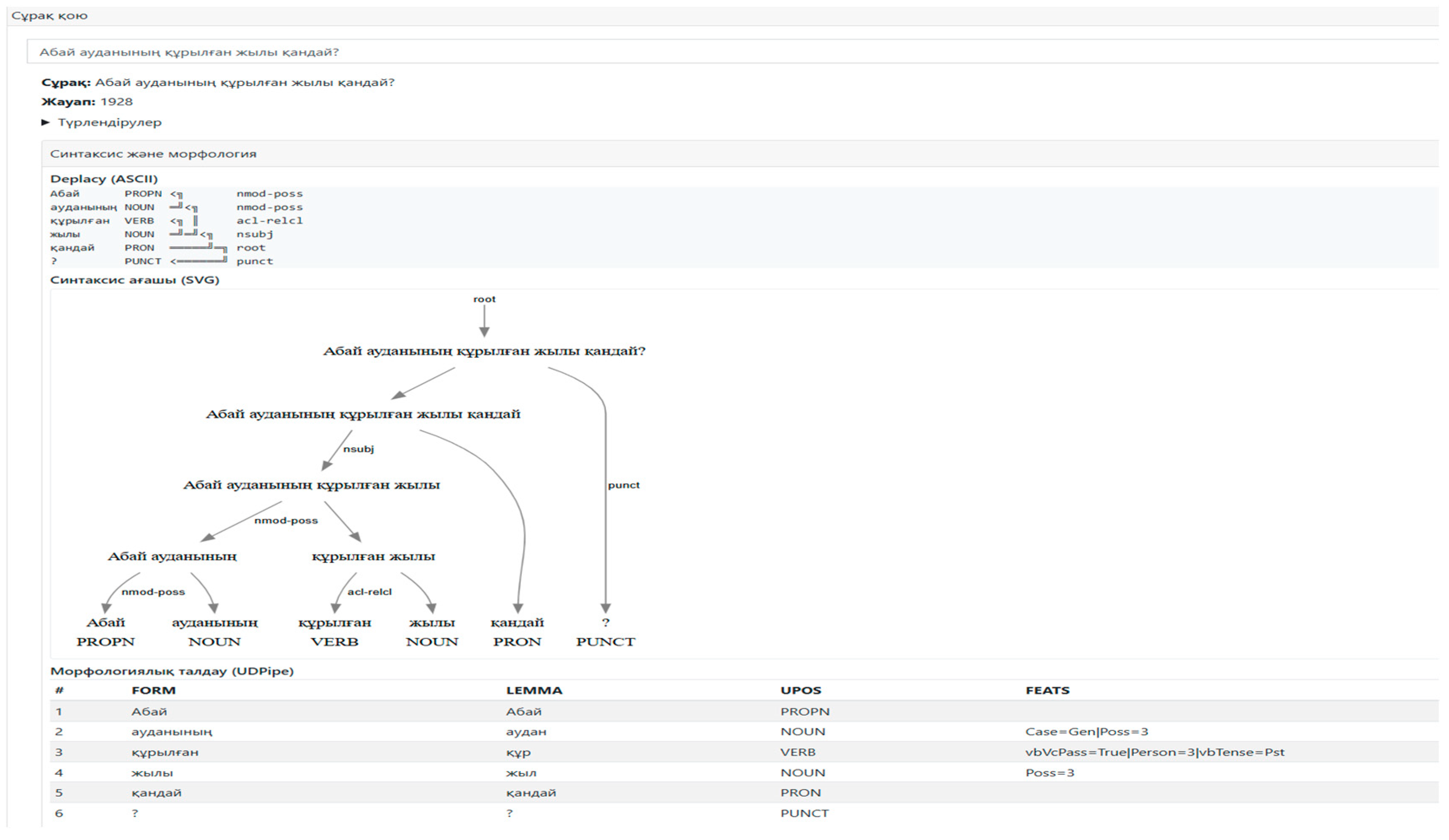

Figure 25 shows the system’s work on processing a given question and performing syntactic-morphological analysis of a sentence.

For example, for the question “In what year was the Abai district formed?” the system correctly returns the answer “1928”.

The syntax tree reflects the sentence structure and grammatical relationships between words. The morphological table contains lemmas, parts of speech, and grammatical features for each word, providing a formal representation of linguistic characteristics and enabling further automated text processing at a higher level.

Thus, the linguistic module ensures the correct processing of interrogative sentences and generates a structured representation necessary for subsequent ontological interpretation and fact extraction. The results confirm that the chosen approach reduces grammatical ambiguity, preserves key semantic elements, and forms a stable foundation for accurate semantic search.

6.2. Statistical Evaluation

To validate the reliability of the obtained results, we conducted a detailed statistical analysis. All reported metrics (Precision, Recall, F1, and MRR) are expressed as mean ± standard deviation across five random runs, with 95% confidence intervals. Statistical significance was evaluated using a paired bootstrap resampling test (10,000 samples, p < 0.05).
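One common form of the paired bootstrap test can be sketched as follows. The function name and the one-sided formulation are assumptions made for illustration; the exact variant applied in the evaluation is not specified beyond the number of samples and the significance level.

```python
import random


def paired_bootstrap_p(scores_a, scores_b, n_resamples=10_000, seed=0):
    """Estimate a one-sided p-value for "system A is better than system B".

    scores_a and scores_b hold per-question scores for the same questions
    in the same order. The returned value is the fraction of resampled
    question sets on which A does not outperform B.
    """
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("score lists must be non-empty and of equal length")
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    not_better = 0
    for _ in range(n_resamples):
        # Resample questions with replacement, keeping each pair together.
        total = sum(diffs[rng.randrange(n)] for _ in range(n))
        if total <= 0:
            not_better += 1
    return not_better / n_resamples
```

Under this formulation, a returned value below 0.05 corresponds to the significance threshold quoted above. Resampling the per-question differences keeps each pair of scores together, which is what makes the test paired.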

Table 2 presents the averaged metrics, while Table 3 provides per-question-type performance breakdown (Who, When, Where, Numeric Questions (how many), Relation).

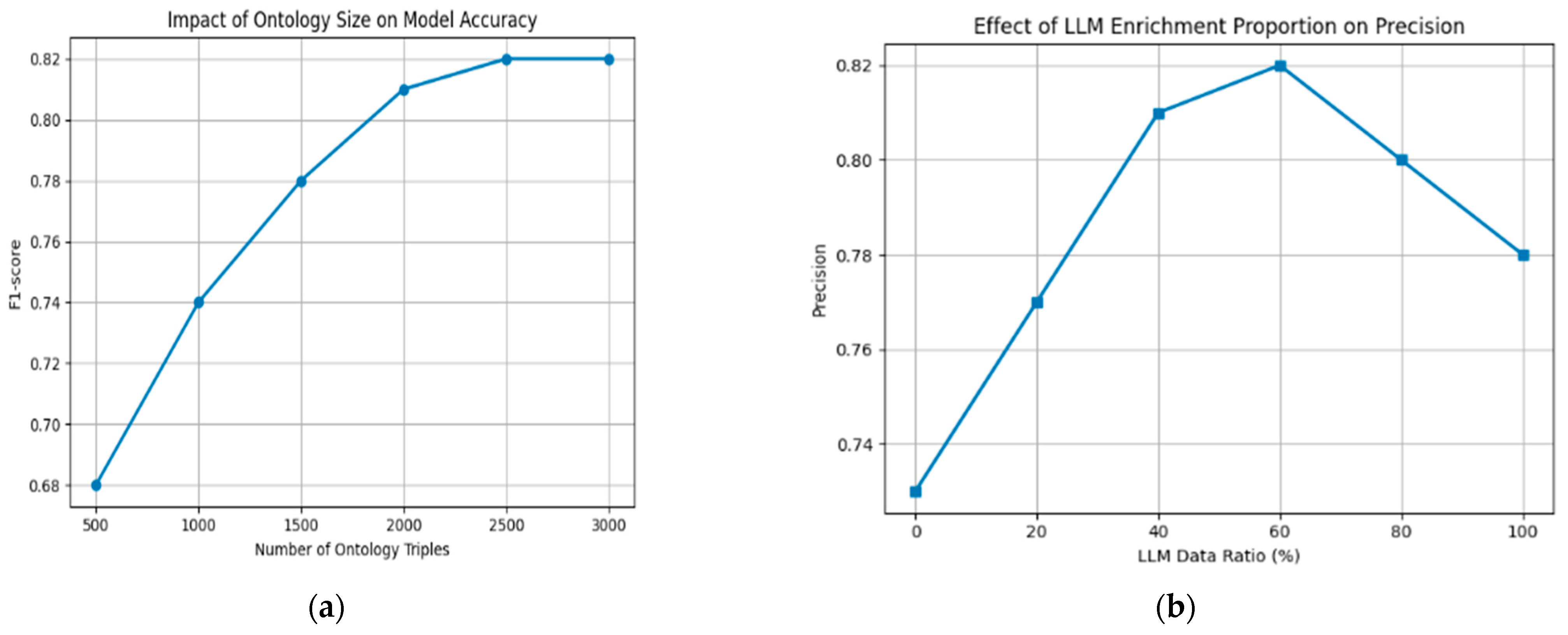

Figure 26 illustrates the dependency between model quality, ontology size, and the proportion of LLM enrichment, confirming the stability and scalability of the proposed system.

Overall, the presented statistical analysis confirms that the proposed ontology–LLM hybrid architecture achieves consistent and statistically significant improvements over baseline systems. The variance and confidence interval analysis demonstrates stable performance across multiple runs, while per-type and error-based evaluations reveal that ontology enrichment substantially enhances factual and relational reasoning.

Furthermore, the robustness experiments and dependency plots confirm that the system remains reliable under data perturbations and scales effectively with ontology size and LLM contribution. These results collectively validate the reliability, stability, and scalability of the proposed approach for semantic question answering in low-resource languages.

7. Discussion

Integrating ontological knowledge with syntactic-morphological analysis and large language models (LLMs) creates a hybrid approach for information retrieval in the Kazakh language. This approach leverages the strengths of each component to enhance accuracy and semantic relevance of search results. Below, we address each research question in turn, explaining how these integrations improve the system.

RQ1: The integration of ontological modeling, morphological-syntactic parsing, and large language models significantly enhances the semantic accuracy of information retrieval in Kazakh. Each component contributes uniquely: ontologies provide structured domain logic, linguistic analysis resolves grammatical ambiguity in agglutinative forms, and LLMs offer contextual understanding. Experimental evaluations confirm the advantage of this hybrid design over ontology-only or BERT-only systems in terms of precision, recall, and answer relevance [7].

RQ2: Large language models have proven effective in enriching domain ontologies by automatically extracting and structuring knowledge from unstructured sources. This automated enrichment, performed through conversion into RDF triples, allows the knowledge base to evolve dynamically. Such adaptive mechanisms are especially valuable in low-resource language contexts, reducing the need for manual ontology updates and improving coverage [8].

RQ3: The output of syntactic and morphological parsing is systematically mapped onto ontology concepts to generate SPARQL queries. This ensures that each factual question is translated into a formal, traceable query pattern grounded in domain semantics. The approach guarantees accurate retrieval of facts and preserves the transparency and explainability of the question-answering process [51].

System Deployment and Practical Evaluation. The hybrid ontology–LLM question-answering (QA) system was deployed in an operational environment to assess its efficiency and robustness. Average response latency was 1.4 s (p50) and 2.1 s (p95), with mean SPARQL query execution time of 35 ms and reasoner memory usage of approximately 420 MB. These results confirm real-time performance and low computational cost. Ontology enrichment follows a human-in-the-loop validation workflow: extracted RDF triples are schema-validated, manually reviewed, and versioned in GraphDB 10.3, ensuring consistency and rollback capability. LLM-induced RDF inconsistencies occur in fewer than 3% of enrichment cases and are automatically detected through schema validation. The system operates within a Docker/FastAPI infrastructure and supports bilingual (Kazakh/English) queries. Operational testing demonstrated stable latency, reliable ontology synchronization, and consistent answer accuracy under noisy or incomplete inputs. These outcomes confirm that the proposed architecture is deployable, scalable, and robust for semantic QA in low-resource language environments.

System Scalability and Limitations. The proposed ontology–LLM question-answering system demonstrates stable performance under increased ontology size and query load. During testing, average response time and memory usage remained within acceptable limits, confirming that the architecture can scale to larger datasets without significant degradation. Ontology enrichment and reasoning processes operate asynchronously, which ensures efficient resource utilization. However, handling of ambiguous or incomplete queries and dynamic ontology updates has not yet been extensively tested and will be addressed in future work. These aspects are planned for further improvement to enhance robustness and adaptability of the system in real-world scenarios.

8. Conclusions

This study presents a hybrid question-answering (QA) architecture for the Kazakh language, combining ontological modeling, syntactic-morphological analysis, and large language models (LLMs). The results demonstrate that such integration significantly improves the semantic alignment between user queries and relevant factual answers. Specifically, the ontology provides structured domain knowledge, linguistic modules handle complex morphological structures, and the LLM contributes contextual interpretation and adaptive enrichment.

The system’s effectiveness was reflected in increased precision and semantic relevance during evaluation. Its design accommodates the agglutinative nature of Kazakh, which traditionally poses challenges for parsing and interpretation. Compared to approaches using only neural models or ontology-based logic, the hybrid architecture provides better control over semantic correctness while retaining flexibility in understanding varied user inputs.

In terms of computational performance, morphological parsing operates with linear time complexity O(n), while reasoning and entity matching perform at O(n·k), where n is the number of tokens and k is the number of ontology candidates. This ensures predictable processing time even in larger-scale scenarios.

Ethical and resource considerations were central during model development. Due to the limited availability of high-quality digital resources for Kazakh, all data used for training and testing were sourced from publicly accessible repositories with proper attribution. Fine-tuning of LLM components was performed under constrained resource settings to minimize environmental costs, and extra attention was paid to mitigating bias and preserving the cultural specificity of the language.

Looking forward, the system offers a foundation for expansion in several directions: broader ontology coverage, multilingual interface support, semi-automated ontology evolution using external corpora, and integration of dialog-based interaction. These improvements will further enhance the QA system’s robustness, accessibility, and applicability across other underrepresented languages.