3. Modified Approach to Hamming Distance Calculation

The Hamming distance has significant limitations as a dissimilarity metric: it singles out only fully matching patterns, for which the distance is zero, while patterns at the same nonzero distance are treated as equally different regardless of where and how they differ. One argument that confirms the indistinguishability of non-matching sequences is the case of a binary pattern and its bitwise inverse, for which the Hamming distance is always constant and equal to n. In all such examples the Hamming distance equals n, indicating the same maximum difference across all pairs of patterns. However, the structural differences between these pairs of sequences are significant. An even greater structural difference can exist between pairs of character sequences that share the same Hamming distance. These examples highlight the need for alternative dissimilarity measures capable of capturing not only the number of differing bits but also the spatial and structural relationships within the sequences.

Let us examine the potential for extending the use of the Hamming distance in comparing two finite sequences of characters, which represent test patterns consisting of n characters (elements) each. The alphabet of the characters can be arbitrary, as can the number n of elements in the patterns. Without loss of generality, we assume that each test pattern is initially a binary pattern, meaning that its characters take values from {0, 1}.

The primary objective of the existing modifications to the Hamming distance calculation is to select, from among potential test pattern candidates, a test pattern that is most different from the previously included pattern.

The first modification assumes that the length of a binary test pattern is restricted to n = 2^w, where w is an integer. Such constraints frequently occur in practice when addressing diagnostic problems in computer systems. Under this condition, the original binary sequence can be represented in w + 1 different ways, indexed by v = 0, 1, ..., w. The index v specifies the number 2^v of consecutive bits that form each character in the new alphabet. For v = 0 (2^0 = 1), we obtain the binary alphabet, in which each character is a single bit. For v = 1 (2^1 = 2), we obtain the quaternary alphabet, where each character is formed from two consecutive bits of the original pattern. For larger values of v, the construction continues in the same manner. In the general case, the sequence with index v consists of n/2^v characters. Each character of this alphabet is obtained by concatenating two neighboring characters of the previous representation, the one with index v − 1. Thus, each representation defines a sequence over a different alphabet, offering multiple perspectives on the same original binary pattern.

The given interpretation of the original binary patterns does not prevent the determination of the Hamming distance between two patterns. Just as in the case of binary vectors, Equation (1) can also be applied here, provided that both patterns are expressed in the same chosen alphabet. Let us illustrate this with the following example.

Example 1. Consider two binary test patterns for which the condition on the pattern length is satisfied. For each of these patterns, in accordance with the above-described definitions, there are representations in the form of sequences of characters belonging to different alphabets (see Table 3). Table 3 presents the Hamming distance for the original binary patterns, as well as for their representations in different alphabets with their respective characters. In this example, ASCII codes are used to represent the 8-bit characters of the two patterns. For all cases, the value of the Hamming distance has been calculated based on Equation (1). The resulting characteristic, represented by the four components, provides a more accurate assessment of the differences between these test patterns.
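The four-component characteristic described in Example 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names (`regroup`, `hamming`, `power_of_two_profile`) are hypothetical, and the two inputs are simply the 8-bit ASCII codes of the symbols "c" and "[" that the text mentions in connection with Table 3; whether they coincide exactly with the patterns of that table is an assumption.

```python
def regroup(bits: str, size: int) -> list[str]:
    """Split a bit string into consecutive characters of `size` bits each."""
    if len(bits) % size != 0:
        raise ValueError("pattern length must be divisible by the character size")
    return [bits[i:i + size] for i in range(0, len(bits), size)]


def hamming(a: list[str], b: list[str]) -> int:
    """Number of positions at which two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("sequences must have the same length")
    return sum(x != y for x, y in zip(a, b))


def power_of_two_profile(p: str, q: str) -> list[int]:
    """Hamming distances for character sizes 1, 2, 4, ..., n bits.

    Assumes both patterns have the same length n, with n a power of two.
    """
    n = len(p)
    if n == 0 or n != len(q) or n & (n - 1):
        raise ValueError("both patterns need the same power-of-two length")
    profile = []
    size = 1
    while size <= n:
        profile.append(hamming(regroup(p, size), regroup(q, size)))
        size *= 2
    return profile


# Assumed inputs: 8-bit ASCII codes of "c" and "[".
first = format(ord("c"), "08b")    # 01100011
second = format(ord("["), "08b")   # 01011011
print(power_of_two_profile(first, second))  # [3, 2, 2, 1]
```

The binary distance alone reports 3, whereas the coarser alphabets add the information that the differing bits fall into two quaternary characters and into both 4-bit characters.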

The requirement that the dimension of a binary pattern , where w is an integer, may not always be satisfied in practice. Consequently, for cases where , when mapping the original pattern into the sequences , the required number of bits equal to may be insufficient for the last character of the sequence , where . For example, considering the pattern , where , it can be represented as the sequences and . However, in three cases—, , and —the required number of bits is insufficient for the last character of the corresponding alphabet; specifically, one bit is missing for , and one bit is missing in both and . An obvious solution to overcome this limitation is a cyclic interpretation of the original pattern . This interpretation assumes that the bit following the last bit is the first bit , thereby using the initial bits of the pattern to obtain the required number of bits for the last character of . For the pattern , such an interpretation allows us to obtain .
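The cyclic interpretation can be illustrated with a short Python sketch. The function name `regroup_cyclic` and the 5-bit sample pattern are hypothetical and chosen only for illustration; they are not taken from the original example.

```python
def regroup_cyclic(bits: str, size: int) -> list[str]:
    """Split `bits` into characters of `size` bits, wrapping around cyclically.

    If the last character is incomplete, the missing bits are taken from
    the beginning of the pattern, as if the first bit followed the last one.
    """
    n = len(bits)
    if not 1 <= size <= n:
        raise ValueError("character size must be between 1 and the pattern length")
    count = -(-n // size)              # number of characters, i.e. ceil(n / size)
    missing = count * size - n         # bits needed to complete the last character
    extended = bits + bits[:missing]   # borrow them from the start of the pattern
    return [extended[i:i + size] for i in range(0, len(extended), size)]


pattern = "10110"  # hypothetical 5-bit pattern
for size in range(1, len(pattern) + 1):
    print(size, regroup_cyclic(pattern, size))
```

For this sample pattern, character sizes 2, 3, and 4 need one, one, and three borrowed bits, respectively, while sizes 1 and 5 need none.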

The notation used above for 8-bit characters, as well as the symbols “c” and “[” in Table 3, represents values in the base-256 numeral system. In each case, a group of 8 consecutive bits is interpreted as a single element of a 256-ary alphabet. Thus, the 8-bit character in the example above is written as the letter b (its ASCII code), while the 8-bit characters of the two patterns in Table 3 correspond to the ASCII symbols “c” and “[”, respectively. It should be emphasized that these ASCII representations are used only as illustrative examples, since the base-256 system also includes non-printable and control characters. The purpose of this notation is to demonstrate that every 8-bit block can be treated as one symbol of a base-256 alphabet.

Removing the restriction on the size n of the binary pattern by extending it to the required number of bits allows for an expansion in the number of alphabets available for different mappings of the original pattern. Naturally, considering the possibility of extending the original binary pattern to the required number of bits, the number of alphabets can be increased up to n. These alphabets consist of characters specified by one bit, two bits, three bits, four bits, and so on, up to the alphabet in which each character is determined by n consecutive bits. For example, considering the original pattern with and its cyclic extensions, it can be represented in the form of sequences obtained for different alphabets. The sequential representations are as follows: , , , , and .

Another approach to representing the original test pattern in various numerical systems with different character sets is to expand the last character of the pattern by appending, for example, all zero values. Consider the example of a test pattern , which can be represented in five different numerical systems, each with its own alphabet. To avoid potential conflicts related to the absence of a complete set of characters (or their graphical representation) in alphabets containing a large number of symbols, each character in all numerical systems will be represented in binary form and separated by spaces. Thus, the test pattern can be represented in five different numerical systems as follows: .

Let us define the binary n-bit test pattern as a pattern in a numerical system other than binary.

Definition 2. A test pattern consisting of n binary characters can be interpreted in a numerical system with 2^r characters, where r ∈ {1, 2, ..., n}. The resulting pattern consists of ⌈n/r⌉ characters, where the original pattern is expanded to a size of r·⌈n/r⌉ bits by adding zeros.

For example, the test pattern with can be represented in the octal () numerical system with characters as . To achieve this representation, zeros have been added.
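A minimal sketch of the zero-padding interpretation from Definition 2 is given below. The function name `to_characters` and the 5-bit sample pattern are hypothetical; the sketch assumes that the zeros are appended at the end of the pattern, as the surrounding text describes for the last character.

```python
def to_characters(bits: str, r: int) -> list[str]:
    """Interpret a binary pattern in a numeral system whose characters have r bits.

    The pattern is extended with trailing zeros until its length is a
    multiple of r, then cut into consecutive r-bit characters.
    """
    n = len(bits)
    if not 1 <= r <= n:
        raise ValueError("r must be between 1 and the pattern length")
    count = -(-n // r)                      # ceil(n / r) characters
    padded = bits.ljust(count * r, "0")     # append the missing zeros
    return [padded[i:i + r] for i in range(0, len(padded), r)]


pattern = "10110"                           # hypothetical 5-bit pattern
octal = to_characters(pattern, 3)           # 3-bit characters, i.e. octal digits
print(octal)                                # ['101', '100']
print([int(ch, 2) for ch in octal])         # [5, 4]
```

Keeping each character as a bit string mirrors the convention in the text of writing the characters of large alphabets in binary form.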

Note that the above examples of interpreting the pattern and Definition 2 allow us to consider binary test patterns in various number systems. Using the last example of representing the test pattern in different number systems, let us illustrate the determination of the Hamming distance (Equation (1)) for each interpretation of two patterns.

The example below (see Table 4) of determining the Hamming distance demonstrates the possibility of obtaining, based on Equation (1), several numerical assessments of the relationship between the original binary patterns.

Let us now define a new measure of dissimilarity between two binary test patterns, which consists of a set of numerical characteristics represented by the Hamming distances.

Definition 3 (Dissimilarity Measure). The dissimilarity measure between two binary test patterns of n bits each is defined as an n-component vector composed of the Hamming distances calculated according to Equation (1), one for each r ∈ {1, 2, ..., n}. For the r-th component, the analyzed characters of the test patterns, according to Definition 2, are represented by r binary bits. Accordingly, using Equation (1), the numerical values of the components of the dissimilarity measure are determined.
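Definition 3 can be sketched as follows. The names `to_characters` and `dissimilarity` are hypothetical, the zero-padding of the last character follows Definition 2, and the sample patterns are illustrative rather than taken from Table 4 or Table 5.

```python
def to_characters(bits: str, r: int) -> list[str]:
    """Cut a binary pattern into r-bit characters, zero-padding the last one."""
    count = -(-len(bits) // r)              # ceil(n / r)
    padded = bits.ljust(count * r, "0")
    return [padded[i:i + r] for i in range(0, len(padded), r)]


def dissimilarity(p: str, q: str) -> list[int]:
    """n-component vector of Hamming distances, one per character size r = 1..n."""
    if len(p) != len(q) or not p:
        raise ValueError("patterns must be non-empty and of equal length")
    measure = []
    for r in range(1, len(p) + 1):
        chars_p = to_characters(p, r)
        chars_q = to_characters(q, r)
        measure.append(sum(a != b for a, b in zip(chars_p, chars_q)))
    return measure


print(dissimilarity("01100011", "01011011"))  # [3, 2, 2, 2, 1, 1, 1, 1]
print(dissimilarity("01100011", "10011100"))  # [8, 4, 3, 2, 2, 2, 2, 1]
```

The second call compares a pattern with its bitwise inverse: every character differs, so each component equals the number of r-bit characters.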

Table 5 presents examples of calculating the dissimilarity measure for various pairs of test patterns.

Note that in all three examples presented in Table 4 and Table 5, the same pattern was used as the reference test pattern, while three different patterns were selected as the second pattern to determine the value of the measure. Accordingly, the three cases shown in Table 4 and Table 5 yield three values of the measure of dissimilarity.

The examples presented in Table 4 and Table 5 demonstrate the indistinguishability of all three patterns with respect to the reference pattern when using the classical measure of difference, the Hamming distance, since in all three cases it is equal to 3. At the same time, applying the new measure of dissimilarity (see Definition 3) reveals different degrees of difference between the patterns, as expressed by the varying values of the components of the measure.

The measure of dissimilarity for two binary test patterns has the following obvious properties.

- Property 1.

The minimum value of all components of the measure is zero. This condition occurs when the test patterns are identical.

- Property 2.

If one component equals zero, then all the others are also equal to zero. Conversely, if any component is greater than zero, then all other components are greater than zero as well.

- Property 3.

The maximum values of the components depend on the number of characters in the corresponding representations of the two patterns. Specifically, the r-th component cannot exceed ⌈n/r⌉, the number of r-bit characters. The maximum difference between test patterns in terms of the new dissimilarity measure is achieved when one pattern is the bitwise inverse of the other. In this case, all components of the measure reach their maximum values.

For example, for a pattern and its inverse pattern, every component equals the number of characters in the corresponding representation.

- Property 4.

The components of

satisfy the following relation:

The fulfillment of this property is explained by the fact that when calculating , the number of characters included in the patterns and is less than or equal to the number of characters within the patterns and . Therefore, the following inequality holds:

As noted in [7,13,32], the idea of controlled random tests is as follows: the next test pattern is generated to be as different (or distant) as possible from the previously generated patterns in terms of predetermined measures of dissimilarity. For this purpose, at each step of forming the next test pattern, a candidate is selected from a set of potential test patterns [7,13,32]. The main operation of the selection procedure is to determine the numerical value of the chosen measure of dissimilarity between two patterns: one of the test patterns already included in the test and one of the candidate test patterns. As a result, the candidate test pattern for which the measure (or measures) of dissimilarity attains the maximum value is selected as the next test pattern.

Let us explain the procedure for generating a controlled random test using the examples presented in Table 4 and Table 5 for the case where the Hamming distance is applied as a measure of dissimilarity. Assume that the first pattern of the controlled random test is the reference pattern used in those tables, and that the three other patterns are randomly generated candidates for the next test pattern. For each candidate pattern, the value of the dissimilarity measure, as defined in Equation (1), is calculated with respect to the first test pattern. As shown in Table 4 and Table 5, the value of the Hamming distance is equal to 3 in all three cases. The classical technique for generating controlled random tests assumes that any of the three candidate patterns can be selected as the next test pattern.

In cases where multiple test pattern candidates yield the same maximum value of the Hamming distance, the new measure of dissimilarity introduced by the authors (see Definition 3) provides a more comprehensive way to distinguish between test pattern candidates with respect to the reference test pattern. To achieve this, it is necessary to analyze the values of the next component of the dissimilarity measure. As demonstrated in the given example, the maximum value of this component is obtained for one of the three candidates, which can then be selected as the next test pattern in the controlled random test.

Based on the above example and following the classical strategy for generating random tests, we will formulate one of the rules for applying the new dissimilarity measure.

Application Rule. A test pattern candidate is selected as the next test pattern if it is the only candidate, among the entire set of test pattern candidates, that attains the maximum value of the component with the smallest index r at which the candidates differ. Otherwise, if multiple candidates have the same maximum values in all components, one of them is selected randomly.

Other strategies for generating controlled random tests are possible, differing from the given application rule for the new dissimilarity measure. For example, instead of selecting the next test pattern based on a single component of the measure, one can use an integral measure of dissimilarity, defined as the arithmetic sum of its components.

Table 6 presents the results of calculations, based on Equation (1), of the components of the dissimilarity measure for a reference binary pattern and for four test pattern candidates: 11110000, 00110011, 11100010, and 10010101. The last column of Table 6 contains the value of the integral measure for all four candidate patterns.

As can be seen from Table 6, according to both criteria, namely the application rule and the integral value, the same candidate pattern will be selected as the next test pattern.
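Both selection criteria can be sketched in Python as follows. Several points are assumptions made only for this illustration: the reference pattern 00000000 is hypothetical (it is not stated here which reference pattern Table 6 uses), the application rule is read as a component-by-component comparison starting from the first component, and the function names are invented. The four candidates are those listed for Table 6.

```python
def to_characters(bits: str, r: int) -> list[str]:
    """Cut a binary pattern into r-bit characters, zero-padding the last one."""
    count = -(-len(bits) // r)
    padded = bits.ljust(count * r, "0")
    return [padded[i:i + r] for i in range(0, len(padded), r)]


def dissimilarity(p: str, q: str) -> tuple[int, ...]:
    """Vector of Hamming distances for character sizes r = 1..n."""
    return tuple(
        sum(a != b for a, b in zip(to_characters(p, r), to_characters(q, r)))
        for r in range(1, len(p) + 1)
    )


def best_by_rule(reference: str, candidates: list[str]) -> list[str]:
    """Candidates whose measure is largest when compared component by component.

    Tuples compare lexicographically, so the first component decides and
    later components only break ties. More than one result means a tie,
    which would be resolved by a random choice.
    """
    measures = {c: dissimilarity(reference, c) for c in candidates}
    best = max(measures.values())
    return [c for c in candidates if measures[c] == best]


def integral_measure(reference: str, candidate: str) -> int:
    """Arithmetic sum of all components of the dissimilarity measure."""
    return sum(dissimilarity(reference, candidate))


reference = "00000000"  # assumed reference pattern, for illustration only
candidates = ["11110000", "00110011", "11100010", "10010101"]
print(best_by_rule(reference, candidates))
print({c: integral_measure(reference, c) for c in candidates})
```

Under these assumptions all four candidates share the same first component (4), and both the component-wise rule and the integral measure favor 10010101.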

An analysis of the data presented in Table 6 shows that as r increases, the significance of the corresponding component decreases significantly. This can be explained by the fact that for r ≥ n/2 (see Property 3), all components, except for the last, take only three possible values: 0 if the patterns are identical, and either 1 or 2 if they differ.

The given measure of dissimilarity demonstrates its effectiveness in generating controlled random tests. It enables the selection of an optimal pattern from a set of candidates that share the same Hamming distance from the previously included test pattern. However, its application is associated with the same drawback as classical approaches: significant computational cost. Most notably, it requires determining the dissimilarity measures between candidate test patterns and previously selected test patterns.

4. Controlled Random Test Generation with the Given Hamming Distance

The significant computational complexity of generating controlled random tests has led to the development of methods for constructing such tests that do not require selecting the next test pattern from a set of possible candidates. The core idea behind these methods is to use a small number of test patterns that are maximally distant from each other in terms of the Hamming distance while avoiding the computationally expensive process of candidate selection and enumeration.

As noted in previous sections, there are approaches for constructing controlled random tests with a small number of test patterns based on formal procedures that eliminate computational costs, such as MMHD(q) and OCRTs [38]. The key characteristic of such tests is the relationship between the maximum–minimum Hamming distance and the number of test patterns, q. Increasing the required minimum Hamming distance, effectively maximizing it for the generated test, results in a reduction in the number of test patterns, q. Unfortunately, a simultaneous increase in both parameters, namely the required minimum Hamming distance and the number of test patterns q, is not possible.

As an alternative to existing approaches, we propose a method based on increasing the number of test patterns q while maintaining the value of at a moderate level. The result of implementing the proposed approach is a controlled random test consisting of binary patterns , where for , and where , for , takes given values from the set . The main feature of the proposed approach is the use of a new measure of dissimilarity, (see Definition 3), introduced by the authors, which is defined for an arbitrary alphabet of test patterns. This measure allows for the estimation of the n components that quantify the dissimilarity between two arbitrary binary patterns and . Property 4 of this measure states that the components are related according to the following inequality: where . According to Definition 2, the patterns and represent the binary patterns and in a base- numerical system consisting of distinct characters.

Based on Property 4 of the new measure of dissimilarity , we formulate a statement that serves as the foundation for generating controlled random tests with a small number q of test patterns while maintaining a given value.

Statement 1. A controlled random test consisting of binary patterns, where , is the minimum value of r for which for all and has .

The limited number of test patterns, , is determined by the restricted number of characters in the alphabet, which is also equal to , in which the test patterns and are represented. Only in this case can the characters at the same positions in all q test patterns assume different values without repetition. This is the necessary condition for achieving the maximum value of the Hamming distance for all pairs of test patterns and , where . To illustrate the meaning of this statement, let us consider the following example of a controlled random test.

Example 2. In the case of , the controlled random test consisting of patterns has the following form in binary (), quaternary (), and octal () number systems (see Table 7). As can be seen from Table 7, there are no repeating characters in any digit of the quaternary and octal representations of the test patterns. This indicates that in both cases, the Hamming distance between the test patterns, according to Equation (1), takes its maximum values. Indeed, for any two patterns and in the test, , as well as . Moreover, in the quaternary case, all four characters (0, 1, 2, and 3) are used in each digit of the test patterns without repetition. Following the above statement, we can conclude that a test consisting of binary patterns with a minimum value of , for which for all , satisfies the condition . Indeed, as can be observed, and . All values of are greater than or equal to 3, which confirms that the condition stated in the statement is fulfilled.

Based on the statement, we propose a formal procedure for constructing controlled random tests with q = 2^r binary patterns and a given value of the minimum Hamming distance. The possible values of this distance depend on the number n of bits in the binary test patterns. For example, for a fixed n, the possible test configurations with a given value of the minimum Hamming distance and the number q of test patterns are presented in Table 8.

As can be seen from Table 8, the fixed value n of the test pattern bit length determines the possible values of the minimum Hamming distance for which a test can be constructed based on the statement. Naturally, the most interesting cases are those where the minimum Hamming distance attains acceptably large values, which correspond to the smallest values of r.

The algorithm for generating binary controlled random tests with a given Hamming distance consists of the steps outlined in Algorithm 1. An extension of this algorithm can involve selecting not necessarily consecutive r bits of the patterns but any arbitrary r out of n bits to specify the binary code of characters. The only limitation is the requirement to select non-overlapping blocks of r bits.

Algorithm 1. Generation of Binary Controlled Random Tests with a Given Hamming Distance

Input data: the size n of the test patterns (in bits) and the required minimum Hamming distance between any two test patterns.

- 1.

From the inequality ⌊n/r⌋ ≥ (required minimum Hamming distance), determine the largest possible value of r. Based on this condition, compute the number of test patterns as q = 2^r. The minimum Hamming distance between any two patterns will then be at least ⌊n/r⌋.

- 2.

Assign the first r bits of each test pattern to distinct binary codes selected randomly from the alphabet of 2^r possible r-bit combinations. Each code is assigned without repetition, from the first pattern to the last. As a result, each pattern contains in its first r bits a unique binary combination corresponding to one of the 2^r possible codes.

- 3.

Repeat step 2 for the next blocks of r bits. In each iteration, assign the next r bits of all test patterns (the second block, the third block, and so on) to new sets of unique binary codes of length r, again selected randomly without repetition.

- 4.

If the pattern length n is not divisible by r, i.e., n mod r ≠ 0, then assign the remaining n mod r bits randomly for all test patterns.

The described algorithm generates test patterns with a guaranteed minimum Hamming distance between any pair of test patterns. By partitioning each test pattern into independent r-bit blocks and ensuring that each block contains a unique binary code selected from a maximally distinct set, the method guarantees that the resulting test set is both compact and diverse. The final step introduces randomness in the unused bit positions, further enhancing the variability of the test without violating the distance constraint. It should be emphasized that the guaranteed minimum Hamming distance is determined solely by the disjoint allocation of unique codes in the complete r-bit blocks. When the pattern length n is not divisible by r, the remaining bits are filled by random padding. This step only affects the residual part of the patterns and does not reduce the guaranteed minimum Hamming distance between them. On the contrary, it adds additional variability to the generated tests while fully preserving the distance constraint.
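The steps of Algorithm 1 can be sketched in Python as shown below. This is an illustrative reading rather than the authors' implementation: the function names, the use of `random.Random`, and the closed form r = ⌊n/d⌋ for the largest r satisfying ⌊n/r⌋ ≥ d (d being the required minimum distance) are assumptions of the sketch.

```python
import itertools
import random
from typing import Optional


def generate_crt(n: int, min_distance: int,
                 rng: Optional[random.Random] = None) -> list[str]:
    """Generate q = 2**r binary patterns of n bits with pairwise distance >= min_distance."""
    if not 1 <= min_distance <= n:
        raise ValueError("required distance must be between 1 and n")
    rng = rng or random.Random()
    # Step 1: floor(n / r) >= d holds exactly when r <= floor(n / d).
    r = n // min_distance
    q = 2 ** r
    patterns = ["" for _ in range(q)]
    # Steps 2 and 3: every complete r-bit block receives a random permutation
    # of all 2**r codes, so no two patterns share a code within a block.
    for _ in range(n // r):
        codes = list(range(q))
        rng.shuffle(codes)
        patterns = [p + format(code, f"0{r}b") for p, code in zip(patterns, codes)]
    # Step 4: the remaining n mod r bits are filled randomly.
    tail = n % r
    return [p + "".join(rng.choice("01") for _ in range(tail)) for p in patterns]


def min_pairwise_distance(patterns: list[str]) -> int:
    """Smallest bitwise Hamming distance over all pairs of patterns."""
    return min(
        sum(a != b for a, b in zip(p, q))
        for p, q in itertools.combinations(patterns, 2)
    )


test_set = generate_crt(7, 3, random.Random(1))
print(test_set)
print(min_pairwise_distance(test_set))
```

Because any two patterns differ in at least one bit of every complete block, the pairwise distance is at least ⌊n/r⌋ regardless of the random choices. Note that q grows as 2^r, so a small required distance relative to n makes this sketch impractical.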

The computational complexity of Algorithm 1 is , where denotes the number of generated patterns and n is the pattern length. This is significantly more efficient than classical candidate–selection approaches, which usually require operations due to pairwise comparisons.

The following example demonstrates the operation of Algorithm 1 for a specific input configuration, highlighting the structure of the generated patterns and validating the achieved minimum Hamming distance.

Example 3. Let the size n of the test patterns be 7, and let the required minimum Hamming distance be 3.

- 1.

Based on the inequality ⌊7/r⌋ ≥ 3, we obtain r = 2. This is the largest value of r for which the inequality holds: ⌊7/2⌋ = 3 ≥ 3, whereas ⌊7/3⌋ = 2 < 3. Therefore, the generated test T will consist of q = 2^2 = 4 patterns, with a guaranteed minimum Hamming distance of at least 3.

- 2.

The first two bits of the test patterns are assigned binary values corresponding to four distinct characters from the quaternary alphabet: 00, 01, 10, and 11. These binary codes are assigned randomly, without repetition, from the first pattern to the fourth. As a result, each test pattern contains a unique 2-bit prefix: 10, 11, 00, and 01.

- 3.

Step 2 is repeated two more times for the next two r-bit blocks, i.e., the third and fourth bits and the fifth and sixth bits. For each block, values are assigned using random permutations of the quaternary alphabet.

- 4.

The remaining seventh bit, since 7 mod 2 = 1, is assigned randomly for all patterns.

The resulting controlled random test is presented in Table 9.

Since all values are greater than or equal to 3, the condition is fulfilled. It should be noted that the proposed algorithm was intentionally formulated in the binary domain, since it directly corresponds to the digital world at the low level of hardware implementation, where the binary alphabet is natural and fundamental. Although the theoretical framework allows for the use of higher-radix alphabets and non-binary symbols, our focus on binary patterns reflects the practical context of memory testing and built-in self-test environments. Extending the method to real non-binary alphabets remains an interesting direction for future research.

5. Experimental Investigation

This section presents a comparative analysis of the effectiveness of two types of tests: controlled random tests with a given Hamming distance (CRTs), generated using the proposed algorithm, and standard random patterns. The comparison is conducted in the context of their ability to detect multicell faults, particularly Pattern-Sensitive Faults (PSFs) occurring in RAM. Due to the size of the test patterns and the vast number of their permutations, the comparisons are based on the average values obtained from the generated test collections.

The first test collection consists of patterns generated using the proposed algorithm, based on the controlled random test generation method described earlier. Using this approach, a controlled random test of length 1024 bits was generated for a given value of the minimum Hamming distance. For these input parameters, the value of r was determined to be 4, resulting in the generation of 2^4 = 16 patterns per test. The average value of the metric (Equation (5)) for these patterns is 287,092, with a standard deviation of 34.54.

In contrast, the second test collection consists of 16 purely random patterns of the same size. The average value of the same metric for these test sets is 273,815, with a standard deviation of 871.

The basic statistical parameters of the generated test collections are summarized in Table 10.

These parameters indicate that the generated test collections are statistically reliable, as evidenced by low relative errors and consistent coefficient of variation (CV) values.

Similar test collections of 1024-bit size and comparable statistical parameters were generated for r = 2, 3, and 5 and will be used in further analyses.

Table 11 compares the detailed results for the individual components of the measure and for the overall metric.

The results presented in Table 11 highlight the comparative performance of the analyzed CRT and standard random tests across the individual components and the overall metric. On average, the CRT outperforms random tests across all tested components, with percentage differences ranging from 0.42% to 7.27%. The highest difference (7.27%) was observed for the component that aligns with the parameter r used in generating the CRTs. This correlation underscores the effectiveness of the proposed algorithm in targeting specific test conditions based on the selected r parameter. Although the percentage differences in Table 11 may appear moderate, they are systematic across all evaluated parameters. More importantly, the subsequent experiments (Table 12 and Figure 1) confirm that these differences translate into noticeable improvements in memory fault coverage.

In the next set of experiments, conducted in a simulation environment, the focus was on evaluating the effectiveness of test patterns generated using the proposed algorithm in detecting multicell RAM faults. Multicell memory faults, such as Pattern-Sensitive Faults (PSFs), involve dependencies between any k out of N memory cells (N being the memory size). These faults are triggered when specific binary patterns are present in the related cells or when particular transitions occur based on predefined conditions. Consequently, effective detection of such faults requires generating the largest possible number of binary patterns during testing. These patterns activate the faults and enable their detection.

The simulations analyzed groups consisting of k memory cells for several values of k. For each group, up to 2^k distinct k-bit binary patterns (i.e., values ranging from 0 to 2^k − 1) could potentially appear. The objective was to determine the average number of unique k-bit patterns generated during a march test, with the memory being initialized in each iteration using test patterns from the CRT with a given Hamming distance. The obtained results were compared with the results for random tests presented in Table 8.12 in [38].

Each simulation-based test consisted of a specific number of iterations, determined by the value of r: 4 iterations for r = 2, 8 iterations for r = 3, and 16 iterations for r = 4. During each iteration, the simulated memory was initialized with a given test pattern from the analyzed set, followed by the execution of a transparent version of the MATS+ memory test. Throughout the simulation, the memory model was monitored, and the number of unique k-bit binary patterns observed in individual groups of k cells was recorded. The results are presented in Table 12.
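The quantity recorded in these simulations, the share of the 2^k possible k-bit patterns that actually appear in a group of k cells, can be sketched as follows. This is a deliberately simplified illustration: it counts only the values placed in the cells by the initializing test patterns and does not model the transparent MATS+ march test used in the experiments. The function names, the four sample patterns, and the chosen cell indices are hypothetical.

```python
def observed_patterns(tests: list[str], cells: tuple[int, ...]) -> set[str]:
    """Distinct k-bit values seen in the selected cells across all test patterns."""
    return {"".join(pattern[c] for c in cells) for pattern in tests}


def coverage(tests: list[str], cells: tuple[int, ...]) -> float:
    """Fraction of the 2**k possible k-bit patterns observed in a group of k cells."""
    return len(observed_patterns(tests, cells)) / 2 ** len(cells)


tests = ["1000", "0111", "0010", "1101"]   # hypothetical memory contents, one per iteration
print(coverage(tests, (0, 1)))             # 1.0: all four 2-bit values appear
print(coverage(tests, (0, 1, 2)))          # 0.5: four of the eight 3-bit values appear
```

With q initializing patterns, at most q distinct values can appear in any group from initialization alone, which is one reason the march test operations matter in the full simulation.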

Based on the results presented in Table 12, it can be concluded that CRTs achieve better results than random tests in most cases. The difference is most noticeable for lower values of r and k, where the CRT outperforms random tests by several percentage points. For instance, in one of these configurations, the CRT achieves a fault coverage of 97.27%, while random tests reach 93.74%. Fault coverage decreases as the value of k increases. This is expected, since the number of possible binary combinations, 2^k, grows exponentially, making full coverage harder to achieve. However, the results in Table 12 indicate that CRTs perform slightly better for larger k compared to random tests, highlighting the greater ability of a CRT to generate diverse test patterns.

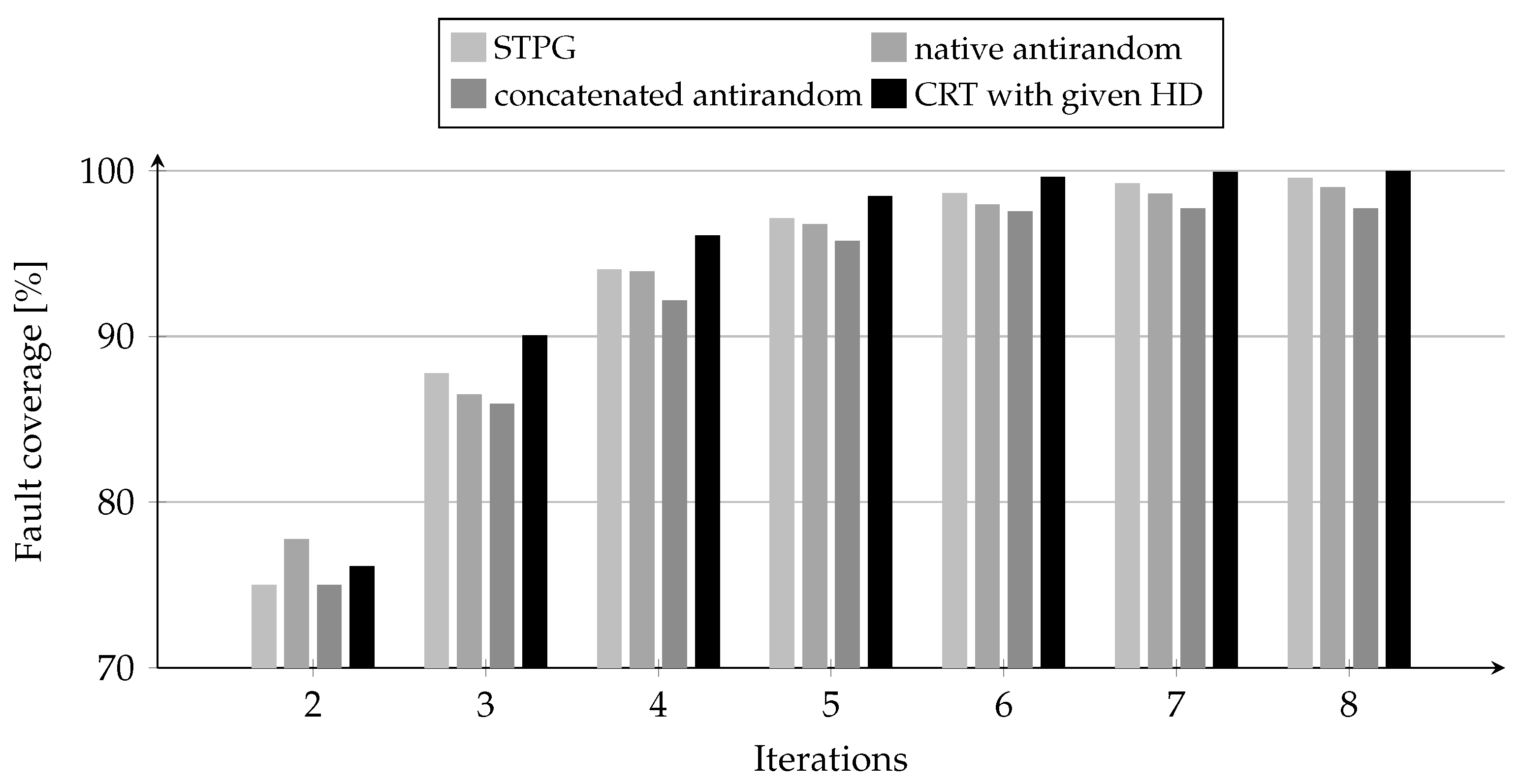

In the final experiment, the average number of unique k-bit test patterns generated in arbitrary groups of k out of N memory cells using the proposed algorithm was compared with that obtained using traditional CRT generation methods, including native antirandom tests [13], concatenated antirandom tests [13], and STPG [40]. The comparison was carried out for fault groups of a fixed size k, using tests generated for r = 3 (i.e., 8 iterations). During the simulation, the number of distinct k-bit patterns generated in each iteration was recorded to assess the performance of the proposed method relative to the standard techniques. The outcomes of this analysis are presented in Figure 1, which illustrates the differences in the number of generated k-bit patterns across the tested methods.

The results show that the CRT method with a given Hamming distance consistently outperforms other test generation methods in terms of fault coverage, with one exception in the second iteration, where it achieves a slightly lower result (76.13%) compared to native antirandom (77.77%). However, starting from the third iteration, the CRT surpasses all other methods, demonstrating a faster increase in fault coverage (e.g., between iterations 2 and 3, the CRT rises from 76.13% to 90.05%). In the later iterations (7 and 8), the CRT approaches near-complete fault coverage, reaching 99.92% and 99.99%, respectively. Although the differences between the CRT and other methods diminish with a higher number of iterations, the CRT consistently demonstrates superior effectiveness, confirming its ability to generate diverse and efficient test patterns even in advanced stages of testing.

In summary, the experimental evaluation demonstrates that the proposed method consistently provides superior results compared to both purely random tests and classical controlled random tests. The improvements are systematic across all examined cases, particularly in terms of fault coverage and test diversity, while being achieved with significantly reduced computational effort.