Improved YOLOv8s-Based Detection for Lifting Hooks and Safety Latches

Abstract

1. Introduction

- (1)

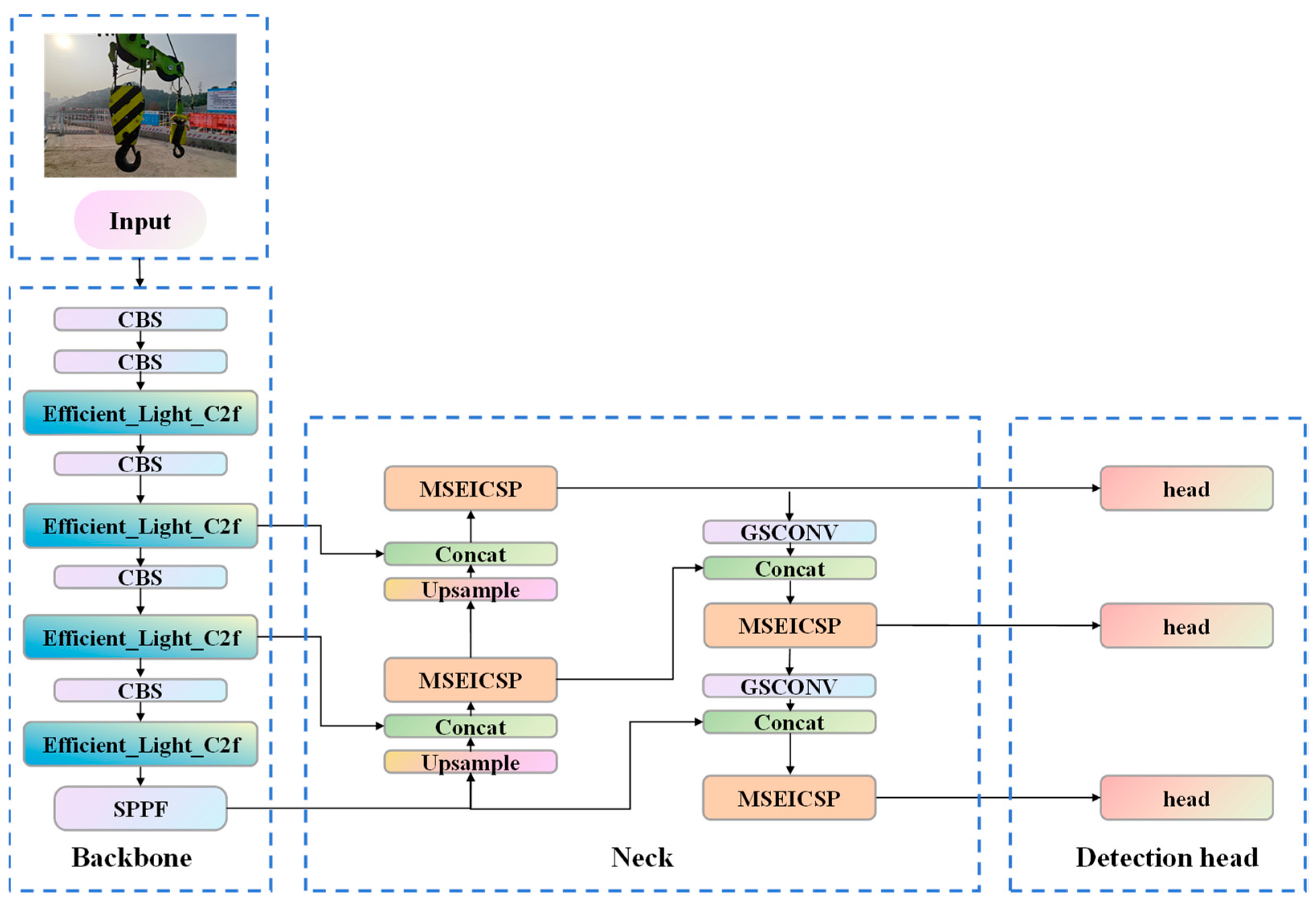

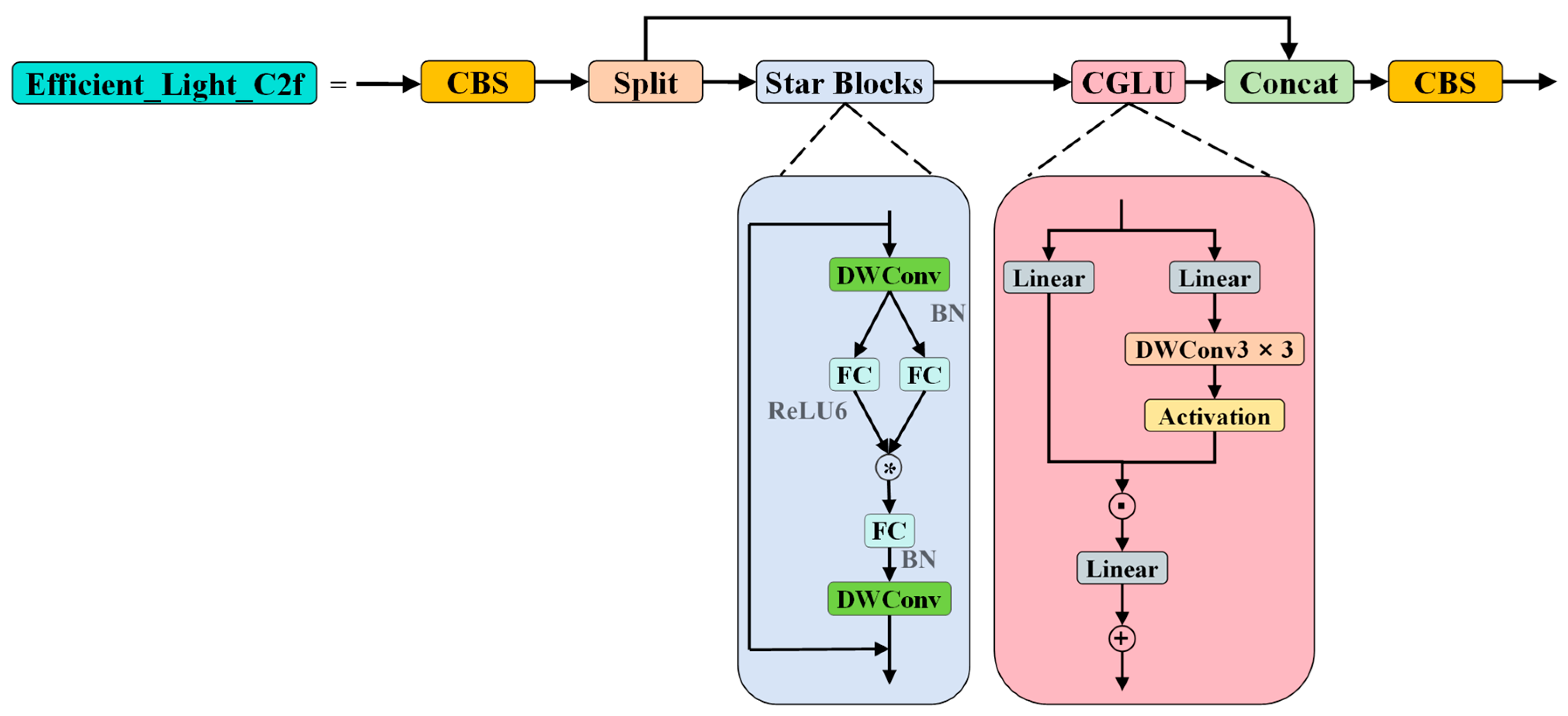

- Efficient_Light_C2f module: We integrate the lightweight Star Block and the CGLU with the C2f module, thereby proposing the Efficient_Light_C2f module. This design leverages the lightweight characteristics of the Star Block and the channel attention mechanism of CGLU, enabling the network to maintain low computational cost while enhancing feature extraction capability. Consequently, it improves the model’s robustness and adaptability to complex or variant inputs.

- (2)

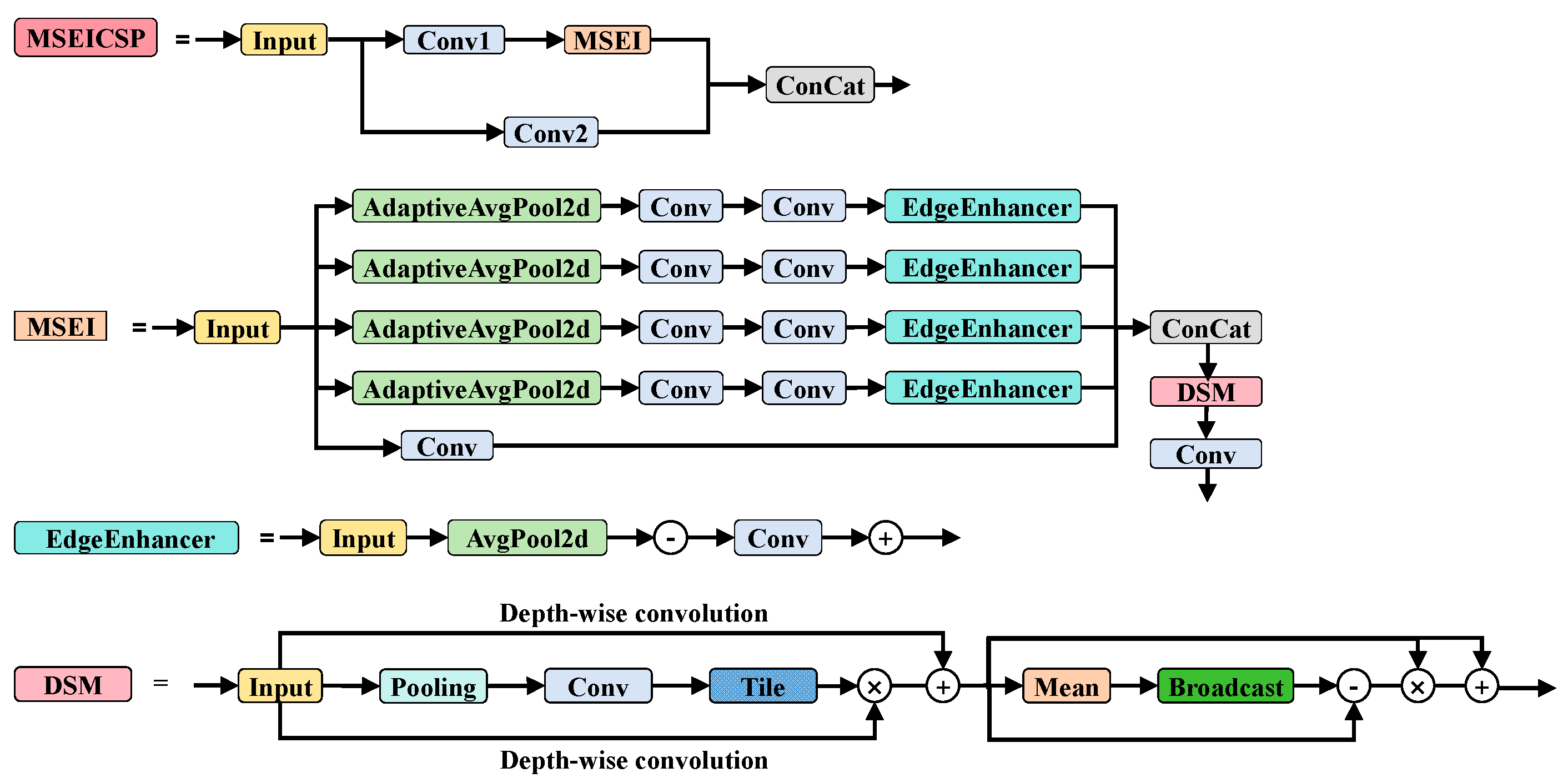

- MSEICSP module: We develop the MSEICSP module, which integrates multiple Multi-scale Efficient (MSEI) units to achieve integrated multi-scale feature acquisition, reinforced delineation of edge-associated cues, and streamlined yet effective feature amalgamation. By capitalizing on these proficiencies, the MSEICSP module significantly augments the network’s representational richness and elevates its overall operational efficacy.

- (3) HOOK_IoU loss function: We propose a loss function, HOOK_IoU, that improves the model's ability to detect objects in complex scenes and to recognize hard targets. It measures overlap accurately for small objects and edge-localized targets, and it adapts well to targets with complex or irregular shapes. It is therefore well suited to fine-grained detection tasks with imbalanced categories, such as monitoring the operating state of hooks and safety latches.

2. Related Work

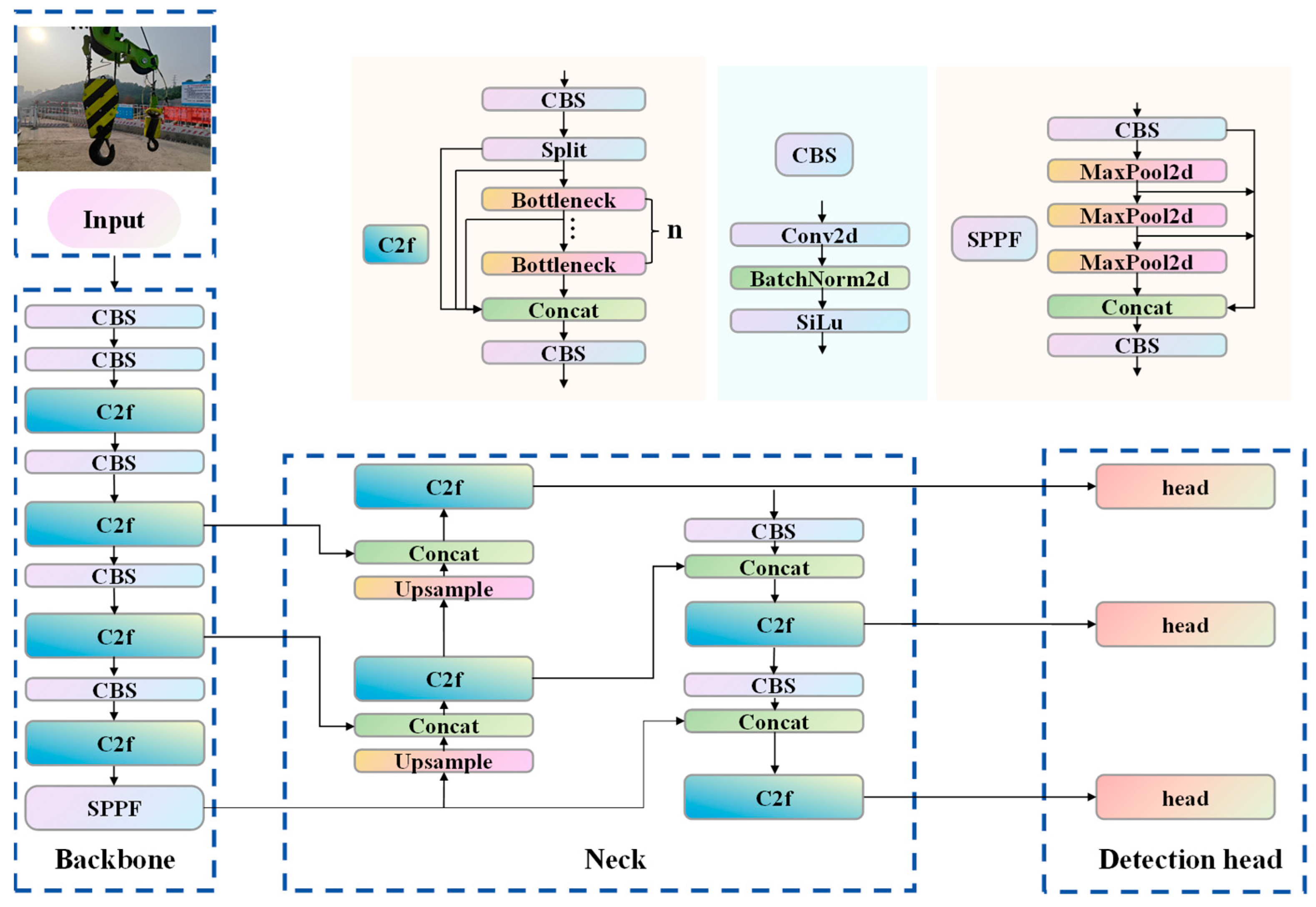

3. YOLOv8

4. Proposed Modifications to the YOLOv8s Model

4.1. Efficient_Light_C2f
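Efficient_Light_C2f builds on YOLOv8's C2f block. As background, the Ultralytics C2f module widens its input with a 1×1 convolution (cv1), splits the result into two halves, feeds the last half through a chain of n blocks while keeping every intermediate output, concatenates all (2 + n) branches, and fuses them with a second 1×1 convolution (cv2). The sketch below reproduces only that data flow at a single spatial position, using plain Python lists and caller-supplied functions in place of real convolutions. Which blocks Efficient_Light_C2f places inside the chain is not specified in this section, so `blocks` is left as a parameter.

```python
from typing import Callable, List

Vector = List[float]  # channel values at one spatial position


def chunk2(x: Vector) -> List[Vector]:
    """Split a channel vector into two equal halves (like torch.chunk(x, 2, dim=1))."""
    half = len(x) // 2
    return [x[:half], x[half:]]


def c2f_forward(
    x: Vector,
    cv1: Callable[[Vector], Vector],
    blocks: List[Callable[[Vector], Vector]],
    cv2: Callable[[Vector], Vector],
) -> Vector:
    """C2f-style data flow: widen, split, chain blocks, concatenate all branches, fuse."""
    y = chunk2(cv1(x))            # cv1 yields 2*c channels, split into two c-channel halves
    for block in blocks:
        y.append(block(y[-1]))    # each block refines the most recent branch
    concat = [v for branch in y for v in branch]  # (2 + n) * c channels in total
    return cv2(concat)            # cv2 fuses them back to the output width


# Toy usage: cv1 duplicates channels, each block doubles values, cv2 is the identity.
out = c2f_forward([1.0, 2.0], lambda v: v + v, [lambda v: [2 * a for a in v]] * 2, lambda v: v)
print(out)
```

Keeping every intermediate branch in the concatenation is what gives C2f its rich gradient flow; a lightweight variant can swap the chain's blocks without changing this outer structure.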

4.1.1. Star Blocks
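The Star Block comes from StarNet (Ma et al., "Rewrite the Stars", CVPR 2024). Its key idea is the "star" operation: two linear projections of the same input are fused by element-wise multiplication rather than addition, which the StarNet authors argue implicitly maps features into a much higher-dimensional nonlinear space at little extra cost. The sketch below shows only the position-wise core of a StarNet-style block (ReLU6 on one branch, element-wise product, projection back to the input width, residual add) on a single channel vector. The depthwise convolutions that surround this core in StarNet are omitted, and the weights are hand-picked toy values, not parameters from YOLO-HOOK.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Dense 1x1 projection: one output channel per row of w."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu6(v: float) -> float:
    return min(max(v, 0.0), 6.0)


def star_block(x: Vector, w1: Matrix, w2: Matrix, wg: Matrix) -> Vector:
    """Position-wise part of a StarNet-style block (depthwise convolutions omitted).

    Star operation: relu6(W1 x) * (W2 x), element-wise.
    wg projects the product back to the input width for the residual add.
    """
    a = [relu6(v) for v in matvec(w1, x)]     # activated branch
    b = matvec(w2, x)                          # linear branch
    star = [ai * bi for ai, bi in zip(a, b)]   # element-wise ("star") product
    out = matvec(wg, star)
    return [xi + oi for xi, oi in zip(x, out)]  # residual connection


x = [1.0, 2.0]
w1 = [[1, 0], [0, 1], [1, 1]]    # expand 2 -> 3 channels
w2 = [[1, 1], [1, -1], [0, 1]]
wg = [[1, 0, 0], [0, 0, 1]]      # project 3 -> 2 channels
print(star_block(x, w1, w2, wg))
```

Because each output of the product mixes pairs of input channels, even a narrow hidden width yields second-order feature interactions, which is why the block stays cheap.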

4.1.2. CGLU
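CGLU (Convolutional GLU) was introduced with TransNeXt (Shi, CVPR 2024). Like a standard gated linear unit, it projects the input into two branches and multiplies them element-wise, but the gating branch first passes through a 3×3 depthwise convolution and a GELU, so each position's gate depends on its neighbours; this is what gives CGLU its gated channel-attention behaviour. For readability, the sketch below assumes a 1-D sequence of positions with a size-3 kernel in place of a 2-D feature map, omits dropout, and uses hand-written toy weights.

```python
import math
from typing import List

Matrix = List[List[float]]
Seq = List[List[float]]  # sequence of positions, each a channel vector


def linear(w: Matrix, x: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, x)) for row in w]


def gelu(v: float) -> float:
    """Exact (erf-based) GELU."""
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))


def depthwise_conv1d(seq: Seq, kernels: List[List[float]]) -> Seq:
    """Per-channel convolution, kernel size 3, zero padding (stand-in for a 3x3 DWConv)."""
    n, out = len(seq), []
    for i in range(n):
        row = []
        for c, k in enumerate(kernels):
            acc = 0.0
            for offset, weight in zip((-1, 0, 1), k):
                j = i + offset
                if 0 <= j < n:
                    acc += weight * seq[j][c]
            row.append(acc)
        out.append(row)
    return out


def conv_glu(seq: Seq, w_in: Matrix, kernels: List[List[float]], w_out: Matrix) -> Seq:
    """Convolutional GLU: fc1 -> split (gate, value) -> GELU(DWConv(gate)) * value -> fc2."""
    hidden = len(kernels)
    projected = [linear(w_in, p) for p in seq]   # 2 * hidden channels per position
    gate_in = [p[:hidden] for p in projected]    # gating branch
    value = [p[hidden:] for p in projected]      # value branch
    gate = [[gelu(a) for a in p] for p in depthwise_conv1d(gate_in, kernels)]
    gated = [[g * v for g, v in zip(gp, vp)] for gp, vp in zip(gate, value)]
    return [linear(w_out, p) for p in gated]


seq = [[1.0], [2.0], [3.0]]     # three positions, one channel
w_in = [[1.0], [1.0]]           # hidden = 1: gate and value both copy the input
kernels = [[0.0, 1.0, 0.0]]     # identity kernel, so the gate sees only its own position
w_out = [[1.0]]
out = conv_glu(seq, w_in, kernels, w_out)
```

With a non-identity kernel, a position's gate would also reflect its neighbours, which a plain GLU cannot do.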

4.2. MSEICSP

- (a) EdgeEnhancer Module

- (b) DSM Module
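This section names the EdgeEnhancer without giving its internals. One common way to build such a module, assumed here rather than taken from the source, treats the difference between a feature map and its 3×3 local average as a high-frequency (edge) signal, passes it through a learned 1×1 projection and a sigmoid, and adds the result back to the input. The sketch uses a single-channel grid, and the scalar `weight` and `bias` are hypothetical stand-ins for the learned projection.

```python
import math
from typing import List

Grid = List[List[float]]


def avg_pool3x3(img: Grid) -> Grid:
    """3x3 average pooling, stride 1, zero padding 1, always dividing by 9."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            total = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w:
                        total += img[rr][cc]
            row.append(total / 9.0)
        out.append(row)
    return out


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def edge_enhance(img: Grid, weight: float = 1.0, bias: float = 0.0) -> Grid:
    """x + sigmoid(weight * (x - AvgPool(x)) + bias).

    weight and bias are hypothetical stand-ins for a learned 1x1 conv.
    Zero padding makes image borders register as edges too.
    """
    smooth = avg_pool3x3(img)
    return [
        [v + sigmoid(weight * (v - s) + bias) for v, s in zip(vr, sr)]
        for vr, sr in zip(img, smooth)
    ]


flat = [[1.0] * 3 for _ in range(3)]
out = edge_enhance(flat)
print(out[1][1], out[0][0])  # centre has no edge response; the corner does
```

Subtracting a local average is a cheap high-pass filter, so this kind of module emphasises boundaries, such as the thin outline of a safety latch, without adding many parameters.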

4.3. HOOK_IoU Loss Function
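The formulation of HOOK_IoU is not given in this section, so it is not reproduced here. For reference, the baseline it replaces is YOLOv8's default box-regression term: Ultralytics YOLOv8 computes its IoU loss as 1 − CIoU, alongside a distribution focal loss. The sketch below implements the standard CIoU definition, namely IoU minus a normalised centre-distance penalty ρ²/c² minus an aspect-ratio term αv, for boxes in (x1, y1, x2, y2) format. It is the baseline only, not the authors' HOOK_IoU.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def ciou_loss(pred: Box, gt: Box, eps: float = 1e-7) -> float:
    """1 - CIoU for two axis-aligned boxes."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Plain IoU
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # rho^2 / c^2: squared centre distance over squared diagonal of the enclosing box
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps
    rho2 = ((px1 + px2 - gx1 - gx2) ** 2 + (py1 + py2 - gy1 - gy2) ** 2) / 4.0

    # Aspect-ratio consistency term v and its trade-off weight alpha
    v = (4.0 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (v - iou + 1.0 + eps)

    return 1.0 - (iou - rho2 / c2 - alpha * v)


print(ciou_loss((0, 0, 10, 10), (5, 0, 15, 10)))  # half-shifted box
```

Unlike plain 1 − IoU, this loss still provides a gradient when boxes do not overlap, because the centre-distance term keeps growing as the prediction moves away.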

5. Results and Discussion

5.1. Quantitative Criteria for Assessing Model Efficacy
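The tables report precision (P), recall (R), mAP@0.5 and mAP@0.5:0.95, which follow the usual detection definitions: P = TP / (TP + FP) and R = TP / (TP + FN), where a detection is a true positive when its IoU with a not-yet-matched ground-truth box of the same class reaches the threshold. AP is the area under the precision-recall curve; mAP@0.5 averages AP over classes at IoU 0.5, and mAP@0.5:0.95 further averages over the ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below uses all-point interpolation for AP, which is an assumption: the Ultralytics evaluator uses a 101-point interpolated variant, so its values can differ slightly.

```python
from typing import List, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """P = TP / (TP + FP), R = TP / (TP + FN); 0.0 when a denominator is zero."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(is_tp: List[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class at one IoU threshold.

    is_tp holds the match result of each detection, sorted by descending confidence.
    """
    tp = fp = 0
    recalls, precisions = [0.0], [1.0]        # sentinel at recall 0
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    recalls.append(1.0)
    precisions.append(0.0)                    # sentinel beyond the last detection

    # Precision envelope: make precision non-increasing as recall grows
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum rectangle areas; steps where recall does not change contribute zero
    return sum(
        (recalls[i + 1] - recalls[i]) * precisions[i + 1]
        for i in range(len(recalls) - 1)
    )


print(average_precision([True, True, False, True], num_gt=4))
```

For example, with four ground-truth objects and detections ranked hit, hit, miss, hit, recall reaches 0.75 and the interpolated AP is 0.25 + 0.25 + 0.25 × 0.75 = 0.6875.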

5.2. Experimental Setup

5.2.1. Experimental Platform and Parameter Settings

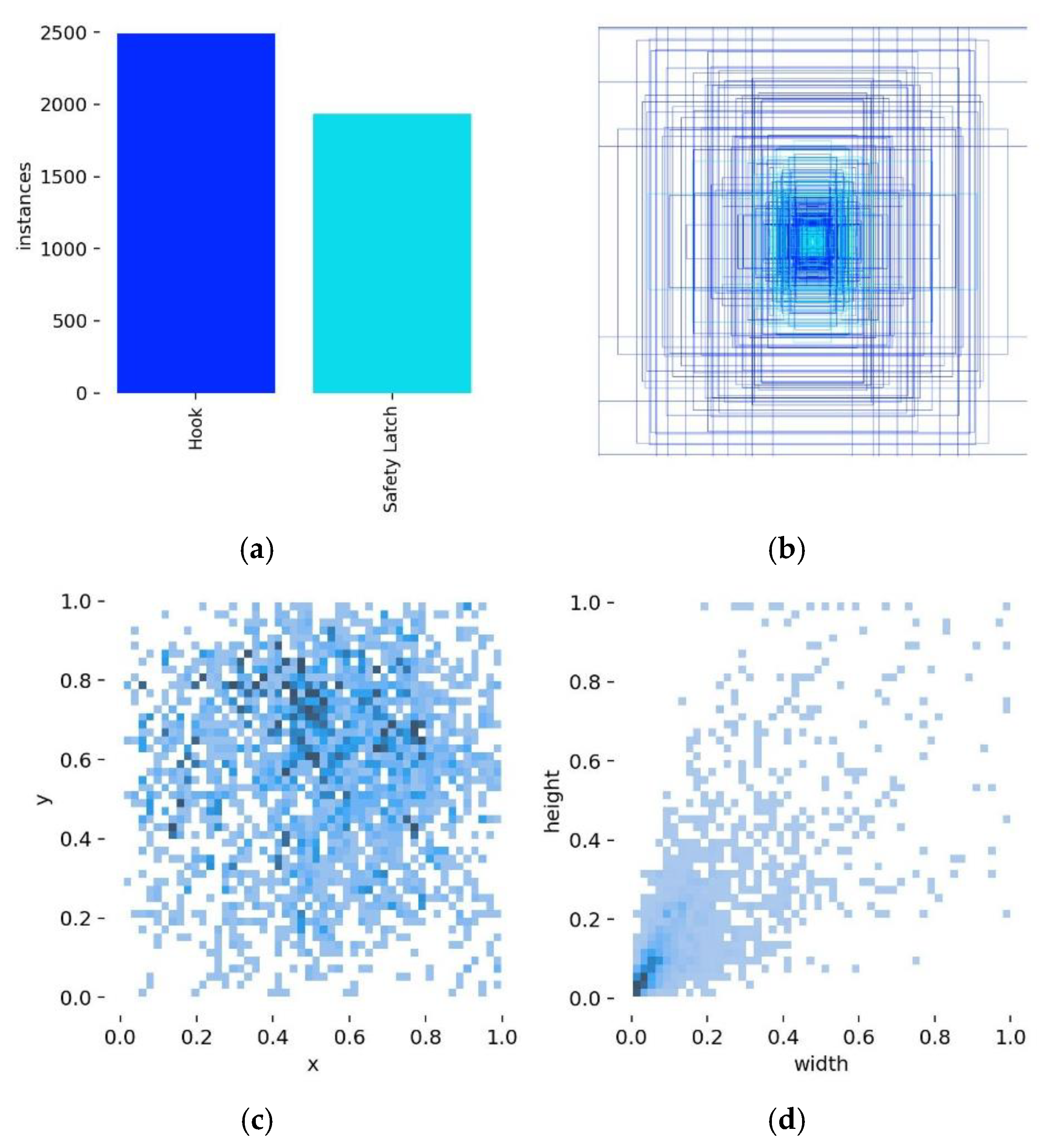

5.2.2. Dataset Preparation

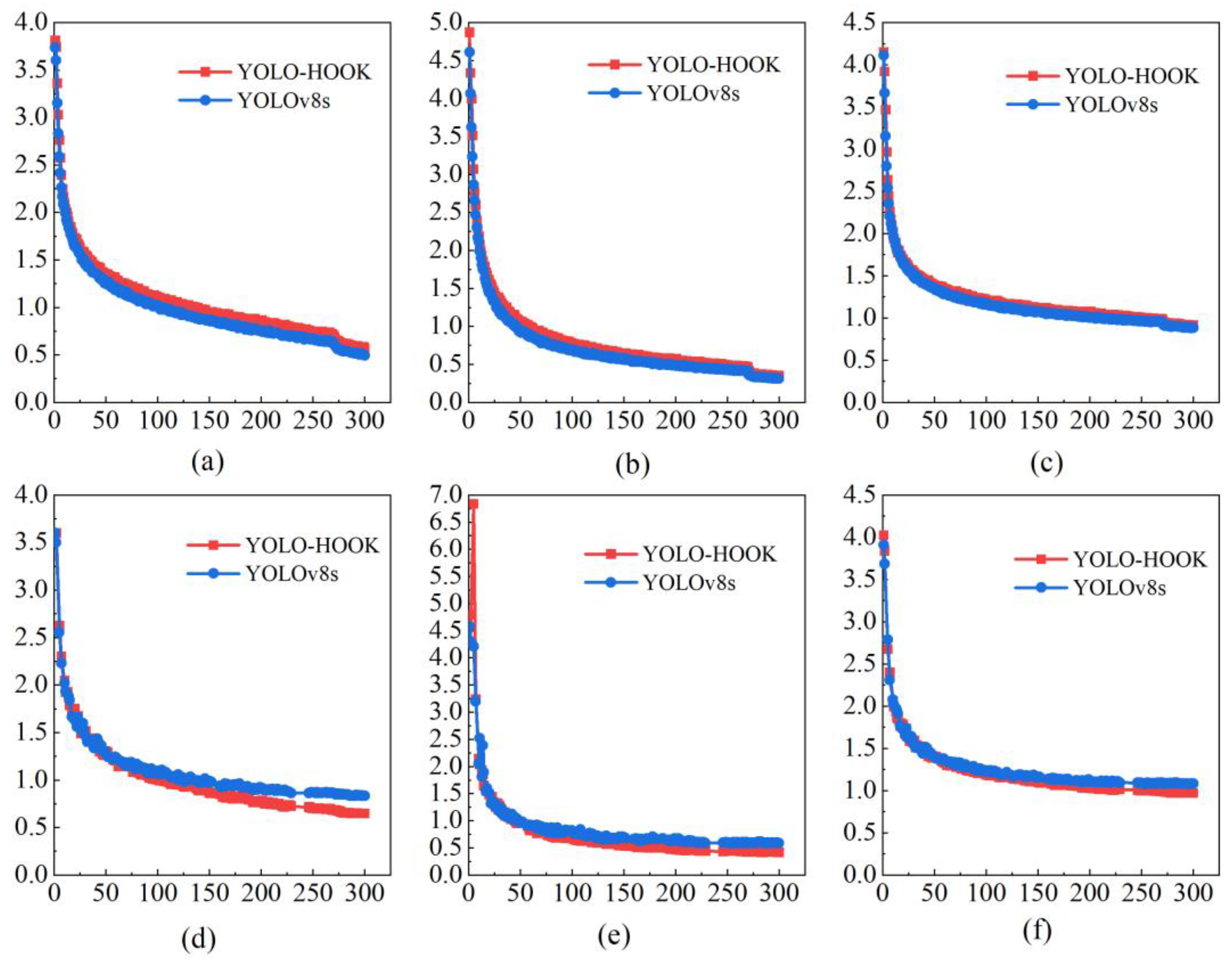

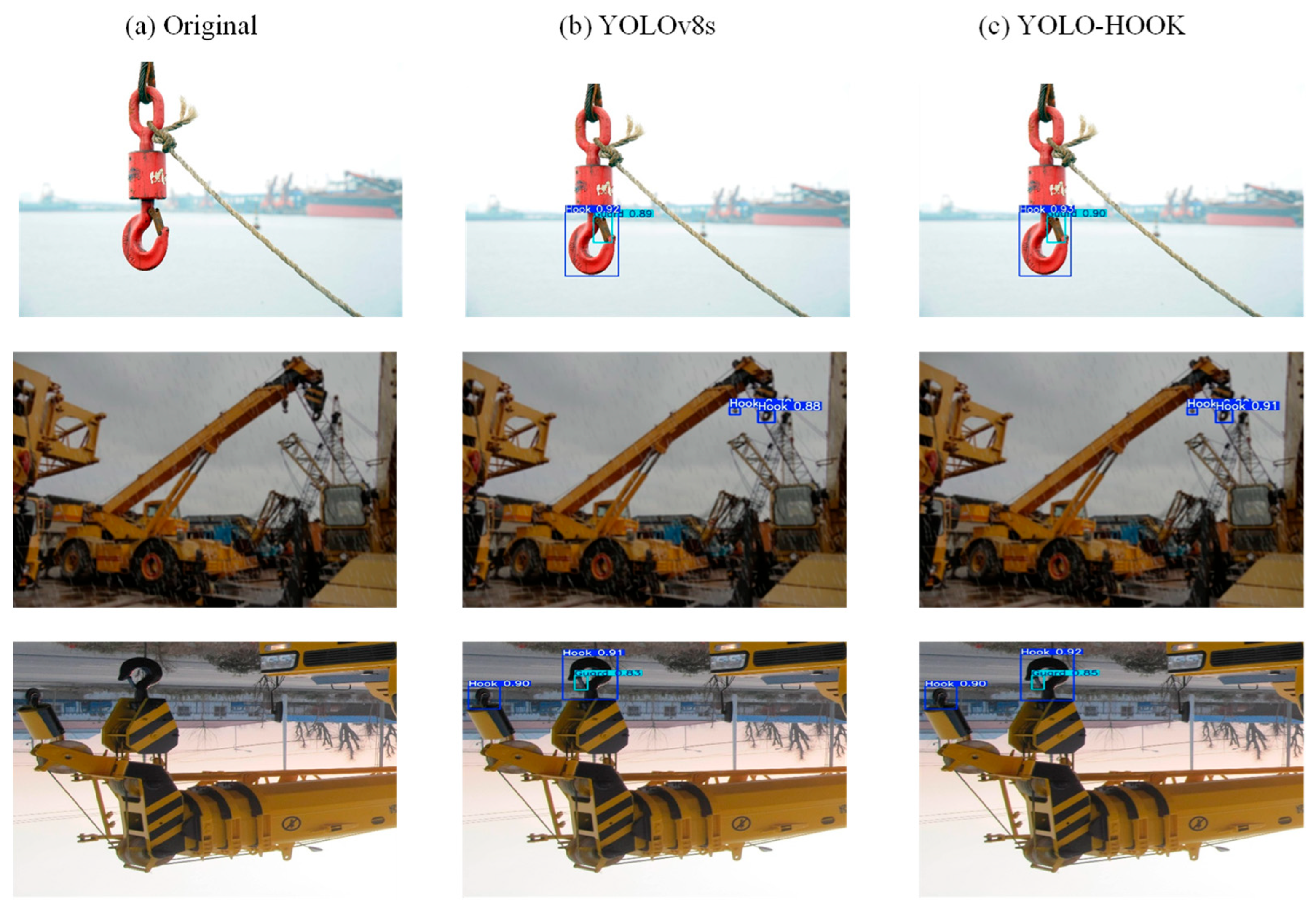

5.3. Experimental Comparison Before and After Model Improvement

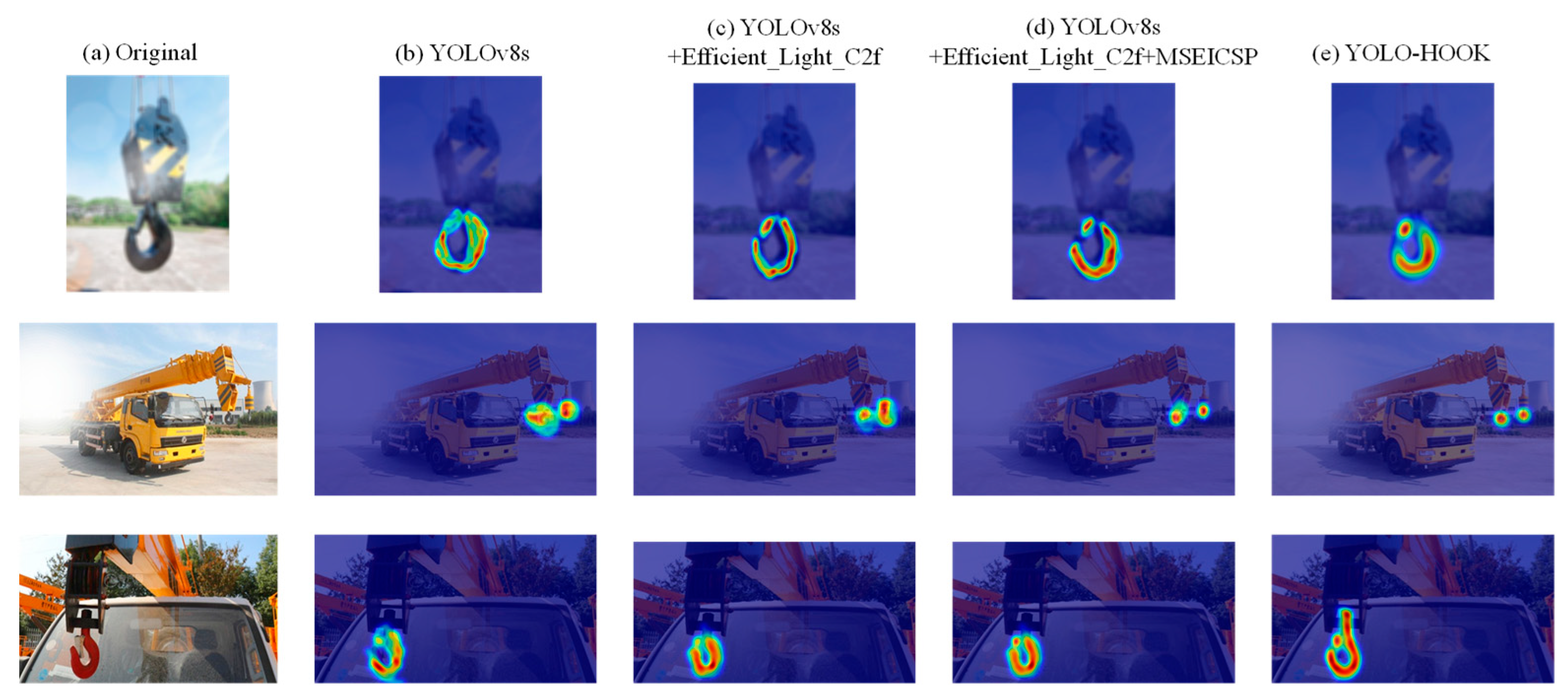

5.4. Comparison of Ablation Study Results

5.5. Performance Comparison of Different Loss Functions

5.6. Comparison of Different Object Detection Models

5.7. Comparison of the Effectiveness and Generalizability of the Improved Module

5.8. Limitations of the Methodology Used in the Current Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Image Size (pixels) | 640 × 640 |
| Batch Size | 32 |
| Initial Learning Rate | 0.01 |
| Optimizer | SGD |
| Momentum | 0.937 |
| Weight Decay | 5 × 10⁻⁴ |
| Epochs | 300 |
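These settings map directly onto training arguments of the Ultralytics package that provides YOLOv8. The dictionary below is a sketch under that assumption: the argument names (`imgsz`, `batch`, `lr0`, `optimizer`, `momentum`, `weight_decay`, `epochs`) are Ultralytics' own, while the dataset file `hooks.yaml` is hypothetical because the source does not name one.

```python
# Training settings from the table, keyed by Ultralytics train() argument names.
train_args = {
    "data": "hooks.yaml",   # hypothetical dataset config; not given in the source
    "imgsz": 640,           # input size 640 x 640
    "batch": 32,
    "lr0": 0.01,            # initial learning rate
    "optimizer": "SGD",     # set explicitly; the Ultralytics default is "auto"
    "momentum": 0.937,
    "weight_decay": 5e-4,
    "epochs": 300,
}
```

With the package installed, these would be passed as `YOLO("yolov8s.pt").train(**train_args)`. The source does not say whether training started from pretrained weights, so `YOLO("yolov8s.yaml")` (training from scratch) is equally plausible.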

| Model | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) | GFLOPs | FPS (batch size 32) |
|---|---|---|---|---|---|---|---|
| YOLOv8s | 94.3 | 79.6 | 85.8 | 66.2 | 11.13 | 28.4 | 300 |
| YOLO-HOOK | 97.0 | 83.4 | 90.4 | 71.6 | 9.60 | 31.0 | 310 |
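The headline differences in the table can be restated as absolute gains for the accuracy metrics and relative changes for model cost and speed. The values below are copied from the table; nothing else is assumed.

```python
# Values copied from the YOLOv8s vs. YOLO-HOOK table.
BASELINE = {"mAP@0.5": 85.8, "mAP@0.5:0.95": 66.2, "params_M": 11.13, "GFLOPs": 28.4, "FPS": 300}
YOLO_HOOK = {"mAP@0.5": 90.4, "mAP@0.5:0.95": 71.6, "params_M": 9.60, "GFLOPs": 31.0, "FPS": 310}


def point_gain(metric: str) -> float:
    """Absolute change in percentage points (for metrics already given in %)."""
    return round(YOLO_HOOK[metric] - BASELINE[metric], 2)


def relative_change(metric: str) -> float:
    """Relative change of YOLO-HOOK versus YOLOv8s, in percent."""
    return round(100.0 * (YOLO_HOOK[metric] - BASELINE[metric]) / BASELINE[metric], 1)


for m in ("mAP@0.5", "mAP@0.5:0.95"):
    print(f"{m}: {point_gain(m):+} points")
for m in ("params_M", "GFLOPs", "FPS"):
    print(f"{m}: {relative_change(m):+}%")
```

In other words, YOLO-HOOK gains 4.6 points of mAP@0.5 and 5.4 points of mAP@0.5:0.95 with about 13.7% fewer parameters, at the cost of roughly 9.2% more GFLOPs, while measured throughput rises by about 3.3%.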

| Model | E_Loc (%) | E_Dupe (%) | E_Bkg (%) | E_Miss (%) |
|---|---|---|---|---|
| YOLOv8s | 4.94 | 0.37 | 1.36 | 6.48 |
| YOLO-HOOK | 3.36 | 0.16 | 0.57 | 3.53 |

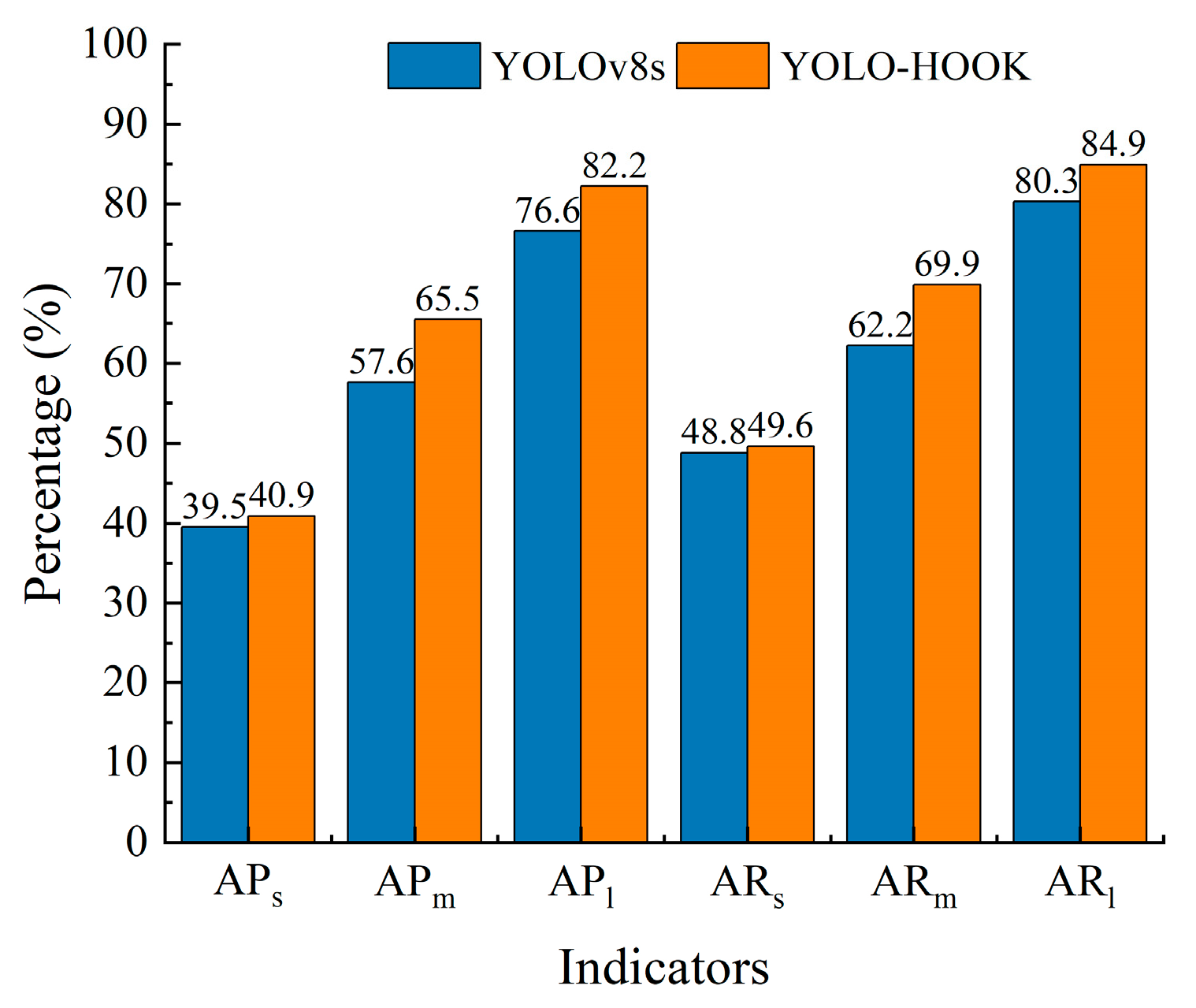

| Model | AP_S (%) | AP_M (%) | AP_L (%) | AR_S (%) | AR_M (%) | AR_L (%) |
|---|---|---|---|---|---|---|
| YOLOv8s | 39.5 | 57.6 | 76.6 | 48.8 | 62.2 | 80.3 |
| YOLO-HOOK | 40.9 | 65.5 | 82.2 | 49.6 | 69.9 | 84.9 |
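AP_S, AP_M and AP_L (and the matching AR columns) split the evaluation by object size. Assuming the table follows the standard COCO convention, objects are bucketed by pixel area: small below 32², medium from 32² up to 96², and large from 96² upward. A minimal helper that applies those thresholds to a box in (x1, y1, x2, y2) pixel coordinates:

```python
from typing import Tuple

SMALL_MAX = 32 ** 2   # COCO: small objects have area < 32^2 pixels
MEDIUM_MAX = 96 ** 2  # medium: 32^2 <= area < 96^2; large: area >= 96^2


def size_bucket(box: Tuple[float, float, float, float]) -> str:
    """Return the COCO size bucket ("small", "medium" or "large") of a box."""
    x1, y1, x2, y2 = box
    area = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    if area < SMALL_MAX:
        return "small"
    if area < MEDIUM_MAX:
        return "medium"
    return "large"


print(size_bucket((0, 0, 20, 20)), size_bucket((0, 0, 50, 50)), size_bucket((0, 0, 100, 100)))
```

Under this convention, a distant hook or latch only a few dozen pixels wide falls into the small bucket, which is where the table shows the smallest gain (39.5% to 40.9% AP_S).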

| Model | A | B | C | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) | GFLOPs | FPS (batch size 32) |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv8s | × | × | × | 94.3 | 79.6 | 85.8 | 66.2 | 11.13 | 28.4 | 300 |
| YOLOv8s_01 | √ | × | × | 98.5 | 82.0 | 89.0 | 69.3 | 10.16 | 25.2 | 347 |
| YOLOv8s_02 | × | √ | × | 92.4 | 82.7 | 88.0 | 67.3 | 10.28 | 32.8 | 275 |
| YOLOv8s_03 | × | × | √ | 94.9 | 80.3 | 86.4 | 67.9 | 11.13 | 28.4 | 280 |
| YOLOv8s_04 | √ | √ | × | 94.4 | 85.7 | 89.8 | 71.2 | 9.60 | 31.0 | 330 |
| YOLOv8s_05 | √ | × | √ | 95.9 | 82.8 | 89.5 | 70.7 | 10.16 | 25.2 | 320 |
| YOLOv8s_06 | × | √ | √ | 93.0 | 84.5 | 88.7 | 69.0 | 10.28 | 32.8 | 258 |
| YOLO-HOOK | √ | √ | √ | 97.0 | 83.4 | 90.4 | 71.6 | 9.60 | 31.0 | 310 |

| Model | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|
| YOLOv8s | 94.3 | 79.6 | 85.8 | 66.2 | 11.13 | 28.4 |
| YOLOv8s+EIoU | 92.3 | 79.2 | 85.7 | 62.4 | 11.13 | 28.4 |
| YOLOv8s+Wise-IoU | 96.5 | 78.0 | 86.2 | 63.9 | 11.13 | 28.4 |
| YOLOv8s+SIoU | 92.5 | 77.6 | 85.1 | 62.9 | 11.13 | 28.4 |
| YOLOv8s+HOOK_IoU | 94.9 | 80.3 | 86.4 | 67.9 | 11.13 | 28.4 |

| Model | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) | GFLOPs | FPS (batch size 32) |
|---|---|---|---|---|---|---|---|
| Faster R-CNN | 83.2 | 70.8 | 77.5 | 56.3 | 41.2 | 200.1 | 60 |
| Dynamic R-CNN | 89.5 | 77.0 | 82.5 | 62.1 | 40.8 | 1988.3 | 65 |
| YOLOv5s | 93.8 | 77.8 | 85.2 | 64.5 | 9.11 | 23.8 | 350 |
| YOLOv6s | 95.6 | 76.8 | 84.5 | 64.3 | 16.30 | 44.0 | 274 |
| YOLOv9s | 89.9 | 74.5 | 82.3 | 59.7 | 7.17 | 26.7 | 206 |
| YOLOv10s | 93.1 | 71.7 | 83.0 | 60.9 | 7.21 | 21.4 | 265 |
| YOLOv11s | 92.9 | 69.5 | 81.4 | 58.7 | 9.41 | 21.3 | 290 |
| RT-DETR | 93.5 | 73.8 | 85.5 | 63.3 | 31.99 | 103.4 | 79 |
| RF-DETR | 96.5 | 78.0 | 86.2 | 63.9 | 31.12 | 120 | 96 |
| YOLOv8s | 94.3 | 79.6 | 85.8 | 66.2 | 11.13 | 28.4 | 300 |
| YOLO-HOOK | 97.0 | 83.4 | 90.4 | 71.6 | 9.60 | 31.0 | 310 |

| Model | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|
| YOLOv5s | 93.8 | 77.8 | 85.2 | 64.5 | 9.11 | 23.8 |
| YOLOv5s-HOOK | 93.6 | 83.7 | 88.2 | 69.0 | 7.59 | 20.0 |
| YOLOv6s | 95.6 | 76.8 | 84.5 | 64.3 | 16.3 | 44.0 |
| YOLOv6s-HOOK | 95.3 | 80.6 | 86.6 | 68.3 | 7.0 | 18.4 |
| YOLOv9s | 89.9 | 74.5 | 82.3 | 59.7 | 7.17 | 26.7 |
| YOLOv9s-HOOK | 93.9 | 80.0 | 86.5 | 66.1 | 4.48 | 19.4 |
| YOLOv10s | 93.1 | 71.7 | 83.0 | 60.9 | 7.21 | 21.4 |
| YOLOv10s-HOOK | 89.1 | 77.0 | 87.7 | 63.9 | 6.33 | 13.8 |
| YOLOv11s | 92.9 | 69.5 | 81.4 | 58.7 | 9.41 | 21.3 |
| YOLOv11s-HOOK | 96.6 | 81.2 | 87.6 | 65.5 | 10.05 | 27.8 |
| RT-DETR | 93.5 | 73.8 | 85.5 | 63.3 | 31.99 | 103.4 |
| RT-DETR-HOOK | 85.7 | 82.2 | 89.9 | 62.8 | 16.13 | 40.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, Y.; Xiao, D.; Ruan, X.; Li, R.; Wang, Y. Improved YOLOv8s-Based Detection for Lifting Hooks and Safety Latches. Appl. Sci. 2025, 15, 9878. https://doi.org/10.3390/app15189878
