1. Introduction

The Textile and Clothing (T&C) industrial sector has come under considerable scrutiny over the years. This results from the poor working conditions observed in many factories, the child labor it has been accused of in the past, and the realization of its enormous environmental footprint. The textile industry has been accused of excessive water consumption and soil exploitation in the production of raw materials, as well as the pollution of rivers by chemicals used in its manufacturing processes. Currently, the main problem is the sharp increase in textile waste, a consequence of fast fashion, which encourages excessive consumption and the rapid disposal of clothes [1]. Furthermore, the T&C sector is one of the major contributors to the increase in greenhouse gases (GHGs) in the atmosphere [1]. The textile industry needs to be adapted and “reinvented” to reduce its environmental and social impacts and become more sustainable. It is also necessary to increase transparency at every stage of the life-cycle of these products by implementing the Digital Product Passport (DPP).

According to the European Union (EU), the “DPP is the combination of an identifier, the granularity of which can vary throughout the life-cycle (from a batch to a single product), and data characterizing the product, processes and stakeholders, collected and used by all stakeholders involved in the circularity process” [2]. To implement the DPP in the T&C sector, it is necessary to collect information about every stage and all the participants in the value chain and integrate this information. There are several categories of stakeholders in the T&C DPP, namely supply chain companies, brands, authorities, certification and assessment companies, retailers, marketing companies, consumers, and circularity operators [2].

The EU has already created rules that companies wishing to trade in Europe must follow. The DPP must contain information about composition, product description (size, weight, etc.), the supply chain, transportation, social and environmental impacts, circularity, the brand, usage and maintenance of garments (customer feedback), tracking and tracing after sales, etc. [2]. In other words, implementing the DPP requires collecting a wide variety of information from a large number of companies and even individual consumers, so the collected data may contain errors. It is therefore necessary to evaluate this data in a uniform way before it is stored and integrated into the DPP.

Strong recent investment in Artificial Intelligence (AI), and more particularly in its sub-field of Machine Learning (ML), has led to significant advances, with a very positive impact on many areas of society. ML, with its ability to learn and make decisions based on data from previous experience, has been adopted in several business areas that touch our daily lives, including image recognition, Natural Language Processing, fraud analysis, and many others. The detection of non-standard or anomalous values is one of the areas in which ML demonstrates good results [3,4,5].

In the literature review presented in Section 3, a clear gap in data quality assurance for the T&C DPP has been identified. In this article, the main objective is to study and compare ML-based approaches for data anomaly detection using real data from the T&C value chain and integrate the results into an API that will be used to validate the data before integration into the DPP platform. We will emphasize information about the value chain and the collection of data that, in addition to enabling a product’s traceability, enables calculating the environmental and social impacts of the product. Therefore, it is necessary to receive information from and about all the companies involved in the manufacturing of a given garment, including transportation between the various companies. There are many companies involved, potentially with very different levels of digital maturity, which is why it is necessary to standardize the data collected and the validation process that it must be subjected to.

The data anomaly detection solutions studied in this article, which use ML algorithms, may be employed to validate the data collected by the various business partners involved in the T&C value chain for the implementation of the DPP. More precisely, we started by collecting data on sustainability indicators from several companies involved in different production activities. Next, we worked with this data to prepare different datasets so that different types of ML algorithms (supervised learning and unsupervised learning) could be trained. Finally, we compared the approaches and analyzed which one best suits each situation. The goal is not to propose a final ready-to-use implementation but to study the applicability of ML-based anomaly detection approaches to validate real textile data from the T&C value chain and provide access through an API that may be used for data quality assessment before integration with the DPP.

The remainder of this paper is structured as follows: In Section 2, some concepts related to AI and ML, including some ML algorithms, are presented. In Section 3, the related works previously developed in the area are analyzed, including previous works developed by the same authors. In Section 4, the methodology used for this research is presented. Section 5 presents the dataset (Section 5.1) used to train the algorithms, as well as the studied solutions (Section 5.2). For each solution, its objective and design are presented, together with a discussion of the results obtained. Section 5.3 explains how the validation solutions may be made available to external applications through an integration Application Programming Interface (API). In Section 6, a discussion regarding the results is presented. Finally, Section 7 presents the conclusions and outlines future work.

2. Machine Learning Models for Data Validation

Artificial Intelligence is a field of science that focuses on creating machines that, whether rule-based or data-driven, can perform tasks that normally require human intelligence. AI already has a long history. Since Alan Turing introduced the Turing Machine in 1936, which formalized the notion of computation carried out by a machine following well-defined rules, AI has undergone great advances. In recent years, and especially in its sub-field of Machine Learning, the developments have been extraordinary. Nowadays, AI (particularly ML) is present in many areas of society, from finance [6] to medical diagnostics [7], image detection and facial recognition [8], cyber security [9], fraud detection [10], Natural Language Processing (ChatGPT, Siri, etc.) [11], machine translation (Google Translate, etc.), industrial automation [12], autonomous vehicles [13], etc.

ML is a branch of AI that enables machines to make decisions based on prior experiences. It achieves this by utilizing algorithms and models that facilitate learning from data stored in datasets and subsequently making intelligent decisions autonomously. ML algorithms employ statistical techniques to identify patterns and anomalies within often large datasets, allowing machines to learn from the data. The dataset size can be so extensive that it becomes challenging for humans to process all the information, which often enables ML to offer superior solutions to problems compared to humans [14].

Since models learn from data, the data used is very important and needs to be properly prepared. There are many algorithms that can be used. Based on how they learn from data, algorithms can be categorized into four main types: supervised learning, unsupervised learning, reinforcement learning and deep learning [15,16,17].

Supervised Learning—This category includes algorithms that learn from labeled data. It comprises classification algorithms, which categorize data into predefined classes, and may be binary (e.g., yes/no; correct/incorrect) or multiclass; and regression algorithms, which predict continuous values, such as the amount of energy consumed. The most common classification algorithms are Naive Bayes (NB), Support Vector Machine (SVM), Decision Tree (DT), Logistic Regression, K-Nearest Neighbor (KNN), Random Forest (RF), and Multilayer Perceptron. The most common regression algorithms are SVM, DT, Polynomial Regression, Linear Regression, KNN, and RF.

Unsupervised Learning—These algorithms learn from unlabeled data and are useful for discovering hidden patterns or structures. The common tasks include clustering, frequent pattern mining, and dimensionality reduction. This group includes algorithms such as K-means, which groups data into K clusters; Hierarchical Clustering, which creates a hierarchical structure of clusters; DBSCAN, which identifies dense regions of data items and is well-suited for unstructured data; and Isolation Forest, a method that explicitly isolates anomalies and is one of the most popular anomaly detection methods [18,19].

Reinforcement Learning—These algorithms learn optimal behaviors through interactions with an environment, using rewards and penalties as feedback. They are commonly applied in areas such as robotics and game playing. The popular algorithms in this category are Q-Learning, a table-based algorithm for finding the best action, Deep Q-Network (DQN), and Proximal Policy Optimization (PPO).

Deep Learning—These models use artificial neural networks with multiple layers to learn complex representations of data. They typically require very large datasets to achieve good results. In this group, there are algorithms like Artificial Neural Network (ANN), Convolutional Neural Network (CNN), Recurrent Neural Network (RNN), and Pre-Trained Language Models (PLMs) such as Bidirectional Encoder Representations from Transformers (BERT) and Generative Pre-Trained Transformer (GPT).

Between the supervised and unsupervised learning categories, a semi-supervised learning category can be considered, which uses a small amount of labeled data together with a large amount of unlabeled data to train ML models. The goal is to improve learning accuracy when labeled data is scarce [20].

The best algorithm to be used in any specific case largely depends on the objectives and the training dataset.

ML models can be evaluated in several aspects. For classification models, the typical evaluation metrics include the following [21]:

Accuracy—measures the proportion of correct predictions made by a model relative to the total.

Precision—measures how many of the items classified as positive are actually positive.

Recall—measures how many of the positive items were correctly identified.

F1-Score—an evaluation metric that combines precision and recall as their harmonic mean: 2 × (precision × recall)/(precision + recall).

For regression models, the typical evaluation metrics include the following:

Mean Absolute Error (MAE)—average absolute difference between predicted and actual values.

Mean Squared Error (MSE)—average of squared differences.

Root Mean Squared Error (RMSE)—square root of MSE.

R² Score—also known as the Coefficient of Determination. It measures the proportion of variance in the target explained by the model.
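The metrics listed above can be computed directly from their definitions. The following is a minimal sketch using only the Python standard library; the function names and the choice of `1` as the positive class are illustrative assumptions, not part of the study.

```python
import math


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when no positives are predicted/present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def regression_metrics(y_true, y_pred):
    """MAE, MSE, RMSE and R² for a regression task."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)

    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                 # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    # R² is undefined when the target is constant.
    r2 = 1 - ss_res / ss_tot if ss_tot else float("nan")
    return {"mae": mae, "mse": mse, "rmse": rmse, "r2": r2}
```

In an anomaly detection setting, the positive class would normally be the anomalous one, so precision and recall describe how reliably anomalies are flagged.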

3. Literature Review

This section addresses the related work on data validation approaches in the T&C value chain, the complexity of the T&C value chain, and also other works in the area of data anomaly detection.

3.1. Textile and Clothing Value Chain

As research and innovation advance, the variety of raw materials continues to grow. In addition to traditional natural fibers such as wool, linen, silk, and cotton, there are now synthetic materials derived from petroleum, including polyester, acrylic, nylon, plastic, elastane, and lycra. Emerging alternatives also include plant-based materials like jute, bamboo, hemp, and banana leaves. More recently, with the growing emphasis on the circular economy, we can find raw materials such as recycled cotton, recycled polyester, and recycled polyester resin. Other materials are also commonly used in textile applications, such as coconut, wood, metal, seashell, leather, and vegetable ivory [22]. Within each type of raw material, further sub-classifications may exist, such as organic silk, organic cotton, organic hemp, and Merino wool. The treatment and processing of these diverse raw materials typically involve different specialized companies. As a result, the number of companies involved in the T&C value chain is increasing, becoming more diversified and spread throughout the world [23]. Considering, for example, the production of a shirt, with a composition of 50% silk + 50% cotton, with buttons, this will involve a sequence of several value-chain activities: silk production, cotton production, transportation, silk spinning, cotton spinning, dyeing, weaving, and garment manufacturing (including cutting, sewing, and assembly). In addition, the buttons must also be produced, transported, and applied.

The size and complexity of the T&C value chain place high demands on the quality of intermediate and final products [24,25,26], and also on the quality of the industrial processes and of the waste produced [27]. In [24], the authors proposed a methodology that leverages Decision Trees and Naïve Bayes classifiers to predict defect types based on input material characteristics and technical sewing parameters. Since quality influences both the product’s lifespan and its suitability for recycling, the authors in [25] presented a study aimed at developing a method for sorting and assessing the quality of textiles in household waste, with the evaluation based on product type, manufacturing method, and fiber composition. In [26], the authors presented a state-of-the-art review on anomaly detection in wool carpets using unsupervised detection models. In [27], the authors assessed the quality of the waste produced and examined its potential for use as raw material in the production of new fibers. While several studies have focused on validating product quality, research addressing the quality of the data collected remains scarce.

3.2. Solutions for Data Quality Assessment in the Scope of the DPP

The DPP for T&C products is currently being widely discussed as a way to increase product transparency and traceability [28].

In the literature, discussions on quality or anomaly detection in relation to textiles and clothing typically focus on aspects such as product quality, as mentioned in the previous subsection, rather than on the quality of the data collected. Consequently, research is lacking regarding data quality assessment or data anomaly detection in terms of the metrics gathered for the DPP for T&C products. The few previously proposed solutions for validating data collected for implementing the DPP are presented next.

The DPP is part of the European Green Deal and the European Circular Economy Action Plan. The study presented in [29] analyzed the possibilities and limitations in implementing the DPP and in its use for other fields of application.

With the aim of improving long-term sustainability, the authors in [30] proposed a model to extend the useful lifespan of a product and close the product life-cycle loop, contributing to the circular economy. The cyclical model includes reverse logistics of components and raw materials. The model also includes some information on how to manage data at the end of each life cycle.

In [31], the authors conducted a study on the potential benefits of implementing the DPP in the manufacturing industry and in particular in the electronics industry. The authors concluded that the implementation of the DPP and the collection of information along the value chain and manufacturing processes contribute to increased transparency in the manufacturing industry and increased sustainability. Furthermore, the information collected can be very beneficial for resource management and subsequent decision-making [31].

3.3. Solutions for Data Quality Assessment Using ML

With the constant increase in the variety and volume of data to be analyzed, the traditional strategies for detecting data anomalies and outliers are no longer efficient. This necessitates new strategies, such as the use of ML algorithms for anomaly detection and assessing data quality.

In [32], the authors studied existing approaches for network anomaly detection. The authors concluded that the existing approaches are not effective, mainly due to the large volumes of data involved, collected in real time through connected devices. To improve efficacy, the authors studied the use of ML algorithms for anomaly detection within a large volume of data collected in real time.

In [5], the authors performed a systematic literature review on ML models used in anomaly detection and their applications. The study analyzed ML models from four perspectives: the types of ML techniques used, the applications of anomaly detection, the performance metrics for ML models, and the type of anomaly detection (supervised, semi-supervised, or unsupervised). The authors concluded that unsupervised anomaly detection has been adopted by more researchers than the supervised and semi-supervised types, and that semi-supervised detection is rarely used. The authors also concluded that a large portion of the research projects employed a combination of several ML techniques to obtain better results and that SVM is the most frequently used algorithm, either alone or combined with others [5].

Some approaches are more specific and study the use of ML in detecting anomalies in specific areas, such as data collected by IoT devices [3,33], water quality assessment [34], and other areas.

Nowadays, a great deal of information is collected through the use of IoT devices. These devices continuously collect large volumes of data, which can make detecting anomalous data challenging. In [33], the authors reviewed the literature on the main problems and challenges in IoT data and on the ML techniques used to solve such problems. SVM, KNN, Naive Bayes and Decision Tree were the supervised classification algorithms mentioned most often [33]. The unsupervised Machine Learning algorithms most frequently used were K-means clustering and the Gaussian mixture model (GMM).

Uddin et al. [35] also employed ML algorithms to assess water quality. The authors used ML algorithms to identify the best classifier to predict water quality classes. The ML algorithms used were SVM, Naïve Bayes (NB), RF, KNN, and gradient boosting (XGBoost) [35].

Zhu et al. carried out a review on the use of ML in water quality assessment [34]. The increasing volume and diversity of data collected on water quality has made traditional data analysis more difficult and time-consuming, which has driven the use of ML for water quality analysis. ML algorithms are used in monitoring, simulation, evaluation, and optimization of various water treatment and management systems and in different aquatic environments (sewage, drinking water, marine, etc.). The authors evaluated the performance of 45 ML algorithms, including SVM, RF, ANN, DT, Principal Component Analysis (PCA), XGBoost, etc. [34]. The authors concluded that conditions in real systems can be extremely complex, which makes the widespread application of Machine Learning approaches very difficult. The authors suggested improving the algorithms and models so that they are more universal according to the needs of each system [34].

In 2022, Eduardo Nunes presented a literature review on the use of ML techniques in anomaly detection for the specific case of “Smart Shirts”, that is, shirts that can monitor and collect signals emitted by the body. These “Smart Shirts” are typically used for medical purposes. Among other things, the author studied the ML techniques being used for anomaly detection and concluded that the SVM algorithm is the most widely used, followed by K-Nearest Neighbor (KNN) and Naive Bayes (NB) [3].

3.4. Previous Work by the Same Authors

In a previous study [36] conducted by the same authors, within the same project, a non-AI rule-based approach was described for validating data collected by each business partner in the T&C value chain prior to its integration into a traceability platform for the DPP.

The approach introduced four formulas for validating sustainability indicator values [36]. These formulas assess whether a given value lies within a specified range, defined by minimum and maximum thresholds, and evaluate its proximity to a central or average value. This central value, reflecting the central tendency of the indicator’s distribution, can be measured using the arithmetic mean, median, or mode. The arithmetic mean represents the average of all values, the median is the middle value when ordered, and the mode is the most frequently occurring value. The values closer to the central tendency are assigned higher validity, whereas those further away are assigned lower validity. The rate at which validity decreases with increasing deviation from the mean can vary depending on the metric. In cases where a value falls outside the defined minimum or maximum, it may be classified as invalid or still acceptable depending on the metric. The parameters for these validations can be adjusted for each specific metric [36].
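To make the idea of range-and-proximity validation concrete, the sketch below scores a value by its distance from a central value within a minimum/maximum range. It is not a reproduction of the four formulas from [36], which are not given here: the linear decay, the `decay` and `accept_outside` parameters, and the function names are all hypothetical choices made for illustration.

```python
from statistics import mean, median, mode


def central_value(values, kind="mean"):
    """Central tendency of historical values: mean, median or mode."""
    if kind == "mean":
        return mean(values)
    if kind == "median":
        return median(values)
    if kind == "mode":
        return mode(values)
    raise ValueError(f"unknown central tendency: {kind}")


def validity(value, minimum, maximum, center, decay=1.0, accept_outside=False):
    """Return a validity score in [0, 1] (hypothetical linear-decay rule).

    The score is 1 at `center` and drops linearly to `1 - decay` at the
    range boundary. Values outside [minimum, maximum] score 0 unless
    `accept_outside` is set, in which case the same decay keeps applying.
    """
    # Use the half-range on the side of the center where the value lies.
    span = (maximum - center) if value >= center else (center - minimum)
    if span == 0:
        return 1.0 if value == center else 0.0

    distance = abs(value - center) / span  # 0 at center, 1 at the boundary
    if distance > 1 and not accept_outside:
        return 0.0
    return max(0.0, 1.0 - decay * distance)
```

With `minimum=0`, `maximum=100`, `center=50` and `decay=0.5`, a value of 75 lies halfway to the upper boundary and scores 0.75, while a value of 120 scores 0 unless out-of-range values are tolerated for that metric.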

The study also suggested that future work could explore the application of Machine Learning algorithms to enable more dynamic and accurate validation. This suggestion was implemented and presented in [37], where the ML algorithms RF, DT, SVM, and KNN were studied as approaches to enable data anomaly detection in a consistent and homogeneous manner throughout the value chain.

The approach presented here builds upon the work in [37] by reorganizing the datasets, exploring new Machine Learning algorithms, and comparing the resulting performance.

4. Methodology

As seen before, although product quality validation is firmly established in the literature, the validation of collected data remains critically overlooked and underexplored. For this research, the Design Science Research (DSR) method was used as it adapts to the needs of practical and useful research in the scientific areas of Information Systems/Information Technology (IS/IT) [38]. The DSR method drives research forward through a series of cycles in which an artifact is produced and evaluated. The evaluation of the produced artifact can identify strengths and weaknesses for improvement, as well as generate new ideas that can be implemented in the next cycle [38,39]. The DSR method involves six main steps [38,40].

The created artifacts (ML models and API) have several limitations, which are addressed in Section 6, but they are still useful and innovative and may serve as a basis for a data anomaly detection solution when integrated with the DPP data from companies involved in the T&C value chain.

5. Results

This section presents the research carried out towards a solution for anomaly detection in textile data before integrating it into the DPP. The section begins by introducing real data obtained from companies to construct the datasets for training the ML models, followed by four approaches that employ different Machine Learning algorithms for data anomaly detection. Finally, the API for data validation is presented.

5.1. T&C Value Chain Dataset Preparation

Preparing a dataset is one of the most critical stages in a Machine Learning (ML) project. The goal of data preparation is to format and structure the data in a way that aligns with the requirements of the Machine Learning algorithms to be used [41]. As outlined in [41], dataset preparation typically follows a series of steps, beginning with data collection and followed by data cleaning, transformation, and reduction:

Data Collection—This is the initial phase of any Machine Learning pipeline. It involves identifying, selecting, and acquiring the appropriate data needed for the specific algorithm and intended outcomes.

Data Cleaning—This step involves identifying and correcting errors, such as missing values, noisy data, and anomalies, to ensure the dataset is accurate and reliable.

Data Transformation—This process involves converting raw data into a suitable format for modeling. Common tasks include normalization (scaling numerical data to a specific range) and discretization (converting continuous variables into discrete buckets or categories). These transformations are essential for improving algorithm performance and interpretability.

Data Reduction—This process is used when datasets are too large or complex. In these cases, reducing the data helps to mitigate issues such as overfitting, computational inefficiency, and the difficulty of interpreting models with numerous features, improving the efficiency and effectiveness of Machine Learning analysis.

These steps, mentioned in [41], have been followed to create and prepare the datasets used in the study presented here. Depending on the ML approach followed, the dataset is prepared in different ways; however, the steps presented below are common to all datasets.

5.1.1. Data Collection

The data used for this study has been provided by Portugal’s Textile and Clothing Technological Center (CITEVE) and consists of real data collected from various companies representing different types of production activities involved in the textile and clothing sector, such as spinning, weaving, dyeing, and manufacturing, among others.

The data was provided through multiple Excel files, each composed of tables for a product batch/lot resulting from a production activity, where the batch identification and size were listed, along with environmental impact metrics and their respective values.

To use this information in the ML training phase, it was necessary to consolidate all the data in a structured format. Thus, a single table has been created where each row contains information about the batch size, production activity, responsible firm or organization, materials used to produce it (e.g., cotton, linen, or polyester) and the percentages of each, and the corresponding metrics information: name, unit of measurement, and value.
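The consolidated table described above can be pictured as a long-format structure with one row per batch and metric. The sketch below is only an illustration of that layout: the class, the field names, the helper function, and the example values in the usage note are hypothetical and do not come from the CITEVE files.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricRow:
    """One row of the consolidated table (hypothetical field names)."""
    batch_size: float
    production_activity: str
    organization: str
    materials: dict        # material name -> percentage of the batch
    metric_name: str
    unit: str
    value: float


def flatten_batch(batch_size, production_activity, organization,
                  materials, metrics):
    """Expand one batch table into one row per sustainability metric.

    `metrics` maps a metric name to a (unit, value) pair.
    """
    # Material percentages are expected to describe the whole batch.
    if abs(sum(materials.values()) - 100.0) > 1e-6:
        raise ValueError("material percentages must sum to 100")

    return [
        MetricRow(batch_size, production_activity, organization,
                  dict(materials), name, unit, value)
        for name, (unit, value) in metrics.items()
    ]
```

For example, calling `flatten_batch(100.0, "dyeing", "Company A", {"cotton": 50.0, "silk": 50.0}, {"water consumption": ("m3", 12.5)})` with these made-up values yields a single row for the water consumption metric of that batch.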

The information provided was simple to understand, so there was no need to group attributes to reduce their volume. Furthermore, each parameter was labeled, which aided comprehension. Although the data supplied was of good quality and featured real information from different types of companies, the total number of records is small. Given the complexity of the data and the need to preserve its reliability, it was not possible to use data augmentation methods to compensate for the small number of records. At the end of this stage, the dataset consisted of 223 rows.

Each row contained information about the size of the product batch, the production activity that created it, the materials used and their percentages, and the name, unit of measurement, and value of an attribute.

In total, we received information about 28 sustainability indicators that can be used to measure the environmental impact of the batch produced by the identified production activities, i.e., regarding the transformation of the input material/product (or products) into the output product.

For a given batch, data was collected on environmental sustainability indicators related to the resources consumed and outputs produced during a specific production activity, aimed at transforming and manufacturing a product of a defined weight. The following environmental impact metrics were analyzed regarding consumption: consumption of water, non-renewable electricity, renewable electricity, self-consumption of renewable energy, biomass, coal, total electricity, natural gas, propane gas, fuel oil, gasoline, diesel, purchased steam, chemical products, etc. The following environmental impact metrics were analyzed regarding production: quantity of SVHC (Substance of Very High Concern) in products, quantity of non-hazardous chemicals, quantity of recovered chemicals, quantity of non-hazardous waste, quantity of solid waste, quantity of textile waste, quantity of recovered waste, quantity of recovered textiles, quantity of recycled water, and volume of liquid effluent, among others. Some environmental impact metrics make more sense for some production activities and less, or no sense at all, for others.

After collecting the data, initial data processing was carried out. These treatments were necessary for all the approaches that will be presented in Section 5.2. However, some of the approaches presented there also required additional treatment.

5.1.2. Data Cleaning

In the case of the received data, there were no missing values or noisy data, so it was not necessary to implement replacement techniques. There was also no need for outlier treatment since all data represented real data obtained from industrial activities.

5.1.3. Data Transformation

The original data received underwent some transformations. The categorical data were encoded using label encoding, and all values were converted to numerical format to ensure greater compatibility with ML models. Proper normalization was also applied to the numerical data to ensure consistency between the different value scales to avoid loss of information.
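As an illustration of these two transformations, the sketch below implements label encoding and a simple normalization with the Python standard library. The text does not state which normalization was applied, so min-max scaling to [0, 1] is an assumption here, and the function names are hypothetical.

```python
def fit_label_encoder(categories):
    """Map each distinct category to an integer code (sorted for stability)."""
    return {category: code
            for code, category in enumerate(sorted(set(categories)))}


def encode(categories, encoder):
    """Replace each category by its integer code."""
    return [encoder[category] for category in categories]


def min_max_scale(values):
    """Scale numeric values linearly to the [0, 1] range.

    Assumption: min-max scaling stands in for the unspecified
    normalization used in the study.
    """
    low, high = min(values), max(values)
    if high == low:
        # A constant column carries no scale information.
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]
```

Label encoding imposes an arbitrary order on categories such as production activities, which tree-based models tolerate well but distance-based models such as KNN may not; one-hot encoding is a common alternative in that case.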

5.1.4. Data Reduction

In the case presented here, the amount of data obtained was not too large, so this step was not performed.

After this first data processing, the dataset consisted of 223 rows. Each row contained information on the product identification and size of the product batch produced, the production activity in which the batch was produced, the materials used in its creation and corresponding percentages, the name of the metric to be evaluated, its measurement unit, and its value.

Given that we are evaluating a large number of production activities and a large number of environmental impact indicators, it is natural that the most appropriate value validation solution varies from one indicator to another. It may depend on the production activity and the indicator being evaluated.

5.2. Proposed ML-Based Solutions for Data Validation in the Context of the T&C DPP

Recognizing that data quality issues can manifest in various forms, in this section, we study four ML-based approaches for data anomaly detection and discuss their results. This multi-faceted strategy allows us to address the problem from different angles, from detecting unusual individual data points to assessing the collective consistency of an entire record. The rationale for exploring different model types for anomaly detection, namely unsupervised, supervised classification, and supervised regression, is to provide a comprehensive and flexible validation toolkit.

Using the same set of real-world data collected from companies in the T&C sector (as detailed in Section 5.1), each approach involves distinct data preparation strategies and uses different ML algorithms for data validation and anomaly detection. An anomaly is defined as an observation that significantly deviates from the expected behavior or pattern within a dataset. Also referred to as an outlier or deviation, an anomaly represents an unusual or rare value among a collection of observed data points. Anomalies can generally be classified into three main categories [5,32]:

Point Anomaly: A single value or data point that is anomalous compared to the rest of the data.

Contextual Anomaly: A data point that is considered anomalous only within a specific context (e.g., time and location).

Collective Anomaly: A group of related data points that together form an anomalous pattern, even if individual points may not appear abnormal on their own.

The four proposed approaches are presented next:

Approach 1—The first approach uses unsupervised anomaly detection models combined with predefined threshold values to individually assess the reliability of each environmental impact metric.

Approach 2—The second approach uses supervised learning models to both predict values for individual metrics (regression) and to classify whether a received value is correct or not (classification).

Approach 3—The third approach focuses on analyzing the relationships among the different environmental impact metrics. All the metric values are considered together within a single record (i.e., one record includes all the metrics), and the goal is to determine whether the values within a given record are consistent with each other. Supervised learning algorithms are used to perform this consistency check.

Approach 4—In the fourth approach, the goal is to predict the value of a specific metric based on the remaining metrics in the record. The objective is to assess whether the value received for a particular metric in a new record is plausible given the values of the other metrics. Supervised models are used for this purpose.

As the dataset is relatively small, neural network-based algorithms were not considered due to their typically high data requirements.

5.2.1. Approach 1—Unsupervised Anomaly Detection Based on Individual Metrics

This approach focuses on validating the data of a certain batch according to an attribute (metric) using ML anomaly detection algorithms. Each record includes the batch size, production activity, and the name and value of the attribute to be validated. Given the objective of assessing and ensuring the quality of the data, anomaly detection provides an effective way to identify inconsistencies and deviations that may indicate errors or irregularities.

Data Preparation

This approach uses the dataset presented previously in Section 5.1. The dataset consisted of 223 rows, each with 11 columns.

Only the organization column was removed from the initial dataset. Of the eleven remaining columns, one contains the size of the lot, one specifies the production activity, two indicate the types of materials used in the lot, and two record the corresponding material percentages. Three further columns contain the name of the metric, its unit of measurement, and its recorded value. The last two columns contain information about the data’s validity: one includes a validity score (ranging from 0 to 100), and the other assigns a validity category (valid, suspect, or invalid). For ML purposes, the dataset was preprocessed to handle missing and noisy data, and all non-numeric values were converted to a numeric representation.

After analyzing the created dataset, a key issue was identified concerning the validity category of the data: there were no data records categorized as “suspect”. This posed a challenge for training the models to recognize suspect data, which is important for real-world applications where such cases may occur. To solve this issue, additional data was generated based on the existing records and using the API previously developed and presented in [36]. The API uses formulas to evaluate the validity of the data. By using existing data and modifying the values of the attributes sufficiently for the API’s formulas to classify them as “suspect”, it was possible to create new data entries. To preserve the integrity and trustworthiness of the dataset, only 19 new rows were created using this method. In the end, the dataset had 242 records.

ML Algorithms

The models chosen for this approach were selected for their ability to detect anomalies in both large and small datasets. This made them suitable for the dataset used here and for potential future datasets. Other selection criteria included scalability, widespread adoption, distinct anomaly detection mechanisms, and their established use in fields such as environmental science and industrial systems [4,42]. The approach presented in this subsection uses the algorithms Isolation Forest (IF), Local Outlier Factor (LOF), One-Class SVM (OCSVM), Gaussian Mixture Model (GMM), and Robust Covariance (RC), the latter employing Elliptic Envelope (EE) for covariance estimation.

IF is an anomaly detection algorithm that identifies outliers by isolating data points that differ significantly from the rest. It operates by randomly selecting features and recursively splitting the data. Anomalies are easily isolated because they are fewer in number and more distinct from the bulk of the data [18].

LOF operates by contrasting the local density of a point with the local densities of its k nearest neighbors. If the point has a significantly lower density than its neighbors, it is considered an outlier [43].

OCSVM works by creating a boundary that encloses the majority of the data points while maximizing the margin around them. Any data point that falls outside this boundary is considered an anomaly [44].

GMM models the data as a combination of multiple Gaussian distributions, assigning each data point a likelihood of belonging to each component distribution. Outliers are detected by identifying points with a low probability of belonging to any of the distributions [45].

The RC method using Elliptic Envelope assumes that the data follows a Gaussian distribution, forming an elliptical shape. Data points that fall outside this elliptical boundary are classified as outliers [46].
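As a rough illustration of how the five detectors described above can be fitted and scored with Scikit-learn, consider the sketch below. The synthetic data, the variable names, and the choice of two GMM components are assumptions made for illustration only, not the configuration used in this study. For simplicity, the sketch uses `decision_function` for LOF as well, so that all scores share the same orientation (lower means more anomalous).

```python
import numpy as np
from sklearn.covariance import EllipticEnvelope
from sklearn.ensemble import IsolationForest
from sklearn.mixture import GaussianMixture
from sklearn.neighbors import LocalOutlierFactor
from sklearn.svm import OneClassSVM

# Synthetic stand-in for the preprocessed numeric features (assumption).
rng = np.random.default_rng(0)
X_train = rng.normal(0.0, 1.0, size=(200, 4))    # records used for fitting
X_typical = rng.normal(0.0, 1.0, size=(20, 4))   # new records similar to training data
X_unusual = rng.normal(6.0, 0.5, size=(5, 4))    # new records far from training data
X_new = np.vstack([X_typical, X_unusual])

detectors = {
    "IF": IsolationForest(contamination="auto", random_state=0),
    "LOF": LocalOutlierFactor(novelty=True),  # novelty=True allows scoring unseen data
    "OCSVM": OneClassSVM(),
    "RC (EE)": EllipticEnvelope(random_state=0),
}

scores = {}
for name, model in detectors.items():
    model.fit(X_train)
    # In Scikit-learn, lower decision_function values mean "more anomalous".
    scores[name] = model.decision_function(X_new)

# GMM has no decision_function; the per-record log-likelihood is used instead.
gmm = GaussianMixture(n_components=2, random_state=0).fit(X_train)
scores["GMM"] = gmm.score_samples(X_new)

for name, s in scores.items():
    print(f"{name}: typical={s[:20].mean():.3f}, unusual={s[20:].mean():.3f}")
```

In this toy setting, each detector assigns lower scores to the distant records than to the typical ones, which is the property the thresholding step relies on.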

Because these are unsupervised models, their effectiveness is not usually assessed with traditional classification metrics. Instead, their outputs are often interpreted through visualizations such as plots. In this work, however, anomaly detection is treated as a classification task. Each record was classified as “valid”, “invalid”, or “suspect” based on the anomaly score predicted by the models and according to the thresholds defined for classification.

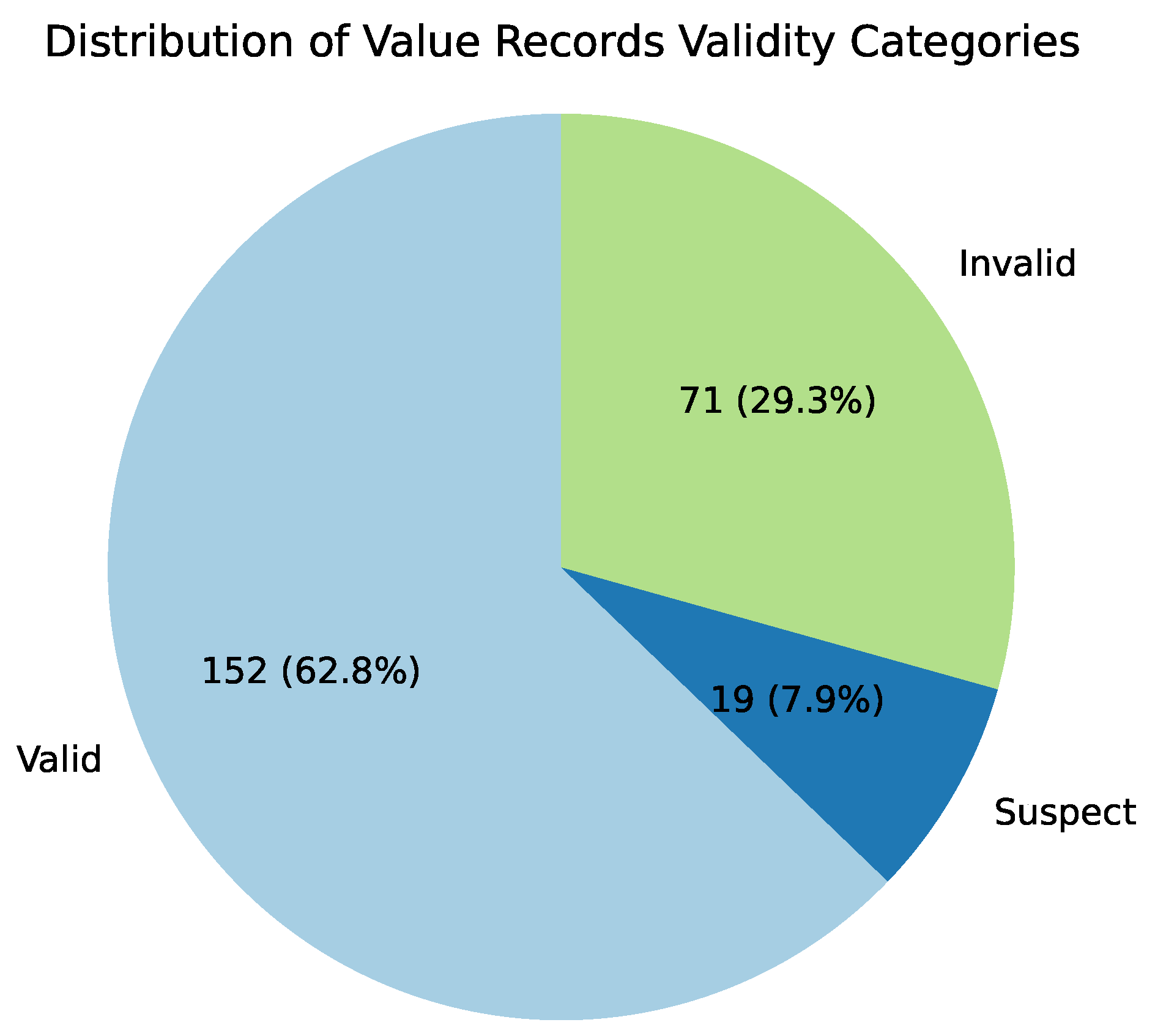

These thresholds were determined based on the proportion of data points in each validity category (29.3% invalid, 7.9% suspect, and 62.8% valid; see Figure 1). This technique was feasible because each row of the dataset was labeled according to validity (valid, suspect, or invalid).

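For each model, an anomaly score $s_i$ has been computed for each record $i$. The anomaly score corresponds to the following:

The log-likelihood of each record for GMM;

The negative of negative_outlier_factor for LOF;

The output of decision_function for the other algorithms.

From the set of all scores $S = \{s_1, \ldots, s_N\}$, two percentile-based thresholds are calculated:

$$t_{\text{invalid}} = P_{p_1}(S), \qquad t_{\text{suspect}} = P_{p_2}(S),$$

where $P_p(S)$ is the empirical $p$-th percentile of $S$. In the dataset used for this study, $p_1 = 29.3$ and $p_2 = 37.2$, chosen to match the observed proportions of invalid (29.3%), suspect (7.9%), and valid (62.8%) records (see Figure 1).

The validity category is then assigned as

$$\text{category}(i) = \begin{cases} \text{invalid}, & s_i \le t_{\text{invalid}} \\ \text{suspect}, & t_{\text{invalid}} < s_i \le t_{\text{suspect}} \\ \text{valid}, & s_i > t_{\text{suspect}} \end{cases}$$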
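The percentile-based assignment can be sketched as follows. The function name `categorize` and the random demo scores are hypothetical, and the cut-offs of 29.3 and 37.2 (29.3 + 7.9) are assumptions derived from the reported class proportions. The sketch also assumes that lower scores indicate more anomalous records.

```python
import numpy as np

def categorize(scores, p_invalid=29.3, p_suspect=37.2):
    """Map anomaly scores to validity categories via two percentile cut-offs.

    Assumes lower scores mean more anomalous records.
    """
    scores = np.asarray(scores, dtype=float)
    t_invalid, t_suspect = np.percentile(scores, [p_invalid, p_suspect])
    labels = np.full(scores.shape, "valid", dtype=object)
    labels[scores <= t_suspect] = "suspect"
    labels[scores <= t_invalid] = "invalid"  # overrides "suspect" for the lowest scores
    return labels, (t_invalid, t_suspect)

# Demo with random scores for 242 records (synthetic, for illustration only).
rng = np.random.default_rng(0)
demo_scores = rng.normal(size=242)
labels, thresholds = categorize(demo_scores)
shares = {c: float(np.mean(labels == c)) for c in ("invalid", "suspect", "valid")}
print(shares)
```

By construction, the resulting category shares follow the chosen percentiles regardless of how well the scores separate the classes, which is one reason fixed thresholds may need to be revisited for other datasets.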

These threshold values were selected for this initial experiment because the dataset contained a small number of records (242). In the future, with a larger dataset, the thresholds can be optimized in a more robust, data-driven way, for example by selecting the two percentile thresholds that maximize a chosen evaluation metric, such as the macro F1-score, through K-fold cross-validation over the labeled training set. This should yield category boundaries more closely aligned with the model’s performance and the underlying score distribution.

The hyperparameters used in this approach were mostly the defaults of the library used, namely Scikit-learn (https://scikit-learn.org/, accessed on 3 June 2025). Table 1 summarizes the hyperparameters used in each model.

For LOF, setting novelty=True enables predicting on new unseen data, which is necessary for anomaly detection outside the training set. For Isolation Forest, setting contamination to “auto” lets the model estimate the proportion of outliers automatically.

This unsupervised approach incorporates supervised evaluation, employing labeled validity categories to assess model performance using traditional performance metrics. In this case, the accuracy, precision, recall, and F1-score of each model were measured and confusion matrices were generated to assess their performance.
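A minimal sketch of this supervised evaluation step is shown below. The toy label lists are invented for illustration, and macro averaging across the three categories is an assumption, since the averaging scheme is not stated in the text.

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    precision_recall_fscore_support,
)

CLASSES = ["invalid", "suspect", "valid"]

# Toy ground-truth labels and model-assigned categories (illustrative only).
y_true = ["valid", "valid", "valid", "invalid", "invalid", "suspect"]
y_pred = ["valid", "valid", "invalid", "invalid", "valid", "suspect"]

accuracy = accuracy_score(y_true, y_pred)
# Macro averaging weights the three categories equally (assumption).
precision, recall, f1, _ = precision_recall_fscore_support(
    y_true, y_pred, labels=CLASSES, average="macro", zero_division=0
)
# Rows are true categories, columns are predicted categories, in CLASSES order.
cm = confusion_matrix(y_true, y_pred, labels=CLASSES)

print(f"accuracy={accuracy:.3f} precision={precision:.3f} "
      f"recall={recall:.3f} f1={f1:.3f}")
print(cm)
```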

Results

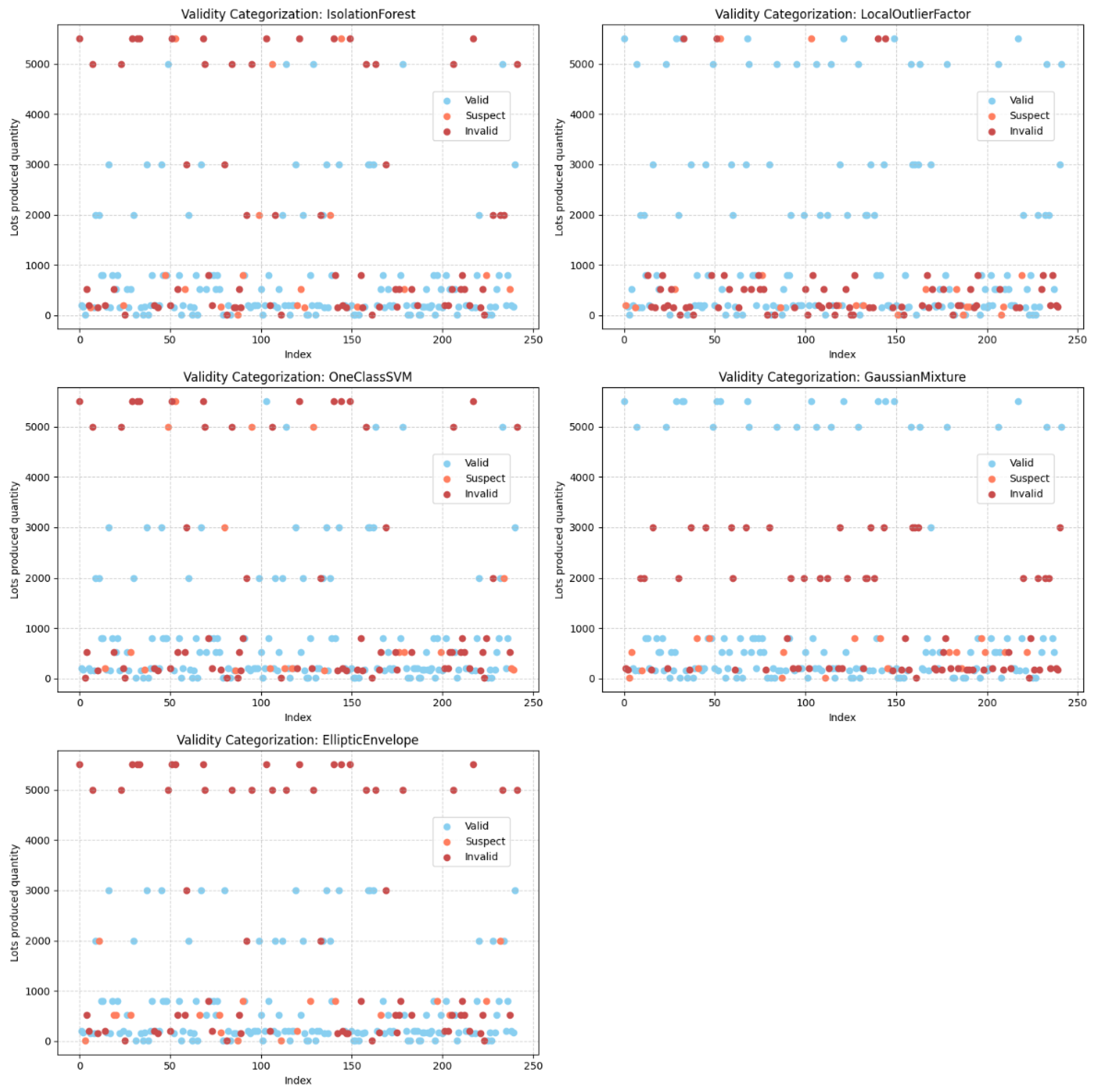

Figure 2 presents a visual representation of the output of the five anomaly detection models. In the plots, each point represents a batch, with the x-axis indicating the row index and the y-axis representing the quantity of products in each batch. The points are color-coded according to their validity category.

By analyzing Figure 2, a noticeable pattern emerges: suspect and invalid data points seem to be more frequently associated with lower product quantities. At the same time, there is significant variation in the detection results across the different models, indicating inconsistencies in how anomalies are identified. This diversity underscores the need for additional evaluation methods to determine which models are most suitable for the specific characteristics and requirements of this use case.

As previously mentioned, we evaluated the performance of each anomaly detection model using traditional classification metrics.

Table 2 summarizes the performance of each model. According to the results, the best-performing model was LOF with an accuracy of 52.48%, followed by OCSVM with 46.69%, IF with 45.04%, GMM with 44.63%, and RC using EE with 41.74%.

These findings suggest that, among the models tested, LOF was relatively more effective at identifying anomalous data. However, even its performance was modest, indicating limitations across all the models. This could be due to the limited size and nature of the dataset as anomaly detection models generally require large volumes of data to perform well. Additionally, more advanced data processing techniques may be needed beyond basic data normalization. Another important factor to consider is the lack of parameter tuning as the standard default values were used for all the models. These observations indicate that there is still significant room for improvement in both the data preparation and modeling stages in order to enhance the models’ performance.

To enhance model performance and better understand the impact of dimensionality reduction, PCA was applied during the data transformation stage. PCA is a dimensionality reduction technique that transforms the original features into a new set of uncorrelated linear combinations, known as principal components, which capture the maximum variance present in the data while preserving the most significant patterns and structures. This reduces the number of redundant or less-informative features, which can improve the performance of unsupervised algorithms and their ability to detect anomalies [47].

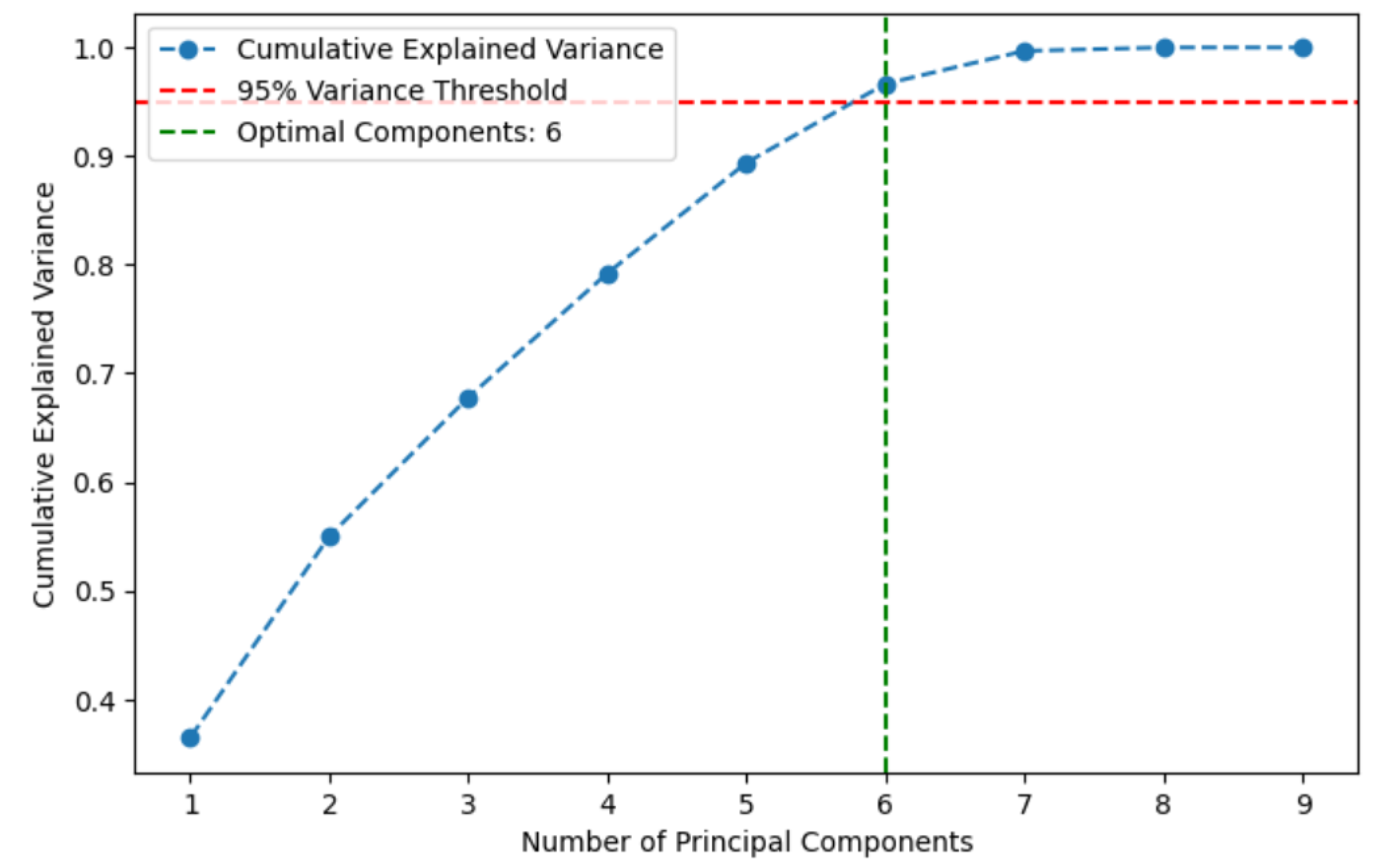

Before applying PCA, it is essential to determine the optimal number of components to reduce dimensionality while retaining most of the dataset’s variance.

Figure 3 illustrates the cumulative explained variance as a function of the number of principal components. The red dashed line indicates the 95% variance threshold, while the green line marks the minimum number of components required to capture at least 95% of the total variance. This ensures that dimensionality is effectively reduced without significant loss of information. In this case, six principal components were selected.
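The component selection described above can be sketched as follows. The synthetic feature matrix and the use of `StandardScaler` before PCA are assumptions for illustration. The 95% threshold is the one stated in the text.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Synthetic, partly redundant features standing in for the real dataset (assumption).
rng = np.random.default_rng(0)
latent = rng.normal(size=(242, 3))
mixed = latent @ rng.normal(size=(3, 6)) + 0.05 * rng.normal(size=(242, 6))
X = np.hstack([latent, mixed])

X_scaled = StandardScaler().fit_transform(X)

# Fit PCA with all components and accumulate the explained variance ratios.
cumulative = np.cumsum(PCA().fit(X_scaled).explained_variance_ratio_)

# Smallest number of components whose cumulative variance reaches 95%.
n_components = int(np.searchsorted(cumulative, 0.95)) + 1

X_reduced = PCA(n_components=n_components).fit_transform(X_scaled)
print(n_components, X_reduced.shape)
```

Scikit-learn also accepts a fraction directly, as in `PCA(n_components=0.95)`, which selects the number of components in the same way.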

Table 3 presents the updated performance results of the anomaly detection models after applying PCA. As shown, LOF continues to be the best model in terms of accuracy (50.00%). However, both OCSVM and IF demonstrate comparable accuracy (49.59%) and outperform LOF in other key metrics, including F1-score, precision, and recall. On the other hand, GMM and RC with EE continue to yield the weakest results among the five models, with GMM showing the lowest overall performance.

Compared to the results in Table 2, there was an overall improvement in the performance of the models with the use of PCA. The exception was LOF, whose performance worsened, which suggests that this model works better with the original higher-dimensional feature set, while the others benefit from the reduced one.

This approach still requires further refinement, such as parameter tuning, to maximize model performance. However, this can be time-consuming, especially when working with larger or continuously updated datasets. Another problem is the use of fixed validity thresholds, which may need to be adapted for different contexts to avoid misclassifications.

The results presented above have been obtained with a single dataset split into 80% for training and 20% for testing model performance. This raises a problem related to the credibility of the results obtained. To address this issue and better understand the models’ performance, we have also used stratified K-fold validation with 100 splits over the 242 rows of the used dataset.

Table 4 shows the mean and standard deviation of the obtained models’ performance metrics.

The results reveal that this approach, with anomaly detection based on individual metrics, has low performance and a high degree of variability. Accuracy is around 50% for all the models. Precision around 43% means that, when a model predicts the positive class, it is correct about 43% of the time, and the very low recall values mean that there are many false negatives. These results are consistent with an imbalanced dataset and may also relate to the fact that the dataset is rather small.

Despite the lack of accuracy, these models were capable of detecting deviations without the need for labeled data, which can be helpful in scenarios where labeled datasets are difficult to obtain. In the end, the LOF, OCSVM, and IF models were chosen to be used in a validation API (refer to Section 5.3) as they were the best-performing models.

5.2.2. Approach 2—Supervised Classification and Regression Models for Anomaly Detection Based on Individual Metrics

This approach employs both regression and classification supervised learning models to predict the degree and category of validity for the value received for a specific environmental metric related to the production of a textile product batch. In other words, it evaluates the reliability of a given metric within the context of transforming one or more raw materials into a final product. For example, the model may be used to predict the validity (both in degree and category) of the reported amount of electricity consumed in producing a 900 kg batch of fabric, where cotton and silk yarn are used as raw materials.

Data Preparation

The dataset used for this approach is the same as in Section 5.2.1: its structure and content are detailed in Section 5.1, and the additional preparation steps are explained in Section 5.2.1. As mentioned previously, the dataset contains 242 rows and 11 columns.

ML Algorithms

In this approach, the algorithms RF, DT, SVM, NB, and KNN are used to classify data based on their validity category. To predict the degree of data reliability (i.e., a continuous score), the models used were DT, RF, and eXtreme Gradient Boosting (XGBoost). These algorithms were selected due to their effectiveness on small datasets, as well as their popularity and scalability in practical applications. XGBoost is a widely used ML algorithm known for its high efficiency, speed, accuracy, and scalability in real-world scenarios [48]. It is an ensemble learning method based on gradient boosting, which builds a series of Decision Trees, where each new tree tries to correct the errors made by the previous ones.

All the models were developed with Python (version 3.9.22) and the Scikit-learn (version 1.6.1) and XGBoost (version 2.1.4) libraries, using the hyperparameters shown in Table 5.

The effectiveness of the classification algorithms was measured with standard performance metrics, namely accuracy, F1-score, recall, and precision. For the regression models, we selected the $R^2$ score as the sole performance metric as it provides a clear indication of how well the predicted values approximate the actual degree of validity.
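For reference, the coefficient of determination ($R^2$) used for the regression models is conventionally defined as follows, where $y_i$ is the actual degree of validity of record $i$, $\hat{y}_i$ is the model's prediction, and $\bar{y}$ is the mean of the actual values (the symbols are introduced here for illustration):

$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}$$

A value of 1 corresponds to perfect predictions, a value of 0 corresponds to a model that does no better than always predicting $\bar{y}$, and negative values indicate predictions worse than that mean-based baseline.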

Results

Table 6 presents the results of the classification models using the mentioned dataset. As can be seen in the table, the best-performing model was RF with 83.67% accuracy, followed by DT and SVM, both with 77.55%, then KNN with 75.51%, and finally NB with 34.69%. These results show that the models were able to correctly classify most of the data; however, they also show that there is still room for improvement.

One important thing to note for NB is that its accuracy is lower than its precision, which means that the model has overall poor predictive performance, but, when it does predict the most dominant class (“valid”), it is often correct. This might be because of class imbalance since there are more valid data values than the other types, which can be solved by training the model with more diverse data.

Table 7 presents the results of the regression models when assessing the degree of validity of the textile data. As can be seen in Table 7, RF is the best-performing model, with an $R^2$ score of 77.18%, followed by XGBoost with 76.89% and DT with 62.39%. These results are good; however, much like the classification models, there is still room for improvement, such as using more data in the training of these models.

When comparing the performance of the classification and regression models, it is possible to see that RF is the most suitable model for our data for both tasks as it was the best model overall. DT, on the other hand, is more suitable for categorizing the validity of the data rather than predicting their degree of validity. This may be due to the structure of the dataset, which may contain discrete or categorical patterns that are easier to separate into classes than to model as a continuous output.

The results above, both for classification models and for regression models, have been obtained with a single dataset split of 80% for training and 20% for testing model performance. To confirm the reliability of these results, we have also used stratified K-fold validation with 100 splits to further validate the classification models in Approach 2, and K-fold validation with 10 splits for the regression models.
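A minimal sketch of stratified K-fold evaluation with Scikit-learn is given below. The synthetic three-class data, the Random Forest settings, and the macro F1 scorer are assumptions for illustration, and 10 folds are used here only to keep the example quick, whereas the study reports 100 splits for the classifiers.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_validate

# Synthetic imbalanced three-class data standing in for the labeled records.
X, y = make_classification(
    n_samples=242,
    n_features=8,
    n_informative=5,
    n_classes=3,
    weights=[0.29, 0.08, 0.63],
    random_state=0,
)

# Stratification keeps the class proportions roughly equal in every fold.
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)
results = cross_validate(
    RandomForestClassifier(random_state=0),
    X,
    y,
    cv=cv,
    scoring=["accuracy", "f1_macro"],
)

# Mean and standard deviation across folds, as reported in the tables.
summary = {
    metric: (results[f"test_{metric}"].mean(), results[f"test_{metric}"].std())
    for metric in ("accuracy", "f1_macro")
}
for metric, (mean, std) in summary.items():
    print(f"{metric}: mean={mean:.3f} std={std:.3f}")
```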

Table 8 presents the results of supervised classification models evaluated using stratified K-fold cross-validation.

Overall, the classifiers demonstrated strong predictive ability, with mean accuracies above 92% and F1-scores exceeding 89%. Precision and recall were also consistently high, indicating that the models were capable of correctly identifying anomalies while maintaining a low rate of false positives. Despite these strong averages, relatively large standard deviations were observed across all the metrics (see Table 8). This variability suggests that performance is somewhat sensitive to data splits, which may reflect the limited dataset size or inherent heterogeneity in the samples. Nevertheless, the consistently high mean values across classifiers provide evidence that supervised classification constitutes an effective approach for anomaly detection based on individual metrics.

In contrast, the regression-based approaches performed poorly. As shown in Table 9, the mean $R^2$ score values were near zero or negative for all the models, with particularly poor performance for Decision Trees (mean $R^2$ of −97.4%). Random Forest achieved a slightly positive mean $R^2$ (3.5%) but with high variability, indicating instability and inconsistent generalization. XGBoost also underperformed, with a negative mean $R^2$ (−16.4%) and large variance. The corresponding standard deviations are reported in Table 9. These results suggest that regression models based on individual metrics are not suitable for anomaly detection of textile industry indicators as their predictions frequently fail to outperform a naive mean-based baseline. The discrepancy between the strong performance of classification models and the weak results from regression highlights the importance of framing anomaly detection as a classification problem rather than as a regression task when using individual metrics.

Based on the results, the models selected for implementation in the proposed ML API were the classification models RF, DT, and SVM.

5.2.3. Approach 3—Supervised Models for Anomaly Detection of Records with Collective Related Attributes

The goal of this approach is to develop ML models capable of assessing the reliability of sustainability indicator values for a given batch while also verifying the proportionality of these values in relation to other indicators. To achieve this, the dataset described in Section 5.1 has been reused, but it has now been reorganized to enable meaningful comparisons between sustainability indicators (metrics).

The restructured dataset is designed to help the models learn relationships between the various metrics within each different production activity. For instance, when evaluating total water consumption during a production activity for producing a given product batch, the model also considers whether this value aligns with the volume of liquid effluents generated during the same process. By incorporating such relationships, the models can more effectively assess data reliability, leading to more robust and accurate validation of textile sustainability data.

Data Preparation

The data was structured so that each row aggregates all sustainability indicators related to a production activity for producing a single batch. Each row now contains detailed information about a production activity for a given batch, including the batch size, production activity, types of raw materials used along with their respective percentages, and the values of 28 environmental impact metrics (sustainability indicators). Additionally, there are columns indicating the data validity category (invalid, suspect, or valid) and the degree of validity of the information. Depending on the type of production activity, some metrics may have zero value. This happens when the metric is not measured for that production activity. With the aggregation of several records from the initial dataset into one record for the same production activity and batch reference, the total number of records is now down to 13 rows with real (valid) data, and the number of columns has risen to 37. As this meant a very small number of rows, we have created new valid rows by applying different factors to the existing valid rows, and have created some suspect and invalid rows, with a validity degree calculated by the formulas presented in [36].

After all these preparation steps, the final dataset had forty-one rows (twenty-six valid (63.4%), six suspect (14.6%), and nine invalid (22.0%)) and thirty-seven columns: a column with the lot produced quantity, another with the production activity name, two columns with the types of materials used, another two representing their respective percentages, and twenty-eight columns corresponding to the twenty-eight environmental impact metrics. Columns with the organization, the record validity degree, and the corresponding validity category complete the thirty-seven columns. The organization column is dropped before running the algorithms, reducing the number of columns to thirty-six.

After the preparation of the dataset, the correlation matrix was generated to show how attribute values vary in relation to each other. The correlation matrix for the created dataset is shown in Figure 4, and it represents the degree of relationship between the environmental impact metrics of the dataset. Possible values for each cell of the correlation matrix range from −1 to +1. The value “−1” means that there is a perfect negative correlation; that is, the value of one attribute increases while the other decreases. Conversely, the value “+1” means that there is a perfect positive correlation; that is, the attribute values grow together. The value 0 means that there is no linear relationship between the attributes. The correlation matrix also uses color tones that vary between blue, representing strong negative correlation, and red, representing strong positive correlation.

As can be seen in the correlation matrix, some metrics exhibit strong relationships with others (with correlation values close to 1). For example, the metrics “Non-Hazardous Waste Quantity”, “Solid Waste Quantity”, “Textile Waste Quantity”, “Recovered Waste Quantity”, and “Recovered Textile Quantity” all show a strong positive correlation with “Total Electric Energy Consumption”. Also, “Purchased Steam Consumption” demonstrates a strong positive correlation with both “Chemical Consumption” and “Non-Hazardous Chemical Quantity” produced.
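To make the interpretation of such a matrix concrete, the following sketch computes a correlation matrix for three synthetic metrics. The metric names, the generated values, and the use of the Pearson coefficient (via `numpy.corrcoef`) are assumptions for illustration, since the text does not state which coefficient was used.

```python
import numpy as np

rng = np.random.default_rng(0)
n_batches = 41

# Hypothetical metrics: one grows with energy use, one shrinks with it.
energy = rng.uniform(100.0, 1000.0, n_batches)
solid_waste = 0.2 * energy + rng.normal(0.0, 5.0, n_batches)
inverse_metric = -0.1 * energy + rng.normal(0.0, 5.0, n_batches)

names = ["energy", "solid_waste", "inverse_metric"]
data = np.column_stack([energy, solid_waste, inverse_metric])

# rowvar=False treats each column as one variable (one metric).
corr = np.corrcoef(data, rowvar=False)

for name, row in zip(names, corr):
    print(name, np.round(row, 2))
```

Values near +1 or −1 in the off-diagonal cells correspond to the strongly red or strongly blue cells of a heatmap such as the one in Figure 4.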

ML Algorithms

Having each record labeled as invalid, suspect, or valid, the dataset is prepared for use with supervised learning algorithms. This approach explores the application of commonly used algorithms for anomaly detection, namely RF, DT, KNN, SVM, and NB [3]. The study is divided into classification and regression models, each analyzed separately to evaluate their effectiveness in detecting anomalies in the data.

In this approach, the algorithms and hyperparameters used to train the models are the same as those employed in Approach 2.

Results

As before, classification models have been evaluated according to standard performance metrics for classification models, namely accuracy, precision, recall, and F1-score.

Table 10 presents the results with regard to accuracy, F1-score, and precision of each model based on the created dataset.

Regression is a supervised learning technique, used to make predictions, that models a relationship between independent and dependent variables [49]. As previously, the $R^2$ score has been used for assessing the performance of regression models. The results of the regression ML models trained and evaluated via this approach are presented in Table 11. The table presents the models sorted in descending order based on their $R^2$ scores. As shown, the DT model achieved the highest performance among all the evaluated models.

As before, given the very small dataset, K-fold validation has also been used to better understand the performance of the models.

Table 12 shows the performance metrics of the ML classification models studied in Approach 3 after using stratified K-fold validation with 10 splits. Moreover, Table 13 shows the $R^2$ performance indicators of the regression models of this approach, obtained using K-fold validation with 10 splits.

In summary, this approach evaluates two groups of models, regression and classification, on a dataset with multiple related features in each data record. The results indicate that the classification models outperform the regression models. Random Forest and SVM are the most reliable classifiers (F1 ≈ 84–85%) and show moderate variability (Std. Dev. ∼12). Decision Tree also performs decently (83% accuracy) but is less stable (Std. Dev. 15.8). KNN is weaker, with lower accuracy (80.5%) and weaker precision. Naive Bayes performs poorly (accuracy 63.5%) and has large variability (Std. Dev. 19.8).

Due to the relatively small dataset, regression models struggle to predict continuous values with high accuracy. XGBoost is the strongest regressor ($R^2$ = 58.7%) but is still unstable. All the regression models show very high standard deviations of $R^2$ (above 40). This means that model performance changes considerably depending on the fold, as the dataset is small and sensitive to sampling. Compared to the 80/20 split, the $R^2$ of DT dropped dramatically (from 85% to ∼53%). This suggests that the 80/20 results were optimistic and that the model had overfitted. More samples and better preprocessing are likely needed.

In contrast, classification models, working with discrete categories (invalid, suspect, and valid), tend to generalize better with limited data, making them more robust and effective in this context.

5.2.4. Approach 4: Supervised Models for Anomaly Detection of Individual Metrics Based on Collective Related Attributes

This approach explores two distinct supervised learning strategies aimed at predicting the values of environmental metrics. The primary goal is to estimate the value of a specific metric, associated with a given production activity and a produced batch, by leveraging information from the remaining metrics and batch-specific attributes, such as raw material composition, batch size, water consumption, and liquid effluent volume. For example, validating the total water consumption for a batch may involve using features like the batch size, types of materials used, production activity, and other related metrics, such as the volume of liquid effluents. This method captures the interdependencies between various indicators and production characteristics to accurately validate the target metric.

The Scikit-learn and XGBoost Python libraries have been used for the models in this approach, mostly with the libraries’ default hyperparameters, as can be seen in Table 14.

Approach 4.1: Dedicated Model per Metric

The first strategy involves developing twenty-eight distinct Machine Learning (ML) regression models, one for each of the twenty-eight metrics being validated.

The dataset utilized was identical to the one created in Section 5.2.3, inheriting the same data preparation pipeline. The key distinction lies in the model training setup: for each of the 28 metrics that can be validated, a dedicated model has been trained. In each case, the target metric was removed from the input feature set and designated as the output variable, while the remaining 27 metrics plus the batch characteristics served as input features.

This design allows each model to specialize in predicting its assigned metric, potentially leading to higher accuracy as each model is tuned to the specific nuances of its target variable within the context of the other batch data.
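The per-metric training loop can be sketched as follows. The synthetic data, the use of only four metrics instead of twenty-eight, the metric names, and the single Decision Tree regressor are assumptions made to keep the example small.

```python
import numpy as np
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)
n_rows, n_metrics = 120, 4  # synthetic; the study uses 28 metrics

# Metrics roughly proportional to batch size, so they inform one another.
batch_size = rng.uniform(100.0, 1000.0, size=(n_rows, 1))
factors = rng.uniform(0.5, 2.0, size=(1, n_metrics))
metrics = batch_size * factors + rng.normal(0.0, 10.0, size=(n_rows, n_metrics))
metric_names = [f"metric_{j}" for j in range(n_metrics)]  # hypothetical names

r2_per_metric = {}
for j, name in enumerate(metric_names):
    y = metrics[:, j]  # the metric being validated is the output
    # Inputs: batch characteristics plus all remaining metrics.
    X = np.hstack([batch_size, np.delete(metrics, j, axis=1)])
    X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=0)
    model = DecisionTreeRegressor(random_state=0).fit(X_tr, y_tr)
    r2_per_metric[name] = r2_score(y_te, model.predict(X_te))

for name, r2 in r2_per_metric.items():
    print(f"{name}: R2={r2:.3f}")
```

A received value could then be compared with the prediction of the corresponding model, with large deviations flagged for review. How large a deviation should count as suspicious is a design choice not covered by this sketch.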

Following data preparation, appropriate regression models were selected. Given the relatively small dataset size, neural network architectures were not considered.

The same set of regression algorithms evaluated in Section 5.2.3 was employed here. In total, one-hundred-twelve models were trained (four different algorithms for each of the twenty-eight metrics). Model performance was evaluated using the Coefficient of Determination ($R^2$ score). After evaluating all models, the top five performers based on $R^2$ score were identified, as shown in Table 15.

The highest performance was observed with a Decision Tree model for predicting/validating ‘Total electricity consumption’ ($R^2$ = 99%), indicating excellent predictive capability for this metric. Random Forest and XGBoost models also showed strong performance for metrics such as ‘Amount of solid waste’, ‘Consumption of chemical products’, and ‘Quantity of waste recovered’. These results suggest that tree-based methods are particularly effective for this specialized modeling task.

Approach 4.2: Single Unified Model

This second strategy aimed to develop a single ML model capable of predicting any of the 28 metrics rather than training metric-specific models.

The same dataset and preprocessing steps from Section 5.2.3 were used as before. The key transformation in this approach involved restructuring the dataset: each original record (representing a single production activity and batch) was expanded into twenty-eight new records, one for each metric considered as a potential prediction target. A new categorical feature, ‘target_name’, was introduced to each expanded record to indicate which metric serves as the target variable for that instance. The value of the specified target metric became the output variable of that row, while the remaining twenty-seven metrics, along with batch-specific attributes (e.g., production activity, batch size, material types, and their percentages) and the ‘target_name’ itself formed the input features.

This transformation resulted in a dataset with 392 rows (based on 14 original batches, 14 × 28 = 392) and 35 columns. Among these, twenty-eight columns represented metric values (with one serving as the output and the remaining twenty-seven as inputs depending on the ‘target_name’). The rest included features for production activity, batch size, material types and proportions, and the ‘target_name’ identifier. In total, each row contained thirty-four input features and one target variable, the value of the designated target metric.
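The restructuring described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the field names (`activity`, `batch_size`, `electricity_kwh`, and so on), the `target_value` column, and the choice to mask the target metric with `None` in the inputs are all assumptions made for illustration, and three toy metrics stand in for the twenty-eight real ones.

```python
from typing import Any


def expand_record(record: dict[str, Any], metric_names: list[str]) -> list[dict[str, Any]]:
    """Expand one wide batch record into one row per target metric."""
    rows = []
    for target in metric_names:
        row = dict(record)                 # copy batch attributes and all metric values
        row["target_name"] = target        # categorical feature naming the metric to predict
        row["target_value"] = row[target]  # output variable for this row
        row[target] = None                 # assumption: hide the target metric from the inputs
        rows.append(row)
    return rows


# Toy data: 2 batches and 3 metrics stand in for the 14 batches and 28 metrics.
metric_names = ["electricity_kwh", "water_l", "solid_waste_kg"]
batches = [
    {"activity": "dyeing", "batch_size": 500,
     "electricity_kwh": 120.0, "water_l": 900.0, "solid_waste_kg": 4.5},
    {"activity": "weaving", "batch_size": 300,
     "electricity_kwh": 80.0, "water_l": 40.0, "solid_waste_kg": 2.0},
]

long_rows = [row for batch in batches for row in expand_record(batch, metric_names)]
print(len(long_rows))  # number of batches x number of metrics
```

With the real data, the same expansion applied to 14 batches and 28 metrics yields the 14 × 28 = 392 rows reported above.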

Model selection and evaluation followed the same methodology as in Section 5.2.4, Approach 4.1.

Table 16 presents the performance of the best models identified for this unified approach.

As shown in Table 16, DT and tree-based ensemble models (Random Forest and XGBoost) demonstrated very strong performance, achieving high overall R² scores. In contrast, the K-Nearest Neighbor (KNN) algorithm performed poorly (R² = 46%). This discrepancy is likely due to the difficulty that distance-based methods like KNN have in generalizing across a heterogeneous target variable, which encompasses 28 different metrics with potentially diverse scales and distributions, all handled within a single model structure. The added ‘target_name’ feature helps tree-based models partition the data appropriately, a task that is potentially harder for KNN in this context. To confirm these results, K-fold cross-validation was used once again. The results are shown in Table 17.

These results somewhat confirm the earlier conclusions. Decision Tree and XGBoost are clearly the best models for predicting any of the 28 considered textile metrics from the other related metrics of the same lot. They achieve a very high R² (∼92–93%) and the lowest MSE and MAE, giving the most accurate predictions. DT and XGBoost also have the lowest standard deviation, making them fairly consistent across folds.

RF also performs well (R² ∼88%), but not as strongly as DT and XGBoost: its MAE is much higher (∼81 vs. 58–62), which means that its predictions are less precise. Its standard deviation is also higher (∼10).

KNN shows moderate performance (R² ∼76%) but high variance across folds, indicating instability.
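The per-fold statistics discussed above (R², MSE, MAE, and their spread across folds) can be reproduced in outline with the following sketch. It is only an illustration under stated assumptions: the data are synthetic, the folds are built by deterministic interleaving rather than shuffling, and a small one-dimensional nearest-neighbour regressor (`knn_predict`, a hypothetical helper) stands in for the DT, RF, XGBoost, and KNN models actually evaluated.

```python
import statistics


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = statistics.fmean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def mse(y_true, y_pred):
    """Mean squared error."""
    return statistics.fmean((t - p) ** 2 for t, p in zip(y_true, y_pred))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return statistics.fmean(abs(t - p) for t, p in zip(y_true, y_pred))


def kfold_indices(n, k):
    """Yield (train, test) index lists; folds are interleaved, not shuffled."""
    folds = [list(range(i, n, k)) for i in range(k)]
    for i, test in enumerate(folds):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, test


def knn_predict(train_x, train_y, x, k=3):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(range(len(train_x)), key=lambda j: abs(train_x[j] - x))[:k]
    return statistics.fmean(train_y[j] for j in nearest)


# Synthetic data (assumption): a noisy linear relation between one feature and the target.
xs = [float(i) for i in range(30)]
ys = [2.0 * x + ((i * 7) % 5 - 2) * 0.1 for i, x in enumerate(xs)]

fold_scores = []
for train, test in kfold_indices(len(xs), k=5):
    train_x = [xs[j] for j in train]
    train_y = [ys[j] for j in train]
    y_true = [ys[j] for j in test]
    y_pred = [knn_predict(train_x, train_y, xs[j]) for j in test]
    fold_scores.append((r2_score(y_true, y_pred), mse(y_true, y_pred), mae(y_true, y_pred)))

r2_values = [score[0] for score in fold_scores]
print(f"R2 mean={statistics.fmean(r2_values):.3f} std={statistics.stdev(r2_values):.3f}")
```

Reporting the standard deviation alongside the mean is what allows the consistency of a model across folds to be compared, as done for DT, XGBoost, RF, and KNN above.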

Comparison Between the Two Approaches

This subsection compares the two strategies presented above: Approach 4.1 (28 models, denoted 28/28) and Approach 4.2 (single unified model, denoted 1/28). The comparison considers predictive performance, maintenance effort, and scalability, as summarized in Table 18.

Approach 4.1 (28/28) offers the potential for superior accuracy on a per-metric basis due to model specialization. However, this comes at the cost of significantly higher complexity in terms of development, deployment, and ongoing maintenance (managing 28 individual models).

Approach 4.2 (1/28) presents a much simpler and more scalable architecture. While its overall performance is excellent, the accuracy for any single specific metric might be lower than that achievable with a dedicated model from Approach 4.1. The single model must generalize across all metrics, which might compromise performance for metrics with unique patterns or weaker correlations with the input features.

Considering the operational context, where scalability and ease of maintenance are often crucial, Approach 4.2 was deemed more appropriate. The high overall R² achieved suggests strong predictive power across the board, and the operational benefits of managing a single model outweigh the potential for slightly lower accuracy on some individual metrics compared to the more complex 28-model system. Therefore, the unified model strategy (Section 5.2.4, Approach 4.2) was selected for further development and deployment in the validation API.

5.3. Integration API

For the DPP to serve as a trustworthy source of detailed information about a product’s origin, composition, and life-cycle activities, promoting transparency and supporting more sustainable and responsible decision-making, the data used to build the DPP must be validated prior to its integration into the DPP platform. This validation is particularly critical in the complex landscape of the T&C industry, where numerous organizations generate and manage data through different software systems before providing some of that data as indicators to be collected and incorporated into the DPP. For this purpose, an API has been developed to facilitate the validation of such indicators or metrics. The API provides endpoints that leverage a range of Machine Learning algorithms, selected based on the research and evaluation presented in Sections 5.2.1, 5.2.2, 5.2.3, and 5.2.4, to accomplish the data validation process.

Within the context of the DPP, where both data integrity and interoperability are essential, this API-driven solution becomes indispensable. Data must be collected from various points across the T&C value chain, including Enterprise Resource Planning (ERP) systems, Internet of Things (IoT) devices, and other data collection platforms used by participating companies. The developed API enables seamless submission of product and sustainability data to the validation service, ensuring that only verified and reliable information is integrated into the DPP.

The API data validation services evaluate the submitted data for accuracy, consistency, and compliance with predefined standards and return a validation status, so that only high-quality, trustworthy data is integrated into the Digital Product Passport.

Along with the data validation services, the API includes services for managing the different types of data entities, such as production activities, raw materials, and environmental indicators, which were addressed in [36,37]. This standardized interface enables consistent data validation practices across the T&C value chain, thereby improving the reliability and usefulness of the DPP.

To implement and manage the proposed solutions, a software framework was developed using Spring Boot in Java. The system was designed as a RESTful Web API, ensuring seamless integration and scalability. Additionally, authentication and authorization mechanisms are managed via Keycloak, ensuring secure access control. To enhance operational monitoring and diagnostics, Grafana Loki and Tempo are employed for centralized log management and distributed tracing visualization. Prometheus is used for real-time metric collection and analysis, while Grafana serves as a comprehensive visualization and dashboard tool.

The API exposes the ML-based validation services through the endpoints presented in Table 19. As the table shows, there are three endpoint services for anomaly detection, using the IF, OCSVM, and LOF models selected in Section 5.2.1 for the validation API.

From Approach 2 (Section 5.2.2), the models selected for implementation in the API were RF, DT, and SVM for classification tasks.

From Approach 3 (Section 5.2.3), the services available on the API apply classification models based on DT, RF, and SVM, and regression models based on DT, RF, and XGBoost.

From Approach 4, presented in Section 5.2.4, the unified model strategy (Approach 4.2) was the one selected for the API. The services available use DT, RF, and XGBoost.
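How a regression-based service might turn a model prediction into a validation status can be sketched as follows. This is a hypothetical illustration only: the text does not specify the decision rule, so the relative-error comparison, the `tolerance` value, the `ValidationResult` structure, and the status labels are assumptions. The sketch is written in Python for brevity and does not reflect the Spring Boot implementation of the actual API.

```python
from dataclasses import dataclass


@dataclass
class ValidationResult:
    metric: str
    submitted: float
    predicted: float
    relative_error: float
    status: str  # "valid" or "suspect" (hypothetical labels)


def validate_metric(metric: str, submitted: float, predicted: float,
                    tolerance: float = 0.15) -> ValidationResult:
    """Flag a submitted value that deviates too far from the model prediction.

    The relative-error rule and the default tolerance are assumptions.
    """
    denominator = max(abs(predicted), 1e-9)  # avoid division by zero
    relative_error = abs(submitted - predicted) / denominator
    status = "valid" if relative_error <= tolerance else "suspect"
    return ValidationResult(metric, submitted, predicted, relative_error, status)


# Example: the predicted value would come from the unified regression model.
ok = validate_metric("Total electricity consumption", submitted=118.0, predicted=120.0)
flagged = validate_metric("Total electricity consumption", submitted=300.0, predicted=120.0)
print(ok.status, flagged.status)
```

In practice, any such tolerance would need to be calibrated per metric, since the 28 metrics differ in scale and in how accurately they can be predicted.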

API Integration Case Validation

The validation API for textile environmental indicators is currently being used by INFOS (https://infos.pt/en/projetos-de-inovacao/, accessed on 25 August 2025) in the implementation of a Digital Product Passport for the textile sector. The current phase includes major contributions, such as the selection of more real data records for building larger datasets that cover a wider spectrum of production activities and types of textile industries, which will allow new models to be trained more effectively with the same algorithms. Another major contribution is the accumulation of knowledge about which validation approach works best for each environmental indicator registered in the DPP.

6. Discussion

This section analyzes the approaches presented in the previous section. Table 20 presents a comparative summary of the four proposed Machine Learning approaches for assessing the quality of textile data. The table highlights each approach’s model type, dataset structure and characteristics, key advantages and limitations, and the most appropriate scenarios for its application.

Approach 1 (anomaly detection based on individual metrics) is an unsupervised approach that may achieve good results when labeled data is scarce. It can detect anomalies using patterns in unlabeled data and is easy to scale and implement. However, it suffers from lower accuracy in well-labeled contexts, and performance heavily depends on proper threshold selection and data distribution. This approach is best suited for early-stage deployments or cases with limited annotation.

Although the results are not the best, this approach shows that unsupervised anomaly detection algorithms may be a viable approach to detect anomalies in textile data.

Nevertheless, several limitations must be acknowledged. The small dataset constrains generalizability, the thresholds were set without extensive model tuning, and class imbalance, particularly regarding the limited “suspect” cases, may have influenced its performance. These factors, along with the absence of variability measures in the reported results, should be considered in future work, specifically by expanding the datasets with more records from diverse companies in the textile value chain, introducing light or domain-informed parameter tuning, and applying stratified K-fold cross-validation to improve the reliability and robustness of the model evaluation.

Approach 2 (supervised classification and regression models for anomaly detection based on individual metrics) applies classification and regression models independently to each metric. It provides high accuracy when sufficient labeled data is available and is easier to interpret on a metric-by-metric basis. However, it fails to account for inter-metric relationships and may not scale well with many diverse metrics.

In Approach 3 (supervised models for anomaly detection of records with collective related attributes), instead of assessing individual metrics, the models assess the consistency of an entire record by considering all the metrics together. This allows for detecting systemic errors and exploiting correlations between metrics. While powerful for spotting broad inconsistencies, it is less interpretable and may struggle with isolated metric-level anomalies, especially in small datasets with many features.

Approach 4 (supervised models for anomaly detection of individual metrics based on collective related attributes) predicts each individual metric using the rest of the metrics and batch characteristics as context. It combines the benefits of both localized and contextual validation. Approach 4.1 allows for fine-tuning per metric while leveraging interdependencies and, despite its higher implementation and computational complexity, offers strong generalization and accuracy potential across the metrics. Because Approach 4.1 is computationally demanding, the models’ scalability, training latency, and inference throughput need to be critically assessed in future work, which should also apply K-fold validation to its algorithms, as this has not yet been done. The possible advantage of a specialized regression model for each metric that makes use of the context provided by the other metrics (Approach 4.1) must be further analyzed in comparison with a single regression model for all the metrics based on the global metrics context (Approach 4.2).

As mentioned before, the validation API for textile environmental indicators is currently being used by INFOS in the development of a DPP for textile products, enabling the gathering of real data for building a larger dataset. With the collection of new data and the construction of a new dataset from several companies in the value chain, including from companies in different countries, future work will be able to retrain all the models of the proposed approaches and solve most of the problems related to dataset scale and geographical bias, enhancing the generalizability of the findings.

Future research should also explore data augmentation methods (e.g., SMOTE variants and GANs) to mitigate class imbalance, as well as ways of scaling the methods to larger datasets.

7. Conclusions

The implementation of the DPP is expected to contribute many benefits in terms of sustainability and the circular economy by providing transparency and traceability in the value chain and increasing social and environmental responsibility in the T&C industrial sector.

For companies to achieve returns on their investments and be able to compete fairly with each other, this implementation must be required at the global level. However, beyond the initial investment required, the rollout of the DPP presents several challenges. At the top are the difficulties involved in collecting and integrating data across companies with widely varying levels of digital maturity, as well as the complexity of obtaining certain sustainability indicators.