1. Introduction

Today, the discipline of pathology is evolving towards digital pathology (DP) owing to the emergence of whole slide image (WSI) technology and the increased computing power provided by graphics processing units (GPUs). According to [1], applications based on artificial intelligence (AI) algorithms and methods are transforming medical fields such as pathology. These algorithms achieve better diagnostic accuracy and reproducibility by consistently handling a large amount of information in every case. In addition, the incidence of diseases such as cancer is increasing in the population, requiring more pathologists to diagnose them. In this sense, AI can help to meet the growing demand for experienced pathologists.

In the field of AI, deep learning (DL) neural networks (NNs) have become the standard approach for many image-based tasks (e.g., classification, object detection, and segmentation). They enable the automation of processes such as tissue morphology analysis, cell and mitosis counting, biomarker quantification, and histopathological feature extraction, thereby helping pathologists reduce their workload, shorten report submission times, and standardise clinical practices. These benefits are evidenced by the introduction of clinical decision support systems (CDSS) in digital pathology (DP).

The development of an intelligent CDSS depends on the successful application of AI techniques and algorithms to WSIs. This type of technology is expected to improve the performance of medical specialists in terms of precision, objectivity, and reproducibility [2]. However, using deep neural networks to solve computer vision problems involves learning thousands of parameters to identify complex patterns and to generalise well when new images are presented to the models. To obtain a good response from the models, it is necessary to have, among other things, a significant number of annotated images to train them and a proper data augmentation strategy [3].

Dataset annotation is a major constraint on training neural network architectures [4,5] and is considered one of the biggest problems in DP. This is due to the limited number of images annotated at the pixel level for segmentation tasks and/or at the patch level for classification tasks. In many cases, only global annotations, including the diagnosis, are available.

Active learning (AL) techniques focus on reducing this cost by building a small dataset with the most informative samples. This technique has been applied in many computer vision tasks (e.g., classification, semantic segmentation, pose estimation, and object detection) with successful results, in both 2D and 3D environments [6,7].

Its application in classification (CL) and object detection (OD) tasks has received more attention in recent years. Several applications (e.g., digital pathology, autonomous driving) can benefit from this type of technology, where the cost of specialists is high and the unlabelled dataset is large. Many researchers focus on finding new sampling methods, selecting a constant number of images (constant budget) for each iteration. In this case, the workload of the annotators (specialists) remains similar for each iteration. In contrast, this work explores the implications of not using a constant budget per iteration and investigates the impact of adopting a variable annotation strategy across iterations, starting with a larger number of images in the initial rounds and gradually reducing this number in subsequent iterations.

This work proposes active learning with a decreasing-budget-based strategy and introduces three experiments that provide insights into its impact on the annotation process. The strategy enhances annotation efficiency by prioritising efforts in the initial iterations, optimising resource allocation, and ultimately boosting the performance of the trained model.

The rest of the work is organised as follows. Section 2 provides a short review of relevant previous work in the areas of classification, object detection methods, active learning sampling strategies, and their application in medical imaging. Section 3 presents the datasets used in the experiments, the decreasing-budget-based strategy, and the experiments focused on classification and object detection tasks. Section 4 and Section 5 present the results of the classification and object detection experiments, respectively, along with a discussion of these findings. Finally, Section 6 presents the conclusions of this work.

2. Related Work

In deep learning, classification consists of assigning a label or category to an input image based on its visual features. It involves training a deep neural network to learn the mapping between the input images and their corresponding labels. Two popular DL architectures for classification applications are MobileNetV3 [8] and InceptionV3 [9]. The first [8] focuses on efficiency and performance on mobile and resource-constrained devices, utilising depth-wise separable convolutions and attention mechanisms to balance accuracy with computational cost. The second [9] employs a multipath approach through inception modules, allowing it to capture features at various scales, and incorporates techniques such as factorisation and auxiliary classifiers to enhance learning and convergence.

Object detection, by contrast, is the process of identifying and localising objects within an input image. In the context of deep learning, it involves training a deep neural network to predict the bounding boxes and class labels of objects within an image. Today, several technologies such as You Only Look Once (YOLO) [10] and Detection Transformers (DETR) [11] are racing to reach and maintain the state of the art in the object detection task. Other well-established methods, such as Faster R-CNN [12], are used for comparison purposes between object detection architectures. DETR is an object detection architecture that relies on direct predictions, avoiding components that encode prior knowledge of the task [11]. Its performance was measured using the MS-COCO dataset [13], outperforming other architectures. However, according to the authors, the model’s performance on small objects still requires further study [11]. The YOLO family, including YOLOv8 and its successors, is considered state of the art and supports several tasks such as classification, object detection, segmentation, pose estimation, and tracking [10]. The experiments carried out in this work used the MobileNetV3 [8], InceptionV3 [9], and YOLOv8 [10] architectures as a basis, although any other model for classification and/or object detection could also be used.

2.1. Active Learning Sampling Strategies

Active learning strategies prioritise the selection of the most informative images for annotation, allowing neural network models to achieve performance similar to that of those trained on the entire dataset. By focusing on high-value samples, active learning improves efficiency, reducing annotation workload without compromising model accuracy.

The pool-based active learning scenario is well established in the active learning literature. The iterative process starts with a small training dataset, which is used to train a first model in the initial iteration. The model is then used to score all images that belong to the pool (P) according to an acquisition function. The B top-scoring images are moved from P to the training dataset, allowing the execution of another training and selection iteration. The validation dataset and the test dataset are kept constant to decide whether the model is ready to deploy.

Another feature of the pool-based scenario is that the pool P is fixed at the beginning of the iterations and only the images sampled are removed until the pool is empty. The stream-based scenario is another category that groups specific cases where the pool receives new images from time to time. This scenario includes the possibility of rejected instances being removed from the pool.
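The pool-based loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation used in this work: `pool_based_loop`, `train`, and `score` are hypothetical names, and the "images" are plain integers standing in for real data.

```python
from typing import Callable, List, Sequence


def pool_based_loop(
    pool: Sequence[int],
    initial: Sequence[int],
    budget: int,
    iterations: int,
    train: Callable[[Sequence[int]], object],
    score: Callable[[object, int], float],
) -> List[int]:
    """Repeatedly move the `budget` top-scoring items from the pool to the training set."""
    train_set = list(initial)
    seen = set(train_set)
    remaining = [x for x in pool if x not in seen]
    for _ in range(iterations):
        if not remaining:
            break  # the pool is fixed up front, so it can only shrink
        model = train(train_set)
        # Rank the remaining pool by the acquisition score, highest first.
        ranked = sorted(remaining, key=lambda x: score(model, x), reverse=True)
        selected = ranked[:budget]
        train_set.extend(selected)
        chosen = set(selected)
        remaining = [x for x in remaining if x not in chosen]
    return train_set
```

In a real setting, `train` would fit a neural network and `score` would be the acquisition function; the selected items would also be sent to annotators before being added to the training set.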

In [14], the authors present a review of active learning for object detection, which summarises the most commonly used strategies and how active learning can be combined with other learning techniques. The review also discusses the strengths, weaknesses, opportunities, and threats (SWOT) associated with AL methods for object detection.

2.2. Active Learning for Medical Applications

Medical applications are applying active learning to reduce annotation costs and monitor the assigned budget to obtain a useful deep learning model. This technique has been successfully applied in classification [15,16], object detection [17,18,19], and segmentation [16,20].

To address the problem of active learning for the Tumour-Infiltrating Lymphocytes classification task in digital pathology, the authors of [15] propose a hybrid acquisition function that captures the diversity of tissue characteristics through grouping and the uncertainty through the model prediction output.

Segmentation was also addressed with the active learning approach, where a U-net architecture was trained in an iterative process. This neural network was applied to whole slide images of oral cavity cancer tumours stained with H&E. In each iteration, a team of pathologists scored each image on a scale of 0–5 for each tissue class. Only poorly classified images were used to retrain the U-net model [20].

In [16], active learning was employed to train classification and segmentation models to overcome dataset limitations and improve model performance. Experts manually annotated images exhibiting high uncertainty, whereas pseudo-labels were assigned to the low-uncertainty images.

In [21], a personalised AI platform was proposed for digital pathology. The platform enables pathologists to create manual annotations and employ an active learning strategy to rank and present images for validation. It also includes an application programming interface to provide an artificial intelligence service that assists pathologists in diagnosis. This platform was validated by a team of pathologists for the mitosis detection task.

3. Material and Methods

The main focus of this work is to evaluate the performance of a decreasing-budget-based strategy, leaving aside other topics such as the acquisition function, the user interface used to annotate, and the time saved in the annotation process. Section 3.1 introduces the proposed active learning with a decreasing-budget-based strategy, and Section 3.2 presents the datasets used in the experiments. Section 3.3 and Section 3.4 then describe the experiments.

3.1. Proposed Active Learning with a Decreasing-Budget-Based Strategy

This work explores the impact of using a decreasing budget on each active learning iteration, starting with a high budget size, and continuously reducing this budget by iteration. Next, Algorithm 1 presents the steps to implement this strategy.

Algorithm 1. Active learning with the decreasing-budget strategy. The validation step is a manual process carried out by specialists.

- 1: Input: unlabelled dataset U, initial budget, initial iteration
- 2: Output: trained model
- 3: Select and annotate an initial set of images from U using the initial budget
- 4: while stopping criterion not met do
  - 5: Train the model using the annotated dataset
  - 6: Rank the remaining unlabelled images in U using uncertainty-based sampling
  - 7: Select a subset from U according to the current budget
  - 8: Use the model to generate pseudo-labels for the selected subset
  - 9: Specialists validate the pseudo-labelled images
  - 10:
  - 11:
- 12: end while
- 13: return final model
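The budget schedule behind this strategy can be illustrated with a short sketch. The numbers are taken from the classification experiment discussed in Section 4 (a budget reduced by 25 per iteration through 75, 50, and 25, then held constant, with 20 initial images); the constant budget of 100 used for comparison is an assumption inferred from the 620 training images reported there, and the function names are hypothetical.

```python
from typing import List


def decreasing_budgets(start: int, step: int, minimum: int, iterations: int) -> List[int]:
    """Budget per iteration: reduce by `step` until `minimum` is reached, then hold."""
    return [max(start - i * step, minimum) for i in range(iterations)]


def cumulative_sizes(initial: int, budgets: List[int]) -> List[int]:
    """Training-set size after the initial set and after each annotation round."""
    sizes = [initial]
    for budget in budgets:
        sizes.append(sizes[-1] + budget)
    return sizes


decreasing = decreasing_budgets(start=100, step=25, minimum=25, iterations=6)
constant = [100] * 6  # assumed constant budget, for comparison only

print(decreasing)                        # [100, 75, 50, 25, 25, 25]
print(cumulative_sizes(20, decreasing))  # [20, 120, 195, 245, 270, 295, 320]
print(cumulative_sizes(20, constant))    # [20, 120, 220, 320, 420, 520, 620]
```

Under these assumptions, the decreasing schedule ends with 320 training images after six annotation rounds, against 620 for the constant schedule.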

As mentioned above, the sampling strategy is based on the uncertainty of the model (U), specifically entropy, although the proposed strategy is not tied to this particular image scoring function. For classification problems, the uncertainty (see Equation (1)) depends on the prediction confidence of each class, with j denoting the class index and C denoting the number of classes.
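As an illustration of entropy-based scoring for classification, the sketch below assumes that Equation (1) is the standard Shannon entropy of the predicted class probabilities, summed over the C classes. The function names and the dictionary layout are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (natural log) of a class-probability vector."""
    # Zero-probability classes contribute nothing to the sum.
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def rank_by_uncertainty(predictions: Dict[str, Sequence[float]]) -> List[str]:
    """Return image identifiers sorted from most to least uncertain."""
    return sorted(predictions, key=lambda k: entropy(predictions[k]), reverse=True)
```

For a two-class problem such as mitosis versus non-mitosis, a prediction of (0.5, 0.5) yields the maximum entropy, ln 2, while a fully confident prediction yields zero, so the former would be selected for annotation first.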

For object detection problems, the entropy depends on the class count (C) and the number of objects detected per class. First, the uncertainty of all objects that belong to the same class j is computed (see Equation (2)). Next, the class uncertainties are aggregated to compute the image uncertainty. This work uses the average as the aggregation method (see Equation (3)).
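The two-step aggregation can be sketched as follows. Because Equations (2) and (3) are not reproduced here, the details are assumptions: each detection is taken to be a (class index, confidence) pair, the per-object uncertainty is the binary entropy of its confidence, the class uncertainty is the sum over its objects, and the image uncertainty is the average over the C classes. All names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def binary_entropy(p: float) -> float:
    """Entropy of a single detection confidence (assumed per-object uncertainty)."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def image_uncertainty(detections: Iterable[Tuple[int, float]], num_classes: int) -> float:
    """Sum object uncertainties per class, then average over all classes."""
    per_class: Dict[int, float] = defaultdict(float)
    for class_id, confidence in detections:
        per_class[class_id] += binary_entropy(confidence)
    # Classes without detections contribute zero to the average.
    return sum(per_class.values()) / num_classes
```

With this choice, images containing many low-confidence detections receive the highest scores; other per-object or per-class definitions would fit the same two-step structure.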

3.2. Dataset

To address the problem of mitosis detection, we rely on two public datasets (i.e., Mitos & Atypia [22] and TUPAC [23]). In addition, further images (the iPath dataset) were collected and annotated by the Computer Graphics Centre (CCG), the Faculty of Medicine at the University of Coimbra (FMUC), and the BMD Software consortium in the context of the iPath project [3]. The three datasets were merged into a single dataset (see Table 1).

For the classification experiment, the three datasets in Table 1 were reviewed and adapted to create a new balanced dataset with 6214 images (that is, 3107 mitosis images and 3107 non-mitosis images). Figure 1 shows 12 sample images, i.e., six for each class of the dataset. Initially, we randomly split the dataset into three parts (60% for training, 20% for validation, and 20% for testing) with the condition that each part is balanced.
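A balanced 60/20/20 split of this kind can be obtained by splitting each class separately, as in the hedged sketch below. The function name, the seed, and the rounding of the split sizes are assumptions, since the exact procedure is not described.

```python
import random
from typing import Dict, List, Sequence, Tuple


def balanced_split(
    items_by_class: Dict[str, Sequence[str]],
    fractions: Tuple[float, float] = (0.6, 0.2),
    seed: int = 0,
) -> Tuple[List[str], List[str], List[str]]:
    """Split each class independently so every part keeps the class balance."""
    rng = random.Random(seed)
    train: List[str] = []
    val: List[str] = []
    test: List[str] = []
    for items in items_by_class.values():
        shuffled = list(items)
        rng.shuffle(shuffled)
        n_train = int(len(shuffled) * fractions[0])
        n_val = int(len(shuffled) * fractions[1])
        train += shuffled[:n_train]
        val += shuffled[n_train:n_train + n_val]
        test += shuffled[n_train + n_val:]  # remainder goes to the test part
    return train, val, test
```

Because both classes have the same number of images, splitting them independently with the same fractions keeps the training, validation, and test parts balanced.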

For the object detection experiments, the three datasets were also reviewed and adapted. The images were cropped to patches containing the mitosis, and the mitosis bounding box was then adjusted to its minimum size.

3.3. Classification Experiments

To analyse the behaviour of the decreasing-budget strategy, it is compared with three other strategies in which the budget remains constant on each active learning iteration. For each constant-budget strategy, the size of the dataset used to train the models increases by B images per iteration, with a different value of B for each of the three strategies. The decreasing-budget strategy reduces the budget from 100 to 25 images with a step of 25. The initial iteration for the four strategies starts with 20 images (see Figure 2). The validation and test datasets are the same for both architectures and remain constant for each iteration.

The experiment is repeated twice, once for each of two well-known deep learning architectures for classification tasks (i.e., MobileNetV3 [8] and InceptionV3 [9]), which are trained iteratively. Each model uses its own input size, and the models were trained using the Adam [24] and RMSprop [25] optimisers, respectively, with a constant learning rate for 100 epochs. For both, the same ImageNet [26] initialised weights are used at the start of every active learning iteration (transfer learning). The accuracy and F1-score metrics were used to evaluate the performance of the trained models.
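For reference, the two evaluation metrics can be computed from binary predictions as in the sketch below. It assumes that the mitosis class is the positive class (label 1) and uses the standard definitions; the function name is hypothetical.

```python
from typing import Sequence, Tuple


def accuracy_and_f1(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> Tuple[float, float]:
    """Return (accuracy, F1-score) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = correct / len(pairs)
    # F1 = 2TP / (2TP + FP + FN); defined as 0 when there are no positives at all.
    denominator = 2 * tp + fp + fn
    f1 = 2 * tp / denominator if denominator else 0.0
    return accuracy, f1
```

On a balanced test set such as the one used here, accuracy and F1-score tend to move together, which is consistent with reporting both.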

3.4. Object Detection Experiments

For object detection tasks, two experiments are included. In the first experiment, the YOLOv8Nano architecture [27] is trained iteratively, exploring its behaviour according to two variables: (1) warm-start learning or transfer learning, and (2) the use of a decreasing budget or a constant budget. The second experiment explores: (1) the decreasing-budget strategy versus the increasing-budget strategy, and (2) the performance of two YOLOv8 architectures (YOLOv8Nano and YOLOv8Medium).

For both experiments, each training run lasts 100 epochs, using a batch size of 4 and the predefined YOLOv8 hyperparameters [10]. In addition, the images of Table 1 were randomly divided into five parts (five-fold cross-validation), and the active learning iterations were repeated five times, retaining three parts for the pool dataset, one part for validation, and the other for testing, according to Table 2. The model’s performance was assessed based on the F1-score. For each experiment, a different seed was used to divide the dataset into five folds, and 20 images were randomly selected from the pool dataset to train the model in the first iteration.
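One way to organise the five runs is sketched below. The rotation used here (fold r for validation, the next fold for testing, the remaining three for the pool) is an assumption made for illustration; the actual assignment is the one given in Table 2. The function name and dictionary keys are hypothetical.

```python
import random
from typing import Dict, List, Sequence


def five_fold_runs(
    items: Sequence[str], seed: int, k: int = 5, n_initial: int = 20
) -> List[Dict[str, List[str]]]:
    """Build k runs, each with pool, validation, test, and an initial training set."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]
    runs = []
    for r in range(k):
        val_idx, test_idx = r, (r + 1) % k  # assumed rotation
        pool_all = [
            x for i, fold in enumerate(folds) if i not in (val_idx, test_idx) for x in fold
        ]
        # Images used to train the model in the first iteration.
        initial = rng.sample(pool_all, min(n_initial, len(pool_all)))
        chosen = set(initial)
        runs.append({
            "initial": initial,
            "pool": [x for x in pool_all if x not in chosen],
            "val": folds[val_idx],
            "test": folds[test_idx],
        })
    return runs
```

Using a different `seed` per experiment changes both the fold assignment and the initial 20 images, which is one reason why scores from different experiments are not directly comparable.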

3.4.1. First Object Detection Experiment

In this experiment, warm-start learning means that the model trained in the previous iteration is used to initialise the parameter values of the model in the current iteration, while transfer learning uses the same pretrained parameter values on each initialisation. The pretrained YOLOv8Nano model was used to initialise the model in the first iteration. The decreasing-budget strategy reduces the budget by a fixed step per iteration, and the constant strategy uses the same budget in each iteration (see Figure 3).
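The difference between the two initialisation options can be made concrete with a small framework-independent sketch. Here `fit` is a hypothetical training function that takes initial weights and a dataset and returns trained weights; it is not part of the YOLOv8 tooling.

```python
from typing import Callable, List, Sequence, TypeVar

W = TypeVar("W")


def train_across_iterations(
    pretrained: W,
    datasets: Sequence[Sequence[int]],
    fit: Callable[[W, Sequence[int]], W],
    warm_start: bool,
) -> List[W]:
    """Train one model per active learning iteration with the chosen initialisation."""
    models: List[W] = []
    for data in datasets:
        # Warm start: continue from the model of the previous iteration.
        # Transfer learning: restart from the same pretrained weights every time.
        init = models[-1] if (warm_start and models) else pretrained
        models.append(fit(init, data))
    return models
```

In both modes the first iteration starts from the pretrained weights; the two options only differ from the second iteration onwards.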

3.4.2. Second Object Detection Experiment

In this experiment, transfer learning is used to initialise the model parameters in each active learning iteration, i.e., the pretrained YOLOv8Nano and YOLOv8Medium models were used to initialise the models. The decreasing-budget strategy was evaluated in two different scenarios. The first scenario compares the decreasing-budget-based strategy with increasing the budget per iteration and with keeping the budget constant. The second scenario evaluates the performance of two object detection architectures (YOLOv8Nano and YOLOv8Medium). Figure 4 presents the evolution of the training dataset size by iteration.

4. Classification Experiment: Results and Discussion

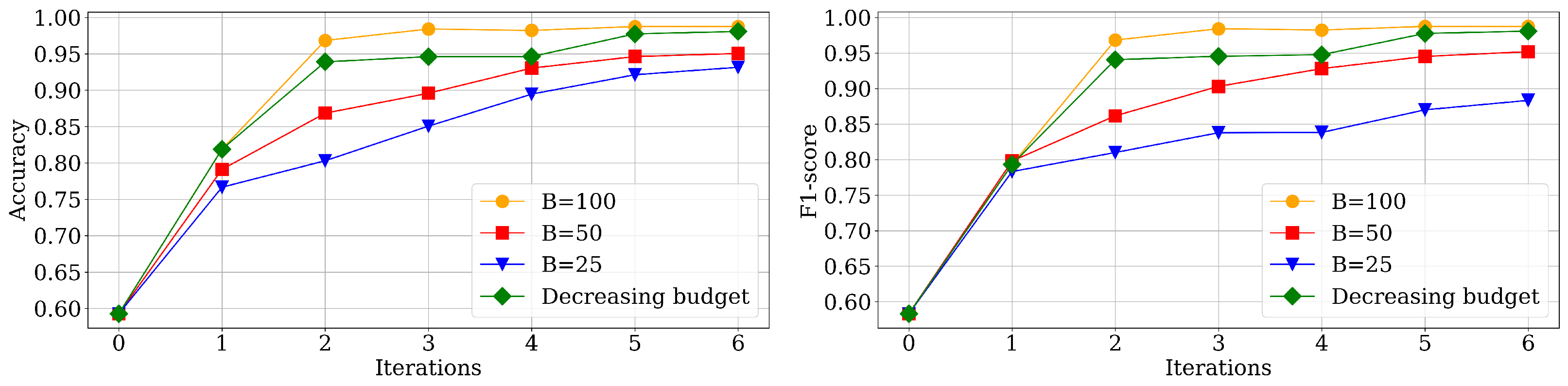

According to Figure 2, the constant-budget strategy with the smallest budget used the fewest images for training, followed by the other two constant-budget strategies. The fourth experiment, which follows the decreasing-budget strategy, starts with a budget of 100 and, in successive iterations, the budget is reduced by 25, i.e., to 75, 50, and 25, and then remains constant until the last iteration.

Figure 5 shows the evolution of the accuracy and F1-score of the trained MobileNetV3 models on the test dataset. Similar behaviour is observed for the trained InceptionV3 models (see Figure 6). The active learning experiment started from the same training dataset of 20 images for both models. As expected, the models trained in the first iteration showed low accuracy. In the last iteration, both models reached a higher accuracy and F1-score. Table A1 and Table A2 in Appendix A contain the data used to generate the graphs.

Figure 7 presents the accumulated training time for each iteration. The training times were similar in the initial iterations, and the difference between strategies grew towards the final iterations.

These results show the relationship between model performance (accuracy and F1-score) and the amount of data used to train them. Experiments show that if deep learning models are trained using active learning techniques, they can achieve similar performance over several iterations using less annotated data.

In terms of accuracy and F1-score, one of the constant-budget configurations never reaches the performance of the others and is therefore excluded from the following analysis. MobileNetV3 and InceptionV3, trained iteratively with the largest constant budget, show the best results, followed by the configuration that uses the proposed strategy, which in turn performs better than the remaining constant-budget configurations.

The analysis of the MobileNetV3 and InceptionV3 architectures at the iteration where the proposed strategy and a constant-budget strategy have used the same number of training images shows that the proposed configuration performs better for the same annotation effort. Up to that iteration, the model trained according to the proposed strategy always performs better than its constant-budget counterpart. At the last iteration, the model trained with the largest constant budget uses 620 images for training, while the proposed strategy uses only 320 images, approximately half, with a very similar result in terms of both accuracy and F1-score.

The F1-scores of both strategies for the MobileNetV3 and InceptionV3 architectures are reported in Table A2.

From the previous analysis, it can be inferred that using a high budget per iteration is not necessarily a good annotation strategy when the goal is to reduce the annotation effort. Meanwhile, this experiment shows that starting with a high budget and reducing it at each iteration contributes to reducing the annotation effort of the oracle, which is the main goal of any active learning strategy. However, reducing the annotator’s effort is directly related to an increase in the computational resources needed to reach a useful result. Furthermore, the strategy that reduces the budget per iteration showed promising results, as this approach consumes fewer computational resources than the best-performing constant-budget strategy and obtains similar results according to the accuracy and F1-score metrics.

5. Object Detection Experiments: Results and Discussion

5.1. First Object Detection Experiment

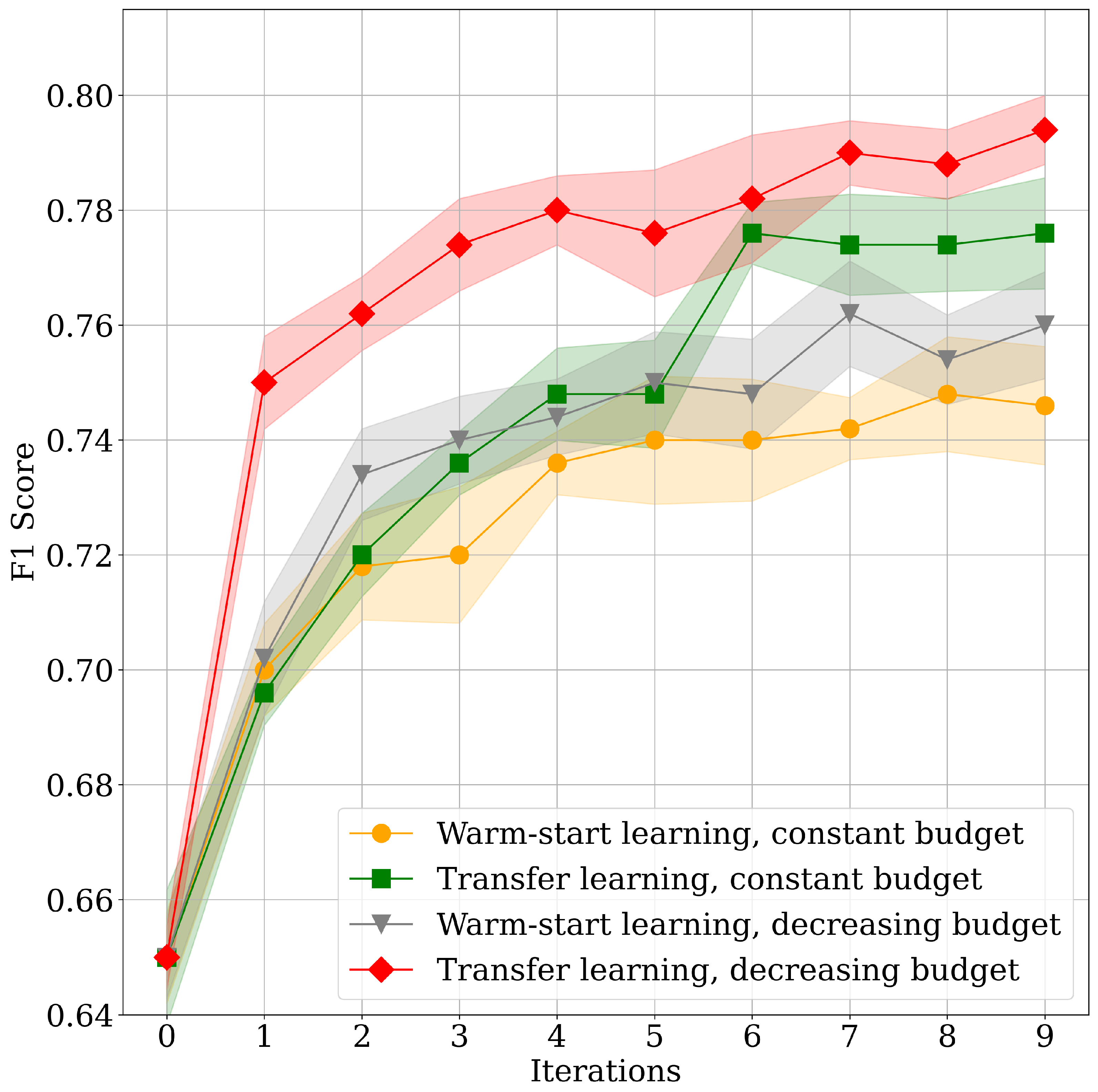

The figures in this section show the results of the five-fold cross-validation for object detection. The lines in the plots indicate the average F1-score across the five folds, while the shaded area represents the range between the minimum and maximum F1-score values.

Table A3 in Appendix B reports the complete data used to generate the graphs, while Figure A1 includes a graphical representation of these results. For a more comprehensive analysis of the results, the experiments are examined according to the studied variables. To this end, this section presents simplified figures derived from the detailed results provided in Appendix B.

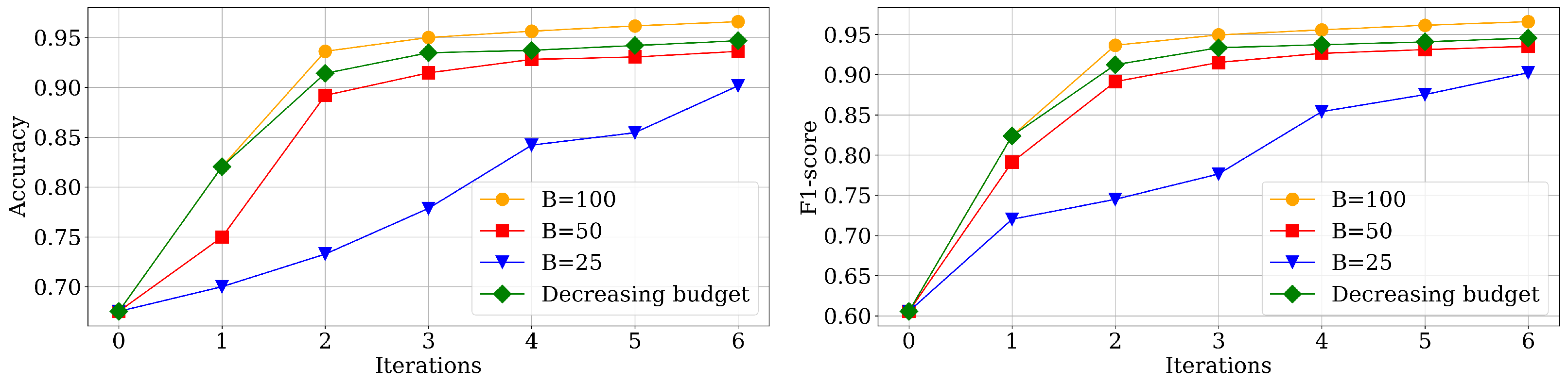

Two of the comparisons involve configurations that are not directly comparable (see Figure 8) because they differ in both settings. In the first (Figure 8, left), the decreasing-budget strategy trained with warm-start learning performs better in the first iterations than the constant-budget strategy trained with transfer learning. This result is inverted at the end of the iterations, where the decreasing-budget strategy shows poorer results when the models are trained using warm-start learning between iterations, i.e., transfer learning with a constant budget performs better. In the second (Figure 8, right), a decreasing budget trained using transfer learning always performs better than a constant budget trained using warm-start learning.

After exploring the previous results, we compare the initialisation options while keeping the budget strategy fixed, i.e., the number of images added to the training dataset per iteration is the same within each comparison (see Figure 9). The experiments demonstrate that, for this dataset, YOLOv8 performs better with transfer learning, regardless of the number of images added to the training dataset per iteration and irrespective of whether a constant or decreasing budget is used. Figure 10 compares the decreasing-budget strategy with the constant-budget strategy in two different scenarios: (1) warm-start and (2) transfer learning. In both cases, the decreasing-budget strategy achieves better results using a smaller number of annotated images, as shown in Figure 3.

From the data collected, it was observed that transfer learning (initialising the model with the same values at every iteration) is a better alternative for initialising the model than warm-start learning (initialising the model from the model trained in the previous iteration), mostly because the training datasets are small and warm-start learning may produce overfitting. In addition, the pretrained model used for initialisation is trained on large datasets, making it a good starting point for model training.

The first object detection experiment showed results similar to those obtained in the previous section. In this case, there is an iteration at which the training dataset built with the decreasing-budget strategy is 200 images smaller than the one built with the constant-budget strategy. Nevertheless, the iterations using the decreasing-budget strategy outperform those using a constant budget.

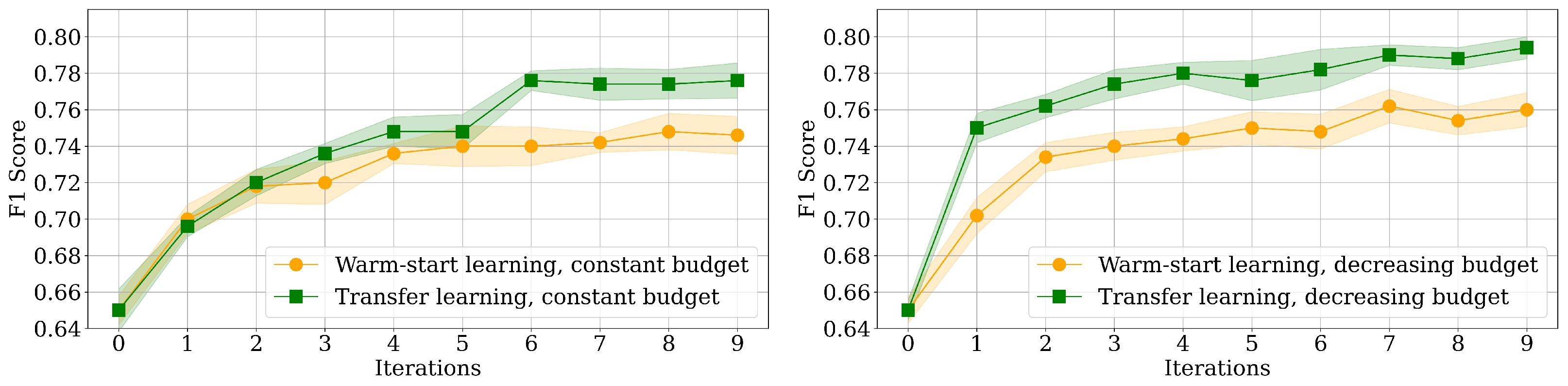

5.2. Second Object Detection Experiment

Figure 11 presents the results of the second object detection experiment. The corresponding data used to generate the graphs are provided in Appendix C, Table A4 and Table A5.

The differences in F1-score observed between the first and second object detection experiments are due to two main factors. First, a different seed is used to split the dataset; second, the number of images sampled per iteration is smaller than in the first experiment.

Regardless of the network architecture with which the experiments were conducted, the strategies behaved similarly. The decreasing-budget-based strategy performs better than the other two strategies as the active learning iterations increase. The second-best strategy was the constant-budget strategy, and the increasing-budget strategy performed worst.

Furthermore, the first and second object detection experiments can be compared at the points where their training datasets contain the same number of images. The first experiment at iteration 2 and the second at iteration 4 each have 300 images in the training dataset. The first concentrates the effort in two iterations, whereas the second distributes it over more iterations. This corroborates that most of the effort needs to be devoted to the creation of the training dataset in the initial iterations to achieve the best possible model performance.

6. Conclusions and Future Work

This work proposes active learning with a decreasing-budget-based strategy to reduce specialists’ workload in the image annotation process. It presents three experiments that tackle two different tasks, classification and object detection, to understand the impact of the decreasing-budget-based strategy on the annotation process.

In the proposed strategy, the specialist annotates fewer images in each iteration than in the previous one. This strategy encourages data annotators to devote maximum effort to the initial iterations, ensuring less effort in successive iterations. Budget management is therefore more efficient, and the trained model performs at its best.

A weakness of this work is that it considers only one use case, specifically the identification of mitoses. Future work will apply this approach to other applications, e.g., medical and industrial, focusing on the first iteration and looking for an unsupervised method to make a more diverse initial selection of the images that will form the training dataset.

Author Contributions

Conceptualisation, D.G.G.; methodology, D.G.G.; investigation, D.G.G.; software, D.G.G.; validation, D.G.G. and M.I.L.; writing—original draft preparation, D.G.G.; writing—review and editing, D.G.G., M.I.L., A.C. and L.M.; supervision, A.C. and L.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Fundação para a Ciência e Tecnologia, IP (FCT) within the R&D Units Project Scope: UIDB/00319/2020 (ALGORITMI).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Acknowledgments

During the preparation of this work, the author(s) used the Grammarly assistant to produce clear and precise texts. After using this tool/service, the author(s) reviewed and edited the content as needed and take(s) full responsibility for the content of the publication.

Conflicts of Interest

Dibet García González was employed by the company Sentinelconcept. Other authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| DP | Digital Pathology |
| DL | Deep Learning |
| NN | Neural Network |
| OD | Object Detection |
| AL | Active Learning |
| CDSS | Clinical Decision Support System |
| WSI | Whole Slide Image |
| AI | Artificial Intelligence |
| YOLO | You Only Look Once |
| DETR | Detection Transformer |

Appendix A. Classification Experiments Results

Table A1. Accuracy by iteration for the decreasing-budget strategy and three different strategies based on a constant budget size.

| Iteration | MobileNetV3 | | | | InceptionV3 | | | |
|---|---|---|---|---|---|---|---|---|
| 0 | 0.5927 | 0.5927 | 0.5927 | 0.5927 | 0.6753 | 0.6753 | 0.6753 | 0.6753 |
| 1 | 0.8189 | 0.7666 | 0.7911 | 0.8189 | 0.8204 | 0.7001 | 0.7498 | 0.8204 |
| 2 | 0.939 | 0.803 | 0.8684 | 0.9683 | 0.914 | 0.7328 | 0.8919 | 0.9361 |
| 3 | 0.9461 | 0.8506 | 0.8958 | 0.9841 | 0.9347 | 0.7786 | 0.9145 | 0.95 |
| 4 | 0.9462 | 0.8946 | 0.9304 | 0.9822 | 0.9371 | 0.842 | 0.928 | 0.9563 |
| 5 | 0.9774 | 0.9212 | 0.9462 | 0.9875 | 0.9419 | 0.8545 | 0.9304 | 0.9616 |
| 6 | 0.9808 | 0.9315 | 0.9505 | 0.9875 | 0.9467 | 0.9015 | 0.9361 | 0.9659 |

Table A2. F1-score by iteration for the decreasing-budget strategy and three different strategies based on a constant budget size.

| Iteration | MobileNetV3 | | | | InceptionV3 | | | |
|---|---|---|---|---|---|---|---|---|
| 0 | 0.6056 | 0.6056 | 0.6056 | 0.6056 | 0.5831 | 0.5831 | 0.5831 | 0.5831 |
| 1 | 0.8239 | 0.7202 | 0.7912 | 0.8239 | 0.7932 | 0.7832 | 0.798 | 0.7932 |
| 2 | 0.9124 | 0.745 | 0.8913 | 0.9366 | 0.9406 | 0.81 | 0.8616 | 0.9681 |
| 3 | 0.9335 | 0.7765 | 0.9152 | 0.9495 | 0.9454 | 0.8378 | 0.903 | 0.9842 |
| 4 | 0.9372 | 0.854 | 0.9266 | 0.9557 | 0.9476 | 0.8382 | 0.928 | 0.9823 |
| 5 | 0.9408 | 0.8752 | 0.9312 | 0.9615 | 0.9775 | 0.87 | 0.9452 | 0.9875 |
| 6 | 0.9456 | 0.9025 | 0.9352 | 0.966 | 0.9808 | 0.8833 | 0.9518 | 0.9875 |

Appendix B. First Object Detection Experiments Results

Table A3.

F1-score by iteration for the first object detection experiment. WSL: Warm-start learning, TL: Transfer learning, CB: Constant budget, DB: Decreasing budget.


| Iteration | WSL-CB Mean | WSL-CB Min | WSL-CB Max | TL-CB Mean | TL-CB Min | TL-CB Max | WSL-DB Mean | WSL-DB Min | WSL-DB Max | TL-DB Mean | TL-DB Min | TL-DB Max |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.650 | 0.642 | 0.658 | 0.650 | 0.642 | 0.658 | 0.650 | 0.638 | 0.662 | 0.650 | 0.643 | 0.657 |
| 1 | 0.700 | 0.692 | 0.708 | 0.696 | 0.688 | 0.704 | 0.702 | 0.696 | 0.708 | 0.750 | 0.740 | 0.760 |
| 2 | 0.718 | 0.709 | 0.727 | 0.720 | 0.711 | 0.729 | 0.734 | 0.727 | 0.741 | 0.762 | 0.754 | 0.770 |
| 3 | 0.720 | 0.708 | 0.732 | 0.736 | 0.724 | 0.748 | 0.740 | 0.734 | 0.746 | 0.774 | 0.766 | 0.782 |
| 4 | 0.736 | 0.731 | 0.741 | 0.748 | 0.743 | 0.753 | 0.744 | 0.736 | 0.752 | 0.780 | 0.773 | 0.787 |
| 5 | 0.740 | 0.729 | 0.751 | 0.748 | 0.737 | 0.759 | 0.750 | 0.741 | 0.759 | 0.776 | 0.767 | 0.785 |
| 6 | 0.740 | 0.729 | 0.751 | 0.776 | 0.765 | 0.787 | 0.748 | 0.743 | 0.753 | 0.782 | 0.772 | 0.792 |
| 7 | 0.742 | 0.737 | 0.747 | 0.774 | 0.769 | 0.779 | 0.762 | 0.753 | 0.771 | 0.790 | 0.781 | 0.799 |
| 8 | 0.748 | 0.738 | 0.758 | 0.774 | 0.764 | 0.784 | 0.754 | 0.746 | 0.762 | 0.788 | 0.780 | 0.796 |
| 9 | 0.746 | 0.736 | 0.756 | 0.776 | 0.766 | 0.786 | 0.760 | 0.750 | 0.770 | 0.794 | 0.785 | 0.803 |

Figure A1. Graphical representation of the results reported in Table A3.


Appendix C. Second Object Detection Experiments Results

Table A4. F1-score by iteration for the second object detection experiment (YOLOv8Nano).

| Iteration | Increasing Budget Mean | Increasing Budget Min | Increasing Budget Max | Constant Budget Mean | Constant Budget Min | Constant Budget Max | Decreasing Budget Mean | Decreasing Budget Min | Decreasing Budget Max |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.524 | 0.504 | 0.545 | 0.524 | 0.504 | 0.545 | 0.524 | 0.504 | 0.545 |
| 1 | 0.606 | 0.579 | 0.638 | 0.647 | 0.638 | 0.655 | 0.660 | 0.649 | 0.676 |
| 2 | 0.659 | 0.651 | 0.669 | 0.685 | 0.675 | 0.696 | 0.694 | 0.673 | 0.705 |
| 3 | 0.681 | 0.670 | 0.695 | 0.705 | 0.691 | 0.714 | 0.712 | 0.697 | 0.721 |

Table A5.

F1-score by iteration for the second object detection experiment (YOLOv8Medium).


| Iteration | Increasing Budget Mean | Increasing Budget Min | Increasing Budget Max | Constant Budget Mean | Constant Budget Min | Constant Budget Max | Decreasing Budget Mean | Decreasing Budget Min | Decreasing Budget Max |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.458 | 0.414 | 0.505 | 0.458 | 0.414 | 0.505 | 0.458 | 0.414 | 0.505 |
| 1 | 0.601 | 0.567 | 0.622 | 0.626 | 0.615 | 0.642 | 0.644 | 0.636 | 0.658 |
| 2 | 0.654 | 0.634 | 0.676 | 0.660 | 0.658 | 0.663 | 0.691 | 0.686 | 0.697 |
| 3 | 0.686 | 0.680 | 0.693 | 0.681 | 0.669 | 0.689 | 0.699 | 0.691 | 0.707 |

References

- Steiner, D.F.; Chen, P.H.C.; Mermel, C.H. Closing the translation gap: AI applications in digital pathology. Biochim. Biophys. Acta (BBA) Rev. Cancer 2021, 1875, 188452.

- Dimitriou, N.; Arandjelović, O.; Caie, P.D. Deep Learning for Whole Slide Image Analysis: An Overview. Front. Med. 2019, 6, 264.

- Gonzalez, D.G.; Carias, J.; Castilla, Y.C.; Rodrigues, J.; Adão, T.; Jesus, R.; Magalhães, L.G.M.; de Sousa, V.M.L.; Carvalho, L.; Almeida, R.; et al. Evaluating Rotation Invariant Strategies for Mitosis Detection Through YOLO Algorithms. In Wireless Mobile Communication and Healthcare; Cunha, A., Garcia, N.M., Marx Gómez, J., Pereira, S., Eds.; Springer: Cham, Switzerland, 2023; pp. 24–33.

- Sener, O.; Savarese, S. Active Learning for Convolutional Neural Networks: A Core-Set Approach. arXiv 2017, arXiv:1708.00489.

- Beluch, W.H.; Genewein, T.; Nurnberger, A.; Kohler, J.M. The Power of Ensembles for Active Learning in Image Classification. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 9368–9377.

- Jiang, C.M.; Najibi, M.; Qi, C.R.; Zhou, Y.; Anguelov, D. Improving the Intra-Class Long-Tail in 3D Detection via Rare Example Mining. arXiv 2022, arXiv:2210.08375.

- Liang, Z.; Xu, X.; Deng, S.; Cai, L.; Jiang, T.; Jia, K. Exploring Diversity-based Active Learning for 3D Object Detection in Autonomous Driving. arXiv 2022, arXiv:2205.07708.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. arXiv 2015, arXiv:1512.00567.

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 10 April 2025).

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

- Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 1137–1149.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Garcia, D.; Carias, J.; Adão, T.; Jesus, R.; Cunha, A.; Magalhães, L.G. Ten Years of Active Learning Techniques and Object Detection: A Systematic Review. Appl. Sci. 2023, 13, 667.

- Meirelles, A.L.; Kurc, T.; Saltz, J.; Teodoro, G. Effective active learning in digital pathology: A case study in tumor infiltrating lymphocytes. Comput. Methods Programs Biomed. 2022, 220, 106828.

- Tang, S.; Yu, X.; Cheang, C.F.; Liang, Y.; Zhao, P.; Yu, H.H.; Choi, I.C. Transformer-based multi-task learning for classification and segmentation of gastrointestinal tract endoscopic images. Comput. Biol. Med. 2023, 157, 106723.

- Kee, S.; del Castillo, E.; Runger, G. Query-by-committee improvement with diversity and density in batch active learning. Inf. Sci. 2018, 454–455, 401–418.

- Tang, Y.P.; Wei, X.S.; Zhao, B.; Huang, S.J. QBox: Partial Transfer Learning with Active Querying for Object Detection. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 3058–3070.

- Yun, J.B.; Oh, J.; Yun, I.D. Gradually Applying Weakly Supervised and Active Learning for Mass Detection in Breast Ultrasound Images. arXiv 2020, arXiv:2008.08416.

- Folmsbee, J.; Zhang, L.; Lu, X.; Rahman, J.; Gentry, J.; Conn, B.; Vered, M.; Roy, P.; Gupta, R.; Lin, D.; et al. Histology segmentation using active learning on regions of interest in oral cavity squamous cell carcinoma. J. Pathol. Inform. 2022, 13, 100146.

- Jesus, R.; Bastião Silva, L.; Sousa, V.; Carvalho, L.; Garcia Gonzalez, D.; Carias, J.; Costa, C. Personalizable AI platform for universal access to research and diagnosis in digital pathology. Comput. Methods Programs Biomed. 2023, 242, 107787.

- Racoceanu, D.; Calvo, J.; Attieh, E.; Naour, G.L.; Gloaguen, A. Detection of Mitosis and Evaluation of Nuclear Atypia Score in Breast Cancer Histological Images. In Proceedings of the 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014.

- Veta, M.; Heng, Y.J.; Stathonikos, N.; Bejnordi, B.E.; Beca, F.; Wollmann, T.; Rohr, K.; Shah, M.A.; Wang, D.; Rousson, M.; et al. Predicting breast tumor proliferation from whole-slide images: The TUPAC16 challenge. Med. Image Anal. 2019, 54, 111–121.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Tieleman, T. Lecture 6.5-rmsprop: Divide the Gradient by a Running Average of Its Recent Magnitude. COURSERA Neural Netw. Mach. Learn. 2012, 4, 26.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

Figure 1.

Image samples. (Top row): images belonging to the mitosis class. (Bottom row): images belonging to the no-mitosis class.


Figure 2.

Size of the training dataset vs iteration used for model training in the classification experiments. At the first iteration, the training dataset contains 20 images. At the sixth iteration (), the decreasing-budget strategy contains the same number of images as the strategy.


Figure 3.

Dataset size by iteration for model training using the constant-budget (orange) and decreasing-budget (green) strategies. At the first iteration, the training dataset contains 20 images. At the fourth iteration (), the dataset formed by the decreasing-budget strategy contains fewer images than that of the constant-budget strategy.


Figure 4.

Dataset size by iterations for model training using the strategies: decreasing-budget (green), constant-budget (yellow) and increasing-budget (orange). At the first iteration (), the training dataset also contains 20 images. At the fourth iteration (), the three training datasets contain the same count of images.
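
The relationship described in this caption can be illustrated with a short sketch. The starting size of 20 images comes from the caption; the per-iteration budgets below are hypothetical values chosen only so that the three strategies end with the same number of images at the fourth iteration, and they are not the budgets used in the experiments.

```python
from itertools import accumulate

INITIAL_SIZE = 20  # from the caption: the first training dataset holds 20 images

# Hypothetical budgets: number of images added after each iteration.
# These values are assumptions for illustration, not the paper's settings.
budgets = {
    "decreasing": [60, 40, 20],
    "constant": [40, 40, 40],
    "increasing": [20, 40, 60],
}


def dataset_sizes(initial, budget):
    """Cumulative training-set size at each iteration, starting from `initial`."""
    return list(accumulate(budget, initial=initial))


sizes = {name: dataset_sizes(INITIAL_SIZE, b) for name, b in budgets.items()}

for name, per_iteration in sizes.items():
    print(f"{name:>10}: {per_iteration}")
```

With these assumed budgets, the decreasing-budget strategy holds the largest training set at every intermediate iteration, while all three strategies converge to the same size at the last one.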


Figure 5.

MobileNetV3 accuracy and F1-score by iteration considering the decreasing-budget strategy () and three different strategies based on a constant-budget size: , and .


Figure 6.

InceptionV3 accuracy and F1-score by iteration considering the decreasing-budget strategy () and three different strategies based on a constant budget size: , and .


Figure 7.

Accumulated training time (minutes) vs. active learning iterations, considering the decreasing-budget strategy () and three different strategies based on a constant budget size: , and . Results are shown for the MobileNetV3 and InceptionV3 architectures.


Figure 8.

(Left): constant budget trained using transfer learning versus decreasing budget trained using warm-start learning. (Right): constant budget trained using warm-start learning and decreasing budget trained using transfer learning.


Figure 9.

Comparison between warm-start learning and transfer learning. (Left): constant budget. (Right): decreasing budget.


Figure 10.

Evaluation of the decreasing-budget strategy. (Left): warm-start learning. (Right): transfer learning.


Figure 11.

Comparison between the decreasing-budget (green), constant-budget (orange) and increasing-budget (red) strategies considering two different deep learning architectures. (Left): YOLOv8Nano and (right): YOLOv8Medium.


Table 1.

Mitosis detection dataset used in this work.


| Dataset | Image Count |
|---|---|
| TUPAC | 1288 |
| Mitos & Atypia | 1206 |
| iPath project | 1370 |
| Total | 3864 |

Table 2.

Mitosis detection dataset used in this work.


| Fold | Pool | Validation | Testing |

|---|

| , , | | |

| , , | | |

| , , | | |

| , , | | |

| , , | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).