1. Introduction

Optimal trajectory planning is a topic that arises in many domains of science, engineering, and technology. While reinforcement learning [1,2] is a valuable route, widely employed in similar settings [3], its two main drawbacks are its high computational cost and the large amount of data required for training.

Previous work [4] proposed an alternative route by employing neural-network-based regressions informed by the Euler–Lagrange equation (ELE) for optimal velocity planning along a given path. This procedure was further improved in [5] to compute the optimal trajectory, i.e., both the optimal path and the optimal velocity along it.

In the aforementioned works, the considered cost function includes a term reflecting the effect of the environment (typically wind), which can either assist or resist the agent’s movement. When addressing short paths, it is reasonable to assume that the environment is known and will remain nearly unchanged. Thus, trajectory planning can be formulated within a deterministic framework, as in previous works [4,5].

However, when considering longer trajectories (such as ship routing), currents, sea conditions, and wind, all of which affect the cost function of the optimization problem, are known at the time of planning but are expected to evolve over time, with increasing uncertainty as the time horizon extends. Moreover, ship operations can be scheduled over many months without the possibility of refueling, especially when operating far from home ports.

Similarly, optimal trajectory planning is of utmost importance for airplanes and drones performing missions over extended distances and in foreign territories. In such missions, refueling or recharging is often impossible, while over-fueling can negatively impact the aircraft’s maneuverability. In these situations, especially those occurring far from home bases, environmental conditions such as wind velocity and orientation are only statistically known and are expected to evolve over time, rather than being deterministically available throughout the entire mission duration.

Recently, hierarchical safe reinforcement learning with prescribed performance control has been proposed to address unpredictable obstacles in leader–follower systems. However, these approaches fail to limit the travel time along a given path. A solution is proposed in [6], where the authors derived a finite-time solution without restricting the path to a fixed interval when possible. Robust optimal tracking is also proposed in [7], using a fixed-time concurrent learning-based actor–critic–identifier.

Other works have attempted optimal trajectory planning using Gaussian process regression for short trajectories in robotic applications [8,9]. However, with these techniques the globally optimal solution is often intractable, trajectory priors are required, and only a plausible region is identified.

Reinforcement learning is well suited to handle such uncertainty [2,10,11], whereas traditional ELE formulations are much less so. Nevertheless, the simplicity of applying the ELE, combined with its potential for achieving global optimization, motivates the present research, which proposes extending ELE methods to probabilistic settings, thereby enabling the consideration of evolving uncertainty in optimal trajectory planning [12].

Moreover, many problems in mechanical engineering and manufacturing involve deterministic or stochastic trajectory optimization. For example, motion planning for robotic and mobile systems is investigated in [13]. Other applications involve optimal path identification, such as in 3D printing [14,15,16] and automated tape placement [17,18], using trajectory adjustments along parallel paths. While the authors in [13] use a non-parametric approach for path planning through torque minimization, the other aforementioned methods rely on parametric or spline-based optimization for trajectory planning.

The proposed method has the advantage of being a non-parametric approach guaranteeing a global minimum of the stochastic problem at hand [19,20]. Although it is showcased in this work on routing applications, it can be applied to any path optimization problem by adapting the cost functional to the selected use case.

Many recent works analyze and propose possible technologies for routing optimization [21,22,23,24]. For example, a hybrid parallel computing scheme combined with an improved inverse distance weight interpolation method was proposed in [21] for a search-and-rescue route planning model based on the Lawnmower search method. The main drawback of that method remains the large extent of the domain to be explored by the optimization algorithm. The same drawback affects the work in [22], where a two-level optimization algorithm for urban routing was proposed for connected and automated vehicles. Recently, a novel algorithm optimizing routing for multi-modal transport in urban settings was proposed, employing a stochastic optimization approach based on a generalized interval-valued trapezoidal, in-time possibility distribution [23]. However, this method does not guarantee on-time arrival or a global optimum, though it can account for disturbances and uncertainties in multi-modal transportation. The method proposed in this work, by contrast, guarantees a global optimum at convergence by minimizing the functional that integrates the cost over the entire travel domain.

This paper begins by exploring the just-in-time routing process, where the objective is to reach the destination within a known time frame.

Section 3 then introduces the deterministic neural Euler–Lagrange (ELE) formulation. In Section 4, this deterministic approach is generalized to a stochastic one by combining the neural ELE with a Monte-Carlo sampling strategy and is validated on a use case with a known analytical solution.

Section 5 explores several applications and examples, comparing the deterministic and stochastic approaches. Finally, conclusions are presented in Section 6. A list of the symbols and nomenclature used is available in Table 1.

2. Just-in-Time Deterministic Routing

The path $x(t)$ is assumed to start at $x(0) = x_0$ at $t = 0$ and to finish at position $x(T) = x_T$ at time $T$. In turn, the local cost function $C$ is assumed to depend on the difference between the velocity of the agent, $\dot{x}$, and that of the environment at the agent’s position, $w(x, t)$.

In what follows, for the sake of illustration, we consider three different scenarios.

2.1. Constant Environment Effect

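We consider in this case the one-dimensional problem with $x(0) = 0$ at $t = 0$ and $x(T) = 1$ with $T = 1$, and a cost $C = (\dot{x} - w)^2$ with a constant environment effect $w = W$, that is, $C = (\dot{x} - W)^2$.

The selected boundary conditions are chosen without any loss of generality. Now, the ELE,

$$\frac{d}{dt}\left(\frac{\partial C}{\partial \dot{x}}\right) - \frac{\partial C}{\partial x} = 0,$$

leads to $\ddot{x} = 0$, which, integrated twice, results in $x(t) = a\,t + b$. Taking into account the initial and final conditions, this reduces to $x(t) = t$, with a constant velocity $\dot{x} = 1$, independent of the value of $W$. That is, for any value of $W$, the minimum-cost trajectory fulfilling both the initial and final conditions is the one traveled at constant unit velocity.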

2.2. Environment Effect Evolving in Time

Here we consider the same case as before, the only difference being the environment effect, which now grows in time, that is, $w = t$. Thus, $C = (\dot{x} - t)^2$, inserted into the ELE, leads to $\ddot{x} = 1$, whose solution implies the velocity $\dot{x} = t + 1/2$: the agent profits from the environment effect by increasing its velocity in time.
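Assuming the quadratic cost $C = (\dot{x} - w)^2$ and the boundary conditions $x(0) = 0$, $x(1) = 1$, the integration constants of this time-dependent case can be worked out explicitly, as a short check of the stated velocity:

```latex
\frac{d}{dt}\bigl[2(\dot{x} - t)\bigr] = 0
\;\Rightarrow\; \ddot{x} = 1
\;\Rightarrow\; x(t) = \tfrac{1}{2}t^{2} + a\,t + b,
\qquad x(0) = 0 \Rightarrow b = 0,
\qquad x(1) = 1 \Rightarrow a = \tfrac{1}{2},
\qquad x(t) = \tfrac{1}{2}\left(t^{2} + t\right),
\quad \dot{x}(t) = t + \tfrac{1}{2}.
```

The velocity thus grows from 1/2 at departure to 3/2 at arrival, while its average over $[0, 1]$ remains the unit value imposed by the boundary conditions.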

2.3. Environment Effect Evolving in Space

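This section considers $w = x$, which implies $C = (\dot{x} - x)^2$. Inserted into the ELE, this cost leads to $\ddot{x} - x = 0$, whose solution reads $x(t) = a\,e^{t} + b\,e^{-t}$, with the values of the constants $a$ and $b$ determined by the initial and final conditions $x(0) = 0$ and $x(1) = 1$.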
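Under the same assumptions (quadratic cost, $x(0) = 0$, $x(1) = 1$), the derivation for the space-dependent environment $w = x$ and the resulting constants can be sketched as:

```latex
\begin{aligned}
&\frac{\partial C}{\partial \dot{x}} = 2(\dot{x} - x), \quad
\frac{\partial C}{\partial x} = -2(\dot{x} - x)
\;\Rightarrow\; 2(\ddot{x} - \dot{x}) + 2(\dot{x} - x) = 0
\;\Rightarrow\; \ddot{x} = x, \\
&x(t) = a\,e^{t} + b\,e^{-t}, \quad
x(0) = 0 \Rightarrow b = -a, \quad
x(1) = 1 \Rightarrow a = \frac{1}{e - e^{-1}}, \\
&x(t) = \frac{\sinh t}{\sinh 1}, \qquad \dot{x}(t) = \frac{\cosh t}{\sinh 1}.
\end{aligned}
```

Here the velocity increases from $1/\sinh 1 \approx 0.85$ at departure to $\coth 1 \approx 1.31$ at arrival, because the environment effect grows as the agent moves away from the origin.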

2.4. Discussion

It is also important to mention that, in the case of the time-dependent environment just considered, changing $w = t$ to $w = -t$ changes both the trajectory $x(t)$ and the velocity along it. However, in the space-dependent case, when changing $w = x$ by $w = -x$, nothing changes.
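Under the quadratic-cost assumption and the boundary conditions $x(0) = 0$, $x(1) = 1$, the contrast between the time-dependent and space-dependent environments can be checked by scaling the environment with a factor $\alpha$:

```latex
\begin{aligned}
w = \alpha t:&\quad C = (\dot{x} - \alpha t)^{2}
\;\Rightarrow\; \ddot{x} = \alpha
\;\Rightarrow\; x(t) = \tfrac{\alpha}{2}\,t^{2} + \left(1 - \tfrac{\alpha}{2}\right)t, \\
w = \alpha x:&\quad C = (\dot{x} - \alpha x)^{2}
\;\Rightarrow\; 2(\ddot{x} - \alpha\dot{x}) + 2\alpha(\dot{x} - \alpha x) = 0
\;\Rightarrow\; \ddot{x} = \alpha^{2} x.
\end{aligned}
```

The time-dependent solution depends on the sign of $\alpha$, whereas the space-dependent ELE only involves $\alpha^{2}$, so reversing the environment ($\alpha = 1 \to \alpha = -1$) leaves the optimal trajectory unchanged in that case.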

For more complex environments, the resulting ordinary differential equation (ODE) can be solved by discretizing it with standard techniques, for example, finite differences. However, the cost function is sometimes not known analytically and is then approximated using a surrogate model, such as a neural network,

$$C(\dot{x}, x, t) \approx C_{NN}(\dot{x}, x, t),$$

where $x$ or $t$ could be absent in some particular cases. Such a cost function is determined during the agent calibration, for example in a wind tunnel in the case of drones, but it could also be obtained from high-fidelity solutions computed with aerodynamic and/or hydrodynamic software.
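When the cost is only available through a surrogate, the partial derivatives entering the ELE have to be evaluated numerically (in a neural implementation they would typically come from automatic differentiation). The sketch below is an illustration, not the authors’ implementation: it evaluates the ELE residual of an arbitrary callable cost $C(v, x, t)$ along a candidate trajectory using central finite differences. The names `ele_residual` and `quadratic_space_cost` and the step `h` are hypothetical, and the check assumes the quadratic cost $(\dot{x} - x)^2$ of the space-dependent case with $x(0) = 0$, $x(1) = 1$.

```python
import math
from typing import Callable

# C(v, x, t): local cost as a function of velocity v = dx/dt, position x and time t.
Cost = Callable[[float, float, float], float]
Trajectory = Callable[[float], float]


def ele_residual(cost: Cost, x: Trajectory, t: float, h: float = 1e-3) -> float:
    """ELE residual d/dt(dC/dv) - dC/dx along x(t), all derivatives by central differences."""

    def velocity(s: float) -> float:
        return (x(s + h) - x(s - h)) / (2.0 * h)

    def dC_dv(s: float) -> float:
        xs, vs = x(s), velocity(s)
        return (cost(vs + h, xs, s) - cost(vs - h, xs, s)) / (2.0 * h)

    def dC_dx(s: float) -> float:
        xs, vs = x(s), velocity(s)
        return (cost(vs, xs + h, s) - cost(vs, xs - h, s)) / (2.0 * h)

    # Total time derivative of dC/dv, minus the explicit derivative with respect to position.
    return (dC_dv(t + h) - dC_dv(t - h)) / (2.0 * h) - dC_dx(t)


def quadratic_space_cost(v: float, x: float, t: float) -> float:
    """Assumed quadratic cost with the space-dependent environment w = x."""
    return (v - x) ** 2


def exact(t: float) -> float:
    """Analytical solution of x'' - x = 0 with x(0) = 0 and x(1) = 1."""
    return math.sinh(t) / math.sinh(1.0)


def straight(t: float) -> float:
    """Constant-velocity path, which does not satisfy the ELE here."""
    return t


print(ele_residual(quadratic_space_cost, exact, 0.5))     # close to 0
print(ele_residual(quadratic_space_cost, straight, 0.5))  # close to -1
```

In a training loop, the squared residual evaluated at collocation times would play the role of the loss; swapping `quadratic_space_cost` for a trained surrogate requires no other change.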

4. Addressing Uncertainty

This section considers the time evolution of the environment uncertainty. As soon as its statistical distribution is defined, a sampling consisting of $N$ realizations $w_i$, $i = 1, \ldots, N$, can be performed. Then, a cost $C_i$ is associated with each realization $w_i$.

At first sight, the stochastic formulation reduces to the solution of $N$ deterministic Euler–Lagrange equations using the neural formulation introduced in the previous section, the trajectory statistics then being computed from all the solutions.

Another possibility, especially suited to neural architectures, consists of training the network with a loss function that considers the $N$ ELE residuals at once.

By defining the residual $R_i$ for realization $i$ using

$$R_i(t) = \frac{d}{dt}\left(\frac{\partial C_i}{\partial \dot{x}}\right) - \frac{\partial C_i}{\partial x},$$

the global loss $\mathcal{L}$ considered in the training reads

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N}\int_0^T R_i^2(t)\,dt.$$
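A minimal sketch of such a global loss, under simplifying assumptions that are not stated in the text: a quadratic cost $C_i = (\dot{x} - w_i(t))^2$ with purely time-dependent wind realizations, for which $R_i = 2(\ddot{x} - \dot{w}_i)$; a trajectory represented by its values on a uniform grid instead of a neural network; finite differences for the derivatives; and the time integral replaced by a rectangle rule over interior nodes. The function `stochastic_ele_loss` and the three wind realizations are hypothetical.

```python
from typing import Callable, List, Sequence

Wind = Callable[[float], float]


def stochastic_ele_loss(x: Sequence[float], winds: List[Wind], T: float = 1.0) -> float:
    """Mean over realizations of the time-integrated squared ELE residual.

    Assumes C_i = (dx/dt - w_i(t))**2, whose ELE residual is R_i = 2 * (x'' - w_i'),
    and a trajectory sampled at len(x) uniformly spaced nodes on [0, T].
    """
    K = len(x) - 1
    dt = T / K
    total = 0.0
    for w in winds:
        for k in range(1, K):  # interior nodes only: the end points are fixed
            t = k * dt
            x_dd = (x[k + 1] - 2.0 * x[k] + x[k - 1]) / dt**2
            w_d = (w(t + dt) - w(t - dt)) / (2.0 * dt)
            r = 2.0 * (x_dd - w_d)
            total += r * r * dt
    return total / len(winds)


# Three hypothetical wind realizations w_i(t) = c_i * t, with mean slope 1.
slopes = [0.5, 1.0, 1.5]
winds = [lambda t, c=c: c * t for c in slopes]
c_mean = sum(slopes) / len(slopes)

K = 100
grid = [k / K for k in range(K + 1)]
# ELE solution for the mean wind: x'' = c_mean with x(0) = 0 and x(1) = 1.
x_mean_wind = [0.5 * c_mean * t * t + (1.0 - 0.5 * c_mean) * t for t in grid]
x_straight = list(grid)  # x(t) = t, ignoring the wind

print(stochastic_ele_loss(x_mean_wind, winds))  # about 0.66
print(stochastic_ele_loss(x_straight, winds))   # about 4.62
```

For this quadratic, time-dependent cost the discrete loss is minimized when the second difference of $x$ equals the mean of the $\dot{w}_i$ at every node, i.e., by the ELE solution for the mean wind; the stochastic and mean-wind solutions only separate when the ELE depends nonlinearly on the wind.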

Numerical Validation in the Deterministic Case

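First we consider the deterministic 2D case, with position $\mathbf{x}(t) = (x(t), y(t))$ and velocity $\dot{\mathbf{x}}(t) = (\dot{x}(t), \dot{y}(t))$. The initial and final conditions are given by $\mathbf{x}(0) = \mathbf{x}_0$ and $\mathbf{x}(T) = \mathbf{x}_T$, at time $T$. The cost is assumed to be the one introduced in Section 2.3, extended to two dimensions, and given by

$$C(\dot{\mathbf{x}}, \mathbf{x}) = (\dot{x} - x)^2 + (\dot{y} - y)^2. \tag{7}$$

This cost function is used to generate data and to train a neural network surrogate approximating the cost,

$$C_{NN}(\dot{x}, \dot{y}, x, y) \approx C(\dot{\mathbf{x}}, \mathbf{x}), \tag{8}$$

which should replicate that cost function after appropriate training.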

First, 50 million input combinations were generated for the cost network $C_{NN}$; part of them were used for training, and the remaining ones were used to test the trained model.

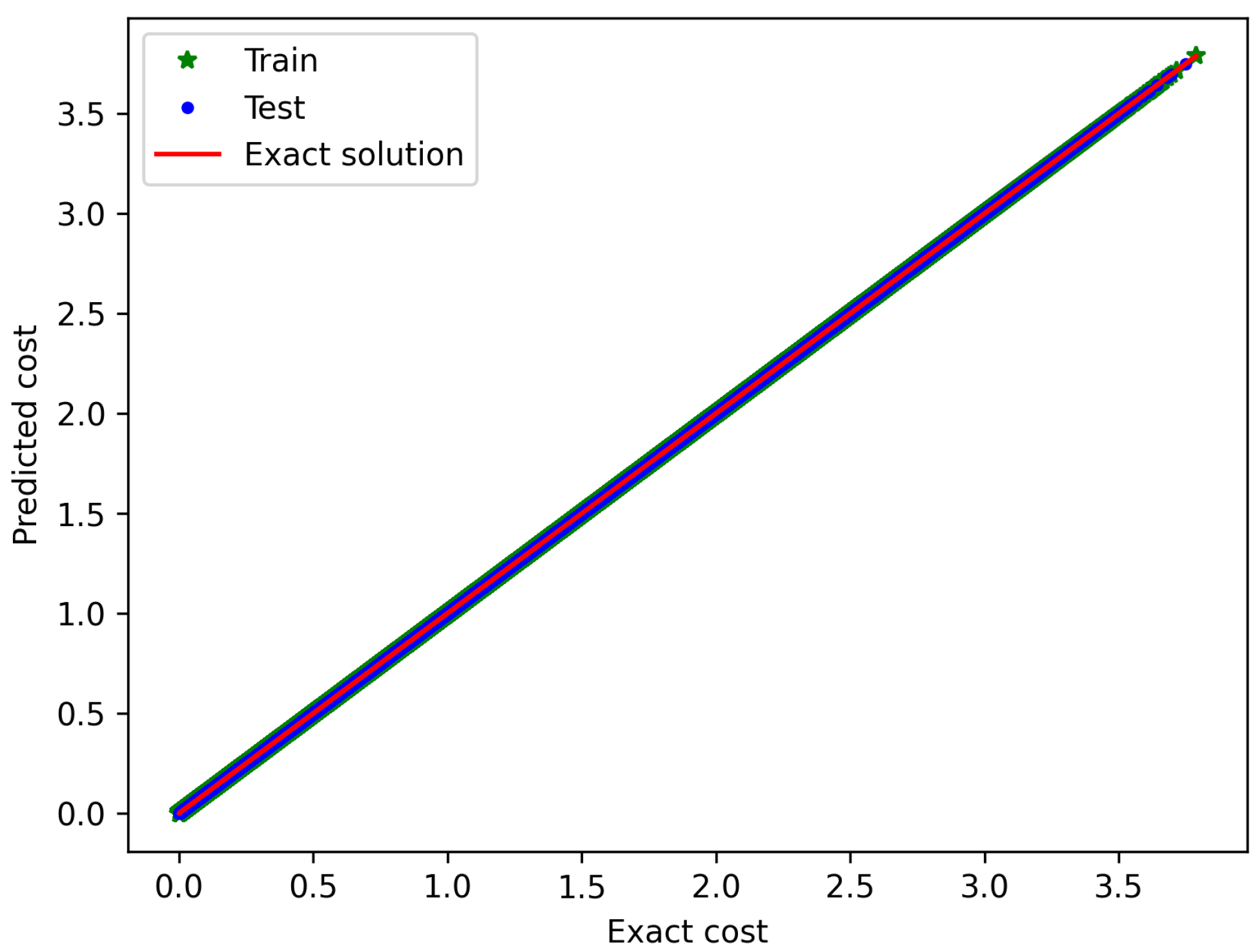

Figure 1 depicts the prediction (8) versus the reference value (7) of the cost function, from which an almost perfect training can be concluded: the $R^2$ metric is higher than 0.99 for both the training and the test datasets, with a low absolute percentage error on both datasets.

Once the cost network

is trained, the network

providing the optimal trajectory can be trained with the loss associated with the ELE residual as previously discussed. The used network for

is a three-layer dense network with size (200, 60, 60, 60, 1) and activations (tanh,relu,relu,rely,linear), respectively. Two different networks of identical structure are used to predict the

x and

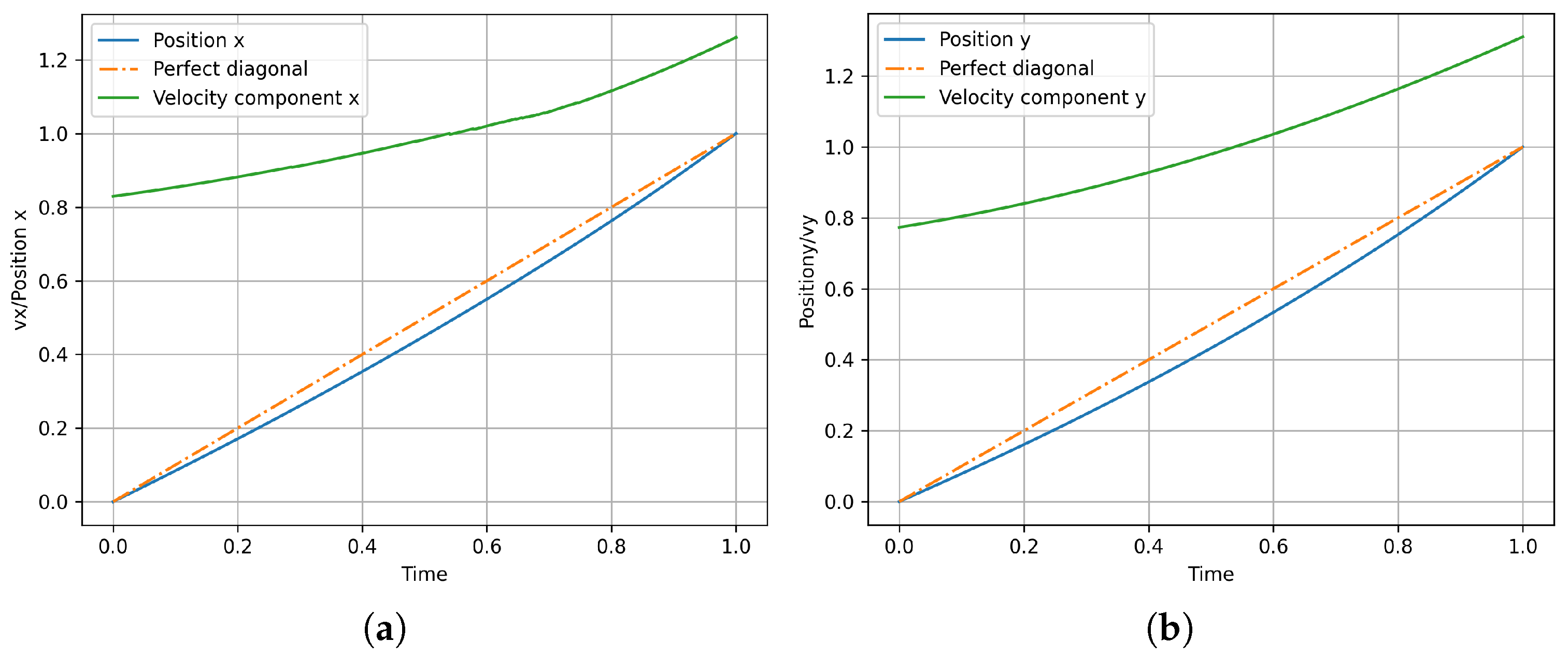

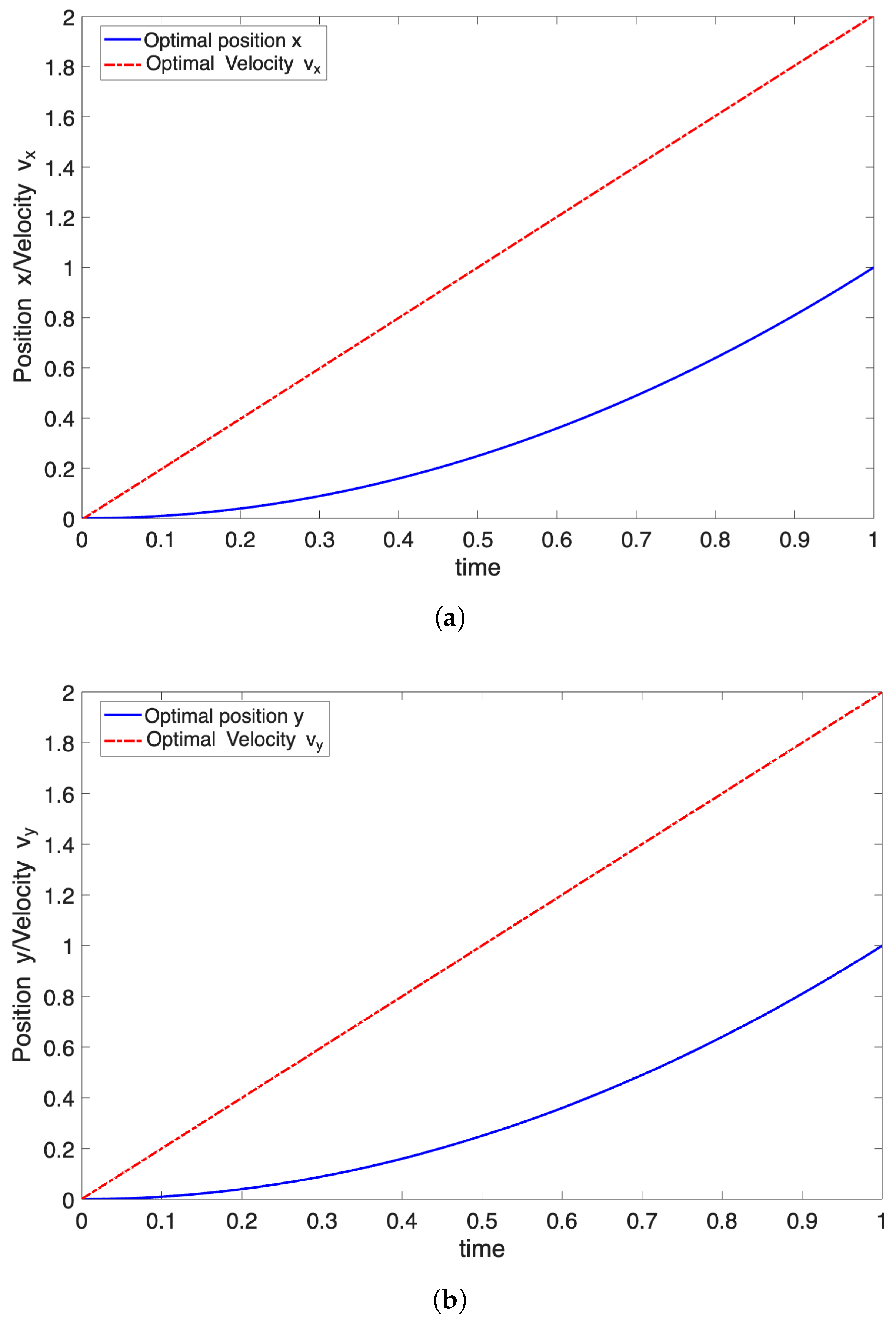

y coordinates of the path. The resulting optimal trajectory is depicted in

Figure 2 for both the

x and the

y coordinate.

Figure 2 also depicts the resulting optimal velocity.

In the present case, the problem admits an analytical solution that serves as a reference to validate the approach. Another possibility to validate the results in the present case, in which the cost function has an explicit expression, consists of solving the ELE with any discretization technique, such as finite differences.
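As a sketch of such a finite-difference check (an illustration under stated assumptions, not the validation actually performed), the one-dimensional analogue of the space-dependent case, $\ddot{x} - x = 0$ with $x(0) = 0$ and $x(1) = 1$ (quadratic cost with $w = x$), can be discretized with second-order central differences, solved with the Thomas algorithm for tridiagonal systems, and compared with the analytical solution $\sinh t / \sinh 1$. The function names are hypothetical.

```python
import math
from typing import List


def solve_tridiagonal(lower: List[float], diag: List[float],
                      upper: List[float], rhs: List[float]) -> List[float]:
    """Thomas algorithm; lower[0] and upper[-1] are ignored."""
    n = len(rhs)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):  # forward elimination
        m = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / m if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / m
    sol = [0.0] * n
    sol[-1] = d[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        sol[i] = d[i] - c[i] * sol[i + 1]
    return sol


def solve_ele_fd(K: int, x0: float = 0.0, x1: float = 1.0) -> List[float]:
    """Finite-difference solution of x'' - x = 0 on [0, 1] with x(0) = x0, x(1) = x1."""
    h = 1.0 / K
    n = K - 1  # number of interior unknowns
    # x_{k-1} - (2 + h^2) x_k + x_{k+1} = 0 at each interior node.
    lower = [1.0] * n
    diag = [-(2.0 + h * h)] * n
    upper = [1.0] * n
    rhs = [0.0] * n
    rhs[0] -= x0   # known boundary values moved to the right-hand side
    rhs[-1] -= x1
    return [x0] + solve_tridiagonal(lower, diag, upper, rhs) + [x1]


def max_error(K: int) -> float:
    """Maximum nodal deviation from the analytical solution sinh(t) / sinh(1)."""
    x = solve_ele_fd(K)
    return max(abs(x[k] - math.sinh(k / K) / math.sinh(1.0)) for k in range(K + 1))


print(max_error(100), max_error(200))
```

Halving the step should reduce the maximum error by roughly a factor of four, as expected for a second-order scheme; in 2D with $\mathbf{w}(\mathbf{x}) = \mathbf{x}$ the problem decouples and the same solver applies to each coordinate.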

The problem’s analytical solution is shown in Figure 3, along with a comparison to the one identified by the trained network. The relative and absolute errors between the analytical solution and the one provided by the neural approach are shown in Figure 4.

Figure 4 demonstrates the high accuracy of the proposed planning procedure.

5. Numerical Results in a Stochastic Setting

In the following examples, we consider the cost function defined by

However, the wind velocity $\mathbf{w}$ now becomes stochastic, with different expressions depending on the considered use case. In all the examples illustrated in this section, TensorFlow is used for the implementation [25]. The training of the neural network minimizing the stochastic ELE cost function is performed using the Adam stochastic gradient descent algorithm [26], with a custom learning rate decreasing over the course of 1000 epochs. Training starts with batches of 50 points along the trajectory, and the batch size increases over the epochs until the full trajectory is used. The trajectory’s boundary conditions are imposed by construction using a change of variables. The same neural network architecture as in the deterministic case is used for the trajectory. In this section, we also refer to the agent’s velocity $\dot{\mathbf{x}}$ as $\mathbf{v}$, of components $(v_x, v_y)$.
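The text does not give the change of variables used to impose the boundary conditions. One common construction, assumed here for illustration, writes each coordinate as $x(t) = x_0(1 - t/T) + x_T\,t/T + (t/T)(1 - t/T)\,g(t)$, where $g$ is the unconstrained network output, so that the end conditions hold for any network weights. The Python sketch below uses hypothetical names and a stand-in function for $g$.

```python
import math
from typing import Callable

Scalar = Callable[[float], float]


def with_boundary_conditions(g: Scalar, x0: float, xT: float, T: float) -> Scalar:
    """Wrap an unconstrained function g(t) so that x(0) = x0 and x(T) = xT hold exactly."""

    def x(t: float) -> float:
        s = t / T
        # Linear blend of the end points plus a correction that vanishes at both ends.
        return x0 * (1.0 - s) + xT * s + s * (1.0 - s) * g(t)

    return x


def raw_output(t: float) -> float:
    """Stand-in for the raw (unconstrained) network output."""
    return 5.0 * math.sin(7.0 * t)


x_coord = with_boundary_conditions(raw_output, x0=2.0, xT=-1.0, T=3.0)
print(x_coord(0.0), x_coord(3.0))  # 2.0 -1.0
```

In 2D, the same wrapper would be applied separately to the $x$ and $y$ networks. Writing the linear part as $x_0(1 - s) + x_T s$ rather than $x_0 + (x_T - x_0)s$ keeps the end values exact in floating-point arithmetic as long as $g$ returns finite values.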

In the rest of this section, the possible wind histories are sampled a priori; each sampled wind history is then treated as a deterministic realization.

5.1. A First Example with a Sampled Multi-Normal Wind Velocity Distribution

This section assumes a uniform environment whose uncertainty evolves in time. Thus, the environment statistics are assumed to follow a multi-normal distribution, with the dimensionality given by a partition of the time interval. If we consider a partition defined by $M$ nodes uniformly distributed on the time interval $[0, T]$, with the partition length $\Delta t = T/(M-1)$, the nodal times become

$$t_j = (j-1)\,\Delta t, \quad j = 1, \ldots, M.$$

The multi-normal statistic becomes fully determined by the covariance matrix $\boldsymbol{\Sigma}$ and the mean vector $\boldsymbol{\mu}$.

Here, without loss of generality, we consider a linear evolution in time of the components of vector $\boldsymbol{\mu}$, with the diagonal components of $\boldsymbol{\Sigma}$ (variances) also following a linear evolution in time, and with the covariance scaling inversely with the distance in time.

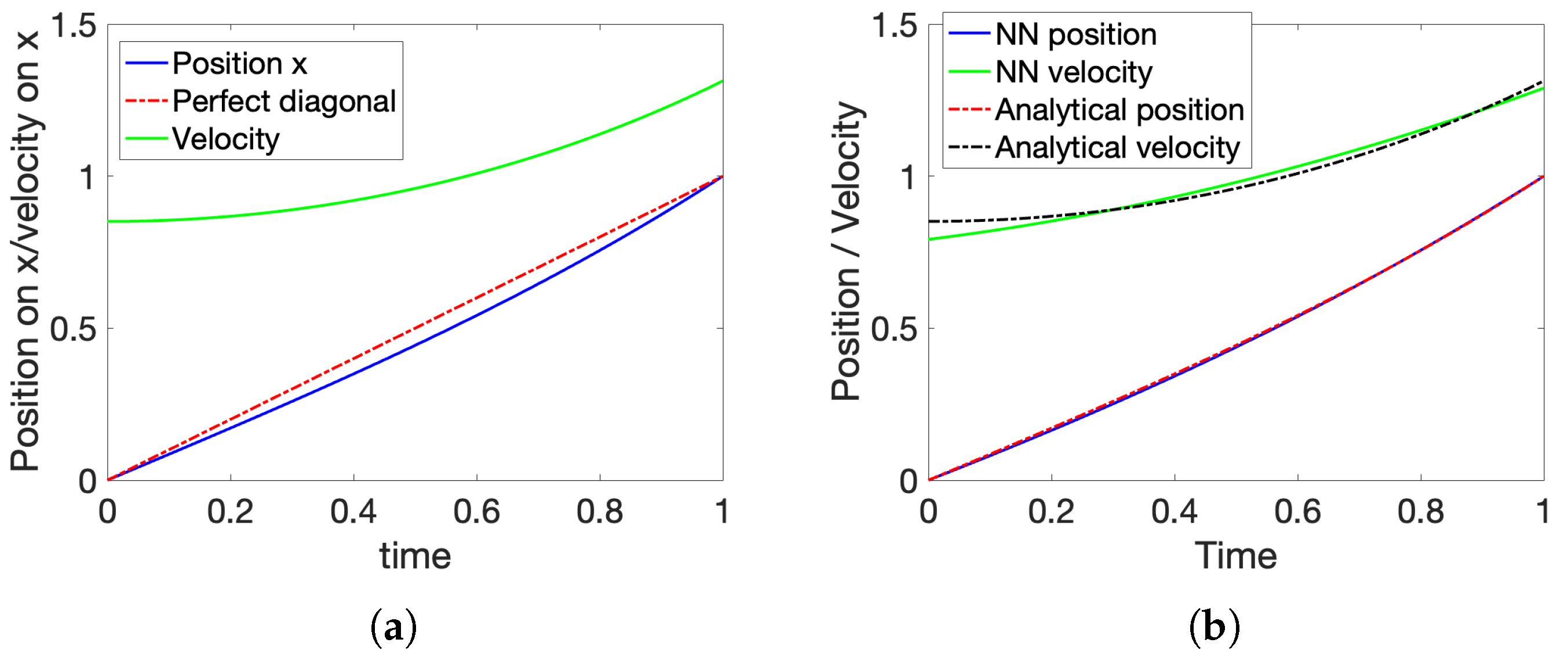

In the cases addressed below, fixed values of the parameters of these laws are considered. By sampling the multi-normal distribution defined above, different realizations of the stochastic process at the time nodes are obtained. From them, a spline can be used to determine the values in between the nodes.

In this example, the

x- and

y-components of vector

are given by

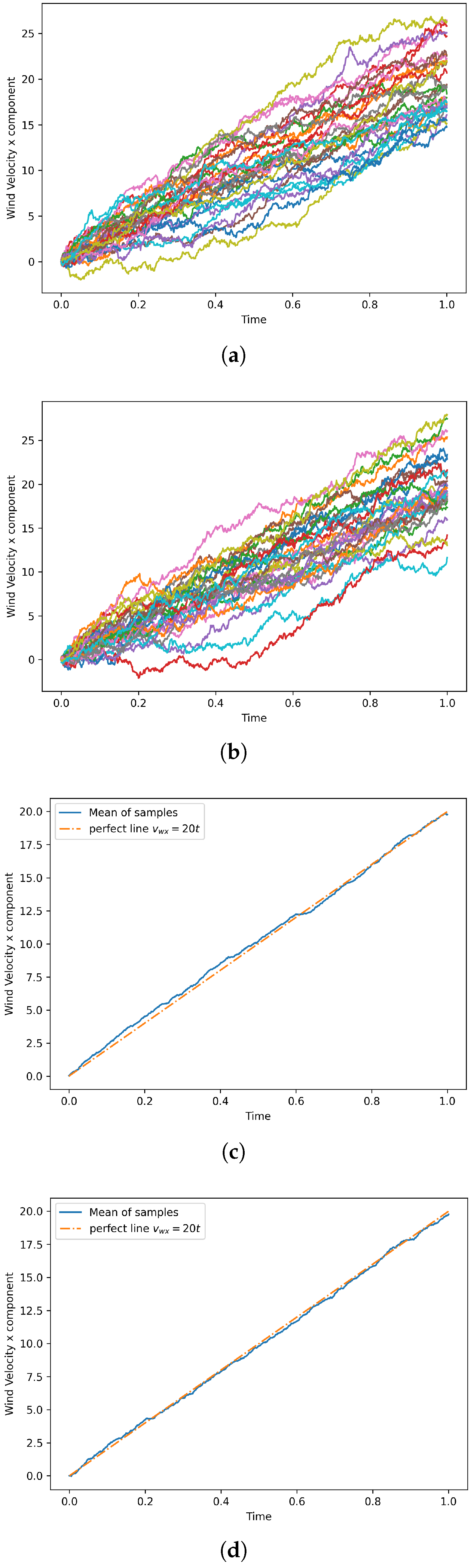

We considered 30 realizations of the multi-normal distribution, illustrated (without spline-based smoothing) in Figure 5. The results computed following the rationale described in Section 4 to address uncertainty quantification are illustrated in Figure 6.
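The multi-normal sampling of this first example can be sketched with a Cholesky factorization $\boldsymbol{\Sigma} = \mathbf{L}\mathbf{L}^{T}$, each realization being $\boldsymbol{\mu} + \mathbf{L}\mathbf{z}$ with $\mathbf{z}$ a vector of independent standard normal variables. The exact covariance law is not recoverable from the text, so the exponential correlation $\exp(-|t_j - t_k|/\ell)$ used below is an assumption, as are all parameter values and function names; the spline interpolation between nodes is not shown.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def cholesky(a: Matrix) -> Matrix:
    """Lower-triangular L with L L^T = a (a must be symmetric positive definite)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(a[i][i] - s)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L


def wind_statistics(M: int, T: float, mu0: float, muT: float, var0: float, varT: float,
                    ell: float) -> Tuple[List[float], List[float], Matrix]:
    """Nodal times, linear mean and variance, and an assumed exponential correlation."""
    dt = T / (M - 1)
    t = [j * dt for j in range(M)]
    mean = [mu0 + (muT - mu0) * tj / T for tj in t]
    std = [math.sqrt(var0 + (varT - var0) * tj / T) for tj in t]
    cov = [[std[j] * std[k] * math.exp(-abs(t[j] - t[k]) / ell) for k in range(M)]
           for j in range(M)]
    return t, mean, cov


def sample_histories(mean: List[float], cov: Matrix, n: int,
                     rng: random.Random) -> List[List[float]]:
    """Draw n realizations mean + L z, with z a vector of independent N(0, 1) draws."""
    L = cholesky(cov)
    M = len(mean)
    histories = []
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in range(M)]
        histories.append([mean[j] + sum(L[j][k] * z[k] for k in range(j + 1))
                          for j in range(M)])
    return histories


# Hypothetical parameters: 11 nodes on [0, 1], mean 0 -> 2, variance 0.01 -> 0.25.
t, mean, cov = wind_statistics(M=11, T=1.0, mu0=0.0, muT=2.0, var0=0.01, varT=0.25, ell=0.5)
histories = sample_histories(mean, cov, n=30, rng=random.Random(0))
print(len(histories), len(histories[0]))  # 30 11
```

One such set of histories would be drawn independently for each wind component; the exponential kernel is used here only because it yields a positive definite covariance that decays with the time distance.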

5.2. A Second Case-Study Considering a Markov Chain Monte-Carlo Sampling

In this second example, the time evolution of the wind velocity is sampled using the Metropolis–Hastings algorithm, a Markov chain Monte Carlo method widely used to sample multivariate distributions [27].

This section considers two cases: (i) a symmetrical probability distribution of the wind velocity in terms of amplitude and orientation, and (ii) a slightly asymmetrical probability distribution with the same mean and standard deviation.

In both sampling efforts, the sampled probability is normal, with mean $\mu_{|w|}$ and standard deviation $\sigma_{|w|}$ for the wind velocity magnitude $|\mathbf{w}|$. Regarding the orientation $\theta$, the sampled probability is normal, with mean $\mu_\theta$ and standard deviation $\sigma_\theta$. However, for both the velocity magnitude and orientation, the acceptance probability is normal, with mean $\mu_q$ and standard deviation $\sigma_q$, in the symmetric case, whereas in the asymmetric case it is a lognormal distribution with the same mean and standard deviation, the quantity $q$ being either the wind velocity amplitude $|\mathbf{w}|$ or the wind orientation $\theta$. The wind velocity magnitude and its orientation are sampled independently, and the wind vector components are computed using

$$w_x = |\mathbf{w}|\cos\theta, \qquad w_y = |\mathbf{w}|\sin\theta.$$

Using the symmetrical and asymmetrical probability density functions, we construct 30 wind velocity samples for each use case. The sampled wind velocities along $x$ and $y$ are illustrated in Figure 7, along with their mean.
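The sampling just described can be sketched as follows, under an interpretation that is an assumption: the normal "sampled probability" is taken as the fixed proposal of an independence Metropolis–Hastings sampler, the normal or lognormal "acceptance probability" as its target, and each step of the chain as one time step of the wind history. The lognormal is parameterized to match the prescribed mean $m$ and standard deviation $s$ through $\sigma^2 = \ln(1 + s^2/m^2)$ and $\mu = \ln m - \sigma^2/2$. All numerical values and function names are hypothetical.

```python
import math
import random
from typing import Callable, List, Tuple

LogPdf = Callable[[float], float]


def normal_logpdf(m: float, s: float) -> LogPdf:
    def f(q: float) -> float:
        return -0.5 * ((q - m) / s) ** 2 - math.log(s * math.sqrt(2.0 * math.pi))
    return f


def lognormal_logpdf(m: float, s: float) -> LogPdf:
    """Lognormal log-density with mean m > 0 and standard deviation s."""
    sig2 = math.log(1.0 + (s / m) ** 2)
    mu = math.log(m) - 0.5 * sig2

    def f(q: float) -> float:
        if q <= 0.0:
            return -math.inf  # zero density outside the support
        return -((math.log(q) - mu) ** 2) / (2.0 * sig2) - math.log(q * math.sqrt(2.0 * math.pi * sig2))
    return f


def independence_mh(log_target: LogPdf, m: float, s: float, n_steps: int,
                    rng: random.Random) -> List[float]:
    """Metropolis-Hastings chain with a fixed normal proposal N(m, s); one state per time step."""
    log_prop = normal_logpdf(m, s)
    state = m  # start at the mean, assumed to lie inside the target support
    chain = []
    for _ in range(n_steps):
        cand = rng.gauss(m, s)
        log_ratio = log_target(cand) + log_prop(state) - log_target(state) - log_prop(cand)
        if log_ratio >= 0.0 or rng.random() < math.exp(log_ratio):
            state = cand
        chain.append(state)
    return chain


def wind_history(mag: Tuple[float, float], ang: Tuple[float, float], n_steps: int,
                 symmetric: bool, rng: random.Random) -> List[Tuple[float, float]]:
    """Independent chains for magnitude and orientation, combined into (w_x, w_y)."""
    target = normal_logpdf if symmetric else lognormal_logpdf
    mags = independence_mh(target(*mag), *mag, n_steps, rng)
    angs = independence_mh(target(*ang), *ang, n_steps, rng)
    return [(a * math.cos(th), a * math.sin(th)) for a, th in zip(mags, angs)]


# Hypothetical statistics: magnitude 5 +/- 1, orientation 0.8 +/- 0.2 rad, 60 time steps.
rng = random.Random(1)
samples = [wind_history((5.0, 1.0), (0.8, 0.2), 60, symmetric=False, rng=rng) for _ in range(30)]
print(len(samples), len(samples[0]))  # 30 60
```

In the symmetric case, the proposal and the target coincide and every candidate is accepted, so the chain reduces to independent normal draws; the lognormal target is what makes the asymmetric histories differ.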

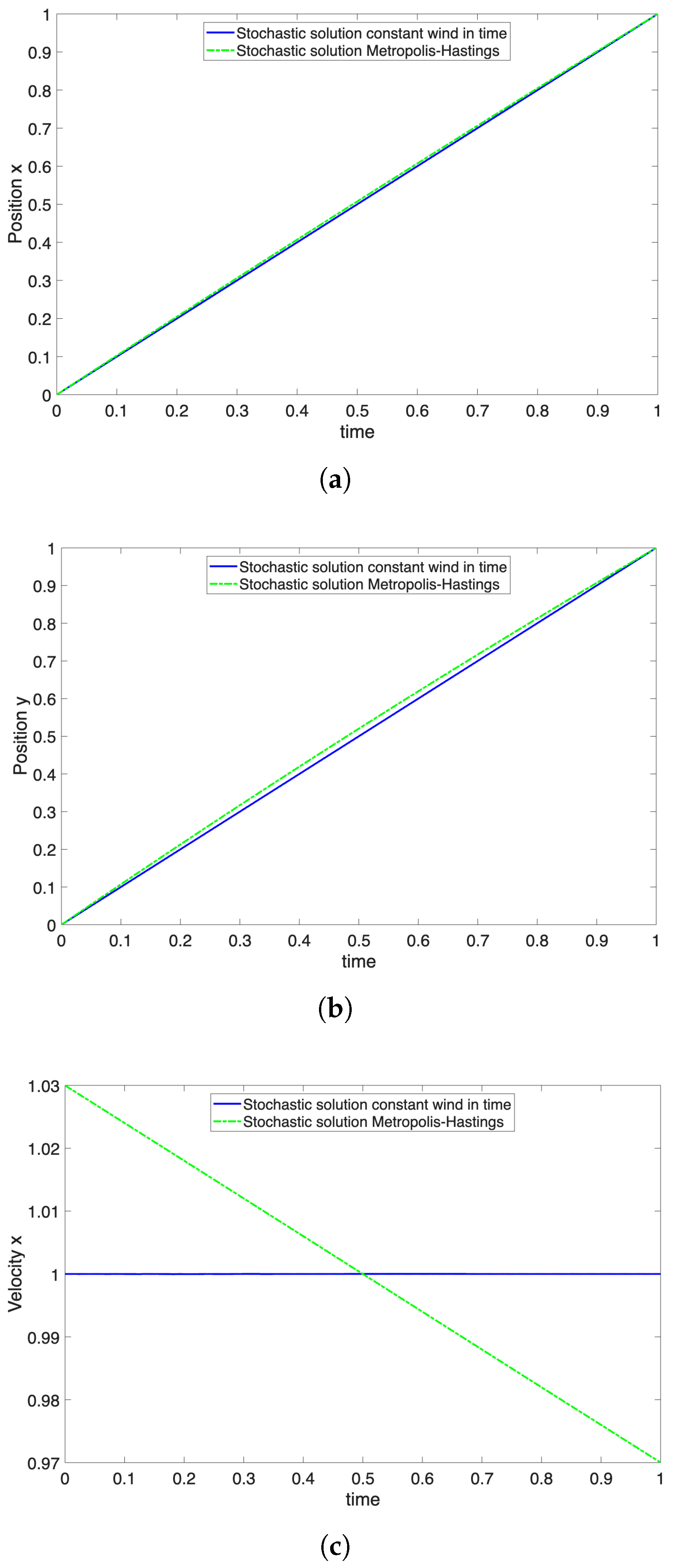

The agent’s trajectory (path and velocity) are optimized using the cost function defined in Equation (

9). The solutions obtained using the Euler–Lagrange-based stochastic procedure are illustrated in

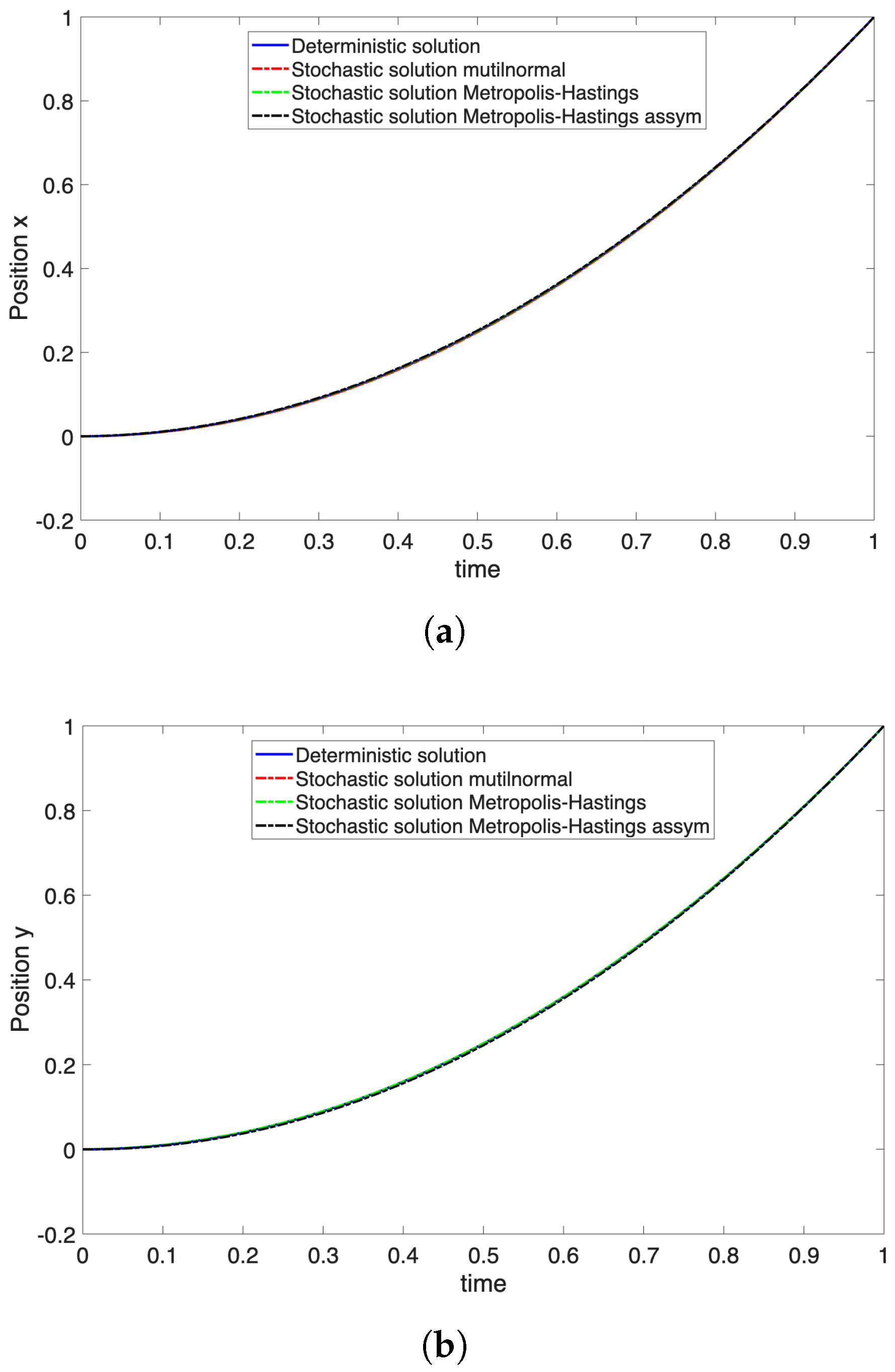

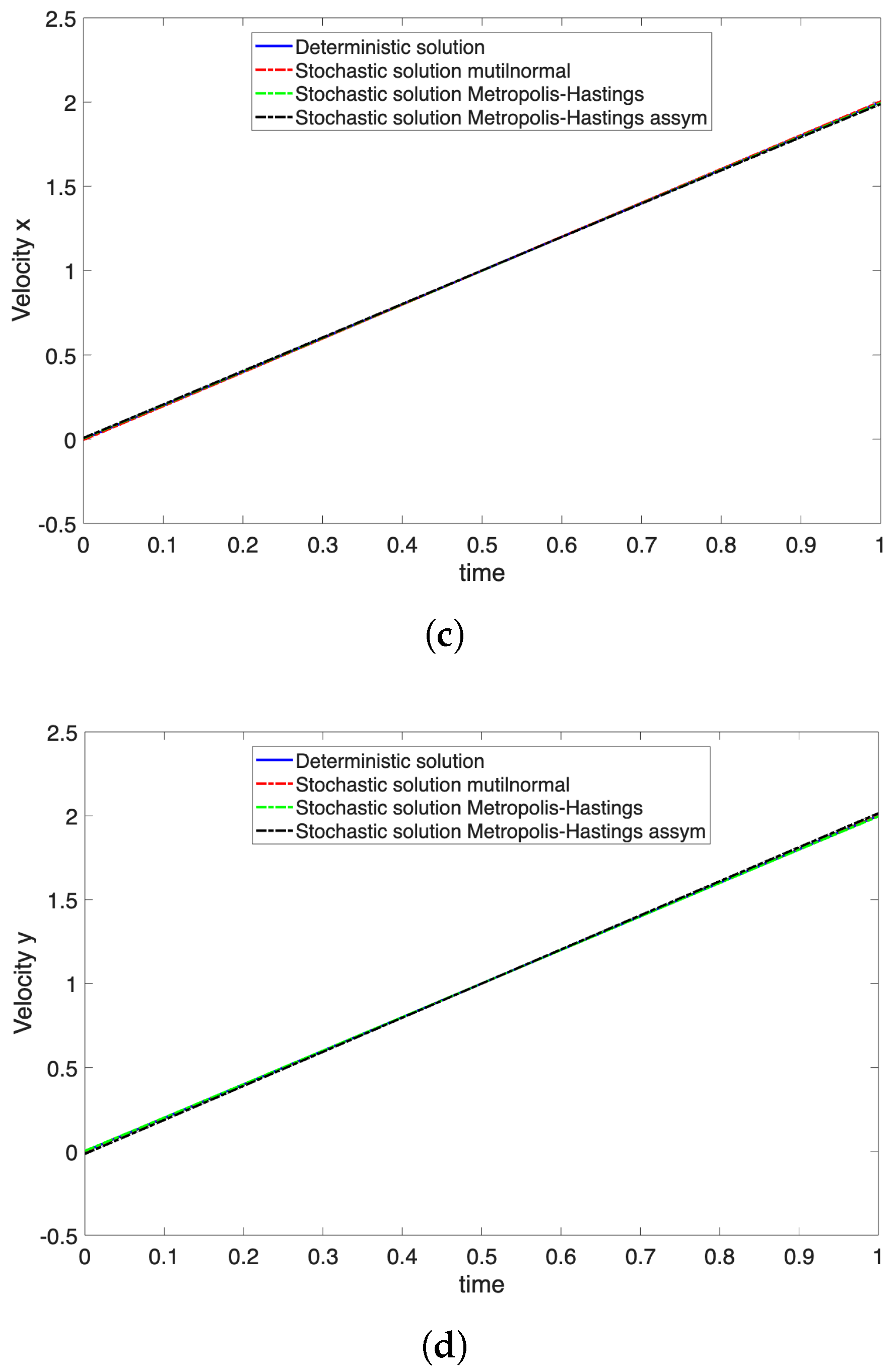

Figure 8 for the symmetric and asymmetric cases.

5.3. Discussion on the Stochastic Approaches

The present section compares the stochastic approaches using the different sampling techniques with a deterministic approach. The selected deterministic solution is the one minimizing the cost for the mean value of the distribution within the deterministic framework. In this example, the deterministic solution is thus the optimal path for a deterministic environment in which the wind velocity is set to the theoretical mean of the wind distributions considered in Section 5.1 and Section 5.2.

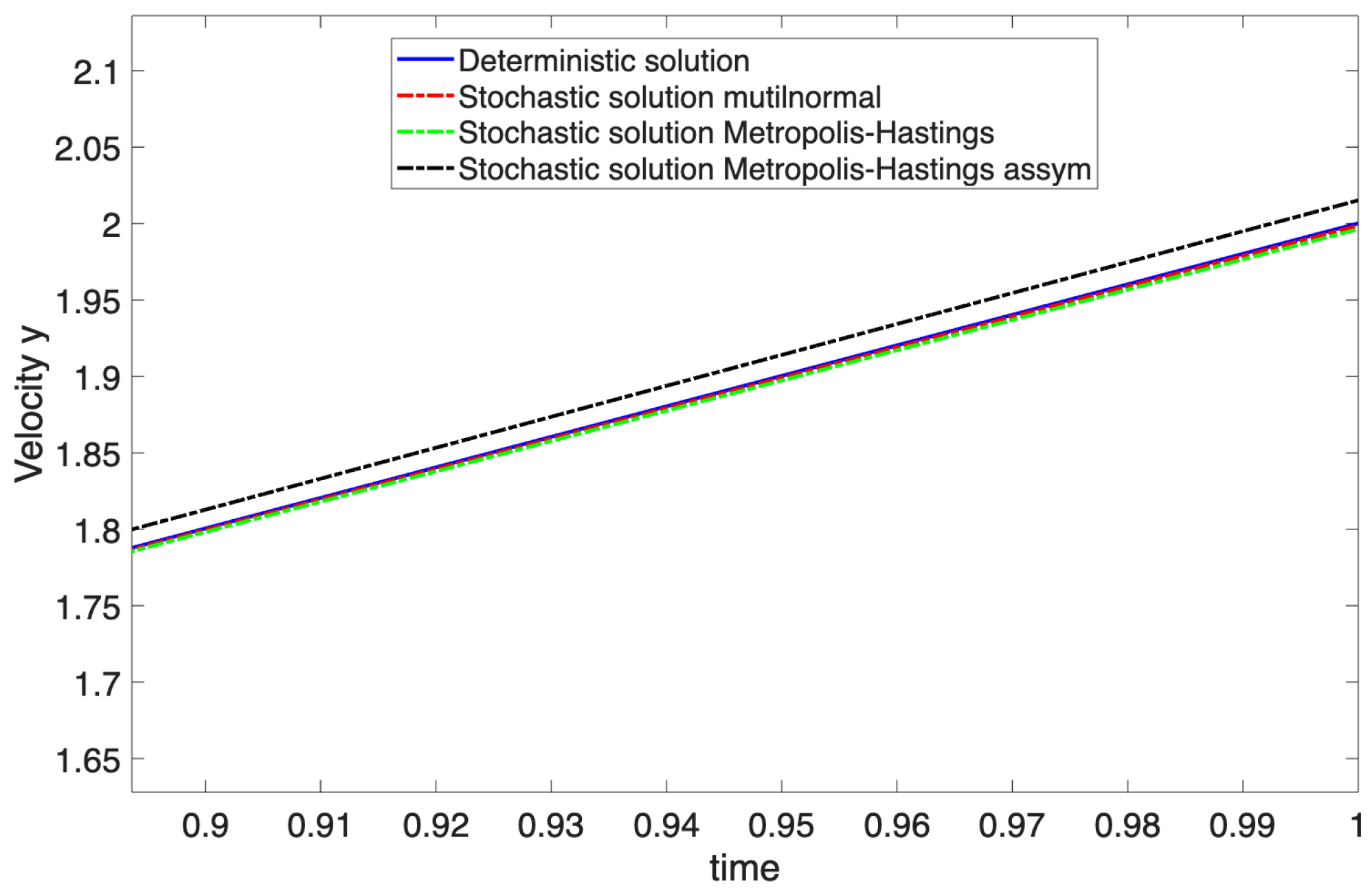

Figure 9 compares the optimal path in terms of the $x$ and $y$ coordinates, as well as the velocity components $v_x$ and $v_y$, for the different distributions.

Figure 10 shows a zoom on the identified paths. From it, we can conclude that the deterministic solution using the wind average is comparable to the probabilistic solutions, the difference becoming more noticeable for the asymmetrical probability density functions, for which the nonlinearities involved in the procedure are more significant. In general, in the treated examples, minimizing the cost for the mean value of the wind velocity is not optimal with respect to the cost that each wind velocity sample entails.
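Why the mean-wind solution is not optimal in general can be illustrated on a toy scalar problem (a Jensen-type effect): choose a constant velocity $v$ minimizing the cost averaged over wind samples. The sketch below does not reproduce the cost of Equation (9); the quadratic and cubic costs, the skewed samples, and the helper names are hypothetical, and the minimization uses a simple ternary search, valid because both costs are convex in $v$.

```python
from typing import Callable, Sequence

Cost = Callable[[float, float], float]  # C(v, w) for agent velocity v and wind w


def argmin_convex(f: Callable[[float], float], lo: float, hi: float, iters: int = 200) -> float:
    """Ternary search for the minimizer of a convex function on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if f(m1) < f(m2):
            hi = m2
        else:
            lo = m1
    return 0.5 * (lo + hi)


def best_constant_velocity(cost: Cost, winds: Sequence[float]) -> float:
    """Velocity minimizing the cost averaged over the wind samples."""
    return argmin_convex(lambda v: sum(cost(v, w) for w in winds) / len(winds), -10.0, 10.0)


winds = [0.0, 0.0, 3.0]  # hypothetical skewed samples; their mean is 1.0


def quadratic(v: float, w: float) -> float:
    return (v - w) ** 2


def cubic(v: float, w: float) -> float:
    return abs(v - w) ** 3


print(best_constant_velocity(quadratic, winds))  # about 1.0, the mean wind
print(best_constant_velocity(cubic, winds))      # about 1.2426 = 3 / (1 + sqrt(2))
```

With the quadratic cost, the optimum coincides with the mean wind, whereas the non-quadratic cost combined with asymmetric samples shifts it away from the mean, which mirrors the trend reported for the asymmetric distributions.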

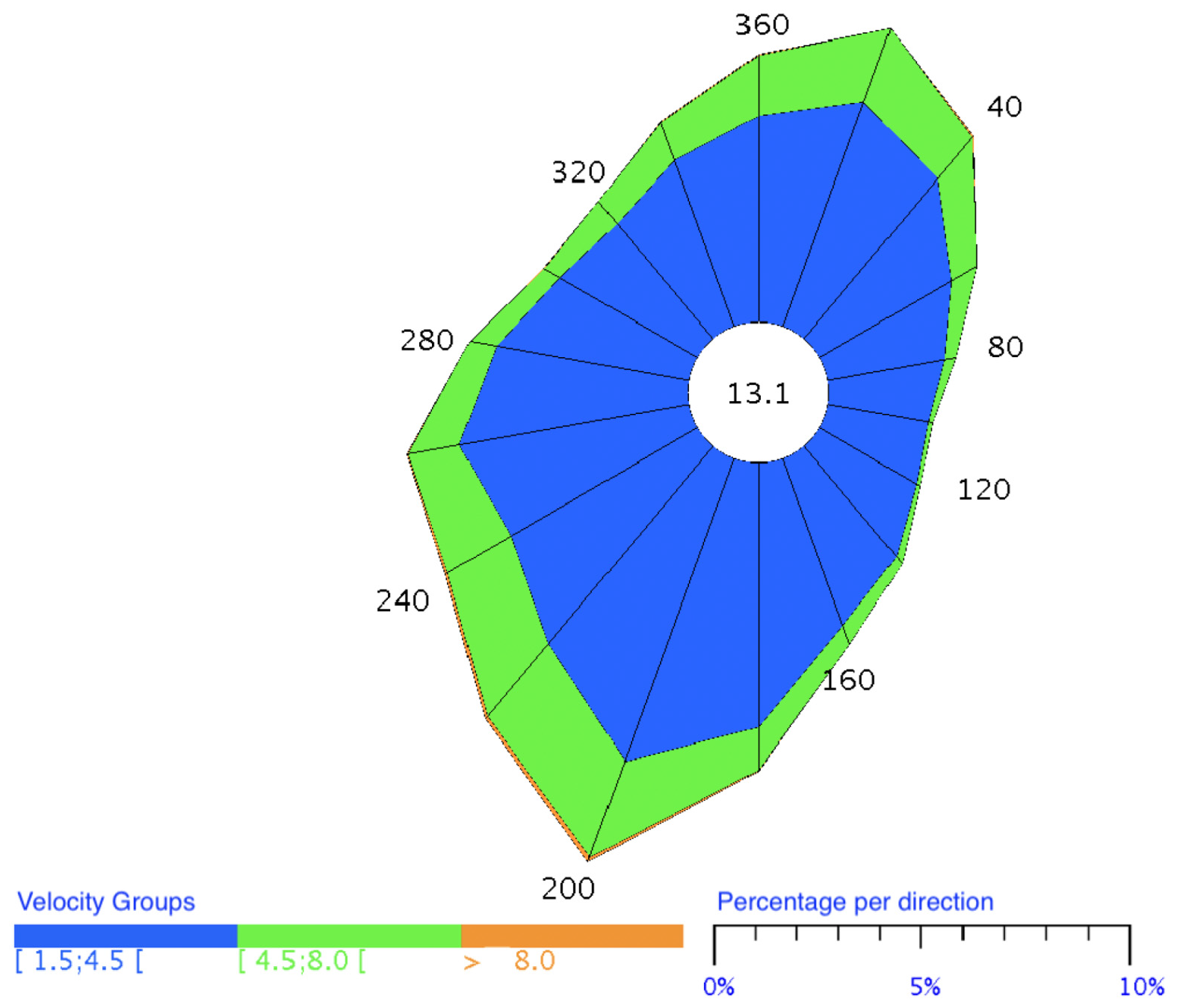

5.4. Considering Available Wind Velocity Statistics

This section considers the wind probability distribution in the city of Paris [28] shown in Figure 11.

The wind distribution is sampled for two cases. The first set of 30 samples uses values that are constant over the selected time frame of one hour, picked using the probability distribution illustrated in Figure 11. The second set of 30 samples is obtained using the Metropolis–Hastings sampling algorithm, with the sampled wind evolving every minute. The optimal trajectory results are illustrated in Figure 12 for both cases.
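The first sampling strategy can be sketched as follows: each sample draws one direction and speed from a discretized wind rose and keeps the corresponding wind vector for every minute of the one-hour frame. The bins below are placeholders and not the Paris statistics of [28]; the angle convention (direction the wind blows towards, measured from the $x$ axis) and the function name are assumptions.

```python
import math
import random
from typing import List, Tuple

# Hypothetical wind-rose bins: (direction in degrees, speed in m/s, probability).
# Placeholder numbers only; they are NOT the Paris statistics of [28].
WIND_ROSE: List[Tuple[float, float, float]] = [
    (0.0, 3.0, 0.10), (45.0, 3.5, 0.15), (90.0, 4.0, 0.25), (135.0, 3.0, 0.10),
    (180.0, 2.5, 0.10), (225.0, 3.0, 0.10), (270.0, 4.5, 0.15), (315.0, 3.5, 0.05),
]


def constant_wind_samples(rose: List[Tuple[float, float, float]], n_samples: int,
                          n_minutes: int, rng: random.Random) -> List[List[Tuple[float, float]]]:
    """Each sample draws one bin and keeps its wind vector over the whole time frame."""
    weights = [p for _, _, p in rose]
    samples = []
    for _ in range(n_samples):
        direction, speed, _ = rng.choices(rose, weights=weights, k=1)[0]
        theta = math.radians(direction)
        wind = (speed * math.cos(theta), speed * math.sin(theta))
        samples.append([wind] * n_minutes)  # one value per minute, constant in time
    return samples


samples = constant_wind_samples(WIND_ROSE, n_samples=30, n_minutes=60, rng=random.Random(0))
print(len(samples), len(samples[0]))  # 30 60
```

The second strategy would instead let the wind change at every one-minute step, for instance by running a Markov chain over the same distribution rather than holding a single draw.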

A slight difference can be noted between the two sampling procedures. A deterministic solution considering the average values of the wind velocity remains very close to the solution obtained by sampling velocities that are constant in time, as shown in Figure 12.

6. Conclusions

This work extends former proposals for computing optimal trajectories from a neural network approximation trained with the Euler–Lagrange equation residual as a loss function. Its extension to a stochastic setting, in which the environment varies in space and its uncertainty evolves in time, makes it possible to address stochastic environmental evolution during routing operations within the proposed ELE framework.

The proposed strategy consists of the statistical modeling of the uncertainty, which is then sampled using a Monte-Carlo procedure. The network providing the optimal trajectory is then trained with a loss that considers the Euler–Lagrange equation residuals associated with the different realizations of the stochastic process.

The numerical examples validated the procedure and confirmed its simplicity. The results show a difference between deterministic approaches that consider only the mean values of the stochastic realizations and the stochastic EL approach using Monte-Carlo minimization of the stochastic ELE form, even if, for the simple cases addressed, the difference is not significant. When the cost function is linear, there is no difference between the deterministic solution considering the average environmental effect and the proposed approach, in which the cost function is minimized stochastically. On the contrary, when the cost function is highly nonlinear, the effect becomes more pronounced.

It is important to emphasize the zero-data framework used in this routing process: neither experimental nor simulated data are needed for the network training, as the network learns the optimal solution by minimizing the Euler–Lagrange residual, in contrast with the vast majority of the techniques available in the literature.

The proposed work can be extended to account for static and dynamically evolving obstacles. Moreover, the influence of the Monte-Carlo sample size and the convergence of the proposed Euler–Lagrange framework will be analyzed in future work.