4.2. Implementation Details

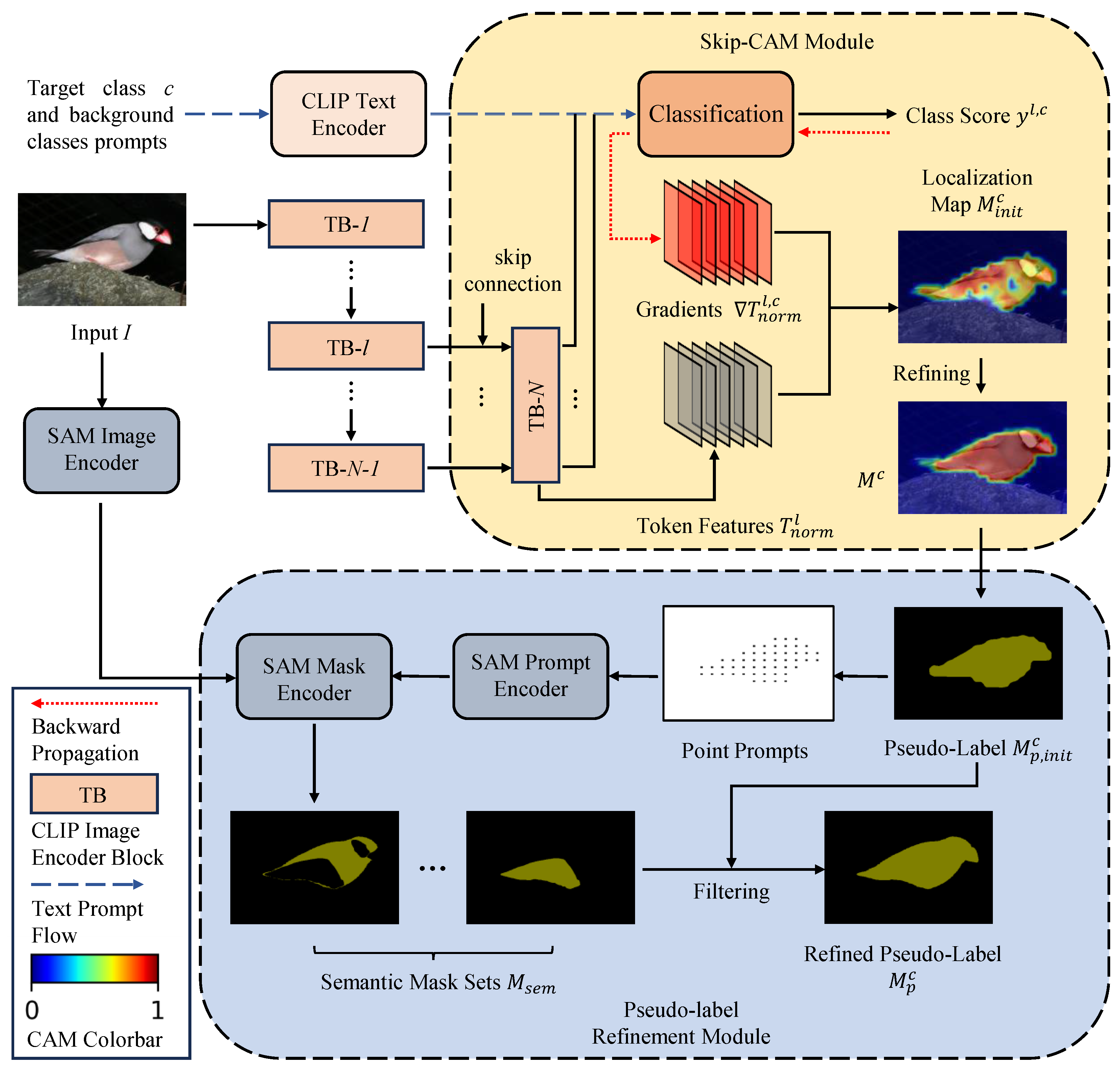

For localization map generation, we adopt the pre-trained ViT-B/16 CLIP model [11]. The number of transformer blocks N is 12, and the number of skip connections is 3. The confidence threshold is set to 0.4 on PASCAL VOC 2012 and to 0.7 on MS COCO 2014. For pseudo-label refinement, we use the pre-trained SAM ViT-B model [13] with an input resolution of . In our method, both CLIP and SAM are kept frozen. For candidate points, both grid dimensions are set to 16, and the IoU filtering threshold is 0.5.

We integrate the proposed CEP into the end-to-end WeCLIP framework [9] for online pseudo-label generation. Therefore, WeCLIP is used as the comparative baseline in this paper.

Table 1 reports the relevant settings. For training, following the WeCLIP setup, we use batch sizes of 4 and 8 for VOC 2012 and COCO 2014, respectively. Optimization is performed using the AdamW optimizer with an initial learning rate of . Training is conducted for 30,000 iterations on VOC 2012 and 80,000 iterations on COCO 2014, with an input scale of . During the testing phase, the use of multi-scale inputs and CRF is a common practice in WSSS; for example, ToCo [8], DuPL [35], and WeCLIP [9] all employ these strategies. For a fair comparison, we follow the testing settings of WeCLIP, which adopt multi-scale factors of and , along with flipping and DenseCRF [42] post-processing.

The software environment for the experiments consists of Python 3.10, PyTorch 2.1, Torchvision 0.16, and CUDA 12.1. Training is conducted on a single NVIDIA RTX 3090 Ti GPU.
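For reference, the settings stated in this subsection can be gathered into a small configuration object. The sketch below is only an illustrative summary: the class and field names are hypothetical and are not taken from the WeCLIP or CEP code, and settings whose values are not given in the text (learning rate, input scale, multi-scale factors) are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetSettings:
    """Per-dataset values stated in the text (field names are hypothetical)."""
    confidence_threshold: float
    batch_size: int
    iterations: int


@dataclass(frozen=True)
class SharedSettings:
    """Values shared by both datasets (field names are hypothetical)."""
    clip_backbone: str = "ViT-B/16"
    sam_backbone: str = "ViT-B"
    num_transformer_blocks: int = 12
    num_skip_connections: int = 3
    grid_points_per_side: int = 16
    iou_filter_threshold: float = 0.5
    optimizer: str = "AdamW"
    freeze_clip: bool = True
    freeze_sam: bool = True


SHARED = SharedSettings()
PER_DATASET = {
    "voc2012": DatasetSettings(confidence_threshold=0.4, batch_size=4, iterations=30_000),
    "coco2014": DatasetSettings(confidence_threshold=0.7, batch_size=8, iterations=80_000),
}
```

Keeping the per-dataset values separate from the shared ones makes it explicit that only the confidence threshold, batch size, and iteration count differ between VOC 2012 and COCO 2014.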

4.3. Comparison with State-of-the-Art Methods

We conduct comprehensive comparisons between the proposed WeCLIP+CEP method and existing multi-stage as well as end-to-end WSSS approaches on the PASCAL VOC 2012 and MS COCO 2014 datasets. The methods that adopt CLIP belong to the category of language-supervised approaches and are denoted by L. In addition, we also indicate which methods utilize the SAM model.

Table 2 presents the performance comparison between our method and several representative state-of-the-art techniques on the PASCAL VOC 2012 dataset. As shown, the proposed WeCLIP+CEP achieves a 3.1% mIoU improvement over the WeCLIP baseline on the validation set, reaching 79.5% mIoU. Among end-to-end WSSS methods, WeCLIP+CEP sets a new performance record, outperforming recent methods such as MoRe [43], FFR [44], and SeCo [45] by 3.1%, 3.5%, and 5.5% mIoU, respectively. Even when compared with multi-stage methods, WeCLIP+CEP remains highly competitive. In particular, it surpasses the recent SAM-based S2C [15] method by 1.3% mIoU and outperforms CLIP-ES+POT [46] by 3.4% mIoU on the VOC 2012 validation set.

Table 3 shows the results on the more challenging MS COCO 2014 dataset. WeCLIP+CEP achieves 48.6% mIoU on this dataset, yielding a 1.5% improvement over the WeCLIP baseline. This gain is consistent with the improvement trend observed on VOC, highlighting the cross-dataset generalization ability of the proposed approach. Compared with other end-to-end methods, WeCLIP+CEP also attains the highest accuracy, surpassing FFR and MoRe by 1.8% and 1.2% mIoU, respectively. Furthermore, our method outperforms many multi-stage approaches, achieving competitive performance.

Overall, across both datasets, WeCLIP+CEP consistently surpasses the WeCLIP baseline and pushes the performance boundary of end-to-end WSSS. These results demonstrate that integrating WeCLIP with the proposed CEP module yields substantial performance gains.

Table 4 presents the confidence intervals of the segmentation results for our method on the VOC 2012 and COCO 2014 validation sets. It can be observed that the 95% confidence intervals of our method on both datasets are entirely above those of the baseline, thereby confirming that our method achieves superior segmentation performance compared to the baseline.

Furthermore, we employ the bootstrap method to perform a statistical significance test to determine whether the performance improvement of our method over the baseline model is statistically significant. The results on the VOC 2012 validation set show that , further verifying that the segmentation performance of our method is significantly better than that of the baseline.
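As a rough illustration of how such an interval and significance test could be obtained, the sketch below applies a paired percentile bootstrap to per-image scores. This is an assumption made for illustration: the text does not specify the resampling unit or the test statistic, and dataset-level mIoU is normally computed from an accumulated confusion matrix rather than as a mean of per-image scores. The function name, its parameters, and the toy scores are hypothetical.

```python
import random


def paired_bootstrap(ours, baseline, n_resamples=2000, seed=0):
    """Percentile bootstrap on paired per-image score differences.

    Returns (ci_low, ci_high, p_value), where the interval is the 95%
    percentile interval of the mean difference and p_value is the fraction
    of resampled mean differences that are not positive (one-sided).
    """
    if len(ours) != len(baseline) or not ours:
        raise ValueError("score lists must be non-empty and of equal length")
    rng = random.Random(seed)
    diffs = [o - b for o, b in zip(ours, baseline)]
    n = len(diffs)
    means = []
    for _ in range(n_resamples):
        # Resample images with replacement, keeping each pair together.
        sample = [diffs[rng.randrange(n)] for _ in range(n)]
        means.append(sum(sample) / n)
    means.sort()
    ci_low = means[int(0.025 * n_resamples)]
    ci_high = means[int(0.975 * n_resamples) - 1]
    p_value = sum(m <= 0 for m in means) / n_resamples
    return ci_low, ci_high, p_value


# Toy per-image scores (made-up values, not results from the paper).
baseline_scores = [0.50, 0.60, 0.70, 0.55, 0.65]
our_scores = [0.55, 0.66, 0.74, 0.60, 0.72]
ci_low, ci_high, p_value = paired_bootstrap(our_scores, baseline_scores)
```

Pairing the scores by image removes the variance due to image difficulty, which is why the differences, rather than the two score lists separately, are resampled.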

4.5. Ablation Studies

Effect of Components. We conduct ablation studies on the VOC 2012 training set to evaluate the quality of pseudo-labels and quantitatively validate the effectiveness of each component in our proposed method. Unless otherwise specified, all experiments adopt the pre-trained weights of WeCLIP to eliminate the influence of model performance on the quality of pseudo-labels.

Table 5 demonstrates the effects of the pseudo-label refinement and Skip-CAM modules. Since VOC 2012 does not provide ready-made boundary annotations, for mean Boundary IoU (mBIoU) evaluation we apply a morphological erosion operation to the given class segmentation masks. The boundary of each class is then obtained as the difference between the original segmentation region and its eroded version, with the erosion kernel size set to 23. As shown in the table, the SAM-based pseudo-label refinement module brings improvements of 2.5% in mIoU and 10.1% in mBIoU. This demonstrates that the refinement module improves not only the overall accuracy of pseudo-labels but also, more substantially, their boundary quality, thereby achieving the purpose of refinement. The Skip-CAM module contributes an additional improvement of 1.4% in mIoU and 0.5% in mBIoU, as it enriches the semantic information of features through skip connections, leading to more precise localization maps and higher-quality pseudo-labels.
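The boundary extraction used for the mBIoU evaluation can be sketched in plain Python as follows. The helper names are hypothetical, a square erosion kernel is assumed, and pixels outside the image are treated as background during erosion (an assumption, since the border handling is not stated). In the evaluation itself the kernel size would be 23, and the per-class boundary IoU values would be averaged to obtain mBIoU.

```python
def erode(mask, kernel_size):
    """Binary erosion with a square kernel; out-of-image pixels count as 0."""
    height, width = len(mask), len(mask[0])
    radius = kernel_size // 2
    eroded = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            keep = True
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    inside = 0 <= yy < height and 0 <= xx < width
                    if not inside or mask[yy][xx] == 0:
                        keep = False
                        break
                if not keep:
                    break
            eroded[y][x] = 1 if keep else 0
    return eroded


def boundary(mask, kernel_size):
    """Boundary = original region minus its eroded version."""
    eroded = erode(mask, kernel_size)
    return [[m & (1 - e) for m, e in zip(mask_row, eroded_row)]
            for mask_row, eroded_row in zip(mask, eroded)]


def boundary_iou(pred, target, kernel_size):
    """IoU between the boundary bands of a predicted and a reference mask."""
    bp, bt = boundary(pred, kernel_size), boundary(target, kernel_size)
    inter = sum(p & t for rp, rt in zip(bp, bt) for p, t in zip(rp, rt))
    union = sum(p | t for rp, rt in zip(bp, bt) for p, t in zip(rp, rt))
    return inter / union if union else 0.0  # 0.0 when both bands are empty


# Toy example: a 5x5 square inside a 7x7 image, eroded with a 3x3 kernel.
toy_mask = [[1 if 1 <= y <= 5 and 1 <= x <= 5 else 0 for x in range(7)]
            for y in range(7)]
toy_boundary = boundary(toy_mask, kernel_size=3)
```

With a 3x3 kernel the eroded square shrinks to 3x3, so the boundary band of the toy mask is the one-pixel ring of 16 pixels around it.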

To qualitatively validate the effectiveness of our CEP method, we conduct a visual comparison of pseudo-labels generated by CEP and WeCLIP [9]. To ensure a fair comparison of pseudo-label quality, we adopt the original training weights of WeCLIP, thereby eliminating the influence of model performance differences on the pseudo-labels. The experiments are conducted on the VOC 2012 training set.

Figure 3 presents the visualization results. Compared with the baseline method (i.e., WeCLIP), pseudo-labels produced by CEP exhibit higher quality and more precise object boundaries. For instance, in the sheep image of the second column, the pseudo-label generated by CEP delineates the head contour with greater clarity. This demonstrates that our approach can effectively improve pseudo-label quality. Furthermore, in the cat image of the first column, the pseudo-label generated by CEP not only captures more accurate object boundaries but also covers a more complete target region.

Effect of SAM Variants. Table 6 presents a comparison of the pseudo-label quality generated by the proposed CEP combined with different versions of SAM on the VOC 2012 training set. The experimental results show that as the scale of the SAM model increases, the mIoU score of the generated pseudo labels gradually improves. However, larger-scale SAM models also significantly increase the number of parameters, leading to higher computational overhead. Considering both performance and efficiency, we adopt the ViT-B version of SAM as the default configuration in this work.

Effect of the Number of Skip Connections. We compare the impact of different numbers of skip connections on the quality of localization maps. Table 7 reports the effect of the number of skip connections in the Skip-CAM module, as defined in Equation (2). Experimental results show that increasing the number of skip connections to 3 leads to a significant performance boost, owing to the fusion of richer semantic information. However, when the number of skip connections exceeds 3, the improvement becomes marginal or the performance even degrades. This may be due to the large distribution gap between features from shallow and deep blocks, which negatively impacts classification performance and introduces noisy gradients, thus affecting the quality of localization maps. Additionally, increasing the number of skip connections incurs higher computational costs. Therefore, in this work, we set the number of skip connections to 3.

Effect of Candidate Point Settings. In the pseudo-label refinement module, the image region is first divided into grids, with the center of each grid taken as a candidate point. Subsequently, the target-class regions in the initial pseudo labels are used as guidance to filter these candidate points.

Table 8 presents the effect of different candidate point settings on pseudo-label generation performance on the VOC 2012 training set. The results show that when the grid size increases from to , the mIoU of the pseudo labels improves from 76.6% to 80.0%, but the average computation time per image also increases from 0.07 s to 0.19 s. In other words, within a certain range, denser candidate point settings lead to higher mIoU but also significantly higher computational costs. Considering both performance and efficiency, the grid size is set to 16 × 16 in this work.
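The grid-and-filter procedure for candidate points can be sketched as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, the filter is read as "keep the grid centers that fall inside the target-class region of the initial pseudo-label", and the toy label map is made up.

```python
def grid_candidate_points(height, width, rows=16, cols=16):
    """Return the (y, x) centers of a rows x cols grid laid over the image."""
    cell_h = height / rows
    cell_w = width / cols
    return [(int((r + 0.5) * cell_h), int((c + 0.5) * cell_w))
            for r in range(rows) for c in range(cols)]


def filter_by_class(points, label_map, target_class):
    """Keep candidate points that lie on pixels labeled with target_class."""
    return [(y, x) for (y, x) in points if label_map[y][x] == target_class]


# Toy 4x4 initial pseudo-label: class 1 on the left half, background (0) elsewhere.
toy_labels = [[1, 1, 0, 0] for _ in range(4)]
toy_points = grid_candidate_points(4, 4, rows=2, cols=2)
kept_points = filter_by_class(toy_points, toy_labels, target_class=1)
```

A denser grid yields more surviving points per object and therefore more prompts to evaluate, which is consistent with the trade-off between mIoU and per-image time reported in Table 8.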

Effect of Prompt Settings. Both our method and the baseline (i.e., WeCLIP [9]) follow CLIP-ES [12] to design text prompts for CLIP. Taking VOC 2012 as an example, the background class set includes [ground, land, grass, tree, building, wall, sky, lake, water, river, sea, railway, railroad, keyboard, helmet, cloud, house, mountain, ocean, road, rock, street, valley, bridge, sign], while the foreground class set includes [aeroplane, bicycle, bird avian, boat, bottle, bus, car, cat, chair seat, cow, diningtable, dog, horse, motorbike, {person with clothes, people, human}, pottedplant, sheep, sofa, train, tvmonitor screen]. The prompt prefix set is [a clean origami {}.], and we denote the prompt settings from CLIP-ES as Prompt1.

Following the text prompt templates adopted in CLIP, we replace the prompt prefix set in Prompt1 with [itap of a {}., a bad photo of the {}., a origami {}., a photo of the large {}., a {} in a video game., art of the {}., a photo of the small {}.], denoted as Prompt2. On the basis of Prompt1, we further add synonyms or attributes to foreground classes, e.g., adding the synonym airplane to aeroplane and the attribute wings to bird, denoted as Prompt3. Finally, we combine the settings of Prompt2 and Prompt3 to obtain Prompt4.
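To make the difference between the prompt settings concrete, the sketch below expands class entries with a prefix set. It is an illustration only: the function name is hypothetical, and splitting a comma-separated entry such as the person class into separate names, each combined with every prefix, is an assumption about how such entries are handled rather than something stated in the text.

```python
PROMPT1_PREFIXES = ["a clean origami {}."]
PROMPT2_PREFIXES = [
    "itap of a {}.",
    "a bad photo of the {}.",
    "a origami {}.",
    "a photo of the large {}.",
    "a {} in a video game.",
    "art of the {}.",
    "a photo of the small {}.",
]


def build_prompts(class_entries, prefixes):
    """Map each class entry to the list of text prompts generated for it."""
    prompts = {}
    for entry in class_entries:
        # Assumed handling: a comma-separated entry lists alternative names.
        names = [name.strip() for name in entry.split(",") if name.strip()]
        prompts[entry] = [prefix.format(name) for name in names for prefix in prefixes]
    return prompts


foreground = ["cat", "bird avian", "person with clothes, people, human"]
prompt1 = build_prompts(foreground, PROMPT1_PREFIXES)
prompt2 = build_prompts(foreground, PROMPT2_PREFIXES)
```

Under this reading, Prompt1 produces one prompt per class name, while Prompt2 multiplies that by the seven prefixes; how the resulting text embeddings are aggregated per class is left out of the sketch.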

Table 9 reports the pseudo-label generation performance under different prompt settings. As observed, the text prompt setting from CLIP-ES (Prompt1) achieves the best performance. The use of synonyms or attributes for class labels in Prompt3 yields slightly worse performance than Prompt1. Introducing diverse prompt prefixes in Prompt2 leads to a noticeable performance drop, and the performance of Prompt4 is almost identical to that of Prompt2. Therefore, both our work and the baseline adopt the text prompt setting from CLIP-ES.

Effect of the Confidence Threshold. Table 10 presents the impact of the confidence threshold in Equation (6). The value of the threshold increases from 0.1 to 0.9 with a step size of 0.1. The mIoU of the pseudo-labels first increases and then decreases as the threshold becomes larger. A larger threshold implies that fewer pixels are retained in the initial localization maps. When , the proposed method achieves the highest pseudo-label accuracy on the VOC 2012 training set, reaching 79.4% mIoU. For a fair comparison, following the settings of WeCLIP, we set the threshold to 0.4 for VOC 2012 and 0.7 for COCO 2014.
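The statement that a larger threshold retains fewer pixels can be illustrated with a toy sweep. The sketch assumes a simple rule in which a pixel is retained when its normalized localization score reaches the threshold; this is a stand-in for illustration and not the exact form of Equation (6). The function names and the toy scores are hypothetical.

```python
def retained_mask(localization_map, threshold):
    """Mark pixels whose normalized score reaches the threshold (assumed rule)."""
    return [[1 if score >= threshold else 0 for score in row]
            for row in localization_map]


def retained_count(localization_map, threshold):
    """Number of pixels kept in the localization map at a given threshold."""
    return sum(sum(row) for row in retained_mask(localization_map, threshold))


# Toy 2x3 localization map with scores in [0, 1] (made-up values).
toy_map = [[0.05, 0.35, 0.55],
           [0.45, 0.75, 0.95]]

# Sweep the threshold from 0.1 to 0.9 in steps of 0.1, as in the ablation.
thresholds = [i / 10 for i in range(1, 10)]
counts = [retained_count(toy_map, t) for t in thresholds]
```

The retained pixel count never increases along the sweep, which matches the trade-off described above: a low threshold keeps noisy pixels, while a high one discards parts of the object.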

Effect of the IoU Filtering Threshold. Table 11 shows the impact of the IoU filtering threshold in Equation (8). We incrementally increase the threshold from 0.1 to 0.9 with a step size of 0.1 and evaluate the variation in mIoU of the pseudo-labels generated by the proposed method on the VOC 2012 training set. The experimental results indicate that as the threshold increases, the mIoU first rises and then falls. When and , the difference in mIoU is only 0.1%, which is almost negligible. Although shows slightly better performance, considering the generality and robustness of the method, we finally set the threshold to 0.5 for both the VOC 2012 and COCO 2014 datasets.
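A threshold of this kind can be illustrated with a small IoU-based filter. The sketch assumes that each candidate mask is compared against the target-class region of the initial pseudo-label and kept only if their IoU exceeds the threshold; the exact quantities compared in Equation (8) may differ, and the function names and toy masks are hypothetical.

```python
def mask_iou(mask_a, mask_b):
    """IoU between two masks given as sets of (y, x) pixel coordinates."""
    union = mask_a | mask_b
    return len(mask_a & mask_b) / len(union) if union else 0.0


def filter_masks(candidates, reference, threshold=0.5):
    """Keep candidate masks whose IoU with the reference exceeds the threshold."""
    return [mask for mask in candidates if mask_iou(mask, reference) > threshold]


# Toy masks (made-up coordinates).
reference_region = {(0, 0), (0, 1), (1, 0), (1, 1)}
well_aligned = {(0, 0), (0, 1), (1, 0)}   # IoU = 3 / 4
poorly_aligned = {(1, 1), (2, 2), (3, 3)}  # IoU = 1 / 6
kept_masks = filter_masks([well_aligned, poorly_aligned], reference_region)
```

A very low threshold admits masks that barely overlap the target region, while a very high one rejects masks that extend the region in useful ways, which is one plausible explanation for the rise-then-fall trend in Table 11.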