Video-Based CSwin Transformer Using Selective Filtering Technique for Interstitial Syndrome Detection

Abstract

1. Introduction

2. Materials and Methods

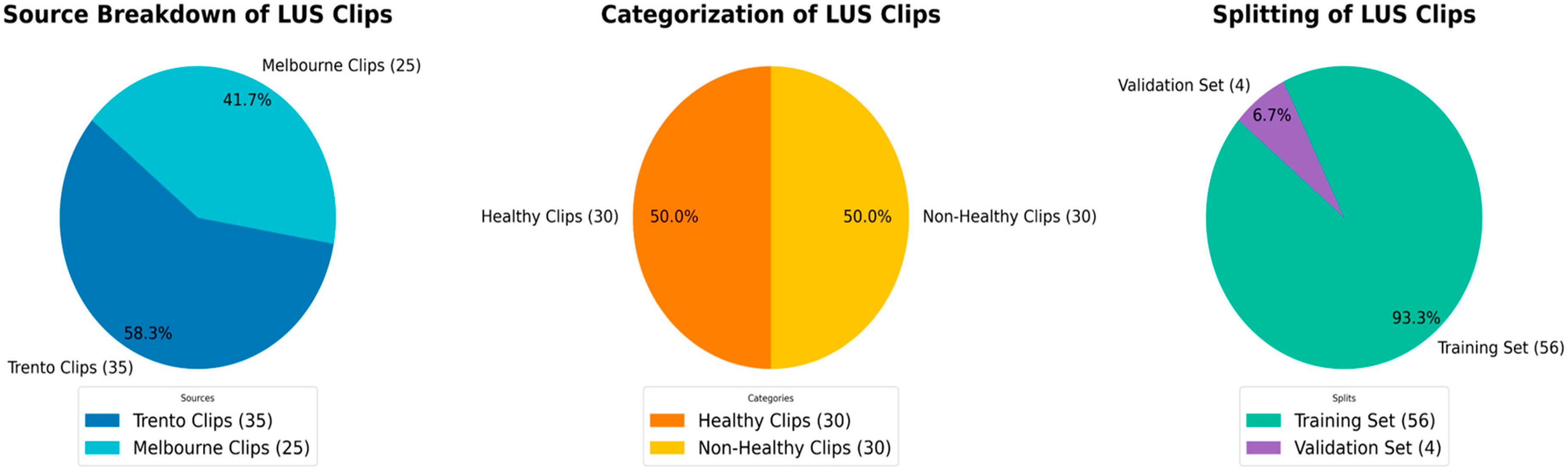

2.1. Datasets

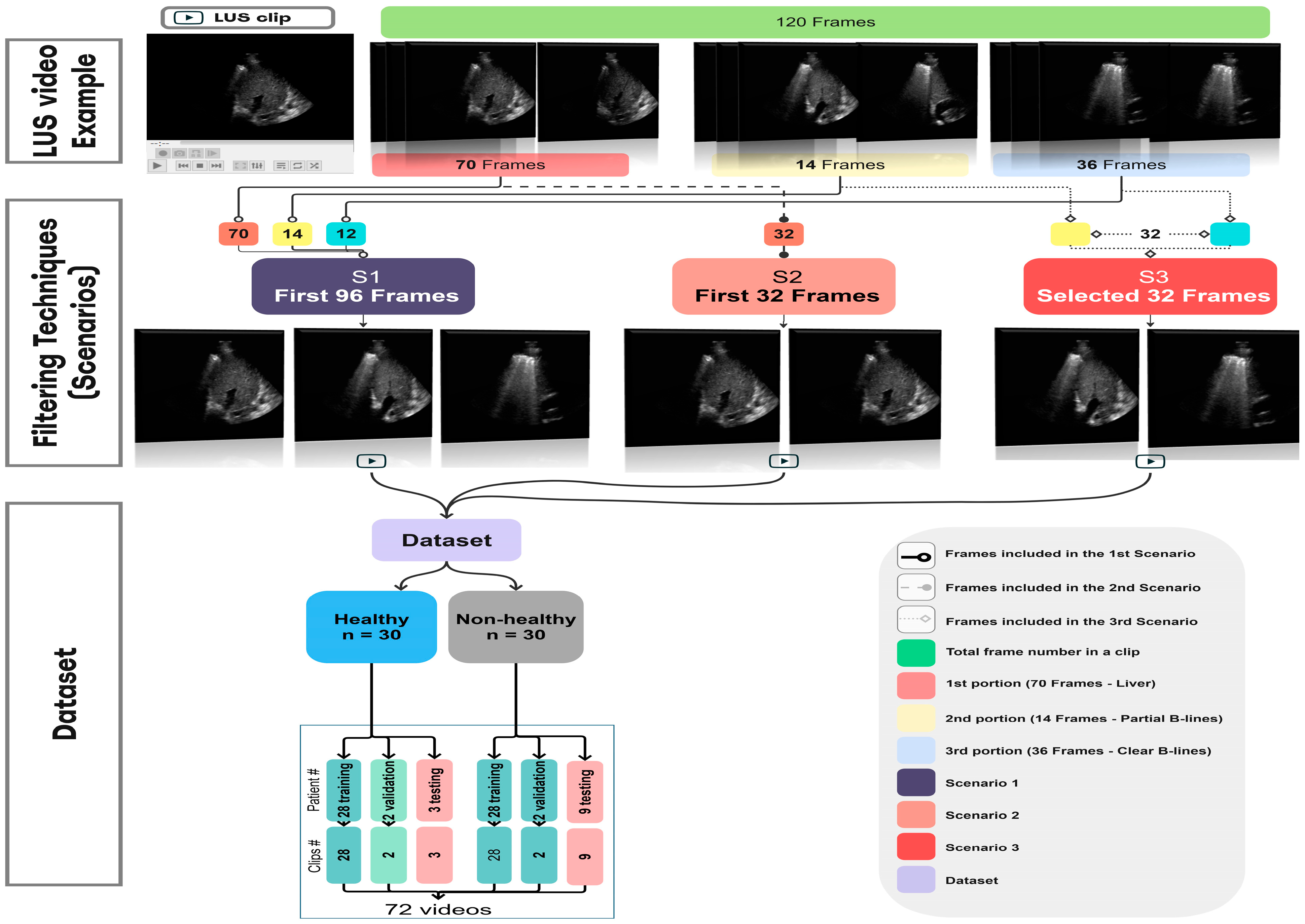

2.2. Filtering Techniques

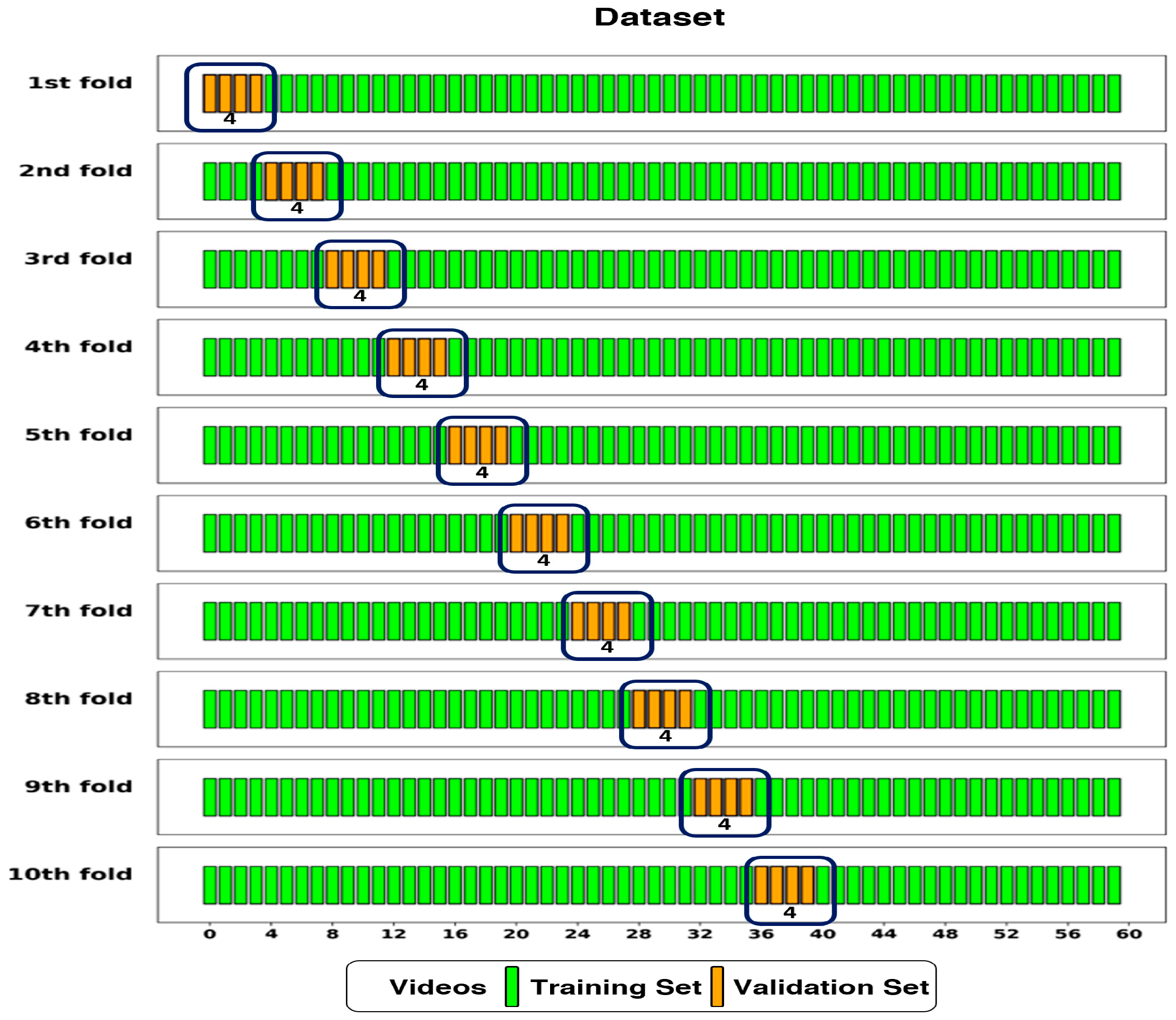

2.3. Dataset Splitting

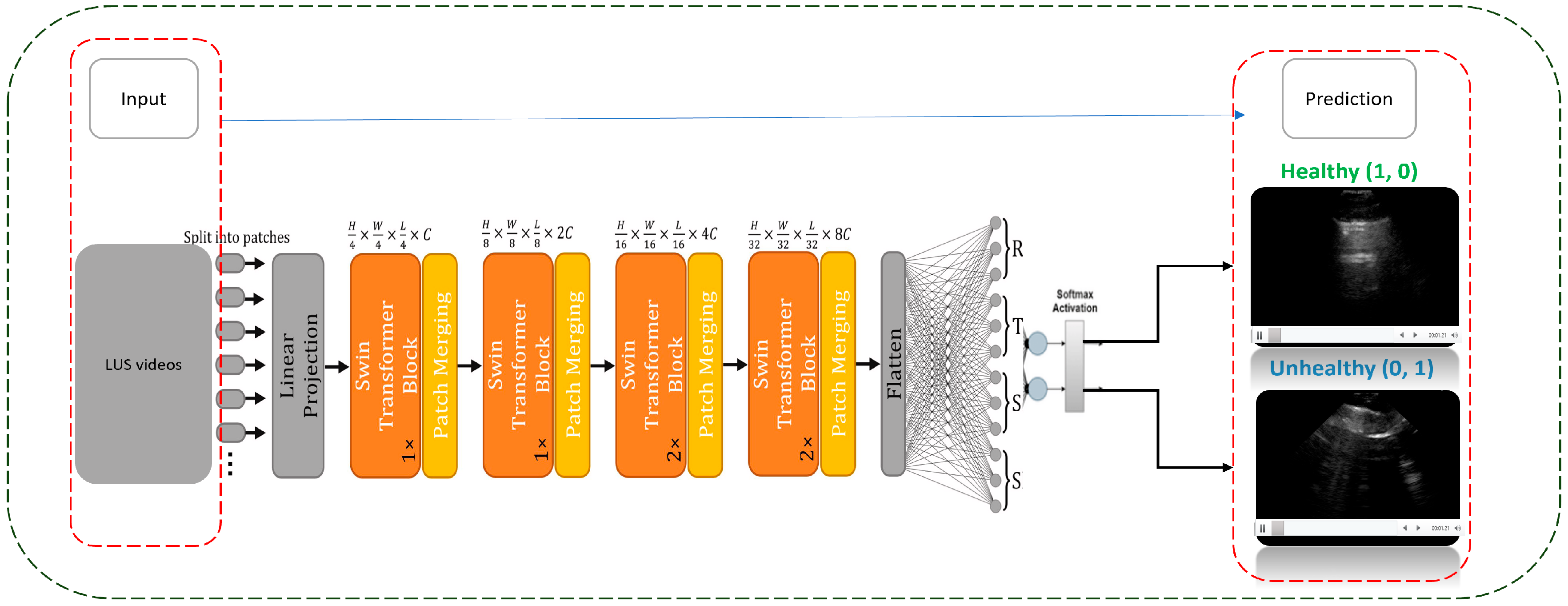

2.4. DL Implementation

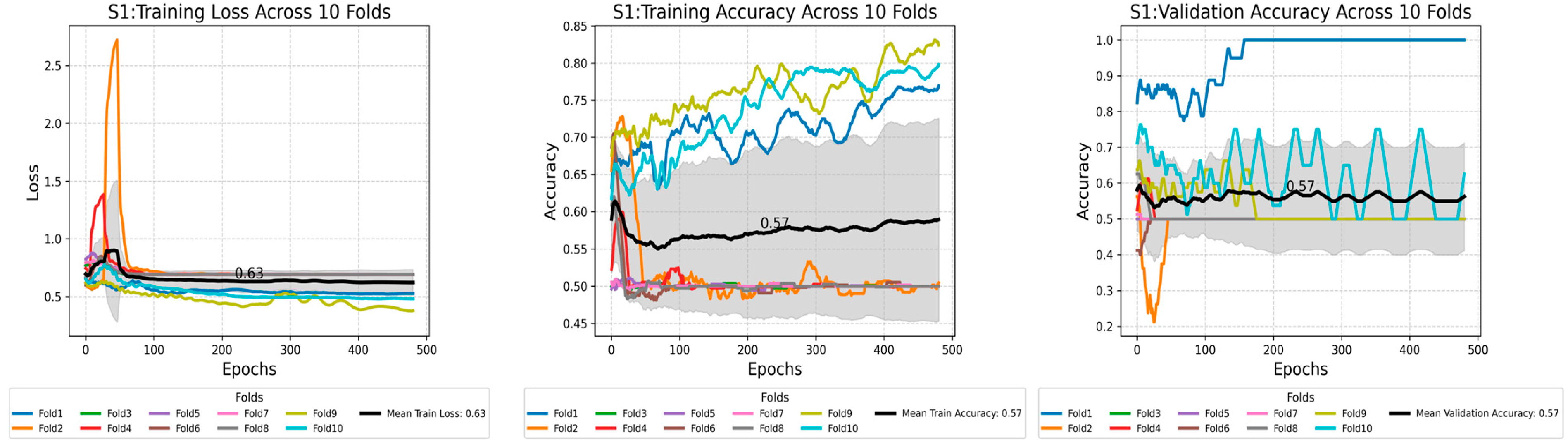

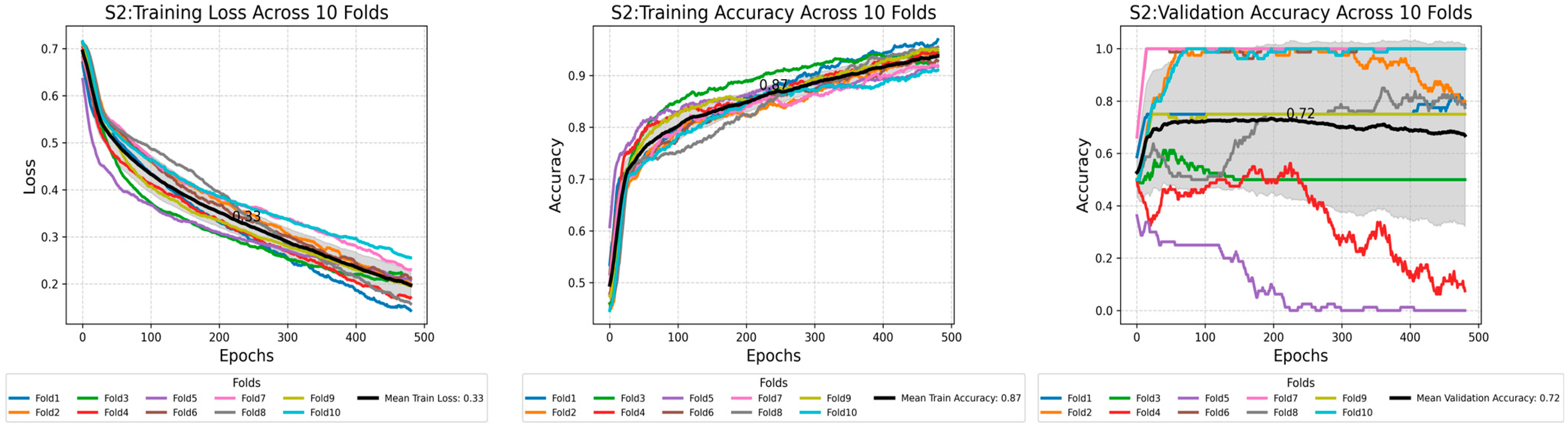

2.5. Training Loss Across Scenarios

2.6. Testing Methods

3. Results

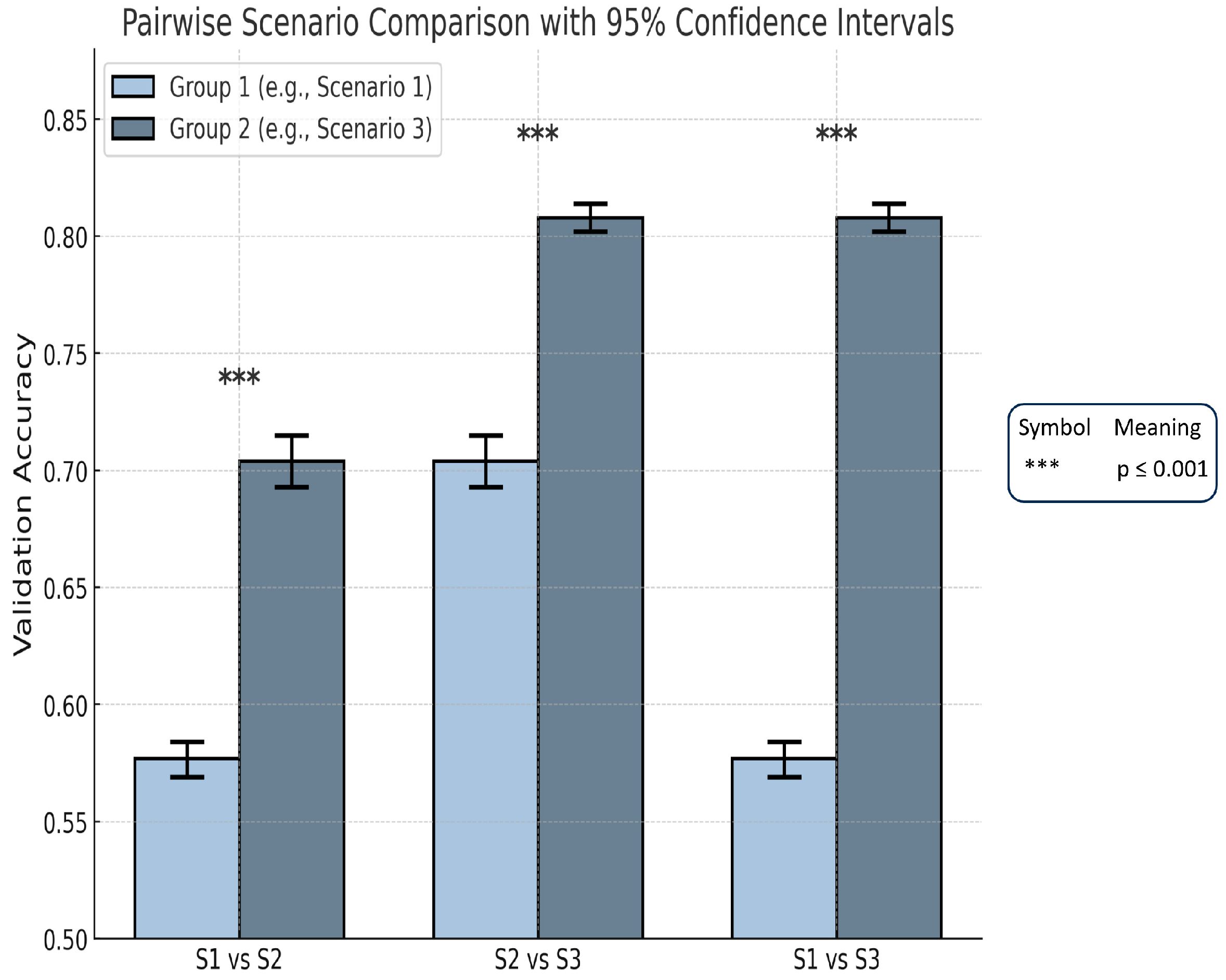

3.1. Performance Across Scenarios: Training Phase

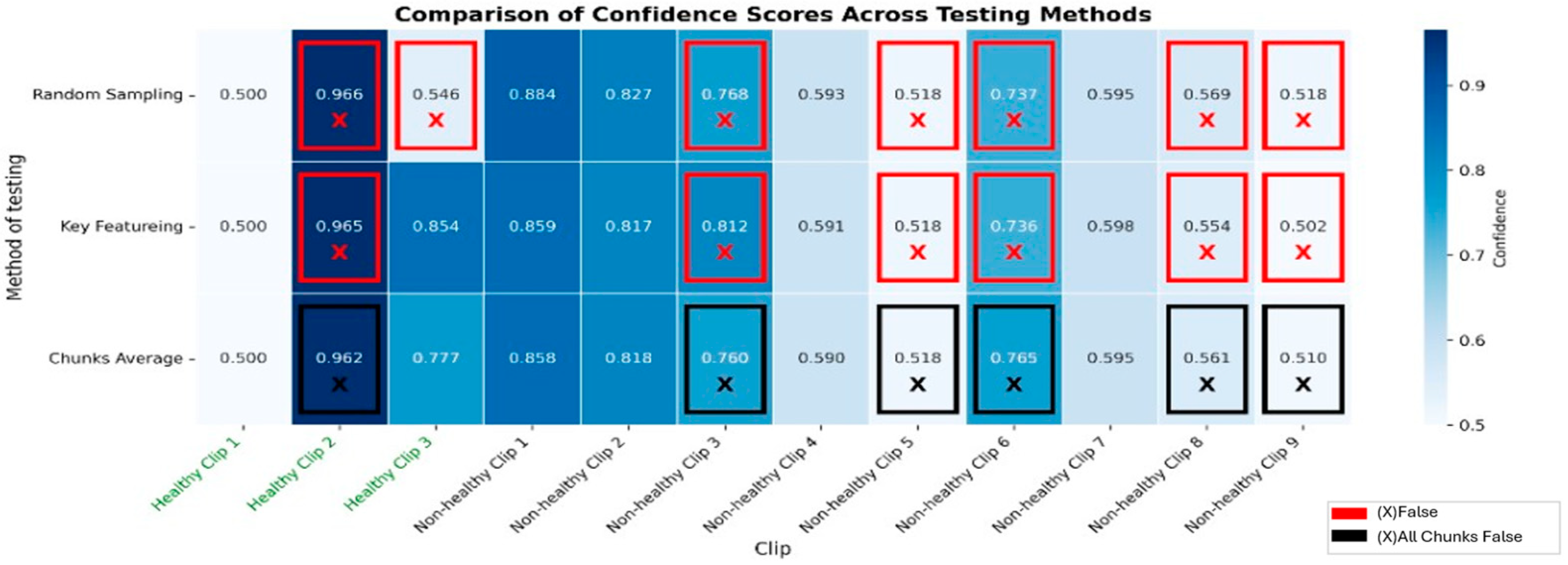

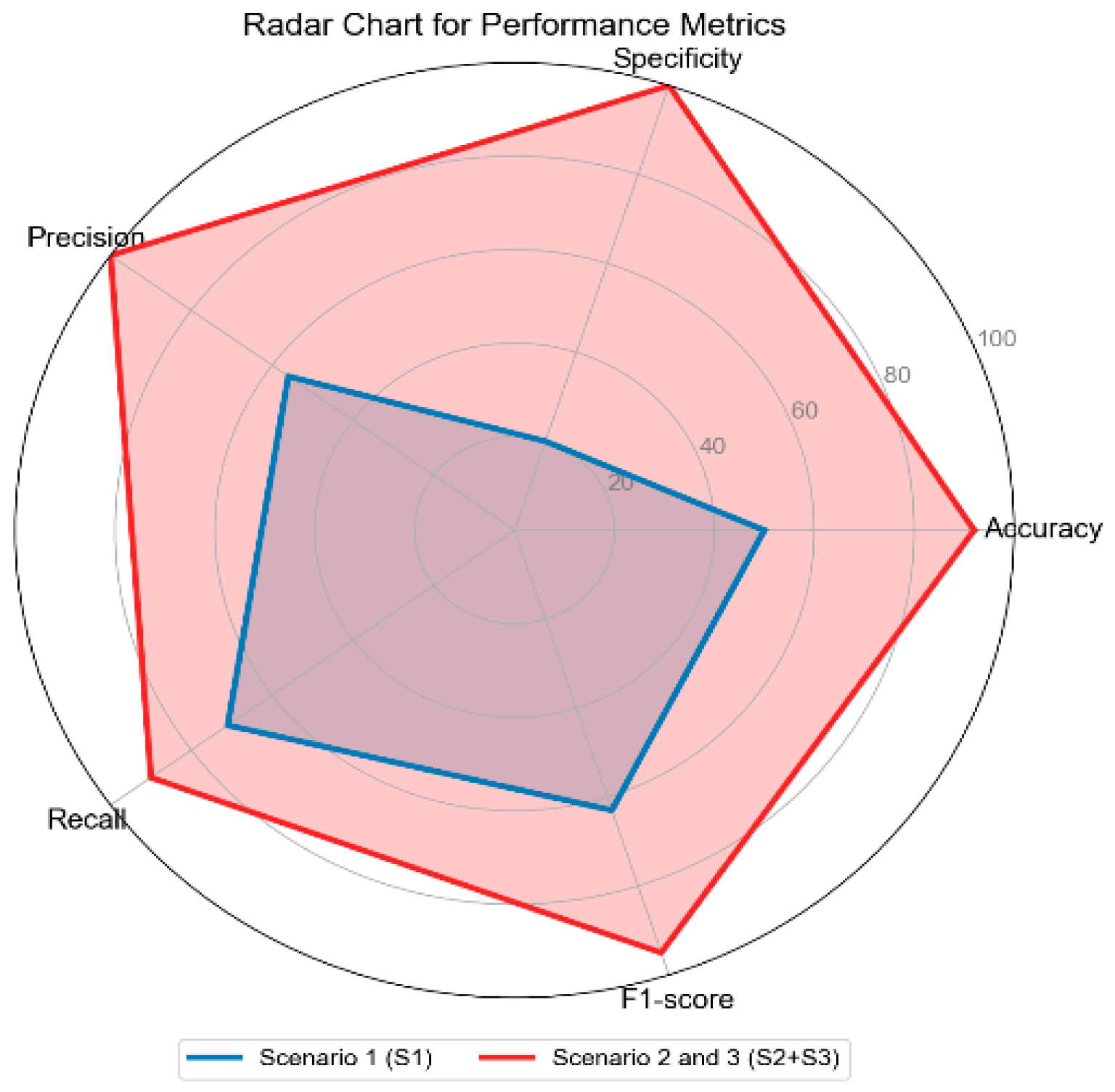

3.2. Performance Across Scenarios: Testing Phase

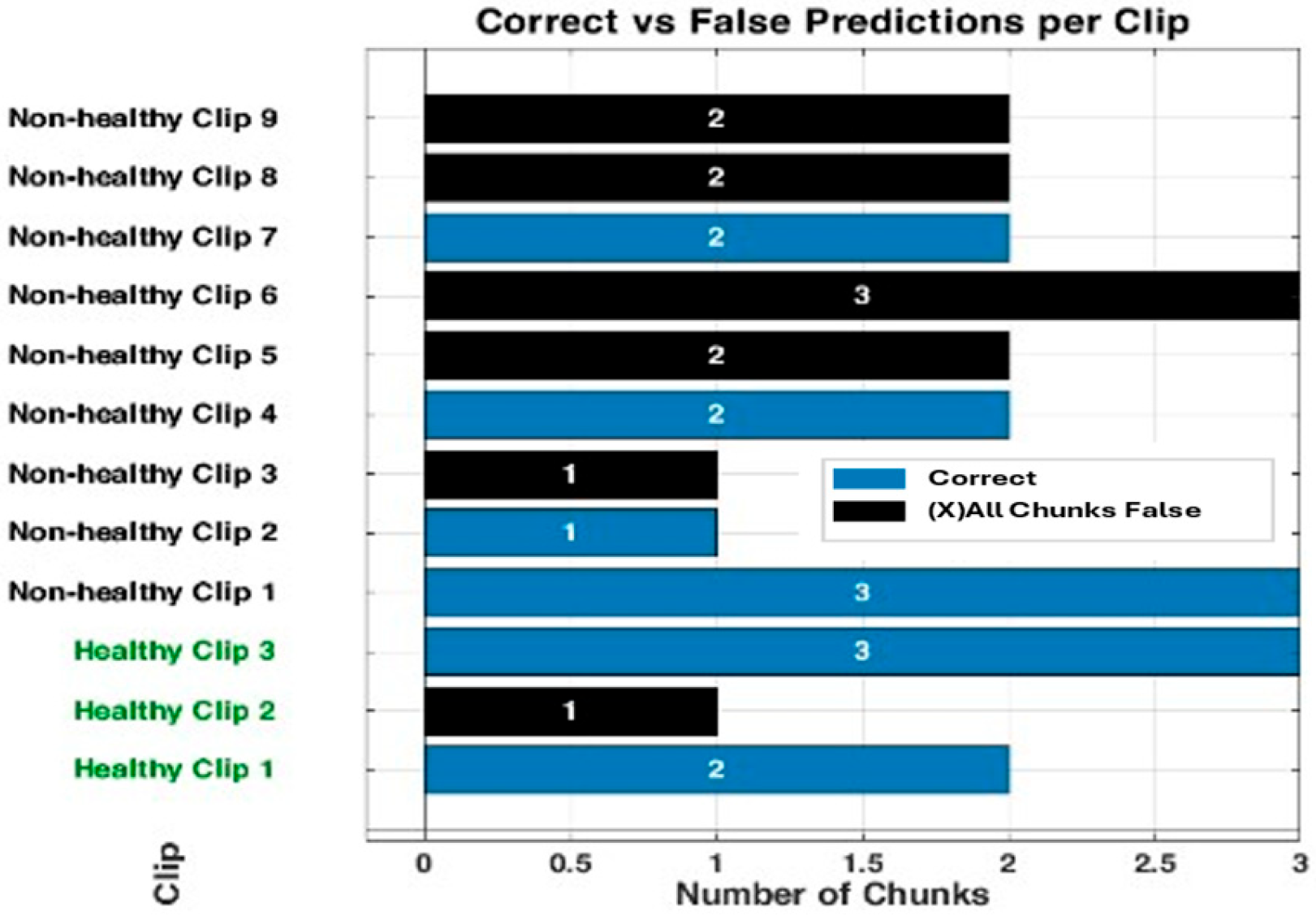

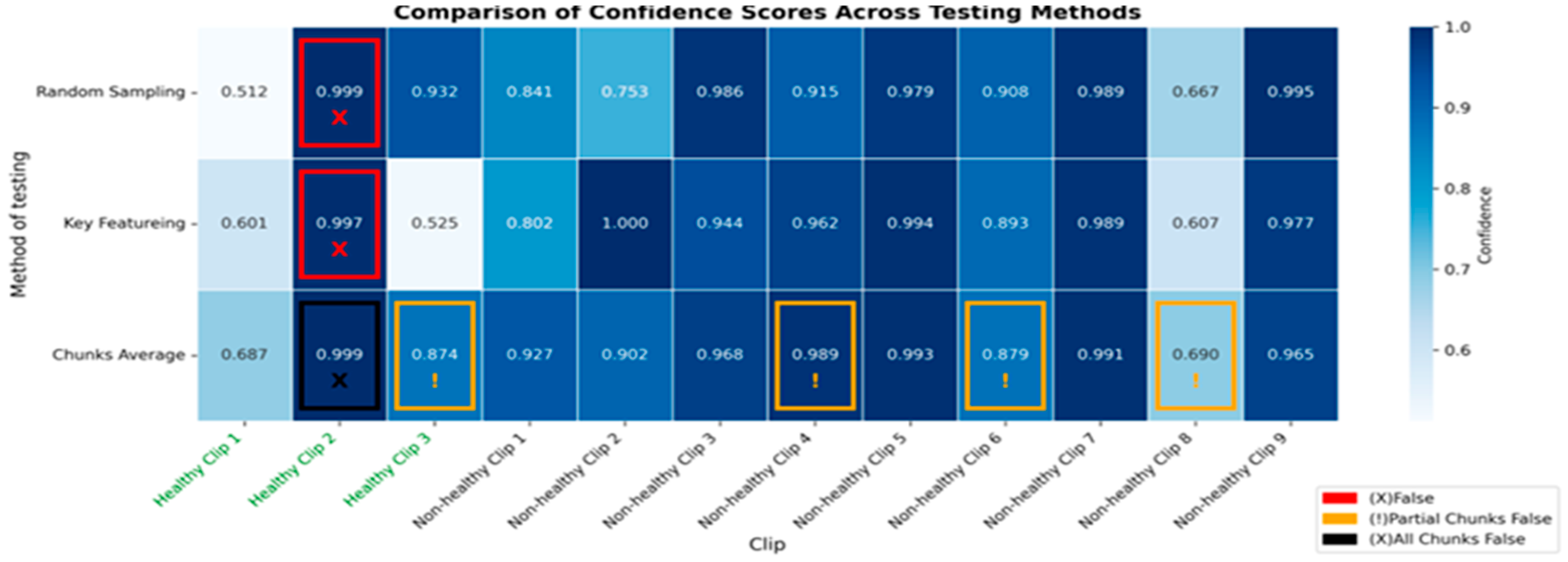

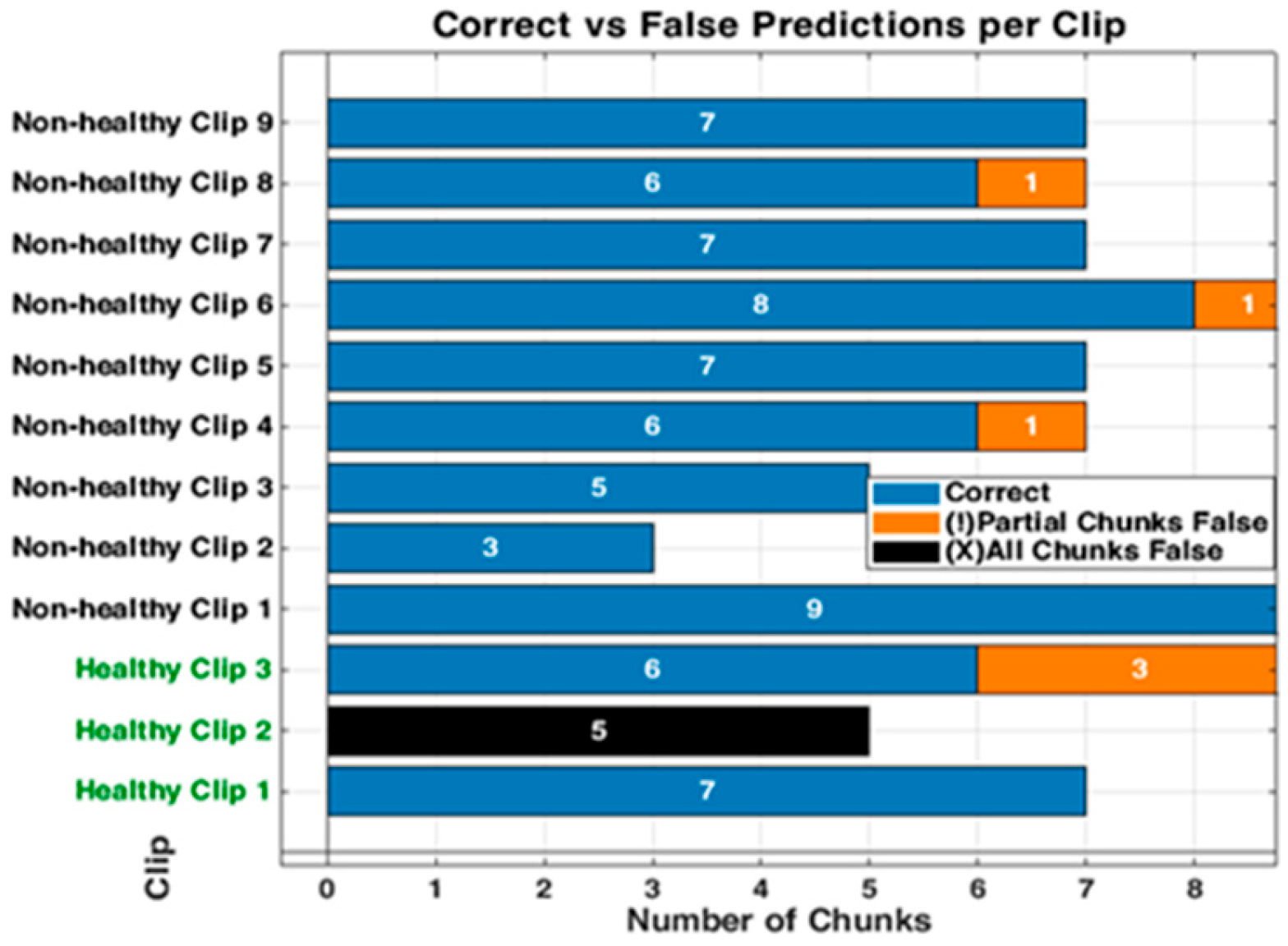

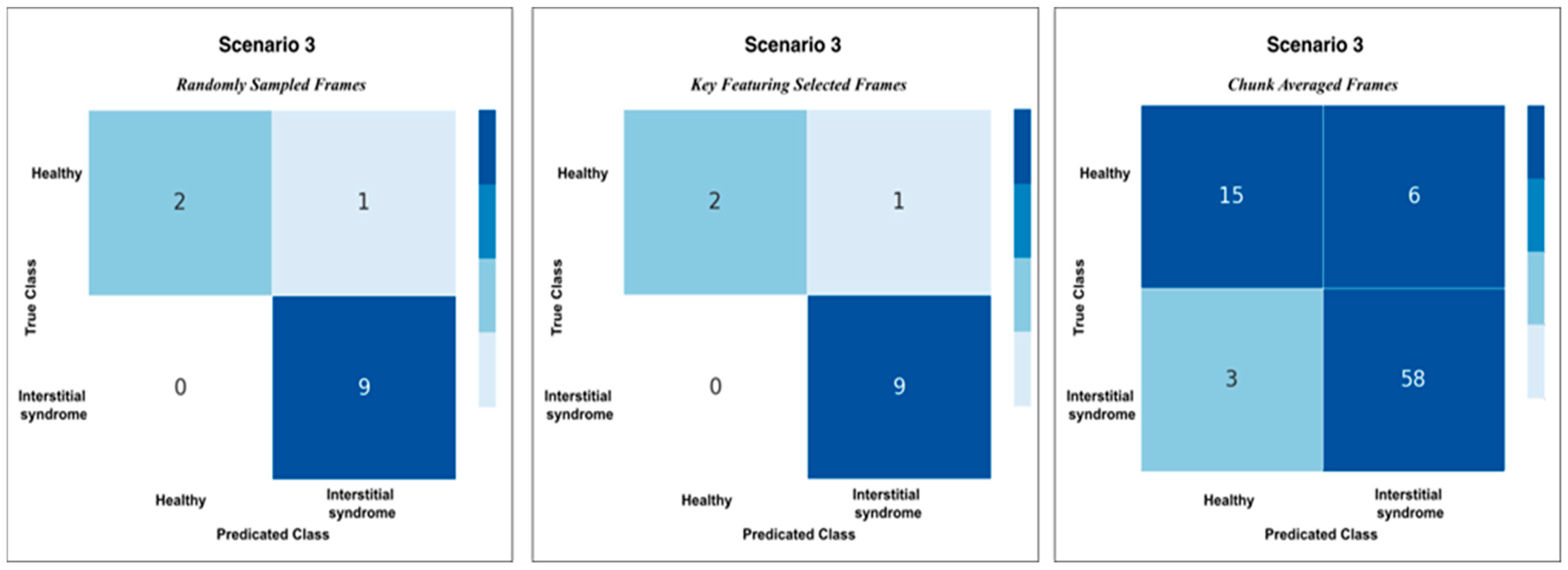

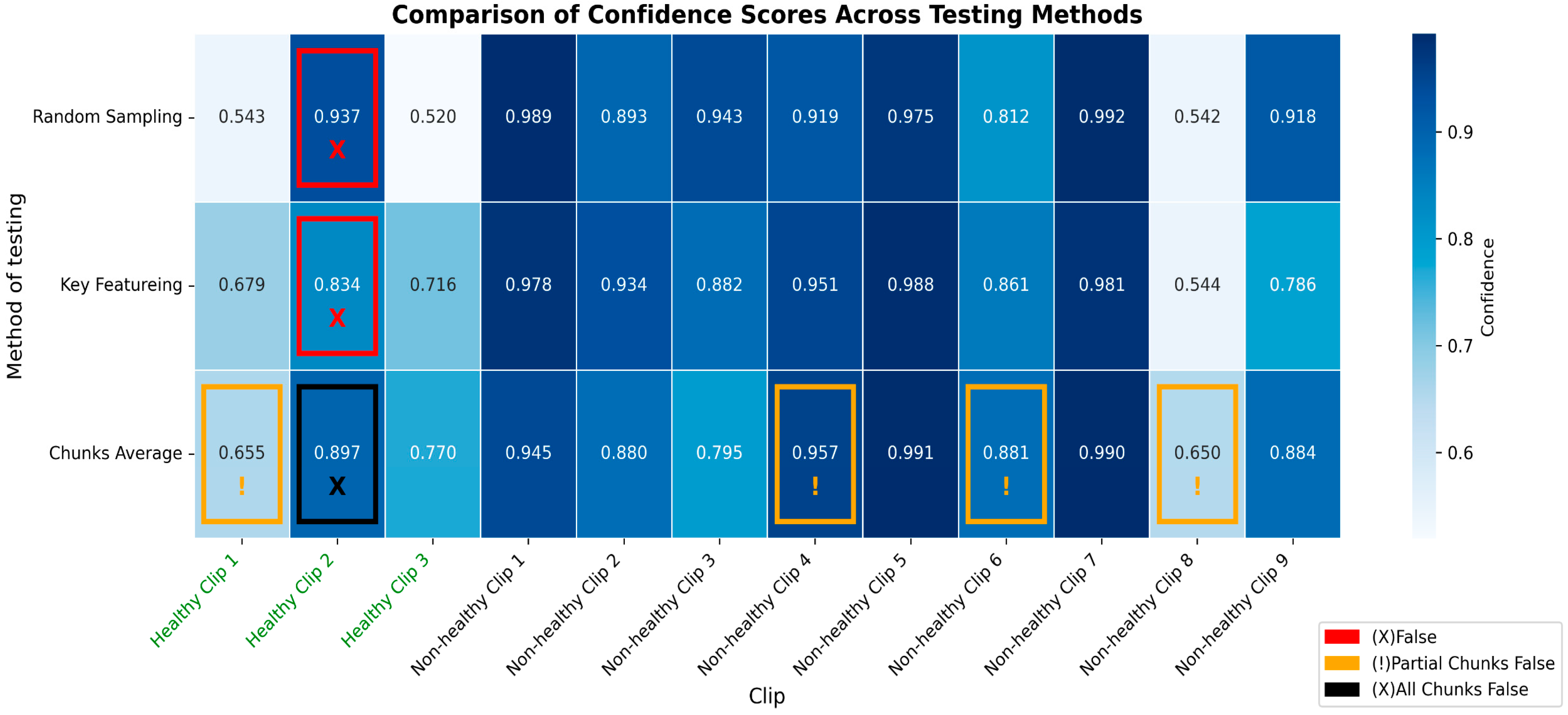

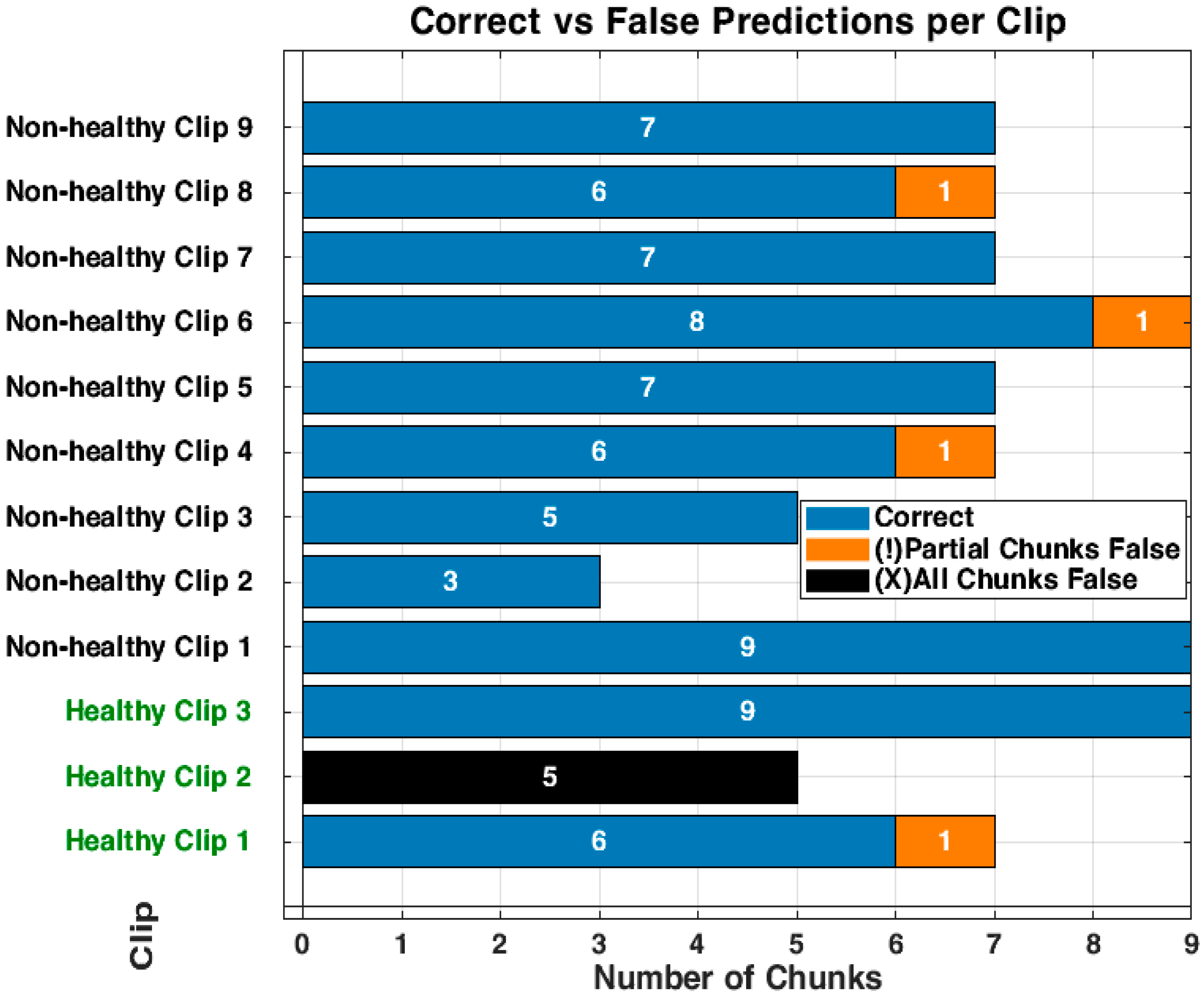

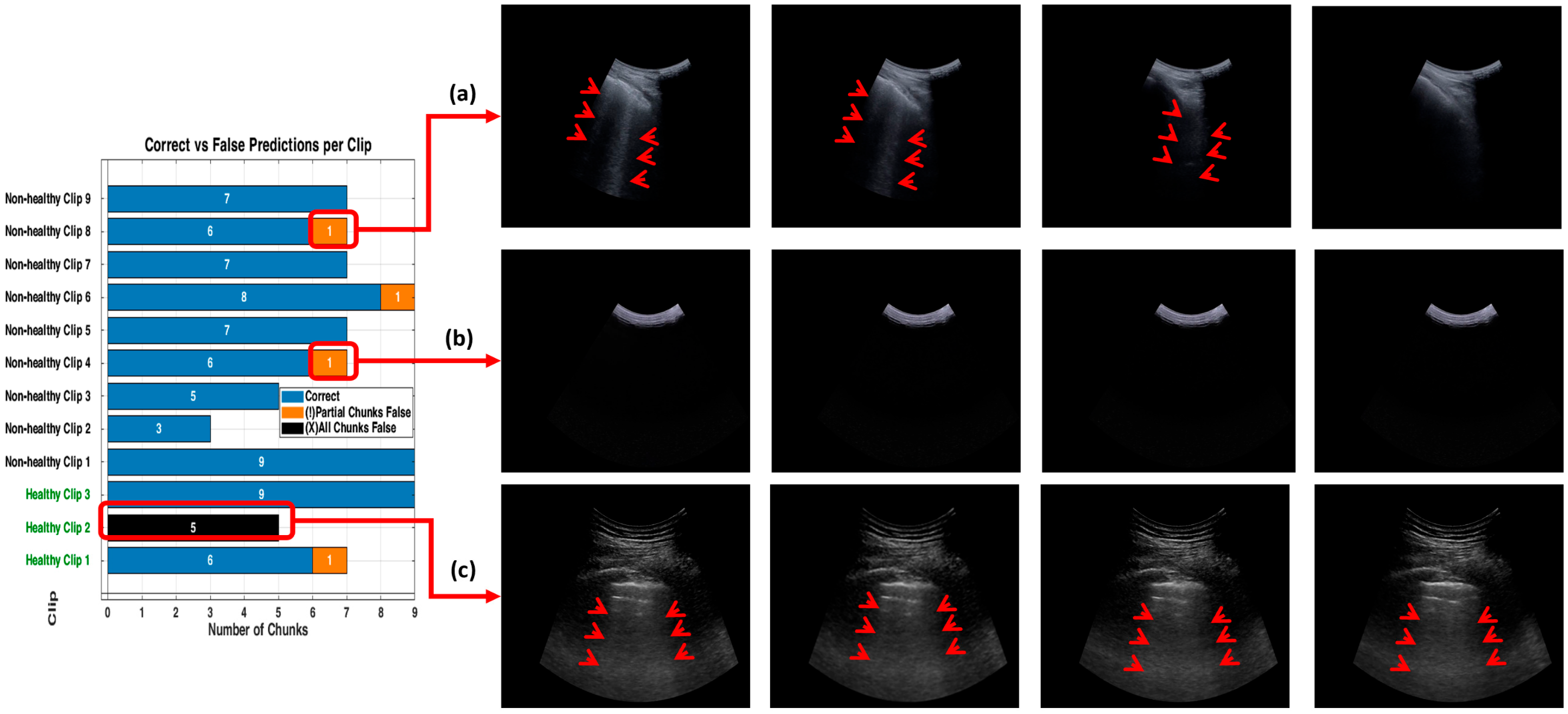

3.3. Detailed Performance of Scenario 3 (S3)

3.4. Inference Time per Video (Real-Time Detection)

4. Discussion

5. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

Appendix C


| Healthy Patients (H) | Non-Healthy Patients (NH) | Training Set (%) | Training Set (no.) | Validation Set (%) | Validation Set (no.) | Testing Set, Unseen (%) | Testing Set, Unseen (no.) | Total (Videos/Patients) |
|---|---|---|---|---|---|---|---|---|
| 33 | 39 | ≈78% | 56 (28H + 28NH) | ≈6% | 4 (2H + 2NH) | ≈17% | 12 (3H + 9NH) | 72 |
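The dataset is split at the patient level, and the table counts videos and patients together, which suggests one video per patient (72 in total). A minimal Python sketch of such a class-aware, patient-level split follows. It assumes a fixed random seed and per-class training counts of 28H + 28NH, which are inferred from the totals (33 H, 39 NH, 56 training videos) rather than taken from the study's code; the patient IDs and the function name `patient_level_split` are hypothetical.

```python
import random


def patient_level_split(healthy_ids, non_healthy_ids, counts, seed=42):
    """Assign each patient to exactly one split, class by class.

    counts maps a split name to (n_healthy, n_non_healthy). Because whole
    patients (not individual frames) are assigned, no patient can appear in
    more than one of the training, validation, and test sets.
    """
    rng = random.Random(seed)  # fixed seed for a reproducible split
    healthy = list(healthy_ids)
    non_healthy = list(non_healthy_ids)
    rng.shuffle(healthy)
    rng.shuffle(non_healthy)

    if sum(c[0] for c in counts.values()) != len(healthy):
        raise ValueError("healthy counts do not add up to the number of H patients")
    if sum(c[1] for c in counts.values()) != len(non_healthy):
        raise ValueError("non-healthy counts do not add up to the number of NH patients")

    splits, i_h, i_nh = {}, 0, 0
    for name, (n_h, n_nh) in counts.items():
        splits[name] = healthy[i_h:i_h + n_h] + non_healthy[i_nh:i_nh + n_nh]
        i_h += n_h
        i_nh += n_nh
    return splits


# Hypothetical IDs: 33 healthy (H) and 39 non-healthy (NH) patients.
healthy_ids = [f"H{i:02d}" for i in range(33)]
non_healthy_ids = [f"NH{i:02d}" for i in range(39)]
# Assumed per-class counts: 28H + 28NH / 2H + 2NH / 3H + 9NH.
COUNTS = {"train": (28, 28), "val": (2, 2), "test": (3, 9)}

splits = patient_level_split(healthy_ids, non_healthy_ids, COUNTS)
total = sum(len(ids) for ids in splits.values())
for name, ids in splits.items():
    print(f"{name}: {len(ids)} videos ({len(ids) / total:.1%})")
# train: 56 videos (77.8%)
# val: 4 videos (5.6%)
# test: 12 videos (16.7%)
```

Splitting by patient rather than by frame keeps near-identical frames from the same video out of both the training and the unseen test set, which would otherwise inflate test performance.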

| Scenario | Mean Accuracy | 95% Confidence Interval | Compared to | Mean Difference | p-Value | Cohen’s d | Effect Size Interpretation |
|---|---|---|---|---|---|---|---|
| Scenario 1 | 0.577 | [0.569, 0.584] | S2 | 0.127 | *** | 9.69 | Extremely large |
| Scenario 2 | 0.704 | [0.693, 0.715] | S3 | 0.104 | *** | 8.54 | Extremely large |
| Scenario 3 | 0.808 | [0.802, 0.814] | S1 | 0.231 | *** | 24.10 | Extremely large |
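The table reports, per scenario, a mean accuracy with a 95% confidence interval, a pairwise mean difference, a p-value, and Cohen's d. The sketch below shows one common way to compute these quantities with SciPy. It assumes accuracies from several independent runs per scenario, a t-distribution confidence interval, an independent two-sample (Student's) t-test, and a pooled-standard-deviation Cohen's d; the exact procedure used in the study may differ, and the per-run values are invented for illustration. The `***` entries are commonly used to mark p < 0.001, although the table's own legend is not shown here.

```python
import numpy as np
from scipy import stats


def mean_ci(values, confidence=0.95):
    """Mean and t-distribution confidence interval of per-run accuracies."""
    x = np.asarray(values, dtype=float)
    mean = x.mean()
    sem = stats.sem(x)  # standard error of the mean (ddof=1)
    low, high = stats.t.interval(confidence, len(x) - 1, loc=mean, scale=sem)
    return mean, (low, high)


def cohens_d(a, b):
    """Cohen's d for b versus a, using the pooled sample standard deviation."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n_a, n_b = len(a), len(b)
    pooled_var = ((n_a - 1) * a.var(ddof=1) + (n_b - 1) * b.var(ddof=1)) / (n_a + n_b - 2)
    return (b.mean() - a.mean()) / np.sqrt(pooled_var)


# Hypothetical per-run test accuracies, NOT the study's data.
s1_runs = [0.570, 0.580, 0.575, 0.585, 0.572]
s3_runs = [0.805, 0.810, 0.800, 0.815, 0.808]

m1, ci1 = mean_ci(s1_runs)
m3, ci3 = mean_ci(s3_runs)
t_stat, p_value = stats.ttest_ind(s3_runs, s1_runs)  # assumes equal variances
d = cohens_d(s1_runs, s3_runs)

print(f"S1 mean={m1:.3f}, 95% CI=[{ci1[0]:.3f}, {ci1[1]:.3f}]")
print(f"S3 mean={m3:.3f}, 95% CI=[{ci3[0]:.3f}, {ci3[1]:.3f}]")
print(f"mean difference={m3 - m1:.3f}, p={p_value:.1e}, Cohen's d={d:.2f}")
```

Because Cohen's d divides the mean difference by the pooled standard deviation, very small run-to-run variation produces very large d values even for moderate accuracy gaps.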

| Scenario | Accuracy | Specificity | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| Scenario 1 (S1) | 50% | 20% | 56% | 71% | 63% |
| Scenario 2 (S2) | 92% | 100% | 100% | 90% | 95% |
| Scenario 3 (S3) | 92% | 100% | 100% | 90% | 95% |
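All five metrics in the table derive from a binary confusion matrix. The sketch below assumes that non-healthy (NH) is the positive class, which the table does not state explicitly; the function name `binary_metrics` is hypothetical, and the example counts describe a hypothetical 12-video test set of 3H + 9NH with one missed NH video, not the study's actual predictions.

```python
def binary_metrics(tp, fp, tn, fn):
    """Confusion-matrix metrics with non-healthy (NH) as the positive class."""
    def ratio(num, den):
        return num / den if den else 0.0  # guard against empty denominators

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)  # also called sensitivity
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "specificity": ratio(tn, tn + fp),
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
    }


# Hypothetical 12-video test set (3H + 9NH) with one NH video missed.
metrics = binary_metrics(tp=8, fp=0, tn=3, fn=1)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
# accuracy: 91.7%
# specificity: 100.0%
# precision: 100.0%
# recall: 88.9%
# f1: 94.1%
```

With only 12 test videos, each misclassified video shifts accuracy by about 8.3 percentage points (1/12), so the rounded percentages should be read with that granularity in mind.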

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Moafa, K.; Antico, M.; Edwards, C.; Steffens, M.; Dowling, J.; Canty, D.; Fontanarosa, D. Video-Based CSwin Transformer Using Selective Filtering Technique for Interstitial Syndrome Detection. Appl. Sci. 2025, 15, 9126. https://doi.org/10.3390/app15169126


Chicago/Turabian Style: Moafa, Khalid, Maria Antico, Christopher Edwards, Marian Steffens, Jason Dowling, David Canty, and Davide Fontanarosa. 2025. "Video-Based CSwin Transformer Using Selective Filtering Technique for Interstitial Syndrome Detection" Applied Sciences 15, no. 16: 9126. https://doi.org/10.3390/app15169126
