Abstract

Fractures occur frequently in daily life, and a surgeon's treatment plan must be based on the radiologist's diagnosis from X-ray images. Despite progress in deep learning–based fracture detection, existing methods (e.g., two-stage detectors) still miss small targets and are sensitive to background interference in complex medical images. To address these issues, this paper proposes the ASC-YOLO model, which employs a Scale-Sensitive Feature Fusion (SSFF) module to enhance multi-scale information extraction through cross-layer feature interaction. In addition, an Adaptive Decoupled Detection Head (AsDDet) decouples the classification and regression tasks of the detection head, improving the localization accuracy of small fracture regions and suppressing background noise. Experiments on GRAZPEDWRI-DX, a large pediatric fracture radiograph dataset, show that ASC-YOLO achieves 61% mAP@50, an 8% improvement over the baseline YOLO model (53% mAP@50). It attains 95% mAP@50 for the fracture category and 97% mAP@50 for the metal category. The model was also evaluated on a tumor dataset to verify its generalization capability. The proposed framework provides reliable technical support for accurate fracture screening and is expected to help reduce missed diagnoses and optimize treatment.

1. Introduction

Fractures are common in daily life. According to the American Academy of Pediatrics, common cluster fractures account for 77.5% of fractures, the average patient age is only 7.6 years, and the initial missed-diagnosis rate is 12.2% [1]. The current approach, which relies on visual inspection by radiologists, faces two major challenges: first, radiographs have inherently low contrast and fracture lines have poor average gray levels; second, the targets are very small, with the fracture region occupying only 2.3% of the image pixels [2]. There is therefore an urgent need for automatic detection tools to assist diagnosis. A study on the GRAZPEDWRI-DX [3] dataset shows that although YOLO models such as YOLOv8 [4], YOLOv9 [5], YOLOv10 [6], and YOLOv11 have progressed rapidly and the YOLO family still provides the best results, the special characteristics of medical images expose three limitations of the original YOLO design. First, low-level features retain texture but are susceptible to noise interference, whereas high-level features are robust to interference but lose spatial details [7], which makes it difficult to handle the complex backgrounds and noise in X-ray films. Second, the original YOLO detection head adopts a coupled design: the classification and regression tasks share most of the feature extraction layers and are separated only in the last step through different convolutional branches. Because the two tasks have different feature requirements, this coupling causes mutual interference during feature extraction, which especially affects small targets.
Third, fracture targets in medical images differ significantly in scale, but the traditional CIoU (Complete Intersection over Union) loss applies the same penalty weight to large and small targets, which makes the model insensitive to small absolute size differences. As a result, misdiagnosis remains a risk in complex cases, and model performance needs to be improved further by optimizing the model structure. To overcome these challenges, we propose ASC-YOLO with three key innovations:

- Scale-Sensitive Feature Fusion (SSFF): a module that aligns multi-scale features via dual (max and average) pooling and channel attention [8], effectively suppressing noise in X-rays;

- Adaptive Decoupled Detection Head (AsDDet): decouples classification and regression tasks while introducing Distribution Focal Loss (DFL) [9] for precise small fracture localization [10];

- EfficiCIoU: a loss function incorporating absolute dimension penalties to enhance sensitivity to irregular fractures [11].

The remainder of this paper is structured as follows: Section 2 details the dataset and methodology, specifically presenting the designs of the SSFF module, AsDDet head, and EfficiCIoU loss function. Section 3 comprehensively analyzes the experimental results, including ablation studies. Section 4 provides an in-depth discussion of clinical implications, study limitations, and concluding remarks.

Related Works and Clinical Motivations

Recent studies on the GRAZPEDWRI-DX dataset have demonstrated the potential of YOLO series models in fracture detection. R. Dibo et al. [12] pioneered the integration of Transformer modules with YOLO to address the dual challenges of precise lesion localization and abnormality classification. Subsequent work by A. Ahmed et al. [13] quantitatively verified YOLOv7’s superiority over Faster R-CNN in pediatric wrist trauma diagnosis, particularly in radiographic image analysis. Further advancements were made by R.Y. Ju et al. [14] by developing a dedicated fracture detection application based on YOLOv8, where an attention-enhanced detection head and specialized data augmentation significantly improved small fracture detection. Most recently, Chien’s team [15] achieved state-of-the-art performance using YOLOv9 on this dataset.

However, these approaches primarily focused on direct model application or minor architectural adjustments, while fundamental challenges in medical image analysis remain unresolved. Our systematic evaluation reveals three critical limitations of existing YOLO frameworks: (1) The inherent conflict between noise-sensitive low-level features and spatially impoverished high-level features compromises performance in noisy X-ray environments; (2) The coupled design of classification and regression heads induces task interference, particularly detrimental for small fracture detection; (3) Conventional CIoU loss fails to accommodate the substantial scale variations of fracture patterns. These observations motivated our development of ASC-YOLO with its SSFF module for noise-robust feature fusion, fully decoupled AsDDet head, and anatomy-aware EfficiCIoU loss, collectively addressing the unique demands of medical imaging.

2. Materials and Methods

2.1. Datasets and Preprocessing

The GRAZPEDWRI-DX dataset, which is used for all the model training in this paper, is a pediatric fracture X-ray image dataset released by the Medical University of Graz (Austria) in collaboration with multiple healthcare organizations. It is specifically designed for fracture detection and classification research.

The dataset consists of 9 annotated categories, including bone anomaly, bone lesion, foreign body, fracture, metal, periosteal reaction, pronator sign, soft tissue, and text. Among them, the fracture class accounts for the majority of instances and is the main detection target in this study.

The dataset was randomly divided into training, validation, and test sets in a 75%, 20%, and 5% split, respectively. In total, the dataset contains 20,327 images, with 15,245 in the training set, 4065 in the validation set, and 1017 in the test set. To improve model generalization, we also applied data augmentation techniques, including random rotation and contrast adjustment, to the training set before training all models [16].
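The split sizes follow directly from the 75/20/5 fractions. The Python sketch below reproduces them by shuffling image indices with a fixed seed and slicing; the helper name `split_indices`, the seed value, and the image-level (rather than patient-level) split are illustrative assumptions, not the authors' code.

```python
import random

def split_indices(n_images, fractions=(0.75, 0.20, 0.05), seed=0):
    """Randomly split image indices into train/val/test (hypothetical helper)."""
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)  # fixed seed for reproducibility
    n_train = round(n_images * fractions[0])
    n_val = round(n_images * fractions[1])
    # fractions[2] is implied: the test set takes the remainder,
    # so every image is assigned to exactly one subset.
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test

train, val, test = split_indices(20327)
print(len(train), len(val), len(test))  # 15245 4065 1017
```

Letting the test set absorb the rounding remainder guarantees that the three subsets sum to the full 20,327 images.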

2.2. Implementation Details

The models were trained on a machine with an NVIDIA GeForce RTX 3090 GPU and 32 GB of RAM. The batch size was set to 16 and the number of training epochs to 100. All models were trained with the SGD optimizer using a weight decay of 0.0005, a momentum of 0.937, and an initial learning rate of 0.01.

All experiments were conducted using the GRAZPEDWRI-DX dataset under consistent settings. The main hyperparameters—such as learning rate (0.001), batch size (16), confidence threshold (0.25), and IoU threshold for NMS (0.5)—were selected through preliminary cross-validation on the training set. These values balance training stability and detection accuracy, and they are aligned with configurations in the YOLOv8 baseline.

2.3. Model Architecture

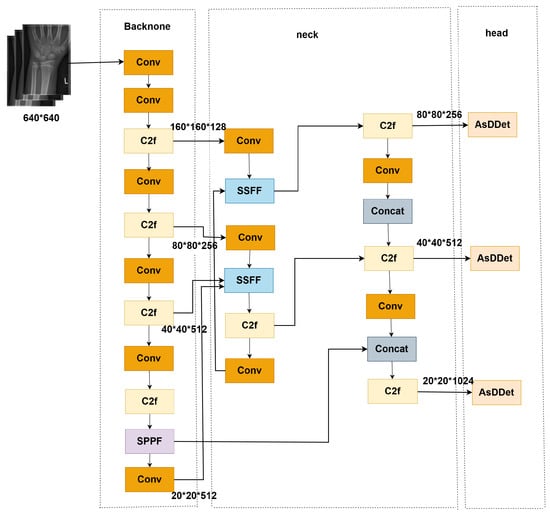

The medical image target detection framework proposed in this paper is shown in Figure 1. It is based on YOLOv8 and contains three parts: backbone, neck, and head. In the neck, features are fused across scales by the SSFF module and then passed to the AsDDet detection head for classification and localization prediction. Finally, the EfficiCIoU loss function is used for end-to-end optimization, forming a complete “feature extraction–feature enhancement–target detection” processing flow.

Figure 1.

Overall architecture of ASC-YOLO (Backbone: CSPDarknet; Neck: SSFF module; Head: AsDDet with decoupled branches). Blue block: SSFF (Scale-Sensitive Feature Fusion) module; pink block: AsDDet (Adaptive Decoupled Detection Head); yellow block: C2F (Cross-stage Partial Fusion) block; purple block: SPPF (Spatial Pyramid Pooling Fast) layer.

To address the low contrast, small targets, and noise interference characteristic of medical images [17], this study makes targeted improvements in three key aspects. First, small convolutional kernels (3 × 3) and a channel retention strategy are adopted in the shallow layers of the backbone to enhance the extraction of fine lesion features. Second, the SSFF module performs cross-scale feature fusion: it aligns multi-level features with adaptive pooling and interpolation and integrates a channel attention mechanism that suppresses background noise, improving feature extraction and localization prediction. Finally, the AsDDet detection head decouples classification and regression to improve the localization accuracy of small targets, while the EfficiCIoU loss function exploits geometric prior knowledge of medical targets to further improve the matching quality of predicted boxes. Together, these improvements adapt the model to the specific needs of medical image detection while maintaining real-time performance.

2.4. SSFF Module Design

Traditional feature pyramid networks have several shortcomings for multi-scale target detection in medical images, including severe interference in shallow features, rigid cross-scale feature fusion, and wasted computational resources [18,19,20]. This paper therefore proposes the SSFF module, which achieves efficient and accurate medical image feature fusion through cross-scale alignment enhanced by dual pooling and an adaptive channel attention mechanism [21].

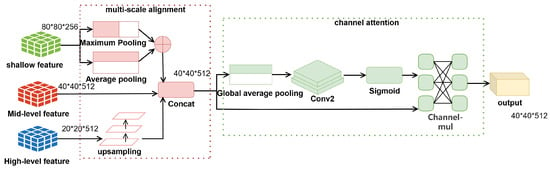

Multi-scale detection in medical imaging faces two specific challenges: (1) low-level features (such as fracture textures) are susceptible to interference from X-ray noise; (2) high-level features (such as bone contours) may lose the spatial details of fine fractures. The SSFF module therefore uses a two-stage processing flow (shown in Figure 2), in which the backbone extracts shallow, middle, and high-level features from the input image. The shallow features are downsampled to the size of the middle-level features using adaptive max pooling and adaptive average pooling, and the two results are summed. The high-level features are upsampled to the size of the middle-level features by nearest-neighbor interpolation, and the aligned multi-scale features are then concatenated along the channel dimension to produce the fused features. Global average pooling next compresses the spatial information into channel-wise descriptive statistics, two 1 × 1 convolutions learn the inter-channel relationships, and a Sigmoid activation produces the channel weights. Finally, the weights are multiplied with the original features channel by channel to complete feature screening.

Figure 2.

Scale-Sensitive Feature Fusion (SSFF) module workflow (Left: dual-pooling alignment; Right: channel attention with bottleneck structure).

The two-stage processing of the SSFF module, multi-scale alignment followed by channel attention screening, effectively alleviates shallow feature interference, rigid cross-scale fusion, and wasted computation, and it is particularly targeted at mitigating feature interference. The SSFF module first applies dual-pooling fusion downsampling to the shallow features $F_s$:

$$\tilde{F}_s = \mathrm{AdaptiveMaxPool}(F_s) + \mathrm{AdaptiveAvgPool}(F_s) \tag{1}$$

The max pooling operation preserves salient features while suppressing random noise, whereas the average pooling smooths local details to prevent excessive focus on individual pixels. This approach effectively retains useful details while reducing interference, and it additionally alleviates the rigidity problem in cross-scale fusion.

The channel attention module adopts a bottleneck structure to reduce computational overhead. First, global average pooling (GAP) compresses the spatial information of the fused feature map $F$ into a channel-wise global descriptor vector $\mathbf{z}$. Two convolutional layers then model the inter-channel dependencies, and the resulting weights re-scale $F$:

$$\mathbf{z} = \mathrm{GAP}(F), \qquad \mathbf{w} = \sigma\big(W_2\,\delta(W_1\mathbf{z})\big), \qquad F' = \mathbf{w} \odot F \tag{2}$$

where $W_1$ and $W_2$ are the learnable parameters of the two 1 × 1 convolutions, $\delta$ denotes the ReLU activation function, $\sigma$ denotes the Sigmoid function, and $\odot$ denotes channel-wise multiplication. This design reduces the number of parameters while maintaining focus on key channels. Moreover, replacing the group convolution and spatial mask of the original version with standard convolution reduces implementation complexity while preserving performance.
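The alignment and attention steps described above can be sketched in NumPy for a single image. This is a minimal sketch under stated assumptions, not the authors' PyTorch implementation: the function names, the (C, H, W) layout, the omission of convolution biases, the bottleneck reduction ratio of 4, the concatenation order, and the 80/40/20 feature-map sizes are all illustrative choices.

```python
import numpy as np

def adaptive_pool(x, out_h, out_w, reduce_fn):
    """Adaptive pooling of a (C, H, W) array to (C, out_h, out_w).

    Bin edges use the floor/ceil rule of PyTorch's adaptive pooling layers.
    """
    c, h, w = x.shape
    out = np.empty((c, out_h, out_w))
    for i in range(out_h):
        h0, h1 = (i * h) // out_h, ((i + 1) * h + out_h - 1) // out_h
        for j in range(out_w):
            w0, w1 = (j * w) // out_w, ((j + 1) * w + out_w - 1) // out_w
            out[:, i, j] = reduce_fn(x[:, h0:h1, w0:w1], axis=(1, 2))
    return out

def nearest_resize(x, out_h, out_w):
    """Nearest-neighbor resize of a (C, H, W) array (source index = floor(dst * in / out))."""
    _, h, w = x.shape
    rows = (np.arange(out_h) * h) // out_h
    cols = (np.arange(out_w) * w) // out_w
    return x[:, rows][:, :, cols]

def channel_attention(f, w1, w2):
    """Squeeze-and-excitation style weighting: GAP -> 1x1 conv -> ReLU -> 1x1 conv -> Sigmoid."""
    z = f.mean(axis=(1, 2))                          # global average pooling, shape (C,)
    hidden = np.maximum(w1 @ z, 0.0)                 # a 1x1 conv on a 1x1 map is a matrix product
    weights = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # Sigmoid channel weights in (0, 1)
    return f * weights[:, None, None]                # channel-wise re-weighting

def ssff(shallow, middle, high, w1, w2):
    """Hypothetical single-image SSFF pass aligned to the middle-level resolution."""
    _, mh, mw = middle.shape
    # Dual pooling: sum of adaptive max and adaptive average pooling.
    s = adaptive_pool(shallow, mh, mw, np.max) + adaptive_pool(shallow, mh, mw, np.mean)
    h = nearest_resize(high, mh, mw)
    fused = np.concatenate([s, middle, h], axis=0)   # concatenate along channels
    return channel_attention(fused, w1, w2)

rng = np.random.default_rng(0)
shallow = rng.standard_normal((8, 80, 80))
middle = rng.standard_normal((16, 40, 40))
high = rng.standard_normal((32, 20, 20))
channels = 8 + 16 + 32
w1 = 0.1 * rng.standard_normal((channels // 4, channels))  # bottleneck, reduction ratio 4 (assumed)
w2 = 0.1 * rng.standard_normal((channels, channels // 4))
out = ssff(shallow, middle, high, w1, w2)
print(out.shape)  # (56, 40, 40)
```

Because the Sigmoid keeps every channel weight in (0, 1), the attention step can only attenuate channels, which is how noisy channels are suppressed rather than amplified.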

SSFF Module: Feature Alignment and Noise Suppression

As described above, the SSFF module performs spatial alignment and multi-scale fusion to improve small target detection. This subsection highlights its key innovations and contributions:

- Cross-Scale Feature Fusion: The SSFF module achieves precise alignment of low-level and high-level features through adaptive pooling and nearest-neighbor interpolation. Low-level features retain fine details and textures, while high-level features preserve semantic information. This alignment ensures that important details are maintained while avoiding the loss of crucial information due to resolution discrepancies.

- Channel Attention Mechanism: After feature fusion, a channel attention mechanism is applied to suppress redundant or noisy information. This mechanism learns inter-channel relationships and generates importance weights, which are applied to the fused features, emphasizing critical features and effectively filtering out noise and irrelevant information.

Through these optimizations, the SSFF module not only achieves enhanced feature complementarity across multiple scales but also provides effective suppression of background noise, making it particularly well-suited for noisy medical image environments such as X-rays, where background interference can hinder small target localization.

2.5. AsDDet Head

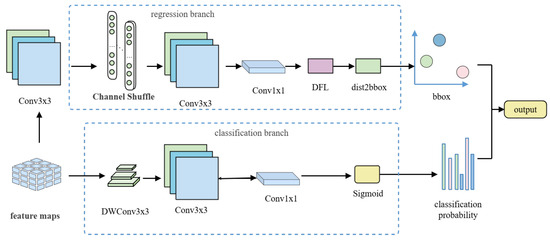

Because medical images are complex and their targets are small, they place high demands on the design of the detection head. The traditional YOLOv8 detection head shares the feature extraction layers between classification and regression, which causes mutual interference between the two tasks [22] and particularly degrades the localization accuracy of small fracture regions. In addition, direct coordinate regression is sensitive to small offsets and adapts poorly to the geometric properties of irregular fracture lines. The AsDDet detection head is therefore proposed to achieve accurate and efficient fracture detection through task decoupling and DFL.

The AsDDet detection head (shown in Figure 3) adopts a task-decoupled design that completely separates the classification and regression branches. The classification branch extracts local detail features with a Depthwise Separable Convolution (DWConv), followed by a 1 × 1 pointwise convolution that fuses cross-channel information. The regression branch first extracts spatial context with a 3 × 3 standard convolution to generate high-dimensional features, then applies channel shuffling to enhance information interaction [23] and improve the modeling of irregular fracture edges. A second 3 × 3 standard convolution further fuses local and global features, and a 1 × 1 convolution outputs the parameters of the discrete probability distributions. In the decoding stage, DFL replaces traditional coordinate regression by converting the probability distribution into continuous coordinates, and the dist2bbox function [24] finally combines the expected value of the distribution with the preset anchor points to produce the decoded bounding box coordinates.

Figure 3.

Architecture of the AsDDet (Adaptive Decoupled Detection Head) head. (Left): Task-decoupled design with separate classification (DWConv branch) and regression (shuffle-enhanced branch) paths; (Right): Distribution Focal Loss (DFL) decoding process from predicted distribution to bounding box coordinates.
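The text does not specify how the channel shuffle in the regression branch is parameterized. As an assumption, the sketch below uses the ShuffleNet-style operation (reshape C channels into groups, transpose, flatten) on a list of per-channel items; `channel_shuffle` and the group count are hypothetical. Applied to a real tensor, the same index permutation would be used along the channel axis.

```python
def channel_shuffle(channels, groups):
    """ShuffleNet-style channel shuffle on a list of per-channel items.

    Equivalent to reshaping C channels to (groups, C // groups), transposing,
    and flattening, so that consecutive outputs come from different groups.
    """
    n = len(channels)
    if n % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = n // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]

print(channel_shuffle(list(range(6)), groups=2))  # [0, 3, 1, 4, 2, 5]
```

After the shuffle, each neighboring pair of channels mixes information from both groups, which is what lets the following convolution combine features that were previously computed separately.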

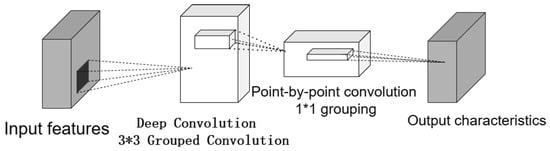

2.5.1. Depthwise Separable Convolution (DWConv)

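The decoupled design enables targeted feature extraction: the classification branch uses DWConv, which consists of a depthwise convolution followed by a pointwise convolution (Figure 4), to extract local semantic features. For comparison, a standard convolution that applies $K \times K$ kernels to $C_{in}$ input channels to produce $C_{out}$ output channels requires:

$$\mathrm{Cost}_{\mathrm{std}} = H \cdot W \cdot K^2 \cdot C_{in} \cdot C_{out} \tag{3}$$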

Figure 4.

Depthwise separable convolution structure. Depthwise convolution: Performs spatial convolution independently on each channel of the input features to extract local details while significantly reducing computational overhead. Pointwise convolution: Uses 1 × 1 convolutions to fuse cross-channel information and adjust the output channel dimensions.

Here, $H$ and $W$ denote the height and width of the feature map. When $C_{in}$ and $C_{out}$ are large, the computational cost becomes extremely high. In contrast, depthwise convolution processes each input channel separately with a single $K \times K$ kernel and no cross-channel mixing, producing $C_{in}$ feature maps with the following computation:

$$\mathrm{Cost}_{\mathrm{dw}} = H \cdot W \cdot K^2 \cdot C_{in} \tag{4}$$

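The pointwise convolution then uses $C_{out}$ kernels of size $1 \times 1 \times C_{in}$ to fuse information across all channels, adjusting the number of output channels to $C_{out}$. It produces $C_{out}$ output feature maps with computational complexity:

$$\mathrm{Cost}_{\mathrm{pw}} = H \cdot W \cdot C_{in} \cdot C_{out} \tag{5}$$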

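The total computation ratio is:

$$\frac{\mathrm{Cost}_{\mathrm{dw}} + \mathrm{Cost}_{\mathrm{pw}}}{\mathrm{Cost}_{\mathrm{std}}} = \frac{H W K^2 C_{in} + H W C_{in} C_{out}}{H W K^2 C_{in} C_{out}} = \frac{1}{C_{out}} + \frac{1}{K^2} \tag{6}$$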

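Thus, the depthwise separable convolution achieves significant reductions in both:

- Computational complexity ($O(H W C_{in}(K^2 + C_{out}))$ vs. $O(H W K^2 C_{in} C_{out})$)

- Number of learnable parameters ($K^2 C_{in} + C_{in} C_{out}$ vs. $K^2 C_{in} C_{out}$)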
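The cost ratio of depthwise separable to standard convolution, $1/C_{out} + 1/K^2$, can be checked numerically. In the sketch below the feature-map size (40 × 40), kernel size (3 × 3), and channel counts (256 to 256) are illustrative assumptions; costs are counted as multiply-accumulates and biases are ignored.

```python
def standard_conv_cost(h, w, k, c_in, c_out):
    """Multiply-accumulate count and weight count of a K x K standard convolution (no bias)."""
    return h * w * k * k * c_in * c_out, k * k * c_in * c_out

def depthwise_separable_cost(h, w, k, c_in, c_out):
    """Depthwise K x K conv (one filter per input channel) followed by a 1 x 1 pointwise conv."""
    macs = h * w * k * k * c_in + h * w * c_in * c_out
    params = k * k * c_in + c_in * c_out
    return macs, params

# Example: a 40 x 40 feature map, 3 x 3 kernels, 256 -> 256 channels.
std_macs, std_params = standard_conv_cost(40, 40, 3, 256, 256)
dws_macs, dws_params = depthwise_separable_cost(40, 40, 3, 256, 256)
ratio = dws_macs / std_macs
print(f"{ratio:.3f}")  # 0.115, i.e. 1/256 + 1/9
```

With 3 × 3 kernels the $1/K^2$ term dominates, so the saving is close to ninefold once $C_{out}$ is large; the parameter ratio follows the same expression.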

Furthermore, in coupled designs, gradient interference occurs when the gradients of both tasks (classification and regression) are superimposed in shared layers. The average L2-norm of classification gradients is 50–200 times larger than regression gradients [25]. After decoupling, the regression branch can independently optimize the DFL without interference from classification gradients.

2.5.2. Distribution Focal Loss (DFL)

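DFL [9] is a loss function for bounding box regression in object detection that transforms traditional coordinate regression into discrete probability distribution prediction. Conventional methods regress coordinates directly but suffer from quantization errors caused by feature map downsampling, which makes them sensitive to noise. DFL instead models each bounding box coordinate as a discrete probability distribution, predicting confidence scores for all candidate locations and deriving a continuous coordinate through an expectation. Specifically, DFL forces the probability distribution to concentrate on the two discrete points $y_i$ and $y_{i+1}$ nearest to the ground truth $y$:

$$\mathrm{DFL}(S_i, S_{i+1}) = -\big((y_{i+1} - y)\log S_i + (y - y_i)\log S_{i+1}\big) \tag{7}$$

where $S_i$ is the predicted discrete probability at $y_i$, $y_i \le y \le y_{i+1}$, and the $n$ discrete bins are $y_0, \ldots, y_{n-1}$ ($n$ is the number of discrete bins). During decoding, the dist2bbox function combines the expected value of DFL's output distribution with the anchor coordinates and then scales the result to the original image size using the feature-level stride $s$:

$$\hat{d}_k = \sum_{i=0}^{n-1} y_i\,S_i^{(k)},\ k \in \{l, t, r, b\}; \qquad \hat{\mathbf{b}} = s \cdot \big(a_x - \hat{d}_l,\ a_y - \hat{d}_t,\ a_x + \hat{d}_r,\ a_y + \hat{d}_b\big) \tag{8}$$

where $(a_x, a_y)$ is the anchor point and $\hat{d}_l, \hat{d}_t, \hat{d}_r, \hat{d}_b$ are the expected distances from the anchor to the left, top, right, and bottom box edges.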
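The DFL target split, the expectation decoding, and the dist2bbox step can be sketched in plain Python for a single box. Assumptions: bins sit at integer positions 0 to n-1 in grid units with n = 8, the anchor lies at a grid-cell center, and the stride is 8; `dfl_loss`, `expected_distance`, and this `dist2bbox` are hypothetical helpers written for illustration, not the signatures of any library.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dfl_loss(logits, y):
    """Distribution Focal Loss for one box edge, with bins at integer positions 0..n-1.

    Assumes 0 <= y < n - 1 so that both neighboring bins exist.
    """
    probs = softmax(logits)
    left = math.floor(y)
    right = left + 1
    # Cross-entropy toward the two nearest bins, weighted by linear interpolation.
    return -((right - y) * math.log(probs[left]) + (y - left) * math.log(probs[right]))

def expected_distance(logits):
    """Decode a distribution to a continuous distance via its expectation (grid units)."""
    return sum(i * p for i, p in enumerate(softmax(logits)))

def dist2bbox(anchor, dists, stride):
    """Anchor (grid units) plus (l, t, r, b) distances -> (x1, y1, x2, y2) in pixels."""
    ax, ay = anchor
    left, top, right, bottom = dists
    return ((ax - left) * stride, (ay - top) * stride,
            (ax + right) * stride, (ay + bottom) * stride)

# A distribution with 0.7 on bin 2 and 0.3 on bin 3 decodes to 2.3 bins,
# a position that no single integer bin could represent.
logits = [-30.0] * 8
logits[2], logits[3] = math.log(0.7), math.log(0.3)
d = expected_distance(logits)
print(round(d, 4))  # 2.3
print(dist2bbox((10.5, 10.5), (2.0, 1.0, 3.0, 4.0), stride=8))  # (68.0, 76.0, 108.0, 116.0)
```

The example also shows why the loss in Equation (7) targets the two neighboring bins: the interpolation weights 0.7 and 0.3 are exactly the distribution whose expectation equals the continuous target.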

Traditional linear bounding-box regression, which predicts coordinates directly, is prone to quantization errors caused by feature map downsampling: continuous coordinates are mapped to discrete grid locations, which introduces inaccuracies, especially for small or irregularly shaped targets. These errors are particularly problematic in medical imaging, where small fractures and anomalies must be localized precisely. DFL mitigates them by replacing coordinate regression with distribution regression. Instead of predicting each box edge directly, it predicts a discrete probability distribution over candidate positions for that edge, and the expectation of this distribution yields a more precise continuous coordinate.

This approach improves robustness in three ways:

- Reduced quantization errors: by predicting a probability distribution over possible edge locations, DFL is less sensitive than direct coordinate regression to the small offsets introduced by the discrete grid.

- Improved small-target localization: by considering a range of possible positions rather than a single discrete coordinate, DFL overcomes a limitation of direct regression, which can fail when targets are small or ambiguous.

- Better handling of irregular shapes: DFL is well suited to targets with irregular shapes and blurred boundaries, such as fractures in X-ray images, where a single regressed coordinate may fail to capture the exact extent; the distribution-based approach allows more flexible and accurate localization of such objects.

In summary, DFL offers a more robust and accurate approach to bounding box regression, particularly where quantization errors and small-target localization are critical challenges.

2.5.3. Decoupling Classification and Regression in AsDDet for Enhanced Small-Target Localization

The decoupling of classification and regression tasks in the AsDDet detection head plays a crucial role in improving the accuracy of small-target localization. In real-world applications, classification and regression tasks have fundamentally different needs: classification focuses on understanding the local features, such as textures or shapes, while regression is concerned with accurately estimating spatial relationships and the position of targets. By decoupling these tasks, we ensure that each one is optimized independently, eliminating the interference that would otherwise occur if they were processed together.

- Depthwise Convolutions for Classification: In the classification branch, we use depthwise separable convolutions, which are highly effective at capturing local features without the computational burden of standard convolutions. This allows the model to focus on the critical textures and fine patterns that are essential for detecting small targets, such as fractures or anomalies in medical images. Importantly, this method reduces the complexity, making the classification task more efficient and faster, without compromising accuracy.

- Shuffle Operations for Regression: In the regression branch, shuffle operations are applied to enhance the model’s ability to understand spatial relationships. By rearranging the channels, we increase the communication between them, allowing the model to better learn the geometric relationships between fracture boundaries and other important structures in the image. This method helps the model handle complex shapes, such as irregular fracture lines, which significantly boosts localization accuracy, especially for small targets.

- Decoupling for Task Optimization: Decoupling the two tasks allows the model to specialize. The classification task focuses solely on identifying textures and patterns, while the regression task zeroes in on positioning. This approach minimizes cross-task interference, enabling the model to refine its performance for both tasks, which leads to more accurate detection of small targets like fractures, particularly in noisy and challenging environments such as medical X-rays.

By decoupling classification and regression tasks and incorporating innovations like depthwise convolutions and shuffle operations, the AsDDet detection head offers a significant improvement in small-target localization accuracy, which is particularly valuable in complex applications such as fracture detection in medical imaging. This approach ensures that both tasks can be optimized independently, leading to more reliable and efficient performance in real-world scenarios.

2.6. The Loss Function EfficiCIoU

In medical fracture detection tasks, the traditional CIoU loss faces two key challenges:

- Aspect Ratio Sensitivity: CIoU constrains aspect ratio similarity via the arctan function but shows insensitivity to minor absolute size differences and lacks multi-scale adaptability [26]

- Scale-aware Penalty: CIoU applies equal penalty weights to targets of different scales, while medical imaging exhibits significant scale variations (e.g., pediatric wrist bones are only 40% the size of adult bones)

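We propose the EfficiCIoU (Efficient Complete IoU) loss, which extends CIoU with an absolute dimension penalty and adaptive normalization:

$$\mathcal{L}_{\mathrm{EfficiCIoU}} = 1 - \mathrm{IoU} + \frac{\rho^2(\mathbf{b}, \mathbf{b}^{gt})}{c^2} + \mathcal{P}_w + \mathcal{P}_h + \alpha v \tag{9}$$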

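where:

- $\rho^2(\mathbf{b}, \mathbf{b}^{gt})/c^2$ is the center distance penalty inherited from CIoU; $\rho(\cdot)$ denotes the Euclidean distance between the centers of the predicted box $\mathbf{b}$ and the ground truth box $\mathbf{b}^{gt}$, and $c$ is the diagonal length of their minimum enclosing box.

- $\mathcal{P}_w$ and $\mathcal{P}_h$ are the absolute width and height difference penalties, which directly constrain the absolute errors between the predicted and ground truth boxes to address CIoU's insensitivity to minor size variations. The penalty terms are defined as:

where $C_w$ and $C_h$ are the width and height of the minimum enclosing box. Normalizing by these enclosing-box dimensions prevents large targets from dominating the gradient. The adaptive normalization functions are defined as follows: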

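The term $\alpha v$ represents the aspect ratio penalty inherited from CIoU, which keeps the aspect ratios of the predicted and ground truth boxes consistent. It is formulated as follows:

$$v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2, \qquad \alpha = \frac{v}{(1 - \mathrm{IoU}) + v} \tag{14}$$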

To prevent large width or height discrepancies from disproportionately dominating the loss function, we introduce the arctan function as a bounded and smooth penalty. Specifically, arctan compresses extreme values into a limited range, which stabilizes gradient updates and improves convergence. This design is especially beneficial for medical images, where target objects often vary significantly in scale and irregularity.
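The exact forms of the width/height penalties and the adaptive normalization functions are specific to EfficiCIoU and are not reproduced here. The sketch below is therefore an assumption: it combines CIoU's center-distance and arctan-based aspect-ratio terms with EIoU-style squared width and height differences normalized by the enclosing box. Treat `efficiciou_like_loss` as a hypothetical stand-in that illustrates the structure of the loss, not as the exact EfficiCIoU formulation; boxes are (x1, y1, x2, y2).

```python
import math

def efficiciou_like_loss(pred, gt, eps=1e-9):
    """Hypothetical EIoU-style stand-in for EfficiCIoU; boxes are (x1, y1, x2, y2)."""
    w, h = pred[2] - pred[0], pred[3] - pred[1]
    wg, hg = gt[2] - gt[0], gt[3] - gt[1]
    # Intersection over union.
    iw = max(0.0, min(pred[2], gt[2]) - max(pred[0], gt[0]))
    ih = max(0.0, min(pred[3], gt[3]) - max(pred[1], gt[1]))
    inter = iw * ih
    iou = inter / (w * h + wg * hg - inter + eps)
    # Width/height of the minimum enclosing box (C_w, C_h) and its squared diagonal c^2.
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps
    # Center-distance term inherited from CIoU: rho^2(b, b_gt) / c^2.
    rho2 = ((pred[0] + pred[2] - gt[0] - gt[2]) ** 2
            + (pred[1] + pred[3] - gt[1] - gt[3]) ** 2) / 4
    center = rho2 / c2
    # Assumed absolute width/height penalties, normalized by the enclosing box.
    p_w = (w - wg) ** 2 / (cw ** 2 + eps)
    p_h = (h - hg) ** 2 / (ch ** 2 + eps)
    # CIoU aspect-ratio term; arctan keeps v within [0, 1).
    v = (4 / math.pi ** 2) * (math.atan(wg / hg) - math.atan(w / h)) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return 1 - iou + center + p_w + p_h + alpha * v

gt_box = (0.0, 0.0, 10.0, 10.0)
print(efficiciou_like_loss(gt_box, gt_box) < 1e-6)  # True: identical boxes give (almost) zero loss
```

Because every penalty is divided by an enclosing-box dimension and the aspect term is bounded by arctan, no single term can grow without limit when a prediction is far off in scale.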

Detailed Analysis of EfficiCIoU Loss and Its Role in Fracture Detection

The effectiveness of EfficiCIoU arises from its triple optimization mechanism, specifically designed to address the unique challenges of medical imaging, especially for fracture detection.

Firstly, the absolute size penalty terms (Equations (10) and (11)) explicitly constrain width and height discrepancies between predicted and ground truth boxes, addressing the inherent limitation of traditional IoU-based losses, which fail to account for absolute size variations—a critical issue in pediatric fracture detection, where size differences are significant.

Secondly, the adaptive normalization strategy (Equations (12) and (13)) dynamically adjusts the loss weights, ensuring balanced gradient updates across targets of varying scales. This strategy is designed to enhance the sensitivity to small fractures (less than 5 pixels), and preliminary experiments show that it improves gradient signals for such targets, leading to better small-target localization.

Finally, the improved geometric constraint term, which builds on the CIoU’s center alignment and aspect ratio optimization, introduces probabilistic modeling to better handle the blurred boundaries often seen in X-ray images.

This design yields a 1.1% improvement in mAP@50 for detecting irregular fracture lines on the GRAZPEDWRI-DX dataset, better aligning the model's performance with the clinical requirements of accurate fracture diagnosis.

2.7. Contribution Analysis of SSFF and AsDDet Modules

To better explain the architectural motivations behind ASC-YOLO’s performance improvements, we provide a concise analysis of how the SSFF module and the AsDDet detection head contribute individually and collaboratively to detection accuracy. While the structural details of these modules are described in Section 2.4 and Section 2.5, and their quantitative performance is reported in Section 3.2, this section focuses on their complementary functional roles within the full model.

The SSFF module (Scale-Sensitive Feature Fusion) improves the fusion of multi-scale features by addressing the semantic misalignment between shallow and deep layers. Through adaptive pooling and interpolation, SSFF aligns low-level texture features with high-level semantic features, preserving detailed spatial cues while minimizing background interference. This alignment is particularly crucial in medical imaging, where small fractures are often subtle and prone to being overwhelmed by noise.

The AsDDet detection head addresses the performance bottleneck of shared-task optimization by decoupling classification and regression into two lightweight, task-specific branches. Depthwise convolutions focus on spatially sensitive classification, while shuffle-enhanced regression promotes more accurate bounding box localization. This design enables the model to separately optimize confidence prediction and coordinate refinement—beneficial when dealing with irregular or densely clustered fracture targets.

Together, these two modules operate in a complementary fashion: SSFF strengthens the feature representations fed into the head, while AsDDet ensures that these enhanced features are fully exploited in task-specific decoding. Their integration effectively bridges low-level perception and high-level decision-making, leading to a measurable improvement across detection metrics. This design synergy is evidenced by consistent gains in mAP, precision, and recall when compared with baseline detectors.

With the above architectural insights, we now proceed to experimentally validate the effectiveness of the proposed modules in Section 3.

3. Results

3.1. Main Performance Evaluation

In the experimental evaluation, model performance was assessed with four metrics: precision (reflecting detection reliability), recall (measuring fracture coverage), mAP@50 [27] (detection ability under lenient localization criteria, relevant to rapid screening), and mAP@50-95 (robustness under strict localization criteria, particularly for complex fractures such as comminuted fractures). Table 1 shows the experimental results.

Table 1.

Comparison of results of different target detection models on the GRAZPEDWRI-DX dataset.

As evident from the table, ASC-YOLO exhibits superior performance over comparative YOLO models and RT-DETR across all metrics, particularly in balancing parameter efficiency with detection precision.
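The mAP metrics used above average per-class average precision (AP). The paper does not state which AP interpolation its evaluator uses; the sketch below assumes all-point interpolation as in PASCAL VOC 2010 and later, whereas COCO-style evaluators sample 101 recall points, so values can differ slightly. The helper names are hypothetical.

```python
def average_precision(recalls, precisions):
    """All-point interpolated AP: area under the monotone precision envelope.

    `recalls` must be in increasing order, as obtained by ranking detections by confidence.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing from right to left (the interpolation envelope).
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))

def mean_ap(ap_per_class):
    """mAP at one IoU threshold: mean of the per-class AP values (IoU 0.5 for mAP@50)."""
    return sum(ap_per_class) / len(ap_per_class)

# mAP@50-95 repeats the evaluation at these ten IoU thresholds and averages the results.
IOU_THRESHOLDS = [round(0.50 + 0.05 * k, 2) for k in range(10)]

print(average_precision([0.5, 1.0], [1.0, 0.5]))  # 0.75
```

Since mAP@50-95 also rewards tight boxes at IoU thresholds up to 0.95, it is always at most as high as mAP@50 for the same detections, which is why the two metrics are reported separately.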

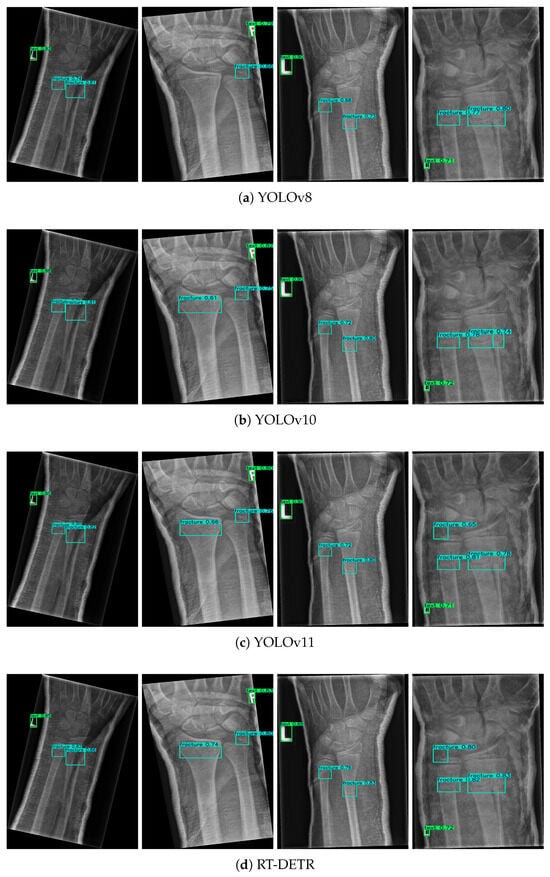

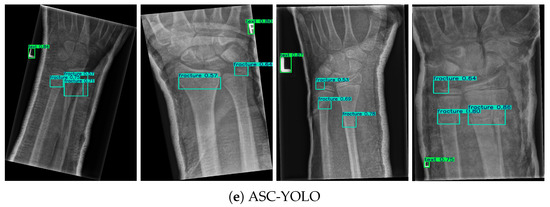

For a more intuitive comparison between ASC-YOLO and the other models, four X-ray images were randomly selected from the dataset, as shown in Figure 5. The figure shows the predictions of the proposed model, YOLOv8, YOLOv10, YOLOv11, and RT-DETR [28], together with the ground-truth annotations. The improved model detects skeletal abnormalities more accurately.

Figure 5.

Comparison of fracture detection results across different models.

Green boxes indicate annotated (ground-truth) regions, while blue boxes denote predicted fracture areas. The same four X-ray images are shown for each of the five models. Compared with the dataset labels, ASC-YOLO correctly identified all annotated fractures, whereas the other models missed or misclassified some of them. In the second image, YOLOv8 failed to detect the fracture on the left side. In the third image, the fracture in the top-left corner was missed by YOLOv8, YOLOv10, YOLOv11, and RT-DETR. In the fourth image, the fracture in the top-left corner was missed by both YOLOv8 and YOLOv10.

On the GRAZPEDWRI-DX dataset, ASC-YOLO outperforms the compared models (YOLOv8, YOLOv10, YOLOv11, and RT-DETR). It achieves the highest values on all four metrics: precision (0.738), recall (0.555), mAP@50 (0.611), and mAP@50-95 (0.402), including a 7.4-percentage-point improvement in mAP@50 over the baseline YOLOv8 model. ASC-YOLO also attains the highest F1-score (0.634) and Jaccard Index (0.487) among all models, indicating a good balance between sensitivity and precision. These results support the effectiveness of the SSFF module and the AsDDet detection head in handling small targets and complex background interference in medical images, and the qualitative results in Figure 5 are consistent with this picture of accurate and robust fracture detection.
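Because the F1-score is the harmonic mean of precision and recall, the reported value can be cross-checked from the precision and recall given above. This short Python check assumes that F1 was computed from the aggregate precision and recall, which the text does not state explicitly; it does not attempt to reproduce the Jaccard Index, whose exact definition is not given in this section.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (defined as 0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall reported for ASC-YOLO on GRAZPEDWRI-DX.
f1 = f1_score(0.738, 0.555)
print(round(f1, 3))
```

The result rounds to 0.634, matching the reported F1-score.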

3.2. Ablation Study

To verify the independent contribution of each module in ASC-YOLO, the core components were added step by step to the YOLO baseline model and compared on the GRAZPEDWRI-DX test set under an identical experimental setup. Table 2 shows the results.

Table 2.

Results of ablation experiments.

Below, the contributions of the SSFF module and the AsDDet detection head are analyzed by comparing ASC-YOLO with and without each component.

Without SSFF Module: Removing the SSFF module caused a clear performance drop, with mAP@50 decreasing by 4.8%. This highlights the importance of the multi-scale feature fusion provided by SSFF, especially for small fractures. Precision and recall also decreased, which we attribute to the model's reduced ability to align and fuse features across scales, lowering its ability to detect fractures accurately.
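The Discussion describes SSFF as aligning scales with a bidirectional pooling strategy, in which max pooling retains salient responses and average pooling smooths noise. The Python sketch below illustrates only that idea on a tiny single-channel map. The 2 × 2 window, the averaging of the two pooled maps, and the additive fusion with the deeper map are assumptions made for illustration, and the function names are hypothetical rather than taken from the ASC-YOLO implementation, which operates on multi-channel tensors.

```python
# Hypothetical illustration of "bidirectional pooling" for cross-scale alignment.
# Assumptions (not from the paper): single-channel maps, 2x2 windows with
# stride 2, and fusion by averaging the two pooled maps and adding the result
# to the deeper (lower-resolution) map.
from typing import List

Grid = List[List[float]]


def pool2x2(x: Grid, mode: str) -> Grid:
    """2x2 pooling with stride 2; mode is 'max' or 'avg'."""
    out = []
    for i in range(0, len(x) - 1, 2):
        row = []
        for j in range(0, len(x[0]) - 1, 2):
            window = [x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1]]
            row.append(max(window) if mode == "max" else sum(window) / 4)
        out.append(row)
    return out


def align_and_fuse(shallow: Grid, deep: Grid) -> Grid:
    """Downsample the shallow map to the deep map's size, then fuse."""
    mx = pool2x2(shallow, "max")  # keeps the strongest response in each window
    av = pool2x2(shallow, "avg")  # dampens isolated spikes by averaging
    return [
        [0.5 * (m + a) + d for m, a, d in zip(m_row, a_row, d_row)]
        for m_row, a_row, d_row in zip(mx, av, deep)
    ]


shallow = [
    [0, 0, 0, 8],
    [0, 0, 0, 0],
    [1, 1, 0, 0],
    [1, 1, 0, 0],
]
deep = [[0.0, 1.0], [1.0, 0.0]]
fused = align_and_fuse(shallow, deep)
print(fused)
```

In the top-right window, the single strong activation (8) is kept at full strength by max pooling but reduced to 2 by average pooling, so the combined map preserves it while tempering its magnitude.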

Without AsDDet Detection Head: Removing the AsDDet detection head caused a noticeable decline in mAP@50-95, demonstrating the importance of task decoupling for small-target localization. Because the AsDDet regression branch models box coordinates with Distribution Focal Loss (DFL) [9], removing it led to less precise bounding boxes for irregularly shaped fractures and reduced the model's ability to handle complex fracture geometries. The drop in mAP@50-95, the metric that rewards tight localization, underscores the role of AsDDet in improving localization accuracy, particularly for fractures with non-rectangular shapes.
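Distribution Focal Loss, introduced with Generalized Focal Loss [9], treats each box-side offset as a discrete probability distribution over integer bins instead of a single regressed value. A minimal Python sketch of the standard formulation for one box side follows. The number of bins and the example logits are arbitrary choices, and the code is not taken from the AsDDet implementation.

```python
# Sketch of Distribution Focal Loss (DFL) for ONE box-side offset.
# Bins 0..n-1 are integer offsets; the network outputs one logit per bin.
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dfl_loss(logits: List[float], target: float) -> float:
    """Cross-entropy on the two bins that bracket the continuous target,
    each weighted by how close the target is to that bin."""
    if not 0 <= target < len(logits) - 1:
        raise ValueError("target must lie in [0, n_bins - 1)")
    probs = softmax(logits)
    left = int(math.floor(target))
    right = left + 1
    return -((right - target) * math.log(probs[left])
             + (target - left) * math.log(probs[right]))


def decode_offset(logits: List[float]) -> float:
    """Predicted offset = expected value of the bin distribution."""
    return sum(i * p for i, p in enumerate(softmax(logits)))


n_bins = 16
uniform = [0.0] * n_bins
peaked = [0.0] * n_bins
peaked[3] = peaked[4] = 8.0  # most probability mass on bins 3 and 4

print(decode_offset(uniform), decode_offset(peaked))
print(dfl_loss(uniform, 3.5), dfl_loss(peaked, 3.5))
```

A distribution concentrated around the true offset (here 3.5) both decodes close to it and receives a much lower loss than a flat distribution, which is what pushes the regression branch toward sharp, well-localized box edges.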

Although additional components (the EfficiCIoU loss, AsDDet, and SSFF) are introduced, the total number of parameters decreases. EfficiCIoU is a loss function and adds no learnable parameters. The reduction comes mainly from lower channel dimensions in the backbone (e.g., the Conv layer after SPPF is reduced from 1024 to 512 channels) and from 1 × 1 convolution-based channel compression in the neck and head. As a result, the network achieves better accuracy with fewer parameters.
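These parameter savings follow from simple arithmetic: a k × k convolution has c_in · c_out · k² weights, so halving a layer's output channels halves its weights, and both a 1 × 1 compression placed before a 3 × 3 convolution and a depthwise separable convolution (as used in the AsDDet classification branch) are much cheaper than a full 3 × 3 convolution. The Python sketch below uses illustrative channel counts, not the exact layer shapes of ASC-YOLO or YOLOv8-nano (whose width multiplier scales the nominal channel counts); biases and BatchNorm parameters are ignored.

```python
# Weight counts for convolution layers (biases and BatchNorm ignored).
# Channel numbers are illustrative, not ASC-YOLO's exact layer shapes.


def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Standard k x k convolution: every output channel sees every input channel."""
    return c_in * c_out * k * k


def dw_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Depthwise k x k conv (one filter per input channel) + pointwise 1 x 1 conv."""
    return c_in * k * k + c_in * c_out


# 1) Halving the output channels of a 3 x 3 layer (1024 -> 512).
wide = conv_params(512, 1024, 3)
narrow = conv_params(512, 512, 3)

# 2) A 1 x 1 compression (1024 -> 512) before a 3 x 3 conv vs. a direct 3 x 3 conv.
direct = conv_params(1024, 1024, 3)
compressed = conv_params(1024, 512, 1) + conv_params(512, 1024, 3)

# 3) Depthwise separable vs. standard 3 x 3 convolution at 256 channels.
standard = conv_params(256, 256, 3)
separable = dw_separable_params(256, 256, 3)

print(wide, narrow, direct, compressed, standard, separable)
```

In this example the depthwise separable layer needs roughly one ninth of the weights of the standard layer, which is why a fully decoupled head can remain lightweight.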

In conclusion, the ablation study confirms that both the SSFF module and AsDDet detection head contribute significantly to ASC-YOLO’s overall performance.

3.3. Generalization Analysis

3.3.1. Dataset Preparation

This study uses the brain tumor dataset provided by Ultralytics (the developers of YOLOv8), which consists of medical images from magnetic resonance imaging (MRI) or computed tomography (CT) scans. The images contain information on the presence, location, and characteristics of brain tumors, which makes the dataset useful for training computer vision models to detect brain tumors automatically in support of early diagnosis and treatment planning. The dataset is divided into two subsets: a training set of 893 images and a test set of 223 images, each image paired with its annotations. It contains two categories: negative (images without brain tumors) and positive (images with brain tumors).

3.3.2. Results of the Experiment

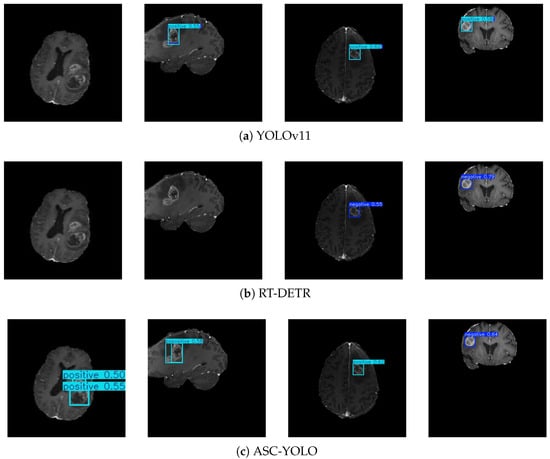

All models were trained under identical conditions (hardware: NVIDIA RTX 3090; batch size 16; initial learning rate 0.01; 100 epochs) to compare ASC-YOLO with YOLOv11 and RT-DETR. As shown in Table 3, ASC-YOLO has clear advantages in the brain tumor detection task.

Table 3.

Comparison of results of different target detection models on the brain tumor dataset.

In the brain tumor detection task, ASC-YOLO achieved the best overall performance with only 2.9M parameters. Its recall (0.550) and mAP@50 (0.602) improved by 6% and 7%, respectively, over the strongest baseline, YOLOv11, and it reached 0.412 in mAP@50-95. These results suggest that the model generalizes across imaging modalities (from X-ray to MRI/CT). We attribute the reduction in missed tumor detections mainly to the SSFF module's robust handling of complex backgrounds, which indicates potential for computer-aided diagnosis of multiple diseases.

In Figure 6, dark blue boxes indicate the negative class, while light blue boxes indicate the positive class. As before, four images were randomly selected and are shown for each of the three models. According to the dataset labels, ASC-YOLO correctly detected and classified all tumors, whereas the other two models produced both false positives and false negatives. YOLOv11 missed the tumor in the first image and made an incorrect prediction in the fourth image. RT-DETR failed to detect the tumors in the first and second images and made an incorrect detection in the third image. These examples further support the model's ability to handle small targets and complex background interference across different datasets.

Figure 6.

Cross-domain generalization performance in brain tumor detection.

4. Discussion

4.1. Analysis of Core Innovations

The core innovations of ASC-YOLO lie in three aspects. First, the SSFF module aligns features across scales with a bidirectional pooling strategy (max pooling retains salient features, while average pooling smooths noise), which addresses metal-artifact interference in X-rays and noise in shallow features; in the ablation experiments it accounts for a 4.8% improvement in mAP@50. Second, the AsDDet detection head adopts a fully decoupled design: its classification branch uses depthwise separable convolution to focus on local texture features, while its regression branch uses Distribution Focal Loss (DFL) to model bounding-box coordinates. This yields a 1.5% gain in mAP@50-95 while keeping the parameter count low. Third, the improved EfficiCIoU loss adds an absolute width–height penalty term, which increases the model's detection sensitivity for small pediatric fractures (on average only about 40% of the size of those in adults).
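This section does not give the exact EfficiCIoU formula. As a point of reference, the Python sketch below implements the published EIoU (Efficient IoU) loss, which adds to 1 − IoU a center-distance term and separate penalties on the absolute width and height differences, each normalized by the smallest enclosing box. EfficiCIoU may differ in detail (for example, it may also retain CIoU's aspect-ratio term), so this illustrates the width–height penalty idea and is not the authors' loss.

```python
# EIoU-style box regression loss (illustration; not necessarily EfficiCIoU).
# Boxes are (x1, y1, x2, y2).
from typing import Tuple

Box = Tuple[float, float, float, float]


def eiou_loss(pred: Box, gt: Box, eps: float = 1e-9) -> float:
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # IoU term
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    union = pw * ph + gw * gh - inter
    iou = inter / (union + eps)

    # Smallest enclosing box: its width, height, and squared diagonal
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    diag2 = cw ** 2 + ch ** 2 + eps

    # Squared distance between box centres, normalized by the diagonal
    rho2 = ((px1 + px2) / 2 - (gx1 + gx2) / 2) ** 2 + ((py1 + py2) / 2 - (gy1 + gy2) / 2) ** 2

    # Absolute width and height penalties, normalized by the enclosing box
    w_term = (pw - gw) ** 2 / (cw ** 2 + eps)
    h_term = (ph - gh) ** 2 / (ch ** 2 + eps)

    return 1 - iou + rho2 / diag2 + w_term + h_term


same = eiou_loss((0, 0, 10, 10), (0, 0, 10, 10))
oversized = eiou_loss((0, 0, 8, 8), (0, 0, 4, 4))  # prediction twice the size of a small target
print(same, oversized)
```

For the oversized prediction, the width and height terms contribute 0.5 of the total loss of about 1.31, so size errors on small targets are penalized directly rather than only through IoU.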

4.2. Deployment-Oriented Model Selection and Optimization

YOLOv8-nano was chosen as the baseline mainly for its technical adaptability and deployment advantages in real medical settings. Compared with YOLOv10, YOLOv8 relies on mature NMS-based post-processing that is well optimized across hardware platforms, and its standardized modular design makes it easy to integrate new components such as SSFF and AsDDet. YOLOv10's NMS-free design, although theoretically advanced, requires additional custom operators to run on different inference frameworks, which substantially increases the complexity of clinical deployment. Medical scenarios also place strict demands on efficiency. On the one hand, emergency diagnosis requires real-time inference, which calls for a compact model. On the other hand, fracture targets usually occupy less than 5% of the pixels in an image, so large models tend to waste computation and time on irrelevant background. YOLOv8-nano meets these requirements and is supported by a comprehensive pruning and quantization toolchain that can convert the model to INT8 format or apply channel pruning directly, making it a practical choice for moving healthcare AI from research to clinical deployment.
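As a rough illustration of what INT8 conversion involves, the Python sketch below applies symmetric per-tensor quantization to a handful of weights: each float is mapped to an integer in [−127, 127] using a single scale, max|w| / 127. This is a generic textbook scheme chosen for illustration; production toolchains typically add calibration data and per-channel scales, and the sketch does not represent the specific toolchain referred to above.

```python
# Generic symmetric per-tensor INT8 quantization (illustration only).
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """q = clamp(round(w / scale), -127, 127) with scale = max|w| / 127."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map integers back to approximate float values."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.27, 0.333]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)  # rounding error per weight is at most scale / 2
```

Each weight then occupies 1 byte instead of the 4 bytes of a float32 value, at the cost of a bounded rounding error.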

4.3. Limitations and Prospects

Although ASC-YOLO achieves strong results in fracture detection, there is still room for improvement in detecting very low-contrast fractures and in adapting across age groups, and its applicability to 3D medical images has yet to be verified. Future research will focus on feature-enhancement techniques based on dual-energy X-ray imaging to better distinguish metal implants from true fractures, and on 3D extensions of the SSFF module so that it can handle multimodal medical images such as CT and MRI. The generalization performance of the model is also expected to improve with a more balanced multicenter dataset, in particular one with a higher proportion of pediatric fracture samples. Dynamic model compression, which adapts model complexity to the needs of different clinical scenarios, is another important direction. Together, these improvements are expected to enhance the practical value and applicability of ASC-YOLO in real clinical environments.

5. Conclusions

This paper presents ASC-YOLO, a lightweight detection framework tailored to pediatric fracture detection in X-ray images. By introducing the SSFF module and the AsDDet detection head, the proposed method improves the model's ability to detect small and irregular targets against complex backgrounds. In addition, the EfficiCIoU loss and discrete probability distribution regression (DFL) improve localization precision and robustness. Extensive experiments on the GRAZPEDWRI-DX dataset show that ASC-YOLO outperforms the compared methods in precision, recall, mAP, F1-score, and Jaccard Index. Experiments on a brain tumor dataset further indicate that the model generalizes across medical tasks. In future work, we aim to extend ASC-YOLO to 3D radiographic data and explore its potential in other clinical applications, such as automated treatment planning and lesion classification.

Author Contributions

Resources, Y.W.; Writing—original draft, S.D.; Supervision, Y.W.; Funding acquisition, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Görgens, S.; Patel, D.; Keenan, K.; Fishbein, J.; Bullaro, F. Assessing the Variability of Antibiotic Management in Patients with Open Hand Fractures Presenting to the Pediatric Emergency Department. Pediatr. Emerg. Care 2022, 38, 502–505.

- George, M.P.; Bixby, S. Frequently missed fractures in pediatric trauma: A pictorial review of plain film radiography. Radiol. Clin. 2019, 57, 843–855.

- Nagy, E.; Janisch, M.; Hržić, F.; Sorantin, E.; Tschauner, S. A pediatric wrist trauma X-ray data-set (GRAZPEDWRI-DX) for machine learning. Sci. Data 2022, 9, 222.

- Sohan, M.; Sai Ram, T.; Rami Reddy, C.V. A review on YOLOv8 and its advancements. In Proceedings of the International Conference on Data Intelligence and Cognitive Informatics, Tirunelveli, India, 18–20 November 2024; Springer: Singapore, 2024; pp. 529–545.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer Nature: Cham, Switzerland, 2024; pp. 1–21.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. In Proceedings of the 38th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024; Volume 37, pp. 107984–108011.

- Cao, K.; Liu, M.; Su, H.; Wu, J.; Zhu, J.; Liu, S. Analyzing the noise robustness of deep neural networks. IEEE Trans. Vis. Comput. Graph. 2020, 27, 3289–3304.

- Liu, J.; Fan, X.; Jiang, J.; Liu, R.; Luo, Z. Learning a deep multi-scale feature ensemble and an edge-attention guidance for image fusion. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 105–119.

- Li, X.; Wang, W.; Wu, L.; Chen, S.; Hu, X.; Li, J.; Tang, J.; Yang, J. Generalized focal loss: Learning qualified and distributed bounding boxes for dense object detection. In Proceedings of the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Online, 6–12 December 2020; Volume 33, pp. 21002–21012.

- Hua, J.; Wang, Z.; Zou, Q.; Xiao, J.; Tian, X.; Zhang, Y. Re-decoupling the classification branch in object detectors for few-class scenes. Pattern Recognit. 2024, 153, 110541.

- Wen, N.; Guo, R.; Ma, D.; Ye, X.; He, B. AIoU: Adaptive bounding box regression for accurate oriented object detection. Int. J. Intell. Syst. 2022, 37, 748–769.

- Dibo, R.; Galichin, A.; Astashev, P.; Dylov, D.V.; Rogov, O.Y. DeepLOC: Deep learning-based bone pathology localization and classification in wrist X-ray images. In Proceedings of the International Conference on Analysis of Images, Social Networks and Texts, Yerevan, Armenia, 28–30 September 2023; Springer Nature: Cham, Switzerland, 2023; pp. 199–211.

- Ahmed, A.; Imran, A.S.; Manaf, A.; Kastrati, Z.; Daudpota, S.M. Enhancing Wrist Fracture Detection with YOLO. arXiv 2024, arXiv:2407.12597.

- Ju, R.Y.; Cai, W. Fracture detection in pediatric wrist trauma X-ray images using YOLOv8 algorithm. Sci. Rep. 2023, 13, 20077–20090.

- Chien, C.T.; Ju, R.Y.; Chou, K.Y.; Chiang, J.S. YOLOv9 for fracture detection in pediatric wrist trauma X-ray images. Electron. Lett. 2024, 60, e13248.

- Goceri, E. Medical image data augmentation: Techniques, comparisons and interpretations. Artif. Intell. Rev. 2023, 56, 12561–12605.

- Gao, Y.; Dai, Y.; Liu, F.; Chen, W.; Shi, L. An anatomy-aware framework for automatic segmentation of parotid tumor from multimodal MRI. Comput. Biol. Med. 2023, 161, 107000.

- Xu, Z.; Zhang, X.; Zhang, H.; Liu, Y.; Zhan, Y.; Lukasiewicz, T. EFPN: Effective medical image detection using feature pyramid fusion enhancement. Comput. Biol. Med. 2023, 163, 107149.

- Liu, S.; Huang, D.; Wang, Y. Path aggregation network for instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Ghiasi, G.; Lin, T.Y.; Le, Q.V. NAS-FPN: Learning scalable feature pyramid architecture for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7036–7045.

- Huang, H.; Chen, Z.; Zou, Y.; Lu, M.; Chen, C. Channel prior convolutional attention for medical image segmentation. Comput. Biol. Med. 2024, 178, 108784.

- Kanakis, M.; Bruggemann, D.; Saha, S.; Georgoulis, S.; Obukhov, A.; Van Gool, L. Reparameterizing convolutions for incremental multi-task learning without task interference. In Proceedings of the 16th European Conference on Computer Vision (ECCV 2020), Part XX, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 689–707.

- Zhang, X.; Zhou, H.; Ma, N.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 1234–1246.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 1–15.

- Liu, B.; Liu, X.; Jin, X.; Stone, P.; Liu, Q. Conflict-averse gradient descent for multi-task learning. In Proceedings of the 35th Conference on Neural Information Processing Systems (NeurIPS 2021), Online, 6–14 December 2021; Volume 34, pp. 18878–18890.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Lin, T.Y.; Goyal, P.; Girshick, R. Focal loss for dense object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs beat YOLOs on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).