1. Introduction

Hyperspectral imaging (HSI) captures the spectral response of ground objects across hundreds of contiguous narrow bands and has become an important remote sensing tool for identifying subtle material differences on the Earth’s surface. It plays a critical role in applications such as precision agriculture [1,2,3,4,5], environmental monitoring, and urban planning [6,7,8,9,10]. However, unsupervised analysis of HSI data remains highly challenging because of spectral redundancy and spatial complexity. In recent years, continuous advances in imaging devices and substantial reductions in acquisition costs have made HSI data increasingly accessible, leading to a rapid accumulation of large-scale unlabeled hyperspectral images. Because such unlabeled data cannot be used directly for supervised learning, much of the information they contain remains unexploited, which limits their practical value. Therefore, effectively exploring the internal structure of HSI data without relying on manual annotations has become a key research issue [11,12].

In this context, hyperspectral image clustering, a typical unsupervised learning paradigm, has emerged as an important direction for addressing this problem because it can automatically discover latent categories and reveal spatial semantic structures. Traditional clustering algorithms typically treat each pixel as an independent point and ignore the spatial correlations between neighboring pixels. This often fragments spatially coherent regions and discards critical local structural information [13,14]. In contrast, graph neural networks (GNNs) can naturally integrate spectral and spatial information, model complex relationships between pixels, and require relatively little labeled data [6].

Building on these observations, recent research has increasingly focused on combining traditional clustering heuristics [1] (e.g., K-means) with deep learning techniques. The former provides a fast, unsupervised initial partition that serves as a “weak supervision” signal for the latter. Deep models then refine this partition by learning expressive representations that capture nonlinear relationships. This iterative optimization strategy mitigates the limitations imposed by scarce annotations and has been shown to improve clustering accuracy and robustness in large-scale HSI scenarios [15,16].

Despite these notable advances, existing methods still face challenges such as high spectral dimensionality, redundancy, noise, and insufficient integration of spatial priors. While several approaches attempt to address these issues individually, they often overlook the synergy between local representation refinement and global structural modeling. Moreover, the decoupling of clustering and representation learning stages can lead to sub-optimal feature alignment, especially in complex HSI scenes.

To this end, we propose a Joint Spectral–Spatial Representation Learning (JSRL) framework for robust HSI clustering. JSRL adopts a hierarchical two-stage pipeline that progressively captures both fine-grained pixel-level features and global structural dependencies. In the first stage, we perform unsupervised clustering on PCA-reduced spectral features to generate pseudo-labels, which guide a residual Graph Attention Network (GAT) in learning refined spectral–spatial representations. In the second stage, we aggregate pixels into superpixels and construct a region-level graph, on which a Variational Graph Autoencoder (VGAE) captures latent structural relationships. To further optimize the global topology, we apply Quantum-behaved Particle Swarm Optimization (QPSO) to refine the superpixel graph from which these embeddings are learned. The framework thus jointly integrates pseudo-supervision, local context refinement, and global structure modeling, mitigating spectral redundancy, enhancing spatial consistency, and improving clustering generalization.

Our main contributions are as follows:

We propose JSRL, a novel hierarchical clustering framework that unifies pixel-level pseudo-supervision and superpixel-level structural modeling to effectively capture both local spectral–spatial patterns and global contextual dependencies.

We design a two-stage learning pipeline where residual GATs are used for context-aware refinement of pixel-wise representations, and a VGAE-QPSO module is introduced to learn and optimize structure-aware embeddings at the region level.

Extensive experiments on three benchmark hyperspectral datasets demonstrate that JSRL consistently outperforms existing state-of-the-art clustering methods in terms of accuracy, robustness, and generalization under varying data conditions.

2. Related Works

Hyperspectral image (HSI) clustering has been extensively studied through a variety of approaches, which can be broadly categorized into: (1) traditional clustering methods and (2) representation learning-based methods. While traditional methods provide intuitive clustering priors, they often struggle with the high dimensionality and complex spatial patterns inherent in HSI data. Consequently, recent research has increasingly focused on learning-based approaches that aim to improve feature representation and clustering robustness.

2.1. Traditional Clustering Methods

Traditional HSI clustering methods mainly include centroid-based, density-based, bio-inspired, and graph-based approaches. Centroid-based algorithms, such as K-means [17], assign pixels to clusters by minimizing intra-cluster spectral distances. Although simple and efficient, these methods are highly sensitive to initialization and noise and do not exploit spatial context, which results in fragmented clusters [13,18,19]. Density-based methods such as DBSCAN [20] and Density Peak Clustering [21] detect clusters from local data density and are robust to outliers [22]. However, their effectiveness diminishes on high-dimensional HSI data because appropriate density thresholds are difficult to estimate. Bio-inspired algorithms, such as genetic algorithms and particle swarm optimization [23], provide global search capabilities that help avoid local optima, but they are typically computationally expensive and sensitive to hyperparameter settings [13]. Graph-based methods construct affinity graphs in which each node corresponds to a pixel and edges encode similarity. Sparse Subspace Clustering [24] and Low-Rank Representation [25] are popular for their ability to capture global data structure, but their scalability is limited by high computational cost. Efficient variants based on anchor graphs [26] or superpixel segmentation [27] reduce this cost but still suffer from insufficient spectral–spatial fusion and sensitivity to noise. In summary, although traditional clustering methods lay a solid foundation for HSI analysis, their limited ability to handle high-dimensional spectral redundancy, spatial correlation, and data heterogeneity motivates the exploration of more advanced, learning-based approaches.
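To make the centroid-based family concrete, the following is a minimal NumPy sketch of Lloyd's K-means iterations on a matrix of pixel spectra. It is illustrative only and not the implementation used in [17]; the helper name `kmeans_pixels`, the random initialization, and the toy data are our own assumptions. Each row of `X` is one pixel, and pixel positions never enter the computation, which is precisely the missing spatial context noted above.

```python
import numpy as np


def kmeans_pixels(X, k, n_iter=100, seed=0):
    """Lloyd's K-means on pixel spectra X of shape (N, C); returns (labels, centroids).

    Hypothetical helper for illustration: pixels are independent points,
    so no spatial information enters the objective.
    """
    rng = np.random.default_rng(seed)
    # Initialize centroids with k distinct pixels chosen at random (assumed init scheme).
    centroids = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    labels = np.full(len(X), -1)
    for _ in range(n_iter):
        # Assignment step: squared Euclidean distance from every pixel to every centroid.
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        new_labels = dists.argmin(axis=1)
        if np.array_equal(new_labels, labels):
            break  # assignments are stable: converged
        labels = new_labels
        # Update step: each centroid moves to the mean spectrum of its pixels.
        for c in range(k):
            members = X[labels == c]
            if len(members) > 0:  # keep the old centroid if a cluster empties
                centroids[c] = members.mean(axis=0)
    return labels, centroids


# Toy "image": 50 pixels of one material and 50 of another, 4 bands each.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.2, 0.01, (50, 4)), rng.normal(0.8, 0.01, (50, 4))])
labels, _ = kmeans_pixels(X, k=2)
```

Shuffling the rows of `X` would leave the result unchanged up to relabeling, which illustrates why purely spectral clustering cannot enforce spatial coherence.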

2.2. Deep Representation Learning-Based Methods

The advent of deep learning has enabled powerful representation learning techniques that automatically extract meaningful spectral–spatial features for HSI clustering in an end-to-end manner. Early convolutional neural network (CNN) architectures [6] exploit local spatial continuity and have proven effective for feature extraction. However, CNNs generally struggle to model non-local dependencies and complex spectral variations. Subspace learning approaches [28] embed high-dimensional spectral data into compact latent spaces, improving efficiency and feature compactness. Nevertheless, they often overlook the spatial consistency among neighboring pixels, which limits their representational power. To overcome the limitations of handcrafted priors and scarce supervision, self-supervised and contrastive learning methods [29,30] have been introduced to enhance feature discriminability without manual annotations. While promising, these approaches depend heavily on the quality of pseudo-labels or augmentation strategies, which are difficult to design for complex HSI datasets. More recently, graph neural networks have emerged as a powerful paradigm for jointly modeling spectral similarity and spatial topology via edge-weighted message passing. Methods incorporating residual Graph Attention Networks [31] or spectral–spatial co-attention mechanisms have improved clustering performance by fusing local and global contextual information. Despite these advances, most existing approaches either focus on pixel-level feature refinement or treat clustering and representation learning as separate stages, which restricts their ability to capture multi-scale structural dependencies. Recent work has also increasingly adopted graph contrastive objectives to strengthen unsupervised feature learning. Qi et al. [32] introduce SPGCC, which designs semantic-invariant augmentations at the superpixel level and achieves state-of-the-art gains on Indian Pines and Salinas. To further reduce model complexity, Yang et al. [33] propose S²GCL, a lightweight MLP-based framework that removes data augmentation entirely yet maintains competitive accuracy on four public datasets. Complementarily, Wang et al. [34] present MLGSC, a multi-level subspace contrastive scheme that unifies local and global graph representations and reports an overall accuracy (OA) above 99% on Pavia University. These approaches highlight the trend toward contrastive, graph-centric representation learning, but they all rely on fixed affinity graphs, whereas JSRL jointly optimizes the graph topology during training.

These limitations underscore the need for a unified and scalable framework that integrates local spectral–spatial refinement with global structural modeling. Motivated by this, we propose the Joint Spectral–Spatial Representation Learning (JSRL) framework, which hierarchically combines weak supervision, residual graph modeling, and global topology optimization to address the core challenges of hyperspectral image clustering.

3. Methodology

3.1. Overview

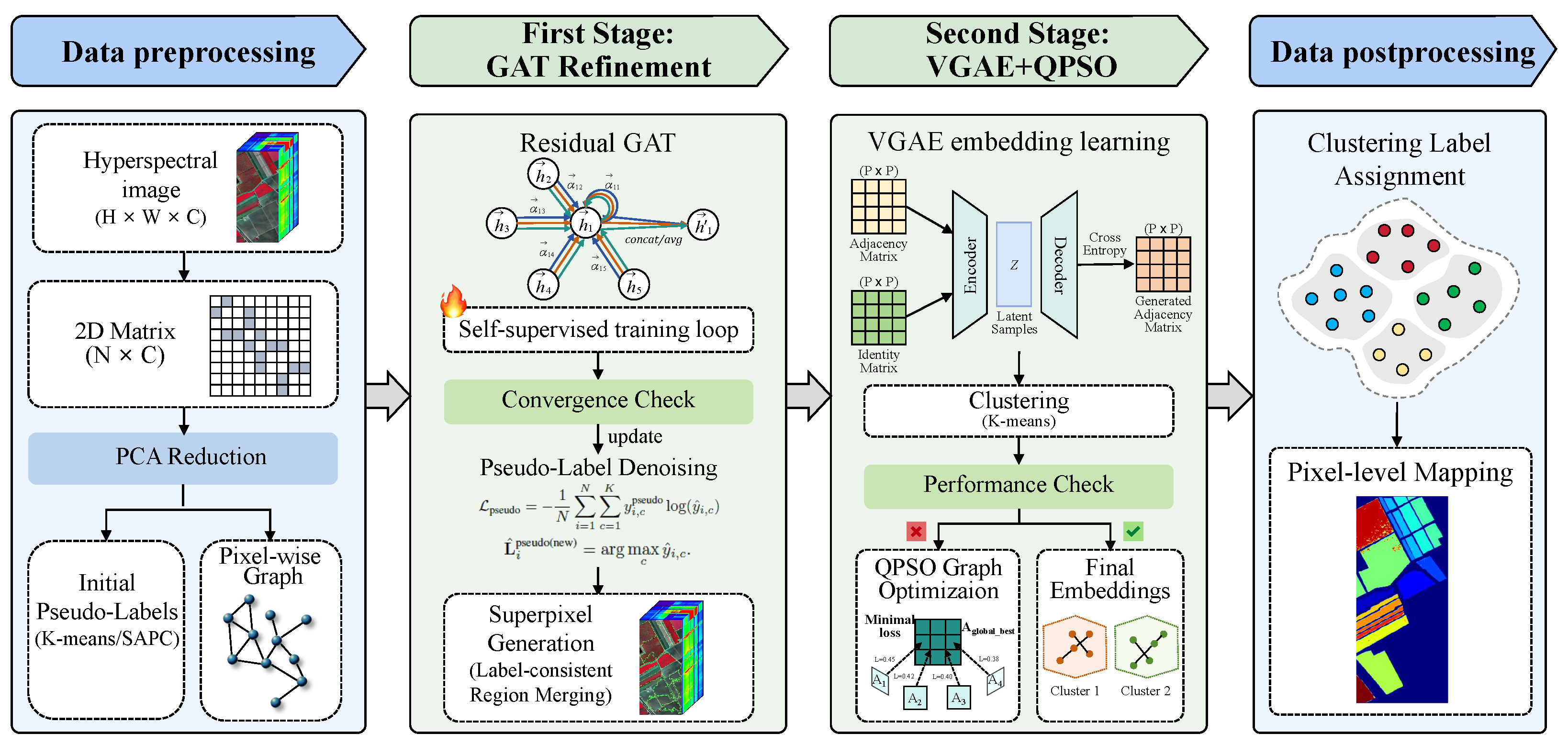

HSI clustering remains challenging because of high spectral dimensionality, redundancy among bands, and the scarcity of labeled data. These factors often result in noisy feature representations, ambiguous cluster boundaries, and unstable clustering outcomes. To address these challenges, we propose a Joint Spectral–Spatial Representation Learning (JSRL) framework that hierarchically integrates pseudo-supervised pixel-level refinement and structure-aware global modeling for robust and generalizable clustering. As illustrated in Figure 1, our approach proceeds in two stages. The first stage obtains context-aware spectral–spatial pixel embeddings guided by pseudo-labels derived from unsupervised clustering on PCA-reduced features. A residual Graph Attention Network (GAT) refines these embeddings by aggregating local neighborhood information, enhancing feature robustness and discrimination. The second stage aggregates the refined pixel features into superpixels to build a spatial adjacency graph. A Variational Graph Autoencoder (VGAE) encodes the graph topology into latent embeddings, and Quantum-behaved Particle Swarm Optimization (QPSO) refines the graph topology on which these embeddings are learned to improve cluster separability. Finally, fused representations combining the topology-aware embeddings and superpixel features are clustered to generate the final labels. This hierarchical design combines pseudo-supervision, local spectral–spatial context, and global topological structure, enabling stable clustering performance across diverse HSI datasets.

3.2. Spectral Feature Compression and Pseudo-Label Initialization

Direct clustering of raw hyperspectral pixels is hindered by the curse of dimensionality and the substantial redundancy among spectral bands, which not only inflates computational cost but also amplifies noise and overfitting risk. To obtain a compact and denoised representation, we first perform dimensionality reduction using Principal Component Analysis (PCA), a linear technique well-suited for HSI data due to its robustness, interpretability, and efficiency.

Formally, given a hyperspectral image $\mathcal{X} \in \mathbb{R}^{H \times W \times C}$, where $H$ and $W$ denote the spatial dimensions and $C$ is the number of spectral bands, we reshape it into a two-dimensional matrix

$$\mathbf{X} \in \mathbb{R}^{N \times C}, \qquad N = H \times W,$$

where each row $\mathbf{x}_i$ is the spectral vector of a single pixel. PCA then projects $\mathbf{X}$ onto its leading principal components:

$$\mathbf{Z} = \mathrm{PCA}(\mathbf{X}) \in \mathbb{R}^{N \times C'}, \qquad C' \ll C,$$

where $C'$ is chosen to retain a high proportion (e.g., 95–99%) of the total spectral variance. The resulting low-dimensional representation $\mathbf{Z}$ is compact and more robust to noise, which facilitates subsequent clustering.
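The PCA step can be sketched with a thin SVD in NumPy. This is a minimal sketch under our own assumptions: the helper name `pca_retain_variance` is hypothetical, bands are mean-centered but not rescaled, and a synthetic cube stands in for a real scene. It keeps the smallest number of components whose cumulative explained variance reaches the requested ratio (0.99 here, the upper end of the range above).

```python
import numpy as np


def pca_retain_variance(X, ratio=0.99):
    """Project pixel spectra X (N, C) onto the fewest principal components whose
    cumulative explained variance reaches `ratio`. Returns (Z, retained_ratio)."""
    Xc = X - X.mean(axis=0)                       # center each band
    # Thin SVD: rows of vt are the principal axes, s**2 is proportional to their variance.
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    var = s ** 2
    cum = np.cumsum(var) / var.sum()
    # Smallest number of components with cumulative ratio >= `ratio` (clamped for safety).
    n_comp = min(int(np.searchsorted(cum, ratio)) + 1, len(cum))
    Z = Xc @ vt[:n_comp].T                        # (N, n_comp) reduced representation
    return Z, cum[n_comp - 1]


# Synthetic cube whose spectra lie near a 3-dimensional subspace plus small noise.
rng = np.random.default_rng(0)
H, W, C = 20, 30, 50
basis = rng.normal(size=(3, C))
cube = (rng.normal(size=(H * W, 3)) @ basis
        + 0.01 * rng.normal(size=(H * W, C))).reshape(H, W, C)
X = cube.reshape(H * W, C)                        # one row per pixel, N = H * W
Z, kept = pca_retain_variance(X, 0.99)
```

Because the synthetic spectra span roughly three directions, three components already exceed the 99% threshold; on real scenes the retained count depends on the sensor and preprocessing.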

In the absence of ground-truth labels, we use unsupervised clustering to obtain initial pseudo-labels. Rather than relying solely on spectral similarity, we adopt Spatially Adaptive Probabilistic Clustering (SAPC), which incorporates spatial priors into a probabilistic clustering framework to improve label smoothness and local consistency:

$$\hat{\mathbf{y}} = \mathrm{SAPC}(\mathbf{Z}, K) \in \{1, \dots, K\}^{N},$$

where $K$ denotes the number of clusters and $\hat{y}_i$ is the pseudo-label of pixel $i$. SAPC encourages pixels within spatially adjacent regions with similar spectral signatures to share the same label, mitigating the fragmentation problem of purely spectral methods. The resulting pseudo-labels provide a coarse but informative initialization for the GAT-based refinement stage, allowing spatial context to be gradually infused into the learned representations.

3.3. Pixel-Level Representation Refinement via Residual GAT

To incorporate spatial context into the spectral features and improve pixel-wise clustering, we model the hyperspectral image as a graph built on the PCA-reduced features and iteratively refine the initial SAPC pseudo-labels with a residual Graph Attention Network (GAT) trained under self-supervision.

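Each pixel $i$ is represented as a graph node whose initial feature vector concatenates its PCA-reduced spectral vector $\mathbf{z}_i$ and its normalized spatial coordinate $\mathbf{p}_i \in [0,1]^2$:

$$\mathbf{h}_i^{(0)} = \left[\mathbf{z}_i \,\|\, \mathbf{p}_i\right].$$

An undirected graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ is constructed by connecting spatially adjacent and spectrally similar pixels. Specifically, an edge is formed between pixels $i$ and $j$ if they are within each other’s $k$-nearest neighbors in the image plane and satisfy

$$\left\lVert \mathbf{z}_i - \mathbf{z}_j \right\rVert_2 \le \tau,$$

where $\tau$ is a spectral similarity threshold. The resulting binary adjacency matrix $\mathbf{A} \in \{0,1\}^{N \times N}$ is symmetrically normalized to ensure numerical stability:

$$\tilde{\mathbf{A}} = \mathbf{D}^{-1/2} \mathbf{A} \mathbf{D}^{-1/2},$$

where $\mathbf{D}$ is the degree matrix of $\mathbf{A}$.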
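A dense NumPy sketch of this graph construction is shown below for small images. Two details are our assumptions rather than the paper's exact rule: the mutual spatial $k$-nearest-neighbor condition is approximated by a square window of a given radius, and spectral similarity is tested as Euclidean distance between PCA features below a threshold `tau`. The function name `build_pixel_graph` is hypothetical.

```python
import numpy as np


def build_pixel_graph(Z, H, W, radius=1, tau=0.5):
    """Binary adjacency over an H x W image and its symmetric normalization.

    Assumptions (ours): a square window of `radius` stands in for the spatial k-NN rule,
    and pixels are linked when the Euclidean distance of their PCA features is <= tau.
    Z has shape (H*W, C') in row-major pixel order. Dense matrices: small images only.
    """
    N = H * W
    rows, cols = np.divmod(np.arange(N), W)       # (row, col) of each flat pixel index
    A = np.zeros((N, N))
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            if dr == 0 and dc == 0:
                continue                          # no self-edges
            r2, c2 = rows + dr, cols + dc
            ok = (r2 >= 0) & (r2 < H) & (c2 >= 0) & (c2 < W)
            i = np.arange(N)[ok]
            j = r2[ok] * W + c2[ok]
            close = np.linalg.norm(Z[i] - Z[j], axis=1) <= tau
            A[i[close], j[close]] = 1.0
    A = np.maximum(A, A.T)                        # make sure the graph is undirected
    deg = A.sum(axis=1)
    d_inv_sqrt = np.zeros_like(deg)
    d_inv_sqrt[deg > 0] = deg[deg > 0] ** -0.5    # isolated pixels keep zero rows
    A_norm = d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]   # D^{-1/2} A D^{-1/2}
    return A, A_norm


# 2 x 3 image: the left two columns share a spectrum, the right column differs.
Z = np.array([[0.0], [0.0], [5.0], [0.0], [0.0], [5.0]])
A, A_norm = build_pixel_graph(Z, H=2, W=3, radius=1, tau=0.5)
```

For full scenes, a sparse matrix format would be needed, since a dense $N \times N$ array is infeasible at typical HSI sizes.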

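Based on the constructed graph, we apply a multi-layer residual GAT to refine node features by aggregating neighborhood context. At the $l$-th layer, the feature of node $i$ is updated as follows:

$$\mathbf{h}_i^{(l+1)} = \mathbf{h}_i^{(l)} + \phi\!\left(\frac{1}{M} \sum_{a=1}^{M} \sum_{j \in \mathcal{N}(i)} \alpha_{ij}^{(l,a)} \mathbf{W}^{(l,a)} \mathbf{h}_j^{(l)}\right),$$

where $M$ is the number of attention heads, $\mathcal{N}(i)$ is the set of neighbors of node $i$ in the constructed graph, $\alpha_{ij}^{(l,a)}$ denotes the learned attention coefficient from node $j$ to node $i$ in head $a$, $\mathbf{W}^{(l,a)}$ is a projection matrix, and $\phi(\cdot)$ is a non-linear activation function (e.g., ReLU). The residual connection stabilizes training and preserves the original information.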
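The layer update can be sketched in NumPy for a small dense graph. The attention scores follow the original GAT formulation (a LeakyReLU of a learned vector applied to concatenated projected features, normalized by a softmax over each neighborhood); adding self-loops, averaging the heads, the random untrained weights, and the helper names are our assumptions.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def residual_gat_layer(Hf, A, Ws, attn):
    """One residual multi-head GAT layer (dense NumPy sketch, hypothetical helper).

    Hf: (N, F) node features; A: (N, N) binary adjacency; Ws: list of M (F, F)
    projection matrices; attn: list of M (2F,) attention vectors.
    Returns the (N, F) updated features and the (M, N, N) attention coefficients.
    """
    N, F = Hf.shape
    mask = (A + np.eye(N)) > 0                    # assumed self-loops: a node attends to itself
    msgs, alphas = [], []
    for W, a in zip(Ws, attn):
        Wh = Hf @ W                               # projected features W h_j
        # e_ij = LeakyReLU(a^T [W h_i || W h_j]), split into target and source parts.
        e = leaky_relu((Wh @ a[:F])[:, None] + (Wh @ a[F:])[None, :])
        e = np.where(mask, e, -np.inf)            # only neighbors compete in the softmax
        e = e - e.max(axis=1, keepdims=True)      # numerical stability
        alpha = np.exp(e)
        alpha /= alpha.sum(axis=1, keepdims=True)  # each row sums to 1 over N(i)
        msgs.append(alpha @ Wh)                   # sum_j alpha_ij W h_j
        alphas.append(alpha)
    agg = np.mean(msgs, axis=0)                   # average over the M heads
    return Hf + np.maximum(agg, 0.0), np.stack(alphas)   # residual + ReLU


rng = np.random.default_rng(0)
N, F, M = 5, 4, 2
Hf = rng.normal(size=(N, F))
A = np.zeros((N, N))
A[0, 1] = A[1, 0] = 1.0
A[1, 2] = A[2, 1] = 1.0
Ws = [rng.normal(scale=0.5, size=(F, F)) for _ in range(M)]
attn = [rng.normal(size=2 * F) for _ in range(M)]
H1, alpha = residual_gat_layer(Hf, A, Ws, attn)
```

Averaging rather than concatenating heads keeps the output width equal to the input width, which is what allows the residual addition.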

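Without access to ground-truth labels, we train the GAT in a self-supervised fashion to predict the pseudo-labels $\hat{y}_i$ generated by SAPC. The training objective is the standard cross-entropy loss:

$$\mathcal{L}_{\mathrm{CE}} = -\frac{1}{N} \sum_{i=1}^{N} \sum_{c=1}^{K} y_{ic} \log p_{ic},$$

where $N$ is the number of pixels, $y_{ic} = 1$ if pixel $i$ belongs to cluster $c$ (i.e., $\hat{y}_i = c$) and $y_{ic} = 0$ otherwise, and $p_{ic}$ is the GAT-predicted probability that pixel $i$ belongs to cluster $c$.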

After each training iteration, each pixel is reassigned to the cluster with the highest predicted probability $p_{ic}$:

$$\hat{y}_i \leftarrow \arg\max_{c \in \{1, \dots, K\}} p_{ic}.$$

The resulting pseudo-labels incorporate both spectral discrimination and spatial consistency, forming a robust foundation for the subsequent superpixel-level clustering and topological graph modeling.
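The loss and the pseudo-label update reduce to a few lines once the GAT has produced class probabilities. The sketch below assumes the probabilities are already available (training the GAT itself would use an automatic-differentiation framework and is omitted); the helper names `cross_entropy` and `update_pseudo_labels` are hypothetical. With one-hot targets, the double sum in the loss collapses to the log-probability of each pixel's current pseudo-label.

```python
import numpy as np


def cross_entropy(probs, labels):
    """Mean cross-entropy between predicted probabilities (N, K) and pseudo-labels (N,)."""
    eps = 1e-12                                   # avoid log(0)
    # One-hot targets select exactly one log-probability per pixel.
    return -np.mean(np.log(probs[np.arange(len(labels)), labels] + eps))


def update_pseudo_labels(probs):
    """Reassign every pixel to its most probable cluster."""
    return probs.argmax(axis=1)


logits = np.array([[2.0, 0.0, 0.0],
                   [0.0, 0.0, 3.0],
                   [1.0, 0.5, 0.0]])
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)   # softmax
y = np.array([0, 1, 0])                           # current pseudo-labels
loss = cross_entropy(probs, y)
y_new = update_pseudo_labels(probs)               # the second pixel switches to cluster 2
```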

Intuitively, the residual Graph Attention Network can be viewed as a learned edge-aware filter: each pixel first listens to its immediate neighborhood through attention-weighted aggregation, then adds this contextual message back to its own feature via a skip connection, much like sharpening an image with information from surrounding pixels. This mechanism jointly promotes spectral discrimination and local spatial coherence while keeping the original signal intact.

3.4. Superpixel-Level Clustering and Graph Optimization

To preserve meaningful spectral–spatial information, we adopt a superpixel-based strategy that aggregates pixel-level features into spatially coherent regions, effectively reducing data complexity.

Based on the refined pixel-level pseudo-labels $\hat{\mathbf{y}}$, we first generate superpixels by merging spatially connected pixels that share the same label. For each superpixel $S_m$, a representative feature vector $\mathbf{f}_m$ is extracted by averaging the original spectral features and normalized spatial coordinates of its constituent pixels:

$$\mathbf{f}_m = \frac{1}{|S_m|} \sum_{i \in S_m} \left[\mathbf{x}_i \,\|\, \mathbf{p}_i\right],$$

where $\mathbf{x}_i$ is the spectral vector and $\mathbf{p}_i$ is the spatial coordinate of pixel $i$. To ensure numerical stability, we further normalize or project the aggregated features using PCA. Superpixels smaller than a threshold $s_{\min}$ are merged into their nearest larger neighbors to ensure compact and balanced graph nodes.
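Superpixel generation from a pseudo-label map can be sketched with `scipy.ndimage`. This is a minimal sketch under stated assumptions: regions are 4-connected, a small region is merged into the neighboring region with which it shares the most boundary pixels (our reading of “nearest larger neighbors”), and the PCA projection of the aggregated features is omitted. All helper names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def label_superpixels(label_map):
    """Split an (H, W) pseudo-label map into 4-connected regions of equal label."""
    sp = np.zeros(label_map.shape, dtype=int)
    next_id = 0
    for c in np.unique(label_map):
        comps, n = ndimage.label(label_map == c)  # default structure: 4-connectivity
        sp[comps > 0] = comps[comps > 0] + next_id - 1
        next_id += n
    return sp


def merge_small(sp, min_size):
    """Absorb regions smaller than min_size into the neighbor sharing most boundary (assumed rule)."""
    sp = sp.copy()
    changed = True
    while changed:
        changed = False
        ids, counts = np.unique(sp, return_counts=True)
        for rid, cnt in zip(ids, counts):
            if cnt >= min_size:
                continue
            region = sp == rid
            ring = ndimage.binary_dilation(region) & ~region   # outside pixels touching the region
            if not ring.any():
                continue                          # the region is the whole image
            neigh, votes = np.unique(sp[ring], return_counts=True)
            sp[region] = neigh[votes.argmax()]
            changed = True
            break                                 # sizes changed: recount before continuing
    return sp


def superpixel_features(sp, X, coords):
    """f_m: mean of [spectrum || normalized coordinate] over each superpixel."""
    feats = np.concatenate([X, coords], axis=2)
    ids = np.unique(sp)
    return ids, np.stack([feats[sp == r].mean(axis=0) for r in ids])


lab = np.array([[0, 0, 1, 1],
                [0, 0, 1, 1],
                [0, 2, 1, 1]])                    # the lone '2' forms a one-pixel region
sp = merge_small(label_superpixels(lab), min_size=2)
H, W = lab.shape
X = np.random.default_rng(0).random((H, W, 3))    # toy 3-band spectra
rr, cc = np.meshgrid(np.linspace(0, 1, H), np.linspace(0, 1, W), indexing="ij")
ids, F = superpixel_features(sp, X, np.stack([rr, cc], axis=2))
```

After merging, region ids may no longer be contiguous, so relabeling them to `0..S-1` is a sensible step before building the region graph.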

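We then construct a superpixel-level graph $\mathcal{G}_s = (\mathcal{V}_s, \mathcal{E}_s)$, where nodes represent superpixels and edges link spatially adjacent superpixels with similar spectral features. An edge is formed between superpixels $S_m$ and $S_n$ if they share a boundary and satisfy $\lVert \mathbf{f}_m - \mathbf{f}_n \rVert_2 \le \tau_s$, where $\tau_s$ is a spectral similarity threshold. The resulting binary adjacency matrix $\mathbf{A}_s \in \{0,1\}^{N_s \times N_s}$, with $N_s$ the number of superpixels, encodes spatial and spectral connectivity.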
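The superpixel adjacency rule can be sketched as follows, assuming a map of contiguous superpixel ids and Euclidean distance between mean features as the similarity test (both our assumptions). Boundaries are detected from horizontally and vertically neighboring pixels whose ids differ; `superpixel_adjacency` is a hypothetical name.

```python
import numpy as np


def superpixel_adjacency(sp, F, tau):
    """Binary adjacency between superpixels that share a 4-connected boundary and whose
    mean features lie within Euclidean distance tau (assumed similarity test).
    sp: (H, W) superpixel ids 0..S-1; F: (S, D) superpixel features."""
    S = F.shape[0]
    A = np.zeros((S, S), dtype=int)
    # Neighboring pixel pairs (horizontal, then vertical) with different ids mark a boundary.
    for a, b in ((sp[:, :-1], sp[:, 1:]), (sp[:-1, :], sp[1:, :])):
        diff = a != b
        A[a[diff], b[diff]] = 1
        A[b[diff], a[diff]] = 1
    dist = np.linalg.norm(F[:, None, :] - F[None, :, :], axis=2)
    return A * (dist <= tau)                      # keep only spectrally similar neighbors


sp = np.array([[0, 0, 1],
               [2, 2, 1]])
F = np.array([[0.0], [0.1], [3.0]])               # superpixel 2 is spectrally distinct
A_s = superpixel_adjacency(sp, F, tau=0.5)
```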

To capture high-level graph structure, we adopt a Variational Graph Autoencoder (VGAE) [35] to encode each node into a latent representation $\mathbf{e}_m \in \mathbb{R}^{d}$, from which the adjacency is reconstructed via

$$\hat{A}_{mn} = \sigma\!\left(\mathbf{e}_m^{\top} \mathbf{e}_n\right),$$

where $\sigma(\cdot)$ is the sigmoid activation. However, the manually constructed adjacency matrix $\mathbf{A}_s$ may still suffer from threshold sensitivity and noisy connectivity. To alleviate this, we introduce a topology refinement step using Quantum-behaved Particle Swarm Optimization (QPSO), which seeks an optimized adjacency matrix that enhances the reconstruction ability of the VGAE while maintaining sparsity:

$$\mathbf{A}_s^{*} = \arg\min_{\mathbf{A}} \; \mathcal{L}_{\mathrm{rec}}(\mathbf{A}) + \lambda \lVert \mathbf{A} \rVert_1,$$

where $\lambda$ controls the trade-off between reconstruction accuracy and connectivity sparsity.
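For readers unfamiliar with VGAEs, the forward pass can be sketched in NumPy following the standard formulation of Kipf and Welling: a shared graph-convolution layer, separate heads for the mean and log standard deviation, reparameterized sampling, and the inner-product decoder above. This is an untrained sketch with random weights; the self-loop normalization and all helper names are our assumptions, and the KL term and optimizer are omitted.

```python
import numpy as np


def normalize_adj(A):
    """D^{-1/2} (A + I) D^{-1/2}: GCN-style normalization with self-loops (assumed)."""
    A_hat = A + np.eye(len(A))
    d_inv_sqrt = A_hat.sum(axis=1) ** -0.5        # every degree >= 1 thanks to self-loops
    return d_inv_sqrt[:, None] * A_hat * d_inv_sqrt[None, :]


def vgae_forward(F, A, W0, W_mu, W_logstd, rng):
    """Untrained VGAE forward pass on a superpixel graph (illustrative sketch).

    F: (S, D) node features; A: (S, S) binary adjacency.
    Returns sampled embeddings E (S, d) and reconstructed edge probabilities (S, S).
    """
    A_n = normalize_adj(A)
    hidden = np.maximum(A_n @ F @ W0, 0.0)        # shared GCN layer with ReLU
    mu = A_n @ hidden @ W_mu                      # GCN head for the mean
    log_std = A_n @ hidden @ W_logstd             # GCN head for the log standard deviation
    E = mu + np.exp(log_std) * rng.standard_normal(mu.shape)   # reparameterization trick
    A_rec = 1.0 / (1.0 + np.exp(-(E @ E.T)))      # inner-product decoder: sigmoid(e_m^T e_n)
    return E, A_rec


rng = np.random.default_rng(0)
S, D, hid, d = 4, 5, 8, 2
F = rng.normal(size=(S, D))
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 0],
              [0, 0, 0, 0]], dtype=float)
E, A_rec = vgae_forward(F, A, 0.5 * rng.normal(size=(D, hid)),
                        0.5 * rng.normal(size=(hid, d)),
                        0.1 * rng.normal(size=(hid, d)), rng)
```

In a trained model, the weights would be fitted by minimizing the reconstruction loss plus a KL regularizer, typically with an automatic-differentiation library.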

This joint modeling and refinement pipeline results in a topology-aware embedding space $\mathbf{E}^{*}$, which encodes compact, structure-preserving representations suitable for final clustering. Compared with traditional clustering on raw spectral features, our approach yields more stable clusters by exploiting multi-scale contextual and topological cues.

Think of the VGAE encoder as compressing the superpixel graph into an “interactive map”: superpixels that look alike (similar spectra) and live next door (share a boundary) are placed close together, whereas dissimilar or distant regions are mapped farther apart. The decoder then attempts to redraw the road network (edges) from these coordinates; if two superpixels end up far apart on the map, it assigns a low connection probability. Minimizing the reconstruction loss therefore yields a latent space that faithfully preserves spectral–spatial structure while filtering out noise.

3.5. Quantum-Driven Optimization of Superpixel Graph Structures

Although the superpixel graph provides a compact and spatially coherent abstraction of the hyperspectral scene, the initial adjacency matrix is typically constructed via hand-crafted criteria, relying on fixed thresholds of spectral similarity and spatial adjacency. This heuristic construction may lead to sub-optimal graph topology that fails to accurately reflect the intrinsic structural relationships between regions.

To address this issue, we propose a quantum-inspired optimization strategy based on Quantum-behaved Particle Swarm Optimization (QPSO). QPSO enables data-driven refinement of the graph structure by iteratively updating candidate adjacency matrices in a high-dimensional search space. The goal is to discover an optimized graph topology that facilitates accurate reconstruction and meaningful representation learning via a Variational Graph Autoencoder (VGAE).

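Formally, each particle in the QPSO swarm represents a candidate adjacency matrix $\mathbf{A}^{(p)}$. For each particle $p$, a VGAE is trained to encode node features into latent embeddings $\mathbf{E}^{(p)}$ with rows $\mathbf{e}_m^{(p)}$, and the reconstruction loss is defined as follows:

$$\mathcal{L}_{\mathrm{rec}}^{(p)} = -\sum_{m,n} \Big[ A_{mn}^{(p)} \log \sigma\big(\mathbf{e}_m^{(p)\top} \mathbf{e}_n^{(p)}\big) + \big(1 - A_{mn}^{(p)}\big) \log\big(1 - \sigma\big(\mathbf{e}_m^{(p)\top} \mathbf{e}_n^{(p)}\big)\big) \Big],$$

where $\sigma(\cdot)$ denotes the sigmoid activation function. To encourage sparsity and reduce over-connectivity, we impose an $\ell_1$-norm regularization, resulting in the total optimization objective:

$$\mathcal{L}^{(p)} = \mathcal{L}_{\mathrm{rec}}^{(p)} + \lambda \left\lVert \mathbf{A}^{(p)} \right\rVert_1,$$

where $\lambda$ controls the balance between structure fidelity and sparsity.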
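The per-particle objective can be evaluated as below, given a candidate adjacency matrix and the embeddings a VGAE produced for it (the VGAE training step itself is omitted). The sum runs over all node pairs, and the log-sigmoid terms are computed with `np.logaddexp` for numerical stability; the helper name `particle_loss` is hypothetical.

```python
import numpy as np


def particle_loss(A_p, E_p, lam):
    """QPSO fitness of one particle: reconstruction BCE + lam * ||A_p||_1.

    A_p: (S, S) binary candidate adjacency; E_p: (S, d) VGAE embeddings trained on A_p.
    """
    logits = E_p @ E_p.T
    log_p = -np.logaddexp(0.0, -logits)           # log sigmoid(x)
    log_not_p = -np.logaddexp(0.0, logits)        # log(1 - sigmoid(x)) = log sigmoid(-x)
    rec = -np.sum(A_p * log_p + (1.0 - A_p) * log_not_p)
    return rec + lam * np.abs(A_p).sum()


# With zero embeddings every pair is predicted with probability 0.5,
# so the two candidates differ only through the sparsity penalty.
S = 3
dense = particle_loss(np.ones((S, S)), np.zeros((S, 2)), lam=0.1)
sparse = particle_loss(np.zeros((S, S)), np.zeros((S, 2)), lam=0.1)
```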

The QPSO algorithm iteratively updates each $\mathbf{A}^{(p)}$ by sampling from a quantum potential field centered around the global best adjacency matrix $\mathbf{A}^{\mathrm{best}}$. Compared with conventional PSO, QPSO allows more diverse exploration in non-convex search spaces and alleviates the risk of premature convergence. The optimization terminates when the global loss stagnates or a maximum number of iterations is reached.
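The update loop can be sketched with standard QPSO as introduced by Sun et al. Standard QPSO draws each new position around a local attractor that mixes the particle's personal best with the global best, using the mean of all personal bests to set the sampling scale; we implement that standard form rather than guess the paper's exact variant. Particles live in $[0,1]^D$, so for graph refinement one could encode the upper-triangular entries of a relaxed adjacency matrix and threshold them at 0.5 before evaluating the loss (our assumption). The demo minimizes a simple quadratic instead of the VGAE loss so that it runs quickly; the function name `qpso` and its parameters are hypothetical.

```python
import numpy as np


def qpso(fitness, dim, n_particles=30, n_iter=200, beta=(1.0, 0.5),
         tol=1e-12, patience=30, seed=0):
    """Minimize `fitness` over [0, 1]^dim with standard QPSO (illustrative sketch).

    Stops early when the global best improves by less than `tol` for `patience` iterations.
    """
    rng = np.random.default_rng(seed)
    x = rng.random((n_particles, dim))            # initial positions in [0, 1)
    pbest = x.copy()
    pbest_f = np.array([fitness(p) for p in x])
    g = int(pbest_f.argmin())
    gbest, gbest_f = pbest[g].copy(), pbest_f[g]
    stall = 0
    for t in range(n_iter):
        # Contraction-expansion coefficient, decreased linearly from beta[0] to beta[1].
        b = beta[0] - (beta[0] - beta[1]) * t / max(n_iter - 1, 1)
        mbest = pbest.mean(axis=0)                # mean of personal bests
        phi = rng.random((n_particles, dim))
        attractor = phi * pbest + (1.0 - phi) * gbest
        u = 1.0 - rng.random((n_particles, dim))  # u in (0, 1], so log(1/u) is finite
        sign = np.where(rng.random((n_particles, dim)) < 0.5, -1.0, 1.0)
        x = np.clip(attractor + sign * b * np.abs(mbest - x) * np.log(1.0 / u), 0.0, 1.0)
        f = np.array([fitness(p) for p in x])
        improved = f < pbest_f
        pbest[improved] = x[improved]
        pbest_f[improved] = f[improved]
        g = int(pbest_f.argmin())
        stall = stall + 1 if gbest_f - pbest_f[g] < tol else 0
        if pbest_f[g] < gbest_f:
            gbest, gbest_f = pbest[g].copy(), pbest_f[g]
        if stall >= patience:                     # global loss has stagnated
            break
    return gbest, gbest_f


target = np.array([0.3, 0.7, 0.5, 0.1])
best, best_f = qpso(lambda p: float(np.sum((p - target) ** 2)), dim=4)
```

Each fitness call in the graph setting would involve a short VGAE training run, so evaluating the particles in parallel is the natural place to recover runtime.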

Upon convergence, the optimized adjacency matrix $\mathbf{A}_s^{*}$ is used to retrain the VGAE, yielding refined latent embeddings $\mathbf{E}^{*}$. These embeddings are then concatenated with the spectral–spatial descriptors $\mathbf{f}_m$ of each superpixel to form the final representation:

$$\mathbf{r}_m = \left[\mathbf{e}_m^{*} \,\|\, \mathbf{f}_m\right].$$

K-means is then applied to the fused features $\{\mathbf{r}_m\}$ to generate the final cluster assignments.
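The fusion and final clustering step can be sketched with scikit-learn's `KMeans`. The helper name `final_clustering` and the toy embeddings are hypothetical; features are concatenated without rescaling, as described above, although standardizing the two parts may matter in practice when their scales differ.

```python
import numpy as np
from sklearn.cluster import KMeans


def final_clustering(E_star, F, n_clusters, seed=0):
    """Fuse r_m = [e*_m || f_m] for every superpixel and cluster the fused vectors."""
    R = np.concatenate([E_star, F], axis=1)
    km = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed)
    return km.fit_predict(R)


rng = np.random.default_rng(0)
E_star = np.vstack([rng.normal(-2, 0.1, (10, 3)), rng.normal(2, 0.1, (10, 3))])
F = np.vstack([rng.normal(0, 0.1, (10, 4)), rng.normal(1, 0.1, (10, 4))])
labels = final_clustering(E_star, F, n_clusters=2)
```

Pixel-level labels can then be recovered by indexing the superpixel labels with an integer superpixel id map, e.g., `labels[sp]` for an `(H, W)` array `sp`.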

The success of this optimization process fundamentally relies on the quality of the initial superpixel generation. The preceding residual GAT module ensures that each superpixel aggregates pixels with coherent spectral-spatial semantics, providing a strong prior for region-level modeling. This hierarchical pipeline—combining pixel-wise refinement, superpixel aggregation, and quantum-driven graph optimization—enables progressive enhancement of representation quality and clustering robustness.

Quantum-behaved Particle Swarm Optimization (QPSO) can be pictured as a flock of candidate adjacency matrices “flying” through the search space. Each particle proposes a slightly rewired graph; after a short VGAE training session, we measure how well that graph can be reconstructed and how sparse it remains. The best-performing particle pulls the rest of the flock toward promising regions, while random quantum jumps maintain exploration. Iterations continue until the change in overall loss becomes negligible, producing an adjacency matrix that reflects the scene topology while avoiding spurious connections.

4. Experiments

To comprehensively validate the effectiveness and generality of our proposed JSRL framework, we conduct extensive experiments on three widely used hyperspectral image datasets: Salinas, Pavia University, and Pavia Center. These datasets encompass diverse spectral and spatial characteristics, providing a robust testbed for evaluating clustering methods.

4.1. Datasets and Implementation Details

We evaluate JSRL on three widely-used hyperspectral datasets with diverse spectral and spatial characteristics. All datasets are preprocessed by removing noisy bands (e.g., water absorption), followed by normalization and PCA to retain 99% spectral energy. Salinas (AVIRIS sensor) contains pixels, 204 bands, and 16 agricultural classes. Pavia University and Pavia Center (ROSIS sensor) include and pixels with 103 and 102 bands retained, respectively, each containing 9 urban classes.

In JSRL, the minimum superpixel size is set to 20, and is used for graph construction. Both GAT and VGAE are trained for 200 epochs with a learning rate of 0.001. Experiments are run on a CPU with 16 GB RAM, and all results are averaged over 10 runs for reliability.

4.2. Evaluation Metrics and Compared Methods

To comprehensively evaluate clustering performance, we adopt three widely used metrics: Overall Accuracy (ACC), Normalized Mutual Information (NMI), and Adjusted Rand Index (ARI). All three compare the predicted partition with the ground truth from complementary angles: ACC measures label agreement after optimally matching clusters to classes, NMI measures the information shared by the predicted and true partitions, and ARI measures pairwise agreement corrected for chance. Together, they provide a robust and fair comparison of clustering methods under different criteria.
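ACC requires matching arbitrary cluster ids to class ids before counting agreements, which is usually done with the Hungarian algorithm; NMI and ARI are invariant to such relabeling. A minimal sketch using SciPy and scikit-learn follows; `clustering_acc` is a hypothetical helper, and in HSI benchmarks unlabeled background pixels would typically be excluded before calling it (an assumption about the evaluation protocol).

```python
import numpy as np
from scipy.optimize import linear_sum_assignment
from sklearn.metrics import adjusted_rand_score, normalized_mutual_info_score


def clustering_acc(y_true, y_pred):
    """ACC: fraction of pixels correctly labeled after the best one-to-one matching
    between predicted clusters and ground-truth classes (Hungarian algorithm)."""
    classes, clusters = np.unique(y_true), np.unique(y_pred)
    # Contingency table: rows = predicted clusters, columns = true classes.
    cont = np.array([[np.sum((y_pred == k) & (y_true == c)) for c in classes]
                     for k in clusters])
    rows, cols = linear_sum_assignment(-cont)     # negate to maximize matched counts
    return cont[rows, cols].sum() / len(y_true)


y_true = np.array([0, 0, 0, 1, 1, 2, 2, 2])
y_pred = np.array([5, 5, 5, 7, 7, 9, 9, 7])      # cluster ids are arbitrary
acc = clustering_acc(y_true, y_pred)              # 7 of 8 pixels match after relabeling
nmi = normalized_mutual_info_score(y_true, y_pred)
ari = adjusted_rand_score(y_true, y_pred)
```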

We benchmark the proposed JSRL framework against a diverse set of conventional and state-of-the-art clustering approaches. Classical methods include K-means [17] and FCM [36], which serve as strong baselines for purely spectral clustering. In addition, we compare with recent graph- and superpixel-based algorithms, namely FSCAG [37], SGCNR [38], NCSC [39], SGLSC [40], and S³AGC [41], which exploit spatial and spectral contextual information for improved clustering. Furthermore, we use SAPC [42] as the base pseudo-label generator, leveraging its ability to produce initial superpixel-level cluster assignments. JSRL then refines these pseudo-labels through hierarchical spectral–spatial representation learning, yielding more accurate and semantically consistent clustering results.

4.3. Experimental Results

Table 1 reports the quantitative clustering results of JSRL and several state-of-the-art baselines on three hyperspectral datasets: Salinas, Pavia University, and Pavia Center. Across all datasets and metrics (ACC, NMI, and ARI), JSRL consistently outperforms the competing methods, demonstrating strong robustness and generalization. On Salinas, JSRL achieves the highest ACC of 79.39%, surpassing baselines such as NCSC (74.97%) and SGLSC (76.64%). Although Pavia University is more challenging because of its lower inter-class variance, JSRL still leads with an ACC of 66.53% and an NMI of 58.51%. On Pavia Center, which has clearer spatial boundaries, JSRL attains an ACC of 93.75% and an ARI of 95.02%, a significant improvement over prior results. We attribute these gains to JSRL’s hierarchical design. Unlike methods that treat pixels independently or rely on fixed superpixel boundaries, JSRL refines pseudo-labels from lightweight base clusterers (e.g., K-means, SAPC [42]) through a two-stage process: the pixel-wise GAT enhances spectral–spatial features, while the superpixel-level VGAE with QPSO jointly optimizes the global cluster structure, boosting semantic coherence and separability.

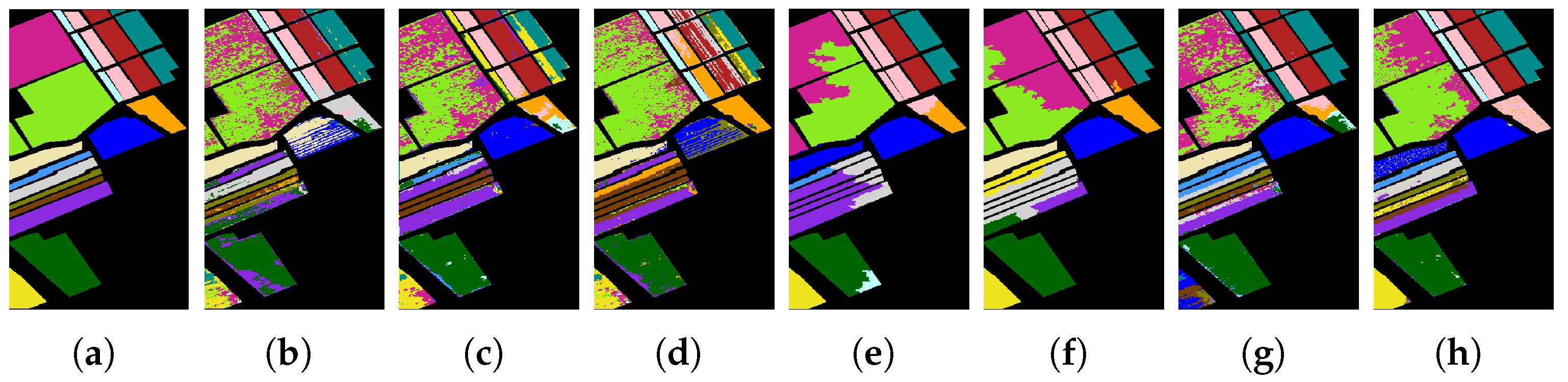

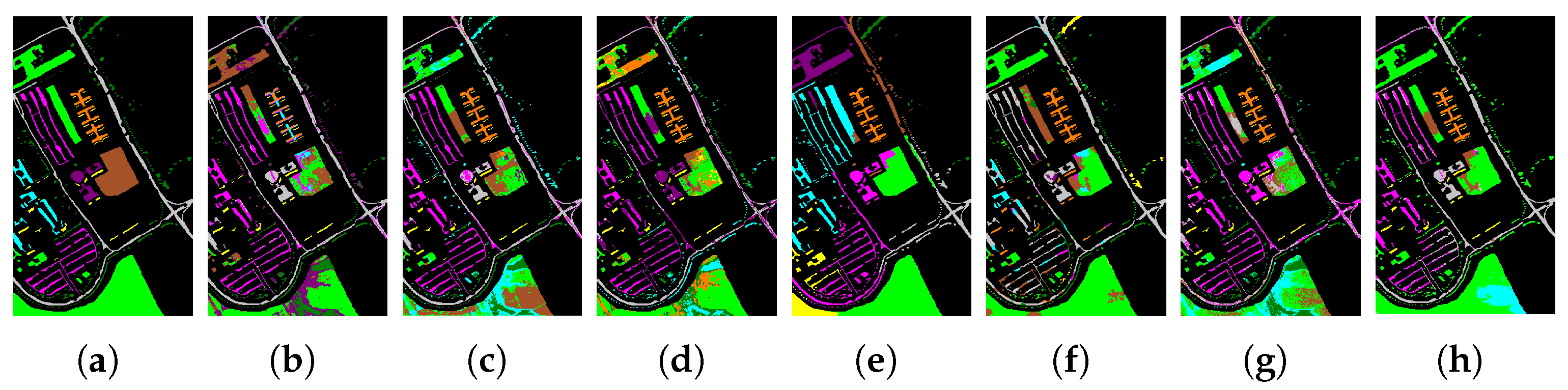

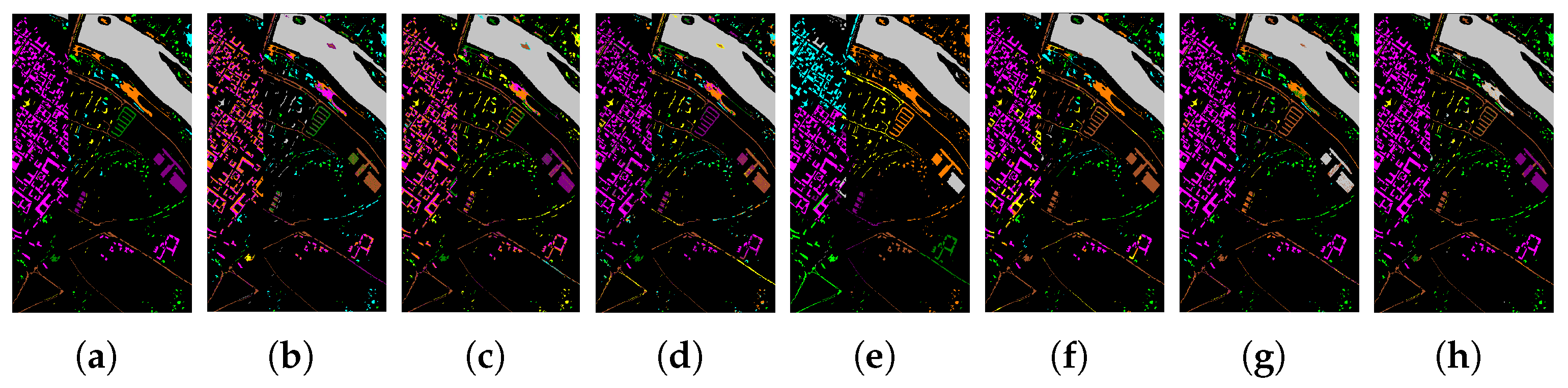

Figure 2, Figure 3, and Figure 4 illustrate the visual clustering results on the Salinas, Pavia University, and Pavia Center datasets, respectively. Across all three datasets, JSRL consistently produces more coherent segmentation maps, with clearer region boundaries and fewer fragmented regions than the competing approaches. In particular, on Pavia Center, JSRL yields significantly cleaner structures, in line with its superior quantitative performance. These visual results further demonstrate JSRL’s ability to capture the intrinsic spectral–spatial structure of hyperspectral data. Taken together, the quantitative and qualitative results validate the robustness and effectiveness of the proposed JSRL framework for hyperspectral image clustering.

4.4. Ablation Results

To further understand the contribution of each JSRL component, we conduct an ablation study by removing GAT, VGAE, and QPSO modules, respectively.

Table 2 summarizes the ablation study of our JSRL framework across three hyperspectral image datasets. The results show that removing the GAT module consistently leads to the most significant performance degradation across all datasets (e.g., ACC drops from 79.39% to 75.03% on Salinas), underscoring its vital role in refining pixel-level representations via attention-based spectral–spatial aggregation. Eliminating the VGAE component also causes notable drops (e.g., ARI from 45.10% to 42.56% on Pavia University), confirming the importance of structure-aware encoding in modeling global topology. While the performance decline from removing QPSO is relatively modest, it is consistent across metrics and datasets, highlighting the benefit of graph structure refinement in boosting clustering separability. Overall, the ablation results validate that GAT, VGAE, and QPSO each play a distinct yet complementary role, and their integration is crucial for the effectiveness and generalizability of our spectral–spatial clustering framework.

4.5. Parameter Sensitivity and Runtime Results

For the hyperparameter study, we examine three key hyperparameters: the QPSO sparsity weight $\lambda$, the number of residual GAT layers $L$, and the latent dimension $d$ of the VGAE. Each factor is swept while the others are fixed to their default values.

Table 3 shows that JSRL is robust: accuracy varies by less than 3 percentage points across all tested settings and stays close to the peak over the tested ranges of all three hyperparameters. These observations justify the default configuration adopted throughout the paper and indicate a low risk of over-tuning.

We benchmark the wall-clock time required to cluster three benchmark scenes on a single NVIDIA RTX 3080 (CUDA 12, 16 GB RAM).

Table 4 reports averages over three runs. The full JSRL pipeline finishes the Salinas scene in 38 s, Pavia University in 66 s, and Pavia Center in 71 s. Removing Quantum-behaved Particle Swarm Optimization (–QPSO) eliminates the graph-refinement loop and reduces runtime by roughly one-third, while omitting the Variational Graph Autoencoder (–VGAE) shortens training time further. In contrast, discarding the residual Graph Attention Network (–GAT) yields only modest savings, because the model still executes two forward passes per epoch for feature normalization. These figures show that (i) VGAE training accounts for 40% of the total time; (ii) QPSO adds a controllable overhead (25%) that can be parallelized; and (iii) the complete JSRL remains practical, finishing in under 1 min for city-scale HSI scenes with a memory footprint that never exceeds 1.8 GB.

5. Conclusions

We present a hierarchical Joint Spectral–Spatial Representation Learning (JSRL) framework for hyperspectral image clustering that effectively addresses challenges of high spectral dimensionality, redundancy, and limited labeled data. By integrating pseudo-supervised pixel-level feature refinement via a residual Graph Attention Network with global topology-aware modeling through a Variational Graph Autoencoder, our method robustly captures both local spectral–spatial context and global structural information. The introduction of Quantum-behaved Particle Swarm Optimization further enhances graph topology optimization, improving cluster separability. Extensive experiments demonstrate that JSRL achieves stable and superior clustering performance across multiple hyperspectral datasets, highlighting its potential as a scalable and generalizable solution for unsupervised HSI analysis.

Despite the encouraging results, the present JSRL framework still exhibits several limitations that merit further study:

Computational footprint. Although QPSO accelerates graph optimization relative to exhaustive search, the two-stage training (GAT + VGAE) is still ≈1.8× slower than lightweight spectral–spatial CNN baselines on a single CPU. Future work will explore surrogate-model acceleration and mixed-precision training.

Sensitivity to graph-construction hyperparameters. Performance declines when the superpixel size or the k-nearest-neighbor radius is badly tuned for scenes with extreme object-scale variability. We are investigating Bayesian optimization and meta-learning schemes to auto-tune these parameters online.

Over-segmentation in highly textured regions. The residual GAT occasionally produces fragmented clusters where local contrast is very high, leading to superfluous boundaries. Adaptive attention radii or texture-aware regularizers may mitigate this issue.

These observations not only delineate the current boundaries of JSRL but also chart clear directions for subsequent research and optimization.

Author Contributions

Conceptualization, X.L. and X.W.; methodology, X.L.; software, X.L.; validation, X.L., T.W. and X.W.; formal analysis, X.L.; investigation, X.L.; resources, X.W.; data curation, X.L.; writing—original draft preparation, X.L.; writing—review and editing, X.L. and T.W.; visualization, X.L.; supervision, X.W.; project administration, X.W.; funding acquisition, X.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Hubei Key Laboratory of Intelligent Geo-Information Processing, grant number KLIGIP-2019B08.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Demir, M. Artificial Afterimage Algorithm: A New Bio-Inspired Metaheuristic Algorithm and Its Clustering Application. Appl. Sci. 2025, 15, 1359.

2. Du, B.; Shan, L.; Shao, X.; Zhang, D.; Wang, X.; Wu, J. Transform Dual-Branch Attention Net: Efficient Semantic Segmentation of Ultra-High-Resolution Remote Sensing Images. Remote Sens. 2025, 17, 540.

3. Li, R.; Hu, Y.; Li, L.; Guan, R.; Yang, R.; Zhan, J.; Cai, W.; Wang, Y.; Xu, H.; Li, L. Smwe-gfpnnet: A High-Precision and Robust Method for Forest Fire Smoke Detection. Knowl.-Based Syst. 2024, 289, 111528.

4. Zhang, X.; Hu, T.; He, J.; Yan, Q. Efficient Content Reconstruction for High Dynamic Range Imaging. In Proceedings of the ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 7660–7664.

5. Hou, X.; Wang, J.; Jiang, C.; Meng, Z.; Chen, J.; Ren, Y. Efficient Federated Learning for Metaverse via Dynamic User Selection, Gradient Quantization and Resource Allocation. IEEE J. Sel. Areas Commun. 2024, 42, 850–866.

6. Li, J.; Bioucas-Dias, J.M.; Plaza, A.; Liu, L.; Li, P.; Chen, J. Deep Hyperspectral Image Classification with Stacked Autoencoders. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7180–7192.

7. Luo, Z.; Pan, J.; Hu, Y.; Deng, L.; Li, Y.; Qi, C.; Wang, X. RS-Dseg: Semantic Segmentation of High-Resolution Remote Sensing Images Based on a Diffusion Model Component with Unsupervised Pretraining. Sci. Rep. 2024, 14, 18609.

8. Scopacasa, B.; Candeloro, P. Asymmetric Distance in K-Means Clustering Enhances Quality of Cells Raman Imaging. Appl. Sci. 2025, 15, 4461.

9. Wang, J.; Chen, T.; Zheng, L.; Tie, J.; Zhang, Y.; Chen, P.; Luo, Z.; Song, Q. A Multi-Scale Remote Sensing Semantic Segmentation Model with Boundary Enhancement Based on UNetFormer. Sci. Rep. 2025, 15, 14737.

10. Niu, W.; Wang, H.; Zhuang, C. Adaptive Multiview Graph Convolutional Network for 3-D Point Cloud Classification and Segmentation. IEEE Trans. Cogn. Dev. Syst. 2024, 16, 2043–2054.

11. Wang, Z.; Yi, J.; Chen, A.; Chen, L.; Lin, H.; Xu, K. Accurate Semantic Segmentation of Very High-Resolution Remote Sensing Images Considering Feature State Sequences: From Benchmark Datasets to Urban Applications. ISPRS J. Photogramm. Remote Sens. 2025, 220, 824–840.

12. Cai, S.; He, W.; Huang, Z.; Li, Q.; Li, H.; Tao, D. A Novel Superpixel-Based Neighborhood Contrastive Learning for Hyperspectral Image Clustering. IEEE Trans. Geosci. Remote Sens. 2020, 58, 5617–5630.

13. Li, W.; Prasad, S.; Fowler, J.E.; Bruce, L.M. Hyperspectral Image Classification Using Gaussian Mixture Models and Markov Random Fields. IEEE Geosci. Remote Sens. Lett. 2015, 11, 153–157.

14. Xu, F.; Zhang, N.; Chen, Z.; Peng, P.; Xu, T. Panchromatic and Hyperspectral Image Fusion Using Ratio Residual Attention Networks. Appl. Sci. 2025, 15, 5986.

15. Chen, W.; Cheng, J.; Yang, S.; Sun, L. Explainable Two-Layer Mode Machine Learning Method for Hyperspectral Image Classification. Appl. Sci. 2025, 15, 5859.

16. Zeng, M.; Zhu, X.; Wan, L.; Xu, J.; Shen, L. Data-Driven Prediction of Grape Leaf Chlorophyll Content Using Hyperspectral Imaging and Convolutional Neural Networks. Appl. Sci. 2025, 15, 5696.

17. Hartigan, J.A.; Wong, M.A. Algorithm AS 136: A K-Means Clustering Algorithm. J. R. Stat. Soc. Ser. C Appl. Stat. 1979, 28, 100–108.

18. Dessureault, J.S.; Massicotte, D. DPDRC, a Novel Machine Learning Method about the Decision Process for Dimensionality Reduction before Clustering. AI 2021, 3, 1–21.

19. Xu, K.; Wang, X.; Kong, C.; Feng, R.; Liu, G.; Wu, C. Identification of Hydrothermal Alteration Minerals for Exploring Gold Deposits Based on SVM and PCA Using ASTER Data: A Case Study of Gulong. Remote Sens. 2019, 11, 3003.

20. Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. In Proceedings of the 2nd International Conference on Knowledge Discovery and Data Mining (KDD), Portland, OR, USA, 2–4 August 1996; pp. 226–231.

21. Rodriguez, A.; Laio, A. Clustering by Fast Search and Find of Density Peaks. Science 2014, 344, 1492–1496.

22. Xie, J.; Girshick, R.; Farhadi, A. Unsupervised Deep Embedding for Clustering Analysis. In Proceedings of the International Conference on Machine Learning (ICML), New York, NY, USA, 19–24 June 2016; pp. 478–487.

23. Jiao, L.; Yang, S.; Liu, F.; Wang, X.; Sun, T.; Schumacher, M. Self-Organizing Interval Type-2 Fuzzy Neural Network for Hyperspectral Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6799–6812.

24. Elhamifar, E.; Vidal, R. Sparse Subspace Clustering: Algorithm, Theory, and Applications. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2765–2781.

25. Liu, G.; Lin, Z.; Yu, Y. Robust Subspace Segmentation by Low-Rank Representation. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 171–184.

26. Liu, W.; He, J.; Chang, S.F. Large Graph Construction for Scalable Semi-Supervised Learning. In Proceedings of the 27th International Conference on Machine Learning (ICML), Haifa, Israel, 21–24 June 2010; pp. 679–686.

27. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC Superpixels Compared to State-of-the-Art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

28. Lei, J.; Li, X.; Peng, B.; Fang, L.; Ling, N.; Huang, Q. Deep Spatial-Spectral Subspace Clustering for Hyperspectral Image. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 2686–2697.

29. Ding, Y.; Zhang, Z.; Zhao, X.; Cai, Y.; Li, S.; Deng, B.; Cai, W. Self-Supervised Locality Preserving Low-Pass Graph Convolutional Embedding for Large-Scale Hyperspectral Image Clustering. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

30. Ding, Y.; Zhang, Z.; Zhao, X.; Cai, W.; Yang, N.; Hu, H.; Cai, W. Unsupervised Self-Correlated Learning Smoothy Enhanced Locality Preserving Graph Convolution Embedding Clustering for Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

31. Peng, B.; Yao, Y.; Lei, J.; Fang, L.; Huang, Q. Graph-Based Structural Deep Spectral-Spatial Clustering for Hyperspectral Image. IEEE Trans. Instrum. Meas. 2023, 72, 1–12.

32. Qi, J.; Jia, Y.; Liu, H.; Hou, J. Superpixel Graph Contrastive Clustering With Semantic-Invariant Augmentations for Hyperspectral Images. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 11360–11372.

33. Yang, A.; Li, M.; Ding, Y.; Xiao, X.; He, Y. An Efficient and Lightweight Spectral–Spatial Feature Graph Contrastive Learning Framework for Hyperspectral Image Clustering. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–14.

34. Wang, J.; Guan, R.; Gao, K.; Li, Z.; Li, H.; Li, X.; Tang, C. Multi-level Graph Subspace Contrastive Learning for Hyperspectral Image Clustering. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Maastricht, The Netherlands, 21–26 June 2024; pp. 1–8.

35. Kipf, T.N.; Welling, M. Variational Graph Auto-Encoders. arXiv 2016, arXiv:1611.07308.

36. Bezdek, J.C.; Ehrlich, R.; Full, W. FCM: The Fuzzy C-Means Clustering Algorithm. Comput. Geosci. 1984, 10, 191–203.

37. Wang, Y.; Ji, Q.; Yuan, Q.; Zhang, H.; Li, X.; Wei, Y. Fast Spectral Clustering Using Anchor Graph for Large-Scale Hyperspectral Image. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1617–1621.

38. Wang, R.; Nie, F.; Wang, Z.; He, F.; Li, X. Scalable Graph-Based Clustering with Nonnegative Relaxation for Large Hyperspectral Image. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7352–7364.

39. Cai, Y.; Zhang, Z.; Ghamisi, P.; Ding, Y.; Liu, X.; Cai, Z.; Gloaguen, R. Superpixel Contracted Neighborhood Contrastive Subspace Clustering Network for Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

40. Zhao, H.; Zhou, F.; Bruzzone, L.; Guan, R.; Yang, C. Superpixel-Level Global and Local Similarity Graph-Based Clustering for Large Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

41. Chen, X.; Zhang, Y.; Feng, X.; Jiang, X.; Cai, Z. Spectral-Spatial Superpixel Anchor Graph-Based Clustering for Hyperspectral Imagery. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

42. Jiang, G.; Zhang, Y.; Wang, X.; Jiang, X.; Zhang, L. Structured Anchor Learning for Large-Scale Hyperspectral Image Projected Clustering. IEEE Trans. Circuits Syst. Video Technol. 2024, 35, 2328–2340.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).